1. Introduction

A three-dimensional (3D) geographical scene is an important way to understand the real world. In the 3D geographic information system (GIS) field, the modelling and visualization of 3D geographical scenes has long been an active research topic. Researchers have made great efforts to build 3D models with software. Some work has focused on integrating building information modeling (BIM) [1] and computer-aided design (CAD) [2] models from the architecture and design domain into GIS [3], mainly by exploiting the spatial information in BIM and CAD models. The City Geography Markup Language (CityGML), an open, exchangeable, XML-based 3D city model, was developed by the Open Geospatial Consortium (OGC) for the global geospatial community [4]. These modelling methods have promoted the development of 3D GIS. However, many 3D models and 3D GIS are restricted to a two-dimensional (2D) visualization platform, which hinders the understanding and expression of the real world in 3D space [5]. To overcome this limitation, some efforts have been made to display 3D models, scenes, and systems through wearable devices. Stereoscopic visualization with 3D glasses is one type. For example, the GeoWall, a Cave Automatic Virtual Environment (CAVE), displays a 3D scene with 3D glasses for geoscience research and education [6]. This method displays 3D scenes well, but interaction between the user and the system is poor.

With the development of information and hardware technologies, virtual reality (VR), augmented reality (AR), and mixed reality (MR) have emerged [7]. VR is a realistic 3D virtual scene generated by a computer. Users interact with and perceive the virtual scene with the help of the necessary hardware devices, which produces an immersive computer system. AR is an extension of VR. Through AR equipment, the computer-generated virtual environment and the objective real world coexist in the same AR system, presenting users with an enhanced reality environment that integrates virtual objects. MR is a further development and combination of AR and VR. By introducing real-environment information into the virtual environment, MR builds an interactive information loop among the virtual world, the real world, and the user, thus enhancing the user’s experience. VR, AR, and MR adopt 3D display technology, which enables users to perceive and understand a computer-generated world through 3D glasses and frees them from the perspective of a 2D screen [7].

MR has applications in 3D GIS. Some applications are actually ordinary 3D GIS systems that borrow VR ideas, but their visualizations are still based on the 2D screen of a computer [8,9,10,11,12]. MR systems in GIS that are visualized with VR devices are emerging [13,14,15,16]. MR can fix a virtual object in real space to give people a sense of reality. Well-known MR devices and systems include Google Cardboard [17], Microsoft HoloLens [18], HTC Vive [19], Facebook Oculus Rift [20], and Sony PlayStation [21].

The Microsoft HoloLens is the first untethered and advanced MR headset [22,23,24]. The HoloLens has advanced features for 3D stereoscopic display, such as gaze, gesture capture, spatial sound, and spatial mapping. Therefore, the HoloLens is widely used in research on visual applications, such as film [24], education [25], robots [26,27], disaster and emergency management [28], virtual laboratories [29], the Mars exploration project [7], and medical treatment and care [23,30,31,32,33,34]. The HoloLens has also been applied to the study of 3D geographic scenes [5]. Since the HoloLens is a general-purpose MR device, there is a gap between the device and its application to holographic 3D geographic scenes. To fill this gap, a holographic 3D geographical scene should be modelled and visualized, especially dynamically, with GIS data and information under the development mechanism of the HoloLens. Modelling and visualizing a 3D geographical scene with prepared models has been done [5]. However, modelling and visualizing a 3D geographical scene with timely GIS data has not yet been done.

This paper extends our previous work, which studied only the visualization of 3D geographic scenes with prepared models based on the HoloLens glasses [5]. The main goal of this paper is to propose an architecture that uses HoloLens glasses to model and visualize holographic 3D geographic scenes with timely data. Here, timely data mainly refers to data obtained from a web service at the best possible moment. To test the feasibility of the designed architecture, this paper used the HoloLens developer version as the experimental platform, with OpenStreetMap data [35] and Bing Map Server data [36] as test data. The experimental results show that the HoloLens can be used to create and display 3D geographic scenes, bringing users useful human–computer physical interaction and visual experiences. The contribution of this paper is an approach to modelling and visualizing a holographic 3D geographical scene with timely GIS data and information under the development mechanism of the HoloLens. This should help broaden the applications of the HoloLens in GIS.

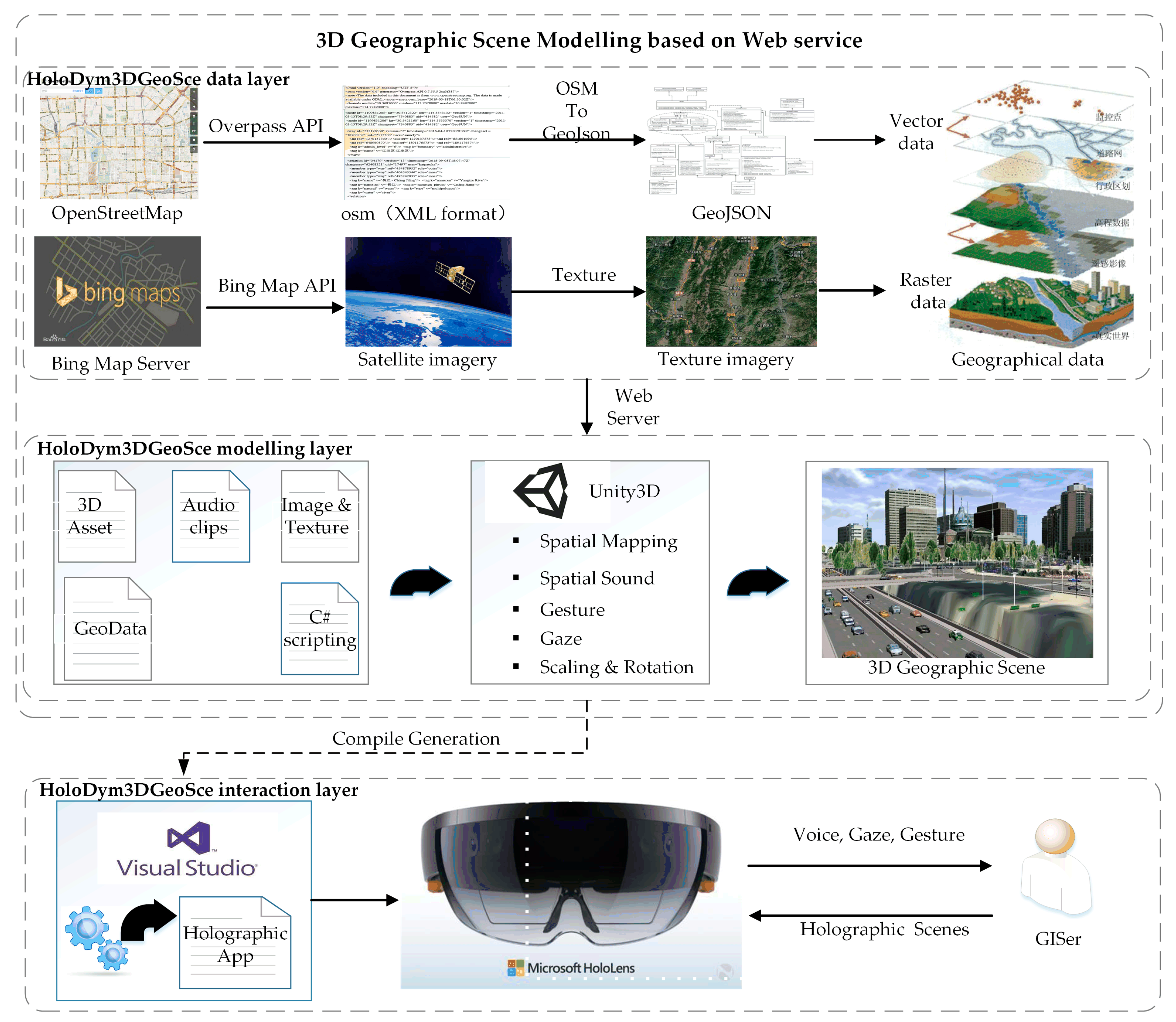

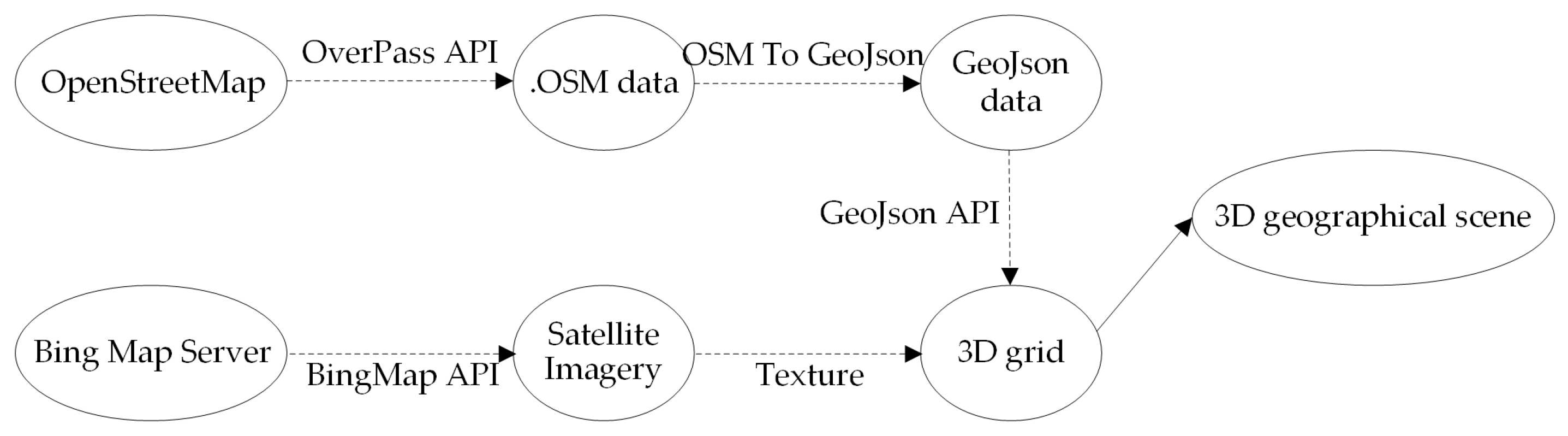

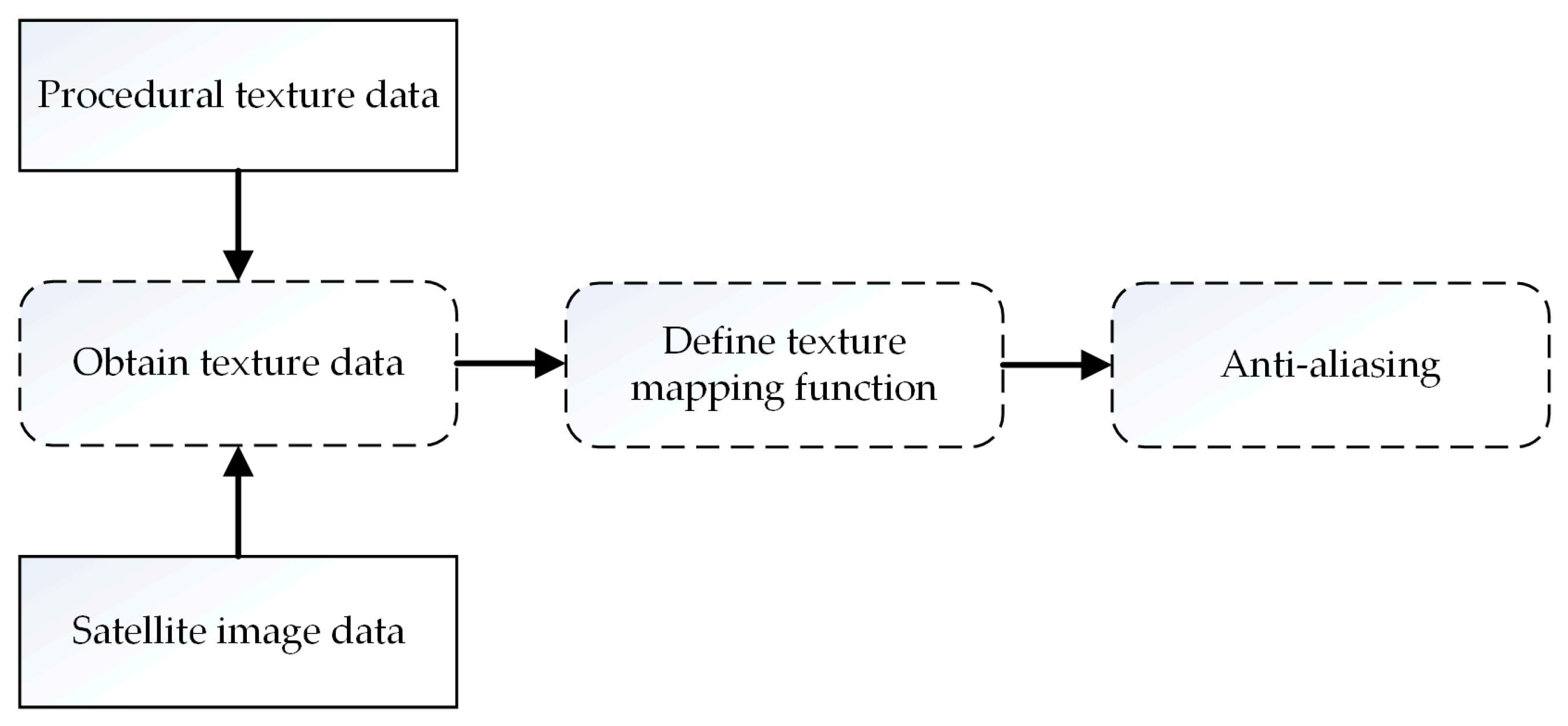

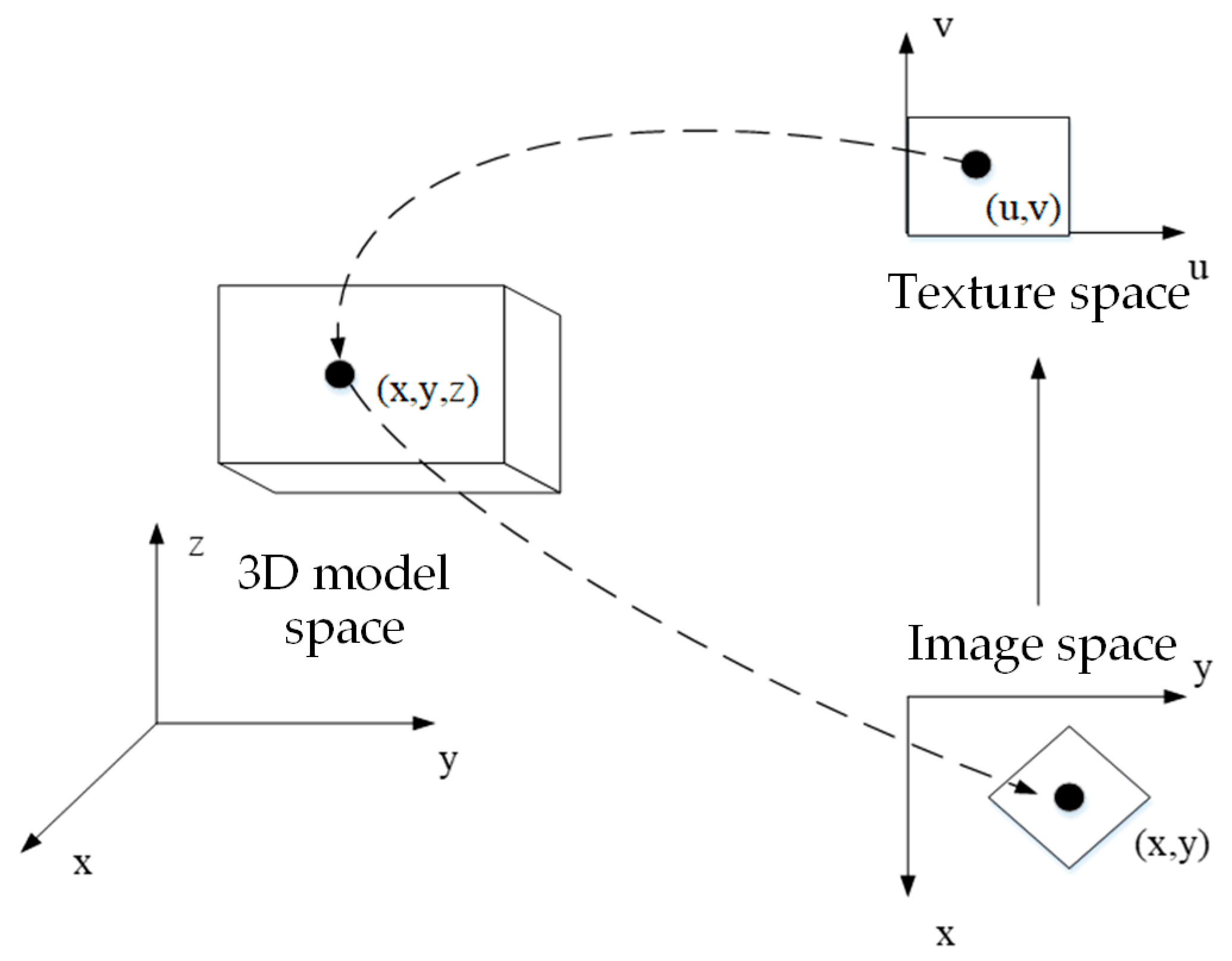

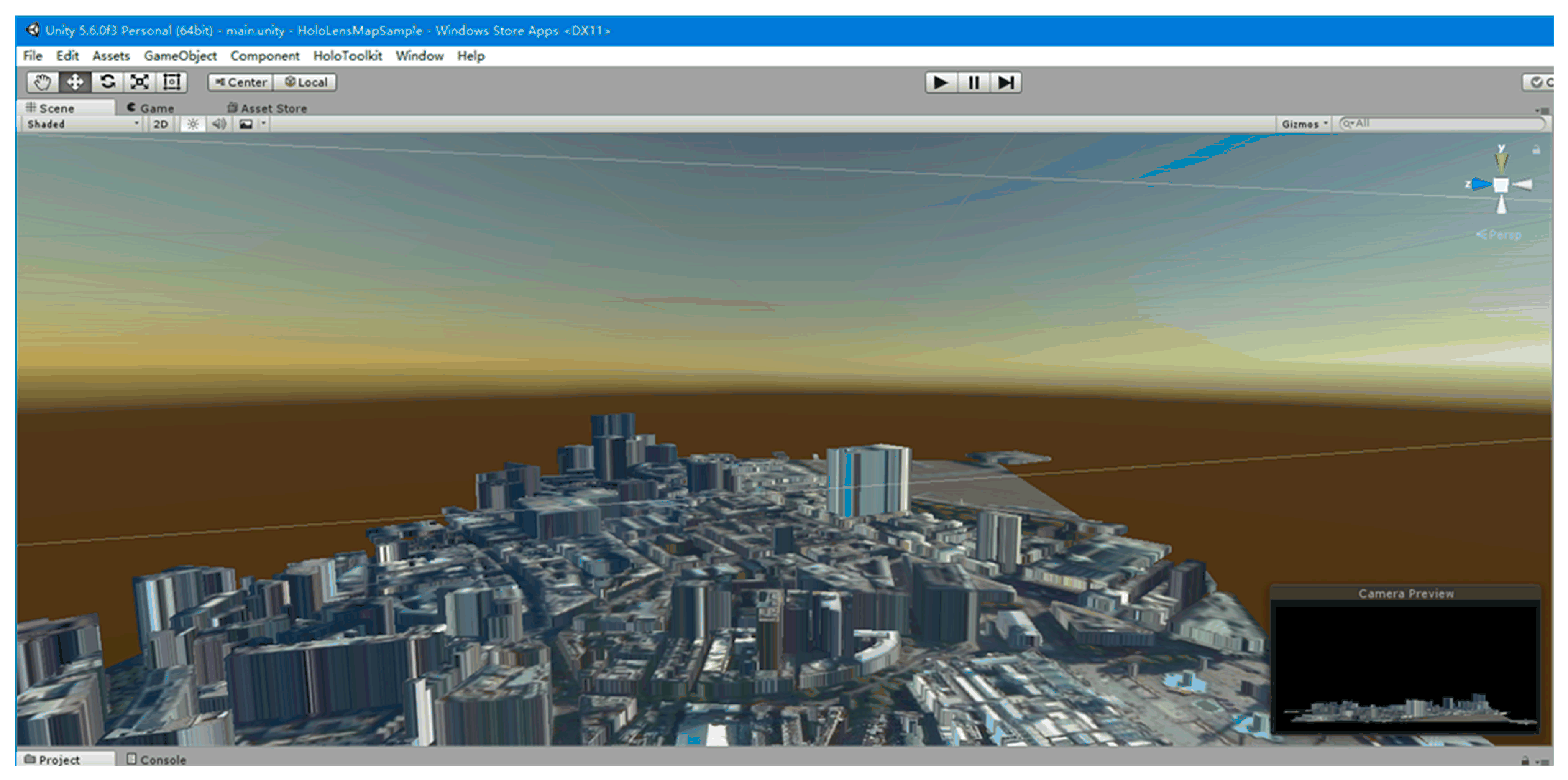

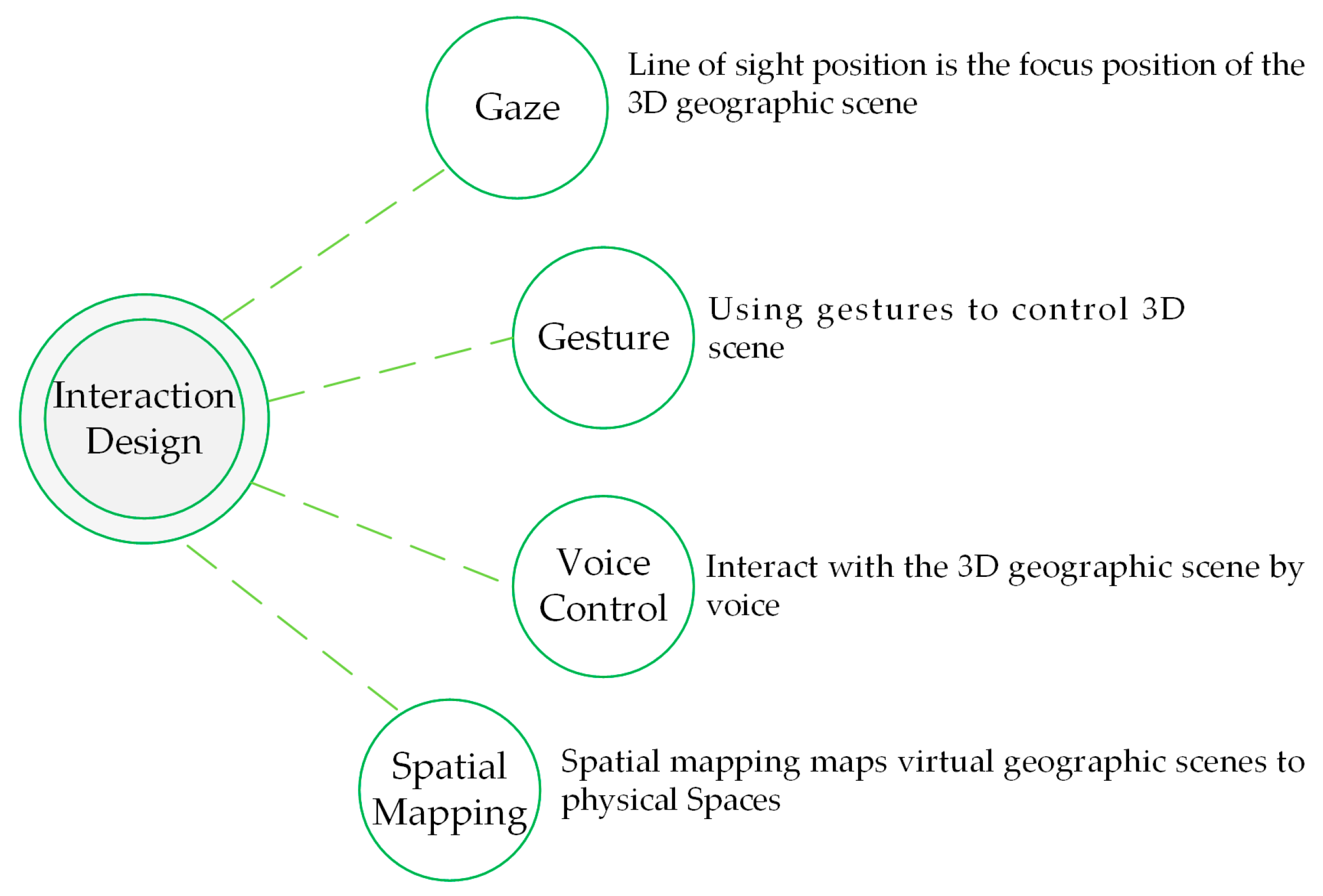

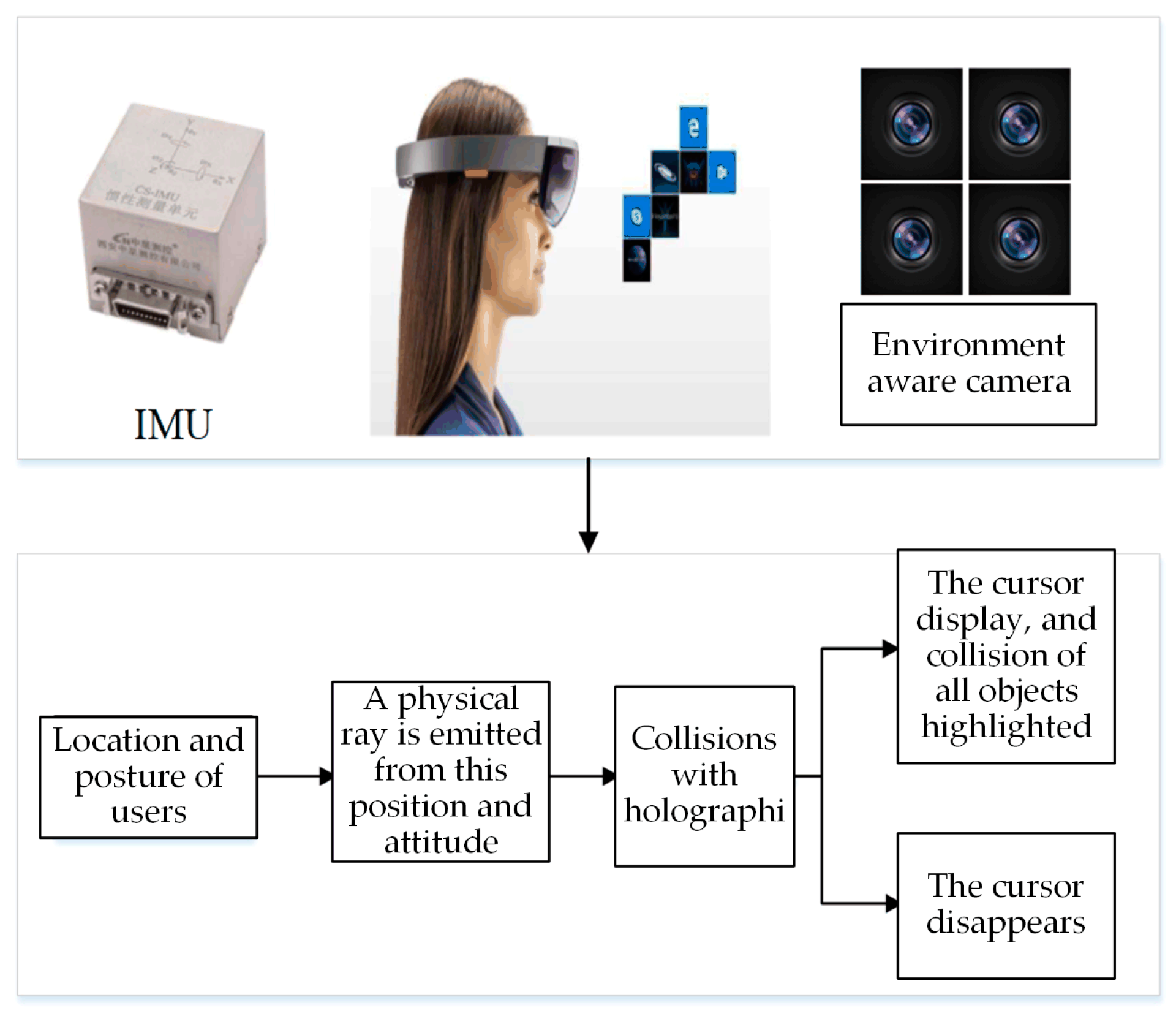

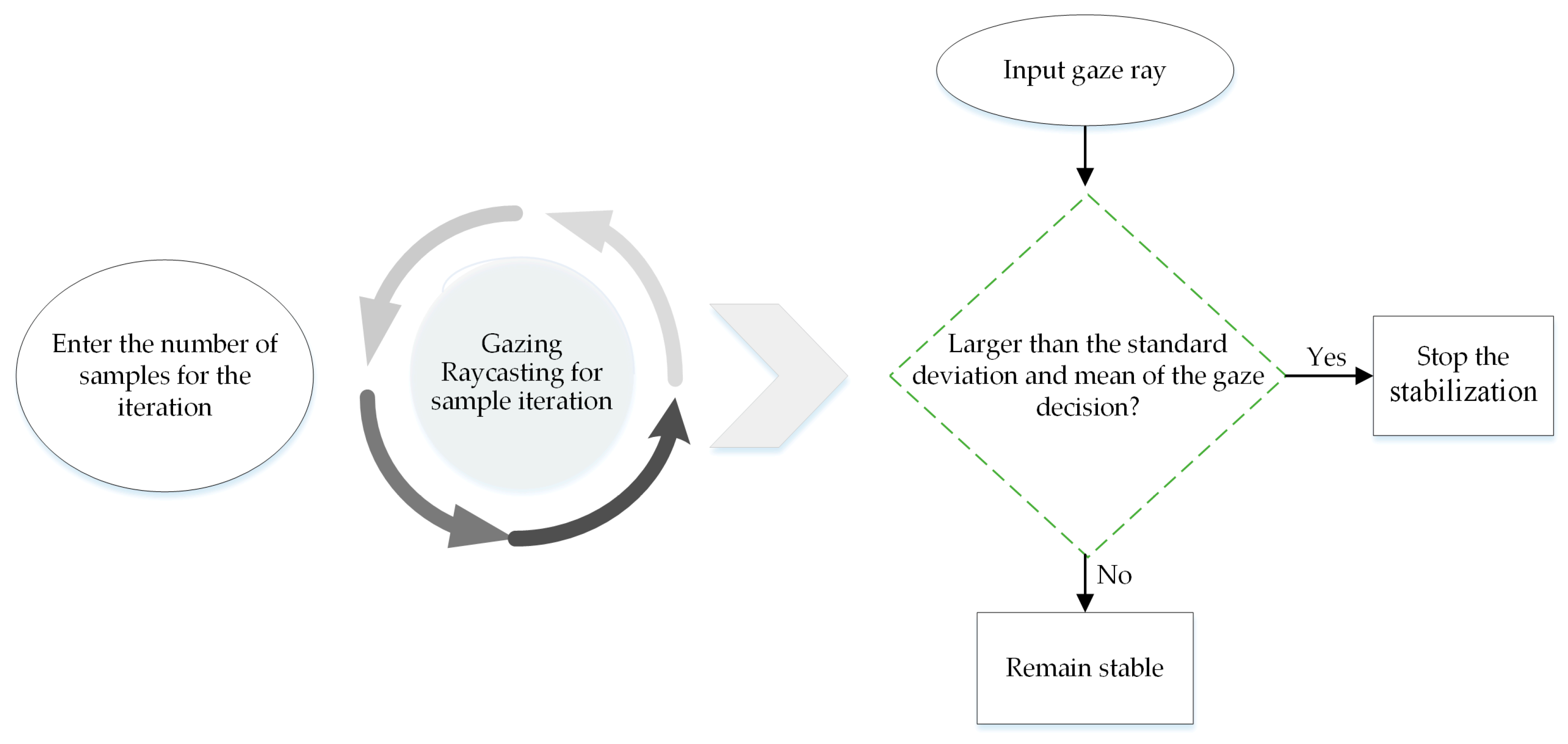

3. HoloDym3DGeoSce Experiments

Following the framework and method proposed above, this paper designed an experimental scheme based on holographic glasses, the HoloLens, to model and render 3D geographic scenes with timely data. The scheme was implemented as the HoloDym3DGeoSce app. The experiment mainly uses GIS technology, Web service technology, and mixed reality, among other technologies, implemented with OpenStreetMap, BingMap Server, Unity3D, and the HoloLens platform. The specific objectives and contents of the experiment were as follows: (1) Virtual and real fusion: Virtual geographic scenes are brought from the computer screen into the real world, and a virtual 3D digital city is mapped onto the actual world through spatial mapping technology; (2) a new 3D GIS human–computer physical interaction mode: The traditional mouse-based and keyboard-based interaction mode of 3D GIS is replaced, and holographic 3D geographic scenes are manipulated by means of gaze, gestures, and voice; (3) modelling holographic geographic scenes with timely data: The geographic scene model is dynamically loaded through the Web service, and textures are applied to the model to create a more realistic and dynamic 3D geographic scene in the real world; and (4) performance analysis and problems of dynamic holographic geographic scenes: The problems of this method are analyzed. The overall goal was to overcome the limitation that traditional 3D GIS visualizes geographic information on a 2D screen by using HoloLens 3D stereo display technology, bringing users a new, true 3D visual experience.

3.1. Experimental Preparation

The experimental preparation mainly includes three parts: Data, software, and hardware. The data are the basic content of the experiment. The software is used to develop, debug, and deploy the HoloDym3DGeoSce system. The hardware provides the platform for running the HoloDym3DGeoSce system, performing its computation, and rendering its 3D visualization.

Data: OpenStreetMap [35], BingMap Server map data and image data [36].

Software: Unity3D 5.4.0f3 (the main engine used to develop HoloDym3DGeoSce), Visual Studio Community 2015 (application development, compilation, and deployment platform), and Windows 10 Professional Edition (HoloLens development requires Windows 10 Professional, Enterprise, or Education).

Hardware: Microsoft HoloLens Developer Edition, PC (Dell, Intel (R) Xeon (R) CPU E3-1220 v3 @ 3.10 GHz (4 CPUs), ~3.1 GHz).

3.2. Experimental Results and Analysis

This experiment developed the HoloDym3DGeoSce system, which maps 3D geographic scenes onto the physical world, provides users with holographic 3D geographic scenes, and offers a new form of 3D GIS human–computer interaction. HoloDym3DGeoSce users need to wear HoloLens glasses to see holographic geographic scene images from a first-person perspective. To record the experimental results, the user’s first-person holographic view of the HoloDym3DGeoSce application was streamed to a computer screen as video by using the Mixed Reality Capture system in the HoloLens Device Portal. All results in this article come from that video stream. Because the Mixed Reality Capture system records through a 2-megapixel RGB (red-green-blue) camera, the captured images do not reproduce the effect seen from the first-person perspective through the HoloLens glasses. Instead, the spatial mapping figures and the holographic geographic scene figures in the experiment show the real physical world and the holographic image captured together.

3.2.1. Dynamic Holographic Geographic Scenario

A dynamic holographic geographic scenario is a geographic scene created by dynamically loading geographic data through a Web service. In this experiment, following the method of Section 2.1 for creating 3D geographic scenes from Web services, the user switches the target area by acquiring its geographic scene on demand through the HoloLens holographic glasses.

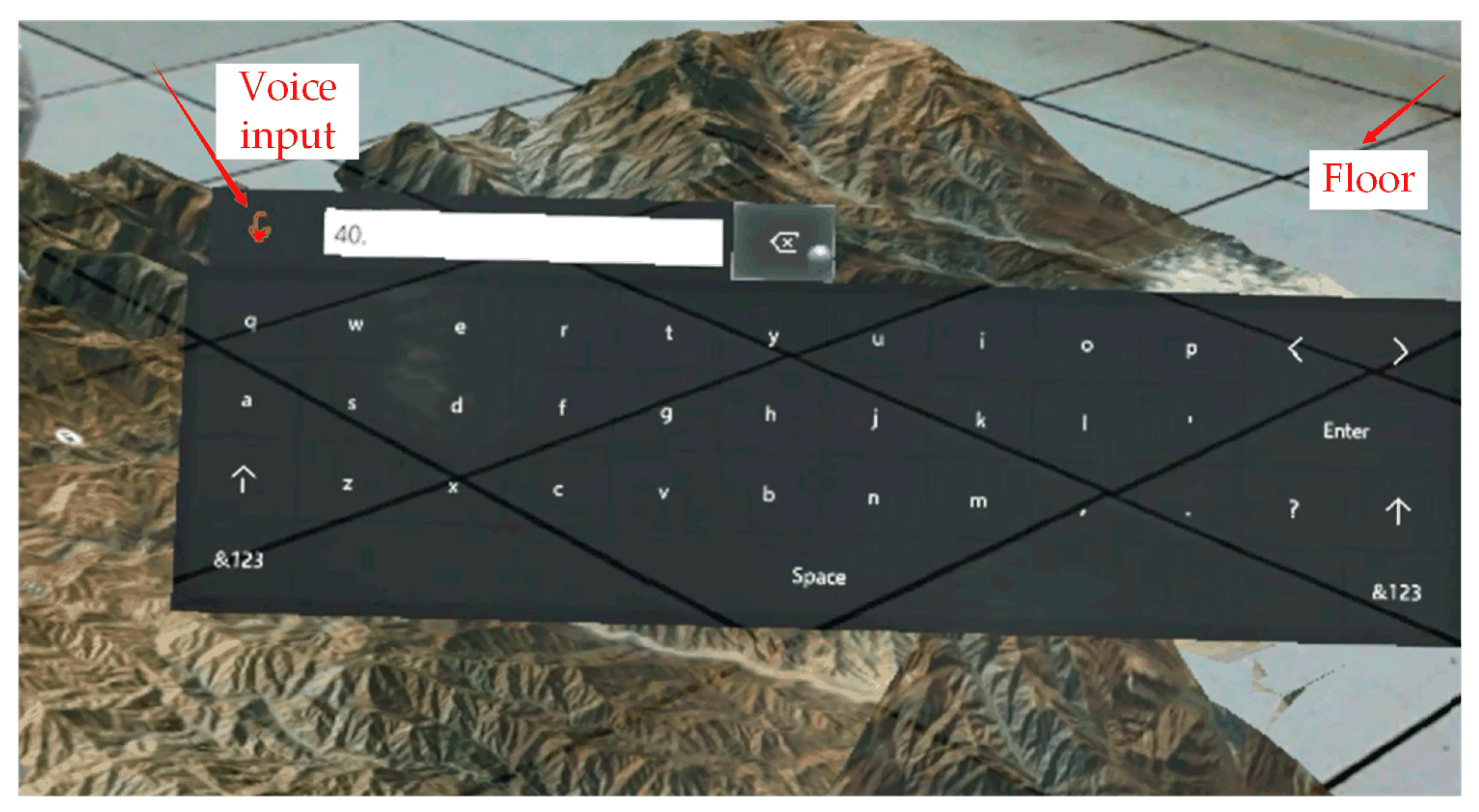

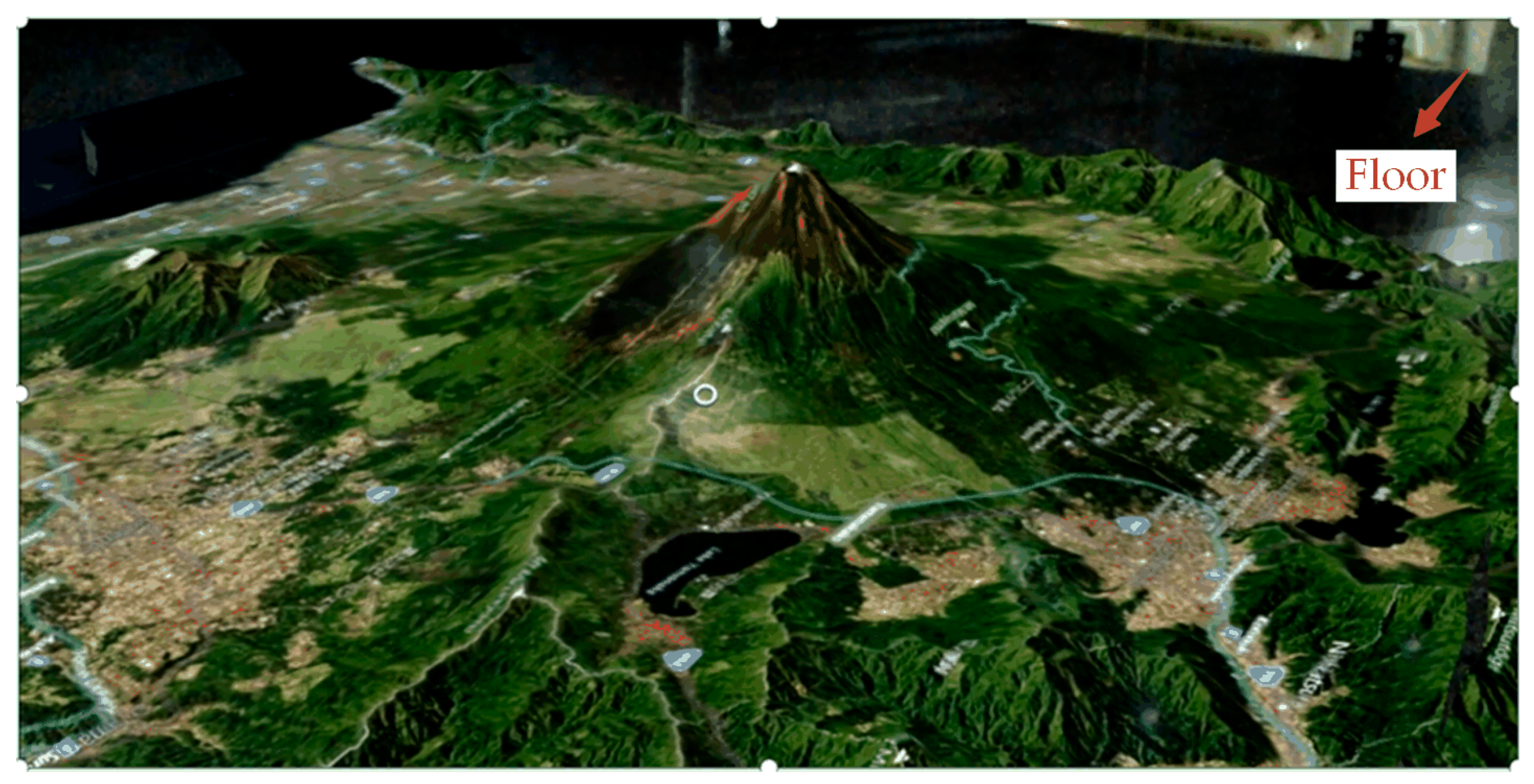

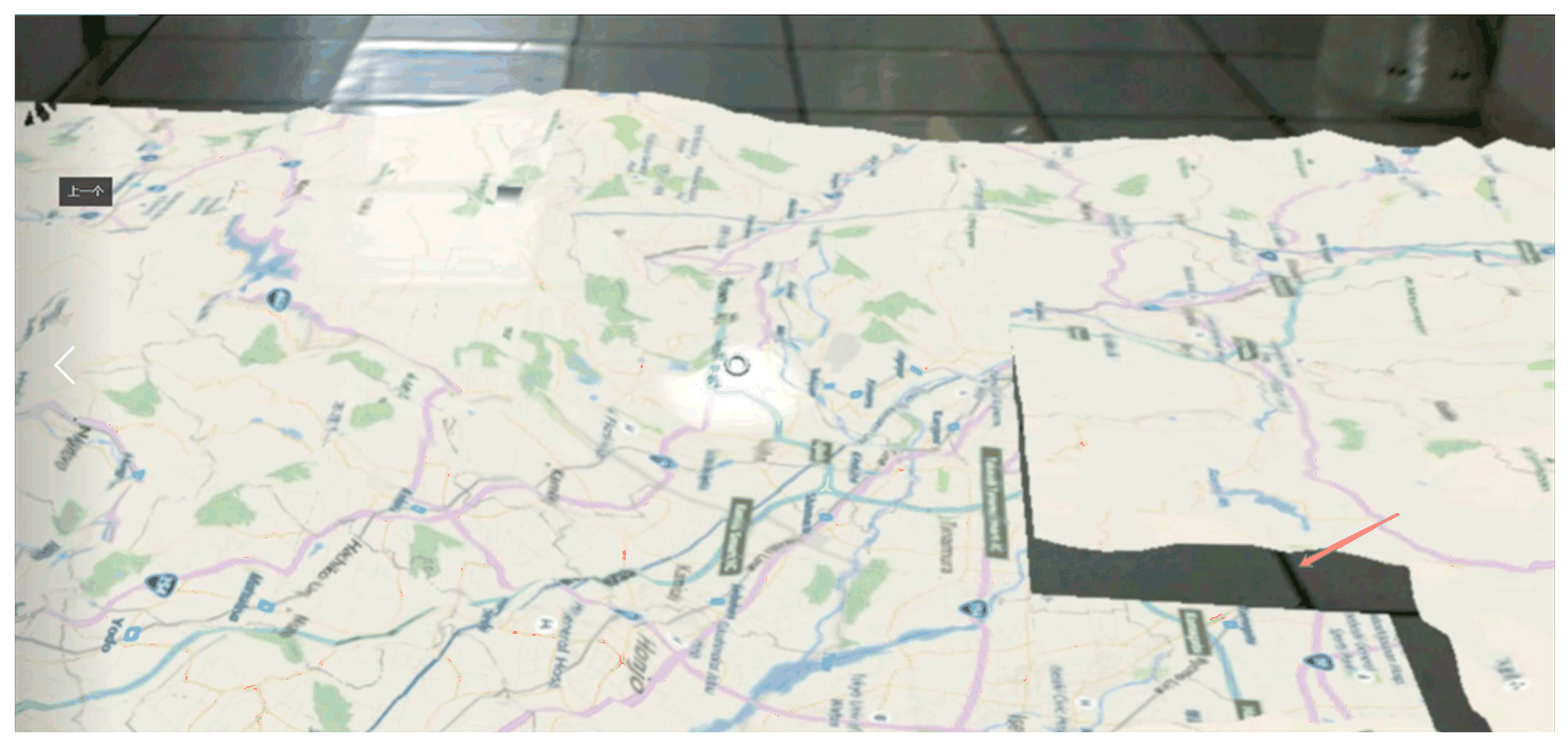

Figure 9 and Figure 10 are schematic diagrams of a holographic geographic scene dynamically projected onto the floor. The red arrow points to the physical floor, on which the virtual geographic scene is displayed; the two are spatially mapped to achieve virtual and real fusion.

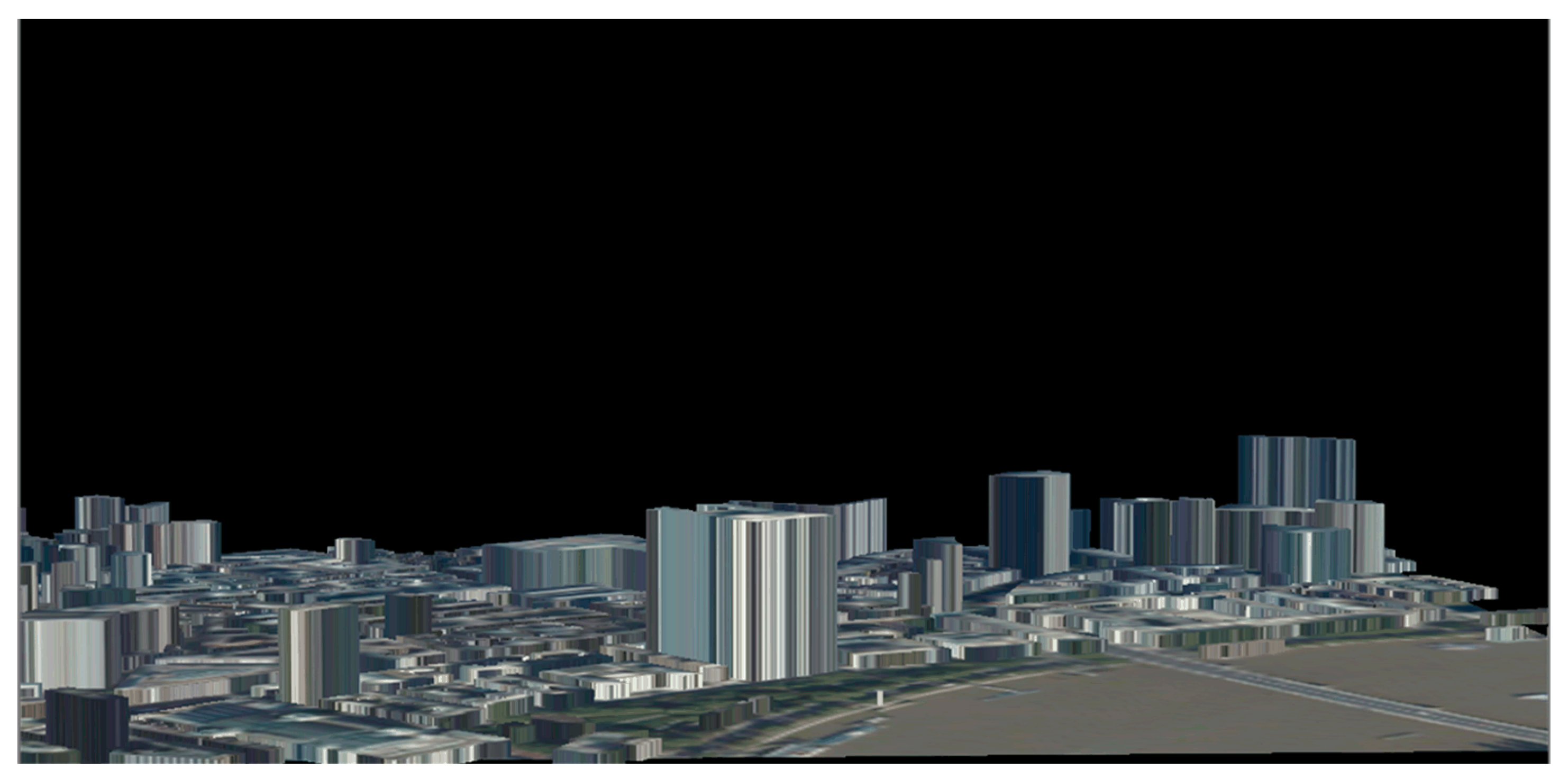

Figure 11 is a schematic diagram of a 3D digital city dynamically created in the HoloLens Emulator. The Emulator is a HoloLens virtual machine; in the absence of a HoloLens device, it can be used instead to complete the experiment. Static holographic 3D geographic scenes can only load pre-created 3D geographic scenes. By contrast, a dynamic holographic geographic scene is created from a target location entered by the user, and scene switching is implemented by loading data over the network. As shown in Figure 9, this experiment designed two human–computer physical interaction modes for entering position information: voice control and manual input. With manual input, the user types the name of the target area into the HoloLens application through the virtual keyboard provided by the HoloLens, and the text is converted into latitude and longitude through geocoding, which enables the positioning, data acquisition, and 3D scene modelling of the target area.
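Once a place name has been resolved to latitude and longitude, map and imagery data for the target area are typically requested from a Web service as tiles. The sketch below is an illustration rather than the HoloDym3DGeoSce implementation: it assumes the standard Web Mercator tiling used by OpenStreetMap tile servers and the quadkey scheme of the Bing Maps Tile System, and the function names, sample coordinates, and zoom level are hypothetical.

```python
import math


def latlon_to_tile(lat_deg, lon_deg, zoom):
    """Return the (x, y) index of the Web Mercator tile containing a point."""
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    lat_rad = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that points on or beyond the map edge stay in range.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_to_quadkey(x, y, zoom):
    """Interleave the bits of x and y into a Bing Maps style quadkey string."""
    digits = []
    for level in range(zoom, 0, -1):
        mask = 1 << (level - 1)
        digit = 0
        if x & mask:
            digit += 1
        if y & mask:
            digit += 2
        digits.append(str(digit))
    return "".join(digits)


if __name__ == "__main__":
    # Hypothetical target location and zoom level, for illustration only.
    x, y = latlon_to_tile(30.0, 114.0, 15)
    print(x, y, tile_to_quadkey(x, y, 15))
```

The same tile index can address both data sources, which is one plausible way to keep building geometry and imagery textures aligned when a scene is assembled for a new target area.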

Voice control relies on the Dictation Recognizer component discussed in Section 2.3.3. The user’s speech is converted into text, and the dictation result is presented to the user. If the result is what the user wished to input, it is submitted to perform the positioning; if the recognition is incorrect, the result can be corrected by speaking again or by manual input. As shown in Figure 9, when the voice icon is red, the system is listening to the user’s voice input, and the dictation result is automatically updated in the input box of the virtual keyboard. Voice control is functionally the same as manual input; it is more convenient and faster, but its recognition accuracy is not high. In this experiment, two states were designed for the holographic geographic scenes created through interactive positioning and data acquisition. The first state is user-centered: the geographic scene dynamically moves and updates in the physical space once the user moves beyond a set distance. The second state is a fixed geographic scene: the holographic geographic scene is locked in the physical space and its position does not change. The user can observe the holographic scene from different perspectives by moving around it and can experience depth and perspective information.
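The user-centered state can be read as a simple distance-threshold rule: the scene stays put until the user has moved farther than a set distance from the point where it was last centered, and only then is it re-centered and reloaded. The following is a minimal sketch of that rule, not the authors' code; the class name, the 2 m threshold, and the use of horizontal (x, z) positions in metres are assumptions made for illustration.

```python
import math


class UserCenteredScene:
    """Tracks where the scene is centered and decides when to re-center it."""

    def __init__(self, start_xz, threshold_m=2.0):
        self.center_xz = start_xz        # point the scene was last centered on
        self.threshold_m = threshold_m   # assumed distance that triggers an update

    def update(self, user_xz):
        """Return True (and re-center) if the user moved beyond the threshold."""
        dx = user_xz[0] - self.center_xz[0]
        dz = user_xz[1] - self.center_xz[1]
        if math.hypot(dx, dz) > self.threshold_m:
            self.center_xz = user_xz  # a real app would reload map data here
            return True
        return False


if __name__ == "__main__":
    scene = UserCenteredScene((0.0, 0.0))
    for position in [(0.5, 0.5), (1.5, 1.5), (1.6, 1.6)]:
        print(position, scene.update(position))
```

Under this reading, the fixed state simply corresponds to never calling `update`, so the scene keeps its original anchor in the room.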

3.2.2. Analysis and Problems of Holographic Geographic Scenes

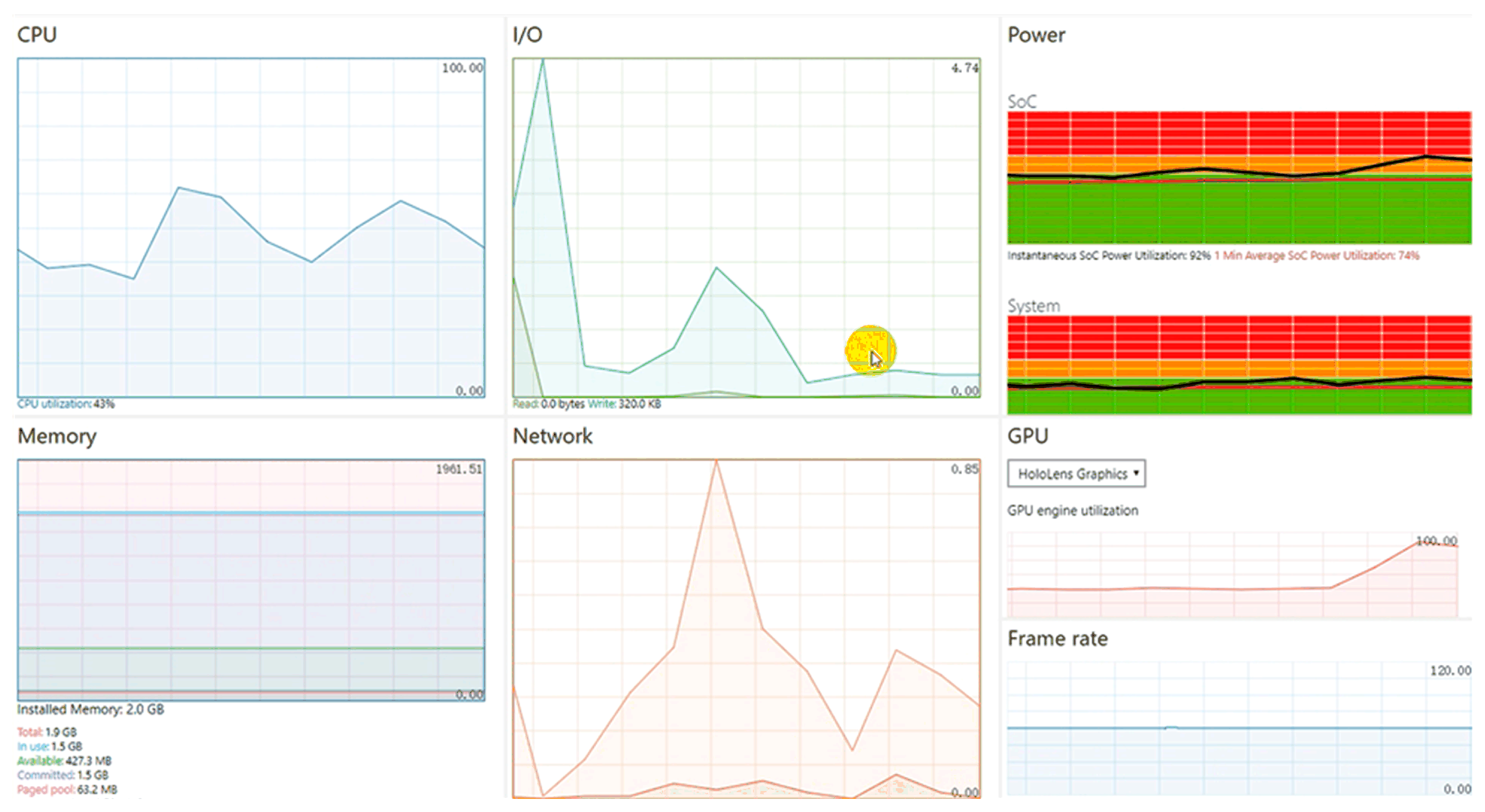

Figure 12 shows the performance graphs recorded while the dynamic holographic geographic scene was created through the Web service, and the data are summarized in Table 1. Before the geographic scene is created, network usage is 0 Mb, I/O throughput is 0 MB, GPU usage is 40%, and CPU usage is 20%. When creation of the geographic scene starts, network usage rises to 0.85 Mb, I/O throughput rises to 4.74 MB, GPU utilization increases to 90%, and CPU usage increases to 43%. These data indicate that the dynamic creation of geographic scenes places the greatest demand on the network and the GPU, so a good network environment and a high-performance GPU are crucial for this experiment. In addition, the figure shows that the increases in network, I/O, and GPU usage occur one after another with a delay, mainly because the data are first loaded through the network, then loaded into memory, and finally rendered by the GPU. The experimental results in Section 3.2.1 and the performance graph in Figure 12 show that it is feasible for the HoloLens to dynamically create a 3D geographic scenario based on Web services.
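The before-and-after figures quoted above can be tabulated to make the comparison explicit. The snippet below only restates the numbers given in the text; the dictionary layout and the function name are illustrative.

```python
# Resource usage before and during scene creation, as quoted in the text.
usage = {
    "network (Mb)": (0.0, 0.85),
    "I/O (MB)": (0.0, 4.74),
    "GPU (%)": (40.0, 90.0),
    "CPU (%)": (20.0, 43.0),
}


def increases(samples):
    """Return the absolute increase for each metric."""
    return {name: round(after - before, 2) for name, (before, after) in samples.items()}


if __name__ == "__main__":
    for name, delta in increases(usage).items():
        print(f"{name}: +{delta}")
```

GPU utilization rises by 50 percentage points against 23 for the CPU, which is consistent with the observation that rendering, together with network loading, is the most demanding part of dynamic scene creation.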

Although it is possible to dynamically create a geographic scene through the network, some problems remain. As shown in Figure 13, the main problem relates to the OpenStreetMap map data loaded by the HoloLens through the network. The red mark in the figure indicates a missing portion of the map data, which is caused by a data transmission failure while loading data over the network. In addition, real-time data rendering places high demands on hardware performance, and the HoloLens developer version used in this experiment does not meet these demands very well.

3.2.3. Human–Computer Interaction Test

To show the users’ experience with the HoloDym3DGeoSce application, an informal human–computer interaction (HCI) test was conducted. The task was to view the 3D digital city scenario described in Section 3.2.2. Ten people were selected, as shown in Table 2. The test followed the evaluation index system adopted in [5], which has six indexes: visual clarity (the clarity of the three-dimensional picture presented by the system), system efficiency (the smoothness of the picture presented by the system), easy learning (how easy the system is to use under the instruction of professionals), interoperability (how easy the system is to operate through human–computer interaction), comfort (the visual and physical comfort of people using the system with a headset), and flexibility (the ability to display the three-dimensional picture at the scale the user requires). Each index was scored from 1 to 10 according to the tester’s degree of satisfaction, where 1 was the worst and 10 was the best. The author collected the scores by asking each tester about the six indexes. The test results are shown in Table 2. All six indexes received high scores (four indexes scored 10, one scored 9.8, and one scored 9.7). The results indicate that HoloDym3DGeoSce gives users a very good experience. However, the older man and the older woman said that the system occasionally failed to catch what they said. This may be because the voice function of the HoloLens is not robust enough to handle the local accent. In addition, the older woman and the schoolchild felt that the HoloLens headset was a bit heavy when worn for a long time. These two problems need further improvement.

3.2.4. Brief Comparisons with Previous Studies

This paper extends our previous work and inherits its advantages over traditional 3D GIS: It changes the vision, body sense, and interaction mode of traditional GIS, and enables GIS users to experience real 3D GIS [5]. With the development of information and sensor technologies, timely geographic information is acquired by ubiquitous space-based sensors, airborne sensors, underground sensors, and human observers [47]. Thus, geographic information and its services have become real-time, dynamic, and online [48], and from the perspective of time GIS has evolved into real-time GIS [49,50]. The previous work studied the visualization of 3D geographic scenes with prepared models based on HoloLens glasses, and the models could not change flexibly with timely data. In the era of real-time GIS, this limits the applications of the previous work. This paper addresses that shortcoming by proposing an approach to modelling and visualizing holographic 3D geographic scenes with timely data. In this respect, the work is a meaningful improvement over the previous work.

4. Summary and Outlook

This paper described a design architecture for modelling and visualizing 3D geographic scenes with timely data through mixed reality glasses, the HoloLens, with natural human–computer physical interactions (e.g., gaze, gesture, voice). The architecture is based mainly on three methods: The 3D geographic scene modelling method, the HoloDym3DGeoSce interaction method, and the size scene visualization method. The proposed approach provides a new method and platform for 3D GIS data visualization. To verify the architecture, this paper tested it with OpenStreetMap data, Bing Map Server map data, and image data. The experimental results showed that the Microsoft HoloLens can be used to model and render 3D geographic information.

This work could have wide applications in 3D geographical scenes where the virtual and the real coexist. Two possible application scenarios serve as examples. One is field natural survey teaching: By sharing a holographic 3D geographic scene between two HoloLens devices, a teacher in the field can remotely guide students in class, and the effect is almost the same as if the students were in the field. The other is an indoor fire simulation drill: A HoloLens system that combines a simulated indoor fire with the real indoor environment makes indoor fire simulation drills possible.

Future work will focus on integrating the geographic analysis model into the HoloDym3DGeoSce to enrich the HoloDym3DGeoSce’s spatial analysis ability and expand its applications.