Multi-Size Facility Allocation Under Competition: A Model with Competitive Decay and Reinforcement Learning-Enhanced Genetic Algorithm

Abstract

1. Introduction

Related Works

2. Materials and Methods

2.1. Mathematical Modeling

2.1.1. Probabilistic Expressions of Customer Behavior

- (1) It is particularly important to define the case with no competing facilities, where the attractiveness should retain its initial value of 1, representing the system’s full attractiveness in the absence of competitors. To keep the logarithmic decay function valid under this condition, an appropriate offset term is introduced. This adjustment avoids the undefined logarithm at zero and keeps the model accurate and interpretable in its initial state;

- (2) As the number of competing facilities increases, the competitive influence exhibits diminishing marginal effects, which is consistent with the characteristics of real market competition;

- (3) The introduction of a tunable parameter allows competitive intensity to be adjusted for different market environments, enhancing the model’s adaptability.

- By substituting with , Equation offers the advantage of accounting for varying scale effects. In fact, merely doubling the size of a facility does not necessarily result in a doubling of its utility [40];

- The term in Equation is replaced by in Equation (3). This modification simplifies the computation process.
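The decay properties and the scale-effect remark above can be illustrated with a short sketch. The exact equations are not reproduced here, so the code assumes a decay of the form 1 / (1 + beta · ln(1 + n)), where `n` is the number of competing facilities, the `+ 1` inside the logarithm plays the role of the offset term, and `beta` is a hypothetical name for the competitive-intensity parameter. The size exponent `alpha` is likewise an assumption, used only to show a sub-linear scale effect.

```python
import math


def competitive_decay(n_competitors: int, beta: float = 0.5) -> float:
    """Assumed logarithmic decay of attractiveness (illustrative form, not the paper's equation).

    Returns exactly 1.0 when there are no competitors and decreases in
    ever smaller steps as competitors are added.
    """
    if n_competitors < 0:
        raise ValueError("n_competitors must be non-negative")
    # The +1 offset keeps the logarithm defined at n = 0, where ln(1) = 0.
    return 1.0 / (1.0 + beta * math.log(1.0 + n_competitors))


def scaled_utility(size: float, alpha: float = 0.7) -> float:
    """Assumed sub-linear scale effect: with alpha < 1, doubling size less than doubles utility."""
    return size ** alpha
```

With these assumptions, a larger `beta` makes each additional competitor erode attractiveness faster, while `alpha` below 1 caps the benefit of simply building a larger facility.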

2.1.2. Objective Function

2.1.3. Constraint on Budget for New Facility

2.2. RL-Enhanced Genetic Algorithm

- Parallelism: The algorithm simultaneously operates on multiple individuals within the population, demonstrating strong global search capabilities;

- Randomness: Through random search strategies, it effectively avoids becoming trapped in local optima;

- Adaptability: Individuals within the population continuously evolve to adapt to the objective function, showing robust problem-solving capabilities.
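The three characteristics listed above can be seen in a minimal genetic algorithm. The sketch below is a plain GA on a toy budget-constrained sizing problem, not the RL-enhanced variant and not the authors' implementation; the function names, the encoding (one size level per candidate site) and the toy objective are assumptions made for illustration.

```python
import random


def fitness(chrom, values, costs, budget):
    """Toy objective: total value of chosen size levels, zero if the budget is exceeded."""
    if sum(costs[s] for s in chrom) > budget:
        return 0.0
    return sum(v * s ** 0.7 for v, s in zip(values, chrom))


def tournament(pop, fits, rng, k=3):
    """Adaptability: return the fittest of k randomly sampled individuals."""
    picks = rng.sample(range(len(pop)), k)
    return pop[max(picks, key=lambda i: fits[i])]


def run_ga(values, costs, budget, pop_size=30, generations=50, p_mut=0.1, seed=0):
    rng = random.Random(seed)
    n_sites, n_sizes = len(values), len(costs)
    # Parallelism: many candidate solutions are evaluated in every generation.
    pop = [[rng.randrange(n_sizes) for _ in range(n_sites)] for _ in range(pop_size)]
    best, best_fit, history = None, -1.0, []
    for _ in range(generations):
        fits = [fitness(c, values, costs, budget) for c in pop]
        gen_best = max(range(pop_size), key=lambda i: fits[i])
        if fits[gen_best] > best_fit:
            best, best_fit = pop[gen_best][:], fits[gen_best]
        history.append(best_fit)
        children = [best[:]]  # elitism: carry the best individual forward unchanged
        while len(children) < pop_size:
            a, b = tournament(pop, fits, rng), tournament(pop, fits, rng)
            cut = rng.randrange(1, n_sites)  # one-point crossover (needs at least 2 sites)
            child = a[:cut] + b[cut:]
            # Randomness: each gene mutates with probability p_mut.
            child = [rng.randrange(n_sizes) if rng.random() < p_mut else g for g in child]
            children.append(child)
        pop = children
    return best, best_fit, history
```

A call such as `run_ga([5, 3, 8, 2, 7, 4], [0, 2, 3, 5], budget=10)` returns the best chromosome, its fitness, and the best-so-far fitness per generation, where `costs[s]` is the assumed cost of size level `s`.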

2.2.1. Algorithm Theoretical Framework

2.2.2. Reinforcement Learning Policy Model

2.2.3. Experience Replay and Learning Mechanism

2.2.4. Reward Function Design

2.2.5. Adaptive Parameter Adjustment Mechanism

2.2.6. Algorithm Implementation and Computational Complexity Analysis

2.3. Parameter Estimation and Calibration

2.3.1. Competitive Decay Model Parameters

2.3.2. RL-GA Algorithm Parameters

2.3.3. Facility Scale and Cost Parameters

2.3.4. Validation and Sensitivity Analysis

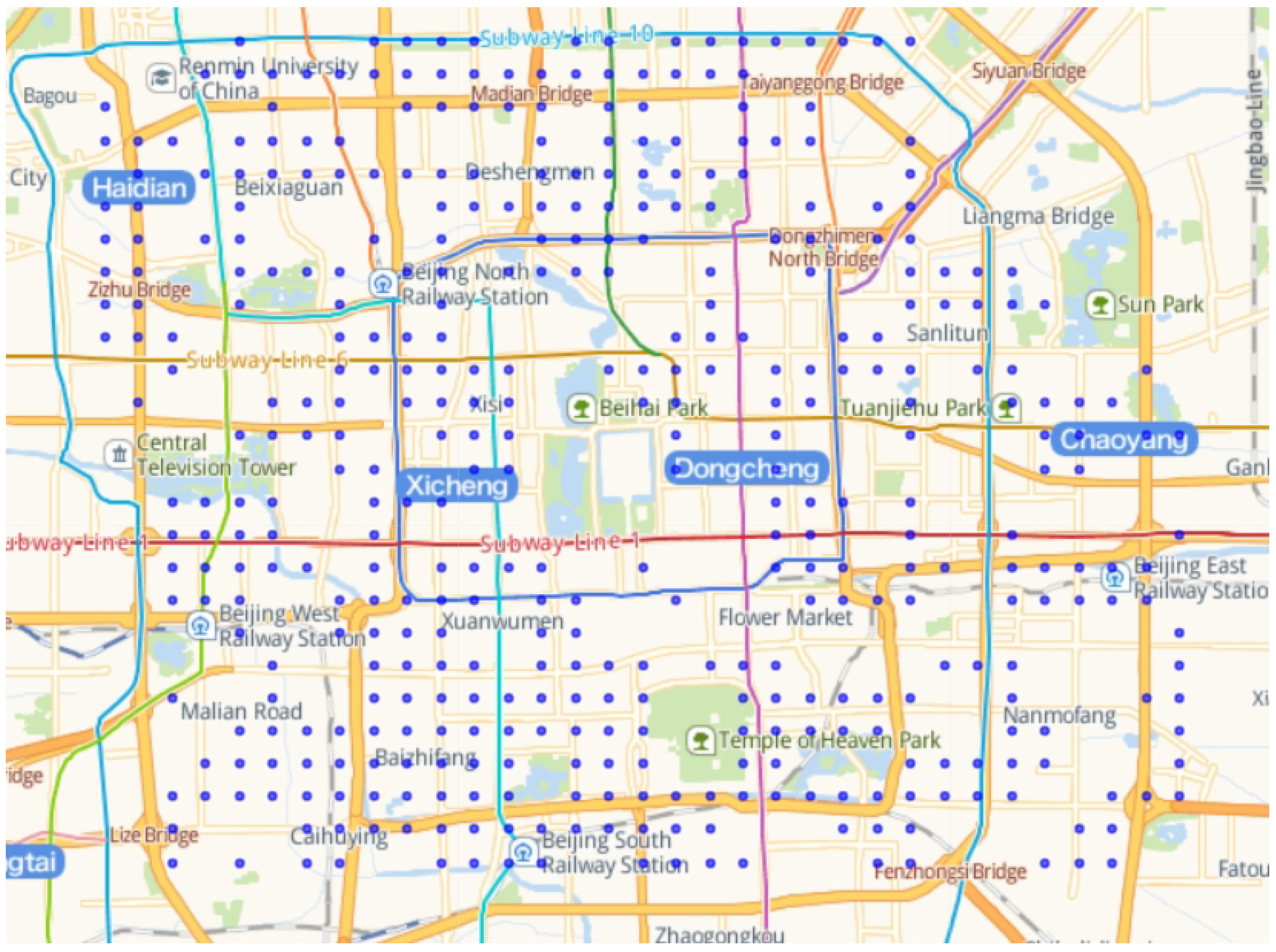

2.4. Real-World Data

3. Results

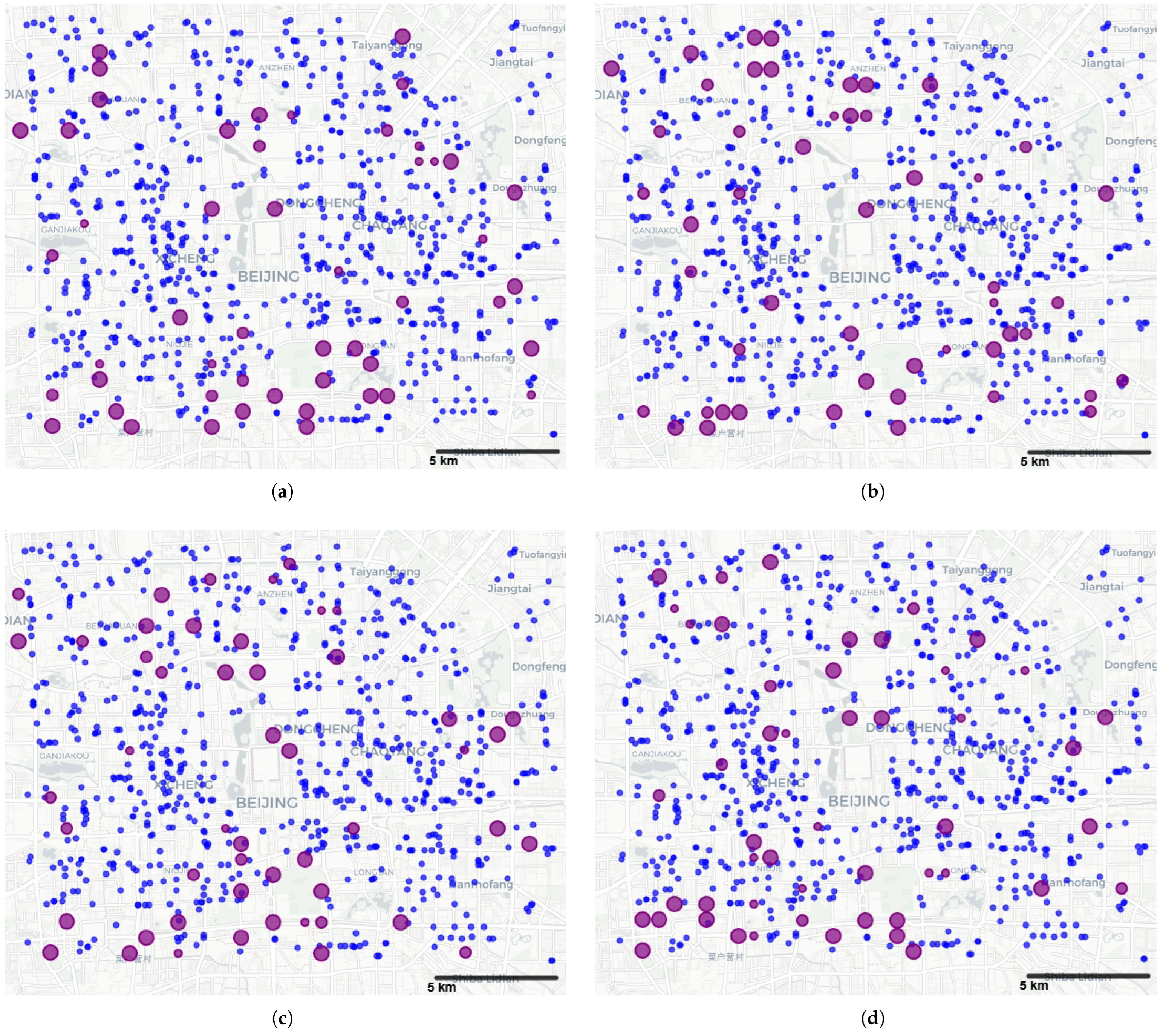

3.1. Optimization Results

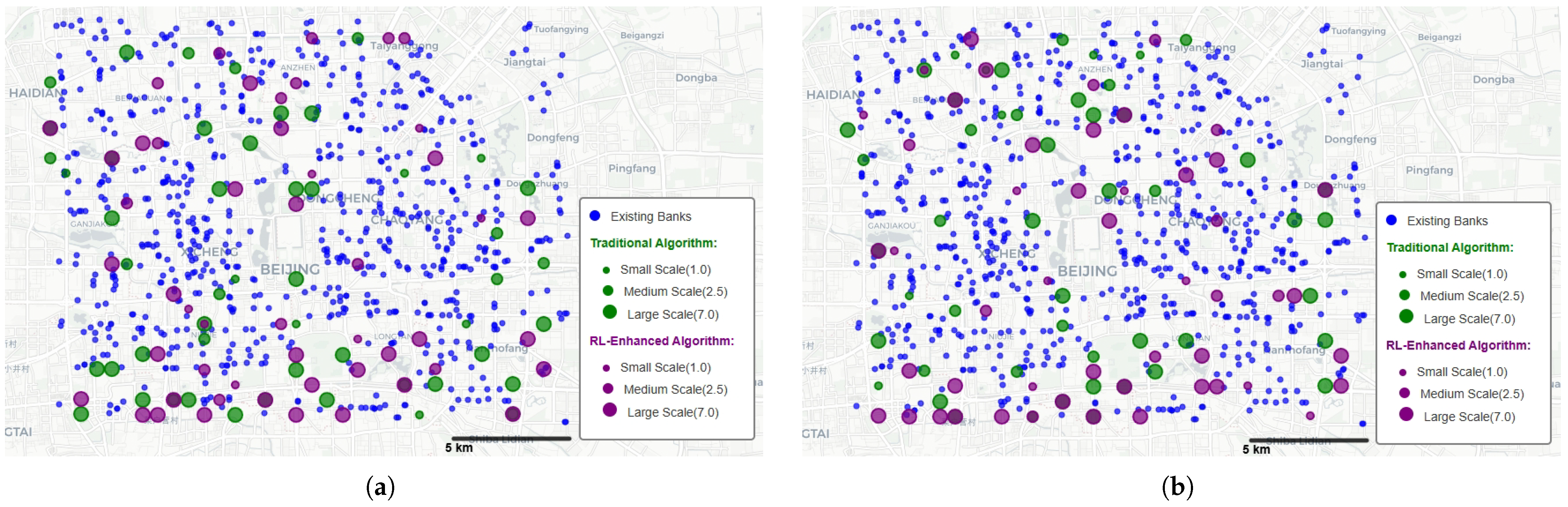

3.2. Comparison with Traditional Genetic Algorithm

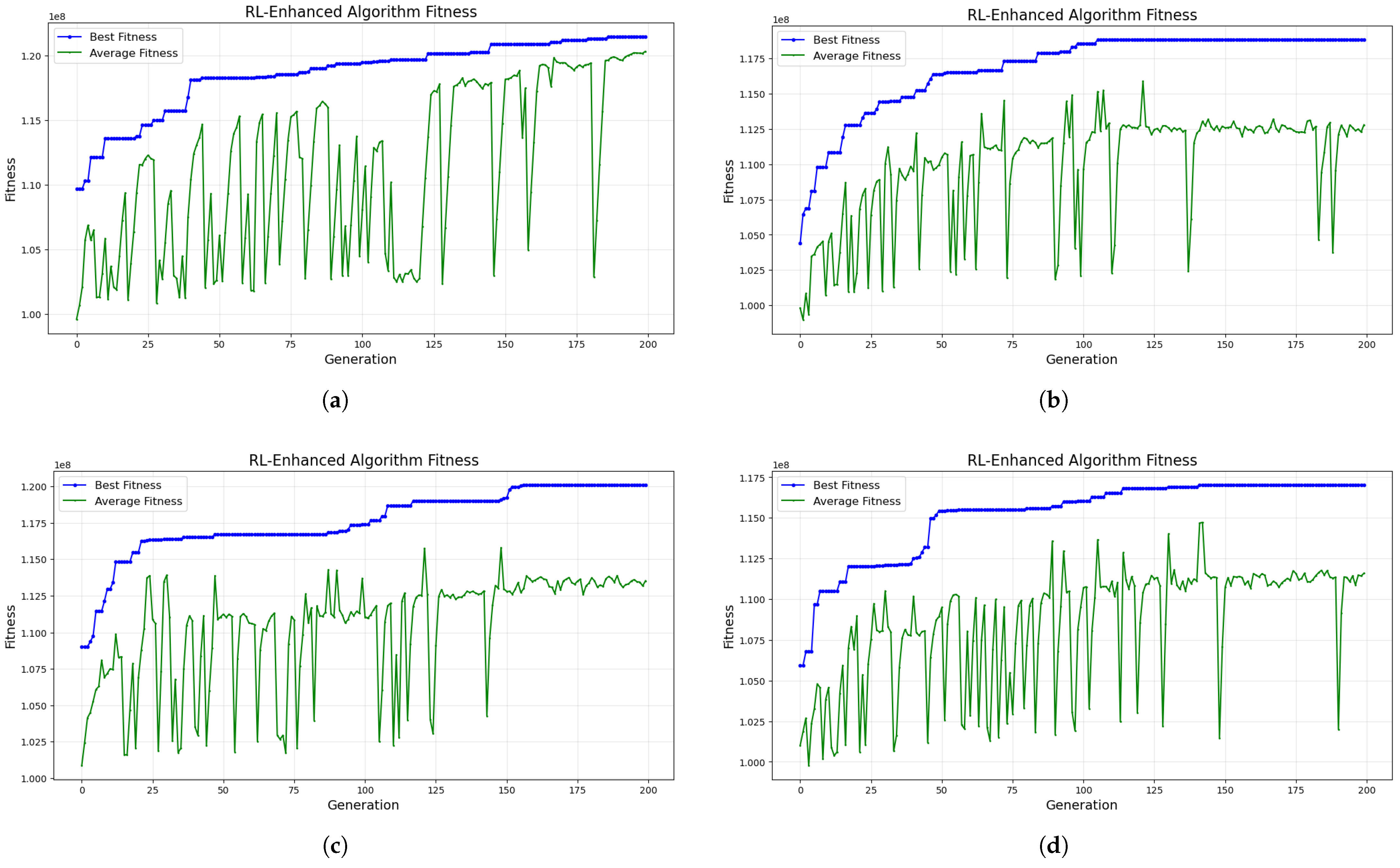

- (1)

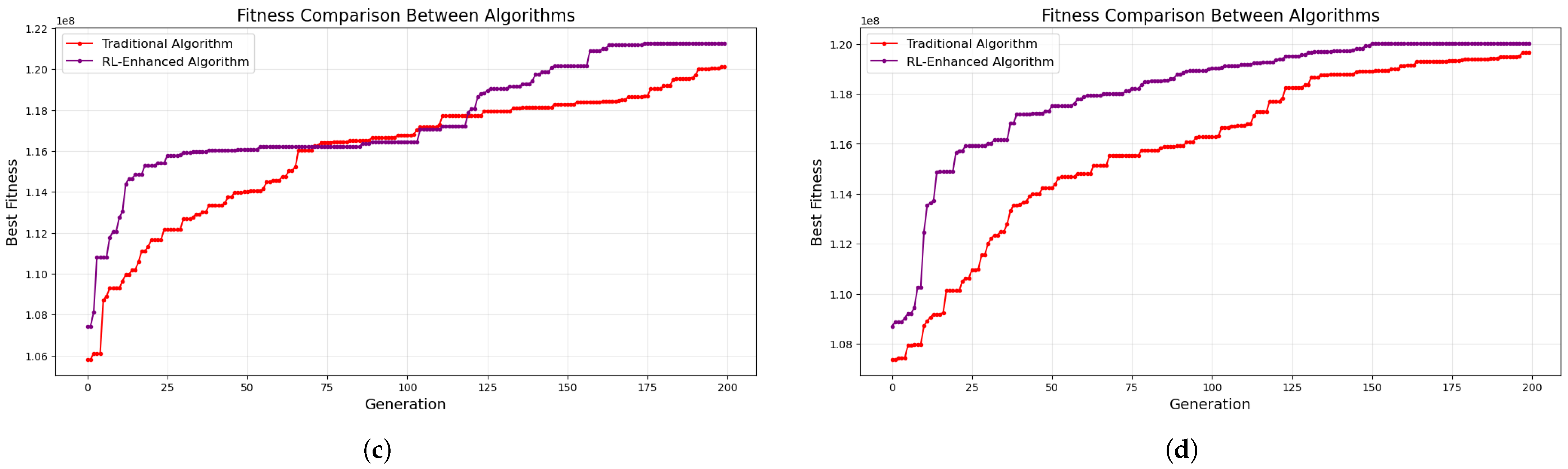

- The experimental results clearly demonstrate different convergence patterns between the two algorithmic approaches. The RL-enhanced genetic algorithm exhibits extraordinary rapid initial convergence, achieving substantial fitness improvements within the first 20–30 generations, as evidenced in both comparative trials. This rapid early-stage convergence represents a dramatic acceleration compared to the traditional algorithm, which follows a more gradual, linear progression throughout the evolutionary process. The RL-enhanced algorithm’s ability to quickly identify and exploit high-quality solution regions suggests superior search space navigation capabilities, likely attributable to its reinforcement learning-guided operator selection mechanism that adaptively chooses the most effective evolutionary operations based on current population states.

- (2) Both algorithms reach comparable final fitness values, indicating that neither approach suffers from fundamental optimization limitations. However, the paths to these solutions differ substantially. The traditional genetic algorithm follows a steady, incremental trajectory with consistent but relatively modest fitness gains per generation. In contrast, the RL-enhanced algorithm achieves rapid initial gains followed by a stabilization period, suggesting efficient early exploration followed by focused exploitation of promising solution neighborhoods. The RL-enhanced approach can therefore reach near-optimal solutions in significantly fewer generations, which is a substantial gain in computational efficiency.

- (3) The facility location results reveal differences in the spatial distribution strategies of the two algorithms. Both approaches avoid highly competitive regions dominated by existing bank facilities, demonstrating effective competitive avoidance. However, subtle differences emerge in the specific locations selected and in the distribution of facility scales. The traditional algorithm tends to produce more conservative positioning with relatively uniform scales, while the RL-enhanced algorithm clusters larger-scale facilities in regions with favorable demand-to-competition ratios. These differences suggest that the RL-enhanced algorithm’s adaptive decision-making enables more refined spatial optimization strategies.

- (4) The comparative trials show consistent performance patterns across runs for both algorithms. The traditional genetic algorithm maintains its characteristic smooth, monotonic progression in both trials, indicating high algorithmic stability and predictable behavior. The RL-enhanced algorithm reproduces its rapid convergence pattern across trials, suggesting robust performance despite its more complex internal mechanisms. This consistency is noteworthy given the stochastic nature of the reinforcement learning components, and it indicates that the algorithm’s learning mechanisms are sufficiently stable for practical applications.

- (5) From a computational efficiency perspective, the RL-enhanced algorithm has a clear advantage. Because it converges rapidly to near-optimal solutions, satisfactory results can be obtained in substantially fewer generations than the traditional approach requires. In practical terms, this reduces computational time and resource requirements, making the RL-enhanced algorithm attractive for real-time or resource-constrained optimization scenarios. The traditional algorithm eventually achieves comparable results but needs longer execution times to reach equivalent solution quality.

- (6) The fitness evolution curves reveal fundamentally different exploration-exploitation strategies. The traditional algorithm maintains a constant exploration-exploitation balance throughout the evolutionary process, resulting in steady but gradual improvement. The RL-enhanced algorithm follows an adaptive strategy: intensive early exploration leads to rapid discovery of high-quality solution regions, followed by focused exploitation to refine these solutions. This behavior suggests a form of meta-optimization, in which the algorithm learns not only about the problem landscape but also about which search strategies suit different evolutionary phases.
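One simple way to quantify the convergence-speed comparison made in the points above is to count the generations needed to reach a given fraction of the final fitness. The helper below is a sketch under stated assumptions: fitness is positive and maximized, `history` holds the best-so-far fitness per generation, and the function name and the 95% threshold are illustrative choices. The two curves in the usage note are synthetic, not results from the study.

```python
def generations_to_reach(history, fraction=0.95):
    """Index of the first generation whose best fitness reaches `fraction` of the final value.

    Assumes a maximization problem with positive fitness values and a
    best-so-far curve (one entry per generation).
    """
    if not history:
        raise ValueError("history is empty")
    target = fraction * history[-1]
    for gen, value in enumerate(history):
        if value >= target:
            return gen
    return len(history) - 1
```

For the synthetic curves `[10, 60, 85, 95, 98, 99, 100, 100]` and `[10, 25, 40, 55, 70, 85, 95, 100]`, the helper returns 3 and 6 respectively, which captures the "fast early gains versus steady progression" contrast in a single number per run.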

4. Discussion

Limitations of the Study

- (1) The integration of reinforcement learning components makes parameter tuning and algorithmic configuration considerably more complex. The RL-GA framework requires careful calibration of multiple parameters, including the learning rate, the discount factor, the exploration rate decay schedule, and the weights of the multi-component reward function. The performance of the algorithm is sensitive to these choices, and a suboptimal configuration can lead to poor convergence or instability in the learning process. Furthermore, the experience replay buffer size and the batch learning mechanism require domain-specific tuning, making the algorithm less plug-and-play than traditional genetic algorithms. The black-box nature of the reinforcement learning decision-making process also poses challenges for transparency and debugging, particularly when the algorithm behaves unexpectedly during optimization.

- (2) Unlike traditional genetic algorithms, which operate on fundamental evolutionary principles, the RL-GA’s performance depends on the quality and representativeness of the scenarios encountered during the learning phase. The Q-learning component learns policies from the specific problem instances and population states experienced during training, and these policies may not generalize well to significantly different problem contexts or market conditions. This dependency raises concerns about the algorithm’s robustness when it is applied to new geographical areas with different competitive landscapes, demographic patterns, or facility cost structures. The algorithm may require substantial retraining or adaptation when transferred to other application domains, potentially limiting its immediate applicability across diverse real-world scenarios.

- (3) While the RL-GA converges in fewer generations, the reinforcement learning components add computational overhead. State encoding, Q-value updates, policy learning, and experience replay are generally efficient, but their cost may become significant for extremely large-scale problems involving thousands of candidate locations or complex state representations. The memory needed to maintain the experience replay buffer and the cost of batch learning updates could offset some of the efficiency gained through faster convergence, particularly in resource-constrained computing environments.

- (4) The scope of the current study in characterizing bank facility locations is constrained by data availability across multiple dimensions. While our RL-GA model demonstrates adaptive capabilities, the underlying mathematical formulation still focuses primarily on competitive dynamics and basic operational costs. In reality, bank branch location decisions involve complex interactions among socioeconomic factors, demographic characteristics, accessibility considerations, market potential indicators, and infrastructure development patterns [59,60,61]. The RL-GA’s learning mechanisms could potentially incorporate these additional factors through enhanced state representations and reward function engineering, but such extensions would require comprehensive multi-dimensional datasets that are currently unavailable. The algorithm’s ability to learn effective strategies is inherently limited by the quality and completeness of the input data and problem formulation.

- (5) Although the RL-GA framework is more adaptable than traditional approaches, the current model still addresses competitive influences mainly through locational and scale factors. The competitive landscape of the financial services sector has many further dimensions, including service quality differentiation, brand positioning, customer loyalty programs, pricing strategies for various financial products, and dynamic market responses to competitor actions [62,63,64]. While the reinforcement learning component could in principle adapt to more complex competitive dynamics through enhanced reward signal design, the current implementation does not capture the full spectrum of strategic interactions that characterize real-world banking competition. Future research could explore multi-agent reinforcement learning frameworks that explicitly model competitor responses and strategic interactions.

- (6) The stochastic nature of both the genetic algorithm components and the reinforcement learning mechanisms raises questions about convergence guarantees and solution stability. While the empirical results show consistent performance across multiple trials, the theoretical foundations for convergence of the hybrid RL-GA framework are less established than those for traditional evolutionary algorithms. The exploration-exploitation balance maintained by the ε-greedy strategy, while generally beneficial for solution quality, can occasionally cause temporary performance degradation, which may be unacceptable in practical applications that require monotonic improvement.
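The tuning burden described in the limitations above becomes more concrete when the parameters are seen side by side. The following tabular Q-learning operator selector is a minimal sketch and not the authors' implementation: the class name, the state labels, the default values and the multiplicative decay rule are all assumptions made for illustration. It exposes the learning rate (`alpha`), discount factor (`gamma`), exploration rate and its decay schedule, and the replay buffer size as explicit arguments.

```python
import random
from collections import defaultdict, deque


class OperatorSelector:
    """Tabular Q-learning over discrete states; actions are indices of GA operators.

    All default values are illustrative assumptions, not calibrated settings.
    """

    def __init__(self, n_actions, alpha=0.1, gamma=0.9, epsilon=1.0,
                 epsilon_decay=0.99, epsilon_min=0.05, buffer_size=1000, seed=0):
        self.n_actions = n_actions
        self.alpha, self.gamma = alpha, gamma
        self.epsilon = epsilon
        self.epsilon_decay, self.epsilon_min = epsilon_decay, epsilon_min
        self.q = defaultdict(lambda: [0.0] * n_actions)  # Q-values per state
        self.buffer = deque(maxlen=buffer_size)          # experience replay buffer
        self.rng = random.Random(seed)

    def select(self, state):
        """Epsilon-greedy choice: explore with probability epsilon, otherwise exploit."""
        if self.rng.random() < self.epsilon:
            return self.rng.randrange(self.n_actions)
        values = self.q[state]
        return max(range(self.n_actions), key=lambda a: values[a])

    def remember(self, state, action, reward, next_state):
        """Store one transition for later batch learning."""
        self.buffer.append((state, action, reward, next_state))

    def replay(self, batch_size=16):
        """Sample stored transitions, apply the Q-learning update, then decay epsilon."""
        batch = self.rng.sample(list(self.buffer), min(batch_size, len(self.buffer)))
        for s, a, r, s2 in batch:
            target = r + self.gamma * max(self.q[s2])
            self.q[s][a] += self.alpha * (target - self.q[s][a])
        self.epsilon = max(self.epsilon_min, self.epsilon * self.epsilon_decay)
```

Because `select` still explores with probability `epsilon`, an occasional poorly suited operator is chosen even late in a run, which is the source of the temporary degradation mentioned in point (6); keeping an elite individual in the GA is one common way to soften that effect.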

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Owen, S.H.; Daskin, M.S. Strategic facility location: A review. Eur. J. Oper. Res. 1998, 111, 423–447.

- Drezner, T.; Drezner, Z. The gravity multiple server location problem. Comput. Oper. Res. 2011, 38, 694–701.

- Daskin, M.S.; Stern, E.H. A hierarchical objective set covering model for emergency medical service vehicle deployment. Transp. Sci. 1981, 15, 137–152.

- Daskin, M.S. A maximum expected covering location model: Formulation, properties and heuristic solution. Transp. Sci. 1983, 17, 48–70.

- Min, H.; Melachrinoudis, E. The three-hierarchical location-allocation of banking facilities with risk and uncertainty. Int. Trans. Oper. Res. 2001, 8, 381–401.

- Miliotis, P.; Dimopoulou, M.; Giannikos, I. A hierarchical location model for locating bank branches in a competitive environment. Int. Trans. Oper. Res. 2002, 9, 549–565.

- Arabani, A.B.; Farahani, R.Z. Facility location dynamics: An overview of classifications and applications. Comput. Ind. Eng. 2012, 62, 408–420.

- Gendreau, M.; Laporte, G.; Semet, F. A dynamic model and parallel tabu search heuristic for real-time ambulance relocation. Parallel Comput. 2001, 27, 1641–1653.

- Başar, A.; Çatay, B.; Ünlüyurt, T. A multi-period double coverage approach for locating the emergency medical service stations in Istanbul. J. Oper. Res. Soc. 2011, 62, 627–637.

- Brimberg, J.; Drezner, Z. A new heuristic for solving the p-median problem in the plane. Comput. Oper. Res. 2013, 40, 427–437.

- Alexandris, G.; Giannikos, I. A new model for maximal coverage exploiting GIS capabilities. Eur. J. Oper. Res. 2010, 202, 328–338.

- Wang, Q.; Batta, R.; Rump, C.M. Algorithms for a facility location problem with stochastic customer demand and immobile servers. Ann. Oper. Res. 2002, 111, 17–34.

- Wang, Q.; Batta, R.; Bhadury, J.; Rump, C.M. Budget constrained location problem with opening and closing of facilities. Comput. Oper. Res. 2003, 30, 2047–2069.

- Hwang, H.S. Design of supply-chain logistics system considering service level. Comput. Ind. Eng. 2002, 43, 283–297.

- Curtin, K.M.; Hayslett-McCall, K.; Qiu, F. Determining optimal police patrol areas with maximal covering and backup covering location models. Netw. Spat. Econ. 2010, 10, 125–145.

- Murray, A.T.; Tong, D.; Kim, K. Enhancing classic coverage location models. Int. Reg. Sci. Rev. 2010, 33, 115–133.

- Baron, O.; Berman, O.; Kim, S.; Krass, D. Ensuring feasibility in location problems with stochastic demands and congestion. IIE Trans. 2009, 41, 467–481.

- Christaller, W.; Baskin, C.W. Central Places in Southern Germany; Prentice-Hall: Hoboken, NJ, USA, 1966.

- Lösch, A.; Woglom, W.H.; Stolper, W.F. The Economics of Location; Yale University Press: New Haven, CT, USA, 1954.

- Zeller, R.E.; Achabal, D.D.; Brown, L.A. Market penetration and locational conflict in franchise systems. Decis. Sci. 1980, 11, 58–80.

- Ghosh, A.; Craig, C.S. An approach to determining optimal locations for new services. J. Mark. Res. 1986, 23, 354–362.

- Drezner, T.; Drezner, Z.; Kalczynski, P. A cover-based competitive location model. J. Oper. Res. Soc. 2011, 62, 100–113.

- Drezner, T.; Drezner, Z. Modelling lost demand in competitive facility location. J. Oper. Res. Soc. 2012, 63, 201–206.

- Drezner, T.; Drezner, Z.; Kalczynski, P. A leader–follower model for discrete competitive facility location. Comput. Oper. Res. 2015, 64, 51–59.

- Xia, L.; Yin, W.; Dong, J.; Wu, T.; Xie, M.; Zhao, Y. A hybrid nested partitions algorithm for banking facility location problems. IEEE Trans. Autom. Sci. Eng. 2010, 7, 654–658.

- Zhang, L.; Rushton, G. Optimizing the size and locations of facilities in competitive multi-site service systems. Comput. Oper. Res. 2008, 35, 327–338.

- Huff, D.L. Defining and estimating a trading area. J. Mark. 1964, 28, 34–38.

- Huff, D.L. A programmed solution for approximating an optimum retail location. Land Econ. 1966, 42, 293–303.

- Nakanishi, M.; Cooper, L. Parameter Estimate for Multiplicative Interactive Choice Model: Least Squares Approach. J. Mark. Res. 1974, 11, 303–311.

- Jain, A.K. Evaluating the competitive environment in retailing using multiplicative competitive interactive models. In Research in Marketing; JAI Press: London, UK, 1979.

- Prosperi, D.C.; Schuler, H.J. An alternate method to identify rules of spatial choice. Geogr. Perspect. 1976, 38, 33–38.

- Schuler, H.J. Grocery shopping choices: Individual preferences based on store attractiveness and distance. Environ. Behav. 1981, 13, 331–347.

- Timmermans, H. Multipurpose trips and individual choice behaviour: An analysis using experimental design data. In Behavioural Modelling in Geography and Planning; Croom Helm: London, UK, 1988; pp. 356–367.

- Bell, D.R.; Ho, T.H.; Tang, C.S. Determining where to shop: Fixed and variable costs of shopping. J. Mark. Res. 1998, 35, 352–369.

- Timmermans, H. Consumer choice of shopping centre: An information integration approach. Reg. Stud. 1982, 16, 171–182.

- Downs, R.M. The cognitive structure of an urban shopping center. Environ. Behav. 1970, 2, 13–39.

- Drezner, T. Derived attractiveness of shopping malls. IMA J. Manag. Math. 2006, 17, 349–358.

- Plastria, F.; Carrizosa, E. Optimal location and design of a competitive facility. Math. Program. 2004, 100, 247–265.

- Davies, R. Evaluation of retail store attributes and sales performance. Eur. J. Mark. 1973, 7, 89–102.

- Pastor, J.T. Bicriterion programs and managerial location decisions: Application to the banking sector. J. Oper. Res. Soc. 1994, 45, 1351–1362.

- Leonardi, G. Optimum Facility Location by Accessibility Maximizing. Environ. Plan. A Econ. Space 1978, 10, 1287–1305.

- Holland, J.H. Adaptation in Natural and Artificial Systems: An Introductory Analysis with Applications to Biology, Control, and Artificial Intelligence; MIT Press: Cambridge, MA, USA, 1992.

- Sachdeva, A.; Singh, B.; Prasad, R.; Goel, N.; Mondal, R.; Munjal, J.; Bhatnagar, A.; Dahiya, M. Metaheuristic for hub-spoke facility location problem: Application to Indian e-commerce industry. arXiv 2022, arXiv:2212.08299.

- Lazari, V.; Chassiakos, A. Multi-objective optimization of electric vehicle charging station deployment using genetic algorithms. Appl. Sci. 2023, 13, 4867.

- Salami, A.; Afshar-Nadjafi, B.; Amiri, M. A two-stage optimization approach for healthcare facility location-allocation problems with service delivering based on genetic algorithm. Int. J. Public Health 2023, 68, 1605015.

- Goldberg, D.E.; Holland, J.H. Genetic Algorithms in Search, Optimization, and Machine Learning; Addison-Wesley: Reading, MA, USA, 1989; Volume 102, pp. 36–58.

- Reeves, C.R. Modern Heuristic Techniques for Combinatorial Problems; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1993.

- Deb, K.; Pratap, A.; Agarwal, S.; Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 2002, 6, 182–197.

- Grefenstette, J.J. Optimization of control parameters for genetic algorithms. IEEE Trans. Syst. Man Cybern. 1986, 16, 122–128.

- Goldberg, D.E. Genetic Algorithms in Search, Optimization, and Machine Learning; Addison-Wesley: Reading, MA, USA, 1989; p. 36.

- Michalewicz, Z. Genetic Algorithms + Data Structures = Evolution Programs; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2013.

- Zhong, Y.; Wang, S.; Liang, H.; Wang, Z.; Zhang, X.; Chen, X.; Su, C. ReCovNet: Reinforcement learning with covering information for solving maximal coverage billboards location problem. Int. J. Appl. Earth Obs. Geoinf. 2024, 128, 103710.

- Zhang, W.; Gu, X.; Tang, L.; Yin, Y.; Liu, D.; Zhang, Y. Application of machine learning, deep learning and optimization algorithms in geoengineering and geoscience: Comprehensive review and future challenge. Gondwana Res. 2022, 109, 1–17.

- Kovacs-Györi, A.; Ristea, A.; Havas, C.; Mehaffy, M.; Hochmair, H.H.; Resch, B.; Juhasz, L.; Lehner, A.; Ramasubramanian, L.; Blaschke, T. Opportunities and challenges of geospatial analysis for promoting urban livability in the era of big data and machine learning. ISPRS Int. J. Geo-Inf. 2020, 9, 752.

- Liang, H.; Wang, S.; Li, H.; Zhou, L.; Chen, H.; Zhang, X.; Chen, X. Sponet: Solve spatial optimization problem using deep reinforcement learning for urban spatial decision analysis. Int. J. Digit. Earth 2024, 17, 2299211.

- Su, H.; Zheng, Y.; Ding, J.; Jin, D.; Li, Y. Large-scale Urban Facility Location Selection with Knowledge-informed Reinforcement Learning. In Proceedings of the 32nd ACM International Conference on Advances in Geographic Information Systems, Atlanta, GA, USA, 29 October–1 November 2024; pp. 553–556.

- Bagga, P.S.; Delarue, A. Solving the quadratic assignment problem using deep reinforcement learning. arXiv 2023, arXiv:2310.01604.

- Wu, S.; Wang, J.; Jia, Y.; Yang, J.; Li, J. Planning and layout of tourism and leisure facilities based on POI big data and machine learning. PLoS ONE 2025, 20, e0298056.

- Chammas, G. Factors Affecting the Geographical Distribution of Commercial Banks in Lebanon: An Analysis of the Need for Further Branch Banking in Aley. Ph.D. Thesis, Notre Dame University-Louaize, Zouk Mosbeh, Lebanon, 1997.

- Cinar, N.; Ahiska, S.S. A decision support model for bank branch location selection. Int. J. Mech. Ind. Sci. Eng. 2009, 3, 26–31.

- Pathak, S.; Liu, M.; Jato-Espino, D.; Zevenbergen, C. Social, economic and environmental assessment of urban sub-catchment flood risks using a multi-criteria approach: A case study in Mumbai City, India. J. Hydrol. 2020, 591, 125216.

- Dick, A.A. Market size, service quality, and competition in banking. J. Money Credit Bank. 2007, 39, 49–81.

- Degryse, H.; Kim, M.; Ongena, S. Microeconometrics of Banking: Methods, Applications, and Results; Oxford University Press: New York, NY, USA, 2009.

- Taherparvar, N.; Esmaeilpour, R.; Dostar, M. Customer knowledge management, innovation capability and business performance: A case study of the banking industry. J. Knowl. Manag. 2014, 18, 591–610.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, Z.; Wang, S.; Su, C.; Liang, H. Multi-Size Facility Allocation Under Competition: A Model with Competitive Decay and Reinforcement Learning-Enhanced Genetic Algorithm. ISPRS Int. J. Geo-Inf. 2025, 14, 347. https://doi.org/10.3390/ijgi14090347
