Machine Learning-Based Identification of Key Predictors for Lightning Events in the Third Pole Region

Abstract

1. Introduction

2. Data and Methodology

2.1. Lightning Observation

2.2. Numerical Model Setup

2.3. Machine Learning Models

2.4. Experimental Setup

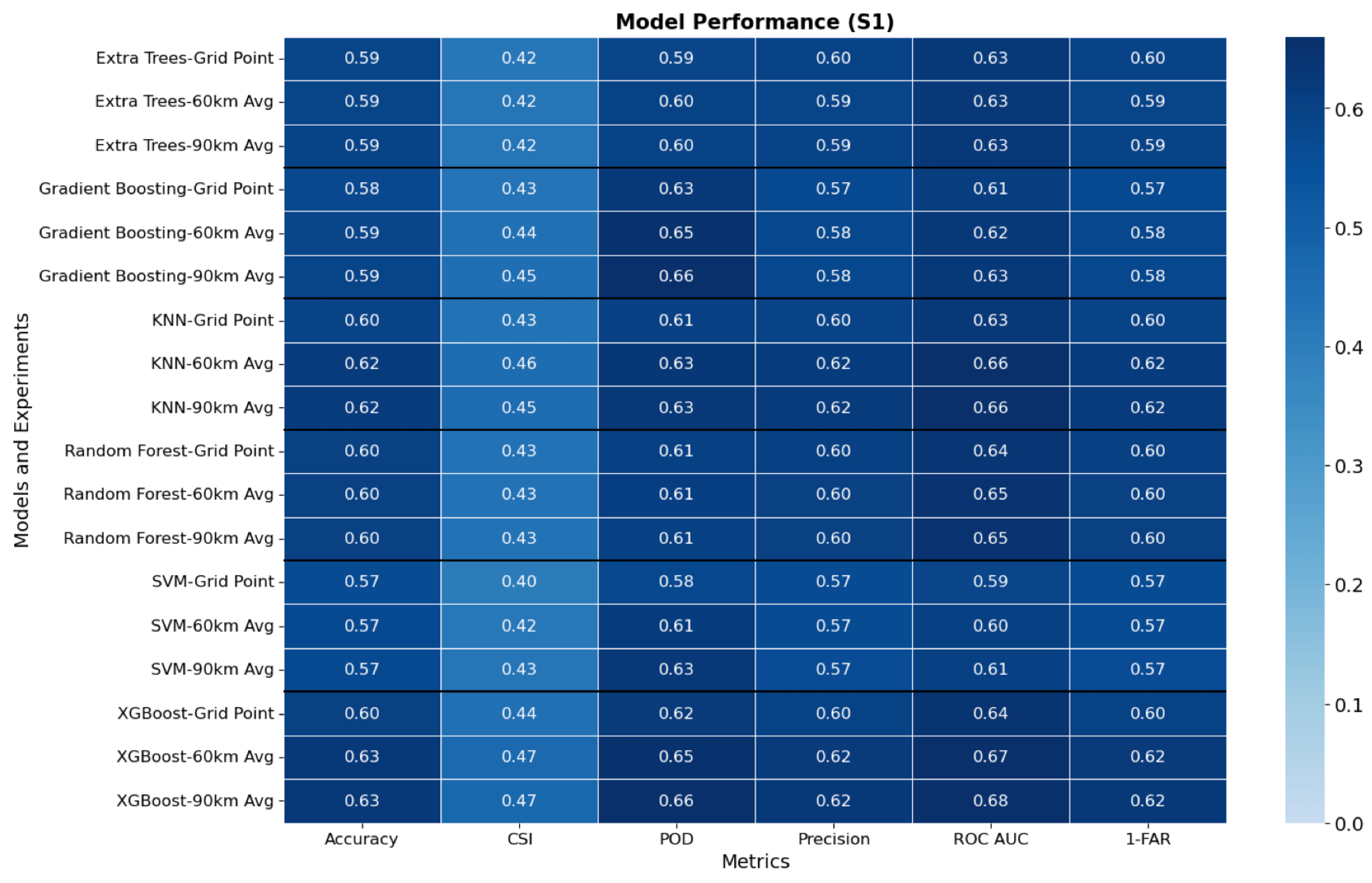

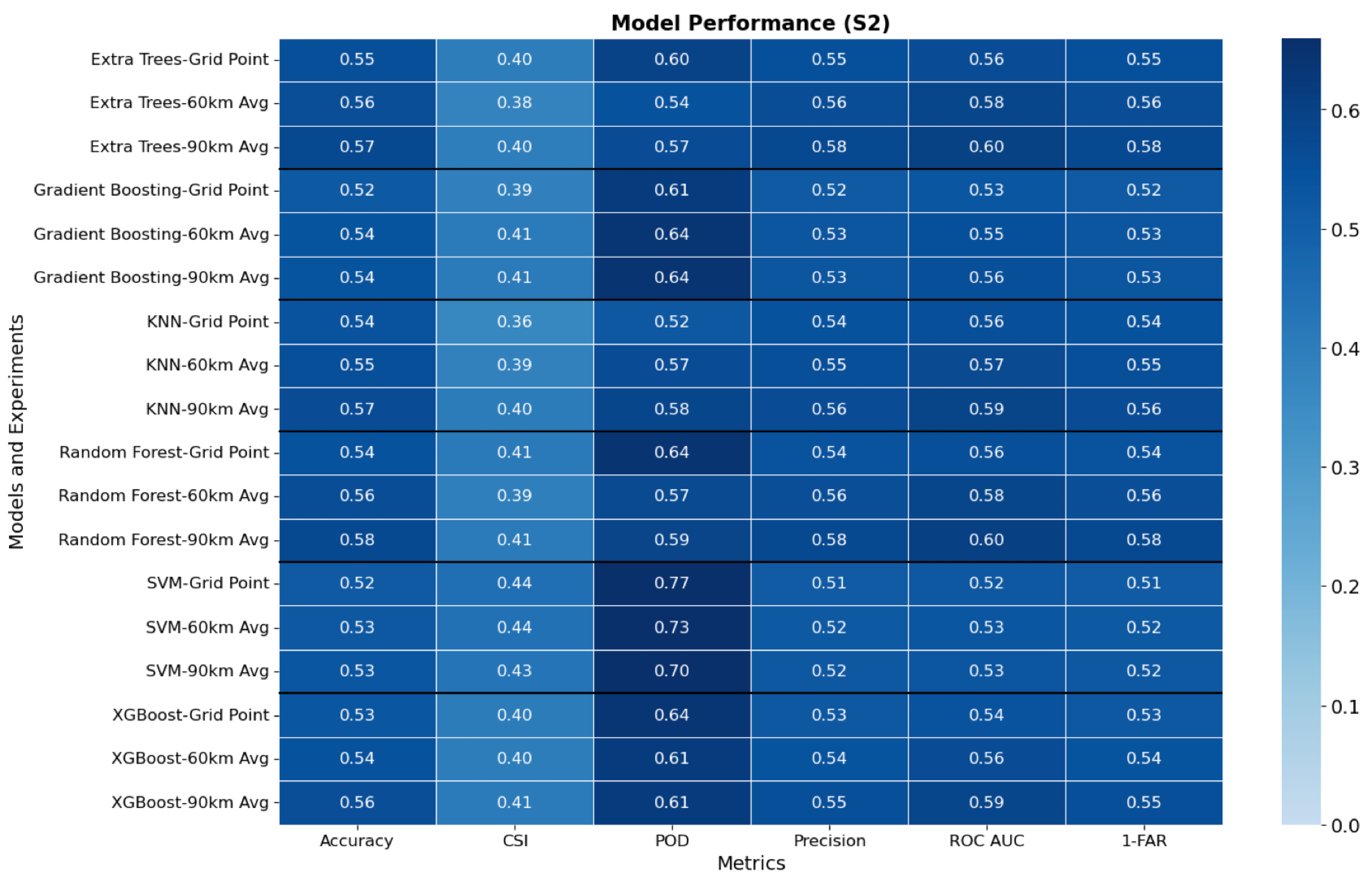

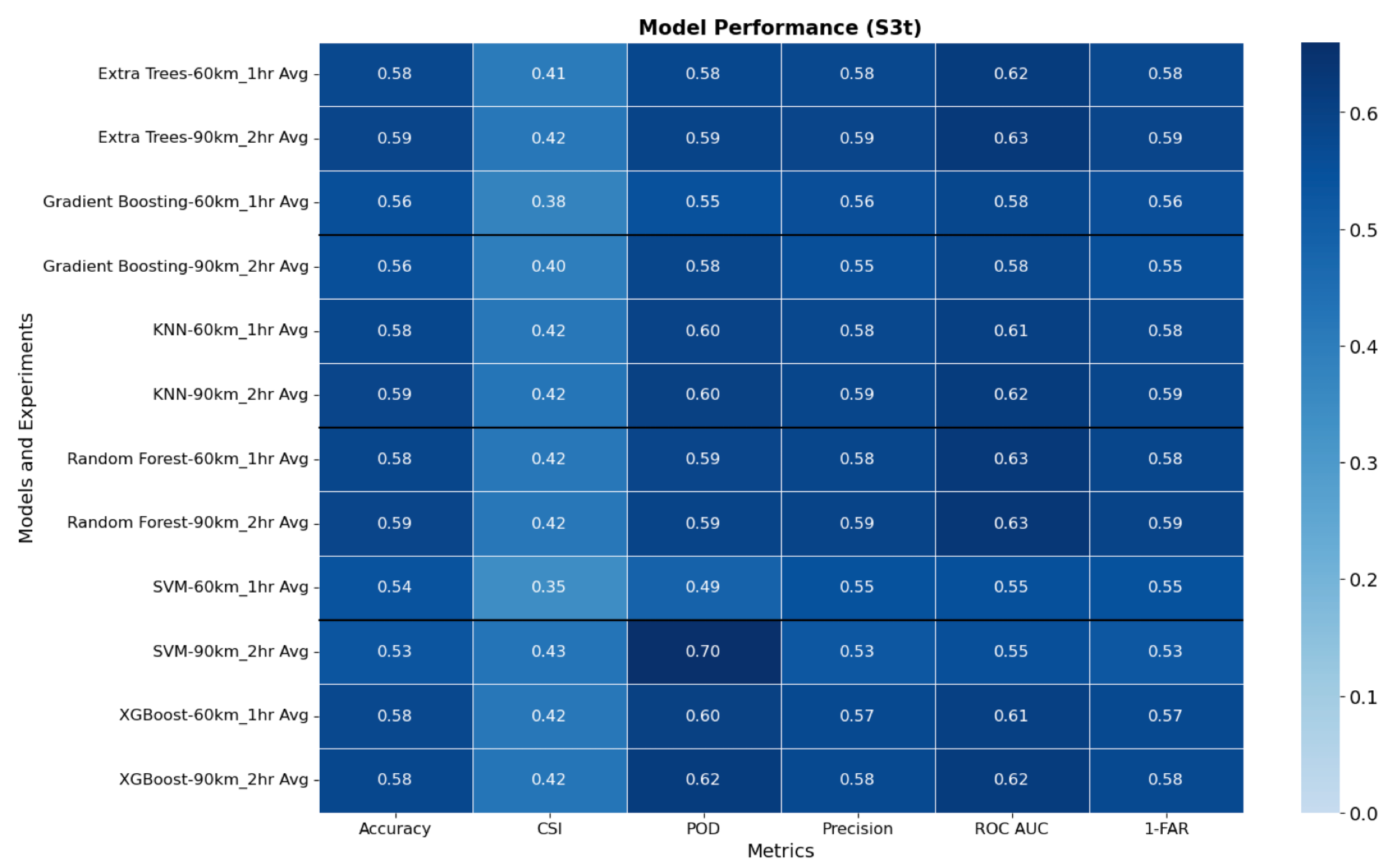

3. Results and Discussion

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ISS-LIS | International Space Station Lightning Imaging Sensor |
| WWLLN | World Wide Lightning Location Network |
| LPI | Lightning Potential Index |
| CP | CAPE times Precipitation |
| RH_850,500,300 | relative humidity at (850, 500, 300) hPa |
| vor_850,500,300 | vorticity at (850, 500, 300) hPa |
| clcm and clch | medium and high cloud cover |
| cin_ml | convective inhibition of mean surface layer parcel |
| lhfl_s and shfl_s | surface latent and sensible heat flux |
| qhfl_s | surface moisture flux |
| sob_s and thb_s | shortwave and longwave net flux at surface |
| tqc and tqi | total column integrated cloud water and ice |

Appendix A

| Model | Algorithm Type | Key Features | Strengths | Limitations |
|---|---|---|---|---|
| Random Forest | Ensemble (Bagging) | Builds multiple decision trees and combines their outputs (majority vote). | | |
| Extra Trees | Ensemble (Bagging) | Similar to Random Forest but uses randomized splits for trees. | | |
| Gradient Boosting | Ensemble (Boosting) | Combines weak learners sequentially to reduce errors iteratively. | | |
| XGBoost | Ensemble (Boosting) | Highly efficient implementation of gradient boosting with regularization. | | |
| K-Nearest Neighbors | Instance-Based | Assigns class labels based on the majority vote of neighbors (k nearest points). | | |
| SVM (Support Vector Machine) | Discriminative | Finds a hyperplane to separate classes with maximum margin (can use kernels for non-linear problems). | | |
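As an illustration of the instance-based entry in the table, the majority-vote rule of K-Nearest Neighbors can be sketched in a few lines of plain Python. This is a minimal sketch, not the study's implementation: the function name `knn_predict`, the Euclidean distance, and the sample values are all assumptions made for the example, and a library implementation would normally be used in practice.

```python
from collections import Counter
from math import dist


def knn_predict(train_points, train_labels, query, k=3):
    """Classify `query` by majority vote among its k nearest training points."""
    if not 1 <= k <= len(train_points):
        raise ValueError("k must be between 1 and the number of training points")
    # Sort training indices by Euclidean distance to the query point.
    order = sorted(range(len(train_points)),
                   key=lambda i: dist(train_points[i], query))
    # Count the labels of the k closest points and return the most common one.
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Hypothetical two-feature samples (illustrative values, not from the study).
X = [(0.1, 0.2), (0.2, 0.1), (0.9, 0.8), (0.8, 0.9), (0.85, 0.75)]
y = [0, 0, 1, 1, 1]  # 0 = no lightning, 1 = lightning

print(knn_predict(X, y, (0.7, 0.7), k=3))
print(knn_predict(X, y, (0.15, 0.15), k=3))
```

An odd `k` avoids tied votes in a two-class problem such as lightning versus no-lightning.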

| Experiment Set | Variables Included |
|---|---|
| S1 | CAPE, prec_con, LPI, RH_300, RH_500, RH_850, vor_300, vor_500, vor_850, T_300, T_500, T_850, sfc_pres, t_2m, clcm, clch, cin_ml, shfl_s, qhfl_s, lhfl_s, thb_s, sob_s, tqc, tqi, z |
| S2 | CP, LPI |
| S3 | CP, LPI, TRMM Climatology |
| S3t | Time-averaged LPI, time-averaged CP, TRMM Climatology |
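The experiment sets can be read as named predictor lists, with CP derived as the product of CAPE and precipitation (per the abbreviation list). The following is a minimal sketch under stated assumptions: the names `EXPERIMENT_SETS`, `add_cp`, `select_features`, and the key `TRMM_climatology` are hypothetical, the sample values are invented, and the choice of `prec_con` as the precipitation term in CP is an assumption rather than something the tables confirm.

```python
# Predictor lists copied from the experiment-set table.
EXPERIMENT_SETS = {
    "S1": [
        "CAPE", "prec_con", "LPI", "RH_300", "RH_500", "RH_850",
        "vor_300", "vor_500", "vor_850", "T_300", "T_500", "T_850",
        "sfc_pres", "t_2m", "clcm", "clch", "cin_ml", "shfl_s",
        "qhfl_s", "lhfl_s", "thb_s", "sob_s", "tqc", "tqi", "z",
    ],
    "S2": ["CP", "LPI"],
    "S3": ["CP", "LPI", "TRMM_climatology"],
}


def add_cp(sample, cape_key="CAPE", precip_key="prec_con"):
    """Return a copy of `sample` with CP = CAPE * precipitation added.

    Assumption: `prec_con` is the precipitation term that enters CP.
    """
    out = dict(sample)
    out["CP"] = sample[cape_key] * sample[precip_key]
    return out


def select_features(sample, experiment):
    """Return the predictor values for one experiment set, in table order."""
    return [sample[name] for name in EXPERIMENT_SETS[experiment]]


# Hypothetical sample (values and units are illustrative only).
sample = {"CAPE": 1200.0, "prec_con": 2.5, "LPI": 0.8, "TRMM_climatology": 4.0}
row = add_cp(sample)
print(select_features(row, "S2"))
print(select_features(row, "S3"))
```

Keeping the sets as plain lists makes it easy to train the same classifier on S1, S2, and S3 and compare the results.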

Names of Experiments

| Spatial Coverage | S1 | S2 | S3 | S3t |
|---|---|---|---|---|
| Grid Point (GP) | S1-GP | S2-GP | S3-GP | - |
| 60 km | S1-60 | S2-60 | S3-60 | S3t-60-1h |
| 90 km | S1-90 | S2-90 | S3-90 | S3t-90-2h |

| Metric | Formula | Interpretation |
|---|---|---|
| Accuracy | | Overall correctness of the model |
| Precision | | Measures how many predicted events actually happened |
| POD (Recall) | | Measures how well the model detects actual events |
| FAR | | Measures how many predicted events were false alarms |
| CSI | | Balance between false alarms and missed events |
| ROC-AUC | | Measures the model's ability to distinguish classes (lightning and no-lightning) |
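The metrics listed here can be computed from a 2×2 contingency table of hits (TP), false alarms (FP), misses (FN), and correct negatives (TN). The sketch below uses the standard forecast-verification definitions, which are assumed (not confirmed by the table) to match the ones used in the study; in particular, FAR is taken as the false alarm ratio FP / (TP + FP), consistent with the interpretation given above. The function names and sample labels are hypothetical.

```python
import math


def contingency_counts(y_true, y_pred):
    """Count hits, false alarms, misses, and correct negatives for 0/1 labels."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t and p:
            tp += 1
        elif p:
            fp += 1
        elif t:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def _ratio(num, den):
    """Divide, returning NaN when the denominator is zero."""
    return num / den if den else math.nan


def skill_scores(tp, fp, fn, tn):
    """Standard categorical scores (assumed definitions, see lead-in)."""
    return {
        "accuracy": _ratio(tp + tn, tp + fp + fn + tn),
        "precision": _ratio(tp, tp + fp),
        "pod": _ratio(tp, tp + fn),       # probability of detection (recall)
        "far": _ratio(fp, tp + fp),       # false alarm ratio
        "csi": _ratio(tp, tp + fp + fn),  # critical success index
    }


def roc_auc(y_true, scores):
    """ROC-AUC as the probability that a random event outscores a random
    non-event, counting ties as one half."""
    pos = [s for t, s in zip(y_true, scores) if t]
    neg = [s for t, s in zip(y_true, scores) if not t]
    if not pos or not neg:
        return math.nan
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels and scores (illustrative only).
y_true = [1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 1, 0]
y_score = [0.9, 0.4, 0.6, 0.2, 0.8, 0.1]

result = skill_scores(*contingency_counts(y_true, y_pred))
auc = roc_auc(y_true, y_score)
print(result, auc)
```

Because TN does not enter CSI, that score stays informative when non-events greatly outnumber events, whereas accuracy can be dominated by correct negatives.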


© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jadhav, H.; Singh, P.; Ahrens, B.; Schmidli, J. Machine Learning-Based Identification of Key Predictors for Lightning Events in the Third Pole Region. ISPRS Int. J. Geo-Inf. 2025, 14, 319. https://doi.org/10.3390/ijgi14080319
