Diffusion Model-Based Cartoon Style Transfer for Real-World 3D Scenes

Abstract

1. Introduction

1.1. Background and Challenges in Map Style Transfer

1.2. Our Work

2. Related Works

2.1. Image Style Transfer Methods

2.2. Map Style Transfer Methods

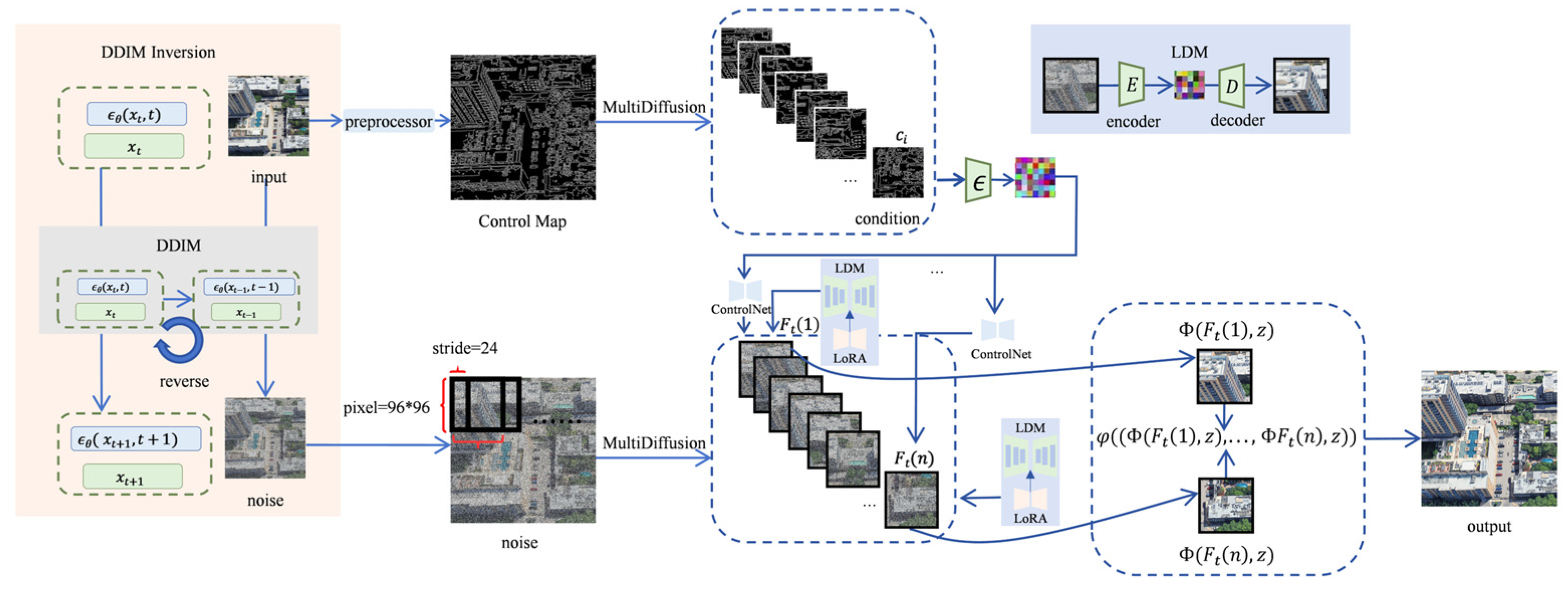

3. Method

3.1. Framework Development

3.1.1. Latent Diffusion Models

3.1.2. LoRA
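
As a brief reminder of what LoRA changes in a pretrained layer, the update can be written as below. This is the general formulation from the LoRA paper (Hu et al.), not a statement of the rank, scaling, or layers used in this work; the symbols $W_0$, $A$, $B$, $r$, and $\alpha$ follow that paper and are assumptions with respect to this article.

```latex
$$
h = W_0 x + \Delta W\, x = W_0 x + \frac{\alpha}{r}\, B A\, x,
\qquad B \in \mathbb{R}^{d \times r},\quad A \in \mathbb{R}^{r \times k},\quad r \ll \min(d, k)
$$
```

Only $A$ and $B$ are trained while $W_0$ stays frozen, so the number of trainable parameters per layer drops from $d \cdot k$ to $r\,(d + k)$.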

3.1.3. ControlNet Tile

3.1.4. MultiDiffusion
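
The core idea of MultiDiffusion, fusing the predictions made on overlapping windows by averaging them wherever the windows overlap, can be sketched in one dimension as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `fuse_windows`, the uniform weights, and the toy `denoise_window` callback are all hypothetical, and a real pipeline would apply the same averaging to 2D latent crops at every denoising step.

```python
def fuse_windows(length, window, stride, denoise_window):
    """Average per-window predictions over overlapping 1D windows.

    denoise_window(start, end) is a hypothetical callback that returns a
    list of (end - start) predicted values for that window.
    """
    if window > length:
        raise ValueError("window must not be longer than the signal")

    # Window start positions; add a final window so the tail is covered.
    starts = list(range(0, length - window + 1, stride))
    if starts[-1] + window < length:
        starts.append(length - window)

    total = [0.0] * length  # sum of predictions covering each position
    count = [0] * length    # number of windows covering each position
    for start in starts:
        prediction = denoise_window(start, start + window)
        for offset, value in enumerate(prediction):
            total[start + offset] += value
            count[start + offset] += 1

    # Uniform-weight average: each position is the mean of its predictions.
    return [t / c for t, c in zip(total, count)]


# Toy stand-in for a denoiser: every window predicts its own start index.
def toy_denoiser(start, end):
    return [float(start)] * (end - start)


fused = fuse_windows(length=6, window=4, stride=2, denoise_window=toy_denoiser)
```

With two windows covering positions 0 to 3 and 2 to 5, the overlapping positions 2 and 3 receive the mean of both predictions, which is what removes visible seams between tiles.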

3.1.5. Initial Noise Control

3.2. Intermediate Output Validation

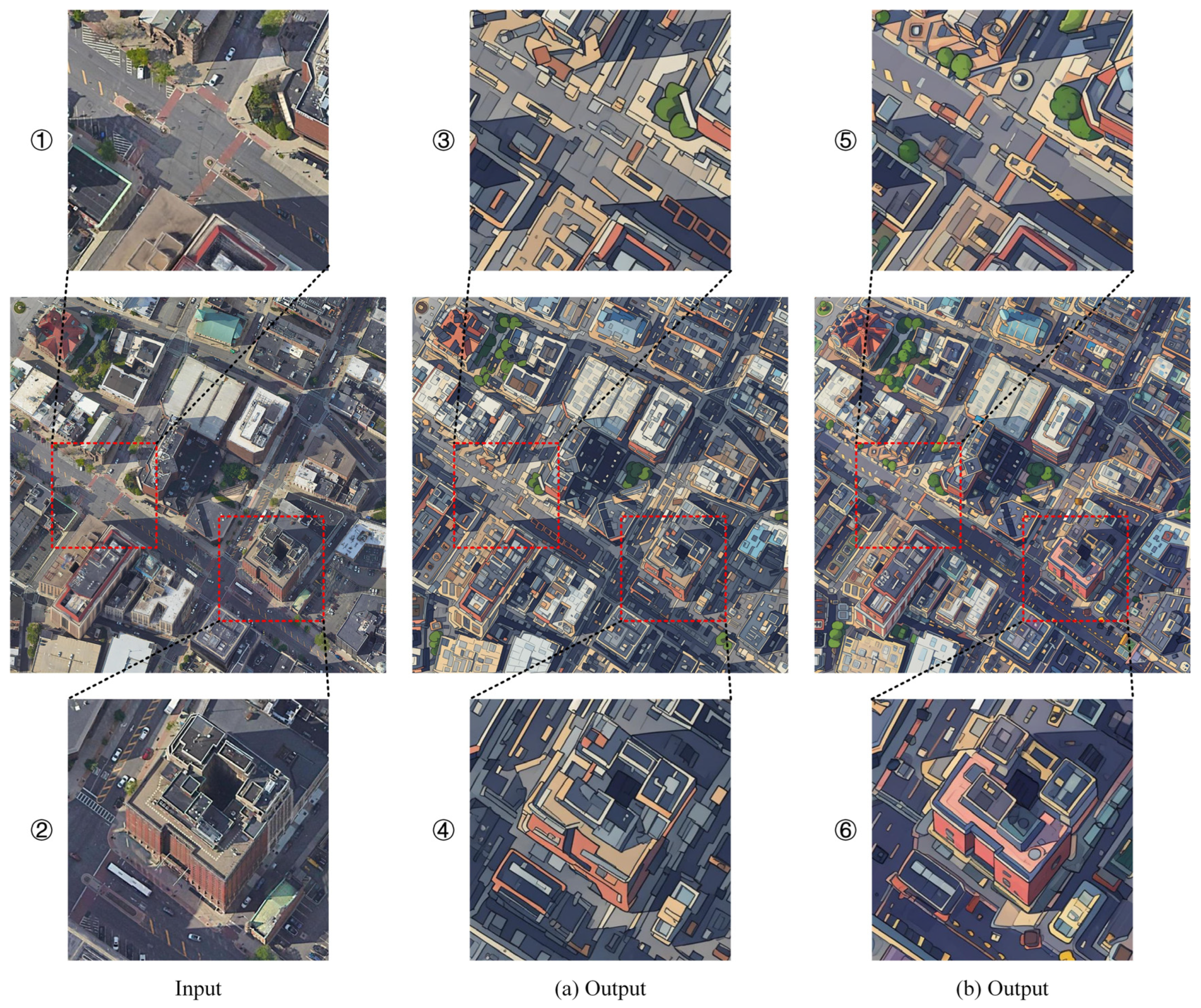

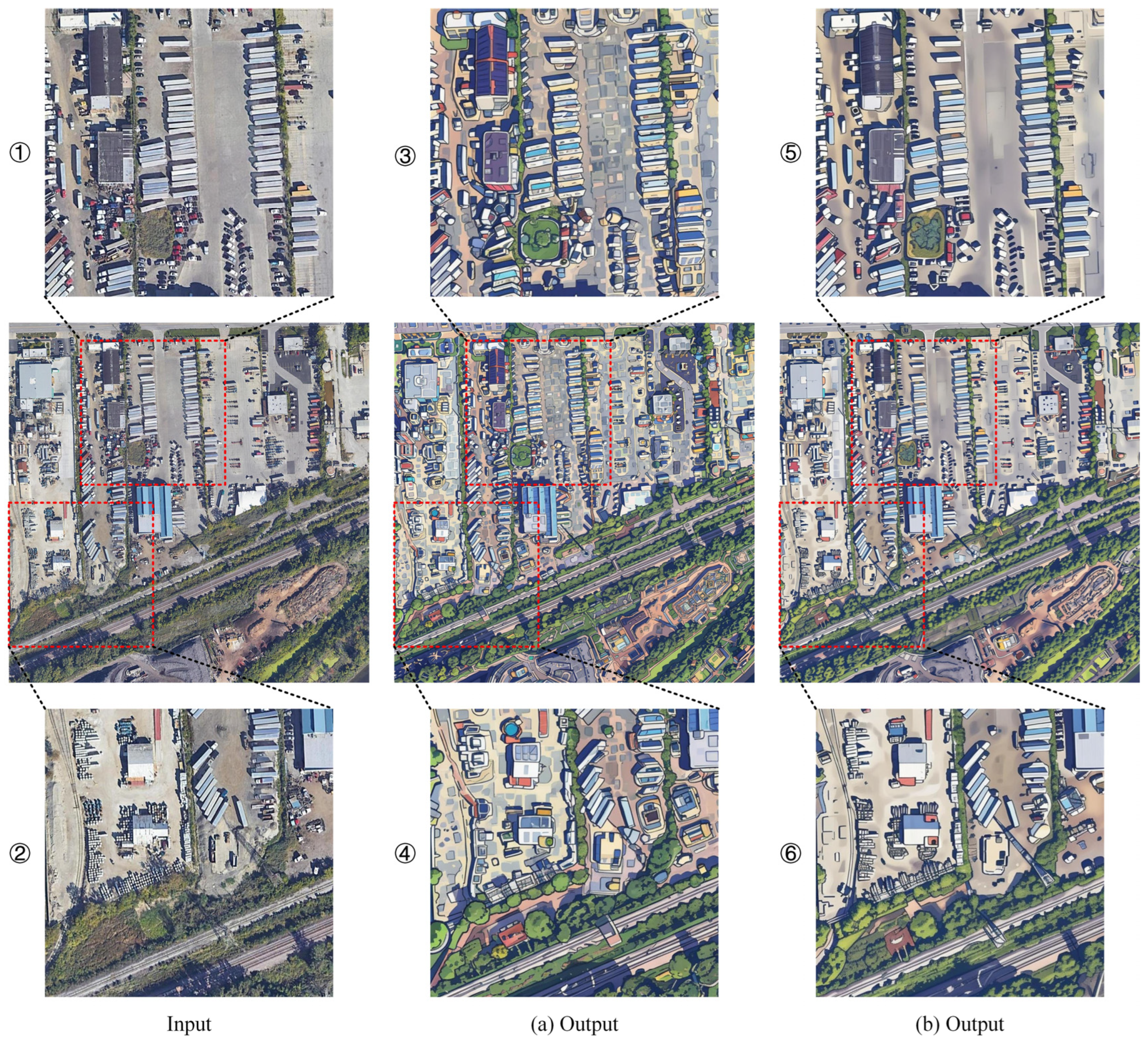

- (1) Our proposed method preserves the semantic content of the image while adjusting shapes, brushstrokes, lines, and colors to achieve a convincing cartoon effect;

- (2) Across complex and diverse scenes, our method maintains the integrity of the semantic content;

- (3) When generating stylized images, our method avoids obvious artifacts such as halo effects, preserving the clarity and visual quality of the image.

3.3. Empirical Evaluation Protocol

- (1) For task 1 ("The stylized scene presents a distinct cartoon style?"), the average score is 4.606, and the pairwise comparisons between scales show no significant difference (P ≥ 0.401), which indicates that the stylized scene presents the cartoon style effectively at every scale;

- (2) For task 2 ("The stylized scene fully preserves the content of the original scene?"), the average score is 4.394, and the pairwise comparisons between scales show no significant difference (P ≥ 0.302), which indicates that the stylized scene preserves the original content effectively;

- (3) For task 3 ("Compared to the original scene, is the level of information loss in the stylized scene acceptable to me?"), the average score is 4.424, with no significant difference across scales (P = 0.368), which indicates that users generally find the level of information loss in the stylized scene acceptable;

- (4) For task 4 ("I like this stylized scene?"), the average score is 4.475, with no significant difference across scales (P = 0.558), which suggests that users generally favor the stylized scene and that their preference is not influenced by scale;

- (5) We extracted keywords from all the impressions left by users. Most participants gave positive feedback after completing the questionnaire, such as “interesting”, “realistic”, “with a cartoon style”, “attractive”, and “beautiful and vivid”.
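
The comparison across scales can be illustrated with a one-way ANOVA F statistic, sketched below in plain Python. This assumes a between-groups one-way design, which the text does not state explicitly, and the three rating lists are hypothetical placeholder values, not the study's questionnaire data.

```python
def one_way_anova_f(groups):
    """Return the one-way ANOVA F statistic for a list of rating groups."""
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of individual ratings around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


# Hypothetical 5-point Likert ratings for one task at three scales.
large = [4, 5, 4, 5]
medium = [5, 5, 4, 5]
small = [5, 4, 5, 4]

f_value = one_way_anova_f([large, medium, small])
```

A small F value means the spread between the scale means is small relative to the spread of ratings within each scale, which is the pattern behind a "no significant difference across scales" conclusion. Converting F to a P value additionally requires the F distribution with the two degrees of freedom computed above.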

3.4. Quantitative Evaluation

4. Discussion

- (1)

- The generation process requires a certain amount of time. The experiments in this paper were conducted on an NVIDIA RTX 4080 GPU, and for a single 1024 × 1024 image, the average sampling time is about 41 s, which limits the possibility of real-time generation. In terms of efficiency, it is necessary to start from aspects such as model structure optimization, knowledge distillation, and hardware acceleration to compress the generation time from the minute level to the second or even millisecond level, which is a prerequisite for achieving interactive applications and large-scale deployment.

- (2)

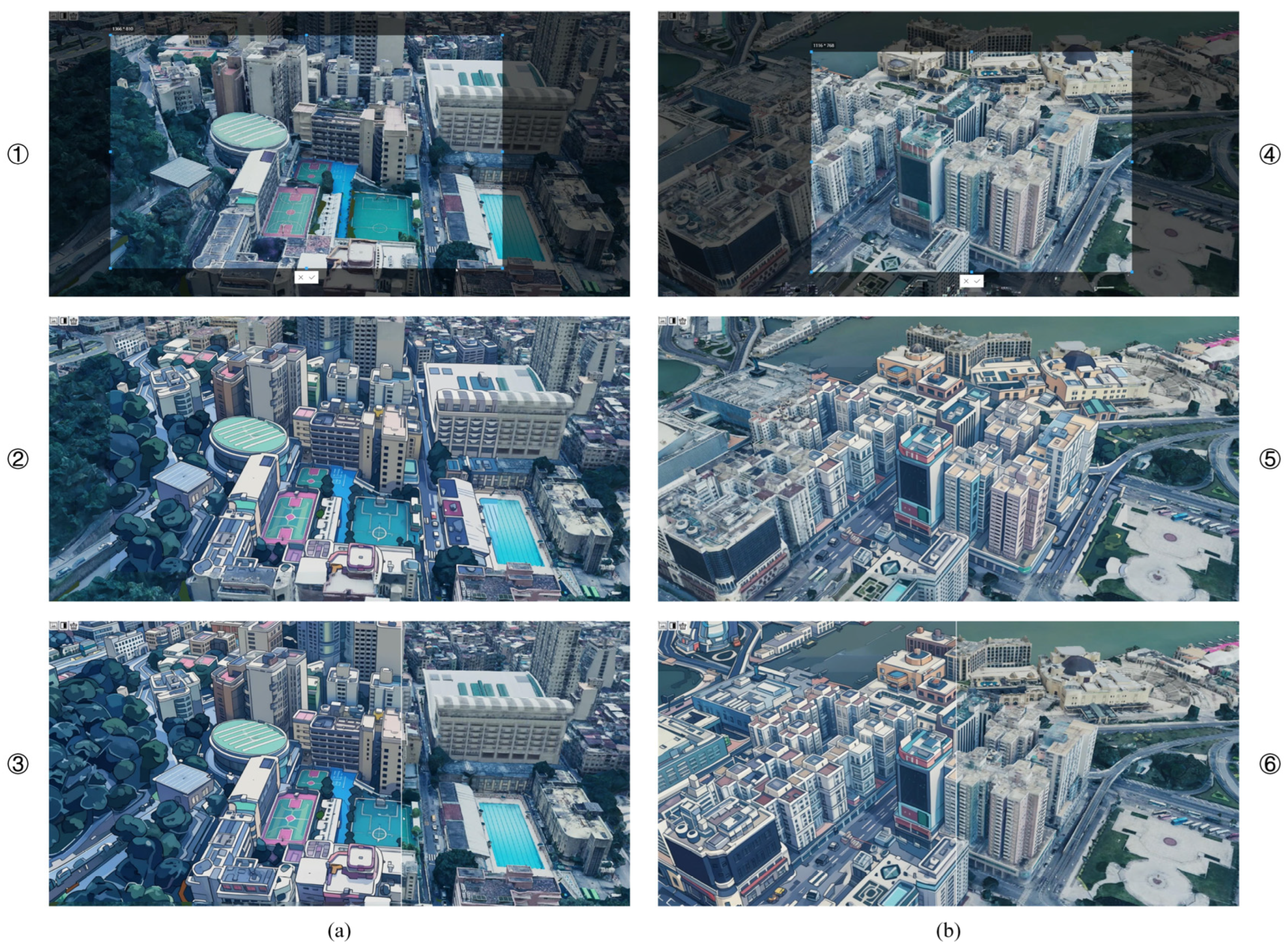

- Although this method performs excellently when processing high-resolution remote-sensing imagery from Google Earth and preprocessed 1024 × 1024 oblique photogrammetry tiles, its application on standard maps is not ideal. As shown in Figure 9, the core advantage of our framework lies in the cartoon-style simplification of texture-rich real-world scenes. When the input is standard map data, which are already highly abstract and symbolic, the model has difficulty performing effective secondary simplification. The generated results tend to remain at a superficial level of color enhancement and edge tracing, rather than producing creative style transfer. This indicates that future work requires the model to be able to intelligently identify different data types and adopt differentiated stylization strategies.
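
To make the cost of the reported sampling time concrete, the sketch below estimates how long a batch of tiles would take. It uses only the 41 s per 1024 × 1024 image figure reported above; the tile counts are hypothetical, and the estimate assumes strictly sequential processing on a single GPU with no loading or stitching overhead.

```python
SECONDS_PER_TILE = 41.0  # reported average for one 1024 x 1024 image


def estimated_minutes(num_tiles, seconds_per_tile=SECONDS_PER_TILE):
    """Estimate total sampling time in minutes for sequential tiles."""
    if num_tiles < 0:
        raise ValueError("num_tiles must be non-negative")
    return num_tiles * seconds_per_tile / 60.0


# Hypothetical scene sizes, expressed as numbers of 1024 x 1024 tiles.
for tiles in (1, 100, 1000):
    print(f"{tiles:>5} tiles: about {estimated_minutes(tiles):.1f} min")
```

Under these assumptions, a scene of 100 tiles already takes more than an hour, which illustrates why the per-image time must drop substantially before interactive use is realistic.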

5. Conclusions

- (1) We propose an automated framework based on diffusion models (DM) that requires no prompt guidance and can stably transfer complex cartoon styles to photorealistic 3D scenes. We have demonstrated that high-quality, highly consistent artistic visualization is achievable without sacrificing the fidelity of geographic information, providing technical support for the evolution of cartography from a traditional functional expression to a narrative medium that integrates science and art;

- (2) We conducted quantitative, qualitative, and user evaluations of this method. The results show that the method achieves high quality in both content preservation and style transfer.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Methodology | Artistic Effect | Content Preservation | Stability | Example Image of 3D Scene |
|---|---|---|---|---|
| Neural style transfer (NST) | High (Excellent at transferring textures, brushstrokes, and colors; strong artistic feel) | Low (Severely damages geometric structures; roads, text, etc., are difficult to recognize) | High (For a given input, the output is deterministic and consistent) |  |
| Paired GAN (pix2pix) | Medium (More focused on mapping between two style systems rather than free artistic creation) | High (Strong supervisory signals ensure high consistency of content structure) | High (Stable training and inference processes; predictable results) |  |
| Unpaired GAN (CycleGAN) | High (Enables transfer across different domains, e.g., maps and paintings; large creative space) | Medium (Cycle-consistency helps, but can still distort content and introduce artifacts) | Low (Less stable to train than pix2pix; can suffer from mode collapse; results have some randomness) |  |
| Diffusion models (DM) | High (Controllable and high-quality injection of cartoon style; consistent style) | High (Strategies like ControlNet ensure the preservation of 3D geographic structure and semantic information) | Very High (Stable generation process, avoiding issues like mode collapse found in GAN) |  |

| Scale | Group | Original Scenes | Stylized Scenes |
|---|---|---|---|
| Large | Group One |  |  |
| Large | Group Two |  |  |
| Large | Group Three |  |  |
| Medium | Group One |  |  |
| Medium | Group Two |  |  |
| Medium | Group Three |  |  |
| Small | Group One |  |  |
| Small | Group Two |  |  |
| Small | Group Three |  |  |

Tasks:

- T1: The stylized scene presents a distinct cartoon style?

- T2: The stylized scene fully preserves the content of the original scene?

- T3: Compared to the original scene, is the level of information loss in the stylized scene acceptable to me?

- T4: I like this stylized scene?

- T5: How do the above stylized scenes affect your perception?

| Task | Scale | Average Score | Overall Average | F | P |
|---|---|---|---|---|---|
| Task 1 | Large | 4.455 | 4.606 | 0.188 | 0.020 |
| Task 1 | Medium | 4.697 |  |  |  |
| Task 1 | Small | 4.667 |  |  |  |
| Task 2 | Large | 4.515 | 4.394 | 0.163 | 0.018 |
| Task 2 | Medium | 4.273 |  |  |  |
| Task 2 | Small | 4.394 |  |  |  |
| Task 3 | Large | 4.394 | 4.424 | 0.073 | 0.368 |
| Task 3 | Medium | 4.485 |  |  |  |
| Task 3 | Small | 4.394 |  |  |  |
| Task 4 | Large | 4.424 | 4.475 | 0.056 | 0.558 |
| Task 4 | Medium | 4.515 |  |  |  |
| Task 4 | Small | 4.485 |  |  |  |

| Task | Scale Level I | Scale Level J | Mean Difference (I–J) | P |
|---|---|---|---|---|
| Task 1 | Large | Medium | −0.242 | 0.401 |
| Task 1 | Large | Small | −0.212 | 0.510 |
| Task 1 | Medium | Small | 0.030 | 0.900 |
| Task 2 | Large | Medium | 0.242 | 0.302 |
| Task 2 | Large | Small | 0.121 | 0.721 |
| Task 2 | Medium | Small | −0.121 | 0.721 |
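
The reported per-scale means can be cross-checked against the overall averages and the pairwise mean differences with a few lines of arithmetic. The sketch below uses only the values reported in the tables above; it assumes each scale received the same number of ratings, so that the overall average equals the mean of the three scale means. The function names are illustrative.

```python
from itertools import combinations

# Per-scale average scores as reported for each task.
scale_means = {
    "Task 1": {"Large": 4.455, "Medium": 4.697, "Small": 4.667},
    "Task 2": {"Large": 4.515, "Medium": 4.273, "Small": 4.394},
    "Task 3": {"Large": 4.394, "Medium": 4.485, "Small": 4.394},
    "Task 4": {"Large": 4.424, "Medium": 4.515, "Small": 4.485},
}


def overall_average(means):
    """Mean of the scale means, rounded to three decimals."""
    return round(sum(means.values()) / len(means), 3)


def pairwise_differences(means):
    """Mean difference (I - J) for every pair of scales, in table order."""
    return {
        (i, j): round(means[i] - means[j], 3)
        for i, j in combinations(means, 2)
    }


for task, means in scale_means.items():
    print(task, overall_average(means), pairwise_differences(means))
```

For Task 1 and Task 2, the three computed differences (Large minus Medium, Large minus Small, Medium minus Small) reproduce the mean differences listed in the pairwise comparison table.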

| Metric | Our Method | NST | pix2pix | CycleGAN | ControlNet |
|---|---|---|---|---|---|
| LPIPS ↓ | 0.138 | 0.484 | 0.605 | 0.617 | 0.516 |
| SSIM ↑ | 0.865 | 0.499 | 0.047 | 0.409 | 0.171 |
| FID Score ↓ | 177.08 | 502.82 | 541.137 | 401.513 | 247.0703 |
| CLIP Similarity ↑ | 0.911 | 0.689 | 0.561 | 0.6153 | 0.705 |
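
To show what the SSIM row measures, the sketch below computes a simplified, single-window (global) SSIM between two grayscale images given as flat lists of pixel values. This is an assumption-laden illustration rather than the evaluation code behind the table: standard SSIM averages the same expression over local sliding windows, and the function name `global_ssim` and the sample pixel values are hypothetical.

```python
def global_ssim(x, y, data_range=255.0):
    """Simplified SSIM computed once over whole images (no sliding window)."""
    if len(x) != len(y) or not x:
        raise ValueError("inputs must be non-empty and of equal length")

    n = len(x)
    mu_x = sum(x) / n
    mu_y = sum(y) / n
    var_x = sum((a - mu_x) ** 2 for a in x) / n
    var_y = sum((b - mu_y) ** 2 for b in y) / n
    cov_xy = sum((a - mu_x) * (b - mu_y) for a, b in zip(x, y)) / n

    # Stabilizing constants with the commonly used K1 = 0.01 and K2 = 0.03.
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2

    numerator = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator


# Hypothetical 2 x 2 grayscale patches, flattened row by row.
original = [0.0, 64.0, 128.0, 255.0]
inverted = [255.0, 128.0, 64.0, 0.0]

same_score = global_ssim(original, original)
diff_score = global_ssim(original, inverted)
```

An image compared with itself scores 1, and structurally dissimilar images score lower, which is why a higher SSIM in the table indicates better preservation of the original content.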

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, Y.; Zhou, H.; Chen, J.; Yang, N.; Zhao, J.; Chao, Y. Diffusion Model-Based Cartoon Style Transfer for Real-World 3D Scenes. ISPRS Int. J. Geo-Inf. 2025, 14, 303. https://doi.org/10.3390/ijgi14080303
