Abstract

Accurate clustering of buildings is a prerequisite for map generalization in densely populated urban data. Buildings at the edges of building groups, which the human eye readily identifies, may serve as boundary constraints for clustering. This paper proposes the use of seven Gestalt factors to distinguish these edge buildings from other buildings. We employ the DGI model to produce high-quality node embeddings by maximizing the mutual information between the local node representations and the global summary vector. We then train the model to identify edge buildings in the two test datasets using eight feature combinations. This research also introduces a modified distance metric, the ‘m_dis’ feature, which describes the closeness between two adjacent buildings. Finally, the clusters of edge and inner buildings are determined through a constrained graph traversal based on the ‘m_dis’ feature. Experimental results demonstrate that this method effectively identifies and distinguishes densely distributed building groups in Chengdu City, China. It offers novel concepts for edge building recognition in dense urban areas, confirms the significance of the LOF factor and the ‘m_dis’ feature, and achieves superior clustering results in comparison to other methods. Additionally, this semi-supervised clustering method (DGI-EIC) has the potential to achieve an ARI index of approximately 0.5.

1. Introduction

Cartography is an academic field focused on the processes involved in scale reduction for maps. Its essence lies in the efficient conveyance of information within limited map space via the abstraction of geometric information [1]. In urban map generalization, the boundary structure and shape of buildings are both significant and intricate. Owing to their dense arrangement, buildings must first be grouped [2,3] and then processed by map generalization operators such as amalgamation and displacement [3,4,5,6].

Buildings, like complex planar polygons, possess attributes including size, orientation, shape, and distribution density. In the context of building clustering, algorithms must thoroughly ascertain the geometric attributes of the building, along with its distribution density and orientation, and establish constraints that adhere to Gestalt principles (proximity, similarity, closure, continuity) to optimize the clustering result [7,8,9].

At present, most building clustering algorithms are based on proximity and similarity in the Gestalt concept and pay less attention to the closure principle [10,11,12,13]. When the scale of a city map is reduced, the gap space between buildings reduces proportionately. After the QGIS 3.40.4 software executes the automated dissolve process, a high number of building combinations with concave features are commonly generated in the densely built-up areas [14]. Through the study of the maps of these dense areas with the human eye, a significant number of building groupings showing closed patterns can be found. The buildings positioned on the edges of these closed building groups often become the contour information captured by the human eye first [15]. This paper refers to such buildings as edge buildings. Therefore, the research of this paper focuses on the clustering problem of concave buildings, seeking to make up for the limitation of the existing approaches that do not fully incorporate the principle of closure. Specifically, this article will focus on the following two issues:

- Which building characteristics determine whether it can be designated as an edge building?

- Do alternative ways of identifying edge buildings affect the clustering results?

This article organizes its sections as follows: Section 2 analyzes the characteristics of several existing building clustering algorithms. Section 3 looks at the edge features of the building, explains the DGI model, and describes how to navigate the graph using the m_dis constraints. Section 4 presents three experiments. The first experiment involves the labeling of the edge buildings of the training dataset using automated rules and manual modifications. The second experiment is designed to demonstrate the binary classification results of the DGI model under eight training conditions based on feature combinations. The third experiment looks at how the clustering results from the DGI-EIC method and RF-EIC method in this paper stack up against the results from the CDC [16] and Multi-Graph-Partition methods (shortened to MGP) [11]. Section 5 is a discussion.

2. Related Work

Some clustering methodologies regard buildings as nodes, with the edge between two adjacent buildings representing the graph edge, thereby converting the clustering issue of buildings into node clustering problems within graphs [10,11,17,18,19,20]. Examples include clustering based on multi-layer graphs [19] and local or global edge cut-off based on the density of local Gestalt features and variance values [10,17,18,20].

Building groups frequently exhibit regular geometric, semantic, and structural features [21,22,23,24]. Research indicates that vertical and linear patterns significantly improve the aggregation effect of buildings [25,26]. We deduce that the visual characteristics of buildings, including elongated forms, uneven surrounding density distribution, and C-shaped, L-shaped, or U-shaped buildings [14] can be classified as boundary structures of clusters [27].

The distribution of buildings is complex, and the reasonable expression of many Gestalt rules [7], as well as the automatic identification of edge buildings, presents several challenges. Certain density-based clustering techniques examine the distribution density of buildings and the delineation of grouping boundaries [28,29,30,31,32,33,34,35].

Neural networks are a technique for information transformation that effectively represents the multidimensional attributes of buildings. Self-Organizing Map (SOM) is employed to identify the contours and boundaries of building clusters [36]. The graph convolutional network (GCN) enhances the contour feature analysis of building clusters by categorizing buildings into three kinds: edge, inner, and free [15]. By integrating the Fourier transform with GCN technology, building features are converted into the embedding space and subsequently grouped utilizing the k-means method [37]. These investigations demonstrate that neural networks serve as a potent deformation mechanism capable of nonlinearly transforming Gestalt factors of intricate building structures, thus producing readily identifiable building structures in embedded space.

Graph convolutional networks (GCNs) are a robust semi-supervised classification method for graph-structured data [38]. Through local graph convolution operations, GCNs can encapsulate the characteristics of buildings and their edges (including distances and comparisons of neighboring structures) to derive intricate Gestalt features within groups of buildings [39].

At present, research on the characteristics of edge buildings is scarce, and the available work primarily focuses on three indicators. The first is the local statistic (CV value) [34], which is the ratio of the standard deviation to the mean of a Gestalt feature over the local arrangement. The second is the local coordinate deviation, which is calculated from the center coordinates of the smallest bounding rectangles (SBRs) of the central building node and its neighbors [15]. The third is the local centrality used by the CDC method, which measures the directional uniformity of KNNs [40] to distinguish internal and boundary points [16].

3. Materials and Methods

In this study, we employ the Deep Graph Infomax (DGI) model [41], an unsupervised framework for graph representation learning, to tackle the challenge of delineating building clusters in densely populated urban environments. Based on DGI, we introduce an Edge and Inner Clustering method (DGI-EIC) that classifies each building as either an edge or inner node. This classification is achieved by maximizing the mutual information between local node embeddings and a global summary vector, allowing for precise differentiation between edge and inner buildings within complex building clusters.

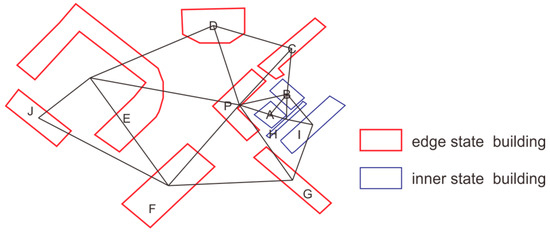

We maintain that buildings can be classified into two categories: edge buildings and inner buildings. Edge buildings are buildings situated on the outer perimeter of the building group. The P-building in Figure 1 is an example of an edge building that is typically conspicuous due to the stark contrast in visual features between the buildings on either side. The slender, concave shapes of these structures (C-typed, U-typed, L-typed) and the uneven density distribution surrounding them are frequently used to identify them. The appearance of the building group is typically defined by the presence of edge buildings surrounding inner buildings in clustering results. The distribution density, distance, and shape of the inner buildings are in high balance in relation to the surrounding buildings, as they are situated in the interior area of the cluster. The visual characteristics of the inner buildings are more consistent than those of the edge buildings.

The identification of edge buildings is the primary focus of this study, since it is the basis for effective building clustering. The following features are used to identify edge buildings: directionality, distribution density, and geometric shape (such as C-shaped, L-shaped, or U-shaped). These characteristics help determine whether a building constitutes a visual boundary. The inner buildings are subsequently assigned to the corresponding cluster by examining the spatial relationship between the inner buildings and the adjacent edge buildings. This approach enforces the constraints of edge buildings while optimizing the cluster attribution of inner buildings, which completes the overall clustering assignment. The context of edge and inner buildings is illustrated in Figure 1:

Figure 1.

Edge buildings and inner buildings.

As illustrated in Figure 1, the buildings are identified by letters, and building P is designated as an edge building. The inner buildings A, B, H, and I form a building group; they are surrounded by the edge buildings C, G, and P, which form a very obvious L-shaped boundary constraint. Buildings D and F are evidently not included in any sub-cluster, while buildings E and J constitute a local sub-cluster.

It is important to note that the classification method employed in this study only categorizes buildings into two types: edge buildings and inner buildings. It does not include the “free state buildings” that have been discussed in previous studies [15]. The following section will provide a comprehensive description of the identification characteristics of edge buildings.

3.1. Descriptive Methods for Edge-Building Features

The buildings and their association relationships can be represented as graph-structured data in the study of building clustering. Nodes represent buildings, and edges represent spatial associations between buildings. Suppose we have a graph G = (V, E), where V and E are the sets of nodes and edges, respectively, and the number of nodes is N. The feature of each node i ∈ V is represented as $x_i \in \mathbb{R}^{F}$, and these vectors form the node feature matrix $X \in \mathbb{R}^{N \times F}$, where F is the number of features and N is the number of nodes. The adjacency matrix $A \in \mathbb{R}^{N \times N}$ represents the edge structure information of each building node in the graph. If $A_{ij} = 1$, there is an edge between building node i and building node j; otherwise, $A_{ij} = 0$. The Voronoi diagram cells constructed at the densified boundary points of the buildings are merged to create the polygonal Voronoi diagram division of buildings [42], which can be observed in Figure 4a. The graph structure is formed by establishing an incident edge between two adjacent buildings based on the proximity relationship of their Voronoi diagram polygons.

3.1.1. Expand the K-NN Neighborhoods in Graph

The conventional K-NN procedure determines the Euclidean distance between each building and its k nearest neighbor elements. This neighborhood definition readily yields spherical neighborhoods. The natural adjacency relationship between the boundaries of buildings, however, also facilitates the construction of neighborhoods. This paper therefore uses the graph structure and the shortest path algorithm to define K-NN neighborhoods in the graph structure space, obtaining K-NN expressions that are appropriate for describing distribution characteristics. The length of the associated edges is used to quantify the distance between buildings. The corrected distance between buildings v and u, which takes their volume and size into account, is defined as follows:

In Formula (1), the path distance between node v and node u is denoted by d(v,u) and is calculated using the classic Dijkstra algorithm [43]. The mean radii of the buildings represented by node v and node u are r(v) and r(u), respectively [44]. The larger the radius of a building, the greater its spatial volume, which the distance calculation treats as a closer relationship. The constant “0” in the formula prevents negative values. This corrected distance effectively accounts for the volume characteristics of the buildings: at the same path distance, larger buildings are more likely to be considered in close proximity.
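The corrected distance can be illustrated with a small sketch. Formula (1) itself is not reproduced in this text, so the form used here, `max(0, d(v,u) - r(v) - r(u))`, is only an assumption that is consistent with the description (path distance, two mean radii, a constant 0 that prevents negative values). The function names and the toy graph are hypothetical.

```python
import heapq

def dijkstra(adj, source):
    """Shortest path lengths from source; adj maps node -> {neighbor: edge_length}."""
    dist = {source: 0.0}
    heap = [(0.0, source)]
    while heap:
        d, v = heapq.heappop(heap)
        if d > dist.get(v, float("inf")):
            continue  # stale queue entry
        for u, w in adj[v].items():
            nd = d + w
            if nd < dist.get(u, float("inf")):
                dist[u] = nd
                heapq.heappush(heap, (nd, u))
    return dist

def corrected_distances(adj, mean_radius, source):
    """ASSUMED form of Formula (1): max(0, d(v,u) - r(v) - r(u))."""
    path = dijkstra(adj, source)
    return {u: max(0.0, d - mean_radius[source] - mean_radius[u])
            for u, d in path.items() if u != source}

# Toy building graph: edge weights are the lengths of the associated edges.
adj = {"P": {"A": 10.0, "B": 4.0},
       "A": {"P": 10.0, "B": 5.0},
       "B": {"P": 4.0, "A": 5.0}}
radius = {"P": 1.0, "A": 2.0, "B": 5.0}
result = corrected_distances(adj, radius, "P")
print(result)  # {'A': 6.0, 'B': 0.0}
```

In this toy case the large building B ends up at corrected distance 0 from P, which matches the stated intent that larger buildings count as closer at the same path distance.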

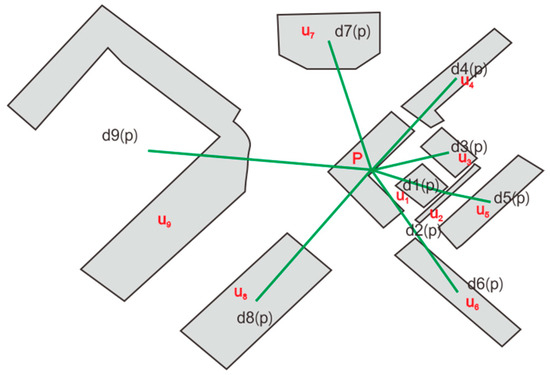

This paper further defines the neighborhood relationship of a building, selects a building node p as the starting point, calculates its shortest path distance from other nodes based on the graph structure, and arranges the path distances of all other nodes. The k-neighborhood collection of node p is composed of the first node to the k-th node of the sorting result of path distance, arranged in order of distance from small to large. The path distance between node u1 and node p is the minimum, and it is denoted as d1(p), as illustrated in Figure 2.

Figure 2.

P building and its K-NN neighborhoods.

The distances of the nine points to point P in Figure 2 satisfy $d_1(P) \le d_2(P) \le \dots \le d_9(P)$. With Figure 2 in mind, the following two definitions are straightforward to comprehend.

Definition 1.

K-distance from building P

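The k-distance is the distance between point P and its k-th nearest neighbor. For node P, the k-distance is defined as Formula (2):

$$k\text{-}\mathrm{dist}(P) = \begin{cases} d_k(P), & n \ge k \\ \max\limits_{1 \le i \le n} d_i(P), & n < k \end{cases} \quad (2)$$

Here, $d_i(P)$ is the distance from point P to its i-th neighbor $u_i$, with the neighbors sorted from small to large by distance, and n is the number of neighbors of P. If the number of neighbors is less than k, the k-distance is the farthest distance among all neighbors of point P.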

Definition 2.

K-NN neighborhood of building P

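The K-NN neighborhood of node P is the set of neighbors whose distance to P does not exceed the k-distance of P:

$$kn(P) = \{\, u_i \mid d_i(P) \le k\text{-}\mathrm{dist}(P) \,\} \quad (3)$$

where $u_i$ is the i-th neighbor of P sorted by distance and $d_i(P)$ is its distance to P.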
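Definitions 1 and 2 can be sketched in a few lines. This is a minimal illustration, assuming the distances from P to the other nodes have already been computed; the function names and the sample distances are hypothetical.

```python
def k_distance(distances, k):
    """Definition 1: distance to the k-th nearest neighbor of P.

    distances maps neighbor -> distance to P. When P has fewer than k
    neighbors, the farthest neighbor distance is returned instead.
    """
    ordered = sorted(distances.values())
    if not ordered:
        raise ValueError("P has no neighbors")
    return ordered[k - 1] if len(ordered) >= k else ordered[-1]

def knn_neighborhood(distances, k):
    """Definition 2: all neighbors no farther from P than the k-distance."""
    limit = k_distance(distances, k)
    return {u for u, d in distances.items() if d <= limit}

# Hypothetical distances from P; u2 and u3 are tied.
d_to_p = {"u1": 1.0, "u2": 2.0, "u3": 2.0, "u4": 5.0}
print(k_distance(d_to_p, 2))                # 2.0
print(sorted(knn_neighborhood(d_to_p, 2)))  # ['u1', 'u2', 'u3']
```

Because the neighborhood is defined by a distance bound rather than a count, ties at the k-distance can make it larger than k elements, as in the example.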

3.1.2. The LOF Characteristic of the Building [29]

Formula (4) defines the local reachable density of building P, which is the inverse of the average reachability distance over the K-NN neighborhood of P:

$$lrd(P) = \left( \frac{\sum_{o \in kn(P)} d(P, o)}{|kn(P)|} \right)^{-1} \quad (4)$$

In Formula (4), |kn(P)| is the number of elements in the K-NN neighborhood of building P, and d(P, o) is the distance between point P and a point o in the K-NN neighborhood of P. The LOF feature of point P is the average ratio of the local reachable density of each point in P's K-NN neighborhood to the local reachable density of P. This feature represents the density of the local distribution of the data and is denoted LOF(P). The formula is as follows:

$$LOF(P) = \frac{1}{|kn(P)|} \sum_{o \in kn(P)} \frac{lrd(o)}{lrd(P)} \quad (5)$$

In Formula (5), |kn(P)| is the number of elements in the K-NN neighborhood of point P, and lrd(o) is the local reachable density of a point o in the K-NN neighborhood of P.
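The two quantities described for Formulas (4) and (5) can be sketched as follows. This is a simplified illustration that follows the verbal description (density as the inverse of the mean neighbor distance, LOF as the mean density ratio); the function names, the one-dimensional toy positions, and the precomputed neighborhoods are assumptions, and all distances are assumed to be strictly positive.

```python
def local_reach_density(p, neighborhoods, dist):
    """Inverse of the mean distance from p to the points of its K-NN neighborhood."""
    nbrs = neighborhoods[p]
    mean_d = sum(dist[p][o] for o in nbrs) / len(nbrs)
    return 1.0 / mean_d  # assumes mean_d > 0

def lof(p, neighborhoods, dist):
    """Mean ratio of each neighbor's density to the density of p."""
    lrd_p = local_reach_density(p, neighborhoods, dist)
    ratios = [local_reach_density(o, neighborhoods, dist) / lrd_p
              for o in neighborhoods[p]]
    return sum(ratios) / len(ratios)

# Toy data: four points on a line, with D far away from the others.
positions = {"A": 0.0, "B": 1.0, "C": 2.0, "D": 10.0}
dist = {p: {q: abs(positions[p] - positions[q]) for q in positions if q != p}
        for p in positions}
# K-NN neighborhoods for k = 2, written out by hand for this toy case.
neighborhoods = {"A": ["B", "C"], "B": ["A", "C"], "C": ["A", "B"], "D": ["B", "C"]}

lof_d = lof("D", neighborhoods, dist)
lof_b = lof("B", neighborhoods, dist)
print(round(lof_d, 3), round(lof_b, 3))  # 7.083 0.667
```

The isolated point D receives a value well above 1, while the central point B stays below 1, which is the behavior the LOF feature relies on to flag peripheral buildings.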

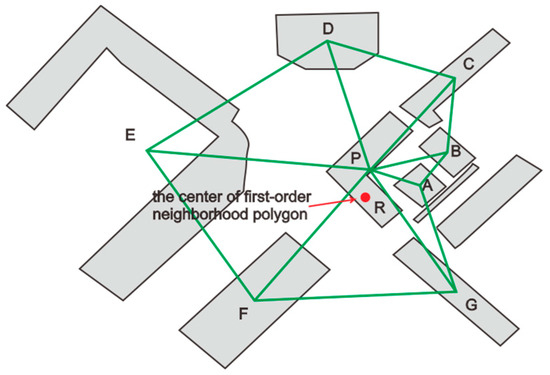

3.1.3. The Center_devia and First_neighbor_avg_r Features of Buildings

Figure 3 illustrates the points that constitute the first-order neighborhood of point P, namely points A, B, C, D, E, F, and G. Connecting them in sequence forms the first-order neighborhood polygon of point P. Point R is the geometric centroid of this polygon. The Center_devia feature of point P is the length of the vector $\overrightarrow{PR}$. The First_neighbor_avg_r feature is the average distance between the central point P and each vertex of the polygon. As illustrated in Figure 3, the First_neighbor_avg_r is the average of the lengths of PA, PB, PC, PD, PE, PF, and PG.

Figure 3.

The center_devia and first_neighbor_avg_r features of building P.
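Both features can be computed from the coordinates of P and its ordered first-order neighbors. The sketch below assumes that the geometric centroid R is the area-weighted centroid of a simple polygon and that the neighbor points are already ordered around P; the function names and coordinates are hypothetical.

```python
import math

def polygon_centroid(pts):
    """Area-weighted centroid of a simple polygon given as ordered (x, y) vertices."""
    a2 = cx = cy = 0.0  # a2 accumulates twice the signed area
    for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1]):
        cross = x0 * y1 - x1 * y0
        a2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if a2 == 0:
        raise ValueError("degenerate polygon")
    return cx / (3 * a2), cy / (3 * a2)

def center_devia(p, neighbors):
    """Length of the vector from P to the centroid R of the neighborhood polygon."""
    rx, ry = polygon_centroid(neighbors)
    return math.hypot(rx - p[0], ry - p[1])

def first_neighbor_avg_r(p, neighbors):
    """Average of the lengths PA, PB, ... from P to each polygon vertex."""
    return sum(math.hypot(x - p[0], y - p[1]) for x, y in neighbors) / len(neighbors)

# P sits off-center inside a square of four first-order neighbors.
p = (1.0, 2.0)
ring = [(0.0, 0.0), (4.0, 0.0), (4.0, 4.0), (0.0, 4.0)]
print(center_devia(p, ring))          # 1.0
print(first_neighbor_avg_r(p, ring))
```

A building whose neighbors all lie on one side would show a large center_devia relative to first_neighbor_avg_r, which is the asymmetry expected at the edge of a group.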

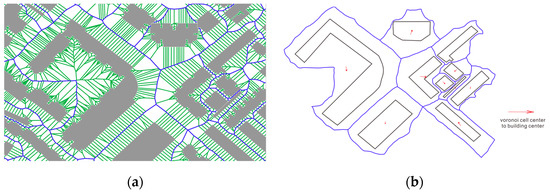

3.1.4. The Density and Vo_to_b_length Features of the Building

To produce Voronoi diagrams for buildings, this paper implements Wu’s methodology [42]. First, the boundary contours of all buildings are interpolated, and each interpolation point is assigned the ID of its building. Next, Voronoi diagrams are generated using these interpolation points as the generating sites, so that each Voronoi cell carries the ID of its point. Finally, Voronoi cells with the same ID are merged to obtain the Voronoi diagram unit of each building. Figure 4 illustrates the buildings and their corresponding Voronoi diagram units.

Figure 4.

(a) The densified points and their Voronoi diagrams; (b) the buildings and Voronoi diagrams.

In Figure 4b, the blue stroke area represents the Voronoi diagram unit where the building is located. The starting point of the red arrow line is the center of the Voronoi diagram unit, and the endpoint is the center of the building. This red arrow indicates the position where the building deviates from the center of the Voronoi graph unit, which can express the distribution of the building. The area of a building divided by the area of the Voronoi diagram unit is the density feature [44].
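Given a building polygon and its merged Voronoi diagram unit, the two features of this subsection reduce to an area ratio and a centroid offset. The sketch below assumes that "center" means the area-weighted centroid and that both polygons are simple; the function names and coordinates are hypothetical, and the construction of the Voronoi unit itself is not shown.

```python
import math

def area_and_centroid(pts):
    """Area and area-weighted centroid of a simple polygon (ordered vertices)."""
    a2 = cx = cy = 0.0  # a2 accumulates twice the signed area
    for (x0, y0), (x1, y1) in zip(pts, pts[1:] + pts[:1]):
        cross = x0 * y1 - x1 * y0
        a2 += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if a2 == 0:
        raise ValueError("degenerate polygon")
    return abs(a2) / 2.0, (cx / (3 * a2), cy / (3 * a2))

def density(building, cell):
    """Building area divided by the area of its Voronoi diagram unit."""
    return area_and_centroid(building)[0] / area_and_centroid(cell)[0]

def vo_to_b_length(building, cell):
    """Length of the vector from the Voronoi unit center to the building center."""
    (bx, by) = area_and_centroid(building)[1]
    (vx, vy) = area_and_centroid(cell)[1]
    return math.hypot(bx - vx, by - vy)

# A 4 x 4 building pushed toward the right side of a 10 x 10 Voronoi unit.
cell = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
building = [(6.0, 4.0), (10.0, 4.0), (10.0, 8.0), (6.0, 8.0)]
print(density(building, cell))         # 0.16
print(vo_to_b_length(building, cell))
```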

3.1.5. The m_dis of Two Adjacent Buildings and the m_dis_cv Feature of a Building

We propose a modified distance (abbreviated as m_dis) between two adjacent buildings and generate the coefficient of variation for modified distances (abbreviated as m_dis_cv) of a building. There are detailed descriptions of the modified distance in Figure 5.

Figure 5.

Building A and its surrounding four buildings.

In Figure 5, A is a polygon, and B, C, D, and E are four polygons surrounding A. The shortest distances between A and B, C, D, and E are denoted as dab, dac, dad, and dae, and the inner widths of A, B, C, D, and E are ha, hb, hc, hd, and he. Among them, the internal widths of B, C, and E are equal, while the internal width of D is larger.

Figure 5 shows that, among polygons with the same inner width (such as polygons B and E), the one at the shorter distance is more strongly linked to A. Since B is closer to A, B and A are more likely to be in the same cluster. A similar reasoning applies to polygons with larger inner widths: because D has a larger inner width, D is more strongly associated with A than C is, even though C lies at the same distance from A. This relationship defines the modified distance between every two adjacent buildings, and the computation formula is as follows:

In the formula, A and i represent the buildings at the two ends of an incident edge, d(A,i) is the closest distance between buildings A and i, and $h_A$ and $h_i$ are the inner widths of buildings A and i. For complex polygons, this inner width is difficult to calculate. In this study, the mean radius is used to represent the inner width. The mean radius is the average distance from each vertex of the building to its centroid [44].

For a building, the m_dis values of all its incident edges constitute a numerical set characterized by a mean and a standard deviation. The coefficient of variation of these m_dis values is given by Formula (7):

$$m\_dis\_cv(A) = \frac{\mathrm{std}(m\_dis_1, \dots, m\_dis_n)}{\mathrm{mean}(m\_dis_1, \dots, m\_dis_n)} \quad (7)$$

In Formula (7), $m\_dis_i$ is the m_dis of the edge between building A and its i-th adjacent building, n is the number of buildings adjacent to A, std is the standard deviation, and mean is the average. The larger this value, the more dispersed the distribution of m_dis values, and the more likely building A is an edge building.
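The coefficient of variation in Formula (7) can be sketched as follows. The text does not say whether the population or the sample standard deviation is meant; the population form is assumed here, and the function name and sample values are hypothetical. The m_dis values themselves are taken as given inputs.

```python
import statistics

def m_dis_cv(m_dis_values):
    """Coefficient of variation (std / mean) of the m_dis values of one building.

    Assumption: population standard deviation; returns 0.0 when the mean is 0.
    """
    mean = statistics.fmean(m_dis_values)
    if mean == 0:
        return 0.0
    return statistics.pstdev(m_dis_values) / mean

# A building with evenly spaced neighbors versus one with a single distant neighbor.
print(m_dis_cv([2.0, 2.0, 2.0, 2.0]))            # 0.0
print(round(m_dis_cv([1.0, 1.0, 1.0, 9.0]), 4))  # 1.1547
```

The second building, with one incident edge much longer than the others, has the larger value and is therefore the more likely edge building under this feature.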

In conclusion, Table 1 illustrates the seven characteristics that determine whether the building is an edge building. If it is an edge building, we assign it an attribute termed whether_edge = 1; else the whether_edge attribute is 0.

Table 1.

Characteristics to determine edge building.

3.2. The Node Representation Learning Based on the DGI Model

The DGI model offers a robust node-embedded representation learning technique for graph-structured data by maximizing local-global mutual information. It excels in numerous tasks, particularly in inductive learning that surpasses conventional unsupervised methods [41]. This paper employs the DGI model to determine edge buildings and conducts a binary classification (edge or inner) of buildings graph from the training set to the test set.

To extract the high-level features of the buildings, we employ a graph convolutional neural network as an encoder to map building node features and graph structure into the node embedding space, thereby obtaining the higher-level representation of the nodes $H = \{h_1, h_2, \dots, h_N\}$, where $h_i \in \mathbb{R}^{F'}$ represents the embedding of node i and $F'$ denotes the embedding dimension. The generation of node embeddings can be expressed as follows:

$$H = \mathcal{E}(X, A) \quad (8)$$

In Formula (8), X is the node feature matrix, A is the adjacency matrix, and $\mathcal{E}$ is a graph convolution encoder that generates embeddings by aggregating the immediate neighbors of each node. These embeddings encapsulate the characteristics of a specific building while also integrating its contextual information within the graph. The generated node embedding $h_i$ summarizes a “subgraph” of the graph centered on node i, rather than exclusively the node itself. Such embeddings are termed “subgraph representations”.

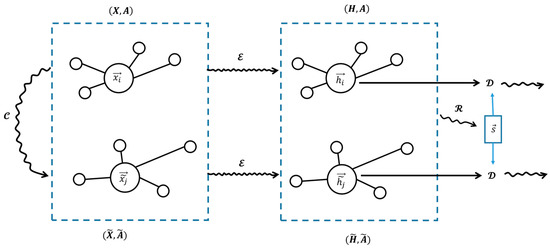

We utilize an unsupervised learning approach aimed at optimizing local-global mutual information to produce node embeddings that encapsulate both global and local information. The objective of the DGI model is to maximize the mutual information between the node embeddings $h_i$ and the graph-level summary vector $s$. Each node’s embedding signifies its distinct characteristics while ensuring coherence with the comprehensive information of the graph. The global graph summary vector is derived by aggregating all node embeddings, reflecting the overall semantic content of the graph. The graph convolution mechanism transforms the features of building nodes into vectors $h_i$. Subsequently, the local embedding vectors of all buildings are combined to create a summary vector $s$. Figure 7a illustrates the process of building node feature transformation and aggregation.

We train a discriminator by producing positive samples (node embeddings and global summaries from entire graphs) and negative samples (node embeddings and global summaries from altered graphs), therefore enabling node embeddings to encapsulate more nuanced representations.

We first consolidate all node embeddings in H into a graph-level summary vector $s$ through a readout function $\mathcal{R}$, represented as $s = \mathcal{R}(H)$. In this article, $\mathcal{R}$ averages the node embeddings along each dimension, which extracts the global semantic information of the entire graph. This global summary describes the overarching attributes of the building graph. To enable the comparison of local and global information, we generate pairs of positive and negative samples. Positive samples are $(h_i, s)$, while negative samples are $(\tilde{h}_j, s)$.

The positive sample comprises a node embedding and the global graph summary of the original graph, encapsulating both local and global information within the same graph. The negative sample graph $(\tilde{X}, \tilde{A})$ is generated by perturbing the original graph, for instance by randomly modifying node features or edges, and the node embeddings $\tilde{h}_j$ are derived from it. The negative samples are provided by pairing the summary $s$ from the original graph $(X, A)$ with patch representations $\tilde{h}_j$ of the alternative graph. This is shown in Figure 6.

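The DGI model uses a discriminator $\mathcal{D}$ to differentiate between positive sample pairs (from the original graph) and negative sample pairs (from an alternative graph). The discriminator improves the node embeddings through contrastive learning, enabling them to capture the relationship between local and global information. The objective function for optimization is a type of binary cross-entropy loss [41], given in Formula (9):

$$\mathcal{L} = \frac{1}{N + M} \left( \sum_{i=1}^{N} \mathbb{E}_{(X, A)} \left[ \log \mathcal{D}(h_i, s) \right] + \sum_{j=1}^{M} \mathbb{E}_{(\tilde{X}, \tilde{A})} \left[ \log \left( 1 - \mathcal{D}(\tilde{h}_j, s) \right) \right] \right) \quad (9)$$

In Formula (9), N is the number of positive samples, M is the number of negative samples, $h_i$ and $\tilde{h}_j$ are node embeddings of the original and the alternative graph, and $s$ is the global summary vector. By maximizing the scores of positive sample pairs while minimizing the scores of negative sample pairs, the DGI model is able to learn high-quality node embeddings. The structure of the DGI model is shown in Figure 6: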

Figure 6.

The DGI model.

In Figure 6, $X$ is the original node feature matrix, $H$ is the high-dimensional embedding representation of the nodes, $\mathcal{C}$ is the perturbation operation on the node features, $\mathcal{E}$ is the operation that generates node embeddings, $\mathcal{R}$ is the readout function, $s$ is the global graph summary vector, and $\mathcal{D}$ is the discriminator.
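The readout, the discriminator, and the objective of Formula (9) can be illustrated on tiny hand-made embeddings. This is only a sketch under stated assumptions: the embeddings are given directly instead of being produced by an encoder, the discriminator is a bilinear score passed through a sigmoid, the readout is a sigmoid of the per-dimension mean, and every name and number below is hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def readout(H):
    """Average each embedding dimension over all nodes, then apply a sigmoid."""
    n = len(H)
    return [sigmoid(sum(col) / n) for col in zip(*H)]

def discriminator(h, W, s):
    """Bilinear score sigmoid(h^T W s) for one (node embedding, summary) pair."""
    Ws = [sum(w * sj for w, sj in zip(row, s)) for row in W]
    return sigmoid(sum(hi * v for hi, v in zip(h, Ws)))

def dgi_objective(H, H_neg, W):
    """Objective of Formula (9); training would maximize this value."""
    s = readout(H)  # the summary always comes from the original graph
    pos = sum(math.log(discriminator(h, W, s)) for h in H)
    neg = sum(math.log(1.0 - discriminator(h, W, s)) for h in H_neg)
    return (pos + neg) / (len(H) + len(H_neg))

H = [[1.0, 2.0], [2.0, 1.0]]            # embeddings of the original graph
H_neg = [[-1.0, -2.0], [-2.0, -1.0]]    # embeddings of a perturbed graph
W = [[1.0, 0.0], [0.0, 1.0]]            # bilinear weights (identity for the demo)

good = dgi_objective(H, H_neg, W)
bad = dgi_objective(H, H, W)  # negatives indistinguishable from positives
print(good > bad)  # True
```

The objective is higher when the negative embeddings are easy to tell apart from the positive ones, which is the signal that drives the encoder during training.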

3.3. The Traverse of Building Graph

After the edge buildings are segmented from the building graph, constraints derived from the m_dis feature are applied to obtain the clustering results of the building graph. First, the natural breaks method [45] is used to divide the m_dis values into four levels, and the third-level m_dis value is taken as the separation threshold. An m_dis value below this threshold indicates that the two buildings are close and can potentially be placed in the same group; conversely, a higher m_dis value indicates a larger visual distance, implying that the buildings may belong to distinct groups.

The buildings in the graph structure are traversed to create the initial cluster, after which the buildings of the established clusters are removed from the total building set. A subsequent traversal is conducted to generate the second cluster, and this process continues until all edge buildings have been organized into their respective clusters. The design of the specific traversal algorithm is illustrated in the pseudo-code:

| Algorithm 1. BFS with m_dis Limit for Graph Traversal | |
|---|---|
| 1 | Input: Graph G = (V,E) (where V represents buildings and E represents edges), starting building Vstart, threshold m_dis_limit |
| 2 | Initialize: visited: an empty set to store visited buildings. queue: a list initialized with Vstart. traversal_result: an empty list to store the result of BFS traversal. |
| 3 | While queue is not empty do: |
| 4 | &emsp;Pop the first building Vcurrent from queue. |
| 5 | &emsp;If Vcurrent ∉ visited and G[Vcurrent].we = 1: |
| 6 | &emsp;&emsp;Add Vcurrent to visited. |
| 7 | &emsp;&emsp;Append Vcurrent to traversal_result. |
| 8 | &emsp;&emsp;For each neighbor Vneighbor of Vcurrent: |
| 9 | &emsp;&emsp;&emsp;If Vneighbor ∉ visited: |
| 10 | &emsp;&emsp;&emsp;&emsp;Retrieve edge_data for (Vcurrent,Vneighbor). |
| 11 | &emsp;&emsp;&emsp;&emsp;If edge_data exists and edge_data.m_dis ≤ m_dis_limit: |
| 12 | &emsp;&emsp;&emsp;&emsp;&emsp;Append Vneighbor to queue. |
| 13 | Return: traversal_result. |
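Algorithm 1 translates almost line by line into Python. The sketch below is one possible rendering, assuming the graph is stored as an adjacency dictionary, the m_dis values are keyed by unordered node pairs, and the whether_edge attribute is a dictionary of 0/1 flags; these data structures, the function name, and the toy graph are illustrative choices rather than the paper's implementation.

```python
from collections import deque

def bfs_edge_cluster(neighbors, m_dis, is_edge, start, m_dis_limit):
    """Collect the edge buildings reachable from start over edges with m_dis <= limit."""
    visited = set()
    queue = deque([start])
    traversal_result = []
    while queue:
        current = queue.popleft()
        # Only unvisited edge buildings (whether_edge = 1) are expanded.
        if current not in visited and is_edge.get(current) == 1:
            visited.add(current)
            traversal_result.append(current)
            for nb in neighbors[current]:
                if nb not in visited:
                    edge_m_dis = m_dis.get(frozenset((current, nb)))
                    if edge_m_dis is not None and edge_m_dis <= m_dis_limit:
                        queue.append(nb)
    return traversal_result

# Toy graph: C, G, P, E are edge buildings, A is an inner building.
neighbors = {"C": ["G", "A"], "G": ["C", "P"], "P": ["G", "E"], "E": ["P"], "A": ["C"]}
m_dis = {frozenset(("C", "G")): 1.0, frozenset(("G", "P")): 1.5,
         frozenset(("P", "E")): 4.0, frozenset(("C", "A")): 0.5}
is_edge = {"C": 1, "G": 1, "P": 1, "E": 1, "A": 0}

cluster = bfs_edge_cluster(neighbors, m_dis, is_edge, "C", 2.0)
print(cluster)  # ['C', 'G', 'P']
```

Building E is left out because the edge P-E exceeds the threshold, and the inner building A is queued but never added to the result.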

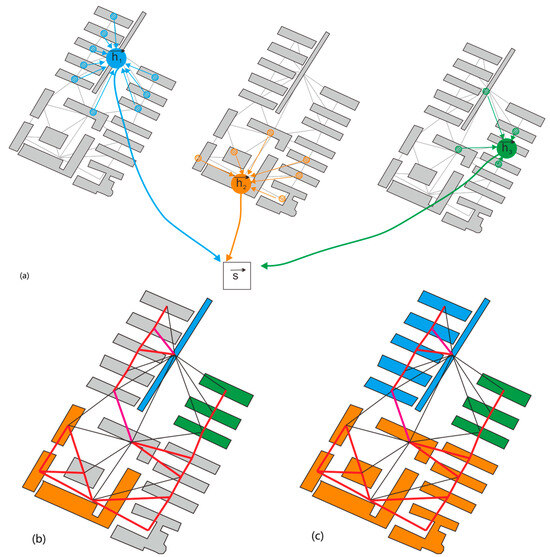

In the pseudocode, the variable G[Vcurrent].we denotes the whether_edge attribute (described in Section 3.1.5) of the current node Vcurrent. A value of 1 signifies that the node is an edge node. In Figure 7, an edge is depicted in black when its m_dis value is below the established threshold and in red when its m_dis value exceeds the threshold. Once the boundary structure is established, the nodes are categorized by traversing the graph along the edges whose m_dis value does not exceed the threshold, as in Algorithm 1. When no such edge remains to be followed from the visited nodes, the edge buildings visited so far are classified into a distinct building group. This procedure continues until all edge buildings are visited. In the illustration, colored nodes signify edge buildings, which are assigned distinct numbers during traversal to identify their respective clusters; gray nodes denote inner buildings, whose cluster numbers are determined by the cluster number of their nearest edge building.

Figure 7.

(a) The vector $h_i$ is the local graph convolution of a building, and all $h_i$ constitute the summary vector $s$. The large solid blue circle represents a building node, and the small blue diagonal circles around it represent the first-order nodes around this node. The same is true for the orange nodes and the green nodes. Their convolution information is all stacked in the global vector $s$; (b) three edge building clusters after the traversal; (c) inner buildings allocated to clusters based on proximity to adjacent edge buildings.
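Steps (b) and (c) of Figure 7 can be sketched together: the traversal is restarted from every edge building that has no cluster yet, and each inner building then inherits the cluster of an edge building. How "nearest" is measured for inner buildings is not specified in this section; the sketch assumes the adjacent edge building with the smallest m_dis, and leaves inner buildings without an adjacent edge building unassigned. All names and data are hypothetical.

```python
from collections import deque

def cluster_buildings(neighbors, m_dis, is_edge, m_dis_limit):
    """Return {building: cluster id}; None marks an unassigned inner building."""
    label = {}
    next_id = 0
    # (b) Repeated constrained BFS over the edge buildings.
    for start in neighbors:
        if is_edge.get(start) != 1 or start in label:
            continue
        queue = deque([start])
        while queue:
            v = queue.popleft()
            if v in label or is_edge.get(v) != 1:
                continue
            label[v] = next_id
            for u in neighbors[v]:
                d = m_dis.get(frozenset((v, u)))
                if u not in label and d is not None and d <= m_dis_limit:
                    queue.append(u)
        next_id += 1
    # (c) ASSUMPTION: an inner building joins the adjacent edge building
    # with the smallest m_dis.
    for v in neighbors:
        if is_edge.get(v) == 1:
            continue
        candidates = [(m_dis[frozenset((v, u))], u) for u in neighbors[v]
                      if is_edge.get(u) == 1 and frozenset((v, u)) in m_dis]
        label[v] = label[min(candidates)[1]] if candidates else None
    return label

neighbors = {"C": ["G", "A"], "G": ["C", "P"], "P": ["G", "E"],
             "E": ["P", "B"], "A": ["C"], "B": ["E"]}
m_dis = {frozenset(("C", "G")): 1.0, frozenset(("G", "P")): 1.5,
         frozenset(("P", "E")): 4.0, frozenset(("C", "A")): 0.5,
         frozenset(("E", "B")): 1.0}
is_edge = {"C": 1, "G": 1, "P": 1, "E": 1, "A": 0, "B": 0}

labels = cluster_buildings(neighbors, m_dis, is_edge, 2.0)
print(labels)
```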

4. Results

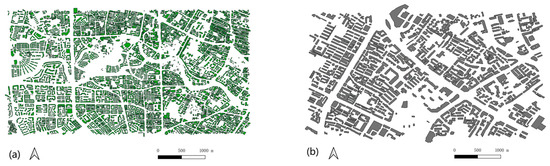

The experimental data presented in this article are derived from the Chengdu urban planning map, which was generated by the Chengdu Planning Research and Application Technology Center (abbreviated as CD data in the article). The CD dataset is the source of the training dataset, which comprises 4851 buildings. The test dataset is a subset of the CD dataset, consisting of 689 buildings. The training and test datasets contain a variety of concave buildings, including C-shaped, U-shaped, Z-shaped, L-shaped, and octopus-shaped structures, as illustrated in Figure 8 and Table 2.

Figure 8.

The experiment data has a map scale of 1:3000: (a) the train data from the CD dataset and (b) the test data from the CD dataset.

Table 2.

The percent of concave and rectangular buildings in the experiment data.

The three experiments are as follows. Section 4.1: semi-automatically identify the edge buildings in the training dataset. Section 4.2: employ the DGI model to train on the dataset in order to acquire the model parameters. Section 4.3: utilize the parameters acquired from model training to perform binary classification of edge buildings and inner buildings on the test dataset, then utilize the graph traversal method (described in Section 3.3, with Algorithm 1) to obtain the clustering results of the test dataset and compare these results with the results of the RF-EIC method (described in Section 3.2 and Section 3.3), the CDC method [16], and the MGP method [11].

4.1. Experiment for Semi-Automatically Labeling Edge-Buildings

This subsection pertains to the execution of edge-building delineation. We utilize the Local Outlier Factor (LOF) and the vector length from the Voronoi polygon center to the building center (vo_to_b_length) to develop edge building labeling. The following are the procedural steps:

4.1.1. Attribute Definition and Grading

The LOF attribute denotes the degree of local outlierness of a building, with elevated values signifying an increased probability of the building being situated at the periphery. Utilizing the natural break method [45], the LOF attribute is categorized into three tiers: low-value range (0.838–0.995), medium-value range (0.995–1.055), and high-value range (1.055–2.000). The vo_to_b_length attribute indicates the vector length from the center of the Voronoi polygon to the center of the building. Greater values reflect a more significant divergence between the Voronoi polygon center and the building center. According to the natural break method, this attribute is also classified into three levels: low-value range (0.02–3.24), medium-value range (3.24–8.70), and high-value range (8.70–115.41).

4.1.2. The Semi-Automated Labeling of Edge Buildings

First, the following rules are applied to automatically identify the edge buildings:

- (1) When a building’s vo_to_b_length value falls within the high-value range (>8.690), it receives the label of an edge building (whether_edge = 1).

- (2) If the vo_to_b_length value falls within the medium-value range (3.236–8.690) and the corresponding LOF value falls within the high-value range (>1.055), it is also labeled as an edge building (whether_edge = 1).

- (3) Buildings that do not satisfy the above conditions are labeled as inner buildings (whether_edge = 0).
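The three rules above can be written as a small function. The thresholds are the values quoted in the rules; the treatment of values that fall exactly on a boundary is an assumption, and the function and constant names are hypothetical.

```python
VO_MEDIUM_MIN = 3.236  # lower bound of the medium vo_to_b_length range
VO_HIGH_MIN = 8.690    # values above this are in the high vo_to_b_length range
LOF_HIGH_MIN = 1.055   # values above this are in the high LOF range

def label_whether_edge(vo_to_b_length, lof):
    """Return 1 for an edge building and 0 for an inner building."""
    if vo_to_b_length > VO_HIGH_MIN:                      # rule (1)
        return 1
    if VO_MEDIUM_MIN <= vo_to_b_length <= VO_HIGH_MIN and lof > LOF_HIGH_MIN:
        return 1                                          # rule (2)
    return 0                                              # rule (3)

samples = [(9.0, 0.9), (5.0, 1.2), (5.0, 1.0), (1.0, 1.9)]
print([label_whether_edge(v, l) for v, l in samples])  # [1, 1, 0, 0]
```

These automatic labels are only the starting point; as described next, a portion of them is corrected by hand.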

Ultimately, a collection of labels that are consistent with the human identification results is obtained by manually modifying a few labels after the edge building is automatically marked. The training dataset contains 2065 edge buildings that are automatically labeled according to the rules and 727 edge buildings that are manually modified, resulting in 2792 edge buildings in total. Similarly, we added labels of edge buildings to the test datasets to evaluate the results of the DGI model binary classification.

4.2. The DGI Model Training Phase

The DGI model used in this study has an input feature dimension of 7, corresponding to the seven edge-building features, and a hidden layer dimension of 512, which is the size of the node embeddings produced by the graph convolutional network (GCN) module. The GCN module applies a linear transformation layer followed by a nonlinear activation function (PReLU). Weights are initialized from the Xavier uniform distribution and bias terms are initialized to zero, which improves the stability and efficiency of training. The GCN module aggregates neighbor information through the adjacency matrix, which is applied as a matrix multiplication during forward propagation for computational efficiency. Each node’s embedding is thus the weighted sum of its adjacent nodes’ features, passed through the activation function. The global readout module AvgReadout computes the global representation as the average of all node embeddings, and the Sigmoid function then compresses it to the range [0, 1], producing a summary vector for the whole graph.

The discriminator module distinguishes the global-local alignment relationship between positive samples (real graph) and negative samples (alternative graph) through contrastive learning tasks. It uses a bilinear layer to calculate the relationship score between the global representation and the node embedding, generating the final learning vector by concatenating the score vectors of positive and negative samples.
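To make the data flow of the encoder, readout, and discriminator concrete, the following is a toy sketch in plain Python. It is not the implementation used in this study. The helper names are hypothetical, the hidden size is 3 instead of 512, the weights are random rather than Xavier-initialized, the PReLU slope is fixed, the adjacency matrix is row-normalized with self-loops, and the negative sample is built by shuffling feature rows as in the original DGI paper [41]. All of these are assumptions made for illustration.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def prelu(m, slope=0.25):
    """PReLU with a fixed slope (the slope is learned in a real model)."""
    return [[v if v > 0 else slope * v for v in row] for row in m]


def encode(adj_norm, feats, weight):
    """One GCN layer: PReLU(A_norm @ X @ W)."""
    return prelu(matmul(adj_norm, matmul(feats, weight)))


def readout(h):
    """AvgReadout followed by a sigmoid: the graph-level summary vector."""
    means = [sum(col) / len(h) for col in zip(*h)]
    return [1.0 / (1.0 + math.exp(-m)) for m in means]


def scores(h, bilinear, summary):
    """Bilinear discriminator score h_i^T W s for every node."""
    ws = [sum(w * s for w, s in zip(row, summary)) for row in bilinear]
    return [sum(a * b for a, b in zip(node, ws)) for node in h]


random.seed(0)
n_nodes, n_feats, n_hidden = 4, 7, 3  # 7 input features as in the text
feats = [[random.random() for _ in range(n_feats)] for _ in range(n_nodes)]
weight = [[random.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_feats)]
bilinear = [[random.uniform(-0.5, 0.5) for _ in range(n_hidden)] for _ in range(n_hidden)]
# Row-normalized adjacency with self-loops for a 4-node path graph (toy data).
adj_norm = [[0.5, 0.5, 0, 0], [1 / 3, 1 / 3, 1 / 3, 0],
            [0, 1 / 3, 1 / 3, 1 / 3], [0, 0, 0.5, 0.5]]

h_pos = encode(adj_norm, feats, weight)          # embeddings of the real graph
shuffled = random.sample(feats, k=len(feats))    # corrupted graph: shuffled rows
h_neg = encode(adj_norm, shuffled, weight)       # embeddings of the negative sample
summary = readout(h_pos)
# Concatenate positive and negative scores, as described above.
logits = scores(h_pos, bilinear, summary) + scores(h_neg, bilinear, summary)
print(len(summary), len(logits))  # 3 8
```

In training, the concatenated scores would be compared with targets of 1 for the positive half and 0 for the negative half; that step is omitted here.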

We use a logistic regression classifier [46] to perform binary classification on the final learning vectors generated by the DGI model. The classifier input is the embedding vector of a node, so its input dimension equals the embedding dimension, and the output is a probability distribution of dimension 2 indicating whether the node is an edge or inner node. The Adam optimizer is employed with a learning rate of 0.01 and weight decay set to 0 to avoid overfitting, and the classifier is trained on the first 100 samples of the embedding vectors. The labels during training are randomly generated.

During training, we compute the logits (unnormalized scores) output by the classifier, evaluate the cross-entropy loss between the logits and the true labels, and perform backpropagation to update the classifier parameters. After training, the classifier is switched to evaluation mode and applied to the embedding vectors of all nodes; an argmax over the output logits yields the final binary classification result (edge or inner).
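The loss and the prediction step described above can be illustrated with a small sketch in plain Python. The function names are hypothetical, the logits are made-up values, and mapping class index 1 to edge buildings follows the whether_edge convention as an assumption.

```python
import math


def cross_entropy(logits, label):
    """Cross-entropy of one sample: -log softmax(logits)[label]."""
    peak = max(logits)  # subtract the maximum for numerical stability
    log_sum = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_sum - logits[label]


def predict(logits):
    """Index of the largest logit: 0 = inner building, 1 = edge building."""
    return max(range(len(logits)), key=lambda i: logits[i])


# Toy (logits, true label) pairs, not taken from the experiments.
batch = [([2.0, -1.0], 0), ([0.3, 0.9], 1), ([1.5, 0.5], 1)]
mean_loss = sum(cross_entropy(z, y) for z, y in batch) / len(batch)
predictions = [predict(z) for z, _ in batch]
print(predictions)  # [0, 1, 0]
```

The third sample is misclassified, so it contributes the largest term to the mean loss; backpropagation would reduce that term by raising the second logit.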

We developed training protocols for eight feature combinations to assess their impact on binary classification tasks involving edge buildings versus inner buildings. These combinations range from subsets missing a single characteristic to a comprehensive set that includes all seven features, with the goal of evaluating the effect of each feature or group of features on classification performance. The specific combinations are in Table 3:

Table 3.

The training of different feature combinations.

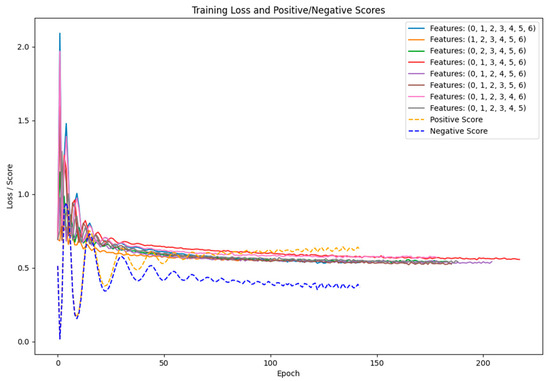

In each training session, the train, validation, and test splits are identical, accounting for 60%, 20%, and 20% of the data, respectively. To prevent overfitting, we implement early stopping with a counter variable named cnt_wait. At each step, the code checks whether the current loss is lower than the lowest loss recorded so far; if the loss has not improved, cnt_wait is increased by 1. When cnt_wait reaches the predetermined patience value, the early stopping mechanism is activated and training terminates. In other words, training is discontinued if the loss fails to improve over several training cycles. The training loss is illustrated in Figure 9:

Figure 9.

The training loss diagram for eight feature combinations.
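The early stopping rule described above can be sketched as follows. The function name, the loss values, and the patience of 3 are hypothetical, and resetting cnt_wait to zero after an improvement is an assumption borrowed from common practice rather than a detail stated in the text.

```python
def train_with_early_stopping(losses, patience):
    """Return (stop_step, best_loss) for a sequence of per-step losses."""
    best = float("inf")
    cnt_wait = 0
    for step, loss in enumerate(losses, start=1):
        if loss < best:
            best = loss      # improvement: remember it and reset the counter
            cnt_wait = 0
        else:
            cnt_wait += 1    # no improvement at this step
        if cnt_wait == patience:
            return step, best  # patience exhausted: stop training
    return len(losses), best


losses = [0.70, 0.66, 0.64, 0.65, 0.64, 0.66, 0.63]
print(train_with_early_stopping(losses, patience=3))  # (6, 0.64)
```

In this toy run the loss would have improved again at step 7, which shows the trade-off behind the patience value: a small patience ends training sooner but may stop before a later improvement.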

Training each feature combination takes 5 min on a CPU, so the cumulative training time for the 8 models is 40 min. Among the combinations, the feature set (1, 2, 3, 4, 5, 7) terminates training earliest, after 114 steps, which indicates that omitting the first_neighbor_avg_r feature leads to the most rapid convergence. The feature combination (1, 2, 3, 5, 6, 7) trains the slowest, terminating after 233 steps, which implies that without the vo_to_b_length feature the model needs additional iterations to identify the optimal solution. This suggests that the average radius of a building’s first-order neighborhood polygon has little influence on whether the building is an edge building, whereas the vector length from the center of the building’s Voronoi cell to the building’s center has a significant influence.

The blue and yellow dotted lines in Figure 9 represent the negative and positive sample scores, respectively, during training with the feature combination (1, 2, 3, 4, 5, 6, 7). The average positive sample score of the node embeddings starts at 0.5 and increases progressively as training proceeds, ultimately reaching 0.63, while the average negative sample score decreases steadily until it reaches 0.34. Positive and negative sample scores are therefore clearly distinguished. A rising positive sample score means that the node embeddings and the global summary become more correlated, that is, their mutual information is being maximized. A falling negative sample score shows that the global summary can suppress noise interference by disregarding the perturbed node embeddings. However, the average negative sample score is not close to its ideal value of 0, and the average positive sample score is not close to its ideal value of 1, which suggests that the approach has room for improvement.

This work uses Precision, Recall, and F1-score to assess the binary classification of edge buildings and inner buildings. The formulas are as follows:

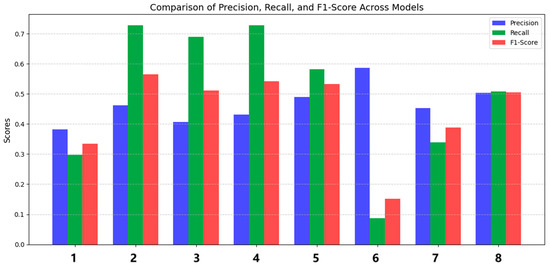

In Formulas (10)–(12), TP (true positive) is the number of samples predicted as positive that are indeed positive, FP (false positive) is the number of samples predicted as positive that are actually negative, and FN (false negative) is the number of samples predicted as negative that are actually positive. The binary classification results for edge buildings and inner buildings under the various training feature combinations are illustrated in Figure 10 below:

Figure 10.

Evaluation diagram of edge buildings and inner buildings.
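As a worked illustration of the three metrics, the sketch below counts TP, FP, and FN from label lists and applies the standard definitions of precision, recall, and F1-score, which Formulas (10)–(12) are assumed to follow. The function name and the toy labels are hypothetical; edge buildings are treated as the positive class.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Precision, recall, and F1-score for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


y_true = [1, 1, 1, 0, 0, 0, 1, 0]  # 1 = edge building, 0 = inner building
y_pred = [1, 1, 0, 1, 0, 0, 1, 0]
print(classification_metrics(y_true, y_pred))  # (0.75, 0.75, 0.75)
```

Because the F1-score is the harmonic mean of precision and recall, it always lies between the two, which is a quick consistency check when reading reported triples.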

In Figure 10, the horizontal axis denotes the eight training feature combinations, each corresponding to one trained model, labeled Model 1 to Model 8, while the vertical axis shows the evaluation metrics for binary classification: precision, recall, and F1-score. On all three measures, Model 8 performed the best, indicating that using all seven features together yields the best edge-building classification.

The metrics for training No. 1 are clearly poor, indicating that m_dis_cv, a statistical measure that reflects the irregularity of the distribution surrounding a building, is a significant feature for delineating edge buildings. The m_dis feature (refer to Section 3.1.5), which quantifies the spacing and volume of a building, is a distinctive element introduced in this article. Trainings No. 2 to 5 show a significant gap between precision and recall, with recall being the higher of the two. High recall means that the model identifies most positive samples, but the low precision means that it also generates more false positives. This implies that the absence of any one of the features first_neighbor_avg_r, center_devia, vo_to_b_length, or density degrades the classification of edge buildings. Training No. 6 has the lowest recall, indicating that a significant number of edge buildings go unrecognized, and also the lowest F1-score, suggesting that the LOF value is a crucial attribute for detecting edge buildings, which corroborates prior research findings [29,35]. The poor recall of training No. 7 indicates that concavity is a significant feature for identifying edge buildings; manual visual inspection revealed that a greater number of edge buildings have L-shaped, C-shaped, or U-shaped forms.

Combining the performance of the eight training feature combinations in Table 3, this paper selects the eighth set of feature combinations when classifying edge buildings and inner buildings; that is, all seven features are considered as training feature inputs.

To demonstrate the advantage of the DGI method employed in this paper, a random forest classifier is included as a reference for the binary classification of buildings. Its input features are identical to those of the DGI model, that is, all seven features. The classifier is taken from the scikit-learn library [47], with random_state set to 42 and the number of estimators set to 100.

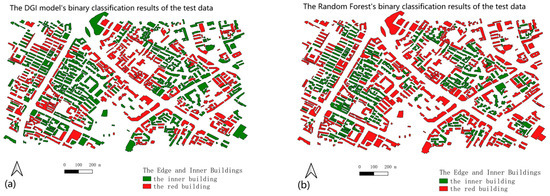

The binary classification results for edge and inner buildings are illustrated in Figure 11, where Figure 11a shows the result of DGI and Figure 11b shows the result of random forest (hereinafter referred to as RF).

Figure 11.

The binary classification results of edge and inner buildings: (a) DGI; (b) Random Forest.

As illustrated in Figure 11, some buildings are classified as inner buildings by the DGI model but as edge buildings by RF. The precision, recall, and F1-score of the random forest method are 0.59, 0.77, and 0.67, respectively. The recall of the random forest method is greater than that of the DGI model, suggesting that it has identified a higher number of edge buildings.

4.3. Clustering Comparison Experiment

The DGI-EIC results were obtained by applying a graph traversal, constrained by m_dis feature values, to the edge and inner buildings from Section 3.2. With the graph traversal and the m_dis constraints, the clustering results better describe the density of the buildings’ geometric arrangement and match human perception.

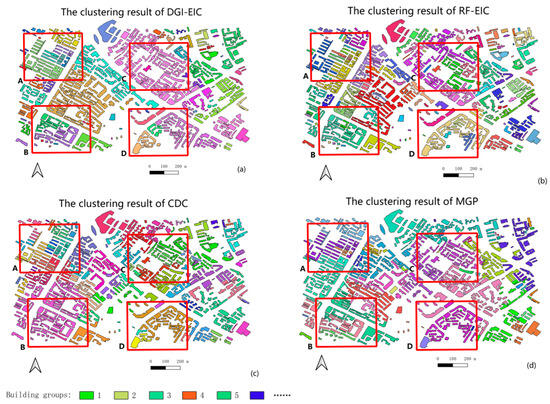

Section 4.2 concluded with binary classification experiments for inner and edge buildings using a random forest classifier. We therefore construct a random forest edge-inner classification variant, referred to as the RF-EIC method, by replacing the binary classification step of the DGI-EIC method with the random forest classifier. Consequently, the DGI-EIC method is compared against three methods: RF-EIC, the CDC method [16], and the MGP method [11]. The CDC method uses only the x- and y-coordinates of the building’s center point, the area, and the average distance [44] as input, whereas the MGP method weights the four Gestalt properties of the edges connecting buildings with the coefficients (0.6, 0.05, 0.3, 0.05) specified in the original text. Figure 12 below compares the four clustering results:

Figure 12.

Comparison of the results of four clustering methods: (a) DGI-EIC; (b) RF-EIC; (c) CDC; (d) MGP. The four local areas A, B, C, and D marked by red boxes in the figure are selected for comparison. Buildings labeled with the same color are considered to be in the same group.

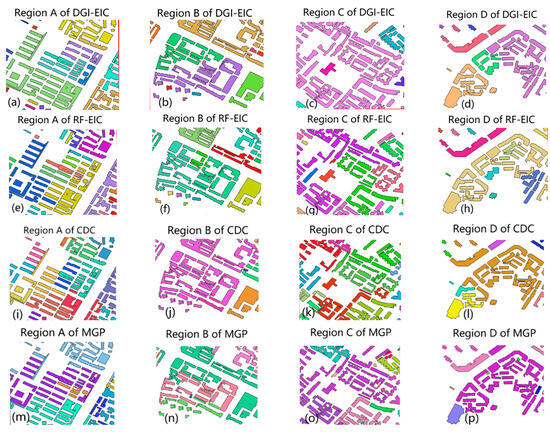

As shown in Figure 13 below, four local regions A, B, C, and D of the test dataset are selected to further demonstrate the reliability of the DGI-EIC clustering results. Each column in Figure 13 corresponds to one region, and each row shows the clustering results of one method in that region. We examine the figure column by column.

In Region A, Figure 13a is the result of the DGI-EIC method and Figure 13e is the result of the RF-EIC method. Both methods identify the E-type cluster in the upper left corner of Region A well (the green cluster in Figure 13a and the blue cluster in Figure 13e), and this result is consistent with human visual cognition. Neither the CDC method (Figure 13i) nor the MGP method (Figure 13m) correctly identifies this E-type building group.

In Region B, the purple region in Figure 13b reveals a defect of the DGI-EIC method: two clusters are mistakenly identified as one. Figure 13f identifies the same region as green clusters of different tones, so the RF-EIC result is closer to the square block division. Figure 13j shows that the CDC method cannot recognize fine local cluster shapes and treats this area as a single cluster. The red and green clusters in Figure 13n are incorrect building group recognition results.

In Region C, Figure 13c recognizes a large area, while the cluster shapes identified in Figure 13g do not conform to human visual cognition. The clustering results in Figure 13k,o are also poor, indicating that none of the four methods clusters Region C effectively.

In Region D, Figure 13d recognizes four distinct clusters, whereas Figure 13h mistakenly merges three of the clusters from Figure 13d into a single cluster, even though there is a clear gap in the middle of the merged cluster. The result in Figure 13d is therefore better. The problem of an excessive recognition range for a single cluster also occurs in Figure 13l,p, which means that the DGI-EIC method clusters Region D better than the other methods.

Figure 13.

Clustering details of test data: (a) DGI-EIC; (b) DGI-EIC; (c) DGI-EIC; (d) DGI-EIC; (e) RF-EIC; (f) RF-EIC; (g) RF-EIC; (h) RF-EIC; (i) CDC; (j) CDC; (k) CDC; (l) CDC; (m) MGP; (n) MGP; (o) MGP; (p) MGP.

The clustering results of the test datasets are evaluated using these indexes: silhouette coefficient, Davies-Bouldin index, Calinski-Harabasz index, and ARI [48]. The results of index statistics are as shown in Table 4:

Table 4.

Evaluation of clustering results of the test dataset.

The index analysis in Table 4 indicates that the Silhouette Coefficient is poor for the DGI-EIC method, the CDC method, and the MGP method. The Silhouette Coefficient is computed mainly from four attributes (the x- and y-coordinates of the building’s center, the area, and the average distance), whereas the separation and internal cohesion of building clusters depend on more intricate Gestalt characteristics. Consequently, the three internal evaluation metrics (Silhouette coefficient, Davies-Bouldin index, and Calinski-Harabasz index) yield suboptimal assessments for the three clustering methods.

On the test dataset, the DGI-EIC method presented in this work attains a notable improvement in the ARI index over the CDC method. Its clustering results closely align with the clusters perceived by the human eye, which supports the premise of this article: accurate delineation of clusters is achievable through the successful extraction of edge buildings. Nonetheless, a drawback of this approach is that the trained DGI model yielded suboptimal results when transferred from Chengdu to Nanchang, mostly because of the substantial differences in data distribution density and morphology between the two cities. The MGP method exhibits minimal change in the ARI index across the two city datasets, demonstrating its robustness to diverse data distributions.
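For readers unfamiliar with ARI, the sketch below computes it from a reference grouping and a predicted grouping using the standard pair-counting formula. It is an illustration only: the function name and the toy labels are hypothetical, the input is assumed to contain at least two buildings, and returning 1.0 in the degenerate case is a convention chosen for this sketch.

```python
from collections import Counter
from math import comb


def adjusted_rand_index(labels_true, labels_pred):
    """ARI = (index - expected index) / (maximum index - expected index)."""
    n = len(labels_true)
    cells = Counter(zip(labels_true, labels_pred))  # contingency table
    sum_cells = sum(comb(c, 2) for c in cells.values())
    sum_rows = sum(comb(c, 2) for c in Counter(labels_true).values())
    sum_cols = sum(comb(c, 2) for c in Counter(labels_pred).values())
    expected = sum_rows * sum_cols / comb(n, 2)
    maximum = (sum_rows + sum_cols) / 2
    if maximum == expected:
        return 1.0  # degenerate case, e.g. both groupings are one cluster
    return (sum_cells - expected) / (maximum - expected)


reference = [0, 0, 0, 1, 1, 1]   # toy manual grouping of six buildings
predicted = [0, 0, 1, 1, 2, 2]   # toy output of a clustering method
print(round(adjusted_rand_index(reference, predicted), 3))  # 0.242
```

ARI depends only on which buildings are grouped together, not on the cluster identifiers themselves, so relabeling the predicted clusters leaves the score unchanged.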

5. Discussion

Building clustering is an unsupervised learning task. The primary significance of this study is the identification of edge buildings in urban morphology through analysis of the spatial distribution characteristics of buildings. However, the significant differences in distribution patterns, morphological characteristics, and other properties between cities not only make it harder to transfer a model trained on one region to another, but also place greater demands on the model’s generalization ability and on the accuracy of cross-region clustering. Therefore, future research should investigate transfer learning or adaptive clustering algorithms to enhance the model’s adaptability to data from different cities.

Furthermore, evaluating clustering results remains a significant challenge. The Adjusted Rand Index (ARI) relies on the correct cluster labels, which are frequently difficult to obtain in unsupervised clustering. Conversely, evaluation with internal indicators (e.g., the Silhouette coefficient) requires the selection of particular features, such as the building’s shape characteristics. However, shape characteristics have numerous definitions, and additional research is required to choose among them judiciously so that cluster quality can be evaluated accurately.

Meanwhile, the rapid advancement of deep learning has facilitated the development of clustering methods based on generative adversarial networks (GANs), which open up new research avenues for building clustering. Such methods can not only capture the intricate spatial relationships between buildings but also substantially enhance the robustness and accuracy of clustering by jointly optimizing the clustering objective and the feature extraction process. Consequently, future research may attempt to integrate multi-scale map feature extraction with GANs.

Author Contributions

Conceptualization, Hesheng Huang; Methodology, Hesheng Huang; Data Curation, Hesheng Huang and Yijun Zhang; Resources, Yijun Zhang; Software, Hesheng Huang; Visualization, Hesheng Huang; Writing—Original Draft Preparation, Hesheng Huang; Writing—Review and Editing, Hesheng Huang. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Science and Technology Plan Project of the Jiangxi Provincial Department of Education, GJJ200454.

Data Availability Statement

Data is contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, Z.; Liu, Q.; Tang, J. Towards a Scale-driven Theory for Spatial Clustering. Acta Geod. Cartogr. Sin. 2017, 46, 1534–1548.

- Deng, M.; Tang, J.; Liu, Q.; Wu, F. Recognizing building groups for generalization: A comparative study. Cartogr. Geogr. Inf. Sci. 2018, 45, 187–204.

- Basaraner, M.; Selcuk, M. A structure recognition technique in contextual generalisation of buildings and built-up areas. Cartogr. J. 2008, 45, 274–285.

- Allouche, M.K.; Moulin, B. Amalgamation in cartographic generalization using Kohonen’s feature nets. Int. J. Geogr. Inf. Sci. 2005, 19, 899–914.

- Huang, H.; Guo, Q.; Sun, Y.; Liu, Y. Reducing building conflicts in map generalization with an improved PSO algorithm. ISPRS Int. J. Geo-Inf. 2017, 6, 127.

- Sahbaz, K.; Basaraner, M. A zonal displacement approach via grid point weighting in building generalization. ISPRS Int. J. Geo-Inf. 2021, 10, 105.

- Li, Z.; Yan, H.; Ai, T.; Chen, J. Automated building generalization based on urban morphology and Gestalt theory. Int. J. Geogr. Inf. Sci. 2004, 18, 513–534.

- Qi, H.B.; Li, Z.L. An approach to building grouping based on hierarchical constraints. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci. 2008, XXXVII, 449–454.

- Yan, H.; Weibel, R.; Yang, B. A multi-parameter approach to automated building grouping and generalization. Geoinformatica 2008, 12, 73–89.

- Zhang, L.; Deng, H.; Chen, D.; Wang, Z. A spatial cognition-based urban building clustering approach and its applications. Int. J. Geogr. Inf. Sci. 2013, 27, 721–740.

- Wang, W.; Du, S.; Guo, Z.; Luo, L. Polygonal clustering analysis using multilevel graph-partition. Trans. GIS 2015, 19, 716–736.

- Chen, Z.; Ma, X.; Wu, L.; Xie, Z. An intuitionistic fuzzy similarity approach for clustering analysis of polygons. ISPRS Int. J. Geo-Inf. 2019, 8, 98.

- Xu, D.; Tian, Y. A comprehensive survey of clustering algorithms. Ann. Data Sci. 2015, 2, 165–193.

- Basaraner, M.; Cetinkaya, S. Performance of shape indices and classification schemes for characterising perceptual shape complexity of building footprints in GIS. Int. J. Geogr. Inf. Sci. 2017, 31, 1952–1977.

- Bei, W.; Guo, M.; Huang, Y. A spatial adaptive algorithm framework for building pattern recognition using graph convolutional networks. Sensors 2019, 19, 5518.

- Peng, D.; Gui, Z.; Wang, D.; Ma, Y.; Huang, Z.; Zhou, Y.; Wu, H. Clustering by measuring local direction centrality for data with heterogeneous density and weak connectivity. Nat. Commun. 2022, 13, 5455.

- Zahn, C.T. Graph-theoretical methods for detecting and describing gestalt clusters. IEEE Trans. Comput. 1971, 100, 68–86.

- Zhong, C.; Miao, D.; Wang, R. A graph-theoretical clustering method based on two rounds of minimum spanning trees. Pattern Recognit. 2010, 43, 752–766.

- Anders, K.H. A hierarchical graph-clustering approach to find groups of objects. In Proceedings of the 5th Workshop on Progress in Automated Map Generalization, Paris, France, 28–30 April 2003; Citeseer: Princeton, NJ, USA, 2003; pp. 1–8.

- Liu, Q.; Deng, M.; Shi, Y.; Wang, J. A density-based spatial clustering algorithm considering both spatial proximity and attribute similarity. Comput. Geosci. 2012, 46, 296–309.

- Cetinkaya, S.; Basaraner, M.; Burghardt, D. Proximity-based grouping of buildings in urban blocks: A comparison of four algorithms. Geocarto Int. 2015, 30, 618–632.

- Yu, W.; Ai, T.; Liu, P.; Cheng, X. The analysis and measurement of building patterns using texton co-occurrence matrices. Int. J. Geogr. Inf. Sci. 2017, 31, 1079–1100.

- Wei, Z.; Guo, Q.; Wang, L.; Yan, F. On the spatial distribution of buildings for map generalization. Cartogr. Geogr. Inf. Sci. 2018, 45, 539–555.

- Liu, P.; Shao, Z.; Xiao, T. Second-order texton feature extraction and pattern recognition of building polygon cluster using CNN network. Int. J. Appl. Earth Obs. Geoinf. 2024, 129, 103794.

- Regnauld, N. Contextual building typification in automated map generalization. Algorithmica 2001, 30, 312–333.

- Pilehforooshha, P.; Karimi, M. An integrated framework for linear pattern extraction in the building group generalization process. Geocarto Int. 2019, 34, 1000–1021.

- Nosovskiy, G.V.; Liu, D.; Sourina, O. Automatic clustering and boundary detection algorithm based on adaptive influence function. Pattern Recognit. 2008, 41, 2757–2776.

- Sander, J.; Ester, M.; Kriegel, H.-P.; Xu, X. Density-based clustering in spatial databases: The algorithm gdbscan and its applications. Data Min. Knowl. Discov. 1998, 2, 169–194.

- Breunig, M.M.; Kriegel, H.P.; Ng, R.T.; Sander, J. LOF: Identifying density-based local outliers. In Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data, Dallas, TX, USA, 16–18 May 2000; pp. 93–104.

- Zhang, X.; Ai, T.; Stoter, J. The evaluation of spatial distribution density in map generalization, ISPRS 2008. In Proceedings of the XXI Congress: Silk Road for Information from Imagery: The International Society for Photogrammetry and Remote Sensing, Beijing, China, 3–11 July 2008; Comm. II, WG II/2. International Society for Photogrammetry and Remote Sensing (ISPRS): Beijing, China, 2008; pp. 181–187.

- Deng, M.; Liu, Q.; Cheng, T.; Shi, Y. An adaptive spatial clustering algorithm based on Delaunay triangulation. Comput. Environ. Urban. Syst. 2011, 35, 320–332.

- Jahirabadkar, S.; Kulkarni, P. Algorithm to determine ε-distance parameter in density based clustering. Expert. Syst. Appl. 2014, 41, 2939–2946.

- Rodriguez, A.; Laio, A. Clustering by fast search and find of density peaks. Science 2014, 344, 1492–1496.

- Pilehforooshha, P.; Karimi, M. A local adaptive density-based algorithm for clustering polygonal buildings in urban block polygons. Geocarto Int. 2020, 35, 141–167.

- Meng, N.; Wang, Z.; Gao, C.; Li, L. A vector building clustering algorithm based on local outlier factor. Geomat. Inf. Sci. Wuhan Univ. 2024, 49, 562–571.

- Cheng, B.; Liu, Q.; Li, X. Local perception-based intelligent building outline aggregation approach with back propagation neural network. Neural Process. Lett. 2015, 41, 273–292.

- Yan, X.; Ai, T.; Yang, M.; Tong, X.; Liu, Q. A graph deep learning approach for urban building grouping. Geocarto Int. 2022, 37, 2944–2966.

- Kipf, T.N.; Welling, M. Semi-supervised classification with graph convolutional networks. arXiv 2016, arXiv:1609.02907.

- Zhao, R.; Ai, T.; Yu, W.; He, Y.; Shen, Y. Recognition of building group patterns using graph convolutional network. Cartogr. Geogr. Inf. Sci. 2020, 47, 400–417.

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27.

- Veličković, P.; Fedus, W.; Hamilton, W.L.; Liò, P.; Bengio, Y.; Hjelm, R.D. Deep Graph Infomax. arXiv 2018, arXiv:1809.10341.

- Wu, J.; Dai, P.; Hu, X.; Zhao, Y.; Xiong, J.; Hu, L.; Wei, N.; Tu, H. An adaptive approach for generating Voronoi diagrams for residential areas containing adjacent polygons. Int. J. Digit. Earth 2024, 17, 2431100.

- Dijkstra, E.W. A note on two problems in connexion with graphs. Numer. Math. 1959, 1, 269–271.

- Yan, X.; Ai, T.; Yang, M.; Yin, H. A graph convolutional neural network for classification of building patterns using spatial vector data. ISPRS J. Photogramm. Remote Sens. 2019, 150, 259–273.

- Chen, J.; Yang, S.T.; Li, H.W.; Zhang, B.; Lv, J.R. Research on geographical environment unit division based on the method of natural breaks (Jenks). Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2013, 40, 47–50.

- Richardson, A. Logistic Regression: A Self-Learning Text, by David G. Kleinbaum, Mitchel Klein. Int. Stat. Rev. 2011, 79, 296.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

- Arbelaitz, O.; Gurrutxaga, I.; Muguerza, J.; Pérez, J.M.; Perona, I. An extensive comparative study of cluster validity indices. Pattern Recognit. 2013, 46, 243–256.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).