A Traffic Flow Forecasting Method Based on Transfer-Aware Spatio-Temporal Graph Attention Network

Abstract

1. Introduction

- (1)

- A transfer probability matrix and a distance decay matrix are introduced into the GAT to characterize the transmission capability and distance-dependent correlation attenuation between road nodes, enabling more accurate modeling of spatial dependencies.

- (2)

- A gating mechanism bridges spatial and temporal representations, allowing dynamic feature fusion across time intervals and capturing spatiotemporal continuity.

- (3)

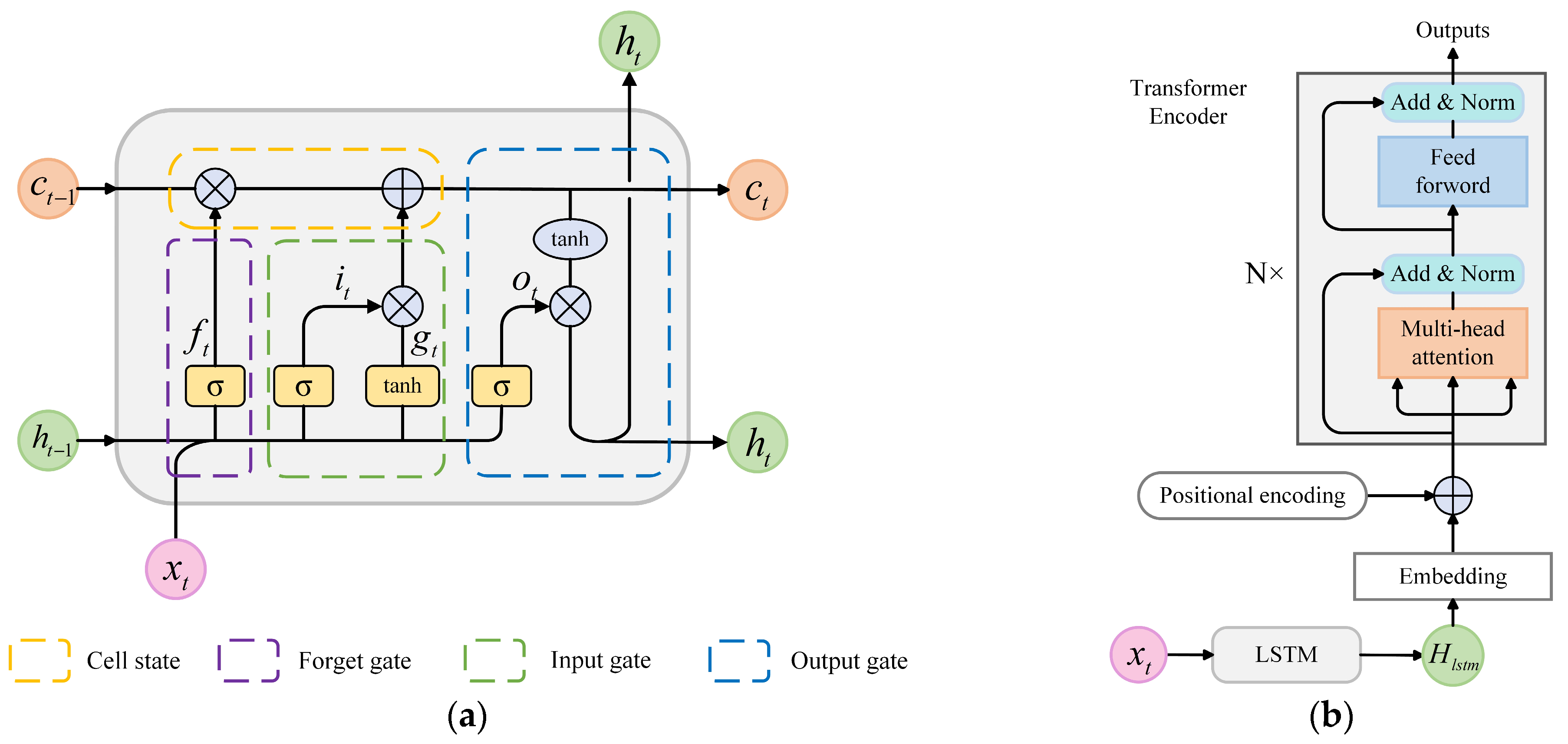

- The integration of LSTM and a Transformer Encoder enhances the ability to model both local and global temporal dependencies, addressing the limitations of existing sequential models.
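
As a rough illustration of contribution (1), the sketch below rescales the softmax attention that a node pays to each neighbour by a transfer probability and a distance decay term, then renormalizes. The Gaussian-style decay, the multiplicative combination, and every name used here (`distance_decay`, `transfer_aware_attention`, `sigma`) are assumptions made for illustration, not the paper's exact formulation.

```python
import math


def distance_decay(dist, sigma=1.0):
    """Assumed Gaussian-style decay: weight is 1 at distance 0 and shrinks with distance."""
    return math.exp(-(dist ** 2) / (sigma ** 2))


def transfer_aware_attention(scores, transfer_prob, dist, sigma=1.0):
    """Attention weights over one node's neighbours.

    scores, transfer_prob, dist: equal-length lists, one entry per neighbour.
    Assumes at least one neighbour has a positive transfer probability.
    """
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    # Scale each unnormalized softmax term by transfer probability and decay.
    modulated = [
        e * p * distance_decay(d, sigma)
        for e, p, d in zip(exps, transfer_prob, dist)
    ]
    total = sum(modulated)
    return [m / total for m in modulated]


# Three neighbours with identical raw attention scores: the ranking is then
# driven entirely by transfer probability and distance.
alpha = transfer_aware_attention(
    scores=[1.0, 1.0, 1.0],
    transfer_prob=[0.7, 0.2, 0.1],
    dist=[0.5, 0.5, 2.0],
)
```

With equal raw scores, the neighbour that is close and has a high transfer probability receives most of the weight, while the distant, low-probability neighbour is almost ignored.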

2. Related Work

3. Methods

3.1. Overall Framework

- (1)

- Inputs module: This module processes raw input data through smoothing and normalization, ensuring the data is properly preconditioned for subsequent modelling stages.

- (2)

- Spatial feature extraction module (TA-GAT): This module integrates a GAT, a transfer probability matrix, and a distance decay matrix. It is responsible for modelling and capturing the spatial dependencies within the road network, accounting for interactions between road nodes.

- (3)

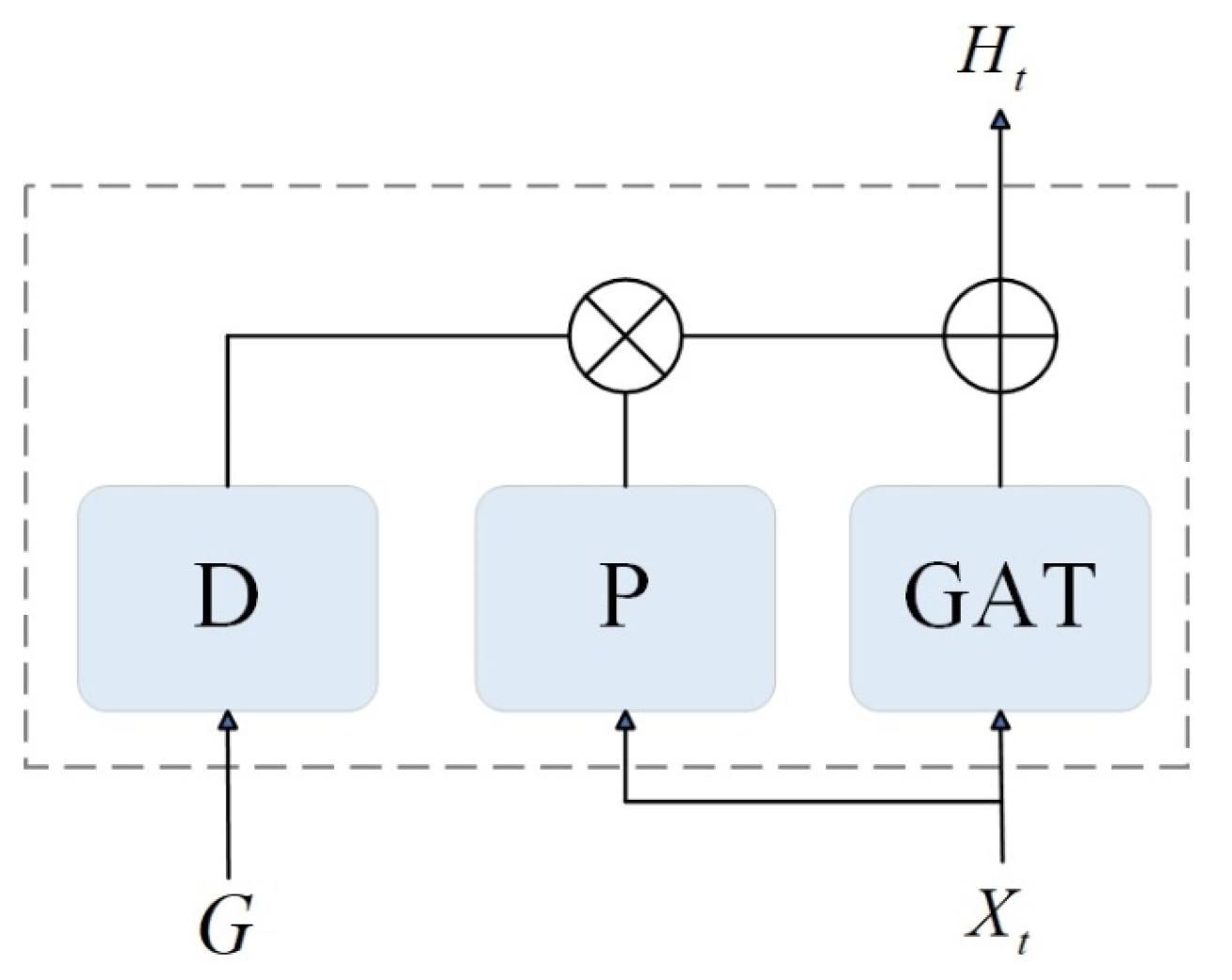

- Gating Network module: Comprising multiple gating networks, this module performs preliminary temporal aggregation of the spatial features extracted by the TA-GAT module. It enhances the model’s ability to capture temporal variations in spatial dependencies.

- (4)

- Temporal feature extraction module (LSTM-Trans): This module combines a Long-Short Term Memory (LSTM) network with a Transformer Encoder layer, effectively capturing temporal features in historical traffic sequences by modelling local and global temporal dynamics.

- (5)

- Training and output module: This module uses a fully connected (FC) layer to map the extracted spatio-temporal feature vectors to the prediction outcomes. The predicted results are then denormalized to revert them to their original scale. Finally, the model is trained using a loss function to optimize prediction accuracy.
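
The normalization in the inputs module and the denormalization in the output module form a round trip, which the following minimal sketch makes concrete. Z-score scaling is assumed here purely for illustration, since this overview does not state which scheme is used; the helper names and the sample flow values are hypothetical.

```python
import statistics


def fit_zscore(values):
    """Return (mean, std) of the training data; std falls back to 1.0 if the data are constant."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    if std == 0:
        std = 1.0
    return mean, std


def normalize(values, mean, std):
    """Scale raw values to zero mean and unit variance (assumed scheme)."""
    return [(v - mean) / std for v in values]


def denormalize(values, mean, std):
    """Invert normalize(), mapping model outputs back to the original scale."""
    return [v * std + mean for v in values]


# Hypothetical flow readings (vehicles per interval) from one sensor.
flow = [120.0, 135.0, 150.0, 90.0, 105.0]
mean, std = fit_zscore(flow)
scaled = normalize(flow, mean, std)
restored = denormalize(scaled, mean, std)
```

The statistics are fitted once and reused for the inverse step, so that errors such as MAE and RMSE can be reported in the original traffic-flow units.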

3.2. Inputs Module

3.2.1. Smooth

3.2.2. Normalization

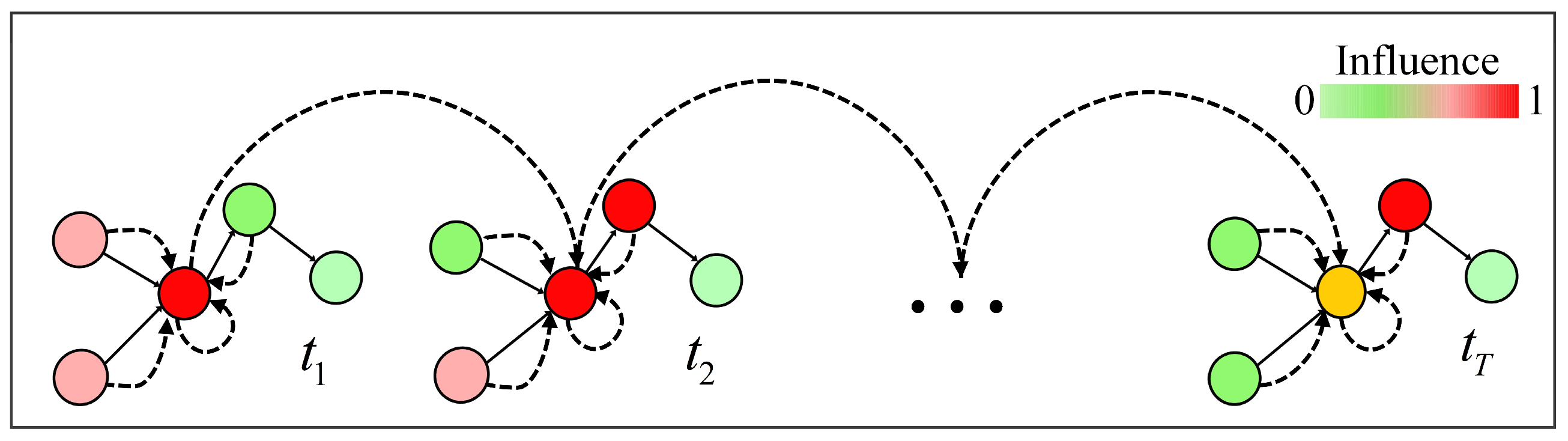

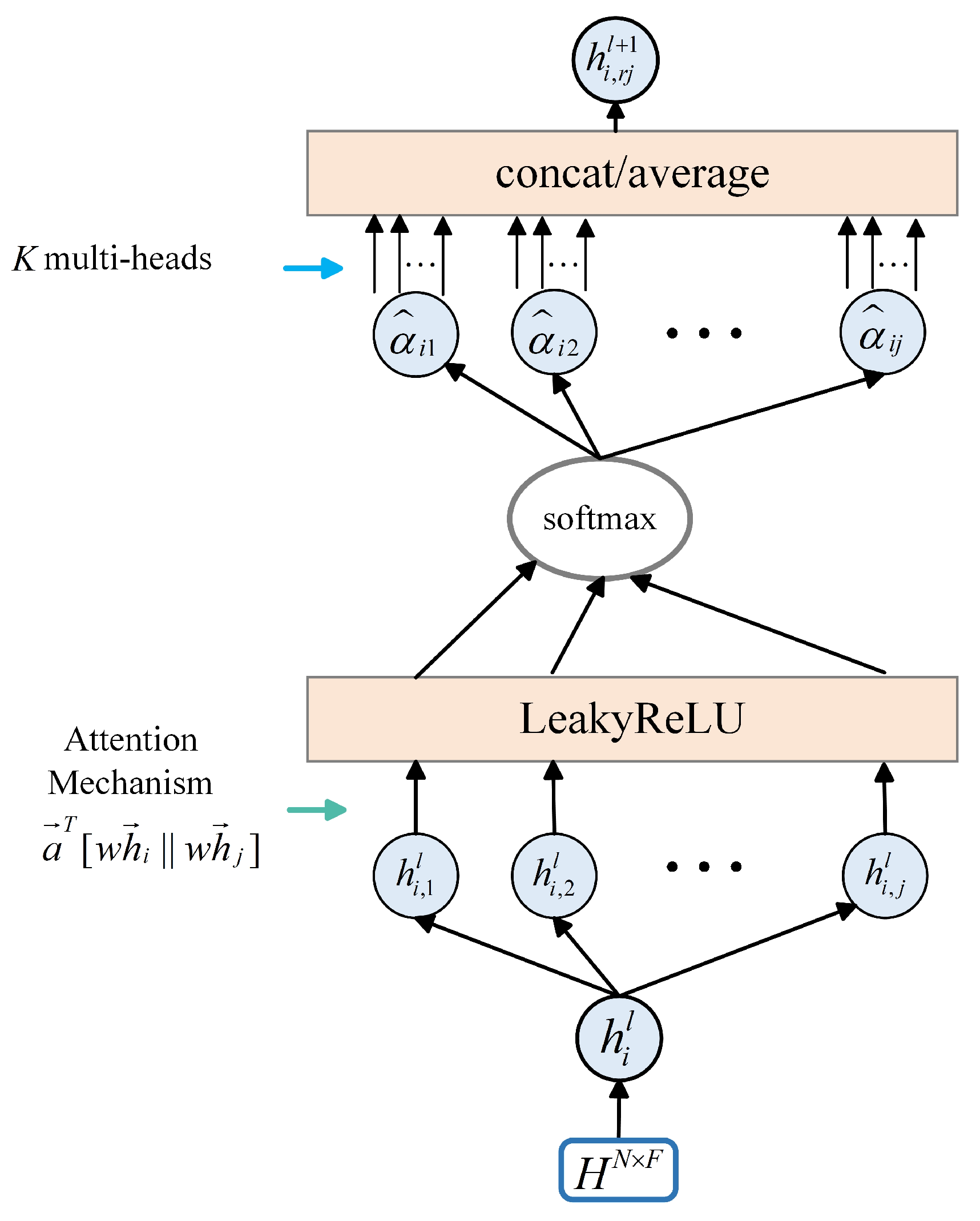

3.3. Spatial Feature Extraction Module

3.3.1. GAT

3.3.2. Transfer Probability Matrix

3.3.3. Distance Decay Matrix

3.3.4. Fusion

3.4. Gating Network Module

3.5. Temporal Feature Extraction Module

3.6. Training and Output Module

3.7. Method Summary

4. Results

4.1. Datasets

4.2. Experimental Settings

4.3. Baselines

- (1) ARIMA [32]: The autoregressive integrated moving average (ARIMA) model is a traditional time-series analysis method widely used for short-term forecasting; it is particularly effective at handling linear trends and seasonal variations in data.

- (2) VAR [33]: The vector autoregressive (VAR) model is a multivariate time-series model used to analyze several interdependent time series and the relationships among their components.

- (3) LSTM [34]: LSTM is a specialized type of recurrent neural network (RNN) that handles long-term dependencies by introducing memory cells and forget gates.

- (4) DCRNN [35]: The diffusion convolutional recurrent neural network (DCRNN) uses bidirectional random walks on a graph together with recurrent neural networks to learn the spatio-temporal features of traffic flow.

- (5) ASTGCN(r) [36]: The attention-based spatial-temporal graph convolutional network (ASTGCN) integrates spatio-temporal attention with convolution. Its three components capture temporal dependencies at different time scales (recent, daily, and weekly), and their outputs are combined to generate the final predictions. For fairness, only the temporal block of the recent cycle is used to model periodicity.

- (6) STDSGNN [37]: The spatial–temporal dynamic semantic graph neural network (STDSGNN) constructs two types of semantic adjacency matrices using dynamic time warping and Pearson correlation, incorporates a dynamic aggregation method for feature weighting, and employs an injection-stacked structure to reduce over-smoothing and improve forecasting accuracy.

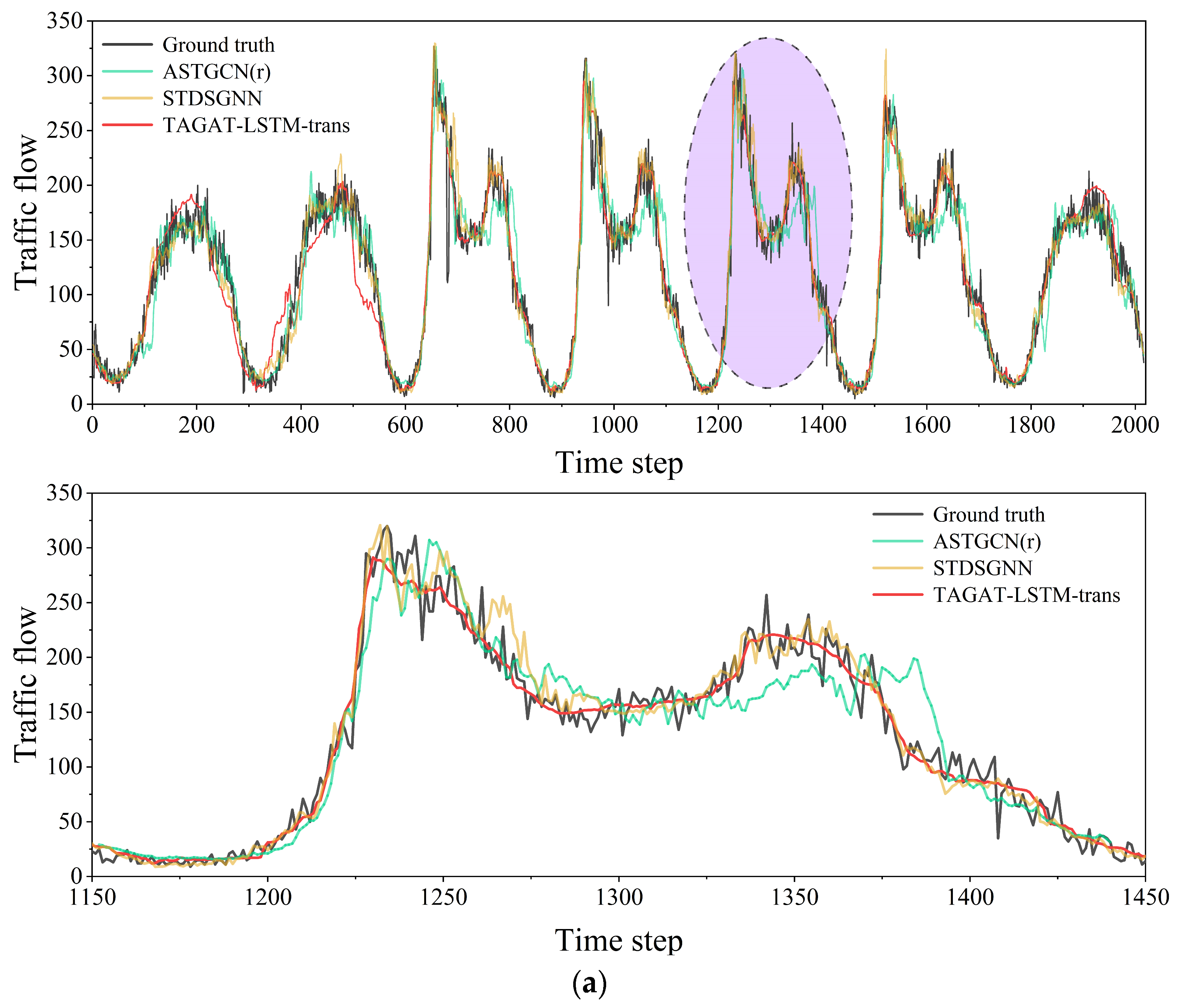

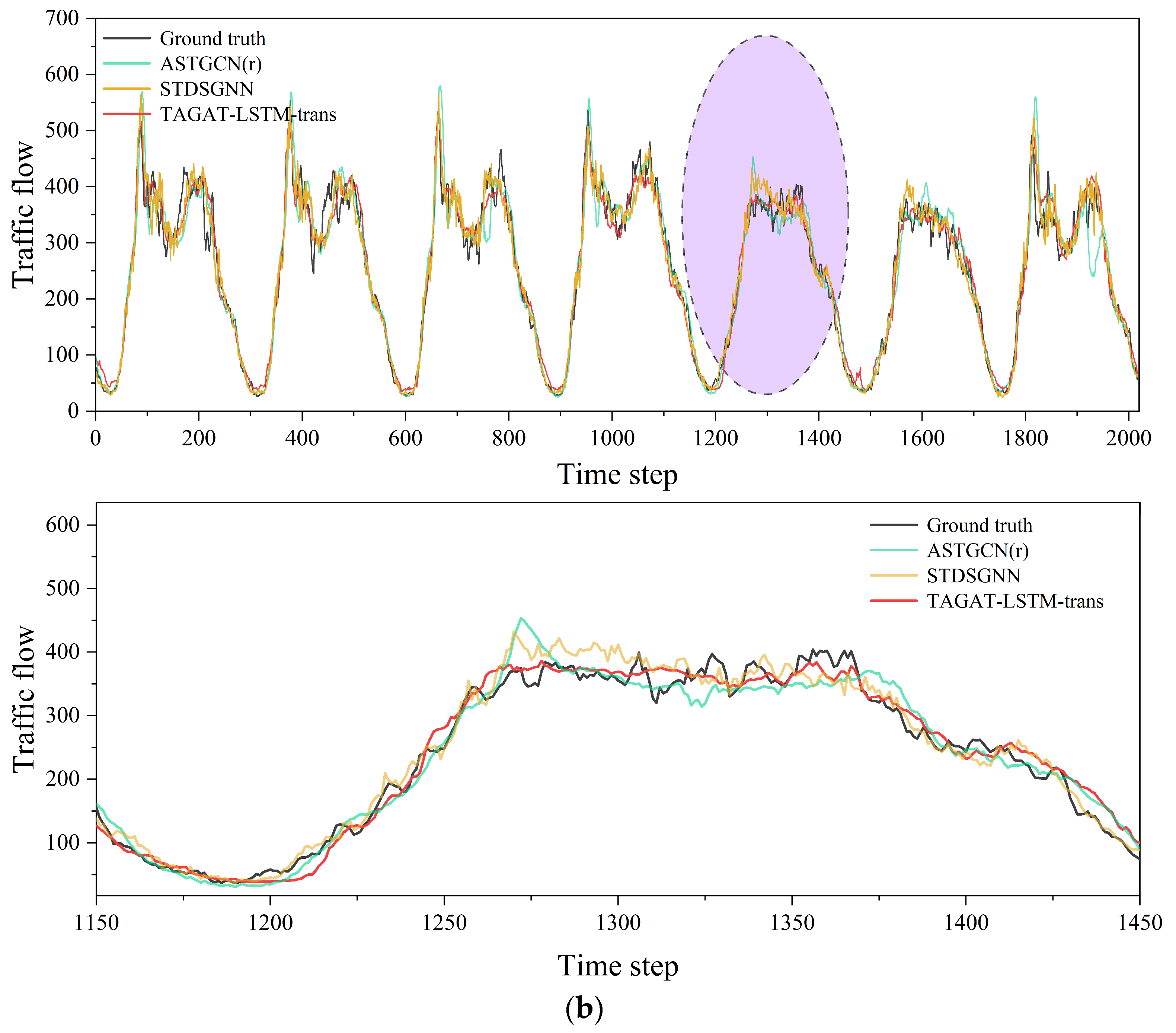

4.4. Experimental Results

4.5. Model Complexity and Computational Efficiency

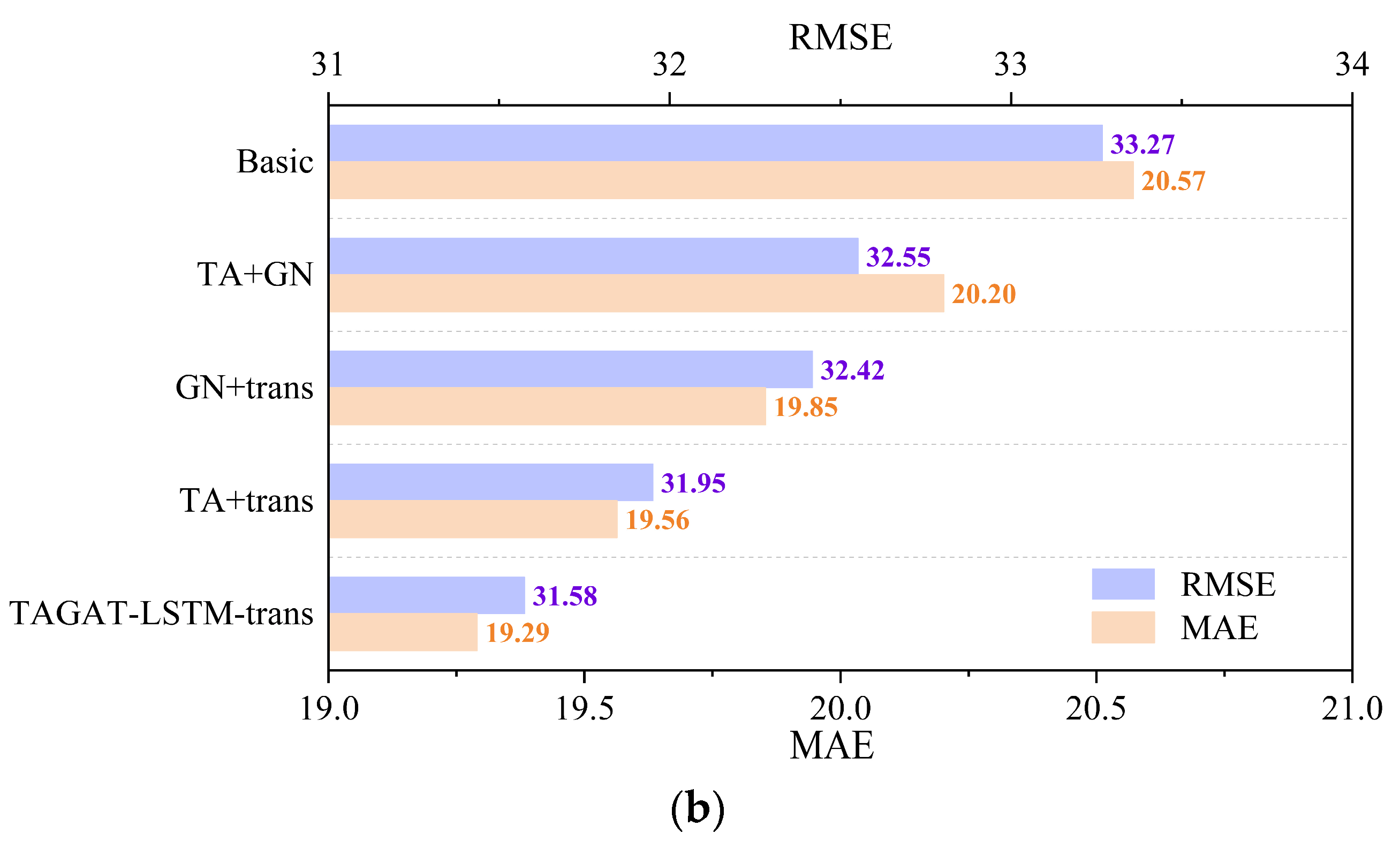

4.6. Ablation Studies

- (1) Basic: This variant removes the transfer-aware (TA), gating network (GN), and Transformer Encoder (trans) modules, relying solely on GAT and LSTM to learn the spatio-temporal dependencies. It represents the most basic model configuration.

- (2) TA + GN: This variant omits the trans module to evaluate the necessity of capturing global temporal dependencies in traffic flow.

- (3) GN + trans: This variant excludes the TA module, assessing the importance of considering the transmission capacity of traffic features between nodes when aggregating spatial features from neighbouring nodes.

- (4) TA + trans: This variant eliminates the GN module to evaluate the impact of integrating spatial features of traffic flow from adjacent time steps.
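
The four variants differ only in which of the three optional modules are switched on, so they can be written down as a small flag table. The sketch below is one hypothetical way to encode them; the class name, field names, and the `"Full"` entry for the complete TAGAT-LSTM-trans model are assumptions, not taken from the authors' code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AblationConfig:
    use_ta: bool     # transfer-aware matrices in the GAT
    use_gn: bool     # gating network between spatial and temporal stages
    use_trans: bool  # Transformer Encoder after the LSTM


VARIANTS = {
    "Basic": AblationConfig(use_ta=False, use_gn=False, use_trans=False),
    "TA + GN": AblationConfig(use_ta=True, use_gn=True, use_trans=False),
    "GN + trans": AblationConfig(use_ta=False, use_gn=True, use_trans=True),
    "TA + trans": AblationConfig(use_ta=True, use_gn=False, use_trans=True),
    "Full": AblationConfig(use_ta=True, use_gn=True, use_trans=True),
}


def removed_modules(cfg):
    """List the modules a variant drops relative to the full model."""
    flags = [("TA", cfg.use_ta), ("GN", cfg.use_gn), ("trans", cfg.use_trans)]
    return [name for name, enabled in flags if not enabled]


dropped = {name: removed_modules(cfg) for name, cfg in VARIANTS.items()}
```

Each of the three single-module ablations removes exactly one component, which is what allows a performance drop to be attributed to that component.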

5. Discussion

5.1. Performance Superiority and Mechanism Interpretation

5.2. Component Contributions and Functional Synergy

5.3. Limitations

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- [1] Cao, S.; Wu, L.; Wu, J.; Wu, D.; Li, Q. A spatio-temporal sequence-to-sequence network for traffic flow prediction. Inf. Sci. 2022, 610, 185–203.

- [2] Zhang, J.; Zheng, Y.; Qi, D.; Li, R.; Yi, X.; Li, T. Predicting citywide crowd flows using deep spatio-temporal residual networks. Artif. Intell. 2018, 259, 147–166.

- [3] Lv, M.; Hong, Z.; Chen, L.; Chen, T.; Zhu, T.; Ji, S. Temporal multi-graph convolutional network for traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2020, 22, 3337–3348.

- [4] Boukerche, A.; Wang, J. Machine learning-based traffic prediction models for intelligent transportation systems. Comput. Netw. 2020, 181, 107530.

- [5] Zhuang, W.; Cao, Y. Short-term traffic flow prediction based on cnn-bilstm with multicomponent information. Appl. Sci. 2022, 12, 8714.

- [6] Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- [7] Ren, C.; Chai, C.; Yin, C.; Ji, H.; Cheng, X.; Gao, G.; Zhang, H. Short-Term Traffic Flow Prediction: A Method of Combined Deep Learnings. J. Adv. Transp. 2021, 2021, 9928073.

- [8] Zhang, W.; Yu, Y.; Qi, Y.; Shu, F.; Wang, Y. Short-Term Traffic Flow Prediction Based on Spatio-Temporal Analysis and CNN Deep Learning. Transp. A Transp. Sci. 2019, 15, 1688–1711.

- [9] Jian-xi, Y.; Chao-shun, Y.U.; Ren, L.I. Traffic network speed prediction via multi-periodic-component spatial-temporal neural network. J. Transp. Syst. Eng. Inf. Technol. 2021, 21, 112.

- [10] Narmadha, S.; Vijayakumar, V. Spatio-Temporal vehicle traffic flow prediction using multivariate CNN and LSTM model. Mater. Today Proc. 2023, 81, 826–833.

- [11] Peng, H.; Du, B.; Liu, M.; Liu, M.; Ji, S.; Wang, S.; Zhang, X.; He, L. Dynamic graph convolutional network for long-term traffic flow prediction with reinforcement learning. Inf. Sci. 2021, 578, 401–416.

- [12] Luan, S.; Ke, R.; Huang, Z.; Ma, X. Traffic congestion propagation inference using dynamic Bayesian graph convolution network. Transp. Res. Part C Emerg. Technol. 2022, 135, 103526.

- [13] Qu, Z.; Liu, X.; Zheng, M. Temporal-spatial quantum graph convolutional neural network based on Schrödinger approach for traffic congestion prediction. IEEE Trans. Intell. Transp. Syst. 2022, 24, 8677–8686.

- [14] Wang, Y.; Jing, C.; Xu, S.; Guo, T. Attention based spatiotemporal graph attention networks for traffic flow forecasting. Inf. Sci. 2022, 607, 869–883.

- [15] Fu, R.; Zhang, Z.; Li, L. Using LSTM and GRU neural network methods for traffic flow prediction. In Proceedings of the 2016 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC), Wuhan, China, 11–13 November 2016; pp. 324–328.

- [16] Zheng, C.; Fan, X.; Wen, C.; Chen, L.; Wang, C.; Li, J. DeepSTD: Mining spatio-temporal disturbances of multiple context factors for citywide traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2019, 21, 3744–3755.

- [17] Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- [18] Gan, R.; An, B.; Li, L.; Qu, X.; Ran, B. A freeway traffic flow prediction model based on a generalized dynamic spatio-temporal graph convolutional network. IEEE Trans. Intell. Transp. Syst. 2024, 25, 13682–13693.

- [19] Ali, A.; Ullah, I.; Ahmad, S.; Wu, Z.; Li, J.; Bai, X. An attention-driven spatio-temporal deep hybrid neural networks for traffic flow prediction in transportation systems. IEEE Trans. Intell. Transp. Syst. 2025, 26, 14154–14168.

- [20] Chen, Y.; Huang, J.; Xu, H.; Guo, J.; Su, L. Road traffic flow prediction based on dynamic spatiotemporal graph attention network. Sci. Rep. 2023, 13, 14729.

- [21] Lu, K.F.; Liu, Y.; Peng, Z.-R. Assessing the impacts of transit systems and urban street features on bike-sharing ridership: A graph-based spatiotemporal analysis and prediction model. J. Transp. Geogr. 2025, 128, 104356.

- [22] Wang, Q.; Ma, Z.; Yang, X.; Chien, S.I.-J.; Zhang, S.; Yin, Y. Exploring spatiotemporal dynamic of metro ridership and the influence of built environment factors at the station level: A case study of Nanjing, China. J. Transp. Geogr. 2025, 129, 104440.

- [23] Cui, M.; Yu, L.; Nie, S.; Dai, Z.; Ge, Y.-E.; Levinson, D. How do access and spatial dependency shape metro passenger flows. J. Transp. Geogr. 2025, 123, 104069.

- [24] Chen, X.; Wu, S.; Shi, C.; Huang, Y.; Yang, Y.; Ke, R.; Zhao, J. Sensing data supported traffic flow prediction via denoising schemes and ANN: A comparison. IEEE Sens. J. 2020, 20, 14317–14328.

- [25] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.; Kaiser, L.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- [26] Wu, F.; Cheng, Z.; Chen, H.; Qiu, Z.; Sun, L. Traffic state estimation from vehicle trajectories with anisotropic Gaussian processes. Transp. Res. Part C Emerg. Technol. 2024, 163, 104646.

- [27] Deng, H. Traffic-Forecasting Model with Spatio-Temporal Kernel. Electronics 2025, 14, 1410.

- [28] Gers, F.A.; Schmidhuber, J.; Cummins, F. Learning to forget: Continual prediction with LSTM. Neural Comput. 2000, 12, 2451–2471.

- [29] An, Q.; Dong, M. Design and Case Study of Long Short Term Modeling for Next POI Recommendation. Int. J. Eng. Res. Manag. 2024, 11, 18–21.

- [30] Li, S.; Cui, Y.; Xu, J.; Li, L.; Meng, L.; Yang, W.; Zhang, F.; Zhou, X. Unifying Lane-Level Traffic Prediction from a Graph Structural Perspective: Benchmark and Baseline. arXiv 2024, arXiv:2403.14941.

- [31] Kingma, D.P. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- [32] Williams, B.M.; Hoel, L.A. Modeling and forecasting vehicular traffic flow as a seasonal ARIMA process: Theoretical basis and empirical results. J. Transp. Eng. 2003, 129, 664–672.

- [33] Zivot, E.; Wang, J. Vector Autoregressive Models for Multivariate Time Series. In Modeling Financial Time Series with S-PLUS®; Springer: Berlin/Heidelberg, Germany, 2006; pp. 385–429.

- [34] Hochreiter, S. Long Short-term Memory. Neural Comput. 1997, 9, 1735–1780.

- [35] Li, Y.; Yu, R.; Shahabi, C.; Liu, Y. Diffusion convolutional recurrent neural network: Data-driven traffic forecasting. arXiv 2017, arXiv:1707.01926.

- [36] Guo, S.; Lin, Y.; Feng, N.; Song, C.; Wan, H. Attention based spatial-temporal graph convolutional networks for traffic flow forecasting. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 922–929.

- [37] Zhang, R.; Xie, F.; Sun, R.; Huang, L.; Liu, X.; Shi, J. Spatial-temporal dynamic semantic graph neural network. Neural Comput. Appl. 2022, 34, 16655–16668.

- [38] Liu, L.; Wu, M.; Lv, Q.; Liu, H.; Wang, Y. CCNN-former: Combining convolutional neural network and Transformer for image-based traffic time series prediction. Expert Syst. Appl. 2025, 268, 126146.

- [39] Yang, B.; Li, R.; Wang, Y.; Xiang, S.; Zhu, S.; Dai, C.; Dai, S.; Guo, B. Cross-city transfer learning for traffic forecasting via incremental distribution rectification. Knowl. Based Syst. 2025, 315, 113336.

- [40] Yu, W.; Wang, W.; Hua, X.; Zhao, D.; Ngoduy, D. Dynamic patterns of intercity mobility and influencing factors: Insights from similarities in spatial time-series. J. Transp. Geogr. 2025, 124, 104154.

- [41] Pranolo, A.; Saifullah, S.; Utama, A.B.; Wibawa, A.P.; Bastian, M. High-performance traffic volume prediction: An evaluation of RNN, GRU, and CNN for accuracy and computational trade-offs. BIO Web Conf. 2024, 148, 02034.

- [42] Jiang, J.; Han, C.; Zhao, W.X.; Wang, J. Pdformer: Propagation delay-aware dynamic long-range transformer for traffic flow prediction. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 4365–4373.

- [43] Sun, F.; Hao, W.; Zou, A.; Cheng, K. TVGCN: Time-varying graph convolutional networks for multivariate and multifeature spatiotemporal series prediction. Sci. Prog. 2024, 107, 00368504241283315.

| Category | Methods | Technical Approach and Strengths | Limitations |
|---|---|---|---|
| Image-based | CNN, CNN-LSTM | Maps traffic flow data onto regular grids, enabling powerful spatial feature extraction | Unsuitable for non-Euclidean graph data |
| RNN-based | LSTM, GRU | Models temporal dependencies via gating mechanisms, effectively capturing local dynamics | Information decay, neglects spatial features |
| GCN-based | ASTGCN, Graph diffusion | Dynamically assigns weights to neighbors via attention mechanisms, capturing adaptive spatial dependencies | Assumes static and uniform node relationships |
| GAT-based | ASTGAT, DSTGAT | Adapts to dynamic spatial correlations | Ignores transmission capacity and physical distance |
| Ours | TAGAT-LSTM-trans | Models dynamic spatial influences and both local/global temporal dependencies | Increased model complexity |

| Dataset | Sensors | Edges | Time span |
|---|---|---|---|
| PeMS03 | 358 | 547 | 1 September 2018–30 November 2018 |
| PeMS04 | 307 | 340 | 1 January 2018–28 February 2018 |

| Module | Hyperparameter | Value |
|---|---|---|
| TAGAT | Hidden units | 32 |
| TAGAT | Attention heads | 2 |
| LSTM | Hidden units | 256 |
| LSTM | Layers | 2 |
| Transformer | Hidden units | 256 |
| Transformer | Attention heads | 4 |
| Other | Batch size | 50 |
| Other | Learning rate | 5 × 10⁻⁴ |
| Other | Dropout | 0.1 |
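
For reproduction purposes, the hyperparameters listed above could be gathered into a single configuration object, as in the sketch below. The values come from the table; the class name, the field names, and the head-width check (which assumes standard multi-head attention, where the hidden width is split evenly across heads) are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    # Values from the hyperparameter table; field names are hypothetical.
    tagat_hidden: int = 32
    tagat_heads: int = 2
    lstm_hidden: int = 256
    lstm_layers: int = 2
    trans_hidden: int = 256
    trans_heads: int = 4
    batch_size: int = 50
    learning_rate: float = 5e-4
    dropout: float = 0.1

    def transformer_head_dim(self) -> int:
        """Per-head width, assuming the hidden width is split evenly across heads."""
        if self.trans_hidden % self.trans_heads != 0:
            raise ValueError("hidden units must be divisible by attention heads")
        return self.trans_hidden // self.trans_heads


cfg = ModelConfig()
head_dim = cfg.transformer_head_dim()
```

Under that assumption, 256 hidden units over 4 heads gives 64 dimensions per head in the Transformer Encoder.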

| Model | PeMS03 MAE | PeMS03 RMSE | PeMS04 MAE | PeMS04 RMSE |
|---|---|---|---|---|
| ARIMA | 23.07 | 40.62 | 37.84 | 59.03 |
| VAR | 23.65 | 38.26 | 33.76 | 51.73 |
| LSTM | 21.33 ± 0.24 | 35.11 ± 0.50 | 27.14 ± 0.20 | 41.59 ± 0.21 |
| DCRNN | 18.18 ± 0.15 | 30.31 ± 0.25 | 24.70 ± 0.22 | 38.12 ± 0.26 |
| ASTGCN(r) | 17.69 ± 1.43 | 29.66 ± 1.68 | 22.93 ± 1.29 | 35.22 ± 1.90 |
| STDSGNN | 16.20 ± 0.18 | 25.89 ± 0.62 | 20.82 ± 0.25 | 32.56 ± 0.55 |
| TAGAT-LSTM-trans | 14.99 ± 0.09 | 24.98 ± 0.12 | 19.49 ± 0.18 | 31.68 ± 0.22 |
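
The two metrics reported above, and the relative error reduction over the strongest baseline, can be reproduced with a few lines of arithmetic. The sketch below uses the standard definitions of MAE and RMSE; the function names and the toy `y_true`/`y_pred` values are hypothetical, and the reductions are computed only from the mean values in the table (the ± spreads are ignored).

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error; penalizes large misses more than MAE does."""
    return math.sqrt(
        sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)
    )


def relative_reduction(baseline, ours):
    """Percentage by which `ours` lowers the error of `baseline`."""
    return 100.0 * (baseline - ours) / baseline


# Toy example with made-up flow values.
y_true = [100.0, 120.0, 90.0, 110.0]
y_pred = [98.0, 125.0, 93.0, 110.0]
toy_mae = mae(y_true, y_pred)
toy_rmse = rmse(y_true, y_pred)

# Mean MAE of STDSGNN vs. TAGAT-LSTM-trans, copied from the table.
mae_gain_pems03 = relative_reduction(16.20, 14.99)
mae_gain_pems04 = relative_reduction(20.82, 19.49)
```

On these table means, the MAE reduction relative to STDSGNN is roughly 7.5% on PeMS03 and 6.4% on PeMS04.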

| Model | Parameters | Size (MB) | Time/Iteration (ms) | Epochs to Convergence |
|---|---|---|---|---|
| ASTGCN(r) | 450,031 | 1.72 | 172.24 ± 9.5 | 200 |
| STDSGNN | 1,044,605 | 3.98 | 268.43 ± 1.2 | 200 |
| TAGAT-LSTM-trans (Ours) | 1,810,741 | 6.91 | 337.86 ± 19.44 | ~79 |
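
The size column is consistent with the parameter counts if each parameter is stored as a 32-bit float and "MB" is read as 2^20 bytes. Both are assumptions, checked here against two rows of the table; the function name is hypothetical.

```python
BYTES_PER_FLOAT32 = 4
BYTES_PER_MB = 1024 ** 2  # assumes the table's "MB" means 2**20 bytes


def model_size_mb(n_params, bytes_per_param=BYTES_PER_FLOAT32):
    """Storage needed for the weights alone, ignoring optimizer state and buffers."""
    return n_params * bytes_per_param / BYTES_PER_MB


# Parameter counts copied from the table.
param_counts = {
    "STDSGNN": 1_044_605,
    "TAGAT-LSTM-trans": 1_810_741,
}
sizes = {name: round(model_size_mb(n), 2) for name, n in param_counts.items()}
```

Under these assumptions the computed sizes match the reported 3.98 MB and 6.91 MB.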

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, Y.; Wang, X.; Jia, J. A Traffic Flow Forecasting Method Based on Transfer-Aware Spatio-Temporal Graph Attention Network. ISPRS Int. J. Geo-Inf. 2025, 14, 459. https://doi.org/10.3390/ijgi14120459
