Geo-MRC: Dynamic Boundary Inference in Machine Reading Comprehension for Nested Geographic Named Entity Recognition

Abstract

1. Introduction

- (1)

- We present Geo-MRC, a novel MRC-based framework that reformulates Geo-NER as a question-answering task, enabling robust recognition of nested and overlapping geographic entities.

- (2)

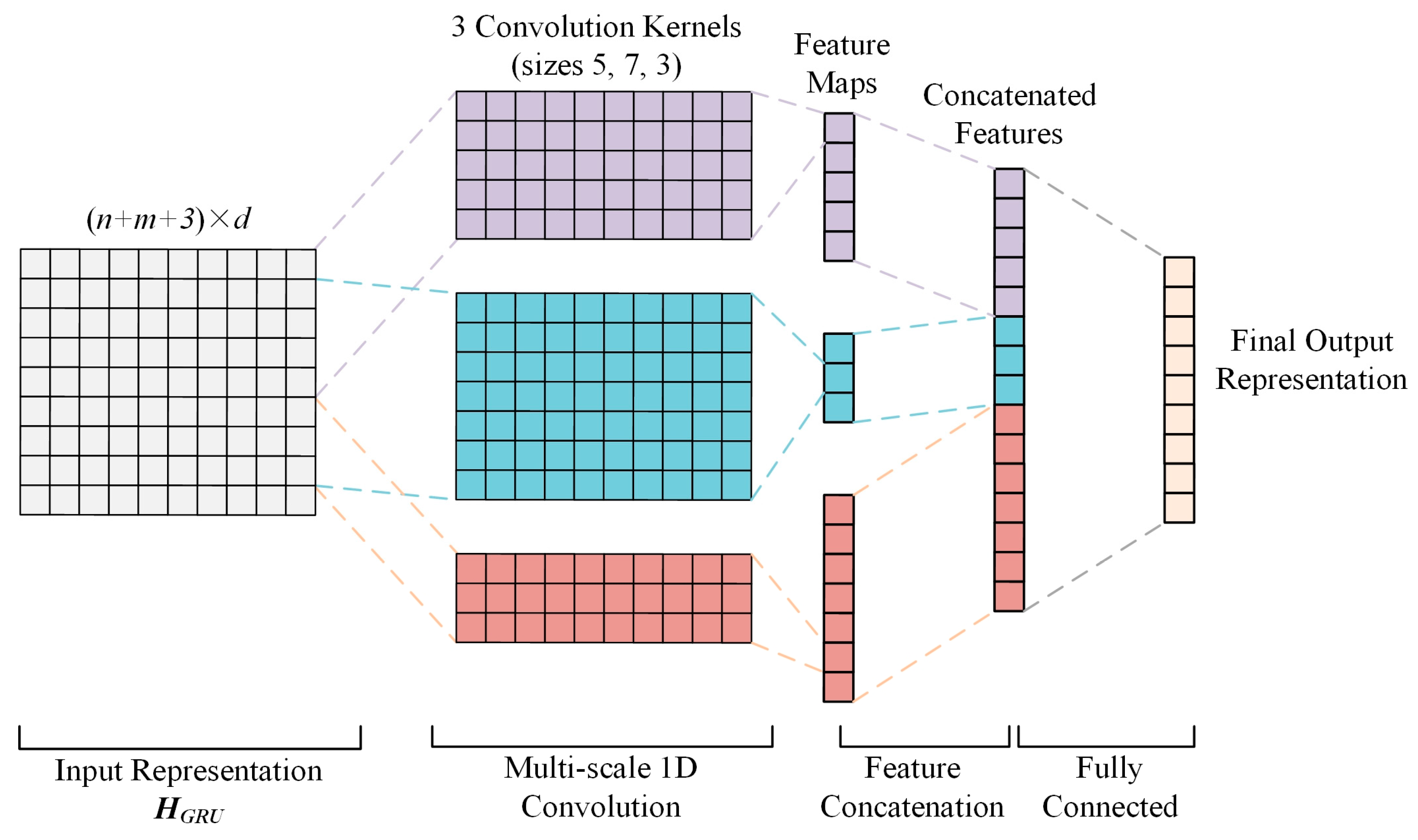

- We design a multi-layer feature extraction strategy that fuses BERT representations, GRU-based semantic fusion, and multi-scale 1D convolutions to capture both multi-level semantics and local contextual patterns.

- (3)

- We propose a dynamic boundary inference mechanism that jointly predicts start positions, end positions, and span lengths, allowing flexible and accurate entity span reconstruction.

- (4)

- We create manually augmented nested versions of four public Chinese and English datasets and conduct comprehensive experiments, achieving consistent improvements over strong MRC-based baselines on both mixed and nested benchmarks.

2. Related Work

2.1. Geo-NER Methods

- Rule-based and statistical methods: Rule-based approaches rely on manually curated dictionaries and pattern-matching techniques to identify geographic entities [19,20]. For example, location names may be recognized through common suffixes, while organization names may be detected using domain-specific keywords [21]. These methods perform well in domain-specific scenarios but suffer from poor generalization due to their reliance on handcrafted rules. Moreover, because they depend heavily on surface forms, such methods are unable to capture contextual variations or nested relationships that are common in geographic text. Statistical methods, such as those based on Hidden Markov Models (HMM) and Conditional Random Fields (CRF) [22,23], enhance recognition accuracy by incorporating contextual features and the probabilistic distribution of labeled sequences. However, their feature engineering process is labor-intensive and insufficient for modeling complex spatial hierarchies or overlapping geographic entities, which are often encountered in real-world Geo-NER tasks.

- Traditional deep learning-based methods: These methods have become the mainstream approach in Geo-NER in recent years. A notable example is the work by Lample et al. [24], who utilized Bidirectional Long Short-Term Memory networks (Bi-LSTM) to capture contextual dependencies, in conjunction with a Conditional Random Field (CRF) layer to ensure global label consistency. This architecture achieved significant success in general NER tasks. Within the Geo-NER domain, such methods have been further enhanced by integrating geographic gazetteers, attention mechanisms, external knowledge bases, and multi-task learning strategies to improve the identification of geographic entities [25,26,27,28]. Compared with traditional statistical models, deep learning-based approaches reduce the dependence on handcrafted features and demonstrate stronger capabilities in capturing context-sensitive geographic information. However, they still treat Geo-NER as a sequence labeling problem, where each token receives a single label. This design makes it difficult to detect nested and boundary-sharing entities such as “北京清华大学 (Tsinghua University in Beijing)” or “上海浦东新区 (Shanghai’s Pudong New Area),” where multiple spatial units overlap. In addition, as the volume of textual data continues to grow, traditional neural architectures begin to show limitations in handling large-scale corpora due to constrained modeling capacity and computational inefficiencies.

- Pre-trained language model-based methods: These approaches leverage large-scale corpus pre-training to generate rich semantic representations, thereby significantly improving the accuracy of geographic entity recognition. For example, models such as BERT, ALBERT, ERNIE, BART, and XLNet have been integrated into traditional deep learning architectures to enhance contextual understanding in Geo-NER tasks [9,29,30,31,32]. In addition, some studies reformulate Geo-NER as a sequence-to-sequence task using encoder–decoder frameworks, enabling better modeling of complex dependencies and facilitating the recognition of flexible, nested, or ambiguous geographic expressions [33,34]. To further adapt to the unique characteristics of geographic texts, domain-specific pre-trained models such as GeoBERT [35] and CnGeoPLM [36] have been developed, demonstrating improved performance in Geo-NER. Compared to traditional deep learning methods, pre-trained language models excel at capturing long-distance dependencies, reduce reliance on large-scale annotated data, and demonstrate greater robustness and accuracy when handling complex geographical entities. However, despite these advances, most of these models still follow the sequence labeling paradigm and cannot effectively handle the multi-level semantic hierarchies or spatially overlapping entities inherent in geographic data. Therefore, new frameworks are needed to model the nested, boundary-sharing, and hierarchically structured characteristics of geographic entities more explicitly.

2.2. MRC-Based NER Methods

3. Method

3.1. Input Representation Construction

3.2. Multi-Layer Contextual Feature Extraction

3.2.1. Multi-Layer BERT Representations

3.2.2. GRU-Based Semantic Fusion

3.2.3. Multi-Scale 1D Convolutions

3.3. Dynamic Boundary Inference

- Start and end position prediction. Token-level probabilities are obtained via sigmoid functions, indicating whether each token is the beginning or end of an entity.

- Length prediction. For each token, a distribution over span lengths is predicted, where l is the maximum entity length; this enables flexible boundary construction. The length predictor operates on token-level representations derived from the BERT tokenizer: we use Chinese-BERT-wwm-ext for the Chinese datasets and BERT-base-cased for the English datasets, both of which adopt WordPiece-based tokenization. Consequently, the predicted length is the number of WordPiece sub-tokens that form an entity span, not the number of full words or characters.

- Boundary inference. Based on these outputs, entity spans are determined dynamically. If token i is predicted as a start, its span is $[pos_i,\ pos_i + L_{start}[i]]$, where $pos_i$ is the position of the token and $L_{start}[i]$ is the length predicted for it as a start. Conversely, if token i is predicted as an end, the span is $[pos_i - L_{end}[i],\ pos_i]$. Duplicate spans are removed, and the entity type is determined by the predefined question.
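
The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function name `decode_spans`, the 0.5 decision threshold, the assumption that each length distribution has already been reduced to a single integer (for example by taking its argmax), and the choice to drop spans that run past the sentence are all assumptions introduced here. Following the interval as written in the text, the predicted length is used as an offset from the boundary token.

```python
from typing import List, Set, Tuple


def decode_spans(
    start_probs: List[float],
    end_probs: List[float],
    start_lengths: List[int],
    end_lengths: List[int],
    threshold: float = 0.5,  # assumed cut-off on the sigmoid outputs
) -> List[Tuple[int, int]]:
    """Rebuild inclusive (start, end) token spans for one question."""
    n = len(start_probs)
    spans: Set[Tuple[int, int]] = set()  # a set removes duplicate spans
    for i in range(n):
        # Token predicted as a start: [pos_i, pos_i + L_start[i]]
        if start_probs[i] >= threshold:
            j = i + start_lengths[i]
            if j < n:  # assumption: discard spans that leave the sentence
                spans.add((i, j))
        # Token predicted as an end: [pos_i - L_end[i], pos_i]
        if end_probs[i] >= threshold:
            j = i - end_lengths[i]
            if j >= 0:
                spans.add((j, i))
    return sorted(spans)


# Illustrative scores for a six-token input and an organization question.
# Two starts (0 and 2) share one end (5), as in a boundary-sharing case.
start_probs = [0.9, 0.1, 0.8, 0.1, 0.1, 0.1]
end_probs = [0.1, 0.1, 0.1, 0.1, 0.1, 0.9]
start_lengths = [5, 0, 3, 0, 0, 0]
end_lengths = [0, 0, 0, 0, 0, 3]
print(decode_spans(start_probs, end_probs, start_lengths, end_lengths))
```

In this toy input the span (2, 5) is proposed twice, once from its start token and once from its end token, and survives only once after deduplication, while (0, 5) is recovered from the start side alone even though the shared end token carries a single length.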

4. Experiments

4.1. Experimental Setup

4.1.1. Datasets

- (1) Hierarchical nesting, where entities form spatial or administrative hierarchies, such as “上海浦东新区 (Shanghai’s Pudong New Area)”, reflecting part–whole relationships that require multi-level semantic understanding. This type of nesting is closely related to the first problem identified in this study, as existing models that rely only on top-layer features often fail to capture such hierarchical semantics.

- (2) Boundary sharing, where two or more entities share the same start or end positions, such as “北京清华大学 (Tsinghua University in Beijing)” containing both “清华大学 (Tsinghua University)” and “北京 (Beijing)”. This form of overlap corresponds directly to the second problem addressed in this work. Conventional sequence labeling and MRC-based methods typically detect only one of the entities because they assume that each token can belong to a single span, making them ineffective for boundary-sharing cases.

- (3) Cross-level semantic overlap, where geographic and organizational meanings coexist within the same span, such as “兰州交通大学 (Lanzhou Jiaotong University)”, representing both a location and an institution. Although this phenomenon is not the focus of the present study, it reflects the inherent semantic ambiguity of geographic entities. The MRC framework can naturally handle such cases by designing different questions for different entity types, allowing the model to extract multiple category labels for the same text span.
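
The positional side of these categories can be checked mechanically. The sketch below is a simplified illustration, not the annotation procedure used in the paper: the `Entity` type, the function `span_relations`, and the tag strings are hypothetical, and the check looks only at offsets and labels, whereas hierarchical nesting as described above also depends on part–whole semantics that offsets cannot capture.

```python
from typing import NamedTuple, Set


class Entity(NamedTuple):
    start: int  # inclusive offset of the first token/character
    end: int    # inclusive offset of the last token/character
    label: str  # e.g. "LOC", "ORG", "PER"


def span_relations(a: Entity, b: Entity) -> Set[str]:
    """Return positional relation tags between two annotated entities."""
    tags: Set[str] = set()
    if (a.start, a.end) == (b.start, b.end):
        # Identical span: only interesting when the types differ.
        if a.label != b.label:
            tags.add("same span, different type")
        return tags
    a_in_b = b.start <= a.start and a.end <= b.end
    b_in_a = a.start <= b.start and b.end <= a.end
    if a_in_b or b_in_a:
        tags.add("nested")
        # One entity contains the other and they share a start or an end.
        if a.start == b.start or a.end == b.end:
            tags.add("boundary sharing")
    return tags


# Character offsets in "北京清华大学" (six characters, offsets 0..5).
beijing = Entity(0, 1, "LOC")   # 北京
tsinghua = Entity(2, 5, "ORG")  # 清华大学
full = Entity(0, 5, "ORG")      # 北京清华大学

print(span_relations(full, beijing))
print(span_relations(full, tsinghua))
print(span_relations(beijing, tsinghua))
```

Here the full mention shares its start with 北京 and its end with 清华大学, so both pairs are tagged as nested and boundary-sharing, while the two inner entities do not overlap at all.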

4.1.2. Evaluation Metrics
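
The result tables report a per-type F1 score and a Micro-F1 score, but the definitions are not reproduced in this text. As a hedged illustration only, the sketch below assumes the common exact-match convention, in which a predicted entity counts as correct when its start, end, and type all match a gold entity, and Micro-F1 pools counts over all entity types. The names `Span` and `prf` are hypothetical.

```python
from typing import Set, Tuple

Span = Tuple[int, int, str]  # (start, end, label), offsets inclusive


def prf(gold: Set[Span], pred: Set[Span]) -> Tuple[float, float, float]:
    """Exact-match precision, recall and F1 over two sets of typed spans."""
    tp = len(gold & pred)  # spans whose boundaries and type both match
    precision = tp / len(pred) if pred else 0.0
    recall = tp / len(gold) if gold else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


gold = {(0, 1, "LOC"), (2, 5, "ORG"), (0, 5, "ORG")}
pred = {(0, 1, "LOC"), (0, 5, "ORG")}

# Micro-F1: pool every type into one set before counting.
micro_p, micro_r, micro_f1 = prf(gold, pred)

# Per-type F1: restrict both sets to a single label first.
org_gold = {s for s in gold if s[2] == "ORG"}
org_pred = {s for s in pred if s[2] == "ORG"}
_, _, org_f1 = prf(org_gold, org_pred)

print(round(micro_f1, 4), round(org_f1, 4))
```

Because nested annotation adds gold spans such as the inner 清华大学, a model that returns only the outermost entity loses recall under this convention even when every span it predicts is correct.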

4.1.3. Parameter Settings

4.2. Comparative Experiments

4.2.1. Performance on Mixed Datasets

4.2.2. Performance on Nested Datasets

4.3. Ablation Studies

4.4. Results Analysis and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Nozza, D.; Manchanda, P.; Fersini, E.; Palmonari, M.; Messina, E. LearningToAdapt with word embeddings: Domain adaptation of named entity recognition systems. Inf. Process. Manag. 2021, 58, 102537.

2. Berragan, C.; Singleton, A.; Calafiore, A.; Morley, J. Transformer based named entity recognition for place name extraction from unstructured text. Int. J. Geogr. Inf. Sci. 2023, 37, 747–766.

3. Li, P.; Liu, J.; Luo, A.; Wang, Y.; Zhu, J.; Xu, S. Deep learning method for Chinese multisource point of interest matching. Comput. Environ. Urban Syst. 2022, 96, 101821.

4. Weckmüller, D.; Dunkel, A.; Burghardt, D. Embedding-Based Multilingual Semantic Search for Geo-Textual Data in Urban Studies. J. Geovis. Spat. Anal. 2025, 9(2), 31.

5. Kryvasheyeu, Y.; Chen, H.; Obradovich, N.; Moro, E.; Van Hentenryck, P.; Fowler, J.; Cebrian, M. Rapid assessment of disaster damage using social media activity. Sci. Adv. 2016, 2, e1500779.

6. Tadakaluru, A. Context Optimized and Spatial Aware Dummy Locations Generation Framework for Location Privacy. J. Geovis. Spat. Anal. 2022, 6, 27.

7. Li, Y.; Peng, L.; Sang, Y.; Gao, H. The characteristics and functionalities of citizen-led disaster response through social media: A case study of the #HenanFloodsRelief on Sina Weibo. Int. J. Disaster Risk Reduct. 2024, 106, 104419.

8. Marasinghe, R.; Yigitcanlar, T.; Mayere, S.; Washington, T.; Limb, M. Towards responsible urban geospatial AI: Insights from the white and grey literatures. J. Geovis. Spat. Anal. 2024, 8, 24.

9. Tao, L.; Xie, Z.; Xu, D.; Ma, K.; Qiu, Q.; Pan, S.; Huang, B. Geographic named entity recognition by employing natural language processing and an improved BERT model. ISPRS Int. J. Geo-Inf. 2022, 11, 598.

10. Li, P.; Zhu, Q.; Liu, J.; Liu, T.; Du, P.; Liu, S.; Zhang, Y. A Multi-Semantic Feature Fusion Method for Complex Address Matching of Chinese Addresses. ISPRS Int. J. Geo-Inf. 2025, 14, 227.

11. Gritta, M.; Pilehvar, M.T.; Collier, N. A pragmatic guide to geoparsing evaluation. Lang. Resour. Eval. 2020, 54, 683–712.

12. Nadeau, D.; Sekine, S. A survey of named entity recognition and classification. Lingvist. Investig. 2007, 30, 3–26.

13. Luo, L.; Yang, Z.; Yang, P.; Zhang, Y.; Wang, L.; Lin, H.; Wang, J. An attention-based BiLSTM-CRF approach to document-level chemical named entity recognition. Bioinformatics 2018, 34, 1381–1388.

14. Li, J.; Sun, A.; Han, J.; Li, C. A survey on deep learning for named entity recognition. IEEE Trans. Knowl. Data Eng. 2022, 34, 50–70.

15. Wang, Y.; Sun, Y.; Ma, Z.; Gao, L.; Xu, Y.; Sun, T. Application of pre-training models in named entity recognition. In Proceedings of the 2020 12th International Conference on Intelligent Human-Machine Systems and Cybernetics, Hangzhou, China, 22–23 August 2020; IEEE: Piscataway, NJ, USA, 2020; Volume 1, pp. 23–26.

16. Li, X.; Feng, J.; Meng, Y.; Han, Q.; Wu, F.; Li, J. A unified MRC framework for named entity recognition. arXiv 2020, arXiv:1910.11476.

17. Yu, J.; Ji, B.; Li, S.; Ma, J.; Liu, H.; Xu, H. S-NER: A Concise and Efficient Span-Based Model for Named Entity Recognition. Sensors 2022, 22, 2852.

18. Bao, X.; Tian, M.; Zha, Z.; Qin, B. MPMRC-MNER: Unified MRC Framework for MNER. In Proceedings of the 32nd ACM International Conference on Information and Knowledge Management, Birmingham, UK, 21–25 October 2023; pp. 47–56.

19. Leidner, J.L. Toponym resolution in text: Annotation, evaluation and applications of spatial grounding. In ACM SIGIR Forum; ACM: New York, NY, USA, 2007; Volume 41, pp. 124–126.

20. Shaalan, K.; Raza, H. NERA: Named entity recognition for Arabic. J. Am. Soc. Inf. Sci. Technol. 2009, 60, 1652–1663.

21. Shen, J.; Li, F.; Xu, F.; Uszkoreit, H. Recognition of Chinese organization names and abbreviations. J. Chin. Inf. Process. 2007, 21, 17–21.

22. Lafferty, J.D.; McCallum, A.; Pereira, F. Conditional random fields: Probabilistic models for segmenting and labeling sequence data. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2001; Volume 22, pp. 1–28.

23. Morwal, S.; Jahan, N.; Chopra, D. Named entity recognition using Hidden Markov Model (HMM). Int. J. Nat. Lang. Comput. (IJNLC) 2012, 1, 15–23.

24. Lample, G.; Ballesteros, M.; Subramanian, S.; Kawakami, K.; Dyer, C. Neural architectures for named entity recognition. arXiv 2016, arXiv:1603.01360.

25. Qiu, Q.; Xie, Z.; Wu, L.; Tao, L.; Li, W. BiLSTM-CRF for geological named entity recognition from the geoscience literature. Earth Sci. Inform. 2019, 12, 565–579.

26. Song, C.H.; Lawrie, D.; Finin, T.; Mayfield, T. Improving neural named entity recognition with gazetteers. arXiv 2020, arXiv:2003.03072.

27. Hu, X.; Al-Olimat, H.S.; Kersten, J.; Wiegmann, M.; Klan, F.; Sun, Y.; Fan, H. GazPNE: Annotation-free deep learning for place name extraction from microblogs leveraging gazetteer and synthetic data by rules. Int. J. Geogr. Inf. Sci. 2022, 36, 310–337.

28. Tang, X.; Huang, Y.; Xia, M.; Long, C. A multi-task BERT-BiLSTM-AM-CRF strategy for Chinese named entity recognition. Neural Process. Lett. 2023, 55, 1209–1229.

29. Wang, Y.; Sun, Y.; Ma, Z.; Gao, L.; Xu, Y. An ERNIE-based joint model for Chinese named entity recognition. Appl. Sci. 2020, 10, 5711.

30. Cui, L.; Wu, Y.; Liu, J.; Yang, S.; Zhang, Y. Template-based named entity recognition using BART. arXiv 2021, arXiv:2106.01760.

31. Yan, R.; Jiang, X.; Dang, D. Named entity recognition by using XLNet-BiLSTM-CRF. Neural Process. Lett. 2021, 53, 3339–3356.

32. Zhang, W.; Meng, J.; Wan, J.; Zhang, C.; Zhang, J.; Wang, Y.; Xu, L.; Li, F. ChineseCTRE: A model for geographical named entity recognition and correction based on deep neural networks and the BERT model. ISPRS Int. J. Geo-Inf. 2023, 12, 394.

33. Nayak, T.; Ng, H. Effective modeling of encoder-decoder architecture for joint entity and relation extraction. In Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence 2020, New York, NY, USA, 7–12 February 2020; pp. 8528–8535.

34. Yan, H.; Gui, T.; Dai, J.; Guo, Q.; Zhang, Z.; Qiu, X. A unified generative framework for various NER subtasks. arXiv 2021, arXiv:2106.01223.

35. Liu, H.; Qiu, Q.; Wu, L.; Li, W.; Wang, B.; Zhou, Y. Few-shot learning for name entity recognition in geological text based on GeoBERT. Earth Sci. Inform. 2022, 15, 979–991.

36. Ma, K.; Zheng, S.; Tian, M.; Qiu, Q.; Tan, Y.; Hu, X.; Li, H.; Xie, Z. CnGeoPLM: Contextual knowledge selection and embedding with pretrained language representation model for the geoscience domain. Earth Sci. Inform. 2023, 16, 3629–3646.

37. Zhang, Y.; Zhang, H. FinBERT–MRC: Financial named entity recognition using BERT under the machine reading comprehension paradigm. Neural Process. Lett. 2023, 55, 7393–7413.

38. Sun, C.; Yang, Z.; Wang, L.; Zhang, Y.; Lin, H.; Wang, J. Biomedical named entity recognition using BERT in the machine reading comprehension framework. J. Biomed. Inform. 2021, 118, 103799.

39. Zhang, H.; Guo, J.; Wang, Y.; Zhang, Z.; Zhao, H. Judicial nested named entity recognition method with MRC framework. Int. J. Cogn. Comput. Eng. 2023, 4, 118–126.

40. Huang, Z.; He, L.; Yang, Y.; Li, A.; Zhang, Z.; Wu, S.; Wang, Y.; He, Y.; Liu, X. Application of machine reading comprehension techniques for named entity recognition in materials science. J. Cheminform. 2024, 16, 76.

41. Shrimal, A.; Jain, A.; Mehta, K.; Yenigalla, P. NER-MQMRC: Formulating named entity recognition as multi question machine reading comprehension. arXiv 2022, arXiv:2205.05904.

42. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

43. Gao, Y.; Xiong, Y.; Wang, S.; Wang, H. GeoBERT: Pre-training geospatial representation learning on point-of-interest. Appl. Sci. 2022, 12, 12942.

44. Li, Z.; Kim, J.; Chiang, Y.Y.; Chen, M. SpaBERT: A pretrained language model from geographic data for geo-entity representation. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP, Miami, FL, USA, 7–11 December 2022; pp. 2757–2769.

45. Gardazi, N.M.; Daud, A.; Malik, M.K.; Bukhari, A.; Alsahfi, T.; Alshemaimri, B. BERT applications in natural language processing: A review. Artif. Intell. Rev. 2025, 58, 166.

46. Cho, K.; Van Merriënboer, B.; Gulcehre, C.; Bahdanau, D.; Bougares, F.; Schwenk, H.; Bengio, Y. Learning phrase representations using RNN encoder-decoder for statistical machine translation. arXiv 2014, arXiv:1406.1078.

47. Li, P.; Luo, A.; Liu, J.; Wang, Y.; Zhu, J.; Deng, Y.; Zhang, J. Bidirectional gated recurrent unit neural network for Chinese address element segmentation. ISPRS Int. J. Geo-Inf. 2020, 9, 635.

48. Jiang, X.; He, K.; He, J.; Yan, G. A new entity extraction method based on machine reading comprehension. arXiv 2021, arXiv:2108.06444.

49. Fei, Y.; Xu, X. GFMRC: A machine reading comprehension model for named entity recognition. Pattern Recognit. Lett. 2023, 172, 97–105.

50. Zhang, Y.; Wang, J.; Zhu, X.; Sakai, T.; Yamana, H. NER-to-MRC: Named-entity recognition completely solving as machine reading comprehension. arXiv 2023, arXiv:2305.03970.

51. Du, X.; Zhao, H.; Xing, D.; Jia, Y.; Zan, H. MRC-based Nested Medical NER with Co-prediction and Adaptive Pre-training. arXiv 2024, arXiv:2403.15800.

52. Aryabumi, V.; Dang, J.; Talupuru, D.; Dash, S.; Cairuz, D.; Lin, H.; Venkitesh, B.; Smith, M.; Campos, J.A.; Tan, Y.C.; et al. Aya 23: Open weight releases to further multilingual progress. arXiv 2024, arXiv:2405.15032.

53. Team, G.; Kamath, A.; Ferret, J.; Pathak, S.; Vieillard, N.; Merhej, R.; Perrin, S.; Matejovicova, T.; Ramé, A.; Rivière, M.; et al. Gemma 3 technical report. arXiv 2025, arXiv:2503.19786.

| No. | Entity Type | Question |
|---|---|---|
| 1 | Location name | What are the location names in the text? |
| 2 | Organization name | What are the organization names in the text? |
| 3 | Person name | What are the person names in the text? |

| No. | Example Sentence | Augmented Nested Annotation | Type of Nesting |
|---|---|---|---|
| 1 | … on the New York Stock Exchange. | ORG: New York Stock Exchange; LOC: New York | Boundary sharing |
| 2 | John F. Kennedy International Airport closed… | LOC: John F. Kennedy International Airport; PER: John F. Kennedy | Cross-level semantic overlap |
| 3 | “北京清华大学的科研成果…” (“Research results from Tsinghua University in Beijing…”) | ORG: 清华大学 (Tsinghua University); LOC: 北京 (Beijing); ORG: 北京清华大学 (Tsinghua University in Beijing) | Boundary sharing |
| 4 | “…在上海浦东新区” (“…in Shanghai’s Pudong New Area”) | LOC: 上海浦东新区 (Shanghai’s Pudong New Area); LOC: 上海 (Shanghai); LOC: 浦东新区 (Pudong New Area) | Hierarchical nesting; Boundary sharing |

| Example Sentence | Entity Type (Question) | Input Pair (MRC Format) | Expected Answer |
|---|---|---|---|
| … on the New York Stock Exchange. | Organization name (What are the organization names in the text?) | Q: What are the organization names in the text? T: …on the New York Stock Exchange | New York Stock Exchange |
| … on the New York Stock Exchange. | Location name (What are the location names in the text?) | Q: What are the location names in the text? T: …on the New York Stock Exchange | New York |
| … on the New York Stock Exchange. | Person name (What are the person names in the text?) | Q: What are the person names in the text? T: …on the New York Stock Exchange | None |
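
The question/text pairing shown in the table can be sketched as follows. This is an illustrative outline under stated assumptions: the `QUESTIONS` mapping reuses the three questions listed in the document, while the short labels `LOC`, `ORG`, and `PER`, the helper names, and the `[CLS] question [SEP] text [SEP]` layout (the usual BERT sentence-pair convention) are assumptions, since the input construction details are not reproduced in this text.

```python
from typing import Dict, List, Tuple

# One natural-language question per entity type, as listed in the document.
QUESTIONS: Dict[str, str] = {
    "LOC": "What are the location names in the text?",
    "ORG": "What are the organization names in the text?",
    "PER": "What are the person names in the text?",
}


def build_mrc_pairs(text: str) -> List[Tuple[str, str, str]]:
    """Pair the same text with every question: one MRC query per type."""
    return [(label, question, text) for label, question in QUESTIONS.items()]


def format_pair(question: str, text: str) -> str:
    """Assumed BERT-style sentence-pair layout for a single query."""
    return f"[CLS] {question} [SEP] {text} [SEP]"


sentence = "…on the New York Stock Exchange"
for label, question, text in build_mrc_pairs(sentence):
    print(label, "->", format_pair(question, text))
```

Because each entity type is queried separately, the same tokens can be returned under several questions, which is how "New York" can be extracted as a location while "New York Stock Exchange" is extracted as an organization.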

| Category | Parameter | Value |
|---|---|---|
| Input Representation Construction | Max Sentence Length (Tokens) | 128 |
| Input Representation Construction | Max Entity Length (Tokens) | 15 |
| Multi-layer Contextual Feature Extraction | GRU Hidden Units | 256 |
| Multi-layer Contextual Feature Extraction | GRU Layers | 1 |
| Multi-layer Contextual Feature Extraction | Convolution Kernel Sizes | 3, 5, 7 |
| Multi-layer Contextual Feature Extraction | Dropout Rate | 0.1 |
| Dynamic Boundary Inference | FFN Layers | 2 |
| Dynamic Boundary Inference | FFN Hidden Units | 768 |
| Training Settings | Learning Rate | |
| Training Settings | Batch Size | 256 |
| Training Settings | Training Epochs | 20 |
| Training Settings | Dropout Rate | 0.1 |

| Dataset (Mixed: Non-Nested + Nested) | Method | F1 (PER) | F1 (LOC) | F1 (ORG) | Micro-F1 |
|---|---|---|---|---|---|
| RenMinRiBao | MRC-I2DP | 92.13% | 78.99% | 80.67% | 82.49% |
| RenMinRiBao | GFMRC | 91.42% | 78.05% | 80.11% | 81.80% |
| RenMinRiBao | NER-to-MRC | 92.28% | 78.85% | 81.29% | 82.65% |
| RenMinRiBao | MRC-CAP | 91.52% | 78.83% | 82.02% | 82.60% |
| RenMinRiBao | Geo-MRC | 95.84% | 90.87% | 79.76% | 88.40% |
| MSRA | MRC-I2DP | 91.70% | 77.63% | 76.14% | 80.35% |
| MSRA | GFMRC | 92.01% | 78.47% | 75.58% | 80.60% |
| MSRA | NER-to-MRC | 91.53% | 78.02% | 76.21% | 80.43% |
| MSRA | MRC-CAP | 91.50% | 77.79% | 75.47% | 80.12% |
| MSRA | Geo-MRC | 95.66% | 89.91% | 79.03% | 87.94% |
| CoNLL-2003 | MRC-I2DP | 83.36% | 76.79% | 66.85% | 74.85% |
| CoNLL-2003 | GFMRC | 85.66% | 78.63% | 67.42% | 76.58% |
| CoNLL-2003 | NER-to-MRC | 76.68% | 80.03% | 67.88% | 74.45% |
| CoNLL-2003 | MRC-CAP | 87.18% | 78.12% | 67.80% | 77.07% |
| CoNLL-2003 | Geo-MRC | 87.30% | 82.30% | 68.97% | 79.27% |
| OntoNotes 5.0 | MRC-I2DP | 83.28% | 43.87% | 63.14% | 68.35% |
| OntoNotes 5.0 | GFMRC | 84.41% | 45.65% | 64.54% | 69.67% |
| OntoNotes 5.0 | NER-to-MRC | 84.85% | 47.07% | 64.25% | 70.04% |
| OntoNotes 5.0 | MRC-CAP | 83.50% | 48.44% | 63.97% | 69.37% |
| OntoNotes 5.0 | Geo-MRC | 84.99% | 50.88% | 67.13% | 72.24% |

| Dataset (Nested) | Method | F1 (PER) | F1 (LOC) | F1 (ORG) | Micro-F1 |
|---|---|---|---|---|---|
| RenMinRiBao | MRC-I2DP | 67.55% | 51.32% | 36.47% | 46.70% |
| RenMinRiBao | GFMRC | 64.60% | 50.60% | 37.36% | 46.36% |
| RenMinRiBao | NER-to-MRC | 64.71% | 50.00% | 38.38% | 46.46% |
| RenMinRiBao | MRC-CAP | 67.53% | 52.39% | 34.64% | 46.63% |
| RenMinRiBao | Geo-MRC | 69.57% | 57.41% | 42.11% | 51.66% |
| MSRA | MRC-I2DP | 70.49% | 52.84% | 40.07% | 49.74% |
| MSRA | GFMRC | 69.65% | 53.74% | 42.00% | 50.69% |
| MSRA | NER-to-MRC | 69.98% | 55.16% | 42.49% | 51.75% |
| MSRA | MRC-CAP | 69.04% | 53.16% | 37.41% | 48.57% |
| MSRA | Geo-MRC | 71.11% | 61.50% | 47.45% | 56.57% |
| CoNLL-2003 | MRC-I2DP | 42.63% | 41.15% | 28.25% | 35.62% |
| CoNLL-2003 | GFMRC | 44.44% | 39.67% | 27.18% | 34.75% |
| CoNLL-2003 | NER-to-MRC | 47.19% | 38.06% | 29.92% | 35.76% |
| CoNLL-2003 | MRC-CAP | 46.76% | 37.76% | 27.25% | 34.54% |
| CoNLL-2003 | Geo-MRC | 53.52% | 42.97% | 35.17% | 44.69% |
| OntoNotes 5.0 | MRC-I2DP | 41.87% | 34.77% | 26.33% | 32.44% |
| OntoNotes 5.0 | GFMRC | 47.75% | 37.14% | 29.49% | 36.03% |
| OntoNotes 5.0 | NER-to-MRC | 49.39% | 36.64% | 29.01% | 36.08% |
| OntoNotes 5.0 | MRC-CAP | 48.43% | 36.11% | 26.07% | 34.51% |
| OntoNotes 5.0 | Geo-MRC | 51.34% | 38.98% | 33.44% | 39.75% |

| Dataset | Method | Micro-F1 (Mixed) | Micro-F1 (Nested) |
|---|---|---|---|
| RenMinRiBao | Geo-MRC w/o GRU | 85.16% | 50.52% |
| RenMinRiBao | Geo-MRC w/o CNN | 86.30% | 50.59% |
| RenMinRiBao | Geo-MRC w/o length | 79.60% | 45.79% |
| RenMinRiBao | Geo-MRC | 88.40% | 51.66% |
| MSRA | Geo-MRC w/o GRU | 86.41% | 54.14% |
| MSRA | Geo-MRC w/o CNN | 87.13% | 55.15% |
| MSRA | Geo-MRC w/o length | 78.94% | 50.56% |
| MSRA | Geo-MRC | 87.94% | 56.57% |
| CoNLL-2003 | Geo-MRC w/o GRU | 75.56% | 39.38% |
| CoNLL-2003 | Geo-MRC w/o CNN | 74.89% | 41.16% |
| CoNLL-2003 | Geo-MRC w/o length | 73.74% | 35.84% |
| CoNLL-2003 | Geo-MRC | 79.27% | 44.69% |
| OntoNotes 5.0 | Geo-MRC w/o GRU | 71.69% | 35.16% |
| OntoNotes 5.0 | Geo-MRC w/o CNN | 70.78% | 37.14% |
| OntoNotes 5.0 | Geo-MRC w/o length | 69.11% | 34.99% |
| OntoNotes 5.0 | Geo-MRC | 72.24% | 39.75% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y.; Li, J.; Li, P.; Liu, T.; Du, P.; Hao, X. Geo-MRC: Dynamic Boundary Inference in Machine Reading Comprehension for Nested Geographic Named Entity Recognition. ISPRS Int. J. Geo-Inf. 2025, 14, 431. https://doi.org/10.3390/ijgi14110431
