Cross-Context Aggregation for Multi-View Urban Scene and Building Facade Matching

Abstract

1. Introduction

- A detector-free framework that integrates multi-scale local representations, cross-region contextual aggregation, and geometric priors, enhancing the robustness and spatial consistency in urban feature matching;

- A lightweight channel feature reorganization module that selectively emphasizes informative channels while suppressing redundant features;

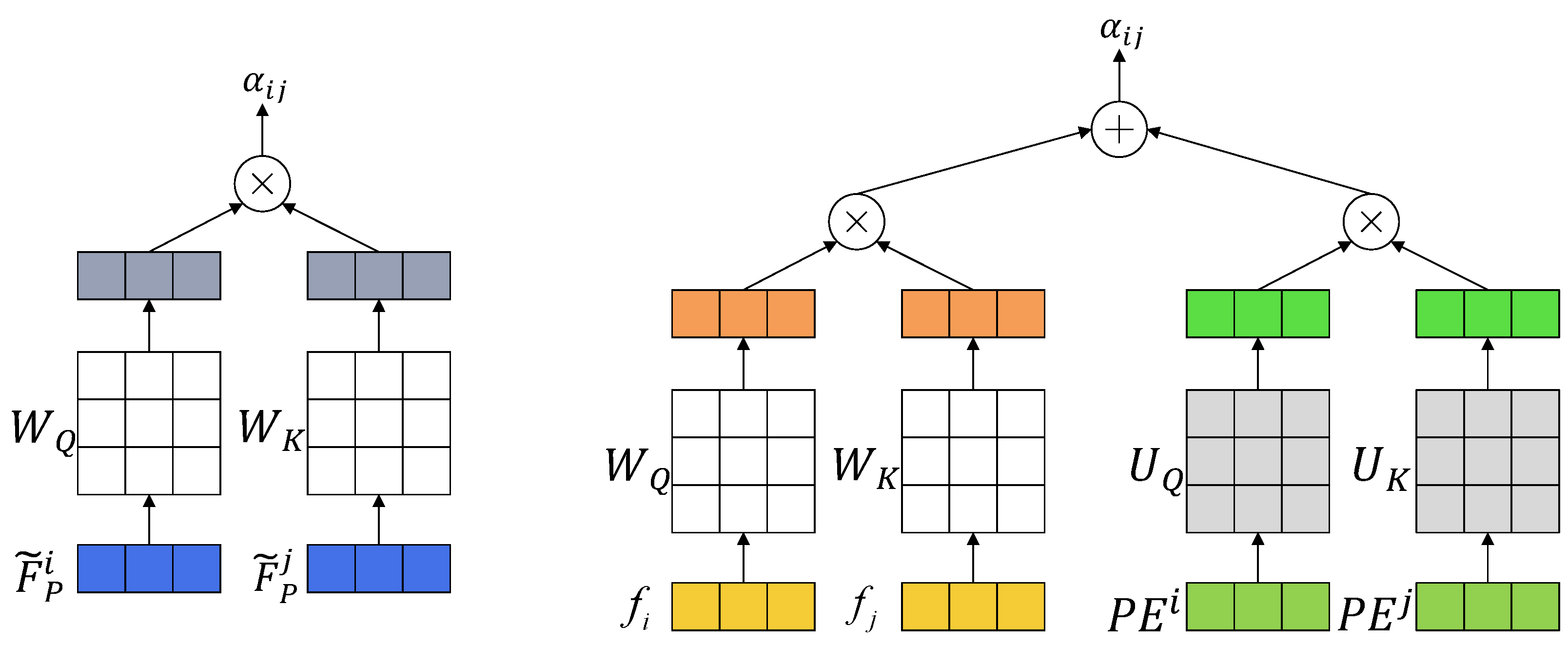

- A novel attention design that combines parallel self- and cross-attention with independent positional encoding to retain spatial information through deep attention layers;

- Extensive experiments on challenging urban scenes that demonstrate the effectiveness and competitiveness of the proposed approach.
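To make the idea of emphasizing informative channels and suppressing redundant ones concrete, the following is a minimal sketch of channel gating in the style of squeeze-and-excitation [27]. It is an illustrative assumption, not the channel feature reorganization module proposed here: the function name `channel_gate`, the weight matrices `w1` and `w2`, and the bottleneck layout are all hypothetical.

```python
import math


def channel_gate(feature_map, w1, w2):
    """Reweight the channels of a C x H x W feature map given as nested lists.

    w1 (shape C_r x C) and w2 (shape C x C_r) are hypothetical learned
    weights of a two-layer bottleneck; they are assumptions of this sketch.
    """
    # Squeeze: global average pooling yields one scalar per channel.
    pooled = [
        sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        for ch in feature_map
    ]
    # Excite: ReLU bottleneck followed by a sigmoid gives a gate in (0, 1).
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Scale: each channel is multiplied by its gate.
    scaled = [
        [[g * v for v in row] for row in ch]
        for ch, g in zip(feature_map, gates)
    ]
    return scaled, gates


# Toy input: channel 0 is active, channel 1 is empty.
features = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]
scaled, gates = channel_gate(features, w1=[[1.0, -1.0]], w2=[[2.0], [-2.0]])
```

With these toy weights the gate of the active channel is above 0.5 and the gate of the empty channel is below 0.5, which is the selective emphasis the contribution describes; how the actual module computes its weights is not specified in this excerpt.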

2. Related Work

2.1. Detector-Based Feature Matching

2.2. Detector-Free Feature Matching

3. Methodology

3.1. Framework

3.2. Backbone Feature Extraction

3.3. Self- and Cross-Attention for Feature Aggregation

3.4. Independent Positional Encoding

3.5. Local Feature Enhancement

3.6. Integration and Description

3.7. Matching Layer

4. Experiments

4.1. Implementation Details

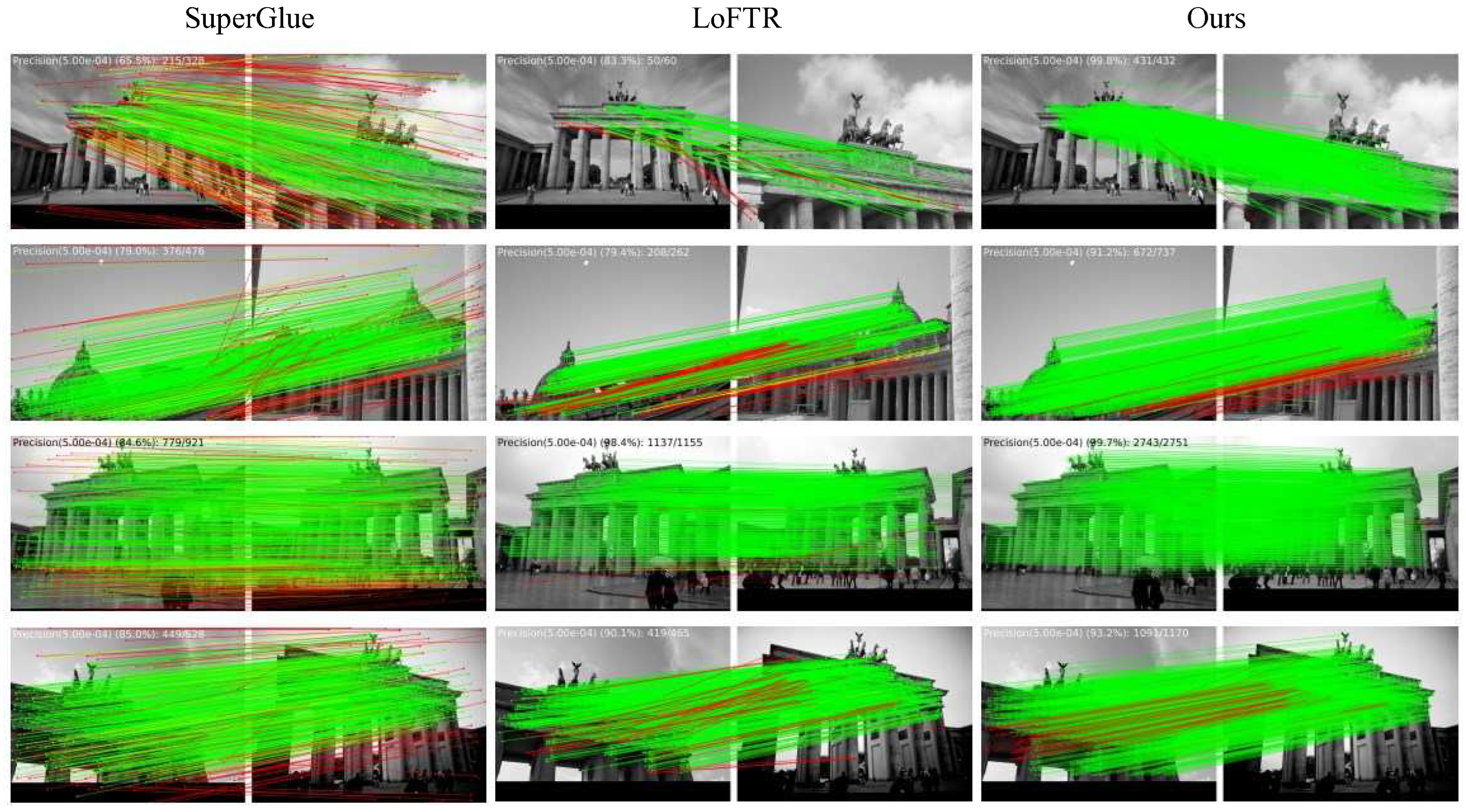

4.2. Pose Estimation for Urban Scenes

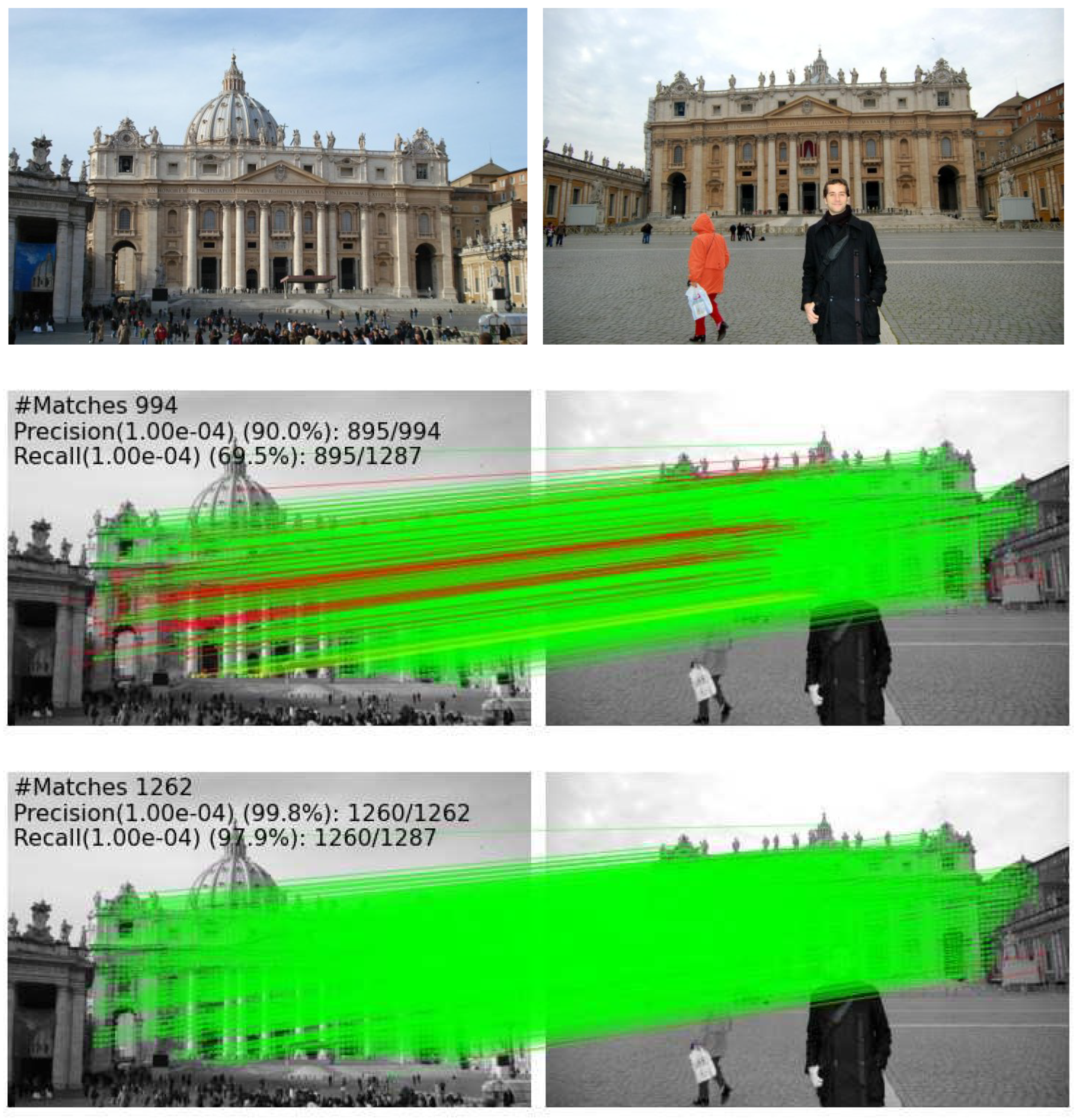

4.3. Visual Localization Under Cross-Daylight Conditions

4.4. Discussion

4.4.1. Embedding Components

4.4.2. Positional Encoding

4.4.3. Extraction Depth of Cross-Scale Local Features

4.4.4. Efficiency Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Luo, H.; Zhang, J.; Liu, X.; Zhang, L.; Liu, J. Large-Scale 3D Reconstruction from Multi-View Imagery: A Comprehensive Review. Remote Sens. 2024, 16, 773.

2. Huang, X.; Yang, J.; Li, J.; Wen, D. Urban functional zone mapping by integrating high spatial resolution nighttime light and daytime multi-view imagery. ISPRS J. Photogramm. Remote Sens. 2021, 175, 403–415.

3. Zhang, X.; Qin, H.; Ma, L.; Yu, Y.; Ma, Y.; Hu, Y. Deep Feature Matching of Different-Modal Images for Visual Geo-Localization of AAVs. IEEE Trans. Aerosp. Electron. Syst. 2025, 61, 2784–2801.

4. Zhang, Y.; Zhen, J.; Liu, T.; Yang, Y.; Cheng, Y. Adaptive Differentiation Siamese Fusion Network for Remote Sensing Change Detection. IEEE Geosci. Remote Sens. Lett. 2025, 22, 1–5.

5. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vision 2004, 60, 91–110.

6. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

7. Rocco, I.; Cimpoi, M.; Arandjelović, R.; Torii, A.; Pajdla, T.; Sivic, J. Neighbourhood consensus networks. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 3–8 December 2018; pp. 1658–1669.

8. Li, X.; Han, K.; Li, S.; Prisacariu, V. Dual-Resolution Correspondence Networks. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020; pp. 17346–17357.

9. Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-Free Local Feature Matching with Transformers. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8918–8927.

10. Xu, C.; Wang, B.; Ye, Z.; Mei, L. ETQ-Matcher: Efficient Quadtree-Attention-Guided Transformer for Detector-Free Aerial–Ground Image Matching. Remote Sens. 2025, 17, 1300.

11. Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

12. Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A Trainable CNN for Joint Description and Detection of Local Features. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8084–8093.

13. DeTone, D.; Malisiewicz, T.; Rabinovich, A. Superpoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 224–236.

14. Zhang, J.; Jiao, L.; Ma, W.; Liu, F.; Liu, X.; Li, L.; Zhu, H. RDLNet: A Regularized Descriptor Learning Network. IEEE Trans. Neural Netw. Learn. Syst. 2023, 34, 5669–5681.

15. Xiao, X.; Qu, W.; Xia, G.S.; Xu, M.; Shao, Z.; Gong, J.; Li, D. A novel real-time matching and pose reconstruction method for low-overlap agricultural UAV images with repetitive textures. ISPRS J. Photogramm. Remote Sens. 2025, 226, 54–75.

16. He, H.; Xiong, W.; Zhou, F.; He, Z.; Zhang, T.; Sheng, Z. Topology-Aware Multi-View Street Scene Image Matching for Cross-Daylight Conditions Integrating Geometric Constraints and Semantic Consistency. ISPRS Int. J. Geo-Inf. 2025, 14, 212.

17. Sarlin, P.E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning Feature Matching With Graph Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4937–4946.

18. Lu, X.; Yan, Y.; Kang, B.; Du, S. ParaFormer: Parallel Attention Transformer for Efficient Feature Matching. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 1853–1860.

19. Cui, Z.; Tang, R.; Wei, J. UAV Image Stitching With Transformer and Small Grid Reformation. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

20. Wang, Z.; Shi, D.; Qiu, C.; Jin, S.; Li, T.; Shi, Y.; Liu, Z.; Qiao, Z. Sequence Matching for Image-Based UAV-to-Satellite Geolocalization. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–15.

21. Yu, J.; Chang, J.; He, J.; Zhang, T.; Yu, J.; Wu, F. Adaptive Spot-Guided Transformer for Consistent Local Feature Matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 21898–21908.

22. Edstedt, J.; Sun, Q.; Bökman, G.; Wadenbäck, M.; Felsberg, M. RoMa: Robust Dense Feature Matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19790–19800.

23. Li, D.; Yan, Y.; Liang, D.; Du, S. MSFORMER: Multi-Scale Transformer with Neighborhood Consensus for Feature Matching. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Rhodes Island, Greece, 4–10 June 2023.

24. Zhou, Y.; Cheng, X.; Zhai, X.; Xue, L.; Du, S. CSFormer: Cross-Scale Transformer for Feature Matching. In Proceedings of the International Conference on Sensing, Measurement and Data Analytics in the Era of Artificial Intelligence, Xi’an, China, 2–4 November 2023; pp. 1–6.

25. Zhang, Y.; Lan, C.; Zhang, H.; Ma, G.; Li, H. Multimodal Remote Sensing Image Matching via Learning Features and Attention Mechanism. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–20.

26. Lin, T.; Dollár, P.; Girshick, R.B.; He, K.; Hariharan, B.; Belongie, S.J. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

27. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

28. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

29. Cuturi, M. Sinkhorn distances: Lightspeed computation of optimal transport. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 2292–2300.

30. Li, Z.; Snavely, N. MegaDepth: Learning Single-View Depth Prediction from Internet Photos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2041–2050.

31. Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

32. Dai, A.; Chang, A.X.; Savva, M.; Halber, M.; Funkhouser, T.; Nießner, M. ScanNet: Richly-Annotated 3D Reconstructions of Indoor Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2432–2443.

33. Bian, J.; Lin, W.Y.; Matsushita, Y.; Yeung, S.K.; Nguyen, T.D.; Cheng, M.M. GMS: Grid-Based Motion Statistics for Fast, Ultra-Robust Feature Correspondence. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2828–2837.

34. Yi, K.M.; Trulls, E.; Ono, Y.; Lepetit, V.; Salzmann, M.; Fua, P. Learning to Find Good Correspondences. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2666–2674.

35. Zhang, J.; Sun, D.; Luo, Z.; Yao, A.; Zhou, L.; Shen, T.; Chen, Y.; Liao, H.; Quan, L. Learning Two-View Correspondences and Geometry Using Order-Aware Network. In Proceedings of the IEEE International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 5844–5853.

36. Luo, Z.; Shen, T.; Zhou, L.; Zhang, J.; Yao, Y.; Li, S.; Fang, T.; Quan, L. ContextDesc: Local Descriptor Augmentation With Cross-Modality Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2522–2531.

37. Sattler, T.; Maddern, W.; Toft, C.; Torii, A.; Hammarstrand, L.; Stenborg, E.; Safari, D.; Okutomi, M.; Pollefeys, M.; Sivic, J.; et al. Benchmarking 6DOF Outdoor Visual Localization in Changing Conditions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8601–8610.

38. Sarlin, P.E.; Cadena, C.; Siegwart, R.; Dymczyk, M. From Coarse to Fine: Robust Hierarchical Localization at Large Scale. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12708–12717.

| Category | Method | Advantages | Limitations |
|---|---|---|---|
| Detector-based | SuperPoint [13] | Efficient inference, illumination robust | Limited robustness in dynamic scenes |
| Detector-based | RDLNet [14] | Compact discriminative descriptor | Requires extensive training to learn hard samples |
| Detector-based | SuperGlue [17] | Strong contextual reasoning, robust to occlusion and viewpoint changes | Poor performance in textureless regions |
| Detector-based | ParaFormer [18] | Improved computational efficiency | Prolonged training convergence |
| Detector-free | DRC-Net [8] | Increased matching reliability | Limited receptive field |
| Detector-free | LoFTR [9] | Robust to occlusion and illumination changes | Losing detailed information |
| Detector-free | ASTR [21] | High accuracy under extreme viewpoint changes | Requires the camera’s intrinsic parameters |
| Detector-free | RoMa [22] | Robustness under extreme scenarios | False geometric matches |

| Category | Image Size | Method | Pose AUC @5° (%) | Pose AUC @10° (%) | Pose AUC @20° (%) | Precision @1e-4 (%) |
|---|---|---|---|---|---|---|
| Detector-based | - | SuperPoint [13] + NN + mutual | 31.7 | 46.8 | 60.1 | - |
| Detector-based | - | SuperPoint [13] + SuperGlue [17] | 36.54 | 54.33 | 69.42 | - |
| Detector-free | - | DRC-Net [8] | 27.01 | 42.96 | 58.31 | - |
| Detector-free | 640 | MSFormer [23] | 45.35 | 62.24 | 74.96 | 92.85 |
| Detector-free | 640 | LoFTR [9] | 36.76 | 53.04 | 66.17 | 89.16 |
| Detector-free | 640 | CSFormer [24] | 46.84 | 62.57 | 74.81 | 93.65 |
| Detector-free | 640 | Ours | 46.41 | 62.72 | 75.00 | 94.23 |
| Detector-free | 720 | LoFTR [9] | 43.13 | 58.74 | 70.81 | 81.75 |
| Detector-free | 720 | CSFormer [24] | 47.96 | 64.25 | 76.75 | 94.25 |
| Detector-free | 720 | Ours | 48.15 | 64.70 | 77.36 | 95.87 |
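For readers unfamiliar with the pose AUC columns, the sketch below shows how such values are commonly computed in the evaluation protocol used by SuperGlue [17] and LoFTR [9]: the per-pair pose error (typically the larger of the rotation and translation angular errors, in degrees) is turned into a cumulative recall curve, and the area under that curve up to each threshold is normalized by the threshold. Whether this exact protocol was used here is an assumption, and the function name `pose_auc` is hypothetical.

```python
def pose_auc(errors, thresholds=(5.0, 10.0, 20.0)):
    """Normalized area under the recall-vs-pose-error curve per threshold.

    errors: one pose error in degrees per image pair (assumed convention).
    Returns values in [0, 1]; multiply by 100 for percentages.
    """
    errs = sorted(errors)
    n = len(errs)
    # Recall reached after each sorted error, with the origin prepended.
    xs = [0.0] + errs
    ys = [0.0] + [(i + 1) / n for i in range(n)]
    aucs = []
    for t in thresholds:
        # Keep curve points strictly below the threshold, then close at t.
        k = sum(1 for e in xs if e < t)
        px = xs[:k] + [t]
        py = ys[:k] + [ys[k - 1]]
        # Trapezoidal integration of recall over error.
        area = sum(
            (px[i + 1] - px[i]) * (py[i + 1] + py[i]) / 2.0
            for i in range(len(px) - 1)
        )
        aucs.append(area / t)
    return aucs


# Three image pairs with pose errors of 1, 3 and 30 degrees.
example = pose_auc([1.0, 3.0, 30.0], thresholds=(5.0,))
```

A pair whose error exceeds the threshold contributes nothing to the area, so the metric rewards both the number of accurately estimated poses and how small their errors are.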

| Category | Method | Pose AUC @5° (%) | Pose AUC @10° (%) | Pose AUC @20° (%) | Precision @5e-4 (%) |
|---|---|---|---|---|---|
| Detector-based | ORB [11] + GMS [33] | 5.21 | 13.65 | 25.36 | 72.0 |
| Detector-based | D2Net [12] + NN | 5.25 | 14.53 | 27.96 | 46.7 |
| Detector-based | ContextDesc [36] + Ratio Test [5] | 6.64 | 15.01 | 25.75 | 51.2 |
| Detector-based | SuperPoint [13] + NN | 9.43 | 21.53 | 36.40 | 50.4 |
| Detector-based | SuperPoint [13] + GMS [33] | 8.39 | 18.96 | 31.56 | 50.3 |
| Detector-based | SuperPoint [13] + PointCN [34] | 11.40 | 25.47 | 41.41 | 71.8 |
| Detector-based | SuperPoint [13] + OANet [35] | 11.76 | 26.90 | 43.85 | 74.0 |
| Detector-based | SuperPoint [13] + SuperGlue [17] | 11.82 | 26.76 | 40.38 | 85.1 |
| Detector-free | DRC-Net [8] | 7.69 | 11.93 | 30.49 | - |
| Detector-free | LoFTR [9] | 12.47 | 26.98 | 41.64 | 62.20 |
| Detector-free | CSFormer [24] | 13.08 | 27.80 | 44.17 | 77.36 |
| Detector-free | Ours | 13.26 | 28.03 | 45.05 | 78.08 |
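The precision columns report the share of predicted matches that satisfy an epipolar constraint at the stated threshold. As a hedged illustration, the sketch below assumes the convention used in LoFTR [9]: a match is counted as correct when its symmetric epipolar distance, measured in normalized camera coordinates against the ground-truth essential matrix, falls below the threshold. The function names and the toy essential matrix are hypothetical, and the excerpt does not confirm that this exact distance was used.

```python
def _matvec(m, v):
    """Multiply a 3x3 matrix (nested lists) by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def symmetric_epipolar_distance(p0, p1, E):
    """Symmetric epipolar distance for one match.

    p0, p1: (x, y) in normalized camera coordinates of images 0 and 1.
    E: 3x3 essential matrix such that x1^T E x0 = 0 for a perfect match.
    Degenerate epipolar lines (zero denominators) are not handled here.
    """
    x0 = [p0[0], p0[1], 1.0]
    x1 = [p1[0], p1[1], 1.0]
    Ex0 = _matvec(E, x0)                       # epipolar line in image 1
    Et = [[E[j][i] for j in range(3)] for i in range(3)]
    Etx1 = _matvec(Et, x1)                     # epipolar line in image 0
    residual = sum(a * b for a, b in zip(x1, Ex0))
    return residual ** 2 * (
        1.0 / (Ex0[0] ** 2 + Ex0[1] ** 2)
        + 1.0 / (Etx1[0] ** 2 + Etx1[1] ** 2)
    )


def match_precision(matches, E, threshold=1e-4):
    """Fraction of matches whose distance is below the threshold."""
    if not matches:
        return 0.0
    correct = sum(
        1 for p0, p1 in matches
        if symmetric_epipolar_distance(p0, p1, E) < threshold
    )
    return correct / len(matches)


# Toy case: identity rotation, unit translation along x, so E = [t]_x.
E_toy = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
toy_matches = [((0.1, 0.2), (0.3, 0.2)),   # shifted along x: consistent
               ((0.1, 0.2), (0.1, 0.3))]   # shifted along y: inconsistent
toy_precision = match_precision(toy_matches, E_toy, threshold=1e-4)
```

In the toy case one of the two matches lies on its epipolar line, so half of the matches count as correct.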

| Method | Day (0.25 m, 2°) | Day (0.5 m, 5°) | Day (1.0 m, 10°) | Night (0.25 m, 2°) | Night (0.55 m, 5°) | Night (5 m, 10°) |
|---|---|---|---|---|---|---|
| SuperGlue [17] | 87.4 | 93.4 | 97.1 | 66.0 | 80.6 | 91.5 |
| LoFTR [9] | 86.0 | 92.5 | 96.7 | 68.1 | 80.1 | 91.6 |
| CSFormer [24] | 86.2 | 93.9 | 97.6 | 72.3 | 89.5 | 97.4 |
| Ours | 86.5 | 94.3 | 97.9 | 72.6 | 90.1 | 98.1 |
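Each column of the localization results pairs a distance threshold with an angular threshold. A minimal sketch of how such a figure is usually obtained is given below, assuming the common visual localization convention in which a query counts as localized when both its position error and its orientation error are within the thresholds. The function name `localization_recall` and the example errors are hypothetical.

```python
def localization_recall(pose_errors, thresholds):
    """Percentage of queries localized within each (metres, degrees) pair.

    pose_errors: list of (translation_error_m, rotation_error_deg),
                 one entry per query image.
    thresholds:  list of (max_translation_m, max_rotation_deg).
    """
    n = len(pose_errors)
    if n == 0:
        return [0.0 for _ in thresholds]
    return [
        100.0 * sum(
            1 for t_err, r_err in pose_errors
            if t_err <= max_t and r_err <= max_r
        ) / n
        for max_t, max_r in thresholds
    ]


# Four hypothetical queries evaluated at three illustrative thresholds.
errors = [(0.1, 1.0), (0.4, 3.0), (2.0, 8.0), (10.0, 30.0)]
recall = localization_recall(errors, [(0.25, 2.0), (0.5, 5.0), (5.0, 10.0)])
```

Because the thresholds are nested, the percentages are non-decreasing from the strictest to the loosest column, which matches the pattern in every row of the table.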

| Parallel Attention | Channel Reorganization | Cross-Scale Aggregation | AUC (%) | ||

|---|---|---|---|---|---|

| @5° | @10° | @20° | |||

| ✓ | ✓ | 27.89 | 43.56 | 59.03 | |

| ✓ | 45.32 | 61.45 | 74.81 | ||

| ✓ | ✓ | 47.06 | 63.11 | 76.27 | |

| ✓ | ✓ | ✓ | 48.15 | 64.70 | 77.36 |

| APE | IPE | AUC (%) | Precision (%) | ||

|---|---|---|---|---|---|

| @5° | @10° | @20° | @1e-4 | ||

| ✓ | 47.96 | 64.25 | 76.75 | 94.25 | |

| ✓ | 47.11 | 63.71 | 76.73 | 95.28 | |

| ✓ | ✓ | 48.15 | 64.70 | 77.36 | 95.87 |

| Depth | AUC @5° (%) | AUC @10° (%) | AUC @20° (%) | Precision @1e-4 (%) |
|---|---|---|---|---|
| 1 | 45.17 | 61.43 | 74.20 | 92.50 |
| 2 | 47.22 | 63.89 | 76.11 | 92.63 |
| 3 | 48.28 | 64.46 | 76.74 | 94.64 |
| 4 | 48.15 | 64.70 | 77.36 | 95.87 |

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yan, Y.; Zhou, Y. Cross-Context Aggregation for Multi-View Urban Scene and Building Facade Matching. ISPRS Int. J. Geo-Inf. 2025, 14, 425. https://doi.org/10.3390/ijgi14110425