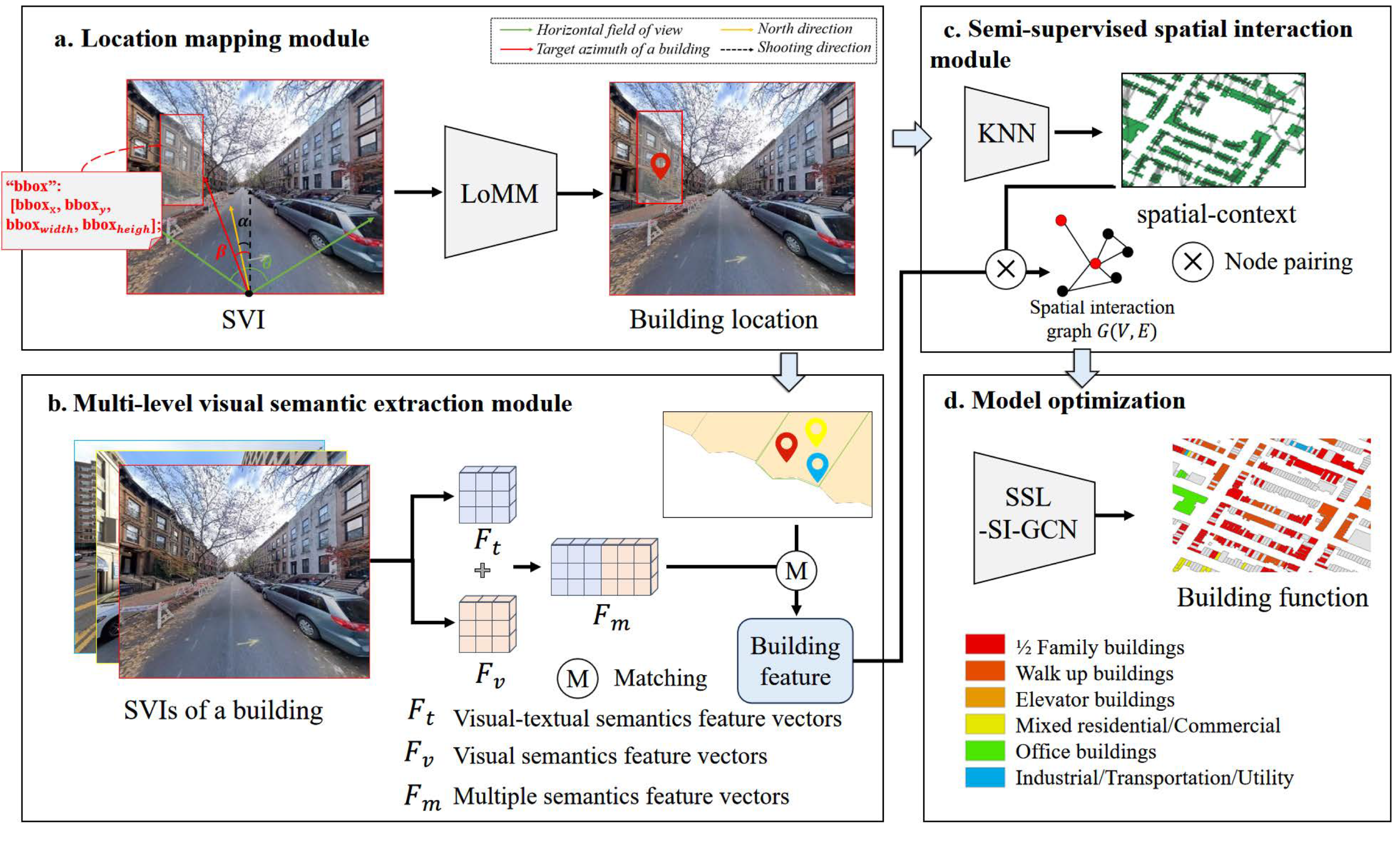

This study aims to improve building function identification by integrating multiple semantics of SVIs with the spatial context of buildings, and it proposes the multi-semantic semi-supervised building function identification (MS-SS-BFI) method. As depicted in Figure 1, the proposed method comprises four key modules: a location mapping module (LoMM) that aligns SVIs with buildings; a multi-level visual semantic extraction module (ML-VSEM) that extracts visual semantics and visual-textual semantics from SVIs; a semi-supervised spatial interaction module (S³IM) that integrates multi-semantics and the spatial context between buildings via graph-based aggregation; and a model optimization module that trains the model and predicts the function category of each building.

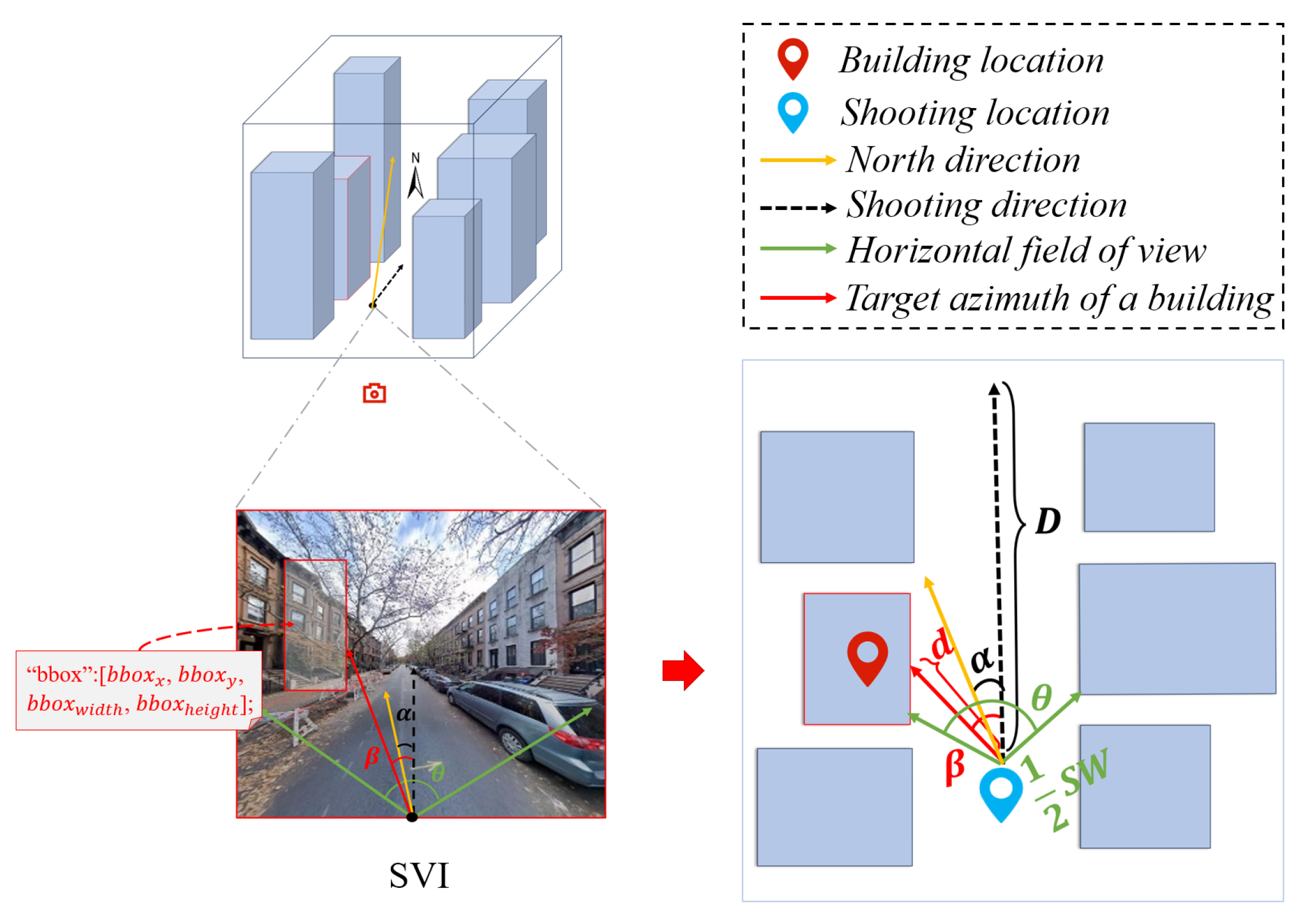

3.1. Location Mapping Module

The recorded geographic coordinates of SVIs refer to the location of the camera, since SVIs are typically captured by vehicles traveling along roadways [24]. That is, the image coordinates reflect the shooting locations (i.e., where the SVI was taken), not the building locations (i.e., the true locations of the buildings). To align the image objects with building objects, the proposed method develops a location mapping algorithm. As illustrated in Figure 2, the algorithm is a three-step process.

Step 1. Calculating the building azimuth $\theta_b$.

The building azimuth $\theta_b$ is the angle between true north and the direction from the shooting location to the building, describing where the building facade lies relative to the camera. The values of $\theta_b$ typically range from 0° to 360°, where 0° corresponds to true north, 90° to east, 180° to south, and 270° to west. $\theta_b$ can be inferred from the SVI’s shooting direction and the building’s position within the image.

The pixel position $(p_x, p_y)$ of the building in an SVI can be calculated using the bounding box annotations of the SVI, as shown in Equation (1):

$$(p_x, p_y) = \left(x + \frac{w}{2},\; y + \frac{h}{2}\right) \tag{1}$$

where $x$ and $y$ denote the x- and y-coordinates of the bounding box’s top-left corner, respectively; and $w$ and $h$ represent the width and height of the bounding box, respectively.

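The angular deviation $\varphi$ between the building and the SVI’s shooting direction can be computed using Equation (2):

$$\varphi = \left(p_x - \frac{W_{img}}{2}\right) \cdot \frac{\alpha}{W_{img}} \tag{2}$$

where $p_x$ is the horizontal pixel position of the building; $\alpha$, estimated through multi-angle observations, denotes the horizontal field of view (HFOV) of the image; and $W_{img}$ is the total number of horizontal pixels in the image. The angle covered by one pixel, $\alpha / W_{img}$, is computed as the ratio of the difference of building azimuths to the difference in the x-coordinates of the bounding boxes’ top-left corners.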

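The building azimuth $\theta_b$ is derived as follows:

$$\theta_b = (\theta_s + \varphi) \bmod 360^\circ \tag{3}$$

where $\theta_s$ represents the azimuth angle of the SVI’s shooting direction, and $\varphi$ is the angular deviation of the building from that direction.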
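Step 1 can be sketched in a few lines of Python. This is a minimal illustration rather than the study's implementation: it assumes a linear pixel-to-angle mapping across the HFOV and wraps the result to [0°, 360°). The function and parameter names are hypothetical.

```python
def building_azimuth(bbox_x, bbox_w, image_width_px, hfov_deg, heading_deg):
    """Return (azimuth_deg, deviation_deg) for one annotated building.

    Assumes a linear mapping between pixel columns and viewing angle.
    """
    # Horizontal pixel position: centre of the bounding box.
    p_x = bbox_x + bbox_w / 2.0
    # Signed offset from the image centre, scaled by degrees per pixel.
    deviation_deg = (p_x - image_width_px / 2.0) * (hfov_deg / image_width_px)
    # Shooting direction plus deviation, wrapped to [0, 360).
    azimuth_deg = (heading_deg + deviation_deg) % 360.0
    return azimuth_deg, deviation_deg


# A building right of centre in an image shot while heading just west of north.
azimuth, deviation = building_azimuth(
    bbox_x=700, bbox_w=200, image_width_px=1000, hfov_deg=90.0, heading_deg=350.0
)
print(round(azimuth, 6), round(deviation, 6))
```

A negative deviation means the building lies to the left of the shooting direction, and the modulo keeps the azimuth valid when the sum crosses north.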

Step 2. Estimating the distance between the shooting and building locations.

The distance between the shooting and building locations, d, is closely related to the absolute value of the horizontal offset angle. When the absolute offset angle approaches 0°, i.e., the SVI shooting direction aligns with the street’s longitudinal axis, the buildings appearing near the center of the image tend to be farther away, as they lie along the depth of the street. Conversely, when the offset angle approaches 90°, indicating a side-view perspective perpendicular to the street’s longitudinal direction, the buildings are located on both sides of the street and much closer to the camera, with the distance approaching half the street width.

To account for the fact that buildings captured at the far end of a street are not infinitely distant, this study introduces a hyperparameter, D, to denote the maximum distance from the SVI to the building. A nonlinear quadratic function is employed to represent distance variation as the building’s position shifts from the image edges toward the center. The distance d is computed by this function from D, the street width (a second hyperparameter), and the azimuthal deviation of the building relative to the shooting direction.
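The two limiting cases described above constrain the distance function without fixing it uniquely. As a minimal sketch, assuming a quadratic interpolation in the normalized offset angle (an illustrative choice, not necessarily the form used in this study), the estimate could look as follows. The function name, the parameter names, and the clamping of the offset to 90° are all assumptions.

```python
def estimate_distance(deviation_deg, max_distance_m, street_width_m):
    """Illustrative quadratic distance model (assumed form).

    Returns max_distance_m at a 0 degree offset and half the street width
    at a 90 degree offset, decaying quadratically in between.
    """
    half_width = street_width_m / 2.0
    # Normalise |offset| to t in [0, 1]; offsets beyond 90 degrees are clamped.
    t = min(abs(deviation_deg), 90.0) / 90.0
    return half_width + (max_distance_m - half_width) * (1.0 - t) ** 2


for offset in (0.0, 45.0, 90.0):
    print(offset, estimate_distance(offset, max_distance_m=50.0, street_width_m=20.0))
```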

Step 3. Mapping the shooting location to the building location.

Given the geographic coordinates of the SVI shooting location, the building azimuth $\theta_b$, and the estimated distance d, the actual geographic location of the building can be inferred by solving the forward geodesic problem. The central angle $\sigma$ and deviation angle $\psi$ (in radians) are calculated by Equations (5) and (6):

$$\sigma = \frac{d}{R} \tag{5}$$

$$\psi = \theta_b \cdot \frac{\pi}{180} \tag{6}$$

where R is the radius of the Earth, d is the distance between the shooting and building locations, and $\theta_b$ is the building azimuth.

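The building’s latitude $\phi_b$ and longitude $\lambda_b$ are then derived using the Haversine-based destination-point formula:

$$\phi_b = \arcsin\left(\sin\phi_s \cos\sigma + \cos\phi_s \sin\sigma \cos\psi\right) \tag{7}$$

$$\lambda_b = \lambda_s + \operatorname{atan2}\left(\sin\psi \sin\sigma \cos\phi_s,\; \cos\sigma - \sin\phi_s \sin\phi_b\right) \tag{8}$$

where $\phi_s$ and $\lambda_s$ denote the latitude and longitude of the shooting location where the SVI was taken, respectively; $\sigma$ is the central angle; and $\psi$ is the building azimuth in radians.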
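The forward geodesic step can be illustrated with the standard spherical destination-point formula. The sketch below assumes a spherical Earth with a mean radius of 6,371 km; the function name and the default radius are assumptions, not values taken from this study.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # assumed mean Earth radius in metres


def destination_point(lat_deg, lon_deg, azimuth_deg, distance_m,
                      radius_m=EARTH_RADIUS_M):
    """Move distance_m from (lat, lon) along azimuth_deg on a sphere."""
    lat1 = math.radians(lat_deg)
    lon1 = math.radians(lon_deg)
    sigma = distance_m / radius_m     # central angle in radians
    psi = math.radians(azimuth_deg)   # azimuth in radians

    lat2 = math.asin(
        math.sin(lat1) * math.cos(sigma)
        + math.cos(lat1) * math.sin(sigma) * math.cos(psi)
    )
    lon2 = lon1 + math.atan2(
        math.sin(psi) * math.sin(sigma) * math.cos(lat1),
        math.cos(sigma) - math.sin(lat1) * math.sin(lat2),
    )
    # Normalise the longitude to [-180, 180).
    lon2_deg = (math.degrees(lon2) + 540.0) % 360.0 - 180.0
    return math.degrees(lat2), lon2_deg


# Shift a camera position 30 m toward the north-east.
print(destination_point(30.0, 114.0, azimuth_deg=45.0, distance_m=30.0))
```

At street scale the central angle is tiny, so a planar approximation would give nearly the same result; the spherical form simply avoids having to choose a projection.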

The complete location mapping algorithm is presented in Algorithm 1.

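| Algorithm 1 Location Mapping Algorithm |
| --- |
| Input: SVI tuple svi, building annotations |
| Output: BUILDING set buildings |
| 1: buildings ← empty set (∅) |
| 2: D ← maximum distance |
| 3: $\alpha$ ← HFOV of svi |
| 4: $W_{img}$ ← horizontal resolution of svi |
| 5: R ← radius of the Earth |
| 6: for m ∈ svi do |
| 7: &emsp;BUILDING b |
| 8: &emsp;for each building annotation n that corresponds to m do |
| 9: &emsp;&emsp;$\phi_s$, $\lambda_s$ ← the latitude and longitude of m |
| 10: &emsp;&emsp;b.svfid ← the id of m |
| 11: &emsp;&emsp;$p_x$ ← n.x + n.w / 2 |
| 12: &emsp;&emsp;$\varphi$ ← ($p_x$ − $W_{img}$ / 2) × ($\alpha$ / $W_{img}$) |
| 13: &emsp;&emsp;$\theta_b$ ← building azimuth from $\varphi$ and the shooting direction of m (Step 1) |
| 14: &emsp;&emsp;b.θ ← $\theta_b$ |
| 15: &emsp;&emsp;b.d ← distance estimated from D, the street width, and $\varphi$ (Step 2) |
| 16: &emsp;&emsp;central angle ← b.d / R (Equation (5)) |
| 17: &emsp;&emsp;deviation angle ← b.θ in radians (Equation (6)) |
| 18-19: &emsp;&emsp;b.Gx, b.Gy ← geographic coordinates of the building (Step 3) |
| 20: &emsp;end for |
| 21: &emsp;append b to buildings |
| 22: end for |
| 23: return buildings |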

3.2. Multi-Level Visual Semantic Extraction Module

Visual semantics from SVIs effectively characterize building functional features, while visual-textual semantics, derived from image captions [25], provide the scene knowledge of the SVI from pre-trained large models. These two kinds of visual semantics jointly provide complementary semantics for building function identification. As shown in Figure 3, visual semantics are first extracted using the Vision Transformer (ViT) model [26]. Subsequently, a pre-trained image captioning model generates descriptive text captions for each SVI to embed latent semantic information. Finally, the generated captions are processed by BERT [27] to extract contextualized visual-textual semantics.

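Specifically, the input SVI is denoted as $X \in \mathbb{R}^{H \times W \times C}$, where H, W, and C represent the image height, width, and number of channels, respectively. The image is first divided into N non-overlapping patches, each of size $P \times P$. Each patch is flattened into a 1-D vector and mapped to a high-dimensional embedding space, represented as follows:

$$X_p = [x_p^1; x_p^2; \ldots; x_p^N], \quad x_p^i \in \mathbb{R}^{P^2 C}$$

The embedding layer transforms these image patches into D-dimensional vectors:

$$Z_0 = [x_p^1 E; x_p^2 E; \ldots; x_p^N E] + E_{pos}$$

where $E \in \mathbb{R}^{(P^2 C) \times D}$ is the patch projection matrix, and $E_{pos}$ is positional encoding.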
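The patch splitting and embedding step can be sketched with NumPy. This is a simplified illustration, not the ViT implementation used in the study: the projection matrix and positional encoding are random stand-ins for learned parameters, no class token is added, and the 224 × 224 input, patch size of 16, and embedding width of 768 are assumed values.

```python
import numpy as np


def patch_embed(image, patch_size, proj, pos):
    """Split an (H, W, C) image into flattened patches and embed them."""
    h, w, c = image.shape
    p = patch_size
    if h % p or w % p:
        raise ValueError("image sides must be multiples of the patch size")
    # (H/P, P, W/P, P, C) -> (H/P, W/P, P, P, C) -> (N, P*P*C)
    patches = (
        image.reshape(h // p, p, w // p, p, c)
        .transpose(0, 2, 1, 3, 4)
        .reshape(-1, p * p * c)
    )
    # Linear projection to D dimensions plus positional encoding.
    return patches @ proj + pos


rng = np.random.default_rng(0)
image = rng.random((224, 224, 3))          # stand-in for one SVI
P, D = 16, 768                             # assumed patch size and width
N = (224 // P) ** 2                        # number of patches
proj = rng.normal(scale=0.02, size=(P * P * 3, D))
pos = rng.normal(scale=0.02, size=(N, D))

tokens = patch_embed(image, P, proj, pos)
print(tokens.shape)  # (196, 768)
```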

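The embedding vectors are processed through a Transformer encoder consisting of L identical layers. Each layer applies layer normalization (LN) and a residual connection around a multi-head self-attention (MSA) mechanism, followed by a position-wise feed-forward network (MLP):

$$Z'_l = \mathrm{MSA}(\mathrm{LN}(Z_{l-1})) + Z_{l-1}$$

$$Z_l = \mathrm{MLP}(\mathrm{LN}(Z'_l)) + Z'_l, \quad l = 1, \ldots, L$$

where $Z_0$ is the sequence of patch embeddings. The visual semantic feature vectors $F_v$ are derived from the last Transformer layer:

$$F_v = \mathrm{LN}(Z_L)$$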

Subsequently, an image caption model generates a textual description $T = (y_1, \ldots, y_m)$ for the SVI. The generation process is modeled as follows:

$$p(y_t \mid y_{<t}, X) = \mathrm{softmax}(W_o h_t + b_o)$$

where $h_t$ denotes the hidden state of the LSTM at time step t, $W_o$ represents the parameters of the output layer, and $b_o$ is the bias term.

The textual description $T$ is fed into a pre-trained BERT model, which encodes it into a semantic embedding. Let $f_{BERT}(\cdot)$ denote the BERT encoding function; the resulting vector representation is

$$F_t = f_{BERT}(T)$$

Following Yang et al. [28], a concatenation-based fusion strategy integrates the visual and visual-textual semantic features. The experimental results in Section 5.5 suggest the strategy is effective.

For each building b, let $S_b$ denote its corresponding SVI set. The final building-level feature $F_b$ is obtained by aggregating the features of all SVIs in $S_b$.
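The fusion and aggregation steps can be sketched as follows. Concatenation follows the strategy named above; the aggregation operator is not specified here, so mean pooling is used purely as an assumption. The function names and the toy feature values are hypothetical.

```python
def fuse(visual, textual):
    """Concatenate the visual and visual-textual feature vectors of one SVI."""
    return list(visual) + list(textual)


def aggregate_building(svi_features):
    """Mean-pool fused SVI features into one building-level vector.

    Mean pooling is an assumed aggregation operator.
    """
    if not svi_features:
        raise ValueError("a building needs at least one SVI")
    n = len(svi_features)
    return [sum(column) / n for column in zip(*svi_features)]


# Two SVIs of the same building, with toy 2-D visual and 1-D textual features.
fused = [fuse([1.0, 2.0], [3.0]), fuse([3.0, 4.0], [5.0])]
print(aggregate_building(fused))  # [2.0, 3.0, 4.0]
```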

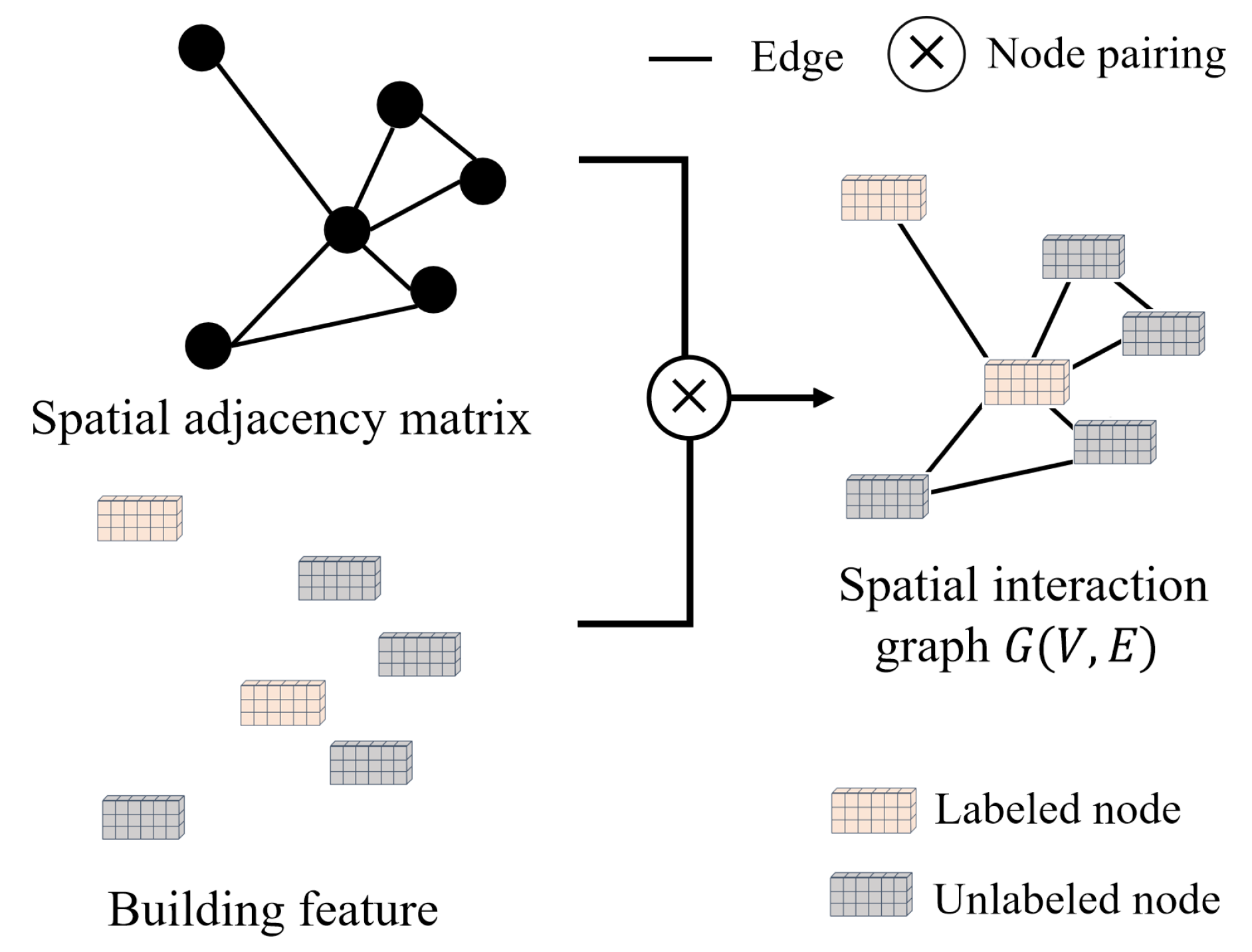

3.3. Semi-Supervised Spatial Interaction Module

A semi-supervised spatial interaction graph convolutional network (S³I-GCN) is designed to integrate multi-semantics with the spatial context between buildings. Specifically, a spatial weight matrix $M \in \mathbb{R}^{n \times n}$ is constructed to represent the spatial relationships among buildings, where n is the number of buildings. The matrix is defined as follows:

$$M = \begin{bmatrix} w_{11} & \cdots & w_{1n} \\ \vdots & \ddots & \vdots \\ w_{n1} & \cdots & w_{nn} \end{bmatrix}$$

where $w_{ij} \neq 0$ denotes that building $b_i$ is spatially related to building $b_j$, and $w_{ij}$ represents the spatial weight between them. The spatial weight is computed using a K-nearest neighbor (KNN) spatial weighting scheme [29], where $w_{ij}$ is inversely proportional to the distance between $b_i$ and $b_j$, typically defined as follows:

$$w_{ij} = \begin{cases} d_{ij}^{-\beta}, & b_j \in \mathrm{KNN}(b_i) \\ 0, & \text{otherwise} \end{cases}$$

where $d_{ij}$ is the distance between $b_i$ and $b_j$, and $\beta$ is a hyperparameter controlling the weight decay with distance. It is proportional to the scaling exponent of the spatial interaction and takes a value between 0 and 3 according to the spatial autocorrelation theory [30].
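A KNN inverse-distance weight matrix of this kind can be built in a few lines. The sketch below assumes planar building coordinates in metres (for example, after projection) and guards against co-located buildings with a small minimum distance; both choices, along with the function and parameter names, are assumptions.

```python
import math


def knn_spatial_weights(coords, k, beta, min_dist=1e-6):
    """Return an n x n matrix with w_ij = d_ij ** -beta for the k nearest
    neighbours of each building i, and 0 elsewhere."""
    n = len(coords)
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Distances from building i to every other building, nearest first.
        neighbours = sorted(
            (math.dist(coords[i], coords[j]), j) for j in range(n) if j != i
        )
        for dist, j in neighbours[:k]:
            weights[i][j] = max(dist, min_dist) ** -beta
    return weights


coords = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0)]
for row in knn_spatial_weights(coords, k=1, beta=1.0):
    print(row)
```

Note that KNN relations are not symmetric in general: here the third building selects the second as its nearest neighbour, but not the other way round. With `beta=0` the scheme reduces to binary KNN weights.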

As illustrated in Figure 4, once the spatial weight matrix M is established, a spatial interaction graph $G = (V, E)$ is constructed. Each node $v_i \in V$ corresponds to a building instance and is associated with a feature vector $F_i$, the building-level feature of that building. Each edge $e_{ij} \in E$ captures the spatial context between the corresponding building instances and is associated with the spatial weight $w_{ij}$.

The S³I-GCN network stacks three GCN layers to capture the relationships between nodes and their spatial interaction in graph $G$. Each layer learns node representations by aggregating features from neighboring nodes, as formulated in Equations (20) and (21):

$$H^{(l+1)} = \sigma\left(\hat{M} H^{(l)} \Theta^{(l)}\right) \tag{20}$$

$$\hat{M} = D^{-1/2} M D^{-1/2} \tag{21}$$

where $H^{(l)}$ is the node feature matrix at the l-th layer; $\Theta^{(l)}$ is the trainable weight matrix of that layer; $\sigma$ is a nonlinear activation function; M is the adjacency matrix of the graph, indicating the connections between nodes; D is the degree matrix of the graph; and $\hat{M}$ is the normalized adjacency matrix, obtained by normalizing the adjacency matrix M. In semi-supervised training, the graph structure enables the propagation of label information from labeled to unlabeled nodes through feature aggregation using the matrix $\hat{M}$.
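The layer-wise aggregation can be sketched as a NumPy forward pass. This is a minimal illustration under stated assumptions: symmetric degree normalization without added self-loops, ReLU on the hidden layers, and random stand-ins for the trained layer weights. The function names, feature sizes, and class count are hypothetical.

```python
import numpy as np


def normalize_adjacency(m):
    """Symmetric normalisation D^{-1/2} M D^{-1/2}, D = diag(row sums)."""
    m = np.asarray(m, dtype=float)
    degree = m.sum(axis=1)
    inv_sqrt = np.zeros_like(degree)
    nonzero = degree > 0
    inv_sqrt[nonzero] = degree[nonzero] ** -0.5
    return inv_sqrt[:, None] * m * inv_sqrt[None, :]


def gcn_forward(m_hat, features, layer_weights):
    """Stacked GCN layers: H <- act(M_hat @ H @ Theta), linear last layer."""
    h = np.asarray(features, dtype=float)
    for index, theta in enumerate(layer_weights):
        h = m_hat @ h @ theta
        if index < len(layer_weights) - 1:
            h = np.maximum(h, 0.0)  # ReLU on hidden layers only
    return h


rng = np.random.default_rng(0)
m = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)   # toy spatial weight matrix
features = rng.random((4, 8))               # 4 buildings, 8-D features
sizes = [8, 16, 16, 5]                      # three layers, 5 assumed classes
thetas = [rng.normal(scale=0.1, size=(a, b)) for a, b in zip(sizes, sizes[1:])]

logits = gcn_forward(normalize_adjacency(m), features, thetas)
print(logits.shape)  # (4, 5)
```

Because every layer multiplies by the normalized matrix, each building's output depends on neighbours up to three hops away, which is how unlabeled nodes receive signal from labeled ones during semi-supervised training.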