LA-GATs: A Multi-Feature Constrained and Spatially Adaptive Graph Attention Network for Building Clustering

Abstract

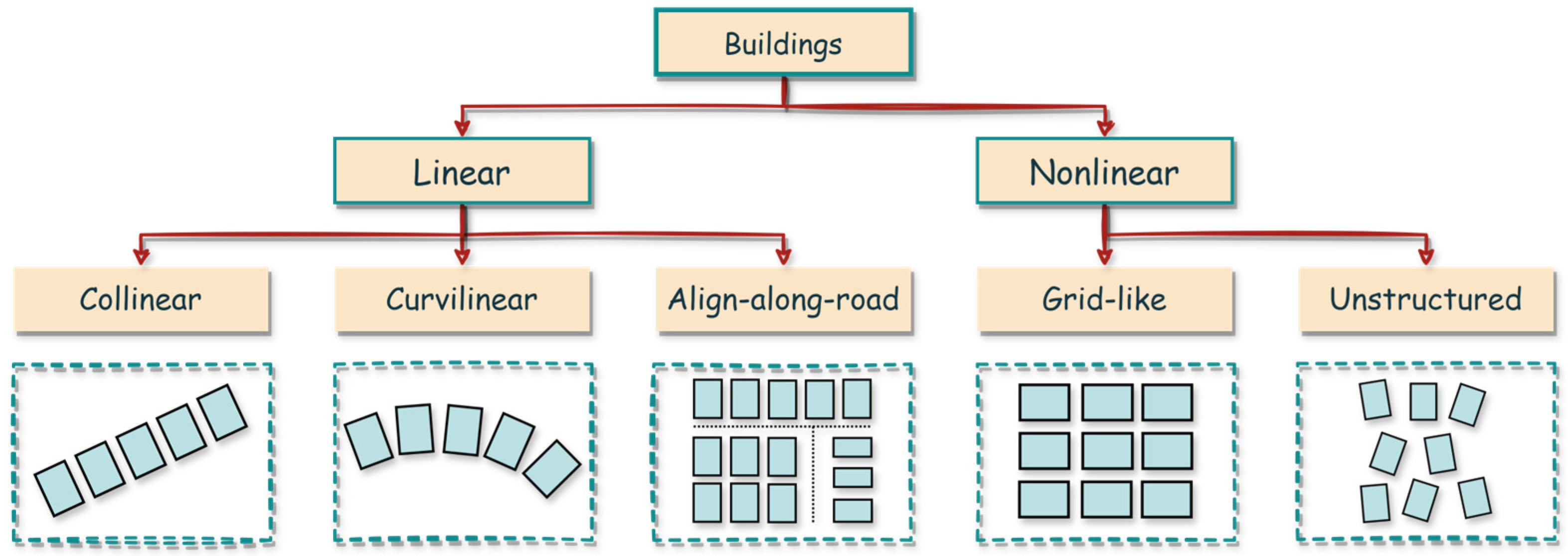

1. Introduction

2. Related Work

3. Materials and Methods

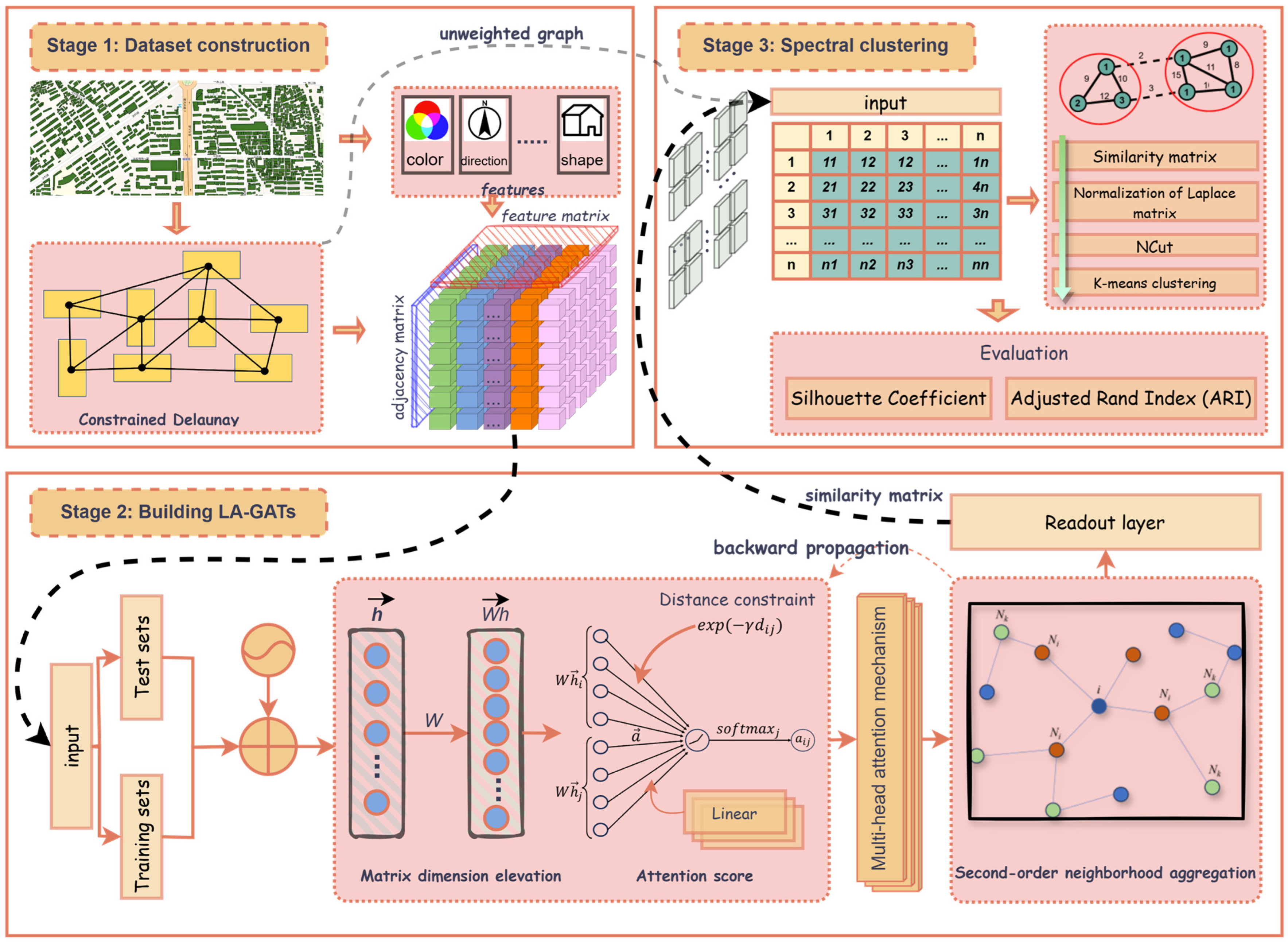

- Step 1: Building the dataset. The geometric, appearance, and spatial attributes of each building are encoded into feature vectors to construct the initial feature matrix. To limit the computational cost of feature extraction, this study selects four features for training: compactness, orientation, color, and height. Previous research has shown that these features align with Gestalt principles and, when applied to building clustering, provide visual perceptual constraints that help identify building distribution patterns more accurately [9,23,24,28]. Heterogeneous pairwise matrices (e.g., Euclidean distances, orientation angle differences, and color histogram differences) are then computed to quantify the multi-dimensional relationships between buildings.

- Step 2: Building the feature similarity matrix. The feature matrix is passed as input to the Graph Attention Network (GAT). During learning of the attention coefficients, a distance bias term (a distance decay factor) is incorporated to preserve the spatial continuity of the building distribution. A multi-head attention mechanism is adopted to improve model accuracy and stabilize the feature representation. A second-order neighborhood aggregation strategy is also used to limit the propagation of errors caused by imperfections in the Delaunay triangulation.

- Step 3: Spectral clustering. The similarity matrix produced in Step 2 serves as the input to spectral clustering. The optimal number of clusters is determined using the elbow method [37]. Clustering is then performed by eigen-decomposition of the normalized Laplacian matrix.
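The pairwise matrices in Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions:

- Each building is summarized by a centroid, an orientation angle in degrees, and a normalized color histogram.
- Orientation is treated as an axis, so angles are taken modulo 180°.
- Color difference is half the L1 distance between histograms.

The helper name `pairwise_feature_matrices` and these metric choices are hypothetical. They are not the paper's exact formulation.

```python
import numpy as np

def pairwise_feature_matrices(centroids, orientations_deg, color_hists):
    """Return (euclidean, angle_diff, hist_diff) matrices, each of shape (n, n).

    Hypothetical helper: centroids is (n, 2), orientations_deg is (n,),
    color_hists is (n, b) with each row summing to 1.
    """
    c = np.asarray(centroids, dtype=float)
    # Euclidean distance between building centroids
    diff = c[:, None, :] - c[None, :, :]
    euclid = np.sqrt((diff ** 2).sum(axis=-1))

    # Orientation is axial (0 and 180 degrees describe the same axis),
    # so the absolute difference is folded into the range [0, 90]
    o = np.asarray(orientations_deg, dtype=float) % 180.0
    d = np.abs(o[:, None] - o[None, :])
    angle = np.minimum(d, 180.0 - d)

    # Color histogram difference as half the L1 distance, which lies in [0, 1]
    h = np.asarray(color_hists, dtype=float)
    hist = 0.5 * np.abs(h[:, None, :] - h[None, :, :]).sum(axis=-1)
    return euclid, angle, hist
```

Folding the angle difference matters for elongated buildings: headings of 10° and 170° describe nearly parallel footprints. The folded difference is 20°, not 160°.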

3.1. Feature Design for Buildings

3.2. Building Feature Extraction

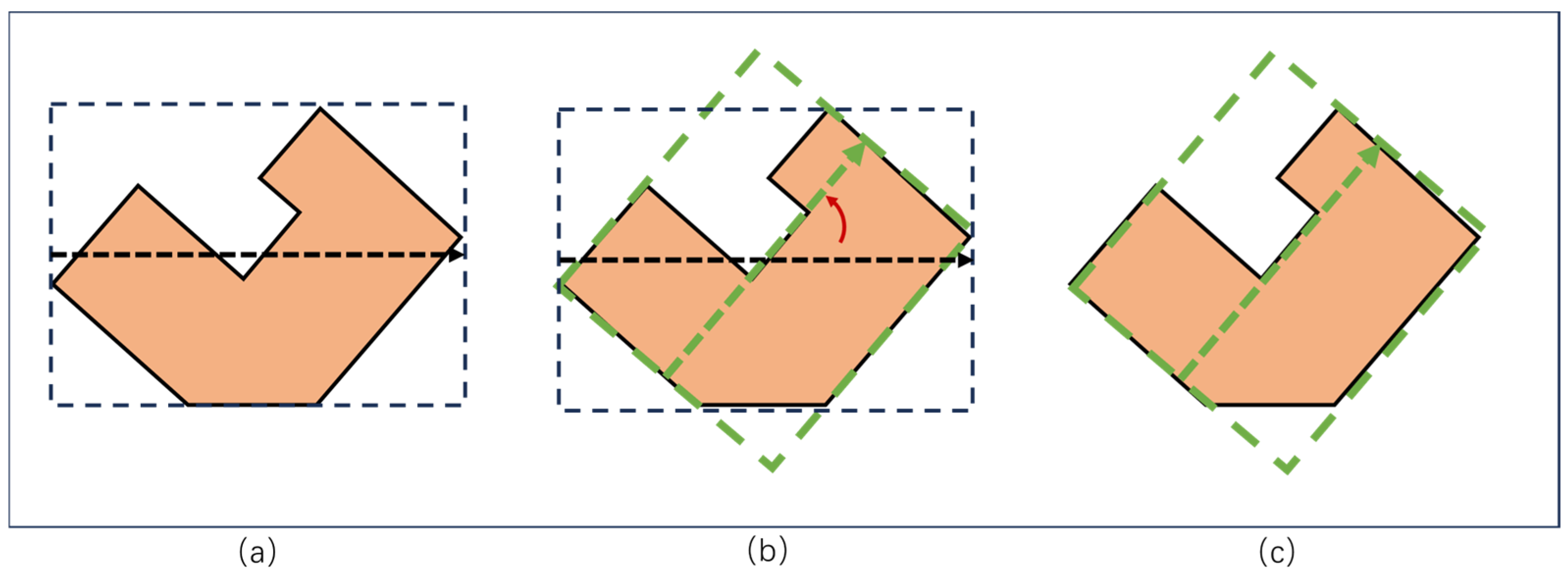

3.2.1. Orientation Feature

3.2.2. Compactness Feature

3.2.3. Other Features

3.3. Spatial Relationship Modeling

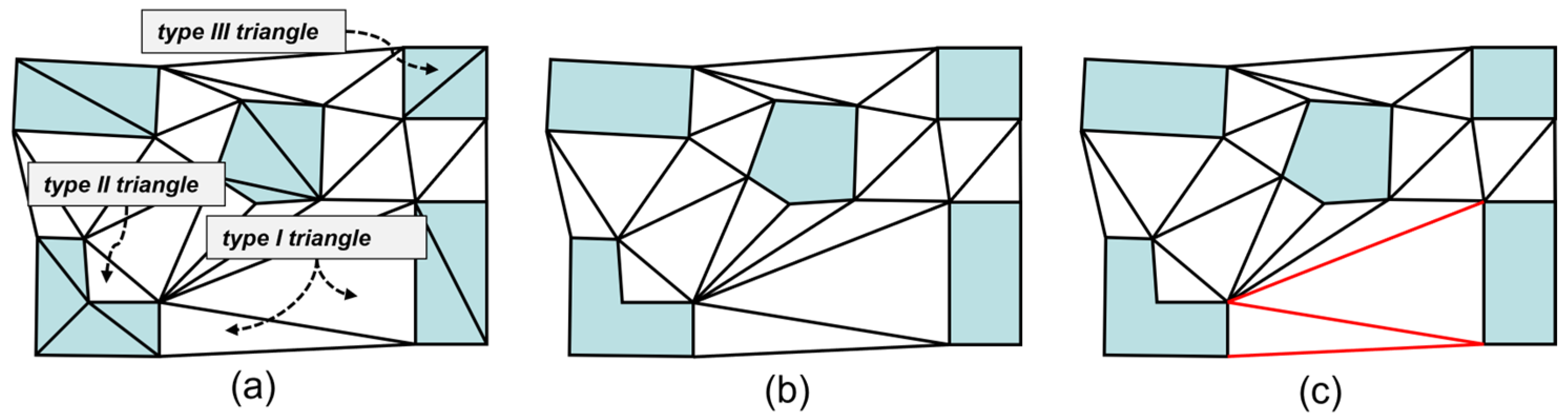

3.3.1. Constrained Delaunay Triangulation

- Step 1: Densify the polygon boundaries by interpolating additional vertices along each boundary edge;

- Step 2: Construct the Delaunay triangulation;

- Step 3: Embed the constraint edges to obtain a constrained Delaunay triangulation, which refines the triangulation.
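The boundary densification in Step 1 can be sketched as inserting evenly spaced vertices on every edge longer than a maximum spacing. This is a plain-Python sketch that assumes each building footprint is given as a closed ring of (x, y) vertices. The name `densify_ring` and the parameter `max_spacing` are hypothetical, because the interpolation interval used in the paper is not given here.

```python
import math

def densify_ring(ring, max_spacing):
    """Insert interpolated vertices so that no edge of a closed ring exceeds max_spacing.

    ring: list of (x, y) vertices, without repeating the first vertex at the end.
    Original vertices are kept; new ones are spaced evenly along each edge.
    """
    if max_spacing <= 0:
        raise ValueError("max_spacing must be positive")
    out = []
    n = len(ring)
    for i in range(n):
        x0, y0 = ring[i]
        x1, y1 = ring[(i + 1) % n]  # wrap around to close the ring
        length = math.hypot(x1 - x0, y1 - y0)
        # number of sub-segments needed so each piece is at most max_spacing long
        k = max(1, math.ceil(length / max_spacing))
        for j in range(k):
            t = j / k
            out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out
```

For example, densifying a 10 × 10 square with `max_spacing=5` yields 8 vertices, adding one midpoint per edge. Denser boundaries give the subsequent Delaunay triangulation more evenly sized triangles between neighboring buildings.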

3.3.2. Computing the Nearest Distance

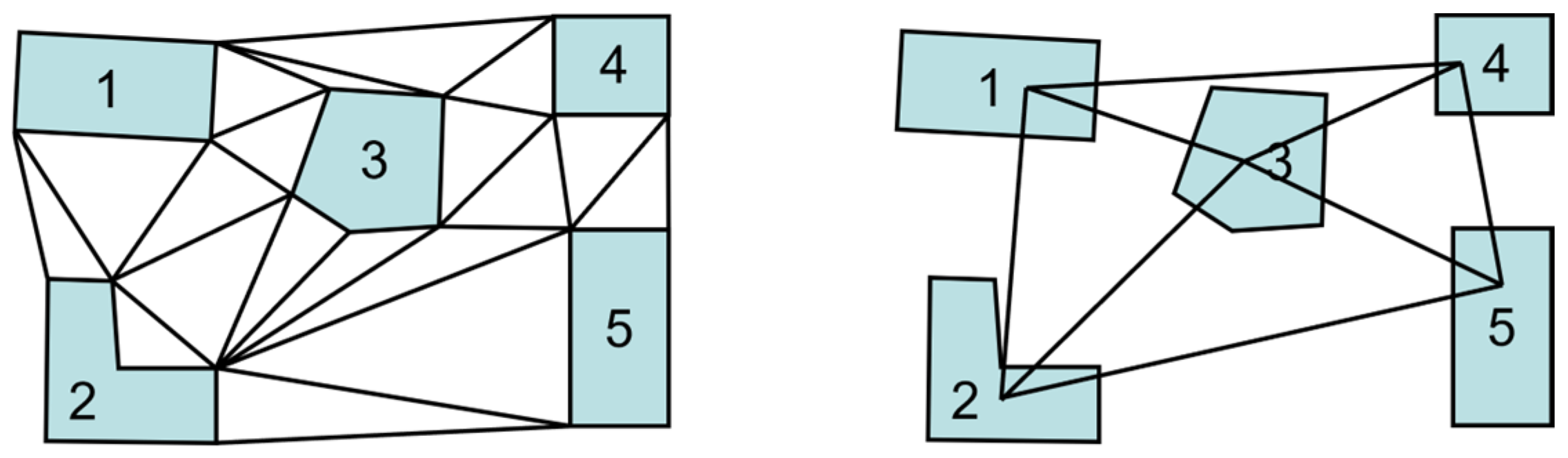
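For two disjoint building footprints, the nearest distance in Section 3.3.2 can be computed as the minimum over all vertex-to-edge distances, taken in both directions. This works because the closest pair of points between two non-crossing segments always includes an endpoint of one of them.

The sketch below assumes simple, non-overlapping polygons given as vertex lists. The function names are hypothetical, and the paper's own procedure (for example, one derived from the triangulation) may differ.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from point p to segment ab."""
    px, py = p
    ax, ay = a
    bx, by = b
    dx, dy = bx - ax, by - ay
    seg_len2 = dx * dx + dy * dy
    if seg_len2 == 0.0:
        return math.hypot(px - ax, py - ay)  # degenerate segment
    # projection parameter of p onto the line, clamped to the segment
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len2))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def nearest_distance(poly_a, poly_b):
    """Minimum distance between two disjoint simple polygons (vertex lists, no closing repeat)."""
    def edges(poly):
        return [(poly[i], poly[(i + 1) % len(poly)]) for i in range(len(poly))]

    best = math.inf
    for p in poly_a:                 # vertices of A against edges of B
        for a, b in edges(poly_b):
            best = min(best, point_segment_distance(p, a, b))
    for p in poly_b:                 # vertices of B against edges of A
        for a, b in edges(poly_a):
            best = min(best, point_segment_distance(p, a, b))
    return best
```

This brute-force version costs O(mn) for polygons with m and n vertices. That is acceptable for building footprints, but it returns a positive value for overlapping polygons, so it should only be applied to disjoint ones.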

3.3.3. Construction of the Nearest-Neighbor Graph

3.3.4. Construction of the Feature Matrix

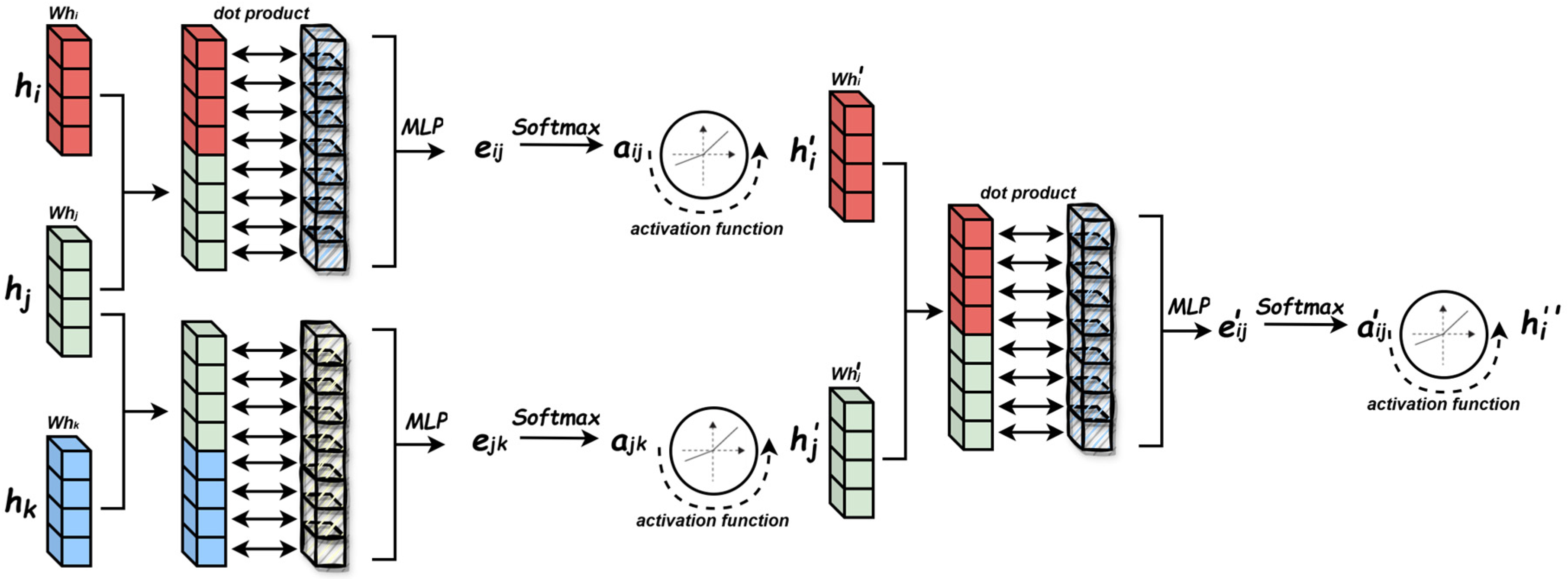

3.4. Optimized Graph Attention Network Architecture

3.4.1. Graph Attention Network

1. Attention Coefficient Computation

2. Attention Coefficient Computation

3. Multi-Head Attention
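The three steps above follow the standard GAT formulation [15]:

- Node features are linearly projected.
- Each neighbor pair is scored with a LeakyReLU over a shared attention vector.
- The scores are normalized with a softmax over each node's neighborhood.
- Several independent heads are concatenated in hidden layers or averaged in the output layer.

The NumPy sketch below uses the LeakyReLU slope of 0.2 and the ELU nonlinearity from the original GAT paper. The function names are hypothetical. The LA-GATs extensions (distance bias, second-order neighborhood) are deliberately left out here.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    """LeakyReLU used to score node pairs (negative slope 0.2 as in GAT)."""
    return np.where(x > 0, x, slope * x)

def gat_head(H, A, W, a, slope=0.2):
    """One attention head.

    H: (n, f_in) node features; A: (n, n) 0/1 adjacency (self-loops are added here);
    W: (f_in, f_out) projection; a: (2 * f_out,) attention vector.
    Returns the aggregated features (n, f_out) and the attention matrix alpha (n, n).
    """
    n = H.shape[0]
    Z = H @ W                                    # z_i = W h_i
    f_out = W.shape[1]
    # a^T [z_i || z_j] splits into a_left . z_i + a_right . z_j
    src = Z @ a[:f_out]
    dst = Z @ a[f_out:]
    e = leaky_relu(src[:, None] + dst[None, :], slope)
    mask = (np.asarray(A) + np.eye(n)) > 0       # attend to neighbours and self only
    e = np.where(mask, e, -np.inf)
    e = e - e.max(axis=1, keepdims=True)         # numerical stability
    w = np.exp(e)                                # exp(-inf) = 0 for non-neighbours
    alpha = w / w.sum(axis=1, keepdims=True)     # softmax over each neighbourhood
    return alpha @ Z, alpha

def multi_head_gat(H, A, heads, concat=True):
    """Apply independent heads; concatenate with ELU (hidden layer) or average (output layer).

    heads: list of (W, a) parameter pairs, one per head.
    """
    outs = [gat_head(H, A, W, a)[0] for W, a in heads]
    if concat:
        return np.concatenate(
            [np.where(o > 0, o, np.expm1(np.minimum(o, 0))) for o in outs], axis=1
        )
    return np.mean(outs, axis=0)
```

With K heads of output width F, concatenation yields K·F features per building. Averaging keeps F features, which is the usual choice for a final layer.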

3.4.2. Second-Order Neighborhood Aggregation
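One way to read "second-order neighborhood" is to let each building aggregate from every node at most two hops away in the nearest-neighbor graph, so that a single missing triangulation edge does not isolate a building. The sketch below builds that two-hop mask and applies a plain mean aggregation over it. The names are hypothetical, and the paper's adaptive weighting between first- and second-order neighbors is not reproduced.

```python
import numpy as np

def second_order_mask(A):
    """Boolean (n, n) mask of node pairs at most two hops apart, self included.

    A: (n, n) symmetric adjacency matrix of the nearest-neighbour graph.
    """
    A1 = (np.asarray(A) > 0).astype(int)
    n = A1.shape[0]
    reach = np.eye(n, dtype=int) + A1 + A1 @ A1  # A @ A counts two-hop paths
    return reach > 0

def second_order_mean(H, A):
    """Average each node's features over its two-hop neighbourhood (illustrative only)."""
    M = second_order_mask(A).astype(float)
    return (M / M.sum(axis=1, keepdims=True)) @ np.asarray(H, dtype=float)
```

On a chain of four buildings 0-1-2-3, building 0 can now reach building 2 but still not building 3. This shows how the receptive field grows by exactly one extra hop.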

3.4.3. Distance-Constrained Attention Mechanism
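A distance bias term can be sketched as a penalty λ·d_ij subtracted from the raw attention logits before the neighborhood softmax. Doing this in logit space is equivalent to multiplying each unnormalized weight by exp(−λ·d_ij), an exponential distance-decay factor. The exact functional form and the decay rate used in LA-GATs are not given here, so the name `distance_biased_attention` and the parameter `lam` are hypothetical.

```python
import numpy as np

def distance_biased_attention(scores, dist, mask, lam=0.1):
    """Softmax over allowed neighbours of raw logits minus a distance penalty.

    scores: (n, n) raw attention logits e_ij (e.g. from a GAT head)
    dist:   (n, n) inter-building distances d_ij
    mask:   (n, n) boolean, True where j may attend-to from i (must include i itself)
    lam:    hypothetical decay rate; lam = 0 recovers the unbiased softmax
    """
    logits = np.where(mask, scores - lam * dist, -np.inf)
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    w = np.exp(logits)                                   # equals exp(e_ij) * exp(-lam * d_ij), rescaled
    return w / w.sum(axis=1, keepdims=True)
```

With equal raw scores, nearer buildings receive larger attention weights. This is how the bias encodes spatial autocorrelation.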

3.5. Clustering Result Generation and Evaluation

3.5.1. Spectral Clustering

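- Step 1: Compute the degree matrix $D$, a diagonal matrix whose diagonal elements are the row sums of the similarity matrix $S$: $D_{ii} = \sum_{j=1}^{n} S_{ij}$.

- Step 2: Construct the normalized Laplacian matrix in its symmetric form: $L_{\mathrm{sym}} = I - D^{-1/2} S D^{-1/2}$, where $D^{-1/2}$ is a diagonal matrix with elements $(D^{-1/2})_{ii} = 1/\sqrt{D_{ii}}$.

- Step 3: Perform eigen-decomposition on $L_{\mathrm{sym}}$ to obtain the first $k$ eigenvectors $u_1, u_2, \ldots, u_k$ corresponding to its $k$ smallest eigenvalues. Form the matrix $U = [u_1, u_2, \ldots, u_k] \in \mathbb{R}^{n \times k}$ whose columns are these eigenvectors. Normalize each row of $U$ to unit length ($\ell_2$ normalization) to obtain the matrix $T$: $T_{ij} = U_{ij} / \left(\sum_{l=1}^{k} U_{il}^{2}\right)^{1/2}$.

- Step 4: Treat each row of $T$ as a point in $\mathbb{R}^k$ and perform K-means clustering to produce the final partition $\{C_1, C_2, \ldots, C_k\}$.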
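Steps 1 to 4 correspond to normalized spectral clustering with the symmetric Laplacian and row-normalized embedding, as described in [29]. The NumPy sketch below assumes a symmetric, non-negative similarity matrix `S` with no isolated buildings (every row sum positive). For reproducibility it uses a plain Lloyd's k-means with deterministic farthest-point initialization. That is a simplification: practical implementations usually use k-means++ with several restarts. Function names are hypothetical.

```python
import numpy as np

def spectral_clustering(S, k, n_iter=100):
    """Normalized spectral clustering on a similarity matrix S with k clusters."""
    S = np.asarray(S, dtype=float)
    d = S.sum(axis=1)                                   # Step 1: degrees D_ii
    d_inv_sqrt = 1.0 / np.sqrt(d)                       # assumes every d > 0
    # Step 2: L_sym = I - D^{-1/2} S D^{-1/2}
    L = np.eye(len(S)) - d_inv_sqrt[:, None] * S * d_inv_sqrt[None, :]
    _, vecs = np.linalg.eigh(L)                         # eigenvalues in ascending order
    U = vecs[:, :k]                                     # Step 3: k smallest eigenvectors
    T = U / np.linalg.norm(U, axis=1, keepdims=True)    # row-wise l2 normalisation
    return kmeans(T, k, n_iter)                         # Step 4

def kmeans(X, k, n_iter=100):
    """Lloyd's k-means with deterministic farthest-point initialisation."""
    centers = [X[0]]
    for _ in range(1, k):
        # pick the point farthest from all centres chosen so far
        dist = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(dist)])
    centers = np.array(centers)
    for _ in range(n_iter):
        labels = np.argmin(((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1), axis=1)
        new = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                        for j in range(k)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels
```

On two dense groups of three buildings joined by a weak similarity of 0.01, the second eigenvector separates the groups. Each group then collapses to a single point in the row-normalized embedding, and k-means recovers the two groups.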

3.5.2. Design of Clustering Evaluation Metrics

1. Cluster Compactness

2. Silhouette Coefficient

3. Adjusted Rand Index (ARI)
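Two of the three metrics have standard definitions, which the sketch below implements from scratch:

- The silhouette coefficient [58] compares each building's mean distance to its own cluster (a) with its mean distance to the nearest other cluster (b), as s = (b − a)/max(a, b).
- ARI [60] corrects the Rand index for chance agreement using the contingency table of the two partitions.

The paper's exact definition of cluster compactness is not reproduced here, so it is not sketched. The sketch assumes Euclidean distance and at least two clusters for the silhouette. Singleton clusters score 0 by Rousseeuw's convention. Function names are hypothetical.

```python
import numpy as np
from math import comb

def silhouette(X, labels):
    """Mean silhouette coefficient with Euclidean distance (needs >= 2 clusters)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    clusters = np.unique(labels)
    s = np.zeros(len(X))
    for i in range(len(X)):
        same = labels == labels[i]
        if same.sum() == 1:
            continue                                   # singleton: s(i) = 0
        a = D[i, same].sum() / (same.sum() - 1)        # exclude the zero self-distance
        b = min(D[i, labels == c].mean() for c in clusters if c != labels[i])
        s[i] = (b - a) / max(a, b)
    return s.mean()

def adjusted_rand_index(true, pred):
    """ARI (Hubert and Arabie) computed from the contingency table of two partitions."""
    true = np.asarray(true)
    pred = np.asarray(pred)
    _, ti = np.unique(true, return_inverse=True)
    _, pi = np.unique(pred, return_inverse=True)
    table = np.zeros((ti.max() + 1, pi.max() + 1), dtype=int)
    np.add.at(table, (ti, pi), 1)                      # count co-assigned pairs per cell
    sum_ij = sum(comb(int(v), 2) for v in table.ravel())
    sum_a = sum(comb(int(v), 2) for v in table.sum(axis=1))
    sum_b = sum(comb(int(v), 2) for v in table.sum(axis=0))
    expected = sum_a * sum_b / comb(len(true), 2)
    max_index = (sum_a + sum_b) / 2
    return (sum_ij - expected) / (max_index - expected)
```

ARI is invariant to label permutation: relabeling clusters {0, 1} as {1, 0} still gives 1.0. It is undefined when both partitions are trivial (for example, everything in one cluster), because the denominator becomes zero.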

4. Results

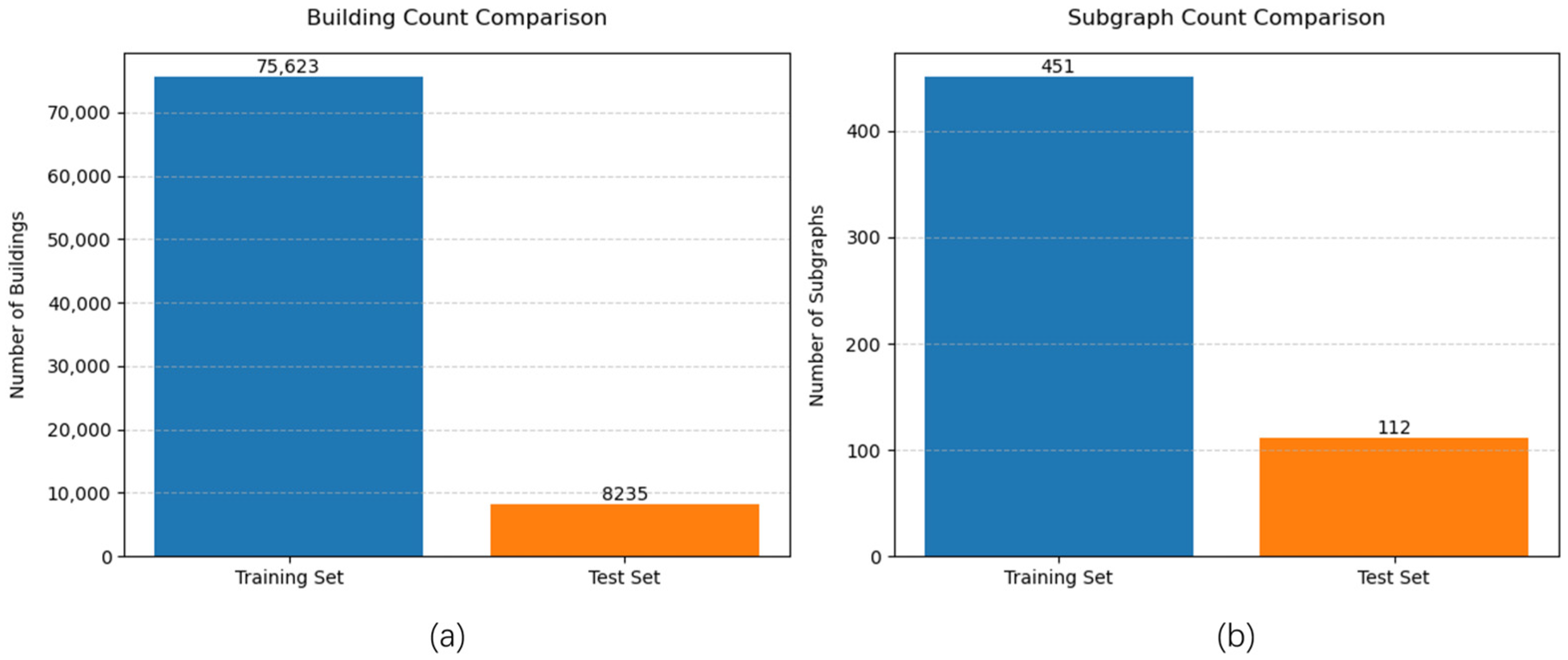

4.1. Data Collection and Analysis

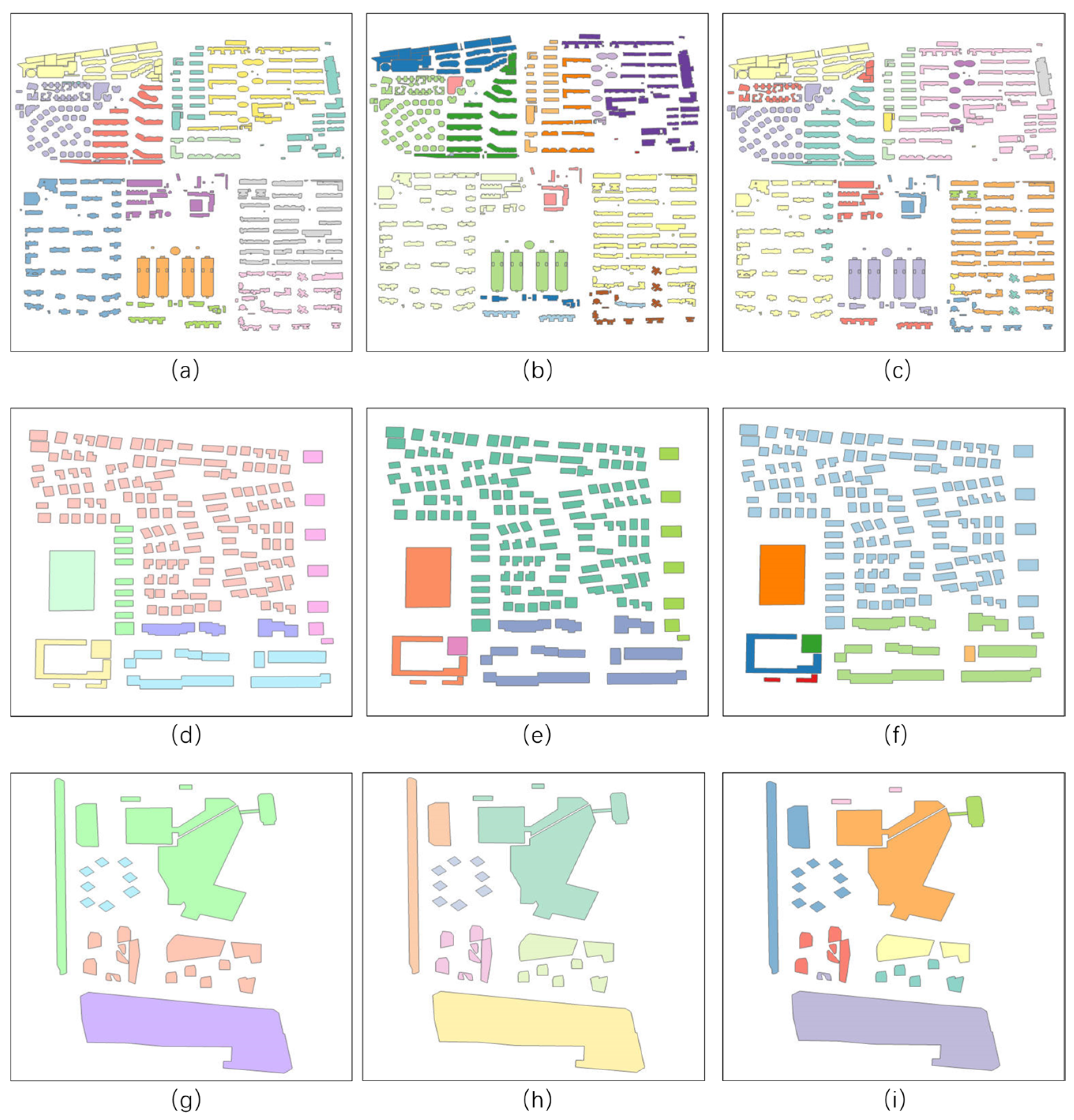

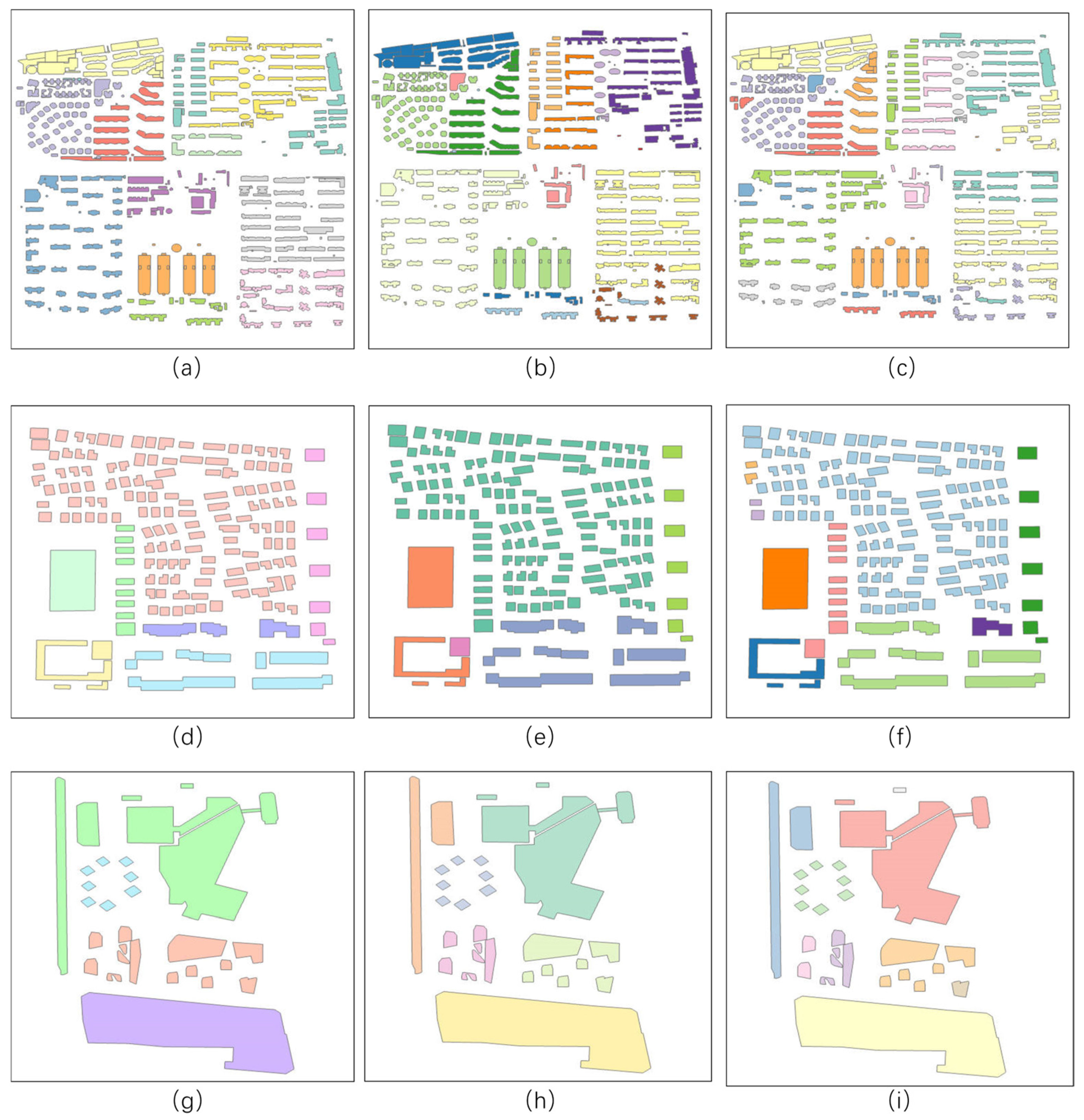

- Region 1: Qujiang New District, Xi’an (34.1990° N, 108.9595° E to 34.1893° N, 108.9704° E)—369 buildings, dense commercial zone.

- Region 2: Residential area near Beijing Normal University High School, Daxing District, Beijing (39.7759° N, 116.3164° E to 39.7717° N, 116.3212° E)—168 buildings, medium-density residential area.

- Region 3: Huiju Shopping Mall area, Xihongmen, Daxing District, Beijing (39.79002° N, 116.3142° E to 39.77836° N, 116.3246° E)—31 buildings, low-density irregular distribution.

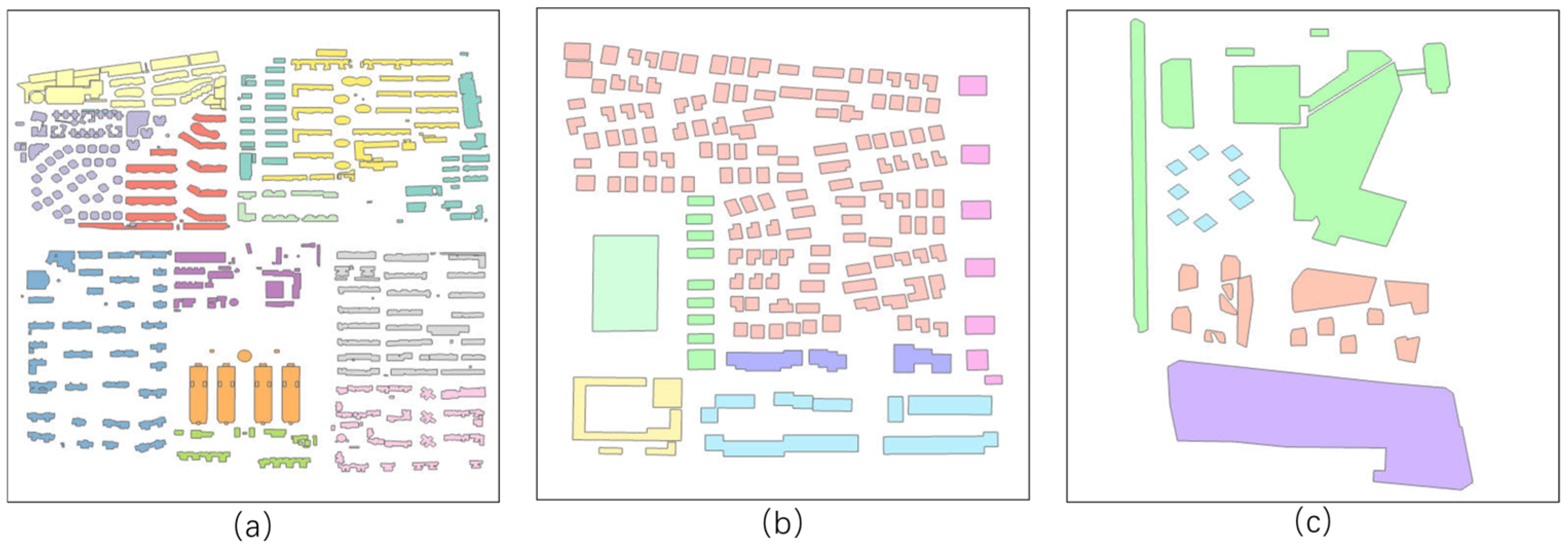

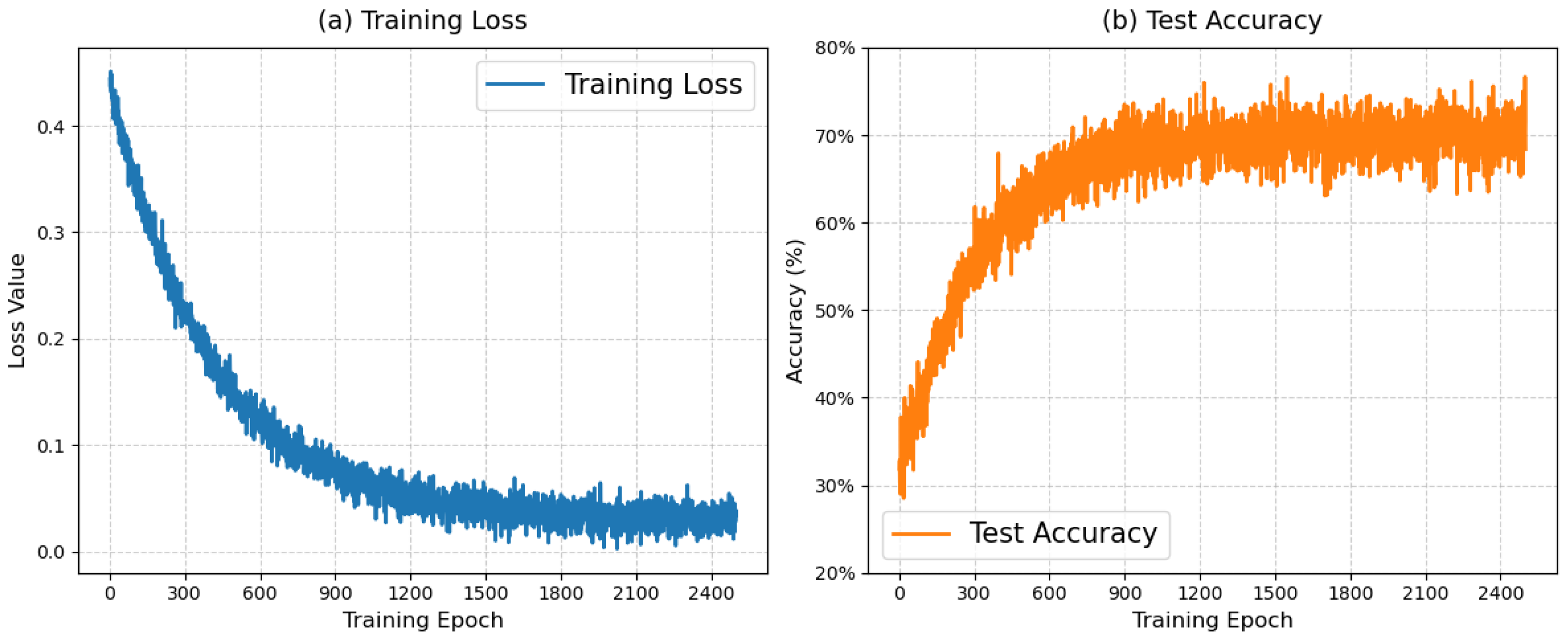

4.2. LA-GATs Clustering Results

4.3. Component Contribution Analysis

4.3.1. Impact of Distance Bias Term

4.3.2. Impact of Second-Order Neighborhood Aggregation

4.4. Comparison with Multiple Clustering Algorithms

5. Conclusions

- Extension and optimization of GATs-based building clustering—enhancing the model architecture to better capture spatial and semantic relationships among buildings.

- Incorporation of spatially constrained attention—introducing a distance bias term to explicitly model spatial autocorrelation. Experiments conducted in Xi’an and Beijing show notable improvements in clustering evaluation metrics with this mechanism.

- Adaptive second-order neighborhood aggregation strategy—expanding the receptive field of feature propagation to improve the recognition of building group patterns. The ARI value reflects the consistency between clustering results and true labels, serving as an accuracy metric for clustering outcomes [55,56]. This strategy improves clustering accuracy by approximately 21% over existing clustering methods in residential areas, while maintaining the separability of distinct functional zones.

- The current methods primarily focus on the features of each building; future work could incorporate block-level or city-scale contextual information to enhance the recognition of complex spatial patterns.

- The methodology proposed in this study is designed primarily for typical urban built environments, including residential, commercial, and mixed-use areas. In environments with distinctive architectural characteristics (e.g., contiguous historic districts or ancient architectural complexes), material distribution, color application, and structural forms may deviate significantly from conventional urban textures. Expanding the set of building features, the feature calculation methods, or the feature categories (e.g., semantically related features) may improve the training outcomes of GATs. We identify this as a priority topic for future research.

- The integration of advanced machine learning architectures such as Transformer and LSTM could further refine the attention mechanism, enabling improved building clustering and citywide spatial distribution prediction.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Yu, W.; Ai, T.; Liu, P.; Cheng, X. The Analysis and Measurement of Building Patterns Using Texton Co-Occurrence Matrices. Int. J. Geogr. Inf. Sci. 2017, 31, 1079–1100.

- He, X.; Deng, M.; Luo, G. Recognizing Building Group Patterns in Topographic Maps by Integrating Building Functional and Geometric Information. ISPRS Int. J. Geo-Inf. 2022, 11, 332.

- Yan, X.; Ai, T.; Zhang, X. Template Matching and Simplification Method for Building Features Based on Shape Cognition. ISPRS Int. J. Geo-Inf. 2017, 6, 250.

- Xing, R.; Wu, F.; Gong, X.; Du, J.; Liu, C. An Axis-Matching Approach to Combined Collinear Pattern Recognition for Urban Building Groups. Geocarto Int. 2022, 37, 4823–4842.

- Hu, Y.; Liu, C.; Li, Z.; Xu, J.; Han, Z.; Guo, J. Few-Shot Building Footprint Shape Classification with Relation Network. ISPRS Int. J. Geo-Inf. 2022, 11, 311.

- Chen, G.; Qian, H. Building clustering method that integrates graph attention networks and spectral clustering. Geocarto Int. 2025, 40, 2471091.

- Deng, M.; Tang, J.; Liu, Q.; Wu, F. Recognizing building groups for generalization: A comparative study. Cartogr. Geogr. Inf. Sci. 2018, 45, 187–204.

- Li, Z.; Yan, H.; Ai, T.; Chen, J. Automated building generalization based on urban morphology and Gestalt theory. Int. J. Geogr. Inf. Sci. 2004, 18, 513–534.

- Ai, T.; Guo, R. Polygon cluster pattern mining based on gestalt principles. Acta Geod. Cartogr. Sin. 2007, 36, 302–308.

- Liu, H.; Wang, W.; Tang, J.; Deng, M.; Ding, C. A Building Group Recognition Method Integrating Spatial and Semantic Similarity. ISPRS Int. J. Geo-Inf. 2025, 14, 154.

- Qi, H.B.; Li, Z.L. An Approach to Building Grouping Based on Hierarchical Constraints. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 2008, 449–454.

- Boffet, A.; Serra, S.R. Identification of spatial structures within urban blocks for town characterization. In Proceedings of the 20th International Cartographic Conference, Beijing, China, 6–10 August 2001; Volume 3, pp. 1974–1983.

- Ezugwu, A.E.; Ikotun, A.M.; Oyelade, O.O.; Abualigah, L.; Agushaka, J.O.; Eke, C.I.; Akinyelu, A.A. A comprehensive survey of clustering algorithms: State-of-the-art machine learning applications, taxonomy, challenges, and future research prospects. Eng. Appl. Artif. Intell. 2022, 110, 104743.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30, 5998–6008.

- Veličković, P.; Cucurull, G.; Casanova, A.; Romero, A.; Lio, P.; Bengio, Y. Graph attention networks. arXiv 2017, arXiv:1710.10903.

- Liu, P.; Shao, Z.; Xiao, T. Second-order texton feature extraction and pattern recognition of building polygon cluster using CNN network. Int. J. Appl. Earth Obs. Geoinf. 2024, 129, 103794.

- Gao, A.; Lin, J. ConstellationNet: Reinventing Spatial Clustering through GNNs. arXiv 2025, arXiv:2503.07643.

- Tsitsulin, A.; Palowitch, J.; Perozzi, B.; Müller, E. Graph clustering with graph neural networks. J. Mach. Learn. Res. 2023, 24, 1–21.

- Kong, B.; Ai, T.; Zou, X.; Yan, X.; Yang, M. A graph-based neural network approach to integrate multi-source data for urban building function classification. Comput. Environ. Urban Syst. 2024, 110, 102094.

- Ai, T.; Liu, Y. A method of point cluster simplification with spatial distribution properties preserved. Acta Geod. Cartogr. Sin. 2002, 31, 175–181.

- Rodriguez, A.; Laio, A. Clustering by fast search and find of density peaks. Science 2014, 344, 1492–1496.

- Liu, H.; Hu, W.; Tang, J.; Shi, Y.; Deng, M. A method for recognizing building clusters by considering functional features of buildings. Acta Geod. Cartogr. Sin. 2020, 49, 622.

- Jin, C.; An, X.; Chen, Z.; Ma, X. A multi-level graph partition clustering method of vector residential area polygon. Geomat. Inf. Sci. Wuhan Univ. 2021, 46, 19–29.

- Yan, H.; Weibel, R.; Yang, B. A multi-parameter approach to automated building grouping and generalization. Geoinformatica 2008, 12, 73–89.

- Gao, X.; Yan, H.; Lu, X. Semantic similarity measurement for building polygon aggregation in multi-scale map space. Acta Geod. Cartogr. Sin. 2022, 51, 95.

- Regnauld, N. Contextual building typification in automated map generalization. Algorithmica 2001, 30, 312–333.

- Regnauld, N. Spatial structures to support automatic generalization. In Proceedings of the XXII Int. Cartographic Conference, A Coruña, Spain, 9–16 July 2005.

- Sun, D.; Shen, T.; Yang, X.; Huo, L.; Kong, F. Research on a Multi-Scale Clustering Method for Buildings Taking into Account Visual Cognition. Buildings 2024, 14, 3310.

- Von Luxburg, U. A tutorial on spectral clustering. Stat. Comput. 2007, 17, 395–416.

- Paatero, P.; Tapper, U. Positive matrix factorization: A non-negative factor model with optimal utilization of error estimates of data values. Environmetrics 1994, 5, 111–126.

- He, Z.; Xie, S.; Zdunek, R.; Zhou, G.; Cichocki, A. Symmetric nonnegative matrix factorization: Algorithms and applications to probabilistic clustering. IEEE Trans. Neural Netw. 2011, 22, 2117–2131.

- Tang, F.; Wang, C.; Su, J.; Wang, Y. Spectral clustering-based community detection using graph distance and node attributes. Comput. Stat. 2020, 35, 69–94.

- Zhang, X.; Liu, H.; Wu, X.-M.; Zhang, X.; Liu, X. Spectral embedding network for attributed graph clustering. Neural Netw. 2021, 142, 388–396.

- Kang, Z.; Lin, Z.; Zhu, X.; Xu, W. Structured graph learning for scalable subspace clustering: From single view to multiview. IEEE Trans. Cybern. 2021, 52, 8976–8986.

- Berahmand, K.; Mohammadi, M.; Faroughi, A.; Mohammadiani, R.P. A novel method of spectral clustering in attributed networks by constructing parameter-free affinity matrix. Clust. Comput. 2022, 25, 869–888.

- Yan, X.; Ai, T.; Yang, M.; Tong, X.; Liu, Q. A graph deep learning approach for urban building grouping. Geocarto Int. 2022, 37, 2944–2966.

- Shi, C.; Wei, B.; Wei, S.; Wang, W.; Liu, H.; Liu, J. A quantitative discriminant method of elbow point for the optimal number of clusters in clustering algorithm. EURASIP J. Wirel. Commun. Netw. 2021, 2021, 31.

- Köhler, W. Gestalt psychology. Psychol. Forsch. 1967, 31, XVIII–XXX.

- Zhang, L.; Wang, G.; Sun, W. Automatic extraction of building geometries based on centroid clustering and contour analysis on oblique images taken by unmanned aerial vehicles. Int. J. Geogr. Inf. Sci. 2022, 36, 453–475.

- Duchêne, C.; Bard, S.; Barillot, X.; Ruas, A.; Trevisan, J.; Holzapfel, F. Quantitative and qualitative description of building orientation. In Proceedings of the Fifth Workshop on Progress in Automated Map Generalisation, Paris, France, 28–30 April 2003.

- Zhang, X.; Ai, T.; Stoter, J. Characterization and detection of building patterns in cartographic data: Two algorithms. In Advances in Spatial Data Handling and GIS: 14th International Symposium on Spatial Data Handling; Springer: Berlin/Heidelberg, Germany, 2012; pp. 93–107.

- Shirowzhan, S.; Lim, S.; Trinder, J.; Li, H.; Sepasgozar, S. Data mining for recognition of spatial distribution patterns of building heights using airborne lidar data. Adv. Eng. Inform. 2020, 43, 101033.

- Yang, X.; Huo, L.; Shen, T.; Wang, X.; Yuan, S.; Liu, X. A large-scale urban 3D model organisation method considering spatial distribution of buildings. IET Smart Cities 2023, 6, 54–64.

- Li, X.; Qin, C.; Qian, Z.; Yao, H.; Zhang, X. Perceptual Robust Hashing for Color Images with Canonical Correlation Analysis. arXiv 2020, arXiv:2012.04312.

- Singh, A.; Khan, M.Z.; Sharma, S.; Debnath, N.C. Perceptual Hashing Algorithms for Image Recognition. In Proceedings of the International Conference on Advanced Intelligent Systems and Informatics, Port Said, Egypt, 20–22 January 2025; Springer Nature: Cham, Switzerland, 2025; pp. 90–101.

- Caruso, G.; Hilal, M.; Thomas, I. Measuring urban forms from inter-building distances: Combining MST graphs with a Local Index of Spatial Association. Landsc. Urban Plan. 2017, 163, 80–89.

- Cetinkaya, S.; Basaraner, M.; Burghardt, D. Proximity-based grouping of buildings in urban blocks: A comparison of four algorithms. Geocarto Int. 2015, 30, 618–632.

- Li, X.; Li, W.; Meng, Q.; Zhang, C.; Jancso, T.; Wu, K. Modelling building proximity to greenery in a three-dimensional perspective using multi-source remotely sensed data. J. Spat. Sci. 2016, 61, 389–403.

- Bose, P.; De Carufel, J.L.; Shaikhet, A.; Smid, M. Essential constraints of edge-constrained proximity graphs. In Workshop on Combinatorial Algorithms; Springer International Publishing: Cham, Switzerland, 2016; pp. 55–67.

- Niroumand-Jadidi, M.; Vitti, A. Reconstruction of river boundaries at sub-pixel resolution: Estimation and spatial allocation of water fractions. ISPRS Int. J. Geo-Inf. 2017, 6, 383.

- Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. Proc. ICML 2013, 30, 3.

- Zhou, J.; Du, Y.; Zhang, R.; Zhang, R. Adaptive depth graph attention networks. arXiv 2023, arXiv:2301.06265.

- Chen, J.; Fang, C.; Zhang, X. Global attention-based graph neural networks for node classification. Neural Process. Lett. 2023, 55, 4127–4150.

- Shi, J.; Malik, J. Normalized cuts and image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 888–905.

- Hassan, B.A.; Tayfor, N.B.; Hassan, A.A.; Ahmed, A.M.; Rashid, T.A.; Abdalla, N.N. From A-to-Z review of clustering validation indices. Neurocomputing 2024, 601, 128198.

- Ikotun, A.M.; Habyarimana, F.; Ezugwu, A.E. Cluster validity indices for automatic clustering: A comprehensive review. Heliyon 2025, 11, e41953.

- Zhang, T.; Lan, X.; Feng, J. A Progressive Clustering Approach for Buildings Using MST and SOM with Feature Factors. ISPRS Int. J. Geo-Inf. 2025, 14, 103.

- Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

- Pavlopoulos, J.; Vardakas, G.; Likas, A. Revisiting silhouette aggregation. In International Conference on Discovery Science; Springer Nature: Cham, Switzerland, 2024; pp. 354–368.

- Hubert, L.; Arabie, P. Comparing partitions. J. Classif. 1985, 2, 193–218.

- Xu, D.; Tian, Y. A comprehensive survey of clustering algorithms. Ann. Data Sci. 2015, 2, 165–193.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Zaheer, R.; Shaziya, H. A study of the optimization algorithms in deep learning. In Proceedings of the 2019 Third International Conference on Inventive Systems and Control (ICISC), Coimbatore, India, 10–11 January 2019; IEEE: New York, NY, USA, 2019; pp. 536–539.

| Test | Compactness (ablated) | Compactness (LA-GATs) | ARI (ablated) | ARI (LA-GATs) | Silhouette (ablated) | Silhouette (LA-GATs) |
|---|---|---|---|---|---|---|
| Test1 | 0.201 | 0.153 | 0.72 | 0.87 | 0.52 | 0.65 |
| Test2 | 0.217 | 0.159 | 0.75 | 0.89 | 0.50 | 0.67 |
| Test3 | 0.225 | 0.161 | 0.78 | 0.92 | 0.48 | 0.69 |

| Test | Compactness (ablated) | Compactness (LA-GATs) | ARI (ablated) | ARI (LA-GATs) | Silhouette (ablated) | Silhouette (LA-GATs) |
|---|---|---|---|---|---|---|
| Test1 | 0.187 | 0.153 | 0.79 | 0.87 | 0.58 | 0.65 |
| Test2 | 0.193 | 0.159 | 0.81 | 0.89 | 0.60 | 0.67 |
| Test3 | 0.201 | 0.161 | 0.86 | 0.92 | 0.62 | 0.69 |

| Method | Compactness | ARI | Silhouette |
|---|---|---|---|
| LA-GATs | 0.153 | 0.87 | 0.65 |
| GATs | 0.177 | 0.69 | 0.61 |
| MST | 0.220 | 0.63 | 0.58 |
| DBSCAN | 0.252 | 0.62 | 0.52 |
| K-means | 0.354 | 0.28 | 0.41 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yang, X.; Xie, X.; Liu, D. LA-GATs: A Multi-Feature Constrained and Spatially Adaptive Graph Attention Network for Building Clustering. ISPRS Int. J. Geo-Inf. 2025, 14, 415. https://doi.org/10.3390/ijgi14110415
