Automated Detection of Beaver-Influenced Floodplain Inundations in Multi-Temporal Aerial Imagery Using Deep Learning Algorithms

Abstract

1. Introduction

2. Methods

2.1. Study Area and Data

2.2. Modeling Workflow

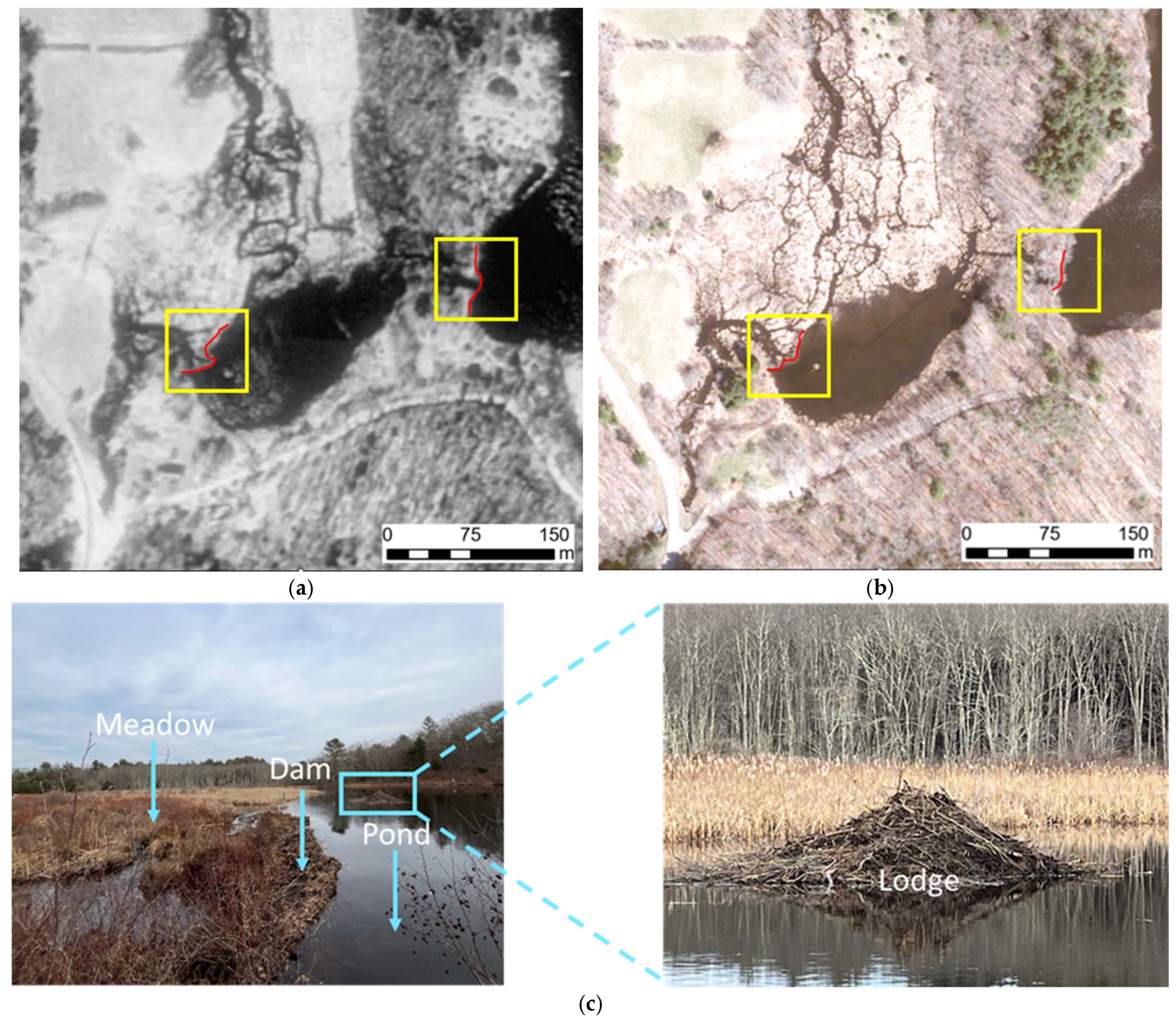

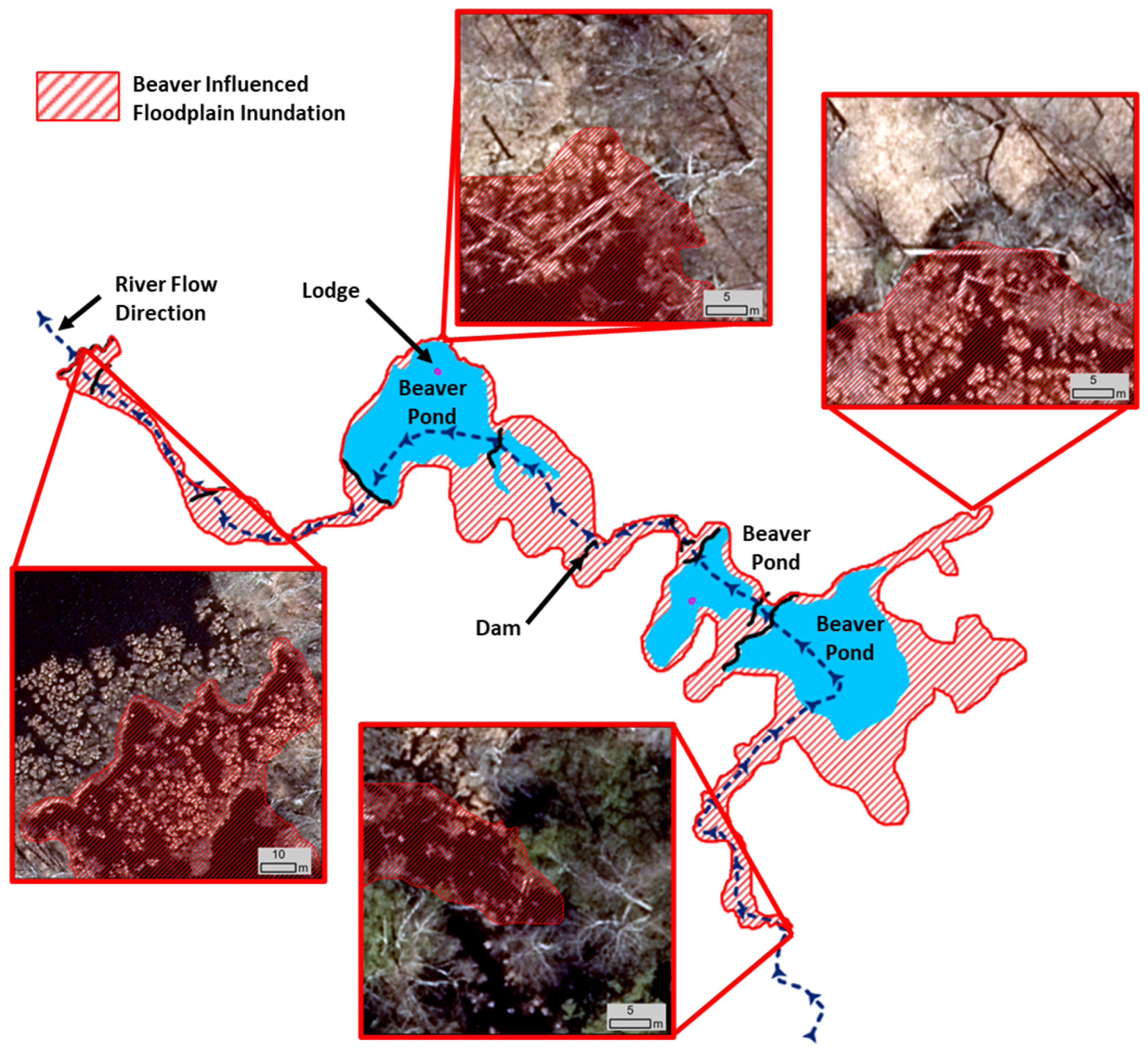

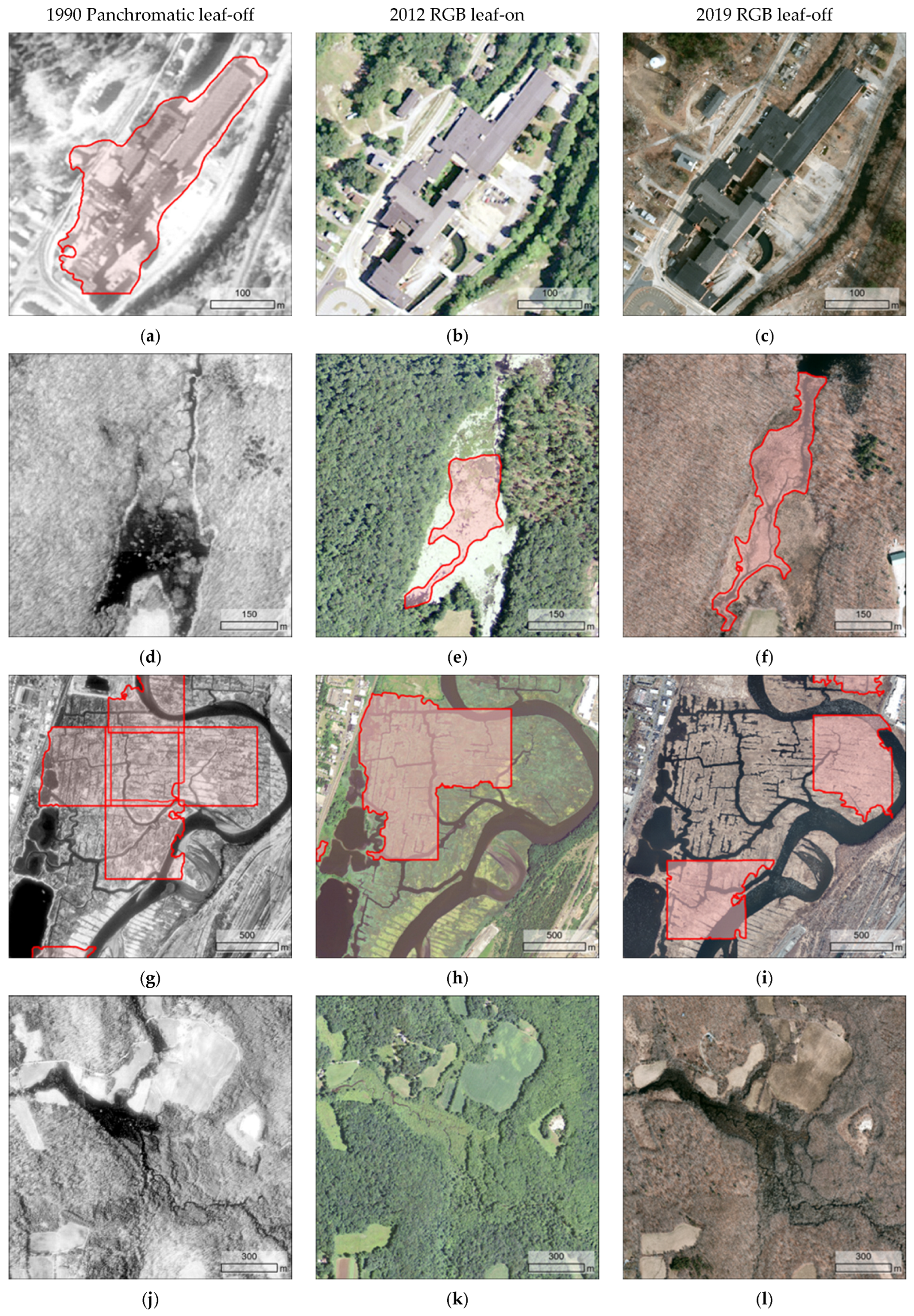

2.3. Defining Beaver-Influenced Floodplain Inundation

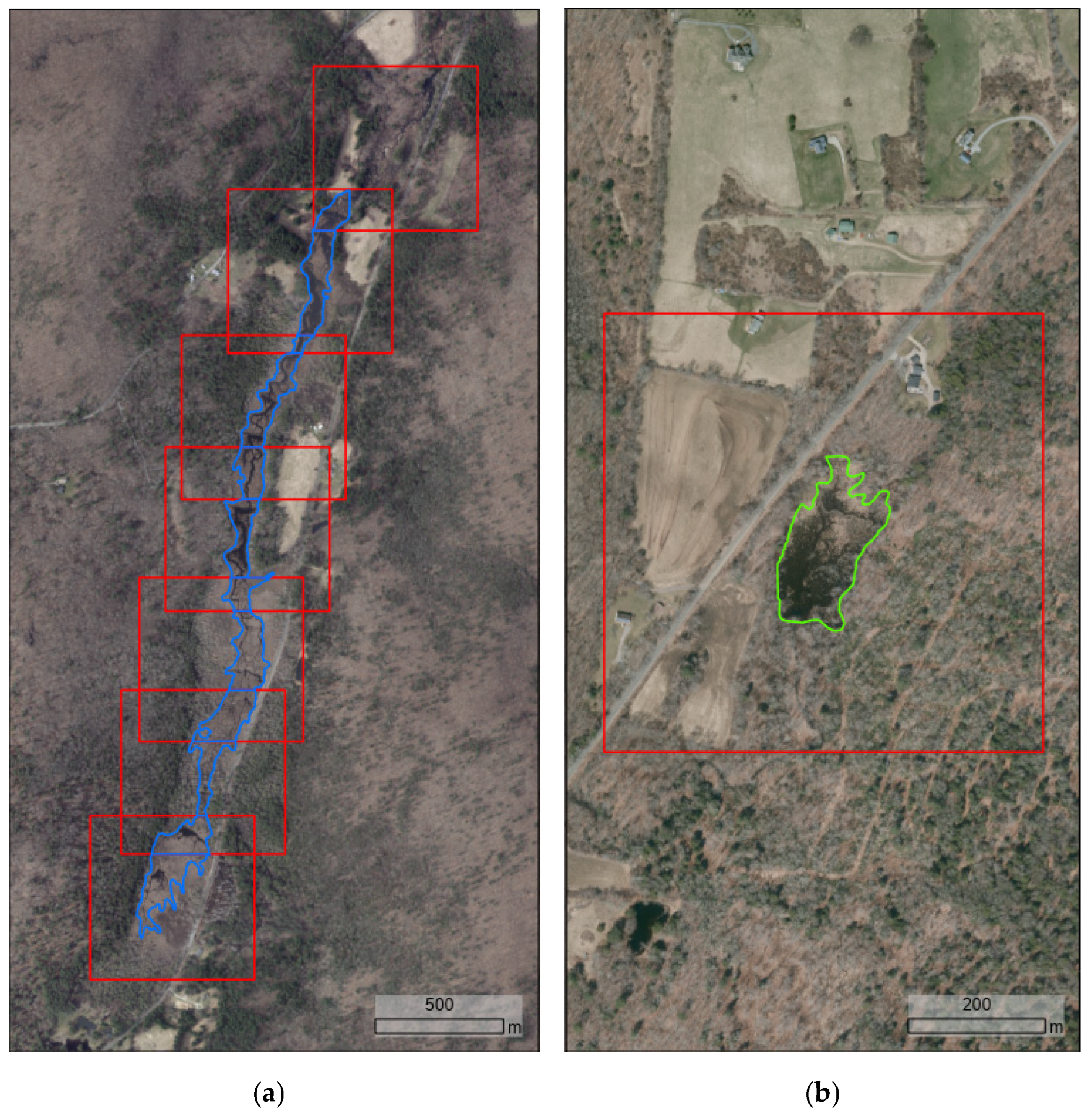

2.4. Compiling Training Dataset

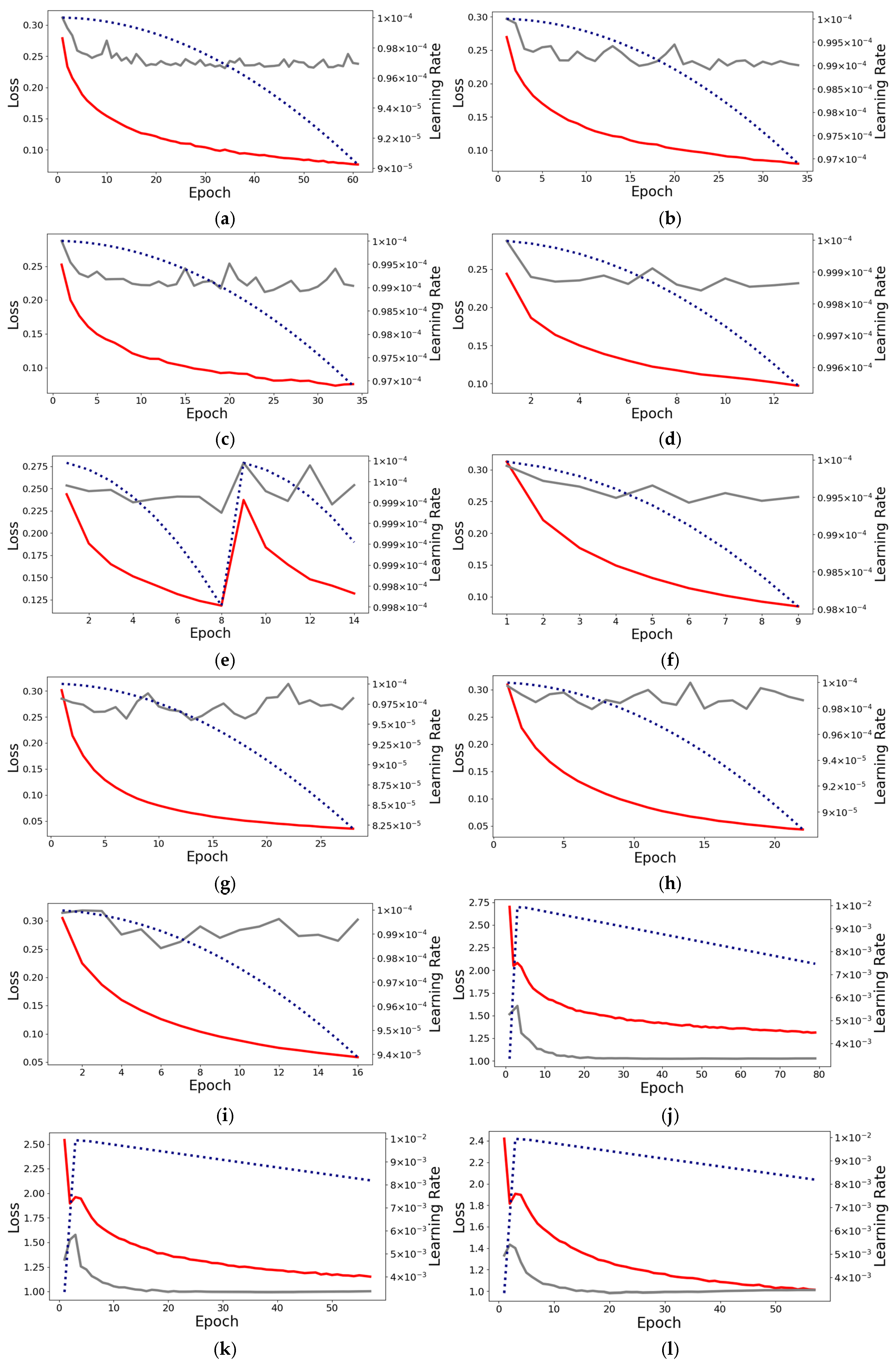

2.5. Deep Learning Model Training

2.6. Training Data Ablation

2.7. Straight-Edge Post-Processing Methodology

2.8. Visual Quality and Map Accuracy Assessment

3. Results

3.1. Training Dataset Analysis

3.2. Model Performance

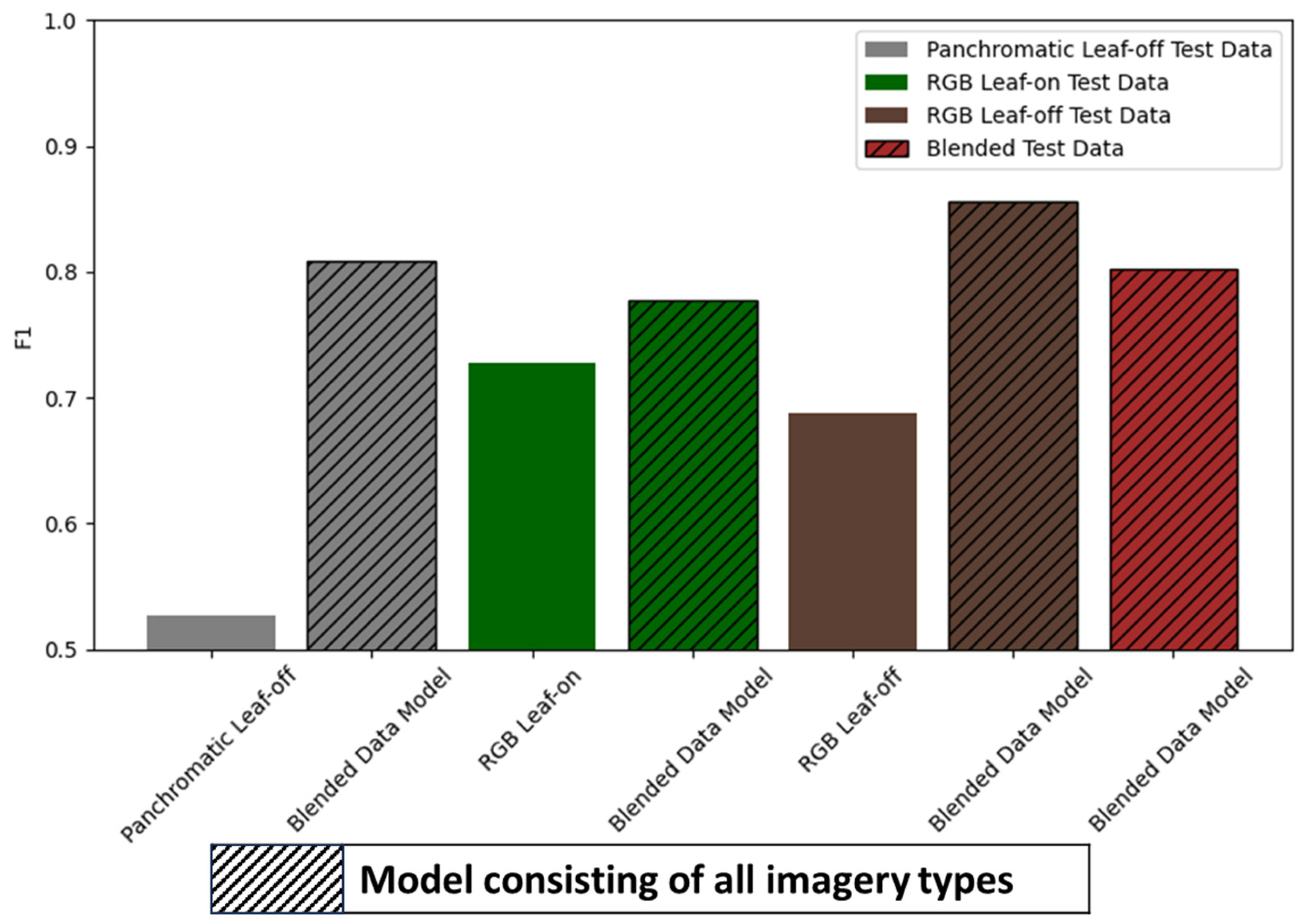

3.3. Training Data Ablation Analysis

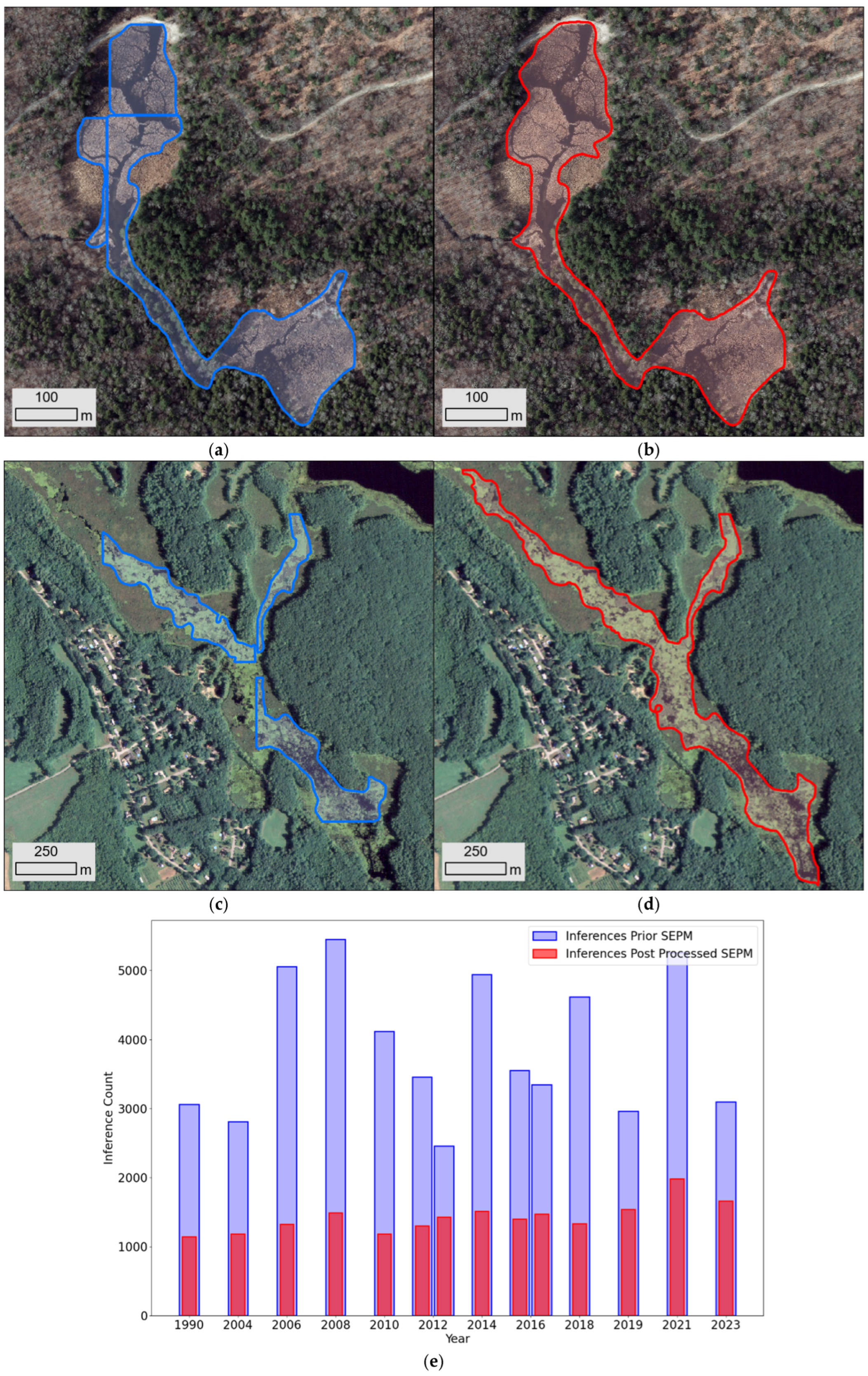

3.4. Straight-Edge Post-Processing Method

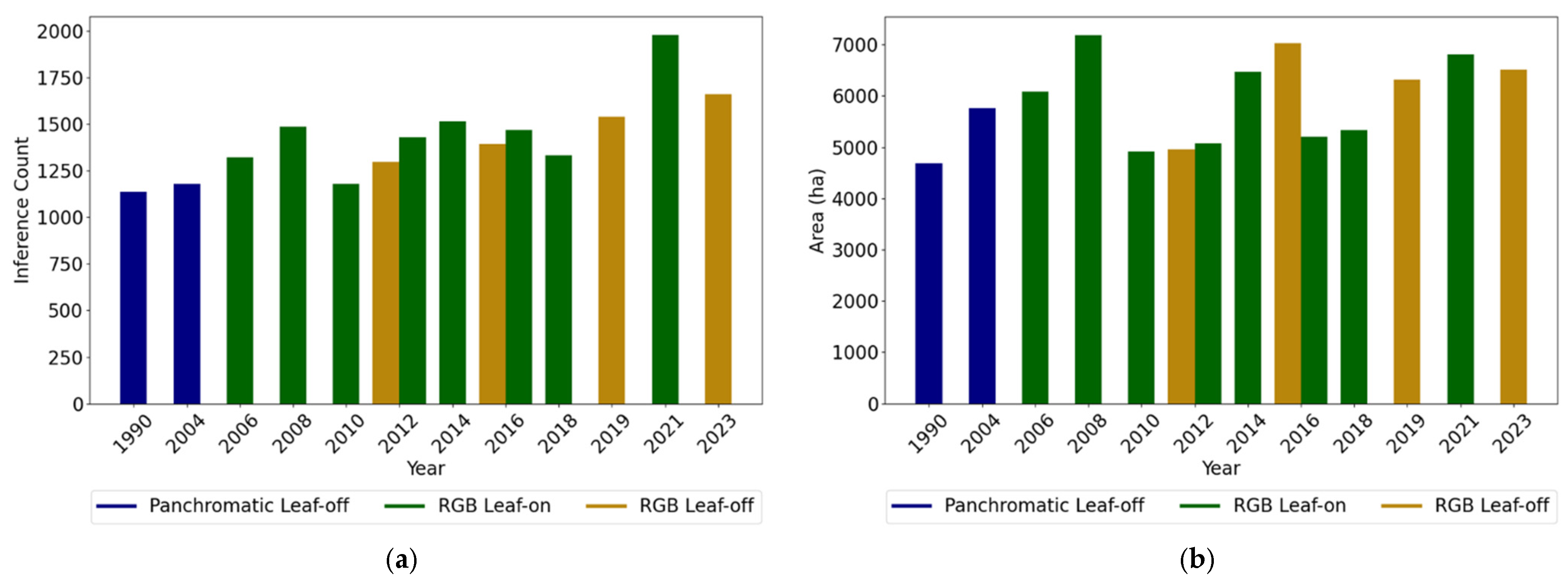

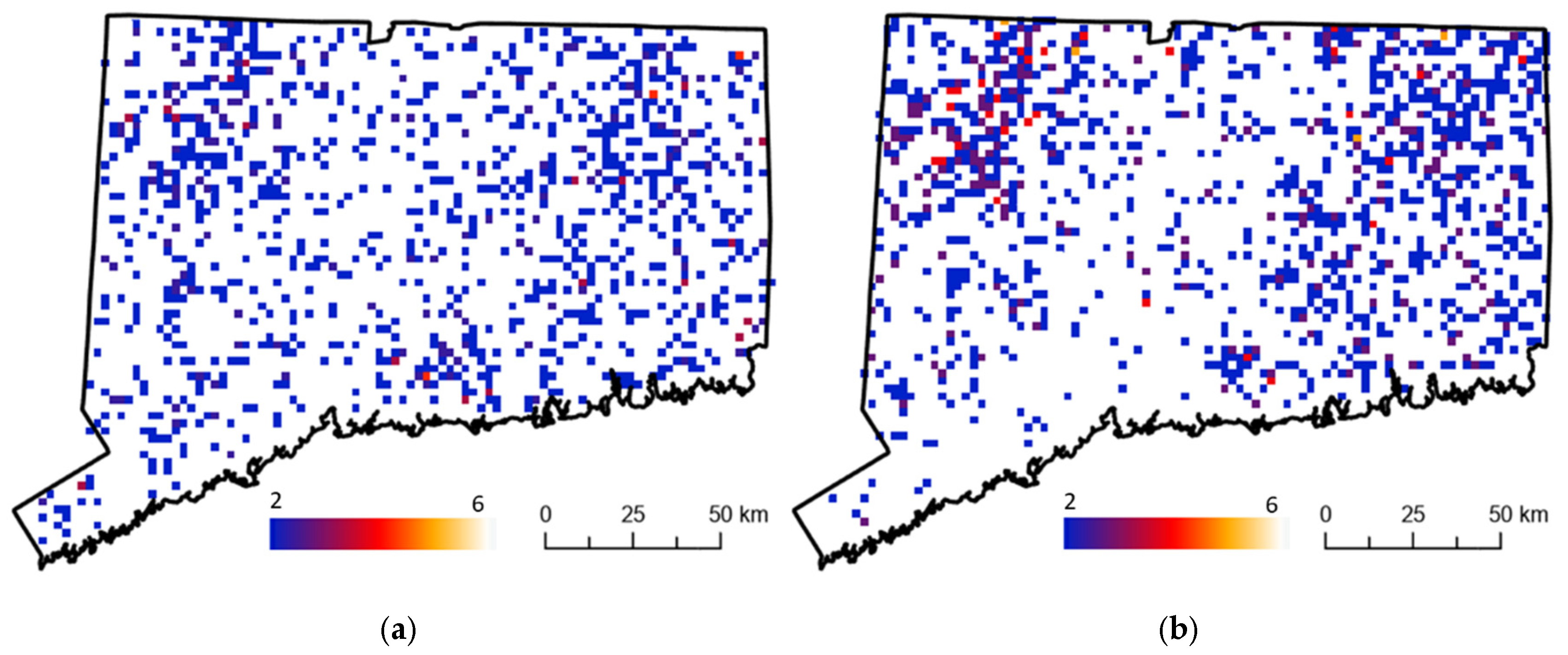

3.5. Statewide Beaver-Influenced Floodplain Inundation Detection

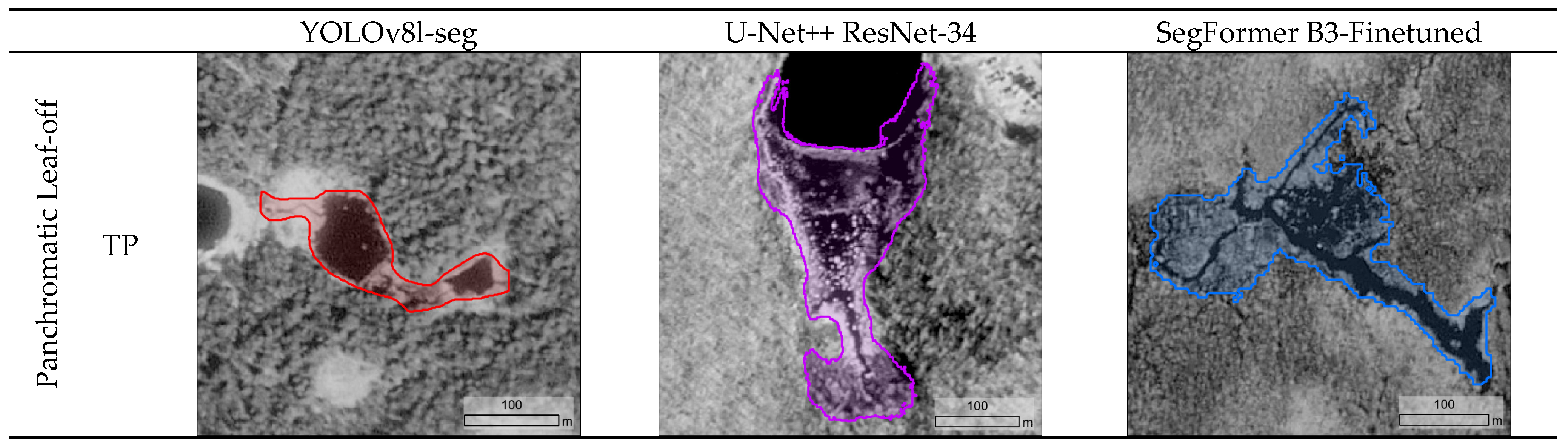

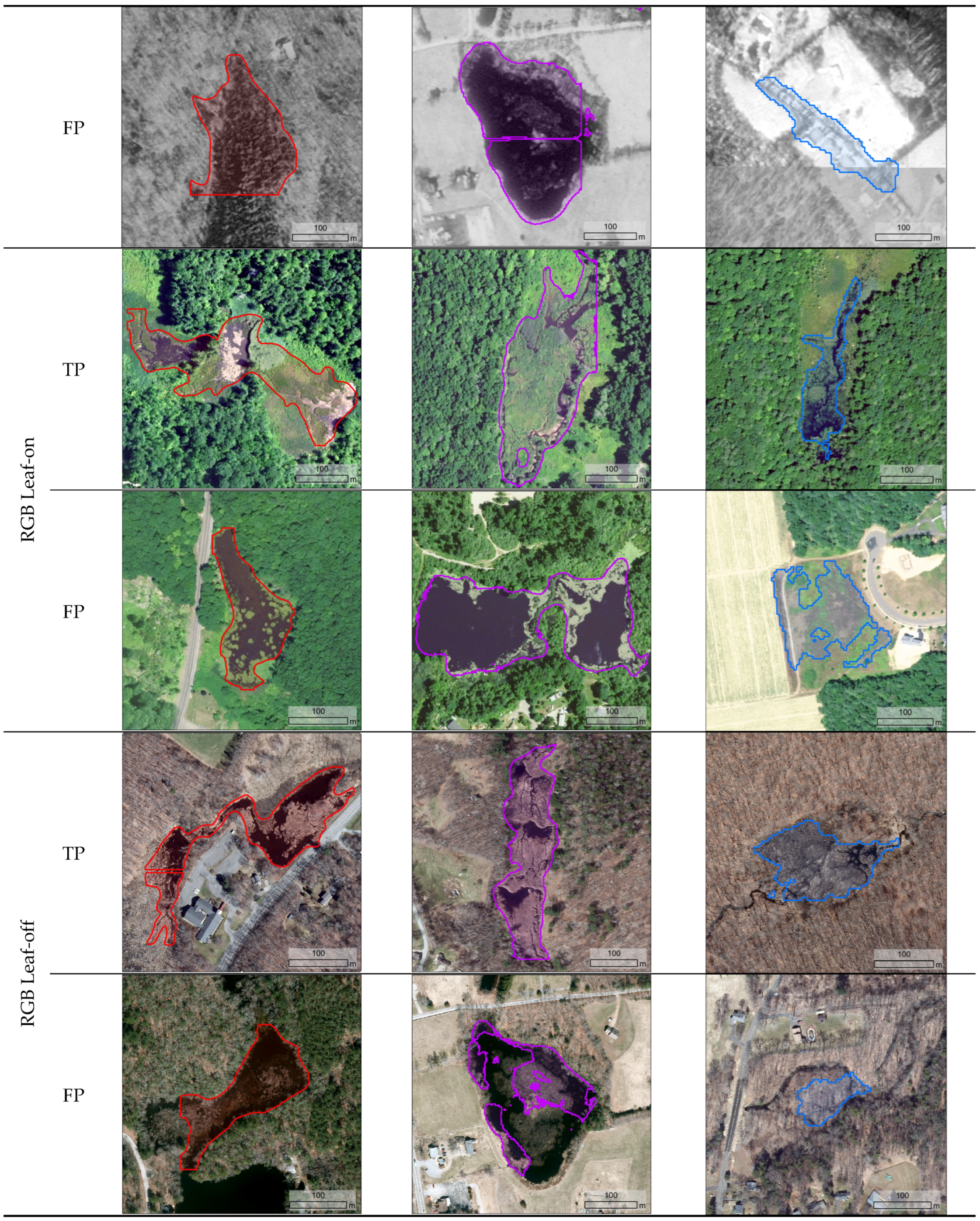

3.6. Visual Quality Assessment

3.7. Map Accuracy Assessment

4. Discussion

4.1. Training Dataset Analysis

4.2. Model Performance

4.3. Training Data Ablation Analysis

4.4. Straight-Edge Post-Processing Method

4.5. Statewide Beaver-Influenced Floodplain Inundation Detection

4.6. Visual Quality Assessment

4.7. Map Accuracy Assessment

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Foster, D.R.; Motzkin, G.; Bernardos, D.; Cardoza, J. Wildlife dynamics in the changing New England landscape. J. Biogeogr. 2002, 29, 1337–1357.

- Stringer, A.P.; Gaywood, M.J. The impacts of beavers Castor spp. on biodiversity and the ecological basis for their reintroduction to Scotland, UK. Mammal Rev. 2016, 46, 270–283.

- Adam, E.; Mutanga, O.; Rugege, D. Multispectral and hyperspectral remote sensing for identification and mapping of wetland vegetation: A review. Wetl. Ecol. Manag. 2010, 18, 281–296.

- Butler, D.R. Characteristics of beaver ponds on deltas in a mountain environment. Earth Surf. Process. Landf. 2012, 37, 876–882.

- Parker, H.; Nummi, P.; Hartman, G.; Rosell, F. Invasive North American beaver Castor canadensis in Eurasia: A review of potential consequences and a strategy for eradication. Wildl. Biol. 2012, 18, 354–365.

- Fairfax, E.; Zhu, E.; Clinton, N.; Maiman, S.; Shaikh, A.; Macfarlane, W.W.; Wheaton, J.M.; Ackerstein, D.; Corwin, E. EEAGER: A neural network model for finding beaver complexes in satellite and aerial imagery. J. Geophys. Res. Biogeosciences 2023, 128, e2022JG007196.

- Burchsted, D.; Daniels, M.D. Classification of the alterations of beaver dams to headwater streams in northeastern Connecticut, U.S.A. Geomorphology 2014, 205, 36–50.

- Jones, B.M.; Tape, K.D.; Clark, J.A.; Bondurant, A.C.; Ward Jones, M.K.; Gaglioti, B.V.; Elder, C.D.; Witharana, C.; Miller, C.E. Multi-Dimensional Remote Sensing Analysis Documents Beaver-Induced Permafrost Degradation, Seward Peninsula, Alaska. Remote Sens. 2021, 13, 4863.

- Rogan, J.; Chen, D. Remote sensing technology for mapping and monitoring land-cover and land-use change. Prog. Plan. 2004, 61, 301–325.

- U.S. Department of Agriculture, Farm Service Agency. National Agriculture Imagery Program (NAIP). 2023. Available online: https://www.fsa.usda.gov/programs-and-services/aerial-photography/imagery-programs/naip-imagery/ (accessed on 13 December 2023).

- Ellis, E.C.; Wang, H.; Xiao, H.S.; Peng, K.; Liu, X.P.; Li, S.C.; Ouyang, H.; Cheng, X.; Yang, L.Z. Measuring Long-Term Ecological Changes in Densely Populated Landscapes Using Current and Historical High Resolution Imagery. Remote Sens. Environ. 2006, 100, 457–473.

- Bergado, J.R.; Persello, C.; Gevaert, C. A deep learning approach to the classification of sub-decimetre resolution aerial images. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; IEEE: New York, NY, USA, 2016; pp. 1516–1519.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Aleissaee, A.A.; Kumar, A.; Anwer, R.M.; Khan, S.; Cholakkal, H.; Xia, G.-S.; Khan, F.S. Transformers in remote sensing: A survey. Remote Sens. 2023, 15, 1860.

- Parmar, N.; Vaswani, A.; Uszkoreit, J.; Kaiser, L.; Shazeer, N.; Ku, A.; Tran, D. Image transformer. In Proceedings of the International Conference on Machine Learning (PMLR), Stockholm, Sweden, 10–15 July 2018; pp. 4055–4064.

- Kim, H.E.; Maros, M.E.; Miethke, T.; Kittel, M.; Siegel, F.; Ganslandt, T. Lightweight Visual Transformers Outperform Convolutional Neural Networks for Gram-Stained Image Classification: An Empirical Study. Biomedicines 2023, 11, 1333.

- Sreedevi, K.L.; Edison, A. Wild animal detection using deep learning. In Proceedings of the 2022 IEEE 19th India Council International Conference (INDICON), Kochi, India, 24–26 November 2022; pp. 1–5.

- Yousif, H.; Yuan, J.; Kays, R.; He, Z. Fast human-animal detection from highly cluttered camera-trap images using joint background modeling and deep learning classification. In Proceedings of the 2017 IEEE International Symposium on Circuits and Systems (ISCAS), Baltimore, MD, USA, 28–31 May 2017; pp. 1–4.

- Chen, R.; Little, R.; Mihaylova, L.; Delahay, R.; Cox, R. Wildlife surveillance using deep learning methods. Ecol. Evol. 2019, 9, 9453–9466.

- Wäldchen, J.; Mäder, P. Machine learning for image based species identification. Methods Ecol. Evol. 2018, 9, 2216–2225.

- Nguyen, H.; Maclagan, S.J.; Nguyen, T.; Nguyen, T.P.; Flemons, P.; Andrews, K.; Ritchie, E.G.; Phung, D. Animal recognition and identification with deep convolutional neural networks for automated wildlife monitoring. In Proceedings of the 2017 IEEE International Conference on Data Science and Advanced Analytics (DSAA), Tokyo, Japan, 19–21 October 2017; IEEE: New York, NY, USA, 2017; pp. 40–49.

- Rast, W.; Kimmig, S.E.; Giese, L.; Berger, A. Machine learning goes wild: Using data from captive individuals to infer wildlife behaviours. PLoS ONE 2020, 15, e0227317.

- Garcia-Quintas, A.; Roy, A.; Barbraud, C.; Demarcq, H.; Denis, D.; Lanco Bertrand, S. Machine and deep learning approaches to understand and predict habitat suitability for seabird breeding. Ecol. Evol. 2023, 13, e10549.

- Leblanc, C.; Bonnet, P.; Servajean, M.; Chytrý, M.; Aćić, S.; Argagnon, O.; Bergamini, A.; Biurrun, I.; Bonari, G.; Campos, J.A.; et al. A deep-learning framework for enhancing habitat identification based on species composition. Appl. Veg. Sci. 2024, 27, e12802.

- Kumar, S.; Satish, K.; Berger, H.; Zhang, L. WildlifeMapper: Aerial Image Analysis for Multi-Species Detection and Identification. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 12594–12604.

- Chabot, D.; Dillon, C.; Francis, C.M. An approach for using off-the-shelf object-based image analysis software to detect and count birds in large volumes of aerial imagery. Avian Conserv. Ecol. 2018, 13, 227–251.

- Zhang, W.; Hu, B.; Brown, G.; Meyer, S. Beaver pond identification from multi-temporal and multi-sourced remote sensing data. Geo-Spat. Inf. Sci. 2023, 27, 953–967.

- Swift, T.P.; Kennedy, L.M. Beaver-Driven Peatland Ecotone Dynamics: Impoundment Detection Using Lidar and Geomorphon Analysis. Land 2021, 10, 1333.

- Jensen, A.M.; Neilson, B.T.; McKee, M.; Chen, Y. Thermal remote sensing with an autonomous unmanned aerial remote sensing platform for surface stream temperatures. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Munich, Germany, 22–27 July 2012; pp. 5049–5052. Available online: https://ieeexplore.ieee.org/abstract/document/6352476 (accessed on 25 August 2025).

- Matechuk, L. Predictive Modelling of Beaver Habitats Using Machine Learning. Master’s Thesis, University of British Columbia, Vancouver, BC, Canada, 2024. Available online: https://open.library.ubc.ca/collections/ubctheses/24/items/1.0445073 (accessed on 25 August 2025).

- Shrestha, A.; Mahmood, A. Review of deep learning algorithms and architectures. IEEE Access 2019, 7, 53040–53065.

- Zhang, L.; Zhang, L.; Du, B. Deep learning for remote sensing data: A technical tutorial on the state of the art. IEEE Geosci. Remote Sens. Mag. 2016, 4, 22–40.

- Arnold, C.; Wilson, E.; Hurd, J.; Civco, D. 30 Years of Land Cover Change in Connecticut, USA: A Case Study of Long-Term Research, Dissemination of Results, and Their Use in Land Use Planning and Natural Resource Conservation. Land 2020, 9, 255.

- Wilson, M.; Judy, M. Beavers in Connecticut: Their Natural History and Management; Connecticut Department of Environmental Protection, Bureau of Natural Resources, Wildlife Division: Hartford, CT, USA, 2001.

- Grudzinski, B.P.; Fritz, K.; Golden, H.E.; Newcomer-Johnson, T.A.; Rech, J.A.; Levy, J.; Fain, J.; McCarty, J.L.; Johnson, B.; Vang, T.K.; et al. A global review of beaver dam impacts: Stream conservation implications across biomes. Glob. Ecol. Conserv. 2022, 37, e02163.

- Błędzki, L.A.; Bubier, J.L.; Moulton, L.A.; Kyker-Snowman, T.D. Downstream effects of beaver ponds on the water quality of New England first- and second-order streams. Ecohydrology 2011, 4, 698–707.

- Hartman, G.; Törnlöv, S. Influence of watercourse depth and width on dam-building behaviour by Eurasian beaver (Castor fiber). J. Zool. 2006, 268, 127–131.

- Dittbrenner, B.J.; Pollock, M.M.; Schilling, J.W.; Olden, J.D.; Lawler, J.J.; Torgersen, C.E. Modeling intrinsic potential for beaver (Castor canadensis) habitat to inform restoration and climate change adaptation. PLoS ONE 2018, 13, e0192538.

- Wang, G.; McClintic, L.F.; Taylor, J.D. Habitat selection by American beaver at multiple spatial scales. Anim. Biotelemetry 2019, 7, 10.

- Jiang, P.; Ergu, D.; Liu, F.; Cai, Y.; Ma, B. A Review of YOLO Algorithm Developments. Procedia Comput. Sci. 2022, 199, 1066–1073.

- Brazier, R.E.; Puttock, A.; Graham, H.A.; Auster, R.E.; Davies, K.H.; Brown, C.M.L. Beaver: Nature’s ecosystem engineers. WIREs Water 2021, 8, e1494.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and Efficient Design for Semantic Segmentation with Transformers. arXiv 2021, arXiv:2105.15203.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: A Nested U-Net Architecture for Medical Image Segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–11.

- Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. UNet++: Redesigning Skip Connections to Exploit Multiscale Features in Image Segmentation. IEEE Trans. Med. Imaging 2019.

- Talaat, F.M.; ZainEldin, H. An improved fire detection approach based on YOLO-v8 for smart cities. Neural Comput. Appl. 2023, 35, 20939–20954.

- Huang, Z.; Wang, J.; Fu, X.; Yu, T.; Guo, Y.; Wang, R. DC-SPP-YOLO: Dense connection and spatial pyramid pooling-based YOLO for object detection. Inf. Sci. 2020, 522, 241–258.

- Joshi, D.; Witharana, C. Vision transformer-based unhealthy tree crown detection in mixed northeastern US forests and evaluation of annotation uncertainty. Remote Sens. 2025, 17, 1066.

- Nathan, R.J.A.A.; Bimber, O. Synthetic aperture anomaly imaging for through-foliage target detection. Remote Sens. 2023, 15, 4369.

- Waser, L.T.; Rüetschi, M.; Psomas, A.; Small, D.; Rehush, N. Mapping dominant leaf type based on combined Sentinel-1/-2 data – Challenges for mountainous countries. ISPRS J. Photogramm. Remote Sens. 2021, 180, 209–226.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Wang, T.; Huan, J.; Li, B. Data dropout: Optimizing training data for convolutional neural networks. In Proceedings of the 2018 IEEE 30th International Conference on Tools with Artificial Intelligence (ICTAI), Volos, Greece, 5–7 November 2018; IEEE: New York, NY, USA, 2018; pp. 39–46.

- Pan, X.; Zhao, J.; Xu, J. An end-to-end and localized post-processing method for correcting high-resolution remote sensing classification result images. Remote Sens. 2020, 12, 852.

- Howard, R.J.; Larson, J.S. A stream habitat classification system for beaver. J. Wildl. Manag. 1985, 49, 19–25.

| Acquisition Year | Leaf-On/Off | Spatial Resolution (m) | Georeferenced (Yes/No) | Spectral Bands | Cloud Cover (%) | Area Imaged | Originators | Used in This Study? |
|---|---|---|---|---|---|---|---|---|
| 1934 | Off | 1 | N | Panchromatic | n/a | Statewide | Fairchild Aerial Survey, Inc., for the State Planning Board | No |
| 1951–52 | On | 1 | N | Panchromatic | n/a | Statewide | Robinson Aerial Surveys, Inc., for the U.S. Department of Agriculture, Agriculture Stabilization and Marketing Service | No |
| 1965 | Off | 1 | N | Panchromatic | n/a | Statewide | Keystone Aerial Surveys, Inc., for the Department of Public Works | No |
| 1970 | Off | 1 | N | Panchromatic | n/a | Statewide | Keystone Aerial Survey, Inc., for the State Department of Transportation | No |
| 1985–86 | Off | 1 | N | Panchromatic | n/a | Statewide | Aero Graphics Corp., Bohemia, NY | No |
| 1990 | Off | 1 | Y | Panchromatic | n/a | Statewide | DEEP, U.S. Geological Survey | Training, Inferencing |
| 2004 | Off | 0.30 | Y | Panchromatic | n/a | Statewide | DEEP, Aero-Metric, Inc. | Training, Inferencing |
| 2006 | On | 1 | Y | RGB | 10 | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Training, Inferencing |
| 2008 | On | 1 | Y | RGB, NIR | 10 | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Training, Inferencing |
| 2010 | On | 1 | Y | RGB, NIR | 10 | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Training, Inferencing |
| 2012 | Off | 0.30 | Y | RGB, NIR | 0 | Statewide | Photo Science, State of Connecticut Department of Emergency Services and Public Protection | Training, Inferencing |
| 2012 | On | 1 | Y | RGB, NIR | 10 | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Training, Inferencing |
| 2014 | On | 1 | Y | RGB, NIR | 10 | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Training, Inferencing |
| 2016 | Off | 0.08 | Y | RGB, NIR | 0 | Statewide | The Sanborn Map Company, State of Connecticut Department of Emergency Services and Public Protection | Training, Inferencing |
| 2016 | On | 0.60 | Y | RGB, NIR | 10 | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Training, Inferencing |
| 2018 | On | 0.60 | Y | RGB, NIR | 10 | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Training, Inferencing |
| 2019 | Off | 0.15 | Y | RGB, NIR | 0 | Statewide | Quantum Spatial Inc., State of Connecticut Department of Emergency Services and Public Protection | Training, Inferencing |
| 2021 | On | 0.60 | Y | RGB, NIR | - | Statewide | USDA-FSA-APFO Aerial Photography Field Office | Inferencing |
| 2023 | Off | 0.08 | Y | RGB, NIR | - | Statewide | Office of Policy and Management | Inferencing |
| 2023 | On | 0.60 | Y | RGB, NIR | - | Statewide | USDA-FSA-APFO Aerial Photography Field Office | No |

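| Architecture | Segmentation Type | Model | Variant | Parameters | Citation |
|---|---|---|---|---|---|
| Transformer | Semantic | SegFormer | B0-Finetuned | 3.4 M | Xie et al., 2021 [42] |
| Transformer | Semantic | SegFormer | B1-Finetuned | 13.0 M | Xie et al., 2021 [42] |
| Transformer | Semantic | SegFormer | B2-Finetuned | 25.0 M | Xie et al., 2021 [42] |
| Transformer | Semantic | SegFormer | B3-Finetuned | 45.0 M | Xie et al., 2021 [42] |
| Transformer | Semantic | SegFormer | B4-Finetuned | 60.0 M | Xie et al., 2021 [42] |
| Convolutional Neural Nets | Semantic | U-Net++ | ResNet-18 | 11.7 M | Zhou et al., 2018 [43] |
| Convolutional Neural Nets | Semantic | U-Net++ | ResNet-34 | 21.8 M | Zhou et al., 2018 [43] |
| Convolutional Neural Nets | Semantic | U-Net++ | ResNet-50 | 25.6 M | Zhou et al., 2018 [43] |
| Convolutional Neural Nets | Semantic | U-Net++ | ResNet-101 | 44.6 M | Zhou et al., 2018 [43] |
| Convolutional Neural Nets | Instance | YOLOv8 | YOLOv8n-Seg | 3.2 M | Ultralytics, 2023 |
| Convolutional Neural Nets | Instance | YOLOv8 | YOLOv8s-Seg | 11.2 M | Ultralytics, 2023 |
| Convolutional Neural Nets | Instance | YOLOv8 | YOLOv8m-Seg | 25.9 M | Ultralytics, 2023 |
| Convolutional Neural Nets | Instance | YOLOv8 | YOLOv8l-Seg | 43.7 M | Ultralytics, 2023 |
| Convolutional Neural Nets | Instance | YOLOv8 | YOLOv8x-Seg | 68.2 M | Ultralytics, 2023 |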

| Model | Epochs | BIFI Precision | BIFI Recall | BIFI F1 | BIFI IoU |
|---|---|---|---|---|---|
| SegFormer B0-Finetuned | 61 | 0.6471 | 0.5081 | 0.5202 | 0.4191 |
| SegFormer B1-Finetuned | 34 | 0.6898 | 0.6898 | 0.5425 | 0.4389 |
| SegFormer B2-Finetuned | 34 | 0.6988 | 0.5753 | 0.5855 | 0.4825 |
| SegFormer B3-Finetuned | 13 | 0.7417 | 0.6992 | 0.6834 | 0.5775 |
| SegFormer B4-Finetuned | 14 | 0.6907 | 0.5392 | 0.5551 | 0.4527 |
| U-Net++ ResNet-18 | 9 | 0.8042 | 0.7706 | 0.7605 | 0.6650 |
| U-Net++ ResNet-34 | 29 | 0.8193 | 0.7886 | 0.7802 | 0.6942 |
| U-Net++ ResNet-50 | 22 | 0.7977 | 0.7828 | 0.7582 | 0.6628 |
| U-Net++ ResNet-101 | 16 | 0.8090 | 0.7633 | 0.7572 | 0.6625 |
| YOLOv8n-seg | 42 | 0.7765 | 0.8350 | 0.7816 | 0.6983 |
| YOLOv8s-seg | 57 | 0.7955 | 0.8550 | 0.8029 | 0.7225 |
| YOLOv8m-seg | 20 | 0.7917 | 0.8486 | 0.7985 | 0.7211 |
| YOLOv8l-seg | 25 | 0.7964 | 0.8577 | 0.8059 | 0.7259 |
| YOLOv8x-seg | 21 | 0.7901 | 0.8342 | 0.7947 | 0.7210 |
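The table reports precision, recall, F1 and IoU for the BIFI class. As a reminder of how these pixel-wise scores relate, the sketch below computes them from a pair of binary masks. It is a minimal illustration under stated assumptions, not the evaluation code used in this study: masks are plain nested lists with 1 marking a BIFI pixel, a tile with an empty denominator scores 0.0, and the helper names (`confusion_counts`, `segmentation_scores`, `mean_scores`) are hypothetical. It also shows a general property worth keeping in mind when reading averaged scores: the mean F1 over many tiles is generally not the harmonic mean of the mean precision and mean recall.

```python
from typing import Dict, Iterable, Sequence, Tuple

# A mask is a 2-D grid of 0/1 labels; 1 marks a BIFI pixel (assumed encoding).
Mask = Sequence[Sequence[int]]


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int, int]:
    """Return pixel counts (TP, FP, FN, TN) for the positive (BIFI) class."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of rows")
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        if len(pred_row) != len(truth_row):
            raise ValueError("masks must have the same number of columns")
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def _ratio(num: float, den: float) -> float:
    # Hypothetical convention: an empty denominator scores 0.0.
    return num / den if den else 0.0


def segmentation_scores(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Precision, recall, F1 and IoU for a single tile."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    return {
        "precision": _ratio(tp, tp + fp),
        "recall": _ratio(tp, tp + fn),
        "f1": _ratio(2 * tp, 2 * tp + fp + fn),  # equals 2PR/(P+R) when tp > 0
        "iou": _ratio(tp, tp + fp + fn),          # Jaccard index
    }


def mean_scores(pairs: Iterable[Tuple[Mask, Mask]]) -> Dict[str, float]:
    """Average each per-tile score over (prediction, ground truth) pairs."""
    per_tile = [segmentation_scores(p, t) for p, t in pairs]
    if not per_tile:
        raise ValueError("no tiles to average")
    return {k: sum(s[k] for s in per_tile) / len(per_tile) for k in per_tile[0]}


if __name__ == "__main__":
    tile_a = ([[1, 0]], [[1, 1]])  # perfect precision, half recall
    tile_b = ([[1, 1]], [[1, 0]])  # half precision, perfect recall
    print(mean_scores([tile_a, tile_b]))
```

With the two toy tiles, mean precision and mean recall are both 0.75, yet the mean F1 is about 0.67, because each tile's F1 is computed before averaging.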

| | Ipswich, MA | Umpqua River, Oregon |
|---|---|---|
| Percentage true positive | 3.25 | 0.00 |
| Percentage false positive | 0.40 | 0.00 |
| Percentage false negative | 0.65 | 0.10 |
| Percentage true negative | 95.70 | 99.90 |
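Each site's four cells sum to 100%, so they can be read as a normalized confusion matrix, assuming the percentages are shares of the assessed pixels or area (the unit of assessment is not stated in the table). The usual summary rates then follow directly; for Ipswich, precision = 3.25 / (3.25 + 0.40) ≈ 0.89 and recall = 3.25 / (3.25 + 0.65) ≈ 0.83. The sketch below, with a hypothetical helper `rates_from_percentages`, derives these rates from the cell values copied out of the table; it is an arithmetic aid, not a result reported by the study.

```python
from typing import Dict, Optional


def rates_from_percentages(tp: float, fp: float, fn: float, tn: float) -> Dict[str, Optional[float]]:
    """Summary rates from confusion-matrix cells given as percentages.

    A rate whose denominator is zero is undefined and returned as None.
    """
    def ratio(num: float, den: float) -> Optional[float]:
        return num / den if den > 0 else None

    total = tp + fp + fn + tn
    return {
        "precision": ratio(tp, tp + fp),
        "recall": ratio(tp, tp + fn),
        "f1": ratio(2 * tp, 2 * tp + fp + fn),
        "overall_accuracy": ratio(tp + tn, total),
    }


# Cell values copied from the table: (TP, FP, FN, TN) in percent.
SITES = {
    "Ipswich, MA": (3.25, 0.40, 0.65, 95.70),
    "Umpqua River, Oregon": (0.00, 0.00, 0.10, 99.90),
}

if __name__ == "__main__":
    for site, cells in SITES.items():
        rates = rates_from_percentages(*cells)
        print(site, {k: (None if v is None else round(v, 4)) for k, v in rates.items()})
```

For the Umpqua River site there are no predicted positives, so precision is undefined (returned as `None`), recall is 0, and overall accuracy (99.9%) is driven almost entirely by true negatives, which is why accuracy alone says little about detection skill at such a site.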

| Metric | Value |
|---|---|
| Percentage true positive | 13.27 |
| Percentage false positive | 86.73 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the International Society for Photogrammetry and Remote Sensing. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zocco, E.; Witharana, C.; Ortega, I.M.; Ouimet, W. Automated Detection of Beaver-Influenced Floodplain Inundations in Multi-Temporal Aerial Imagery Using Deep Learning Algorithms. ISPRS Int. J. Geo-Inf. 2025, 14, 383. https://doi.org/10.3390/ijgi14100383

Zocco E, Witharana C, Ortega IM, Ouimet W. Automated Detection of Beaver-Influenced Floodplain Inundations in Multi-Temporal Aerial Imagery Using Deep Learning Algorithms. ISPRS International Journal of Geo-Information. 2025; 14(10):383. https://doi.org/10.3390/ijgi14100383

Chicago/Turabian Style: Zocco, Evan, Chandi Witharana, Isaac M. Ortega, and William Ouimet. 2025. "Automated Detection of Beaver-Influenced Floodplain Inundations in Multi-Temporal Aerial Imagery Using Deep Learning Algorithms" ISPRS International Journal of Geo-Information 14, no. 10: 383. https://doi.org/10.3390/ijgi14100383

APA Style: Zocco, E., Witharana, C., Ortega, I. M., & Ouimet, W. (2025). Automated Detection of Beaver-Influenced Floodplain Inundations in Multi-Temporal Aerial Imagery Using Deep Learning Algorithms. ISPRS International Journal of Geo-Information, 14(10), 383. https://doi.org/10.3390/ijgi14100383