Abstract

With the widespread adoption of large language models (LLMs) in code generation tasks, geospatial code generation has emerged as a critical frontier in the integration of artificial intelligence and geoscientific analysis. This growing trend underscores the urgent need for systematic evaluation methodologies to assess the generation capabilities of LLMs in geospatial contexts. In particular, geospatial computation and visualization tasks in the JavaScript environment rely heavily on the orchestration of diverse frontend libraries and ecosystems, posing elevated demands on a model’s semantic comprehension and code synthesis capabilities. To address this challenge, we propose GeoJSEval—the first multimodal, function-level automatic evaluation framework for LLMs in JavaScript-based geospatial code generation tasks. The framework comprises three core components: a standardized test suite (GeoJSEval-Bench), a code submission engine, and an evaluation module. It includes 432 function-level tasks and 2071 structured test cases, spanning five widely used JavaScript geospatial libraries that support spatial analysis and visualization functions, as well as 25 mainstream geospatial data types. GeoJSEval enables multidimensional quantitative evaluation across metrics such as accuracy, output stability, resource consumption, execution efficiency, and error type distribution. Moreover, it integrates boundary testing mechanisms to enhance robustness and evaluation coverage. We conduct a comprehensive assessment of 20 state-of-the-art LLMs using GeoJSEval, uncovering significant performance disparities and bottlenecks in spatial semantic understanding, code reliability, and function invocation accuracy. GeoJSEval offers a foundational methodology, evaluation resource, and practical toolkit for the standardized assessment and optimization of geospatial code generation models, with strong extensibility and promising applicability in real-world scenarios. 
This manuscript is the peer-reviewed version of our preprint previously posted on arXiv.

1. Introduction

General-purpose code is typically written in formal programming languages such as Python, C++, or Java, and is used to process data, implement algorithms, and handle tasks such as network communication [1]. Through programming, users translate logical expressions into executable instructions that process diverse data types, including text, structured data, and imagery. However, the rapid growth of high-resolution remote sensing imagery and crowdsourced spatiotemporal data has placed greater demands on computational capacity and methodological customization in geospatial information analysis, particularly for tasks involving the indexing, processing, and analysis of heterogeneous geospatial data. Geospatial data types are inherently complex, encompassing remote sensing imagery, vector layers, and point clouds. These data involve various geometric structures (points, lines, polygons, volumes) and semantic components such as attributes, metadata, and temporal information [2,3]. Spatial analysis commonly entails dozens of operations, including buffer generation, point clustering, spatial interpolation, spatiotemporal trajectory analysis, isopleth and isochrone generation, linear referencing, geometric merging and clipping, and topological relationship evaluation [4]. Effective geospatial data processing requires integrated consideration of spatial reference system definition and transformation, spatial indexing structures (e.g., R-trees, quadtrees), validation of geometric and topological correctness, and the modeling and management of spatial databases [5]. Moreover, modern geospatial applications demand the integration of functionalities such as basemap services (e.g., OpenStreetMap, Mapbox), vector rendering, user interaction, heatmaps, trajectory animation, and three-dimensional visualization [6,7]. 
Consequently, the foundational libraries of conventional general-purpose languages are increasingly insufficient for addressing the complexities of contemporary geospatial analysis.

Although geospatial analysis platforms such as ArcGIS and QGIS integrate a wide range of built-in functions, they rely primarily on graphical user interfaces (GUIs) for point-and-click operation. While these interfaces are user-friendly, they limit workflow customization, task reproducibility, and methodological dissemination [8,9]. In contrast, code-driven approaches offer greater flexibility and reusability: through scripting, users can automate complex tasks and reuse them across contexts, which facilitates the efficient dissemination and sharing of GIS methodologies. Meanwhile, cloud-based platforms such as Google Earth Engine (GEE) provide JavaScript and Python APIs that support large-scale online analysis of remote sensing and spatiotemporal data [10]. However, these platforms depend on pre-packaged functions and remote execution environments, which restrict fine-grained control over processing workflows. As a result, they struggle to meet demands for local deployment, custom operator definition, and data privacy, particularly in scenarios requiring enhanced security or functional extensibility. In recent years, the rise of WebGIS has been driving a paradigm shift in spatial analysis toward browser-based execution. In applications such as urban governance, disaster response, agricultural monitoring, ecological surveillance, and public participation, users increasingly expect an integrated browser-based environment for data loading, analysis, and visualization, enabling a lightweight WYSIWYG (what-you-see-is-what-you-get) experience [11]. This trend is propelling geospatial computing from traditional desktop and server paradigms toward “computable WebGIS.” The browser is increasingly serving as a lightweight yet versatile computational terminal, opening new possibilities for frontend spatial modeling and visualization.

Against this backdrop, JavaScript has emerged as a key language for geospatial analysis and visualization, owing to its native browser execution and mature frontend ecosystem. Compared with Python (e.g., GeoPandas, Shapely, Rasterio), R, or MATLAB, JavaScript runs directly in the browser, eliminating environment setup (Python, for example, requires installing an interpreter and libraries). This makes it well suited to lightweight analysis workflows with low resource overhead and strong cross-platform compatibility [12]. For spatial tasks, the open-source community has developed a rich ecosystem of JavaScript-based geospatial tools, which fall broadly into two categories. The first focuses on spatial analysis and computation: Turf.js offers operations such as buffering, merging, clipping, and distance measurement; JSTS supports topological geometry processing; and Geolib enables precise calculation of distances and angles. The second centers on map visualization: libraries such as Leaflet and OpenLayers provide layer management, plugin integration, and interactive user interfaces, significantly accelerating the shift of GIS capabilities to the Web [13]. In this paper, we collectively refer to code built on these tools as “JavaScript-based Geospatial Code”, which executes core geospatial analysis and visualization tasks without requiring backend services. Such code is particularly suitable for small-to-medium-scale data processing, prototype development, and educational demonstrations. While JavaScript may fall short of Python in large-scale computation and deep learning integration, it offers distinct advantages in frontend spatial computing and method sharing due to its platform compatibility, low development barrier, and high reusability [14].

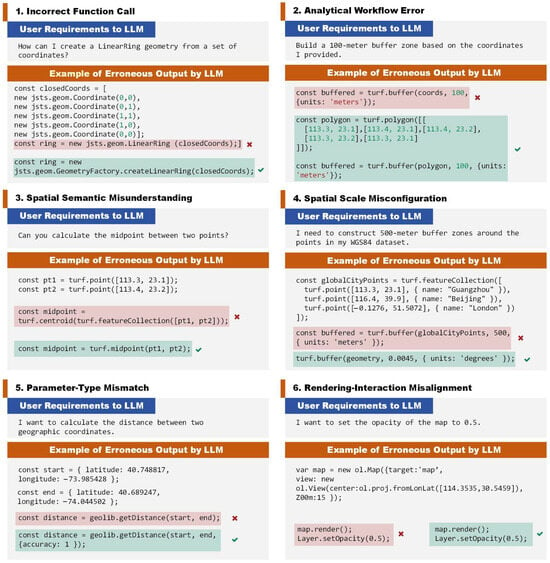

Authoring JavaScript-based geospatial code requires not only general programming skills but also domain-specific knowledge of spatial data types, core library APIs, spatial computation logic, and visualization techniques. This poses a significantly higher barrier to entry than conventional coding tasks [15]. As GIS increasingly converges with fields such as remote sensing, urban studies, and environmental science, a growing number of non-expert users are eager to engage in geospatial analysis and application development. However, many of them encounter substantial difficulties in modeling and implementation, highlighting the need for automated tools that lower learning and execution costs. In recent years, large language models (LLMs), trained on massive code corpora with Transformer-based architectures, have achieved remarkable progress in natural language-driven code generation. Models such as GPT-4o, DeepSeek, Claude, and LLaMA can translate natural language prompts directly into executable program code [16]. Code-specialized models such as DeepSeek Coder, Qwen2.5-Coder, and Code Llama have further improved accuracy and robustness, offering feasible solutions for automated code generation in geospatial tasks [17]. However, unlike general-purpose coding, geospatial code generation places elevated demands on semantic understanding, library function invocation, and structural organization, and these challenges are particularly pronounced in the JavaScript environment. In contrast to backend languages such as Python and Java, which are primarily used to build and manage server-side logic, the JavaScript ecosystem is characterized by fragmented function interfaces, inconsistent documentation standards, and strong dependence on specific browsers and operating systems. 
Moreover, it often requires the tight integration of map rendering, spatial computation, and user interaction logic, thereby imposing stricter demands on semantic precision and modular organization [18]. Current mainstream LLMs frequently exhibit hallucination issues when generating JavaScript-based geospatial code. These manifest as incorrect function calls, spatial semantic misunderstandings, flawed analytical workflows, misaligned rendering–interaction logic, parameter-type mismatches, and spatial scale misconfigurations (Figure 1), often resulting in erroneous or non-executable outputs.
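Coordinate axis order is a concrete example of a spatial-semantic error. GeoJSON (RFC 7946) stores positions as [longitude, latitude], whereas Leaflet's `L.latLng(lat, lng)` takes latitude first. Generated code that mixes the two conventions can still execute but produce wrong results. The helper below is a hypothetical range-based sanity check for illustration only. It is not part of GeoJSEval, and both the function name and the heuristic are assumptions.

```javascript
// Hypothetical helper (not from GeoJSEval): heuristically detects a GeoJSON
// Point whose coordinates were written as [lat, lng] instead of [lng, lat].
// RFC 7946 requires [longitude, latitude]; latitude must lie in [-90, 90].
function checkPointAxisOrder(point) {
  if (!point || point.type !== "Point" || !Array.isArray(point.coordinates)) {
    return { ok: false, reason: "not a GeoJSON Point" };
  }
  const [lng, lat] = point.coordinates;
  if (typeof lng !== "number" || typeof lat !== "number") {
    return { ok: false, reason: "coordinates must be numbers" };
  }
  if (Math.abs(lat) > 90 && Math.abs(lng) <= 90) {
    // The latitude slot is out of range, but the longitude slot would be a
    // valid latitude: the two values were most likely swapped.
    return { ok: false, reason: "axis order looks swapped ([lat, lng])" };
  }
  if (Math.abs(lat) > 90 || Math.abs(lng) > 180) {
    return { ok: false, reason: "coordinate out of range" };
  }
  return { ok: true, reason: "plausible [lng, lat]" };
}

// Beijing lies at roughly 116.4 E, 39.9 N.
console.log(checkPointAxisOrder({ type: "Point", coordinates: [116.4, 39.9] }));
console.log(checkPointAxisOrder({ type: "Point", coordinates: [39.9, 116.4] }));
```

The check can only catch swaps where one value exceeds the latitude range. For locations where both values lie within ±90 degrees, a swapped pair remains plausible. Such cases need output-level comparison against a reference answer instead.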

Figure 1.

Common error types in geospatial code generation with LLMs.

This raises a critical question: how well do current LLMs actually perform in generating JavaScript-based geospatial code? Without systematic evaluation, direct application of these models may result in code with structural flaws, logical inconsistencies, or execution failures—ultimately compromising the reliability of geospatial analyses. Although LLMs have demonstrated strong performance on general code benchmarks such as HumanEval and MBPP [19], these benchmarks overlook the inherent complexity of geospatial tasks and the unique characteristics of the JavaScript frontend ecosystem, making them insufficient for assessing real-world usability and stability in spatial contexts. Existing studies have made preliminary attempts to evaluate geospatial code generation, yet significant limitations remain. For instance, GeoCode-Bench and GeoCode-Eval proposed by Wuhan University [20], and the GeoSpatial-Code-LLMs Dataset developed by Wrocław University of Science and Technology, primarily focus on Python or GEE-based code, with limited attention to JavaScript-centric scenarios. Specifically, GeoCode-Bench and GeoCode-Eval rely on manual scoring, resulting in high evaluation costs, subjectivity, and limited reproducibility. While the GeoSpatial-Code-LLMs Dataset introduces automation to some extent, it suffers from a small sample size (approximately 40 examples) and lacks coverage of multimodal data structures such as vectors and rasters. Moreover, AutoGEEval and AutoGEEval++ have established evaluation pipelines for the GEE platform, yet they fail to address browser-based execution environments or the distinctive requirements of JavaScript frontend tasks [21,22]. Therefore, there is an urgent need to develop an automated, end-to-end evaluation pipeline for systematically assessing the performance of mainstream LLMs in JavaScript-based geospatial code generation. 
Such a framework is essential for identifying model limitations and appropriate application scopes, guiding future optimization and fine-tuning efforts, and laying the theoretical and technical foundation for building low-barrier, high-efficiency geospatial code generation tools.

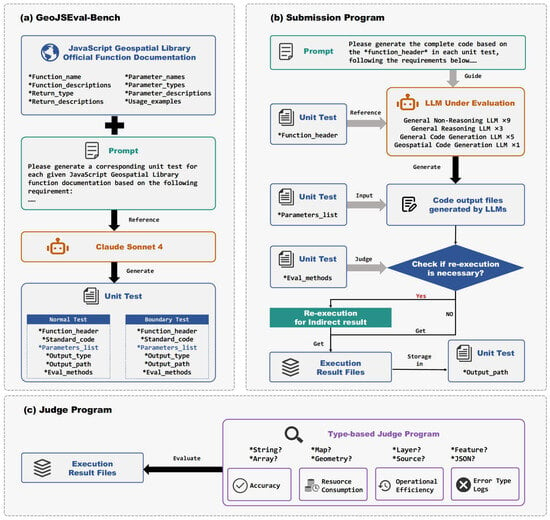

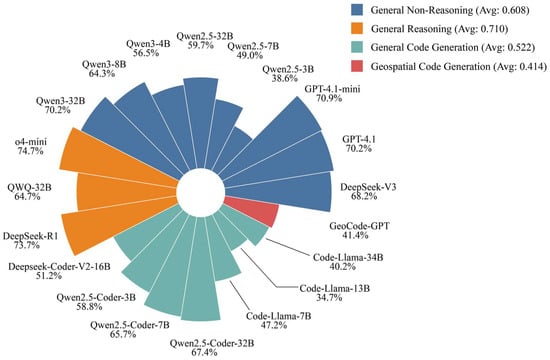

To address the aforementioned challenges, this study proposes and implements GeoJSEval, an LLM evaluation framework designed for unit-level assessment of function generation. GeoJSEval establishes an automated and structured evaluation system for JavaScript-based geospatial code. As illustrated in Figure 2, GeoJSEval comprises three core components: the GeoJSEval-Bench test suite, the Submission Program, and the Judge Program. The GeoJSEval-Bench module (Figure 2a) is constructed from the official documentation of five mainstream JavaScript geospatial libraries: Turf.js, JSTS, Geolib, Leaflet, and OpenLayers. The benchmark contains a total of 432 function-level tasks and 2071 structured test cases. All test cases were generated automatically using multi-round prompting strategies with Claude Sonnet 4 and subsequently validated by domain experts to ensure correctness and executability. Each test case encompasses three major components (function semantics, runtime configuration, and evaluation logic) and includes seven key metadata fields: function_header, reference_code, parameters_list, output_type, eval_methods, output_path, and expected_answer. The benchmark spans 25 data types supported across the five libraries, including geometric objects, map elements, numerical values, and boolean types, demonstrating high representativeness and diversity. These five libraries are widely adopted and well established in both academic research and industrial practice. Their collective functionality reflects the mainstream capabilities of the JavaScript ecosystem in geospatial computation and visualization, providing a sufficiently comprehensive foundation for rigorous benchmarking. The Submission Program (Figure 2b) uses the function header as the core prompt to guide the evaluated LLM in generating a complete function implementation. 
The system then injects predefined test parameters and executes the generated code, saving the output to a designated path for downstream evaluation. The Judge Program (Figure 2c) automatically selects the appropriate comparison strategy based on the output type, enabling systematic evaluation of code correctness and executability. GeoJSEval also integrates resource and performance monitoring modules to track token usage, response time, and code length, allowing the derivation of runtime metrics such as Inference Efficiency, Token Efficiency, and Code Efficiency. Additionally, the framework incorporates an error detection mechanism that automatically identifies common failure patterns—including syntax errors, type mismatches, attribute errors, and runtime exceptions—providing quantitative insights into model performance. In the experimental evaluation, we systematically assess a total of 20 state-of-the-art LLMs: 9 general non-reasoning models, 3 reasoning-augmented models, 5 code-centric models, and 1 geospatial-specialized model, GeoCode-GPT [20]. The results reveal significant performance disparities, structural limitations, and potential optimization directions in the ability of current LLMs to generate JavaScript geospatial code.
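To make the pipeline more concrete, the sketch below shows one possible shape of a test-case record built from the seven metadata fields named above. It also includes a minimal runner that executes a candidate implementation against `parameters_list` and sorts failures into the error categories mentioned above. Several details are assumptions and may differ from the actual Submission and Judge Programs:

- the field values and the `bboxCenter` function,
- in-process compilation via `new Function`,
- the message-matching rules used for classification.

A real harness would also isolate untrusted code rather than evaluate it in-process.

```javascript
// Hypothetical test-case record using the seven metadata fields named in the text.
// Values are illustrative; parameters_list holds one argument list per case.
const testCase = {
  function_header:
    "function bboxCenter(bbox) {} // bbox = [minX, minY, maxX, maxY]; returns [x, y]",
  reference_code:
    "function bboxCenter(b) { return [(b[0] + b[2]) / 2, (b[1] + b[3]) / 2]; }",
  parameters_list: [[[0, 0, 10, 10]]],
  output_type: "array",
  eval_methods: "exact",
  output_path: "outputs/bboxCenter_0.json",
  expected_answer: [5, 5],
};

// Maps a thrown JavaScript error to a coarse category (assumed mapping).
function classifyError(err) {
  if (err instanceof SyntaxError) return "SyntaxError";
  if (err instanceof TypeError) {
    // Calling a non-existent method or reading a property of undefined/null
    // surfaces as a TypeError in JavaScript; treat these as attribute errors.
    if (/is not a function|Cannot read propert/.test(err.message)) return "AttributeError";
    return "TypeMismatch";
  }
  return "RuntimeError";
}

// Compiles generated source and calls the named function once per argument list.
// WARNING: new Function runs code in-process with full access; it is not a sandbox.
function runCandidate(source, fnName, parametersList) {
  let fn;
  try {
    fn = new Function(`${source}\nreturn ${fnName};`)();
  } catch (err) {
    // A compilation failure (or a missing function name) fails every case.
    const kind = classifyError(err);
    return parametersList.map(() => ({ status: "error", kind }));
  }
  return parametersList.map((args) => {
    try {
      return { status: "ok", output: fn(...args) };
    } catch (err) {
      return { status: "error", kind: classifyError(err) };
    }
  });
}

// A correct candidate, a hallucinated method call, and a syntax error.
const candidates = [
  testCase.reference_code,
  "function bboxCenter(b) { return b.center(); }",
  "function bboxCenter(b) { return [b[0] + ; }",
];
for (const src of candidates) {
  console.log(runCandidate(src, "bboxCenter", testCase.parameters_list)[0]);
}
```

Keeping execution results and error categories in one record per case lets the same output feed both correctness scoring and the error-type distribution.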

Figure 2.

GeoJSEval framework structure. The diagram highlights GeoJSEval-Bench (a), the Submission Program (b), and the Judge Program (c).

The main contributions of this study [23] are summarized as follows:

- We propose GeoJSEval, the first automated evaluation framework for assessing LLMs in JavaScript-based geospatial code generation. The framework enables a fully automated, structured, and extensible end-to-end pipeline—from prompt input and code generation to execution, result comparison, and error diagnosis—addressing the current lack of standardized evaluation tools in this domain.

- We construct an open-source GeoJSEval-Bench, a comprehensive benchmark consisting of 432 function-level test tasks and 2071 parameterized cases, covering 25 types of JavaScript-based geospatial data. The benchmark systematically addresses core spatial analysis functions, enabling robust assessment of LLM performance in geospatial code generation.

- We conduct a systematic evaluation and comparative analysis of 20 representative LLMs across four model families. The evaluation quantifies performance across multiple dimensions—including execution pass rates, accuracy, resource consumption, and error type distribution—revealing critical bottlenecks in spatial semantic understanding and frontend ecosystem adaptation, and offering insights for future model optimization.

The remainder of this paper is organized as follows: Section 2 reviews related work on geospatial code, code generation tasks, and LLM-based code evaluation. Section 3 presents the task modeling, module design, construction method, and construction results of the GeoJSEval-Bench test suite. Section 4 details the overall evaluation pipeline of the GeoJSEval framework, including the implementation of the submission and judge programs. Section 5 describes the evaluated models, experimental setup, and the multidimensional evaluation metrics. Section 6 presents a systematic analysis and multidimensional comparison of the evaluation results. Section 7 concludes the study by summarizing key contributions, discussing limitations, and outlining future research directions.

2. Related Work

2.1. Geospatial Code

Geospatial code refers to the programmatic representation of logic for reading, processing, analyzing, and visualizing geospatial data. It plays a central role in enabling task automation and reusable modeling in modern Geographic Information Systems (GIS) [24]. Since the 1980s, geospatial code has evolved in several phases: from scripting embedded in standalone platforms, to cross-platform function libraries, and more recently to cloud computing and browser-based interactive environments. In the early stages, geospatial code was embedded within standalone GIS platforms such as Arc/INFO, MapInfo, and GRASS GIS. Users performed basic tasks, such as vector editing, raster reclassification, and buffer generation, via command-line interfaces or macro languages. These systems were platform-dependent and lacked portability and extensibility. Since the 2000s, driven by the rise of open-source ecosystems, GIS programming has shifted toward function library paradigms built on general-purpose languages such as Python, R, and Java. Toolchains such as GDAL/OGR, Shapely, PySAL, and PostGIS have enhanced standardization and cross-platform operability in geospatial analysis workflows [25]. In parallel, mainstream desktop GIS platforms introduced scripting APIs, such as ArcPy for ArcGIS and PyQGIS for QGIS, enabling users to build plugins and automated workflows for customizable spatial analysis [26]. Since the 2010s, the advent of cloud computing has driven the evolution of geospatial code toward service-oriented architectures. Platforms like GEE offer cloud-based processing of global-scale remote sensing and spatiotemporal data through JavaScript and Python APIs, enabling a “Code-as-a-Service” paradigm for geospatial modeling. With the proliferation of WebGIS applications, JavaScript has emerged as a key language due to its native browser execution and high interactivity. 
Frontend libraries such as Leaflet, OpenLayers, and Mapbox support map rendering and interactive control, while analytical libraries like Turf.js, JSTS, and Geolib enable buffer analysis, geometric operations, and spatial relationship evaluation. These tools collectively foster a transition from backend geospatial coding to a “computable web” architecture [27]. JavaScript’s lightweight nature, cross-platform compatibility, and client-side computing capabilities position it as a powerful medium for real-time visualization and interactive spatial analysis.

2.2. Code Generation

Code generation originated in the general-purpose programming domain and has evolved through three major phases: rule-based, data-driven, and LLM-based approaches. Since the 1980s, early code generation techniques primarily relied on manually defined rules and templates to automate code creation. For example, visual programming languages enabled users to generate code by dragging and dropping graphical modules. While suitable for educational or low-complexity tasks, such systems lacked the capacity to model semantic and logical dependencies, limiting their applicability in complex software development [28]. Beginning around 2010, the increasing availability of large-scale code datasets and advancements in deep learning enabled the emergence of data-driven code generation approaches. These methods learned from existing code samples to perform tasks such as structure completion and syntax correction. Systems like DeepCode, for instance, learned general coding patterns from open-source repositories to support code completion and provide security suggestions [29]. However, these approaches often relied on static feature extraction, exhibited limited generalization capabilities, and were heavily dependent on annotated training data. Since 2020, the advent of LLMs has ushered in a new era of code generation. CodeBERT introduced a dual-modality pretraining strategy that jointly learns from natural and programming languages, supporting tasks such as code completion and Natural Language to Code (NL2Code) generation [30]. Subsequently, models such as OpenAI Codex and PolyCoder leveraged large-scale natural language–code pair datasets to enable end-to-end automation from requirement understanding to multilingual code generation, thereby establishing the NL2Code paradigm. 
Models developed during this stage not only demonstrated strong language comprehension, but also began to exhibit emerging capabilities in code reasoning and task planning, leading to widespread deployment in real-world software development tools [31].

Compared to general-purpose code generation, research on geospatial code generation emerged relatively late. Early efforts primarily relied on platform-embedded template systems and rule-based engines. Examples include the parameterized scripting wizards in GEE, the ModelBuilder in Esri ArcGIS Pro, and subsequent AI-assisted suggestion tools, all of which focused on improving function call efficiency but lacked support for cross-platform generalization and natural language task interpretation. It was not until October 2024, with the publication of two seminal benchmark studies, that geospatial code generation was formally defined as an independent research task. These works extended the general NL2Code paradigm into NL2GeospatialCode, providing the theoretical foundation for this emerging field. Since then, the field has experienced rapid growth, with a proliferation of optimization strategies. For example, the Chain-of-Programming (CoP) strategy enables task decomposition via programming thought chains [32]; Geo-FuB and GEE-Ops construct functional semantic and API knowledge bases, which, when integrated with Retrieval-Augmented Generation (RAG), significantly enhance code generation accuracy [33,34]. GeoCode-GPT further advances the field by fine-tuning LLMs on geoscientific code corpora, making it the first domain-specialized LLM for geospatial code generation [20]. In addition, agent-based frameworks such as GeoAgent, GIS Copilot, and ShapefileGPT incorporate task planners, tool callers, and feedback loops to support end-to-end workflows from natural language understanding to geospatial code generation and autonomous execution—forming the preliminary blueprint of a “self-driving GIS” system [35]. Collectively, these advancements signify a shift in geospatial code generation from niche applications toward mainstream research in system modeling and model optimization.

Nevertheless, significant challenges remain, including strong platform dependency, complex function structures, heterogeneous data formats, and high semantic reasoning difficulty [36,37]. Furthermore, the absence of standardized evaluation protocols and reproducible testbeds limits the scalability of model development and impedes fair cross-model comparison. Therefore, establishing a comprehensive evaluation framework for geospatial code generation—one that supports multi-platform, cross-language, and task-complete assessments—remains a critical step toward advancing this field.

2.3. Evaluation of Code Generation

Evaluation frameworks for code generation tasks have undergone a continuous evolution from syntax validation to semantic understanding. Early approaches primarily focused on syntactic correctness, functional executability, and output consistency—typically evaluated by directly comparing the generated output against the expected results. For example, Sutherland’s 1988 theory on code generation correctness emphasized syntactic precision and logical consistency, yet lacked consideration for multidimensional aspects of code quality [38]. Since 2010, with the emergence of large-scale annotated datasets and automated verification systems, evaluation has progressively shifted toward a data-driven paradigm. For instance, Google conducted comprehensive evaluations of Python code generation within neural machine translation frameworks, covering aspects such as functional correctness, execution efficiency, and structural soundness—substantially enhancing both accuracy and applicability of evaluation. Since 2020, the advancement of LLMs has led to the standardization of evaluation benchmarks. Datasets such as HumanEval, MBPP, and APPS are now widely adopted to assess code executability, semantic fidelity, and task generalization [39]. Evaluation protocols proposed by OpenAI further broaden the scope by incorporating deep understanding of complex code structures and logical reasoning, with a particular emphasis on models’ capabilities in semantic comprehension and intent recognition [40].

Compared to general-purpose code, evaluating geospatial code generation presents substantially more complex challenges, primarily due to the difficulty of handling multimodal heterogeneous data and the strong dependency on specific platforms. Geospatial data spans various formats, including vector and raster types. However, platforms differ significantly in terms of data structures, access mechanisms, and operator invocation logic, often resulting in semantic inconsistencies when generated code is executed across different environments. Against this backdrop, evaluating geospatial code in the JavaScript environment introduces additional dimensions of complexity. In recent years, JavaScript has been widely adopted in geospatial visualization and analysis scenarios, owing to its native browser execution and high interactivity. Browser-executable geospatial code is characterized by lightweight design, asynchronous execution, and tightly coupled logic. Evaluating such code requires not only verifying syntactic and logical correctness, but also assessing spatial semantic comprehension, API interface alignment, browser runtime behavior, and interaction fidelity. However, JavaScript-based geospatial code remains largely unaddressed in mainstream code evaluation benchmarks, and lacks dedicated, systematic assessment frameworks. Some preliminary efforts have been made to evaluate geospatial code generation—for example, Wuhan University proposed GeoCode-Bench and GeoCode-Eval—but these rely heavily on manual expert review, leading to issues of subjectivity and poor reproducibility. The University of Wisconsin conducted a preliminary evaluation of GPT-4 for ArcPy code generation, yet the associated data and methodology were not publicly released, and the scope of task complexity and platform diversity was limited. AutoGEEval and its extension AutoGEEval++ introduced automated evaluation pipelines for the GEE platform, improving systematization and execution validation. 
Nevertheless, their support for JavaScript frontend ecosystems and diverse task types remains limited [21,22].
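Browser-oriented geospatial code is often asynchronous, for example in tile loading, event callbacks, and fetch-based data access. An execution harness therefore typically has to await a result and bound its running time. The sketch below assumes the candidate may return either a plain value or a Promise. The helper name and the 2-second default are illustrative assumptions, not GeoJSEval parameters.

```javascript
// Hypothetical helper: runs a possibly-async candidate and rejects if it
// does not settle within timeoutMs.
function runWithTimeout(candidate, args, timeoutMs = 2000) {
  let timer;
  const timeout = new Promise((_, reject) => {
    timer = setTimeout(() => reject(new Error(`Timed out after ${timeoutMs} ms`)), timeoutMs);
  });
  // Promise.resolve().then(...) converts synchronous throws into rejections,
  // so synchronous and asynchronous candidates share one code path.
  const run = Promise.resolve().then(() => candidate(...args));
  // Whichever settles first wins; the timer is always cleared afterwards.
  return Promise.race([run, timeout]).finally(() => clearTimeout(timer));
}

// Usage: an async candidate that resolves quickly, and one that never settles.
const quick = async (a, b) => a + b;
const hangs = () => new Promise(() => {});

runWithTimeout(quick, [2, 3]).then((v) => console.log("result:", v)); // result: 5
runWithTimeout(hangs, [], 50).catch((e) => console.log(e.message)); // Timed out after 50 ms
```

A timeout only stops waiting; it does not cancel the candidate. A synchronous infinite loop would also block the timer itself, so a production harness would run candidates in a separate worker or process.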

3. GeoJSEval-Bench

This study presents GeoJSEval-Bench, a standardized benchmark suite for unit-level evaluation of geospatial function calls, designed to assess the capability of LLMs to generate JavaScript-based geospatial code. The benchmark is constructed from the official API documentation of five representative open-source JavaScript geospatial libraries (Turf.js, JSTS, Geolib, Leaflet, and OpenLayers), covering core task modules ranging from spatial computation to map visualization. These libraries fall broadly into two functional groups. The first group focuses on spatial analysis and geometric computation. Turf.js offers essential spatial analysis functions such as buffering, union, clipping, and distance calculation, covering core geoprocessing operations in frontend environments. JSTS specializes in topologically correct geometric operations, supporting high-precision analysis of complex geometries. Geolib focuses on point-level operations in latitude–longitude space, providing precise distance calculation, azimuth determination, and coordinate transformation; it is particularly valuable in location-based services and trajectory analysis. The second group comprises libraries for map visualization and user interaction. Leaflet, a lightweight web mapping framework, offers core APIs for map loading, tile layer control, event handling, and marker rendering, and is widely used to develop small to medium-scale interactive web maps. In contrast, OpenLayers provides more comprehensive functionality, including support for various map service protocols, coordinate projection transformations, vector styling, and customizable interaction logic, making it a key tool for building advanced WebGIS clients. Based on the function systems of these libraries, GeoJSEval-Bench contains 432 unit-level tasks with 2071 test cases, spanning 25 data types that include map objects, layer objects, strings, and numerical values. 
This section provides a detailed description of the unit-level task modeling, module design, construction methodology, and final composition of the benchmark.
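Geolib-style point operations reduce to closed-form spherical formulas. The sketch below implements the haversine great-circle distance and the initial bearing (forward azimuth) on a sphere. It is an independent illustration, not Geolib's source code. The mean Earth radius of 6,371,008.8 m is an assumption: libraries differ in the radius they use, and higher-accuracy tools use ellipsoidal formulas instead.

```javascript
const EARTH_RADIUS_M = 6371008.8; // mean Earth radius (assumption; libraries differ)
const toRad = (deg) => (deg * Math.PI) / 180;
const toDeg = (rad) => (rad * 180) / Math.PI;

// Great-circle distance in metres between {lat, lng} points (haversine formula).
function haversineDistance(p1, p2) {
  const dLat = toRad(p2.lat - p1.lat);
  const dLng = toRad(p2.lng - p1.lng);
  const a =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(p1.lat)) * Math.cos(toRad(p2.lat)) * Math.sin(dLng / 2) ** 2;
  // Clamp to 1 to guard against rounding slightly above 1 for antipodal points.
  return 2 * EARTH_RADIUS_M * Math.asin(Math.min(1, Math.sqrt(a)));
}

// Initial bearing from p1 to p2 in degrees clockwise from north, in [0, 360).
function initialBearing(p1, p2) {
  const phi1 = toRad(p1.lat);
  const phi2 = toRad(p2.lat);
  const dLng = toRad(p2.lng - p1.lng);
  const y = Math.sin(dLng) * Math.cos(phi2);
  const x = Math.cos(phi1) * Math.sin(phi2) - Math.sin(phi1) * Math.cos(phi2) * Math.cos(dLng);
  return (toDeg(Math.atan2(y, x)) + 360) % 360;
}

// One degree of latitude along a meridian is about 111.2 km.
console.log(haversineDistance({ lat: 0, lng: 0 }, { lat: 1, lng: 0 })); // ≈ 111195
console.log(initialBearing({ lat: 0, lng: 0 }, { lat: 1, lng: 0 })); // 0 (due north)
```

Reference outputs of this kind are floating-point values. This is one reason function-level judging needs a tolerance-based comparison rather than strict equality.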

3.1. Task Modeling

The unit-level testing task proposed in GeoJSEval is designed to perform fine-grained evaluation of LLMs in terms of their ability to understand and generate calls for each API function defined in the five representative JavaScript geospatial libraries. This includes assessing their comprehension of function semantics, parameter structure, and input-output specifications. Specifically, each task evaluates whether a model, given a known function header, can accurately reconstruct the intended call semantics, generate a valid parameter structure, and synthesize a syntactically correct, semantically aligned, and executable JavaScript code snippet that effectively invokes the target function. This process simulates a typical developer workflow (reading API documentation and writing invocation code) and comprehensively assesses the model’s capability chain from function comprehension through code construction to behavioral execution. Fundamentally, this task examines the model’s code generation ability at the finest operational granularity, a single function call, serving as a foundational measure of an LLM’s capacity for function-level behavioral modeling.

Let the set of functions provided in the official documentation of the five mainstream JavaScript geospatial libraries be denoted as:

$$F = \{f_1, f_2, \ldots, f_N\}$$

For each model under evaluation, the task is to generate, for every function $f_i \in F$, a code snippet $c_i$ that conforms to JavaScript syntax specifications and can be successfully executed in a real runtime environment:

$$c_i = M(h_i), \quad \forall f_i \in F$$

GeoJSEval formalizes each function-level test task as the following function mapping process:

$$h_i \xrightarrow{\;M\;} c_i, \qquad y_i = \mathrm{Exec}(c_i, x_i), \qquad \mathrm{Judge}(y_i, y_i^{*}) \in \{0, 1\}$$

The symbols in this mapping are defined as follows:

- $h_i$ denotes the function header, including the function name and parameter signature.
- $M$ refers to the large language model, which takes $h_i$ as input and produces a function call code snippet $c_i$.
- $f_i$ represents the target test function, whose test inputs are $x_i$.
- $y_i$ denotes the execution result of the generated code $c_i$ applied to $x_i$.
- $y_i^{*}$ denotes the expected reference output.
- Exec represents the code execution engine.
- Judge is the evaluation module that determines whether $y_i$ and $y_i^{*}$ are consistent.

Furthermore, GeoJSEval defines a task consistency function that assesses whether the model behavior aligns with expectations over a test set $D$:

$$\mathrm{Consistent}(M, D) \iff \forall (h_i, x_i, y_i^{*}) \in D:\; \mathrm{Exec}(M(h_i), x_i) = y_i^{*}$$

The equality operator “=” in this comparison may represent strict equivalence, floating-point tolerance, or set-based inclusion, depending on the semantic consistency requirements of the task.
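The mapping from header to judgment can be illustrated with a small JavaScript harness. This is a minimal sketch under stated assumptions, not the GeoJSEval engine:

- the LLM is replaced by stub functions that return a function body;
- `exec` compiles that body with the standard `Function` constructor;
- `judge` uses strict equality of JSON serializations.

The names `exec`, `judge`, `runTask`, `isConsistent`, and the task fields are hypothetical.

```javascript
// Hypothetical sketch: h_i -> c_i = M(h_i) -> y_i = Exec(c_i, x_i) -> Judge(y_i, y_i*).

// Exec: compile a generated function body and call it with the task inputs x_i.
function exec(paramNames, body, args) {
  const fn = new Function(...paramNames, body); // standard Function constructor
  return fn(...args);
}

// Judge: strict equivalence via JSON serialization (a simplification; real
// tasks may need tolerance- or set-based comparison instead).
function judge(output, expected) {
  return JSON.stringify(output) === JSON.stringify(expected);
}

// One task: the model sees only the header h_i and returns a function body c_i.
function runTask(model, task) {
  const body = model(task.header);
  try {
    return judge(exec(task.header.params, body, task.args), task.expected);
  } catch {
    return false; // any execution error counts as inconsistent
  }
}

// Consistent(M, D): true only if every task in the test set passes.
function isConsistent(model, testSet) {
  return testSet.every((task) => runTask(model, task));
}

// Illustrative task: planar average of two [x, y] pairs (not a geodesic midpoint).
const testSet = [
  {
    header: { name: "planarMidpoint", params: ["a", "b"], description: "Average two [x, y] pairs" },
    args: [[0, 0], [10, 20]],
    expected: [5, 10],
  },
];

// Stub "models" standing in for an LLM.
const goodModel = (_header) => "return [(a[0] + b[0]) / 2, (a[1] + b[1]) / 2];";
const badModel = (_header) => "return a.midpoint(b);"; // calls a non-existent method

console.log(isConsistent(goodModel, testSet)); // true
console.log(isConsistent(badModel, testSet)); // false
```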

3.2. Module Design

All unit-level test cases were generated based on reference data using Claude Sonnet 4, an advanced large language model developed by Anthropic, guided by predefined prompts. Each test case was manually reviewed and validated by domain experts to ensure correctness and executability (see Section 3.3 for details). Each complete test case is structured into three distinct modules: the Function Semantics Module, the Execution Configuration Module, and the Evaluation Parsing Module.

3.2.1. Function Semantics Module

In the task of automatic geospatial function generation, accurately understanding the invocation semantics and target behavior of a function is fundamental to enabling the model to generate executable code correctly. Serving as the semantic entry point of the entire evaluation pipeline, the Function Semantics Module aims to clarify two key aspects: (i) what the target function is intended to accomplish, and (ii) how it should be correctly implemented. This module comprises two components: the function header, which provides the semantic constraints for input, and the standard_code, which serves as the behavioral reference for correct implementation.

- The function header $h_i$ (the function declaration) provides the structured input for each test task. It includes the function name, parameter types, and semantic descriptions. This information is combined with prompt templates to give the large language model semantic guidance, steering it to generate the complete function body. The structure of $h_i$ is formally defined as:

$$h_i = \langle \mathit{name},\; P = (p_1, \ldots, p_m),\; \{t_j\}_{j=1}^{m},\; \mathit{desc} \rangle$$

Here, $\mathit{name}$ denotes the function name; $P$ represents the list of input parameters; $t_j$ specifies the type information for each parameter $p_j$; and $\mathit{desc}$ provides a natural language description of the function’s purpose and usage scenario.

- The reference code snippet $s_i$ (standard_code) provides the correct implementation of the target function under standard semantics. It is generated by Claude Sonnet 4 using a predefined prompt and is executed by domain experts to produce the ground-truth output. Its behavior must be stable and reproducible: for every valid input, repeated executions of $s_i$ return the same output, that is:

$$y_i^{*} = \mathrm{Exec}(s_i, x_i)$$

The resulting output $y_i^{*}$ serves as the reference answer in the subsequent Evaluation Parsing Module. This reference implementation is completely hidden from the model during evaluation. The model generates the test function body solely from the function header $h_i$, without access to $s_i$.
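A Function Semantics Module entry might be represented as a plain object, with the model-facing prompt built from the header alone so that standard_code never leaks into the prompt. The following points are assumptions:

- the object layout other than the standard_code field;
- the helper `buildPrompt`;
- the prompt wording.

The reference body calls Geolib’s `getDistance`, which returns a distance in metres.

```javascript
// Hypothetical Function Semantics Module entry; only standard_code is a field
// name taken from the text, the header layout is an assumption.
const semanticsEntry = {
  function_header: {
    name: "getDistance",
    params: [
      { name: "from", type: "{latitude: number, longitude: number}" },
      { name: "to", type: "{latitude: number, longitude: number}" },
    ],
    description: "Return the distance in metres between two points.",
  },
  // Reference body: executed offline to obtain expected_answer and never
  // included in the prompt shown to the model under evaluation.
  standard_code: "return geolib.getDistance(from, to);",
};

// Build the model-facing prompt from the header only.
function buildPrompt(entry) {
  const h = entry.function_header;
  const signature = `function ${h.name}(${h.params.map((p) => p.name).join(", ")})`;
  const paramDocs = h.params.map((p) => ` * @param {${p.type}} ${p.name}`).join("\n");
  return [
    "Write only the body of the following JavaScript function.",
    "/**",
    ` * ${h.description}`,
    paramDocs,
    " */",
    signature,
  ].join("\n");
}

const prompt = buildPrompt(semanticsEntry);
console.log(prompt);
```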

3.2.2. Execution Configuration Module

GeoJSEval incorporates an Execution Configuration Module into each test case, which explicitly defines all prerequisites necessary for execution in a structured manner. This includes parameters_list, output_type, edge_test, and output_path. Together, these elements ensure the completeness and reproducibility of the test process.

- The parameter list (parameters_list) explicitly specifies the concrete input values required for each test invocation. These structured parameters are injected as fixed inputs into both the reference implementation and the model-generated code. This yields a complete, executable function invocation that produces the output results.

- The output type (output_type) specifies the expected return type of the function and constrains the format of the model-generated output. After executing the model-generated code and obtaining its output, the system first verifies whether the return type matches the expected output_type. If a type mismatch is detected, the downstream consistency evaluation is skipped to avoid false judgments or error propagation.

- The edge test item indicates whether the boundary testing mechanism is enabled for this test case. If the value is not empty, the system will automatically generate a set of extreme or special boundary parameters based on the standard input values and inject them into the test process. This is done to assess the model’s robustness and generalization ability under extreme input conditions. The edge test set encompasses the following categories of boundary conditions: (1) Numerical boundaries, where input values approach their maximum or minimum limits, potentially introducing floating-point errors during computation. (2) Geometric-type boundaries, where the geometry of input objects reaches extreme cases, such as empty geometries or polygonal data degenerated into a single line. (3) Parameter-validity boundaries, where input parameters exceed predefined ranges—for instance, longitude values beyond the valid range of −180° to 180° are regarded as invalid.

- The output path specifies the storage location of the output results produced by the model during execution. The testing system will read the external result file produced by the model from the specified path.
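Under stated assumptions, the four configuration fields might drive two of the checks described above:

- a return-type pre-check that gates the consistency judgment;
- a generator of boundary inputs for the three edge-test categories.

The helpers `typeTag`, `passesTypeCheck`, `edgeCases`, and `isValidLonLat`, as well as the field values, are hypothetical.

```javascript
// Hypothetical Execution Configuration entry: field names follow the text,
// values are illustrative only.
const execConfig = {
  parameters_list: { point: [116.39, 39.9] },
  output_type: "Array",
  edge_test: "longitude",
  output_path: "./results/example.json",
};

// Coarse runtime type tag used for the output_type pre-check.
function typeTag(value) {
  if (Array.isArray(value)) return "Array";
  if (value === null) return "Null";
  if (typeof value === "object" && typeof value.type === "string") {
    return value.type; // GeoJSON-like objects report e.g. "Feature"
  }
  const t = typeof value;
  return t.charAt(0).toUpperCase() + t.slice(1); // "Number", "String", "Boolean", "Object"
}

// The consistency judgment is skipped when this returns false.
function passesTypeCheck(output, expectedType) {
  return typeTag(output) === expectedType;
}

// Illustrative boundary inputs for the three categories in the text.
function edgeCases([lon, lat]) {
  return [
    { kind: "numeric", point: [180, 90] },          // at the valid limits
    { kind: "numeric", point: [lon + 1e-12, lat] }, // tiny floating-point perturbation
    { kind: "validity", point: [181, lat] },        // longitude above 180°
    { kind: "validity", point: [-180.5, lat] },     // longitude below -180°
    { kind: "geometry", geometry: { type: "Polygon", coordinates: [] } }, // empty geometry
    {
      kind: "geometry", // polygon degenerated into a line (collinear ring)
      geometry: { type: "Polygon", coordinates: [[[0, 0], [1, 0], [2, 0], [0, 0]]] },
    },
  ];
}

function isValidLonLat([lon, lat]) {
  return lon >= -180 && lon <= 180 && lat >= -90 && lat <= 90;
}

const cases = edgeCases(execConfig.parameters_list.point);
const invalid = cases.filter((c) => c.point && !isValidLonLat(c.point));
console.log(invalid.length); // 2
console.log(passesTypeCheck([116.39, 39.9], execConfig.output_type)); // true
console.log(passesTypeCheck("116.39,39.9", execConfig.output_type)); // false
```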

3.2.3. Evaluation Parsing Module

The Evaluation Parsing Module defines the decision markers that the testing framework uses during the evaluation phase. It specifies three things:

- whether the results of the model-generated code are directly comparable;
- whether structural information needs to be extracted;
- whether auxiliary functions are required for comparison.

This module serves as the input to the judging logic and consists of two components, expected_answer and eval_methods. Its core responsibility is to provide a structured, automated mechanism for annotating evaluability.

- The expected answer (expected_answer) is the correct result obtained by executing the reference code snippet with the predefined parameters. It is the sole benchmark for comparison during the evaluation phase and is used to assess the accuracy of the model’s output. Its generation process is described in Section 3.2.1 (Function Semantics Module).

- The evaluation method (eval_methods) indicates whether the function output can be evaluated directly and, if not, which auxiliary function(s) must be invoked. Two cases are distinguished:
  - Direct evaluation applies when the function output is a numerical value, boolean, text, or a parseable data structure (e.g., JSON, GeoJSON) that can be compared as is. For such functions, eval_methods is set to null, indicating that no further processing is required.
  - Indirect evaluation applies when the function output is a complex object (e.g., Map, Layer, Source), which requires specific methods from the external libraries to extract relevant features. In this case, eval_methods explicitly lists the auxiliary functions required for feature extraction.

  Based on each function’s role and return type, we select auxiliary functions from the platform’s official API that can extract indirect semantic features of complex objects (e.g., map center coordinates, layer visibility, feature count in a data source). This enables accurate comparison under structural consistency and semantic alignment. We construct an auxiliary function library for three typical complex objects in geospatial visualization platforms (Map, Layer, and Source), as shown in Table 1. Depending on the complexity of a function’s output structure, either a single auxiliary method or a combination of methods may be used to ensure accurate high-level semantic evaluation.
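The direct/indirect split can be sketched as a single dispatch on eval_methods. To keep the example self-contained, it uses a hypothetical `MockMap` rather than a real map object. In Leaflet, `L.Map` does expose `getCenter()` and `getZoom()`; in OpenLayers the center is read through `map.getView().getCenter()`. The helper `toComparable` and the `getLayerCount` method are illustrative assumptions.

```javascript
// Minimal stand-in for a map object; it is not Leaflet or OpenLayers.
class MockMap {
  constructor(center, zoom, layers) {
    this.center = center;
    this.zoom = zoom;
    this.layers = layers;
  }
  getCenter() { return this.center; }
  getZoom() { return this.zoom; }
  getLayerCount() { return this.layers.length; } // hypothetical helper, not a real API
}

// Reduce an output to a comparable value according to eval_methods.
function toComparable(output, evalMethods) {
  if (evalMethods === null) return output; // direct evaluation: compare as is
  const features = {};
  for (const method of evalMethods) {
    if (typeof output[method] !== "function") {
      throw new TypeError(`output has no auxiliary method ${method}()`);
    }
    features[method] = output[method](); // indirect evaluation: extract a feature
  }
  return features;
}

const map = new MockMap([39.9, 116.39], 10, ["basemap", "points"]);
console.log(toComparable(42, null)); // 42
console.log(toComparable(map, ["getCenter", "getZoom", "getLayerCount"]));
```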

Table 1. Auxiliary Evaluation Methods for Indirect Output Objects.


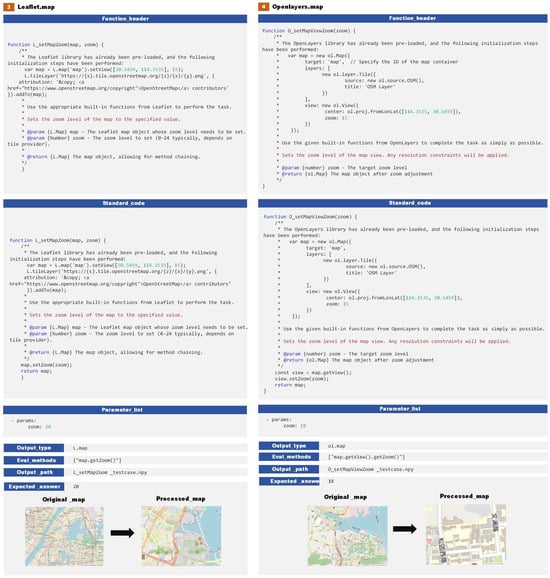

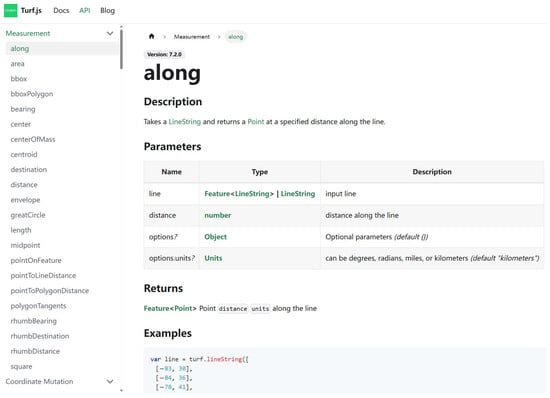

3.3. Construction Method

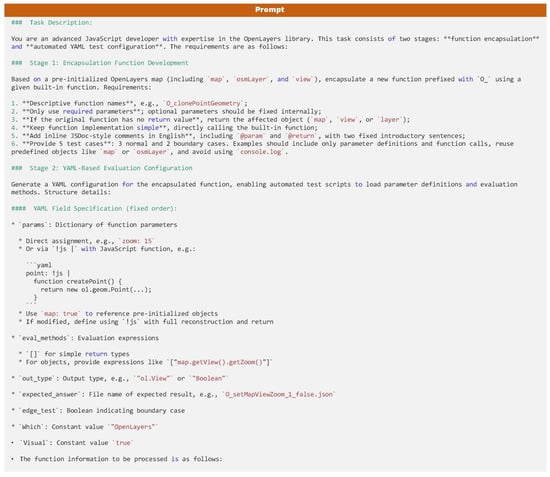

Following the task modeling principles and modular design described above, we constructed GeoJSEval-Bench, a clearly structured and automatically executable unit test benchmark for the systematic evaluation of LLMs in geospatial function generation tasks. The benchmark is based on the official API documentation of five prominent JavaScript geospatial libraries (Turf.js, JSTS, Geolib, Leaflet, and OpenLayers) and covers a total of 432 function interfaces.

Each function page provides key information, including the function name, functional description, parameter list with type constraints, return value type, and semantic explanations. Some functions come with invocation examples. Others lack examples, so their call structure must be inferred from the available metadata. Figure A3 illustrates a typical function documentation page.

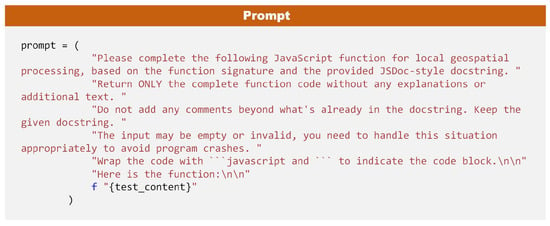

During construction, the research team first conducted a structured extraction of the API documentation for the five target libraries, standardizing the content of each function page into a unified JSON format. Based on this structured representation, we designed dedicated prompt templates for each function. These templates guide the large language model in reading the semantic structure and generating standardized test tasks. The template design accounts for differences across libraries in function naming conventions, parameter styles, and invocation semantics. Figure 3 presents a sample prompt for a function from the OpenLayers library.

Each automatically generated test task was subjected to a strict manual review conducted by five experts with geospatial development experience and proficiency in JavaScript. The review checked four aspects:

- whether the task objectives were reasonable;
- whether the descriptions were clear;
- whether the parameters were standardized;
- whether the code executed stably and returned logically correct results.

For tasks exhibiting runtime errors, semantic ambiguity, or structural irregularities, the experts applied minor revisions based on professional judgment. For example, in a test task generated for the buffer function in the Turf.js library, the return value was described as “Return the buffered region.” This description was insufficiently precise because it did not specify the return data type. The experts revised it to “Return the buffered FeatureCollection | Feature<Polygon | MultiPolygon>”. This clarifies that the output is either a FeatureCollection or a Feature whose geometry is a Polygon or MultiPolygon. The background information and selection criteria for the expert team are detailed in Table A1.

For every task that passes the review and executes successfully, the standard output is saved to the specified output_path as the reference answer. During the testing phase, the judging program automatically reads the reference results from this path and compares them with the model-generated output as the basis for evaluating accuracy.
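The first construction step turns a documentation page into a unified JSON record and fills a prompt template from it. It might look like the sketch below. The record schema and the template text are assumptions and are not the prompt shown in Figure 3. The parameter and return types mirror the Turf.js buffer documentation discussed in this section.

```javascript
// Hypothetical unified JSON record for one documentation page (Turf.js buffer).
const docRecord = {
  library: "Turf.js",
  name: "buffer",
  description: "Buffer input features by a given radius.",
  params: [
    { name: "geojson", type: "FeatureCollection|Geometry|Feature<any>" },
    { name: "radius", type: "number" },
    { name: "options", type: "{units?: string, steps?: number}", optional: true },
  ],
  returns: "FeatureCollection|Feature<Polygon|MultiPolygon>",
  example: null, // some pages ship no invocation example
};

// Fill an illustrative construction-prompt template (not the prompt in Figure 3).
function constructionPrompt(rec) {
  const params = rec.params
    .map((p) => `- ${p.name}${p.optional ? " (optional)" : ""}: ${p.type}`)
    .join("\n");
  const example = rec.example
    ? `Example:\n${rec.example}`
    : "Example: none; infer the call structure from the metadata above.";
  return [
    `Library: ${rec.library}`,
    `Function: ${rec.name}`,
    `Description: ${rec.description}`,
    `Parameters:\n${params}`,
    `Returns: ${rec.returns}`,
    example,
    "Generate one test task with the fields function_header, standard_code,",
    "parameters_list, output_type, edge_test, output_path and eval_methods.",
  ].join("\n");
}

console.log(constructionPrompt(docRecord));
```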

Figure 3.

Prompt for test construction.

3.4. Construction Results

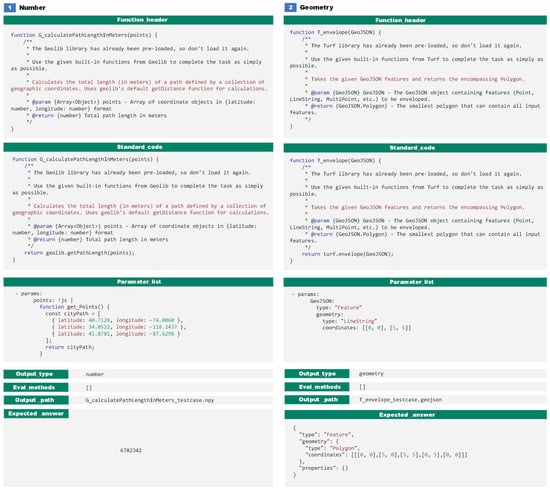

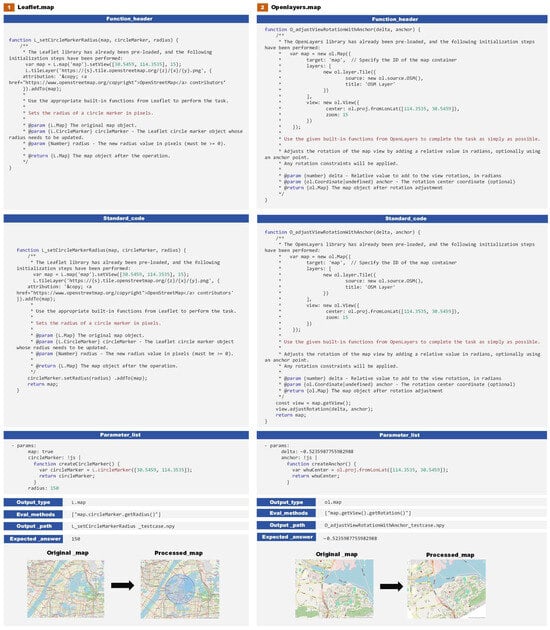

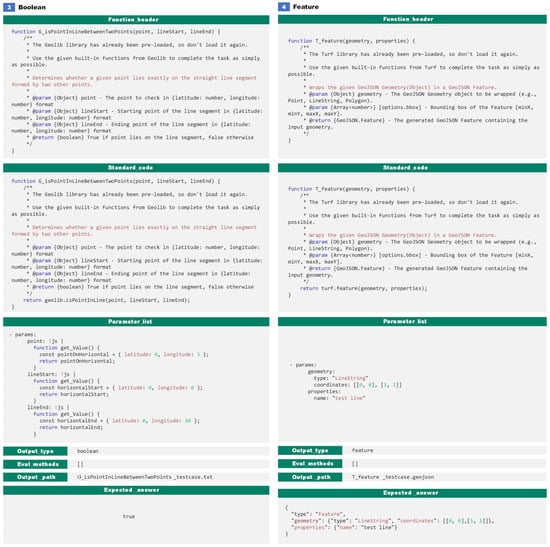

GeoJSEval-Bench ultimately comprises 432 primary function-level test tasks, covering all well-defined and valid functions across the five target libraries. Building on these tasks and varying the input parameters and invocation path configurations, we derived 2071 structured unit test cases that systematically cover diverse use cases. The test tasks span a wide variety of data types that reflect common data structures in geospatial development practice; a total of 25 types are covered, as listed in Table 2.

From an evaluability perspective, these data types fall into two groups:

- Basic types with clear structures and stable semantics, such as numeric variables, strings, arrays, and standard GeoJSON. Such data can be parsed directly into structured results and are suitable for direct comparison.
- More complex encapsulated object types, such as Map and Layer objects in Leaflet or OpenLayers. Their internal state cannot be accessed directly, so auxiliary methods are required to extract semantic information and align the structure.

To illustrate these types and their evaluation strategies, Figure 4 shows a direct evaluation test case. Figure 5 shows a test case for a complex object type that requires indirect structural extraction. Additional detailed examples are provided in Appendix A.

Table 2.

Output Types in GeoJSEval-Bench.

Figure 4.

Unit Test Example for Direct Evaluation of Primitive Output Types.

Figure 5.

Unit Test Example for Indirect Evaluation of Complex Geospatial Objects.

4. Submission and Judging Programs

During the evaluation phase, the GeoJSEval framework builds an automated evaluation system from two cooperating components, the Submission Program and the Judge Program. Together they cover the entire process from code generation to result determination. The Submission Program schedules the large language model to generate function call code for each task in the GeoJSEval-Bench test suite and then executes that code. The Judge Program systematically evaluates the model’s output by comparing it with the reference answer, applying different equivalence determination strategies according to output type and semantic features. This chapter outlines the core design and operational flow of both programs.

4.1. Submission Program

The Submission Program schedules the evaluation of each GeoJSEval-Bench test task by the large language model. As shown in Figure 2b, the overall process includes four key modules: code generation, evaluability determination, code execution, and result storage. During the generation phase, the system uses the structured function header provided by the Function Semantics Module as the sole input. It combines this header with predefined prompt templates to guide the model in generating the target function body. The prompt explicitly instructs the model to output only the JavaScript function body, without any additional text or explanations (as shown in Figure 6). As a result, the response can be executed directly without interference from unstructured content.

Figure 6.

Prompt for submission program.

After the model generates the code, the system injects input values into the function call according to the parameters_list defined in the Execution Configuration Module. The structured parameter values are mapped to the formal parameter positions in the model-generated JavaScript code to construct a complete, executable function call expression. This ensures that the generated code has all the contextual information it needs to run in a uniform environment.

The system then consults the eval_methods field of the Evaluation Parsing Module to determine how the output should be stored and whether it needs an auxiliary processing path:

- If eval_methods is empty, the function output is a structured basic type (e.g., numeric, boolean, array, GeoJSON) that can be obtained directly through code execution. The result is saved to the specified output_path.
- If eval_methods specifies auxiliary function calls (e.g., map.getCenter(), layer.getOpacity()), the system automatically appends the auxiliary function logic after the main function call. The outputs of these auxiliary functions are then stored for evaluation.
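The submission steps just described can be sketched in a few lines, under three assumptions:

- the generated body is compiled with the `Function` constructor;
- parameters_list is an object keyed by formal parameter name;
- results are written as JSON.

The helpers `executeSubmission` and `submit` and the field `paramNames` are hypothetical. The real engine also runs browser-side code for Map and Layer objects, which this Node.js sketch does not attempt.

```javascript
const fs = require("fs");
const os = require("os");
const path = require("path");

// Map parameters_list values onto the formal parameter positions and call the body.
function executeSubmission(body, paramNames, parametersList) {
  const fn = new Function(...paramNames, body);
  const args = paramNames.map((name) => parametersList[name]);
  return fn(...args);
}

// Run one task and store the (possibly auxiliary-reduced) output at output_path.
function submit(task, body) {
  let result = executeSubmission(body, task.paramNames, task.parameters_list);
  if (Array.isArray(task.eval_methods) && task.eval_methods.length > 0) {
    // Indirect path: append auxiliary calls and keep only the extracted features.
    const features = {};
    for (const m of task.eval_methods) features[m] = result[m]();
    result = features;
  }
  fs.mkdirSync(path.dirname(task.output_path), { recursive: true });
  fs.writeFileSync(task.output_path, JSON.stringify(result));
  return task.output_path;
}

// Direct-evaluation example with a hypothetical task and a trivial body.
const demoTask = {
  paramNames: ["a", "b"],
  parameters_list: { a: 2, b: 3 },
  eval_methods: null,
  output_path: path.join(os.tmpdir(), "geojseval-demo", "sum.json"),
};
const savedTo = submit(demoTask, "return a + b;");
console.log(JSON.parse(fs.readFileSync(savedTo, "utf8"))); // 5
```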

4.2. Judging Program

The overall process of the judging module is shown in Figure 2c and consists of three stages: result reading, output parsing, and equivalence determination. Its core task is to read the result file (specified by output_path) and select, based on output_type, the appropriate comparison logic for evaluating the consistency between the model output and expected_answer.

The 25 types covered by GeoJSEval-Bench range from basic data structures to spatial geometric objects (as detailed in Table 2). In practice, however, results often overlap at both the value and the structural level, even though the configuration module specifies a clear output type for each function. For instance:

- Boolean and String values both appear as atomic strings.
- Functions such as geolib.coordinates and geolib.center output nested JSON objects whose field structures differ but whose semantics are similar.
- Spatial functions such as turf.buffer, jsts.union, and turf.difference return values in different forms. All of them essentially represent GeoJSON-wrapped spatial geometries, such as Geometry, Feature, or FeatureCollection.

GeoJSEval therefore introduces an intermediate abstraction layer of “value representations”. This layer merges the declared types into a small number of comparable data expression categories according to the actual format of the runtime results. Table 3 summarizes the structural features of the function outputs across the five libraries and the evaluation logic mapped to each category. Based on this mapping, the framework establishes a type-aware equivalence judgment function that selects the most appropriate comparison strategy according to the mapped type.

Table 3.

Summary of Value Representations and Evaluation Strategies in GeoJSEval.

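Formally, the type-aware equivalence judgment can be written as:

$$\mathrm{Equal}(y, y^{*}) = \begin{cases} |y - y^{*}| \le \epsilon, & \tau = \text{Numeric} \\ \mathrm{SetEq}(y, y^{*}), & \tau = \text{Set} \\ \mathrm{JSONEq}(y, y^{*}), & \tau = \text{JSON} \\ \mathrm{GeoEq}(y, y^{*}), & \tau = \text{GeoJSON} \\ \mathcal{A}(y) = y^{*}, & \tau = \text{Object} \\ y = y^{*}, & \text{otherwise} \end{cases}$$

The symbols are defined as follows:

- $y$ denotes the execution result of the model-generated code in the runtime environment.
- $y^{*}$ represents the reference answer.
- $\tau$ represents the unified mapped representation type.
- $\epsilon$ denotes the error tolerance threshold, used primarily for approximate matching of floating-point numeric types.
- $\mathrm{SetEq}$ determines equivalence for set types, ignoring element order.
- $\mathrm{JSONEq}$ applies to nested JSON structures and checks both key set consistency and exact key-value matching.
- $\mathrm{GeoEq}$ is intended for standard GeoJSON spatial objects and evaluates the consistency of their geometric topology and attribute semantics.

Complex object types returned by visualization platforms, such as Map or Layer, cannot be compared structure-to-structure as a whole. Instead, GeoJSEval introduces an auxiliary function set $\mathcal{A}$, such as $\{\texttt{getCenter()}\}$, to extract key structural and semantic features from the model’s execution results. The extracted output $\mathcal{A}(y)$ is then compared with the reference answer for indirect equivalence determination.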
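The type-aware judgment described above can be sketched in JavaScript as follows. The category labels, the default tolerance `eps = 1e-6`, and all helper names are assumptions. In particular, `geoEqual` here compares geometry type, properties, and coordinates vertex by vertex within the tolerance. It is therefore a simplification of a topology-aware check (a fuller implementation could use a JTS-style `equalsTopo` test), and it does not handle GeometryCollection.

```javascript
// Sketch of a type-aware equivalence judgment; category names are assumptions.
function judgeByType(y, yStar, tau, eps = 1e-6) {
  switch (tau) {
    case "numeric":
      return Math.abs(y - yStar) <= eps;
    case "set":
      return setEqual(y, yStar);
    case "json":
    case "object": // auxiliary features A(y) extracted from Map/Layer/Source
      return jsonEqual(y, yStar);
    case "geojson":
      return geoEqual(y, yStar, eps);
    default:
      return y === yStar; // atomic values such as strings and booleans
  }
}

// Order-insensitive comparison (duplicates are counted).
function setEqual(a, b) {
  if (a.length !== b.length) return false;
  const sa = a.map((v) => JSON.stringify(v)).sort();
  const sb = b.map((v) => JSON.stringify(v)).sort();
  return sa.every((v, i) => v === sb[i]);
}

// Same key sets at every level and exactly equal leaf values.
function jsonEqual(a, b) {
  if (a === b) return true;
  if (typeof a !== "object" || typeof b !== "object" || a === null || b === null) return false;
  if (Array.isArray(a) !== Array.isArray(b)) return false;
  const ka = Object.keys(a).sort();
  const kb = Object.keys(b).sort();
  if (ka.length !== kb.length || ka.some((k, i) => k !== kb[i])) return false;
  return ka.every((k) => jsonEqual(a[k], b[k]));
}

// Coordinates equal within eps, compared recursively over nested arrays.
function coordsClose(a, b, eps) {
  if (typeof a === "number" && typeof b === "number") return Math.abs(a - b) <= eps;
  if (!Array.isArray(a) || !Array.isArray(b) || a.length !== b.length) return false;
  return a.every((v, i) => coordsClose(v, b[i], eps));
}

// Simplified GeoJSON check: same geometry type, near-equal coordinates in the
// same vertex order, identical properties. Not a full topological comparison.
function geoEqual(a, b, eps) {
  if (a.type === "FeatureCollection" || b.type === "FeatureCollection") {
    return a.type === b.type && a.features.length === b.features.length &&
      a.features.every((f, i) => geoEqual(f, b.features[i], eps));
  }
  const ga = a.type === "Feature" ? a.geometry : a;
  const gb = b.type === "Feature" ? b.geometry : b;
  if (ga.type !== gb.type) return false;
  return jsonEqual(a.properties ?? null, b.properties ?? null) &&
    coordsClose(ga.coordinates, gb.coordinates, eps);
}

console.log(judgeByType(0.1 + 0.2, 0.3, "numeric")); // true
console.log(judgeByType([3, 1, 2], [1, 2, 3], "set")); // true
```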

It is noteworthy that for test cases involving complex object types such as Map or Layer, a unified and strictly controlled testing environment was designed. Prior to executing any model-generated code, the system preloads a consistent basemap and environment configuration, ensuring that execution unfolds within a standardized context and mitigating interference from background variability. This guarantees that structured auxiliary functions can reliably determine whether the model-generated code has correctly implemented the intended functionality. After the model code completes the webpage rendering, the system automatically captures the visible region of the page and saves it as a standard screenshot. Although screenshot results do not directly participate in scoring, they provide an auxiliary review perspective when the output structure is unclear or when the model’s behavior may lead to visual discrepancies. This helps identify issues such as layers not loading, abnormal styling, or interaction failures—problems that structural analysis alone may fail to capture.

5. Experiments

This chapter introduces the selection criteria for the models under evaluation, the experimental setup, and the evaluation metrics framework.

5.1. Evaluation Models

The evaluation objects selected in this study are mainstream LLMs publicly released as of July 2025, encompassing representative models with high performance, good reproducibility, and broad application impact. All included models are either officially open-source or have open interfaces, with stable release records and empirical validation in academic or industrial settings, ensuring the reproducibility and practical relevance of the evaluation results. The evaluation focuses on the model’s performance in end-to-end application scenarios, aiming to provide researchers and developers in geospatial computing with practical guidance for model selection. For models available in multiple parameter scales, we included as many variants as possible (e.g., 3B, 7B, 32B) to comprehensively capture performance differences across scales in real-world applications. It is important to note that although strategies like Prompt Engineering, RAG, and multi-agent collaboration may improve performance in some tasks, they do not fundamentally alter the model structure. Furthermore, their performance is highly dependent on specific configurations and scenario adaptation, raising concerns about stability and generalization capabilities. Additionally, such methods often introduce lengthy prompts, resulting in extra token consumption, which impacts the fairness and resource controllability of the evaluation. To ensure controllability, generalizability, and cross-model comparability within the evaluation framework, these strategy-based methods are deliberately excluded from the present study. In terms of model type coverage, the evaluation encompasses four major categories: (1) general non-reasoning large language models; (2) general reasoning large language models; (3) general code generation models; (4) geospatial code generation models. 
Because the test questions in this study were generated from reference data using predefined prompts with Claude Sonnet 4, all Claude-series LLMs were deliberately excluded from the evaluation phase to avoid potential bias. A total of 20 model instances were evaluated; the specific models and their parameter settings are listed in Table 4.

Table 4.

Information of Evaluated LLMs.

5.2. Experimental Setup

The experiments were conducted on a local machine equipped with 32 GB of memory and an NVIDIA RTX 4090 GPU, enabling efficient inference and concurrent processing for medium-scale models. Deployment depended on model size and availability:

- Open-source models with no more than 16B parameters were deployed locally and run with the Ollama tool.
- Models with more than 16B parameters, closed-source models, and other models that do not support local deployment were accessed through their official APIs to ensure operational stability and output consistency.

General-purpose non-reasoning models were configured with a low generation temperature (temperature = 0.2) to make their outputs more deterministic. Models with reasoning capabilities retained their default configurations to fully leverage their native reasoning abilities. To prevent output truncation and standardize result formats, the maximum output length for all models was set to 16,384 tokens. The time spent on each experimental stage and the corresponding task descriptions are detailed in Table 5.
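For locally deployed models, the settings above map naturally onto Ollama’s REST endpoint `POST /api/generate`. There, `options.temperature` sets the sampling temperature and `options.num_predict` caps the number of generated tokens. The sketch below is a hedged illustration: the helper names and the model tag in the usage line are hypothetical, and API-hosted models would use each provider’s own parameters instead.

```javascript
// Build an Ollama /api/generate request body for one prompt.
function buildOllamaRequest(model, prompt, { reasoning = false } = {}) {
  const options = { num_predict: 16384 }; // cap output length at 16,384 tokens
  if (!reasoning) options.temperature = 0.2; // reasoning models keep their defaults
  return { model, prompt, stream: false, options };
}

// Send the request to a local Ollama server (default port 11434); needs Node 18+ fetch.
async function generate(model, prompt, opts) {
  const res = await fetch("http://localhost:11434/api/generate", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildOllamaRequest(model, prompt, opts)),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = await res.json();
  return data.response; // generated text of a non-streaming call
}

console.log(buildOllamaRequest("qwen2.5-coder:7b", "Write the function body."));
```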

Table 5.

Time Allocation Across Experimental Stages.

5.3. Evaluation Metrics

This study systematically evaluates the performance of LLMs in geospatial code generation tasks across four key dimensions: accuracy metrics, resource consumption metrics, operational efficiency metrics, and error type logs.

5.3.1. Accuracy Metrics

To evaluate both the accuracy and the stability of models in code generation tasks, this study uses pass@k as the core performance metric. pass@k is the probability that at least one of k outputs generated independently for the same problem matches the reference answer, and it is commonly used to assess the reliability and robustness of generation models. Large language models often hallucinate during generation, producing outputs with substantial semantic or syntactic differences from the same input. A single round of generation is therefore often insufficient to reflect a model’s true capability. To make the evaluation more stable and the results more reliable, this study compares three configurations, k = 1, 3, and 5:

$$\text{pass@}k = \mathbb{E}_{\text{tasks}}\left[1 - \frac{\binom{n-c}{k}}{\binom{n}{k}}\right]$$

Here, $n$ represents the total number of generated samples per task, and $c$ denotes the number of correct results among them.
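The estimator above can be computed per task and averaged over tasks, as in the sketch below. With n = 5 samples per task, pass@5 reduces to whether any of the five samples is correct. The function names are hypothetical. The binomial coefficients are computed as running products, which is adequate for small n.

```javascript
// Binomial coefficient C(n, k) as a running product (fine for small n).
function comb(n, k) {
  if (k < 0 || k > n) return 0;
  let r = 1;
  for (let i = 1; i <= k; i++) r = (r * (n - k + i)) / i;
  return r;
}

// Unbiased pass@k for one task with n samples, c of them correct.
function passAtK(n, c, k) {
  if (n - c < k) return 1; // every size-k subset contains a correct sample
  return 1 - comb(n - c, k) / comb(n, k);
}

// Benchmark-level pass@k: mean over tasks, given each task's correct count.
function meanPassAtK(correctCounts, n, k) {
  const total = correctCounts.reduce((s, c) => s + passAtK(n, c, k), 0);
  return total / correctCounts.length;
}

console.log(passAtK(5, 2, 3).toFixed(2)); // 0.90
console.log(meanPassAtK([0, 5], 5, 1)); // 0.5
```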

Furthermore, this study introduces the coefficient of variation (CV) as an additional metric. The CV measures how much a model’s results vary across multiple generations, which indirectly reflects the severity of hallucination. It is defined as the ratio of the standard deviation to the mean:

$$\mathrm{CV} = \frac{\sigma}{\mu}$$

Here, $\sigma$ is the standard deviation and $\mu$ is the mean. A lower CV indicates less variability in the generated results and hence higher stability.
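A sketch of the CV computation follows. It assumes the CV is applied to a model’s per-round accuracy scores across repeated generations and uses the population standard deviation; the text does not say which standard deviation is used. `coefficientOfVariation` is a hypothetical name.

```javascript
// Coefficient of variation of repeated-round scores (population standard deviation).
function coefficientOfVariation(values) {
  const mean = values.reduce((s, v) => s + v, 0) / values.length;
  if (mean === 0) return NaN; // CV is undefined when the mean is zero
  const variance = values.reduce((s, v) => s + (v - mean) ** 2, 0) / values.length;
  return Math.sqrt(variance) / mean;
}

console.log(coefficientOfVariation([0.5, 0.5, 0.5])); // 0
console.log(coefficientOfVariation([0.4, 0.6]).toFixed(3)); // 0.200
```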

To achieve a more comprehensive model evaluation, this study further introduces Stability-Adjusted Accuracy (SA), which builds on the evaluation of final accuracy (represented by Pass@5) while incorporating the stability factor (CV) into the calculation. A higher SA value is achieved when Pass@5 scores are high and CV is low, reflecting that the model not only maintains accuracy but also exhibits strong consistency in generation. The calculation is as follows:

5.3.2. Resource Consumption Metrics

The resource consumption metrics aim to assess the utilization of computational power and resources by LLMs during geospatial code generation tasks. These metrics include three dimensions:

Token Consumption (Tok.): Represents the average number of tokens consumed by the model to complete each unit test. For locally deployed open-source models, this value reflects their use of local computational resources, such as memory and GPU memory. For closed-source or commercial model APIs, the token count maps directly to usage costs. Most mainstream models are currently priced per million tokens, and consumption varies substantially across models. Token consumption therefore affects resource scheduling as well as the economic feasibility and scalability of the system.

Inference Time (In.T): Refers to the average response time (in seconds) for the model to generate the code for a single test case. It is used to evaluate the model’s inference latency and response efficiency, which directly impacts the user interaction experience in real-world applications. For models accessed via remote APIs, the inference time inevitably includes network request and response transmission delays, and thus cannot be regarded as a pure measure of the model’s intrinsic inference speed. In contrast, locally deployed models reflect only the raw computational time required under the local hardware environment. Consequently, the evaluation metric In.T is influenced by environmental factors and is denoted with a double asterisk (**) in Table 8.

Code Lines (Co.L): This metric counts the number of valid code lines in the model’s output, excluding non-functional content such as comments, natural language explanations, and formatting instructions. Compared to token consumption, this metric better reflects the model’s actual output in structured code generation, making it easier to assess its programming efficiency and output quality.
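A heuristic line counter consistent with this definition might strip comments and blank lines before counting. This sketch rests on several assumptions:

- `countCodeLines` is hypothetical and does not parse JavaScript.
- Comment markers inside string literals, such as a URL string, would be mishandled.
- Natural language prose that is not commented out is not detected.

```javascript
// Heuristic Co.L counter: removes /* */ blocks and // comments, then counts
// the remaining non-blank lines. Hypothetical helper, not a JavaScript parser.
function countCodeLines(source) {
  const withoutBlocks = source.replace(/\/\*[\s\S]*?\*\//g, "");
  return withoutBlocks
    .split("\n")
    .map((line) => line.replace(/\/\/.*$/, "").trim())
    .filter((line) => line.length > 0).length;
}

const sample = [
  "// compute the buffer",
  "const buffered = turf.buffer(point, 500, { units: 'meters' }); // 500 m",
  "",
  "/* return the",
  "   polygon */",
  "return buffered;",
].join("\n");
console.log(countCodeLines(sample)); // 2
```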

5.3.3. Operational Efficiency Metrics

To systematically evaluate the cost-effectiveness of large language models in geospatial code generation tasks, this study introduces the operational efficiency metrics to measure the level of accuracy achieved per unit of resource consumption. These metrics are based on three key resource dimensions: time consumption, token consumption, and code structure complexity, which correspond to the model’s inference efficiency, token utilization efficiency, and structured code efficiency, respectively. Considering the inherent randomness in model outputs, and that each task generates five results, all efficiency-related metrics are based on Pass@5, ensuring comparability and statistical robustness across models in terms of evaluation frequency and accuracy.

Inference Efficiency: Inference efficiency measures the average accuracy achieved by the model per unit of time. It is calculated as the accuracy divided by the average inference time (in seconds):

$$\text{Inference Efficiency} = \frac{\text{Pass@5}}{\text{In.T}}$$

This metric reflects the model’s overall balance between inference speed and output quality. A shorter inference time combined with higher accuracy indicates a stronger advantage in computational resource utilization and user response experience, which makes the model suitable for tasks that require highly real-time interaction. As discussed in Section 5.3.2, the Inference Time of locally deployed models and of models accessed via remote APIs is affected by environmental factors. Therefore, the Inference Efficiency metric is marked with a double asterisk (**) in Table 9.

Token Efficiency: Token efficiency characterizes the accuracy achieved by the model per unit of token cost, defined as follows:

$$\text{Token Efficiency} = \frac{\text{Pass@5}}{\text{Tok.}}$$

This metric can be used to compare cost-effectiveness across models and is particularly useful for evaluating API-based, pay-per-use large language models. It is highly relevant in scenarios with limited resource budgets or large-scale deployments. Models with lower token consumption and higher accuracy offer better cost-effectiveness.

Code Line Efficiency: Code line efficiency relates accuracy to the number of core executable code lines generated by the model, excluding non-structural content such as natural language explanations and comments. It is defined as:

$$\text{Code Line Efficiency} = \frac{\text{Pass@5}}{\text{Co.L}}$$

This metric reveals the model’s ability to generate compact and logically valid code, which closely matches the maintainability and execution-efficiency requirements of real-world engineering development. Models that generate concise and clearly structured code have greater practical value during deployment and subsequent iterations.
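The three efficiency scores can be computed as below, assuming each metric is the plain ratio of Pass@5 to the corresponding resource measure. Any scaling factor used for reporting is not shown here. The model names and numbers are made-up inputs for illustration, not results from this study.

```javascript
// Efficiency metrics as ratios of Pass@5 to each resource dimension.
function efficiencyMetrics({ pass5, inferenceTime, tokens, codeLines }) {
  return {
    inferenceEfficiency: pass5 / inferenceTime, // accuracy per second
    tokenEfficiency: pass5 / tokens,            // accuracy per token
    codeLineEfficiency: pass5 / codeLines,      // accuracy per code line
  };
}

// Made-up inputs for two hypothetical models, ranked by token efficiency.
const models = [
  { name: "model-A", pass5: 0.6, inferenceTime: 2, tokens: 300, codeLines: 12 },
  { name: "model-B", pass5: 0.7, inferenceTime: 20, tokens: 2000, codeLines: 15 },
];
const ranked = models
  .map((m) => ({ name: m.name, ...efficiencyMetrics(m) }))
  .sort((x, y) => y.tokenEfficiency - x.tokenEfficiency);
console.log(ranked.map((r) => r.name));
```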

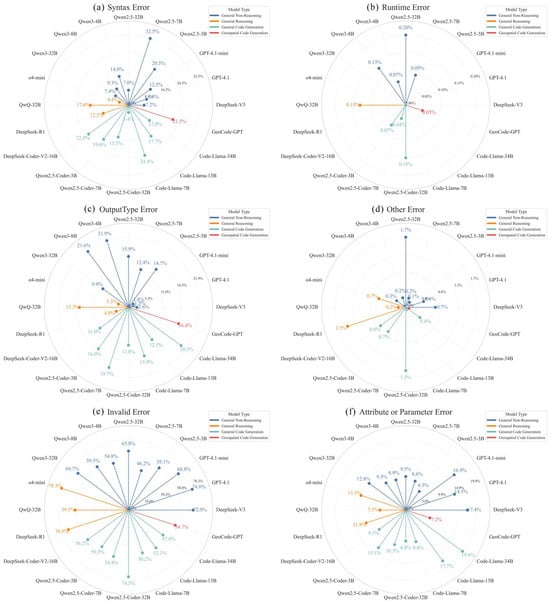

5.3.4. Error Type Logs

To further support qualitative understanding of model behavior and the identification of performance bottlenecks, the GeoJSEval framework introduces an automatic runtime error capture mechanism in the standard JavaScript execution environment. This mechanism systematically records the types of errors that occur when code generated by large language models is executed, which supports targeted optimization of model performance. It identifies and classifies the following six error types:

Syntax Error: Refers to issues in the syntactic structure of the code that prevent it from being parsed, for example unclosed parentheses, spelling mistakes, or missing required module imports. Such issues commonly result in a SyntaxError.

Attribute or Parameter Error: Refers to incorrect calls to object properties or function parameters, such as accessing a non-existent function or passing incorrect types or quantities of parameters. This typically results in a TypeError or ReferenceError. This type of error indicates a misunderstanding by the model of the API semantics and interface structure, especially in handling function signatures and invocation constraints.

Output Type Error: Refers to cases where the result type returned by the generated code after execution does not match the expected type and cannot be correctly parsed by the evaluation program. For example, expecting a GeoJSON.Feature but the actual output is a general object, or mistakenly generating a string instead of a coordinate array. This type of error reflects the model’s shortcomings in type inference, structure construction, and task semantic understanding, directly affecting the code’s evaluability and generality.

Invalid Answer: Refers to cases where the code syntax and types are correct, and the code executes successfully, but the output does not match the reference answer. This may manifest as logical errors in functionality implementation or incorrect function selection. This type of error is attributed to the model’s misunderstanding of the task objectives or function semantics, representing a semantic-level failure.

Runtime Error: Refers to cases where the model-generated code becomes unresponsive for a prolonged period or enters an infinite loop during execution, exceeding the system’s maximum runtime threshold (30 s) and being forcibly terminated. These errors are often caused by poorly designed program logic, asynchronous calls that never complete, unbounded recursion, or excessive data processing. They severely impair task executability and the stability of system resource utilization.

Other Error: This category covers miscellaneous issues that fall outside the syntax, parameter, and runtime categories above. These errors typically arise when the model misinterprets or ignores contextual instructions in the prompt. For example, in the GeoJSEval testing environment, all necessary third-party libraries (such as Turf, Geolib, OpenLayers, and Leaflet) are preloaded, and the prompt explicitly informs the model that “module imports do not need to be repeated.” Despite this, some models still redundantly import modules in the generated code, for example by emitting import or require statements. Although such statements may be syntactically valid, they can lead to module conflicts or duplicate declaration errors in the JavaScript execution environment, affecting code executability and evaluation stability. This phenomenon reflects a gap in the ability of current large language models to understand multi-turn contexts and follow instructions accurately.
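To make the taxonomy concrete, the following sketch maps the outcome of running one generated snippet onto the six categories. It is an illustration under stated assumptions rather than the GeoJSEval implementation: it uses Node.js’s built-in `vm` module and its `timeout` option in place of the framework’s execution environment, assumes the snippet writes its answer to a global `result`, and uses JSON serialization as a stand-in for the framework’s answer comparison. The function `classifyRun` and its parameters are hypothetical.

```javascript
// Hypothetical sketch mapping one execution outcome onto the six error categories.
// Not the GeoJSEval implementation; sandboxing, the `result` convention, and the
// comparison rule are assumptions.
const vm = require("node:vm");

const RUNTIME_LIMIT_MS = 30000; // maximum runtime threshold stated in the text (30 s)

function classifyRun(code, expected, isExpectedType, timeoutMs = RUNTIME_LIMIT_MS) {
  // Other Error: libraries are preloaded, so any import/require is redundant.
  if (/^\s*import\s|\brequire\s*\(/m.test(code)) return "Other Error";

  const sandbox = { result: undefined }; // the snippet is expected to assign `result`
  try {
    vm.runInNewContext(code, sandbox, { timeout: timeoutMs });
  } catch (err) {
    // Runtime Error: execution exceeded the time limit and was terminated.
    if (err && err.code === "ERR_SCRIPT_EXECUTION_TIMEOUT") return "Runtime Error";
    // Errors thrown inside the sandbox may come from another realm, so compare
    // error names rather than using instanceof.
    const name = err && err.name;
    if (name === "SyntaxError") return "Syntax Error";
    if (name === "TypeError" || name === "ReferenceError") {
      return "Attribute or Parameter Error";
    }
    return "Other Error";
  }

  // Output Type Error: the value has the wrong shape for the evaluator.
  if (!isExpectedType(sandbox.result)) return "Output Type Error";
  // Invalid Answer: well-typed output that differs from the reference.
  // JSON comparison is a simplification (key-order sensitive, no float tolerance).
  if (JSON.stringify(sandbox.result) !== JSON.stringify(expected)) return "Invalid Answer";
  return "Pass";
}
```

In this bare sandbox, a call such as `turf.point([0, 0])` is classified as an Attribute or Parameter Error because `turf` is undefined; a realistic harness would inject the preloaded libraries into the sandbox. Note also that the `timeout` option bounds synchronous execution only, so asynchronous callbacks scheduled by the snippet would need separate handling.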

6. Results

Building on Section 5.3, this section systematically presents and analyzes the evaluation results obtained with the GeoJSEval framework and the GeoJSEval-Bench test suite, focusing on four aspects: accuracy, resource consumption, operational efficiency, and error type logs.

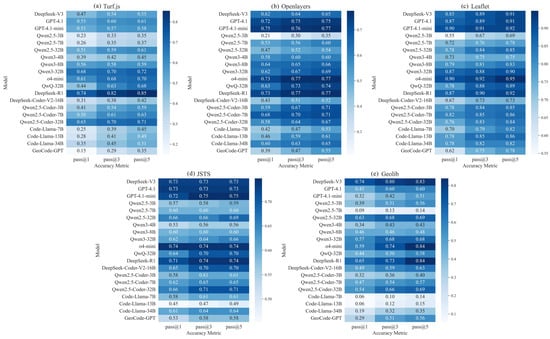

6.1. Accuracy

Figure 7 shows the execution accuracy of each large language model across five major JavaScript geospatial libraries, with pass@5 as the primary metric. Overall, average accuracy differs substantially across libraries, indicating uneven generalization across task types. Models performed better on libraries focused on visualization and interaction control, such as Leaflet (0.837) and OpenLayers (0.633), suggesting that they adapt more readily to tasks with intuitive semantics and well-structured frameworks. In contrast, accuracy is relatively low on Turf.js (0.571) and Geolib (0.565), which involve complex spatial logic and geometric computation, indicating that the models still have significant shortcomings in spatial semantic modeling. By model category, General Reasoning models consistently maintain high accuracy across all libraries, demonstrating stronger cross-task stability and structural adaptability. General Non-Reasoning and Geospatial Code Generation models, by comparison, show a noticeable drop in performance on tasks with complex structures or ambiguous function semantics. Individual models, such as Qwen3-32B, show considerable variation in accuracy across libraries, indicating strong task sensitivity. GeoCode-GPT and CodeLlama-7B rank lowest in accuracy across all libraries, indicating that they lack effective generalization to the semantic structures of mainstream JavaScript geospatial libraries.

Figure 7.

Heatmap of Accuracy Across Models and Geospatial JavaScript Libraries. Each cell shows the pass@1, pass@3, and pass@5 accuracy (%) of a specific model on a specific geospatial JavaScript library. Darker shades indicate higher accuracy. The white text within each cell gives the exact pass@1, pass@3, and pass@5 scores.

The accuracy-related evaluation results for each model are shown in Table A2. The overall pass@n metric for each model is calculated by combining all tasks across the five geospatial libraries, rather than averaging the results for each library, to more accurately reflect the model’s overall performance in JavaScript geospatial code understanding and generation tasks.
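The difference between pooling all tasks and averaging per-library rates can be illustrated with a small sketch. It assumes that a task counts as solved at pass@n when any of its first n candidates passes all of its test cases; the task records and function names are hypothetical.

```javascript
// Hypothetical sketch: pooled (task-level) pass@n versus an average of per-library rates.
// Each record says whether a task was solved within the first n candidates (assumed rule).
function pooledPassRate(tasks) {
  const solved = tasks.filter((t) => t.solved).length;
  return solved / tasks.length; // every task has equal weight
}

function perLibraryAverage(tasks) {
  const stats = new Map(); // library -> { solved, total }
  for (const t of tasks) {
    const s = stats.get(t.library) ?? { solved: 0, total: 0 };
    s.total += 1;
    if (t.solved) s.solved += 1;
    stats.set(t.library, s);
  }
  const rates = [...stats.values()].map((s) => s.solved / s.total);
  return rates.reduce((a, b) => a + b, 0) / rates.length; // every library has equal weight
}

// Hypothetical records: four Turf.js tasks (one solved) and one Leaflet task (solved).
const tasks = [
  { library: "Turf.js", solved: true },
  { library: "Turf.js", solved: false },
  { library: "Turf.js", solved: false },
  { library: "Turf.js", solved: false },
  { library: "Leaflet", solved: true },
];
console.log(pooledPassRate(tasks));    // 0.4
console.log(perLibraryAverage(tasks)); // 0.625
```

Pooling weights each task equally, so a library with few tasks cannot dominate the overall score, whereas the per-library average in this example inflates the result because the single Leaflet task counts as much as all four Turf.js tasks.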

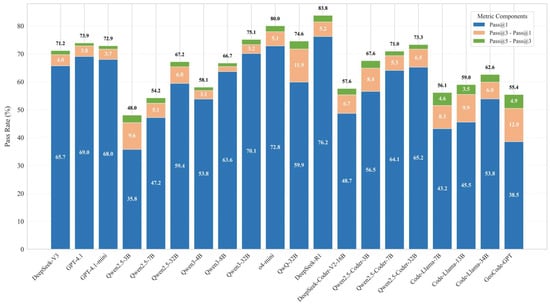

Figure 8 shows the performance of the 20 models at pass@1, pass@3, and pass@5, reflecting how their accuracy is distributed between single-round and multi-round generation. Overall, DeepSeek-R1 (83.8%), o4-mini (80.0%), and Qwen3-32B (75.1%) performed best. DeepSeek-R1 achieved a pass@1 of 76.2% with limited subsequent gains, indicating strong one-shot generation capability. Model scale was closely associated with performance: within the Qwen2.5 and Qwen2.5-Coder series, the 32B models significantly outperformed the 7B and 3B versions; similarly, within the Code-Llama series, the 34B model achieved substantially higher accuracy than the 13B and 7B versions. These results suggest that increasing parameter size contributes to more reliable performance. Notably, models such as GPT-4.1, GPT-4.1-mini, and DeepSeek-V3 already achieved high accuracy at pass@1, reflecting good generalization and high initial generation quality on geospatial tasks.

Figure 8.

Stacked Bar Chart of pass@n Metrics. Blue segments show pass@1, orange segments show the improvement of pass@3 over pass@1, and green segments show the improvement of pass@5 over pass@3. The white text on the bars gives the absolute pass@1, pass@3, and pass@5 scores.

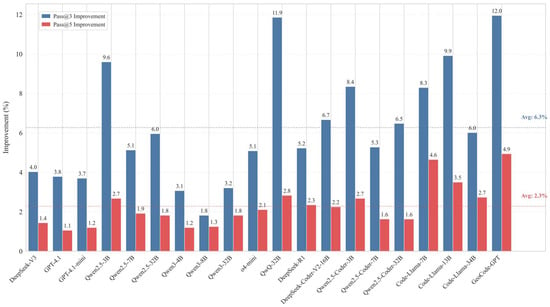

Figure A4 analyzes the accuracy improvement from multi-round generation, reflecting the extent to which additional candidates can compensate for failed ones. The results show that the average gain from pass@1 to pass@3 is 6.1%, whereas the gain from pass@3 to pass@5 is only 2.2%, a clear diminishing marginal effect. This suggests that most successful candidates appear within the first three generations. Some models, such as GeoCode-GPT (+12.0%), Qwen3-32B (+11.9%), and Qwen2.5-3B (+9.6%), improve substantially at pass@3, indicating that their initial generation is unstable but that better solutions can be obtained through candidate reordering. In contrast, the GPT-4.1 series and DeepSeek-V3 show smaller gains, indicating that their initial generation quality is already high and the value of candidate redundancy is limited. Multi-round generation strategies therefore have a stronger compensatory effect on small and medium-sized models, while the potential for improvement is more limited for larger models whose initial outputs are already well optimized.

To evaluate the trade-off between accuracy and stability in code generation by large language models, this study constructs a composite ranking metric based on Pass@5, Coefficient of Variation (CV), and Stability-Adjusted Accuracy (SA), with the results shown in Table 6. The orange shading in the table indicates models with higher accuracy ranking (P_Rank) but poorer stability, leading to a lower composite ranking (S_Rank). Examples include QwQ-32B, Qwen2.5-Coder-3B, and Code-Llama-7B, reflecting their significant fluctuations in generated results. The blue shading indicates models with average accuracy rankings but outstanding stability, showing a clear advantage in S_Rank. Examples include GPT-4.1, GPT-4.1-mini, DeepSeek-V3, Qwen2.5-7B, and Qwen3-8B, making them suitable for scenarios where consistency in results is crucial. General Reasoning models demonstrate a more balanced performance between accuracy and stability, leading to higher composite rankings.

Table 6.

Ranking of the models under Pass@5, CV, and SA metrics. P_Rank, C_Rank, and S_Rank represent the rankings based on Pass@5, CV, and SA, respectively. Higher values of Pass@5 and SA indicate better performance and higher ranking, while a lower CV value indicates better performance and a higher ranking. The table is sorted by S_Rank, reflecting the accuracy ranking of the models with the inclusion of stability factors, rather than solely considering accuracy. Category 1, 2, 3, and 4 correspond to General Non-Reasoning Models, General Reasoning Models, General Code Generation Models, and Geospatial Code Generation Models, respectively. The orange shading in the table indicates models with higher accuracy ranking (P_Rank) but poorer stability, leading to a lower composite ranking (S_Rank). The blue shading indicates models with average accuracy rankings but outstanding stability, showing a clear advantage in S_Rank.
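The ranking directions described in the Table 6 caption can be sketched as follows. This is an illustration under assumptions, not the paper’s code: it takes CV as the population standard deviation divided by the mean of one model’s accuracy values across repeated runs, and it does not reproduce the SA formula, which is defined earlier in the paper. The functions `coefficientOfVariation` and `rankBy` are hypothetical.

```javascript
// Hypothetical sketch of the CV computation and rank directions used in Table 6.
// Assumption: CV is taken over one model's accuracy values from repeated runs,
// using the population standard deviation. The SA formula is not reproduced here.
function coefficientOfVariation(values) {
  const mean = values.reduce((a, b) => a + b, 0) / values.length;
  const variance = values.reduce((acc, v) => acc + (v - mean) ** 2, 0) / values.length;
  return Math.sqrt(variance) / mean; // lower CV = more stable output
}

// Assigns 1-based ranks to models. Ties are not handled specially.
// higherIsBetter = true for Pass@5 and SA, false for CV.
function rankBy(scores, higherIsBetter) {
  const order = Object.keys(scores).sort((a, b) =>
    higherIsBetter ? scores[b] - scores[a] : scores[a] - scores[b]
  );
  return Object.fromEntries(order.map((model, i) => [model, i + 1]));
}

// Usage with hypothetical values:
// rankBy({ "Model-A": 0.12, "Model-B": 0.05 }, false) -> { "Model-B": 1, "Model-A": 2 }
```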

6.2. Resource Consumption

Table 7 reports the resource consumption results, and Figures 9 to 11 visualize token consumption, inference time, and the number of core code lines generated, respectively.

Table 7.

Evaluation Results for Resource Consumption. The API provider for QwQ-32B supports only streaming calls, in which token consumption cannot be tracked; its token values are therefore marked N/A.

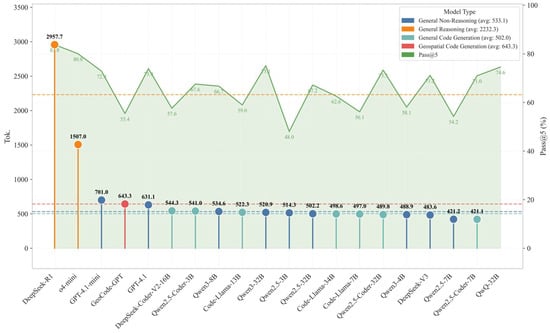

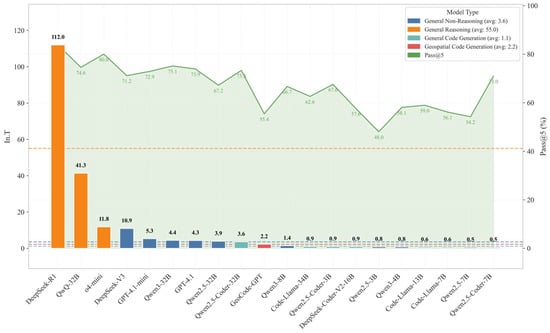

Figure 9 displays the average token consumption of each model during code generation, with the green line showing the average pass@5 across the five geospatial libraries. General Reasoning models consume far more tokens than other model types, approximately 15 to 42 times as many as General Non-Reasoning, General Code Generation, and Geospatial Code Generation models. Despite their clear accuracy advantage, their high inference costs increase usage expenses. This result provides a basis for assessing deployment costs: users should weigh accuracy gains against resource consumption when selecting models and match the model choice to the performance requirements of the application scenario.

Figure 9.

Average Token Consumption of LLMs and Mean pass@5 Performance Across Five JavaScript Libraries for Different LLMs. Each point on the green line represents the pass@5 value of the corresponding model.

The inference time consumption of various large language models during code generation is shown in Figure 10. General Reasoning models generally have higher inference times, but o4-mini is an exception, with an average inference time lower than most models, even slightly better than DeepSeek-V3, possibly benefiting from a more efficient inference architecture or deployment optimization. The average inference times of DeepSeek-R1 and QwQ-32B are 112.0 s and 41.3 s, respectively, significantly higher than similar models, indicating substantial inference overhead. This result suggests that in resource-constrained or response-sensitive application scenarios, the practicality of such models should be carefully evaluated, and inference efficiency can be further optimized in the future in line with deployment requirements.

Figure 10.

Average Inference Time Comparison Across LLMs and Mean pass@5 Performance Across Five JavaScript Libraries for Different LLMs.

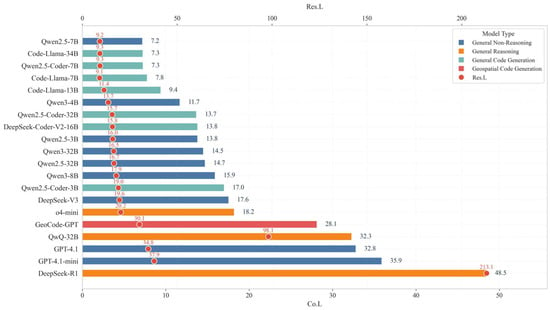

To characterize model outputs, this study uses the total number of response lines (Res.L) and cleaned code lines (Co.L) as evaluation metrics; the results are shown in Figure 11. Res.L shows that General Reasoning models produce more redundant output overall, most notably DeepSeek-R1 and QwQ-32B, primarily because their responses include the complete reasoning chain. In contrast, o4-mini has a significantly lower Res.L because it does not explicitly output its reasoning process. The Co.L metric shows that DeepSeek-R1, the GPT-4.1 series, and QwQ-32B generate more code lines, possibly owing to more elaborate structural normalization of the code. Notably, in the Qwen2.5 series, code length does not increase linearly with parameter size, and some larger models even generate more concise code, possibly reflecting stronger output compression and structural abstraction capabilities acquired during training.

Figure 11.

Average Lines of Generated Code per Model. The black numbers indicate the values of Co.L, while the red numbers represent the values of Res.L.

6.3. Operational Efficiency

The operational efficiency results for each model are shown in Table 8.

Table 8.

Evaluation Results for Operational Efficiency. In.T refers to the average response time (in seconds) for generating a single test case, and Co.L indicates the number of valid lines of code generated by the model. The API provider for QwQ-32B supports only streaming calls, in which token consumption cannot be tracked; its token-based values are therefore marked N/A.

Because Tok.-E, In.T-E, and Co.L-E are ratio-based efficiency metrics with different units and limited intuitive interpretability, this study ranks each of them separately (T_Rank, I_Rank, and Co_Rank) and takes the arithmetic mean of the three as the composite efficiency ranking E_Rank, which measures each model’s overall performance in terms of resource consumption (see Table 9). Combining E_Rank with the accuracy ranking P_Rank and the stability-adjusted accuracy ranking S_Rank, we then analyze the three core ranking metrics side by side, providing a systematic evaluation of each model’s relative strengths in accuracy, stability, and efficiency. P_Rank reflects the raw accuracy ranking, S_Rank incorporates the stability adjustment, and E_Rank captures how resource-utilization efficiency affects practical usability. Finally, the arithmetic mean of these three rankings defines the overall performance metric, Total_Rank. According to the ranking results, Qwen3-32B and Qwen2.5-Coder-32B rank highly across all three dimensions, showing stable performance while supporting local deployment, which makes them highly practical. In contrast, the general reasoning models o4-mini, DeepSeek-R1, and QwQ-32B excel in accuracy and stability but rank relatively low in efficiency. They are better suited to application scenarios that demand high generation quality and can tolerate higher latency.
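The two-stage averaging described above can be sketched as follows. This is a minimal illustration, assuming the per-metric ranks have already been computed (rank 1 = best) and that E_Rank and Total_Rank are the arithmetic means themselves; if the paper additionally converts these means back into ordinal positions, that step is not shown. The model names and rank values are hypothetical.

```javascript
// Hypothetical sketch of the rank aggregation described above.
// Assumption: E_Rank and Total_Rank are the arithmetic means themselves; any
// conversion of these means back to ordinal positions is not shown.
const mean = (xs) => xs.reduce((a, b) => a + b, 0) / xs.length;

function aggregateRanks(models) {
  return models
    .map((m) => {
      const E_Rank = mean([m.T_Rank, m.I_Rank, m.Co_Rank]);   // efficiency composite
      const Total_Rank = mean([m.P_Rank, m.S_Rank, E_Rank]);  // overall composite
      return { name: m.name, E_Rank, Total_Rank };
    })
    .sort((a, b) => a.Total_Rank - b.Total_Rank); // smaller mean rank = better
}

// Hypothetical per-model ranks (1 = best).
const models = [
  { name: "Model-B", P_Rank: 3, S_Rank: 3, T_Rank: 3, I_Rank: 3, Co_Rank: 3 },
  { name: "Model-A", P_Rank: 1, S_Rank: 3, T_Rank: 1, I_Rank: 2, Co_Rank: 3 },
];
console.log(aggregateRanks(models));
```

Averaging ranks rather than raw ratios sidesteps the unit mismatch among Tok.-E, In.T-E, and Co.L-E, at the cost of discarding how far apart two models are on each metric.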

Table 9.