1. Introduction

In today’s information era, more users have access to the Internet every day. The increased pursuit of knowledge by humans requires machines to act intelligently [1]. Many researchers have studied open-domain question-answering (QA) for building computer systems that automatically answer questions in natural language. These open-domain QA systems, which address questions about nearly anything, relying on general ontologies and world knowledge, are widely used on a daily basis. For example, many commercial language understanding or voice control systems, such as Apple’s Siri, Google’s Assistant, and Xiaomi’s Xiaoai, have been widely adopted by the general public [2].

Depending on the source of information, open-domain QA can be segmented into open-domain question answering from text (TextQA), knowledge-base question answering (KBQA), and question answering from tables. Substantial progress has been made in TextQA because of recent developments in machine reading comprehension and text retrieval. Furthermore, recent advances in pre-trained language models have led to substantial improvements in KBQA models. In short, recent progress in natural language processing (NLP) has considerably advanced open-domain QA.

Figure 1a,b show examples of open-domain QA systems answering specific questions. Figure 1a shows an example of TextQA, and Figure 1b shows an example of KBQA.

Although recent progress in NLP has significantly improved the performance of open-domain QA systems, there has been little research on open-domain QA systems specific to geographic questions. Even commercial QA products such as Google QA systems struggle to answer many simple geographic questions. Figure 1c,d show failure cases of Google QA systems adopted from [2]. Although these questions are easily answered by simple path traversals in geo-knowledge graphs (Geo-KG) if appropriate information exists, Google QA systems fail to answer these questions.

Researchers have studied the field of Geographic QA (GeoQA) to mitigate these problems. GeoQA can be segmented into Factoid GeoQA [2] and Geo-analytic QA [3,4], depending on the type of question. Factoid GeoQA answers questions based on geographic facts, whereas Geo-analytic QA focuses on questions with complex spatial analytical intents. In [5,6,7], the researchers focused on building rule-based GeoQA pipelines to solve questions in GeoQuestions201 [5], whereas geo-analytical questions using GeoAnQu were analyzed in [3,8].

Some research has been conducted on building GeoQA datasets. In [5], a benchmark set of 201 geospatial questions was created from natural language questions and corresponding SPARQL/GeoSPARQL queries. However, these questions were developed by third-year undergraduate students without proper standards. The GeoAnQu dataset in [4] was generated from 100 scientific articles collected in the context of a master’s thesis at Utrecht University and textbooks on GIScience and GIS. GeoAnQu comprises 429 geo-analytic questions in natural language without corresponding SPARQL/GeoSPARQL queries. The MS MARCO dataset [9] is a large-scale machine reading comprehension dataset. The dataset, which comprises diverse questions, was generated by sampling queries from Bing’s search logs. Among the 1,010,916 questions, approximately 62,400 were location-relevant questions. Thus, [4,5] are small-scale datasets generated without proper standards, and [9] is a large-scale dataset generated from real-world queries.

However, there has been little research on the nature of questions in the geographic domain needed to build intelligent QA agents. Thus, the datasets in [4,5] contribute to building QA agents that fail to consider user interests and requirements.

Some studies have analyzed place-related questions that people ask and clustered similar questions on the basis of their own standard. A semantic encoding-based approach was proposed in [10] to investigate the structural patterns of real-world place-related questions on MS MARCO [9], which gathered data from real-world queries on search engines. In [4], a semantic encoding-based approach as in [10] was proposed to analyze the patterns in GeoQuestions201, GeoAnQu, and MS MARCO. Although [10] analyzed a large-scale GeoQA dataset that could represent real users’ interests and clustered similar questions, their methods were based on semantic encodings, which have limitations in representing the similarity between questions and are therefore not commonly used in topic modeling or NLP. Furthermore, they did not present the latent topics of the clusters.

Table 1 shows the semantic encoding schema from [4], and Table 2 illustrates the results of applying the semantic encoding schema to some questions in MS MARCO from [4]. As can be seen from Table 2, semantic encodings cannot capture words that are not detected as a predefined semantic encoding (e.g., the verb “located” is missing in the semantic encoding “1o”). Furthermore, they cannot capture the semantics of a sentence because all words that are classified under the same code are represented identically (e.g., not only is “ores” represented as “o”, but every word that is classified as an object is also encoded as “o”). That is, the semantic encoding-based approach cannot fully capture the structure or semantics of a sentence and can only roughly capture its structure. Therefore, it can only roughly estimate the similarity of syntactic structure, and it cannot assess the degree of semantic similarity. We address these problems by adopting an embedding-based topic modeling approach.
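The information loss described above can be illustrated with a minimal sketch. The code table below is a hypothetical toy lexicon chosen for illustration only; it is not the schema of Table 1, and the `encode` helper is an assumed simplification of the encoding step.

```python
# Toy illustration of semantic encoding. TOY_CODES is a hypothetical
# lexicon for this sketch, NOT the encoding schema of Table 1.
TOY_CODES = {
    "where": "1",      # WH word
    "ores": "o",       # generic object
    "hotels": "o",     # generic object (same code as "ores")
    "australia": "n",  # place name
    "paris": "n",      # place name
}


def encode(question: str) -> str:
    """Concatenate the codes of known tokens; unknown tokens are dropped."""
    tokens = question.lower().rstrip("?").split()
    return "".join(TOY_CODES[t] for t in tokens if t in TOY_CODES)


q1 = "Where are ores located in Australia?"
q2 = "Where are hotels located in Paris?"

# Both questions collapse to the same code string: the verb "located" is
# dropped, and "ores" and "hotels" become indistinguishable.
print(encode(q1), encode(q2))
```

Under these assumptions, two questions about unrelated subjects receive identical encodings, so any similarity computed on the encodings cannot separate them.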

Topic modeling is frequently used to discover latent semantic structures, referred to as topics, in a large collection of documents by clustering similar documents into a group. Latent Dirichlet allocation (LDA) and probabilistic latent semantic analysis are widely used topic-modeling techniques. Because these methods rely on the bag-of-words (BoW) representation of documents, which ignores the ordering and semantics of words, distributed representations of words and documents have recently gained popularity in topic modeling [11,12,13,14]. In particular, [11,12] showed that using sentence embeddings in topic modeling produces more meaningful and coherent topics than topic models based on BoW or distributed word representations.
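To make the limitation of BoW concrete, the following minimal sketch (an illustration with made-up example questions, not part of the pipeline used in this study) shows two questions with opposite meanings that share exactly the same bag of words.

```python
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase, strip the trailing question mark, and count tokens."""
    return Counter(text.lower().rstrip("?").split())


a = "Is Paris north of Lyon?"
b = "Is Lyon north of Paris?"

# Word order is discarded, so the two representations are identical
# even though the questions ask opposite things.
print(bag_of_words(a) == bag_of_words(b))
```

A representation that encodes the whole sentence, such as a sentence embedding, is not constrained in this way.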

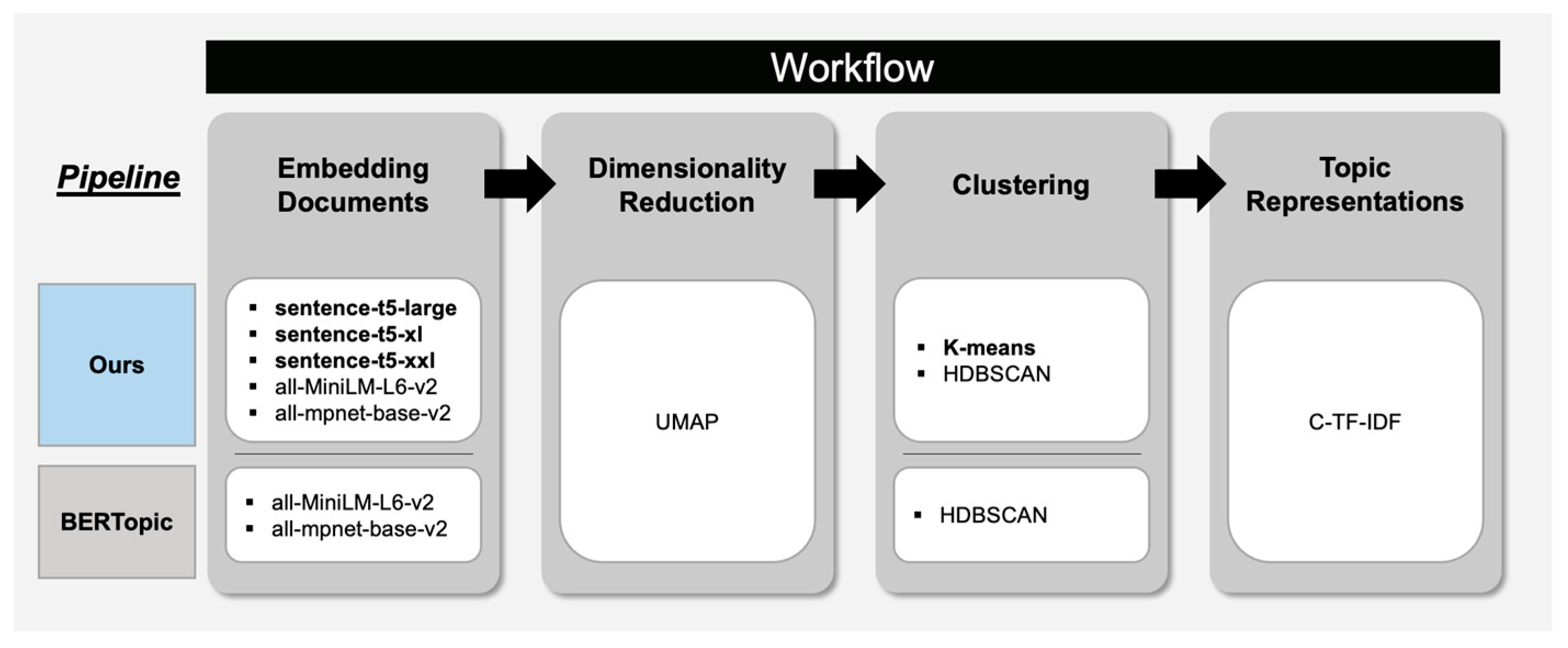

Therefore, we aim to group semantically similar questions into a cluster that has similar latent topics using an embedding-based topic modeling approach and utilize the topic modeling results to determine the users’ interest in the geographic domain. To the best of our knowledge, this is the first study to analyze geographic questions based on semantic similarity and determine the latent topics inside numerous geographic questions. The contributions of this study are as follows:

We analyzed place-related questions based on semantic similarity. To the best of our knowledge, unlike existing works that have clustered structurally similar questions, this is the first study analyzing geographic questions based on semantic similarity.

By exploiting semantic similarity, we separated geographic questions from non-geographic ones by clustering semantically similar questions.

We demonstrated latent topics within an extensive collection of geographic questions. These results suggest the direction of questions that GeoQA systems should handle to satisfy user needs.

Our paper is organized as follows. Section 2 describes previous works related to the GeoQA dataset and topic modeling. Then, in Section 3, we present the topic modeling pipeline and provide a detailed description of each stage. In Section 4, we describe the dataset to be analyzed, the setup of topic modeling, and how the results of topic modeling are evaluated. Section 5 provides the results in terms of a quantitative and qualitative evaluation. Section 6 presents a discussion of some of the results regarding model choices and their impact. Finally, in Section 7, we summarize the findings of our work, how these results could be utilized, the limitations of our study, and possible directions of future research.

2. Related Works

In this section, we discuss related work, starting with a brief overview of GeoQA (Section 2.1), followed by the GeoQA dataset (Section 2.2), the analysis of the GeoQA dataset (Section 2.3), and topic modeling (Section 2.4). Specifically, Section 2.1 and Section 2.2 provide an introduction to GeoQA and the GeoQA dataset. Section 2.3 discusses related research and our research in terms of analyzing geographic questions and finding latent topics inside them. Section 2.4 describes related studies with respect to topic modeling, which we used to analyze geographic questions and find latent topics within them.

Note that our research is primarily focused on analyzing the GeoQA dataset. Existing studies on analyzing the GeoQA dataset have utilized semantic encoding, which is not commonly used in the NLP field. The reason for this is that semantic encoding treats all words within a specific code as the same. That is, it may not distinguish different words with different meanings.

In order to represent documents, BoW representations have traditionally been used, and more recently, contextualized representations have been used. Both models are able to distinguish different words as different representations. However, BoW representations are not able to deliver contextual information, while contextualized representations are. Therefore, in terms of document representations, the semantic encoding-based approach has a severe limitation and thus is not widely used in NLP.

Therefore, it should be noted that although the contribution of our research is primarily in the area of GeoQA, the algorithms we used to improve upon existing GeoQA research came from the field of NLP. In other words, we used recently proposed algorithms in NLP to contribute to the field of GeoQA.

Table 3 provides an overview of related works.

2.1. Brief Overview of GeoQA

GeoQA is a sub-domain of QA that aims to answer geographic or place-related questions. GeoQA research can be divided into two categories: Factoid GeoQA and Geo-analytic QA.

Factoid GeoQA answers questions based on geographic facts. In [5], the authors implemented the first QA engine that could answer questions with a geospatial dimension. They proposed a template-based query translator that could translate natural language questions following predefined templates into SPARQL/GeoSPARQL queries. Deep neural networks were used to improve the methods in [6,7] for named entity recognition, dependency parsing, constituency parsing, and BERT representations for contextualized word representations. Thus, they improved the accuracy of query generation results. However, that research also relied on a rule-based approach for translating natural language into SPARQL/GeoSPARQL queries. All these studies were conducted using GeoQuestions201, as discussed later.

Geo-analytic QA refers to answering geographic questions that require complicated geoprocessing workflows. Although some works [3,8] have studied Geo-analytic QA, no work has fully implemented the entire QA pipeline for Geo-analytic QA. In [3], the authors focused on why core concepts are essential for handling Geo-analytic QA, while in [8], natural language questions were translated into concept transformations. However, they did not convert the concept transformations into query languages such as SPARQL/GeoSPARQL. Although they translated natural language into intermediate structures, these can only be regarded as a partial implementation of Geo-analytic QA.

2.2. GeoQA Dataset

There are several datasets available for geographic questions. Nguyen et al. [9] introduced a large-scale machine-reading comprehension dataset named MS MARCO. The dataset comprises 1,010,916 anonymized questions sampled from Bing’s search query logs, each with a human-generated answer, and 182,669 completely human-rewritten answers. Of these questions, 6.17% are location-related queries, i.e., approximately 62,000 queries. Although MS MARCO was originally introduced for real-world machine reading comprehension, to the best of our knowledge, it is the first and only large-scale geographic question corpus that has been studied.

GeoQuestions201 [5] comprises data sources, ontologies, natural language questions, and SPARQL/GeoSPARQL queries. These questions were developed by third-year students of the 2017–2018 Artificial Intelligence course in the authors’ departments. The students were asked to target three data sources (DBpedia, OpenStreetMap, and the General Administrative Divisions dataset), to imagine scenarios in which geospatial information would be required and could be provided by an intelligent assistant, and to propose questions with a geospatial dimension that they considered “simple”.

GeoAnQu [4] contains 429 geo-analytic questions compiled from scientific articles collected in the context of a master’s thesis at Utrecht University using Scopus and textbooks on GIScience and GIS. For scientific articles, the articles explicitly stated the questions in some cases, but in most cases, the authors manually formulated the question based on reading the article. For textbooks, they reformulated questions when they were not yet explicit.

GeoQuestions201 and GeoAnQu are small datasets made up of subjective questions and of questions that are uncommon among general users, respectively. Therefore, there is no guarantee that the questions in [4,5] represent a “natural” distribution of the information needs that users may want to satisfy using an intelligent assistant. As the fundamental role of QA is to answer users’ queries, a practical QA engine must be improved to satisfy users’ requests. In conclusion, the MS MARCO dataset is a good fit for GeoQA tasks, as it comprises questions that were sourced from a real-world question-answering engine.

2.3. Analyzing the GeoQA Dataset

Several studies have analyzed GeoQA datasets. Hamzei et al. [10] analyzed MS MARCO in terms of its syntactic structure. Words or spans were encoded as semantic encodings such as place names, place types, activities, situations, qualitative spatial relationships, WH words, and other generic objects. They calculated the Jaro similarity [19] and applied the k-means clustering algorithm [20] to the encoded sentences. They also analyzed frequent patterns. However, because semantic encoding-based methods are based on syntactic structure, there is no guarantee of finding semantically similar clusters. Furthermore, they did not provide any latent topics of clusters.
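For reference, the Jaro similarity used to compare encoded sentences can be sketched as follows. This is a minimal implementation of the standard definition, not the code of [10]; the encoded strings in the example are hypothetical, and the clustering step is omitted.

```python
def jaro(s1: str, s2: str) -> float:
    """Standard Jaro similarity between two strings (1.0 = identical)."""
    if s1 == s2:
        return 1.0
    len1, len2 = len(s1), len(s2)
    if len1 == 0 or len2 == 0:
        return 0.0
    # Characters match only if they are equal and close enough in position.
    window = max(max(len1, len2) // 2 - 1, 0)
    matched1 = [False] * len1
    matched2 = [False] * len2
    matches = 0
    for i, ch in enumerate(s1):
        start = max(0, i - window)
        end = min(len2, i + window + 1)
        for j in range(start, end):
            if not matched2[j] and s2[j] == ch:
                matched1[i] = matched2[j] = True
                matches += 1
                break
    if matches == 0:
        return 0.0
    # Transpositions: matched characters that appear in a different order.
    seq1 = [c for c, m in zip(s1, matched1) if m]
    seq2 = [c for c, m in zip(s2, matched2) if m]
    transpositions = sum(a != b for a, b in zip(seq1, seq2)) // 2
    return (
        matches / len1 + matches / len2 + (matches - transpositions) / matches
    ) / 3


# Hypothetical encoded questions: identical encodings score 1.0 regardless
# of which words produced them, which is why the measure reflects
# structure rather than meaning.
print(jaro("1on", "1on"), jaro("1on", "2onn"))
```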

In [4], MS MARCO, GeoQuestions201, and GeoAnQu were compared by adopting similar pipelines based on semantic encoding. They compared each dataset in terms of encoded patterns, such as n-gram patterns, encoded as predefined semantic encodings. They also analyzed and compared each dataset based on the frequency of specific words such as how, what, and when. However, because these works are based on word frequency or Jaro similarity in encoded patterns, there is no guarantee that these clusters share semantically similar topics. Furthermore, this work did not present any latent topic of the cluster.

2.4. Topic Modeling

The ability to organize, search, and summarize a large volume of text is a ubiquitous problem in NLP. Topic modeling is often used when a large collection of texts cannot be reasonably read and sorted by a person. Given a corpus comprising many texts, referred to as documents, a topic model will uncover latent semantic structures or topics in the documents. Topics can then be used to find high-level summaries of a large collection of documents, search for documents of interest, and group similar documents [13].

Conventional models, such as LDA [18] and non-negative matrix factorization (NMF) [21], describe a document as a bag of words, and model each document as a mixture of latent topics [11]. However, BoW representations disregard the syntactic and semantic relationships among the words in a document, which are the two main linguistic avenues to coherent text [12].

Recently, pre-trained language models have successfully been used to tackle a broad set of NLP tasks such as natural language inference, QA, and sentiment classification. Bianchi et al. [12] demonstrated that combining these contextualized representations, that is, BERT, with topic models produced more meaningful and coherent topics than traditional BoW topic models. Grootendorst [11] introduced BERTopic, which also uses pre-trained language models. It extends the clustering embedding approach described in [13,14] by incorporating a class-based variant of TF-IDF to create topic representations.
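The class-based TF-IDF idea can be sketched in a few lines. The sketch below assumes the weighting $W_{t,c} = \mathrm{tf}_{t,c} \cdot \log(1 + A / f_t)$, where $\mathrm{tf}_{t,c}$ is the frequency of term $t$ in cluster $c$, $f_t$ is its frequency across all clusters, and $A$ is the average number of words per cluster. It uses raw counts and whitespace tokenization for simplicity, the toy clusters are made up, and it is not the BERTopic library code.

```python
import math
from collections import Counter


def c_tf_idf(clusters: dict[str, list[str]]) -> dict[str, dict[str, float]]:
    """Score each term per cluster with a class-based TF-IDF weighting."""
    # Treat each cluster as one long document by joining its questions.
    tf = {
        name: Counter(" ".join(docs).lower().split())
        for name, docs in clusters.items()
    }
    # Frequency of each term across all clusters.
    total_freq: Counter = Counter()
    for counts in tf.values():
        total_freq.update(counts)
    # Average number of words per cluster (A in the formula above).
    avg_words = sum(total_freq.values()) / len(tf)
    return {
        name: {
            term: count * math.log(1 + avg_words / total_freq[term])
            for term, count in counts.items()
        }
        for name, counts in tf.items()
    }


# Hypothetical toy clusters for illustration only.
toy_clusters = {
    "weather": ["what is the weather in paris", "what is the weather in rome"],
    "distance": ["what is the distance to paris", "what is the distance to rome"],
}
scores = c_tf_idf(toy_clusters)
top_terms = sorted(scores["weather"], key=scores["weather"].get, reverse=True)[:3]
print(top_terms)
```

Terms that are frequent inside one cluster but rare elsewhere receive the highest scores, which is what makes the top-ranked terms usable as a topic representation.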

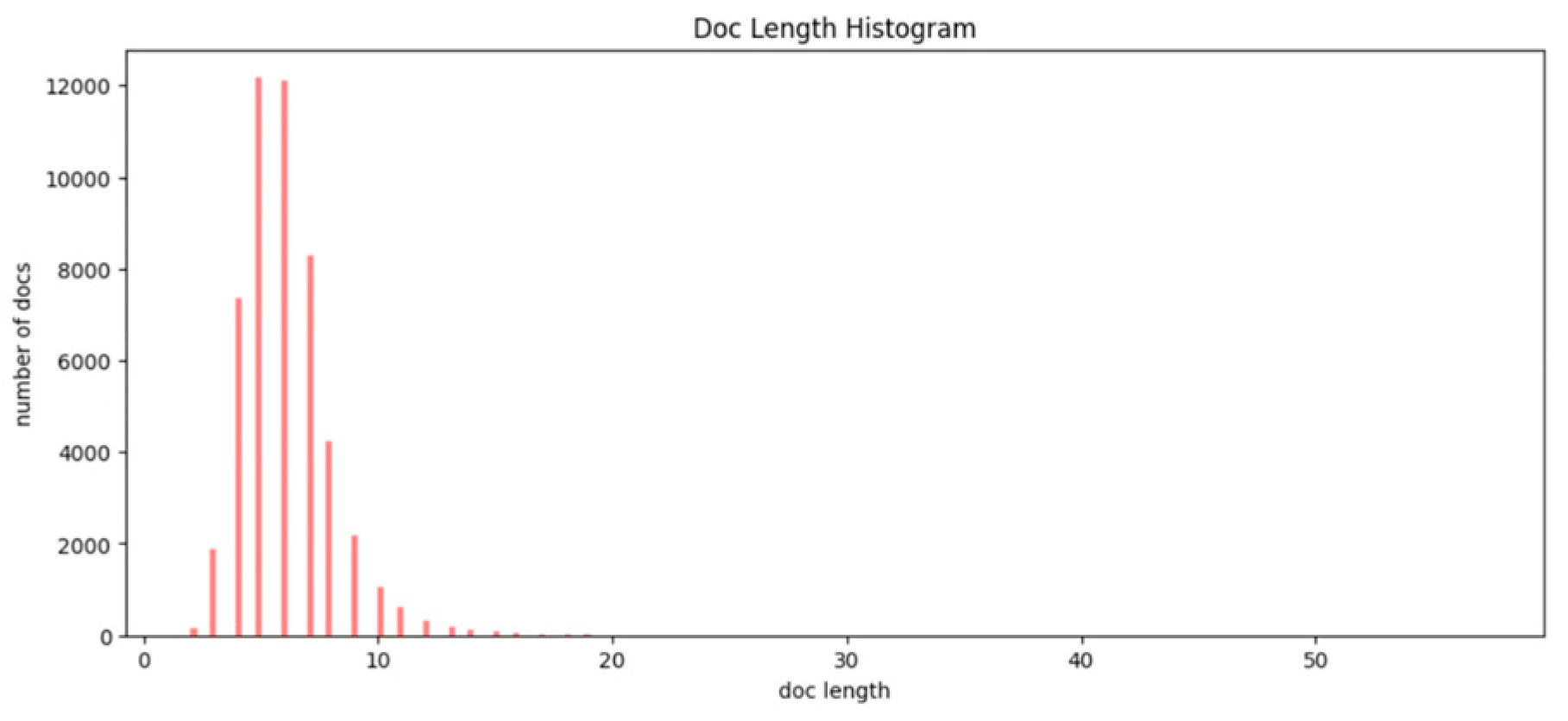

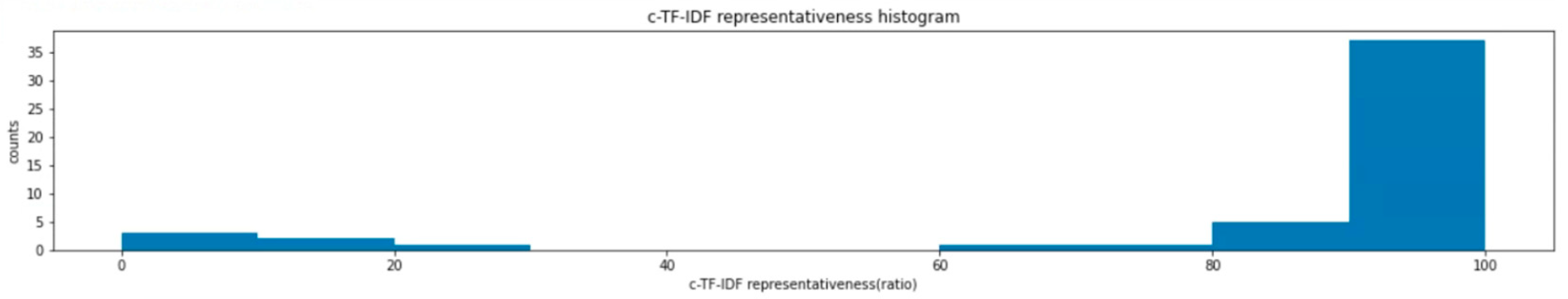

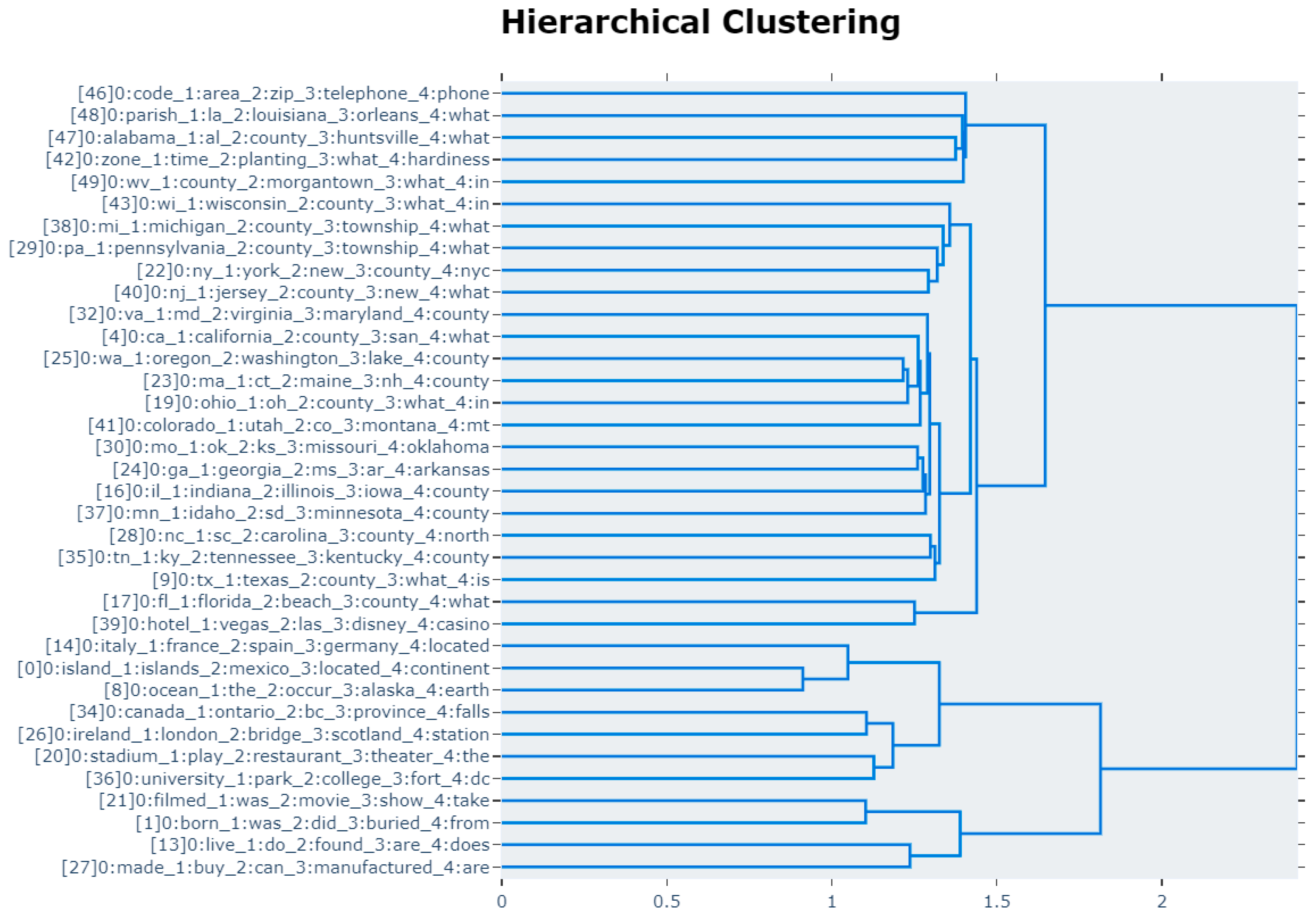

6. Discussion

In this section, we provide a discussion regarding our choice of models and their impact on our results. Specifically, we discuss sentence embedding, clustering, and c-TF-IDF representations.

Our results show that Sentence T5 consistently outperformed SBERT in terms of topic coherence. We attribute this to the quality of the sentence embeddings, as it was previously shown in [17] that Sentence T5 consistently outperformed SBERT in various downstream tasks. Furthermore, the best performing model was the biggest model (i.e., sentence-t5-xxl). This result is also consistent with existing studies reporting that larger models with appropriate fine-tuning consistently outperform smaller ones (sentence-t5-large vs. sentence-t5-xl).

For clustering algorithms, k-means clustering consistently outperformed HDBSCAN. This is consistent with existing studies [28] reporting that, for datasets with more than 2k samples, k-means clustering consistently outperforms HDBSCAN. Before applying the clustering algorithms, we applied dimensionality reduction with UMAP. Clustering algorithms tend to suffer from the curse of dimensionality [28]; that is, high-dimensional data (in our case, 384 dimensions for all-MiniLM-L6-v2 and 768 for the other models) often require an exponentially growing number of observed samples to obtain a reliable result. Dimensionality reduction is therefore essential to improve clustering accuracy and running time. As can be seen from Table A2, the choice of UMAP embedding dimension (among 5, 10, and 15) has little impact on performance.
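One symptom of the curse of dimensionality is that distances between points become nearly indistinguishable as the dimension grows, which weakens distance-based clustering. The following sketch illustrates this with uniformly random points; it is a synthetic illustration under that assumption, not an experiment on our embeddings, and `relative_contrast` is a helper introduced here.

```python
import math
import random


def relative_contrast(dim: int, n_points: int = 200, seed: int = 0) -> float:
    """Return (max - min) / min over distances from a random query point.

    Small values mean the nearest and farthest points are almost equally
    far away, so distances carry little information.
    """
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        dists.append(math.dist(query, point))
    return (max(dists) - min(dists)) / min(dists)


# Compare a low dimension (comparable to a UMAP output) with dimensions
# comparable to raw sentence embeddings.
for dim in (5, 384, 768):
    print(dim, round(relative_contrast(dim), 3))
```

In this synthetic setting, the contrast in 5 dimensions is much larger than in 384 dimensions, which is consistent with reducing the embeddings before clustering.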

Our results demonstrate that c-TF-IDF representations represent the topics well. However, we did not remove stop words while building the topic representations. With proper postprocessing, such as stop word removal, the quality of the topic representations could be enhanced. Furthermore, we did not study the relationships between topics. That is, had we investigated the topic representations in terms of the topic similarity between pairs of clusters, we could have obtained more comprehensive high-level summaries of the large collection of geographic questions. We leave this to future work.

7. Conclusions

This study analyzed geographic questions based on their semantic similarity and identified the geographic topics of interest. Existing studies have analyzed geographic questions in terms of syntactic structures, which have limitations in terms of representing semantic information and do not identify latent topics. To the best of our knowledge, this is the first study to analyze geographic questions in terms of semantic similarity and demonstrate the corresponding latent topics.

The BERTopic pipeline was adopted to cluster similar questions and discover latent topics. Following the BERTopic pipeline, we performed various experiments with different hyperparameters to select the appropriate models. Thus, we successfully selected topic models with high topic coherence to find the semantic structure of numerous documents.

A manual inspection showed the effectiveness of the embedding-based topic modeling approach and revealed the latent geographic topics that are of interest. First, we determined that topic representations generated by embedding-based topic modeling offer high-level summaries of numerous documents. Second, because of the coherent collection of documents inside each cluster and the high-level summaries provided by the topic representations, we effectively separated geographic and non-geographic clusters. Third, by inspecting geographic questions with higher topic representativeness, we demonstrated latent topics within several geographic questions. These findings reveal the geographic topics that are of interest to people and therefore suggest the direction of questions that GeoQA systems should handle to satisfy user needs.

Although this research suggests a direction that GeoQA systems should follow, it is limited in that we analyzed only the MS MARCO dataset. Although MS MARCO gathered questions from real users’ queries, this dataset was generated a few years ago and is outdated. Furthermore, all queries from MS MARCO were collected from Bing and could be biased in terms of user distribution, such as nationality. Therefore, future studies should analyze recent questions asked by users of a representative search engine.