Extracting Objects’ Spatial–Temporal Information Based on Surveillance Videos and the Digital Surface Model

Abstract

1. Introduction

2. Related Work

2.1. Camera Calibration

2.2. Video Geo-Spatialization

2.3. Object Detection and Tracking

2.4. The Integration of Surveillance Videos and Geographic Information

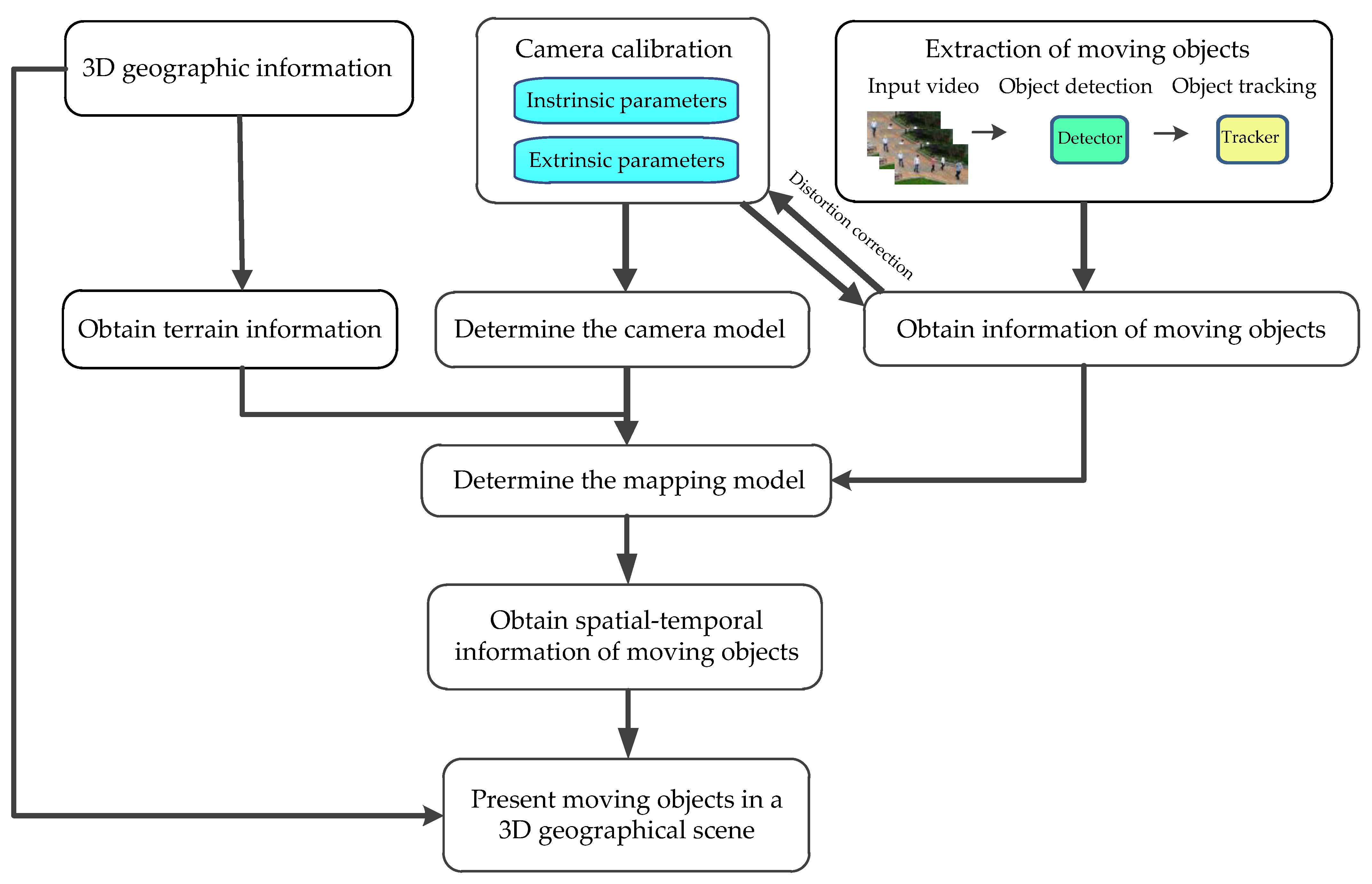

3. Framework of 3D Geographic Information and Moving Objects

- Camera calibration. Camera calibration obtains the intrinsic and extrinsic parameters that determine the camera model.

- The extraction of moving objects. Object detectors and trackers extract the spatial–temporal positions of moving objects in image coordinates. These image positions contain errors and require distortion correction.

- Mapping model. Depth information is lost during camera imaging. To recover the position of an object in space, terrain information from a 3D geographic model is required. Given a calibrated camera and an image pixel, an imaging ray is constructed; the point where this ray intersects the terrain is the location of the object.

- Spatial–temporal information in 3D space. In addition to the 3D position information, the width and height of the object can also be obtained by geometric calculation.

- Visualization. The moving objects are presented in a 3D geographic scene.
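
The role of the calibrated parameters, and the loss of depth noted in the list above, can be illustrated with an ideal pinhole projection. The following is a minimal sketch rather than the camera model used in this work: the function name `project`, the intrinsic values (`fx`, `fy`, `cx`, `cy`), and the identity rotation are assumptions chosen for illustration, and lens distortion is ignored.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical extrinsic rotation: camera axes aligned with the world axes.
IDENTITY = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def project(point_w: Vec3, rotation: Sequence[Sequence[float]], cam_pos: Vec3,
            fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a world point to pixel coordinates with an ideal pinhole camera."""
    # Extrinsic part: shift to the camera centre, then rotate into the camera frame.
    d = [point_w[k] - cam_pos[k] for k in range(3)]
    xc, yc, zc = (sum(rotation[r][k] * d[k] for k in range(3)) for r in range(3))
    if zc <= 0:
        raise ValueError("point is behind the camera")
    # Intrinsic part: perspective division, then focal lengths and principal point.
    return fx * xc / zc + cx, fy * yc / zc + cy


# Two points on the same imaging ray, at different distances from the camera.
camera = (0.0, 0.0, 0.0)
near = project((1.0, 0.5, 5.0), IDENTITY, camera, 1000.0, 1000.0, 960.0, 540.0)
far = project((2.0, 1.0, 10.0), IDENTITY, camera, 1000.0, 1000.0, 960.0, 540.0)
print(near, far)
```

Both points lie on the same imaging ray and therefore map to the same pixel, which is why an additional constraint such as the terrain surface is needed to recover a 3D position.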

4. Principles and Methods

4.1. Camera Imaging Model

4.2. Ray Intersection with the DSM

- (1) Construct an imaging ray, $P(\lambda) = O + \lambda \mathbf{d}$, where $O$ is the camera location in space, $\lambda$ is an arbitrary distance, and $\mathbf{d}$ is a unit vector representing the direction of the imaging ray from the camera. As shown in Figure 2a, assume that the location of point A in the image is $(u, v)$; the pixel coordinates of any point B on the imaging ray OA are then also $(u, v)$. Substituting a constant for one world coordinate of B into Equation (1) yields the remaining coordinates of B, from which the ray direction $\mathbf{d}$ is obtained (Equation (2)).

- (2) Search for object points. Start from the camera point $O$ and search along the direction of the imaging ray OA. The search step $\Delta$ is the grid spacing of the DSM. The coordinates of the $i$th search point are $P_i = O + i\,\Delta\,\mathbf{d} = (X_i, Y_i, Z_i)$. Substitute $(X_i, Y_i)$ into the DSM to search and match, and record the elevation at $(X_i, Y_i)$ on the DSM as $H_i$, which is determined from the elevations of the four corner points of the grid cell in which $(X_i, Y_i)$ is located. When $Z_i \le H_i$ appears for the first time, it indicates that the search has passed object A:

- ① If $Z_i = H_i$, $P_i$ is the world coordinates of object A.

- ② If $Z_i < H_i$, the object point is located between the search points $P_{i-1}$ and $P_i$. By interpolation, a more precise location estimate can be obtained. The interpolation process is shown in Figure 2b and follows from the triangle proportion relation.
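
The two steps above (constructing the imaging ray, then searching along it in steps of the DSM grid spacing) can be sketched as follows. This is an illustrative sketch under stated assumptions, not the implementation used in this work: the names `bilinear` and `intersect_dsm` are hypothetical, the elevation at a search point is taken by bilinear interpolation of the four cell corners (one plausible choice), the camera is assumed to start above the terrain, and bounds checking on the grid is omitted.

```python
import math
from typing import Callable, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def bilinear(grid: Sequence[Sequence[float]], spacing: float, x: float, y: float) -> float:
    """Elevation at (x, y) from the four corners of the enclosing DSM cell.

    grid[row][col] holds the elevation at x = col * spacing, y = row * spacing.
    """
    col, row = int(x // spacing), int(y // spacing)
    tx, ty = x / spacing - col, y / spacing - row
    h00, h10 = grid[row][col], grid[row][col + 1]
    h01, h11 = grid[row + 1][col], grid[row + 1][col + 1]
    return ((1 - tx) * (1 - ty) * h00 + tx * (1 - ty) * h10
            + (1 - tx) * ty * h01 + tx * ty * h11)


def intersect_dsm(origin: Vec3, direction: Vec3,
                  elevation: Callable[[float, float], float],
                  step: float, max_steps: int = 10000) -> Optional[Vec3]:
    """March along origin + lambda * d until the ray reaches or drops below the surface."""
    norm = math.sqrt(sum(c * c for c in direction))
    d = tuple(c / norm for c in direction)  # unit direction of the imaging ray
    prev = origin
    prev_gap = origin[2] - elevation(origin[0], origin[1])  # ray height above terrain
    for i in range(1, max_steps + 1):
        p = tuple(origin[k] + i * step * d[k] for k in range(3))
        gap = p[2] - elevation(p[0], p[1])
        if gap == 0:
            return p  # case 1: the search point lies exactly on the surface
        if gap < 0:
            # Case 2: the crossing lies between the previous and current search
            # points; similar triangles give the fraction of the last step.
            t = prev_gap / (prev_gap - gap)
            return tuple(prev[k] + t * (p[k] - prev[k]) for k in range(3))
        prev, prev_gap = p, gap
    return None  # no intersection found within max_steps


# Hypothetical flat terrain at 87 m, sampled on a 1 m grid (3 rows x 12 columns).
flat = [[87.0] * 12 for _ in range(3)]
hit = intersect_dsm((0.0, 0.5, 97.0), (3.0, 0.0, -4.0),
                    lambda x, y: bilinear(flat, 1.0, x, y), step=1.0)
print(hit)
```

In this toy setup the ray crosses the surface halfway between the 12th and 13th search points, so the interpolated result is close to (7.5, 0.5, 87.0) rather than either search point.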

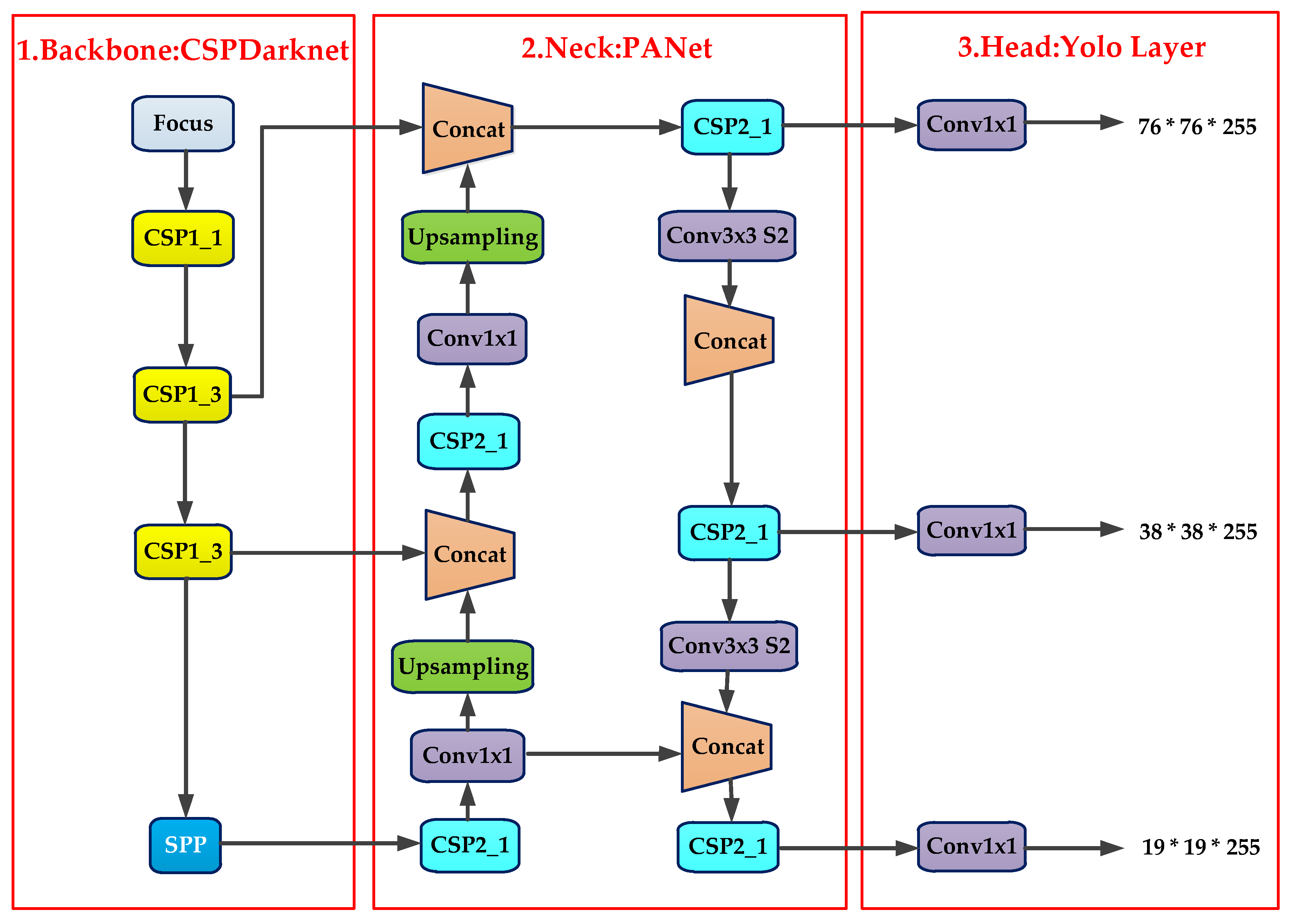

4.3. Pedestrian Detection and Tracking

4.4. Acquisition of Objects’ Spatial–Temporal Information

5. Experiments and Results

5.1. Experimental Environment

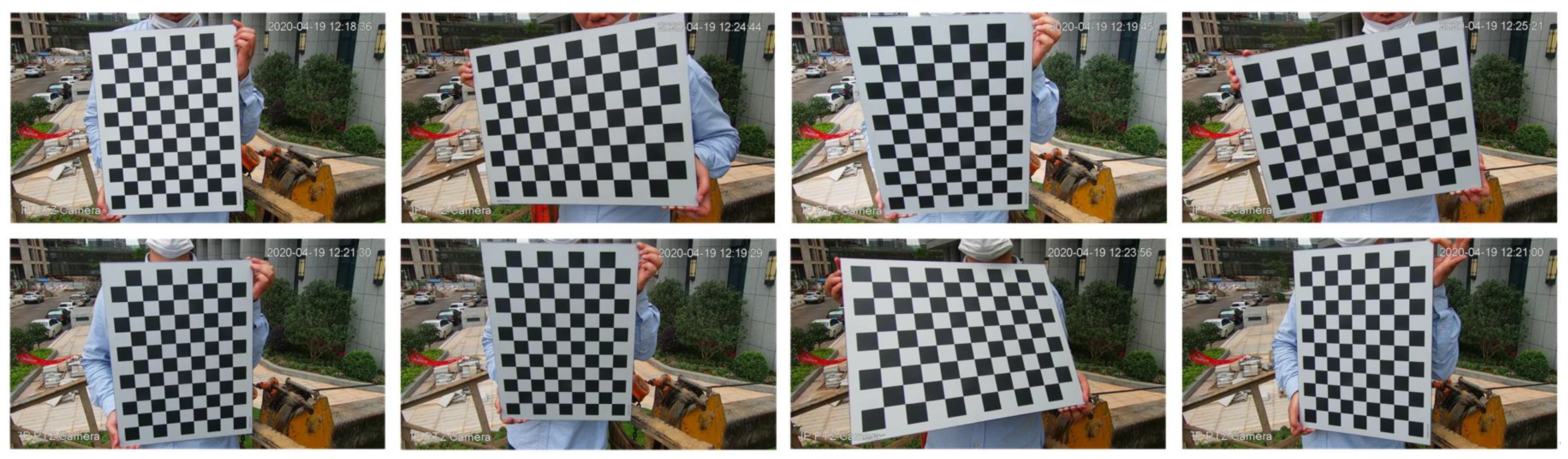

5.2. Intrinsic Parameters

5.3. Extrinsic Parameters

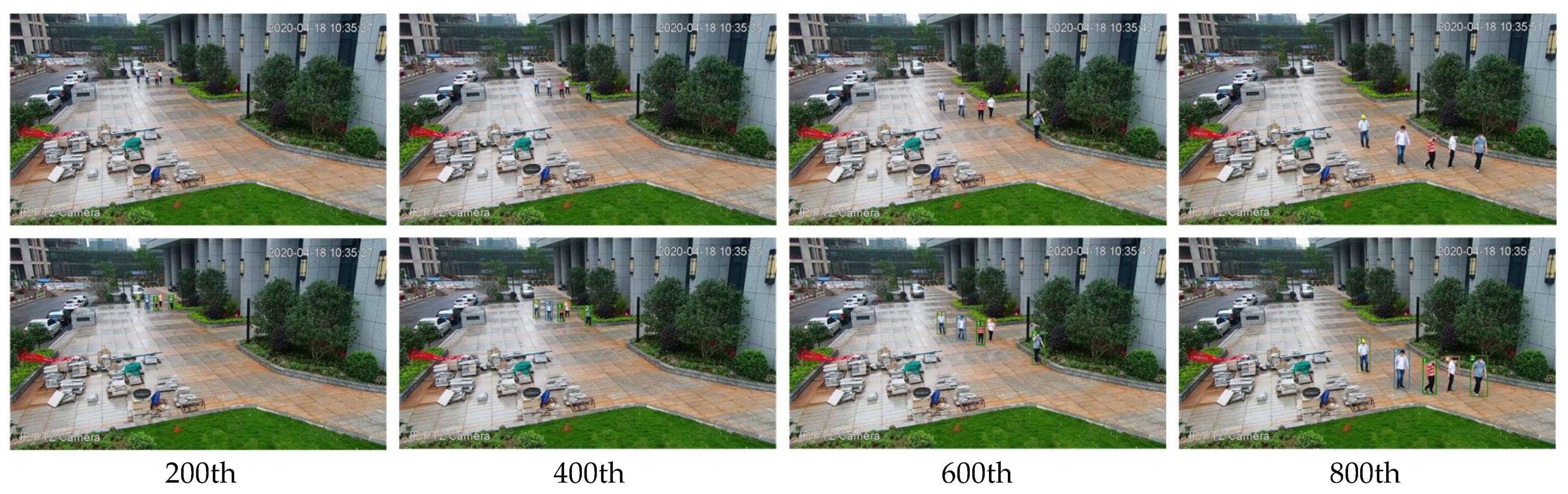

5.4. Object Tracking

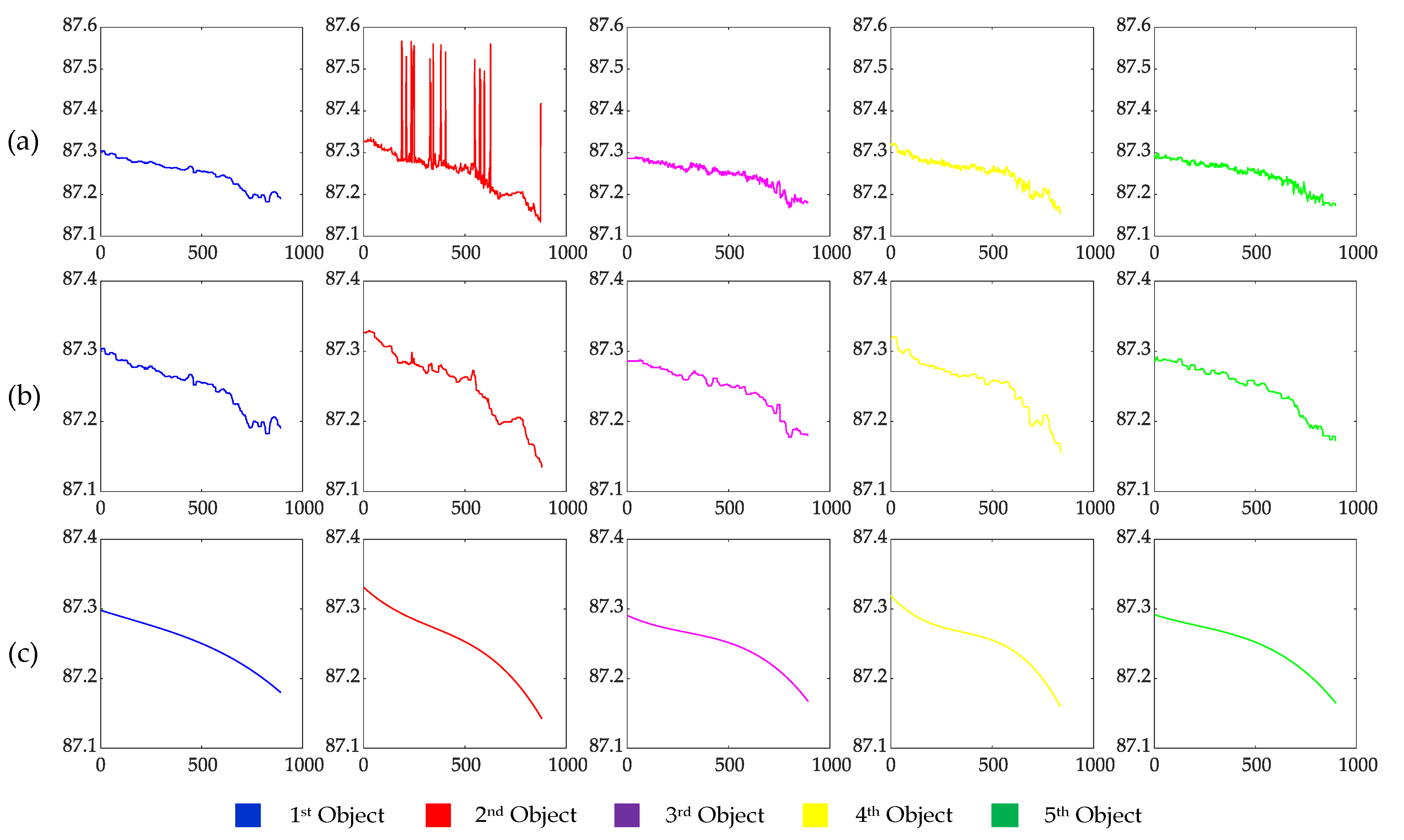

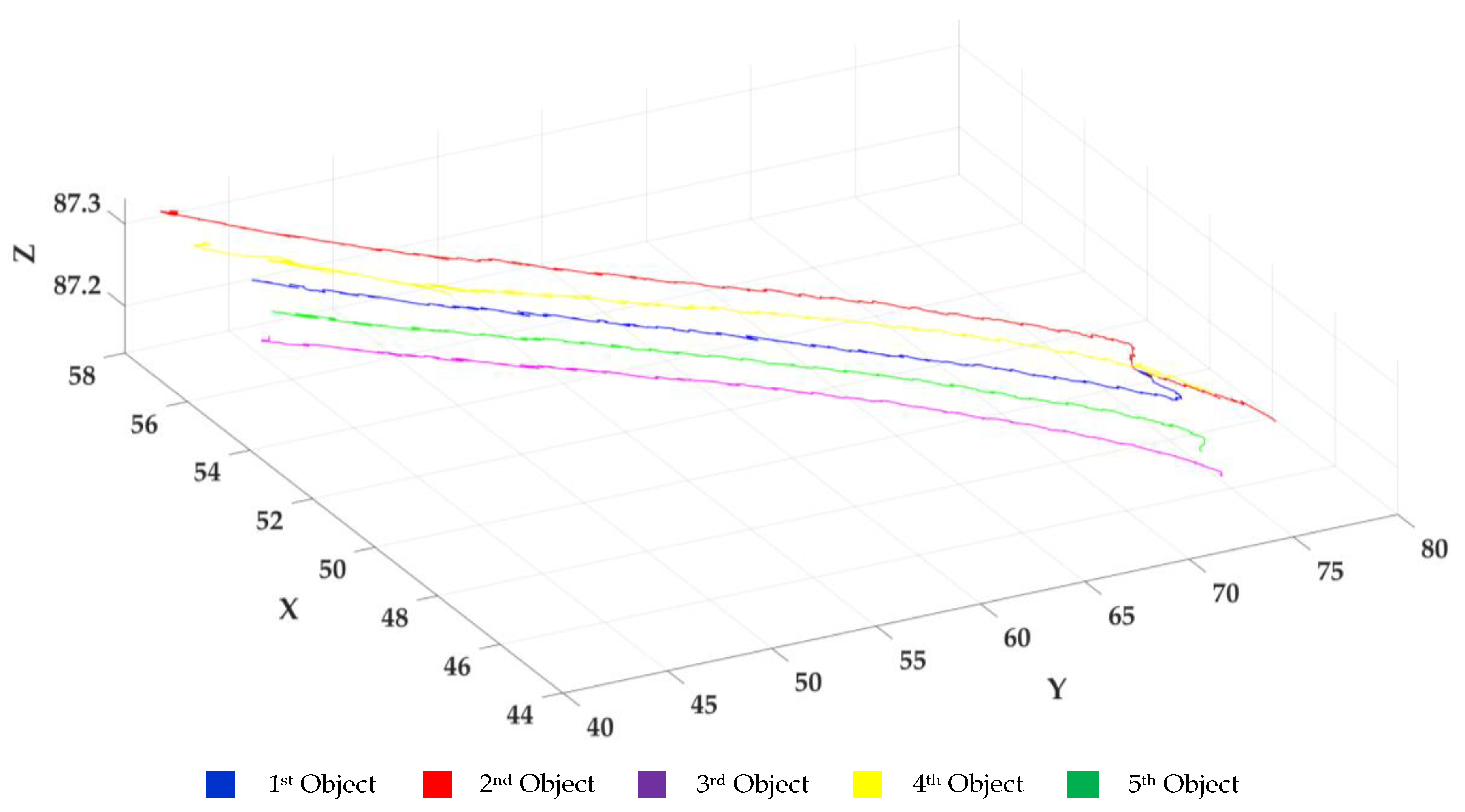

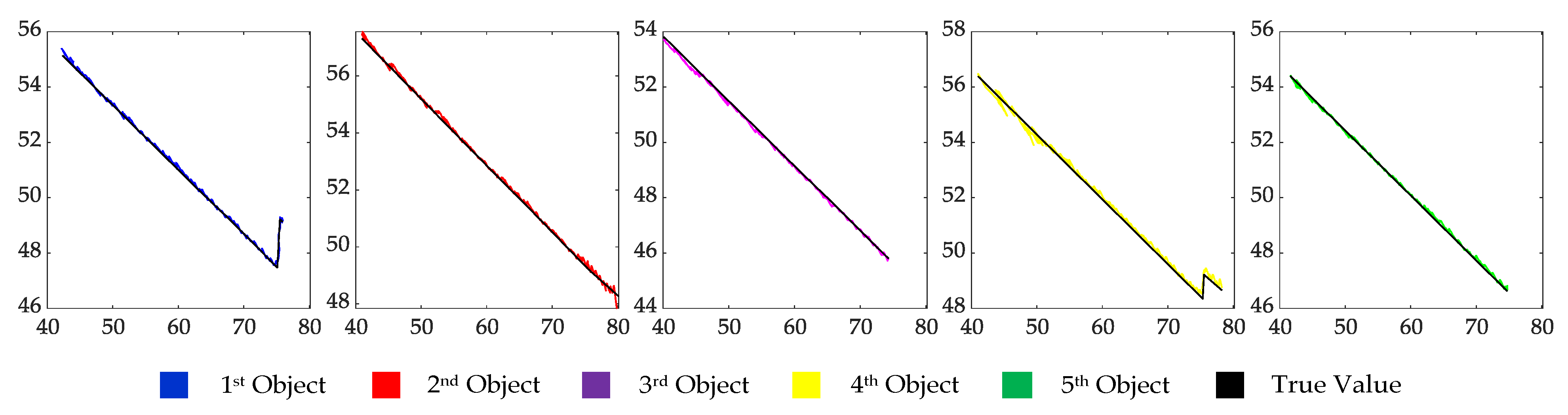

5.5. Estimating the Object Location

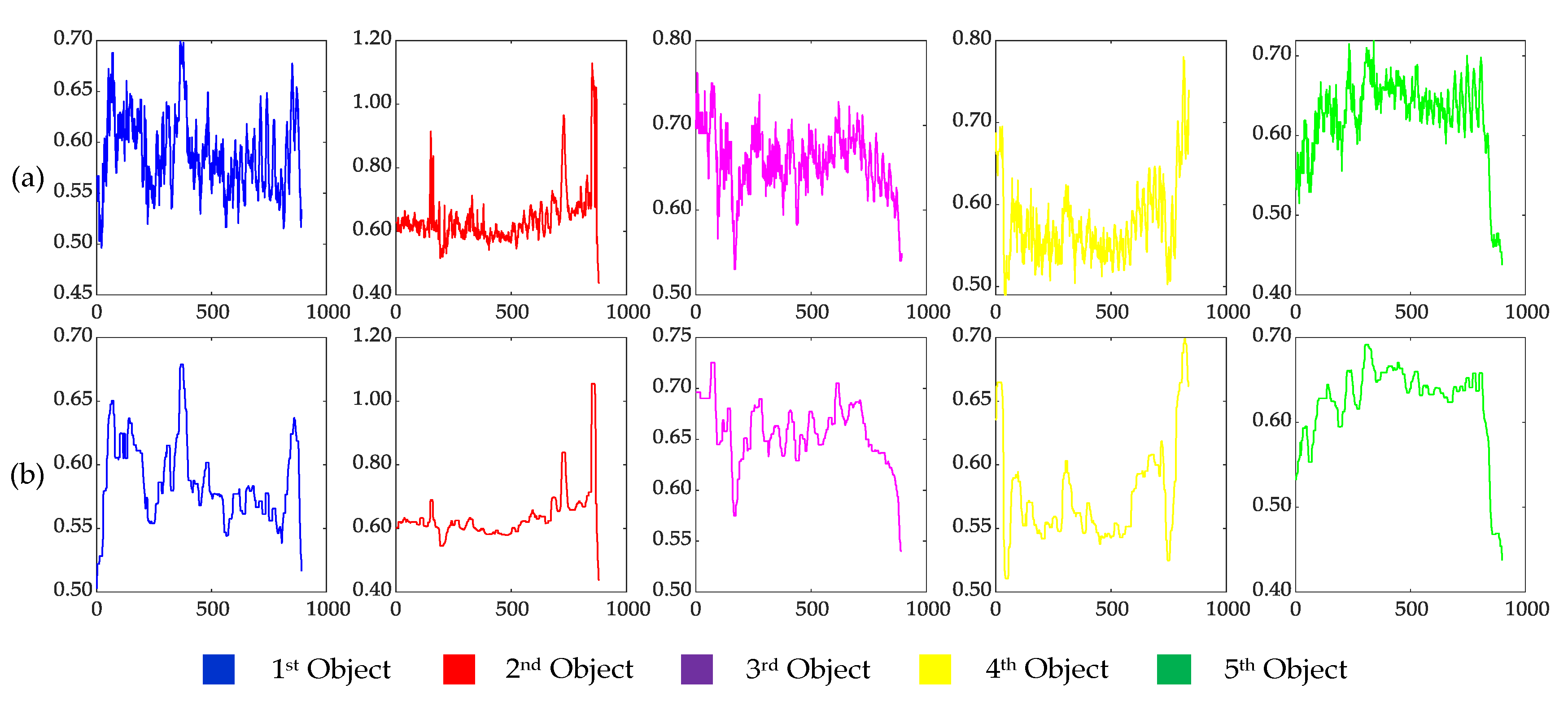

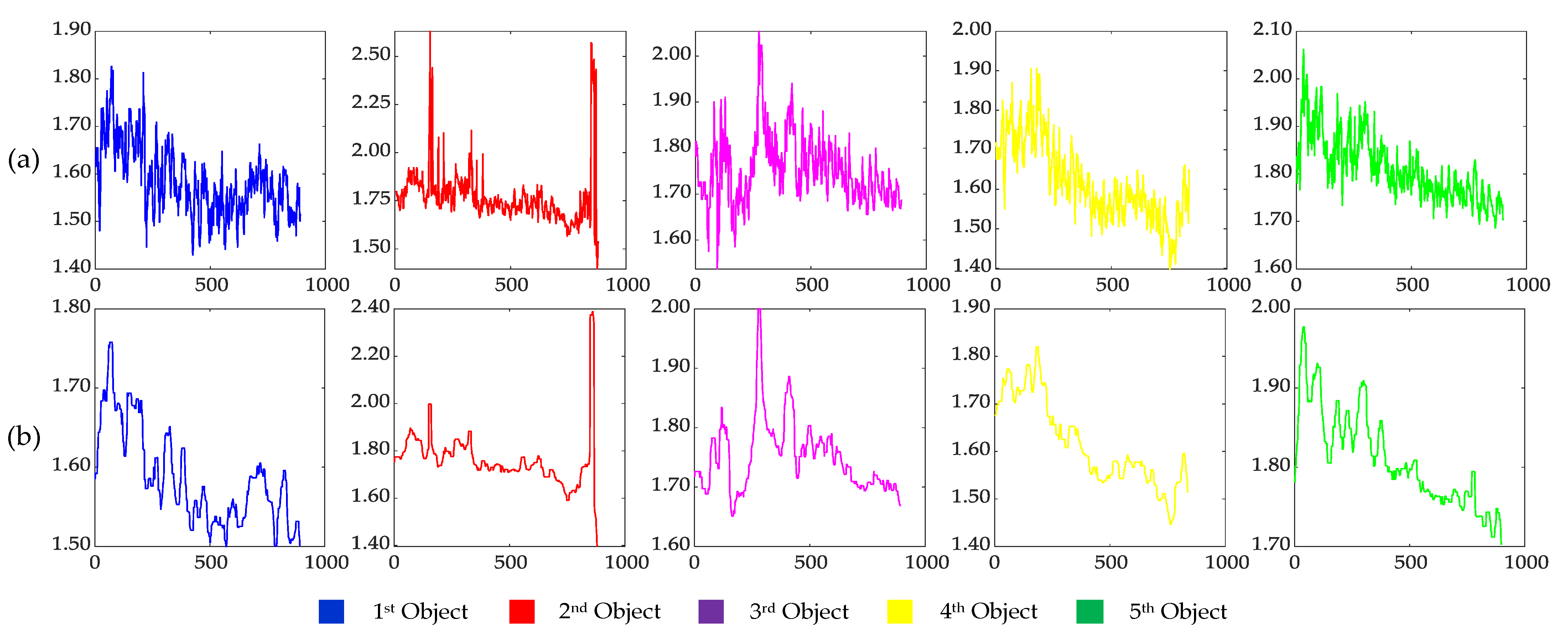

5.6. Estimating the Object Width and Height

5.7. Spatial–Temporal Information of Moving Objects

5.8. Statistics of the Experimental Time

5.9. Analysis of the Experimental Results

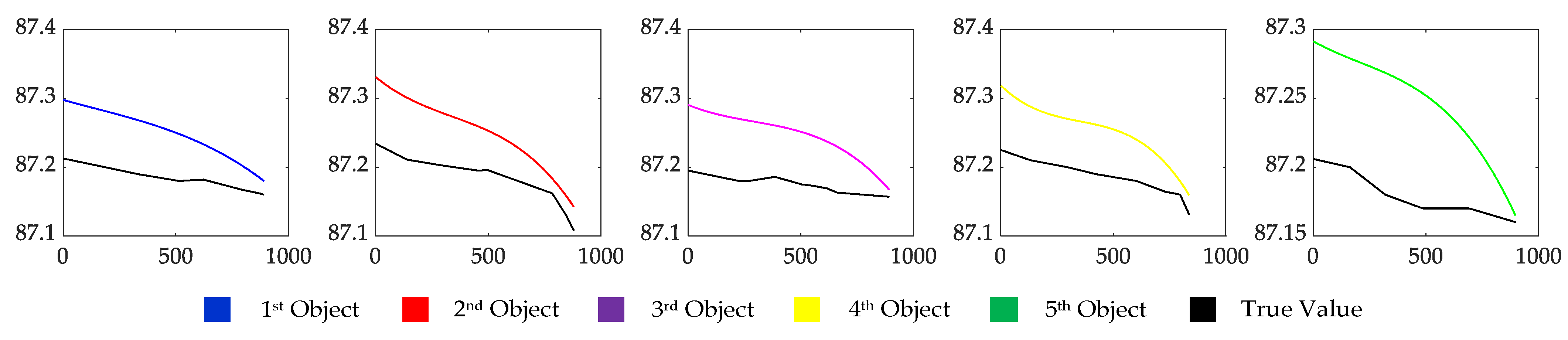

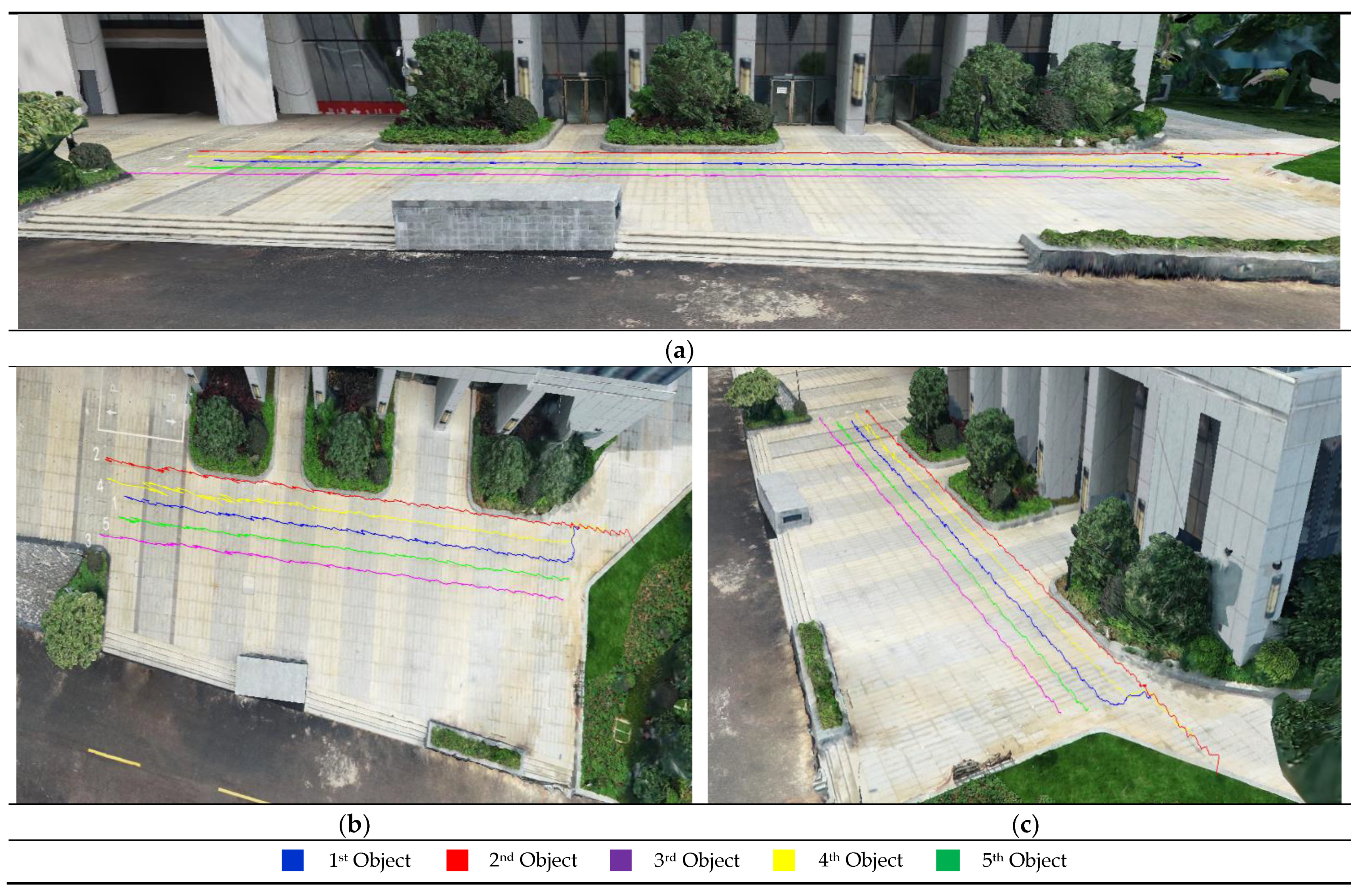

6. Visualization

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Point | u/pixel | v/pixel | X/m | Y/m | Z/m |
|---|---|---|---|---|---|
| B1 | 1220 | 848 | 46.129 | 75.967 | 87.433 |
| B6 | 472 | 713 | 42.334 | 69.258 | 87.127 |
| B25 | 700 | 401 | 50.669 | 53.49 | 87.186 |
| B32 | 756 | 242 | 68.291 | 14.321 | 87.586 |
| B37 | 1193 | 219 | 56.517 | 62.117 | 90.979 |

| Object ID | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Average width/m | 0.59 | 0.64 | 0.66 | 0.58 | 0.62 |
| Average height/m | 1.59 | 1.76 | 1.75 | 1.61 | 1.80 |

| Object ID | X/m | Y/m | Z/m | Width/m | Height/m | Frame |
|---|---|---|---|---|---|---|
| 4 | 53.323 | 54.572 | 87.267 | 0.603 | 1.622 | 368 |
| 4 | 53.367 | 54.480 | 87.266 | 0.603 | 1.612 | 369 |
| 4 | 53.367 | 54.480 | 87.266 | 0.603 | 1.612 | 370 |
| 4 | 53.386 | 54.489 | 87.266 | 0.603 | 1.608 | 371 |
| 4 | 53.386 | 54.489 | 87.266 | 0.603 | 1.608 | 372 |
| 4 | 53.341 | 54.581 | 87.267 | 0.603 | 1.608 | 373 |
| 4 | 53.360 | 54.590 | 87.267 | 0.594 | 1.608 | 374 |
| 4 | 53.379 | 54.599 | 87.267 | 0.594 | 1.608 | 375 |
| 4 | 53.156 | 55.109 | 87.270 | 0.594 | 1.608 | 376 |
| 4 | 53.156 | 55.109 | 87.270 | 0.594 | 1.608 | 377 |
| 4 | 53.156 | 55.109 | 87.270 | 0.594 | 1.608 | 378 |
| 4 | 53.192 | 55.126 | 87.270 | 0.594 | 1.608 | 379 |
| 4 | 53.277 | 54.999 | 87.262 | 0.592 | 1.608 | 380 |
| 4 | 53.274 | 55.052 | 87.265 | 0.585 | 1.608 | 381 |
| 4 | 53.292 | 55.061 | 87.265 | 0.585 | 1.626 | 382 |
| 4 | 53.358 | 54.925 | 87.264 | 0.585 | 1.628 | 383 |
| 4 | 53.377 | 54.934 | 87.264 | 0.585 | 1.631 | 384 |

| Core Hardware for Computing | CPU | GPU |
|---|---|---|
| Model | Intel Xeon W-2145 3.70 GHz 8 G RAM | Nvidia Quadro P4000 |
| Object Detection and Tracking Time (s) | 1068.37 | 125.84 |
| Spatial–temporal Information Extraction Time (s) | 0.84 | 0.84 |
| Total Computing Time (s) | 1069.21 | 126.68 |
| Average Computing Time per Frame (s) | 1.19 | 0.14 |
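
The totals in the table above are the sum of the two processing stages, so the effect of the GPU can be checked directly. The short sketch below only re-derives figures from the reported timings; the variable names are illustrative.

```python
# Reported stage timings in seconds, copied from the table above.
detect_track = {"CPU": 1068.37, "GPU": 125.84}
extraction = 0.84  # spatial-temporal information extraction, same on both

# Total time per device is detection/tracking plus extraction.
totals = {dev: round(t + extraction, 2) for dev, t in detect_track.items()}

# Overall speed-up of the GPU run and the share of time spent on extraction.
speedup = totals["CPU"] / totals["GPU"]
extraction_share_gpu = extraction / totals["GPU"]

print(totals)
print(f"speed-up: {speedup:.2f}x, extraction share on GPU: {extraction_share_gpu:.2%}")
```

Under these figures the GPU run is roughly 8.4 times faster overall, and spatial–temporal information extraction accounts for well under 1% of the GPU total, so object detection and tracking dominates the cost.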

| Object ID | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| ME/cm | 31 | 40 | 22 | 48 | 19 |
| RMSE/cm | 4 | 6 | 5 | 7 | 4 |

| Object ID | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| ME/cm | 9 | 10 | 10 | 9 | 9 |
| RMSE/cm | 1.9 | 2.2 | 2 | 1.7 | 2.3 |

| Object ID | Width/m (estimated) | Width/m (reference) | Error/cm | Height/m (estimated) | Height/m (reference) | Error/cm |
|---|---|---|---|---|---|---|
| 1 | 0.59 | 0.55 | 4 | 1.59 | 1.61 | −2 |
| 2 | 0.64 | 0.59 | 5 | 1.76 | 1.75 | 1 |
| 3 | 0.66 | 0.61 | 5 | 1.75 | 1.73 | 2 |
| 4 | 0.58 | 0.54 | 4 | 1.61 | 1.60 | 1 |
| 5 | 0.62 | 0.60 | 2 | 1.80 | 1.78 | 2 |
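
The error columns in the table above can be reproduced from the paired values. The sketch below assumes that the first value in each pair is the estimate and the second is the reference measurement, an interpretation that is consistent with the average widths and heights reported earlier but is not stated in the table itself; the helper `error_cm` is a hypothetical name.

```python
# (object id, width pair in metres, height pair in metres), copied from the table.
# Assumption: each pair is (estimated, reference).
rows = [
    (1, (0.59, 0.55), (1.59, 1.61)),
    (2, (0.64, 0.59), (1.76, 1.75)),
    (3, (0.66, 0.61), (1.75, 1.73)),
    (4, (0.58, 0.54), (1.61, 1.60)),
    (5, (0.62, 0.60), (1.80, 1.78)),
]


def error_cm(estimated_m: float, reference_m: float) -> int:
    """Signed error in centimetres, rounded to the nearest centimetre."""
    return round((estimated_m - reference_m) * 100)


width_errors = [error_cm(*width) for _, width, _ in rows]
height_errors = [error_cm(*height) for _, _, height in rows]
print(width_errors)
print(height_errors)
```

With this reading, every width is overestimated by 2 to 5 cm, while the height errors stay within 2 cm in magnitude and object 1 is the only underestimate.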

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, S.; Dong, X.; Hao, X.; Miao, S. Extracting Objects’ Spatial–Temporal Information Based on Surveillance Videos and the Digital Surface Model. ISPRS Int. J. Geo-Inf. 2022, 11, 103. https://doi.org/10.3390/ijgi11020103
