DeepDBSCAN: Deep Density-Based Clustering for Geo-Tagged Photos

Abstract

1. Introduction

    SELECT id, DBSCAN(gps, eps := 50, mpts := 3) OVER () AS cid
    FROM tourPhotos
    WHERE ST_within(gps, $Yellowstone) AND IsDeepTrue(photo, 'Bear', 'CNN_COCO');

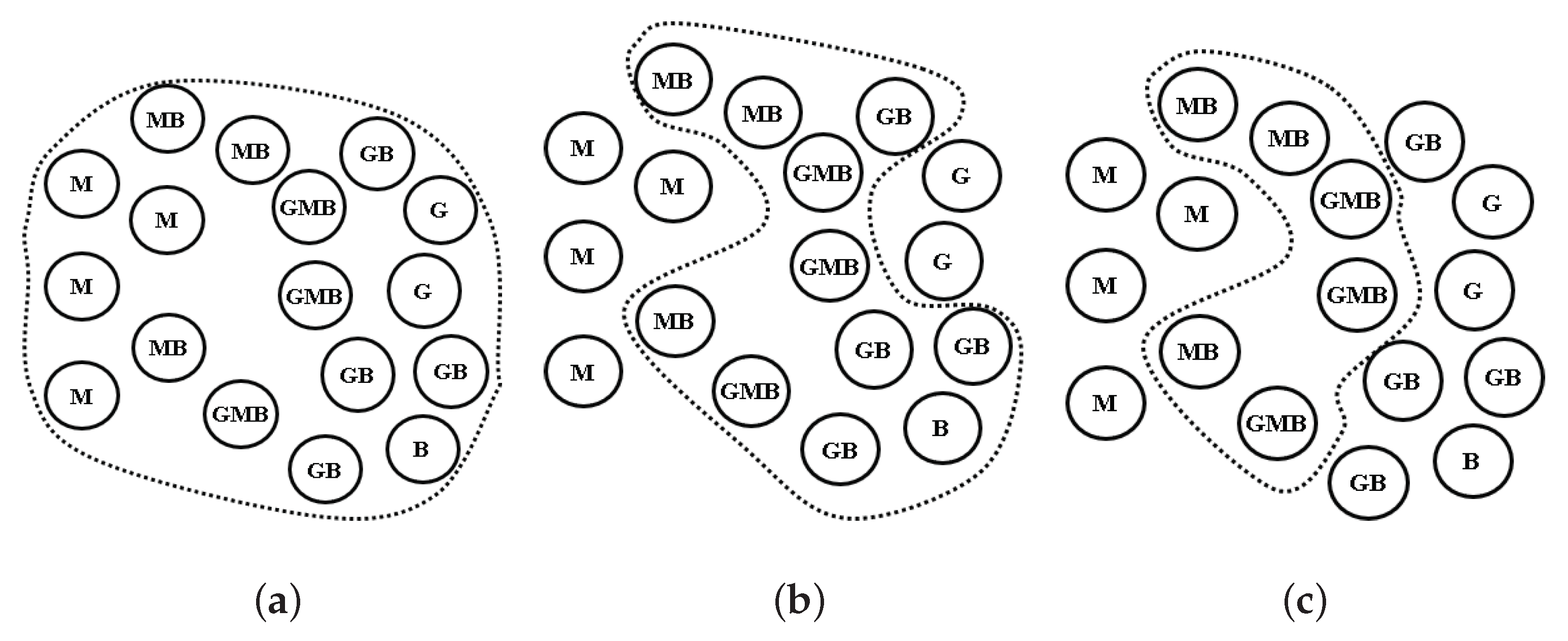

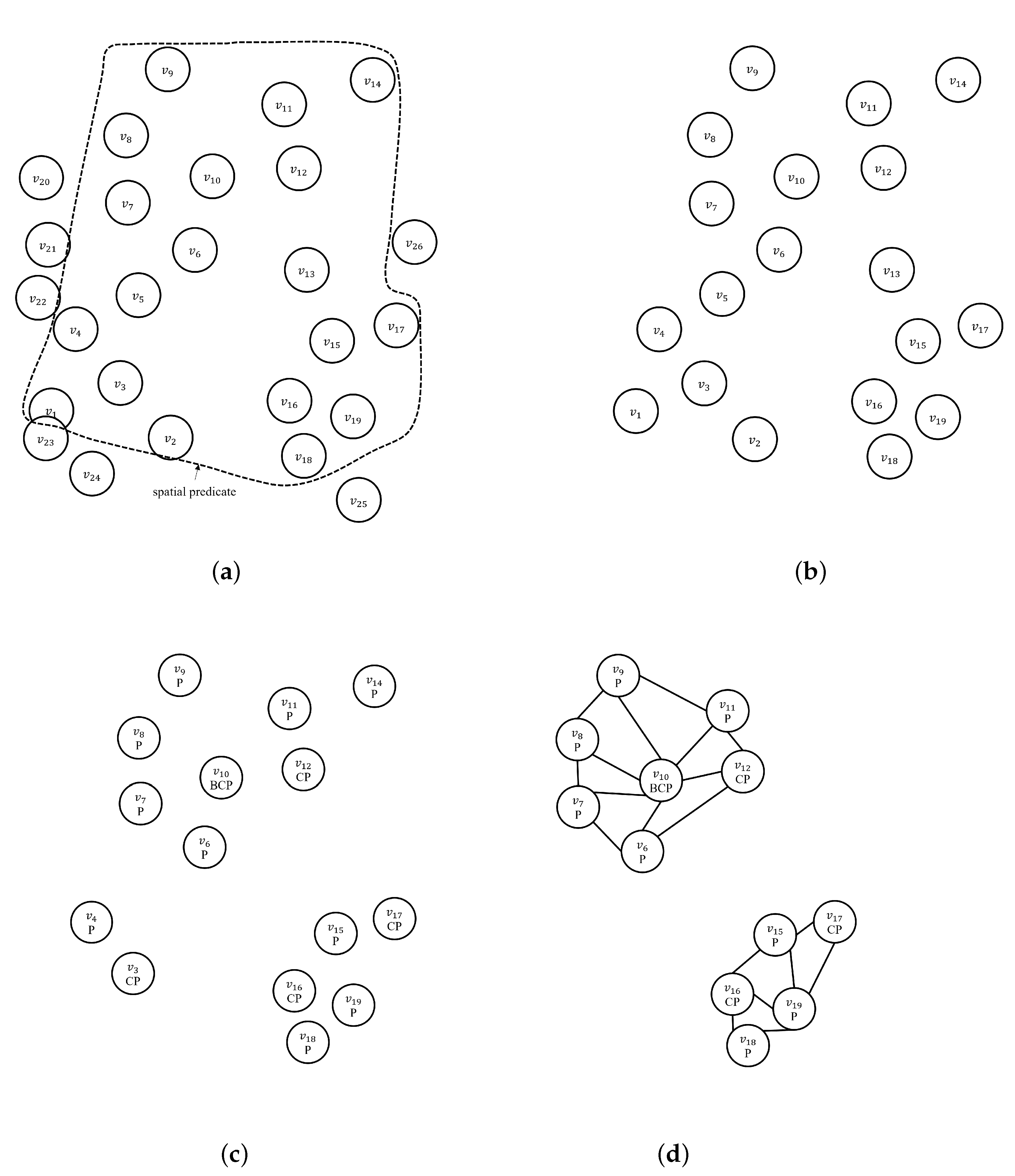

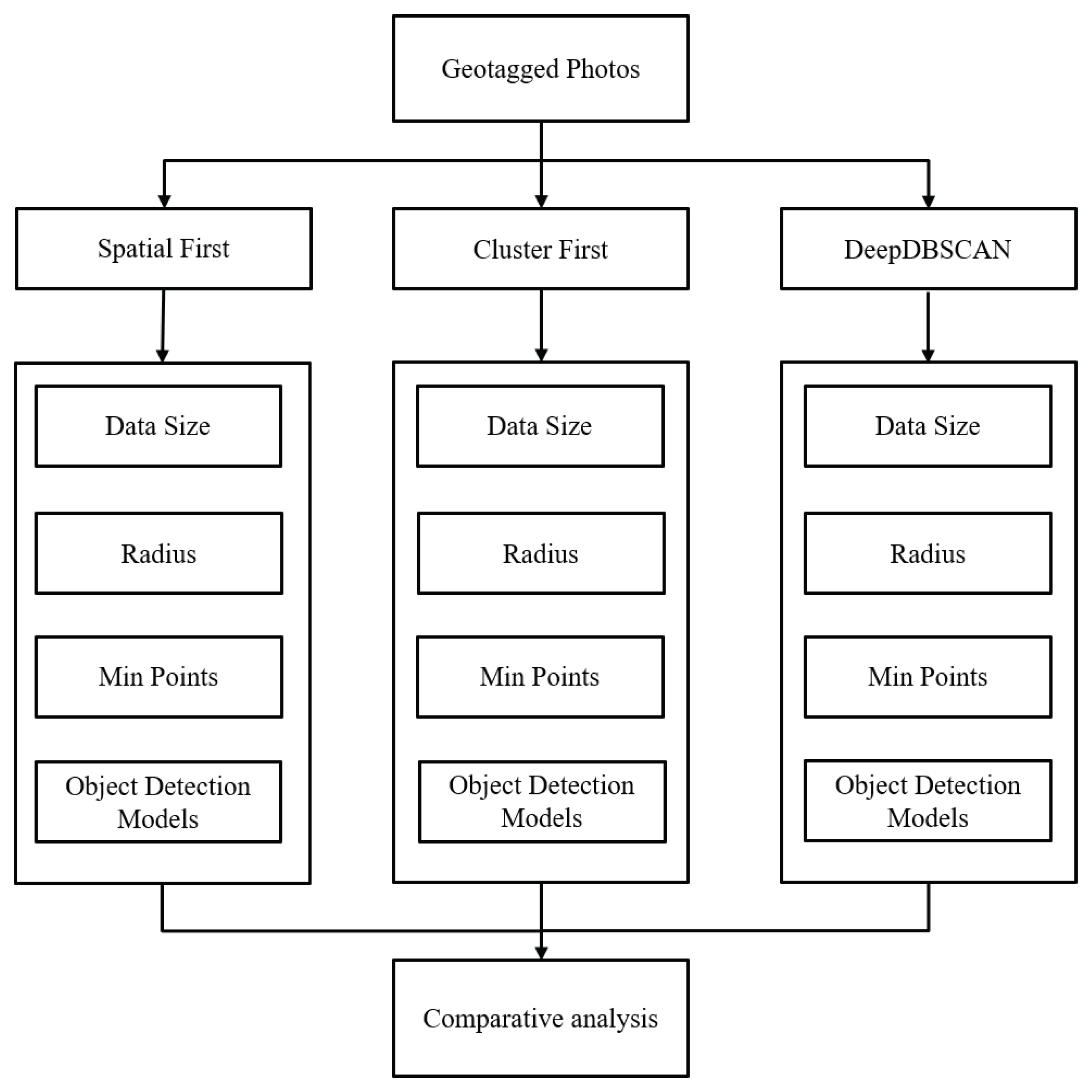

- SpatialFirst DBSCAN: This algorithm performs spatial selection first. Deep detection then runs only on the spatially filtered photos, which reduces the number of deep detections.

- ClusterFirst DBSCAN: Spatial selection and density-based clustering are performed first, and deep detection and selection are applied only to the photos that fall in the resulting clusters. Density-based clustering must then be performed a second time on the photos that satisfy the deep predicate.

- DeepDBSCAN: Deep detection is integrated into the clustering algorithm. It is performed selectively on the vertices of the nearest neighbor graph that satisfy the density threshold.

2. Related Work

2.1. Mining GPS and Trajectory Data

2.2. Mining Geo-Tagged Photos

3. Preliminaries

| Algorithm 1: NaiveDBSCAN |
|---|
| Input: P, sp, dp, ε, k |
| Output: C |
| 1: P′ ← deepSelection(P, dp) |
| 2: P″ ← spatialSelection(P′, sp) |
| 3: G ← constructNNGraph(P″, ε, k) |
| 4: C ← expandCluster(G) |
| 5: return C |
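
The first three steps of Algorithm 1 can be sketched in Python as follows. This is a minimal illustration, not the authors' implementation: the `Photo` record, the `labels` field standing in for the output of a deep detector, the rectangular spatial predicate, the planar Euclidean distance, and the rule that a vertex is core when its neighborhood (counting itself) holds at least k photos are all assumptions made for the example. Cluster expansion is omitted; the sketch stops at the nearest neighbor graph, stored with the isCore, isNoise, and neighbors fields used in the vertex tables.

```python
from dataclasses import dataclass, field
from math import hypot


@dataclass(frozen=True)
class Photo:
    pid: str
    x: float            # planar coordinates in metres (assumption)
    y: float
    labels: frozenset   # stand-in for the objects a detector would report


@dataclass
class Vertex:
    is_core: bool = False
    is_noise: bool = False
    neighbors: set = field(default_factory=set)


def deep_selection(photos, dp):
    # Step 1: the deep predicate is evaluated on every input photo.
    return [p for p in photos if dp(p)]


def spatial_selection(photos, sp):
    # Step 2: keep only photos inside the query region.
    return [p for p in photos if sp(p)]


def construct_nn_graph(photos, eps, k):
    # Step 3: brute-force eps-neighborhoods, O(n^2) for clarity.
    graph = {p.pid: Vertex() for p in photos}
    for a in photos:
        for b in photos:
            if a.pid != b.pid and hypot(a.x - b.x, a.y - b.y) <= eps:
                graph[a.pid].neighbors.add(b.pid)
    for v in graph.values():
        # Assumed core rule: the vertex plus its neighbors number at least k.
        v.is_core = len(v.neighbors) + 1 >= k
    for v in graph.values():
        # Noise: not core and not adjacent to any core vertex.
        v.is_noise = not v.is_core and not any(
            graph[n].is_core for n in v.neighbors)
    return graph


def has_bear(photo):
    return "bear" in photo.labels


def in_park(photo):
    return 0 <= photo.x <= 1000 and 0 <= photo.y <= 1000


photos = [
    Photo("a", 0, 0, frozenset({"bear"})),
    Photo("b", 10, 0, frozenset({"bear"})),
    Photo("c", 0, 10, frozenset({"bear"})),
    Photo("d", 5, 5, frozenset({"elk"})),
    Photo("e", 900, 900, frozenset({"bear"})),
]
selected = spatial_selection(deep_selection(photos, has_bear), in_park)
graph = construct_nn_graph(selected, eps=50, k=3)
```

In this toy input the detector runs on all five photos, including the one that lies far from any dense area, which is the cost the later algorithms try to avoid.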

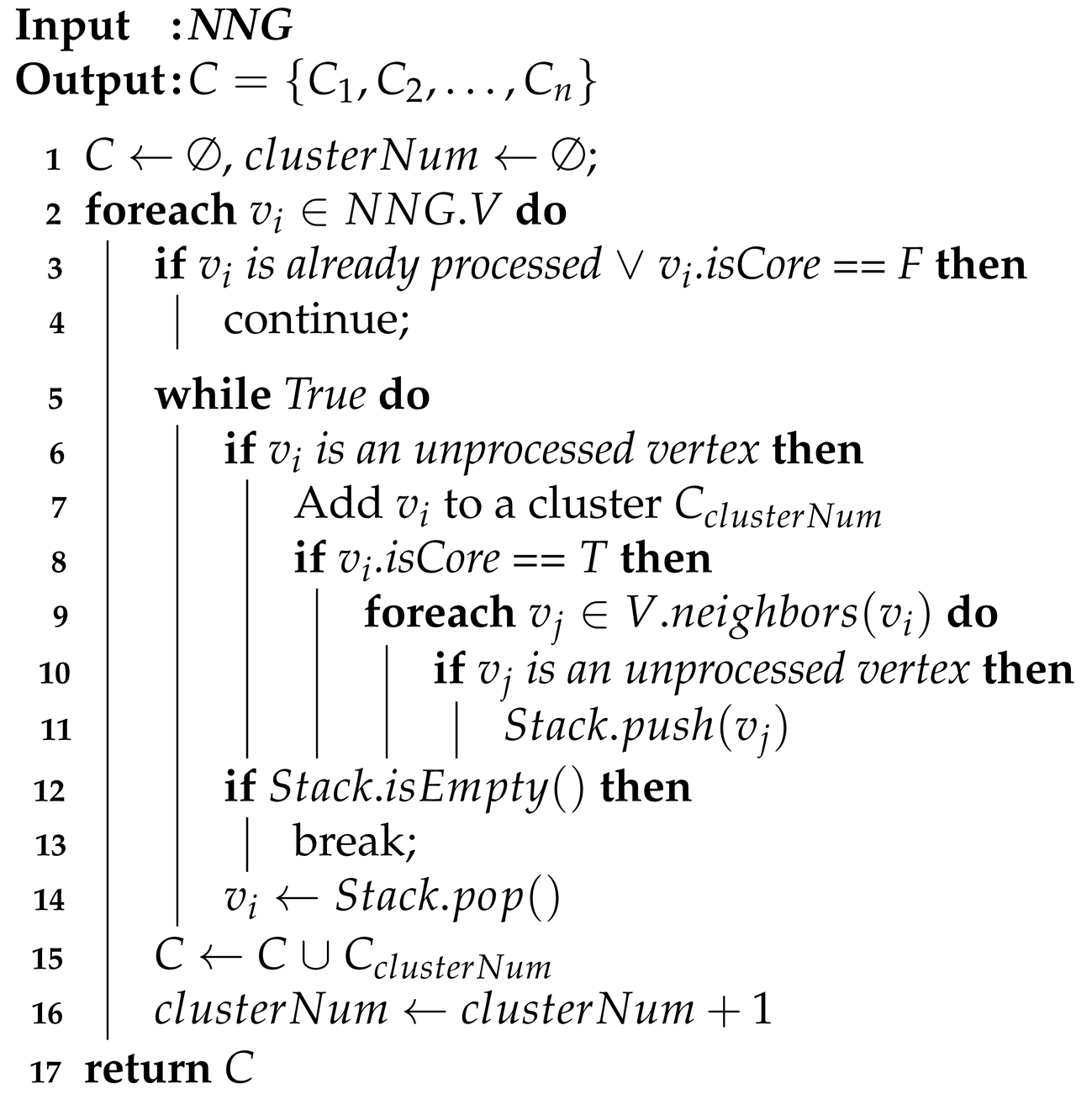

| Algorithm 2: expandCluster. |

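
Cluster expansion over a nearest neighbor graph can be sketched as below. The sketch follows the standard DBSCAN expansion rather than the authors' exact procedure, and the dictionary layout (an `is_core` flag and a `neighbors` set per vertex) is an assumption modelled on the vertex tables in this paper. Border vertices are assigned to the first cluster that reaches them, and vertices that no core vertex reaches are left unassigned as noise.

```python
from collections import deque


def expand_cluster(graph):
    """Group vertices into clusters by breadth-first search from core vertices.

    graph maps a vertex id to {"is_core": bool, "neighbors": set of ids}.
    Returns a list of clusters, each a set of vertex ids.
    """
    assigned = {}
    clusters = []
    for seed, rec in graph.items():
        if not rec["is_core"] or seed in assigned:
            continue
        cid = len(clusters)
        clusters.append({seed})
        assigned[seed] = cid
        queue = deque([seed])
        while queue:
            v = queue.popleft()
            for n in graph[v]["neighbors"]:
                if n in assigned:
                    continue
                assigned[n] = cid
                clusters[cid].add(n)
                # Only core vertices propagate the cluster further.
                if graph[n]["is_core"]:
                    queue.append(n)
    return clusters


# Hand-built graph with hypothetical vertex names; core flags assume k = 4.
graph = {
    "p1": {"is_core": True, "neighbors": {"p2", "p3", "p4"}},
    "p2": {"is_core": False, "neighbors": {"p1", "p3"}},
    "p3": {"is_core": False, "neighbors": {"p1", "p2"}},
    "p4": {"is_core": False, "neighbors": {"p1"}},
    "p5": {"is_core": False, "neighbors": set()},
    "p6": {"is_core": True, "neighbors": {"p7", "p8", "p9"}},
    "p7": {"is_core": False, "neighbors": {"p6"}},
    "p8": {"is_core": False, "neighbors": {"p6"}},
    "p9": {"is_core": False, "neighbors": {"p6"}},
}
clusters = expand_cluster(graph)
```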

4. Deep Content-Based Density Clustering

4.1. Spatial-First Approach

| Algorithm 3: SpatialFirst DBSCAN |
|---|
| Input: P, sp, dp, ε, k |
| Output: C |
| 1: P′ ← spatialSelection(P, sp) |
| 2: P″ ← deepSelection(P′, dp) |
| 3: G ← constructNNGraph(P″, ε, k) |
| 4: C ← expandCluster(G) |
| 5: return C |
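
The saving of the spatial-first order comes from the number of photos that reach the detector. The sketch below counts detector calls under both orders. The `CountingDetector` wrapper, the tuple layout of a photo, and the rectangular region are illustrative assumptions; a real deep predicate would run a CNN rather than a set lookup.

```python
class CountingDetector:
    """Wraps a simulated deep predicate and counts how often it is evaluated."""

    def __init__(self, target):
        self.target = target
        self.calls = 0

    def __call__(self, photo):
        self.calls += 1
        return self.target in photo[3]   # photo = (id, x, y, labels)


def in_region(photo, xmin=0, ymin=0, xmax=100, ymax=100):
    _, x, y, _ = photo
    return xmin <= x <= xmax and ymin <= y <= ymax


# 50 synthetic photos along a diagonal; every second one shows a bear.
photos = [(i, i * 10, i * 10, {"bear"} if i % 2 == 0 else {"elk"})
          for i in range(50)]

# Deep selection first: the detector sees every photo.
naive = CountingDetector("bear")
naive_result = [p for p in photos if naive(p)]
naive_result = [p for p in naive_result if in_region(p)]

# Spatial selection first: the detector sees only photos in the region.
spatial_first = CountingDetector("bear")
sf_result = [p for p in photos if in_region(p)]
sf_result = [p for p in sf_result if spatial_first(p)]
```

Both orders select the same photos, but here the detector is evaluated 50 times in the deep-first order and 11 times in the spatial-first order, because only 11 photos fall inside the region.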

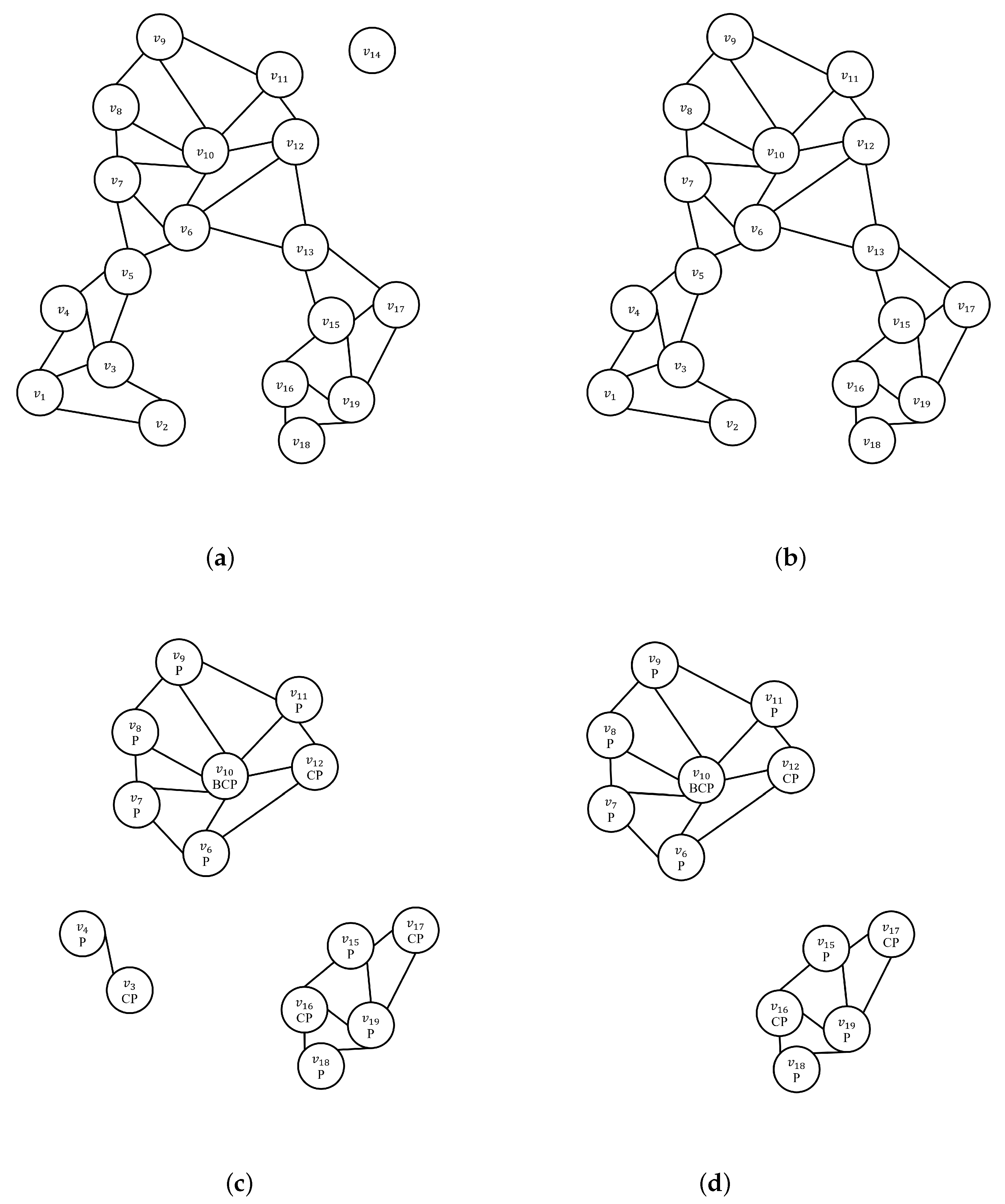

4.2. Clustering-First Approach

| Algorithm 4: ClusterFirst DBSCAN |
|---|
| Input: P, sp, dp, ε, k |
| Output: C′ |
| 1: P′ ← spatialSelection(P, sp) |
| 2: G ← constructNNGraph(P′, ε, k) |
| 3: C ← expandCluster(G) |
| 4: P″ ← deepSelection(flat(C), dp) |
| 5: G′ ← constructNNGraph(P″, ε, k) |
| 6: C′ ← expandCluster(G′) |
| 7: return C′ |
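
The two clustering passes of Algorithm 4 can be sketched as follows. This is an illustration under assumptions, not the authors' code: `dbscan` is a compact brute-force DBSCAN written for the example, photos are stored as `{id: ((x, y), labels)}`, the deep predicate is simulated by a label lookup, and a vertex counts as core when it and its neighbors number at least k.

```python
from math import hypot


def dbscan(points, eps, k):
    """Cluster points = {id: (x, y)}; returns sorted id lists. O(n^2) sketch."""
    nbrs = {a: {b for b in points if b != a and
                hypot(points[a][0] - points[b][0],
                      points[a][1] - points[b][1]) <= eps}
            for a in points}
    core = {a for a in points if len(nbrs[a]) + 1 >= k}
    seen, clusters = set(), []
    for seed in points:
        if seed not in core or seed in seen:
            continue
        seen.add(seed)
        cluster, stack = [], [seed]
        while stack:
            v = stack.pop()
            cluster.append(v)
            if v in core:                      # only core points expand
                for n in nbrs[v] - seen:
                    seen.add(n)
                    stack.append(n)
        clusters.append(sorted(cluster))
    return clusters


def cluster_first(photos, sp, dp, eps, k):
    spatial = {i: xy for i, (xy, _) in photos.items() if sp(xy)}   # step 1
    first = dbscan(spatial, eps, k)                                # steps 2-3
    members = [i for c in first for i in c]                        # flat(C)
    kept = {i: spatial[i] for i in members if dp(photos[i][1])}    # step 4
    return dbscan(kept, eps, k)                                    # steps 5-6


calls = []


def has_bear(labels):
    calls.append(1)              # count simulated detector invocations
    return "bear" in labels


def in_park(xy):
    return 0 <= xy[0] <= 1000 and 0 <= xy[1] <= 1000


photos = {
    "a1": ((0, 0), {"bear"}), "a2": ((10, 0), {"bear"}),
    "a3": ((0, 10), {"bear"}), "a4": ((10, 10), {"bear"}),
    "b1": ((500, 500), {"bear"}), "b2": ((510, 500), {"elk"}),
    "b3": ((500, 510), {"elk"}), "b4": ((510, 510), {"elk"}),
    "z": ((900, 900), {"bear"}),
}
result = cluster_first(photos, in_park, has_bear, eps=50, k=3)
```

The isolated photo `z` is noise after the first pass and never reaches the detector, so the detector runs on 8 of the 9 photos. The second pass is what dissolves the `b` group, where only one photo satisfies the deep predicate.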

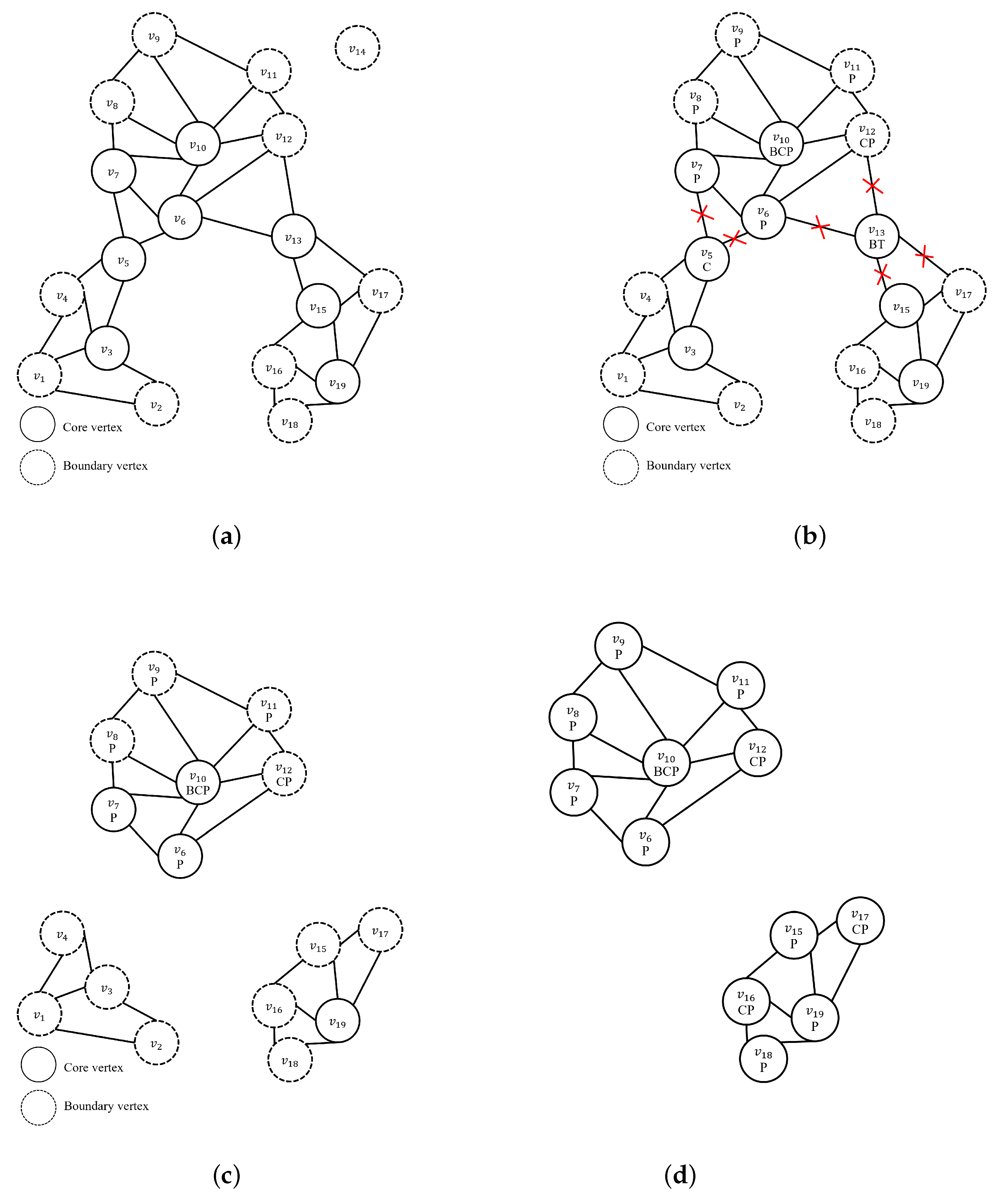

4.3. DeepDBSCAN Approach

| Algorithm 5: DeepDBSCAN |
|---|
| Input: P, sp, dp, ε, k |
| Output: C |
| 1: P′ ← spatialSelection(P, sp) |
| 2: G ← constructNNGraph(P′, ε, k) |
| 3: G′ ← NNG_DeepFiltering(G, dp, k) |
| 4: C ← expandCluster(G′) |
| 5: return C |
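
One way to integrate deep detection into the nearest neighbor graph is sketched below. It is a hedged reading of the idea, not the authors' NNG_DeepFiltering or cascadeRemove: the function names `build_neighbors`, `deep_filter`, and `deep_dbscan`, the repeated-pass strategy, and the core rule (a vertex plus its neighbors number at least k) are all assumptions. The detector is evaluated only around vertices that can still be core. Removing a failing photo shrinks its neighbors' neighborhoods, which can in turn demote them from core status.

```python
from math import hypot


def build_neighbors(points, eps):
    """points = {id: (x, y)}; returns {id: set of ids within eps}. O(n^2)."""
    return {a: {b for b in points if b != a and
                hypot(points[a][0] - points[b][0],
                      points[a][1] - points[b][1]) <= eps}
            for a in points}


def deep_filter(nbrs, dp, k):
    """Remove vertices failing dp, testing only where a core is still possible.

    Mutates nbrs and returns the set of vertices that passed the detector.
    """
    verified = set()
    changed = True
    while changed:
        changed = False
        for v in list(nbrs):
            if v not in nbrs or len(nbrs[v]) + 1 < k:
                continue                       # removed, or cannot be core
            for u in [v, *sorted(nbrs[v])]:
                if u in verified:
                    continue
                if dp(u):
                    verified.add(u)
                    continue
                # u fails the deep predicate: detach it from the graph.
                for n in nbrs.pop(u):
                    nbrs[n].discard(u)
                changed = True
                if u == v or len(nbrs[v]) + 1 < k:
                    break                      # v is gone or demoted
    return verified


def deep_dbscan(points, dp, eps, k):
    nbrs = build_neighbors(points, eps)
    deep_filter(nbrs, dp, k)
    core = {v for v in nbrs if len(nbrs[v]) + 1 >= k}
    seen, clusters = set(), []
    for seed in sorted(core):
        if seed in seen:
            continue
        seen.add(seed)
        cluster, stack = [], [seed]
        while stack:
            v = stack.pop()
            cluster.append(v)
            if v in core:                      # only core vertices expand
                for n in nbrs[v] - seen:
                    seen.add(n)
                    stack.append(n)
        clusters.append(sorted(cluster))
    return clusters


labels = {"a1": "bear", "a2": "bear", "a3": "bear", "a4": "bear",
          "b1": "bear", "b2": "elk", "b3": "elk", "b4": "elk", "z": "bear"}
points = {"a1": (0, 0), "a2": (10, 0), "a3": (0, 10), "a4": (10, 10),
          "b1": (500, 500), "b2": (510, 500), "b3": (500, 510),
          "b4": (510, 510), "z": (900, 900)}
calls = []


def has_bear(pid):
    calls.append(pid)            # count simulated detector invocations
    return labels[pid] == "bear"


clusters = deep_dbscan(points, has_bear, eps=50, k=3)
```

On this toy input the detector is evaluated on 7 of the 9 photos. The isolated photo `z` is never tested, and testing in the `b` group stops as soon as `b1` can no longer be core, so `b4` is skipped as well.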

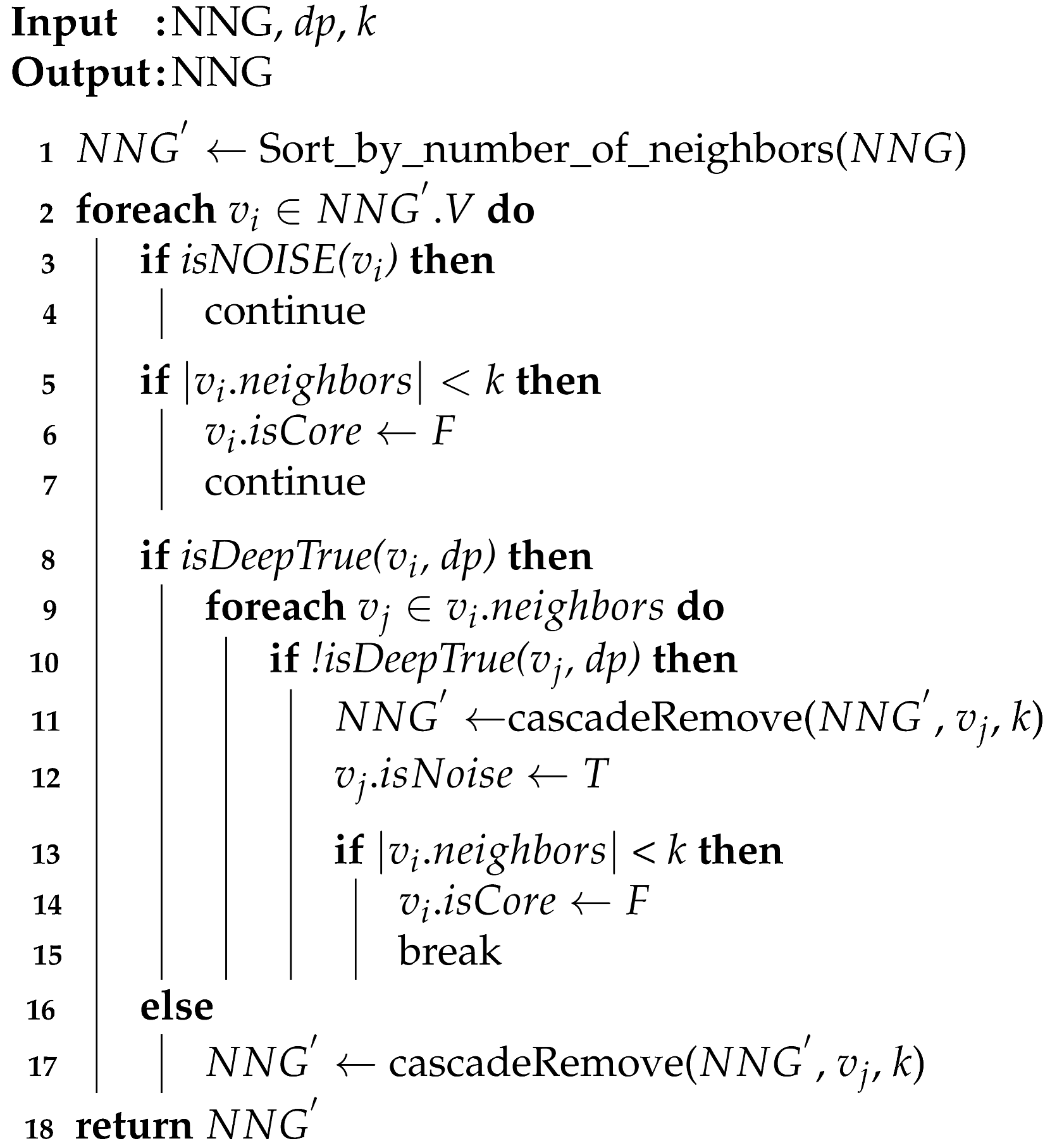

| Algorithm 6: NNG_DeepFiltering. |


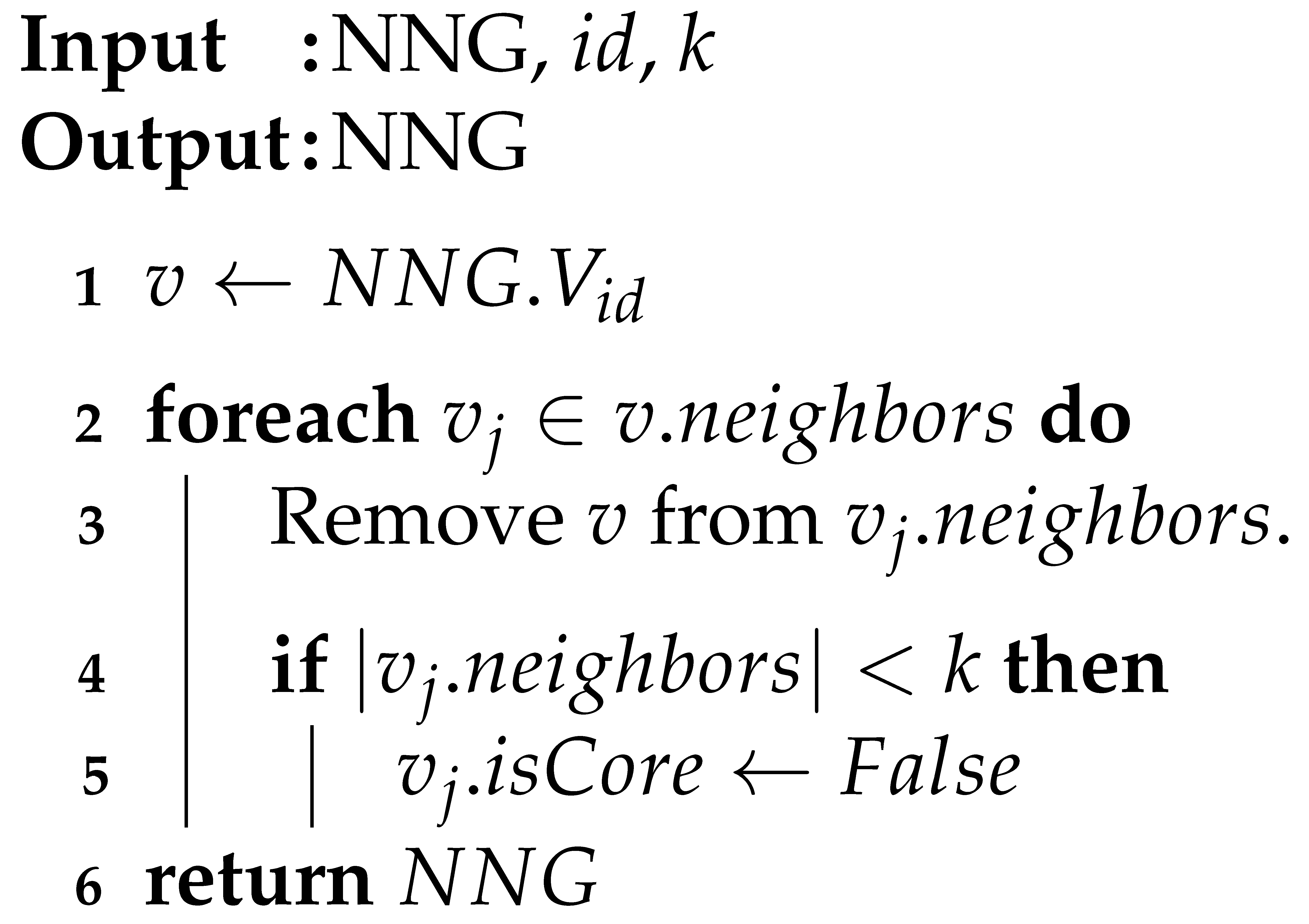

| Algorithm 7: cascadeRemove. |


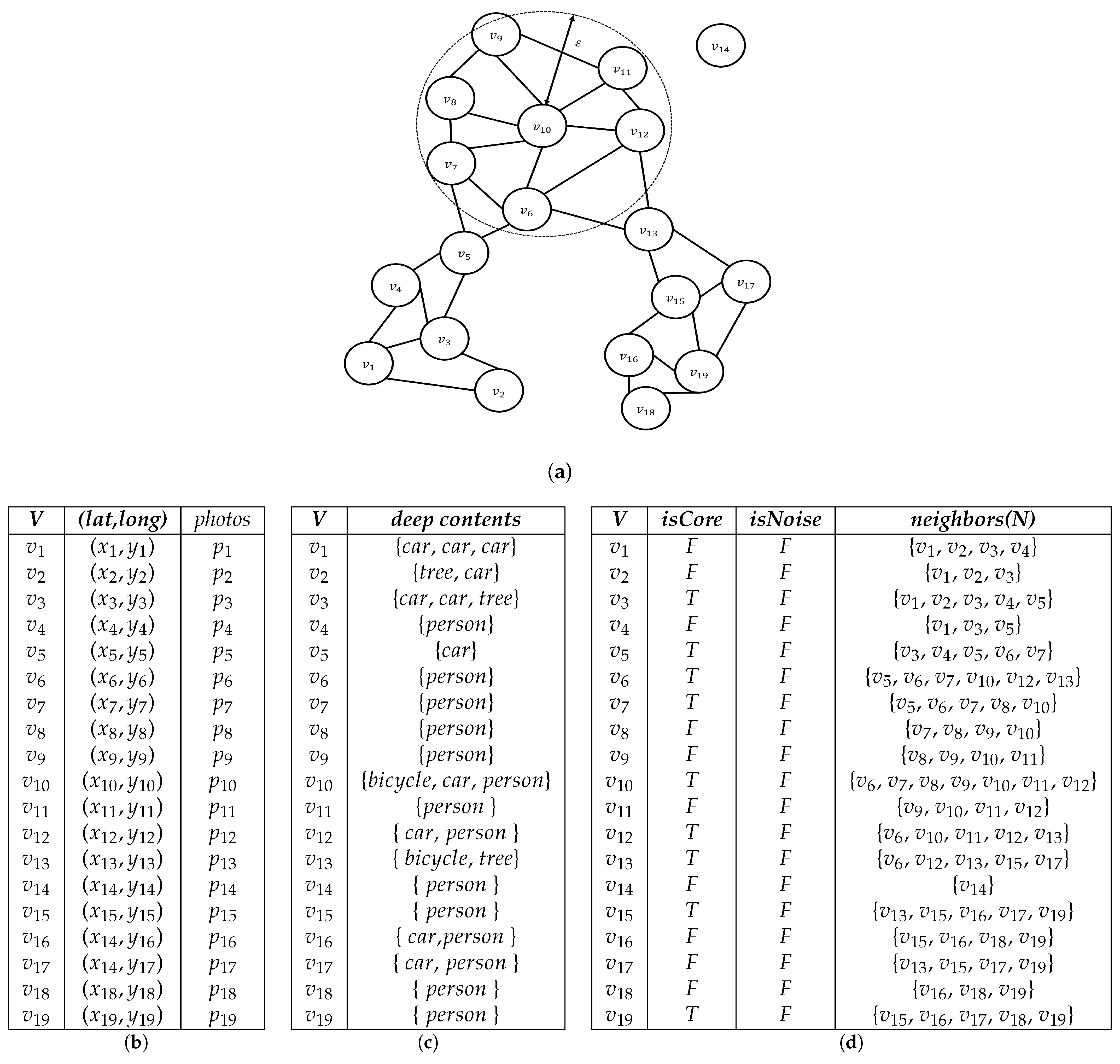

| vertex | isCore | isNoise | neighbors |
|---|---|---|---|
| | F | T | {, , , } |

| vertex | isCore | isNoise | neighbors |
|---|---|---|---|
| | F | F | {, , , } |
| | F | F | {, , } |
| | T | F | {, , , , } |
| | F | F | {, , , } |

5. Experiments and Evaluation

5.1. Experimental Setup

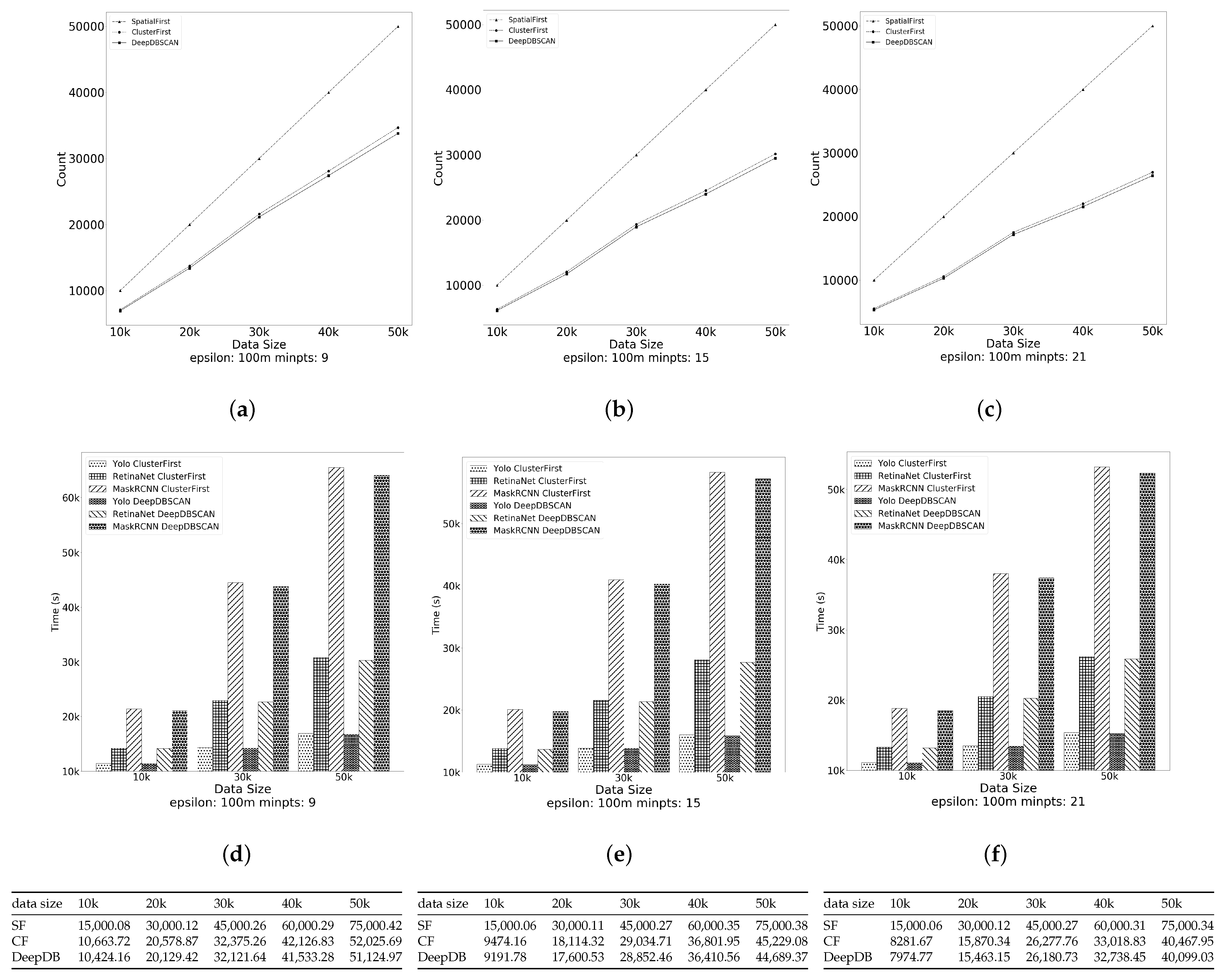

5.2. Effect of the Data Size

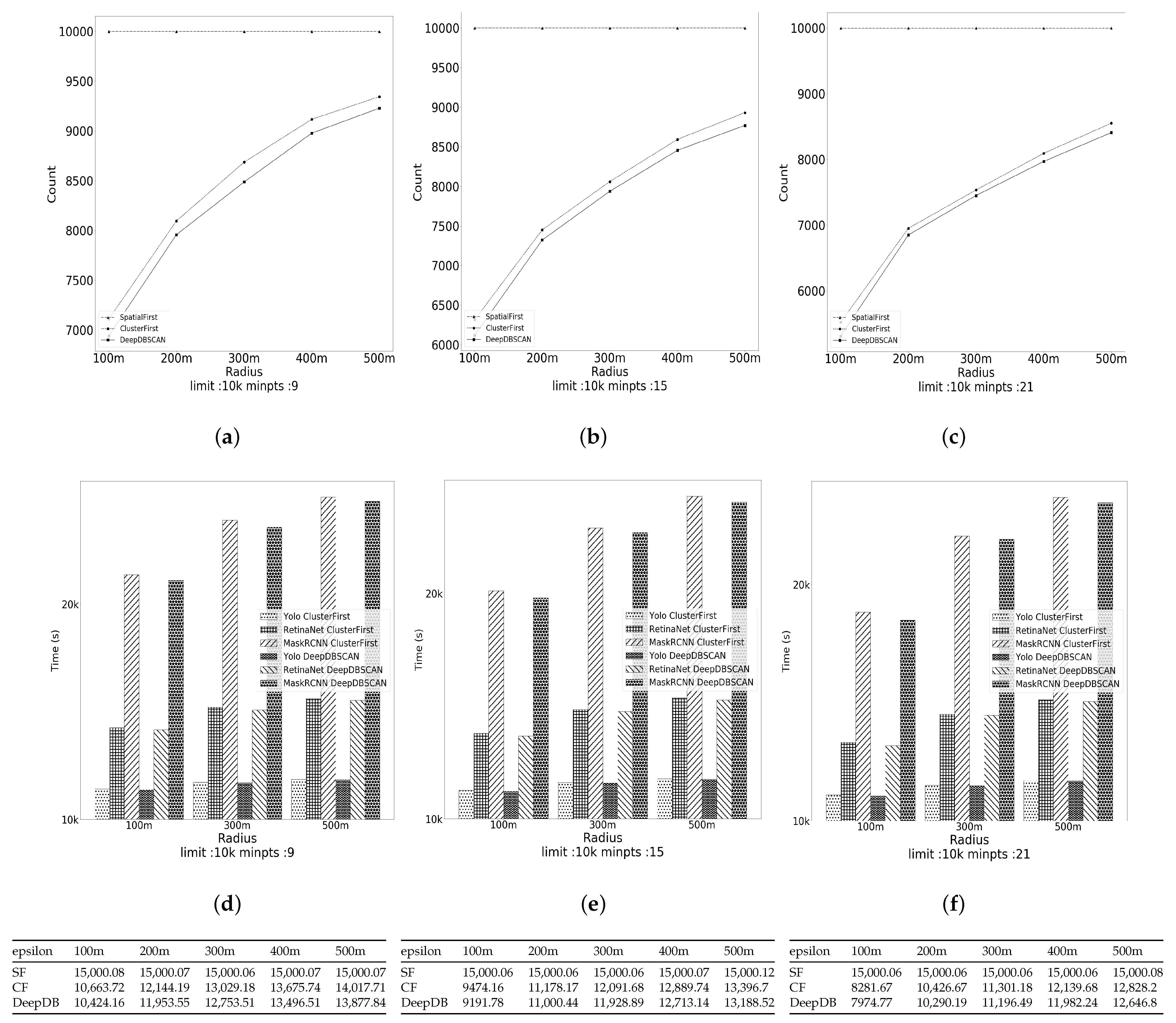

5.3. Effect of the Radius

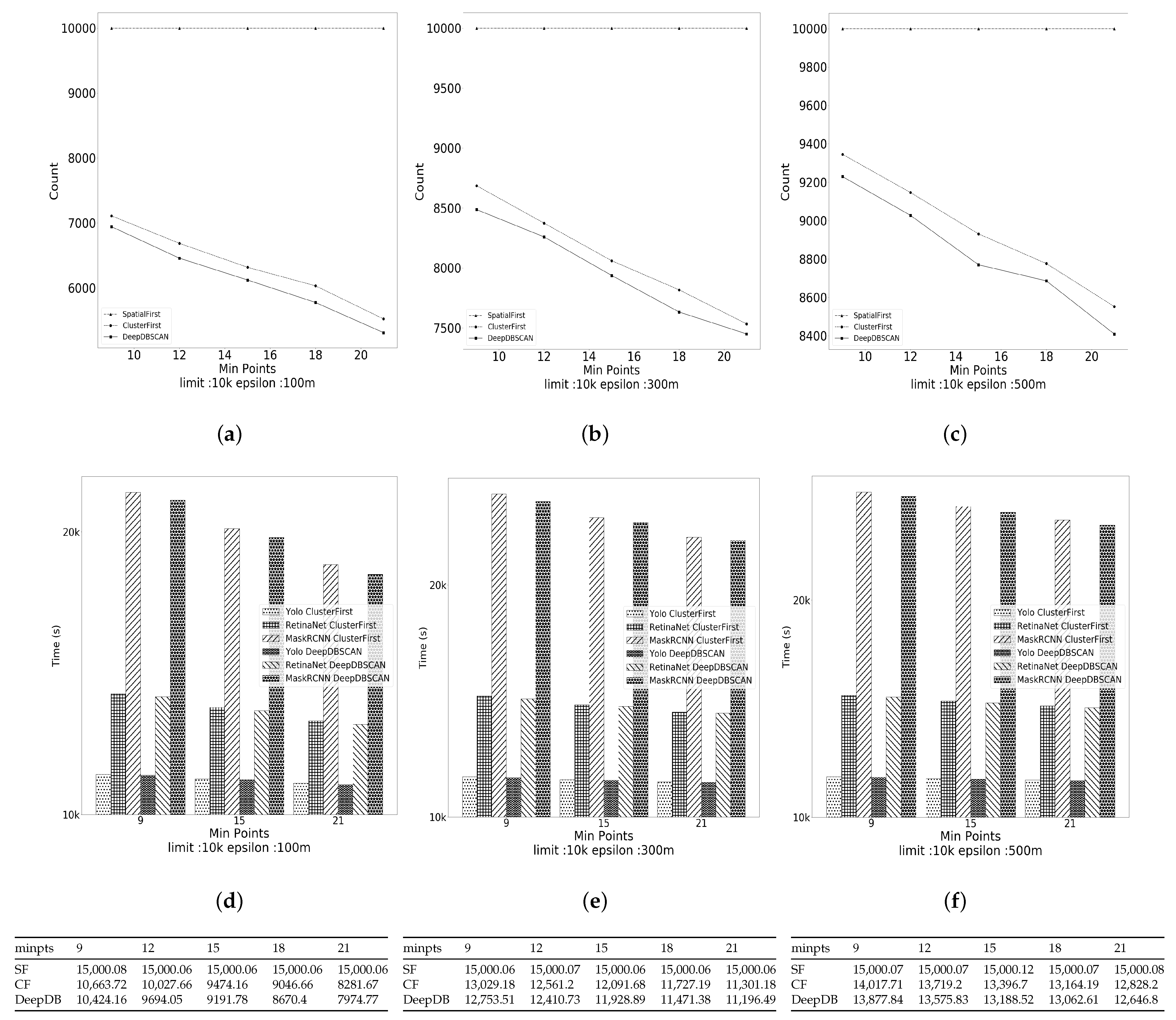

5.4. Effect of the Minimum Number of Points

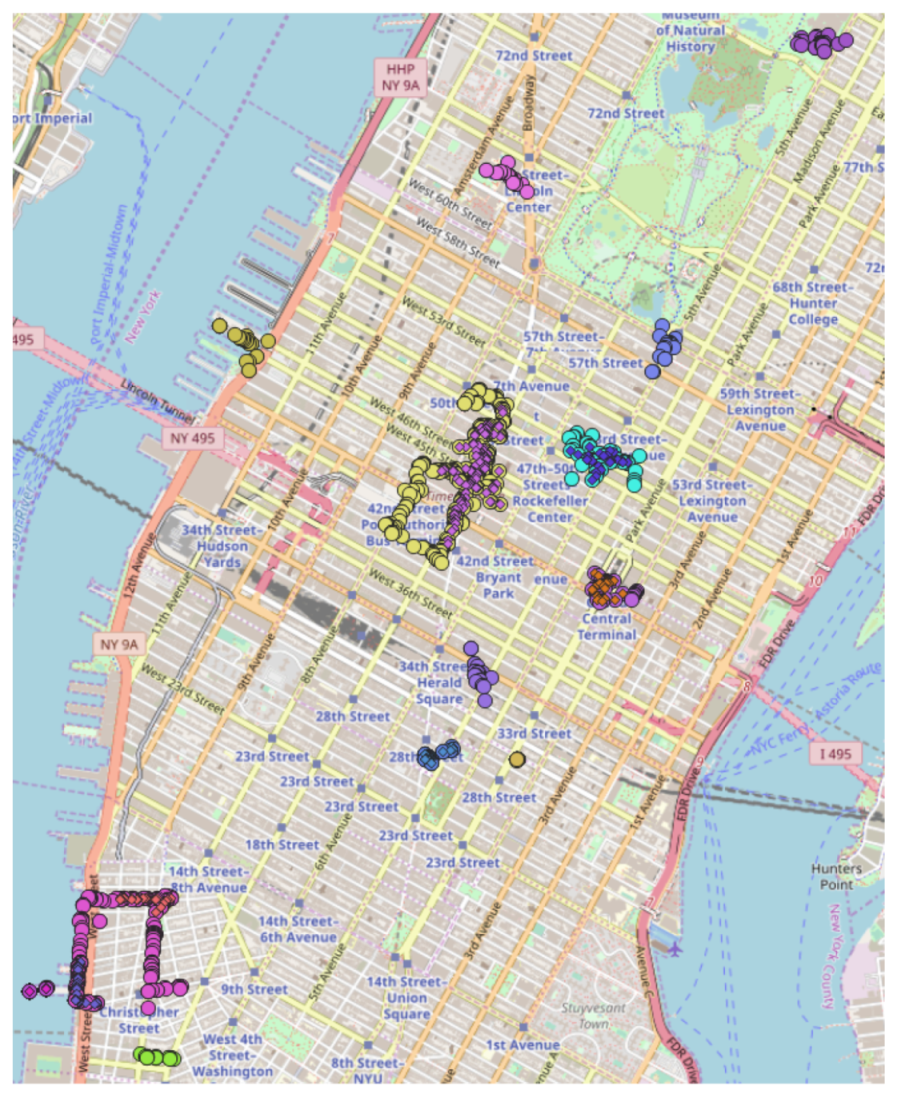

6. Case Study

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest


| Notation | Description |
|---|---|
| P | Geo-tagged photos |
| G | Nearest neighbor graph |
| C | A set of clusters |
| V | A set of vertices for geo-tagged photos |
| sp | Spatial predicate |
| dp | Deep content-based predicate |
| k | Minimum number of points |
| ε | Threshold of the radius for neighbors |

| vertex | isCore | isNoise | neighbors |
|---|---|---|---|
| | T | F | {, , , , , , } |
| | T | F | {, , , , , } |
| | T | F | {, , , , } |
| | T | F | {, , , , } |
| | T | F | {, , , , } |
| | F | F | {, , , , } |
| | T | F | {, , , , } |
| | T | F | {, , , , } |
| | T | F | {, , , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , } |
| | F | F | {, , } |
| | F | F | {, , } |
| | F | F | {} |

| vertex | isCore | isNoise | neighbors |
|---|---|---|---|
| | T | F | {, , , , , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | T | {} |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | T | {} |
| | F | F | {, , , } |
| | T | F | {, , , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , , } |
| | F | F | {, , } |
| | F | F | {, , } |
| | F | F | {, , } |
| | F | F | {, , } |
| | F | T | {} |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Park, J.Y.; Ryu, D.J.; Nam, K.W.; Jang, I.; Jang, M.; Lee, Y. DeepDBSCAN: Deep Density-Based Clustering for Geo-Tagged Photos. ISPRS Int. J. Geo-Inf. 2021, 10, 548. https://doi.org/10.3390/ijgi10080548
