Semantic Relation Model and Dataset for Remote Sensing Scene Understanding

Abstract

1. Introduction

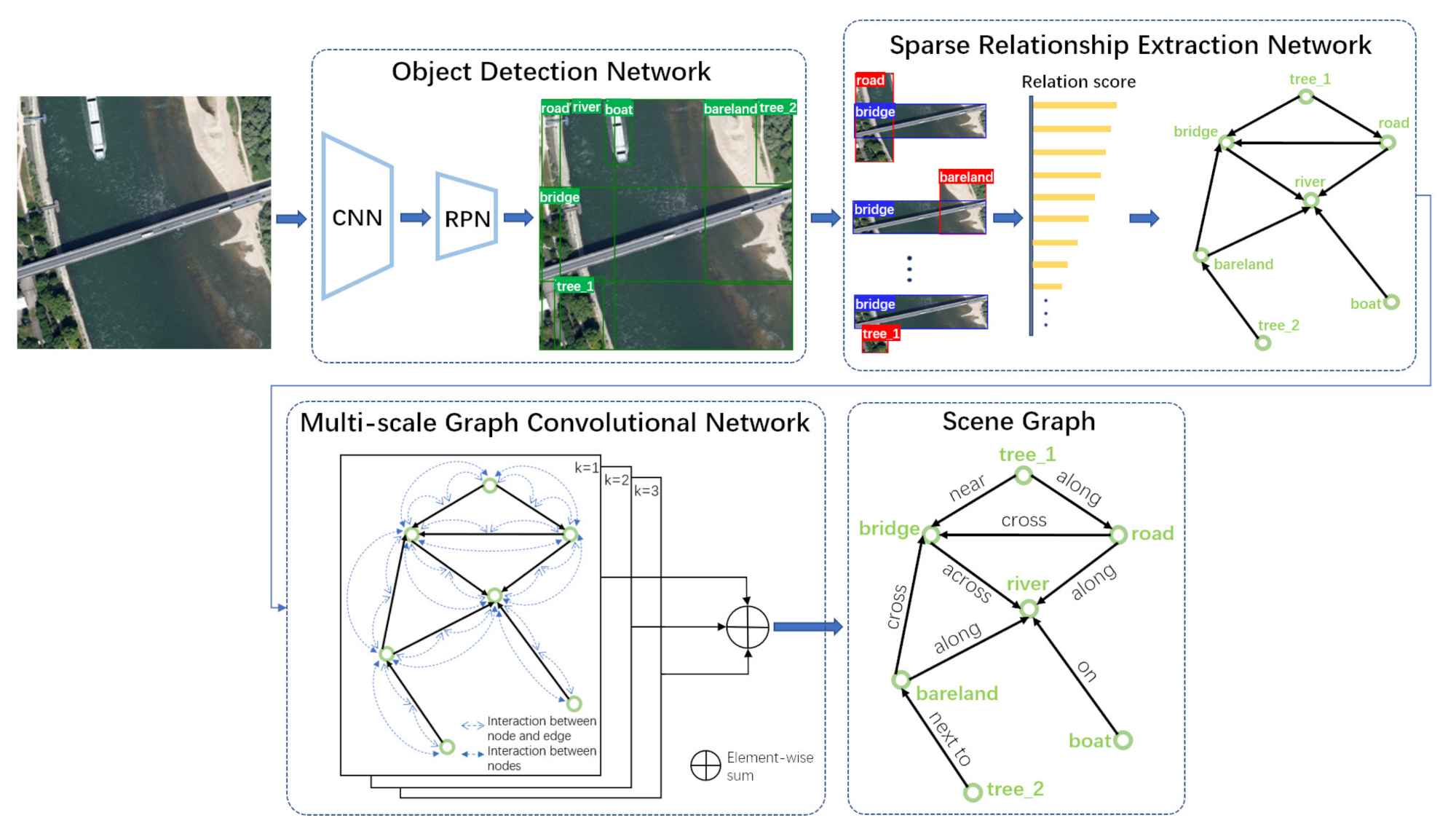

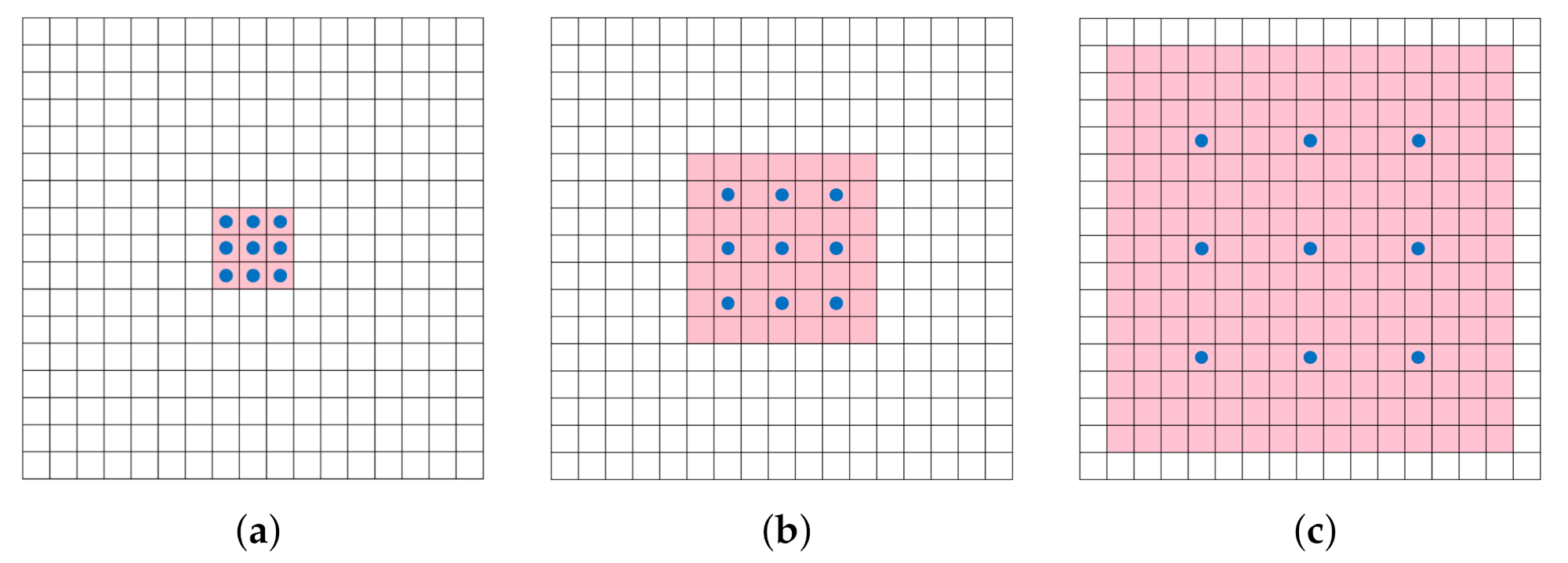

- To deal with the inherent characteristics of remote sensing images, such as large spans and the particular spatial distribution of entities, this paper introduces dilated convolution [24] into our method and builds a multi-scale graph convolutional network, which helps to broaden the field of view over semantic information.

- A novel multi-scale semantic fusion network is presented for scene graph generation. In addition, to improve the efficiency of relation reasoning, translation embedding (TransE) [29] is adopted to calculate the correlation scores between nodes and further eliminate the invalid candidate edges.

- For the task of constructing remote sensing scene graphs, a tailored dataset (RSSGD) is proposed to break down the semantic barrier between category perception and relation cognition. To the best of our knowledge, RSSGD is the first scene graph dataset in the remote sensing field.
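To illustrate why dilated convolution widens the field of view without adding weights, the following minimal sketch computes the effective span of a dilated kernel, k + (k - 1)(d - 1), and applies a 1-D dilated convolution in pure Python. The function names and the dilation rates 1, 2 and 4 are illustrative assumptions, not the settings used in this paper.

```python
from typing import List, Sequence


def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span covered by a dilated kernel: k + (k - 1) * (d - 1)."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def dilated_conv1d(signal: Sequence[float], kernel: Sequence[float],
                   dilation: int = 1) -> List[float]:
    """'Valid' 1-D dilated convolution (cross-correlation form, no padding)."""
    span = effective_kernel_size(len(kernel), dilation)
    out = []
    for start in range(len(signal) - span + 1):
        # Kernel taps are spaced `dilation` samples apart.
        out.append(sum(w * signal[start + j * dilation]
                       for j, w in enumerate(kernel)))
    return out


if __name__ == "__main__":
    for d in (1, 2, 4):  # hypothetical dilation rates
        print(f"dilation={d}: a 3-tap kernel spans {effective_kernel_size(3, d)} samples")
    print(dilated_conv1d([1, 2, 3, 4, 5, 6, 7], [1, 1, 1], dilation=2))
```

The same three weights cover a wider span as the dilation grows, which is the property a multi-scale design can exploit by running several dilation rates side by side.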

2. Related Works

2.1. Scene Graph Generation

2.2. Scene Graph Dataset

2.3. Attention Mechanism

2.4. Graph Convolutional Network

3. Materials and Methods

3.1. Scene Graph Generation Model for Remote Sensing Image

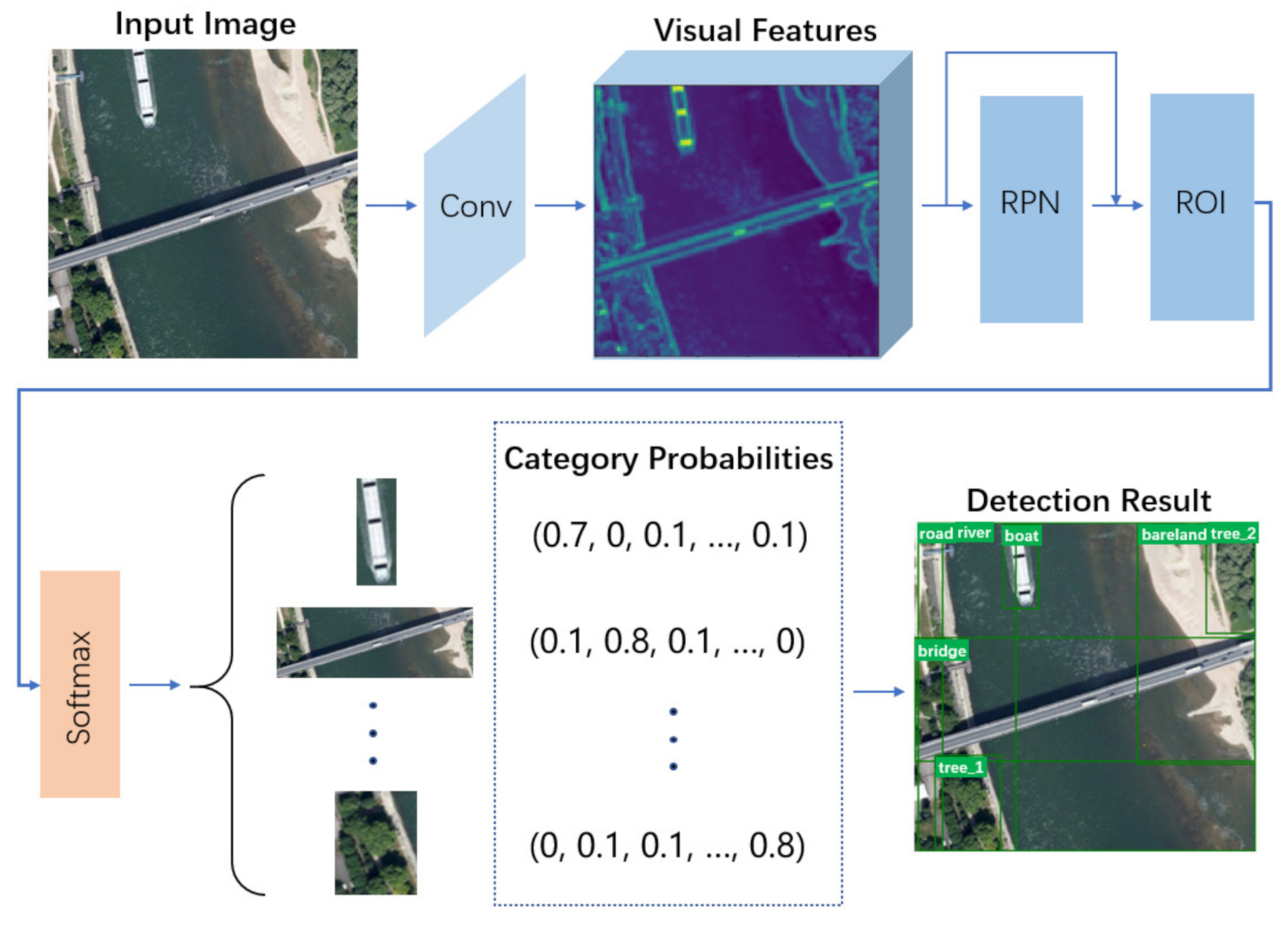

- Object Detection Network. This detection framework identifies the target patches and their initial categories in the input images. The regions are marked by a set of bounding boxes. The details are shown in Figure 3.

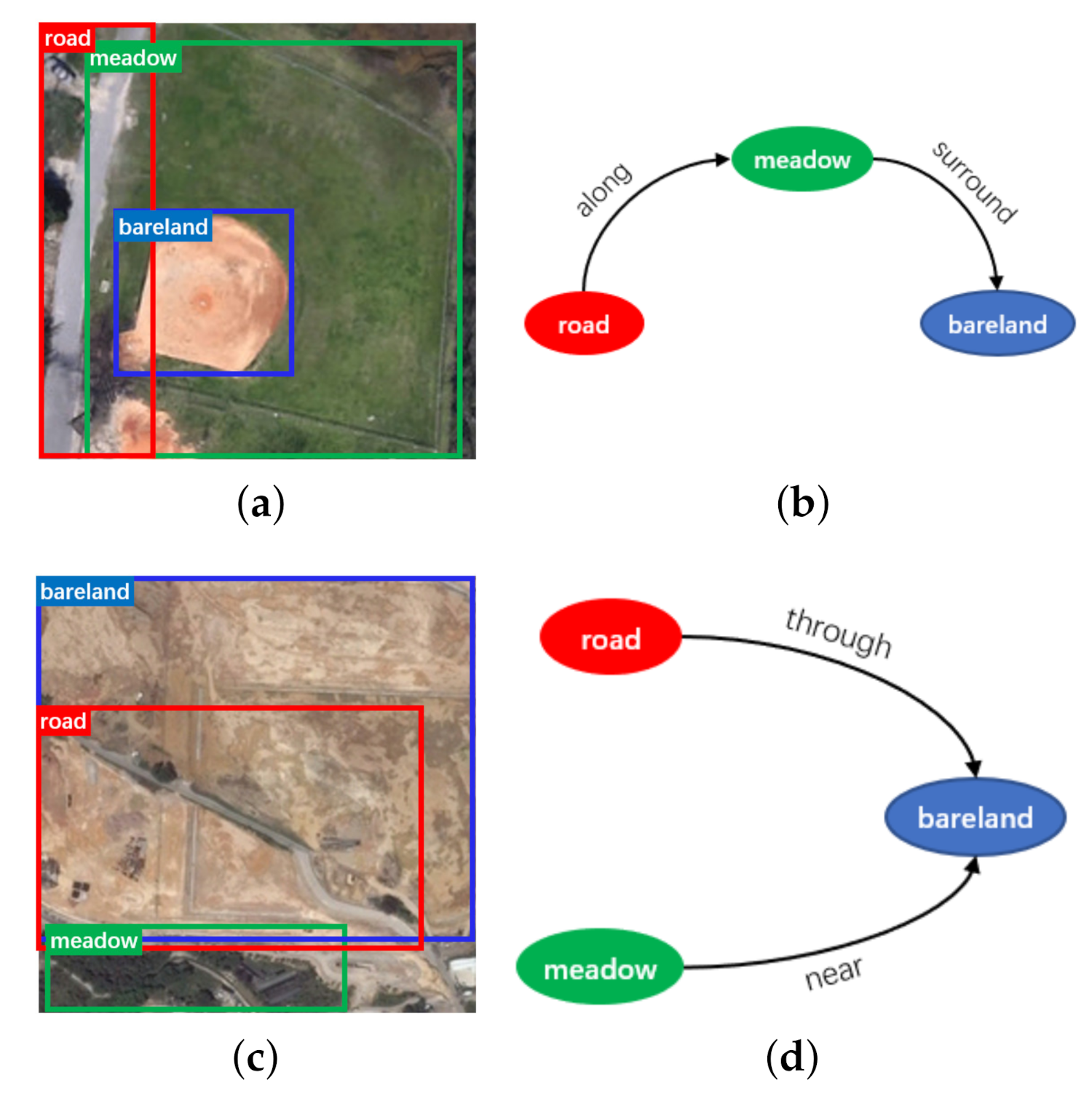

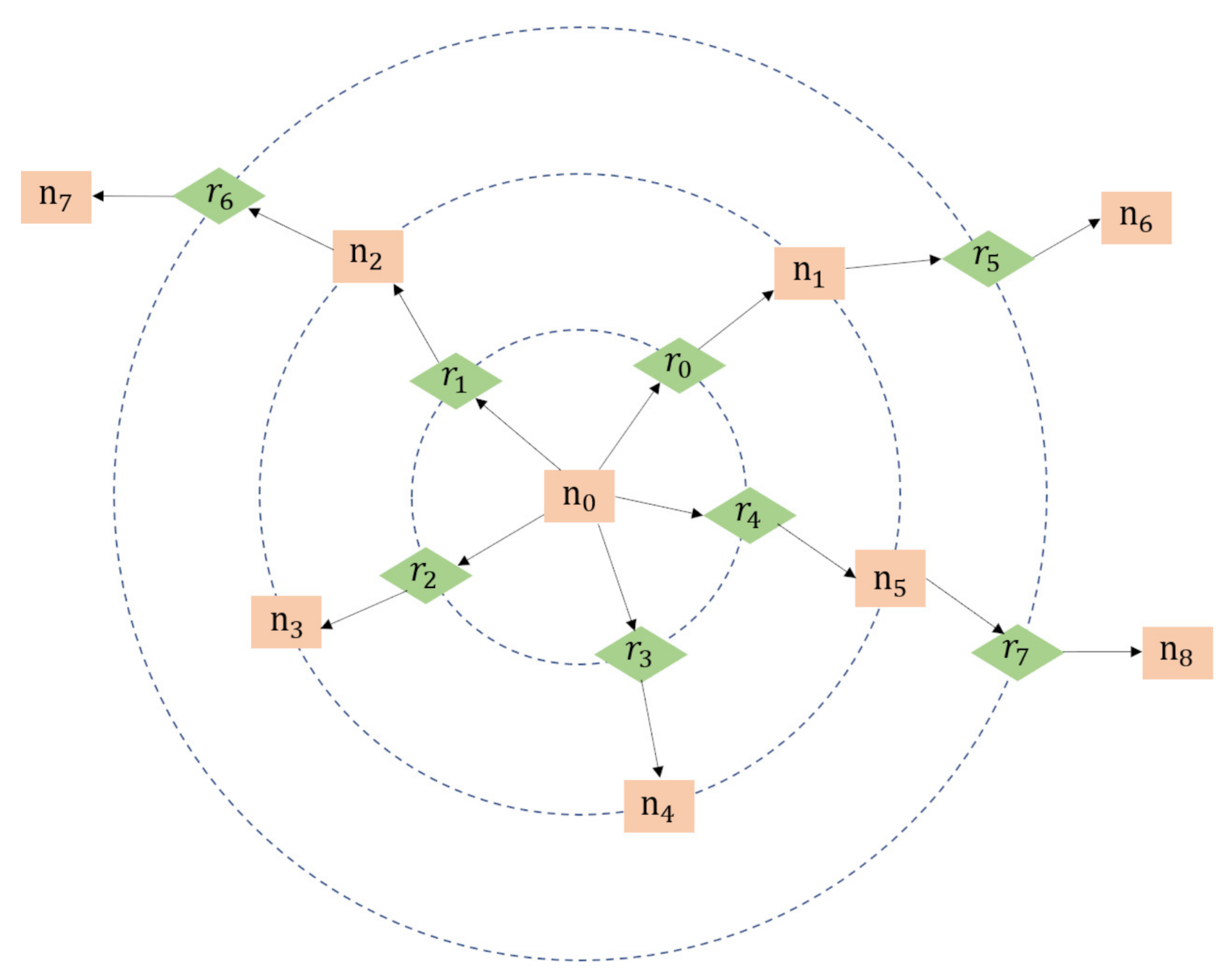

- Sparse Relationship Extraction Network (SREN). This module calculates and sorts the relation scores between all pairs of nodes (the red box represents the subject and the blue box represents the object) in order to remove invalid or weakly correlated node pairs and thus clarify the semantic combinations of subjects and objects.

- Multi-scale Graph Convolutional Network (MS-GCN). Based on the selected node pairs with strong semantic relatedness, the multi-scale contextual information in the visual scene is propagated and fused to infer the categories of relationships, and a scene graph is finally generated.
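One way to picture the pruning step in SREN is a TransE-style score, in which a subject embedding translated by a relation vector should land near the object embedding. The sketch below scores every ordered node pair and keeps the strongest ones. The single shared translation vector, the L2 distance, the top-k cutoff and all names are illustrative assumptions rather than details taken from this paper.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Sequence[float]


def transe_score(subject: Vector, relation: Vector, obj: Vector) -> float:
    """Negative L2 distance ||s + r - o||; a higher score means more related."""
    return -math.sqrt(sum((s + r - o) ** 2
                          for s, r, o in zip(subject, relation, obj)))


def prune_pairs(nodes: Dict[str, Vector], relation: Vector,
                top_k: int) -> List[Tuple[str, str, float]]:
    """Score every ordered (subject, object) pair and keep the top_k."""
    scored = [
        (s_name, o_name, transe_score(s_vec, relation, o_vec))
        for s_name, s_vec in nodes.items()
        for o_name, o_vec in nodes.items()
        if s_name != o_name
    ]
    scored.sort(key=lambda item: item[2], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Toy 2-D embeddings (hypothetical values).
    nodes = {"bridge": [0.0, 0.0], "river": [1.0, 0.0], "car": [5.0, 5.0]}
    relation = [1.0, 0.0]
    for s, o, score in prune_pairs(nodes, relation, top_k=2):
        print(s, o, round(score, 3))
```

With n detected nodes there are n(n - 1) ordered candidate pairs, so discarding low-scoring pairs before relation classification keeps the later graph reasoning sparse.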

3.1.1. Object Detection Network

3.1.2. Sparse Relationship Extraction Network

3.1.3. Multi-Scale Graph Convolutional Network

3.2. Scene Graph Dataset for Remote Sensing Image

- If a node pair has more than one relationship description, the one most consistent with the actual image scene or with the highest occurrence frequency is chosen.

- The label should be in the singular form. For plural descriptions such as “some planes”, “some” is treated as an attribute and “plane” as the label. Similarly, “two cars” is treated as two nodes labeled “car”, which are distinguished as “car_1” and “car_2” in the visual representation.

- To maintain the universality and expansibility of annotations, if the labels of the same kind of entities are divergent, the one most consistent with the actual image content or with the highest occurrence frequency shall prevail. For example, “office building” and “business building” are collectively called “building”, and “airport runway” is expressed as “runway”.
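The annotation rules above can be sketched as a small normalization routine. This is only an illustration: the lookup tables, the naive singularization and the function names are hypothetical, and a real annotation tool would need far larger vocabularies.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical lookup tables built from the examples in the rules above.
CANONICAL_LABELS: Dict[str, str] = {
    "office building": "building",
    "business building": "building",
    "airport runway": "runway",
}
COUNT_WORDS: Dict[str, int] = {"two": 2, "three": 3}
ATTRIBUTE_WORDS = {"some", "many", "several"}


def singularize(noun: str) -> str:
    """Naive singular form: strips one trailing 's' (illustration only)."""
    if noun.endswith("s") and not noun.endswith("ss"):
        return noun[:-1]
    return noun


def normalize(phrase: str) -> List[Tuple[str, Optional[str]]]:
    """Return (node_name, attribute) pairs for one annotated phrase."""
    words = phrase.lower().split()
    attribute: Optional[str] = None
    count = 1
    if words and words[0] in ATTRIBUTE_WORDS:
        attribute, words = words[0], words[1:]
    elif words and words[0] in COUNT_WORDS:
        count, words = COUNT_WORDS[words[0]], words[1:]
    label = singularize(" ".join(words))
    label = CANONICAL_LABELS.get(label, label)
    if count == 1:
        return [(label, attribute)]
    # Counted entities become separate, indexed nodes.
    return [(f"{label}_{i}", None) for i in range(1, count + 1)]


if __name__ == "__main__":
    for phrase in ("some planes", "two cars", "office building", "airport runway"):
        print(phrase, "->", normalize(phrase))
```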

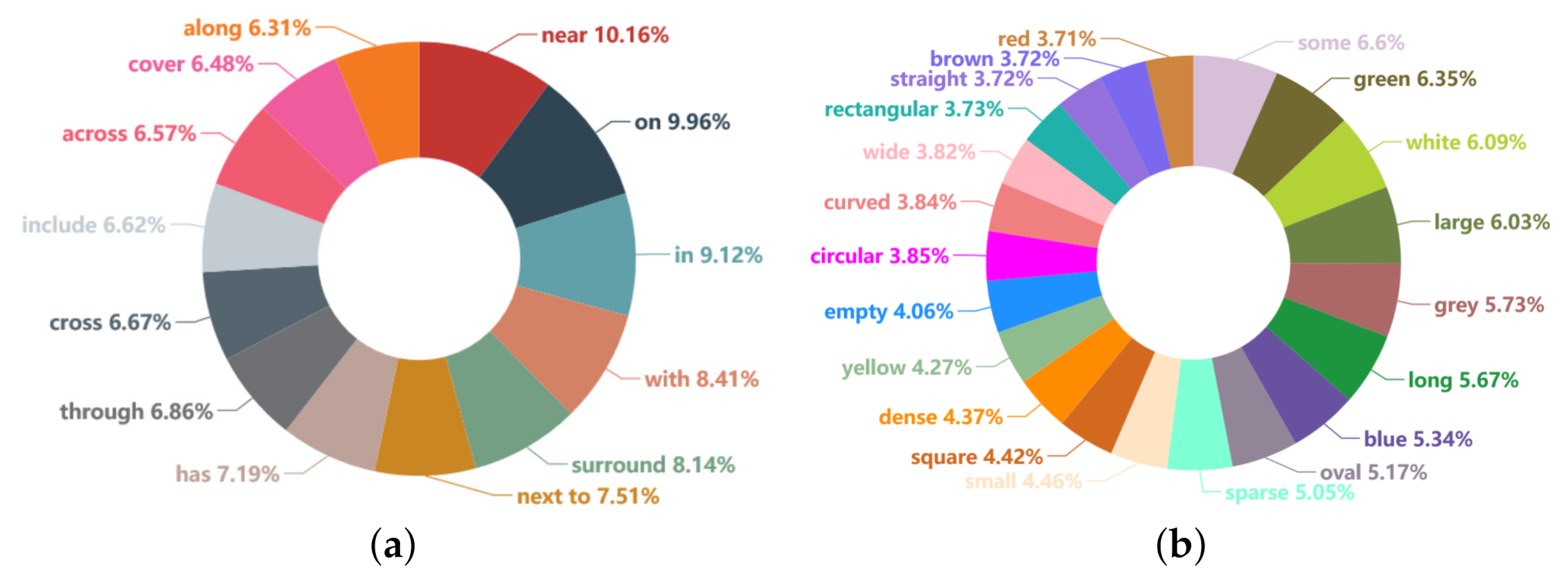

3.2.1. Statistics and Analysis

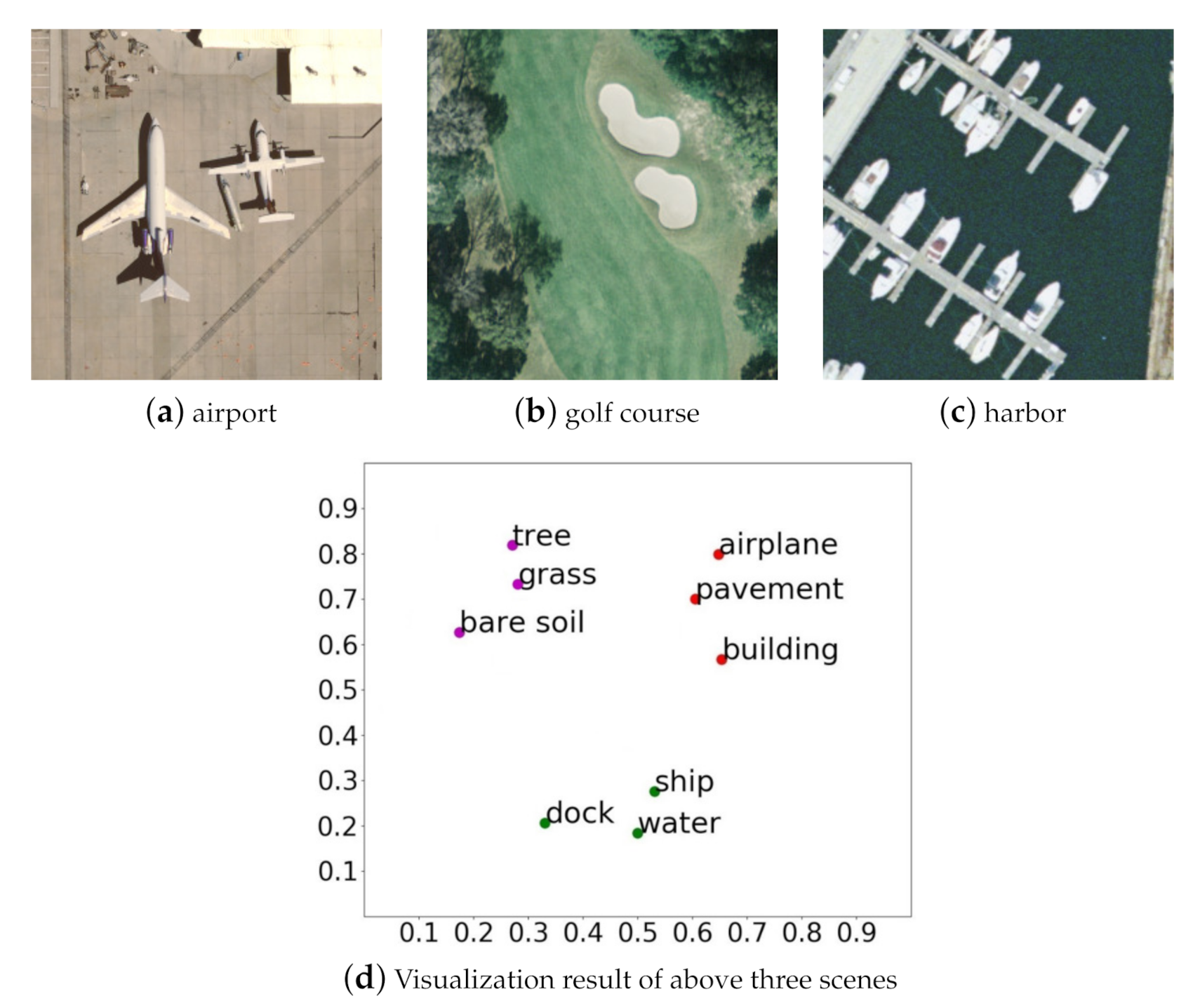

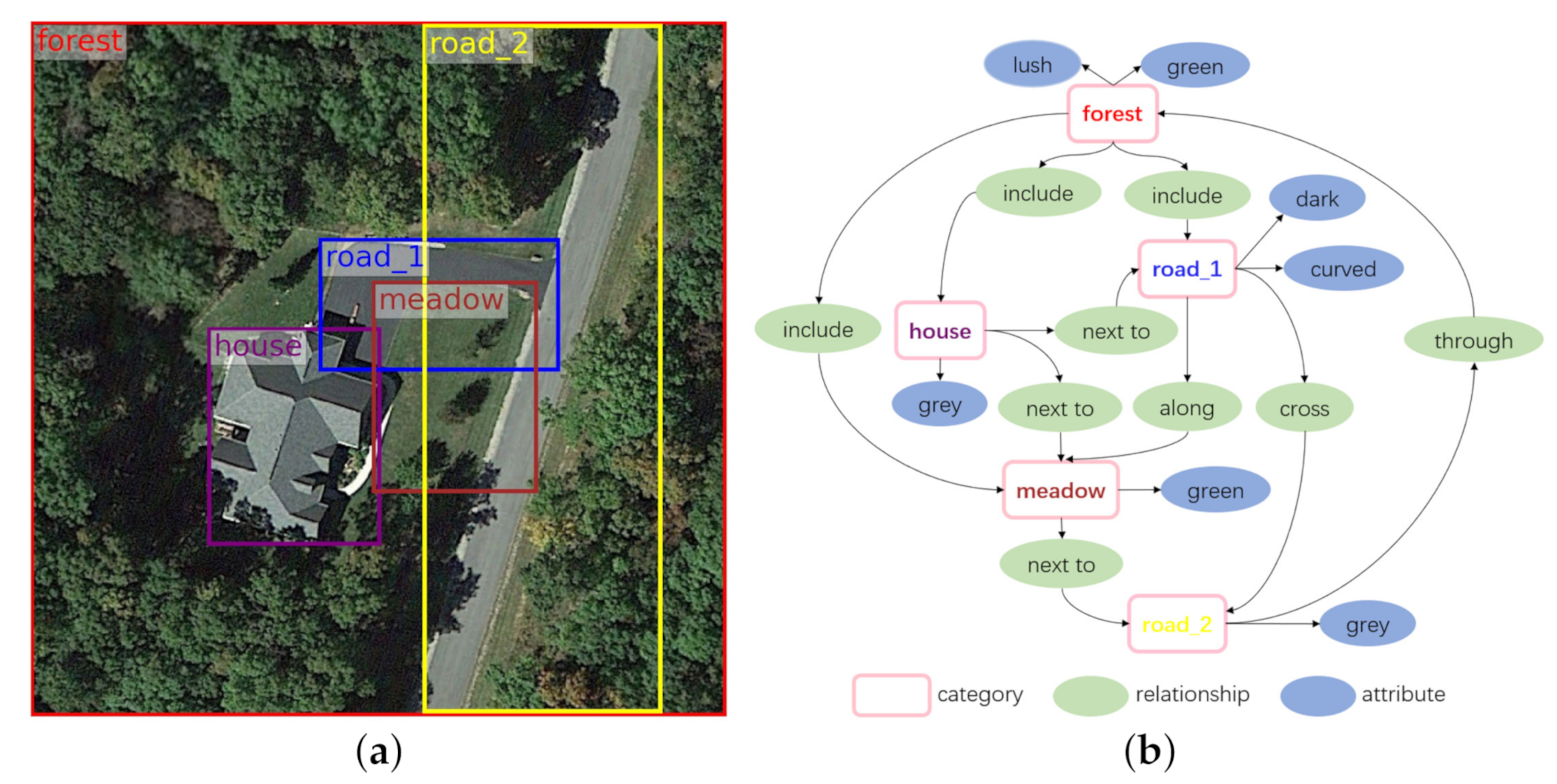

3.2.2. Visual Representation

4. Results and Discussion

4.1. Experiment Setting

- Datasets. To fully verify the generalization and adaptability of the proposed method, we conduct experiments on the RSSGD and VG [49] datasets. VG is a popular benchmark for scene graph generation in the field of natural images. It includes 108,077 images with thousands of unique node and relation categories, yet most of these categories have very few instances. Therefore, previous works [40,75,76] proposed various VG splits to remove rare categories. We adopt the most popular one, from IMP [40], which selects the top-150 object categories and top-50 relation categories by frequency. The entire dataset is divided into a training set and a testing set in a 70%/30% ratio.

- Tasks. Given an image, the scene graph generation task is to locate a set of nodes, classify their category labels, and predict the relationship between each pair of nodes. We evaluate our model on three sub-tasks:
  - The predicate classification (PredCls) sub-task is to predict the predicates of all pairwise relationships. This sub-task verifies the model’s performance on predicate classification in isolation from other factors.
  - The scene graph classification (SGCls) sub-task is to predict the predicate as well as the node categories of the subject and object in every pairwise relationship, given a set of localized nodes.
  - The scene graph generation (SGGen) sub-task is to simultaneously detect a set of nodes and predict the predicate between each pair of the detected nodes.

- Evaluation Metric. Previous models such as IMP [40], VTransE [39] and Motifs [42] adopt the traditional Recall@X ($R@X$) as the evaluation metric, which computes the fraction of relationships that are reasoned correctly within the top X most confident relation predictions. However, owing to incomplete annotation and subjective deviation, scene graph datasets usually suffer from long-tailed distributions [75], which lead a model to cater to high-frequency relationships while remaining insensitive to low-frequency ones. To address this problem, we adopt mean Recall@X ($mR@X$) rather than $R@X$ as the evaluation metric in this paper. By traversing each relationship separately and averaging over all relationships, $mR@X$ is more effective for mining the semantic relations of specific scenes. The recall is calculated as

  $$R@X = \frac{TP}{TP + FN},$$

  where $TP$ and $FN$ are the numbers of true positives and false negatives, respectively, and the mean recall is

  $$mR@X = \frac{1}{N} \sum_{i=1}^{N} R_i@X,$$

  where $R_i@X$ is the recall rate of the $i$-th relation over the top X confident predictions and $N$ is the number of relation categories.
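The difference between plain recall over the top-K predictions and its per-relation mean can be sketched in a few lines. The sketch assumes that a prediction counts as correct only if the full (subject, predicate, object) triplet matches a ground-truth triplet, and it evaluates a single image for brevity. The function names and the toy triplets are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Set, Tuple

Triplet = Tuple[str, str, str]  # (subject, predicate, object)


def recall_at_k(ranked: Sequence[Triplet], truth: Set[Triplet], k: int) -> float:
    """TP / (TP + FN): share of ground-truth triplets found in the top k."""
    true_positive = len(set(ranked[:k]) & truth)
    return true_positive / len(truth) if truth else 0.0


def mean_recall_at_k(ranked: Sequence[Triplet], truth: Set[Triplet], k: int) -> float:
    """Average of per-predicate recalls, so rare predicates weigh equally."""
    by_predicate: Dict[str, Set[Triplet]] = defaultdict(set)
    for triplet in truth:
        by_predicate[triplet[1]].add(triplet)
    recalls: List[float] = [
        recall_at_k(ranked, triplets, k) for triplets in by_predicate.values()
    ]
    return sum(recalls) / len(recalls) if recalls else 0.0


if __name__ == "__main__":
    truth = {
        ("house", "near", "car"),
        ("house", "near", "tree"),
        ("house", "near", "road"),
        ("bridge", "across", "river"),
    }
    # Predictions sorted by confidence; the rare predicate "across" is missed.
    ranked = [
        ("house", "near", "car"),
        ("house", "near", "tree"),
        ("house", "near", "road"),
        ("bridge", "cross", "river"),
    ]
    print("recall@4:", recall_at_k(ranked, truth, 4))
    print("mean recall@4:", mean_recall_at_k(ranked, truth, 4))
```

In this toy case the frequent predicate is fully recovered and the rare one is missed, so plain recall stays high while the per-predicate mean drops, which is the long-tail effect that motivates the averaged metric.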

4.2. Implementation Details

4.3. Comparing Models

- IMP [40]: this method iterates messages between the original and dual subgraphs along the topology of scene graph. Furthermore, it improves prediction performance by incorporating contextual cues.

- Graph R-CNN [66]: based on graph convolutional network, this model effectively leverages relational regularities to intelligently reason over candidate scene graphs for scene graph generation.

- Knowledge-embedded [78]: in order to deal with the problem of unbalanced distribution of relationships, this model uses the statistical correlations between node pairs as the introduced priors for scene graph generation.

4.4. Experimental Results and Discussion

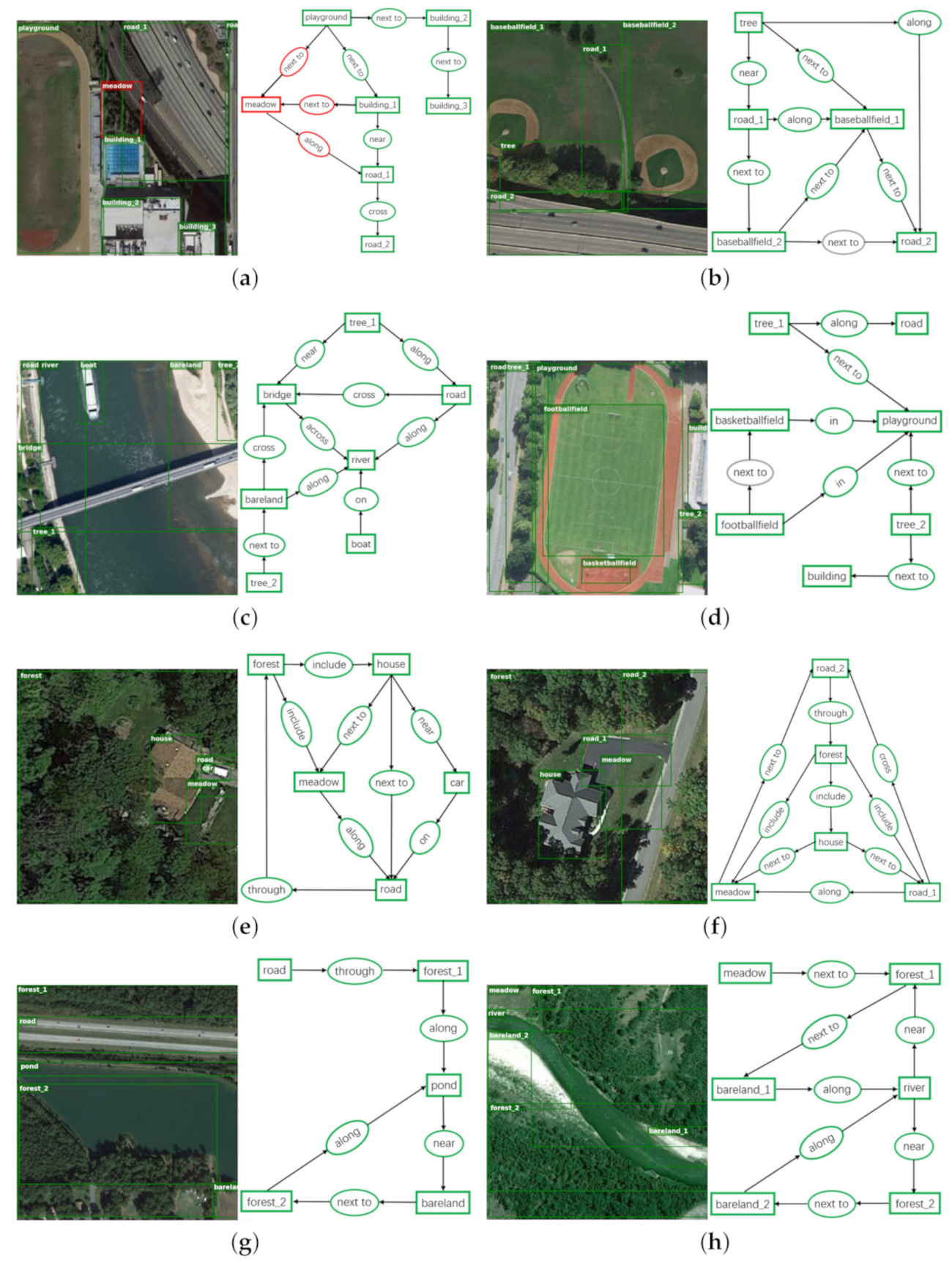

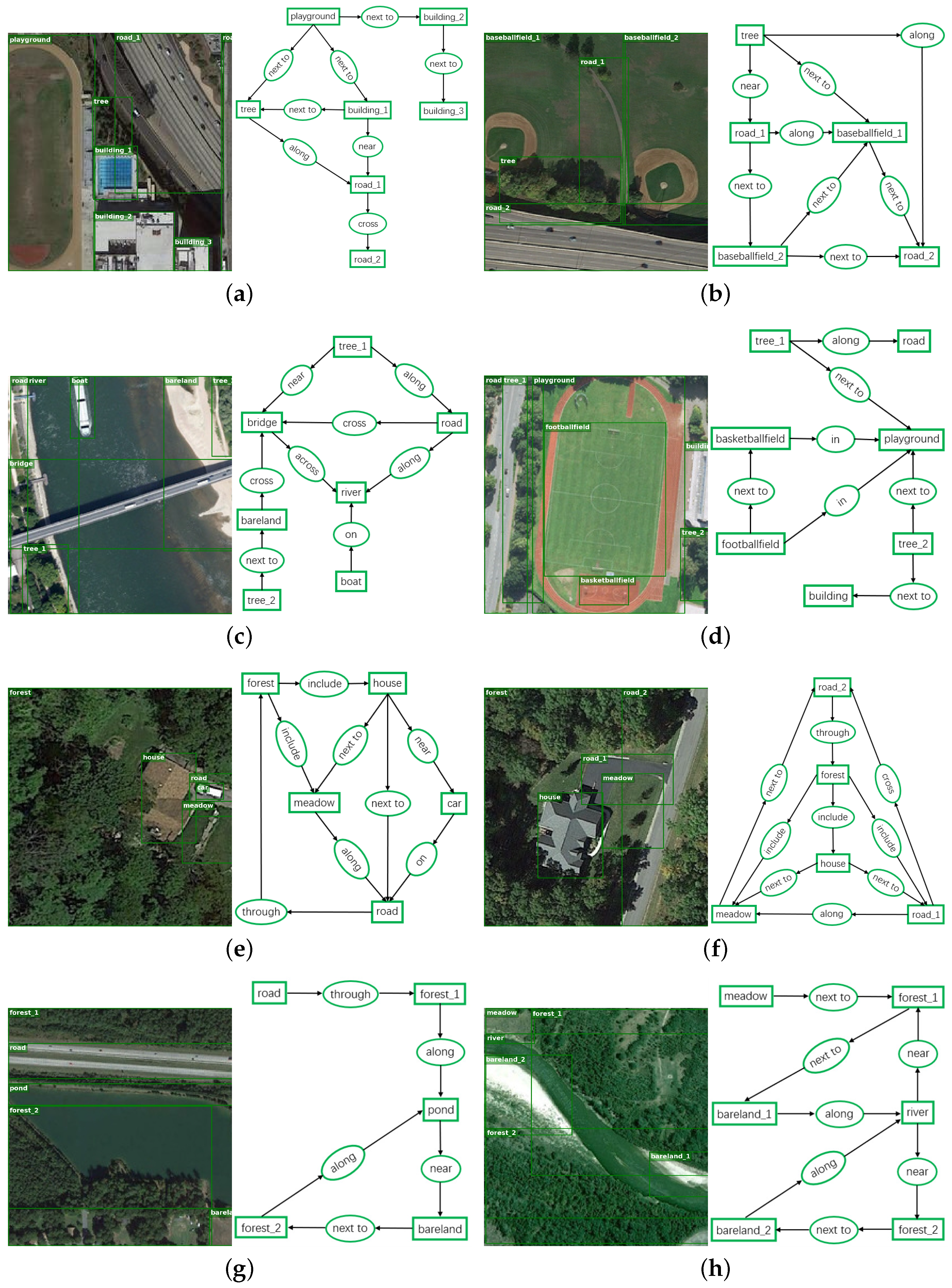

- RSSGD contains abundant ground-truth annotations corresponding to the remote sensing images, as displayed in Figure 12, which play an irreplaceable role in supporting a comprehensive and in-depth understanding of remote sensing scenes.

- From Figure 11, we can readily see that our model accurately predicts the relationships that are most appropriate for a particular scene. For example, in Figure 11e, the house directly adjoins the meadow, and our model applies “next to” to represent the relation between them. In contrast, the house is near the car but not adjacent to it, so our model selects “near” instead of “next to” to mark the difference. Likewise, in Figure 11c, the bridge and the road are both long and thin, so “cross” correctly reflects the interaction between them. Furthermore, because the river is much wider than the road, our method employs “across” to emphasize that the bridge spans the river from one side to the other. These examples suggest that our model is more sensitive to semantically informative relationships than to trivially biased ones.

- Similar to previous works on scene graph parsing [39,42], Faster R-CNN [35] is used as the object detector in all experiments. However, this model cannot effectively extract deep visual features [66], which inevitably interferes with the prediction of node categories. For instance, in Figure 11a, the tree is wrongly identified as “meadow”; as a result, all detected relationships involving it are counted as negatives.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Du, Z.; Li, X.; Lu, X. Local structure learning in high resolution remote sensing image retrieval. Neurocomputing 2016, 207, 813–822.

- Gu, Y.; Wang, Q.; Xie, B. Multiple Kernel Sparse Representation for Airborne LiDAR Data Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 1085–1105.

- Lu, X.; Zheng, X.; Yuan, Y. Remote Sensing Scene Classification by Unsupervised Representation Learning. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5148–5157.

- Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Convolutional Neural Networks for Large-Scale Remote-Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 645–657.

- Han, X.; Zhong, Y.; Zhang, L. An Efficient and Robust Integrated Geospatial Object Detection Framework for High Spatial Resolution Remote Sensing Imagery. Remote Sens. 2017, 9, 666.

- Han, J.; Zhang, D.; Cheng, G.; Guo, L.; Ren, J. Object Detection in Optical Remote Sensing Images Based on Weakly Supervised Learning and High-Level Feature Learning. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3325–3337.

- Yuan, J.; Wang, D.; Li, R. Remote Sensing Image Segmentation by Combining Spectral and Texture Features. IEEE Trans. Geosci. Remote Sens. 2014, 52, 16–24.

- Ma, F.; Gao, F.; Sun, J.; Zhou, H.; Hussain, A. Weakly Supervised Segmentation of SAR Imagery Using Superpixel and Hierarchically Adversarial CRF. Remote Sens. 2019, 11, 512.

- Chen, F.; Ren, R.; de Voorde, T.V.; Xu, W.; Zhou, G.; Zhou, Y. Fast Automatic Airport Detection in Remote Sensing Images Using Convolutional Neural Networks. Remote Sens. 2018, 10, 443.

- Dai, B.; Zhang, Y.; Lin, D. Detecting Visual Relationships with Deep Relational Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3298–3308.

- Farhadi, A.; Hejrati, S.M.M.; Sadeghi, M.A.; Young, P.; Rashtchian, C.; Hockenmaier, J.; Forsyth, D.A. Every Picture Tells a Story: Generating Sentences from Images. In Proceedings of the 11th European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; pp. 15–29.

- Plummer, B.A.; Wang, L.; Cervantes, C.M.; Caicedo, J.C.; Hockenmaier, J.; Lazebnik, S. Flickr30k Entities: Collecting Region-to-Phrase Correspondences for Richer Image-to-Sentence Models. Int. J. Comput. Vis. 2017, 123, 74–93.

- Torresani, L.; Szummer, M.; Fitzgibbon, A.W. Efficient Object Category Recognition Using Classemes. In Proceedings of the 11th European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; pp. 776–789.

- Lu, C.; Krishna, R.; Bernstein, M.S.; Li, F.F. Visual Relationship Detection with Language Priors. In Proceedings of the 14th European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 852–869.

- Karpathy, A.; Li, F.F. Deep Visual-Semantic Alignments for Generating Image Descriptions. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 664–676.

- Xu, K.; Ba, J.; Kiros, R.; Cho, K.; Courville, A.C.; Salakhutdinov, R.; Zemel, R.S.; Bengio, Y. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 2048–2057.

- Ben-younes, H.; Cadène, R.; Thome, N.; Cord, M. BLOCK: Bilinear Superdiagonal Fusion for Visual Question Answering and Visual Relationship Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 8102–8109.

- Johnson, J.; Krishna, R.; Stark, M.; Li, L.; Shamma, D.A.; Bernstein, M.S.; Li, F.F. Image retrieval using scene graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3668–3678.

- Li, Y.; Ouyang, W.; Zhou, B.; Shi, J.; Zhang, C.; Wang, X. Factorizable Net: An Efficient Subgraph-Based Framework for Scene Graph Generation. In Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 346–363.

- Qi, M.; Li, W.; Yang, Z.; Wang, Y.; Luo, J. Attentive Relational Networks for Mapping Images to Scene Graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 3957–3966.

- Klawonn, M.; Heim, E. Generating Triples With Adversarial Networks for Scene Graph Construction. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 6992–6999.

- Lu, X.; Wang, B.; Zheng, X.; Li, X. Exploring Models and Data for Remote Sensing Image Caption Generation. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2183–2195.

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. In Proceedings of the 4th International Conference on Learning Representations, San Juan, PR, USA, 2–4 May 2016.

- Qu, B.; Li, X.; Tao, D.; Lu, X. Deep semantic understanding of high resolution remote sensing image. In Proceedings of the International Conference on Computer Information and Telecommunication Systems, Kunming, China, 6–8 July 2016; pp. 1–5.

- Shi, Z.; Zou, Z. Can a Machine Generate Humanlike Language Descriptions for a Remote Sensing Image? IEEE Trans. Geosci. Remote Sens. 2017, 55, 3623–3634.

- Zhang, X.; Wang, X.; Tang, X.; Zhou, H.; Li, C. Description Generation for Remote Sensing Images Using Attribute Attention Mechanism. Remote Sens. 2019, 11, 612.

- Wang, B.; Lu, X.; Zheng, X.; Li, X. Semantic Descriptions of High-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1274–1278.

- Bordes, A.; Usunier, N.; García-Durán, A.; Weston, J.; Yakhnenko, O. Translating Embeddings for Modeling Multi-relational Data. In Proceedings of the 27th Annual Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013; pp. 2787–2795.

- Ladicky, L.; Russell, C.; Kohli, P.; Torr, P.H.S. Graph Cut Based Inference with Co-occurrence Statistics. In Proceedings of the 11th European Conference on Computer Vision, Heraklion, Greece, 5–11 September 2010; pp. 239–253.

- Oliva, A.; Torralba, A. The role of context in object recognition. Trends Cogn. Sci. 2007, 11, 520–527.

- Parikh, D.; Zitnick, C.L.; Chen, T. From appearance to context-based recognition: Dense labeling in small images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 24–26 June 2008.

- Rabinovich, A.; Vedaldi, A.; Galleguillos, C.; Wiewiora, E.; Belongie, S.J. Objects in Context. In Proceedings of the IEEE International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–20 October 2007; pp. 1–8.

- Girshick, R.B.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Ren, S.; He, K.; Girshick, R.B.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Schuster, S.; Krishna, R.; Chang, A.X.; Li, F.F.; Manning, C.D. Generating Semantically Precise Scene Graphs from Textual Descriptions for Improved Image Retrieval. In Proceedings of the Fourth Workshop on Vision and Language, Lisbon, Portugal, 18 September 2015; pp. 70–80.

- Woo, S.; Kim, D.; Cho, D.; Kweon, I.S. LinkNet: Relational Embedding for Scene Graph. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; pp. 558–568.

- Zhang, H.; Kyaw, Z.; Chang, S.; Chua, T. Visual Translation Embedding Network for Visual Relation Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3107–3115.

- Xu, D.; Zhu, Y.; Choy, C.B.; Li, F.F. Scene Graph Generation by Iterative Message Passing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3097–3106.

- Hu, R.; Rohrbach, M.; Andreas, J.; Darrell, T.; Saenko, K. Modeling Relationships in Referential Expressions with Compositional Modular Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4418–4427.

- Zellers, R.; Yatskar, M.; Thomson, S.; Choi, Y. Neural Motifs: Scene Graph Parsing With Global Context. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 5831–5840.

- Li, Y.; Ouyang, W.; Zhou, B.; Wang, K.; Wang, X. Scene Graph Generation from Objects, Phrases and Region Captions. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1270–1279.

- Hwang, S.J.; Ravi, S.N.; Tao, Z.; Kim, H.J.; Collins, M.D.; Singh, V. Tensorize, Factorize and Regularize: Robust Visual Relationship Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1014–1023.

- Herzig, R.; Raboh, M.; Chechik, G.; Berant, J.; Globerson, A. Mapping Images to Scene Graphs with Permutation-Invariant Structured Prediction. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montréal, QC, Canada, 3–8 December 2018; pp. 7211–7221.

- Yu, R.; Li, A.; Morariu, V.I.; Davis, L.S. Visual Relationship Detection with Internal and External Linguistic Knowledge Distillation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1068–1076.

- Cui, Z.; Xu, C.; Zheng, W.; Yang, J. Context-Dependent Diffusion Network for Visual Relationship Detection. In Proceedings of the 26th ACM International Conference on Multimedia, Seoul, Korea, 22–26 October 2018; pp. 1475–1482.

- Lin, T.; Maire, M.; Belongie, S.J.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common Objects in Context. In Proceedings of the 13th European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

- Krishna, R.; Zhu, Y.; Groth, O.; Johnson, J.; Hata, K.; Kravitz, J.; Chen, S.; Kalantidis, Y.; Li, L.; Shamma, D.A.; et al. Visual Genome: Connecting Language and Vision Using Crowdsourced Dense Image Annotations. Int. J. Comput. Vis. 2017, 123, 32–73.

- Liang, Y.; Bai, Y.; Zhang, W.; Qian, X.; Zhu, L.; Mei, T. VrR-VG: Refocusing Visually-Relevant Relationships. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 10402–10411.

- Peyre, J.; Laptev, I.; Schmid, C.; Sivic, J. Weakly-Supervised Learning of Visual Relations. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5189–5198.

- Haut, J.M.; Fernández-Beltran, R.; Paoletti, M.E.; Plaza, J.; Plaza, A. Remote Sensing Image Superresolution Using Deep Residual Channel Attention. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9277–9289.

- Luo, H.; Chen, C.; Fang, L.; Zhu, X.; Lu, L. High-Resolution Aerial Images Semantic Segmentation Using Deep Fully Convolutional Network With Channel Attention Mechanism. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3492–3507.

- Wang, J.; Shen, L.; Qiao, W.; Dai, Y.; Li, Z. Deep Feature Fusion with Integration of Residual Connection and Attention Model for Classification of VHR Remote Sensing Images. Remote Sens. 2019, 11, 1617.

- Ba, R.; Chen, C.; Yuan, J.; Song, W.; Lo, S. SmokeNet: Satellite Smoke Scene Detection Using Convolutional Neural Network with Spatial and Channel-Wise Attention. Remote Sens. 2019, 11, 1702.

- Li, J.; Xiu, J.; Yang, Z.; Liu, C. Dual Path Attention Net for Remote Sensing Semantic Image Segmentation. ISPRS Int. J. Geo-Inf. 2020, 9, 571.

- Ren, S.; Zhou, F. Semi-Supervised Classification of PolSAR Data with Multi-Scale Weighted Graph Convolutional Network. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 1715–1718.

- Wan, S.; Gong, C.; Zhong, P.; Du, B.; Zhang, L.; Yang, J. Multiscale Dynamic Graph Convolutional Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 3162–3177.

- Zhao, L.; Song, Y.; Zhang, C.; Liu, Y.; Wang, P.; Lin, T.; Deng, M.; Li, H. T-GCN: A Temporal Graph Convolutional Network for Traffic Prediction. IEEE Trans. Intell. Transp. Syst. 2020, 21, 3848–3858.

- Shahraki, F.F.; Prasad, S. Graph Convolutional Neural Networks for Hyperspectral Data Classification. In Proceedings of the IEEE Global Conference on Signal and Information Processing, Anaheim, CA, USA, 26–29 November 2018; pp. 968–972.

- Qin, A.; Shang, Z.; Tian, J.; Wang, Y.; Zhang, T.; Tang, Y.Y. Spectral-Spatial Graph Convolutional Networks for Semisupervised Hyperspectral Image Classification. IEEE Geosci. Remote Sens. Lett. 2019, 16, 241–245.

- Wan, S.; Gong, C.; Zhong, P.; Pan, S.; Li, G.; Yang, J. Hyperspectral Image Classification With Context-Aware Dynamic Graph Convolutional Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 597–612.

- Mou, L.; Lu, X.; Li, X.; Zhu, X.X. Nonlocal Graph Convolutional Networks for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2020, 58, 8246–8257.

- Khan, N.; Chaudhuri, U.; Banerjee, B.; Chaudhuri, S. Graph convolutional network for multi-label VHR remote sensing scene recognition. Neurocomputing 2019, 357, 36–46.

- Shi, Y.; Li, Q.; Zhu, X.X. Building segmentation through a gated graph convolutional neural network with deep structured feature embedding. ISPRS J. Photogramm. Remote Sens. 2020, 159, 184–197.

- Yang, J.; Lu, J.; Lee, S.; Batra, D.; Parikh, D. Graph R-CNN for Scene Graph Generation. In Proceedings of the 15th European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 690–706.

- Qiu, H.; Li, H.; Wu, Q.; Meng, F.; Ngan, K.N.; Shi, H. A2RMNet: Adaptively Aspect Ratio Multi-Scale Network for Object Detection in Remote Sensing Images. Remote Sens. 2019, 11, 1594.

- Van der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

- Zhang, J.; Lin, S.; Ding, L.; Bruzzone, L. Multi-Scale Context Aggregation for Semantic Segmentation of Remote Sensing Images. Remote Sens. 2020, 12, 701.

- Chen, L.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Li, G.; Müller, M.; Thabet, A.K.; Ghanem, B. DeepGCNs: Can GCNs Go As Deep As CNNs? In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Korea, 27 October–2 November 2019; pp. 9266–9275.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. In Proceedings of the Annual Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2017–2025.

- Andrews, M.; Chia, Y.K.; Witteveen, S. Scene Graph Parsing by Attention Graph. arXiv 2019, arXiv:1909.06273.

- Yang, Z.; Qin, Z.; Yu, J.; Hu, Y. Scene graph reasoning with prior visual relationship for visual question answering. arXiv 2018, arXiv:1812.09681.

- Tang, K.; Zhang, H.; Wu, B.; Luo, W.; Liu, W. Learning to Compose Dynamic Tree Structures for Visual Contexts. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6619–6628.

- Zhang, J.; Elhoseiny, M.; Cohen, S.; Chang, W.; Elgammal, A.M. Relationship Proposal Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5226–5234.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Chen, T.; Yu, W.; Chen, R.; Lin, L. Knowledge-Embedded Routing Network for Scene Graph Generation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6163–6171.

| Input Size | Convolution Kernel | Output Size | Number of Parameters |
|---|---|---|---|
| 224 × 224 | 3 × 3, 64 | 224 × 224 | (3 × 3 × 3) × 64 = 1,728 |
| 224 × 224 | 3 × 3, 64 | 224 × 224 | (3 × 3 × 64) × 64 = 36,864 |
| Max Pooling | | | |
| 112 × 112 | 3 × 3, 128 | 112 × 112 | (3 × 3 × 64) × 128 = 73,728 |
| 112 × 112 | 3 × 3, 128 | 112 × 112 | (3 × 3 × 128) × 128 = 147,456 |
| Max Pooling | | | |
| 56 × 56 | 3 × 3, 256 | 56 × 56 | (3 × 3 × 128) × 256 = 294,912 |
| 56 × 56 | 3 × 3, 256 | 56 × 56 | (3 × 3 × 256) × 256 = 589,824 |
| 56 × 56 | 3 × 3, 256 | 56 × 56 | (3 × 3 × 256) × 256 = 589,824 |
| Max Pooling | | | |
| 28 × 28 | 3 × 3, 512 | 28 × 28 | (3 × 3 × 256) × 512 = 1,179,648 |
| 28 × 28 | 3 × 3, 512 | 28 × 28 | (3 × 3 × 512) × 512 = 2,359,296 |
| 28 × 28 | 3 × 3, 512 | 28 × 28 | (3 × 3 × 512) × 512 = 2,359,296 |
| Max Pooling | | | |
| 14 × 14 | 3 × 3, 512 | 14 × 14 | (3 × 3 × 512) × 512 = 2,359,296 |
| 14 × 14 | 3 × 3, 512 | 14 × 14 | (3 × 3 × 512) × 512 = 2,359,296 |
| 14 × 14 | 3 × 3, 512 | 14 × 14 | (3 × 3 × 512) × 512 = 2,359,296 |
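The parameter counts in the table follow the pattern (k × k × C_in) × C_out, with bias terms left out. The short sketch below recomputes them as a sanity check. The channel pairs are read off the table rows, and the helper name `conv_weights` is hypothetical.

```python
from typing import List, Tuple


def conv_weights(kernel: int, in_channels: int, out_channels: int) -> int:
    """Weights in a convolutional layer, bias excluded: (k * k * C_in) * C_out."""
    return kernel * kernel * in_channels * out_channels


# (in_channels, out_channels) for each 3 x 3 layer, in table order.
LAYERS: List[Tuple[int, int]] = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

if __name__ == "__main__":
    counts = [conv_weights(3, c_in, c_out) for c_in, c_out in LAYERS]
    for (c_in, c_out), n in zip(LAYERS, counts):
        print(f"3x3, {c_in:>3} -> {c_out:>3}: {n:,}")
    print(f"total: {sum(counts):,}")
```

Max pooling layers add no weights, so only the thirteen convolutional rows contribute to the total.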

| Model | | | | PredCls | | | SGCls | | | SGGen | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| IMP [40] | | | | - | 10.1% | 10.8% | - | 6.0% | 6.1% | - | 3.7% | 4.9% |
| VTransE [39] | | | | 7.6% | 12.4% | 14.3% | 4.9% | 6.8% | 7.2% | 3.2% | 5.3% | 5.8% |
| Motifs [42] | | | | 11.3% | 14.2% | 15.4% | 6.2% | 8.0% | 8.3% | 4.4% | 6.0% | 7.2% |
| Graph R-CNN [66] | | | | 13.1% | 18.8% | 22.6% | 7.9% | 9.7% | 11.1% | 4.9% | 6.8% | 8.6% |
| Knowledge-embedded [78] | | | | 15.4% | 20.7% | 24.2% | 9.2% | 11.3% | 12.0% | 6.2% | 8.1% | 9.8% |
| VCTree [75] | | | | 18.3% | 25.2% | 28.3% | 12.3% | 13.1% | 14.2% | 6.6% | 9.3% | 11.1% |
| Ours | SREN | AGCN | MS-GCN | | | | | | | | | |
| | × | ✓ | × | 12.2% | 17.5% | 22.4% | 6.5% | 7.6% | 10.3% | 3.8% | 5.2% | 7.4% |
| | ✓ | ✓ | × | 14.4% | 19.6% | 24.3% | 8.1% | 9.2% | 11.8% | 4.6% | 6.4% | 9.2% |
| | ✓ | × | ✓ | 17.8% | 24.0% | 29.1% | 11.9% | 13.6% | 15.4% | 6.3% | 10.2% | 12.3% |

| Model | | | | PredCls | | | SGCls | | | SGGen | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| IMP [40] | | | | - | 12.1% | 13.3% | - | 7.4% | 8.2% | - | 5.6% | 6.5% |
| VTransE [39] | | | | 10.2% | 16.8% | 18.6% | 8.3% | 13.2% | 16.1% | 5.8% | 6.9% | 8.3% |
| Motifs [42] | | | | 13.1% | 19.3% | 21.6% | 10.1% | 15.7% | 19.1% | 6.3% | 7.6% | 9.8% |
| Graph R-CNN [66] | | | | 18.3% | 23.2% | 28.1% | 15.8% | 20.9% | 23.4% | 13.6% | 18.1% | 22.6% |
| Knowledge-embedded [78] | | | | 20.4% | 25.1% | 30.7% | 17.3% | 22.6% | 25.6% | 15.2% | 19.4% | 24.4% |
| VCTree [75] | | | | 21.6% | 27.4% | 31.6% | 18.8% | 23.3% | 27.2% | 16.1% | 20.7% | 25.2% |
| Ours | SREN | AGCN | MS-GCN | | | | | | | | | |
| | × | ✓ | × | 17.2% | 22.4% | 27.0% | 14.3% | 20.3% | 22.6% | 11.8% | 17.2% | 21.3% |
| | ✓ | ✓ | × | 19.4% | 24.6% | 29.3% | 16.2% | 22.1% | 24.9% | 14.6% | 19.4% | 23.1% |
| | ✓ | × | ✓ | 23.7% | 28.9% | 34.2% | 20.1% | 26.3% | 29.1% | 18.3% | 23.6% | 27.4% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, P.; Zhang, D.; Wulamu, A.; Liu, X.; Chen, P. Semantic Relation Model and Dataset for Remote Sensing Scene Understanding. ISPRS Int. J. Geo-Inf. 2021, 10, 488. https://doi.org/10.3390/ijgi10070488
