Road Characteristics Detection Based on Joint Convolutional Neural Networks with Adaptive Squares

Abstract

1. Introduction

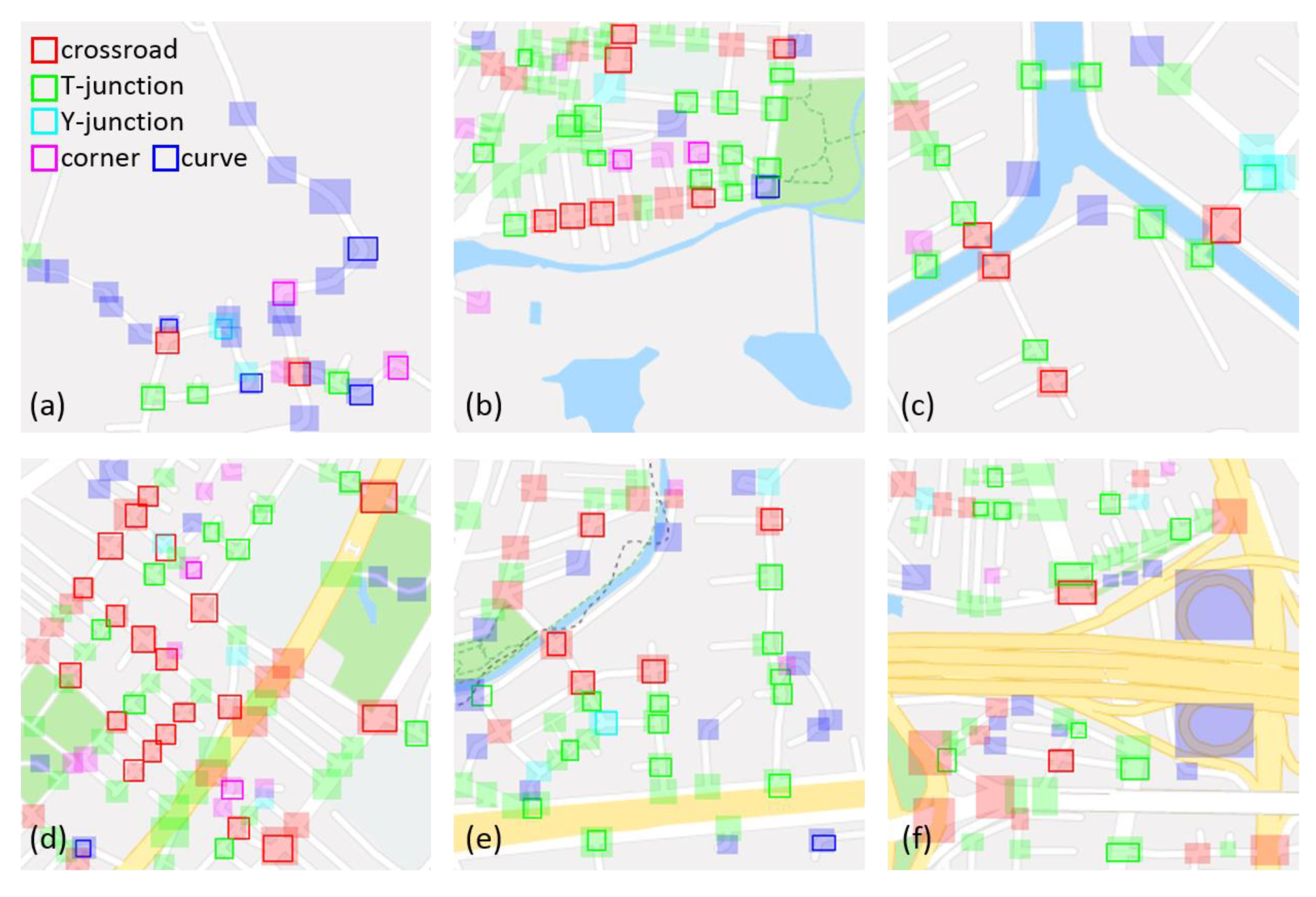

- A simple joint deep learning framework that combines binary classification and multi-class classification is proposed to detect various types of road characteristics with high accuracy from popular, widely accessible roadmap tiles.

- Adaptive squares and combination rules are proposed with reference to mapping criteria and geometric patterns of road characteristics in the roadmap to efficiently find optimal detection results.

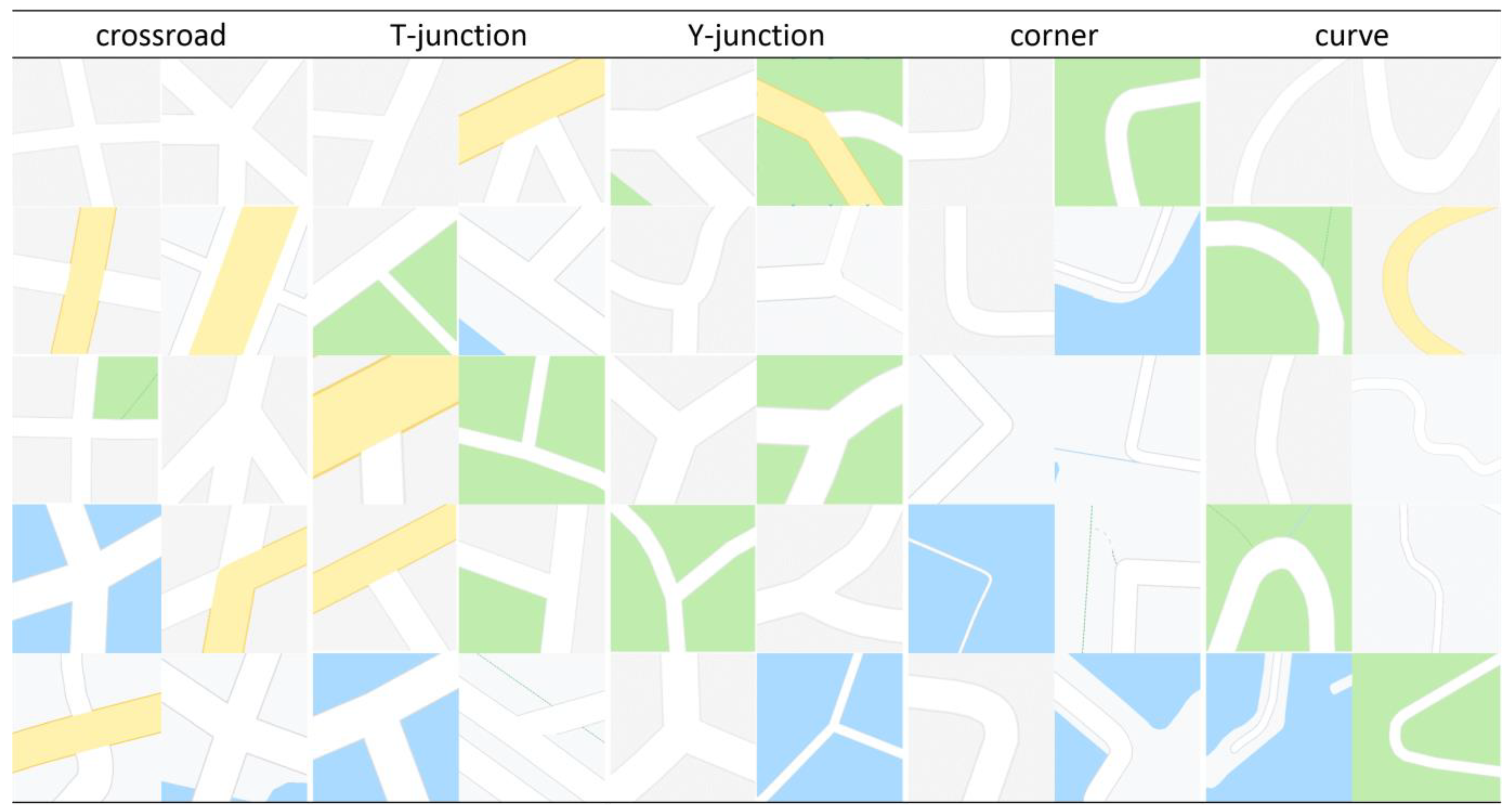

- Five common road characteristics (crossroads, T-junctions, Y-junctions, curves, and corners) are successfully detected with outstanding performance.

- For locations with a given type of road characteristic, the information originally presented on maps is exploited and converted from human-readable to machine-readable form, which has the potential to benefit many road-network-based applications with user-friendly programs.

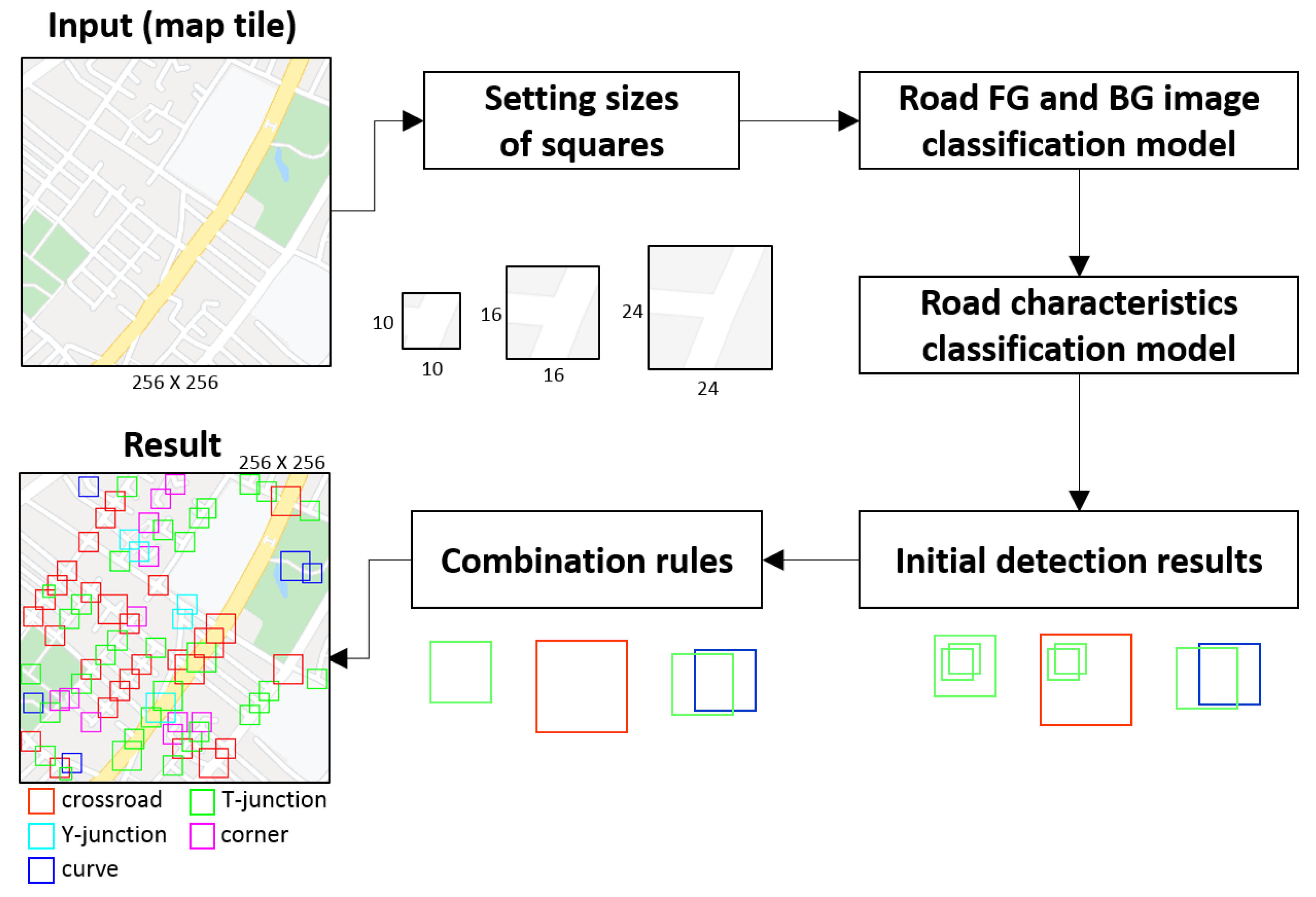

2. Methods

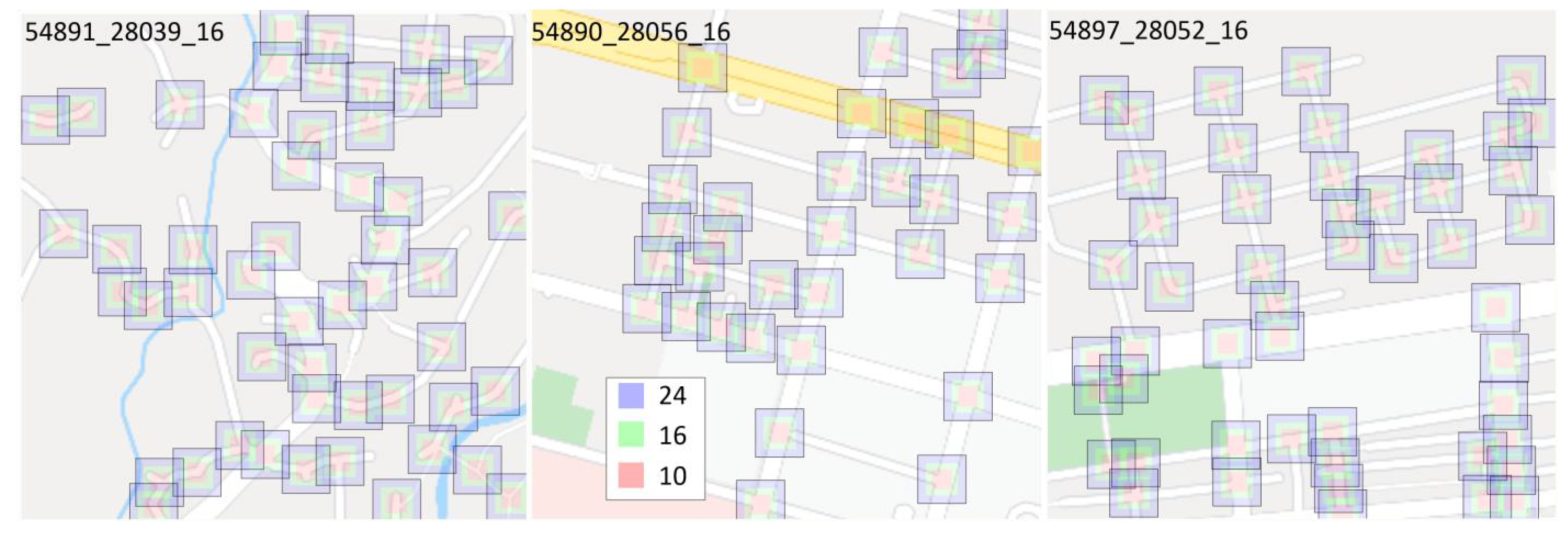

2.1. Setting Sizes of Squares

2.2. Joint Convolutional Neural Networks

2.2.1. Road Foreground and Background (FG and BG) Image Classification Model

2.2.2. Road Characteristics Classification Model

2.3. Process Steps and Combination Rules

- Process step 1 (for the same type of road characteristic at each size): the process begins with detection results of the same type of road characteristic. This step removes type I duplicated detection results, which arise at each size because the initial detection results are produced by scanning with a moving step. A non-maximum suppression (NMS) algorithm [60] is adopted to select the best candidate for subsequent processing.

- Process step 2 (for various types of road characteristics at each size): after step 1, multiple results with different types of road characteristics may still be detected for one target location at each size. This is called a type I inconsistent detection result. To remove it, Rule I is applied with an IoU threshold determined heuristically from the street-level representation at zoom level 16, and the types are compared in an order based on their accuracy. The comparison order is crossroad > T-junction > Y-junction > corner (in decreasing priority from left to right, according to their accuracy and the evaluation metrics of model 2). A T-junction has higher priority than a Y-junction because the former has higher precision than the latter in the validation report. Each type is compared against the other types. To avoid duplicate comparisons, the object types for a crossroad are T-junction, Y-junction, corner, and curve; for a T-junction, they are Y-junction, corner, and curve; for a Y-junction, corner and curve; and for a corner, only curve.

- Rule I: if two detection results have a qualified intersection determined by their IoU (for example, an IoU equal to or greater than a threshold T1), whichever of the subject and the object has the lower confidence score is removed. When the subject and the object have the same confidence score, the subject is preserved and the object is removed.

- Process step 3 (for adding supplementary detection results from large and small squares): the medium square is taken as the main detection size because it generally yields more precise results than the other sizes. However, the medium size can fail in specific cases, such as a detection square that is too small for a wide road or too large for roads in dense areas, which leads to incorrect detection results. To handle these cases, this step adds supplementary detection results from the large and small squares by applying Rule II.

- Rule II: if two detection results (the subject from the medium size and the object from either the large or the small size) do not have a qualified intersection (for example, the distance between their centers is greater than a threshold T2 × L, where T2 is a scaling factor and L is the side length of the larger detection result), the object is added. In that case, the two are valid detection results at different locations. The distance between centers, rather than the IoU, is used to decide whether one of the two results is a duplicate because this step compares detection results of different sizes, and the distance measure is more efficient and more stable: the IoU is affected by the areas of the two detection results, whereas the center distance is not. Next, when two objects found through the large and small squares have a qualified intersection, one of them must be removed to avoid a type II duplicated case, which occurs when two sizes of squares generate the same type of road characteristic for the same target location. The one with the lower confidence score is removed. If the confidence scores are the same, the detection result from the large size is preserved and the other is removed, because the large one encompasses a wider range with more certain information than the small one.

- Process step 4 (for crossroads): after supplementing from the other sizes of detection results, type II inconsistent detection results caused by incomplete coverage of the medium size may still occur. For example, a location may be detected as a T-junction at the medium size but as a crossroad at the large size because the medium square is too small to cover it. This step uses large-sized crossroads to resolve type II inconsistent detection results by applying Rule III. Only large-sized crossroads containing no medium-sized crossroads are processed, because a large-sized crossroad containing a medium-sized crossroad cannot remain after process step 3. However, after Rule III is applied, large-sized and medium-sized crossroads, or large-sized and small-sized crossroads, may coexist for one target location, leading to type II duplicated cases. Rule IV is then applied to remove the duplicated crossroads.

- Rule III (for large-sized crossroads and medium-sized T-junctions or Y-junctions): if two detection results (the subject is a T-junction or a Y-junction from the medium size, and the object is a crossroad from the large size) have a qualified intersection (for example, the distance between their centers is equal to or smaller than the threshold T2 × L, with T2 and L as described in Rule II), the object is preserved and the subject is removed.

- Rule IV (for large-sized and medium-sized crossroads, and for large-sized and small-sized crossroads): when two detection results are both crossroads, if the subject detected from the medium-size square and the object detected from the large-size square have a qualified intersection (as described in Rule III), the subject is preserved and the object is removed because the subject is from the main size. In addition, if the subject detected from the large-size square and the object detected from the small-size square have a qualified intersection, the subject is preserved and the object is removed because the subject covers a larger area and therefore more context than the object.

- Process step 5 (optional, for curves): the shape of a curve is much more diverse than that of the other types, because roads bend frequently according to topography or other practical demands. For example, a curve could be a sharp turn in a mountain area or a smooth, arc-like turn in a flat area. To avoid generating too many discrete curves, especially for a curve with a large radius of curvature, this step merges adjacent curves into one curve by applying Rule V. The step is optional, as leaving many discrete curves detected but not merged is also acceptable.

- Rule V: if two detection results have a qualified intersection (for example, the distance between their centers is equal to or smaller than a threshold T3 × L, where T3 is a scaling factor), a new spatial range for the location of the curve is reconstructed from the maximum extents of the subject and the object.
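The geometric tests behind the rules above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Detection` class, its field names, and all function names are hypothetical; the threshold values for T1, T2, and T3 are placeholders because the text does not state them; and `greedy_nms` is a plain greedy non-maximum suppression, which may differ from the variant cited as [60].

```python
from dataclasses import dataclass
from math import hypot


@dataclass(frozen=True)
class Detection:
    """One square detection result (hypothetical structure for illustration)."""
    cx: float     # center x in pixels
    cy: float     # center y in pixels
    side: float   # side length of the square in pixels
    label: str    # road characteristic type
    score: float  # confidence score

    @property
    def bounds(self):
        half = self.side / 2
        return (self.cx - half, self.cy - half, self.cx + half, self.cy + half)


def iou(a, b):
    """Intersection over union of two axis-aligned squares."""
    ax0, ay0, ax1, ay1 = a.bounds
    bx0, by0, bx1, by1 = b.bounds
    width = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    height = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = width * height
    return inter / (a.side ** 2 + b.side ** 2 - inter)


def greedy_nms(detections, threshold):
    """Step 1: keep the highest-scoring result among overlapping same-type results."""
    kept = []
    for det in sorted(detections, key=lambda d: d.score, reverse=True):
        if all(iou(det, k) < threshold for k in kept):
            kept.append(det)
    return kept


def centers_close(a, b, factor):
    """Qualified intersection by center distance: d <= factor * L (L = larger side)."""
    larger_side = max(a.side, b.side)
    return hypot(a.cx - b.cx, a.cy - b.cy) <= factor * larger_side


def rule_1(subject, obj, t1):
    """Rule I: on sufficient IoU drop the lower-confidence result; ties keep the subject."""
    if iou(subject, obj) < t1:
        return [subject, obj]
    return [subject] if subject.score >= obj.score else [obj]


def rule_2_adds(medium, other, t2):
    """Rule II: a large or small result is added only if it is far from the medium one."""
    return not centers_close(medium, other, t2)


def rule_5_merge(a, b, t3):
    """Rule V: merged extent (x0, y0, x1, y1) of two nearby curves, else None."""
    if not centers_close(a, b, t3):
        return None
    ax0, ay0, ax1, ay1 = a.bounds
    bx0, by0, bx1, by1 = b.bounds
    return (min(ax0, bx0), min(ay0, by0), max(ax1, bx1), max(ay1, by1))


# Placeholder thresholds; the text does not state the values of T1, T2, and T3.
T1, T2, T3 = 0.5, 0.5, 1.0

medium_t = Detection(100, 100, 16, "T-junction", 0.90)
medium_y = Detection(102, 100, 16, "Y-junction", 0.80)
large_x = Detection(130, 100, 24, "crossroad", 0.85)

print(round(iou(medium_t, medium_y), 3))                  # 0.778
print([d.label for d in rule_1(medium_t, medium_y, T1)])  # ['T-junction']
print(rule_2_adds(medium_t, large_x, T2))                 # True: 30 px > 0.5 * 24 px
```

The center-distance test depends only on the two centers and the larger side length, so it behaves the same whether a 16 × 16 square is compared with a 10 × 10 or a 24 × 24 square, whereas the IoU of two squares of unequal size is bounded by the ratio of their areas.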

3. Implementation

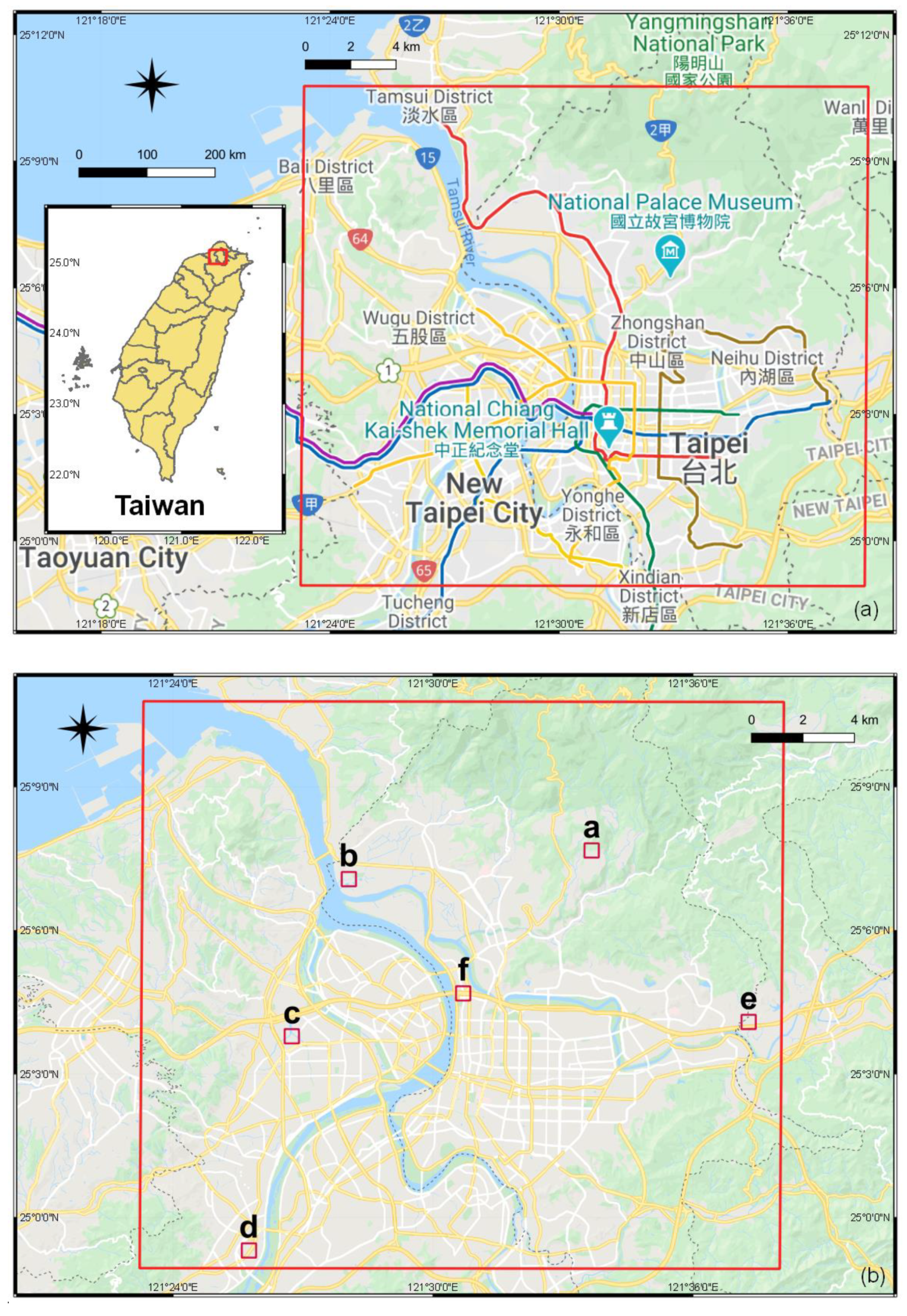

3.1. Study Area and Study Materials

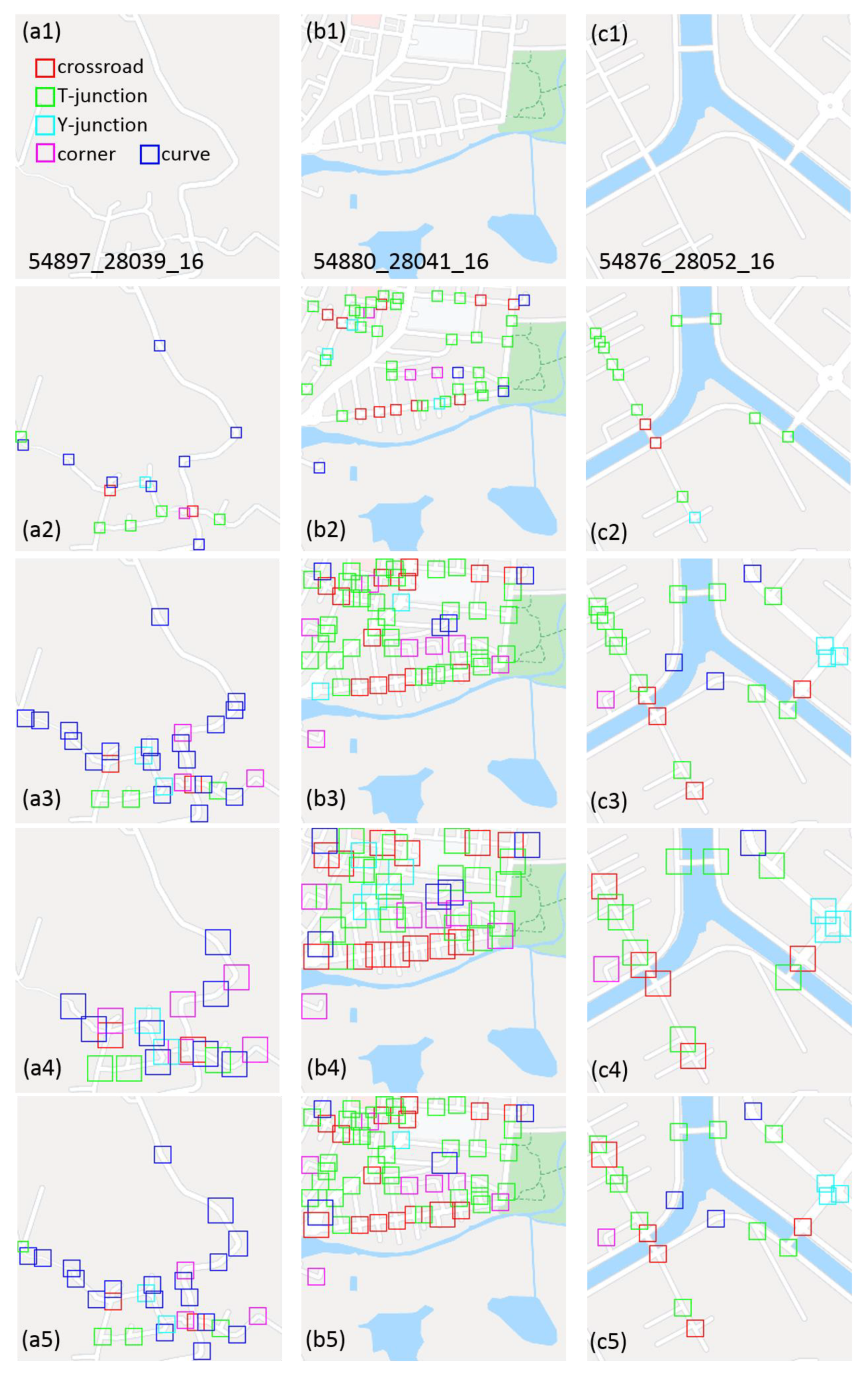

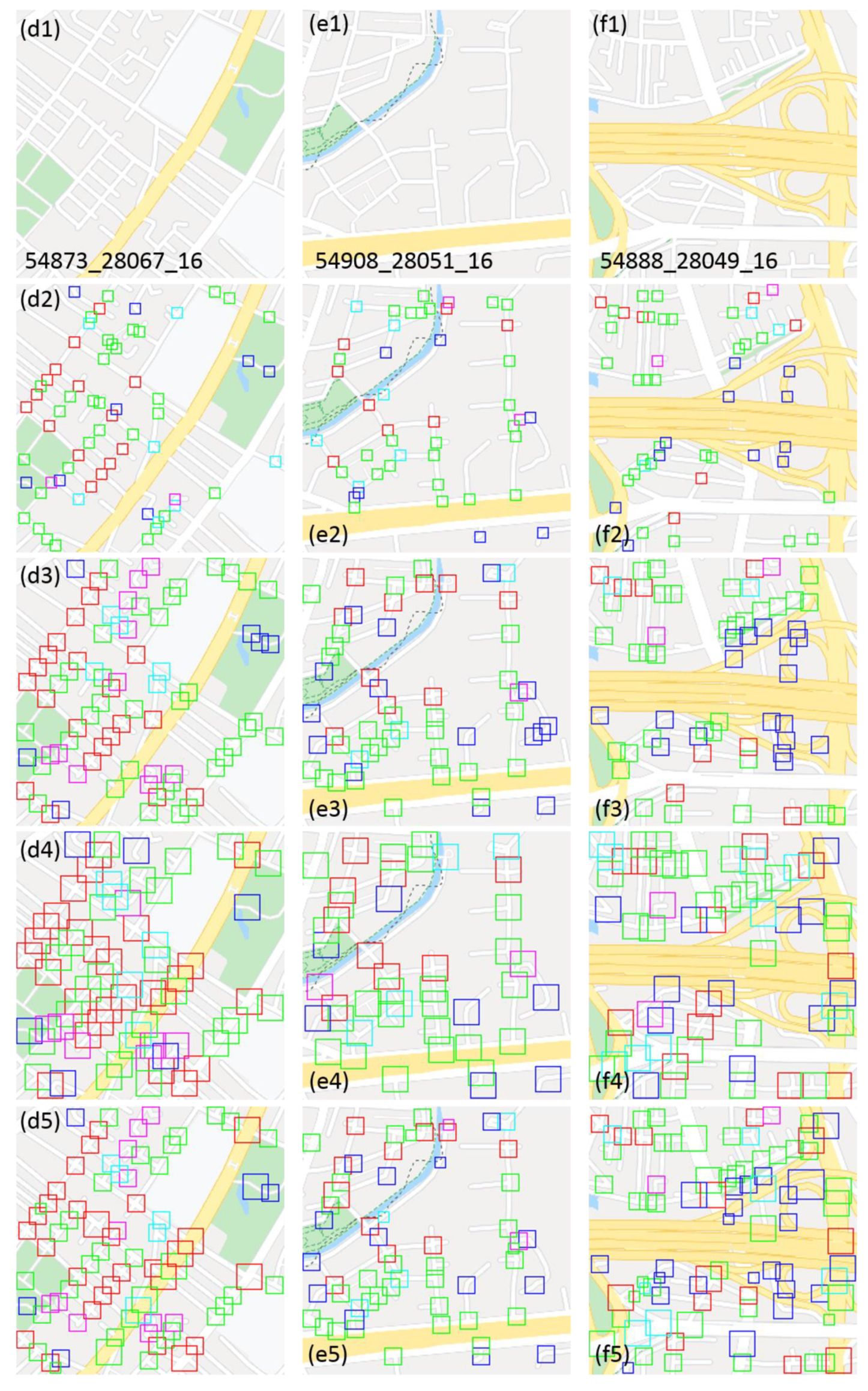

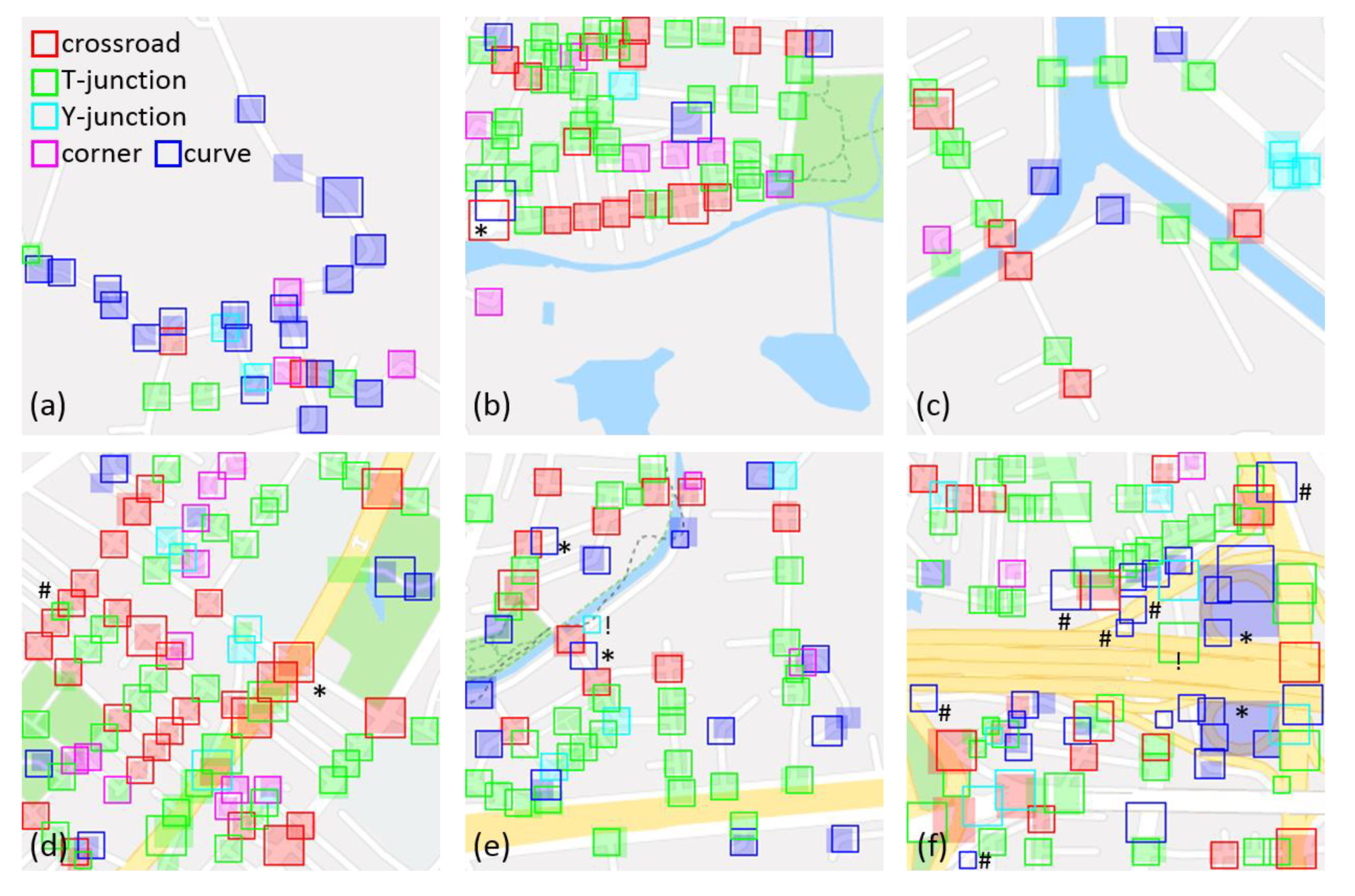

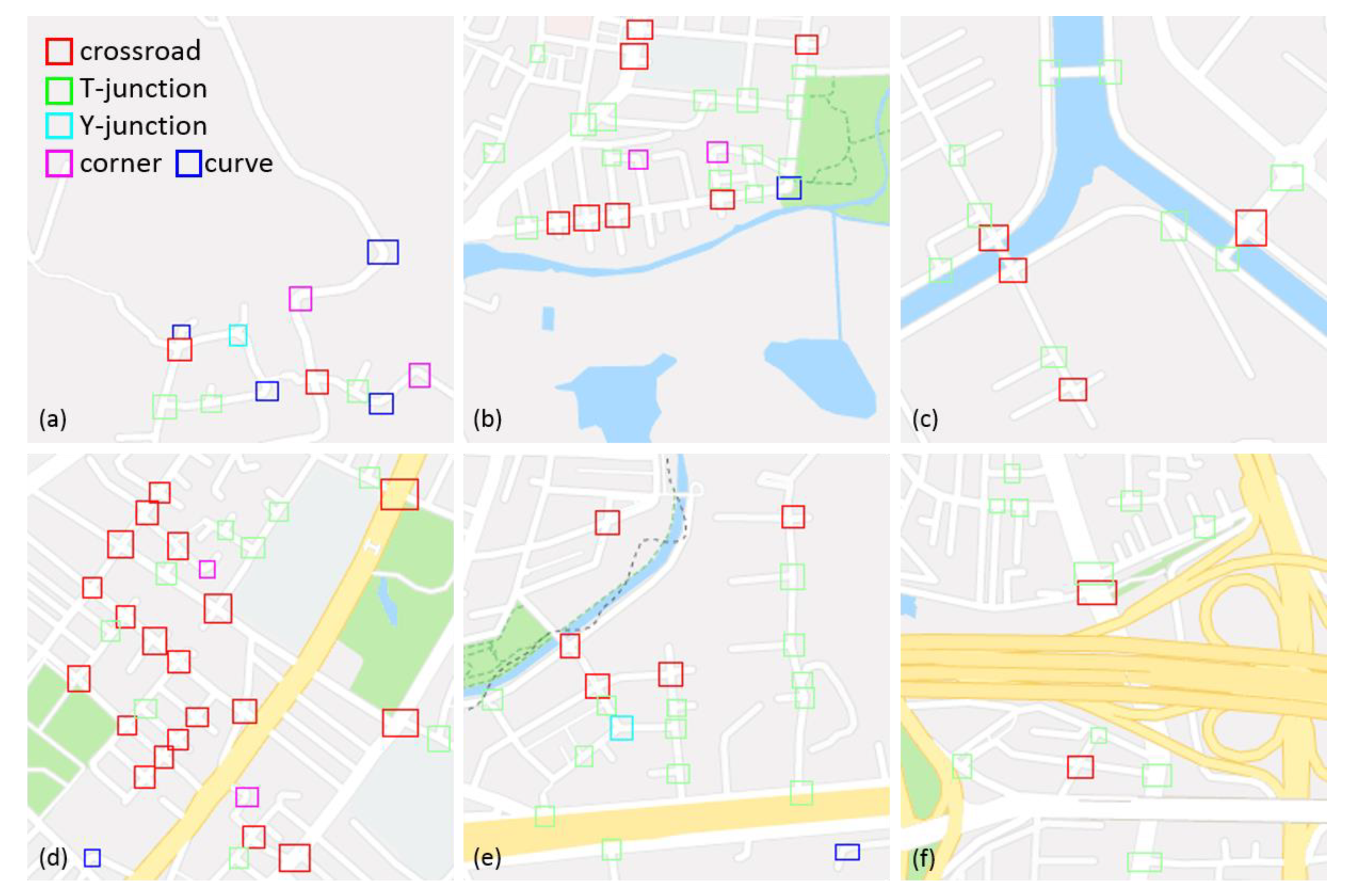

3.2. Experiments and Results

3.3. Discussion and Evaluation

- The corner and curve types score lower than the other types (corner recall 0.901, curve precision 0.859) because they are misclassified as each other. Although a corner is defined as a road characteristic with a 90-degree geometric pattern, corners and curves are sometimes confused when a curve bends at nearly 90 degrees. This is why the curve type has only 86% precision.

- T-junctions and Y-junctions are detected with good performance. However, false positives for T-junctions may occur when two nearly straight lanes are connected, or when a curve is connected with one of the three lanes of a Y-junction. They may also be caused by vague images on the border between freeways and general roads. Including more training data may resolve these cases.

- Adaptive squares perform well for road characteristics detection. However, the few incorrect detection results are mostly caused by insufficient coverage of the squares; for example, crossroads or T-junctions on very wide roads are not detected. This is a limitation identified in this study.

4. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Xie, K.; Wang, X.; Ozbay, K.; Yang, H. Crash frequency modeling for signalized intersections in a high-density urban road network. Anal. Methods Accid. Res. 2014, 2, 39–51.

2. Farahani, R.Z.; Miandoabchi, E.; Szeto, W.Y.; Rashidi, H. A review of urban transportation network design problems. European J. Oper. Res. 2013, 229, 281–302.

3. Marshall, W.E.; Garrick, N.W. Does street network design affect traffic safety? Accid. Anal. Prev. 2011, 43, 769–781.

4. Ewing, R.; Hamidi, S.; Grace, J.B. Urban sprawl as a risk factor in motor vehicle crashes. Urban Stud. 2016, 53, 247–266.

5. Wang, X.; Wu, X.; Abdel-Aty, M.; Tremont, P.J. Investigation of road network features and safety performance. Accid. Anal. Prev. 2013, 56, 22–31.

6. Montella, A.; Guida, C.; Mosca, J.; Lee, J.; Abdel-Aty, M. Systemic approach to improve safety of urban unsignalized intersections: Development and validation of a Safety Index. Accid. Anal. Prev. 2020, 141, 105523.

7. Yang, C.; Gidófalvi, G. Detecting regional dominant movement patterns in trajectory data with a convolutional neural network. Int. J. Geogr. Inf. Sci. 2020, 34, 996–1021.

8. Iagnemma, K. Route Planning for an Autonomous Vehicle. U.S. Patent US10126136B2, 13 November 2018.

9. Chen, C.; Rickert, M.; Knoll, A. Combining task and motion planning for intersection assistance systems. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016.

10. Qiu, J.; Wang, R. Automatic extraction of road networks from GPS traces. Photogramm. Eng. Remote. Sens. 2016, 82, 593–604.

11. Li, L.; Li, D.; Xing, X.; Yang, F.; Rong, W.; Zhu, H. Extraction of road intersections from GPS traces based on the dominant orientations of roads. ISPRS Int. J. Geo-Inf. 2017, 6, 403.

12. Wang, J.; Wang, C.; Song, X.; Raghavan, V. Automatic intersection and traffic rule detection by mining motor-vehicle GPS trajectories. Comput. Environ. Urban Syst. 2017, 64, 19–29.

13. Yang, X.; Tang, L.; Niu, L.; Zhang, X.; Li, Q. Generating lane-based intersection maps from crowdsourcing big trace data. Transp. Res. Part C Emerg. Technol. 2018, 89, 168–187.

14. Xie, X.; Philips, W. Road intersection detection through finding common sub-tracks between pairwise GNSS traces. ISPRS Int. J. Geo-Inf. 2017, 6, 311.

15. Xie, X.; Wong, B.-Y.K.; Aghajan, H.; Veelaert, P.; Philips, W. Inferring directed road networks from GPS traces by track alignment. ISPRS Int. J. Geo-Inf. 2015, 4, 2446–2471.

16. Chen, B.; Ding, C.; Ren, W.; Xu, G. Extended Classification Course Improves Road Intersection Detection from Low-Frequency GPS Trajectory Data. ISPRS Int. J. Geo-Inf. 2020, 9, 181.

17. Munoz-Organero, M.; Ruiz-Blaquez, R.; Sánchez-Fernández, L. Automatic detection of traffic lights, street crossings and urban roundabouts combining outlier detection and deep learning classification techniques based on GPS traces while driving. Comput. Environ. Urban Syst. 2018, 68, 1–8.

18. Zourlidou, S.; Fischer, C.; Sester, M. Classification of street junctions according to traffic regulators. In Geospatial Technologies for Local and Regional Development: Short Papers, Posters and Poster Abstracts, Proceedings of the 22nd AGILE Conference on Geographic Information Science, Limassol, Cyprus, 17–20 June 2019; Kyriakidis, P., Hadjimitsis, D., Skarlatos, D., Mansourian, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2019.

19. Soilán, M.; Truong-Hong, L.; Riveiro, B.; Laefer, D. Automatic extraction of road features in urban environments using dense ALS data. Int. J. Appl. Earth Obs. Geoinf. 2018, 64, 226–236.

20. Jung, J.; Bae, S.-H. Real-time road lane detection in urban areas using LiDAR data. Electronics 2018, 7, 276.

21. Hu, J.; Razdan, A.; Femiani, J.C.; Cui, M.; Wonka, P. Road network extraction and intersection detection from aerial images by tracking road footprints. IEEE Trans. Geosci. Remote. Sens. 2007, 45, 4144–4157.

22. Chiang, Y.-Y.; Knoblock, C.A.; Shahabi, C.; Chen, C.-C. Automatic and accurate extraction of road intersections from raster maps. GeoInformatica 2009, 13, 121–157.

23. Chiang, Y.-Y.; Knoblock, C.A.; Chen, C.-C. Automatic extraction of road intersections from raster maps. In Proceedings of the 13th annual ACM international workshop on Geographic information systems, Bremen, Germany, 4–5 November 2005.

24. Liu, J.; Qin, Q.; Li, J.; Li, Y. Rural road extraction from high-resolution remote sensing images based on geometric feature inference. ISPRS Int. J. Geo-Inf. 2017, 6, 314.

25. Bakhtiari, H.R.R.; Abdollahi, A.; Rezaeian, H. Semi automatic road extraction from digital images. Egypt. J. Remote. Sens. Space Sci. 2017, 20, 117–123.

26. Mokhtarzade, M.; Zoej, M.V. Road detection from high-resolution satellite images using artificial neural networks. Int. J. Appl. Earth Obs. Geoinf. 2007, 9, 32–40.

27. Bhatt, D.; Sodhi, D.; Pal, A.; Balasubramanian, V.; Krishna, M. Have I reached the intersection: A deep learning-based approach for intersection detection from monocular cameras. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017.

28. Bastani, F.; He, S.; Abbar, S.; Alizadeh, M.; Balakrishnan, H.; Chawla, S.; Madden, S.; DeWitt, D. Roadtracer: Automatic extraction of road networks from aerial images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

29. Saeedimoghaddam, M.; Stepinski, T. Automatic extraction of road intersection points from USGS historical map series using deep convolutional neural networks. Int. J. Geogr. Inf. Sci. 2020, 34, 947–968.

30. Ruiz, J.; Rubio, T.; Urena, M. Automatic extraction of road intersections from images based on texture characterisation. Surv. Rev. 2011, 43, 212–225.

31. Masó, J.; Pomakis, K.; Julià, N. OGC Web Map Tile Service (WMTS). Implement. Standard. Ver 2010, 1, 114.

32. Tile Map Service Specification. Available online: https://wiki.osgeo.org/wiki/Tile_Map_Service_Specification (accessed on 15 June 2020).

33. Kastanakis, B. Mapbox Cookbook; Packt Publishing Ltd.: Birmingham, UK, 2016.

34. Martinelli, L.; Roth, M. Vector Tiles from OpenStreetMap; HSR Hochschule für Technik Rapperswil: Rapperswil-Jona, Switzerland, 2015.

35. Shi, N.X.; Wu, X. Method of Client Side Map Rendering with Tiled Vector Data. U.S. Patent US7734412B2, 8 June 2010.

36. Zhao, Z.-Q.; Zheng, P.; Xu, S.-T.; Wu, X. Object detection with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3212–3232.

37. Liu, L.; Ouyang, W.; Wang, X.; Fieguth, P.; Chen, J.; Liu, X.; Pietikäinen, M. Deep learning for generic object detection: A survey. Int. J. Comput. Vis. 2020, 128, 261–318.

38. Pathak, A.R.; Pandey, M.; Rautaray, S. Application of deep learning for object detection. Procedia Comput. Sci. 2018, 132, 1706–1717.

39. Behrendt, K.; Novak, L.; Botros, R. A deep learning approach to traffic lights: Detection, tracking, and classification. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017.

40. Mundhenk, T.N.; Konjevod, G.; Sakla, W.A.; Boakye, K. A large contextual dataset for classification, detection and counting of cars with deep learning. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016.

41. Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote. Sens. Lett. 2017, 14, 778–782.

42. Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. Joint Deep Learning for land cover and land use classification. Remote. Sens. Environ. 2019, 221, 173–187.

43. Google Maps. Available online: https://www.google.com/maps (accessed on 1 February 2020).

44. OpenStreetMap. Available online: https://www.openstreetmap.org/ (accessed on 5 May 2021).

45. Pourabdollah, A.; Morley, J.; Feldman, S.; Jackson, M. Towards an authoritative OpenStreetMap: Conflating OSM and OS OpenData national maps’ road network. ISPRS Int. J. Geo-Inf. 2013, 2, 704–728.

46. Wu, S.; Du, C.; Chen, H.; Xu, Y.; Guo, N.; Jing, N. Road extraction from very high resolution images using weakly labeled OpenStreetMap centerline. ISPRS Int. J. Geo-Inf. 2019, 8, 478.

47. Nasiri, A.; Abbaspour, R.A.; Chehreghan, A.; Arsanjani, J.J. Improving the quality of citizen contributed geodata through their historical contributions: The case of the road network in OpenStreetMap. ISPRS Int. J. Geo-Inf. 2018, 7, 253.

48. Li, J.; Qin, H.; Wang, J.; Li, J. OpenStreetMap-based autonomous navigation for the four wheel-legged robot via 3D-Lidar and CCD camera. IEEE Trans. Ind. Electron. 2021, 1-1.

49. Keller, S.; Gabriel, R.; Guth, J. Machine learning framework for the estimation of average speed in rural road networks with openstreetmap data. ISPRS Int. J. Geo-Inf. 2020, 9, 638.

50. Novack, T.; Wang, Z.; Zipf, A. A system for generating customized pleasant pedestrian routes based on OpenStreetMap data. Sensors 2018, 18, 3794.

51. Wang, Z.; Niu, L. A data model for using OpenStreetMap to integrate indoor and outdoor route planning. Sensors 2018, 18, 2100.

52. Sehra, S.S.; Singh, J.; Rai, H.S. Assessing OpenStreetMap Data Using Intrinsic Quality Indicators: An Extension to the QGIS Processing Toolbox. Future Internet 2017, 9, 15.

53. Jacobs, K.T.; Mitchell, S.W. OpenStreetMap quality assessment using unsupervised machine learning methods. Trans. GIS 2020, 24, 1280–1298.

54. Mooney, P.; Minghini, M. A review of OpenStreetMap data. In Mapping and the Citizen Sensor; Foody, G., See, L., Fritz, S., Mooney, P., Olteanu-Raimond, A., Fonte, C.C., Antoniou, V., Eds.; Ubiquity Press: London, UK, 2017; pp. 37–59.

55. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. arXiv 2015, arXiv:1506.01497.

56. Google Maps Platform Documentation - Maps Static API. Available online: https://developers.google.com/maps/documentation/maps-static/start (accessed on 10 October 2020).

57. Google Maps Platform Documentation - Styled Maps. Available online: https://developers.google.com/maps/documentation/maps-static/styling (accessed on 10 October 2020).

58. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

59. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

60. Hosang, J.; Benenson, R.; Schiele, B. Learning non-maximum suppression. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4507–4515.

61. Google Maps Platform Styling Wizard. Available online: https://mapstyle.withgoogle.com/ (accessed on 14 October 2019).

62. LabelImg. Git Code. Available online: https://github.com/tzutalin/labelImg (accessed on 1 July 2020).

Table: combination rules applied to each subject–object pair of detection results, indexed by square size (10 × 10 pixels, small; 16 × 16 pixels, medium; 24 × 24 pixels, large) and by type (A to E). Legend entries: Rule II, Rule III, Rule IV, Rule V, NMS + CS.

| Data Set | Model 1: BG | Model 1: FG | Model 2: BG | Model 2: Crossroad | Model 2: T-Junction | Model 2: Y-Junction | Model 2: Corner | Model 2: Curve |
|---|---|---|---|---|---|---|---|---|
| Training set | 2550 | 2550 | 510 | 510 | 510 | 510 | 510 | 510 |
| Validation set | 1275 | 1275 | 250 | 255 | 255 | 255 | 255 | 255 |
| Test set | 425 | 425 | 80 | 85 | 85 | 85 | 85 | 85 |

| Model | Validation Set | Test Set |
|---|---|---|
| Model 1 | 0.962 | 0.960 |
| Model 2 | 0.962 | 0.969 |

| Model | Type | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Model 1 | BG | 0.951 | 0.969 | 0.960 |
| Model 1 | FG | 0.969 | 0.952 | 0.960 |
| Model 2 | BG | 0.998 | 0.993 | 0.995 |
| Model 2 | Crossroad | 1 | 1 | 1 |
| Model 2 | T-junction | 0.941 | 0.941 | 0.941 |
| Model 2 | Y-junction | 0.941 | 0.941 | 0.941 |
| Model 2 | Corner | 0.965 | 0.901 | 0.932 |
| Model 2 | Curve | 0.859 | 0.948 | 0.901 |
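As a consistency check on the precision, recall, and F1-score table above, the F1-scores can be recomputed with the standard definition F1 = 2PR / (P + R). The sketch below is illustrative only: the dictionary layout and the function name `f1_score` are assumptions, and the numbers are copied from the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per model and type, copied from the table.
reported = {
    ("Model 1", "BG"): (0.951, 0.969, 0.960),
    ("Model 1", "FG"): (0.969, 0.952, 0.960),
    ("Model 2", "BG"): (0.998, 0.993, 0.995),
    ("Model 2", "crossroad"): (1.0, 1.0, 1.0),
    ("Model 2", "T-junction"): (0.941, 0.941, 0.941),
    ("Model 2", "Y-junction"): (0.941, 0.941, 0.941),
    ("Model 2", "corner"): (0.965, 0.901, 0.932),
    ("Model 2", "curve"): (0.859, 0.948, 0.901),
}

for (model, kind), (p, r, f1) in reported.items():
    recomputed = round(f1_score(p, r), 3)
    print(f"{model} {kind}: reported {f1:.3f}, recomputed {recomputed:.3f}")
```

Every recomputed value agrees with the reported F1-score to three decimal places.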

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kuo, C.-L.; Tsai, M.-H. Road Characteristics Detection Based on Joint Convolutional Neural Networks with Adaptive Squares. ISPRS Int. J. Geo-Inf. 2021, 10, 377. https://doi.org/10.3390/ijgi10060377
