1. Introduction

Virtual reality (VR) in an educational setting reduces the passive learning that students often experience in the classroom. Instead, students using VR engage in active learning, which is more immersive and engaging [1]. VR can be used to address practical challenges in education, such as physically inaccessible sites, limitations due to liability or hazards, and the high costs associated with site visits [2]. VR has been used as an educational and instructional tool since as early as the 1980s (e.g., flight simulations) [3,4]. In higher education, VR was introduced in the 1990s; however, high purchase and maintenance costs, physical and psychological discomfort of users, and poor virtual environment design were the main barriers to its widespread dissemination [4]. Reductions in the cost of computer and VR hardware, increases in computing power, and photorealistic computer graphics allowed a rapid rise of desktop-based virtual technology in education. However, a major drawback of such traditional applications is their low level of immersion, as the user interacts with the virtual environment via a standard computer monitor, mouse, and keyboard. This limits the user's sense of presence, experience, and engagement [5]. Several studies in areas such as U.S. Army training, medicine, engineering, and elementary education have found that immersive VR leads to increased learning [6,7,8,9]. In addition, Patel et al. [10] found that, in physical tasks, participants learn more in immersive VR than with 2D video systems. In contrast, other studies found that immersive VR had no significant effect on learning [11,12]. Immersive VR has a significant advantage over desktop-based systems when teaching topics that require spatial reasoning [13,14,15,16]. The two main types of immersive VR are (i) cave automatic virtual environments, where the walls of a room are covered with displays and the user wears 3D glasses; and (ii) head-mounted displays (HMDs), where the user wears a display device to see the virtual environment and uses touch controllers or gloves to interact with it. The high cost of the former prohibits its widespread use [2]. Only in recent years, since the founding of Oculus in 2012, has widespread VR accessibility become feasible, owing to a large reduction in price and an improved user experience. This has created opportunities for applications in engineering education and related disciplines. For example, immersive VR has supported engineering design in construction engineering [17]. VR has also been used for visualization, safety training, and training in the use of equipment such as cranes [18], geohazard visualization and assessment [19], simulation of a marine engine room for training marine engineers [20], and training beginners on radiation shielding in nuclear engineering [21]. Furthermore, immersive VR has found applications in geography, geosciences, and spatial sciences [22,23]. Recent examples include virtual field trips to outcrop sites, where users take measurements of rock characteristics (e.g., the thickness of rock layers) [24,25], the study of the internal system of volcanoes [26], and a serious game set on a moon base to motivate and support space research [27].

Previous virtual implementations of surveying tasks were desktop-based [28,29,30,31]. For instance, Dib et al. [28] created a differential leveling lab in desktop-based VR to assist student comprehension of concepts and practices. Differential leveling is the process of finding elevation differences between points [32]; it can be used to establish new vertical control points in surveying. The authors found that virtual learning environments aid students' declarative and procedural knowledge, which shows the great potential of VR technology for surveying engineering education. However, student answers to open-ended questions revealed the limitations of non-immersive VR: students found navigation in flying motion difficult and would have preferred a camera attached at the eye level of the character [28]. Another desktop-based attempt was SimuSurvey and SimuSurvey X, developed in [29,30,31], which simulated the manipulation of surveying instruments. The software also simulated instrument errors and their interactions, which aided student comprehension of instrument operation and of systematic and erratic instrument behavior [33]. An assessment of the software in surveying education showed an improvement in student academic performance [34]; however, the identified limitations included the inability to read measured data and to make decisions about surveying procedures in a 3D environment that includes terrain constraints [34]. In addition, the desktop-based environment created challenges in operating instruments and changing view angles, because desktop-based environments cannot mimic hand movement and real instrument operation. The user needs to switch between several viewpoints to navigate through the virtual environment, which is unnatural and cumbersome and reduces the realism and immersion of the virtual lab. Other, simpler attempts include software for training students to read a leveling rod [35] and to select control points for terrain modeling [36]. In surveying engineering, students must operate complicated instruments with several components. These tasks require hand-eye coordination as well as 3D spatial awareness. The above examples demonstrate the limitations of desktop-based VR implementations and the need to move to immersive implementations that create a sense of naturalism in navigation and movement. Immersive VR has only recently found its way into surveying engineering [37,38,39]. Bolkas and Chiampi [37] proposed that immersive VR labs can address challenges in first-year surveying engineering education related to the cancellation of outdoor labs due to weather (rain and snow) and the inability to conduct labs in various terrains and sites (e.g., different terrains, cities, and construction sites). The recent coronavirus pandemic pushed universities toward remote learning, but it also opened the door to novel remote learning methods. However, hardware cost remains an important barrier to the widespread application of immersive VR for remote learning; therefore, desktop-based VR is still the most suitable platform for remote surveying labs. The first results of Bolkas et al. [38] for an immersive and interactive leveling lab (a leveling loop) were promising; however, the main drawbacks of immersive VR were symptoms of nausea and dizziness in novice users, although these symptoms tend to subside with time. Levin et al. [39] provide another example of immersive VR implementation in surveying. The authors created a topographic exercise to generate contours in a simulated terrain of varying complexity. The contours created by students were compared with reference ones, showing practical aspects of terrain modeling. Their example demonstrates the value of VR for addressing educational challenges; however, the implementation was simple, missing several important parts of the fieldwork, such as centering the tripod, working with the tribrach screws, and leveling the instrument.

To address these educational challenges, we have developed a virtual reality software solution named SurReal (surveying reality). This software simulates surveying labs in an immersive and interactive virtual environment. The application replicates a differential level instrument with a high degree of fidelity, and it can incorporate additional instruments and a variety of terrains for use in a range of surveying scenarios.

This paper focuses on the technical aspects of the software; its main objective is to present and discuss the main software features, the challenges encountered, and the methods developed to overcome them. Of note is a novel technique that we developed for positioning complex objects (such as a tripod) on complex surfaces in VR with a low computational load. Complex objects are becoming ever more prevalent as the intricacy of simulations and games increases; it is therefore important to keep the computational load low so that the performance of the virtual simulation is not affected. This paper is structured into separate sections pertinent to the main features of the software. We then demonstrate our virtual differential leveling labs, i.e., a three-benchmark leveling loop and a point-to-point leveling line. The final section summarizes the main conclusions of this paper and outlines future work.

2. Materials and Methods

2.1. Development Platform, Hardware, and User Interface

The software is in continuous development using an Agile Software Development methodology. Features and bugs are tracked via Microsoft Planner, and tasks are distributed during frequent scrum meetings. A private GitHub code repository facilitates development and versioning. New features are developed in branches and merged into the master branch after passing testing. Alpha testing is routinely conducted by team members. Issues and changes are then added to Microsoft Planner before being addressed at the next scrum meeting. Occasionally, surveying students from outside the project use the software and act as a small-scale beta test. This methodology allows us to quickly implement changes and improve the software.

To choose a development platform, we compared the Unity game engine with the Unreal game engine. Both game engines support VR development and have similar feature sets. Unity uses C# and Unreal uses C++. Although both languages are similar, C# offered significant advantages, such as faster iteration and an easier learning curve. Furthermore, Unity 3D has a more active development community, as it is the most popular game engine for educational and training VR applications [40]. With respect to hardware, we considered the Oculus Rift and the HTC Vive, whose prices were similar. We chose the Oculus Rift after examining both software development kits (SDKs), reviewing the documentation, and performing a trial run with both devices. The system requirements of the software are based on those of the Oculus Rift, which can be found in [41].

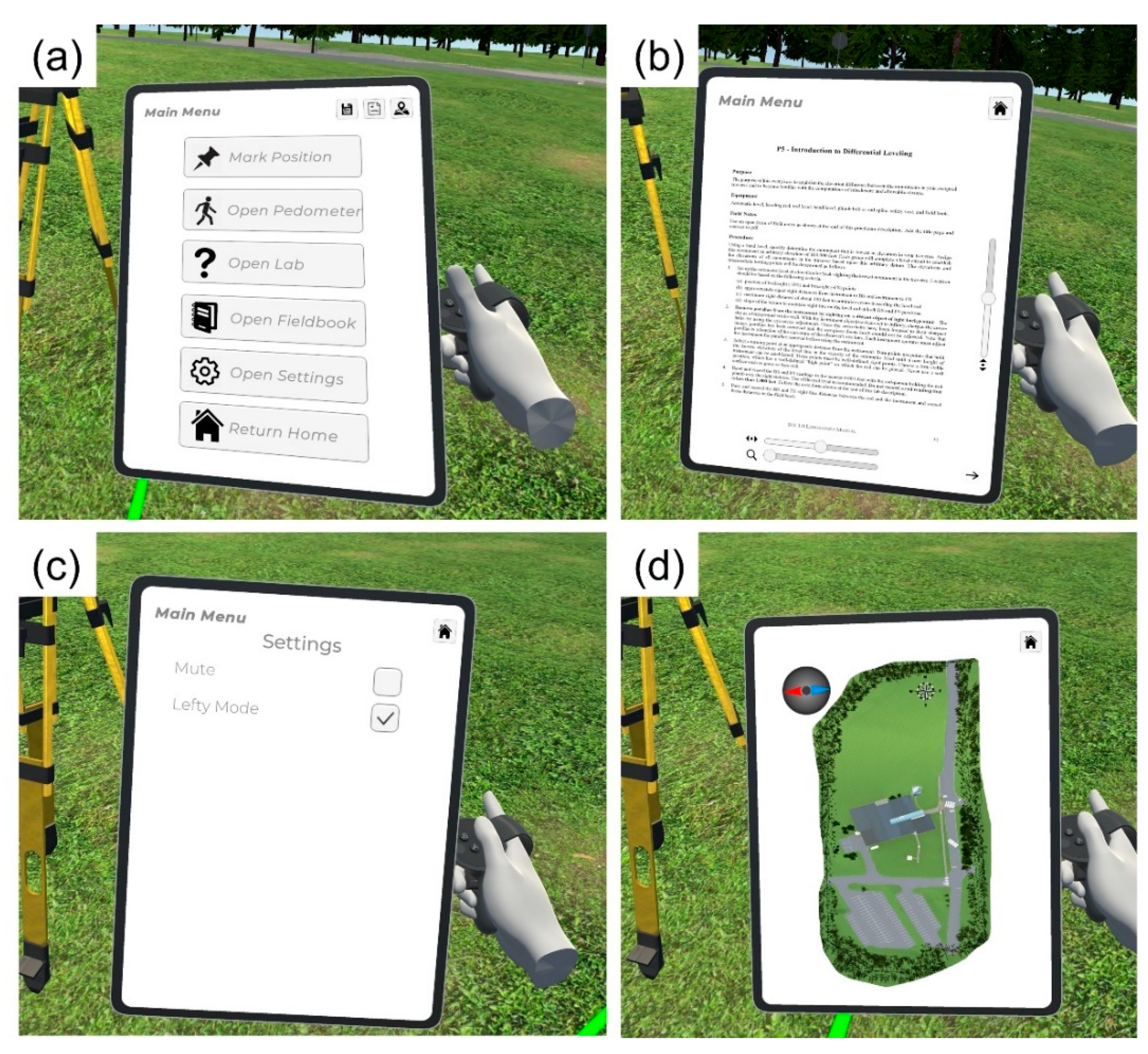

Interaction with virtual objects can be broken down into two categories: (i) selection and (ii) grabbing. The interaction is controlled through the controllers (Figure 1).

Grabbing: The user can physically grab an object by extending their hand, holding it, and dropping it in a new position. This interaction is very intuitive and feels fluid even for new users with no experience in VR (see Figure 1).

Selection: In the real world, surveyors must adjust tripod legs, screws, and knobs by making fine movements with their fingers. Such movements are difficult to simulate in VR because of tracking limitations; thus, a selection-and-menu method was developed. Oculus provides only a very basic form of user interface (UI) control; we therefore built a custom UI system that works seamlessly in VR. This system uses a pointer to select UI elements and interact with them. With a basic piece of equipment, the entire object is selected no matter which part the user points at. More complex objects are broken down into standalone individual components, which the user can select and interact with separately. When the user has an object selected, a menu for that object appears. Menus are displayed as a screen on a virtual tablet held in the player's nondominant hand.

In the main menu of the virtual tablet (Figure 2a), the user can access basic software functions that are useful for the virtual labs: marking a position, which drops a temporary point; opening the pedometer for counting paces; and opening the lab instructions, which displays a PDF with lab instructions for students (Figure 2b). Students can also open the fieldbook to access their notes and make modifications. The “open settings” option allows students to mute the ambient sounds of the virtual environment. From the settings, students can also select lefty mode, which switches the selection pointer to their left hand (Figure 2c). At the top right corner of the main menu, there are three additional options: save progress, export a lab report in PDF, and open a map of the area (Figure 2d).

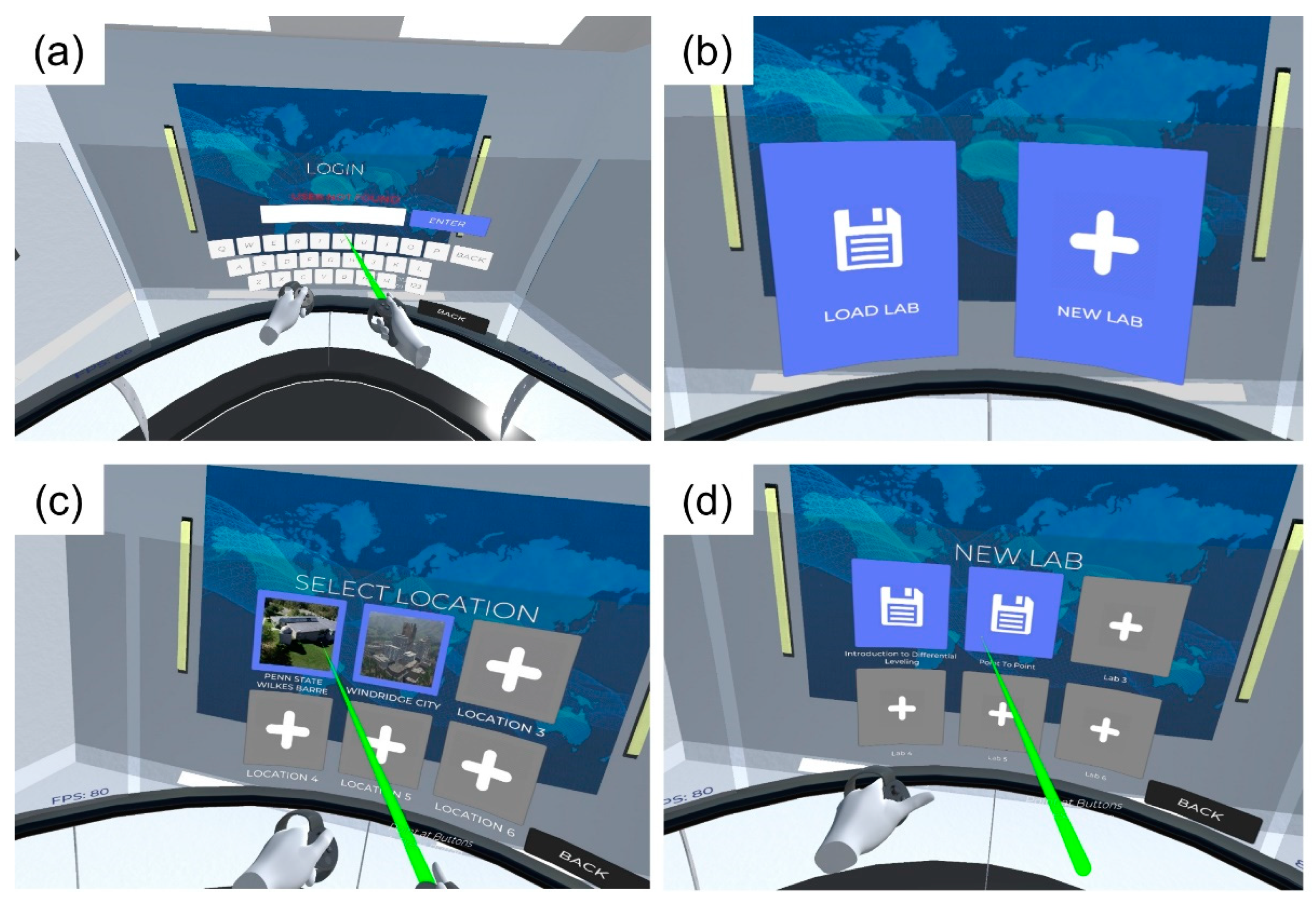

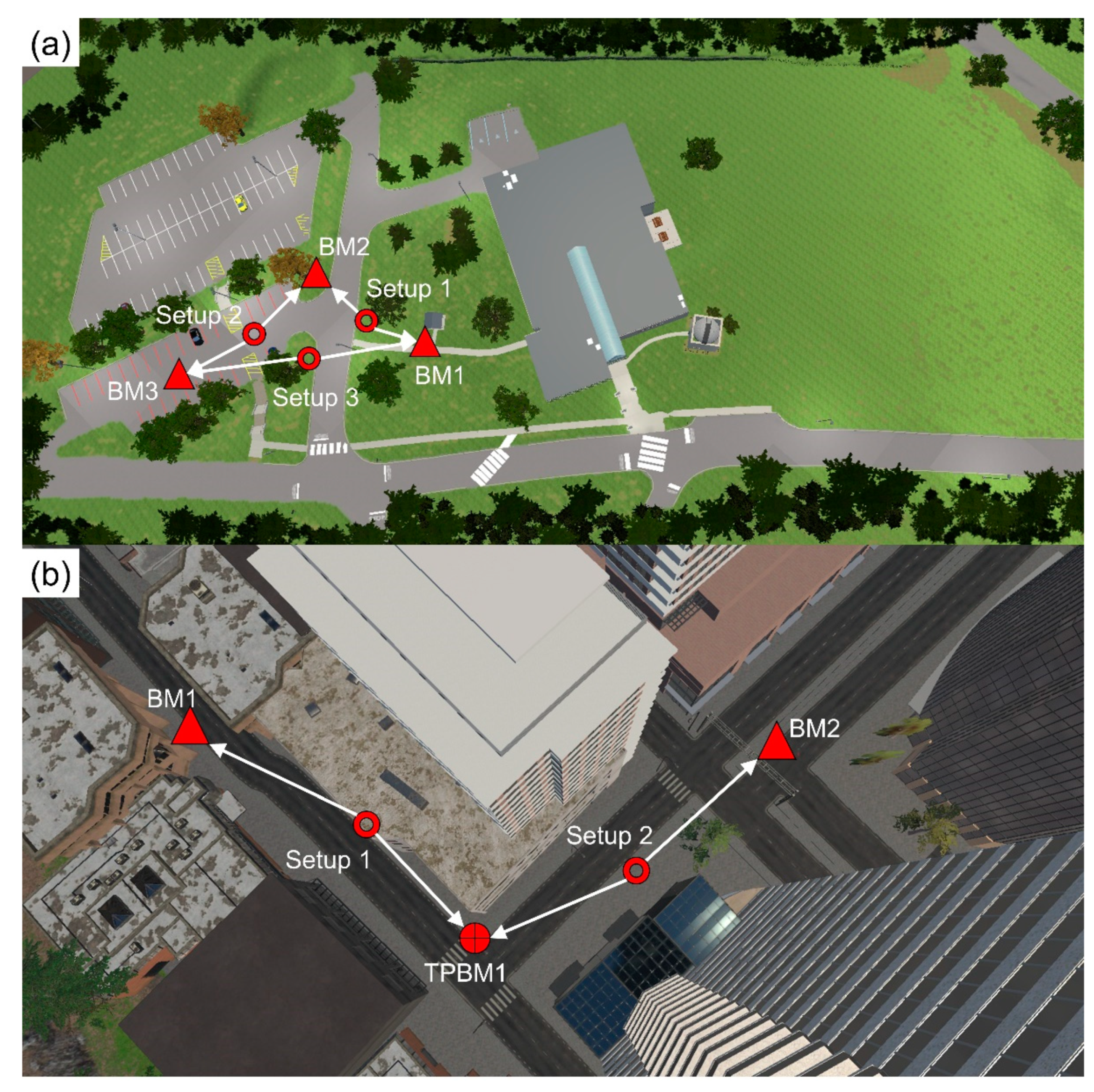

2.2. The Ready Room and Virtual Environment

When students start the software application, they are brought into a virtual environment we call the “ready room” (Figure 3). Here, students can log into their accounts to access the software (Figure 3a). The login options were included to authenticate and authorize users; they also enable a student to start a lab on one machine and complete it on another (Figure 3b). Through the ready room, students can choose the virtual environment and the lab they want to use. Currently, two leveling labs are available: the first is a three-benchmark loop and the second is a level line. Future work will expand the available labs to include additional instruments (e.g., total stations and global navigation satellite systems) and more tasks (e.g., setting control and collecting data for topographic mapping).

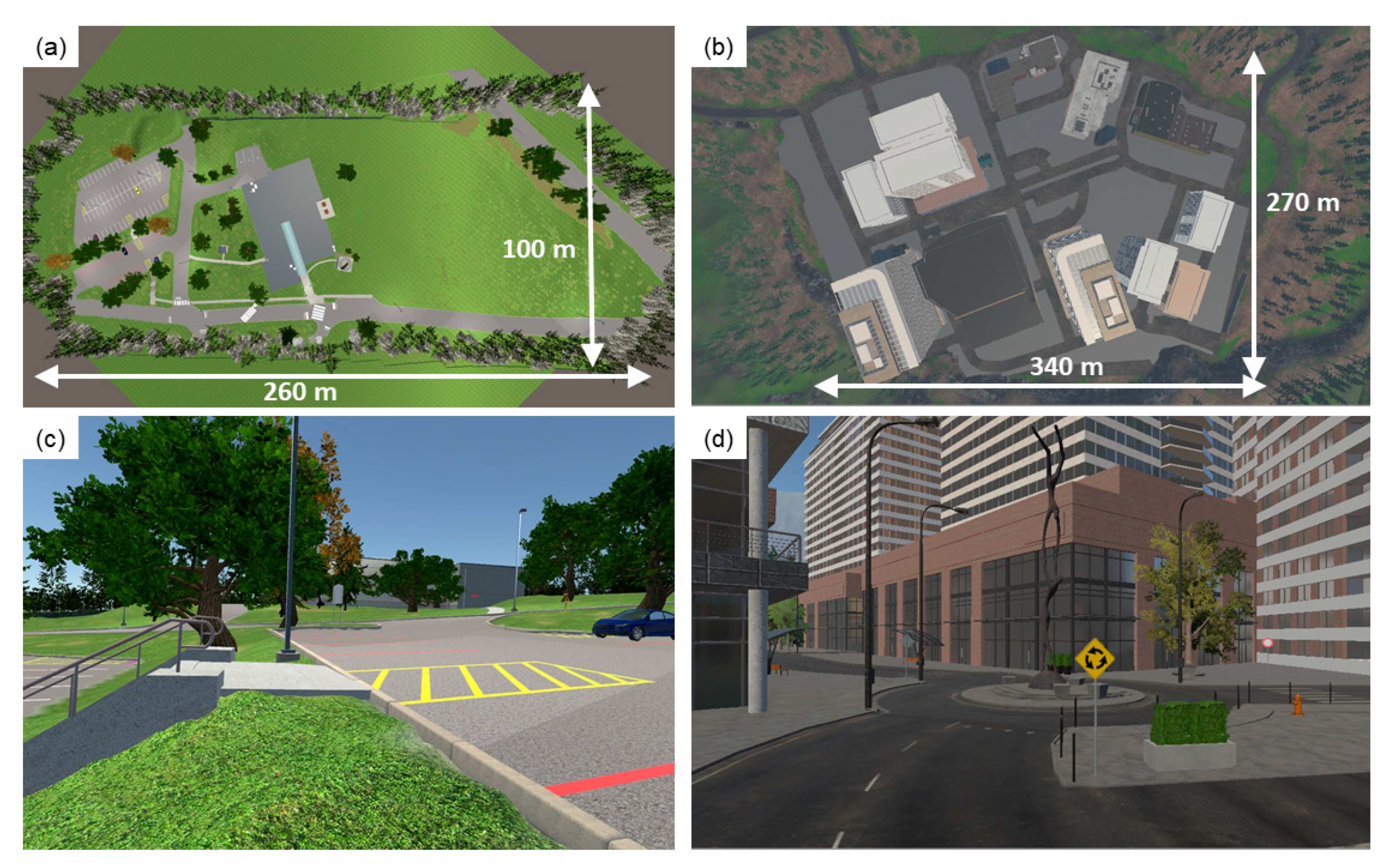

An integral component of any VR software, game, or application is the virtual environment. Through the environment, users navigate, explore, and experience the main software features. In VR, the environment is key to creating a feeling of “being there”. The first environment we created was a part of the Penn State Wilkes-Barre campus, where surveying students often complete their physical labs. This served as a validation of using point cloud technologies, namely aerial photogrammetry from small unmanned aerial systems (sUAS) and terrestrial laser scanning, to create a realistic environment (terrain and objects) [42]. Such technologies capture geometric information at the level of a few centimeters, allowing accurate geometric representation of real scenes in VR. Point cloud technologies have been used to create virtual environments in several disciplines, such as gaming and filmmaking, cultural heritage preservation, and geoscience field trips [43,44,45,46,47,48]. Another essential aspect of virtual environments is texture, which gives a sense of realism. To create realistic textures, we took close-up pictures and applied them as materials to the 3D objects [42].

Figure 4 shows an example of the virtual environment. The second environment available in the current version of the software is Windridge City [49], which Unity offers free of charge and ready to use. We use Windridge City to simulate urban surveys within the software. The software can support several environments and labs, and more environments with different terrains will be added in the future.

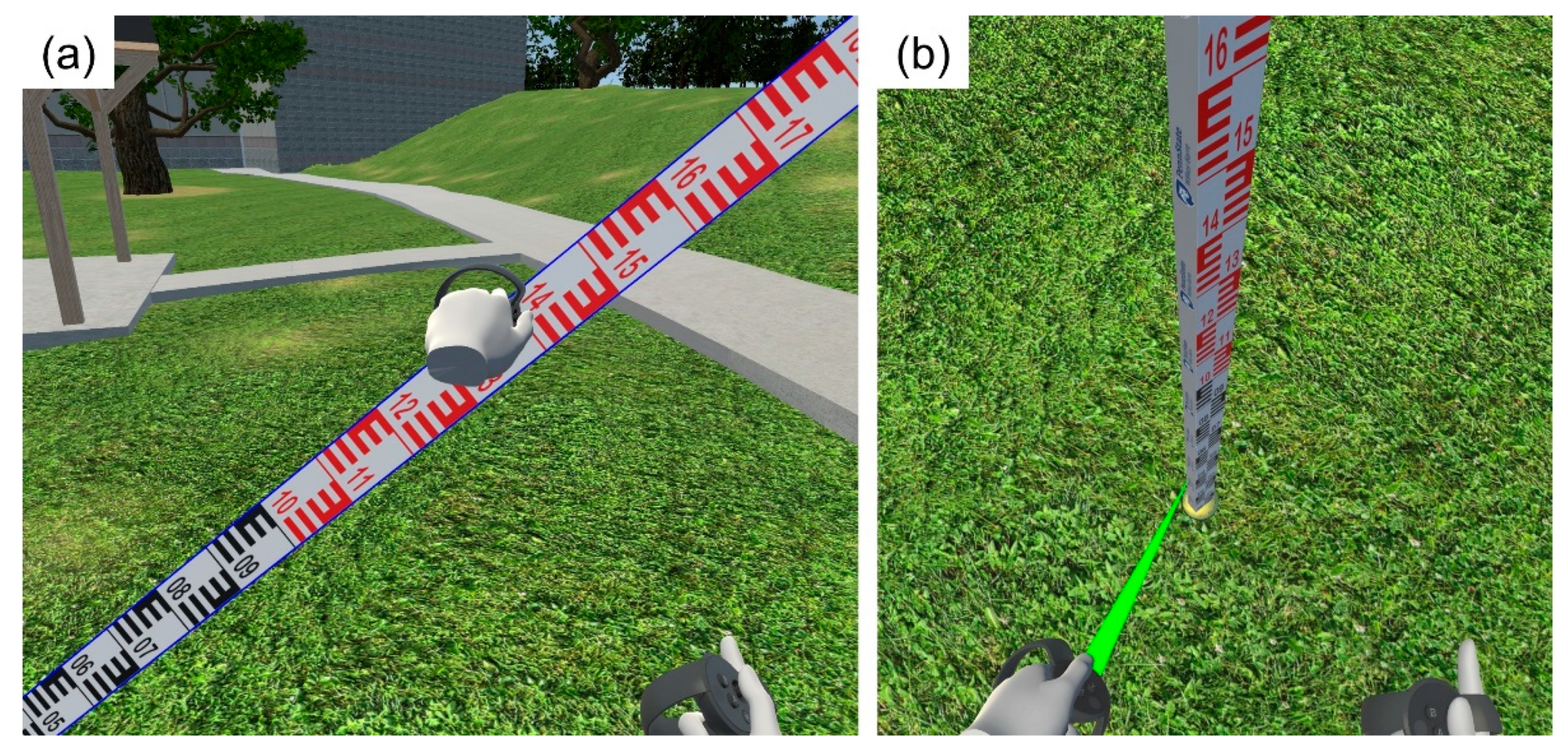

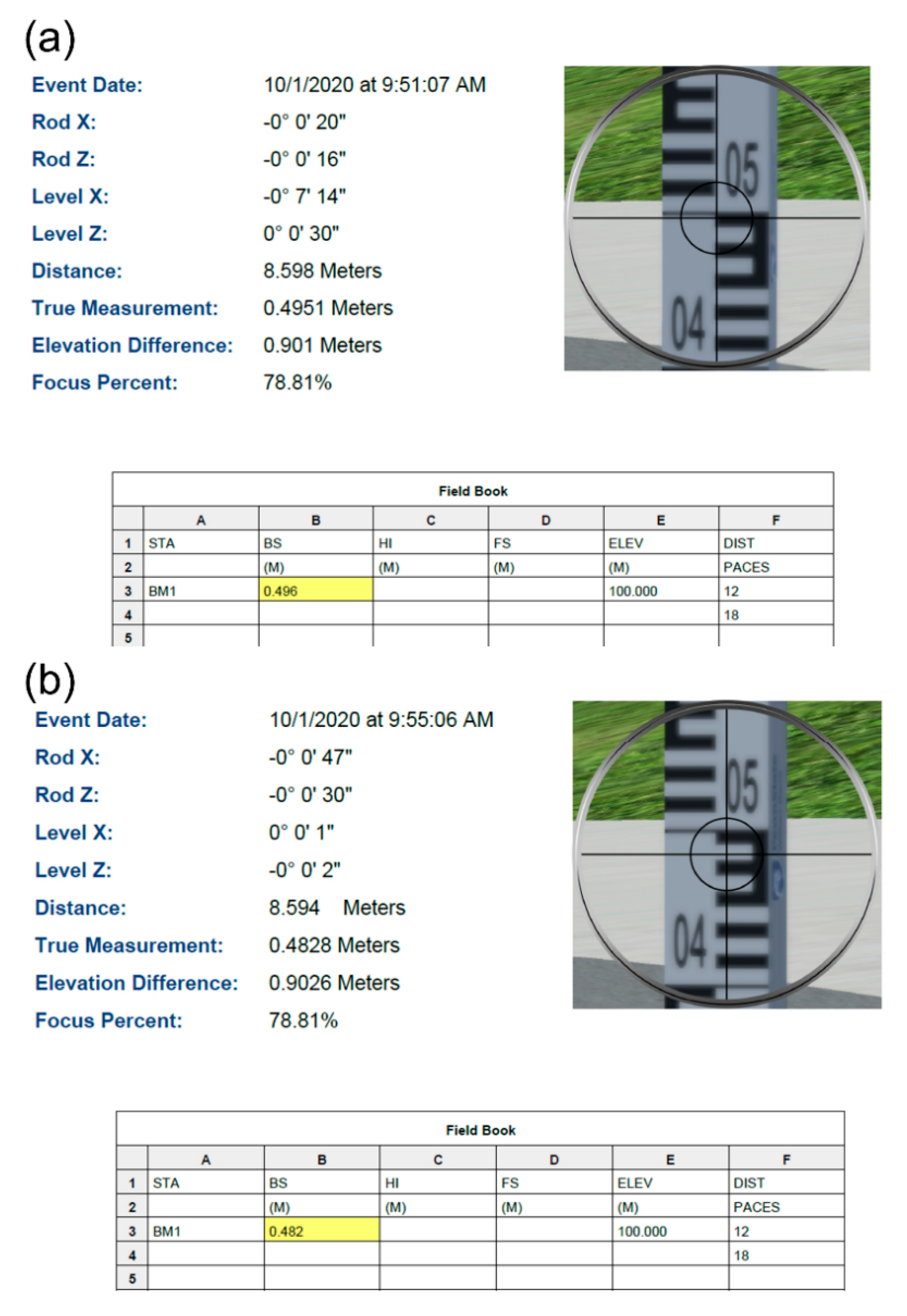

2.3. The Leveling Rod

For the leveling rod, markings were created in Photoshop and turned into a texture in Unity. The user can grab the rod and drop it in any location. In early versions of the software, users had to center the rod on a location (e.g., a surveying monument or turning point) by trial and error, which was difficult, time-consuming, and counterproductive. To simplify this step, the rod snaps precisely onto a monument or turning point when it touches it.
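The snapping behavior described above can be sketched as follows. This is a minimal Python illustration, not the software's Unity implementation: the `SnapTarget` type, the `snap_rod` function, and the 5 cm `contact_radius` are hypothetical, and "touching" is approximated here by a distance threshold rather than by the engine's collision detection.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SnapTarget:
    name: str
    position: Vec3  # top of a monument or turning point (hypothetical layout)


def snap_rod(rod_base: Vec3, targets: List[SnapTarget],
             contact_radius: float = 0.05) -> Tuple[Vec3, Optional[str]]:
    """Return the rod base position, snapped to the nearest target in contact."""
    best: Optional[SnapTarget] = None
    best_dist = contact_radius
    for target in targets:
        d = math.dist(rod_base, target.position)
        if d <= best_dist:  # keep the closest target within the contact radius
            best, best_dist = target, d
    if best is None:
        return rod_base, None  # no contact: the rod stays where it was dropped
    return best.position, best.name
```

Snapping to the closest target, rather than the first one found, avoids ambiguity when two points lie close together.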

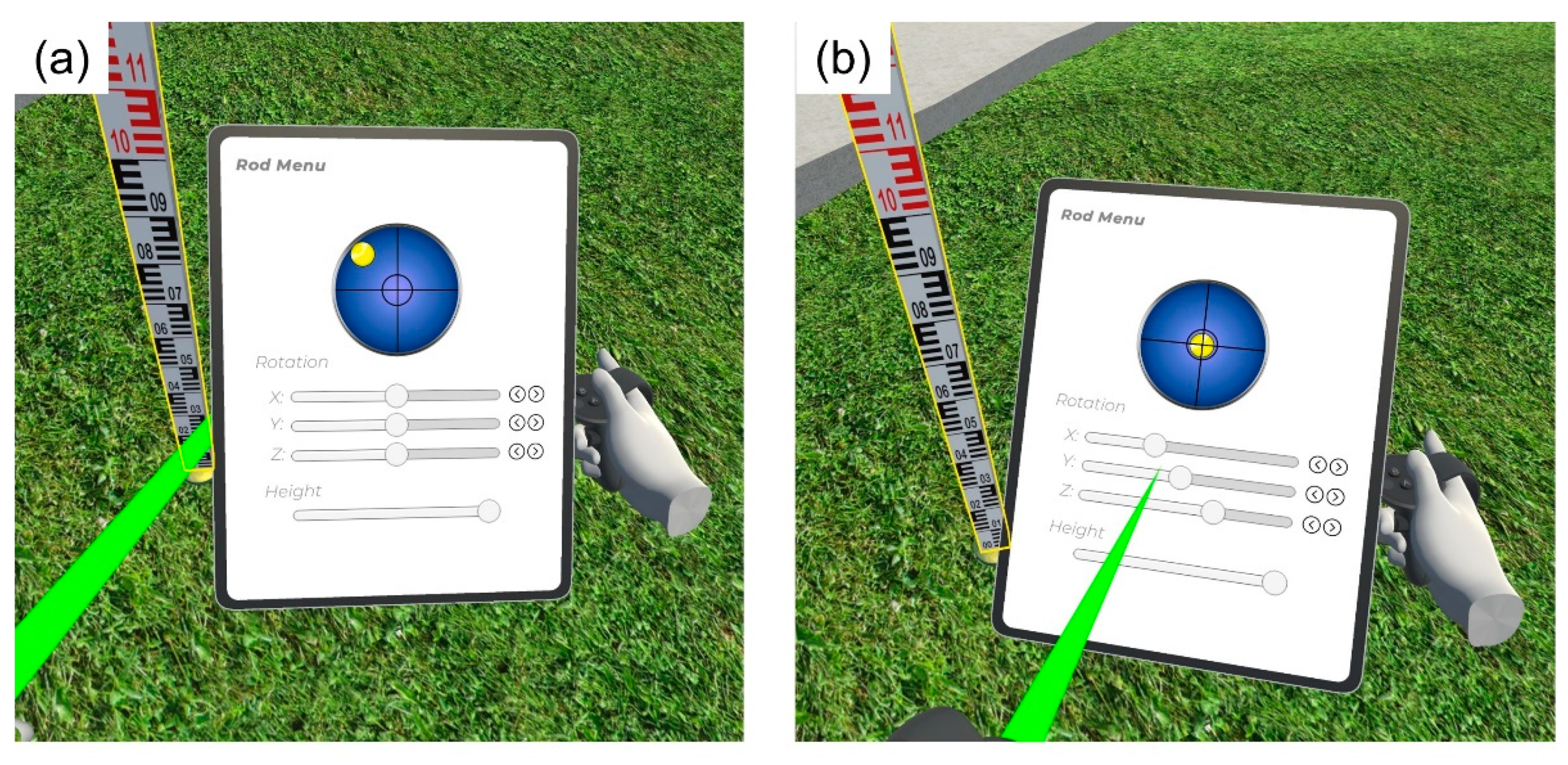

In real life, a surveyor holds the rod, which has a circular bubble attached to it, and tries to level it. Such a hand movement cannot be replicated with high accuracy in VR; therefore, a different approach using the virtual tablet was followed. By selecting the rod, the user sees a menu with three axes on the virtual tablet, along with the leveling state of the rod (Figure 5a). The virtual bubble lies within the circular vial only when the rod is leveled to within 1° (the circular vial ranges from −1° to +1°). There are two controls for each axis. The first is a slider that applies coarse rotations for approximate leveling of the rod. Then, using the arrow buttons, the user applies finer rotations for precise leveling. Each press of a fine arrow changes the rotation by 0.01° (36′′). This allows the rod to be leveled to within 30′′ to 1′ in most cases. Figure 5b shows an example of the rod being leveled. With this workaround, students understand that they have to level the rod before moving to the next lab step, thus maintaining this specific learning objective. Finally, the user can extend and collapse the rod as needed in one-meter intervals up to five meters (see the “Height” slider in Figure 5).
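The arithmetic behind the rod-leveling controls can be checked with a short sketch. The 0.01° step and the ±1° vial come from the text; the function names, and the assumption that the total tilt is the hypotenuse of the two axis tilts (reasonable for small angles), are illustrative only.

```python
import math
from typing import Tuple

FINE_STEP_DEG = 0.01       # one press of a fine arrow (from the text)
VIAL_HALF_RANGE_DEG = 1.0  # rod vial spans -1 to +1 degree (from the text)


def to_arcsec(deg: float) -> float:
    return deg * 3600.0


def bubble_in_vial(tilt_x_deg: float, tilt_z_deg: float) -> bool:
    """Small-angle assumption: total tilt is approximated by hypot of the axis tilts."""
    return math.hypot(tilt_x_deg, tilt_z_deg) <= VIAL_HALF_RANGE_DEG


def fine_level(tilt_deg: float) -> Tuple[int, float]:
    """Presses that bring one axis closest to level, and the residual in arcseconds."""
    presses = round(abs(tilt_deg) / FINE_STEP_DEG)
    residual_deg = abs(tilt_deg) - presses * FINE_STEP_DEG
    return presses, to_arcsec(abs(residual_deg))
```

For example, an axis left at 0.734° by the slider needs 73 presses and ends about 14.4′′ from level. In principle, the residual never exceeds half a step (18′′).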

2.4. The Differential Level Functions

For the differential level instrument, we created a model based on a Topcon AT-G3 instrument. The instrument is attached to the tripod because, in differential leveling, centering is not necessary, and in the field, surveyors often transport the differential level mounted on the tripod. As with the rod, the user can reach towards any tripod leg, grab it, and move the tripod and instrument to a different location.

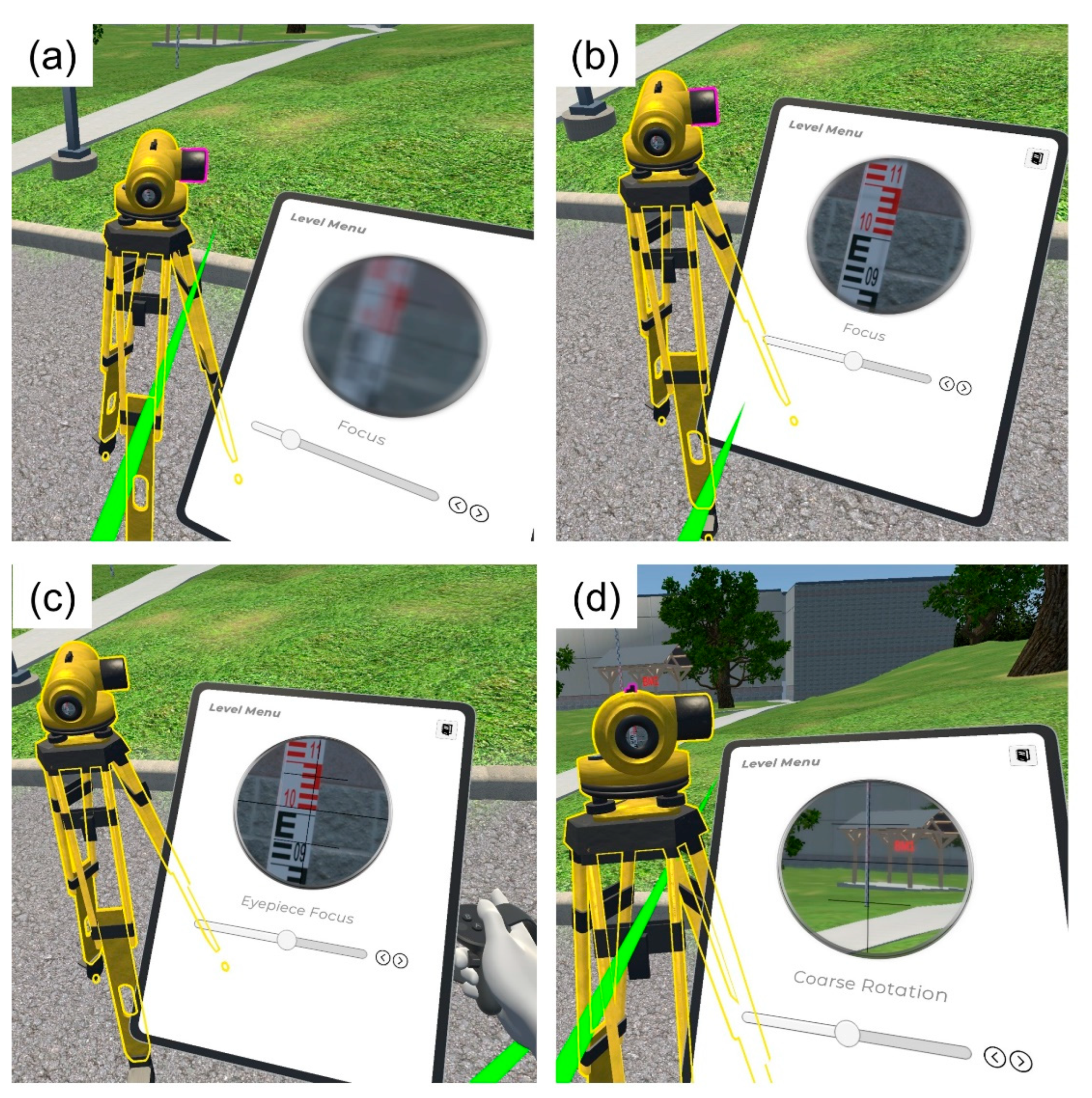

Most components of the tripod and level are selectable and have separate functionality: the tripod legs, tribrach screws, telescope, peep sight, focus knob, and eyepiece. As with the rod, these individual components are controlled via the virtual tablet. Instrument focus is simulated by blurring the image with a distance-dependent component, with a focusing range of 0 to 150 m.
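One way to model the distance-dependent blur is sketched below. This is an assumption, not the authors' documented model: it borrows the thin-lens idea that defocus grows with the difference between the reciprocals of the focus distance and the target distance (in diopters). `blur_radius`, the pixel scale, the blur cap, and the 0.5 m near limit are hypothetical; only the 150 m upper focusing limit comes from the text.

```python
MIN_FOCUS_M = 0.5    # hypothetical near limit (avoids division by zero)
MAX_FOCUS_M = 150.0  # upper focusing limit stated in the text


def blur_radius(focus_m: float, target_m: float,
                px_per_diopter: float = 20.0, max_blur_px: float = 12.0) -> float:
    """Blur radius (pixels) for a target at target_m when focused at focus_m."""
    f = min(max(focus_m, MIN_FOCUS_M), MAX_FOCUS_M)  # clamp to the focusing range
    t = max(target_m, MIN_FOCUS_M)
    defocus = abs(1.0 / f - 1.0 / t)  # vergence mismatch in diopters
    return min(max_blur_px, px_per_diopter * defocus)
```

A reciprocal-distance model means that focus errors matter much more for nearby rods than for distant ones, which is also how a real telescope behaves.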

Figure 6a,b show the instrument view before and after focusing. Note that, in

Figure 6b, the crosshair is still blurry. The user needs to select the eyepiece and focus the crosshair (

Figure 6c). Then, by selecting the peep sight, the user has a coarse rotation of the instrument (

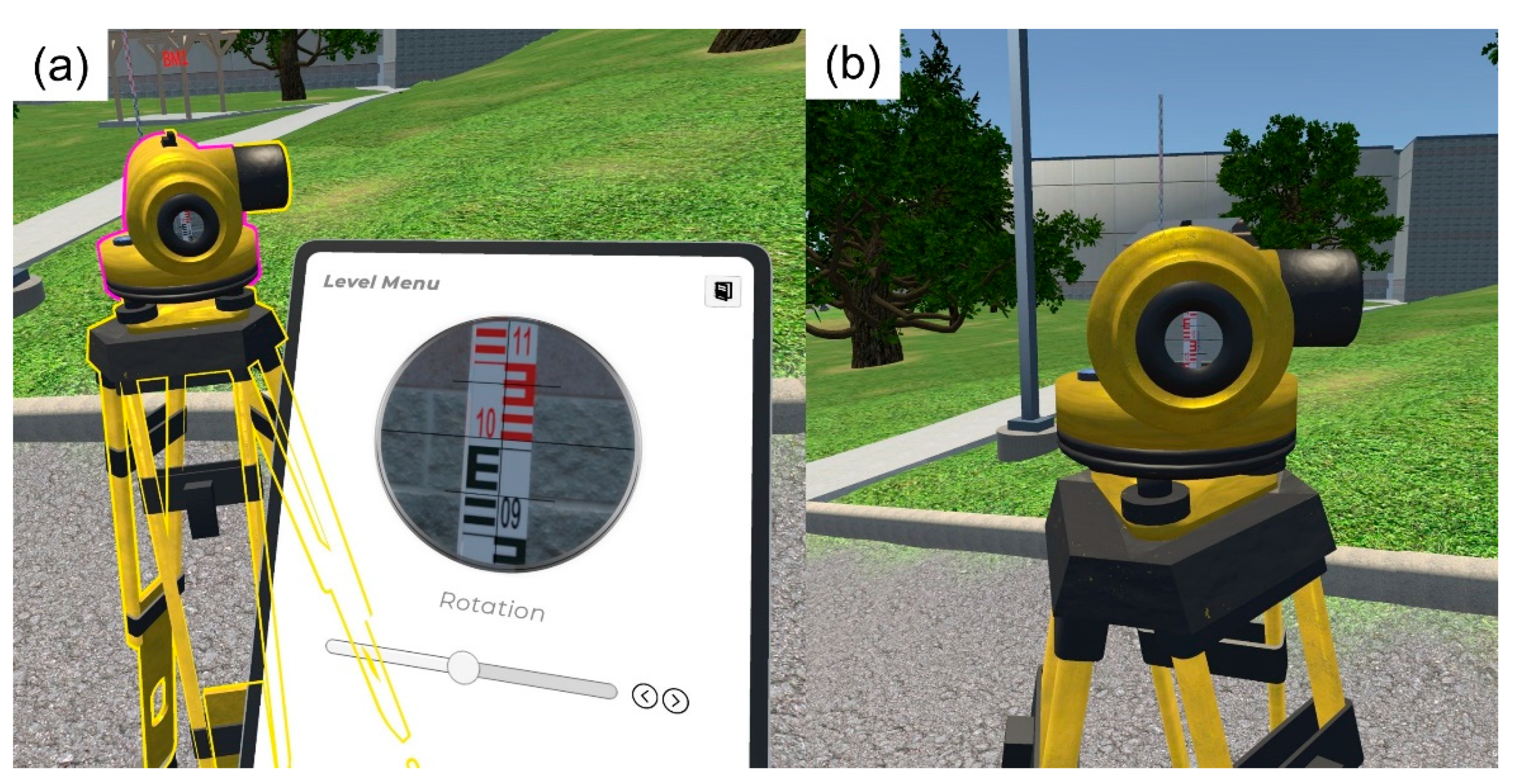

Figure 6d), which allows the user to approximately aim towards the rod. The field of view of this coarse rotation is 20°. The student can then select the main body of the instrument, which brings the fine rotation view and allows for precise aiming (

Figure 7a). The field of view in the fine view is 1°30′, similar to the Topcon instrument used as the model. The user can go towards the instrument and lean towards the telescope to make a measurement (

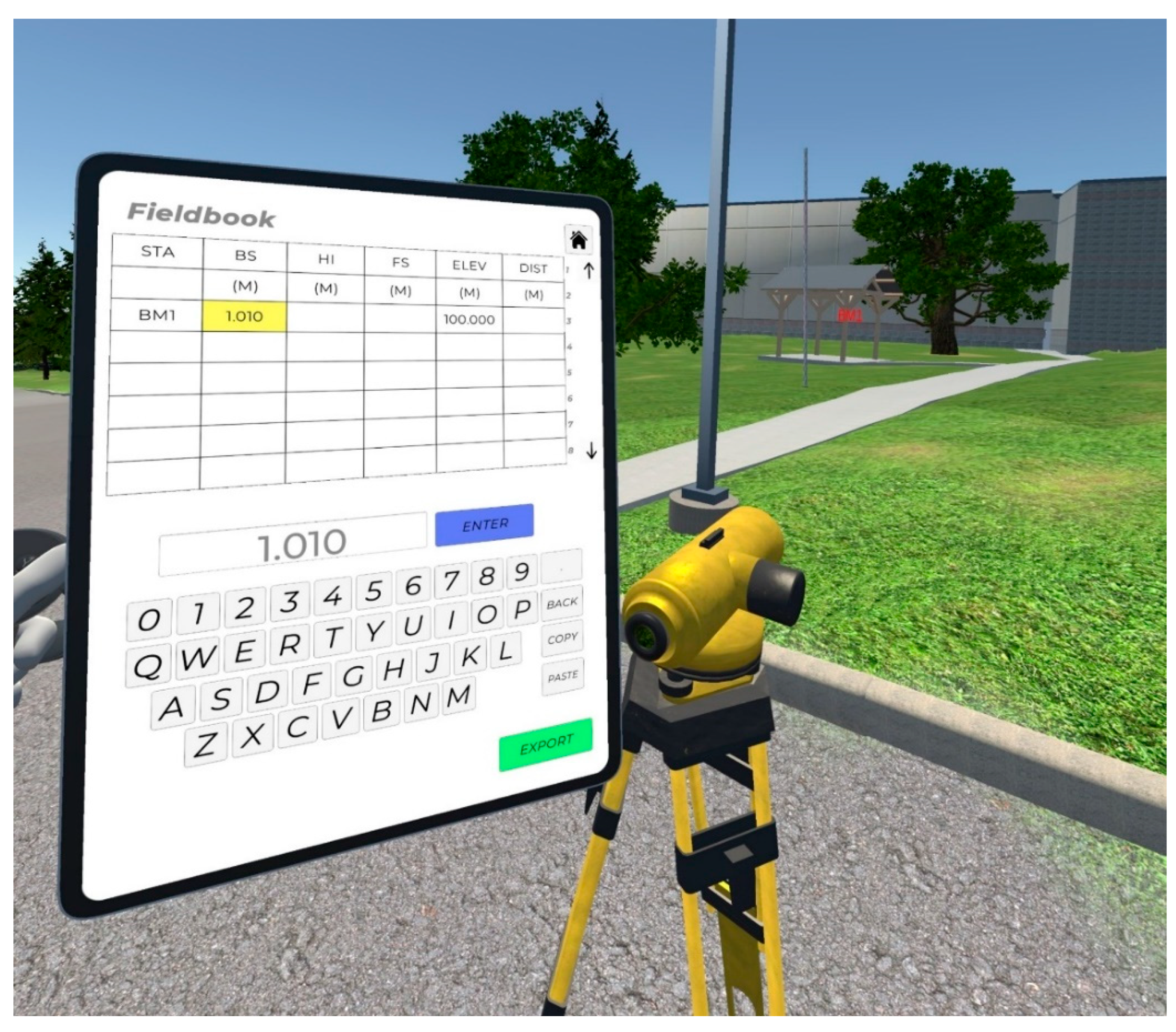

Figure 7b). However, reading the rod by leaning towards the instrument can be difficult in VR because of clipping (when the camera intersects the object); therefore, users can make observations using the projected telescope view on the virtual tablet. For recording measurements, students have a virtual fieldbook. The virtual fieldbook is set up like a typical spreadsheet with numerous cells that are selectable (

Figure 8). When they are finished with a lab, they are able to press an export button (see bottom right corner in

Figure 8) and have the entire fieldbook exported in CSV format for use elsewhere.
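To show what an exported leveling fieldbook might contain, the sketch below reduces differential leveling notes with the standard height-of-instrument method (HI = elevation + BS, elevation = HI − FS) and writes them with Python's `csv` module. The column layout, function names, and sample readings are hypothetical; the software's actual fieldbook format may differ.

```python
import csv
import io
from typing import Dict, List, Optional, Tuple

# (station, backsight, foresight); None where no reading was taken
Reading = Tuple[str, Optional[float], Optional[float]]
FIELDS = ["Station", "BS", "HI", "FS", "Elevation"]


def reduce_level_notes(start_elev: float, readings: List[Reading]) -> List[Dict]:
    """Height-of-instrument reduction: HI = Elev + BS, Elev = HI - FS."""
    rows: List[Dict] = []
    elev, hi = start_elev, None
    for station, bs, fs in readings:
        if fs is not None:
            if hi is None:
                raise ValueError(f"foresight on {station} without a preceding backsight")
            elev = hi - fs
        hi = elev + bs if bs is not None else None
        rows.append({"Station": station, "BS": bs,
                     "HI": None if hi is None else round(hi, 3),
                     "FS": fs, "Elevation": round(elev, 3)})
    return rows


def fieldbook_to_csv(rows: List[Dict]) -> str:
    """Serialize fieldbook rows to CSV text, leaving empty cells blank."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=FIELDS)
    writer.writeheader()
    for row in rows:
        writer.writerow({k: "" if v is None else v for k, v in row.items()})
    return buf.getvalue()


notes = [("BM1", 1.234, None), ("TP1", 1.500, 0.987), ("BM2", None, 1.100)]
rows = reduce_level_notes(100.000, notes)
print(fieldbook_to_csv(rows))
```

Rounding happens only in the stored rows, so the running height of instrument keeps full precision between setups.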

The tripod legs and tribrach screws control the leveling of the instrument. Because the orientation of the instrument depends on both the leg lengths and the terrain undulations beneath the legs, a technique had to be developed to position the entire device accordingly. The following subsection describes in depth the efficient technique we developed to calculate the proper position and rotation of the differential level instrument.

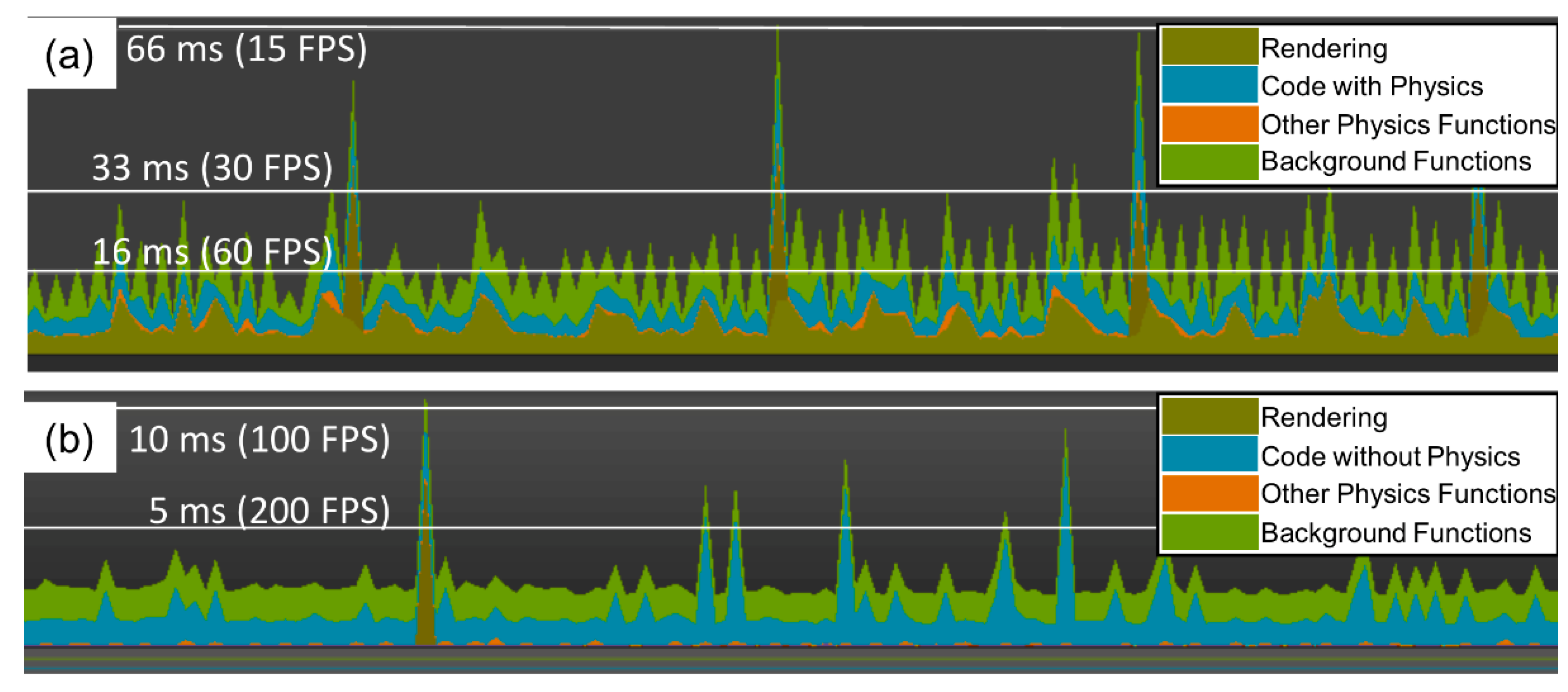

2.5. Efficient Tripod Positioning

When simulating 3D objects in VR, low-polygon objects are simple to render, while complex objects such as water are much more resource-intensive to simulate. A naive approach is to fully simulate these objects as physical entities (with mass, velocity, and position parameters) in a physics engine. Such physical simulation consumes vast amounts of computing resources, and the cost grows exponentially as more complexity is introduced [50,51]. This either slows down the 3D game or requires a powerful computer, especially when an object is tracked in real time [50,52]. Tracking a complex 3D object requires finding its position and rotation with respect to the reference frame of the virtual world. These become increasingly difficult to calculate as more complex shapes are placed on more complex surfaces. The process can be simplified dramatically if physical simulation is eliminated altogether. We have developed a novel technique for positioning complex objects on complex surfaces without large computational overhead. In what follows, we assume objects without special physical characteristics, such as bounciness or slipperiness, for which physical simulation would be unavoidable. We seek to implement this process while maintaining a smooth and immersive experience to retain the benefits of VR [6,7,8,9].

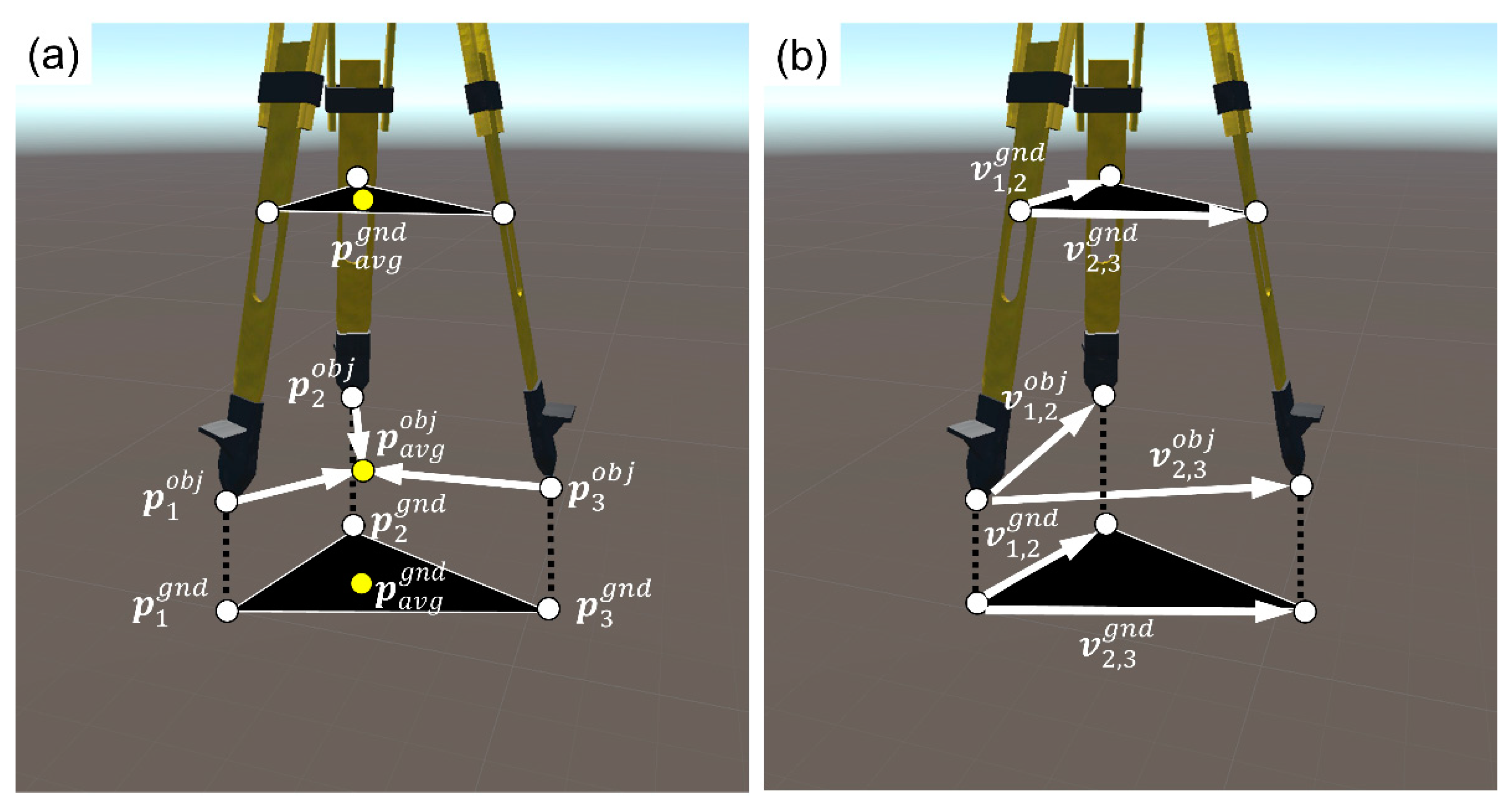

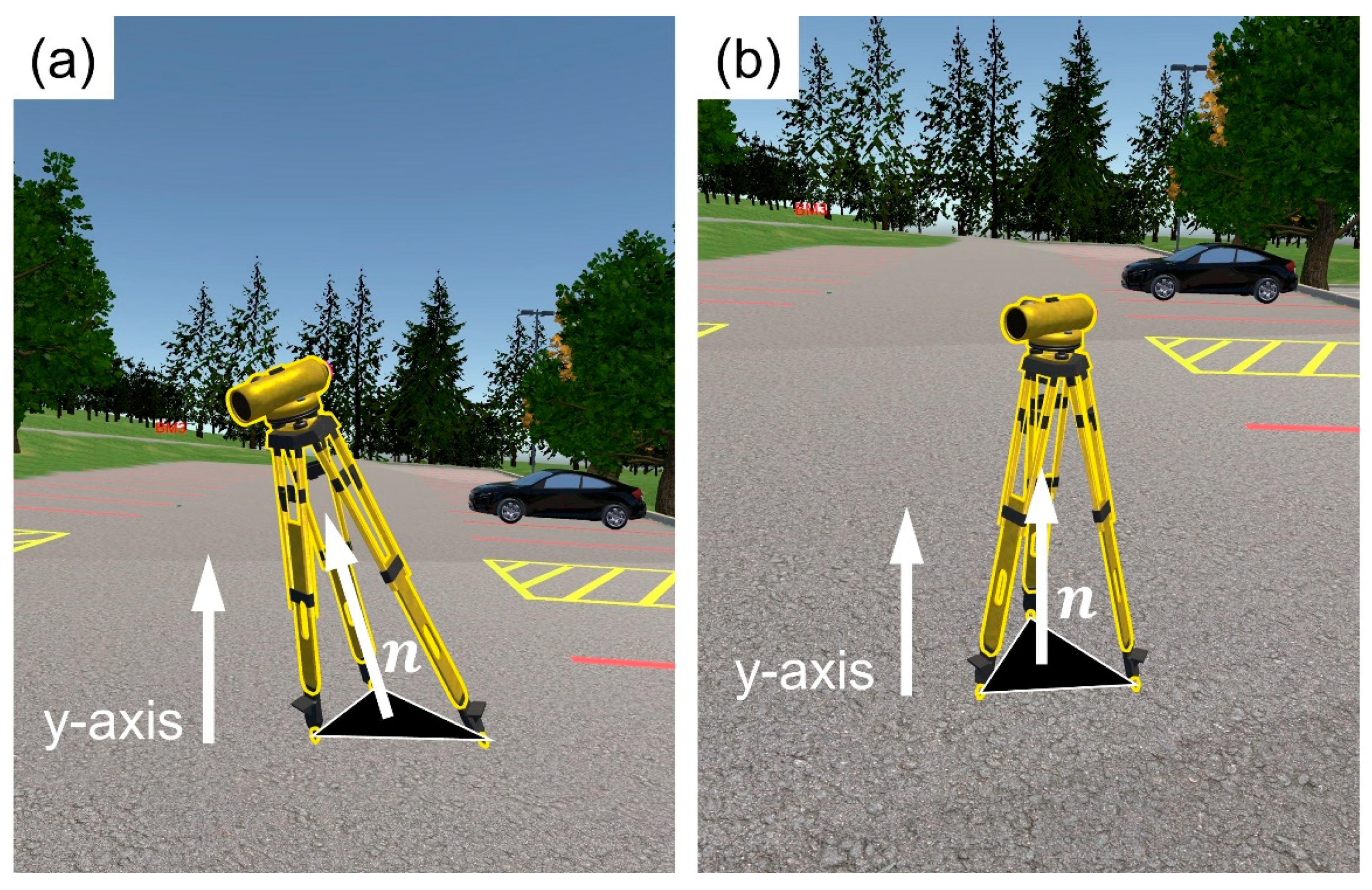

Our technique was originally developed for a tripod with legs of varying length; as a result, it works on any object with varying-length legs or support points. The technique can be extended to objects with any finite number of support points, on any type of varying terrain. The process can be broken down into two main phases: the positioning phase and the rotation phase. To position the object, it is necessary to know the endpoint position of each support, for instance, the positions of the ends of the tripod legs. Given these points, we can find the average point of the supports, where the object should rest (Figure 9a). We also need the corresponding ground points below the tripod. These are calculated from the intersection of the tripod legs with the ground (when the tripod intersects the ground) or, when the tripod is held in the air, from the apparent intersection with the ground of the leg endpoints extended along the vertical direction (Figure 9a). The average three-dimensional vectors are found as follows:

$$\bar{\mathbf{p}}_o = \frac{1}{n}\sum_{i=1}^{n}\begin{bmatrix} x_i \\ y_i \\ z_i \end{bmatrix} \tag{1}$$

$$\bar{\mathbf{p}}_g = \frac{1}{n}\sum_{i=1}^{n}\begin{bmatrix} x_{g,i} \\ y_{g,i} \\ z_{g,i} \end{bmatrix} \tag{2}$$

where $\bar{\mathbf{p}}_o$ is the average three-dimensional vector of the object (tripod) calculated at the support points (endpoints of the tripod legs); $n$ is the number of support points; and $x_i$, $y_i$, and $z_i$ are the coordinates of the $i$th support point. $\bar{\mathbf{p}}_g$ is the average three-dimensional vector of the ground, calculated from the intersections of the support points with the ground, or from their apparent intersections when the tripod is held in the air. Terms $x_{g,i}$, $y_{g,i}$, and $z_{g,i}$ are the corresponding ground coordinates of the $i$th support point.

By aligning $\bar{\mathbf{p}}_o$ with $\bar{\mathbf{p}}_g$, we can position the object (tripod) on the ground. The next step is to align the normals of the planes formed by the support points and by their intersections with the ground. We first compute the object normal at the object's average point, perpendicular to the hyperplane of the support points. Similarly, we compute the ground normal at the ground's average point, perpendicular to the hyperplane through the intersections of the support points with the ground. A hyperplane is formed simply by taking two vectors between the endpoints, as shown in Figure 10b. The vectors of the object hyperplane are found using the following formulas:

$$\mathbf{a}_o = \mathbf{p}_2 - \mathbf{p}_1 \tag{3}$$

$$\mathbf{b}_o = \mathbf{p}_3 - \mathbf{p}_1 \tag{4}$$

where $\mathbf{a}_o$ and $\mathbf{b}_o$ are the hyperplane vectors for the object and $\mathbf{p}_1$, $\mathbf{p}_2$, and $\mathbf{p}_3$ are the position vectors of the endpoints. For the ground hyperplane, we similarly have the following:

$$\mathbf{a}_g = \mathbf{g}_2 - \mathbf{g}_1 \tag{5}$$

$$\mathbf{b}_g = \mathbf{g}_3 - \mathbf{g}_1 \tag{6}$$

where $\mathbf{a}_g$ and $\mathbf{b}_g$ are the hyperplane vectors for the ground and $\mathbf{g}_1$, $\mathbf{g}_2$, and $\mathbf{g}_3$ are the position vectors of the points formed by the intersection of the support endpoints with the ground.

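We obtain the normal of each hyperplane by taking the cross product of its two vectors, which gives the object normal and the ground normal:

$$\mathbf{n}_o = \mathbf{a}_o \times \mathbf{b}_o \tag{7}$$

$$\mathbf{n}_g = \mathbf{a}_g \times \mathbf{b}_g \tag{8}$$

where $\mathbf{n}_o$ is the object normal and $\mathbf{n}_g$ is the ground normal. If the support points are at equal height on flat terrain, the object normal points directly in the up direction. If the support points move (e.g., when a tripod leg is extended), the object normal moves as well. The tripod can therefore be rotated simply by aligning the object and ground normal vectors. The rotation angles are found as follows:

$$\boldsymbol{\theta} = \begin{bmatrix}\theta_x & \theta_y & \theta_z\end{bmatrix}^{\mathsf{T}} = \operatorname{euler}\left(\mathbf{R}_{\mathbf{n}_o \rightarrow \mathbf{n}_g}\right) \tag{9}$$

where $\boldsymbol{\theta}$ is a vector of the three rotation angles and $\mathbf{R}_{\mathbf{n}_o \rightarrow \mathbf{n}_g}$ is the rotation that takes $\mathbf{n}_o$ onto $\mathbf{n}_g$. When the object is rotated by the x-axis and z-axis angles (the y-axis rotation, about the up-down direction, is discarded), the support points become aligned with the ground, which completes the process.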

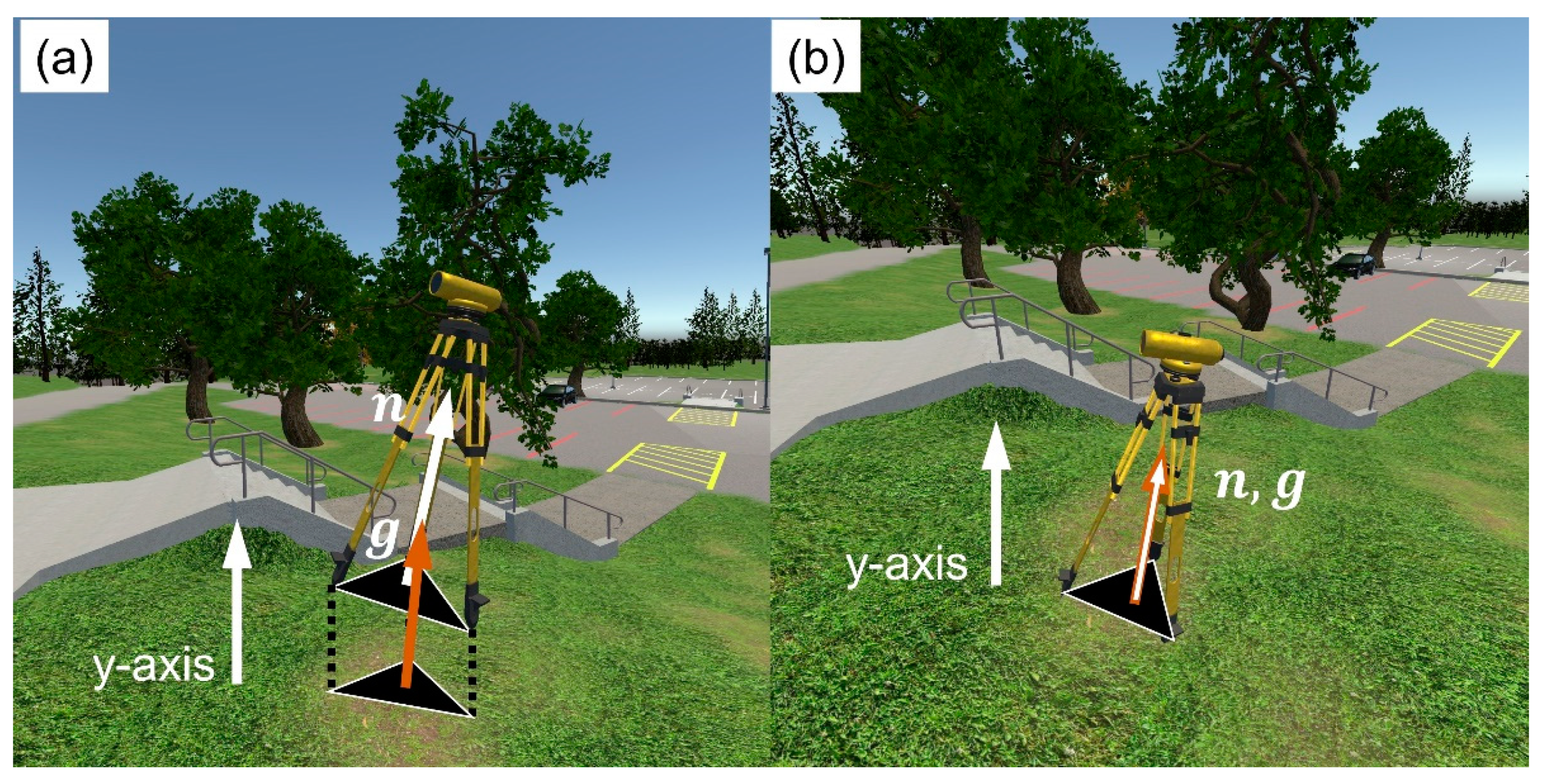

Figure 10a shows an example of the object and ground normals when the tripod is held in the air, and Figure 10b shows that the two normals align when the user drops the tripod.
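The two phases can be sketched in plain Python as below, using a y-up frame as in Unity. The ground points are obtained by dropping each foot vertically onto a terrain function `height(x, z)`, a hypothetical stand-in for what an engine would typically do with a downward raycast. For the rotation phase, the sketch does not build the full rotation between the normals. Instead, it takes the difference of each normal's tilt about the x- and z-axes. This is a small-tilt simplification chosen for illustration and is not necessarily the authors' formulation. All function names are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]  # (x, y, z) with y pointing up, as in Unity


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def average(points: List[Vec3]) -> Vec3:
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def plane_normal(p1: Vec3, p2: Vec3, p3: Vec3) -> Vec3:
    """Cross product of two in-plane vectors, flipped so that it points up (+y)."""
    n = cross(sub(p2, p1), sub(p3, p1))
    return n if n[1] >= 0 else (-n[0], -n[1], -n[2])


def tilt_angles(n: Vec3) -> Tuple[float, float]:
    """Angles (degrees) by which +y must be rotated about x and about z to reach n."""
    return (math.degrees(math.atan2(n[2], n[1])),
            math.degrees(math.atan2(-n[0], n[1])))


def place_on_terrain(feet: List[Vec3], height: Callable[[float, float], float]):
    """Return (translation, (rot_x_deg, rot_z_deg)) that rests the feet on the terrain."""
    # Ground points: drop each foot vertically onto the terrain surface.
    ground = [(x, height(x, z), z) for (x, _, z) in feet]
    # Positioning phase: move the object's average point onto the ground's average point.
    translation = sub(average(ground), average(feet))
    # Rotation phase: compare plane normals built from the first three supports.
    ox, oz = tilt_angles(plane_normal(*feet[:3]))
    gx, gz = tilt_angles(plane_normal(*ground[:3]))
    # Rotation about y (heading) is ignored; per-axis differences assume small tilts.
    return translation, (gx - ox, gz - oz)
```

For a tripod with equal legs held above a slope that rises 0.1 m per meter along x, the sketch returns a downward translation and a rotation of about 5.71° about the z-axis. The cost is a few vector operations per frame and involves no physics simulation.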

2.6. Leveling of the Differential Level

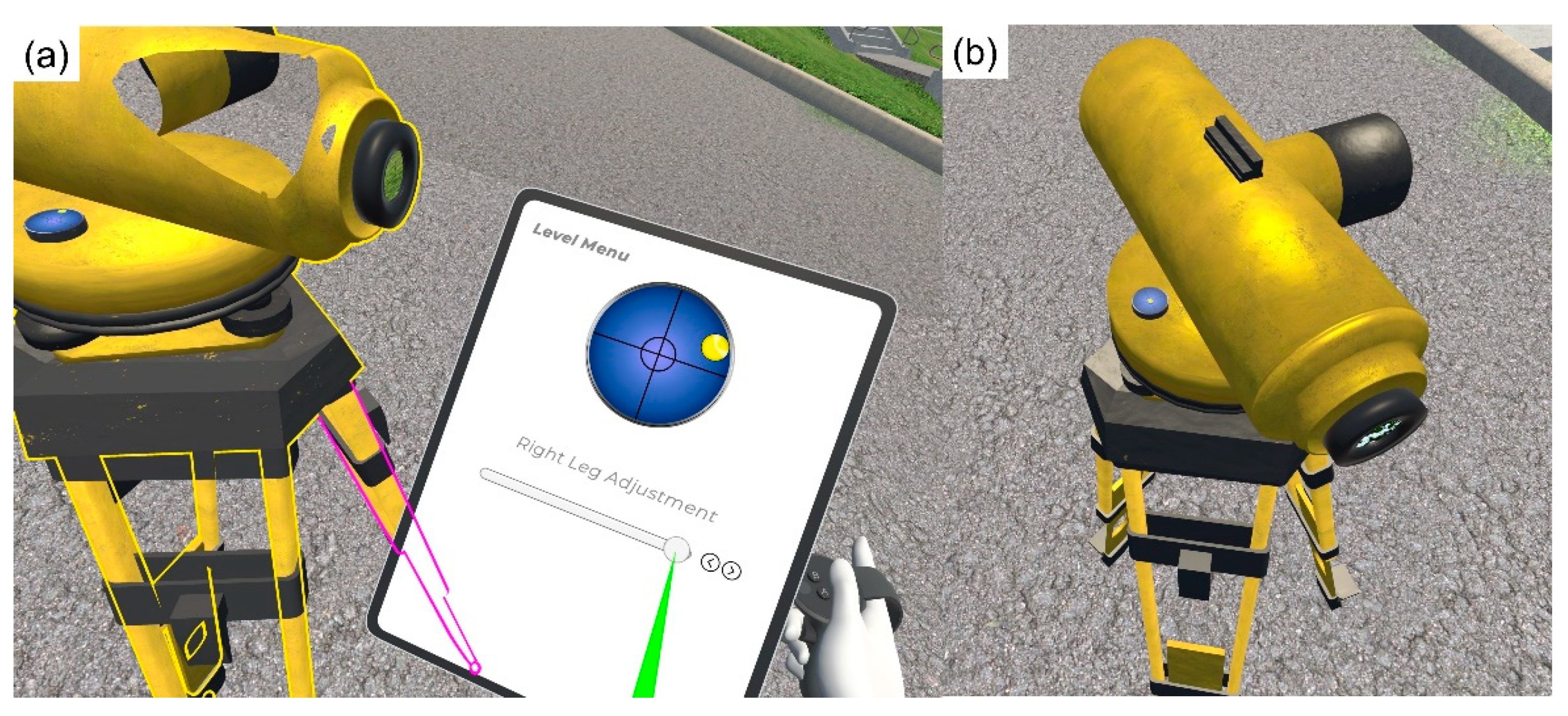

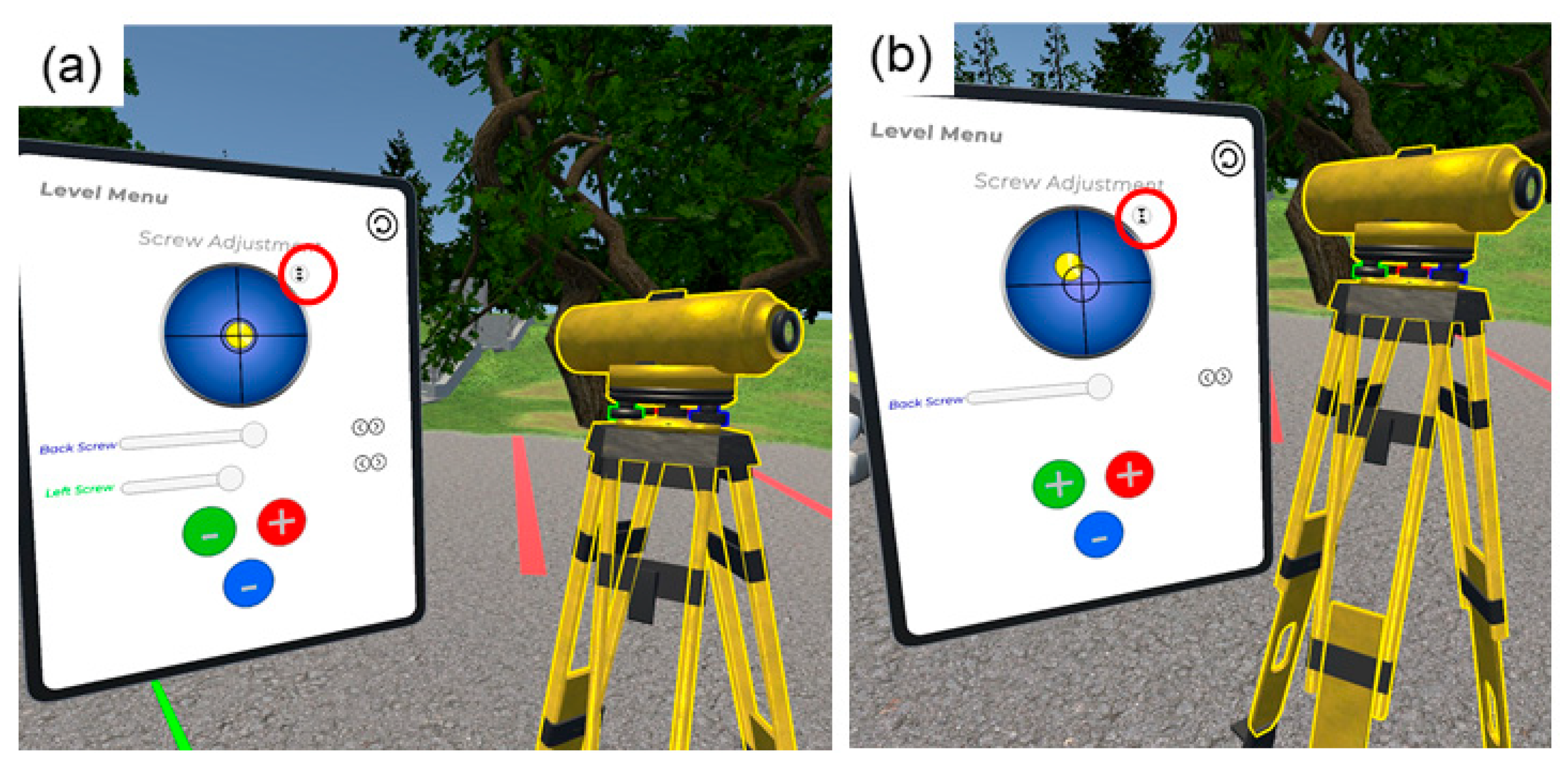

2.6.1. Virtual Circular Bubble

When the user adjusts the tripod legs and screws, it is important to provide them with the same kind of real-time feedback as in the field via a virtual circular bubble (Figure 11). The bubble is controlled directly by the rotation of the telescope's vertical axis with respect to the up direction (y-axis in Unity). In the physical world, the up direction would correspond to a plumb line. To provide circular bubble feedback to the user, we take the x-axis and z-axis rotation values and map them onto a circle using the square-to-circle formulas:

$$x_b = \theta_x\sqrt{1 - \frac{\theta_z^2}{2}} \tag{10}$$

$$y_b = \theta_z\sqrt{1 - \frac{\theta_x^2}{2}} \tag{11}$$

where $x_b$ and $y_b$ are the corresponding Cartesian coordinates used to plot the bubble in the circle of Figure 11, and $\theta_x$ and $\theta_z$ are the rotation angles with respect to the x-axis and z-axis, respectively. The local circular bubble space is rotated when the instrument is rotated about the y-axis, which gives an accurate representation of the rotation of the equipment and bubble system, much like in the real world (Figure 11). The $\theta_x$ and $\theta_z$ rotation angles include the rough rotation of the tripod (coarse leveling) and the precise rotation of the tribrach screws (precise leveling) as follows:

$$\theta_x = \theta_x^{t} + \theta_x^{s} \tag{12}$$

$$\theta_z = \theta_z^{t} + \theta_z^{s} \tag{13}$$

where $\theta_x^{t}$ and $\theta_z^{t}$ are the rotation angles of the object normal (tripod movement) when the tripod is rotated about the y-axis, and $\theta_x^{s}$ and $\theta_z^{s}$ are the rotation angles of the telescope (tribrach movement) when the instrument is rotated about the y-axis.
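The bubble display can be sketched as below. The sketch assumes the elliptical-grid square-to-disc mapping, which is defined for inputs between −1 and 1. It therefore scales the tilt by the vial range (±3° for the instrument's coarse vial, as stated for rough leveling) and clamps the result. The scaling and clamping are assumptions, as are the names `combined_tilt` and `bubble_xy`. The final step rotates the bubble frame with the instrument's heading, as the text describes.

```python
import math
from typing import Tuple


def combined_tilt(tripod_deg: float, screw_deg: float) -> float:
    """Total tilt on one axis: coarse tripod tilt plus fine tribrach tilt."""
    return tripod_deg + screw_deg


def bubble_xy(theta_x_deg: float, theta_z_deg: float,
              vial_range_deg: float = 3.0, heading_deg: float = 0.0) -> Tuple[float, float]:
    """Bubble position in a unit-radius vial for the given axis tilts."""
    # Assumption: scale by the vial range and clamp, so a badly tilted
    # instrument pins the bubble at the vial rim.
    u = max(-1.0, min(1.0, theta_x_deg / vial_range_deg))
    v = max(-1.0, min(1.0, theta_z_deg / vial_range_deg))
    # Elliptical-grid mapping: the square [-1, 1]^2 maps onto the unit disc.
    x = u * math.sqrt(1.0 - v * v / 2.0)
    y = v * math.sqrt(1.0 - u * u / 2.0)
    # Rotate the local bubble frame with the instrument's rotation about y.
    h = math.radians(heading_deg)
    return (x * math.cos(h) - y * math.sin(h), x * math.sin(h) + y * math.cos(h))
```

With this mapping, a tilt at the corner of the square (3° on both axes) lands exactly on the vial rim rather than outside it, which is the reason for using a square-to-circle mapping instead of plotting the two angles directly.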

2.6.2. Rough Leveling (Tripod Legs)

Rough leveling of the instrument begins when the user adjusts the tripod legs, which roughly aligns the object normal with the “up” direction ($\theta_x^{t}$ and $\theta_z^{t}$ rotation angles in Equations (12) and (13)). The tribrach screws are then used to level the instrument precisely, aligning the telescope's vertical axis, which is perpendicular to its collimation axis, with the up direction (y-axis). The user can select each individual tripod leg (e.g., Figure 11). Using the sliding bars and fine control arrows (Figure 11a), the user can extend or collapse each leg by about 50 cm to achieve approximate (coarse) leveling. The coarse circular vial depicts an area ranging from −3° to +3°, and each press of a fine arrow button changes the rotation by 1′. Therefore, after this step, the instrument will be within a few degrees to a few minutes of level.

2.6.3. Precise Leveling (Screw Rotation)

The tribrach screws create a fine rotation between the telescope's vertical axis and the up direction. In the physical world, rotating a screw physically changes its height. In VR, when the user rotates the screws, the telescope is rotated relative to the screws. The screws' “pseudo heights” vary from −1 to 1 (unitless), and a range of rotation values is assigned to them. In this implementation, −1 corresponds to a rotation of −3° and 1 to a rotation of +3°; changing this correspondence increases or decreases the allowable tilt of the telescope. We map the three “pseudo height” values of the screws to the x- and z-axis rotations of the telescope. Recall that, in Unity, the y-axis corresponds to the up axis. The left screw provides the positive x-axis rotation and half of the negative z-axis rotation (assuming the front faces the positive x-axis direction), the right screw provides the negative x-axis rotation and half of the negative z-axis rotation, and the back screw provides the positive z-axis rotation. The formulas for the rotation values are as follows:

$$\theta_x^{s} = k\,\frac{h_l - h_r}{2} \tag{14}$$

$$\theta_z^{s} = k\left(h_b - \frac{h_l + h_r}{2}\right) \tag{15}$$

where $\theta_x^{s}$ is the x-axis rotation of the telescope in degrees, $\theta_z^{s}$ is the z-axis rotation of the telescope in degrees, $h_b$ is the back screw height, $h_l$ is the left screw height, $h_r$ is the right screw height, and $k = 3^\circ$ is the rotation assigned to a pseudo height of 1. For example, in our implementation, if the left screw is moved by 0.5 and the right screw remains at 0, then $(h_l - h_r)/2$ becomes 0.25, which corresponds to $\theta_x^{s} = 0.75°$. With the back screw also at 0, $h_b - (h_l + h_r)/2$ becomes −0.25, which corresponds to $\theta_z^{s} = -0.75°$. The combination of screw and leg adjustments by the user leads to a leveled instrument, as in real-life surveying.
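The screw-to-tilt mapping can be written as a small function. The sketch assumes θx = k(hl − hr)/2 and θz = k(hb − (hl + hr)/2) with k = 3°, a form that follows the left/right/back screw roles described above and reproduces the worked example (left screw at 0.5 gives 0.75° and −0.75°). `telescope_tilt` is a hypothetical name, and the range check is an added safeguard rather than documented behavior.

```python
from typing import Tuple

MAX_TILT_DEG = 3.0  # pseudo height of +/-1 maps to +/-3 degrees (from the text)


def telescope_tilt(h_back: float, h_left: float, h_right: float,
                   k_deg: float = MAX_TILT_DEG) -> Tuple[float, float]:
    """Map the three screw pseudo heights to telescope x- and z-axis tilt (degrees)."""
    for h in (h_back, h_left, h_right):
        if not -1.0 <= h <= 1.0:
            raise ValueError("screw pseudo heights must lie in [-1, 1]")
    theta_x = k_deg * (h_left - h_right) / 2.0             # left (+x) vs right (-x)
    theta_z = k_deg * (h_back - (h_left + h_right) / 2.0)  # back (+z); left/right each -z/2
    return theta_x, theta_z
```

One consequence of this form is that raising all three screws by the same amount produces no tilt, which mirrors a physical tribrach.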

4. Conclusions

We presented a new VR simulation for surveying engineering activities. Specifically, we demonstrated its efficacy in the field of surveying by conducting academic labs in VR. The leveling simulation is immersive and interactive, giving students a first-person experience. Students can conduct virtual leveling much like in the physical world. They can grab, move, center, and level a leveling rod, and they can grab, move, and level a differential level instrument. Even simple but important instrument functions, such as instrument and eyepiece focusing, were replicated. For leveling, students adjust the tripod legs to achieve coarse leveling before adjusting the tribrach screws to achieve precise leveling. This faithfully replicates the leveling process that students encounter in the physical world. In addition, students can record measurements in a virtual fieldbook. Virtual replication of the differential level instrument proved to be the most difficult task, as it had to match its real-world counterpart closely enough that students could pick up skills in the simulation and transfer them to the real world. The equipment and the landscape had to work smoothly together to create a continuous experience that does not hinder immersion. We developed a novel technique for leveling multi-legged objects on variable terrains. This technique models the geometric changes of the tripod movement and eliminates physical simulation, which increases efficiency dramatically and ensures that a frame rate of 60 FPS is maintained, giving users a pleasant experience.

Through VR, we can create multiple surveying scenarios in several virtual environments, thus training students in a variety of surveying conditions that are often difficult (and sometimes impossible) to replicate in the physical world. Such VR labs can support surveying education when labs are cancelled because of weather. There are still some barriers with respect to the computer hardware needed to make this software available for remote learning. The authors are working on adapting the software to the Oculus Quest 2, an untethered HMD onto which software can be loaded directly; at this point, however, some simplifications of the virtual environment and textures might be necessary.

We conducted two differential leveling labs as a demonstration: a three-benchmark loop and a point-to-point leveling line. In both cases, the misclosure was 1 mm, which is attributable to random observational errors. This shows that leveling activities can be faithfully replicated in VR with the same precision that surveyors achieve in the physical world. The environment is realistic, creating realistic challenges for users; for example, we showed how tree leaves move with the wind and block the view from the instrument to the rod. The output report offers instructional feedback that is not attainable in real life: it captures the leveling condition of the instrument and rod, as well as the true measurements that students should have observed. Thus, by reflecting on the output report, students can understand their mistakes and their surveying approach, which is important for improving their surveying and engineering skills. This paper focused on the technical aspects of the software; a comprehensive pedagogical implementation and assessment will follow in future work.

The developed labs are single-player labs (one student conducts the virtual lab). This approach has some advantages: the student experiences all the steps associated with leveling, gaining an overall experience and a different perspective. In practice, however, surveying students would never conduct an entire lab on their own, as one student should be at the instrument making and recording observations while a second student holds the rod level. Future work will focus on developing a collaborative suite that will allow two or more players to co-exist in the environment and conduct surveying labs as a group, exercising their teamwork skills. Existing work on collaborative learning in VR shows clear advantages over individual work, such as improved learning outcomes and reduced task-related anxiety [58]. Furthermore, the software will be expanded to include more environments and surveying instruments, such as total stations and global navigation satellite systems.