Classifying Intelligence in Machines: A Taxonomy of Intelligent Control

Abstract

1. Introduction

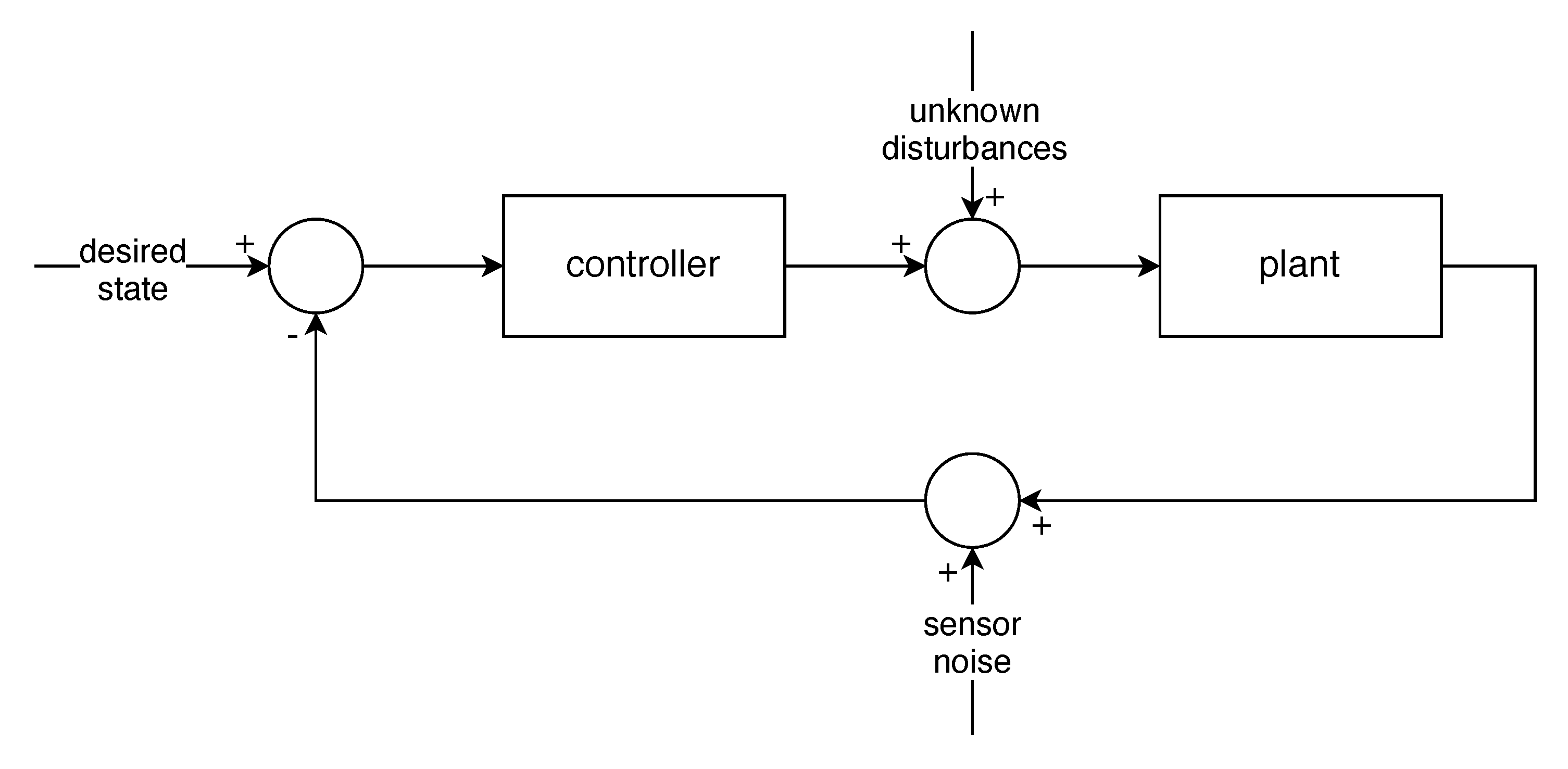

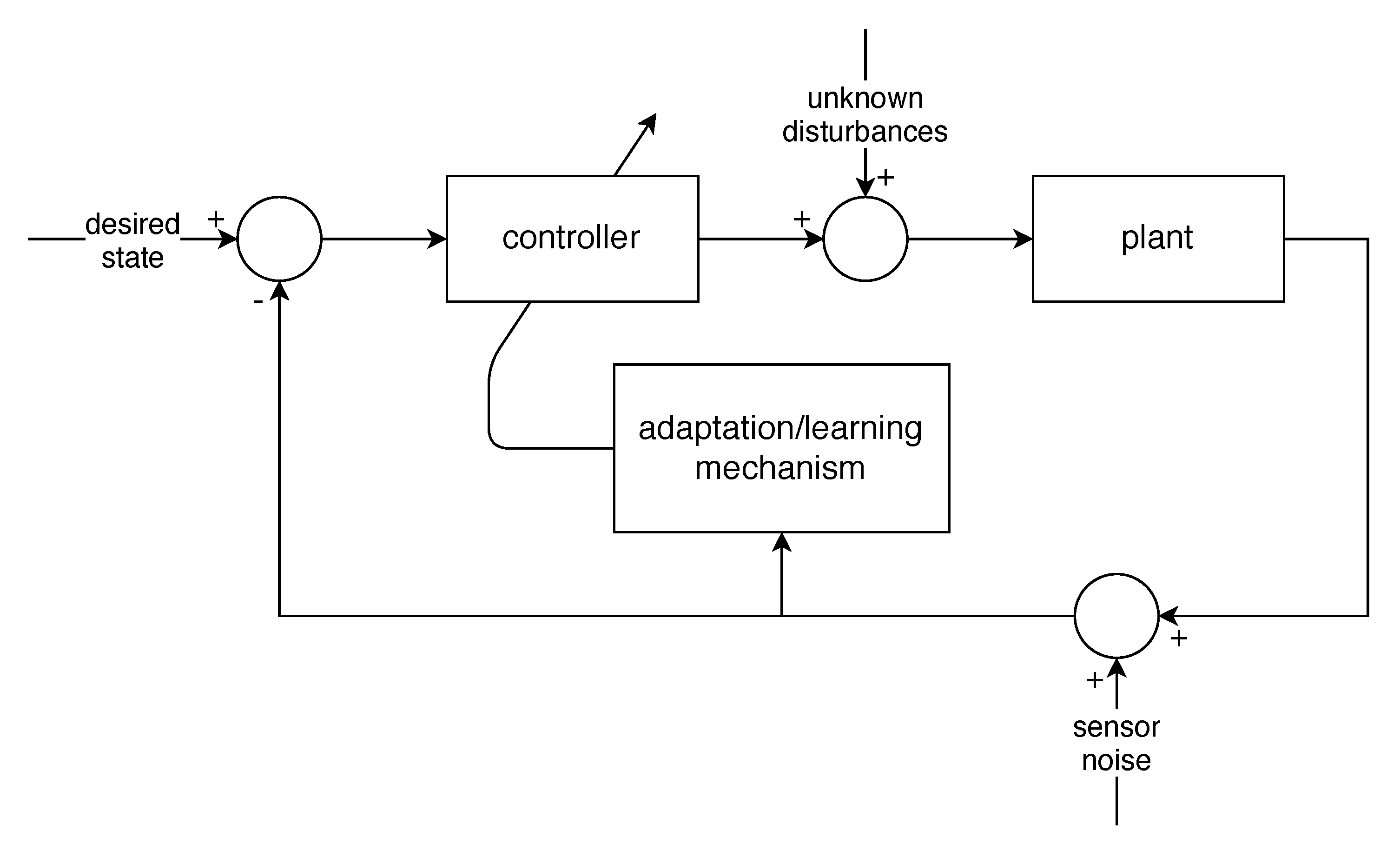

2. Path to Intelligent Control

3. Defining Intelligent Control

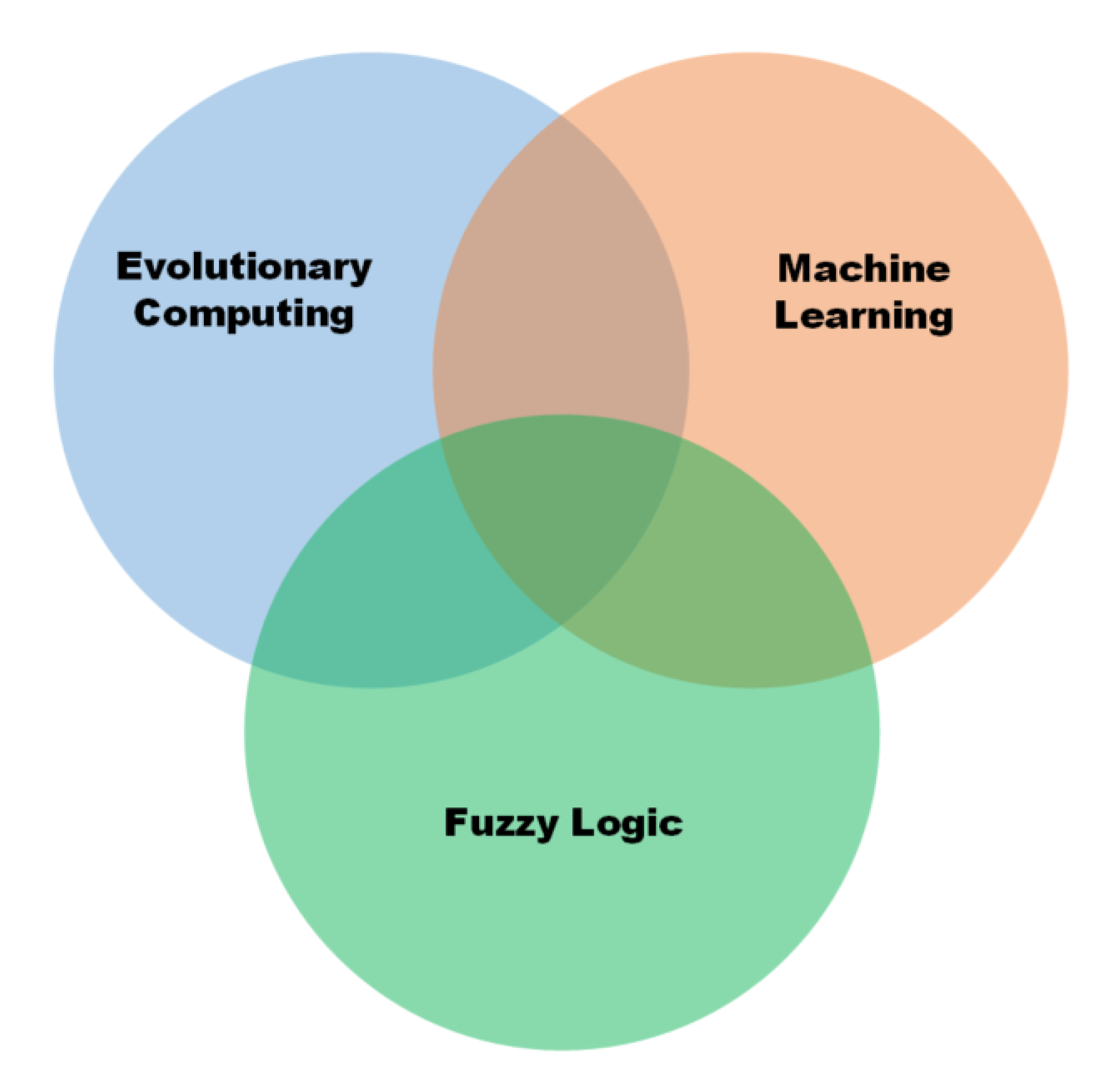

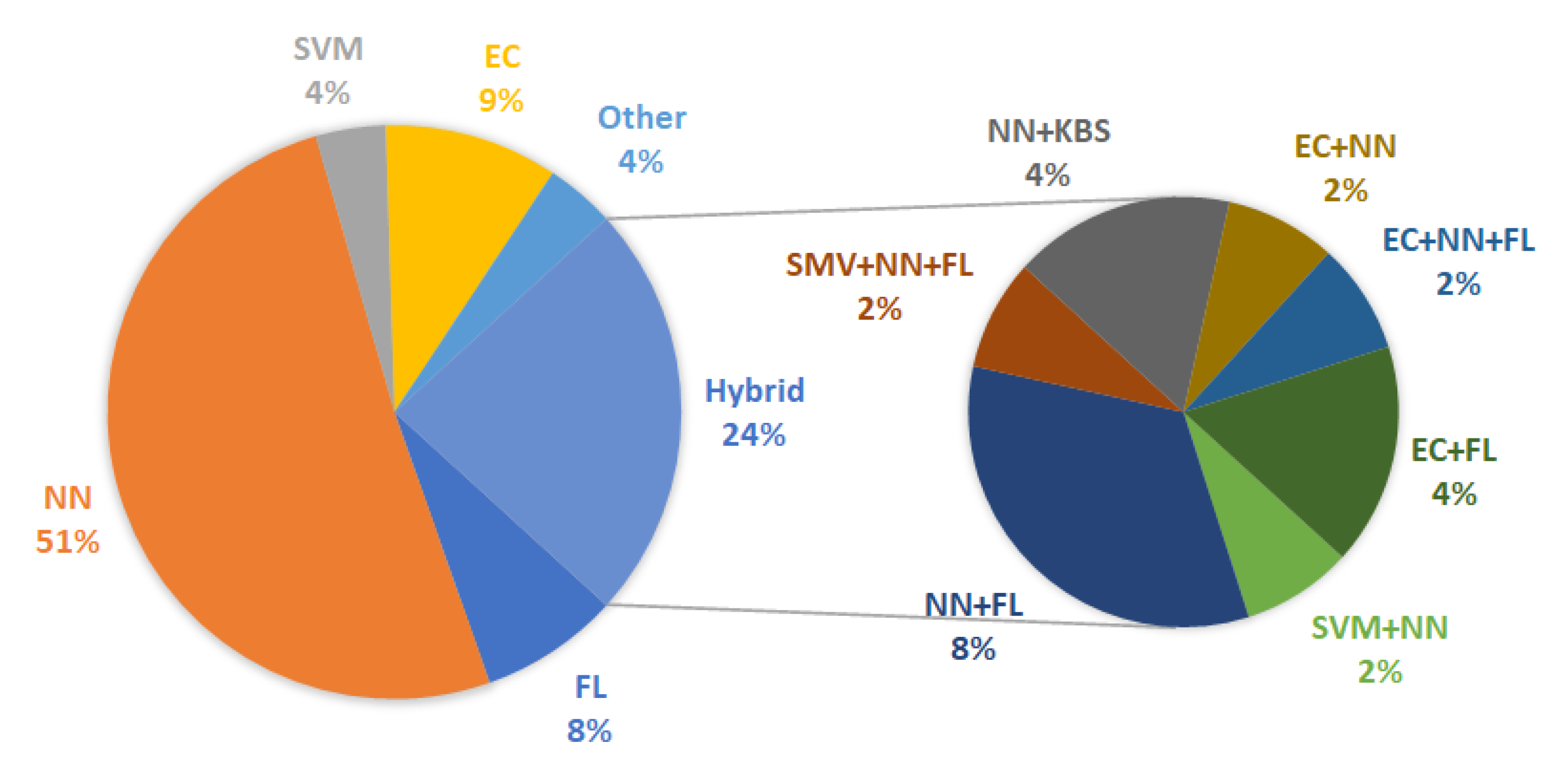

3.1. Methods for Intelligent Control

3.1.1. Machine Learning

3.1.2. Evolutionary Computing

3.1.3. Fuzzy Logic

3.1.4. Hybrid Methods

3.2. Dimensions of Intelligent Control

3.2.1. Environment

3.2.2. Controller

3.2.3. Goals

4. Taxonomy

4.1. Environment Knowledge

- Complete and precise environment model (E0): If the environment is precisely known (that is, Equation (1) captures all of its dynamics), an open-loop controller could be used and no degree of intelligence is required. In practice, however, some aspects of a system are usually not perfectly modelled or are subject to uncertainty, which calls for a more sophisticated controller.

- Complete environment model subject to minor variations (E1): Any real system can only be modelled to a certain degree of precision. At this level we consider environments with bounded uncertainties small enough that simple feedback controllers can be used with little or no adaptation. Such controllers are not necessarily intelligent, since they need only low levels of adaptation to handle slight uncertainties and do not learn online. Nevertheless, some intelligent controllers do fall within this category.

- Environment subject to change during operation (E2): At this level the environment is described by time-varying parameters (Equation (2)). A higher degree of intelligence is now required, since substantial changes in the environment cannot always be predicted or may be too complex to model. At this level of uncertainty, some conventional adaptive control methods can still perform adequately, as can intelligent ones.

- Underlying physics of environment not well defined (E3): The environment is represented here as an uncertain mapping from states and actions to future states, as in Equation (3). This scenario is uncommon in Earth applications, but it is a fundamental problem for many space applications, such as Mars entry vehicles. Some information about the environment is known, but substantial knowledge gaps remain, and these require an intelligent controller.

- No knowledge of environment (E4): No model exists for the environment and the control designer cannot incorporate any environmental knowledge into the controller. This requires an intelligent control system that can explore its environment safely.
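The difference between the first two environment levels can be illustrated numerically. The sketch below assumes a hypothetical first-order plant, dx/dt = -a x + b u, whose true input gain b may differ from the nominal value used at design time; it does not reproduce Equation (1) or any cited system, and the gains kp = ki = 2 are arbitrary illustrative choices. An open-loop input computed from the nominal model reaches the setpoint only when the model is exact, whereas a fixed-gain PI feedback law, with no adaptation at all, still removes the steady-state error under a modest mismatch.

```python
# Hypothetical first-order plant dx/dt = -a*x + b*u, integrated with forward Euler.
# The designer's nominal model uses A_NOM and B_NOM; the true input gain may differ.
A_NOM, B_NOM, SETPOINT = 1.0, 1.0, 1.0


def open_loop(error, integral):
    """Constant input from the nominal model (arguments unused): correct only if b == B_NOM."""
    return A_NOM * SETPOINT / B_NOM


def pi_feedback(error, integral, kp=2.0, ki=2.0):
    """Fixed-gain PI law: no online adaptation; the integral term removes steady-state error."""
    return kp * error + ki * integral


def simulate(controller, b_true, a=A_NOM, dt=1e-3, t_end=20.0):
    """Return the plant state after t_end seconds, starting from x = 0."""
    x, integral = 0.0, 0.0
    for _ in range(int(t_end / dt)):
        error = SETPOINT - x
        integral += error * dt
        u = controller(error, integral)
        x += dt * (-a * x + b_true * u)
    return x


for b_true in (1.0, 1.2):  # exact model (E0) vs. a 20 % input-gain mismatch (E1)
    x_ol = simulate(open_loop, b_true)
    x_pi = simulate(pi_feedback, b_true)
    print(f"b_true={b_true}: open-loop error {SETPOINT - x_ol:+.3f}, PI error {SETPOINT - x_pi:+.3f}")
```

With the mismatched gain the open-loop state settles near 1.2, while the PI loop still settles at the setpoint. Nothing in the fixed-gain loop learns, which is consistent with the point above that controllers at this level need not be intelligent.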

4.2. Controller Knowledge

- Stationary, globally stable controller (C0): Most feedback controllers come with stability guarantees and maintain a certain level of performance under given assumptions. In simple cases, these assumptions allow the control system to perform well with a fixed set of parameters, without any need for adaptation (Equation (4)).

- Varying controller parameters (C1): Many intelligent and non-intelligent applications vary some control parameters online (Equation (5)). This accounts for a lack of knowledge of the controller parameters, where parameters fixed at design time are insufficient to cover the entire operating range of the system.

- Unknown sensor/actuator behaviour (C2): This falls under the broad category of fault tolerant control, which itself has many dimensions. Here we treat fault tolerance as a level of uncertainty in the controller, where measurements may be erroneous and actions may not have the predicted effect (Equation (6)). Some fault tolerant systems flag faults using simple thresholds specified at design time; since such faults are known in advance, these systems do not fall under this category. Here we refer instead to control systems that must deal with unknown faults.

- Varying controller configurations (C3): At higher levels of intelligence, a controller can alter its own control structure online (Equation (7)). The controller structure is more commonly defined offline, using techniques such as evolutionary computation. An intelligent controller, however, must adapt online and therefore needs an efficient means of adjusting its configuration while operating.

- No known controller structure (C4): The controller designs the control system itself from scratch using, for example, mathematical operations, control blocks and intelligent architectures. An intelligent controller must be able to do this online, though perhaps from a rudimentary initial controller that provides a stable starting point.
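Varying controller parameters (C1) can also be achieved with conventional, non-intelligent methods. As a minimal sketch, the code below applies the classic MIT rule from model reference adaptive control to a hypothetical plant dy/dt = -a y + k u whose gain k is unknown to the designer. The control law u = θ r has a single adjustable parameter θ, which is updated online so that the plant follows the reference model dy_m/dt = -a y_m + k0 r; the ideal value is θ = k0/k. All symbols, numerical values and the square-wave reference are assumptions for illustration, and the MIT rule is chosen for brevity rather than taken from any of the cited works.

```python
# Hypothetical plant dy/dt = -a*y + k*u with gain k unknown to the designer,
# reference model dym/dt = -a*ym + k0*r, and control law u = theta*r.
# MIT rule: dtheta/dt = -gamma * e * ym with e = y - ym (the sensitivity of e
# to theta is proportional to ym; the positive constant k/k0 is folded into gamma).


def square_wave(t, period=20.0, amplitude=1.0):
    """Reference signal that switches between +amplitude and -amplitude."""
    return amplitude if (t % period) < period / 2 else -amplitude


def mit_rule(k=2.0, k0=1.0, a=1.0, gamma=0.2, dt=0.01, t_end=200.0):
    """Simulate with forward Euler and return the final adapted gain theta."""
    y = ym = theta = 0.0
    for n in range(int(t_end / dt)):
        r = square_wave(n * dt)
        u = theta * r                  # controller with one adjustable parameter
        e = y - ym                     # model-following error
        dy = -a * y + k * u            # plant
        dym = -a * ym + k0 * r         # reference model
        dtheta = -gamma * e * ym       # MIT-rule parameter update
        y, ym, theta = y + dt * dy, ym + dt * dym, theta + dt * dtheta
    return theta


theta = mit_rule()
print(f"adapted theta = {theta:.3f}, ideal k0/k = {1.0 / 2.0:.3f}")
```

The adaptation gain gamma is deliberately small: the MIT rule carries no global stability guarantee, and larger gains or more demanding reference signals can destabilise it.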

4.3. Goal Knowledge

- Goals entirely predetermined by designer (G0): Most control systems, including intelligent ones, have a clearly defined goal that entirely shapes the control system design. In this case the control system is not 'aware' of its goals and is therefore unable to update them or to improve its performance with respect to them. Typical examples are systems that must drive the tracking error between a reference state and the current state to zero.

- Goal specified implicitly, for example, as a reward function (G1): Many optimal control problems fall into this category, since the aim of the controller is often to minimise or maximise a given cost function without the means of optimising it being specified. The high level goal of the controller is then to derive a control policy that is optimal with respect to this cost function. This category also covers controllers that are punished for detrimental actions and must find a control policy that avoids them. These examples fit well into the framework of reinforcement learning control, where an agent learns by interacting with the environment and observing its state and a reward.

- Specific goals subject to change during operation with a globally defined goal (G2): In a dynamic environment, the definition of specific goals depends on contingent events and observations. Moreover, if goals are allocated on the ground, as in a space mission, the robot or spacecraft must wait for new instructions every time an unforeseen event occurs or a new set of scientific data becomes available. This requires an intelligent goal planner that can elaborate new specific goals based on changes in the environment.

- One or several abstract goals with no clear cost function (G3): In some cases the goals cannot easily be defined mathematically, so the controller requires an understanding of high level goals. For example, a controller's goal might be "capture images of scientifically interesting events" or "explore this region and collect data". The controller must be able to decide which events are scientifically interesting or which data are worth collecting.

- No knowledge of goals (G4): The controller initially has no knowledge or indication of which actions are favourable, and must deduce what actions to take and when.
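At goal knowledge level G1 the designer states only what is to be minimised, not how to achieve it. In the hypothetical scalar example below, the rollout cost, a sum of q x² + r u², is the only goal given to the controller, and a brute-force grid search over the feedback gain K stands in for whatever optimiser (a GA, a reinforcement learning agent, and so on) a real system might use. Because this particular problem is a linear-quadratic regulator, the search result can be checked against the gain obtained from the scalar discrete-time Riccati equation. The plant parameters, horizon and grid are illustrative assumptions.

```python
# Hypothetical scalar plant x[t+1] = A*x[t] + B*u[t] under the policy u = -K*x.
# The only goal given is the quadratic cost below (equivalently, a negative reward).
A, B, Q, R = 1.2, 1.0, 1.0, 1.0


def rollout_cost(K, x0=1.0, steps=200):
    """Accumulated cost sum(Q*x^2 + R*u^2) over one simulated rollout."""
    x, cost = x0, 0.0
    for _ in range(steps):
        u = -K * x
        cost += Q * x * x + R * u * u
        x = A * x + B * u
    return cost


def search_gain(k_min=0.0, k_max=2.0, n=2001):
    """Brute-force search: keep the grid gain with the lowest rollout cost."""
    grid = [k_min + i * (k_max - k_min) / (n - 1) for i in range(n)]
    return min(grid, key=rollout_cost)


def riccati_gain(iterations=1000):
    """Optimal LQR gain from fixed-point iteration of the scalar discrete Riccati equation."""
    P = Q
    for _ in range(iterations):
        P = Q + A * A * P - (A * B * P) ** 2 / (R + B * B * P)
    return A * B * P / (R + B * B * P)


print(f"search K = {search_gain():.3f}, Riccati K = {riccati_gain():.3f}")
```

Both values should agree to within the grid resolution (roughly 0.79 for these parameters). The controller never receives a reference trajectory; the gain emerges from the cost alone.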

5. Classification of Relevant Examples

- G: Goal Knowledge

- E: Environmental Knowledge

- C: Controller Knowledge

- G-0, E-0, C-2: Kankar et al. [53] compare Neural Networks (NNs) and Support Vector Machines (SVMs) for predicting ball bearing failures, and both techniques prove useful for this application. Although the presented system is not a complete controller in itself, it is a fault detection system that can be integrated into a controller for a rotating machine.

- G-0, E-1, C-1: Ichikawa and Sawa give an early example of Neural Networks (NNs) used as direct controllers [55]. They combine a direct NN controller with Genetic Model Reference Adaptive Control (GMRAC), which trains the NN based on a model of the ideal plant dynamics. The system is designed to deal with changing environment dynamics and continually updates its network to optimise performance.

- G-0, E-1, C-2: A common technology for intelligent control, and particularly for Fault Detection, Isolation, and Recovery (FDIR), is the Adaptive Network-based Fuzzy Inference System (ANFIS) developed by Jang [65]. Wang et al. [66] present one such application: an adaptive backstepping sliding mode controller, augmented with an ANFIS-based FDIR system, that controls a robotic airship. The ANFIS observer predicts the environment state at each time step; if these predictions disagree with the sensor readings, a sensor fault is declared and the ANFIS output is used as the controller input instead. The goal knowledge level is G-0, since the control system minimises the tracking error with respect to a predetermined trajectory.

- G-0, E-1, C-3: The Neural Network (NN) controller proposed by Wu et al. [31] has a unique feature that places it in C-3: it can change the network topology and its parameters online based on the output of a learning algorithm. Such a change in topology requires a trade-off between maintaining sufficient computational speed for online use and achieving the required precision in its output values.

- G-0, E-2, C-1: One of the most popular IC methods is the neuro-fuzzy controller, which combines the adaptability of a Neural Network (NN) with the human-like reasoning of fuzzy controllers. In [59], for example, the authors apply a neuro-fuzzy model reference adaptive control scheme to an electric drive system and show that the controller is robust to changes in the environment parameters and adapts quickly to suppress vibrations and improve tracking accuracy.

- G-0, E-2, C-2: Another example of FDIR incorporated into a control system is presented in [37], where a fault tolerant control scheme combines a backstepping controller with Neural Networks (NNs) to recognise unknown faults. The system uses two networks to approximate unknown system faults and compensate for their effect, and the network weights are adapted online using a modified back-propagation algorithm.

- G-0, E-3, C-1: Highly uncertain environments, such as the one faced by a Mars entry vehicle, benefit from an intelligent control system [38]. In this paper the authors develop a Neural Network (NN) based sliding-mode variable structure controller with a fast loop, which is a conventional PID controller, and a slow loop, which contains the adaptive NN element. The goal is completely defined by the user through a nominal entry trajectory.

- G-1, E-0, C-0: In this control system of modest intelligence, classified as G-1, a Genetic Algorithm (GA) optimises the temperature for ethanol fermentation online [46]. This is not an online adaptation of the controller parameters; instead, the optimal fermentation temperature is obtained in a manner similar to optimal control approaches. What distinguishes this system from classical optimal control is that the optimisation is performed online, according to the plant states.

- G-1, E-1, C-1: As discussed in Section 3, it can be advantageous for IC methods to combine different AI techniques to exploit their respective benefits. Handelman et al. create such a system, which comprises a Knowledge Based System (KBS) for devising learning strategies and a Neural Network (NN) controller that learns the desired actions and performs them consistently in real time [61]. This is designed to mimic human learning, which combines rule-based initial learning with fine tuning through repetition. The environment and controller considered here have low levels of uncertainty, and the control goals are only implicitly defined.

- G-1, E-1, C-4: Despite being a well known technique for symbolic regression, Genetic Programming (GP) is still not widely used in IC. An example of GP for control is presented in [49], where it derives a control law for a mobile robot moving in an environment with both known and unknown obstacles. Using GP to create the control law gives this system a controller classification of C-4, since the control law is derived from predefined mathematical functions only, without any prior knowledge of the controller structure. The unknown obstacles introduce slight uncertainty into the environment.

- G-1, E-2, C-1: Kawana and Yasunobu present an intelligent controller capable of dealing with an actuator failure [16]. Since the introduced failure is known and defined by the user, this example is not classified as C-2, although the failure does constitute a major change in the environment. A distinctive feature of this controller is its ability to generate a model of the environment through online learning; this model is then used to update the fuzzy control rules.

- G-1, E-2, C-2: Talebi et al. give another example of FDIR enhanced with IC [43]. Two recurrent Neural Networks (NNs) are employed to detect and isolate faults, one for sensor faults and one for actuator faults. These NNs also compensate for the faults directly, without the need for an additional fault isolation subsystem.

- G-1, E-3, C-4: Marchetti et al. [50] propose an approach similar to [49] that also uses Genetic Programming (GP). Here GP generates a control law online, and the controller is tested on different failure scenarios as well as on the case of an environment model that is unknown at design time.

- G-2, E-1, C-0: Ceriotti et al. [51] design a controller capable of modifying the goal of a planetary rover during its mission. The controller fuses navigation data with scientific data from different sources to yield a single value of “interest” for each point on the map, and this map evolves during the mission depending on the observed data. The data fusion is realised using the Dezert-Smarandache Theory (DSmT) of plausible and paradoxical reasoning, which can overcome the limitations of both fuzzy logic and evidence theory. In particular, paradoxical reasoning can provide a solution even when the available information is conflicting.

- G-2, E-1, C-1: The Autonomous Sciencecraft Experiment onboard NASA’s Earth Observing One is one of the most advanced satellite IC systems [52]. Like many intelligent control systems, it has a hierarchical structure. The highest level of the hierarchy is the CASPER planner, which uses information from the onboard science subsystem to plan its activities. The plan is passed to the Spacecraft Command Language, which carries it out using lower level actions; this level can also adapt to environmental changes and make control adjustments as necessary. Below it is conventional software, which simply executes control actions as instructed by the higher levels. Although this system does not operate in a substantially varying environment, it alters its controller parameters online and features highly autonomous decision making and goal updating.

- G-2, E-2, C-1: WISDOM is a control system for rovers that is capable of high level planning and adaptive control [64]. This control system also has a hierarchical structure, with three layers. The top layer generates plans, which are fed to the adaptive controller in the layer below. This adaptive layer deals with immediate changes in the environment and instructs the lowest layer, which is connected directly to the actuators. The system adapts to changing or uncertain environments and has varying parameters, and its planner evolves the goals over time.

- Activity planning (G2): All reviewed applications involving planning and reasoning were classified as G2. Although they use a variety of AI techniques, in all of these cases the controller needs to choose its desired states and how to achieve them. For example, in [52] the control system uses information from the onboard science subsystem to plan its activities, while in [63] a reasoning strategy based on forward chaining is adopted to find optimal concentrations of chemicals for an electrolytic process.

- Robotic navigation and manipulation (E2): Robotic systems that operate in the presence of unknown obstacles or environment characteristics are classified as E2. This category includes control systems that deal with parametric uncertainties in the dynamic models of the plant, as in [35,36], and robotic exploration in an uncertain environment [64].

- Adaptive intelligent control (C1): Where the system is not subject to unknown faults, all control systems that adapt their parameters online are classified as C1. This can be achieved with various AI techniques, but the common aspect within this category is an adaptation mechanism that updates the control law parameters. Comparing two different applications such as [17] and [29] shows that, despite the different AI techniques they employ (in this case Fuzzy Logic (FL) and Neural Networks (NNs)) and their differing overall goals, both are classified as C1 because they adjust control law parameters online.

- Fault Detection, Isolation, and Recovery (C2): This category encompasses control systems that can deal with failures in their sensors or actuators. A frequently used technique here is ANFIS, as in [56] for fault detection and diagnosis of an industrial steam turbine, and in [66], where a controller is designed to reliably track the trajectory of a robotic airship in the presence of sensor faults.
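The category summaries above amount to grouping the classified examples by one dimension of their (G, E, C) code. A minimal sketch of that bookkeeping follows; the Classification class, the group_by helper and the regular expression are hypothetical illustrations, while the labels in EXAMPLES are copied from the examples listed in this section. Levels are assumed to run from 0 to 4 in each dimension, matching the five levels per list in Section 4.

```python
import re
from collections import defaultdict
from dataclasses import dataclass

LABEL_PATTERN = re.compile(r"\b([GEC])-?(\d)\b")
MAX_LEVEL = 4  # assumed: each dimension in Section 4 has levels 0..4


@dataclass(frozen=True)
class Classification:
    goal: int
    environment: int
    controller: int

    @classmethod
    def parse(cls, label: str) -> "Classification":
        """Parse a label such as 'G-0, E-1, C-2' (the hyphen is optional, as in 'C2')."""
        matches = LABEL_PATTERN.findall(label)
        if sorted(letter for letter, _ in matches) != ["C", "E", "G"]:
            raise ValueError(f"expected exactly one G, E and C level in {label!r}")
        levels = {letter: int(digit) for letter, digit in matches}
        if any(level > MAX_LEVEL for level in levels.values()):
            raise ValueError(f"level above {MAX_LEVEL} in {label!r}")
        return cls(goal=levels["G"], environment=levels["E"], controller=levels["C"])


# Labels copied from the examples in Section 5, keyed by citation.
EXAMPLES = {
    "[53]": "G-0, E-0, C-2", "[55]": "G-0, E-1, C-1", "[66]": "G-0, E-1, C-2",
    "[31]": "G-0, E-1, C-3", "[59]": "G-0, E-2, C-1", "[37]": "G-0, E-2, C-2",
    "[38]": "G-0, E-3, C-1", "[46]": "G-1, E-0, C-0", "[61]": "G-1, E-1, C-1",
    "[49]": "G-1, E-1, C-4", "[16]": "G-1, E-2, C-1", "[43]": "G-1, E-2, C-2",
    "[50]": "G-1, E-3, C-4", "[51]": "G-2, E-1, C-0", "[52]": "G-2, E-1, C-1",
    "[64]": "G-2, E-2, C-1",
}


def group_by(examples: dict[str, str], dimension: str) -> dict[int, list[str]]:
    """Group citations by the level of one dimension: 'goal', 'environment' or 'controller'."""
    groups: dict[int, list[str]] = defaultdict(list)
    for citation, label in examples.items():
        groups[getattr(Classification.parse(label), dimension)].append(citation)
    return dict(groups)


for level, citations in sorted(group_by(EXAMPLES, "controller").items()):
    print(f"C{level}: {', '.join(citations)}")
```

Grouping by "controller", for instance, collects the level-2 examples ([53], [66], [37], [43]), which are the fault detection and FDIR systems discussed above.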

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| ANFIS | Adaptive Network-based Fuzzy Inference System |
| CASPER | Continuous Activity Scheduling Planning Execution and Replanning |
| CMAC | Cerebellar Model Articulation Controller |
| DSmT | Dezert-Smarandache Theory |
| EA | Evolutionary Algorithm |
| EC | Evolutionary Computing |
| FDIR | Fault Detection, Isolation, and Recovery |
| FL | Fuzzy Logic |
| GA | Genetic Algorithm |
| GGAC | General Genetic Adaptive Control |
| GMRAC | Genetic Model Reference Adaptive Control |
| GP | Genetic Programming |
| IC | Intelligent Control |
| KBS | Knowledge Based System |
| ML | Machine Learning |
| NN | Neural Network |
| SOC | Self-Organising Control |
| SVM | Support Vector Machine |

References

1. Saridis, G.N. Toward the Realization of Intelligent Controls. Proc. IEEE 1979, 67, 1115–1133.

2. Fu, K. Learning control systems and intelligent control systems: An intersection of artifical intelligence and automatic control. IEEE Trans. Autom. Control 1971, 16, 70–72.

3. Antsaklis, P.J. Intelligent Learning Control. IEEE Control Syst. 1995, 15, 5–7.

4. Antsaklis, P.J. Defining Intelligent Control. Report to the Task Force on Intelligent Control. IEEE Control Syst. Soc. 1993, 58, 4–5.

5. Linkens, D. Learning systems in intelligent control: An appraisal of fuzzy, neural and genetic algorithm control applications. Control Theory Appl. 1996, 143, 367–386.

6. Krishnakumar, K.; Kulkarni, N. Inverse Adaptive Neuro-Control for the control of a turbofan engine. In Proceedings of the AIAA conference on Guidance, Navigation and Control, Portland, OR, USA, 9–11 August 1999.

7. Lavallee, D.B.; Olsen, C.; Jacobsohn, J.; Reilly, J. Intelligent Control For Spacecraft Autonomy—An Industry Survey. In Proceedings of the AIAA Space Forum (Space 2006), San Jose, CA, USA, 19–21 September 2006.

8. Bennett, S. A Brief History of Automatic Control. IEEE Control Syst. 1996, 16, 17–25.

9. Nyquist, H. Regeneration Theory. Bell Syst. Tech. J. 1932, 11, 126–147.

10. Bellman, R. The Theory of Dynamic Programming. Bull. Am. Math. Soc. 1954, 60, 503–515.

11. Hunt, K.J.; Sbarbaro, D.; Zbikowski, R.; Gawthrop, P.J. Neural networks for control systems-A survey. Automatica 1992, 28, 1083–1112.

12. Fleming, P.; Purshouse, R. Evolutionary algorithms in control systems engineering: A survey. Control Eng. Pract. 2002, 10, 1223–1241.

13. Passino, K.M.; Yurkovich, S. Fuzzy Control; Addison-Wesley: Menlo Park, CA, USA, 1998; Volume 42.

14. Guan, P.; Liu, X.J.; Liu, J.Z. Adaptive fuzzy sliding mode control for flexible satellite. Eng. Appl. Artif. Intell. 2005, 18, 451–459.

15. Elkilany, B.G.; Abouelsoud, A.A.; Fathelbab, A.M.; Ishii, H. Potential field method parameters tuning using fuzzy inference system for adaptive formation control of multi-mobile robots. Robotics 2020, 9, 10.

16. Kawana, E.; Yasunobu, S. An intelligent control system using object model by real-time learning. In Proceedings of the SICE Annual Conference, Takamatsu, Japan, 17–20 September 2007; pp. 2792–2797.

17. Yu, Z. Research on intelligent fuzzy control algorithm for moving path of handling robot. In Proceedings of the 2019 International Conference on Robots and Intelligent System, ICRIS 2019, Chengdu, China, 23–25 February 2019; pp. 50–54.

18. Gu, Y.; Zhao, W.; Wu, Z. Online adaptive least squares support vector machine and its application in utility boiler combustion optimization systems. J. Process. Control 2011, 21, 1040–1048.

19. Lee, T.; Kim, Y. Nonlinear Adaptive Flight Control Using Backstepping and Neural Networks Controller. J. Guid. Control Dyn. 2001, 24, 675–682.

20. Brinker, J.S.; Wise, K.A. Flight Testing of Reconfigurable Control Law on the X-36 Tailless Aircraft. J. Guid. Control Dyn. 2001, 24, 903–909.

21. Johnson, E.N.; Kannan, S.K. Adaptive Trajectory Control for Autonomous Helicopters. J. Guid. Control Dyn. 2005, 28, 524–538.

22. Williams-Hayes, P. Flight Test Implementation of a Second Generation Intelligent Flight Control System; Technical Report November 2005; NASA Dryden Flight Research Center: Edwards, CA, USA, 2005.

23. Krishnakumar, K. Adaptive Neuro-Control for Spacecraft Attitude Control. In Proceedings of the 1994 IEEE International Conference on Control and Applications, Glasgow, UK, 24–26 August 1994.

24. Sabahi, K.; Nekoui, M.A.; Teshnehlab, M.; Aliyari, M.; Mansouri, M. Load frequency control in interconnected power system using modified dynamic neural networks. In Proceedings of the 2007 Mediterranean Conference on Control and Automation, Athens, Greece, 27–29 June 2007.

25. Becerikli, Y.; Konar, A.F.; Samad, T. Intelligent optimal control with dynamic neural networks. Neural Netw. 2003, 16, 251–259.

26. Kuljaca, O.; Swamy, N.; Lewis, F.L.; Kwan, C.M. Design and implementation of industrial neural network controller using backstepping. IEEE Trans. Ind. Electron. 2003, 50, 193–201.

27. San, P.P.; Ren, B.; Ge, S.S.; Lee, T.H.; Liu, J.K. Adaptive neural network control of hard disk drives with hysteresis friction nonlinearity. IEEE Trans. Control Syst. Technol. 2011, 19, 351–358.

28. Yen, V.T.; Nan, W.Y.; Van Cuong, P. Robust Adaptive Sliding Mode Neural Networks Control for Industrial Robot Manipulators. Int. J. Control Autom. Syst. 2019, 17, 783–792.

29. Hamid, M.; Jamil, M.; Butt, S.I. Intelligent control of industrial robotic three degree of freedom crane using Artificial Neural Network. In Proceedings of the 2016 IEEE Information Technology, Networking, Electronic and Automation Control Conference (ITNEC 2016), Chongqing, China, 20–22 May 2016; pp. 113–117.

30. Ligutan, D.D.; Abad, A.C.; Dadios, E.P. Adaptive robotic arm control using artificial neural network. In Proceedings of the 2018 IEEE 10th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment and Management (HNICEM 2018), Baguio City, Philippines, 29 November–2 December 2018.

31. Wu, Q.; Lin, C.M.; Fang, W.; Chao, F.; Yang, L.; Shang, C.; Zhou, C. Self-organizing brain emotional learning controller network for intelligent control system of mobile robots. IEEE Access 2018, 6, 59096–59108.

32. Dai, S.L.; Wang, C.; Luo, F. Identification and learning control of ocean surface ship using neural networks. IEEE Trans. Ind. Inform. 2012, 8, 801–810.

33. Nicol, C.; MacNab, C.J.; Ramirez-Serrano, A. Robust adaptive control of a quadrotor helicopter. Mechatronics 2011, 21, 927–938.

34. How, B.V.E.; Ge, S.S.; Choo, Y.S. Dynamic load positioning for subsea installation via adaptive neural control. IEEE J. Ocean. Eng. 2010, 35, 366–375.

35. He, W.; Chen, Y.; Yin, Z. Adaptive Neural Network Control of an Uncertain Robot with Full-State Constraints. IEEE Trans. Cybern. 2016, 46, 620–629.

36. Klecker, S.; Hichri, B.; Plapper, P. Neuro-inspired reward-based tracking control for robotic manipulators with unknown dynamics. In Proceedings of the 2017 2nd International Conference on Robotics and Automation Engineering (ICRAE 2017), Shanghai, China, 29–31 December 2018; pp. 21–25.

37. Xu, Y.; Jiang, B.; Tao, G.; Gao, Z. Fault tolerant control for a class of nonlinear systems with application to near space vehicle. Circuits Syst. Signal Process. 2011, 30, 655–672.

38. Li, S.; Peng, Y.M. Neural network-based sliding mode variable structure control for Mars entry. Proc. Inst. Mech. Eng. Part G J. Aerosp. Eng. 2011, 226, 1373–1386.

39. Yang, C.; Li, Z.; Li, J. Trajectory planning and optimized adaptive control for a class of wheeled inverted pendulum vehicle models. IEEE Trans. Cybern. 2013, 43, 24–36.

40. Johnson, E.; Calise, A.; Corban, J.E. Reusable launch vehicle adaptive guidance and control using neural networks. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, Montreal, QC, Canada, 6–9 August 2001.

41. Qazi, M.U.D.; Linshu, H.; Elhabian, T. Rapid Trajectory Optimization Using Computational Intelligence for Guidance and Conceptual Design of Multistage Space Launch Vehicles. In Proceedings of the AIAA Guidance, Navigation, and Control Conference and Exhibit, San Francisco, CA, USA, 15–18 August 2005; pp. 1–18.

42. Wen, C.M.; Cheng, M.Y. Development of a recurrent fuzzy CMAC with adjustable input space quantization and self-tuning learning rate for control of a dual-axis piezoelectric actuated micromotion stage. IEEE Trans. Ind. Electron. 2013, 60, 5105–5115.

43. Talebi, H.A.; Khorasani, K.; Tafazoli, S. A recurrent neural-network-based sensor and actuator fault detection and isolation for nonlinear systems with application to the satellite’s attitude control subsystem. IEEE Trans. Neural Netw. 2009, 20, 45–60.

44. Zhang, X.; Xu, D.; Liu, Y. Intelligent control for large-scale variable speed variable pitch wind turbines. J. Control Theory Appl. 2004, 2, 305–311.

45. Wong, P.K.; Xu, Q.; Vong, C.M.; Wong, H.C. Rate-dependent hysteresis modeling and control of a piezostage using online support vector machine and relevance vector machine. IEEE Trans. Ind. Electron. 2012, 59, 1988–2001.

46. Moriyama, H.; Shimizu, K. On-line optimisation of culture temperature for ethanol fermentation using a genetic algorithm. J. Chem. Technol. Biotechnol. 1996, 66, 217–222.

47. Caponio, A.; Cascella, G.L.; Neri, F.; Salvatore, N.; Sumner, M. A fast adaptive memetic algorithm for online and offline control design of PMSM drives. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2007, 37, 28–41.

48. Ponce, H.; Souza, P.V.C. Intelligent control navigation emerging on multiple mobile robots applying social wound treatment. In Proceedings of the 2019 IEEE 33rd International Parallel and Distributed Processing Symposium Workshops (IPDPSW 2019), Rio de Janeiro, Brazil, 20–24 May 2019; pp. 559–564.

49. Chiang, C.H. A genetic programming based rule generation approach for intelligent control systems. In Proceedings of the 2010 International Symposium on Computer, Communication, Control and Automation (3CA), Tainan, Taiwan, 5–7 May 2010; Volume 1, pp. 104–107.

50. Marchetti, F.; Minisci, E.; Riccardi, A. Towards Intelligent Control via Genetic Programming. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020.

51. Ceriotti, M.; Vasile, M.; Giardini, G.; Massari, M. An approach to model interest for planetary rover through Dezert-Smarandache Theory. J. Aerosp. Comput. Inf. Commun. 2009, 6, 92–108.

52. Chien, S.; Sherwood, R.; Tran, D.; Cichy, B.; Rabideau, G.; Castano, R.; Davis, A.; Mandl, D.; Frye, S.; Trout, B.; et al. Using Autonomy Flight Software to Improve Science Return on Earth Observing One. J. Aerosp. Comput. Inf. Commun. 2005, 2, 196–216.

53. Kankar, P.K.; Sharma, S.C.; Harsha, S.P. Fault diagnosis of ball bearings using machine learning methods. Expert Syst. Appl. 2011, 38, 1876–1886.

54. Ahn, K.K.; Kha, N.B. Modeling and control of shape memory alloy actuators using Preisach model, genetic algorithm and fuzzy logic. Mechatronics 2008, 18, 141–152.

55. Ichikawa, Y.; Sawa, T. Neural Network Application for Direct Feedback Controllers. IEEE Trans. Neural Netw. 1992, 3, 224–231.

56. Salahshoor, K.; Kordestani, M.; Khoshro, M.S. Fault detection and diagnosis of an industrial steam turbine using fusion of SVM (support vector machine) and ANFIS (adaptive neuro-fuzzy inference system) classifiers. Energy 2010, 35, 5472–5482.

57. Gabbar, H.A.; Sharaf, A.; Othman, A.M.; Eldessouky, A.S.; Abdelsalam, A.A. Intelligent control systems and applications on smart grids. In New Approaches in Intelligent Control. Intelligent Systems Reference Library; Nakamatsu, K., Kountchev, R., Eds.; Springer: Cham, Switzerland, 2016; Volume 107.

58. Al-isawi, M.M.A.; Sasiadek, J.Z. Guidance and Control of Autonomous, Flexible Wing UAV with Advanced Vision System. In Proceedings of the 2018 23rd International Conference on Methods & Models in Automation & Robotics (MMAR), Międzyzdroje, Poland, 27–30 August 2018; pp. 441–448.

59. Orlowska-Kowalska, T.; Szabat, K. Control of the drive system with stiff and elastic couplings using adaptive neuro-fuzzy approach. IEEE Trans. Ind. Electron. 2007, 54, 228–240.

60. Kaitwanidvilai, S.; Parnichkun, M. Force control in a pneumatic system using hybrid adaptive neuro-fuzzy model reference control. Mechatronics 2005, 15, 23–41.

61. Handelman, D.A.; Lane, S.H.; Gelfand, J.J. Integrating Neural Networks and Knowledge-Based Systems for Intelligent Robotic Control. IEEE Control Syst. Mag. 1990, 10, 77–87.

62. Lennon, W.K.; Passino, K.M. Intelligent control for brake systems. IEEE Trans. Control Syst. Technol. 1999, 7, 188–202.

63. Wu, M.; Nakano, M.; She, J.H. An expert control strategy using neural networks for the electrolytic process in zinc hydrometallurgy. In Proceedings of the 1999 IEEE International Conference on Control Applications, Kohala Coast, HI, USA, 27–30 August 1999; Volume 16, pp. 135–143.

64. Vasile, M.; Massari, M.; Giardini, G. Wisdom—An Advanced Intelligent, Fault-Tolerant System for Autonomy in Risky Environments; Technical Report, ESA ITI Contract 18693/04/NL/MV; ESA ESTEC: Noordwijk, The Netherlands, 2004.

65. Jang, J.S.R. ANFIS: Adaptive-Network-Based Fuzzy Inference System. IEEE Trans. Syst. Man Cybern. 1993, 23, 665–685.

66. Wang, Y.; Zhou, W.; Luo, J.; Yan, H.; Pu, H.; Peng, Y. Reliable Intelligent Path Following Control for a Robotic Airship Against Sensor Faults. IEEE/ASME Trans. Mechatron. 2019, 24, 2572–2582.

| G0 | G1 | G2 | ||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| E0 | E1 | E2 | E3 | E0 | E1 | E2 | E3 | E1 | E2 | |||||||

| C2 | C1 | C2 | C3 | C1 | C2 | C1 | C0 | C1 | C4 | C1 | C2 | C4 | C0 | C1 | C1 | |

| FL | [14,15] | [16] | [17] | |||||||||||||

| NN | [18,19,20,21,22,23,24,25,26,27,28,29,30] | [31] | [32,33,34,35,36] | [37] | [38] | [39] | [40,41,42] | [43] | ||||||||

| SVM | [44,45] | |||||||||||||||

| EC | [46] | [47,48] | [49] | [50] | ||||||||||||

| Other | [51] | [52] | ||||||||||||||

| Hybrid Methods | [53] | [54,55] | [56] | [57,58,59,60] | [61,62] | [63] | [64] | |||||||||

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wilson, C.; Marchetti, F.; Di Carlo, M.; Riccardi, A.; Minisci, E. Classifying Intelligence in Machines: A Taxonomy of Intelligent Control. Robotics 2020, 9, 64. https://doi.org/10.3390/robotics9030064
