Abstract

Space-based manipulators have traditionally been tasked with robotic on-orbit servicing or assembly functions, but active debris removal has become a more urgent application. We present a much-needed tutorial review of many of the robotics aspects of active debris removal, informed by activities in on-orbit servicing. We begin with a cursory review of on-orbit servicing manipulators followed by a short review of the space debris problem. Following brief consideration of the time delay problems in teleoperation, the meat of the paper explores the field of space robotics regarding the kinematics, dynamics and control of manipulators mounted onto spacecraft. The core of the issue concerns the spacecraft mounting, which reacts in response to the motion of the manipulator. We favour the implementation of spacecraft attitude stabilisation to ease some of the computational issues that will become critical as increasing levels of autonomy are implemented. We review issues concerned with physical manipulation and the problem of multiple arm operations. We conclude that space robotics is well-developed and sufficiently mature to tackle tasks such as active debris removal.

1. Introduction

Space-based manipulators have traditionally been tasked with robotic on-orbit servicing functions, but despite several decades of development since the 1980s, this has yet to come to pass. A new application of space manipulators has emerged—active debris removal—and this need has become urgent. Much of the technological development in space robotics over this period is directly applicable to this new task and indeed, given that the more challenging aspects of on-orbit servicing are not required (namely, servicing tasks), the prospect of active debris removal can be met. All the kinematic, dynamic and control issues are identical—this includes the requirement for grappling the target and passivating it. This will require robotic clamps or grippers. Indeed, once a grapple hold has been attained on capture of the target, it may be necessary to adjust the grip configuration to secure it for transport. Relocating the grip may require robotic fingers for stable grip-to-grip transitions, but securing the grip will require robotic latching mechanisms. Servicing tasks typically have involved the deployment of power tools for bolt manipulation and the use of specialised tools for more challenging tasks such as cutting, taping and resealing thermal blankets. For the most part, this will not be required for active debris removal if the robot is latched to the target. However, the attachment of a propulsive stage to the target for disposal will require basic servicing capability including power tooling for bolt handling (ensuring that said bolts remain secure and not become further sources of debris). It is envisaged that the more difficult manipulations involving invasive servicing procedures will not be necessary.

We first consider a brief schematic of recent on-orbit space manipulators employed by the International Space Station (ISS), and thence proceed to describe the rise and fall of robotic on-orbit servicing missions. We then provide a review of the growing space debris crisis and proposed solutions. The freeflyer sporting two or more manipulators is the solution of choice to remove large debris pieces. To that end, the bulk of the paper reviews the kinematics, dynamics and control of space manipulators. We pay particular attention to the grappling task. Hence, our interest here is to provide a schematic of on-orbit servicing robotics that can be applied to space debris removal. Since the comprehensive review in [1], there have been many modern developments in space manipulator robotics. Some excellent more modern reviews include [2], which highlights challenges in servicing non-cooperative satellites, and [3,4], which emphasise kinematic and dynamic issues. Here, we are more selective and present a tutorial approach. We take an unusual stance in viewing space manipulators from the perspective of their deployment for space debris mitigation, of which on-orbit servicing is in fact a part. Rather than aiming at comprehensiveness, our tutorial review should increase the appeal of the paper to a wider audience.

2. On-Orbit Space Manipulators

Space manipulator robotics has played a significant role on the ISS, which has installed on it three manipulator systems: the Canadian Mobile Servicing System (MSS), the Japanese Experiment Module Remote Manipulator System (JEMRMS), and the European Robotic Arm (ERA) [5]. The MSS includes the 17 m long 7 degree-of-freedom Space Station Remote Manipulator System (SSRMS) with its relocatable base (Table 1), which is comparable to the 11 m long 7 degree-of-freedom ERA with its relocatable base in contrast to the 10 m long 6 degree-of-freedom JEMRMS fixed to the JEM. Both SSRMS and ERA are symmetric about their elbows, with latching end effectors at the end of each three degree-of-freedom wrist enabling hand-over-hand relocatability. Both were designed for assembly and servicing, while JEMRMS was designed for experiment payload manipulation from a fixed location.

Table 1.

Denavit-Hartenberg kinematic parameters for the SSRMS manipulator (adapted from Ref. [5]).
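To illustrate how a row of Denavit-Hartenberg parameters is used, the sketch below builds the link-to-link homogeneous transform and chains the rows into a forward kinematics solution. It assumes the standard (distal) convention with rows ordered (θ, d, a, α); the convention used in Ref. [5] may differ. The two-link planar arm and its link lengths are hypothetical and are not SSRMS values.

```python
import math

def dh_transform(theta, d, a, alpha):
    """Transform from frame i-1 to frame i, standard DH convention (assumed)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca, st * sa, a * ct],
        [st, ct * ca, -ct * sa, a * st],
        [0.0, sa, ca, d],
        [0.0, 0.0, 0.0, 1.0],
    ]

def matmul4(A, B):
    """Product of two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def forward_kinematics(dh_rows):
    """Chain DH rows (theta, d, a, alpha) from base to end effector."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, d, a, alpha in dh_rows:
        T = matmul4(T, dh_transform(theta, d, a, alpha))
    return T

# Hypothetical two-link planar arm: link lengths 1.0 m and 0.5 m.
planar_arm = [
    (math.pi / 2, 0.0, 1.0, 0.0),
    (-math.pi / 2, 0.0, 0.5, 0.0),
]
T_end = forward_kinematics(planar_arm)
end_effector_xyz = (T_end[0][3], T_end[1][3], T_end[2][3])
```

The last column of the resulting matrix holds the end effector position in the base frame; a 7 degree-of-freedom arm would simply supply seven rows.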

If we compare SSRMS with its predecessor the Shuttle remote manipulator system (SRMS) (Table 2), the improvement in performance is evident.

Table 2.

SRMS and SSRMS physical and performance parameters (adapted from Ref. [5]).

The special purpose dextrous manipulator (SPDM) is a bolt-on dual-arm fine manipulation system to the SSRMS comprising two 3.4 m long 7 degree-of-freedom arms with a positioning accuracy of 2 mm. Their joints are similarly configured with three shoulder joints (roll, pitch and yaw), an elbow pitch joint and three wrist joints (roll, pitch and yaw). Lighted cameras are mounted onto each forearm boom and at each end effector. These robot manipulators and their predecessor, the Shuttle RMS (SRMS), have been deployed for human servicing tasks in providing mobile but stable footings for astronauts and, latterly, more sophisticated manipulation tasks, primarily orbital replacement unit (ORU) exchange on the ISS.

One of the most sophisticated space robot platforms is the 150 kg Robonaut, which was delivered to the ISS as an intravehicular manipulator system in 2011 [6]. It is a teleoperated anthropomorphic design to impart astronaut extravehicular activity (EVA)-equivalence in a package that is similar in size to an EVA-suited astronaut with a reach of 0.7 m. It comprises two 7 degree-of-freedom arms with shoulder, elbow and wrist which mount two five-fingered hands, a head mounted onto a pan-tilt-verge orientable neck, a torso and a 6 degree of freedom (DOF) grappling leg. Robonaut’s hand comprises 14 DOF including a forearm, a 2 DOF wrist and a 12 DOF hand (2 × 3 DOF fingers, 1 × 3 DOF thumb, 2 × 1 DOF fingers plus a 1 DOF palm) mounted onto a 5 DOF arm. Each finger possesses a six-axis force sensor and force sensors at each joint to detect applied forces. Robonaut implements 16 sensors per joint and the forearm mounts 14 motors/harmonic drives, 12 circuit boards and the wiring harness. Its vision system mounted within the head comprises two binocular stereocameras with a fixed verge at arm’s length mounted onto a pan-tilt-verge neck with a pitch axis below the camera frame to permit forward translation of the neck. The payload capacity of each arm is modest at under 10 kg. In total, it has 350 sensors and 42 DOF. Robonaut uses a telepresence-based interface—a full immersion telepresence testbed—to allow it to perform EVA-type tasks such as target tracking, peg-in-hole tasks, tether hook securing, power tool handling, connector mate/demating, etc. [7]. The teleoperator wears cybergloves that measure displacement and bending of the operator’s fingers, a tracker to measure the position of the operator’s hand, arms and head relative to a fixed transmitter, and a head-mounted display to display remote camera views as 3D visual graphical overlays and digital force/torque measurement data. Voice commands are used to freeze and re-index Robonaut’s limbs to avoid drift. 
A 6 DOF force reflecting hand controller within a joystick provides force feedback to the operator. The Robonaut haptic sensor is mounted at several positions in the cyberglove that mirrors the remote tool stiffness/forces—it is based on an electrorheological fluid actuator which alters its viscosity under an electric field [8].

3. Robotic On-Orbit Servicing

Although astronaut on-orbit servicing (OOS) has progressed in leaps and bounds since the Solar Maximum Repair Mission (1984), that mission was nevertheless foundational in illustrating the difference between the designed-for-servicing ORU exchange and the not-designed-for-servicing main electronics box (MEB) exchange. Robotic on-orbit servicing has not made such advances in comparison to astronaut EVA capabilities. Robotic OOS is a class of mission in which a robotic servicer (chaser) spacecraft intercepts and performs servicing tasks on a client (target) spacecraft. This potentially involves a range of complexity of servicing tasks, from observing the state of the target, to translating the target into a new orbital state, to direct robotic manipulation of the target to repair or upgrade it. A particularly relevant aspect of OOS is its implications for military space situational awareness from ground telescopes [9,10]. In all the cases that we consider, the targets have been cooperative, differentiating them from the targets of active debris removal, which will be uncooperative.

There have been several technology demonstrator missions beginning with the foundational ETS-VII mission. The Japanese Engineering Test Satellite, ETS-VII (1997), successfully tested a 6 DOF 2 m long robotic manipulator with a three-fingered hand teleoperated from the ground under a time delay of 5–7 s, introduced by a tracking and data relay satellite system (TDRSS) relay, to demonstrate r-bar and v-bar automated rendezvous and docking tasks of a 248 kg chaser (Hikoboshi) to a 380 kg target (Orihime) followed by taskboard experiments including general peg-in-hole and ORU exchange-type tasks [11]. The DLR GETEX (German Technology Experiment) software that formed the backbone of the teleoperation tasks was based on task-level programming to upload motion sequences for autonomous execution [12]. It supplemented its 2 Hz video frame rate cameras with proximity rangefinders and implemented force feedback control. ETS-VII successfully demonstrated several on-orbit tasks: (i) remote observation; (ii) autonomous target chase and capture; (iii) ORU exchange including bolt fastening and target berthing; (iv) ORU electrical and fluid interfacing; (v) peg-in-hole insertion task based on wrist force/torque sensing; (vi) model-based predictive teleoperation with a 5–7 s time delay; (vii) flexible object handling, mating/demating of electrical connectors, truss joint connections, tightening of latches, etc.

In 2005, DART (demonstration of autonomous rendezvous technology) attempted to rendezvous and dock with another satellite, but its defective autonomous navigation system caused critical propellant consumption, forcing a mission abort. This failure was followed by a success: Orbital Express (2007) demonstrated a suite of on-orbit tasks, including freeflying capture, ORU exchange and refuelling by an autonomous servicing satellite (ASTRO) that mounted a 6 degree-of-freedom revolute manipulator that performed tasks on a serviceable satellite (NextSat) [13,14]. While the manipulator arm was in motion, ASTRO operated in free drift with a free-floating base. It corrected its attitude once arm motion was complete, so attitude and manipulator control were implemented independently in time. This was similar to the approach adopted on the preceding ETS-VII servicing mission demonstrator. This is a rather cumbersome approach. Once the compliant grapple fixture on NextSat was captured by the manipulator, the join was rigidised and arm controller parameters were adjusted to accommodate the new high inertia payload. Repeated demonstrations of autonomous rendezvous and docking, autonomous propellant transfer and changeout of batteries and onboard computers were performed. ASTRO used both visual and LIDAR tracking to compute relative position and velocity. It demonstrated the use of proximity station-keeping and the use of a robotic arm to grapple the target to minimise interaction forces that would be imposed by positive docking.

Geostationary equatorial orbit (GEO) is the most heavily populated orbit for commercial spacecraft: their orbital altitudes are identical and they offer 24 h ground-spacecraft communications links. An example of a promising (subsequently cancelled) servicing capability was Europe’s SMART-OLEV (orbital life extension vehicle) to implement life extension to GEO communications satellites [15]. It comprised a spacecraft based on the SMART-1 bus to mechanically latch onto a client satellite at the apogee engine nozzle to provide orbit transfer and/or station-keeping functions using electric propulsion. It required no interfacing with the client spacecraft, which operates normally, other than the mechanical dock with its apogee engine capture tool. It had heritage from the earlier cancelled ConeXpress [16] (formerly SLES [17]), a service module to provide 12 years of extended life support to aging geostationary satellites. Using Hall thrusters, it would approach the target satellite until the capture tool on a retractable boom was inserted into the thrust cone of the inert apogee kick engine without the use of robotic manipulators. The apogee engine nozzle, common to almost all GEO satellites for circularising into GEO from elliptical geosynchronous transfer orbit, is used as a docking port by inserting and locking a DLR-type capture probe in the nozzle’s throat and then retracting the probe until the two spacecraft are locked together. The DLR capture tool includes six sensors for feedback on the relationship between the capture tool and the apogee motor volume and locks when fully inserted using a set of spreading pins. An alternative is the launch adapter ring, the sturdiest part of most spacecraft, with which to grapple the spacecraft with manipulators and tooling. The American experience has been similar to the European experience in cancelled robotic OOS missions, the most notable being the Ranger Robotic Satellite Servicer. 
The next robotic servicing mission is to be Robotic Servicer for Geosynchronous Satellites (RSGS), currently slated for launch in 2021 (private communication 2018).

The chief hindrance to the adoption of the OOS philosophy in spaceflight has been the reluctance to implement widespread adoption of modular and serviceable spacecraft designs with standardised interfaces capable of sustaining kick loads imposed by servicing tasks and amenable to modular repair or simple disassembly. Design for servicing requires ORUs, which are modular boxes with standardised grapple fixtures and connectors that serve as containment packages for functional subsystems; wholesale module replacement is much cheaper than entire satellite replacement and easier than complex repair tasks. Typical servicing tasks involve the exchange of ORUs through simple mechanical procedures afforded by the standardised interfaces. Indeed, SSRMS itself comprises ORUs of two booms joined by an elbow pitch joint. Each end has identical roll-pitch-yaw wrists mounting identical latching end effectors. Similarly, SPDM bolts onto the SSRMS using only four bolts. SPDM’s standard end effector is an ORU/tool changeout mechanism (OTCM) which incorporates a retractable 7/16th socket wrench. Pistol-grip EVA power tools for astronauts were designed to transfer kick loads from the client to the servicer; Robonaut hands are also designed to handle the same EVA power tools as the astronaut. However, for the final grapple, emphasis in space rendezvous and docking has been on latching. A lightweight quick-change mechanism with a novel locking mechanism with high repeatability, reliability and adaptability is described in [18]. The most representative example of a serviceable spacecraft design is the Multimission Modular Spacecraft (MMS) which was the basis of the Solar Maximum Mission spacecraft (1980), Landsat 4 (1982), Landsat 5 (1984), the Upper Atmosphere Research Satellite (1991) and the Extreme Ultraviolet Explorer (1992). 
Although the functional subsystems were encapsulated into ORUs, the wiring harness and certain electronics boxes beneath thermal blankets were not designed for serviceability. The Hubble Space Telescope (HST, 1990) was also designed for serviceability though it was not based on the MMS platform. As HST has amply demonstrated with five servicing missions, serviceability provides not only repair capability but also upgradability with superior instruments. Nevertheless, no serviceable spacecraft have been designed and launched subsequent to the 1980s. The trend has been towards throwaway cubesats, but large spacecraft remain, and spacecraft keep failing [19]. Furthermore, each satellite failure degrades the space environment by introducing either irreparable junk or a spacecraft of diminished capacity or longevity that must be replaced early.

OOS appears to have reached an impasse—much of the robotics technology has been developed, but there has been little in the way of commercial development. However, active debris removal has emerged as another application of the same technology which could potentially provide the final leverage to OOS as a space infrastructure capability. OOS itself also acts as a debris mitigation strategy—refuelling and servicing spacecraft at end-of-life will reduce the rate of creation of space junk using freeflyer tankers in the geostationary ring (perhaps supplying LH/LOX extracted from lunar water). Defunct parts may be replaced and/or upgraded, although this requires supply from Earth (though supply from lunar in situ resources remains an intriguing future possibility [20]). Still-functional parts such as antennas may also be salvaged from irreparable spacecraft and installed on reparable ones. Re-usable infrastructure hardware in the form of serviceable multi-purpose platforms may similarly be serviced and leased for on-orbit services (communications, navigation or remote sensing). However, here we are considering the direct application of space manipulators to active debris removal. Indeed, this scenario eases some of the more complex manipulations, such as the handling of thermal blankets and tape, which are considered the most difficult of manipulations, but these are not required for debris handling. Nevertheless, the requirement for interception, robust grappling, passivation and attachment of de-orbit devices remains.

4. Space Debris Mitigation

The space debris problem has provided an urgent impetus to space manipulator-based missions that have traditionally focussed on on-orbit servicing of satellites. There have been around 5000 successful rocket launches from Earth into orbit and beyond since the dawn of the space age in 1957 (most launchers carry more than one spacecraft). There are currently 1382 active spacecraft in orbit around Earth (of which 340 reside in or near GEO): 446 American, 135 Russian, 132 Chinese and the rest others. Of the additional 15,888 (2015) pieces of orbital debris larger than 10 cm in size (dominantly in LEO), 2682 are defunct spacecraft, 1907 are spent upper stages, and 11,299 are other fragments [21]. This does not include the estimated 500,000 untrackable debris pieces in the range 1–10 cm (similar to or larger than the calibre of many infantry weapons) and a further 10⁸ pieces down to 1 mm in size from paint chips to solid droplets, 98% of which is artificial space debris [22]. Most of the debris has been caused by the first spacefaring nations, predominantly the USA and USSR. In LEO alone, there are an estimated 150 million fragments of human-created junk less than 1 mm in size, all travelling at 8–12 km/s (for comparison, a rifle bullet travels at only 1.2 km/s). Smaller debris ~1 mm in size can be protected against through Whipple shields, though at a cost in mass that is suitable only for large and human-rated spacecraft. However, larger debris ~2–3 mm in size or larger cannot be shielded against. The most debris-polluted orbits reside at 800–1000 km altitude, beyond where natural clearing by atmospheric-induced orbital decay can operate. It is estimated that there are also 31 non-operational nuclear reactors which used NaK coolant between 700 and 1500 km in altitude from Russian radar ocean reconnaissance satellites (RORSAT) launched in 1967–1988. 
In 1977, the Cosmos-954 reconnaissance satellite, carrying an onboard nuclear reactor supplied with 50 kg of uranium-235, began behaving erratically and re-entered Earth’s atmosphere without ejecting its reactor into a safe orbit as intended and scattered radioactive debris over a 600 km track in northern Canada. Although no injuries resulted, the cost of the subsequent clean-up operation was considerable. Although the probability of injury from re-entered debris is very small, in 1997, a piece of a spent Delta rocket struck a woman in Tulsa, Oklahoma (though without injuring her), demonstrating that physical injury on Earth from space debris is plausible if remote. In LEO, the probability that a 10 m² satellite will be impacted by trackable debris is 1 × 10⁻⁴/y, which increases to 2 × 10⁻³/y for smaller untrackable debris. The estimated risk of a single debris collision in GEO between tracked objects is around 3% over a 30-year mission lifetime. As debris collides with satellites, they fragment into clouds of further debris. The orbital debris population is tracked and mapped by the US Space Surveillance Network; detectable objects are larger than 10 cm in LEO and larger than 1 m in GEO, though new telescopes being constructed will reduce these limits significantly.

It has become common operational practice to implement manoeuvres of active spacecraft to avoid collision with debris; for example, the ISS was manoeuvred into a 1 km higher orbit in 2001 to avoid collision with a Russian SL-8 upper stage (launched in 1971), and since then has had to be manoeuvred on average twice every year. In 1996, the boom of the French satellite Cerise was struck by debris from an exploded Ariane launcher shroud, although the mission was recovered through software workarounds. Indeed, NASA has developed a collision risk assessment process for all its high-value robotic spacecraft to prevent loss by collision with debris [23]. Satellite operators are being forced to undertake evasive manoeuvres on an increasingly frequent basis at the cost of scarce fuel: the defunct Telstar 401 and Galaxy 15 satellites have threatened operational satellites on several occasions.

Although there is a natural atmospheric cleansing process operating in LEO below 800 km altitude, it is the LEO population in 800–1000 km sun-synchronous polar orbits at around 86–110° inclination that is close to the Kessler limit, which is expected to be reached by 2055. The Kessler limit is the point beyond which the debris population becomes self-perpetuating and grows uncontrollably [24]. The average growth rate of debris over the past 50 years has been around 300 objects/year but this is accelerating. Even if no further satellites were launched, the number of objects larger than 10 cm in the most populous ring (900–1000 km) will treble over the next 200 years due to debris-debris collision, increasing the collision rate by 10 times. The primary culprits for accelerating attainment of the Kessler limit were two specific events, one deliberate and the other accidental:

- (i) In 2007, a Chinese anti-satellite test on its defunct meteorological satellite Fengyun FY-1C generated 2400 debris pieces larger than 10 cm and 35,000 pieces below the resolution limit at 860 km altitude, too high for natural atmospheric cleaning, increasing the space debris population by 30%. By contrast, a US ship-mounted Aegis ballistic missile was launched in 2008 in response, destroying one of its ailing spy satellites, USA 193, at a low altitude of 250 km to prevent uncontrolled re-entry to Earth and to maximise the rate of atmospheric sweeping and so minimise debris.

- (ii) In 2009, a 10 km/s collision occurred at latitude 72° N between the operational Iridium 33 satellite (at 86° inclination) and the decommissioned Cosmos 2251 (at 74° inclination). This generated two clouds of debris (598 Iridium fragments and 1603 Cosmos fragments larger than 10 cm) at 790 km altitude.

Russian debris also collided with and terminated the mission of Ecuador’s first and only satellite, Pegasus, in 2013. Large objects can collide generating large amounts of debris; such breakups are estimated to occur at a rate of around 4–5 fragmentation events per year. If a 10 cm³ debris fragment collides with a 1200 kg spacecraft, ~10⁶ fragments of ~mm size can be produced. Similarly, discarded rocket stages with residual fuel can explode generating large numbers of debris fragments. The collisional cascading effect of the Kessler syndrome will soon cause entrapment of the Earth.

Space debris has become a significant problem at all satellite-populated orbits, including GEO (Table 3). The primary source of debris resides in GTO (geostationary transfer orbit), within which some 239 objects intersect the GEO ring. Within GEO itself, which represents the greatest collision hazard, there are 1036 large tracked objects, only 340 of which are operational. However, in addition to these 1036 large objects, a significant debris population of some 2000 small-scale objects greater than 10 cm in size, presumably generated by fuel explosions of spent upper stages, has been detected in GEO and GTO [25]. The most heavily spacecraft-populated longitudinal slots are around 75° East longitude (over India) and 105° West longitude (over the Pacific), primarily for telecommunications. The chief problem is that uncontrolled GEO platforms drift to these stable regions due to solar and lunar gravitational forces: between 1997 and 2003, 34 satellites were abandoned (mostly Russian), of which 22 librate over India and 10 over the Pacific. The probability of collision is given by [26]:

where ρ = spatial density (n/km³), v_rel = relative collision velocity (km/s), A = collision cross-section of satellite (km²) and Δt = temporal duration of risk. Unlike at LEO, there is no natural cleansing mechanism at GEO.
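A form consistent with the symbols listed for the collision probability is the kinetic-gas (Poisson) model commonly used for debris flux, P = 1 − exp(−ρ·v_rel·A·Δt); treating this as the expression of [26] is an assumption. The Python sketch below evaluates it. The spatial density is a hypothetical value chosen only so that a 10 m² satellite at a relative velocity of 10 km/s reproduces the order of the 1 × 10⁻⁴/y figure quoted earlier for trackable debris in LEO.

```python
import math

SECONDS_PER_YEAR = 3.156e7  # approximate length of one year in seconds

def collision_probability(density_per_km3, v_rel_km_s, area_km2, duration_s):
    """Poisson collision probability P = 1 - exp(-rho * v_rel * A * dt).

    Assumed model: the debris is treated as a uniform gas of number
    density rho swept by a cross-section A at relative speed v_rel.
    """
    expected_impacts = density_per_km3 * v_rel_km_s * area_km2 * duration_s
    # -expm1(-x) equals 1 - exp(-x) but stays accurate for very small x.
    return -math.expm1(-expected_impacts)

# Hypothetical inputs: 10 m^2 = 1e-5 km^2, 10 km/s, one year of exposure.
p_one_year = collision_probability(
    density_per_km3=3.17e-8,
    v_rel_km_s=10.0,
    area_km2=1.0e-5,
    duration_s=SECONDS_PER_YEAR,
)
```

For small exponents the result is close to the product ρ·v_rel·A·Δt itself, which is why annual risks of this size scale almost linearly with cross-section and exposure time.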

Table 3.

Debris population in different utility orbits.

The UN General Assembly COPUOS (Committee on the Peaceful Uses of Outer Space) recommends guidelines to minimize further debris generation [27]. The primary means for debris mitigation must be the prevention of on-orbit breakup and the de-orbit or re-orbit of dysfunctional satellites. There are three strategies for the mitigation of space debris [28]: (i) prevention of on-orbit breakup; (ii) reduction of object creation during the mission; (iii) removal of objects after mission completion. It is the last option that concerns us here, for which there are four further options [29]: (i) built-in self-disposal into graveyard orbits; (ii) robotic sweepers to remove small debris; (iii) retrieval of large objects such as spacecraft and spent stages; (iv) re-use of spent hardware (on-orbit servicing). To minimise debris in GEO, it is recommended by the International Telecommunication Union (ITU) that space assets are self-disposable and manoeuvred into a graveyard orbit at a minimum altitude h above GEO given by:

where C_R = solar radiation pressure coefficient, A = spacecraft cross sectional area and m = spacecraft mass. This corresponds to an altitude 300–500 km higher than GEO, and the manoeuvre costs the propellant equivalent of approximately 3 months of income-generating mission operations. Among GEO satellites, compliance is high: 80% of 160 end-of-life (EOL) satellites were boosted into disposal orbits close to 300 km above GEO despite there being no legislation [30]. However, early mortality can prevent such boosting, e.g., Skynet 4b was boosted to only 150 km altitude above GEO. The GSV (geostationary servicing vehicle) was a proposed service concept for the disposal of spent satellites into graveyard orbits 245–435 km above GEO [31]. However, the current GEO graveyard will not be sustainable as its population grows. It has been proposed that GEO satellites might be delivered to a “Necropolis” at 36,386 km altitude (600 km above GEO). There, an 8 tonne truss-based stack (terminus) with a 12 m tower would reside, to which dead comsats are transported and attached by a 2.4 tonne ion engine-propelled tug spacecraft (hunter) with 0.5 tonnes of xenon propellant [32,33]. End-of-life comsats would inject themselves into this new graveyard orbit, but failed comsats would be ferried. To prevent the explosions in upper stages that generate large numbers of fragments, a European code of conduct recommends that European launcher upper stage tanks be fully vented [34].
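For the minimum graveyard altitude discussed above, the form widely quoted in IADC guidelines and ITU-R recommendations, and consistent with the symbols C_R, A and m, is h = 235 km + 1000·C_R·A/m, with A/m in m²/kg and h in km; reading it as the intended expression is an assumption. The Python sketch below evaluates it for a hypothetical comsat (the coefficient, area and mass are illustrative values only).

```python
def graveyard_altitude_km(c_r, area_m2, mass_kg):
    """Minimum re-orbit altitude above GEO in km (assumed IADC/ITU-R form).

    h = 235 + 1000 * C_R * A / m, with A in m^2 and m in kg.
    The 235 km term covers the protected GEO zone plus gravitational
    perturbations; the second term allows for solar radiation pressure.
    """
    if mass_kg <= 0:
        raise ValueError("mass_kg must be positive")
    return 235.0 + 1000.0 * c_r * area_m2 / mass_kg

# Hypothetical spacecraft: C_R = 1.5, 40 m^2 cross-section, 1000 kg at EOL.
h_example = graveyard_altitude_km(c_r=1.5, area_m2=40.0, mass_kg=1000.0)
```

With these illustrative inputs the result is about 295 km, in line with the observed disposal orbits close to 300 km above GEO; lighter spacecraft with large solar arrays need a higher boost.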

To minimise debris in LEO, it is recommended, but not mandatory, to ensure that space assets are removed within 25 years of EOL (only 14% and 8% of LEO spacecraft complied with this recommendation in 2010 and 2014 respectively). Perhaps even a 50-year removal timescale might suffice if it were observed [35], but this has never been realised. There have been recent proposals for large constellations of thousands of small satellites, such as up to 12,000 proposed by SpaceX. Such constellations will require much more aggressive de-orbiting strategies than the current 25-year rule. DAMAGE (debris analysis and monitoring architecture to the geosynchronous environment) indicates that there is a nonlinear increase in the number of catastrophic collisions with constellation size [36]. An increase in cross section from 1 m² to 6 m² resulted in a much higher collision rate, but extension of satellite lifetime from 3 to 10 years reduced debris generation by 30%. If the strategy of satellite removal within 25 years were enacted in large constellations in LEO, it would obviate the mitigation effects of this strategy, and the prospects for extending it appear bleak [37]. Hence, self-disposal appears to be ineffective at LEO.

Removal of debris is the only effective solution, but small-scale removal of debris will likely be ineffective [38]. Debris sweeper concepts are based on the use of large vanes rotating like a windmill, but such solutions require very large areas. The only effective solution is the active removal of large space debris by deorbiting, especially in critical sun-synchronous orbits, to prevent them from collisionally fragmenting [39]. It has been determined that it would suffice to remove 5–10 large pieces of debris per year to prevent debris population growth in LEO [40]; these could begin with the large 26 m long Envisat spacecraft and 20 Zenit upper stages, especially in polar orbits close to 1000 km altitude [41]. In addition, some 295 identical SL-8/Kosmos upper stages reside in near circular orbits clustered around 760 km/74°, 970 km/83° and 1570 km/74°. This strategy is sensitive to several conditions such as launch rates, compliance with debris mitigation measures, etc. [42]. A strategy that includes both post-mission disposal and active debris removal offers the best approach: a reduction of disposal timescale by one year reduces the annual number of collisions by 0.53 and the removal of one object reduces the annual number of collisions by 2.94 when employed synergistically, i.e., one active debris removal equates to a reduction in post-mission disposal of 5.5 years [43].
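The 5.5-year equivalence quoted from [43] follows from the ratio of the two collision reductions. The short Python sketch below makes the arithmetic explicit; the function and variable names are illustrative only, and the linear trade-off it implies is an assumption that holds only near the operating point studied in [43].

```python
def equivalent_disposal_years(collisions_avoided_per_removal,
                              collisions_avoided_per_year_shortened):
    """Years of post-mission disposal shortening matched by one removal."""
    return collisions_avoided_per_removal / collisions_avoided_per_year_shortened

# Figures quoted in the text: 2.94 per removed object, 0.53 per year shortened.
years_per_removal = equivalent_disposal_years(2.94, 0.53)
print(f"One removal is worth about {years_per_removal:.1f} years of disposal shortening")
```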

Issues that must be addressed during close proximity operations include minimisation of breakup and debris generation during debris removal. The robotic scenario comprises several well-defined phases: (i) approach: the chaser acquires a position around 10–25 m away, aligned to the target, with manipulators stowed; (ii) deployment: manipulators are deployed to capture the target by specific grapple points; (iii) grasp: manipulators engage the target at the grapple points; (iv) post-grasp: manipulators are locked and detumbling manoeuvres are performed; (v) de-orbit: an apogee engine kit is attached and engaged for a de-orbit manoeuvre that drops perigee to <650 km altitude (for a 25-year decay by atmospheric drag). A representative scenario has been described in [44]. H-bar approach and approach along orbit motion are passively unsafe, favouring an approach against orbital motion [45]. Passive approaches to de-orbiting debris exploit perturbing forces through aerodynamic surfaces, electrodynamic tethers, etc. A passive strategy that exploits solar radiation pressure and Earth’s oblateness could be adopted for MEO spacecraft such as global navigation satellites but requires high area-to-mass ratios except for satellites in Molniya orbits [46]. A chaser satellite mounting a robotic arm, robotic tentacles, a harpoon or a deployable net has been the commonest proposal for debris removal. The robotic arm and harpoon require precise targeting, and the robotic arm and tentacles require close proximity to the target. ROGER (Robotic Geostationary Orbit Restorer) was a net-based concept for GEO satellite recovery to reduce the incidence of large space debris [47]. Two potential capture mechanisms were envisaged (a net capture or a tether-based gripper, with the former favoured) to stabilise the target and dampen its relative rotation to zero. The expandable net capture system is based on the deployment of four flying weights to pull out the net. 
The tether gripper system is a tether-deployed free-flyer front end. ESA’s e.Deorbit project before its cancellation was to involve robotic arms to capture its target [48] while the EC’s removeDEBRIS project will explore harpoons and nets for de-orbiting at LEO. Tethered harpoons with conical tips have been proposed for attaching a chaser to debris with a safe standoff distance, with perforation energy given by De Marre’s formula: $E = k d^{1.5} b^{1.4}$, where $k = 37 \times 10^{7}$ for aluminium, d = cone base diameter, b = target thickness. Harpoon tests at low temperature indicate brittle fracture in the target but without spallation or fragmentation into the internal space [49]. Tethered nets, on the other hand, can damage spacecraft appendages and so release debris. Harpoons and nets are the most likely capture mechanisms to cause breakage and the creation of more debris. Furthermore, both are remote capture mechanisms that are not compatible with tumbling spacecraft targets. Towing with flexible tethers introduces problems with employing thrusters to accelerate/decelerate. Mechanical tethers may be employed to re-orbit debris into disposal orbits [50,51] but they are impractically long. This suggests that the e.Deorbit approach was more versatile than that of removeDEBRIS.

Debris mitigation is a role to which robotic on-orbit servicers can naturally adapt themselves. Robotic capture is the most controllable approach in which the debris is captured, manoeuvred to a lower orbit (at LEO) or graveyard orbit (at GEO) and/or a de-orbit device attached (such as propulsive units, tethers, sails or ballutes). Capture requires that the robotic spacecraft control its orbit and attitude to reduce the relative motion between the chaser and the target. A Kalman filter algorithm must estimate the target motion in order to plan the gripper trajectories for grappling with minimum impact [52]. Many of the critical dynamic parameters of the target, specifically its kinematic variables, can be obtained by estimation through the Kalman filter based on visual data. CAD models can provide accurate estimates of mass and moment of inertia, but it is feasible to estimate these values, if only approximately, once shape has been determined visually, by assuming a generic spacecraft model with assumptions regarding mass distribution; for a common launch shroud, this should be feasible. Critical to robotic capture is the implementation of force/torque control to acquire and brake the debris target. It has been proposed that electrostatic torques may be employed to detumble the target without physical contact to <1°/s from the typical 30°/s tumbling [53]. This involves controlling the servicer’s electrostatic potential using an electron gun to beam charges to the closest feature on the rotating target. However, the feasibility of this approach has yet to be demonstrated. An extendible brush of PTFE bristles at the tips of the robotic fingers may be employed to contact and tap the surface of the target to reduce high rotation rates to a manageable level for robotic capture [54]. The frictional force for a bristle model is defined by:

where δ(t) = bristle displacement, k = bristle stiffness, δmax = maximum bristle displacement, μ = surface friction; the model also depends on the relative tangential velocity between bristle and surface. During braking, joint error control may be based on impedance control:

where $F_B$ = braking force on target, $\theta_i$ = joint angle, $K_\theta$ = joint stiffness, $J^T$ = Jacobian transpose, which relates stiffness at the end effector to stiffness at joints. During capture, an active compliance control law is given by:

where Ii = joint i inertia, τi = measured joint torque, bi = joint viscosity coefficient. It has been determined that for handling and stabilising upper stages during de-orbit, the appropriate clamping points are at the payload fairing adaptor ring, which is designed to tolerate clamping forces and torques [55]. Two or three manipulators permit distribution of grappling forces/torques equally around the ring. For example, the DLR arm can capture a 7-tonne satellite spinning at 4°/s without exceeding its maximum torque limit of 120 Nm. Post-capture detumbling can exploit the kinematic redundancy of the spacecraft-mounted manipulator [56].

Active de-orbiting of debris requires propulsive capabilities attached to the target to ensure disposal. Re-entry may be controlled or passive: controlled direct re-entry is more efficient than passive uncontrolled re-entry, but the latter is simpler. Controlled de-orbiting is essential to remove larger pieces of debris ~500–1000 kg which could potentially survive re-entry. Nominally, this requires robotic attachment of a propulsion stage to the debris to de-orbit it. Contactless methods of disposing of debris include ion beam shepherding. This involves generating beams of ions from two sets of ion engines aligned along the same axis but pointing in opposite directions. The chaser directs one ion beam onto the debris to generate thrust on it. The reaction thrust on the chaser is cancelled by the other engine, which generates an opposing thrust to maintain a constant distance to the debris. Solar concentrators have been proposed to deflect asteroids by inducing thrust from ablated material, a technique that may be adapted to active debris disposal [57] but requires dynamic stability. Electrodynamic propulsion offers a potentially propellantless option for debris transport [58]. A 1–5 km long conducting electrodynamic tether which mounts solar panels and rotates 6–8 times every orbit generates current which interacts with the Earth’s magnetic field. Electrodynamic thrust is generated when current flows through a conductor in the Earth’s geomagnetic field. The conductor must close the current loop through the ambient plasma by electron emission through hollow cathodes and electron collection to the bare conducting cable. The tether is attached to the debris and uses electrodynamic drag generated by Lorentz J × B forces to remove debris from low Earth orbit [59]. Lorentz forces allow it to manoeuvre by altering the current to match the orbit of the target to be captured by a robotic hand or net.
ElectroDynamic Debris Eliminator (EDDE) uses a long conducting tether to manoeuvre in the Earth’s magnetic field powered by solar arrays but consuming no propellant. Small nets at either end of the tether, with solar arrays attached at the middle, catch debris and release it at lower altitude to increase the rate of orbital decay. A dozen 100 kg EDDE vehicles mounted onto one launch adaptor ring can remove 80% of all 2 kg+ objects in LEO (concentrating on 71–74°, 81–83° and sun synchronous orbits) in 7 years (1000 tonnes in total). An electrodynamic tether linking the chaser to target debris captured by a compliant grappling end effector at the end of the tether is a variation on the concept [60]. However, electrodynamic tethers are still subject to a significant probability of being severed by debris, though this can be partially obviated through double line tethers [61]. A deployable large-area drag-sail or ballute may be used to de-orbit debris from LEO below 1000 km altitude [62]. SSTL’s TechDemoSat-1 was launched in 2014 fitted with a deployable de-orbit sail acting as a drag brake to orient the satellite target for de-orbiting and re-entry disposal. However, deployed drag devices introduce the potential for further collision in crossing multiple orbits with a wide area membrane while rocket apogee engines impose a prohibitive propellant and mass requirement.
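
The electrodynamic thrust described above follows from the Lorentz force on a current-carrying conductor, F = I (L × B). The following is a minimal sketch under simplifying assumptions: a straight tether, a uniform current and a uniform field. The current, tether vector and field strength are hypothetical values chosen only to show the order of magnitude, not figures from the cited studies.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def tether_force(current_a, tether_vec_m, b_field_t):
    """Lorentz force F = I (L x B) on a straight tether with uniform current."""
    return tuple(current_a * c for c in cross(tether_vec_m, b_field_t))

# Hypothetical values, for illustration only.
current = 1.0                    # tether current, A
tether = (5000.0, 0.0, 0.0)      # 5 km tether along the local vertical, m
b_field = (0.0, 0.0, 3.0e-5)     # field normal to the orbit plane, T

force = tether_force(current, tether, b_field)
magnitude = math.sqrt(sum(c * c for c in force))
print(force, magnitude)
```

With these assumed numbers the force is a fraction of a newton, which is why electrodynamic manoeuvres are slow but propellantless; reversing the current direction reverses the force.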

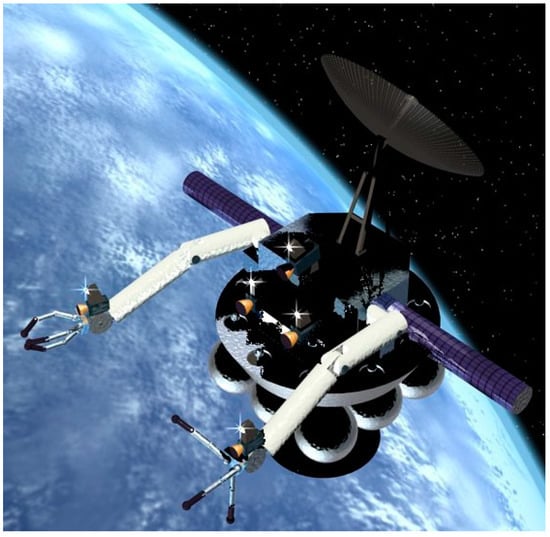

Nevertheless, the most plausible solution for de-orbiting is to either attach a dedicated propulsive stage to de-orbit the debris sacrificially or to employ a reusable, refuellable robotic system that acquires the debris and releases it in a disposal orbit. It has been determined that a single 2000 kg robotic spacecraft (of which 1400 kg is propellant) could remove 35 upper stage rocket bodies from 700–900 km polar orbit using solid rocket de-orbit kits over 7 years with 8 resupply missions [63]. This is similar to the ATLAS concept introduced later (Figure 1). Scheduling of consecutive debris removal operations is an NP-hard travelling salesman problem which has a branch-and-bound solution to minimise the propellant cost and manoeuvre time that is tractable for 5 removals but not for 10 [64]. A branch-and-prune strategy using low-thrust propulsion may be more efficient [65]. A multi-servicer approach invites ant colony optimisation routing and coordination through auction bidding [66]. In GEO orbit, the optimal schedule is to manoeuvre in an anti-orbit direction for minimum Δv [67]. Approximately, 400–500 m/s manoeuvres are required to acquire each target and 250 m/s is required for de-orbiting the upper stage rocket body. It appears that the robotic servicer concept with mounted manipulators is the most viable for active debris removal.
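
The combinatorial growth behind the scheduling problem can be made concrete with a small sketch. It uses exhaustive search rather than the branch-and-bound or branch-and-prune methods of [64,65], and the Δv cost matrix is hypothetical (node 0 is an assumed servicer parking orbit, nodes 1–3 are assumed targets).

```python
from itertools import permutations
from math import factorial

def cheapest_sequence(cost):
    """Exhaustively search visiting orders of targets 1..n-1, starting at node 0."""
    targets = range(1, len(cost))
    best_order, best_cost = None, float("inf")
    for order in permutations(targets):
        path = (0,) + order
        total = sum(cost[a][b] for a, b in zip(path, path[1:]))
        if total < best_cost:
            best_order, best_cost = order, total
    return best_order, best_cost

# Hypothetical transfer costs in m/s between the servicer (0) and three targets.
delta_v = [
    [0, 400, 450, 500],
    [400, 0, 420, 480],
    [450, 420, 0, 410],
    [500, 480, 410, 0],
]

order, total = cheapest_sequence(delta_v)
print(order, total)

# Number of candidate orderings for 5 and for 10 removals.
print(factorial(5), factorial(10))
```

The number of orderings grows factorially with the number of targets, which is consistent with the observation that exact methods are tractable for 5 removals but become impractical for 10.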

Figure 1. Dual manipulator servicer ATLAS (advanced telerobotic actuation system) concept.

5. Space Manipulator Operations—Evolution from Teleoperation to Autonomy

All robotic systems employ cameras for visual feedback, and there are several common camera configurations. The camera configuration for a space robot typically comprises at least one mast-mounted binocular camera mounted onto the spacecraft for a global view of the workspace and another camera mounted onto the elbow and/or wrist (eye-in-hand) for close-up observation of manipulation tasks. For example, SRMS mounted one pan-tilt camera at the elbow and another at the end-effector; similarly, SSRMS mounted one pan-tilt camera at each side of the elbow and one pan-tilt camera at each end-effector. Vision-based relative navigation for inspecting uncooperative satellites have been addressed using a stereocamera and three-axis gyroscopes [68]. On-orbit servicing manipulation requires more complex vision processing under variable illumination conditions, however. One plausible assumption on servicing satellite targets is that partial cooperation with attitude control may be available but no visual markers are likely to be discernible [69]. The vision system will have to employ template recognition methods derived from CAD files. However, an unscented Kalman filter can estimate shape and relative attitude, position and velocity of a satellite target by building a 3D map of points from features detected by a monocular camera and LIDAR on the chaser [70]. This suggests that template-matching with a priori data may be dispensed with, but a priori model data does yield superior accuracy [71]. There are multiple transforms between coordinate frames to convert between cameras, pan-tilt units and the end effector [72]. Pose-based visual servoing provides closed-loop feedback control from the error between desired and actual poses in images of the 3D workspace. Pose-based visual servoing is based on the image Jacobian that relates the 3D object pose to the 2D image. 
The target coordinates (xt,yt,zt) are related to equivalent camera coordinates (xc,yc,zc) by a 3 × 3 rotation (direction cosine) matrix. A pinhole camera model relates 3D camera coordinates (xc,yc,zc) to image projection coordinates (x,y):

$$x = f\,\frac{x_c}{z_c}, \qquad y = f\,\frac{y_c}{z_c}$$

where f = camera focal length. To compensate for jittering and other perturbations, an extended Kalman filter may be employed using the pinhole camera model [73]. In image-based visual servoing, only image coordinates are computed. Feature-based servoing is the most common form of visual servoing [74]. Features begin with object image definition: the “snake” (active contour model) is a deformable curve that extracts object shape by shrink wrapping around object contours on an image based on energy minimisation [75]. The energy minimum lies on the edges of the object image and requires the computation of gradient masks. Feature matching may be achieved through the principle of maximum entropy. To robustify image-based visual servoing, the extended Kalman filter may be employed to estimate feature motion in images [76]. Kalman filters are suited to visual object tracking [77] and an adaptive extended Kalman filter has been applied to visual servoing of non-cooperative satellite targets for manipulator capture [78]. Intensity-based servoing offers robustness without the need for feature extraction or pose estimation [79]. Generalised angle representation, which is translation, rotation and scale invariant, offers a rapid means of computing shape [80]. Laser scanning offers a means to supplement vision with range-finding uncorrupted by scene illumination [81]. The photonic mixer device (PMD) camera exploits phase shift measurements (rather than intensity) to extract range and generate 3D images, making it ideal for spacecraft pose estimation at short range during capture [82].
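
The pinhole relation between camera coordinates and image coordinates can be sketched in a few lines. This is an illustrative example only: it assumes the standard pinhole form x = f·xc/zc, y = f·yc/zc, a pure rotation between target and camera frames as described in the text, and hypothetical values for the point and focal length.

```python
def rotate(rotation, point):
    """Apply a 3x3 rotation (direction cosine) matrix to a 3-vector."""
    return tuple(sum(rotation[i][j] * point[j] for j in range(3)) for i in range(3))

def project(point_cam, focal_length):
    """Pinhole projection of camera-frame coordinates onto the image plane."""
    xc, yc, zc = point_cam
    if zc <= 0.0:
        raise ValueError("point must lie in front of the camera")
    return (focal_length * xc / zc, focal_length * yc / zc)

# Hypothetical example: target and camera frames aligned, 50 mm focal length.
identity = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
target_point = (1.0, 2.0, 10.0)   # metres, expressed in the target frame
focal_length = 0.05               # metres

image_xy = project(rotate(identity, target_point), focal_length)
print(image_xy)
```

Because depth zc appears in the denominator, range information is lost in the projection, which is one reason the text pairs cameras with LIDAR or model-based estimation.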

Careful attention must be paid to human-computer interfacing (HCI) technologies for robotic servicer missions [83]. HCI defines the nature of ground station support to the servicer and is critical to the function of the robotic spacecraft. In teleoperative mode, the ground operator controls the remote robot directly through bilateral reflection of operator movements, e.g., Lunokhods 1 and 2 on the Moon. End-effector (egocentric) referenced control gives enhanced performance over fixed world (exocentric) referenced control [84]. A minimum time of 20 min is required to capture a tumbling target, which will require more than one ground station for LEO operations, as these typically have an 8-min ground contact window. Alternatively, a GEO relay such as TDRSS may be used, but this introduces significant signal time delays. The principal challenges to teleoperative on-orbit servicing missions are time delay and jitter [85]. Time delays in teleoperation of a slave manipulator at a remote site by a human operator (master) invoke move-and-wait behaviours in which human input is followed by waiting for the response before initiating further corrective inputs. This is inefficient. PID controllers are sensitive to time delays due to the integral term as it reacts too quickly to errors. The Smith predictor is a model-based predictive controller [86]. It feeds back the predicted response immediately and the prediction error once the real response has been measured after the time delay. There are two feedback loops: a normal outer feedback loop that feeds the output of the plant back to the input subject to time delay, and an inner feedback loop which predicts the current unobserved output of the plant. The Smith predictor at the master (operator) side in relation to the slave (remote) side is given by:

where Δt = two-way time delay, $G_s(s)e^{-s\Delta t}$ = delayed plant, $\hat{G}_s(s)$ = predicted plant model without delay, τ = plant time constant. Stability requires an accurate model of dynamics to minimise prediction errors and stability is not guaranteed unless model errors are zero. The Smith predictor compensates for known constant time delays of short duration. It is sensitive to mismatches between the actual $G_s(s)$ and estimated $\hat{G}_s(s)$. A Kalman filter may be employed to reduce the estimation error. The most common approach to overcoming the problem of time delays is to employ predictive graphical displays [87,88]. Predictive displays involve graphical models of the slave robot and its environment which project its predicted behaviour to compensate for time delays. The higher the fidelity of the model to reality, the better the prediction, but this has computational costs. It has been demonstrated with the ETS-VII satellite that 7 s time delays in the ground teleoperation control loop can be accommodated readily through predictive graphics based on environmental models [89]. However, this assumes an environment that exhibits only very low-speed dynamic behaviour and is highly predictable. ETS-VII’s environment was a controlled target, but this will not be so for active debris removal. Reduction of the 5–7 s time delay in GEO-based teleoperation may be achieved through dedicated high-capacity lines from the ground station directly connected to the antenna (rather than across traditional internet routing and protocol overheads), which can reduce round trip time delays to GEO to <1 s. There will still be time delays, however, for LEO operation and beyond through TDRSS. Time delays between the transmission of the commanding signal to the remote robot and the return of feedback to the operator beyond 1–2 s introduce stability issues.
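
The two-loop structure of the Smith predictor can be illustrated with a short simulation. This is a sketch under stated assumptions, not the formulation of [86]: the plant is taken to be first order with time constant τ, the whole two-way delay is lumped at the plant input, the internal model is assumed perfect, and the PI gains, delay and step size are hypothetical values.

```python
from collections import deque

def step_response(use_predictor, delay=2.0, tau=1.0, kp=2.0, ki=2.0,
                  dt=0.01, t_end=30.0):
    """Unit-step response of a PI loop around a delayed first-order plant."""
    n_delay = int(round(delay / dt))
    command_line = deque([0.0] * n_delay)  # commands in transit to the plant
    model_line = deque([0.0] * n_delay)    # delayed copy of the model output
    y = 0.0          # real (delayed) plant output
    y_model = 0.0    # undelayed model output, available immediately
    integral = 0.0
    outputs = []
    for _ in range(int(round(t_end / dt))):
        model_line.append(y_model)
        y_model_delayed = model_line.popleft()
        if use_predictor:
            # Inner loop: immediate prediction; outer loop: prediction error.
            feedback = y_model + (y - y_model_delayed)
        else:
            feedback = y
        error = 1.0 - feedback
        integral += error * dt
        u = kp * error + ki * integral
        command_line.append(u)
        u_delayed = command_line.popleft()
        y += dt * (u_delayed - y) / tau       # real plant sees the delayed command
        y_model += dt * (u - y_model) / tau   # model sees the command at once
        outputs.append(y)
    return outputs

with_predictor = step_response(True)
without_predictor = step_response(False)
print(with_predictor[-1], max(abs(v) for v in without_predictor))
```

With these assumed gains the plain PI loop oscillates with growing amplitude under a 2 s delay, whereas the predictor loop settles at the setpoint. The benefit relies on the model matching the plant, which is the sensitivity noted in the text.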

The implementation of force/tactile/haptic feedback control introduces further significant instability difficulties inherent in such time delays. Direct bilateral force teleoperation is based on haptic feedback from the remote robot (slave) to the human operator (master) [90]. A haptic interface is a bidirectional device that permits the human operator to experience the applied forces at the remote site at high update rate. Transparency requires that the impedance from the remote site $Z_e$ must be transmitted to the human operator $Z_t$ without distortion such that $Z_t = f_m/v_m = f_s/v_s = Z_e$, where $f_{m,s}$ = master/slave forces, $v_{m,s}$ = master/slave velocities. However, perfect transparency requires zero inertia and infinite bandwidth through instantaneous channels. Direct bilateral force-reflecting feedback is a major problem for time-delayed teleoperated systems in generating instability due to mismatched dynamics. Time delays in transmitting force feedback from the slave to the master generate instabilities particularly beyond 500 msec corresponding to human reaction speed. Any latent time delays beyond this tend to increase task error rates and completion times due to move-and-wait strategies being adopted by the human operator. The robust H∞ controller with μ-synthesis can provide stability for prespecified short time delays only [91]. In this case, the use of calibrated 3D graphical predictive displays overlying the actual view of the remote robot permits the operator to interact with the models in real-time [88]. High model dynamics fidelity and accurate least squares calibration are essential. Impedance control has been proposed for bilateral force reflection in teleoperation [92]. Short time delays of up to 100 msec have the effect of increasing effective impedance during capture operations while reliable capture favours minimising manipulator impedance to minimise the manipulator contact force [93].
A real-time teleoperative link with telepresence requires a very low latency of ~50 msec or less for effective force reflection. Nevertheless, force feedback of remote forces with a PD feedback controller may be used under significant time delays ~1–2 s and beyond, albeit at much reduced task performance rates. Force reflecting hand controllers can utilise attractive and repulsive potential fields of virtual forces at the remote end effector to form virtual corridors that output predictive forces at the hand controller to indicate the desired motion required by the operator [94,95].

The main approaches to dealing with stable teleoperation with constant time delay are [96]: (a) passivity theory; (b) hybrid compliance control/impedance matching control; (c) model predictive control; and (d) adaptive control. Shared compliant control involves autonomously closing the feedback loop at the robot (slave) side, which also employs a passively compliant hand whose response is fed back to the human operator [97]. It involves reducing the force reflection signal by reducing the error between the master and delayed slave positions. Model predictive controllers which implement learned models will possess similar advantages to predictive graphics. Passivity-based approaches do not require models of the environment, unlike predictive approaches. Passivity theory dictates the transfer of velocity and force information between master and slave [98]. A system is passive if the power of the system is either stored or dissipated:

$$P = f^{T}v = \frac{dE}{dt} + P_{diss}$$

where P = power input to the system, f = force, v = velocity, E = stored energy, $P_{diss}$ = power dissipation.

If power dissipation is positive, the system is passive. Adding a dissipative term to both master and slave PD controllers introduces passivity to guarantee stability [99]:

where $e_m$ = master position error, $\dot{e}_m$ = master velocity error, $e_s$ = slave position error, $\dot{e}_s$ = slave velocity error, $k_d = \Delta t\,k_p$ = dissipative gain, $\Delta k_d$ = additional damping, Δt = time delay. There is also a considerably more complex adaptive version of passivity that is stable to positional drift between master and slave [100]. The master/slave joint torques are determined by the adaptive regressor and the synchronised coordinating joint torques for the master and slave arms respectively:

where $Y_l(\cdot)$ = known dynamic parameters, l = master or slave, λ = scale constant > 0, K = gain constant > 0; the remaining terms are the reference position tracking signals and the estimated unknown dynamic parameters. Adaptive bilateral hybrid position/force control synchronisation of master-slave teleoperation may be enforced through the imposition of holonomic constraints [101]. These schemes require a constant time delay Δt.

For varying time delays, wave variables compute energy flows to determine excess energy and eliminate force reflection waves between the remote and operator robotic devices by matching their characteristic impedances [102,103]. Velocity and force at the master and slave are encoded into time-delay robust wave variables which are subsequently transmitted between master and slave manipulators:

$$u = \frac{Z\dot{x} + f}{\sqrt{2Z}}, \qquad v = \frac{Z\dot{x} - f}{\sqrt{2Z}}$$

where u, v = forward and returning wave variables, $\dot{x}$ = velocity, f = force, Z = wave impedance, which tunes the degree of stiffness/compliance. Wave-based communication has a natural frequency determined by the time delay Δt. As wave variables encode velocity and force, so wave integrals encode position x and momentum p:

$$U = \frac{Zx + p}{\sqrt{2Z}}, \qquad V = \frac{Zx - p}{\sqrt{2Z}}$$

Wave variables automatically exhibit passivity. Wave variables can be combined with Smith predictors to impart stability to the Smith predictor. More recent approaches have incorporated sliding mode controllers and generalised predictive controllers [104].
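
The wave-variable encoding can be checked numerically. The sketch below assumes the common convention u = (Zẋ + f)/√(2Z), v = (Zẋ − f)/√(2Z); the impedance, velocity and force values are hypothetical. It shows that the encoding is invertible and that the transmitted power f·ẋ equals ½(u² − v²), which is the property underlying the passivity of the delayed channel.

```python
import math

def encode(velocity, force, impedance):
    """Encode velocity and force into forward (u) and returning (v) waves."""
    scale = math.sqrt(2.0 * impedance)
    u = (impedance * velocity + force) / scale
    v = (impedance * velocity - force) / scale
    return u, v

def decode(u, v, impedance):
    """Recover velocity and force from a pair of wave variables."""
    velocity = (u + v) / math.sqrt(2.0 * impedance)
    force = math.sqrt(impedance / 2.0) * (u - v)
    return velocity, force

# Hypothetical operating point.
Z = 4.0            # wave impedance, N s/m
x_dot = 0.3        # velocity, m/s
f = 1.2            # force, N

u, v = encode(x_dot, f, Z)
x_dot_back, f_back = decode(u, v, Z)
power_direct = f * x_dot
power_waves = 0.5 * (u * u - v * v)
print(x_dot_back, f_back, power_direct, power_waves)
```

A pure delay only shifts u and v in time without amplifying them, so the energy bookkeeping in terms of u² and v² is what allows stability to be argued independently of the delay length.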

It has been suggested that passivity-based methods offer guaranteed stability to time delays over shared compliance control which can only tolerate maximum time delays of the order of human reaction speed of ~0.1–0.2 s, but experimental results dispute this limitation [105]. The tele-impedance approach is an alternative to bilateral force-reflecting teleoperation which combines position reference with a stiffness reference, the latter being determined from non-intrusive EMG (electromyographic) measurements from the human operator’s arm [106]. Spring-like compliance at the remote end effector in contact-and-grasp tasks on hard environmental objects dramatically improves teleoperation performance and alleviates the problem of rapid buildup of transient forces on collision [107,108]. Specific applications of teleoperation with time delays of 5–10 s are discussed in [109], which concludes that predictive simulation is essential in time-delayed teleoperation. The implementation of predictors and simulators introduces aspects of autonomy—this is the telerobotic mode. In telerobotic mode, the ground operator uploads designated waypoints which the robot implements locally by autonomously planning and executing a global path while avoiding obstacles, e.g., Sojourner on Mars. The evolution toward autonomy is important for several reasons, but mostly because it requires considerable computational resources onboard. This places a premium on solving manipulator control problems as low down the control hierarchy as is feasible.

6. Freeflyer Manipulator Kinematics

In rendezvous and docking (RVD) manoeuvres, the chaser moves to a proximity position ~100–300 m from the target before manoeuvring through a series of inspection flyarounds culminating in a V-bar dock [110]. V-bar involves manoeuvring the chaser horizontally along the same orbital velocity vector of the target; R-bar involves manoeuvring the chaser radially along the radial vector from a different orbit than the target. The relative (circular) orbits of the chaser spacecraft and the target spacecraft are described by the Clohessy-Wiltshire version of the Hill equations with respect to the target satellite. The Clohessy-Wiltshire equations describe the phasing manoeuvres for a rendezvous between the chaser and the target. In approaching a tumbling satellite, the chaser matches its angular velocity with the line of sight rotation of the target before grappling. Our concern here is primarily with the subsequent phases of a servicing mission.
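
The relative motion described by the Clohessy-Wiltshire equations has a closed-form solution that is easy to evaluate. The sketch below assumes a circular target orbit and the axis convention x = radial (R-bar), y = along-track (V-bar), z = cross-track; the 800 km altitude and the initial offsets are hypothetical values for illustration.

```python
import math

def cw_propagate(state, n, t):
    """Closed-form Clohessy-Wiltshire propagation of (x, y, z, vx, vy, vz)."""
    x0, y0, z0, vx0, vy0, vz0 = state
    s, c = math.sin(n * t), math.cos(n * t)
    x = (4 - 3 * c) * x0 + (s / n) * vx0 + (2 / n) * (1 - c) * vy0
    y = (6 * (s - n * t) * x0 + y0 - (2 / n) * (1 - c) * vx0
         + ((4 * s - 3 * n * t) / n) * vy0)
    z = c * z0 + (s / n) * vz0
    vx = 3 * n * s * x0 + c * vx0 + 2 * s * vy0
    vy = -6 * n * (1 - c) * x0 - 2 * s * vx0 + (4 * c - 3) * vy0
    vz = -n * s * z0 + c * vz0
    return (x, y, z, vx, vy, vz)

MU_EARTH = 3.986004418e14                    # m^3/s^2
radius = 6378137.0 + 800.0e3                 # assumed 800 km circular orbit, m
mean_motion = math.sqrt(MU_EARTH / radius ** 3)
period = 2.0 * math.pi / mean_motion

# A chaser at rest 100 m behind the target on V-bar stays there.
vbar_hold = cw_propagate((0.0, -100.0, 0.0, 0.0, 0.0, 0.0), mean_motion, period)

# A chaser at rest 10 m above the target on R-bar drifts along-track.
rbar_drift = cw_propagate((10.0, 0.0, 0.0, 0.0, 0.0, 0.0), mean_motion, period)
print(vbar_hold[:3], rbar_drift[:3])
```

The contrast between the two cases illustrates why V-bar hold points are natural station-keeping positions, whereas a radial offset produces a secular along-track drift of 12π times the offset per orbit.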

A spacecraft mounting one or more manipulators comprises a chain of multiple bodies of n + 1 rigid bodies connected by n single degree of freedom joints. The first body i = 0 is the spacecraft mount, from which n serial links emanate (plus payload n + 1). The dynamics of this multibody system are based on the conservation of momentum. The barycentric approach to multibody dynamics also locates the system centre of mass. The mounting point of a spacecraft-mounted manipulator is not fixed inertially (unlike in a ground-based manipulator). Furthermore, the system operates under zero-gravity conditions. Inertial coordinates are defined with respect to the system centre of mass which is invariant (i.e., a locally inertial coordinate), and spacecraft base coordinates are defined with respect to the centre of mass (centroid) of the spacecraft base. There are two types of dynamic situation: (i) freefloating systems in which the manipulators are the only actuators, so spacecraft attitude is nominally uncontrolled (with six joint actuators controlling a 12 degree of freedom system, this is an underactuated (redundant) system [111]); (ii) freeflying systems in which the manipulators are controlled by manipulator actuators while spacecraft attitude is controlled by attitude actuators. Due to dynamic coupling, motion of the manipulator affects the position and attitude of the spacecraft base in both cases. Manipulator joints incorporate spinning motors which act like gyroscopes on rotating levers with strong Coriolis and centrifugal forces [112]. For example, SRMS had motor gear ratios of 749:1 to 1842:1, so the motors were spinning up to 2000 times the link rotation, enabling high torque amplification at the manipulator joints, but with a significant effect on attitude stabilisation. In freeflyers, the spacecraft base is attitude controlled. While translational control requires the expenditure of propellant, attitude control does not necessarily require fuel expenditure. 
Although it is possible to stabilise the spacecraft position as well as attitude, this would require the expenditure of propellant thereby limiting the viability of such a spacecraft. Spacecraft position must therefore be uncontrolled in both freeflying and freefloating conditions. Currently, robotic manipulators are operated in free-floating mode with uncontrolled attitude. However, this requires constant adjustment on behalf of the ground operator. For example, the ETS-VII mission employed resolved rate control using the generalised Jacobian computed on the ground, so the attitude control system was switched off [113]. This caused the end effector pitch error to increase rapidly at 0.5–1° over several minutes until compensated for at the end of the motion by reaction wheels. Hence, the generalised Jacobian did not implement effective attitude control due to deficiencies in the available hardware (not the algorithm itself). Gravity gradient torques acting on the ETS-VII spacecraft over short timescales generated free drift of the spacecraft. Given that accuracies of 0.01–0.1° are required for grasping during the final target approach, attitude should be controlled. The control of attitude is also essential to maintain pointing requirements of solar panels to the sun and antennae to the Earth. Active attitude control is desirable particularly under conditions of higher levels of autonomy. A dedicated attitude control system will be required when the manipulators are not in operation, and indeed, all spacecraft possess attitude control systems to compensate for attitude perturbations. Both the SRMS and SPDM had limited angular position accuracy of 1° compared with <0.1° for reaction wheels. The spacecraft base attitude may be controlled using internal angular momentum management devices such as reaction wheels, momentum wheels and/or control moment gyroscopes. The wheels or gimbals are mounted in orthogonal directions often with a fourth skewed wheel for redundancy. 
The strawman robotic servicer that we have adopted is the ATLAS (advanced telerobotic actuation system) concept for LEO servicing with a wet mass of 1425 kg (of which 476 kg is propellant) (Figure 1). It is configured with two identical manipulators with a mass of 45 kg each (with a similar kinematic structure as the PUMA 560/600 but with inline outboard joints from the shoulder), including grappling end effector but excluding payload (assumed to be a 225 kg ORU typical of MMS design). We consider initially that one manipulator remains stowed to emulate single-manipulator operation and it employs control moment gyroscopes for three-axis stabilisation.

Lie groups offer an alternative representation of kinematics that uses the product of exponentials formulation [114,115], but we have adopted the more familiar and more intuitive Denavit-Hartenberg matrix representation. Manipulator kinematics define the relationship between the manipulator joint angles, link geometry and the end effector position and orientation. For ground-mounted manipulators, they are given by:

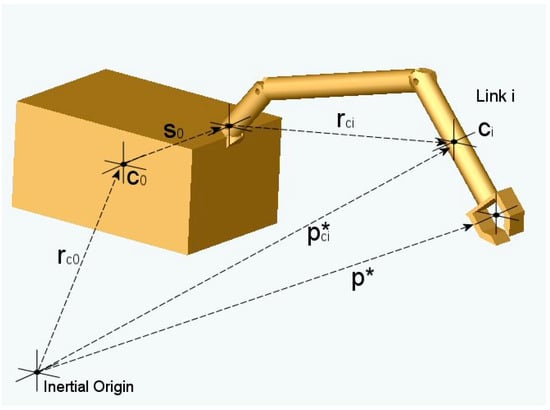

where q = cartesian generalised position/orientation of end effector, $\dot{q}$ = cartesian generalised velocities of end effector, $\ddot{q}$ = cartesian generalised accelerations of end effector, v = translational cartesian end effector velocity, w = angular cartesian end effector velocity, θ = manipulator joint angles, $\dot{\theta}$ = manipulator joint velocities, $\ddot{\theta}$ = manipulator joint accelerations, f(θ) = cumulative Denavit-Hartenberg transform, J = manipulator Jacobian, $\dot{J}$ = differential of Jacobian. For control purposes, these need to be inverted to yield joint kinematic parameters in terms of cartesian kinematic parameters. For a space-based manipulator, the reference body is typically selected to be the mounting spacecraft though it can be any body of the kinematic chain [116] (Figure 2). The situation for space-based manipulators requires the imposition of constraints, namely conservation of momentum, to yield solutions.

Figure 2. Kinematic configuration of a single-manipulator mounted spacecraft servicer.

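Holonomic systems can be integrated from velocity relations to position relations whereas nonholonomic systems cannot be so integrated. Conservation of linear and angular momentum states:

$$\sum_{i=0}^{n+1} m_i\,\dot{p}_{ci}^{*} = \text{const}, \qquad \sum_{i=0}^{n+1}\left(I_i\,\omega_i + m_i\,p_{ci}^{*} \times \dot{p}_{ci}^{*}\right) = \text{const}$$

where $m_i$, $I_i$ and $\omega_i$ = mass, inertia and angular velocity of body i, and $p_{ci}^{*}$ = inertial position of body i centre of mass. Conservation of linear momentum as a holonomic system can be integrated into the equilibrium of moments principle:

$$\sum_{i=0}^{n+1} m_i\,p_{ci}^{*} = m_T\,p_{cm}$$

where $m_T$ = total mass of the system and $p_{cm}$ = system centre of mass location (inertially invariant).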

Conservation of angular momentum is not integrable due to the non-commutativity of rotation and so is a non-holonomic constraint. The non-holonomic character of the freefloating system arises due to the non-integrability of angular momentum. Different end effector paths to the same cartesian end effector position will result in different spacecraft attitudes. Solutions can be obtained through the introduction of manipulator “coning” motion onto the desired manipulator trajectory to maintain attitude stabilisation, but this is a rather ungainly approach, as well as having a high risk of collision. With two arms, one can perform the required task while the other undergoes coning motion to stabilise the base “bucking bronco” style, but the ungainliness, as well as collision hazard, remains. The holonomy/non-holonomy division suggests that separate treatment of linear kinematics and angular kinematics may be warranted. While it is possible to separate the motion of the spacecraft base and the mounted manipulator [117], a more natural separation is between the linear and angular aspects of the system dynamics.
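
The holonomic linear-momentum constraint can be illustrated directly: because the system centre of mass is invariant, any shift of the manipulator and payload relative to the base is balanced by an opposite shift of the base. The sketch below assumes the base attitude is held fixed (the freeflyer case), so that relative displacements can be expressed in inertial axes; the 1000 kg base mass and the displacements are hypothetical, while the 45 kg arm and 225 kg payload echo the ATLAS figures quoted earlier.

```python
def base_shift(masses, relative_shifts):
    """Base displacement that keeps the system centre of mass fixed.

    masses[0] is the spacecraft base; relative_shifts[i - 1] is the
    displacement of body i's centre of mass relative to the base.
    """
    m_total = sum(masses)
    return tuple(
        -sum(m * d[k] for m, d in zip(masses[1:], relative_shifts)) / m_total
        for k in range(3)
    )

# Hypothetical single-arm case: base, arm and payload masses in kg.
masses = [1000.0, 45.0, 225.0]
relative_shifts = [(1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]   # metres

shift = base_shift(masses, relative_shifts)

# Check that the mass-weighted sum of inertial displacements vanishes.
balance = masses[0] * shift[0] + sum(
    m * (shift[0] + d[0]) for m, d in zip(masses[1:], relative_shifts)
)
print(shift, balance)
```

No comparable closed-form relation exists for attitude, since the angular momentum constraint is non-integrable and the final attitude depends on the path taken.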

We have adopted a 6-degrees-of-freedom manipulator, but such manipulators can suffer from singular configurations. A singularity occurs when the manipulator Jacobian loses rank, i.e., a degree of freedom. Singular configurations may occur when $|J^TJ| = 0$, but they are predictable from the kinematics. From this, manipulability is defined by $w = \sqrt{|J^TJ|}$. To eliminate singularities, redundant joints may be added. It is often assumed that the spacecraft-mounted manipulator possesses 7 degrees of freedom for kinematic redundancy for greater manipulability, e.g., SSRMS. Kinematic redundancy offers great flexibility of postures for any given task but it requires the Moore-Penrose pseudoinverse of the Jacobian matrix for solution, $\dot{\theta} = J^{+}\dot{q}$, where $J^{+} = J^T(JJ^T)^{-1}$ = Moore-Penrose pseudoinverse. Several performance conditions can be incorporated:

$$\dot{\theta} = J^{+}\dot{q} + (I - J^{+}J)\,z$$

where represents a projection onto the nullspace of J while z is the nullspace vector. For example, the damped least square inverse Jacobian includes a damping factor for greater smoothness in the neighbourhood of singularities [118,119]: where λ = damping factor. The additional redundant degree of freedom comes at the cost of additional mass imposed by the extra joint and the Moore-Penrose pseudoinverse is more complex to compute compared to the standard Jacobian. Since a freeflying servicing spacecraft provides a re-orientable spacecraft mount for the manipulator, a 6-degrees-of-freedom manipulator may be more appropriate (though the formulation we present is applicable to any kinematic configuration).
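The nullspace projection and damped least squares inverse described above can be sketched for a small redundant arm. The example below is illustrative only and assumes a planar three-link arm with a two-dimensional position task (one redundant degree of freedom) rather than the spatial manipulators discussed in this paper. All function names, link lengths and the damping value are hypothetical.

```python
import math

def planar_jacobian(joint_angles, link_lengths):
    """2 x n position Jacobian of a planar serial arm with revolute joints."""
    n = len(joint_angles)
    absolute, total = [], 0.0
    for q in joint_angles:
        total += q
        absolute.append(total)  # absolute orientation of each link
    row_x, row_y = [], []
    for j in range(n):
        # Joint j moves every link from j outward.
        row_x.append(-sum(link_lengths[k] * math.sin(absolute[k]) for k in range(j, n)))
        row_y.append(sum(link_lengths[k] * math.cos(absolute[k]) for k in range(j, n)))
    return [row_x, row_y]

def gram(J):
    """J J^T for a 2 x n matrix."""
    return [[sum(a * b for a, b in zip(J[i], J[k])) for k in range(2)] for i in range(2)]

def manipulability(J):
    """sqrt(det(J J^T)); zero at a singular configuration."""
    g = gram(J)
    return math.sqrt(max(g[0][0] * g[1][1] - g[0][1] * g[1][0], 0.0))

def damped_pseudoinverse(J, damping=0.0):
    """n x 2 matrix J^T (J J^T + damping^2 I)^-1.

    With damping = 0 and full row rank this is the Moore-Penrose pseudoinverse.
    """
    g = gram(J)
    a, b, d = g[0][0] + damping ** 2, g[0][1], g[1][1] + damping ** 2
    det = a * d - b * b
    inv = [[d / det, -b / det], [-b / det, a / det]]
    n = len(J[0])
    return [[J[0][j] * inv[0][c] + J[1][j] * inv[1][c] for c in range(2)]
            for j in range(n)]

def joint_rates(J, task_velocity, null_vector, damping=0.0):
    """q_dot = J+ x_dot + (I - J+ J) z."""
    n = len(J[0])
    J_pinv = damped_pseudoinverse(J, damping)
    primary = [sum(J_pinv[j][c] * task_velocity[c] for c in range(2)) for j in range(n)]
    # (J+ J) z is evaluated as J+ (J z) to avoid forming the n x n projector.
    Jz = [sum(J[c][j] * null_vector[j] for j in range(n)) for c in range(2)]
    secondary = [null_vector[j] - sum(J_pinv[j][c] * Jz[c] for c in range(2))
                 for j in range(n)]
    return [p + s for p, s in zip(primary, secondary)]

if __name__ == "__main__":
    J = planar_jacobian([0.3, 0.8, -0.5], [1.0, 1.0, 0.5])
    print("manipulability:", manipulability(J))
    print("joint rates:", joint_rates(J, [0.1, -0.05], [0.2, 0.0, 0.0]))
```

Away from singularities the nullspace term changes the posture without disturbing the end effector velocity. Near a singularity, a non-zero damping keeps the joint rates bounded at the price of a small tracking error.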

We have generalised the approach used for a shuttle-mounted manipulator employing attitude control [120,121] to any kinematic configuration [122,123]. This is the freeflyer approach. For a freeflying manipulator with n links plus a payload n + 1 mounted on a spacecraft of mass $m_0$ employing dedicated attitude control of the spacecraft mount, the Denavit-Hartenberg matrix may be represented as:

where $R = (n \;\; s \;\; a)$ = 3 × 3 rotation matrix identical to terrestrial manipulators, n = 3 × 1 normal vector, s = 3 × 1 slide vector, a = 3 × 1 approach vector

= inertial end effector cartesian position

= system centre of mass location (inertially invariant) from equilibrium of moments

= lumped kinematic link parameter, $m_T$ = total mass of the system, $p_{ci}^{*}$ = inertial position of body i centre of mass, $r_{c0}$ = vector from inertial coordinates to spacecraft base centre of mass (initially coincident), $r_{n+1}$ = vector from the end effector to the payload centre of mass (virtual stick), $R_0$ = diag(1, 1, 1) = nominal fixed spacecraft attitude, $R_i$ = rotation matrix for link i with respect to joint i − 1, $l_i = r_i + s_i$ = length of link i, $r_i$ = vector from joint i − 1 to link i centre of mass, $s_i$ = vector from joint i to link i centre of mass. Our formulation is very similar to that of several others. The connection barycentre defines the location of the centre of mass of each link by weighting joint i by the mass of inboard and outboard links [124]:

The virtual manipulator (VM) approach assumes a serial link chain of zero mass with its base fixed in inertial space at the spacecraft-manipulator system centre of mass (virtual ground) and whose end effector coincides with that of the real manipulator [125,126]. The dynamically equivalent manipulator (DEM) is similar to the VM approach in that it is a virtual manipulator with its base fixed to the centre of mass of the spacecraft-manipulator system. Although the spacecraft base is fixed, the first joint at the manipulator mount is spherical and passive, with the end effector coinciding with the real one [127]. According to the DEM model, the position of the spacecraft mount is given by:

where and

The difference between our formulation and the others is that our formulation maps from joint to joint rather than link centroid to link centroid; this is essential as a step towards driving the joint motors. This permits our formulation to be used directly in the standard terrestrial robotic manipulator algorithms, including the Denavit-Hartenberg matrix and Newton-Euler dynamics, with only minor changes, namely the link length parameters. Joint trajectories for a space manipulator may be parametrised by polynomials similar to a terrestrial manipulator [128]. The inverse solutions follow from the relative position of the end effector with respect to the manipulator mounting point:

The wrist angles follow from the wrist orientation identically as in the terrestrial manipulator case. We may differentiate our inertial position into velocity:

We retain the general case of a variable attitude permitting derivation of the generalised Jacobian [129,130]:

= manipulator Jacobian

Now,

where $D_s$ = inertia matrix of spacecraft, $D_m$ = inertia matrix of manipulator

Hence,

The generalised Jacobian thus incorporates dynamic as well as kinematic parameters. Its computational complexity can be compared with a traditional Jacobian (Table 4) [131]. For N manipulator arms with n DOF each, the generalised Jacobian has a complexity of (156 + 6N)n + 87 multiplications and (126 + 6N)n + 6N + 53 additions. The issue of computational complexity becomes acute in the inversion of the Jacobian required for the inverse kinematics. The importance of algorithm development may be illustrated by the replacement of Gaussian elimination (used in Jacobian inversion), with a complexity of $O(n^{3}/3)$, by Levinson’s recursion, with a reduced complexity of $O(n^{2} + 2n)$. The higher computational complexity of the generalised Jacobian favours splitting the computational load between the manipulator control system and a dedicated attitude control system.
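The operation counts quoted above are easy to tabulate for a given configuration. The helper below is a small sketch with hypothetical function names that evaluates those expressions directly. Note that Levinson's recursion applies to Toeplitz systems, so the comparison illustrates the value of algorithm choice rather than a drop-in replacement for every Jacobian.

```python
def generalised_jacobian_ops(num_arms, dof_per_arm):
    """Multiplications and additions for the generalised Jacobian,
    using the counts quoted in the text for N arms with n DOF each."""
    multiplications = (156 + 6 * num_arms) * dof_per_arm + 87
    additions = (126 + 6 * num_arms) * dof_per_arm + 6 * num_arms + 53
    return multiplications, additions

def gaussian_elimination_order(n):
    """Leading-order cost n^3 / 3 quoted for Gaussian elimination."""
    return n ** 3 / 3

def levinson_order(n):
    """Cost n^2 + 2n quoted for Levinson's recursion."""
    return n ** 2 + 2 * n

if __name__ == "__main__":
    for arms in (1, 2):
        for dof in (6, 7):
            print(arms, dof, generalised_jacobian_ops(arms, dof))
    for n in (6, 7, 12):
        print(n, gaussian_elimination_order(n), levinson_order(n))
```

For a single 6-DOF arm the expressions give 1059 multiplications and 851 additions for each evaluation of the generalised Jacobian.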

Table 4. Computational complexity of Jacobians.

Singularities in terrestrial manipulators are purely kinematic because the Jacobian is kinematic. Singular joint configurations in freefloaters cannot be mapped into unique points in the Cartesian workspace because the generalised Jacobian is a dynamic function rather than a purely kinematic function like the traditional Jacobian. These singularities cannot be predicted from the kinematic configuration alone because they are functions of the history of the end effector path (as a consequence of the non-commutativity of rotations) [132]. The generalised Jacobian suffers from dynamic singularities that are unpredictable [133]. Dynamic singularities are configuration and path dependent, making path planning challenging. One approach to combat this is to introduce singular value decomposition to obtain a pseudoinverse of the generalised Jacobian [134,135]. Computing the pseudoinverse carries a severe computational cost, making the already complex generalised Jacobian even more expensive to use.

We can simplify the generalised Jacobian formulation by adopting the freeflyer approach, which employs attitude control so that = 0, Js = 0 and w0 = 0 yielding the extended Jacobian:

Since by definition, since s0 is fixed and , because any grasp is invariant:

This extended Jacobian Jm is different to the manipulator Jacobian Jm′ earlier in Equation (35) in that it incorporates parameters related to the spacecraft base. The cross-product formulation of the Jacobian can be derived similarly:

Resolved acceleration follows directly by differentiation:

Whereas the generalised Jacobian suffers from unpredictable dynamic singularities, the extended Jacobian (equivalent to the “dynamic” Jacobian [136]) is dependent on kinematic parameters and masses which are fixed, and so singularities are kinematic in nature and predictable. Hence, the implementation of attitude measurement and control solves the problem of unpredictability of dynamic singularities [119]. Nevertheless, the spacecraft base does translate, but does not rotate:

Due to the desirability of maintaining a stable spacecraft attitude in freefloaters, many efforts have focussed on minimising reaction disturbances from the manipulator to the mounting base, e.g., visual servoing requires stabilisation of spacecraft attitude [137]. The implementation of cycling motion superimposed over the desired trajectory acts equivalently to a set of reaction wheels [138], but this introduces the potential for collision in grasping a target. The reaction null space approach exploits the null space to simultaneously control spacecraft attitude and manipulator joint angles by applying a cost function to the pseudoinverse to minimise reaction torques on the spacecraft [139,140]:

where ζ = null space vector of dimension n − 3. The reactionless approach to satellite capture by a manipulator exploits reaction null space to eliminate attitude movements of the mounting base [141]. To ensure that the robotic arm movements do not affect the base, the coupling momentum must be zero, so the manipulator joint rates are given by:

where Dc = coupling inertia between base and robotic arm, = null space vector of Dc of dimension n − 3. The internal degrees of freedom allow the specification of zero reaction on the base. This is an extremely computationally intensive solution, even more complex than the generalised Jacobian. One means to reduce the computational complexity is to adopt a Jacobian transpose approximation of the form over model-based controllers [142]. For example, the Jacobian transpose can implement a version of the damped least squares inverse [143]:

It trades off accuracy against feasibility through the weighting factor. The enhanced disturbance map (DM) is a path planning algorithm that selects manipulator paths that minimise base attitude disturbances, but this approach is also highly complex [144]. A bidirectional approach exploited a Lyapunov function to regulate both manipulator joint angles and spacecraft attitude, searching from start-to-goal and from goal-to-start configurations simultaneously, but again at the cost of high computational complexity [145]. It utilises the Moore-Penrose pseudoinverse of the generalised Jacobian to overcome dynamic singularities using the redundant degrees of freedom. The path-independent workspace is the workspace in which there are no dynamic singularities, but it is smaller than the enclosing path-dependent workspace in which dynamic singularities can occur [146]. The large search spaces invoked by most of these path planning techniques introduce the possibility of using techniques such as genetic algorithms, but they are unsuited to real-time operation. Hence, the computational complexity of these methods renders them unsuitable for space manipulators.
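The reactionless condition discussed above, zero coupling momentum between arm and base, amounts to choosing joint rates in the null space of the coupling inertia. The sketch below is a simplified illustration that assumes a planar system in which the base has a single attitude degree of freedom, so the coupling inertia reduces to a 1 × n row and the null space has dimension n − 1 (rather than n − 3 in the spatial case). The numerical values and function names are hypothetical.

```python
def reactionless_joint_rates(coupling_row, free_vector):
    """Project free_vector onto the null space of a 1 x n coupling inertia row.

    For a single row D_c, the pseudoinverse is D_c^T / (D_c D_c^T), so
    (I - D_c^+ D_c) z = z - D_c^T (D_c z) / (D_c D_c^T).
    """
    norm_sq = sum(d * d for d in coupling_row)
    scale = sum(d * z for d, z in zip(coupling_row, free_vector)) / norm_sq
    return [z - d * scale for d, z in zip(coupling_row, free_vector)]

def coupling_momentum(coupling_row, joint_rates):
    """Angular momentum transferred to the base by the joint motion."""
    return sum(d * q for d, q in zip(coupling_row, joint_rates))

if __name__ == "__main__":
    coupling_row = [4.0, 2.5, 0.8]   # hypothetical values in kg m^2
    desired = [0.10, -0.20, 0.05]    # hypothetical joint rates in rad/s
    rates = reactionless_joint_rates(coupling_row, desired)
    print("projected rates:", rates)
    print("coupling momentum:", coupling_momentum(coupling_row, rates))
```

The projection keeps the component of the desired joint motion that leaves the base undisturbed and discards the remainder, so the achievable end effector motion is correspondingly restricted.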

Many of the issues associated with freefloating manipulators vanish with freeflying manipulators with a stabilised spacecraft attitude. Indeed, dedicated attitude control systems are employed on all spacecraft. This eases many of the computational complexity problems associated with freefloating systems. The reaction of the robot manipulator dynamics on the mounting spacecraft must be compensated for to maintain a stable attitude.
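With the attitude held fixed, the base still translates so that the system centre of mass stays in place. The following minimal sketch illustrates this under simplifying assumptions that are not taken from the source: a planar two-link arm with uniform links, a base attitude fixed at identity, zero initial momentum and the system centre of mass placed at the origin. Masses, lengths and function names are hypothetical.

```python
import math

def link_com_offsets(joint_angles, link_lengths, mount_offset):
    """Link centre-of-mass positions relative to the base centre of mass.

    The base attitude is fixed, so only the joint angles matter.
    Each link is assumed uniform, with its centre of mass at mid-length.
    """
    x, y = mount_offset
    angle = 0.0
    offsets = []
    for q, length in zip(joint_angles, link_lengths):
        angle += q
        dx, dy = length * math.cos(angle), length * math.sin(angle)
        offsets.append((x + 0.5 * dx, y + 0.5 * dy))
        x, y = x + dx, y + dy
    return offsets

def base_position(joint_angles, link_lengths, link_masses, base_mass,
                  mount_offset=(0.5, 0.0)):
    """Base centre-of-mass position that keeps the system centre of mass at the origin."""
    total_mass = base_mass + sum(link_masses)
    offsets = link_com_offsets(joint_angles, link_lengths, mount_offset)
    bx = -sum(m * ox for m, (ox, _) in zip(link_masses, offsets)) / total_mass
    by = -sum(m * oy for m, (_, oy) in zip(link_masses, offsets)) / total_mass
    return bx, by

if __name__ == "__main__":
    lengths, masses, base_mass = (1.0, 1.0), (10.0, 10.0), 200.0
    print("arm extended:", base_position((0.0, 0.0), lengths, masses, base_mass))
    print("arm raised:  ", base_position((math.pi / 2, 0.0), lengths, masses, base_mass))
```

Because this relation is holonomic, the base position depends only on the current joint angles and not on the path taken to reach them.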

7. Freeflyer Manipulator Dynamics

The dynamics formulation of robotic manipulators exploits the manipulator kinematics to determine the joint torques. The Lagrange-Euler formulation of manipulator dynamics is given by:

where $D_m$ = manipulator inertia, $C_m$ = manipulator Coriolis/centrifugal forces, $F_{ext}$ = external forces, F = friction.
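To make the structure of these terms concrete, the sketch below evaluates joint torques of the form τ = D_m(q)q̈ + C_m(q, q̇) + F for a planar two-link arm. It is a textbook fixed-base model used purely for illustration: gravity is omitted (as appropriate on orbit), external forces are set to zero, and the friction torques are passed in as given. All parameter values and names are hypothetical and are not the freeflyer formulation of this paper.

```python
import math

def two_link_torques(q, qd, qdd, masses=(2.0, 1.0), link1_length=1.0,
                     com_distances=(0.5, 0.4), inertias=(0.2, 0.05),
                     friction=(0.0, 0.0)):
    """Inverse dynamics of a planar two-link arm without gravity.

    q, qd, qdd are (joint 1, joint 2) angles, rates and accelerations.
    Returns the joint torques (tau1, tau2).
    """
    m1, m2 = masses
    lc1, lc2 = com_distances
    i1, i2 = inertias
    c2, s2 = math.cos(q[1]), math.sin(q[1])

    # Inertia matrix entries D_m(q).
    d11 = i1 + i2 + m1 * lc1 ** 2 + m2 * (link1_length ** 2 + lc2 ** 2
                                          + 2.0 * link1_length * lc2 * c2)
    d12 = i2 + m2 * (lc2 ** 2 + link1_length * lc2 * c2)
    d22 = i2 + m2 * lc2 ** 2

    # Coriolis/centrifugal terms C_m(q, qd).
    h = m2 * link1_length * lc2 * s2
    c1 = -h * (2.0 * qd[0] * qd[1] + qd[1] ** 2)
    c2_term = h * qd[0] ** 2

    tau1 = d11 * qdd[0] + d12 * qdd[1] + c1 + friction[0]
    tau2 = d12 * qdd[0] + d22 * qdd[1] + c2_term + friction[1]
    return tau1, tau2

if __name__ == "__main__":
    print(two_link_torques(q=(0.2, 0.7), qd=(0.3, -0.1), qdd=(0.5, 0.2)))
```

Even in this small case the inertia and velocity-dependent terms vary with the elbow angle, which is what makes model-based torque computation configuration dependent.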

Of particular note, the frictional term in the Lagrange-Euler formulation hides a high degree of complexity. Effective friction compensation requires accurate friction models. The simplest models of friction characterise slip as a combination of static friction, Coulomb (dynamic) friction and viscous friction which varies in proportion to load and opposes motion independent of contact area. Static friction can be reduced below the level of Coulomb friction through the use of lubricants so that stick-slip will be eliminated, e.g., static friction is typically ~0.02 Nm compared with viscous friction of ~0.1 Nm (except in ageing motors). Motor friction is commonly modelled as a nonlinear Stribeck curve, which lumps the effects of backlash, deadzone and velocity-dependent friction together, but it does not model static friction, which yields stick-slip overshoot. Neural networks may be employed to learn manipulator inverse dynamics by storing association patterns which potentially resolve friction difficulties [147]. Although there exist sophisticated friction models such as [148] which has seven parameters, for simplicity, most friction models consider Coulomb friction and viscous friction only additively:

where b = viscous friction coefficient (assumed zero), k = Coulomb friction parameter (assumed zero in most cases). Joint friction is the primary limitation on precision and can lead to stick-slip behaviour or oscillations, especially for low-speed, low-amplitude movements. Torque sensors in the joints can effectively eliminate joint friction (as implemented in DLR manipulator joints). Active force control compensates for unknown friction effects through direct measurement of joint acceleration and forces exploiting a growing/pruning neural network approach [149]. However, self-sensing without the use of specific joint sensors can be achieved by monitoring back EMF generated within the motor (assuming constant field strength) since the back EMF is linearly related to the motor velocity (which may be integrated to give displacement) [150].
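The additive Coulomb-plus-viscous model described above can be sketched as F = b·q̇ + k·sgn(q̇). The function below is a minimal illustration with hypothetical names and coefficient values. It deliberately omits static friction and Stribeck effects, so it does not reproduce stick-slip.

```python
def joint_friction(velocity, viscous_coeff, coulomb_torque):
    """Additive friction torque: viscous term plus Coulomb term.

    The Coulomb term opposes motion with constant magnitude and is taken
    as zero at rest (no static friction in this simple model).
    """
    if velocity > 0.0:
        sign = 1.0
    elif velocity < 0.0:
        sign = -1.0
    else:
        sign = 0.0
    return viscous_coeff * velocity + coulomb_torque * sign

def compensated_torque(model_torque, velocity, viscous_coeff, coulomb_torque):
    """Feedforward friction compensation: add the estimated friction torque."""
    return model_torque + joint_friction(velocity, viscous_coeff, coulomb_torque)

if __name__ == "__main__":
    for rate in (-0.5, 0.0, 0.5):
        print(rate, joint_friction(rate, viscous_coeff=0.1, coulomb_torque=0.02))
```

The discontinuity of the Coulomb term at zero velocity is one reason low-speed, low-amplitude motions are the hardest to compensate with a model of this kind.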