1. Introduction

Today robots play an important role in modern manufacturing, service domains, and households, exhibiting behaviors of significant complexity. The current mechatronics approach used in the design of modern robotic systems has proven sufficient to automate routine processes. However, the demand for robotic systems that can complete increasingly complex tasks is growing rapidly. This demand calls for a change in how such tasks are performed; in 2016, a need was identified for novel design principles that make robots more intelligent, adaptive, and capable of collaboration [1].

The fact is that the robotic systems in widespread use today can only perform pre-programmed tasks and thus lack the adaptivity that current trends in the use of robotic systems require. Many specific tasks can indeed be completed at sufficient quality based on predetermined instructions and a system’s configuration. However, such systems still require human intervention when changes occur either in the external environment or in the system itself. A good example of this problem is commercially used feed-pushing robots for cattle farms. Such robots can only follow a pre-programmed path; if for some reason they stray off the trajectory, a human worker must move the robot back manually [2]. Another example is fully automated production lines in manufacturing plants; these systems can only do the work if the task does not change and the system itself is fully functional. If something breaks down, the system cannot continue its operation even if it is, in fact, capable of carrying on. Moreover, even insignificant changes in the manufacturing process usually require full manual reconfiguration before production can continue [3].

To address these issues, much effort has been put into building increasingly intelligent autonomous systems that would be more adaptive. One of the approaches used in recent years is neural network-based machine learning, which acquires new knowledge for adapting to environmental changes [4]. Significant success has been achieved in specific domains, such as game playing [5] and autonomous driving [6]. Still, the ability to learn general models is so far limited to one domain or to a single machine (e.g., in the case of autonomous cars), and neural network-based approaches cannot explain the reasoning behind their decisions.

From a practical perspective, a control system that explicitly handles every possible scenario is not a viable solution: The designer simply cannot foresee and design for all the situations that may occur during the lifetime of a system. This is particularly impractical, and nearly impossible, in a system with a large number of components and multiple hierarchy layers, such as a multi-robot system. Despite certain challenges, multi-robot systems in general are considered to have high potential for future manufacturing plants. The main expected advantage is possible synergy among teaming robots, whereas a single large system might be less effective or too costly. One highly anticipated example of such cooperation is the following: If a single robot within the team malfunctions, the other team members can rearrange the tasks among themselves to continue production until the robot is available again. The robotics and manufacturing communities want these obvious benefits. However, developing such systems poses a number of challenges, because multi-robot systems can have innumerable configurations depending on the type and number of robots within the teams. The multi-agent paradigm provides mechanisms for standard situations, for example, the Contract-Net protocol for allocating an isolated task to the best performer [7]. Still, many other mechanisms are necessary to ensure viability, for example, for allocating multiple tasks and for identifying the need to reallocate tasks. Implementing such capabilities requires novel design approaches that manage this complexity.
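To make the single-task case concrete, the following is a minimal Python sketch of the announce, bid, and award cycle at the core of the Contract-Net protocol. The `Contractor` class, the cost-based bids, and the lowest-cost award rule are illustrative assumptions. The sketch is not the full protocol message set or an implementation from this paper.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Contractor:
    """Hypothetical robot agent that can bid on announced tasks."""
    name: str
    skills: dict[str, float]  # task type -> estimated cost (lower is better)

    def bid(self, task_type: str) -> Optional[float]:
        # Return an estimated cost, or None to decline the call for proposals.
        return self.skills.get(task_type)


def contract_net(task_type: str, contractors: list[Contractor]) -> Optional[str]:
    """Announce one task, collect bids, and award it to the lowest-cost bidder."""
    bids: dict[str, float] = {}
    for c in contractors:              # 1. task announcement (call for proposals)
        cost = c.bid(task_type)        # 2. bidding
        if cost is not None:
            bids[c.name] = cost
    if not bids:
        return None                    # no contractor can perform the task
    return min(bids, key=bids.get)     # 3. award to the best performer


robots = [
    Contractor("r1", {"weld": 4.0}),
    Contractor("r2", {"weld": 2.5, "paint": 1.0}),
    Contractor("r3", {"paint": 3.0}),
]
print(contract_net("weld", robots))
```

This shows why the text calls the mechanism suitable only for an *isolated* task. Each call allocates one task independently, and nothing in the cycle reconsiders earlier awards when conditions change.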

Based on all these considerations, one can conclude that multi-robot systems need the capability to adapt autonomously to changes in the external environment and in the system itself if autonomous robot-based solutions are to reach their full potential. This adaptivity must also be foreseen in the design phase of the system. This is a high bar for robotic systems. Yet system theory, in particular organization theory, has researched such systems considerably longer and has already tackled this challenge. The viable system model (VSM) was developed to specify the functions that must be implemented within human organizations to make them successful in the long term (i.e., viable) [8]. From the system theory viewpoint, human organizations and multi-robot systems (like any systems) are viewed as similar. This leads to the hypothesis that system theory models of organizations can be adapted to technical systems. Such an adaptation would bring benefits that have been available in human organizations for decades.

This paper adapts the VSM to multi-robot systems in combination with novel task allocation mechanisms among robots to enable long-term autonomy and viability. The adaptivity of the developed model has been tested in a case study of a conveyor system, where autonomous robots must process parts arriving via a conveyor belt. The experiments have been carried out in a simulated environment where each robot is simulated by an intelligent agent.

The remainder of the paper is organized as follows.

Section 2 describes the related works in two parts: First, the current approaches that can be used to develop adaptive autonomous systems, and second, the domains where the VSM has been applied.

Section 3 describes the ViaBots model and VSM adaptation to robotic systems.

Section 4 describes the application of the model to a conveyor belt case study in a simulated environment.

Section 5 analyzes the results achieved by the simulations.

Section 6 discusses the limitations of the ViaBots model and possible challenges in its implementation for other scenarios.

Section 7 concludes the paper and outlines future works.

2. Related Works

The related research can be viewed from two perspectives: Studies and applications addressing long-term autonomy, and the cybernetic approach, which treats long-term autonomy as a general feature of multi-robot systems.

Over the past decade, research on autonomous multi-robot systems has come much closer to the point where truly cooperative robot-robot and robot-human teams can be developed. Some early approaches, such as Nerd Herds swarming or ALLIANCE subsumption architectures, have been introduced and are well suited for focused tasks of limited complexity. These approaches, however, are not designed for long-term autonomous operation [9,10].

To be fully autonomous, a system needs to address several specific issues. The research community has extensively studied both robot–robot and human-robot scenarios and, as a result, has identified certain challenges. Some of these are simpler, technology-related issues. Examples are hardware and software malfunctions that lead to performance drops or function failures, and human-machine interaction failures due to improper system use [11]. Others are much more complex and relate to the cognitive behavior of the system as a whole. These include, but are not limited to, improper priority selection in decision making, improper strategy selection, and reduced trust leading to mission-level failures. They also include conflicting goals of team members, which reduce collaboration or even cause mission failures in human-machine scenarios [11]. In different application domains, these challenges are addressed using different methods. For space exploration applications, NASA has elaborated the Autonomous NanoTechnology Swarm (ANTS) concept, which is based on swarm intelligence ideas [12]. ANTS comprises robot teams with the following main features: Self-configuring, self-optimizing, self-healing, and self-protecting [12]. The main enabling functionality in ANTS is awareness, which maintains a dedicated control loop for updating knowledge and checking for changes. This loop comprises monitoring, recognition, assessment, and learning capabilities. To ensure long-term resilience, ANTS awareness is split into self-awareness and context-awareness [12].

Research on some narrow robot team applications, such as formations and flocking, has concentrated on environmental uncertainties, uncertain robot dynamics, and communications; the availability of communications is usually wrongly taken for granted. Unfortunately, all of these aspects are challenging in long-term autonomous operation. Therefore, [13] proposed using an extended Brain Emotional Learning Based Intelligent Controller (BELBIC) algorithm. Resilient BELBIC, or R-BELBIC, is based on flexible, biology-inspired intelligent control in order to achieve long-term operation [13]. Ref. [14] instead assumed that cooperativeness, a key element of resilience, is not guaranteed, which can compromise the whole system. Therefore, the Weighted Mean Subsequence-Reduced (W-MSR) algorithm [15] was used to achieve consensus within the team at all times. Other applications, such as logistics [16] and even single-robot resilience [17], have been studied extensively as well.
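The W-MSR rule used in [14] can be summarized as follows. Each normal agent discards up to f neighbor values strictly larger than its own, dropping the largest ones first. It does the same for values strictly smaller than its own, dropping the smallest ones first. It then averages what remains together with its own value. The minimal Python sketch below assumes uniform weights, synchronous updates, and a complete communication graph. These are simplifying choices for illustration, not necessarily the settings of [14] or [15], and `wmsr_step` and `run_consensus` are hypothetical names.

```python
def wmsr_step(own: float, neighbor_values: list[float], f: int) -> float:
    """One W-MSR update for a normal agent, assuming uniform weights."""
    larger = sorted(v for v in neighbor_values if v > own)
    smaller = sorted(v for v in neighbor_values if v < own)
    equal = [v for v in neighbor_values if v == own]
    # Drop the f largest values above own (all of them if there are at most f).
    kept_larger = larger[: max(len(larger) - f, 0)]
    # Drop the f smallest values below own (all of them if there are at most f).
    kept_smaller = smaller[f:]
    kept = [own] + kept_smaller + equal + kept_larger
    # Uniform weights: every retained value, including own, counts equally.
    return sum(kept) / len(kept)


def run_consensus(values: list[float], faulty: set[int], f: int,
                  steps: int) -> list[float]:
    """Synchronous W-MSR on a complete graph; faulty agents never update."""
    x = list(values)
    for _ in range(steps):
        new_x = list(x)
        for i in range(len(x)):
            if i in faulty:
                continue  # a misbehaving robot keeps broadcasting its own value
            neighbors = [x[j] for j in range(len(x)) if j != i]
            new_x[i] = wmsr_step(x[i], neighbors, f)
        x = new_x
    return x


# Agent 4 is faulty and keeps reporting 100.0; the other agents still agree
# on a value inside the range of their own initial values.
print(run_consensus([0.0, 1.0, 2.0, 3.0, 100.0], faulty={4}, f=1, steps=50))
```

The parameter `f` is the number of misbehaving neighbors the team is designed to tolerate. Trimming extreme values keeps a single faulty robot from dragging the consensus outside the range spanned by the normal robots.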

Unfortunately, while most studies concentrate on specific missions or applications of robot teams, very few propose formal frameworks or methods of system design. One of them, presented in [18], used formal design methods, such as Event-B and PRISM, to derive a technical design of the system through iterative steps. It also assessed the probability of goal achievement, thereby providing guidance for further development.

Overall, it can be concluded that the need for long-term autonomous robotic systems has emerged and grown rapidly over the past decade. Current developments are at an early stage and specific to narrow applications. We see a clear gap: General frameworks for designing long-term resilient multi-robot systems are missing.

The question of long-term adaptivity and autonomy, however, is not unique to technical systems. It has been one of the key themes in system theory and organizational theory for more than half a century. The research started with an empirical observation: Some enterprises and organizations persist for long periods of time, while others cease to exist rather quickly. Research devoted to understanding this phenomenon resulted in the VSM, a framework that specifies the characteristics and functions that ensure long-term survival (i.e., viability) [8].

The VSM was primarily intended for designing organizations. However, it has also been found useful for technical systems, because organizations and technical systems share certain properties (such as increasing complexity). The model is at least theoretically adaptable to technical systems [19]. The challenges that modern autonomous multi-robot systems and organizations face are very similar. Both types of systems need to work autonomously in a changing environment with a large number of components and increasing internal complexity. Moreover, from the systems theory viewpoint, all systems are viewed as having a similar structure [20], and the field of operation is merely an application. This implies that approaches that work cybernetically for non-technical systems should also work for technical systems.

The two main aspects of the VSM are managing complexity and adapting to changes: Organizations, as a rule, have a large number of elements, many internal links, and a highly turbulent external environment [20]. Autonomous multi-robot systems are highly complex as well, so they closely resemble organizations in this respect. The VSM ensures adaptivity, and manages and dissolves structural and functional complexity, through two main aspects. The first is a well-defined functional structure that includes information channels among functions. The second is the fractal nature of the model.

All systems, in particular complex systems, consist of several to many abstraction levels. For the system to be viable, each of these levels must be able to adapt, and do so in a similar fashion. This structural and functional hierarchy, with the same pattern repeated across hierarchy levels, is called recursivity. In an organization, recursivity is realized through departments and the general structure of the organization. When properly recognized, this property grants a crucial benefit: The system can continue to work even if some part of it malfunctions or is completely lost (this level of adaptivity characterizes living organisms, e.g., human beings). From the design perspective, the recursive approach is resource-effective, since the same design can be applied to different abstraction levels, enabling higher scalability [19]. In this sense, using a recursive model such as the VSM to model multi-robot systems would be highly beneficial.

In addition to its recursive nature, the VSM strictly defines functional blocks and the links among them, thus defining the functions needed for long-term adaptivity. These functional groups are structured in a way that gives the components of the system self-determination, yet keeps them in touch. Such operational independence makes a system less prone to fatal failures and gives it the freedom to achieve goals in alternative ways, thus ensuring adaptivity. Management in the VSM is structurally decentralized (i.e., any of the system’s components can perform management functions).

Application of the VSM in organizations provides better fault tolerance, more effective and responsive management, and an overall structure that supports the adaptivity of a system [19]. These benefits would also be crucial for the autonomy of technical systems. We therefore propose to use the viable system model [8] as a core framework for multi-robot system design.

The functional structure of the VSM consists of five functional blocks, often called subsystems 1, 2, 3, 4, and 5 [8]. They are also denoted by their functionality: Operation, coordination, control, intelligence, and policy, respectively [21]. These groups are defined and explained in detail in the next section. The model also defines the links among these functional groups. The links are characterized from two perspectives: The structure of the channel itself, and its dynamics (i.e., the data, information, or knowledge flowing through it). Stafford Beer, the author of the model, defined the structure of the channels himself [20]. These channels have also been classified based on the semantics and type of flow [22].

Since the model is primarily focused on organizations, it has so far mostly been validated in organizational structures and related areas. Examples include modeling the circulation of information and knowledge in organizations in their usual state [23] and during fluctuations (such as emergency or crisis scenarios) [24,25]. The goal of the modeling can be either to prove that the organization is viable or, more often, to find flaws in the organization, such as missing functions or flows [21,26]. A key research area is managing complexity in either normal or crisis situations [27]. Arguably the most extensive validation of the model was the establishment of a VSM-based economy in Chile during the 1970s [28].

The VSM is increasingly applied in the engineering (including IT) field, although mostly from the perspective of project management [29,30]. In [31], the VSM was used in the design of large and complex IT projects with the aim of managing complexity. In general, VSM-based analysis is used in designing information systems, because information technology can speed up slow knowledge and information flows or create missing ones [23]. There has also been recent research on how the VSM could be adapted to the design of technical systems [19]. More specific technical VSM-based designs include the design of smart distributed automation systems [32] and cyber security management [33].

It can be concluded that the idea of using the VSM for the design of complex technical systems has been around for some years, yet the model has not been fully validated in technical systems. We see two main reasons for this. First, the requirement for viability in technical systems has become crucial only during the past decade. It grew with the increasing complexity of the systems themselves and of the tasks given to them. To put it simply, there was no need to explore such models before this requirement emerged. That is why the existing models that combine the VSM and technical systems are at an early stage of development. Second, the VSM is organization-oriented, which creates several ambiguities and issues open to interpretation when adapting the model to technical systems. Adapting the overall organization of the system is straightforward. However, the content or semantics of the separate functional blocks, as well as the model dynamics, need to be formalized and validated. The next section explains the adaptation of the VSM to the technical domain.

3. ViaBots Model

Applying the VSM to technical systems, including robotic systems, requires several interpretations and adaptations. This section describes the ViaBots model for implementing viable multi-robot systems. First, the VSM and the adaptations necessary for robotic systems are discussed. This is followed by a description of how the intelligent agent paradigm is used within the model.

3.1. VSM as a Basis for Technical Systems

The VSM serves both as the functional structure and as the source of the functions’ semantic meaning. For this reason, this subsection describes the VSM in general and its subsystems in particular. The VSM is generally meant for organizational systems, but there have been some efforts to apply it in robotics. Some examples of applying VSM functions to robotic systems are explained here.

The structure of the model can be roughly divided into operation and management. The functional blocks of the VSM mostly contain managerial functions (subsystems 2, 3, 4, and 5). They are based on the idea that the general structure of management is independent of the system’s domain of operation [34].

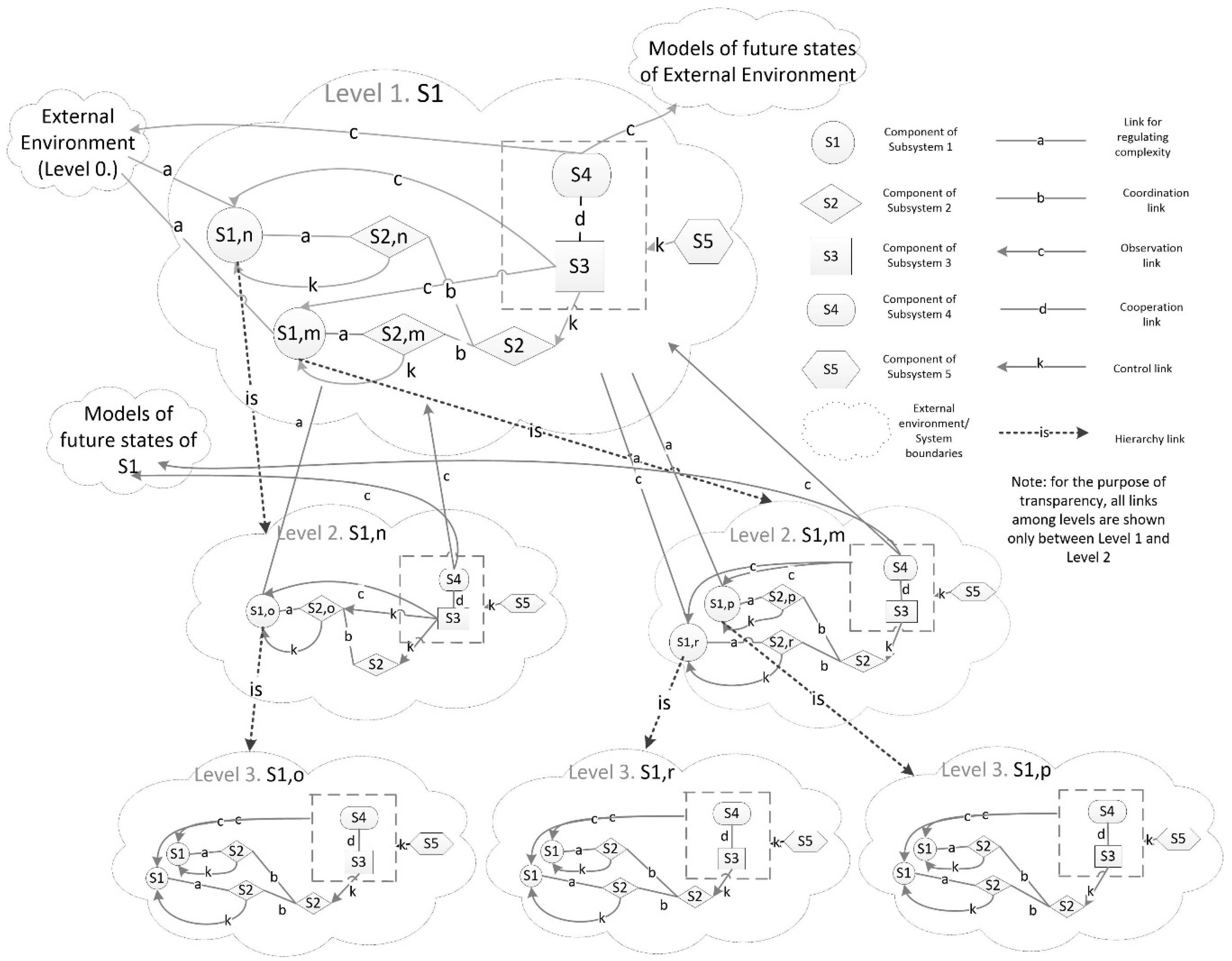

Figure 1 represents the structure of VSM.

S1 is the operation of the system and represents divisions and departments in an organization. In a multi-robot system, depending on the abstraction level, S1 functions can be carried out by individual robots or by robot actuators [19]. There is more than one S1 in each system; S1 is the part of the system that carries out the actual work. S1 is also crucial for implementing recursivity, since each S1 is itself organized according to the VSM one abstraction level lower (see Figure 1).
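The recursion described above, where each S1 is itself a viable system one level lower, maps naturally onto a recursive data structure. The Python sketch below is a minimal illustration. The `ViableSystem` class and the team, robot, and actuator names are hypothetical, and for simplicity the leaf units are treated as viable systems with no further S1 units.

```python
from dataclasses import dataclass, field


@dataclass
class ViableSystem:
    """Hypothetical recursive VSM node: S2-S5 management plus S1 units."""
    name: str
    operations: list["ViableSystem"] = field(default_factory=list)  # S1 units
    management: tuple[str, ...] = ("S2", "S3", "S4", "S5")

    def depth(self) -> int:
        """Number of abstraction levels from this system down to its leaves."""
        if not self.operations:
            return 1
        return 1 + max(op.depth() for op in self.operations)

    def describe(self, indent: int = 0) -> list[str]:
        """Outline the same VSM pattern repeated at every level."""
        lines = [" " * indent + f"{self.name}: " + ", ".join(self.management)]
        for op in self.operations:
            lines.extend(op.describe(indent + 2))
        return lines


# Team -> robots -> actuators: one class describes all three levels.
team = ViableSystem("team", [
    ViableSystem("robot1", [ViableSystem("arm"), ViableSystem("gripper")]),
    ViableSystem("robot2", [ViableSystem("arm")]),
])
print("\n".join(team.describe()))
```

The design choice this illustrates is the one the text credits for scalability. Adding a new abstraction level means nesting another `ViableSystem`, not writing a new management design.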

Each S1 has local management attached. Its main function is coordination, and it forms the second subsystem, S2. S2 consists of a management unit that directly oversees each S1 and a coordination block that coordinates all S1 activities. In an organization, these functions are carried out by department heads and their meetings. In robotic systems, the S2 functions are low-level control mechanisms or communication mechanisms among several robots [19].

S1 and S2 are needed to carry out the system’s purpose. However, they are not enough to create a viable system. Such a system is prone to schisms if the S2 units are unable to agree on resource distribution. Moreover, the system cannot pursue a common goal and only holds a view of the current situation, without any regard for future states. To solve these issues, senior management consisting of S3, S4, and S5 is introduced. S3 exerts control over S1 and S2. It compares the actual state to a desired state and steers the system towards its goals by allocating resources. In an organization, several departments carry out these functions, including the financial and human resources departments. In a multi-robot system, planning algorithms are used here [19].

A system that carries out S1, S2, and S3 functions has a goal and the ability to work towards it in an environment with minor fluctuations. However, such a system is unable to adapt to major changes and a turbulent external environment. To predict the future states of the external environment and of the system itself, an intelligence function, S4, is needed.

S4 tries to predict the future by learning and by analyzing the current situation. In an organization, S3 and S4 are often integrated. In robotic systems, this part must be carried out by artificial intelligence techniques (e.g., a distributed adaptation algorithm) [19].

Finally, a viable system needs to be able to redefine its goals and policies; otherwise, it cannot adapt to drastic changes. S5, the policy function, monitors S3 and S4 as well as the external environment to adjust the policy. In an organization, S5 is executed by shareholders, while in robotic systems a more dedicated planning algorithm might be needed [19]. Alternatively, the human user can partially perform the role of S5 by defining the current goal of the system.

There are a variety of links among these subsystems. These links are classified into five groups based on the information carried, the frequency, and the general qualities of the channels [22]. In Figure 1, the groups are denoted by the letters a, b, c, d, and k.

Type a links are bidirectional links that connect functions with different degrees of variety. In organizations, these are the channels through which the organization acquires the information needed for its operations and communicates the results back to the external environment. These links have a specific construction: An attenuator on one end reduces the number of states, and an amplifier on the other end amplifies the effects of the system so that it has an impact. In robotic systems, these links enable interaction with the external environment via sensors and actuators. They ensure that a robot only perceives a finite number of external environment states. It is up to the designer to choose the appropriate physical architecture of the robots.
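The attenuator and amplifier on a type a link can be pictured in code. In this view, the attenuator collapses a continuous sensor reading into one of a finite set of states, and the amplifier expands a single discrete decision into commands for several actuators. The Python sketch below is a deliberately simple illustration. The threshold-based discretization, the fan-out amplifier, and all names (`attenuate`, `amplify`, the state labels, and the actuator names) are assumptions, not the ViaBots implementation.

```python
import bisect


def attenuate(reading: float, thresholds: list[float], labels: list[str]) -> str:
    """Attenuator: map a continuous sensor reading onto a finite set of states."""
    if len(labels) != len(thresholds) + 1:
        raise ValueError("need exactly one more label than thresholds")
    # thresholds must be sorted in ascending order
    return labels[bisect.bisect_right(thresholds, reading)]


def amplify(decision: str, actuators: list[str]) -> list[tuple[str, str]]:
    """Amplifier: expand one discrete decision into a command per actuator."""
    return [(actuator, decision) for actuator in actuators]


# A distance sensor reduced to three states, and one decision fanned out.
state = attenuate(0.35, thresholds=[0.2, 0.8], labels=["near", "mid", "far"])
print(state, amplify("stop", ["left_wheel", "right_wheel"]))
```

However many values the sensor can produce, the robot reasons over only three states. This is the variety reduction that the attenuator is meant to provide.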

Type b links are specific to S2 and are used to coordinate several independent units (i.e., to send content back and forth) with the aim of reaching consensus among them. These links allow the robots to communicate among themselves and to share information on limited resources, including capabilities and physical restrictions on performing one task or another.

Type c links are monitoring links directed to the observer; they allow higher-level systems to make informed decisions. A type d link is an integration link: A bidirectional link between elements of the same variety that cooperate. Such links enable the overall functioning of the system by balancing its actual and future states. This means that robots fulfilling functions connected by type d links need to be in constant communication. Finally, type k links are control links used to give orders. The link itself serves as an amplifier and, in a robotic system, is used to pass commands.

3.2. Using a Multi-Agent System at the Logical Level of the System

Mobile robots comply with the notion of an intelligent agent, which interacts with the environment by perceiving inputs and influences it through outputs. The main characteristics of intelligent agents within multi-agent systems are autonomy, reactivity, and social capabilities, and robots forming a multi-robot system need all of them as well. For these reasons, we use the intelligent agent paradigm at the design level by treating robots as intelligent agents. Using agents at the logical level offers several additional benefits. One of them is the possibility of using existing interaction mechanisms that are necessary to build collaborative multi-robot systems. Moreover, it enables agent-based simulations during model development, which prove the concept before the model is implemented on an actual multi-robot system.

Consequently, within the ViaBots model each agent represents a robot, and agents, in turn, have functions corresponding to the VSM. Within this approach, all elements of the system are designed as agents, including robots, the user interface, and any type of control algorithm running on hardware or software. The percepts and actions of the agents are taken directly from the VSM links and defined by the design of the multi-agent system. The way an agent processes the percepts and maps them to actions (i.e., the internal architecture of the agent) can vary greatly depending on the purpose of the system and the complexity of the VSM functions. An agent-based approach at the logical level enables defining the interconnections between the VSM and the technical system implementation. The overall structure of the mappings is depicted in Figure 2. The concepts of viable systems have been formulated in the abstract domain, which is the VSM itself. In the logical domain, the ViaBots model has been designed based on general concepts of multi-agent systems. At this level, VSM concepts are tied to concepts of the multi-agent system domain. Finally, to implement the ViaBots model in a real development environment, a third mapping to the concepts used in the implementation domain is needed. Such a mapping can be performed for any environment used to implement a simulation or a real system. For example, the Java Agent DEvelopment framework (JADE) [35] can be used to develop a simulated environment.

First, concrete mappings to the logical domain must be performed, based on a multi-agent system design. Most multi-agent system design approaches include two general phases: (1) External design (i.e., designing the multi-agent system as a whole) and (2) internal design (i.e., designing the internal structure of individual agents) [36]. In this model, we address the internal design only as far as the VSM functions are concerned. The internal design of the agents that implements the actual tasks depends on the domain. An example of the internal design is given in the description of the case study.

External design usually consists of two major parts. First, the tasks that the system must perform have to be defined, grouped into conceptual structures (for example, roles), and assigned to the agents. Second, the interactions must be designed, including simple message passing as well as more complex interaction protocols.

The VSM eases the external design, since it already defines the requirements. It thus determines what kinds of tasks and communications the system should have, as well as how these tasks should be grouped into roles. The overall structure of the agent tasks and roles is depicted in Table 1. To define the ViaBots model based on the VSM, the functions (available in [19]) have been sorted and analyzed. The analysis determined which functions belong to the agent functions and which belong to the interaction design. This involved the following four steps.

Organization-specific functions were excluded, in particular “creation of working atmosphere”, which refers directly to the emotional and social needs of humans.

Functions that directly refer to the system’s communication with other systems (such as “control channel functions”) were assigned to interaction design (i.e., communication).

Functions confined to a single subsystem, without the involvement of other subsystems, were mapped to tasks (such as “learning”).

Functions that involve both communication with other subsystems and specific tasks were split between task design and interaction design. For example, “resource relocation” serves both as a task for S3 and as an interaction between two subsystems.
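The four sorting steps above amount to a small decision procedure over each VSM function. The Python sketch below encodes them in order. The boolean flags and the `Mapping` categories are hypothetical labels chosen for illustration, and deciding each flag for a given function remains a manual design judgment, as in the text.

```python
from enum import Enum


class Mapping(Enum):
    EXCLUDED = "excluded"
    INTERACTION = "interaction"
    TASK = "task"
    TASK_AND_INTERACTION = "task and interaction"


def map_vsm_function(human_specific: bool, only_communication: bool,
                     involves_other_subsystems: bool) -> Mapping:
    """Apply the four sorting steps, in order, to one VSM function."""
    if human_specific:                   # step 1: organization-specific functions
        return Mapping.EXCLUDED
    if only_communication:               # step 2: pure communication functions
        return Mapping.INTERACTION
    if not involves_other_subsystems:    # step 3: local functions become tasks
        return Mapping.TASK
    return Mapping.TASK_AND_INTERACTION  # step 4: split between both designs


# The examples named in the text, with flags assigned by hand.
print(map_vsm_function(True, False, False))   # creation of working atmosphere
print(map_vsm_function(False, True, True))    # control channel functions
print(map_vsm_function(False, False, False))  # learning
print(map_vsm_function(False, False, True))   # resource relocation
```

The order of the checks matters. A human-specific function is excluded even if it also involves communication, which mirrors how the steps are listed.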

The VSM functions are independent of the system’s structure. Therefore, the subsystems correspond to roles that can be given to any of the agents (structural units), and tasks correspond to the internal design-specific functions identified before. Tasks are mapped straightforwardly onto the functions, or onto parts of a channel in the case of amplifiers and attenuators. The only exception is the operation (S1) tasks. These are designed depending on the domain of the system, so there can be multiple such tasks. The tasks are grouped into roles according to the VSM; there are (5, n) roles, since S1 can, and most probably will, have more than one role. Each of the remaining subsystems has one role assigned.

Finally, the assignment of roles to agents depends on the agents’ capabilities, since several tasks require specific capabilities. First, to interact with the environment, S1 agents should have reduction and amplification capabilities (i.e., sensors and effectors). Second, S4 agents should have environment monitoring capabilities.

It is also crucial to note that in the VSM, roles are distributed dynamically, and the structure of the functioning system can differ from its initial configuration.
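Capability-based, dynamic role assignment can be sketched as a matching between role requirements and agent capabilities that is simply recomputed when the set of agents changes. The Python sketch below follows the requirements stated above: S1 needs sensors and effectors, and S4 needs environment monitoring. The capability names, the `assign_roles` function, and its least-loaded greedy rule are illustrative assumptions, not the ViaBots assignment mechanism.

```python
# Capability requirements per VSM role, following the text; names are hypothetical.
REQUIRED: dict[str, set[str]] = {
    "S1": {"sensors", "effectors"},
    "S2": set(),
    "S3": set(),
    "S4": {"environment_monitoring"},
    "S5": set(),
}


def assign_roles(agents: dict[str, set[str]],
                 roles: list[str]) -> list[tuple[str, str]]:
    """Give each role to the eligible agent holding the fewest roles so far."""
    load = {name: 0 for name in agents}
    assignment = []
    for role in roles:
        candidates = [n for n, caps in agents.items() if REQUIRED[role] <= caps]
        if not candidates:
            raise ValueError(f"no agent can take role {role}")
        chosen = min(candidates, key=lambda n: (load[n], n))  # ties broken by name
        assignment.append((role, chosen))
        load[chosen] += 1
    return assignment


agents = {
    "r1": {"sensors", "effectors"},
    "r2": {"sensors", "effectors"},
    "hub": {"environment_monitoring"},
}
roles = ["S1", "S1", "S2", "S3", "S4", "S5"]  # n = 2 operational roles
print(assign_roles(agents, roles))
# If r2 is lost, re-running the assignment redistributes its roles.
print(assign_roles({n: c for n, c in agents.items() if n != "r2"}, roles))
```

Re-running the assignment after a robot is lost shows in miniature how the functioning structure can diverge from the initial configuration.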

Depending on the function and channel it supports, the following interaction types were identified (overview in Table 2):

Type a and type c channels transfer information; in this case, a simple message is sent. When variety amplification is needed, a broadcast message is sent.

Type k channels are control flows that require feedback. For this reason, an inform-type message is sent, and a confirmation must be sent back.

Type b and type d channels involve cooperation and negotiation, which require complex interaction protocols that must be designed separately.
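The mapping from channel type to interaction pattern can be summarized in a few lines of code. The Python sketch below is a minimal version. The `Message` structure, the FIPA-style performative labels "inform" and "confirm", and the `send` and `confirm` helpers are assumptions for illustration. The sketch does not use the API of JADE or of any other agent platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    sender: str
    receivers: list[str]
    performative: str                  # FIPA-style label, e.g. "inform" (assumed)
    content: str
    requires_confirmation: bool = False


def send(channel: str, sender: str, receivers: list[str], content: str,
         amplify: bool = False, all_agents: Optional[list[str]] = None) -> Message:
    """Build the message that a VSM channel of the given type would carry."""
    if channel in ("a", "c"):
        if amplify:  # variety amplification: broadcast to every other agent
            if all_agents is None:
                raise ValueError("broadcast needs the list of all agents")
            receivers = [a for a in all_agents if a != sender]
        return Message(sender, receivers, "inform", content)
    if channel == "k":  # control flow: the receiver must confirm the order
        return Message(sender, receivers, "inform", content,
                       requires_confirmation=True)
    if channel in ("b", "d"):
        raise NotImplementedError("type b/d channels need a dedicated protocol")
    raise ValueError(f"unknown channel type {channel!r}")


def confirm(order: Message, receiver: str) -> Message:
    """Acknowledge a type k order back to its sender."""
    return Message(receiver, [order.sender], "confirm", order.content)
```

Raising `NotImplementedError` for type b and type d channels mirrors the statement that their negotiation protocols must be designed separately. Only the three simple patterns are covered here.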

The VSM provides guidelines on how a VSM-based system should be designed; however, several aspects, such as the content of the functions, are open to interpretation. These questions are addressed in the next subsection.

3.3. Agent-Based Model for ViaBots

This subsection outlines two contributions to the implementation of the model. The first is a set of suggestions on how the tasks can be executed within the multi-agent system. The second is the detailed ViaBots model ensuring adaptation, which is central to developing an adaptive system.

For each of the subsystems S1 to S5, the VSM defines a set of functions and gives some recommendations on how they should be implemented; yet the formalization of tasks for a technical system remains vague. Based on the tasks listed in Table 1, Table 3 provides a minimal list of tasks for implementing adaptivity in autonomous systems, as well as suggestions on how to do so (last column). All functions mentioned in the VSM are included in this minimal set except “management of external contacts”. That function is needed only if there are multiple autonomous systems in the external environment.

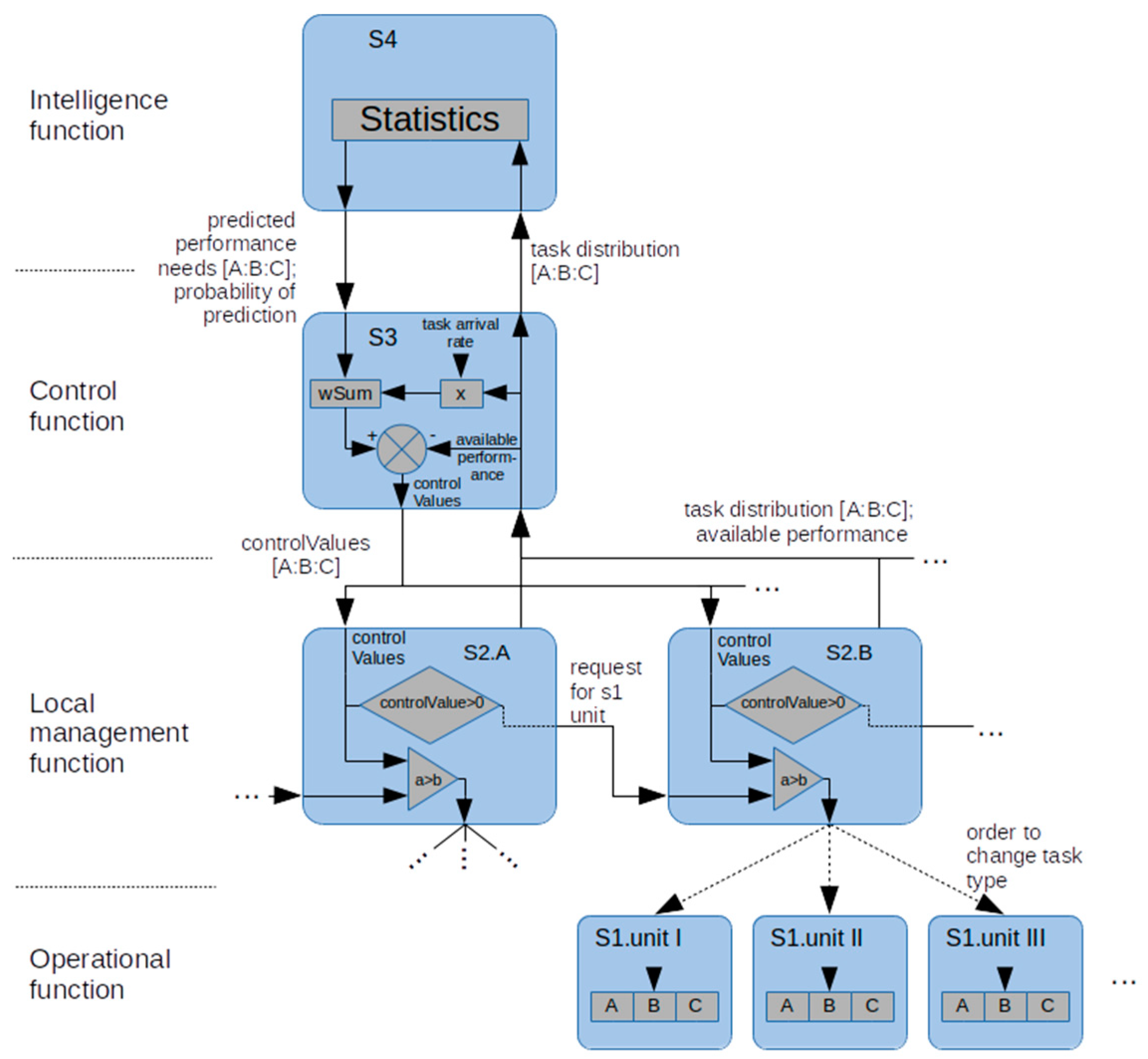

Overall, the ViaBots model functions are implemented as described below and shown in Figure 3. Operational units (i.e., robots or agents carrying out S1 functions) are capable of executing several types of tasks. Upon receiving an order from S2, they reconfigure to execute tasks of the requested type. Operational units also perceive the environment and send relevant information about current tasks to the corresponding S2 for aggregation.

S2 implements the mechanisms for exchanging operational units, which allow diverse operational units to be used for the tasks at which they are most productive. The principle of the S2 negotiations is to respond to requests and to give away operational units that are relatively ineffective at their current task. The exchange of operational units is based on a comparison of the control values received from the control subsystem, S3. A positive control value for an S2 means that the processing power for the corresponding task type is insufficient, so that S2 sends requests to the other S2s. When an S2 receives such a request, it calculates whether committing its current least productive operational unit would be valid. A commitment is valid if, after the request is fulfilled, the source S2 would still have a lower control value than the receiving S2.
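The exchange rule described above can be sketched as follows. The text does not specify how control values change when a unit moves. This sketch therefore assumes that a control value is desired power minus available power, so giving away a unit raises the source's value by that unit's power at the source task, and receiving it lowers the requester's value by its power at the requester's task. The class names, the per-unit `productivity` table, and the single-pass `negotiate` loop are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OperationalUnit:
    name: str
    productivity: dict[str, float]  # task type -> processing power (assumed known)


def power(unit: OperationalUnit, task_type: str) -> float:
    return unit.productivity.get(task_type, 0.0)


@dataclass
class S2:
    task_type: str
    control_value: float  # from S3; > 0 means insufficient processing power
    units: list[OperationalUnit] = field(default_factory=list)

    def handle_request(self, requester: "S2") -> Optional[OperationalUnit]:
        """Give away the least productive unit if the commitment is valid."""
        if not self.units:
            return None
        unit = min(self.units, key=lambda u: power(u, self.task_type))
        # Assumed update rule: control value = desired power - available power.
        source_after = self.control_value + power(unit, self.task_type)
        receiver_after = requester.control_value - power(unit, requester.task_type)
        if source_after >= receiver_after:
            return None  # invalid: the source would end up needier than the receiver
        self.units.remove(unit)
        requester.units.append(unit)
        self.control_value, requester.control_value = source_after, receiver_after
        return unit


def negotiate(requester: S2, others: list[S2]) -> list[str]:
    """An S2 with a positive control value asks the other S2s for units."""
    moved = []
    for other in others:
        if requester.control_value <= 0:
            break  # demand satisfied; stop requesting
        unit = other.handle_request(requester)
        if unit is not None:
            moved.append(unit.name)
    return moved
```

With the validity check, a unit moves only when the transfer does not simply reverse the imbalance. Otherwise, two S2s could keep passing the same unit back and forth.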

S2 aggregates information from its subordinate operational units and provides the control subsystem with the processing power available for tasks of the corresponding type and the number of available tasks.

S3 collects information from all S2 to obtain an overview of the current situation: the number of tasks of each type available for execution and the total processing power of the system. Using this information, S3 calculates the desired power for processing the tasks of each type currently available for execution. These values are combined, by a weighted sum, with the desired power for processing the predicted number of tasks. S3 then calculates a control value for each S2 (i.e., for each task type).
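The following sketch shows one way S3 could turn the S2 reports into control values. The proportional rule for desired power (total power split according to each type's share of tasks), the sign convention (desired minus available power), and the names `S2Report`, `desired_power`, and `control_values` are assumptions for illustration; the default prediction weight of 0.7 is the value selected experimentally in Section 5.2.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class S2Report:
    """What one S2 reports to S3 (hypothetical structure)."""
    task_type: str
    power: float   # summed processing power of its operational units
    tasks: int     # number of tasks of this type available for execution


def desired_power(counts: dict[str, float], total_power: float) -> dict[str, float]:
    """Split total power in proportion to task counts (assumed rule)."""
    total = sum(counts.values())
    if total == 0:
        return {t: total_power / len(counts) for t in counts}  # no tasks: split evenly
    return {t: total_power * c / total for t, c in counts.items()}


def control_values(reports: list[S2Report], predicted: dict[str, float],
                   w_pred: float = 0.7) -> dict[str, float]:
    """Weighted sum of predicted and observed desired power, minus available power."""
    total_power = sum(r.power for r in reports)
    d_obs = desired_power({r.task_type: r.tasks for r in reports}, total_power)
    d_pred = desired_power(predicted, total_power)
    cvs = {}
    for r in reports:
        desired = w_pred * d_pred[r.task_type] + (1 - w_pred) * d_obs[r.task_type]
        cvs[r.task_type] = desired - r.power   # positive: this S2 needs more power
    return cvs
```

Under this rule the control values always sum to zero, so every S2 with a shortage is matched by at least one S2 with a surplus that can answer its requests.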

S3 provides the intelligence subsystem S4 with current information on the system’s state. S4 stores this information and uses it to update its model of the world. Using this model, S4 predicts the number of tasks of each type that may become available and returns this prediction to S3.
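The text does not specify S4's world model (in the experiments of Section 5.2 the simulator supplied exact predictions), so the sketch below uses exponential smoothing of the task counts reported by S3 purely as a placeholder; the class name `S4Predictor` and the smoothing factor `alpha` are hypothetical.

```python
from __future__ import annotations


class S4Predictor:
    """Placeholder S4 world model: exponentially smoothed task counts per type."""

    def __init__(self, task_types: list[str], alpha: float = 0.1) -> None:
        self.alpha = alpha                          # weight of the newest observation
        self.history: list[dict[str, int]] = []     # raw states received from S3
        self.estimate = {t: 0.0 for t in task_types}

    def update(self, observed: dict[str, int]) -> None:
        """Store the state reported by S3 and move each estimate toward it."""
        self.history.append(dict(observed))
        for t, n in observed.items():
            self.estimate[t] += self.alpha * (n - self.estimate[t])

    def predict(self) -> dict[str, float]:
        """Predicted number of tasks of each type, returned to S3."""
        return dict(self.estimate)
```

Any model that maps the stored history to per-type task counts could replace the smoothing step without changing the interface to S3.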

For technical systems, the mission of the system is defined by humans, and in many cases the available resources and other global settings are also configured by the user. S5 is also the most complex subsystem; to balance implementation complexity against the system’s ability to demonstrate adaptation, policy-level decisions are reduced to determining the number of operational units and are implemented as control elements in the model’s user interface. Thus, in the current version of the model, the user fulfills the functions of S5.

5. Results

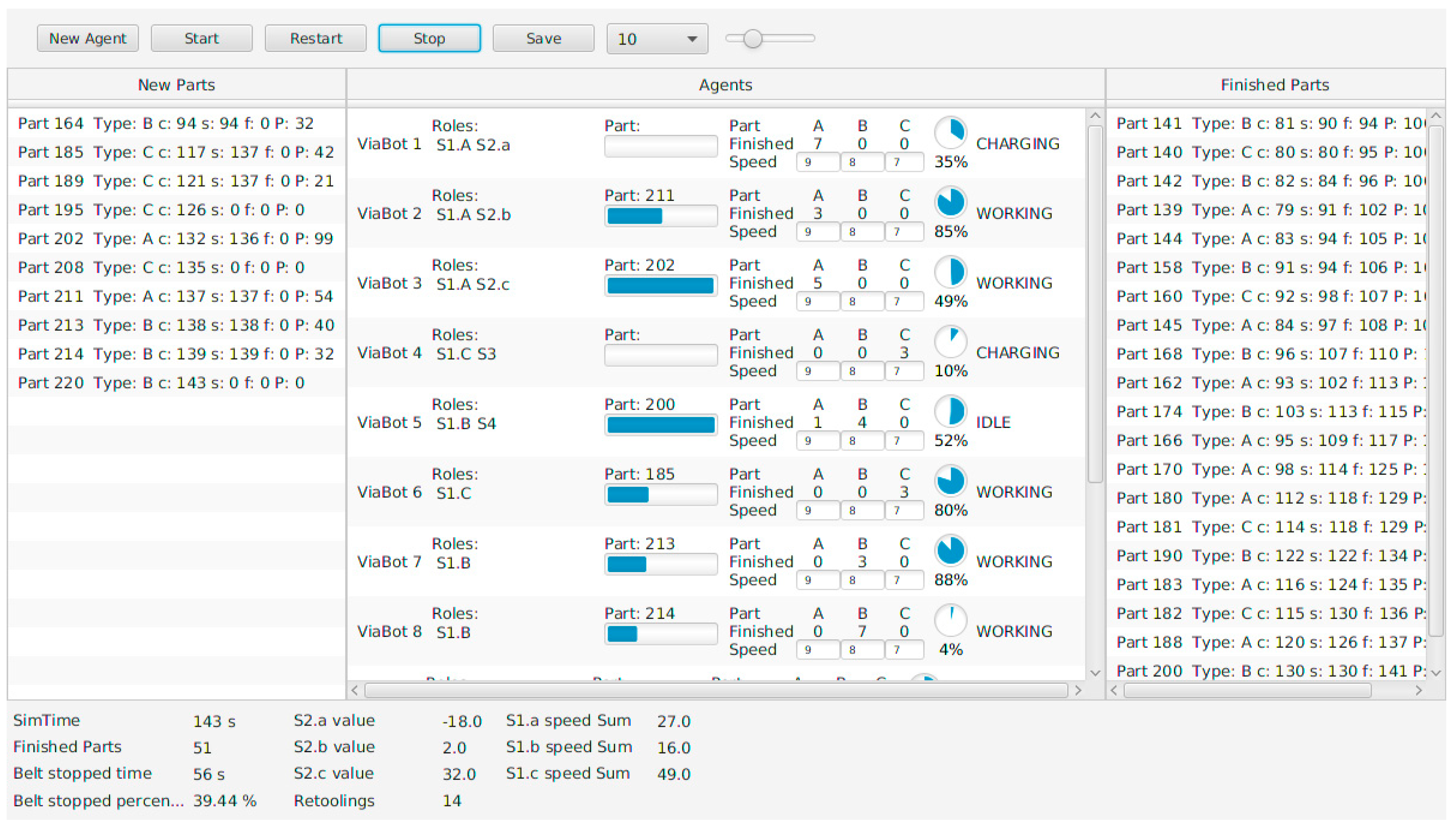

This section describes the experiments performed with the simulated multi-robot conveyor system to validate the implemented model. First, since the environment is inherently stochastic, the repeatability of the experiments was analyzed to determine how much simulations with the same parameters differ. Second, the relative importance of the predicted and the currently observed part distribution probabilities was analyzed; the model uses both, but their weights must be determined experimentally. Third, the system’s performance was analyzed as a function of the number of agents processing the parts, in order to choose the number of agents for evaluating the efficiency of the ViaBots model, since the results may differ if the number of agents is inadequately large or small. Finally, the performance of the system with different parts of the ViaBots model active was analyzed to validate the proposed model.

In all experiments, a battery capacity of 100 Wh was used. The power unit W used in this simulation corresponds to the SI watt, except that time is measured in iterations. The battery was charged at a constant 5 W. Processing parts of types A, B, and C consumed 2, 3, and 4 W (per iteration), respectively. Executing any additional management role consumed one extra watt.
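The energy bookkeeping above can be checked with a few lines of arithmetic. The sketch assumes that energy is counted in W·iterations (so the 100 Wh battery holds 100 W·iterations), that consumption is charged once per iteration in which a part is processed, and that each management role adds 1 W on top of the processing cost; the function names are hypothetical.

```python
import math

BATTERY_CAPACITY = 100.0                          # Wh, with the hour replaced by one iteration
CHARGE_RATE = 5.0                                 # W while charging
PROCESSING_COST = {"A": 2.0, "B": 3.0, "C": 4.0}  # W per iteration of processing
MANAGEMENT_COST = 1.0                             # extra W per management role


def consumption(part_type: str, management_roles: int = 0) -> float:
    """Power drawn in one iteration of processing `part_type`."""
    return PROCESSING_COST[part_type] + MANAGEMENT_COST * management_roles


def iterations_until_empty(part_type: str, management_roles: int = 0,
                           charge: float = BATTERY_CAPACITY) -> int:
    """Full processing iterations that a given charge can sustain."""
    return math.floor(charge / consumption(part_type, management_roles))


def iterations_to_full(charge: float = 0.0) -> int:
    """Charging iterations needed to refill the battery from `charge`."""
    return math.ceil((BATTERY_CAPACITY - charge) / CHARGE_RATE)
```

For example, a robot processing type C parts while holding one management role draws 5 W, exactly the charging rate, and a full battery lasts 20 iterations; refilling an empty battery also takes 20 iterations.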

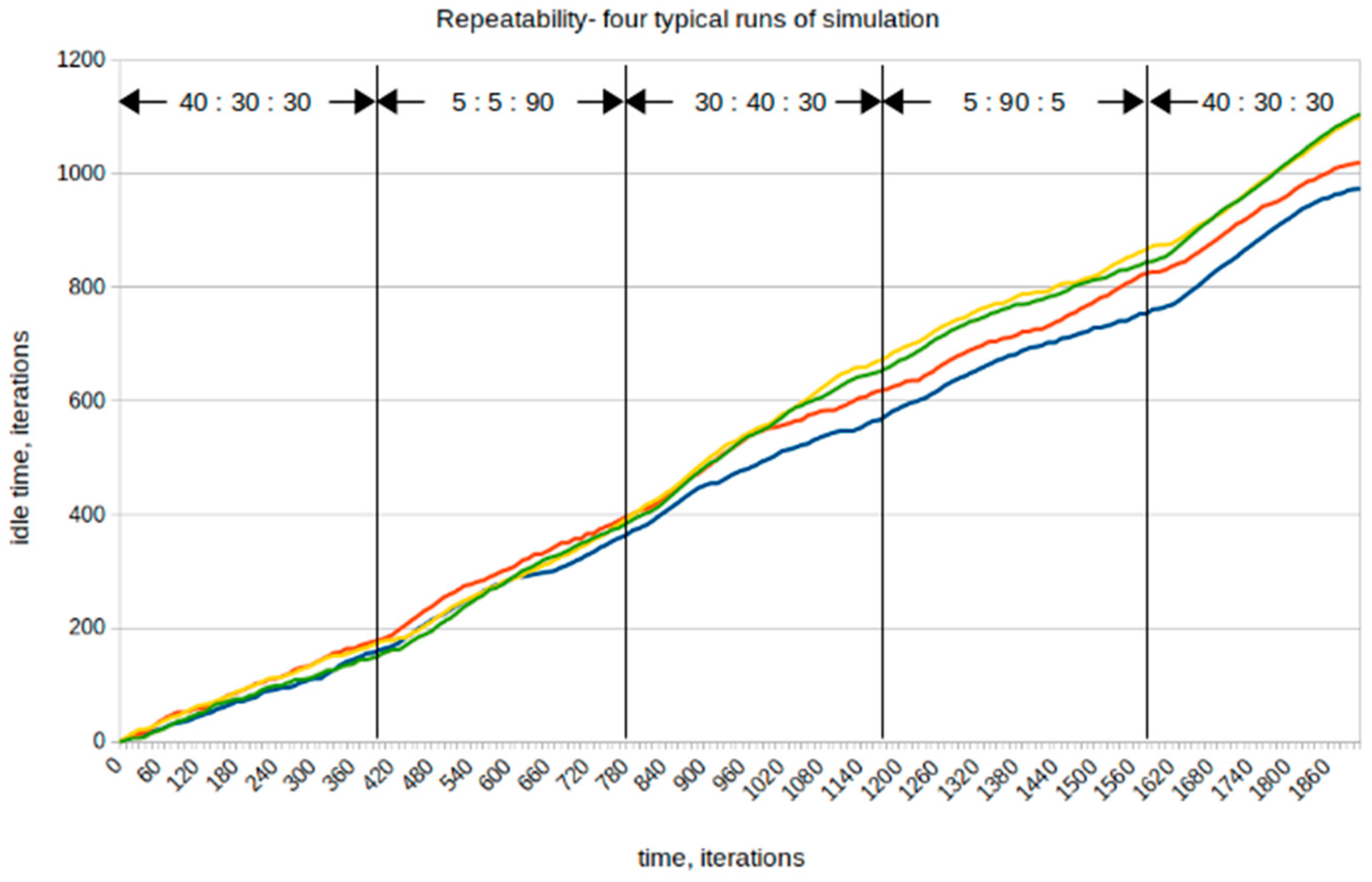

Also, in all experiments, the length of the conveyor belt was equal to the number of agents, so that all robots, at least in the simulated environment, were able to work simultaneously. The pattern of periods with distinct average distributions of incoming part types was the same for all experiments and is shown at the top of Figure 7.

5.1. Repeatability of the Experiments

Due to the stochastic nature of the simulated production line managed by the ViaBots model, several simulation runs were performed to determine how much the results can differ with the same parameters. Four typical runs of the simulation are shown in Figure 7. Twelve identical robots were used in this experiment. Processing speeds were set as the percentage of a part’s total processing time completed per iteration (9% for part type A, 8% for part type B, and 7% for part type C). The results differed by up to 10% between runs. Therefore, the mean of four runs was used to evaluate system performance in the further experiments.
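To make the speed settings concrete: if a speed of p% per iteration means that each iteration completes p% of a part and progress does not carry over between parts (our reading of the text, not a stated definition), the number of whole iterations needed per part follows directly. The helper name is hypothetical.

```python
import math

SPEEDS_PERCENT = {"A": 9, "B": 8, "C": 7}   # per-iteration progress used in Sections 5.1-5.3


def iterations_per_part(percent_per_iteration: float) -> int:
    """Whole iterations to finish one part when each iteration adds this much progress."""
    return math.ceil(100 / percent_per_iteration)


if __name__ == "__main__":
    for part, p in SPEEDS_PERCENT.items():
        print(part, iterations_per_part(p))   # A 12, B 13, C 15
```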

5.2. The Balance between Prediction and Observations

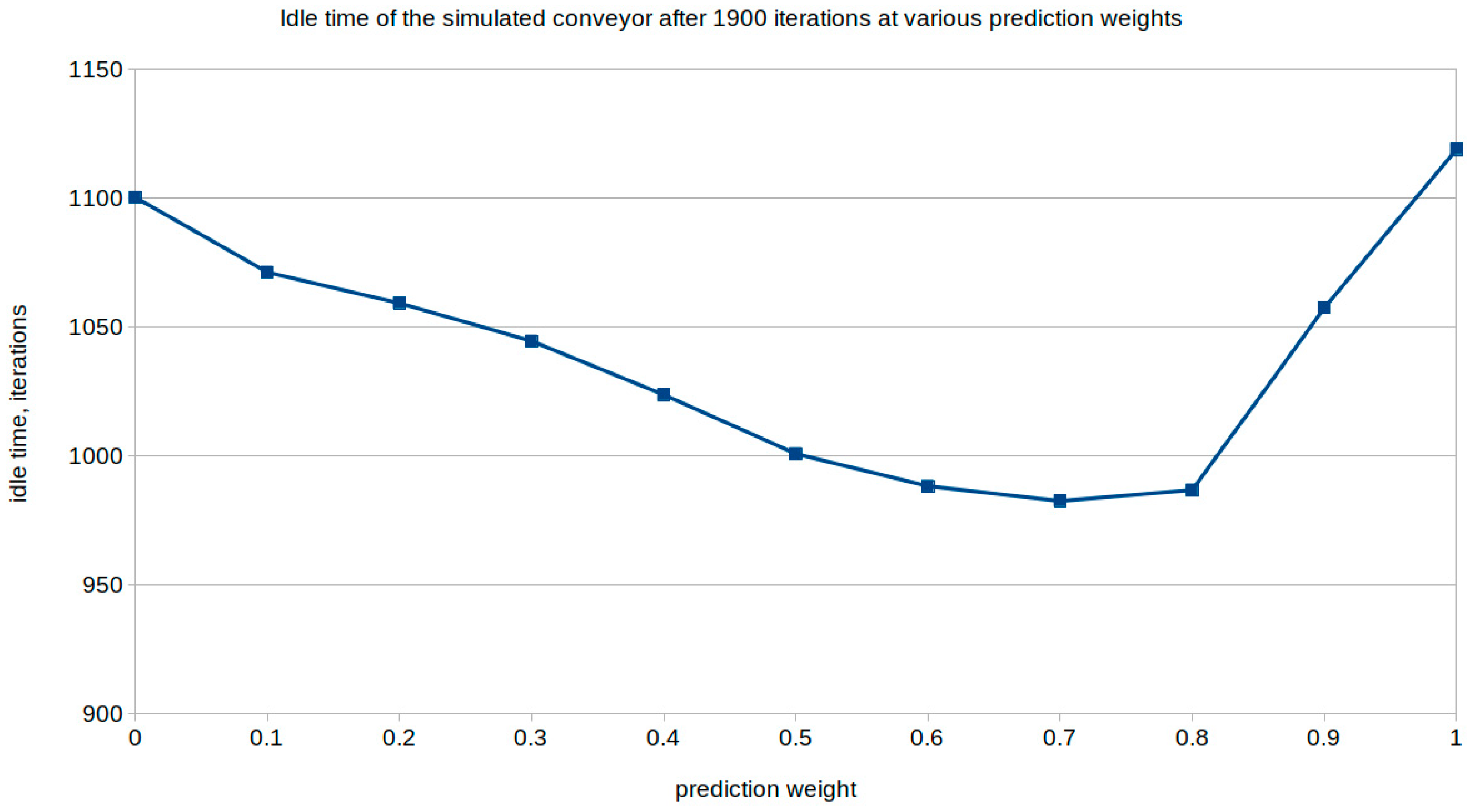

In this evaluation, the balance between the prediction and the observation, expressed as the weights used by S3, was determined empirically. In general, the optimal weights for the predicted and observed incoming part rates depend on the accuracy of the prediction. To exclude the influence of prediction accuracy from the results, this study took advantage of the simulated environment, which allowed a perfectly accurate prediction to be used. Other factors influencing these weights were system characteristics (e.g., the mechanism for exchanging operational units between S2). The idle time was measured while varying the weight distribution, yielding the dependency shown in Figure 8. The results show that, for this specific setup, the production line was most efficient with a prediction weight between 0.6 and 0.8. The results were obtained as the average of four consecutive simulation runs at the 1900th iteration, after four periods with different average distributions of incoming parts. Again, 12 identical robots were used, with processing speeds of 9%, 8%, and 7% per iteration for parts A, B, and C, respectively.

Large prediction weights caused the system to ignore the actual distribution of incoming parts on the belt, even though the predicted values were exact. Consequently, S3 used a prediction weight of 0.7 for all further calculations of the control value.
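Written as a formula, with notation introduced here for illustration, the desired processing power that S3 uses for task type k is a weighted sum of the desired power derived from the prediction and the desired power derived from the current observations:

```latex
D_k = w\,D_k^{\mathrm{pred}} + (1 - w)\,D_k^{\mathrm{obs}}, \qquad w = 0.7 .
```

With w = 1 the observed term vanishes, which is why large prediction weights make the system ignore the parts actually on the belt; with w = 0.7 the observations still contribute 30% of the desired power.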

5.3. Number of Agents

The main factor influencing the performance of the system was the number of operational units (agents in this case). To compare various aspects of the ViaBots model, the number of operational units should not oversaturate the system, since the main goal of management is to make the system viable with limited resources. Consequently, the quality of management is most visible when the number of operational units is limited.

Figure 9 shows how the system’s performance (again measured as idle time) depends on the number of agents available in the system. As in the previous experiments, identical robots were used, with processing speeds of 9%, 8%, and 7% per iteration for parts A, B, and C, respectively. Based on these experiments, it was determined that with 15 agents, the effective use of any single agent can still significantly change the performance of the system, so adaptivity remains visible.

5.4. The Efficiency of the ViaBots Model

Finally, the ViaBots model was validated on the simulated production line by comparing different levels of ViaBots model engagement, using the parameters determined in the previous experiments. Four usage scenarios were analyzed:

Normal operation, where the ViaBots model is fully applied;

Operation without prediction updates from S4;

Operation without control value updates from S3;

Independent operation of processing units.

Since the S5 functions were limited by the specific implementation (i.e., the system is configured by the user and the goal of the conveyor system does not change), S5 functionality was not considered in this evaluation, and only the use of systems S2–S4 was validated.

Four periods with distinct distributions of incoming parts formed the environmental fluctuations to which a group of 15 operational units with three different ability profiles had to adapt. Each ability profile processed two part types at 0.09 parts per iteration and the remaining type at 0.03 parts per iteration. Thus, five robots had speeds of 3%, 9%, and 9% per iteration for parts A, B, and C, respectively; five robots had speeds of 9%, 3%, and 9%; and the remaining five had speeds of 9%, 9%, and 3%.
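The ability profiles above can be written out directly, which also shows why assignment matters: with five robots per part type in both cases, putting every robot on its slow type instead of on one of its fast types cuts the line's total processing speed by a factor of three. The helper names and the list-of-dicts representation are illustrative assumptions.

```python
from __future__ import annotations

TYPES = ("A", "B", "C")


def build_team(per_profile: int = 5, fast: float = 0.09,
               slow: float = 0.03) -> list[dict[str, float]]:
    """Three ability profiles, each slow at exactly one part type."""
    team = []
    for weak in TYPES:
        profile = {t: (slow if t == weak else fast) for t in TYPES}
        team.extend(dict(profile) for _ in range(per_profile))
    return team


def throughput(team: list[dict[str, float]], assignment: list[str]) -> float:
    """Total parts per iteration when robot i works only on part type assignment[i]."""
    return sum(robot[t] for robot, t in zip(team, assignment))


team = build_team()
weak_assignment = ["A"] * 5 + ["B"] * 5 + ["C"] * 5    # each robot on its slow type
strong_assignment = ["B"] * 5 + ["C"] * 5 + ["A"] * 5  # each robot on a fast type
print(round(throughput(team, weak_assignment), 2))    # 0.45
print(round(throughput(team, strong_assignment), 2))  # 1.35
```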

When S4 was suppressed, the production line continued to operate using prediction data (part distributions) that might be outdated and therefore might not correspond to the actual part arrival rate (either current or future). The S3 subsystem still calculated partially valid control values, because it also used actual data with a weight of 0.3. After four distinct periods, the loss in performance was 14%, as shown by the red line in Figure 10.

Suppressing the control function S3 reduced efficiency even further. When the agent carrying out the S3 role was paused, the local management subsystems S2 kept a fixed control value, as no updates were received. This can even lead to a complete stop of part processing if the incoming part distribution varies substantially. Nevertheless, the distribution of operational units is based on their performance and matches the distribution of incoming parts in the first period. Furthermore, fixed control values prohibit any operational unit transfer, which avoids the loss of processing power caused by reconfiguration time. However, as shown by the yellow line in Figure 10, the inability to adapt to the changing environment outweighs these benefits, leading to a performance loss of 40% compared with the fully engaged ViaBots model.

Finally, if the local management subsystems S2 selected operational units regardless of their performance and ceased further collaboration, the ViaBots model could be considered not applied at all. In this situation, operational units were not managed: resources were distributed according to the distribution of incoming parts in the first period, without taking into account the units’ ability to process parts of a particular type. The green line shows this scenario, in which the system’s idle time increased by 90% compared with the fully applied ViaBots model.