Abstract

This paper presents a comprehensive survey of unmanned aerial vehicle (UAV)-centric situational awareness (SA), delineating its applications, limitations, and underlying algorithmic challenges. It highlights the pivotal role of advanced algorithmic and strategic insights, including sensor integration, robust communication frameworks, and sophisticated data processing methodologies. The paper critically analyzes multifaceted challenges such as real-time data processing demands, adaptability in dynamic environments, and complexities introduced by advanced AI and machine learning techniques. Key contributions include a detailed exploration of UAV-centric SA’s transformative potential in industries such as precision agriculture, disaster management, and urban infrastructure monitoring, supported by case studies. In addition, the paper examines algorithmic approaches for path planning and control, as well as strategies for multi-agent cooperative SA, addressing their respective challenges and future directions. Moreover, this paper discusses forthcoming technological advancements, such as energy-efficient AI solutions, aimed at overcoming current limitations. This holistic review provides valuable insights into UAV-centric SA, establishing a foundation for future research and practical applications in this domain.

1. Introduction

1.1. Background and Motivation

The emergence of UAVs has brought a promising advancement in capturing SA for diverse applications. UAVs offer many advantages, including the ability to perform high-risk flights, navigate precisely, and capture high-quality images and videos using a variety of sensors such as multispectral, hyperspectral, thermal, LiDAR, gas, or radioactivity sensors. Their versatile deployment, mobility, and capacity for real-time data acquisition, combined with the latest advancements in onboard processors, make UAVs well suited to SA in various applications.

Vehicle-related SA refers to the real-time perception, comprehension, and projection of the surrounding environment by the vehicle’s systems. It involves the fusion and interpretation of multi-sensor data to generate an accurate, dynamic representation of relevant factors, including obstacles, traffic, terrain, and contextual information, enabling informed decision-making and proactive responses to ensure safe and efficient operation.

SA facilitates automatic identification of, and reasoning about, knowledge obtained in a complex and dynamic environment, in order to understand the current state of the environment and anticipate its future state. It is essential for an autonomous vehicle, such as a UAV, to operate successfully in continuously changing situations. SA keeps the vehicle focused on only relevant and meaningful information [1]. UAVs can operate as mobile agents to enable continuous SA of a vast area and penetrate constricted spaces out of reach of humans, thereby saving considerable time and effort. Consistently analyzing the information and data captured by distributed sensors throughout operations, including those onboard UAVs, significantly enhances comprehension of the broader situation. However, the substantial volume of generated data requires extensive processes for transfer, handling, and storage. Meanwhile, UAVs must contend with environmental challenges amid limitations like energy scarcity and unpredictable surroundings [2,3].

Despite significant advancements in edge computing technologies, achieving real-time SA via UAVs remains a crucial challenge, as current solutions struggle to meet the demanding operational requirements. The primary challenge revolves around the limitations in onboard processing capabilities, computational efficiency, and the constrained resource envelope of UAVs, particularly in terms of computing power, battery capacity, and endurance. These constraints significantly hinder the integration of machine learning (ML) methodologies crucial for adaptive and robust situational awareness. Moreover, ensuring precise positioning and navigation accuracy while optimizing data acquisition, fusion, memory utilization, and transmission efficiency presents multifaceted complexities. Overcoming these challenges is pivotal in enhancing the efficacy and dependability of UAV-based online SA.

1.2. Objectives and Scope of the Review

SA is the perception of the elements in the environment within a volume of time and space, which lays a solid basis for UAV networks to implement a wide variety of missions. Another aspect is vehicle-related SA, which ensures that the UAV operates safely, efficiently, and successfully given the environmental and vehicular constraints. Achieving accurate SA is an essential challenge in unleashing the potential of UAVs for practical applications. This article strives to offer advanced techniques and creative ideas for addressing challenges in UAV-based SA. Rather than concentrating solely on particular operations or restricted settings, we take a more comprehensive view to cover a wider range of applications. In this paper, we examine the concept of SA in relation to UAVs through a thorough analysis of the influential academic literature highlighting UAVs’ potential as powerful tools for SA in critical situations. This review focuses on the following aspects:

- How SA can enhance the overall autonomy of UAVs in different real-world missions;

- How SA can contribute to persistent and resilient operations;

- The key barriers and challenges on both the software and hardware sides;

- Algorithmic and strategic insights into UAV-centric SA;

- Various perspectives on the effective use of SA in conjunction with UAVs;

- Insights into ongoing and potential future research directions.

Accordingly, in Section 2, we discuss the fundamentals of UAV-centric SA from both mission-related and vehicle-related perspectives. In Section 3, we categorize UAVs according to their respective roles and determine the most suitable role distribution for different types of UAVs, with a particular focus on the most common rotary UAVs applied for SA in Section 3.1. Section 3.2 then reviews the inevitable challenges and limitations associated with rotary UAVs in time-critical operations. In Section 4, we investigate the diverse applications of UAVs for SA using wireless sensor networks (WSN) and distributed sensors, highly calibrated cameras, and LiDARs, followed by a discussion of the advantages and drawbacks of each method in the given applications. Section 5 covers various cutting-edge algorithms, including supervised data-driven ML, both deterministic and nondeterministic, and RL-based approaches, together with their derivatives and variations developed to tackle challenges associated with UAV restrictions. Apart from the algorithmic perspective, Section 6 covers strategic insights into UAV-centric SA, including cooperative awareness, object detection/recognition/tracking, and SA-oriented navigation. Through this, we aim to showcase various algorithms and their practical uses in multiple situations, providing readers with helpful insights and motivation for their problem-solving endeavors. Finally, Section 7 concludes the findings of this research, summarizes the key challenges and potentials, and discusses the future trends and anticipated technological developments on the horizon. Through this review, our ultimate goal is to encourage further exploration and innovative research in this field, enhance mission efficiency, and positively impact the community.

2. Fundamentals of UAV-Centric Situational Awareness

Historically, UAV-centric SA has progressed from basic video feeds and telemetry data to more advanced systems that incorporate AI, ML, and advanced sensor technologies. This refers to the advancements made in delivering real-time intelligence and information to UAVs in autonomous systems or to operators in semi-autonomous systems. Accordingly, this has led to improved target detection, tracking, and identification capabilities, as well as the ability to operate in complex and contested environments.

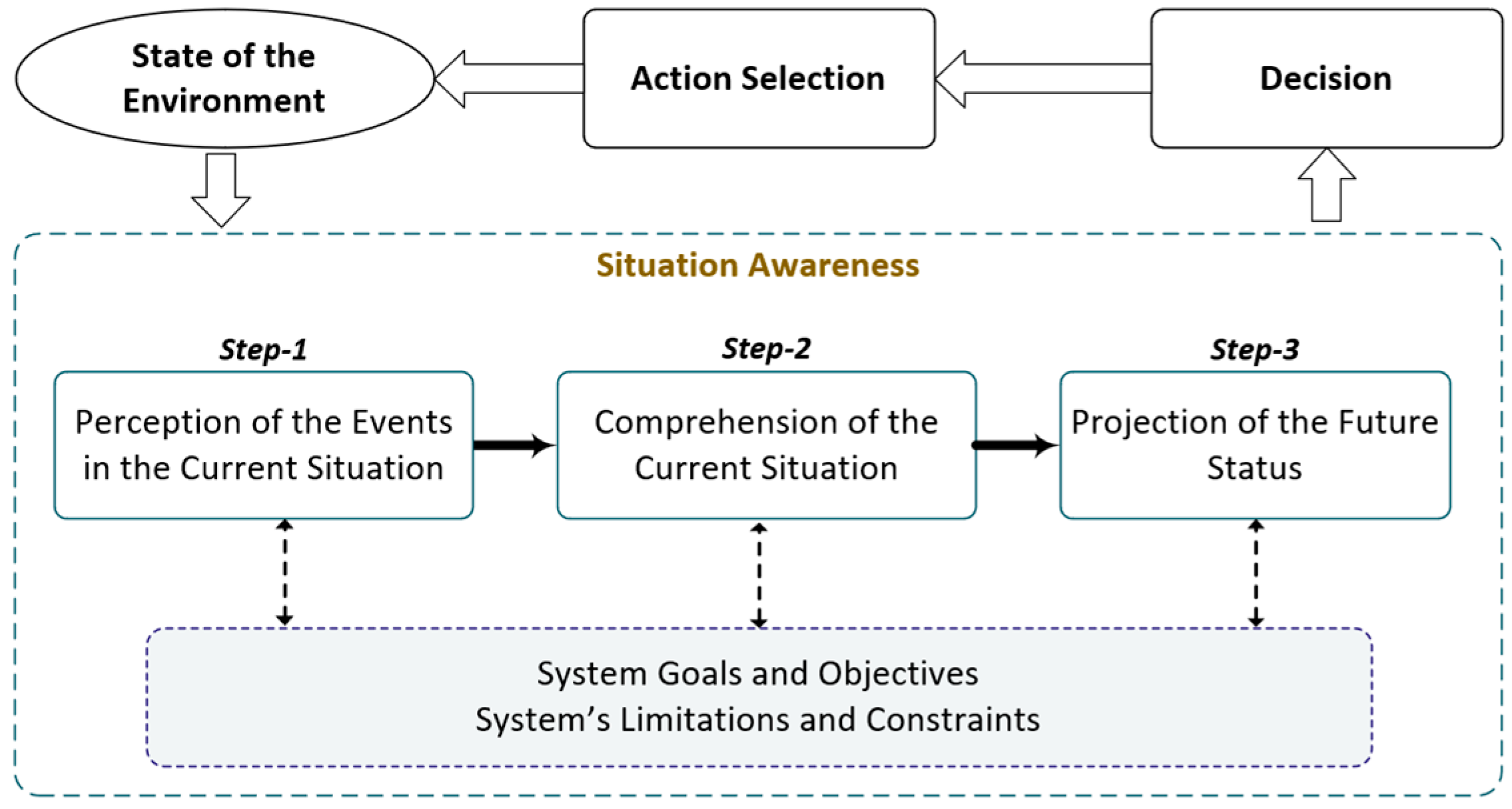

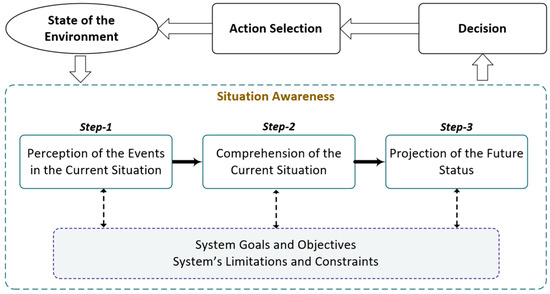

The concept of SA, as proposed by Mica Endsley in 1995, introduced a three-layer model grounded in cognitive principles, shown in Figure 1 [1]. SA involves perceiving environmental information within a defined time and space, comprehending its contextual meaning, and estimating the future states of involved elements. When considering SA in the realm of UAVs, it presents two distinctive perspectives. Distinguishing between these perspectives is crucial as they lead to divergent areas of research.

Figure 1. Endsley’s model of SA in the context of robotics.

As depicted in Figure 1, at the first level of SA (perception), the attributes and dynamics of critical environmental factors are perceived. At the second level (comprehension), the perceived information is understood by synthesizing disjointed critical factors and determining what those factors mean in relation to the mission’s tasks. Finally, the third level (projection) involves predicting the next status of the entities in the environment, which enables the system to act and respond promptly to the situation at hand. The vehicle-related SA functions are classified in Section 2.2.
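As a minimal illustration of the three levels described above, the following Python sketch runs one perceived object through perception, comprehension, and projection. It is not taken from the surveyed literature: the `Track` class, the function names, the stationary-UAV simplification, and the constant-velocity prediction are all assumptions chosen for brevity.

```python
import math
from dataclasses import dataclass


@dataclass
class Track:
    """Level 1 (perception): one observed object, in metres and m/s."""
    x: float
    y: float
    vx: float
    vy: float


def comprehend(track, uav_xy):
    """Level 2 (comprehension): relate the object to a (stationary) UAV."""
    dx, dy = track.x - uav_xy[0], track.y - uav_xy[1]
    distance = math.hypot(dx, dy)
    # A negative range rate means the object is getting closer.
    range_rate = (dx * track.vx + dy * track.vy) / distance if distance else 0.0
    return {"distance": distance, "approaching": range_rate < 0.0}


def project(track, horizon_s):
    """Level 3 (projection): constant-velocity prediction (an assumption)."""
    return Track(track.x + track.vx * horizon_s,
                 track.y + track.vy * horizon_s,
                 track.vx, track.vy)


obstacle = Track(x=30.0, y=40.0, vx=-3.0, vy=-4.0)
meaning = comprehend(obstacle, uav_xy=(0.0, 0.0))
future = project(obstacle, horizon_s=5.0)
```

In this example the obstacle starts 50 m away, is classified as approaching, and is projected to be at (15 m, 20 m) after 5 s. A real system would replace each function with sensor processing, scene understanding, and a learned or model-based predictor, respectively.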

2.1. UAVs for Capturing SA

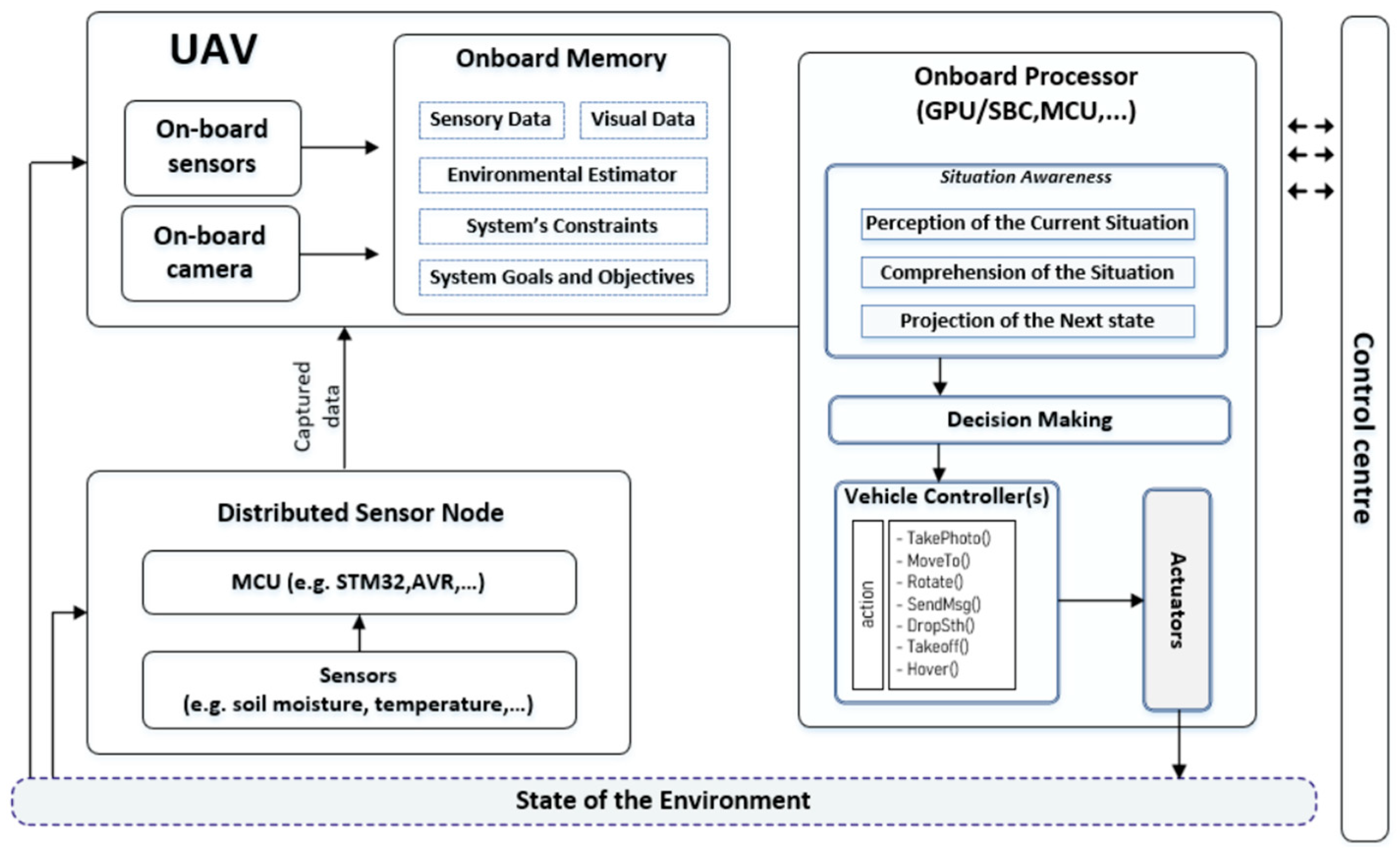

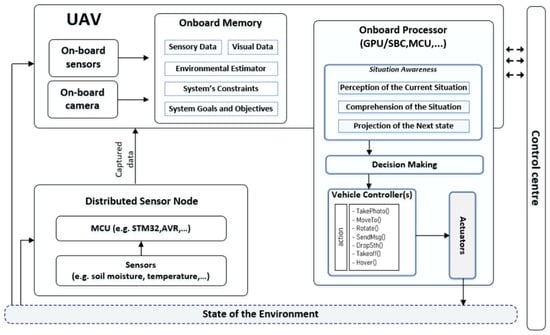

The first perspective involves utilizing UAVs for SA directly in mission scenarios. The emergence of UAVs has brought a promising advancement to capturing SA for diverse applications, which has garnered significant attention in disaster management, SAR, surveillance [4,5,6,7,8], monitoring aging infrastructures [9,10,11], marine operations [12], flood management systems [13,14,15], surveying underground mines [11,16,17], bushfire incidents, and avalanches [18]. A core requirement for the majority of these applications is real-time awareness of the environment to facilitate early detection of the incident, alert the operator promptly about the detected incident in the area of interest, and respond in a timely manner. While promising, current UAV technology and data processing models, especially ML methods, struggle to meet the specific demands of real-time and continuous SA. UAVs used for SA face constraints, notably in battery energy and storage memory, resulting in limited computing capacity compared to high-performance systems. Moreover, ML methods, although powerful for data processing, demand substantial energy and computational resources, posing challenges for their implementation in UAVs. This convergence of UAV limitations and the resource-intensive nature of ML methods highlights a critical question: How can continuous situational awareness be achieved when using resource-constrained UAVs? Bridging this gap necessitates innovative approaches in technology and methodology to overcome the inherent limitations and achieve effective SA in UAV operations. Figure 2 represents the process of capturing SA and environmental assessment (EA) within a UAV.

Figure 2. UAV for capturing SA and EA.

2.2. SA within UAV Operations

The second perspective involves vehicle-related SA, encompassing vital functions like self-awareness (internal awareness), fault awareness, cooperation awareness, collision awareness, and weather awareness. These functions are pivotal for ensuring the successful operation of UAVs, enabling the recognition of unforeseen situations and the identification of environmental changes or dynamic factors essential for executing specific operations within defined spatial and temporal contexts [19].

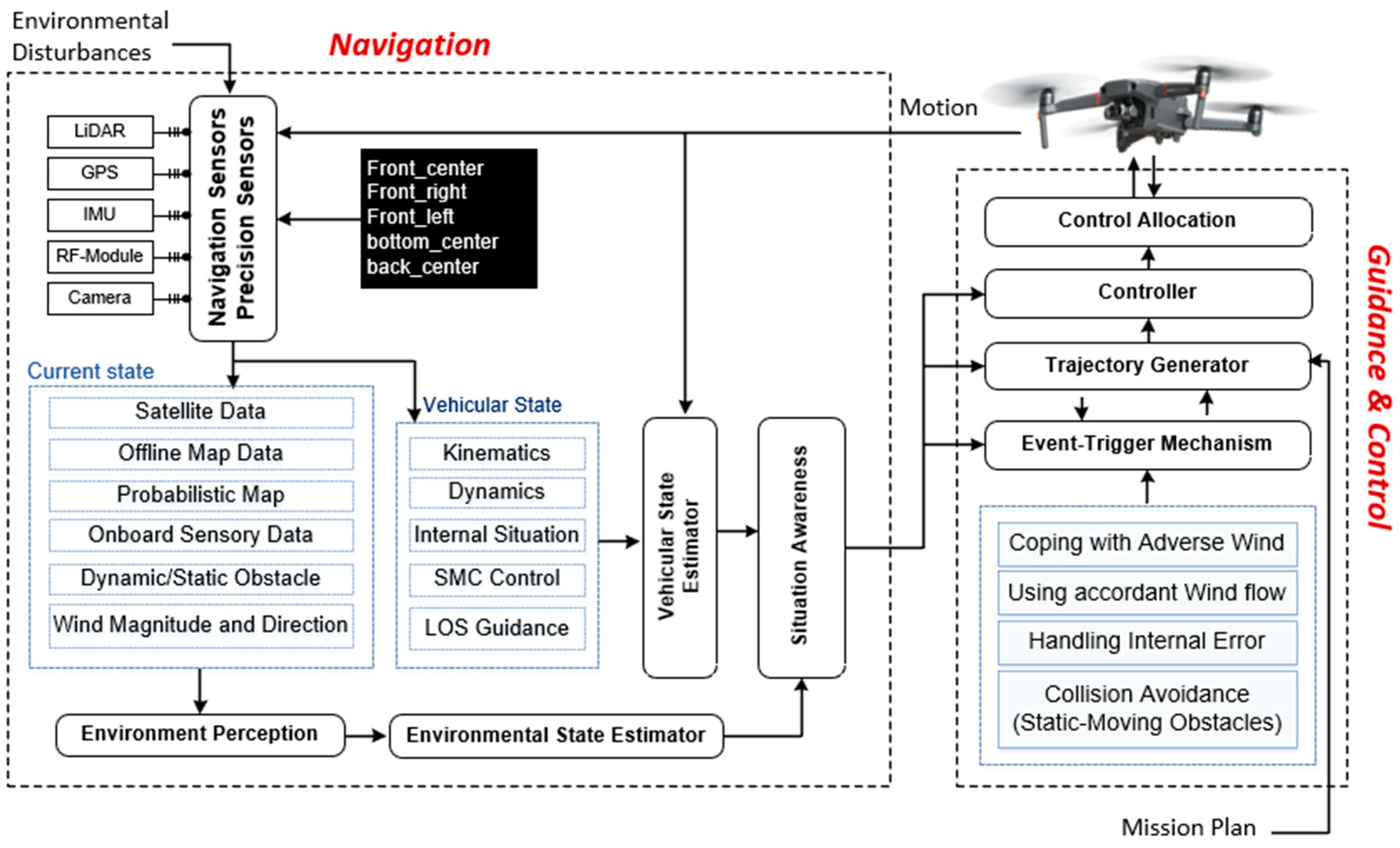

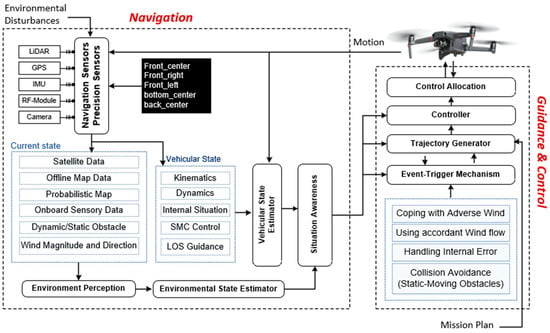

During pre-flight procedures, such information is gathered to chart suitable flight paths and enable prompt responses to unforeseen challenges that may arise during operations. Effective handling of unstructured and unexpected occurrences hinges on the system’s environmental perception. Achieving this demands concurrent processing of object data and their characteristics, combining pre-attentive knowledge in the working memory with incoming information for continual updates on the evolving situation. This updated insight is crucial for projecting potential future events and aiding real-time decision-making processes in UAV operations. This interplay between SA and the decision-making process, along with the environment’s dynamic state, is depicted in Figure 3, illustrating the significant impact of SA on enhancing the UAV’s decision-making capabilities.

Figure 3. Components of SA within UAV operations.

2.2.1. Weather Awareness

The operation of UAVs often takes place at lower altitudes, exposing them to more complex weather conditions compared to higher altitudes. The vulnerability of smaller UAVs to sudden weather changes due to their size and weight underscores the critical importance of weather awareness in all outdoor UAV operations. This awareness includes considerations such as climate zones, temperature fluctuations, wind conditions, and turbulent dynamics. In UAV operations, anticipating and responding to weather conditions is essential for determining suitable airspaces and timeframes before encountering adverse weather. One of the prominent challenges lies in addressing the need for safer flights during harsh weather conditions. For instance, researchers have employed deep CNNs to estimate 3D wind flow within a short timeframe, approximately 120 s, enabling safer UAV flights [19]. Such technologies contribute to enhancing the understanding and prediction of weather patterns, allowing for informed decision-making during flight operations. Researchers, like Sabri et al., have emphasized the need to determine safer flight paths for UAVs in less than thirty seconds [20].

2.2.2. Collision Awareness

Collision awareness is a crucial facet of UAV operations, especially in scenarios where airspace congestion or dynamic environments increase the risk of collisions. Ensuring collision-free flights is essential for the safety and efficacy of UAV missions. Advanced collision avoidance systems play a pivotal role in detecting and mitigating potential collisions, preventing accidents and damage [21,22]. In UAV operations, collision awareness involves the integration of sensors, such as LiDAR (light detection and ranging), radar, or cameras, to perceive obstacles or potential collisions in the UAV’s flight path. These sensors enable real-time detection of nearby objects or hazards, allowing for timely course corrections or evasive actions. Additionally, technologies like computer vision and ML algorithms aid in object recognition and decision-making, contributing to more accurate collision avoidance strategies. To maintain a comprehensive awareness of the surrounding environment, it is important to identify individual objects as well as the higher-order relations between them in order to determine the level of threat and uncertainty posed by each obstacle. By considering factors such as dynamic deployment, behavior, relative distance, relative angle, and relative velocity to the vehicle, it is possible to classify and recognize groups of obstacles. To appraise potential threats, effective data fusion and accurate SA should answer questions on the following subjects:

- The object’s type, size, and characteristics;

- Identification and elimination of sensor redundancy;

- The objects’ motion pattern and velocity (approaching or idle);

- The objects’ predicted behavior one step ahead;

- The level of danger posed by a particular object;

- The appropriate reaction when facing a specific object.
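To make the role of relative distance and relative velocity concrete, the sketch below grades an obstacle by its closest point of approach. This is a generic geometric illustration rather than a method from the cited works: it assumes 2D motion, constant velocities, and hypothetical thresholds (`safe_radius_m`, `warn_time_s`).

```python
import math


def closest_approach(rel_pos, rel_vel):
    """Return (time_s, miss_distance_m) of the closest approach.

    rel_pos and rel_vel are the obstacle's 2D position (m) and velocity (m/s)
    relative to the UAV, assuming both keep a constant velocity.
    """
    px, py = rel_pos
    vx, vy = rel_vel
    speed_sq = vx * vx + vy * vy
    if speed_sq == 0.0:
        # No relative motion: the current distance never changes.
        return 0.0, math.hypot(px, py)
    # Time at which the relative distance is minimal, clamped to the future.
    t = max(0.0, -(px * vx + py * vy) / speed_sq)
    return t, math.hypot(px + vx * t, py + vy * t)


def threat_level(rel_pos, rel_vel, safe_radius_m=5.0, warn_time_s=10.0):
    """Grade the threat; both thresholds are hypothetical values."""
    t, miss = closest_approach(rel_pos, rel_vel)
    if miss >= safe_radius_m:
        return "low"
    return "high" if t <= warn_time_s else "medium"
```

For example, an obstacle 50 m ahead closing at 10 m/s is graded "high", the same obstacle at 200 m is "medium", and one moving away is "low". The object's type, size, and intent from the list above would enter as additional inputs in a fuller model.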

Researchers have made significant strides in collision avoidance systems for UAVs. Studies explore diverse approaches, such as sensor fusion techniques and predictive modeling, to enhance collision awareness and avoidance capabilities. For instance, combining data from multiple sensors and employing predictive algorithms allows UAVs to anticipate potential collision scenarios and take proactive measures to avoid them [23,24,25]. However, challenges persist, particularly in scenarios with rapidly changing environments or unpredictable obstacles. Maintaining robust collision awareness systems capable of adapting to dynamic conditions remains an ongoing research focus.

2.2.3. Self-Awareness (Internal Failure Awareness)

Self-awareness, often referred to as internal failure awareness in the domain of UAV operations, encompasses the UAV’s ability to monitor and assess its own system health and functionality in real-time. The concept of self-awareness involves continuous monitoring of the internal components, systems, and functionalities of the UAV, including sensors, actuators, propulsion systems, and onboard computing units. By constantly evaluating its own status, a UAV equipped with robust self-awareness capabilities can detect and respond to internal failures, anomalies, or malfunctions promptly. This proactive monitoring enables the UAV to take corrective actions, such as adjusting flight parameters or altering operational modes, to mitigate potential failures and ensure mission continuity [26,27].

Depending on the severity of the issue, appropriate action can be taken to ensure safety and mission success. The internal fault tolerance system is usually designed to handle fault occurrence. A common architecture includes three levels. The first level is fault detection, which oversees the vehicle’s hardware and software availability. The second level identifies the fault’s severity, impact, and degree of tolerability. The final level is fault accommodation, which is taking appropriate action by either safely aborting the mission in the case of unbearable faults or reconfiguring the vehicle control architecture in the event of bearable malfunctions to continue the mission with new restrictions using alternative sensors or actuators. In fully autonomous missions, onboard sensors and subsystems are constantly monitored to ensure proper functioning, which allows for setting timely and effective recovery plans in the event of any failures. To ensure internal failure awareness, a supervised ML model is recommended [20], where the model is trained using historical data to predict future failures such as sensor malfunction, actuator failure, telemetry issues, or connectivity problems in the UAV [28].
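The three-level architecture described above (detection, severity identification, accommodation) can be sketched as a small rule-based pipeline. The signal names, limits, and the set of critical signals below are hypothetical placeholders; a deployed system would derive them from the vehicle's specifications or from a trained model such as the supervised approach mentioned above.

```python
from enum import Enum


class Severity(Enum):
    NONE = 0
    BEARABLE = 1
    UNBEARABLE = 2


# Hypothetical health limits: (minimum, maximum) per monitored signal.
LIMITS = {"battery_v": (14.0, 17.0), "motor_temp_c": (-10.0, 90.0)}
# Hypothetical set of signals whose failure cannot be tolerated.
CRITICAL = {"battery_v"}


def detect(readings):
    """Level 1: list the signals that are outside their limits."""
    return [name for name, value in readings.items()
            if not LIMITS[name][0] <= value <= LIMITS[name][1]]


def assess(faults):
    """Level 2: grade the worst detected fault."""
    if not faults:
        return Severity.NONE
    if any(name in CRITICAL for name in faults):
        return Severity.UNBEARABLE
    return Severity.BEARABLE


def accommodate(severity):
    """Level 3: choose a response for the graded fault."""
    return {Severity.NONE: "continue",
            Severity.BEARABLE: "reconfigure",
            Severity.UNBEARABLE: "abort_safely"}[severity]


def handle(readings):
    """Run one monitoring cycle through all three levels."""
    return accommodate(assess(detect(readings)))
```

With these placeholder limits, an overheated motor leads to "reconfigure", whereas an undervoltage battery leads to "abort_safely".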

2.2.4. Cooperation Awareness (Cognitive Load Awareness)

Cooperation awareness, sometimes referred to as cognitive load awareness within the scope of UAV operations, pertains to the UAV’s capability to understand, manage, and distribute cognitive workload during cooperative missions or within a team of multiple UAVs. This aspect of SA is essential for optimizing decision-making, task allocation, and communication among UAVs in collaborative scenarios. In multi-UAV missions or cooperative operations, each UAV must possess a heightened awareness of its own tasks, operational constraints, and available resources, while concurrently understanding the roles, actions, and intents of other collaborating UAVs. Effective cooperation awareness facilitates coordination, reduces redundancy, and enhances overall mission efficiency.

Achieving cooperation awareness involves intricate coordination protocols, communication frameworks, and distributed decision-making algorithms. These systems enable UAVs to share information, synchronize actions, and allocate tasks based on collective objectives and individual capabilities. Advanced technologies, including swarm intelligence, decentralized control algorithms, and cooperative learning models, play a pivotal role in fostering effective cooperation awareness among UAVs. For example, cooperative fault-tolerant mission planners have been designed to allow for parallel operations, collective resource sharing, and adaptive re-planning in the event of a fault, as thoroughly discussed in [29,30]. In [31], a distributed cooperation method for UAV swarms using SA consensus, including situation perception, comprehension, and information processing mechanisms, was proposed. The researchers analyzed the characteristics of swarm cooperative engagement and developed a method to use in complex and antagonistic mission environments. Considering the UAVs as intelligent decision-makers, the authors established the corresponding information processing mechanisms to handle distributed cooperation. The proposed method was demonstrated to be practical and effective through theoretical analysis and a case study.
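As a simple illustration of task allocation based on individual capabilities, the sketch below assigns each task to the nearest UAV that is still free. It is a greedy heuristic written for this example only, not the planner of [29,30] or the consensus method of [31]; it is not guaranteed to be optimal, and the UAV and task names are hypothetical.

```python
import math


def allocate(uavs, tasks):
    """Greedily assign each task to the closest still-unassigned UAV.

    uavs and tasks map names to (x, y) positions in metres.
    Returns {task_name: uav_name}; tasks left over once all UAVs are
    assigned are omitted from the result.
    """
    free = dict(uavs)
    plan = {}
    for task, (tx, ty) in tasks.items():
        if not free:
            break
        best = min(free, key=lambda u: math.hypot(free[u][0] - tx,
                                                  free[u][1] - ty))
        plan[task] = best
        del free[best]
    return plan


uavs = {"uav_a": (0.0, 0.0), "uav_b": (100.0, 0.0)}
tasks = {"survey": (90.0, 0.0), "relay": (10.0, 0.0), "inspect": (50.0, 50.0)}
plan = allocate(uavs, tasks)
```

Here "survey" goes to `uav_b`, "relay" goes to `uav_a`, and "inspect" remains unassigned because no UAV is left. A cooperative planner would additionally account for battery state, payload, and re-planning after faults.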

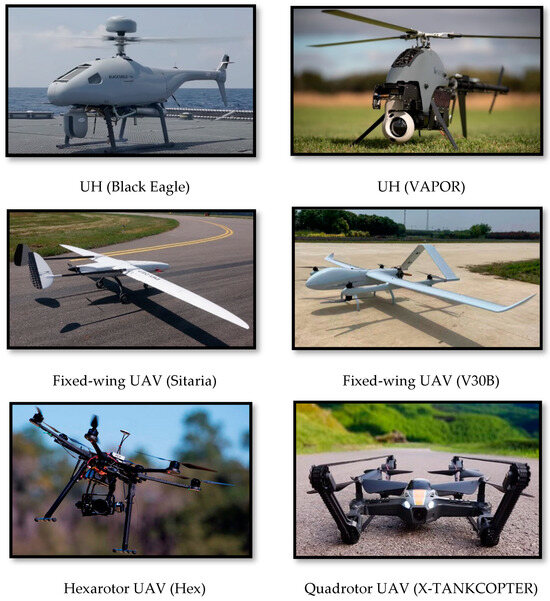

3. Classification of UAVs

This section introduces the most common types of UAVs (drones) used for SA, discussing their advantages, disadvantages, and structural differences [32]. Generally, UAVs can be classified based on body design, kinematics, and degrees of freedom (DoF) into multirotor UAVs, unmanned helicopters (UHs), and fixed-wing UAVs, as shown in Figure 4.

Figure 4. Example of the classified UAVs [4,6,33,34,35,36,37].

- Unmanned Helicopters (UH): A typical UH has a top-mounted motor with a large propeller for lift and a tail motor to balance torque. UHs are agile and suitable for complex environments but have high maintenance costs due to their complex structure. They are ideal for surveillance and tracking missions (e.g., traffic monitoring, border surveillance) due to their hovering capability and maneuverability [38,39].

- Fixed-Wing UAVs: These UAVs share a structure similar to commercial airplanes, generating lift through pressure differences on their surfaces. They are energy efficient, suitable for time-critical and large-scale applications, but require large runways and have limited maneuverability at high speeds [40,41].

- Multirotor UAVs: The most commonly used UAVs for SA, multirotors generate lift through multiple high-speed rotating motors and propellers. They offer high maneuverability, quick response, and vertical take-off and landing (VTOL) capabilities, and are cost effective. However, they have high battery consumption and poor endurance [42,43].

Table 1 summarizes the advantages, disadvantages, and dedicated use cases of UHs, fixed-wing, and multirotor UAVs. The focus of this review paper is on multirotor UAVs, with a critical analysis of their capabilities, limitations, and applications provided in Section 3.1.

Table 1. Summary of the autonomous UAV classification and corresponding pros, cons, and suitable applications.

3.1. Multirotor UAV Classes for SA and Environmental Assessment (EA) Applications

One of the most significant advantages of multirotor UAVs is their mechanical simplicity and flexibility, enabling them to take off vertically and fly in any direction without needing to adjust the propellers’ pitch to exert momentum or control forces. Multirotor UAVs, particularly quadcopters, are relatively cheap and widely available, with low maintenance costs compared to manned aircraft and other aerial vehicles, and their excellent maneuverability helps them cope with environmental disturbances (e.g., through obstacle avoidance). Their agility, hovering capabilities, and ease of deployment allow them to be dispatched quickly to operation zones and to respond promptly to situations where time is of the essence. They can penetrate narrow spaces, buildings, ravines, woodlands, or hazardous terrain to handle mission-related tasks, maneuver around obstacles, and reach areas that are difficult or impossible for humans to access. Their ability to hover in place provides a stable platform for capturing detailed data and imagery. They can be equipped with various sensors and payloads to enhance their operational performance. These sensors can detect signals that are difficult to perceive visually, such as heat, sound, and electromagnetic radiation. The most popular multirotor UAVs on the market used for SA in various scenarios are shown in Figure 5, and their properties are listed in Table 2 [44].

Figure 5. Popular multirotor UAVs for SA [44].

Table 2. The specifications and properties of the multirotor UAVs used for SA.

Despite multirotor UAVs’ effectiveness in acquiring SA, owing to their maneuverability and small size, they are resource-constrained tools with strict processing, memory, battery, and communication (PMBC) limitations as well as maneuverability challenges in harsh conditions. Existing autonomous multirotor UAVs face a number of serious restrictions on efficiently providing real-time and continuous SA. Discussing these restrictions is important in order to understand where drones can help us and what needs to be done to handle these limitations in different circumstances.

3.2. Limitations and Challenges

As shown in Table 2, the most energy-efficient vehicle on the market, with a full battery of 5935 mAh and a weight of 6300 g, can achieve about 55 min of flight time and a range of 18.5 km in ideal weather conditions, without payload and with the camera switched off. This battery run-time can be even shorter if the vehicle has to counteract environmental disturbances. Currently, most existing UAVs rely on communications and decisions assigned by a human supervisor or on computations that take place in the cloud or at a central station. The following are some of the main restrictions of existing multirotor UAVs.
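The quoted figures allow a rough back-of-the-envelope estimate of the average current draw and cruise speed. The sketch below is only indicative: it assumes the full quoted capacity is consumed over the quoted flight time and that maximum range and maximum endurance are reached in the same flight, neither of which is stated in the source. The `extra_current_a` parameter is a hypothetical additional load (for example, a camera or onboard processing).

```python
capacity_mah = 5935.0     # battery capacity quoted in the text
flight_time_min = 55.0    # flight time quoted in the text
range_km = 18.5           # range quoted in the text

flight_time_h = flight_time_min / 60.0
mean_current_a = capacity_mah / 1000.0 / flight_time_h   # roughly 6.5 A
mean_speed_kmh = range_km / flight_time_h                # roughly 20 km/h


def endurance_min(extra_current_a):
    """Estimated flight time (min) with an additional constant load."""
    return capacity_mah / 1000.0 / (mean_current_a + extra_current_a) * 60.0
```

Under these assumptions, an additional constant draw of 1 A would reduce the estimated flight time from 55 min to a little under 48 min, which illustrates how quickly sensing and computation erode endurance.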

3.2.1. Environmental Constraints

In any general operation scenario, the vehicle and environment experience continuous changes during the mission, and events perceived by the vehicle continuously change its perception of the environment. The critical challenges posed by the environment, such as adverse wind, harsh weather conditions, and the presence of static/dynamic/uncertain collision barriers, significantly impact the UAV’s maneuverability, battery consumption, connectivity, and overall operation performance. Due to their small size and light weight, multirotor UAVs are susceptible to sudden weather changes. As a result, it is crucial to adapt to dynamic weather conditions in real time to ensure safe and efficient UAV operations. Establishing rapid and precise responses to sudden weather changes remains a paramount challenge.

3.2.2. Restricted Battery Life

Restricted battery life is one of the most significant challenges in the application of drones. The authors of [45] studied diverse battery types used on UAVs, such as NiMH, NiCad, Pb-acid, and Li-ion, and concluded that lithium-ion, lithium polymer (LiPo), and lithium polymer high-voltage (LiHV) batteries are the best suited for UAVs. Even these batteries last less than an hour in an ideal situation, depending on the class of the vehicle, and this period can be even shorter if the vehicle is required to perform processing, data transmission, photography, or filming, as the camera also draws its power from the drone’s battery. The speed and acceleration, altitude, and weight/payload of the vehicle can also impact battery consumption. In addition, environmental factors, such as strong wind, rain, or the need to avoid obstacles, can influence the vehicle’s battery run time [46]. This endurance is not sufficient for applications in immersive cases and large landscapes [47]. The use of solar energy to charge UAVs by converting light into electric current has been investigated recently in [48] for path planning and in [49] for target tracking. However, this approach suffers from the extra payload of the solar panels as well as a slow charging rate, which cannot effectively support UAVs during flight.

3.2.3. Limited Connectivity and Bandwidth

UAVs not only have restricted battery life but also have connectivity limitations, particularly in remote areas where internet access is unavailable or the connection is poor. Although the majority of existing UAVs are equipped with various positioning systems, including GPS and GNSS, and a range of sensors to navigate and avoid colliding with obstacles, data transmission, the essential basis for real-time SA, remains insufficiently addressed [50]. Harsh weather conditions such as heavy snow/rain or fire can also easily break or interrupt the connection (either GPS or internet). Moreover, the system’s power usage is proportionally linked to the inter-vehicle or vehicle-to-station distance, which is considerably energy-draining in large operational environments [51].
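One common idealization of how link distance drives transmit power is the free-space path loss model, sketched below. This model is not taken from the source or from [51]; it assumes an unobstructed line-of-sight link, and the 2.4 GHz default carrier frequency is a hypothetical choice. Under this assumption, the power needed to keep the received signal constant grows with the square of the distance.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def free_space_path_loss_db(distance_m, frequency_hz):
    """Free-space path loss in dB for an unobstructed line-of-sight link."""
    return 20.0 * math.log10(
        4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S)


def extra_tx_power_db(d_near_m, d_far_m, frequency_hz=2.4e9):
    """Additional transmit power (dB) needed to keep received power constant."""
    return (free_space_path_loss_db(d_far_m, frequency_hz)
            - free_space_path_loss_db(d_near_m, frequency_hz))
```

For instance, doubling the link distance from 1 km to 2 km costs about 6 dB, i.e., roughly four times the transmit power, independent of the carrier frequency. Real links in rain, snow, or cluttered terrain typically degrade faster than this idealized model suggests.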

3.2.4. Limited Memory and Onboard Processing Power

UAV processors’ computational power and memory are limited; therefore, algorithms and solutions that require more memory and processing capability cannot be implemented on UAVs’ onboard processors [52]. Although relatively powerful onboard processors are used in some UAVs, their processing power is still well below that of high-performance computers.

3.2.5. Regulatory and Ethical Considerations

Aviation regulations impose certain limitations on drones, including restrictions on flight altitude, airspace, and operating conditions. For example, drones are typically not allowed to fly beyond the visual line of sight of the operator or in certain restricted airspace, which can limit their range and coverage area. Compliance with these regulations is crucial to ensure safe and legal drone operations, but it can also pose challenges in many scenarios, such as SAR operations where drones may need to operate in remote or restricted areas.

Generally speaking, UAVs still cannot efficiently cope with power supply limitations, processing power scarcity, maximal physical load size, and maneuverability in harsh conditions. Although power generation techniques exist, they are not yet sufficiently used in practice. Considering the resource scarcity of existing UAVs, providing onboard computation for ML techniques remains a major obstacle to online SA. In some existing research [53,54,55], computationally heavy, cloud-centric ML approaches are exploited to tackle the computational restrictions of UAVs, where data are sent to, stored in, and processed by a centralized server. Although cloud computing is currently employed for big data processing worldwide, it has critical barriers: it requires wide bandwidth for data communication, consumes a large amount of energy for data transmission, and incurs transmission latency to the centralized entity, leading to other challenges when exploiting ML techniques. Thus, devising an efficient algorithmic approach to tackle all of these problems is still a wide-open area for research.
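The trade-off between onboard and cloud processing can be illustrated with a simple latency comparison. The sketch below is a deliberately simplified model and not a method from [53,54,55]: it considers latency only (not transmission energy), and every parameter, including the default `round_trip_s`, is a hypothetical input.

```python
def offload_latency_s(data_mb, uplink_mbps, cloud_compute_s, round_trip_s=0.05):
    """Time to upload the data, process it remotely, and receive the result.

    data_mb is in megabytes and uplink_mbps in megabits per second,
    hence the factor of 8.
    """
    return data_mb * 8.0 / uplink_mbps + cloud_compute_s + round_trip_s


def should_offload(data_mb, uplink_mbps, cloud_compute_s, onboard_compute_s):
    """Offload only if the remote path is faster than onboard processing."""
    return offload_latency_s(data_mb, uplink_mbps, cloud_compute_s) < onboard_compute_s
```

For example, sending a 5 MB frame over a 20 Mbit/s uplink with 0.1 s of cloud processing takes about 2.15 s, which beats 3 s of onboard processing; over a 2 Mbit/s uplink, the upload alone takes 20 s and onboard processing wins. This is why bandwidth in remote areas largely determines whether cloud-centric ML is viable.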

4. Unveiling the Landscape of UAV-Centric SA in Various Applications

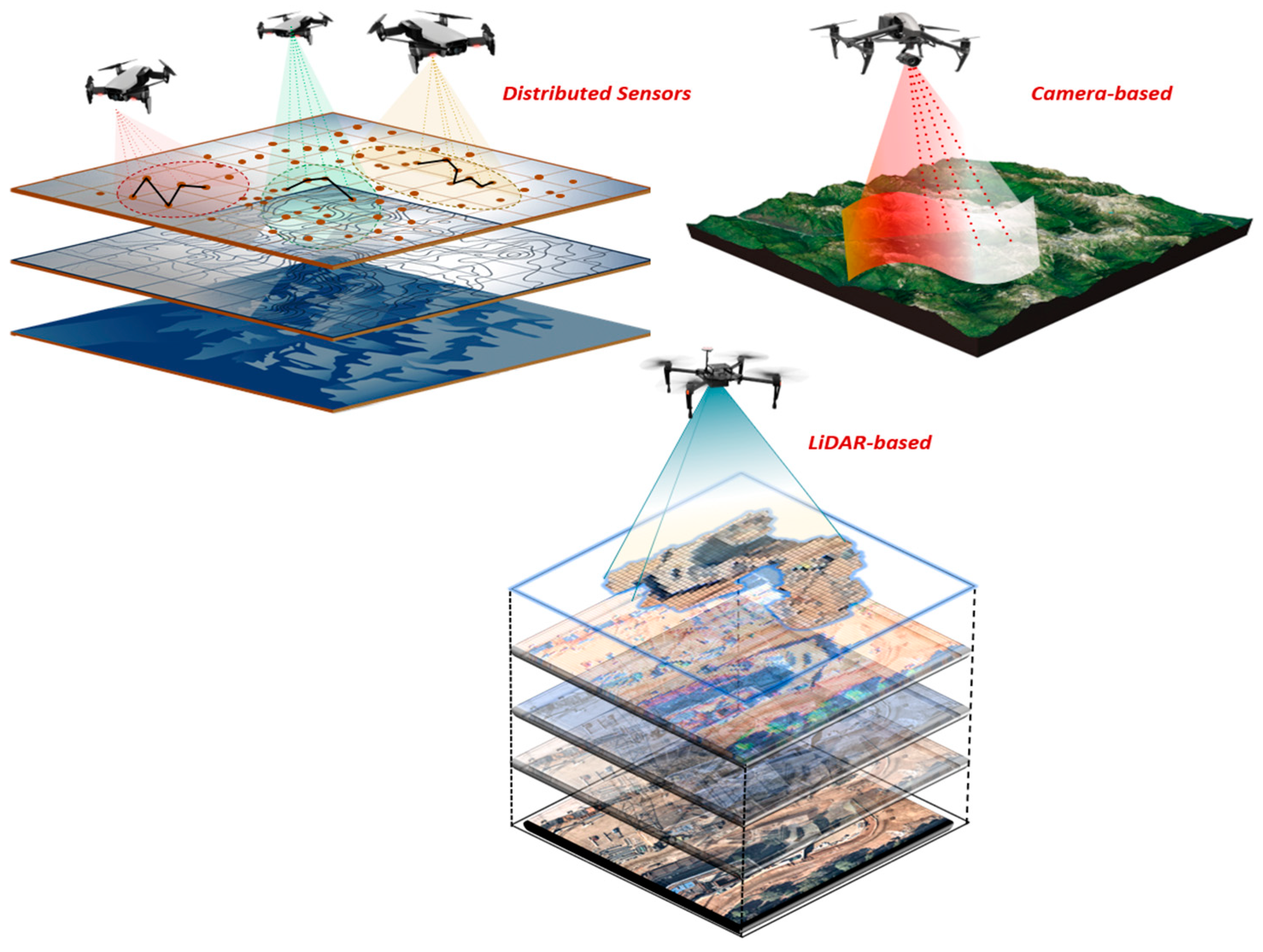

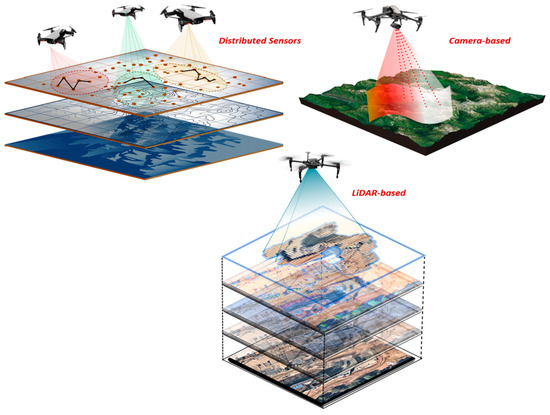

The focus of this section lies in a comprehensive review and critical analysis of existing UAV methodologies concerning both SA and EA in multifaceted contexts [56,57]. These methodologies primarily center on information monitoring and processing for prediction and early detection. In this review, UAV applications for SA are classified into three main categories based on distinct data fusion approaches. Each classification undergoes an in-depth examination of relevant research activities, depicting their methodologies and advancements. Moreover, this review critically addresses persistent research and development challenges that pose ongoing obstacles in the field. Figure 6 represents the conceptual example of capturing SA using distributed sensors, on-board camera, and LiDAR.

Figure 6. Conceptual example of capturing SA using distributed sensors, on-board camera, and LiDAR.

4.1. Expanding SA with UAVs via WSN and Distributed Sensors

Technological advancements in wireless communication, high-precision sensors, and UAV operational capabilities have enabled enhanced SA through data fusion, integrating various information sources to build a unified understanding of the environment.

The integration of WSN with UAVs creates an ideal system for time-critical missions, such as natural disaster management, enabling the capture of SA for damage assessment before, during, and after disasters to identify threats promptly [58].

Effectively utilizing this capability requires establishing a data collection and monitoring structure of air and ground sensors for accurate data analysis and rapid response. This involves deploying various sensors, such as high-resolution cameras for visual data, LiDAR for 3D mapping, and thermal sensors for heat detection, often mounted on UAVs and coordinated through a central control system. Collected data are transmitted to ground control stations via secure channels for preprocessing, including noise reduction and data fusion, to enhance accuracy and reliability. Advanced algorithms, including machine learning, analyze the data to identify patterns and generate actionable insights. UAVs can also serve as communication hubs, re-establishing damaged communication lines post-disaster [13], and as transportation vehicles for delivering medical equipment [9,59]. Stable weather conditions are necessary for effective UAV operations, making them suitable for hydrological disaster assessments like floods [10].
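As a minimal illustration of the data fusion step mentioned above, the following sketch combines independent readings of the same quantity using inverse-variance weighting, a standard textbook rule. It is not the fusion method of any cited work; the function name `fuse_measurements` and the example readings (a ground sensor and a UAV-derived estimate of a water level) are hypothetical.

```python
def fuse_measurements(values, variances):
    """Inverse-variance weighted fusion of independent measurements.

    Each measurement is weighted by 1/variance, so more reliable sensors
    contribute more. Returns the fused value and its variance.
    """
    if not values or len(values) != len(variances):
        raise ValueError("values and variances must be non-empty and equal in length")
    weights = [1.0 / v for v in variances]
    total = sum(weights)
    fused = sum(w * x for w, x in zip(weights, values)) / total
    return fused, 1.0 / total


# Hypothetical readings: ground sensor 2.0 m (variance 0.04),
# UAV-derived estimate 2.3 m (variance 0.16).
level, level_var = fuse_measurements([2.0, 2.3], [0.04, 0.16])
```

Under these assumptions, the fused variance is smaller than that of either input, which is the basic motivation for combining air and ground sensors.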

The study in [11] developed the IMATISSE mechanism, which integrates crowd-sensing and UAVs for natural disaster assessment, combining smartphone and WSN data to build comprehensive SA. However, the design overlooked UAV performance under environmental challenges, and its data collection was energy-intensive. The study presented in [16] introduced a cognitive and collaborative navigation framework for the TU-Berlin swarm of small UAVs. It tackles connectivity issues and enhances SA by combining local sensor data with inter-vehicle information using non-linear solvers and factor graphs. The framework focuses on data fusion and connection quality but not on the comprehension and projection stages.

Several works focus on using UAVs as signal relays to connect ground-based LoRa nodes with remote base stations [17]. These approaches enhance communication range and reliability by using UAVs equipped with high-gain antennas, signal amplifiers, and advanced signal-processing capabilities. UAVs can quickly establish temporary communication links in remote or disaster-stricken areas, offering flexible network configurations. However, practical limitations include the resilience of UAV relay networks, efficient guidance to critical areas, data fusion, power management, and optimal recharging strategies [60]. In [61], a concept is proposed for UAVs to recharge on public transportation and collect data, while ref. [62] explores using fixed-wing UAVs with detection nodes for bushfire inspection, focusing on optimizing communication intervals and data collection without addressing higher SA levels.

The cited works [10,11,13,16,62] focus on providing environmental SA in natural disaster management using WSN and various UAV classes. Key challenges include operation in severe conditions, diverse data sources, sensor battery limitations, and UAV resource constraints. The complexity of the environment and the unpredictable nature of disasters also remain unaddressed. Addressing these challenges requires considering system properties such as sensor types, UAV classes, and environmental and vehicular constraints.

Another significant concern is communication bandwidth and connectivity, which can become challenging due to damaged infrastructure during disasters. UAVs, wireless sensors, central servers, and processors must actively communicate, and any changes in infrastructure and topology must be instantly addressed to retain connectivity. This is difficult due to the lossy nature of wireless connections, especially without a central node, requiring constant tracking of devices in range. Frequent scanning for new nodes allows faster reactions but increases overhead and battery usage.

While using UAVs as relays in LoRa networks shows promise, challenges remain. Ensuring secure and reliable communication between nodes and base stations, optimizing network performance, and managing power consumption are crucial. Power-hungry sensors may quickly deplete their batteries, making synchronization of network parts during disasters difficult. Communication elements face various resource constraints, from processor capabilities to battery-restricted UAVs. Solutions for low-power, low-memory sensors mainly address WSN tasks like data dispatching to sink nodes, but even these sensors have limited energy that must be managed to maximize data transmission over their lifetime.

Exploiting UAVs for WSN data collection faces limitations such as dynamic wireless channels between sensor nodes (SNs) and UAVs. To mitigate the energy wasted by continuous idle listening at the SNs, a sleep/wake-up mechanism is employed in [63], and location-based data-gathering frameworks prioritize sensors to minimize collisions and energy consumption [64]. Studies like [65] optimize these mechanisms but do not address varying energy consumption and data collection frequencies. UAV battery limitations, which are critical because flight time is typically less than an hour [66,67], are compounded by the energy needed for data transmission. Research focuses on improving path planning and SN mechanisms, with studies like [68,69] addressing issues in fixed-wing UAVs used as mobile data collectors. Despite these challenges, WSN and UAV systems remain optimal for SA, with ongoing advancements paving the way for more efficient communication systems.
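The benefit of a sleep/wake-up mechanism can be illustrated with a simple duty-cycle calculation. The sketch below is a back-of-the-envelope model, not the scheme of [63]; the power figures and battery capacity are hypothetical values chosen only for illustration.

```python
def average_power_mw(duty_cycle, active_mw, sleep_mw):
    """Mean power draw of a node that is awake for a fraction `duty_cycle` of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in [0, 1]")
    return duty_cycle * active_mw + (1.0 - duty_cycle) * sleep_mw


def lifetime_hours(battery_mwh, duty_cycle, active_mw, sleep_mw):
    """Idealized battery lifetime, ignoring self-discharge and wake-up overhead."""
    return battery_mwh / average_power_mw(duty_cycle, active_mw, sleep_mw)


# Hypothetical sensor node: 60 mW while listening, 0.03 mW asleep, 6000 mWh battery.
always_on = lifetime_hours(6000.0, 1.0, 60.0, 0.03)
duty_cycled = lifetime_hours(6000.0, 0.01, 60.0, 0.03)
```

With these assumed numbers, waking for 1% of the time extends the idealized lifetime by roughly two orders of magnitude. The cost is that the node can only be reached by the UAV during its wake windows.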

4.2. Expanding SA with UAVs Using Vision Systems

Vision systems are crucial for augmenting SA in UAVs, incorporating technologies like cameras and optical sensors to gather high-resolution visual data in real-time. These systems enable UAVs to capture detailed images and videos, facilitating robust visual analysis for terrain mapping, object recognition, and obstacle detection, thereby enriching their SA capabilities [69,70].

Advanced image processing algorithms and computer vision techniques allow UAVs to interpret visual data, extract crucial information, and make informed decisions during missions [71]. These systems enable UAVs to detect and classify objects, navigate complex environments, and respond to dynamic changes in real-time [72]. The fusion of visual data facilitates the creation of detailed 3D maps, aiding in precise localization, route planning, and situational analysis, enhancing the overall effectiveness and safety of UAV operations [73]. In [74], a single UAV-based system uses real-time data from onboard vision sensors to predict fire spread, integrating historical data and online measurements for model updates. However, the limited battery capacity and computational burden restrict the UAV to covering small wildfire zones. A study using a DJI Phantom 4 Pro (P4P) UAV with a 20-megapixel CMOS camera for flood inundation prediction during storms is presented in [14]. This UAV-based multispectral remote sensing platform addresses data collection, processing, and flood mapping to facilitate road closure plans.

A flood monitoring system using a P4P UAV to gather high-resolution images of shorelines was developed in [15]. The collected images were processed to create a digital surface model (DSM) and field measurements for flood risk assessment and georeferencing. This approach requires human-centered processing due to UAV bandwidth limitations and assumes windless conditions, leaving environmental complexities unaddressed. A modular UAV-based SA platform with highly calibrated cameras and optical and thermal sensors is proposed in [75], where onboard processing for object detection and tracking is performed before transmitting data to the Command-and-Control Center (CCC). However, onboard processing imposes a computational burden on the UAV, leading to faster battery depletion, and transmitting the abstracted videos still requires high bandwidth. A similar approach for detecting, localizing, and classifying ground elements is proposed in [76], processing visual data for environmental SA during rescue operations. However, fast internet access for video transfer in disaster areas is impractical, and bandwidth limitations remain a significant challenge.

In [77], a UAV vision-based aerial inspection generates a 3D view pyramid for passive collision avoidance during urban SAR operations, facilitating structure identification in unexplored environments. However, the approach lacks practical feasibility for real-time operations due to passive obstacle avoidance and computational burden concerns.

The VisionICE system, used in SAR operations, integrates a camera-mounted helmet, AR display, and UAV vision sensors to enhance air–ground cognition and awareness [78]. Real-time target detection and tracking are performed using a YOLOv7 algorithm, but communication to the cloud server requires significant bandwidth and energy, suffering from latency issues.

Studies in [79,80,81] demonstrate the feasibility of using UAVs for fire monitoring and ISR missions, employing neural networks and multi-UAV coordination for efficient fire perimeter surveying. A distributed control scheme for camera-mounted UAVs to observe fire spread is presented in [82], focusing on minimizing information loss from cameras due to heat. Another study [83] integrates multi-objective Kalman uncertainty propagation for UAVs to provide ground operators with fire propagation data, emphasizing human-centered decision-making.
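To make the idea of Kalman uncertainty propagation concrete, the sketch below shows a generic scalar Kalman filter. It is not the multi-objective formulation of [83]. The state (a fire-front position along a line), the noise values, and the function names are hypothetical. The point is only that uncertainty grows during prediction and shrinks when a new UAV observation arrives.

```python
def kf_predict(x, p, q, a=1.0):
    """Propagate the state estimate x and its variance p through x' = a * x.

    q is the process-noise variance; the variance grows by q at each step.
    """
    return a * x, a * a * p + q


def kf_update(x, p, z, r):
    """Correct the prediction with a measurement z of variance r."""
    gain = p / (p + r)
    return x + gain * (z - x), (1.0 - gain) * p


# Hypothetical values: prior estimate 0.0 (variance 1.0), process noise 0.5,
# then a UAV observation of 2.0 with measurement variance 0.5.
x_pred, p_pred = kf_predict(0.0, 1.0, q=0.5)
x_post, p_post = kf_update(x_pred, p_pred, z=2.0, r=0.5)
```

Without observations, repeated calls to `kf_predict` let the variance grow without bound, which suggests one way a planner could decide where a UAV should look next.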

The ResponDrone platform [84] coordinates a fleet of UAVs to capture and relay critical imagery in disaster areas through a web-based system, though practical challenges with disrupted communications infrastructure remain. In [85], a 3D-draping system dynamically overlays UAV-captured imagery on terrain data for SA, but the perception stage is primarily addressed, leaving the comprehension and projection stages to human operators.

An extensive review in [86] highlights the cost-effectiveness and versatility of UAV-based photogrammetry and geo-computing for hazard and disaster risk monitoring, despite challenges like weather dependency, regulatory constraints, and data quality variability.

Although vision systems are efficient for capturing high-quality data, their use is hindered by UAV resource limitations for onboard computation, data transmission, and battery consumption. Vision sensors are also vulnerable to lighting conditions and obstructed views. Onboard processing is energy-consuming, and UAV processors cannot handle heavy computational algorithms, leading to accuracy trade-offs. Remote computational platforms require continuous data transmission, draining the battery and causing connectivity issues during disasters, making real-time SA challenging.

4.3. Expanding SA with UAVs Using LiDAR

Leveraging LiDAR technology significantly enhances the SA capabilities of UAVs by emitting laser pulses to create highly accurate 3D maps. These elevation data, combined with precise geospatial information, enable UAVs to navigate complex terrains, perceive the environment accurately, and make informed decisions in real-time. LiDAR supports terrain modeling, topographical analysis, and provides detailed 3D representations of landscapes, crucial for applications such as precision agriculture and environmental monitoring [87].
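The way a LiDAR return becomes a 3D point can be sketched in a few lines. The example below converts a pulse's round-trip time into a range and then into Cartesian coordinates in the sensor frame. The axis convention (x forward, z up, elevation measured from the horizontal plane) and the function names are assumptions made for illustration; real sensors apply additional calibration and motion compensation.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def tof_to_range(round_trip_s):
    """Range in metres from the round-trip time of a laser pulse."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def polar_to_xyz(rng, azimuth_rad, elevation_rad):
    """Convert a range and two beam angles to a point in the sensor frame."""
    horizontal = rng * math.cos(elevation_rad)
    return (
        horizontal * math.cos(azimuth_rad),
        horizontal * math.sin(azimuth_rad),
        rng * math.sin(elevation_rad),
    )


# A pulse returning after one microsecond corresponds to roughly 150 m.
r = tof_to_range(1e-6)
point = polar_to_xyz(r, math.radians(90.0), 0.0)
```

Repeating this conversion for every beam and every scan yields the point cloud from which terrain models are built.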

LiDAR-equipped UAVs excel in forestry management, urban planning, and infrastructure development by capturing detailed spatial information. LiDAR systems, like Ouster LiDAR, provide 360° panoramic images and dense 3D point clouds, supporting computer vision applications [88,89]. These systems utilize learning-based approaches compatible with deep learning models for tasks like object detection and tracking, presenting an efficient alternative to vision sensors that require intensive computational resources [90].

In [91], LiDAR-equipped UAVs were used to capture 3D digital terrain models of glacier surfaces, integrating high-resolution terrain data with monitoring systems to create 3D models. A LiDAR-based scanning system for UAV detection and tracking over extended distances was employed in [92], providing precise positioning and navigation in GNSS-denied environments. Tracking UAVs in 3D LiDAR point clouds by adjusting point cloud size based on distance and velocity was extended in [93]. However, the detection and tracking of small UAVs with varied shapes and materials remain challenging.

Learning-based LiDAR positioning techniques leverage machine learning algorithms to extract meaningful information from LiDAR data. In [94], a point-to-box approach rendered ground objects as 3D bounding boxes for easier detection and tracking. Although effective on powerful GPUs, this approach faces computational restrictions on UAVs. Transforming point cloud data into images for visual detection algorithms, as carried out in [95], offers 3D localization but imposes a heavy computational load. To alleviate this, ref. [96] used a 3D range profile from Geiger mode Avalanche Photo Diode (Gm-APD) LiDAR with YOLO v3 for real-time object detection, enhanced by a spatial pyramid pooling (SPP) module.

An adaptive scan planning approach using a building information model (BIM) was proposed in [82,92,94,97] to reduce computational load and battery consumption. Using probabilistic cognition to minimize LiDAR beams while retaining detection accuracy was investigated in [98]. Despite computational limitations, the combination of visual and LiDAR information with learning-based frameworks achieved satisfactory SA [99,100]. However, memory, onboard processor, and battery constraints, as well as environmental factors like wind drift and obstacles, remain critical challenges.

In [97], a centralized ground-based perception platform used UAVs with LiDAR and RGB-D cameras for information fusion, alleviating UAV computational load through ground processing. While effective, long-range data rendering and offloading face communication bandwidth limitations.

Multi-sensor systems combining visual and LiDAR data hold great potential for accurate SA and position estimation [82,92,94,97]. However, integrating these data types to avoid redundancy and preserve essential information is complex. The literature often overlooks important parameters such as computation, memory, and battery restrictions, and the impact of environmental factors on UAV performance. Table 3 summarizes the advantages and disadvantages of three reviewed approaches used for capturing SA by UAVs.

Table 3. Comparison of three reviewed approaches used for capturing SA by UAVs.

5. Algorithmic and Strategic Insights to UAV-Centric SA

5.1. Data-Driven ML Models

Recent advancements in supervised ML techniques have led to significant improvements in object detection and tracking. These techniques have demonstrated promising performance in tracking-based applications, and their ability to learn complex features and process semantic information makes them powerful tools for addressing some of the challenges in this field [27,75,82].

Supervised learning excels in scenarios such as object detection, image classification, and anomaly detection, where the availability of labeled datasets allows the model to learn precise mappings between inputs and outputs. The supervised approach ensures reliable performance in these tasks, making it preferable for applications requiring high precision and predictability. In contrast, unsupervised learning is typically used for clustering and pattern recognition tasks where labeled data are scarce, but it may not provide the same level of accuracy on specific tasks as supervised learning.

Several studies devoted to forming SA for autonomous UAVs demonstrate the high efficiency of ML technologies for solving this problem; these are discussed in Section 5.1.1 and Section 5.1.2, followed by a critical analysis in Section 5.1.3. CNNs, and DL models more broadly, are widely used in computer vision applications. CNNs are specifically designed to learn and extract features from visual data through a combination of convolutional layers, pooling layers, and fully connected layers. These layers help to identify patterns and relationships within the image data, allowing for accurate detection, tracking, and analysis. Arguably, a significant portion of the current advancements in tracking are rooted in DL methodologies.

Supervised learning constitutes a fundamental paradigm in leveraging labeled datasets to train models for specific tasks. In the realm of UAV SA, this subsection explores the applications of and advancements in supervised learning techniques, elucidating their contributions to enhancing the capabilities of UAVs.

5.1.1. Object Detection, Recognition, and Tracking

SA requires onboard processing using single- or multi-sensor fusion to capture data from various visual and radio sensors, assessing the environment [101]. The quality and quantity of fused data significantly impact UAVs’ perception and SA, necessitating effective strategies for data acquisition and semantic processing [102,103]. Current models lack cognitive accuracy, highlighting the need for broader, human-like cognition models for robust decision-making and action selection.

Supervised learning plays a pivotal role in object detection and recognition, which are crucial for identifying and categorizing elements within the UAV’s operational environment. This section delves into state-of-the-art supervised learning models such as CNNs and their adaptations for efficient detection of objects like vehicles, structures, or anomalies, contributing to comprehensive SA.

Using CNNs and, in general, DL-based models, various architectural strategies have been devised to enable the integration of sensors and devices, resulting in enhanced autonomy and increased efficiency in search and rescue (SAR) operations. Convolutional layers in CNNs are particularly adept at extracting spatial features from image data, which is crucial for accurate object detection and classification. Pooling layers help in reducing the dimensionality of the data, making the processing more efficient while retaining essential features. Fully connected layers integrate these features to make final predictions, which can be critical in identifying and locating individuals or objects in SAR missions. Additionally, advanced architectures such as ResNet, which introduces residual connections to mitigate the vanishing gradient problem, and YOLO (You Only Look Once), known for its real-time object detection capabilities, have been employed to improve the performance of UAV systems. These models allow for real-time data processing and decision-making, which are vital in time-sensitive SAR operations. By leveraging these architectural advancements, UAVs can effectively process vast amounts of sensory data, leading to quicker and more accurate situational awareness.
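The roles of the layers described above can be illustrated with a small NumPy sketch. It is a toy forward pass written for clarity, not an implementation of ResNet or YOLO. The 6x6 input, the edge-detecting kernel, and the dense weights are hypothetical, and real systems would use an optimized DL framework.

```python
import numpy as np


def conv2d(image, kernel):
    """Valid 2D cross-correlation, the operation DL frameworks call convolution."""
    kh, kw = kernel.shape
    oh, ow = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x):
    return np.maximum(x, 0.0)


def max_pool2d(x, size=2):
    """Non-overlapping max pooling; trailing rows/columns that do not fit are dropped."""
    h, w = x.shape[0] // size * size, x.shape[1] // size * size
    return x[:h, :w].reshape(h // size, size, w // size, size).max(axis=(1, 3))


def residual_block(x, transform):
    """Skip connection: the input is added back to the transformed signal."""
    return relu(transform(x) + x)


image = np.arange(36.0).reshape(6, 6)            # intensity ramp, left to right
kernel = np.array([[-1.0, 0.0, 1.0]] * 3)        # responds to horizontal gradients
features = relu(conv2d(image, kernel))           # spatial feature extraction, 4x4
pooled = max_pool2d(features)                    # dimensionality reduction, 2x2
dense_w = np.array([[0.25] * 4, [-0.25] * 4])    # hypothetical fully connected layer
scores = dense_w @ pooled.ravel()                # final two-class scores
skip_out = residual_block(np.array([-1.0, 2.0]), lambda v: 0.5 * v)
```

The residual block shows why skip connections help training: even if `transform` contributes little, the input still passes through, so gradients have a direct path.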

In [104], a DL-based person-action-locator approach for SAR operations uses UAVs to capture live video, processed remotely by a DL model on a supercomputer-on-a-module. The Pixel2GPS converter estimates individual locations from the video feeds, addressing limited bandwidth and detection challenges. In [105], UAV-based SA in a SAR mission uses a CNN on a smartphone for human detection. Despite its accuracy and portability, communication bandwidth remains an issue. The choice of a smartphone as the computing unit is unclear, given that more powerful portable devices exist.

Another method uses cellphone GSM signals for target localization through received signal strength indication (RSSI) [106,107,108]. In [107], a deep FFNN with pseudo-trilateration determines the target location, using CNN features as a secondary input. In [108], fixed-wing UAVs collaboratively track SOS signals from cellphones in wilderness applications. The study presented in [109] proposed a hybrid approach integrating Faster R-CNN with a quadrotor’s aerodynamic model for navigating forests and detecting tree trunks. A hierarchical dynamic system for UAV behavior control in [32] uses visual data processed by DL-based pyramid scene parsing network (PSPNet) for semantic segmentation. This system anticipates entity behavior around the UAV, enhancing SA-based action control and decision-making.
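The RSSI-based localization idea can be sketched with classical tools. The example below is not the FFNN-based pseudo-trilateration of [107]. It uses the log-distance path-loss model to turn RSSI into a range and a linearized least-squares step to recover a 2D position. The reference power at 1 m, the path-loss exponent, and the UAV positions are assumed values.

```python
import numpy as np


def rssi_to_distance(rssi_dbm, ref_power_dbm=-40.0, path_loss_exp=2.0):
    """Log-distance path-loss model; ref_power_dbm is the assumed RSSI at 1 m."""
    return 10.0 ** ((ref_power_dbm - rssi_dbm) / (10.0 * path_loss_exp))


def trilaterate(anchors, distances):
    """Least-squares 2D position from three or more anchor positions and ranges.

    Subtracting the first range equation from the others removes the quadratic
    term and leaves a linear system A p = b.
    """
    anchors = np.asarray(anchors, dtype=float)
    d = np.asarray(distances, dtype=float)
    a0, d0 = anchors[0], d[0]
    A = 2.0 * (anchors[1:] - a0)
    b = d0**2 - d[1:]**2 + np.sum(anchors[1:]**2, axis=1) - np.sum(a0**2)
    position, *_ = np.linalg.lstsq(A, b, rcond=None)
    return position


# Hypothetical setup: three UAV measurement positions and a phone at (30, 40).
uav_positions = np.array([[0.0, 0.0], [100.0, 0.0], [0.0, 100.0]])
target = np.array([30.0, 40.0])
ranges = np.linalg.norm(uav_positions - target, axis=1)
estimate = trilaterate(uav_positions, ranges)
```

In practice the ranges come from noisy RSSI readings via `rssi_to_distance`, so the estimate degrades with multipath and shadowing. This is one motivation for the learning-based variants cited above.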

A different SAR approach in [110] integrates a pre-trained CNN with UAV cameras for detecting survivors’ vital signs, augmented with bio-radar sensors for accuracy, and uses LoRa ad hoc networks for inter-vehicle communication. UAV SLAM technology coupled with CNNs enhances real-time environmental perception and mapping. In [111], real-time indoor mapping integrates SLAM with a CNN-based single image depth estimation (SIDE) algorithm.

End-to-end learning solutions for unknown environments in [112] directly obtain geometric information from RGB images without specialized sensors. The active neural SLAM in [113] combines traditional path planners with learning-based SLAM for fast exploration. While C-SLAM offers a global perspective by integrating local UAV perspectives [114], map accuracy is affected by factors like UAV numbers, communication delays, bandwidth limitations, and reference frames [115,116].

UAVs’ SA is hindered by data acquisition noise and environmental uncertainty [117]. A UAV-based SA system in [97] uses a single camera for real-time individual detection and action recognition, although inaccurate detections degrade its mAP. Geraldes et al. [104] proposed the person-action-locator (PAL) system for recognizing people and their actions using a pre-trained DL model, focusing only on basic perception and neglecting event comprehension and projection.

5.1.2. Data-Driven ML-Based Path Planning and Navigation

Exploring various strategies to optimize the UAV’s flight path and relay operations is another area of interest for many researchers. This involves developing efficient algorithms that determine the most optimal UAV trajectory, considering signal strength, interference, and energy consumption factors.

The application of supervised learning extends to path planning and navigation systems, empowering UAVs to navigate through complex terrains with optimal routes. Exploration of supervised algorithms showcases their role in training UAVs to make informed decisions based on labeled training data, leading to more adaptive and responsive navigation. CNN and DL have also demonstrated their usefulness in addressing challenges associated with positioning, planning, perception, and control in the context of autonomous drones’ collision avoidance.

UAVs must detect surrounding objects to avoid collisions and adjust flight paths. Autonomous navigation systems and onboard cameras estimate paths locally, though this can be computationally intensive. To address this, ref. [118] explored DL methods like RL and CNN for real-time obstacle detection and collision avoidance. Awareness of terrain elevations aids in deconfliction strategies and rerouting paths [119,120,121]. ANN performance for UAV-assisted edge computing and parameter optimization techniques for SA and obstacle avoidance are investigated in [9], though high computational demands pose challenges for real-time UAV-assisted SA, particularly in SAR operations.

Deep CNNs excel in feature identification from input images, making them popular for SA in collision avoidance. For instance, a deep ANN for autonomous indoor navigation in [122] showed efficiency in collision avoidance. Combining LSTM with CNN for UAV navigation was explored in [123] using the Gazebo simulator with obstacles [124].

A LiDAR-based approach for UAV space landing using a hybrid CNN-LSTM model was proposed in [125], offering high pose estimation accuracy but insufficient real-time processing speed. Similarly, ref. [126] proposed a hybrid CNN-LSTM model for early diagnosis of rice nutrient status using UAV-captured images, integrating an SE block that improves accuracy but reduces computation speed.

End-to-end approaches map raw sensory data to actions, learning from skilled pilots’ actions [127,128,129]. These methods often involve depth maps, the UAV position, and odometry for route recalculation [127,130]. A CNN-based relative positioning approach for UAV interception and stable formation was suggested in [131], and DL models were applied to terrain depth maps and obstacle data for collision avoidance in [132].

NNs in flight control methods learn UAVs’ nonlinear dynamics, enhancing adaptability in unknown situations [133]. A cooperative system for UAVs’ collaborative decision-making and adaptive navigation using a trained NN coupled with a consensus architecture was designed in [134], facilitating mission synchronization and environmental adaptation.
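The notion of a consensus architecture can be illustrated with the classical average-consensus iteration, in which each UAV repeatedly nudges its local value towards those of its neighbours. This is a generic textbook scheme, not the design of [134]. The three-UAV line topology, the step size, and the local estimates are hypothetical.

```python
import numpy as np


def consensus_step(values, adjacency, epsilon):
    """One synchronous update x <- x - epsilon * L x, with L the graph Laplacian.

    For an undirected graph, the iteration converges to the average when
    0 < epsilon < 1 / (maximum node degree).
    """
    values = np.asarray(values, dtype=float)
    adjacency = np.asarray(adjacency, dtype=float)
    laplacian = np.diag(adjacency.sum(axis=1)) - adjacency
    return values - epsilon * laplacian @ values


# Hypothetical line topology: UAV 0 <-> UAV 1 <-> UAV 2.
links = np.array([[0, 1, 0],
                  [1, 0, 1],
                  [0, 1, 0]])
estimates = np.array([10.0, 20.0, 60.0])  # e.g. local arrival-time estimates
for _ in range(100):
    estimates = consensus_step(estimates, links, epsilon=0.3)
```

Each UAV only needs its neighbours' values, so no central node is required, and all estimates approach the network-wide average.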

Internal SA is crucial for UAVs to address software/hardware failures. Ref. [135] investigated using DNN to classify and detect recovery paths for malfunctioning UAVs, demonstrating its potential for enhancing safety and reliability. Further research could improve autonomous recovery systems, enhancing UAVs’ overall safety and reliability.

5.1.3. Challenges and Future Directions

While supervised learning offers substantial advancements in UAV SA, challenges such as limited labeled datasets, domain adaptation, and real-time processing complexities persist. This section outlines the current challenges and suggests potential directions for future research, aiming to address limitations and propel the application of supervised learning in UAV SA to new heights.

Despite the successful use of supervised learning in the studies mentioned above, which met the needs of various applications, manual labeling requires tremendous time and effort. Moreover, before training, the collected datasets must be qualitatively and quantitatively rich enough for the particular environment in which the UAV will operate. Because the collected data pertain to specific environments, the entire process must be repeated whenever the model is to be used in a different setting. Running lightweight supervised algorithms, such as shallow NNs, on UAVs is feasible, but it comes at the cost of optimal performance and is effective only when sufficient properly labeled data are available or when the environment is fully known.

Nevertheless, since the operating environment is rarely known in real-world applications, relying solely on offline data to train the model is likely inadequate for applications that require real-time sensory data and in which the agent has only a basic understanding of the environment. Additionally, rendering extensive amounts of data in a dynamic spatiotemporal environment is challenging, and labeling the collected data in such an environment further complicates the process.

Another major challenge with supervised methods, including various types of NN, is the intricate training process, which requires extensive computation. Consequently, executing these algorithms on UAVs, as resource-constrained devices, is arduous. Although DNNs can help achieve high accuracy, they incur additional computation, power usage, and latency. Integrating extra hardware into UAVs to manage complex computations may reduce the thrust-to-weight ratio, shorten battery life, and delay response time, making it an inefficient solution. Thus, the demand for ML techniques deployable on edge devices, including UAVs, has increased, because the PMBC limitations of these devices make it challenging to run ML on them. The common alternative is to transfer the data to an external computation center to be processed on high-performance computers, such as a cloud server [136]. However, data communication consumes enormous energy and is prone to latency due to bandwidth limitations. While academic research has contributed significantly to technology development, the majority of these works remain in the simulation stage, relying on assumptions that may not apply to real-world applications or that present challenges in hardware implementation [133].
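The trade-off between onboard processing and offloading can be made concrete with a deliberately simplified cost model. All parameter values below (workload in CPU cycles, clock rates, power draws, link rate, round-trip time) are hypothetical, and the model ignores queuing, retransmissions, and idle power. It is a sketch of the reasoning rather than a validated energy model.

```python
def onboard_cost(cycles, cpu_hz, cpu_power_w):
    """Latency (s) and UAV energy (J) when the task runs on the onboard processor."""
    latency = cycles / cpu_hz
    return latency, latency * cpu_power_w


def offload_cost(data_bits, uplink_bps, tx_power_w, cycles, server_hz, rtt_s=0.0):
    """Latency (s) and UAV energy (J) when raw data are sent to a remote server.

    Only the radio transmission is charged to the UAV battery.
    """
    tx_time = data_bits / uplink_bps
    latency = tx_time + rtt_s + cycles / server_hz
    return latency, tx_time * tx_power_w


# Hypothetical task: 5e9 cycles of inference on a 1 MB (8e6 bit) image.
local_latency, local_energy = onboard_cost(5e9, cpu_hz=1e9, cpu_power_w=5.0)
cloud_latency, cloud_energy = offload_cost(
    8e6, uplink_bps=2e6, tx_power_w=2.0, cycles=5e9, server_hz=50e9, rtt_s=0.05
)
```

Under these assumed numbers, offloading saves UAV energy, yet almost all of its latency is transmission time. Halving the uplink rate, as may happen when infrastructure is damaged, would make it slower than onboard processing.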

Innovative hardware designs are being researched to enhance the computation ability of UAVs, yet the power supply for heavy onboard computation and the communication restrictions remain persistent challenges.

Given the importance of real-time continuous SA, it becomes essential to incorporate online learning techniques that allow the model to adapt and improve its performance based on real-time feedback. By continuously updating the agent’s understanding of the environment through interaction and feedback, the agent can better navigate dynamic and uncertain conditions, ultimately enhancing its ability to make accurate and timely decisions. This adaptive approach enables the model to stay relevant and effective in complex, evolving environments, ensuring it can handle real-world applications’ demands.
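A minimal sketch of online learning is given below: a linear model updated one sample at a time with stochastic gradient descent on the squared error, so that it adapts as data arrive instead of being trained once offline. The class name, learning rate, and synthetic data stream are assumptions for illustration; practical UAV systems would use richer models and would need safeguards against noisy feedback.

```python
import numpy as np


class OnlineLinearModel:
    """Linear predictor updated incrementally from a stream of (x, y) pairs."""

    def __init__(self, n_features, learning_rate=0.05):
        self.w = np.zeros(n_features)
        self.lr = learning_rate

    def predict(self, x):
        return float(self.w @ x)

    def update(self, x, y):
        """One SGD step on 0.5 * (prediction - y)**2; returns the pre-update error."""
        error = self.predict(x) - y
        self.w -= self.lr * error * x
        return error


# Synthetic, noise-free stream generated by hypothetical "true" weights.
rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
model = OnlineLinearModel(n_features=2)
for _ in range(2000):
    x = rng.normal(size=2)
    model.update(x, float(true_w @ x))
```

Because each update touches only the current sample, memory and computation per step stay constant, which matters on resource-constrained platforms.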

5.2. Stochastic, Deterministic, and Metaheuristics Algorithms

Deterministic and nondeterministic algorithms can provide UAVs with SA in two different aspects. The first is optimizing the route or path the UAV should take to navigate the environment and collect data from its onboard or scattered sensors (see Section 4.1). This approach has been successfully applied in single- and multi-UAV mission planning scenarios (application-based SA).

The second approach is exploiting SA to ensure the UAV’s successful mission completion in unknown environments, considering the important parameters, constraints, and disturbances that can imperil the vehicle’s safety or hamper the operation. This includes enabling vehicles to be aware of and deal with factors such as collision barriers, including trees, cliffs, or objects, the impact of wind on the UAV’s motion, and other harsh environmental conditions that can affect the UAV’s maneuverability, battery consumption, endurance, trajectory length, and overall mission cost (vehicle-based SA).

UAVs are often used to conduct time-critical operations and explore unknown and uncertain environments where neither a GPS signal nor an a priori terrain map is available. Thus, devising an effective and feasible exploration strategy, together with route/path planning and trajectory tracking, is crucial for optimizing the operation performance and minimizing exploration costs. To this end, UAVs must perceive their surroundings in real time and adjust their strategy accordingly, and numerous studies have been dedicated to developing effective exploration strategies.

Both of these perspectives offer great potential for using these groups of algorithms to enhance the vehicle’s autonomy and reduce or eliminate the reliance on remote control or supervision, leading to lower risks of human errors and subjective judgments. This is especially critical in operations like SAR that have strict timelines and require high accuracy.

As one of the crucial considerations for controlling the behavior of autonomous UAVs, threat awareness (mainly of obstacles) has been investigated in [23,24,25]. In [23], the UAV’s collision-free flight over hilly terrain is addressed by performing an evasive maneuver based on radar data. In [24], a similar problem is solved using visual data and a reasoning inference system that discovers the relationships between the objects in the observed scene. In [11], collision awareness is also addressed, considering different ranges of objects on the Earth’s surface. Available approaches for motion planning and navigation can be categorized by their nature as deterministic, non-deterministic, or stochastic.

5.2.1. Deterministic Methods for Vehicle-Based SA

Deterministic path and motion planning methods ensure consistent results with the same initial conditions and rules.

Potential field-based methods are commonly used for path planning, creating attractive and repulsive fields to guide vehicle motion. Ref. [137] used an artificial potential field (APF) for UAV navigation in a 3D dynamic environment. Ref. [138] applied an APF-based strategy for bilateral teleoperation with 3D collision-aware planning, incorporating visual and force feedback. Ref. [139] integrated APF with multi-UAV conflict detection and resolution (CDR) using rule priority and geometry-based mechanisms, showing a 46% improvement in near-collision detection. Ref. [140] proposed an improved APF for UAV swarm formation, handling dynamic obstacles to prevent collisions.
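The attractive and repulsive fields can be sketched as follows. This is a basic 2D artificial potential field with a quadratic attractive potential and the classical inverse-distance repulsive potential, followed by fixed-length steps along the net force. It is not the specific formulation of [137,138,139,140]; the gains, influence radius, step size, and scenario are hypothetical.

```python
import numpy as np


def apf_force(pos, goal, obstacles, k_att=1.0, k_rep=100.0, influence=5.0):
    """Net force: attraction to the goal plus repulsion from nearby obstacles."""
    force = k_att * (goal - pos)
    for obs in obstacles:
        diff = pos - obs
        dist = np.linalg.norm(diff)
        if 0.0 < dist < influence:
            # Negative gradient of 0.5 * k_rep * (1/dist - 1/influence)**2
            force += k_rep * (1.0 / dist - 1.0 / influence) * diff / dist**3
    return force


def plan_path(start, goal, obstacles, step=0.1, tol=0.2, max_iters=2000):
    """Follow the normalized force direction until the goal is within `tol`."""
    pos = np.asarray(start, dtype=float)
    goal = np.asarray(goal, dtype=float)
    obstacles = [np.asarray(o, dtype=float) for o in obstacles]
    path = [pos.copy()]
    for _ in range(max_iters):
        if np.linalg.norm(goal - pos) < tol:
            break
        force = apf_force(pos, goal, obstacles)
        norm = np.linalg.norm(force)
        if norm < 1e-9:  # zero net force: the planner has stalled
            break
        pos = pos + step * force / norm
        path.append(pos.copy())
    return np.array(path)


# Hypothetical scenario: one obstacle slightly off the straight line to the goal.
path = plan_path(start=(0.0, 0.0), goal=(10.0, 10.0), obstacles=[(5.0, 4.0)])
```

Each step needs only the current position, the goal, and nearby obstacles, which accounts for the low computational cost of the method. If an obstacle lies exactly on the line between start and goal, the same code can stall where attraction and repulsion cancel.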

While APF is popular for its fast calculations and low computational cost, it can lead to the “repulsive dilemma”, where UAVs may get stuck in local minima. Ref. [141] formulated path planning as a multi-constraint optimization problem using linear programming and adaptive density-based clustering, successfully meeting task completion and execution times. Ref. [142] developed a decentralized PDE-based approach for optimal multi-UAV path planning, leveraging porosity values to model collision risks, and suggested an ML technique for efficient PDE solutions. This method ensures that the UAVs do not collide while taking into account their dynamic properties. The feasibility of on-board implementation is also highlighted, and a simulation study shows the advantages of this method over centralized and sequential planning.

Mixed-integer linear programming (MILP), an exact method, was applied in [143,144] to collision-free motion planning for UAVs flying at low altitude over a residential area. Cho et al. [145] also used MILP for multi-UAV path planning to maximize coverage in a maritime SAR operation. In that study, the search area is decomposed into a grid and translated into a graph, on which the MILP renders an optimal coverage solution. However, as an exact method, MILP is computationally expensive and therefore inefficient for real-time applications, which require swift responses to environmental changes.

Deterministic algorithms guarantee consistent results for the same input and predictable performance. They are suitable for scenarios where the environment is fully known and static, as they can efficiently compute optimal paths without random sampling or exploration. However, their computational time depends heavily on the size and complexity of the operation field. These algorithms also face optimization challenges in complex environments because they rely heavily on prior knowledge of the surroundings, including the potential field, the collision cost map, and, in some cases, a model of the UAV’s kinodynamics [139,140]. Moreover, they are highly vulnerable to noisy data, which can degrade their accuracy and performance even when prior knowledge is available. Their slow convergence and dependence on the size and dynamism of the operation field make them inefficient for real-time applications, which require a swift response to environmental changes and in which the vehicle may have to replan several times during a mission.

5.2.2. Non-Deterministic and Stochastic Methods for Vehicle-Based SA

Non-deterministic methods can exhibit different behaviors for the same input due to inherent variability. Stochastic and non-deterministic algorithms for UAV target motion prediction and trajectory tracking include the A* algorithm [146] and MDPs [147,148], enabling advanced SA for path planning. Since 2011, research has focused on these areas, as discussed in [149].

In [150], a centralized A* algorithm and multi-Bernoulli tracking approach address SA-dependent UAV swarm formation and planning. A mission-centered command and control paradigm using A* for dynamic path planning and UAV-UGV synchronization was proposed in [147]. The A* algorithm also supports UAV path planning in urban air mobility operations, facilitating collision avoidance and deflection updates [151].
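For readers unfamiliar with A*, the following is a minimal sketch of the algorithm on a 2D occupancy grid. The grid encoding (0 for free, 1 for blocked), the 4-connected unit-cost moves, and the Manhattan heuristic are illustrative assumptions and do not reproduce the planners used in the cited works.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (blocked) cells.

    Returns a list of (row, col) cells from start to goal, or None.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g_cost = {start: 0}
    parent = {start: None}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:  # walk the parent links back to start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale entry: a cheaper route to this cell was found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                ng = g + 1
                if ng < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = ng
                    parent[nxt] = cell
                    heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None  # goal unreachable

grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
route = astar(grid, (0, 0), (2, 0))
```

Dynamic replanning, as in the works above, would amount to calling such a routine again whenever the occupancy grid changes.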

Sampling-based approaches like RRT, RRT*, and PRMs [152,153,154,155] support UAV path planning by connecting sampled obstacle-free points. Wu et al. [153] explored RRT variants, highlighting their slow convergence and high computational cost. A hybrid approach combining bidirectional APF and IB-RRT* addresses randomness challenges and controls computational costs. Bias-RRT* was used for collision-free path planning in port inspection operations [155].
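The idea of connecting sampled obstacle-free points can be sketched with a basic RRT in 2D. This is a simplified illustration, not any of the variants surveyed above: the 10% goal bias, the step size, and the `is_free` callback are assumptions, and only the new node (not the whole edge) is collision-checked.

```python
import math
import random

def rrt(start, goal, is_free, bounds, step=0.5, goal_tol=0.5,
        max_iters=5000, seed=0):
    """Grow a tree from start; return a node path ending near goal, or None.

    is_free(point) -> bool is a user-supplied (hypothetical) collision check.
    bounds is ((x_min, x_max), (y_min, y_max)).
    """
    rng = random.Random(seed)
    nodes = [start]
    parent = {0: None}
    for _ in range(max_iters):
        # Sample a random point, biased toward the goal 10% of the time.
        if rng.random() < 0.1:
            sample = goal
        else:
            sample = (rng.uniform(*bounds[0]), rng.uniform(*bounds[1]))
        # Find the nearest tree node (linear scan, fine for a sketch).
        i_near = min(range(len(nodes)),
                     key=lambda i: math.dist(nodes[i], sample))
        near = nodes[i_near]
        d = math.dist(near, sample)
        if d == 0.0:
            continue
        # Steer from the nearest node toward the sample by at most `step`.
        t = min(step, d) / d
        new = (near[0] + t * (sample[0] - near[0]),
               near[1] + t * (sample[1] - near[1]))
        if not is_free(new):
            continue  # simplification: the edge itself is not checked
        nodes.append(new)
        parent[len(nodes) - 1] = i_near
        if math.dist(new, goal) <= goal_tol:
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None

def outside_disc(p):
    # Hypothetical obstacle: a disc of radius 1.5 centered at (5, 5).
    return math.dist(p, (5.0, 5.0)) > 1.5

path = rrt((1.0, 1.0), (9.0, 9.0), outside_disc, ((0.0, 10.0), (0.0, 10.0)))
```

The returned path is feasible but generally not shortest; RRT* adds a rewiring step to improve path cost as more samples are drawn, at additional computational expense.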

Conventional motion planning algorithms, including PRM, RRT derivatives, and A*, involve two stages: observing the surroundings and generating control instructions. These methods suffer from high computational complexity, slow convergence, prolonged flight distance/time, and high energy consumption, making them inefficient for resource-constrained UAVs. Non-deterministic algorithms offer near-optimal solutions quickly but are sensitive to environmental changes and disruptions [156,157]. Open-loop systems [158] lack logical prediction and require frequent decision-making iterations.

Stochastic methods involve randomness in decision-making or environmental representation. PRM struggles with non-holonomic constraints and generates redundant moves based on vertex connections. Sampling-based approaches suffer from slow convergence and high computational costs. Hybrid approaches combining deterministic and stochastic elements handle uncertainty and variability, providing stability and predictability.

5.2.3. Metaheuristic and Evolution-Based Methods for Vehicle-Based SA

Metaheuristic and evolution-based algorithms have shown significant progress in UAV motion planning, leveraging swarm intelligence for better convergence in complex target spaces.

The ant colony optimization (ACO) algorithm was applied for optimal collision-free paths in air force operations [159]. A hybrid ACO-A* algorithm addressed multirotor UAV 3D motion planning [160]. In this study, a camera-mounted UAV is used for patrol image shooting, while ACO-A* adaptively generates a path plan that uses energy consumption as the cost function and takes wind velocity constraints into account.

Bo Li et al. [161] developed an improved ACO for multi-UAV collision-free path planning that also smooths the trajectory at sharp turns using the inscribed circle (IC) approach. The primary focus of this research is augmenting ACO with the Metropolis criterion to reduce the risk of stagnation in local optima. However, the UAV is assumed to fly in a static environment with stationary obstacles, which does not represent real-world conditions and neglects factors that can affect the UAV’s practical flight in diverse situations.
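The Metropolis criterion mentioned above is the acceptance rule known from simulated annealing: a worse candidate is still accepted with a probability that decays with the cost increase. How [161] embeds it in ACO is not detailed here, so the sketch below shows only the generic rule; the function name and the `temperature` parameter are illustrative assumptions.

```python
import math
import random

def metropolis_accept(current_cost, candidate_cost, temperature, rng=random):
    """Decide whether to accept a candidate solution (minimization).

    Improvements are always accepted; a worse candidate is accepted with
    probability exp(-(candidate_cost - current_cost) / temperature).
    """
    if candidate_cost <= current_cost:
        return True
    if temperature <= 0:
        return False  # no tolerance for worse solutions at zero temperature
    delta = candidate_cost - current_cost
    return rng.random() < math.exp(-delta / temperature)
```

Occasionally accepting a longer path lets the search leave a local optimum, and lowering `temperature` over the iterations gradually turns the rule into a purely greedy one.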

Cuckoo search and multi-string chromosome genetic algorithms enhanced the multi-dimensional dynamic list programming (MDLS) algorithm for UAV task planning, showing better global optimization and task completion time [162]. The authors proposed a mission planning method for UAV courses of action that uses a two-segment nested scheme generation strategy incorporating task decomposition and resource scheduling. This method can automatically generate multiple schemes for the entire operational process, with advantages in diversity and task completion time over other methods. The correlation coefficient can be adjusted to target different optimization indexes. The method’s effectiveness was verified through modeling and simulation of UAV cooperative operations.

A hybrid grey wolf optimization (GWO) and fruit fly optimization algorithm (FOA) was proposed for UAV route planning in oilfields, improving solution quality in complex environments [163]. However, this work considers only 2D terrain, which does not capture the UAV’s kinodynamics or the challenges of a 3D environment.

Qu et al. [164] introduced a new hybrid algorithm, HSGWO-MSOS, for UAV path planning in complex environments. This algorithm efficiently combines exploration and exploitation abilities by merging a simplified grey wolf optimizer (GWO) with a modified symbiotic organisms search (MSOS). The GWO algorithm phase has been simplified to speed up the convergence rate while maintaining population exploration ability. Additionally, the commensalism phase of the SOS algorithm is modified and synthesized with the GWO to enhance the exploitation ability. To ensure the path is suitable for the UAV, the generated flight route is smoothed using a cubic B-spline curve. The authors provide a convergence analysis of the proposed HSGWO-MSOS algorithm based on the method of linear difference equations. Simulation experiments show that the HSGWO-MSOS algorithm successfully acquires a feasible and effective route, outperforming the GWO, SOS, and simulated annealing algorithms.
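The smoothing step can be illustrated with a uniform cubic B-spline that treats the planner’s waypoints as control points. This is a generic sketch rather than the exact procedure of [164]: the function name, the end-point repetition used to clamp the curve, and the sampling density are assumptions.

```python
def smooth_path(waypoints, samples_per_segment=10):
    """Sample a uniform cubic B-spline whose control points are the waypoints.

    The first and last waypoints are repeated so that the curve starts and
    ends exactly on them; interior waypoints are approximated, not hit.
    """
    pts = [waypoints[0]] * 2 + list(waypoints) + [waypoints[-1]] * 2
    curve = []
    for i in range(len(pts) - 3):
        p0, p1, p2, p3 = pts[i:i + 4]
        for s in range(samples_per_segment):
            t = s / samples_per_segment
            # Uniform cubic B-spline basis functions (they sum to 1).
            b0 = (1 - t) ** 3 / 6
            b1 = (3 * t ** 3 - 6 * t ** 2 + 4) / 6
            b2 = (-3 * t ** 3 + 3 * t ** 2 + 3 * t + 1) / 6
            b3 = t ** 3 / 6
            curve.append(tuple(
                b0 * a + b1 * b + b2 * c + b3 * d
                for a, b, c, d in zip(p0, p1, p2, p3)
            ))
    curve.append(tuple(float(x) for x in waypoints[-1]))  # close at the end
    return curve

# A right-angle turn at (5, 0) is replaced by a rounded corner.
curve = smooth_path([(0, 0), (5, 0), (5, 5)])
```

Because the curve stays inside the convex hull of neighbouring control points, it cuts corners rather than overshooting them; a smoothed route should therefore be re-checked against obstacles.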

PSO is a classical metaheuristic commonly used for multi-objective path planning problems. It offers promising performance in UAV path planning and reduces collision risk without adjusting the algorithm setup [165]. Shang et al. [166] developed a co-optimal coverage path planning (CCPP) method based on PSO that simultaneously optimizes the UAV path and the quality of the captured images while reducing computational complexity. The method addresses a limitation of existing solvers, which prioritize either efficient flight paths and coverage or low computational complexity, although these criteria need to be co-optimized holistically. The CCPP method uses a PSO framework that optimizes the coverage paths iteratively without discretizing the space of movement or simplifying the perception models. The approach comprises a cost function that gauges the effectiveness of a coverage inspection path and a greedy heuristic exploration algorithm that enhances optimization quality by venturing deep into the viewpoint search spaces. The method has been shown to significantly improve the quality of coverage inspection while maintaining path efficiency across different test geometries.
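For reference, the core PSO update that such planners build on can be sketched as follows. This is a plain global-best PSO with commonly used coefficient values, not the CCPP method of [166]; the function signature, the box bounds, and the toy cost function are illustrative assumptions.

```python
import random

def pso(cost, dim, bounds, n_particles=30, iters=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize cost(x) over a box with a basic global-best PSO."""
    rng = random.Random(seed)
    lo, hi = bounds
    pos = [[rng.uniform(lo, hi) for _ in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]            # best position seen per particle
    pbest_cost = [cost(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_cost[i])
    gbest, gbest_cost = pbest[g][:], pbest_cost[g]  # best position overall
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + pull toward personal best + pull toward global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            c = cost(pos[i])
            if c < pbest_cost[i]:
                pbest[i], pbest_cost[i] = pos[i][:], c
                if c < gbest_cost:
                    gbest, gbest_cost = pos[i][:], c
    return gbest, gbest_cost

# Toy cost: squared distance of a 2D waypoint from the point (1, 1).
best, best_cost = pso(lambda x: sum((xi - 1.0) ** 2 for xi in x),
                      dim=2, bounds=(-5.0, 5.0))
```

In a path-planning setting, the decision vector would typically stack the coordinates of several waypoints, and the cost would combine path length with collision or coverage terms.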

In a recent study, Khan et al. [167] proposed an approach based on the capacitated vehicle routing problem (CVRP) that uses consensus theory for UAV formation control, aiming at the safe, collision-free, and smooth navigation of UAVs from their initial position to the site of a medical emergency. The idea is that a patient notifies the nearest hospital about their health condition through a GSM band, and a doctor drone is immediately dispatched to provide medical assistance. In this study, the vehicle routing problem was solved using CVRP and different evolutionary algorithms, including PSO, ACO, and GA, with a comparative analysis of different vehicle capacities and numbers. The results showed that the CVRP outperformed the others in terms of runtime (0.06 s less) and transportation cost, using fewer vehicles with an almost doubled payload capacity. Although this research tackled computational time and transportation costs, the excessive battery consumption caused by the increased payload still needs to be addressed.

Zhang et al. [168] introduced a hybrid FWPSALC mechanism for UAV path planning, demonstrating robustness in search operations and constraint handling with improved convergence speed. The study frames UAV global path planning as a multi-constraint optimization problem and proposes a collaborative approach that integrates an enhanced fireworks algorithm (FWA) and PSO. The objective function models the UAV flight path so as to minimize its length while adhering to stringent constraints posed by multiple threat areas. The α-constrained method, which employs a level comparison strategy, is integrated into both FWA and PSO to strengthen their constraint-handling capabilities. The entire population is divided into fireworks and particles, which operate in parallel to increase diversity in the search process. A novel mutation strategy for the fireworks aims to prevent convergence to local optima. An information-sharing stream between the fireworks (the individuals in FWA) and the particles (in PSO) is designed to improve the global optimization process. The simulation results demonstrate the efficiency of the hybrid algorithm in terms of solution quality and constraint satisfaction.
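The level comparison at the heart of the α-constrained method can be sketched as below, following the commonly cited formulation in which every candidate carries an objective value f and a constraint satisfaction level μ in [0, 1] (μ = 1 meaning fully feasible). Whether [168] uses exactly this variant is an assumption, and the function name is hypothetical.

```python
def alpha_better(f1, mu1, f2, mu2, alpha):
    """Return True if candidate 1 beats candidate 2 under the α-level comparison.

    f1, f2   : objective values (lower is better)
    mu1, mu2 : constraint satisfaction levels in [0, 1]
    alpha    : required satisfaction level in [0, 1]
    """
    if (mu1 >= alpha and mu2 >= alpha) or mu1 == mu2:
        # Both satisfy the constraints well enough (or equally well):
        # decide on the objective alone.
        return f1 < f2
    # Otherwise the candidate that satisfies the constraints better wins.
    return mu1 > mu2
```

Replacing the usual `f1 < f2` test in FWA or PSO with such a comparison lets the search rank infeasible candidates sensibly, for example flight paths that still cross a threat area, without hand-tuned penalty weights.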

One major limitation is the non-deterministic nature of evolutionary algorithms, which makes escaping local optima challenging and can lead to suboptimal solutions. Additionally, creating a decision-making model that generalizes to unknown environments remains a formidable challenge, even with the computational efficiency of evolutionary algorithms. The computational efficiency of metaheuristic approaches varies widely. Some evolutionary algorithms and other non-deterministic methods can be computationally intensive, especially as the number of agents or the dimensionality of the problem increases. However, many are suitable for resource-constrained devices because they provide good-enough solutions quickly, even if these solutions are not optimal [169].

5.3. RL-Based Approaches for UAV-Centric SA

Despite the extensive use of UAVs for SA, their effectiveness in providing accurate SA is hindered by environmental uncertainty. Applying a non-deterministic or centralized optimization algorithm is challenging in most environments because they are uncertain, dynamic, and only partially observable. Individual agents must make independent and potentially short-sighted decisions based on the information they receive. RL is suggested in [170] to address the shortcomings of supervised, deterministic, and non-deterministic algorithms: the agent (UAV) interacts with its surroundings and learns by trial and error.

RL emerges as a powerful paradigm within UAV-oriented SA, offering a dynamic approach where UAVs learn optimal decision-making strategies through interaction with their environment. RL excels in teaching UAVs to make sequential decisions by learning from feedback received through interactions with the environment. RL also contributes to dynamic object recognition and tracking within UAV SA. By continually learning from interactions, UAVs become adept at recognizing and tracking moving objects in their surroundings. The versatility of RL is highlighted in its ability to facilitate transfer learning across various UAV missions. By accumulating knowledge from one mission, RL-equipped UAVs can adapt and apply learned policies to different scenarios. This section explores how RL algorithms, such as Q-learning and DRL, enable UAVs to formulate optimal decision policies for tasks like navigation, path planning, and adaptive response to environmental changes.
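As a concrete illustration of learning from interaction, the sketch below runs tabular Q-learning on a toy one-dimensional corridor in which the agent must reach the rightmost cell. The environment, the reward values, and the hyperparameters are assumptions made for illustration only and are far simpler than any UAV task discussed in this section.

```python
import random

def q_learning(n_states=6, episodes=500, alpha=0.5, gamma=0.9,
               epsilon=0.1, seed=0):
    """Tabular Q-learning on a corridor; returns Q[state][action].

    Actions: 0 = move left, 1 = move right. The episode ends at the last
    cell (reward +1); every other move costs -0.01 (hypothetical values).
    """
    rng = random.Random(seed)
    moves = (-1, +1)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        s = 0
        while s != goal:
            # Epsilon-greedy action selection.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = 0 if q[s][0] >= q[s][1] else 1
            s_next = min(goal, max(0, s + moves[a]))
            r = 1.0 if s_next == goal else -0.01
            # Bootstrapped target; no future value beyond the terminal cell.
            future = 0.0 if s_next == goal else gamma * max(q[s_next])
            q[s][a] += alpha * (r + future - q[s][a])
            s = s_next
    return q

q = q_learning()
policy = [0 if left >= right else 1 for left, right in q[:-1]]
```

After training, the greedy policy moves right in every non-terminal cell. DRL replaces the table with a neural network so that the same update can scale to high-dimensional observations such as camera images.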