Learning Advanced Locomotion for Quadrupedal Robots: A Distributed Multi-Agent Reinforcement Learning Framework with Riemannian Motion Policies

Abstract

1. Introduction

1.1. Background

1.2. Contributions

- Extending the applicability of RMPs to underactuated systems, such as quadrupedal robots, by leveraging the MARL framework for dynamic space decomposition.

- Learning diverse locomotion modes in a unified framework, such as three-legged, two-legged, and one-legged walking, within a single architecture, enabling the robot to maintain balance and stability in different motion policies by performing reactive center-of-mass tracking.

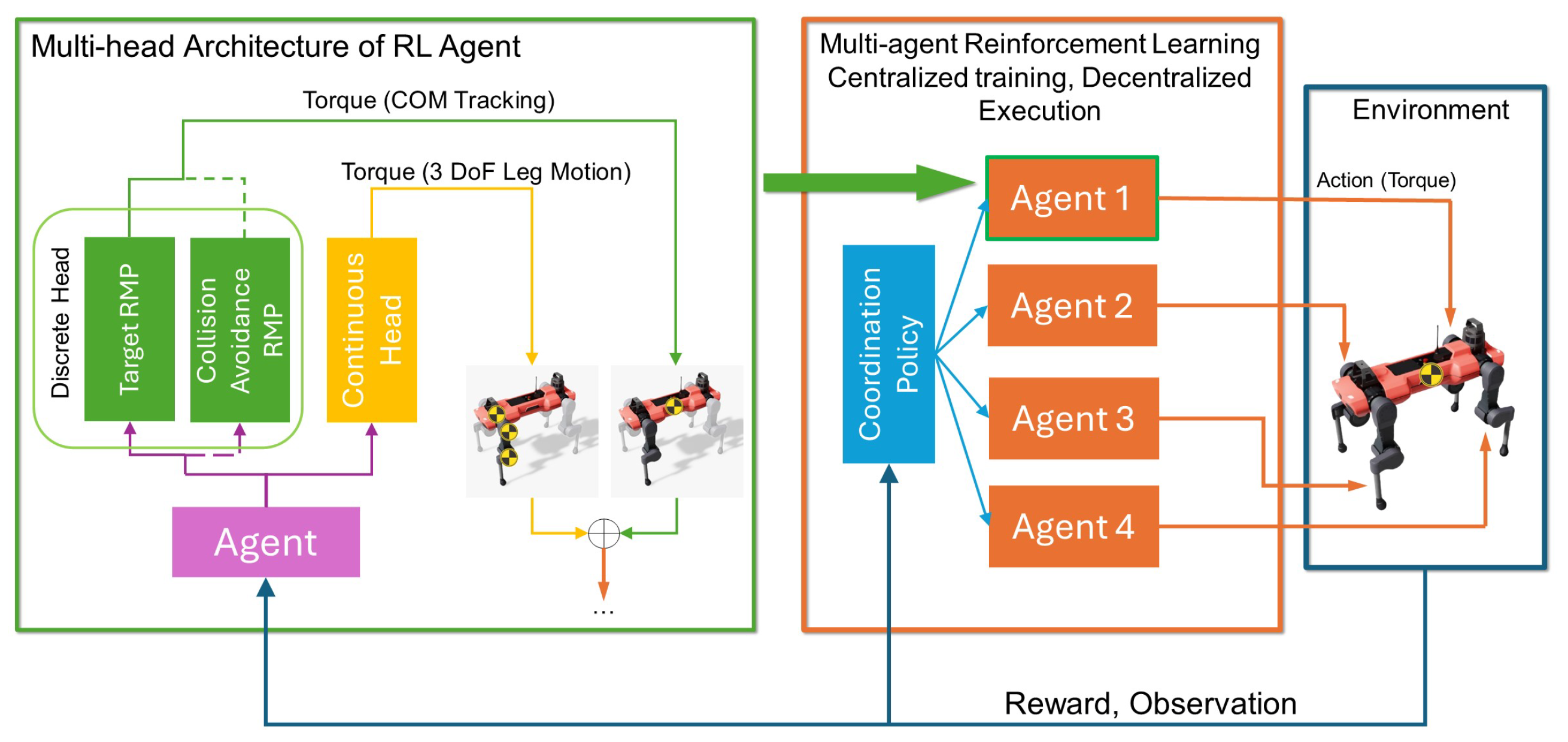

- Introduction of a multi-head structure agent to handle both discrete and continuous actions simultaneously.

- Demonstration of the method’s effectiveness through simulation experiments, showcasing advantages in learning efficiency, stability, and success rate, particularly in challenging tasks.

1.3. Layout

2. Related Work

2.1. RMPs and RMPflow

2.2. Deep Reinforcement Learning for Quadrupedal Robots

2.3. Distributed Multi-Agent Reinforcement Learning Framework

3. Methods

3.1. Overview

3.2. RMPs for Leg Locomotion Control

3.2.1. Target RMP for Center-of-Mass Tracking

3.2.2. Collision Avoidance RMP for Leg Locomotion

3.3. Multi-Head Architecture of RL Agent

3.4. Updating Policies Using Distributed Multi-Agent RL Framework

3.5. Rewards

- , , and represent the current position of the COM in the x-, y-, and z-directions, respectively. , , and denote the target position of the COM in the x-, y-, and z-directions, respectively.

- is the angle between the orientation of the robot trunk and the vertical direction of the world’s coordinate system.

- is the decay factor for the leg contact penalty term. t represents the current time step.

- is a binary variable indicating whether the specified leg is in contact with the ground (1 for contact, 0 for no contact).

4. Experiments and Results

4.1. Experiment Setup

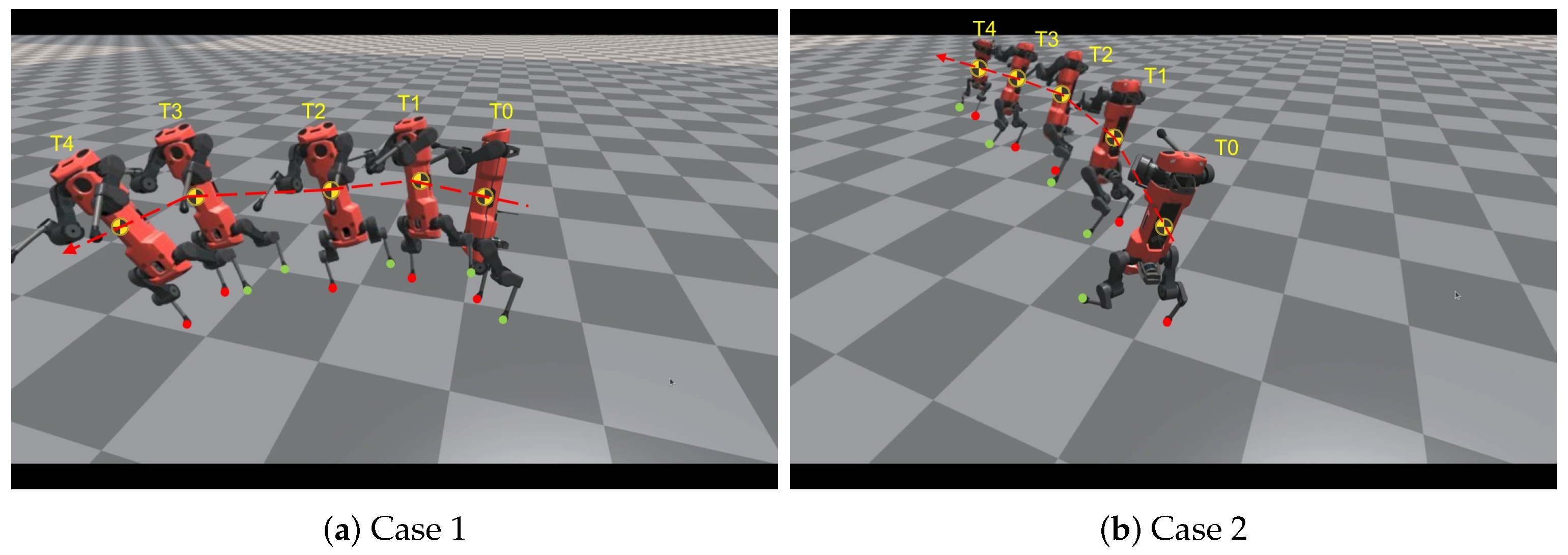

4.2. Training

4.3. Simulation Results

5. Discussion

5.1. Conclusions

5.2. Limitations and Future Work

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bjelonic, M.; Grandia, R.; Harley, O.; Galliard, C.; Zimmermann, S.; Hutter, M. Whole-body MPC and online gait sequence generation for wheeled-legged robots. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 8388–8395. [Google Scholar]

- Nagano, K.; Fujimoto, Y. Zero Moment Point Estimation Based on Resonant Frequencies of Wheel Joint for Wheel-Legged Mobile Robot. IEEJ J. Ind. Appl. 2022, 11, 408–418. [Google Scholar] [CrossRef]

- Smith, L.; Kew, J.C.; Li, T.; Luu, L.; Peng, X.B.; Ha, S.; Tan, J.; Levine, S. Learning and adapting agile locomotion skills by transferring experience. arXiv 2023, arXiv:2304.09834. [Google Scholar]

- Qi, J.; Gao, H.; Su, H.; Han, L.; Su, B.; Huo, M.; Yu, H.; Deng, Z. Reinforcement learning-based stable jump control method for asteroid-exploration quadruped robots. Aerosp. Sci. Technol. 2023, 142, 108689. [Google Scholar] [CrossRef]

- Tang, Z.; Kim, D.; Ha, S. Learning agile motor skills on quadrupedal robots using curriculum learning. In Proceedings of the 3rd International Conference on Robot Intelligence Technology and Applications; Springer: Singapore, 2021; Volume 3. [Google Scholar]

- Vollenweider, E.; Bjelonic, M.; Klemm, V.; Rudin, N.; Lee, J.; Hutter, M. Advanced skills through multiple adversarial motion priors in reinforcement learning. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; IEEE: Piscataway, NJ, USA, 2023. [Google Scholar]

- Mattamala, M.; Chebrolu, N.; Fallon, M. An efficient locally reactive controller for safe navigation in visual teach and repeat missions. IEEE Robot. Autom. Lett. 2022, 7, 2353–2360. [Google Scholar] [CrossRef]

- Ratliff, N.D.; Issac, J. Riemannian motion policies. arXiv 2018, arXiv:1801.02854. [Google Scholar]

- Shaw, S.; Abbatematteo, B.; Konidaris, G. RMPs for safe impedance control in contact-rich manipulation. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 2707–2713. [Google Scholar]

- Wingo, B.; Cheng, C.A.; Murtaza, M.; Zafar, M.; Hutchinson, S. Extending Riemmanian motion policies to a class of underactuated wheeled-inverted-pendulum robots. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; IEEE: Piscataway, NJ, USA, 2020. [Google Scholar]

- Marew, D.; Lvovsky, M.; Yu, S.; Sessions, S.; Kim, D. Integration of Riemannian Motion Policy with Whole-Body Control for Collision-Free Legged Locomotion. In Proceedings of the 2023 IEEE-RAS 22nd International Conference on Humanoid Robots (Humanoids), Austin, TX, USA, 12–14 December 2023. [Google Scholar]

- Cheng, C.-A.; Mukadam, M.; Issac, J.; Birchfield, S.; Fox, D.; Boots, B.; Ratliff, N. Rmpflow: A computational graph for automatic motion policy generation. In Algorithmic Foundations of Robotics XIII: Proceedings of the 13th Workshop on the Algorithmic Foundations of Robotics; Springer International Publishing: Cham, Switzerland, 2020; pp. 441–458. [Google Scholar]

- Bellegarda, G.; Chen, Y.; Liu, Z.; Nguyen, Q. Robust high-speed running for quadruped robots via deep reinforcement learning. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; pp. 10364–10370. [Google Scholar]

- Ladosz, P.; Weng, L.; Kim, M.; Oh, H. Exploration in deep reinforcement learning: A survey. Inf. Fusion 2022, 85, 1–22. [Google Scholar] [CrossRef]

- Mnih, V.; Kavukcuoglu, K.; Silver, D.; Rusu, A.A.; Veness, J.; Bellemare, M.G.; Graves, A.; Riedmiller, M.; Fidjeland, A.K.; Ostrovski, G.; et al. Human-level control through deep reinforcement learning. Nature 2015, 518, 529–533. [Google Scholar] [CrossRef] [PubMed]

- Tan, H. Reinforcement learning with deep deterministic policy gradient. In Proceedings of the 2021 International Conference on Artificial Intelligence, Big Data and Algorithms (CAIBDA), Sanya, China, 23–25 April 2021; pp. 82–85. [Google Scholar]

- Haarnoja, T.; Zhou, A.; Abbeel, P.; Levine, S. Soft actor-critic: Off-policy maximum entropy deep reinforcement learning with a stochastic actor. In Proceedings of the International Conference on Machine Learning (ICML), Stockholm, Sweden, 10–15 July 2018; pp. 1861–1870. [Google Scholar]

- Zhang, K.; Yang, Z.; Başar, T. Multi-agent reinforcement learning: A selective overview of theories and algorithms. In Handbook of Reinforcement Learning and Control; Springer: Cham, Switzerland, 2021; pp. 321–384. [Google Scholar]

- Canese, L.; Cardarilli, G.C.; Di Nunzio, L.; Fazzolari, R.; Giardino, D.; Re, M.; Spanò, S. Multi-agent reinforcement learning: A review of challenges and applications. Appl. Sci. 2021, 11, 4948. [Google Scholar] [CrossRef]

- Wang, Y.; Sagawa, R.; Yoshiyasu, Y. A Hierarchical Robot Learning Framework for Manipulator Reactive Motion Generation via Multi-agent Reinforcement Learning and Riemannian Motion Policies. IEEE Access 2023, 1, 126979–126994. [Google Scholar] [CrossRef]

- Liu, M.; Qu, D.; Xu, F.; Zou, F.; Di, P.; Tang, C. Quadrupedal robots whole-body motion control based on centroidal momentum dynamics. Appl. Sci. 2019, 9, 1335. [Google Scholar] [CrossRef]

- Luo, J.; Li, C.; Fan, Q.; Liu, Y. A graph convolutional encoder and multi-head attention decoder network for TSP via reinforcement learning. Eng. Appl. Artif. Intell. 2022, 112, 104848. [Google Scholar] [CrossRef]

- Yu, C.; Velu, A.; Vinitsky, E.; Gao, J.; Wang, Y.; Bayen, A.; Wu, Y. The surprising effectiveness of PPO in cooperative multi-agent games. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2022; Volume 35, pp. 24611–24624. [Google Scholar]

- Lowe, R.; Wu, Y.I.; Tamar, A.; Harb, J.; Abbeel, O.P.; Mordatch, I. Multi-agent actor-critic for mixed cooperative-competitive environments. In Advances in Neural Information Processing Systems 30; Curran Associates, Inc.: Red Hook, NY, USA, 2017. [Google Scholar]

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347. [Google Scholar]

- Makoviychuk, V.; Wawrzyniak, L.; Guo, Y.; Lu, M.; Storey, K.; Macklin, M.; Hoeller, D.; Rudin, N.; Allshire, A.; Handa, A.; et al. Isaac Gym: High performance GPU-based physics simulation for robot learning. arXiv 2021, arXiv:2108.10470. [Google Scholar]

- ANYmal C—The Next Step in Robotic Industrial Inspection. Available online: https://www.anybotics.com/news/the-next-step-in-robotic-industrial-inspection (accessed on 20 August 2019).

- Corbères, T.; Flayols, T.; Léziart, P.A.; Budhiraja, R.; Souères, P.; Saurel, G.; Mansard, N. Comparison of predictive controllers for locomotion and balance recovery of quadruped robots. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 5021–5027. [Google Scholar]

- Brohan, A.; Brown, N.; Carbajal, J.; Chebotar, Y.; Chen, X.; Choromanski, K.; Ding, T.; Driess, D.; Dubey, A.; Finn, C.; et al. RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control. arXiv 2023, arXiv:2307.15818. [Google Scholar]

| Task | Method | X Error | Y Error | Z Error | Success Rate |

|---|---|---|---|---|---|

| 4-Leg Walking | Ours | ±0.04 | ±0.04 | ±0.26 | 99.6% |

| PPO | ±0.04 | ±0.06 | ±0.38 | 98.2% | |

| MAPPO | ±0.07 | ±0.09 | ±0.33 | 99.6% | |

| MPC | ±0.02 | ±0.03 | ±0.24 | 100% | |

| 3-Leg Walking | Ours | ±0.05 | ±0.04 | ±0.36 | 98.6% |

| PPO | ±0.06 | ±0.5 | ±0.58 | 94.6% | |

| MAPPO | ±0.17 | ±0.14 | ±0.63 | 96.5% | |

| MPC | ±0.04 | ±0.03 | ±0.44 | 98.3% | |

| 2-Leg Walking | Ours | ±1.15 | ±1.13 | ±0.62 | 98.3% |

| PPO | ±6.4 | ±5.7 | ±3.5 | 42.7% | |

| MAPPO | ±4.8 | ±4.5 | ±1.44 | 83.5% | |

| MPC | ±1.07 | ±1.32 | ±0.82 | 98.3% | |

| 1-Leg Walking | Ours | ±5.8 | ±7.2 | ±1.82 | 86.2% |

| PPO | ±17.4 | ±14.2 | ±6.7 | Failed | |

| MAPPO | ±10.2 | ±13.7 | ±5.8 | Failed | |

| MPC | ±6.3 | ±10.3 | ±4.6 | 64.2% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, Y.; Sagawa, R.; Yoshiyasu, Y. Learning Advanced Locomotion for Quadrupedal Robots: A Distributed Multi-Agent Reinforcement Learning Framework with Riemannian Motion Policies. Robotics 2024, 13, 86. https://doi.org/10.3390/robotics13060086

Wang Y, Sagawa R, Yoshiyasu Y. Learning Advanced Locomotion for Quadrupedal Robots: A Distributed Multi-Agent Reinforcement Learning Framework with Riemannian Motion Policies. Robotics. 2024; 13(6):86. https://doi.org/10.3390/robotics13060086

Chicago/Turabian StyleWang, Yuliu, Ryusuke Sagawa, and Yusuke Yoshiyasu. 2024. "Learning Advanced Locomotion for Quadrupedal Robots: A Distributed Multi-Agent Reinforcement Learning Framework with Riemannian Motion Policies" Robotics 13, no. 6: 86. https://doi.org/10.3390/robotics13060086

APA StyleWang, Y., Sagawa, R., & Yoshiyasu, Y. (2024). Learning Advanced Locomotion for Quadrupedal Robots: A Distributed Multi-Agent Reinforcement Learning Framework with Riemannian Motion Policies. Robotics, 13(6), 86. https://doi.org/10.3390/robotics13060086