An Advisor-Based Architecture for a Sample-Efficient Training of Autonomous Navigation Agents with Reinforcement Learning

Abstract

1. Introduction

- Investigate the use of prior knowledge as an advisor to reduce the sample complexity of the DDPG and TD3 algorithms for autonomous navigation tasks in the continuous domain.

- Devise an appropriate procedure to integrate the advisor role that makes the training of an autonomous navigation agent safer and more efficient.

- Implement the proposed method with a physical experimental setup and analyze the performance on real navigation tasks.

2. Preliminaries

3. Related Work

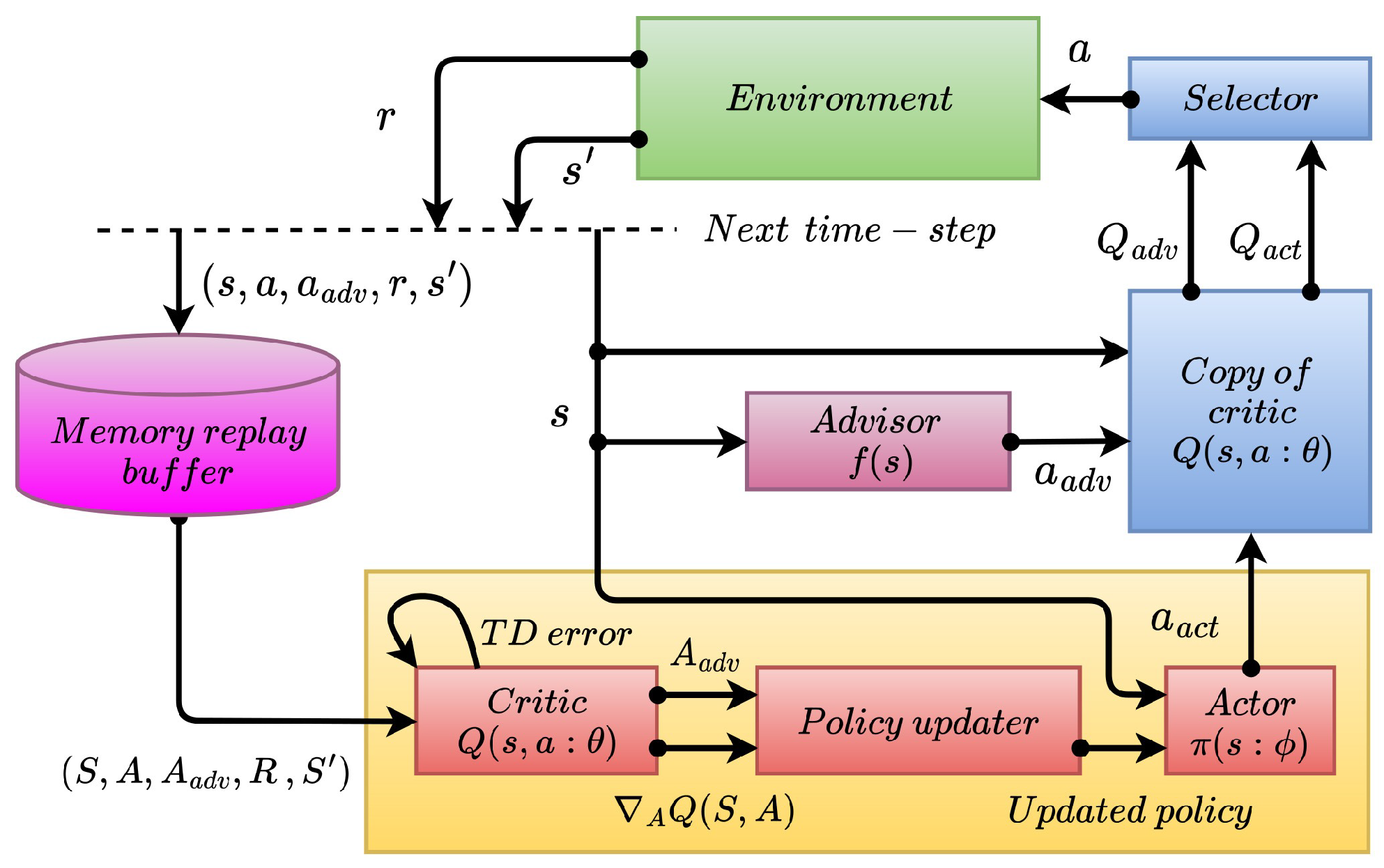

4. Incorporating an Advisor

4.1. Actor–Critic Model-Based Architecture

4.2. Data Collection Process

Algorithm 1 Data collection with an advisor
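A minimal sketch of advisor-assisted data collection, assuming the agent takes the advisor's action with a probability that decays over episodes (Appendix B lists a starting advisor selection probability and a selection probability rate b). The exponential schedule, the default values, and the names `advisor_probability` and `select_action` are illustrative assumptions, not the schedule used in Algorithm 1. Exploration noise and replay-buffer storage are omitted.

```python
import math
import random


def advisor_probability(episode, p_start, decay_rate):
    """Hypothetical schedule: exponential decay of the advisor selection probability."""
    return p_start * math.exp(-decay_rate * episode)


def select_action(state, actor, advisor, episode,
                  p_start=0.9, decay_rate=0.01, rng=random):
    """Return (action, source), where source is 'advisor' or 'actor'.

    p_start and decay_rate are placeholder values chosen for illustration.
    """
    p = advisor_probability(episode, p_start, decay_rate)
    if rng.random() < p:
        # Early in training the advisor's prior knowledge drives most actions.
        return advisor(state), "advisor"
    # As p decays, the learned actor takes over.
    return actor(state), "actor"
```

With this kind of schedule the advisor dominates the early episodes, where a randomly initialised actor is most likely to collide, and its share of the collected transitions shrinks as training proceeds.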

4.3. Policy Updating Process

Algorithm 2 Policy updating with an advisor

5. Experiment Setup

5.1. Simulation Setup

5.2. Physical Setup

5.3. Navigation Agent

5.4. Reward Function

5.5. Advisor

6. Experiments and Results

- Checking the advisor’s influence on training efficiency when the advisor is used for data collection, and investigating the best way of employing the advisor in the data collection process.

- Checking the performance of the advisor-based architecture and comparing it against other state-of-the-art methods.

- Checking the applicability of the proposed advisor-based architecture to navigation tasks performed on real-world platforms while ensuring safe and efficient training.

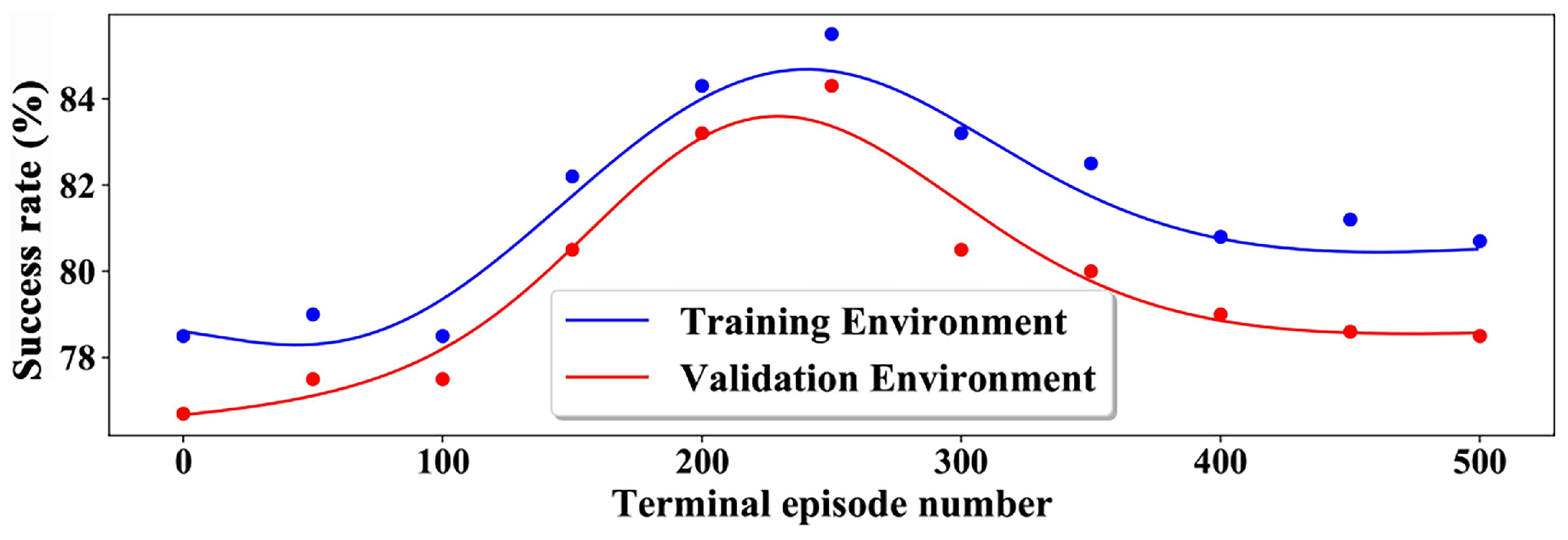

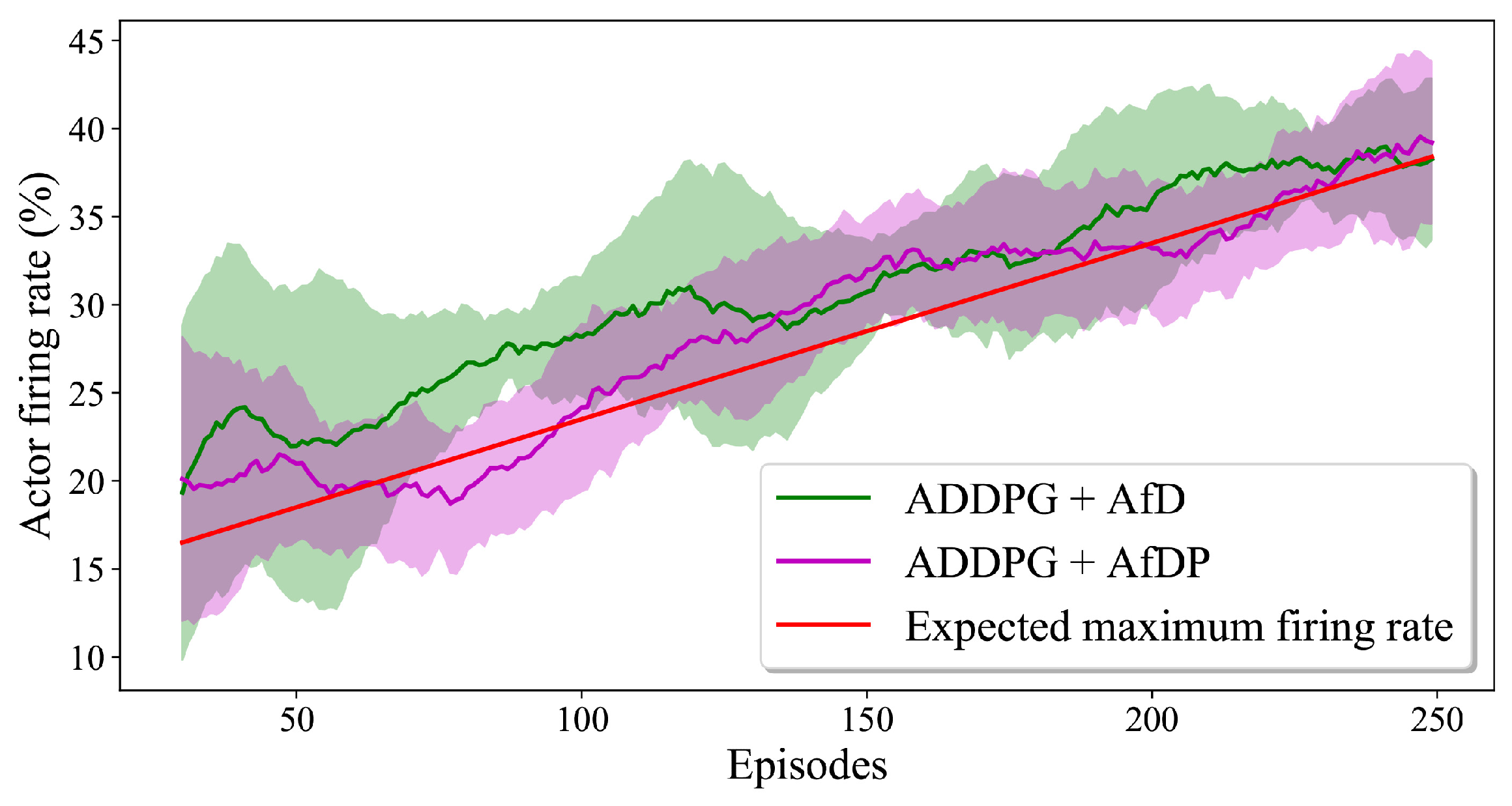

6.1. Advisor’s Influence on Data Collection Process

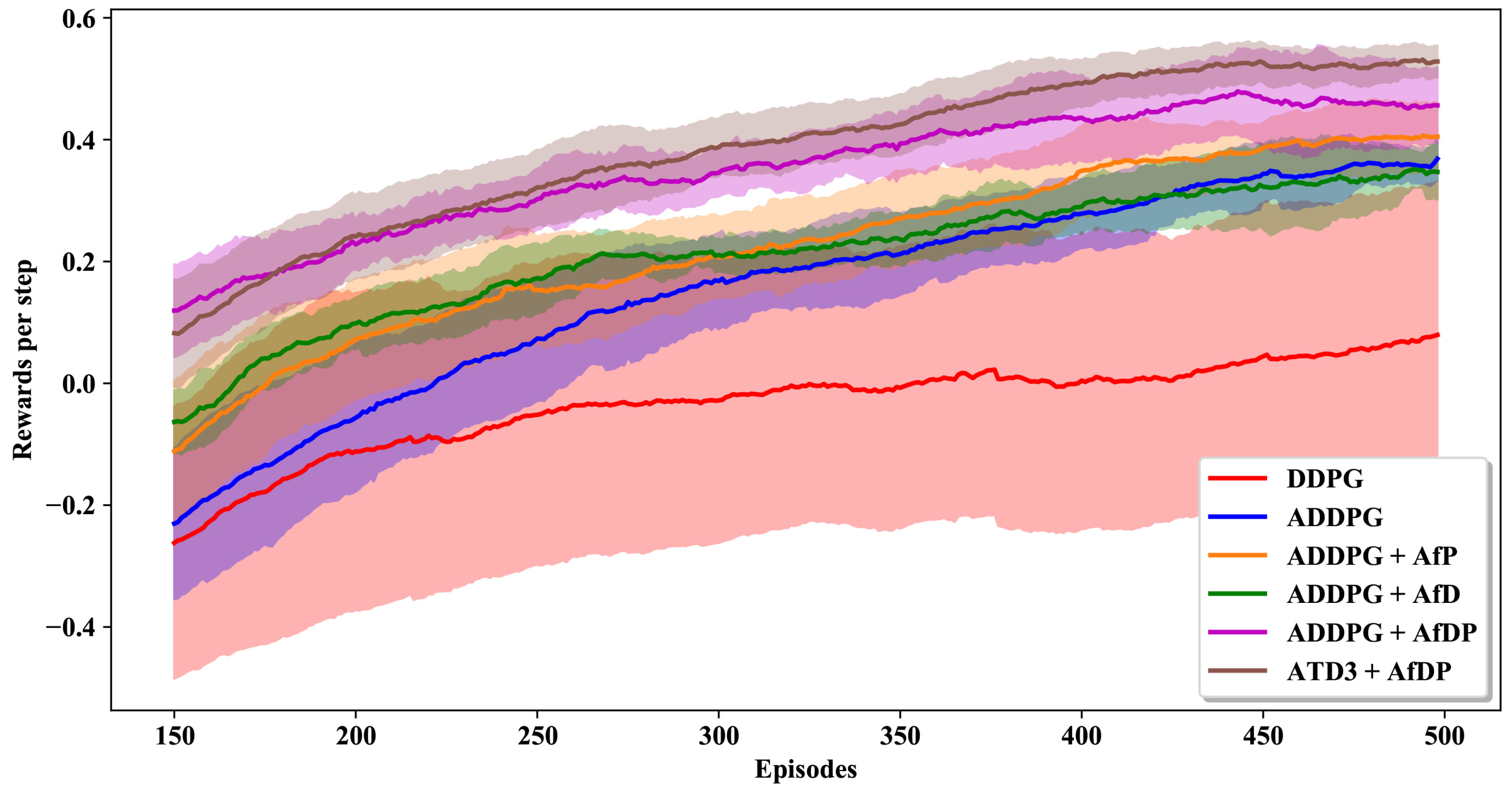

6.2. Performance of Advisor-Based Architecture

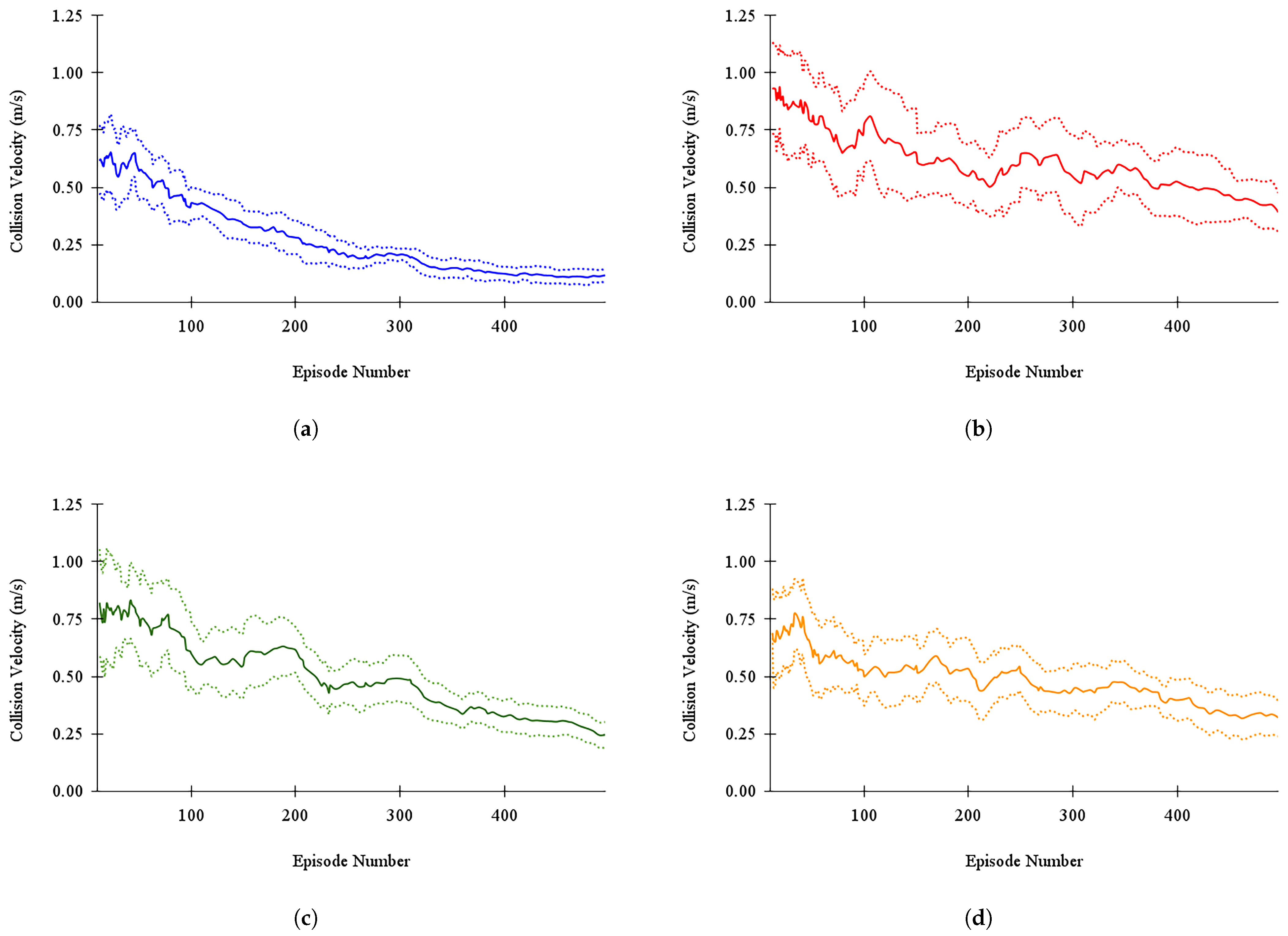

6.3. Performance with the Physical Platform

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| RL | Reinforcement learning |
| DQN | Deep Q-network |
| DDPG | Deep deterministic policy gradient |
| TD3 | Twin-delayed DDPG |
| SLAM | Simultaneous localisation and mapping |
| IntRL | Interactive reinforcement learning |
| IL | Imitation learning |
| BADGR | Berkeley autonomous driving ground robot |
| V-REP | Virtual robot experimentation platform |
| API | Application programming interface |
| RGB | Red green blue |
| PC | Personal computer |
| GPU | Graphics processing unit |
| CPU | Central processing unit |
| RAM | Random access memory |
| FCL | Fully connected layers |
| ADDPG | Adapted deep deterministic policy gradient |
| ATD3 | Adapted twin-delayed DDPG |
| LfD | Learning from demonstration |
| MCP | Model predictive control |
| AfD | Advisor for data collection |
| AfP | Advisor for policy updating |
| AfDP | Advisor for both data collection and policy updating |

Appendix A. Implementation of the Advisor

| Parameter | Value |
|---|---|
| No detect distance | 0.6 m |
| Maximum detect distance | 0.2 m |
| Left Braitenberg array | |
| Right Braitenberg array | |
| Maximum linear velocity | 1.0 m/s |

Algorithm A1 Advisor for goal reaching with obstacle avoiding
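A minimal sketch of Braitenberg-style obstacle avoidance for a differential-drive robot, using the distances and maximum velocity from the parameter table above. It follows the common scheme in which each proximity reading is mapped to a detection level between 0 and 1 and each wheel speed is reduced by a weighted sum of those levels. The two weight arrays, the four-sensor layout (index 0 assumed to be the left-most sensor), and the function names are hypothetical, since the arrays used for the advisor are not listed here. Goal reaching, which Algorithm A1 also covers, is not modelled.

```python
NO_DETECT_DIST = 0.6    # m, readings at or beyond this are ignored (parameter table)
MAX_DETECT_DIST = 0.2   # m, readings at or below this give full detection (parameter table)
V_MAX = 1.0             # m/s, maximum linear velocity (parameter table)

# Hypothetical weights for four front sensors, ordered left to right.
BRAITENBERG_LEFT = [-0.2, -0.4, -0.6, -0.8]
BRAITENBERG_RIGHT = [-0.8, -0.6, -0.4, -0.2]


def detection_level(distance):
    """Map a proximity reading (m, or None for no echo) to a level in [0, 1]."""
    if distance is None or distance >= NO_DETECT_DIST:
        return 0.0
    clipped = max(distance, MAX_DETECT_DIST)
    return 1.0 - (clipped - MAX_DETECT_DIST) / (NO_DETECT_DIST - MAX_DETECT_DIST)


def wheel_velocities(distances):
    """Return (v_left, v_right) after applying the Braitenberg weights."""
    v_left = V_MAX
    v_right = V_MAX
    for w_left, w_right, dist in zip(BRAITENBERG_LEFT, BRAITENBERG_RIGHT, distances):
        level = detection_level(dist)
        v_left += w_left * level
        v_right += w_right * level
    return v_left, v_right
```

For example, `wheel_velocities([0.2, None, None, None])` slows the right wheel more than the left, which steers the robot away from an obstacle on its left under the assumed sensor ordering.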

Appendix B. Parameter Values Used in the Implementation of the Advisor-Based Navigation Agent

| Hyper-Parameter | Simulation Setup | Physical Setup |
|---|---|---|
| Memory replay buffer size | 100,000 | 50,000 |
| Batch size (n) | 1000 | 500 |
| Discount rate | | |
| Soft updating rate | | |
| Policy updating rate | | |
| Actor learning rate | | |
| Critic learning rate | | |
| Starting advisor selection probability | | |
| Selection probability rate (b) | | |
| Maximum number of steps per episode | 300 | 150 |
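For context on the soft updating rate, DDPG and TD3 keep slowly moving target networks whose parameters track the online networks through Polyak averaging. The sketch below shows that update on plain lists of floats. The value of `tau` in the usage note is a placeholder, since the rate used in the experiments is not given in the table above, and `soft_update` is an illustrative name.

```python
def soft_update(target_params, online_params, tau):
    """Polyak averaging: target <- tau * online + (1 - tau) * target."""
    return [
        (1.0 - tau) * target + tau * online
        for target, online in zip(target_params, online_params)
    ]
```

Calling `soft_update(target, online, tau=0.01)` after every gradient step moves each target parameter one percent of the way toward its online counterpart. A smaller rate gives more stable critic targets at the cost of slower tracking.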

| Parameter | Value |
|---|---|
| Threshold of target distance for goal reaching | m |
| Threshold of the minimum proximity reading for collision | m |

| Hyper-Parameter | Simulation Setup | Physical Setup |
|---|---|---|
| 0 | 0 | |
| c | | |
| d | 4 | 3 |

Appendix C. Adapting the TD3 Algorithm to Incorporate an Advisor

Algorithm A2 Data collection with an advisor

Algorithm A3 Policy updating with an advisor

Appendix D. Actor’s Expected Maximum Firing Rate

Appendix E. Paths Followed by the Trained Agents with and without the Advisor


| Environment | DDPG | ADDPG | ADDPG + AfD | ADDPG + AfP | ADDPG + AfDP | ATD3 + AfDP | LfD | MCP + Model-free |
|---|---|---|---|---|---|---|---|---|
| Training Environment | 72.2% | 78.3% | 81.3% | 84.5% | 88.1% | 90.8% | 80.2% | 82.3% |
| Validation Environment | 71.3% | 77.1% | 79.3% | 83.0% | 85.2% | 89.4% | 80.7% | 80.1% |
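To make the comparison in the table above easier to read, the sketch below computes each agent's gain over the plain DDPG baseline in percentage points. The numbers are copied from the table. The dictionary layout and the function name `gain_over_baseline` are illustrative, and the calculation is independent of which metric the percentages report.

```python
# Percentages copied from the table above.
RESULTS = {
    "training": {
        "DDPG": 72.2, "ADDPG": 78.3, "ADDPG + AfD": 81.3, "ADDPG + AfP": 84.5,
        "ADDPG + AfDP": 88.1, "ATD3 + AfDP": 90.8, "LfD": 80.2,
        "MCP + Model-free": 82.3,
    },
    "validation": {
        "DDPG": 71.3, "ADDPG": 77.1, "ADDPG + AfD": 79.3, "ADDPG + AfP": 83.0,
        "ADDPG + AfDP": 85.2, "ATD3 + AfDP": 89.4, "LfD": 80.7,
        "MCP + Model-free": 80.1,
    },
}


def gain_over_baseline(environment, agent, baseline="DDPG"):
    """Difference in percentage points between an agent and the baseline."""
    scores = RESULTS[environment]
    return round(scores[agent] - scores[baseline], 1)


def best_agent(environment):
    """Name of the agent with the highest percentage in the given environment."""
    scores = RESULTS[environment]
    return max(scores, key=scores.get)
```

Under this reading, ATD3 + AfDP has the highest value in both environments, and the ordering DDPG < ADDPG < ADDPG + AfD < ADDPG + AfP < ADDPG + AfDP holds in both rows.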

| Agent Type | Total Number of Episodes | | |
|---|---|---|---|
| DDPG | | | |
| ADDPG | | | |
| ADDPG + AfDP | | | |
| ATD3 + AfDP | | | |
| LfD | | | |
| MCP + Model-free | | | |

| Agent Type | Collision Velocity (m/s) |
|---|---|
| Advisor | |
| DDPG | |
| ADDPG | |
| ADDPG + AfDP | |
| ATD3 + AfDP | ± |
| LfD | |
| MCP + Model-free | |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wijesinghe, R.D.; Tissera, D.; Vithanage, M.K.; Xavier, A.; Fernando, S.; Samarawickrama, J. An Advisor-Based Architecture for a Sample-Efficient Training of Autonomous Navigation Agents with Reinforcement Learning. Robotics 2023, 12, 133. https://doi.org/10.3390/robotics12050133
