Abstract

For older adults, regular exercise can provide both physical and mental benefits, increase their independence, and reduce the risk of diseases associated with aging. However, only a small portion of older adults engage in physical activity regularly. Therefore, it is important to promote exercise among older adults to help maintain their overall health. In this paper, we present the first exploratory long-term human–robot interaction (HRI) study conducted at a local long-term care facility to investigate the benefits of one-on-one and group exercise interactions between older adults and an autonomous socially assistive robot. To provide targeted facilitation, our robot uses a unique emotion model that adapts its assistive behaviors to users’ affect, tracks their progress towards exercise goals over repeated sessions using the Goal Attainment Scale (GAS), and monitors heart rate to prevent overexertion. The results show that users had positive valence and high engagement towards the robot and maintained their exercise performance throughout the study. Questionnaire results showed high robot acceptance for both types of interactions. However, users in the one-on-one sessions perceived the robot as more sociable and intelligent, and had a more positive perception of the robot’s appearance and movements.

1. Introduction

For older adults, regular exercise can reduce the risk of depression, cardiovascular disease, type 2 diabetes, obesity, and osteoporosis [1]. Older adults who exercise regularly are also more likely to perform instrumental activities of daily living, such as meal preparation and shopping, with greater independence [2]. Furthermore, they are less likely to fall and be injured [3]. Even when exercise is taken up later in life, older adults can still benefit from it through a decreased risk of cardiovascular disease mortality [4]. Despite this overwhelming evidence, only 37% of Canadians aged over 65, for example, perform the recommended 150 min of weekly physical activity, including aerobic (e.g., walking), flexibility (e.g., stretching), and muscle-strengthening (e.g., lifting weights) exercises [5]. For the goal of exercise promotion, a handful of social robots have shown potential for use with older adults [6,7,8,9]. These robots can extend the capabilities of caregivers by providing exercise assistance when needed, autonomously tracking exercise progress for multiple individuals over time, and facilitating multiple parallel exercise sessions.

In general, human–robot interaction (HRI) is conducted in either (1) group or (2) one-on-one settings. The opportunity to meet new people and other social aspects of group sessions have been shown to be major motivators for older adults to participate in exercise [10]. The resulting social interaction among participants, which robot facilitation can further increase [11], can stimulate the prefrontal cortex, a region traditionally associated with executive functions such as working memory and attention [12]. Participating in group exercise sessions may therefore benefit cognitive function as well as physical health. One-on-one exercise sessions, on the other hand, can also increase participation, as they allow the experience to be tailored directly to each participant through individualized feedback [13]. Individualized experiences also increase participants’ attentiveness and retention of information, as participants perceive the information to be more relevant to them, resulting in improved physical performance [14].

In human–human interactions, affect plays a significant role, as it guides people’s thoughts and behaviors and, in turn, influences how they make decisions and communicate [15]. To effectively interact with people and provide assistance, robots need to recognize and interpret human affect and respond appropriately with their own emotional behaviors. This promotes natural interactions in human-centered environments, as the robot follows accepted human behaviors and rules during HRI, which leads to acceptance of the robot and helps it build long-term relationships with its users [16].

During exercise, people must perform physical movements, which can perturb their facial expressions due to increased effort and muscle fatigue [17]; as a result, common physical affective modes such as body movements and facial expressions are not always available for the robot to detect. Furthermore, these physical modes are difficult to use with older adults, who experience age-related functional decline in facial expression generation [18] and in body movements and postures [19]. On the other hand, electroencephalography (EEG) signals, which are largely involuntary and are activated by the central nervous system (CNS) and the autonomic nervous system (ANS), can be used to detect both the affective and cognitive states of older adults [20]. EEG has also been used successfully to detect user affect during physical activities (e.g., cycling) [21].

In this paper, we present the first long-term robot exercise facilitation HRI study with older adults investigating the benefits of one-on-one and group sessions with an autonomous socially assistive robot. Our autonomous robot uses a unique emotion model that adapts its assistive behavior to user affect during exercise. The robot can also track users’ progress towards exercise goals using the Goal Attainment Scale (GAS) while monitoring their heart rate to prevent overexertion. Herein, we therefore investigate and compare one-on-one and group intervention types to determine their impact on older adults’ experiences with the robot and on their overall exercise progress. A long-term study (i.e., 2 months) was conducted to identify the challenges of achieving successful exercise HRI while directly observing the robot as it adapts to user behaviors over time. The aim was to investigate whether motivation and engagement in the activity improved over repeated exercise sessions and in different interaction settings with an adaptive socially assistive robot.

2. Related Works

Herein, we present existing socially assistive robot exercise facilitation studies that have been conducted in (1) one-on-one sessions [7,9,22,23], (2) group sessions [6,24], and/or (3) a combination of the two scenarios [8]. Furthermore, we discuss HRI studies which have compared group vs. one-on-one interactions in various settings and for various activities.

2.1. Socially Assistive Robots for Exercise Facilitation

2.1.1. Socially Assistive Robots for Exercise Facilitation in One-on-One Settings

In [7], the robot Bandit was used to facilitate upper-body exercise with older adults in a one-on-one setting with both a physical and a virtual robot during four sessions over two weeks. The virtual robot was a computer simulation of the Bandit robot shown on a 27-inch flat-panel display. The study evaluated the users’ acceptance and perception of the robot and investigated the role of embodiment. Compared with participants in the virtual robot sessions, participants in the physical robot sessions rated the robot as more valuable/useful, intelligent, and helpful, and as having more social presence and being more of a companion. No significant differences were observed in participant performance between the two study groups.

In [9], a NAO robot learned exercises performed by human demonstrators in order to perform them in one-on-one exercise sessions with older adults. The study, completed over a 5-week period, found that participants had improved exercise performance after three sessions for most of the exercises. They gave high ratings for the enjoyment of the exercise sessions and accepted the robot as a fitness coach. However, their acceptance of the robot as a friend decreased slightly over the sessions, as they reported that they recognized the robot as a machine and wanted to consider only humans as friends. They also showed confused facial expressions during more complicated exercises (e.g., exercises with a sequence of gestures); however, these expressions occurred less often by the last session.

In [22], the NAO robot was used to facilitate the outpatient phase of a cardiac rehabilitation program over 36 sessions in an 18-week period. Participants ranged in age from 43 to 80. Each participant exercised on a treadmill while the robot (1) monitored their heart rate using an electrocardiogram and alerted medical staff if it exceeded an upper threshold, and (2) monitored their cervical posture using a camera and gave the participant verbal feedback if a straight posture was not maintained. The robot also provided participants with periodic pre-programmed motivational support through speech, gestures, and gaze tracking. The robot condition was compared to a baseline condition without the robot. The results showed that participants who used the robot facilitator had a lower program dropout rate and achieved significantly better recovery of their cardiovascular function than those who did not use the robot.

In [23], a NAO robot was used for motor training of children through personalized upper-limb exercise games. Healthcare professionals assessed participants’ motor skills at regular intervals throughout the study using both the Manual Ability Classification System (MACS), which assesses how children handle objects in daily activities, and the Mallet scale, which assesses the overall mobility of the upper limb. Questionnaire ratings were positive over time, indicating that participants considered the robot very useful, easy to use, and operating correctly. No participant’s MACS results changed over the three sessions; however, participants’ Mallet scores improved slightly over time.

2.1.2. Socially Assistive Robots for Exercise Facilitation in Group Settings

In [6], a Pepper robot was deployed in an elder-care facility to facilitate strength-building and flexibility exercises and to play cognitive games. Six participants were invited to group sessions with Pepper twice a week over a 10-week study. Interviews with the participants revealed that many older adults were initially fearful of the robot but became comfortable around it by the end of the study. In [24], a NAO robot was used to facilitate seated arm and leg exercises with older adults in a group session with 34 participants. Feedback from both the staff and the older adults showed that the NAO robot was positively received as an exercise trainer.

2.1.3. Socially Assistive Robots for Exercise Facilitation in Both One-on-One and Group Settings

In [8], a remotely controlled robot, Vizzy, was deployed as an exercise coach for older adults in both one-on-one and group sessions. The robot has an anthropomorphic upper torso and a head with eye movements that mimic gaze. Vizzy led participants from a waiting room to an exercise location, instructed them to follow a separate interface that showed the exercises, and then provided corrective instructions when necessary; the robot did not demonstrate the exercises itself. An operator controlled the cameras in Vizzy’s eyes to direct the robot’s gaze when it spoke. The results demonstrated that the participants perceived the robot as competent and enjoyable and had high trust in it. They found that Vizzy looked artificial and machine-like, but they thought its gaze was responsive and liked the robot.

The aforementioned robots showed the potential of using a robot facilitator for upper-limb strength and flexibility exercises [6,7,8,9,23,24] and for cardiac rehabilitation [22]. The studies focused on users’ perceptions and experiences through questionnaires and interviews [6,8,9,23,24], with [7,9] also tracking body movements to determine whether users performed specific exercises correctly. In general, the results showed acceptance of robots as fitness coaches, as well as a preference for physical robots over virtual ones [7]. Only [8] considered both group and one-on-one sessions; however, the two interaction types were not directly compared to investigate any health and HRI benefits between them. Additionally, the aforementioned studies have either investigated robot perception only over short durations (one to three interactions) [7,8,9,24], lacked quantitative results over a long-term duration (10 weeks) [6], or did not focus directly on the older adult population in their long-term studies [22]. Furthermore, the robots did not use any other user feedback to engage older users over the long term. In particular, adapting robot emotional behaviors to human affect has been shown to promote engagement and encouragement during HRI. Herein, we investigate for the first time, in a long-term robot exercise facilitation study with older adults, the benefits of one-on-one versus group sessions with an intelligent and autonomous socially assistive robot that adapts its behaviors to users.

2.2. General HRI Studies Comparing Group vs. One-on-One Interactions

To date, only a handful of studies have investigated and compared group and one-on-one interactions with social robots. For example, in [25], the mobile robot Robovie was deployed in a crowded shopping mall to provide directions to visitors in group or one-on-one settings. Results showed that groups in general, especially entitative groups of people (family, friends, female groups), interacted longer with Robovie and were more social and positive towards it than individuals. In addition, participants who would not typically interact with the robot based on their individual characteristics were more likely to interact with Robovie if other members of their group did.

In [26], intergroup competition was investigated during HRI. Participants played dilemma games, in which they had the chance to exhibit competitive or cooperative behaviors, in four group settings with varying numbers of humans and robots. Results indicated that groups of people were more competitive towards the robots than individuals were. Furthermore, participants were more competitive when interacting with an equal number of robots (e.g., three humans with three robots, or one human with one robot).

In [27], two MyKeepon baby-chick-like robots were used in an interactive storytelling scenario with children in both one-on-one and group settings. The results showed that individual participants had a better understanding of the plot and semantic details of the story, possibly because children in the one-on-one setting were more attentive without distractions from peers. However, there was no difference between individuals and groups in recalling the emotional content of the story.

These limited studies show differences in user behavior and overall experience between group and one-on-one interactions. In some scenarios, interactions were more positive in group settings than in individual settings, as peers were able to motivate each other during certain tasks [25,26], a phenomenon also reported in non-robot exercise settings [10]. Individual interactions, however, were less distracting and allowed individuals to focus on the task at hand [27]. As these robot studies focused neither on older adults nor on exercise, it is important to explore the specific needs and experiences of this particular user group in assistive HRI, which further motivates our HRI study.

3. Social Robot Exercise Facilitator

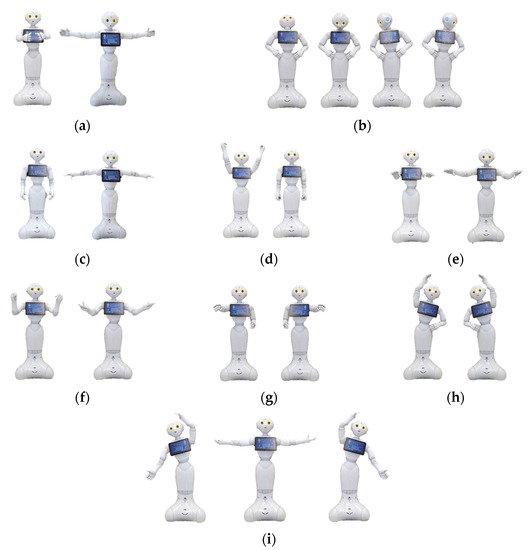

In our long-term HRI study, we used the Pepper robot to autonomously facilitate upper-body exercises in both group and one-on-one sessions. The robot can facilitate nine different upper-limb exercises, Figure 1: (1) open arm stretches, (2) neck exercises, (3) arm raises, (4) downward punches, (5) breaststrokes, (6) open/close hands, (7) forward punches, (8) lateral trunk stretches (LTS), and (9) two-arm LTS. These exercises were designed by a physiotherapist at our partner long-term care (LTC) home, the Yee Hong Centre for Geriatric Care, and consist of strength-building and flexibility exercises [28]. Their benefits include improved stamina, muscle strength, and functional capacity; maintenance of healthy bones, muscles, and joints; and a reduced risk of falls and bone fractures [29].

Figure 1.

The nine upper-body exercises designed for Pepper: (a) open arm stretches; (b) neck exercises; (c) arm raises; (d) downward punches; (e) breaststrokes; (f) open/close hands; (g) forward punches; (h) lateral trunk stretches; and (i) two-arm lateral trunk stretches.

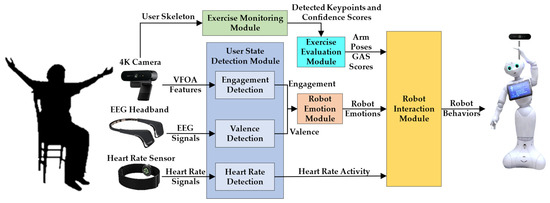

Our proposed robot architecture for exercise facilitation, Figure 2, comprises five modules: (1) Exercise Monitoring, (2) Exercise Evaluation, (3) User State Detection, (4) Robot Emotion, and (5) Robot Interaction. The Exercise Monitoring Module tracks the user’s skeleton using a Logitech BRIO webcam (4K Ultra HD video with HDR) to estimate the user’s body poses during exercise. The detected poses are, in turn, input into the Exercise Evaluation Module, which uses the Goal Attainment Scale (GAS) [30] to determine and monitor the user’s achievement of exercise goals and thus their performance over time. The User State Detection Module determines user states so that the robot can provide feedback while the user exercises. It comprises three submodules: (1) Engagement Detection, which detects user engagement through visual focus of attention (VFOA) from the 4K camera; (2) Valence Detection, which determines user valence from an EEG headband sensor; and (3) Heart Rate Detection, which monitors the user’s heart rate from a photoplethysmography (PPG) sensor embedded in a wristband. The detected user valence and engagement are then input into the Robot Emotion Module, which determines the robot’s emotions using an n-th order Markov Model. Lastly, the Robot Interaction Module determines the robot’s exercise-specific behaviors based on the robot’s emotion, the user’s detected exercise poses, and their heart rate, and displays these behaviors using a combination of nonverbal communication (eye color and body gestures), vocal intonation, and speech. The details of each module are discussed below and in Appendix A.

Figure 2.

Robot exercise facilitation architecture.

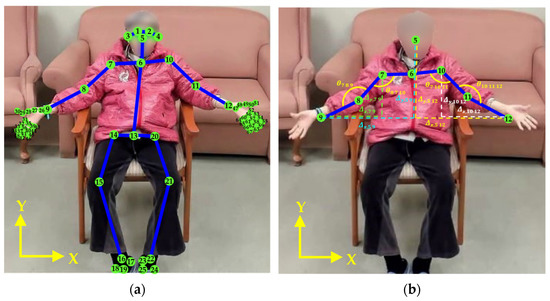

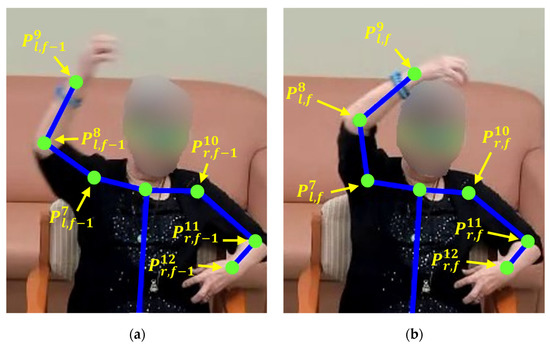

3.1. Exercise Monitoring Module

The Exercise Monitoring Module detects whether the user performs the requested exercise poses using the 4K camera placed behind the robot. It tracks the spatial positions of keypoints on the user’s body detected by the OpenPose model [31], including: (1) five facial keypoints, (2) 20 skeleton keypoints, and (3) 42 hand keypoints. Hand keypoint detection is enabled only when the user is performing the open/close hands exercise. The OpenPose model also provides a confidence score for each detected keypoint, which can be used to represent that keypoint’s visibility [31]. OpenPose is a convolutional neural network model trained on the MPII Human Pose dataset, the COCO dataset, and images from the New Zealand Sign Language Exercises [31,32]. Once the keypoints are acquired, the user’s pose is classified using a Random Forest classifier, which achieves an average pose classification accuracy of 97.1% over all exercises considered. Details of the keypoints used, the corresponding features extracted from the keypoints for each exercise, and pose classification are presented in Appendix A.1.
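As an illustration of how confidence scores can gate keypoint features before pose classification, the sketch below computes joint angles from OpenPose-style (x, y, confidence) triples. The specific features (shoulder and elbow angles) and the confidence cutoff `MIN_CONFIDENCE` are hypothetical choices for illustration; the features actually used per exercise are defined in Appendix A.1.

```python
import math
from typing import List, Optional, Tuple

# One OpenPose keypoint as (x, y, confidence). OpenPose reports (0, 0, 0)
# for a keypoint it could not detect.
Keypoint = Tuple[float, float, float]

MIN_CONFIDENCE = 0.1  # hypothetical visibility cutoff, not taken from the paper


def joint_angle(a: Keypoint, b: Keypoint, c: Keypoint,
                min_conf: float = MIN_CONFIDENCE) -> Optional[float]:
    """Angle in degrees at keypoint b between segments b->a and b->c.

    Returns None if any keypoint is below the confidence cutoff or two
    keypoints coincide, so the caller can treat the feature as missing.
    """
    if min(a[2], b[2], c[2]) < min_conf:
        return None
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0.0 or n2 == 0.0:
        return None
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # clamp rounding error before acos
    return math.degrees(math.acos(cos_theta))


def arm_features(hip: Keypoint, shoulder: Keypoint, elbow: Keypoint,
                 wrist: Keypoint) -> List[Optional[float]]:
    """Hypothetical per-arm features: shoulder (hip-shoulder-elbow) and elbow angles."""
    return [joint_angle(hip, shoulder, elbow), joint_angle(shoulder, elbow, wrist)]
```

Feature vectors like these could then be passed to an off-the-shelf Random Forest implementation (for example, scikit-learn’s `RandomForestClassifier`), with missing features imputed or handled explicitly.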

OpenPose can detect up to 19 people at once [31]. However, given the field of view of the Logitech BRIO 4K camera and the requirement that participants in group exercise sessions sit in a semi-circle around the robot without occluding one another, at most 10 participants can be monitored in a single exercise session.

3.2. Exercise Evaluation Module

In order to evaluate exercise progress over time, the Goal Attainment Scale (GAS) is used. GAS is a measure used in occupational therapy to quantify and assess a person’s progress towards goal achievement [33]. It is a well-known assessment whose measures are highly sensitive to change over time [34,35]. The concurrent validity of GAS for older adults was assessed in [36] through its correlation with the Barthel Index (r = 0.86) and the global clinical outcome rating (r = 0.82). These repeatable measures not only provide insight into individual exercise progress but can also be scaled to compare change within and between groups of older adults with possibly unique goals [37]. GAS has been used in robot-assisted therapy sessions to evaluate user performance in social skills improvement [38] and motor skills development [39]. An advantage of GAS is that goals can be customized to the specific needs of an individual or a group; GAS then converts these goals into quantitative results, making it easy to evaluate a person’s progress towards them [33].

In this work, GAS is used to evaluate a person’s progress in exercise performance. Each goal is quantified by five GAS scores ranging from −2 to +2, based on the number of repetitions the user completes for each exercise [33]: −2, the user did not perform all of the repetitions; −1, all repetitions were performed, but only with partial pose completion; 0, complete poses were performed in less than half of the repetitions; +1, complete poses were performed in more than half of the repetitions; and +2, all repetitions were performed correctly. The number of repetitions for each exercise is 8 for the first week and 12 for subsequent weeks, based on the recommendation of the U.S. National Institute on Aging (NIA) [40]. The details of the GAS score criteria for each exercise are summarized in Table 1.
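The scoring rules above can be sketched as a small function over repetition counts. This is one reading of the criteria, not the authors’ implementation: the boundary case of complete poses in exactly half of the repetitions is not specified in the text and is assumed here to score 0, and "partial pose completion" for −1 is assumed to mean no complete poses at all.

```python
def gas_score(total_reps: int, reps_attempted: int, reps_complete: int) -> int:
    """Map one exercise's repetition counts to a GAS score in {-2, ..., +2}.

    total_reps:     repetitions requested (8 in week 1, 12 afterwards)
    reps_attempted: repetitions performed at all (partial or complete pose)
    reps_complete:  repetitions in which the full pose was reached
    """
    if total_reps <= 0 or not (0 <= reps_complete <= reps_attempted <= total_reps):
        raise ValueError("inconsistent repetition counts")
    if reps_attempted < total_reps:
        return -2  # not all repetitions were performed
    if reps_complete == total_reps:
        return 2   # every repetition was performed correctly
    if reps_complete == 0:
        return -1  # all repetitions performed, but only with partial poses
    if 2 * reps_complete > total_reps:
        return 1   # complete poses in more than half of the repetitions
    return 0       # complete poses in half or fewer (assumed boundary) of the repetitions
```

For example, a user asked for 12 repetitions who performs all 12 but reaches the full pose in 7 of them would receive `gas_score(12, 12, 7) == 1`.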

Table 1.

GAS score for performing exercises.

The robot computes one GAS score for each exercise i. The score is estimated by the Exercise Evaluation Module from the user’s monitored exercise poses; the module’s performance in estimating the GAS score for each exercise is detailed in Appendix A.2.

After the scores are determined for each exercise, a GAS T-score, T, which is a single value that quantifies the overall performance of a user during an exercise session based on all GAS scores combined, can be computed for each user at the end of each exercise session [33]:

$$T = 50 + \frac{10\sum_{i} w_i x_i}{\sqrt{(1-\rho)\sum_{i} w_i^{2} + \rho\left(\sum_{i} w_i\right)^{2}}}$$

where x_i is the GAS score of exercise i, w_i is the corresponding weight for each score, and ρ is the assumed correlation between goal scores, conventionally set to 0.3. In our work, an equal weight (i.e., w_i = 1) is selected for each exercise score, as we considered each exercise equally important. T-scores range from 30 to 70, with 30 indicating that the user did not perform any exercises at all and 70 indicating complete poses for all repetitions.

3.3. User State Detection Module

The User State Detection Module determines: (1) user valence, (2) engagement, and (3) heart rate. The quality of users’ experience with social robots during HRI can be determined from their positive or negative affect (i.e., valence) and from the robot’s ability to engage them in the activity (i.e., engagement). Furthermore, to ensure that users do not overexert themselves, we monitor their heart rate to verify that it does not exceed the upper limit their cardiovascular system can handle during physical activity [41].

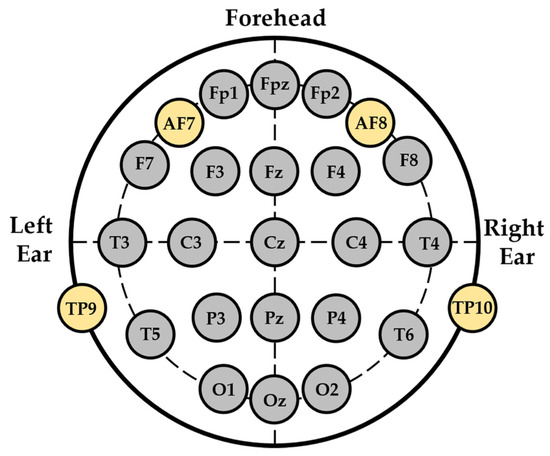

3.3.1. Valence

User valence refers to the user’s level of pleasantness during the interaction, which can indicate whether the interaction with the robot is helpful or rewarding [42]. The valence detection model is adapted from our previous work [43]. It uses binary classification to determine whether the user’s valence is positive or negative, consistent with the literature [44,45,46,47]. The EEG signals are measured using a four-channel dry-electrode EEG sensor, the InteraXon Muse 2016. Appendix A.3.1 describes the features extracted from the EEG signals and the three-hidden-layer Multilayer Perceptron Neural Network classifier used to classify valence. A classification accuracy of 77% was achieved for valence.
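To show the shape of the valence classifier, the sketch below runs inference through a multilayer perceptron with three hidden layers and a single output giving the probability of positive valence. The input size (16), hidden sizes (32, 16, 8), ReLU activations, sigmoid output, and 0.5 decision threshold are all assumptions, and the weights are random and untrained; the real features and trained network are those described in Appendix A.3.1.

```python
import math
import random
from typing import List, Sequence, Tuple

Layer = Tuple[List[List[float]], List[float]]  # (weights[out][in], biases[out])


def init_layer(n_in: int, n_out: int, rng: random.Random) -> Layer:
    bound = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-bound, bound) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def build_mlp(sizes: Sequence[int], seed: int = 0) -> List[Layer]:
    """Untrained MLP; sizes = [inputs, hidden1, hidden2, hidden3, 1]."""
    rng = random.Random(seed)
    sizes = list(sizes)
    return [init_layer(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]


def dense(x: Sequence[float], layer: Layer) -> List[float]:
    weights, biases = layer
    return [sum(xi * wi for xi, wi in zip(x, row)) + b for row, b in zip(weights, biases)]


def sigmoid(z: float) -> float:
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # numerically stable branch for negative z
    return e / (1.0 + e)


def predict_valence(features: Sequence[float], layers: List[Layer]) -> Tuple[str, float]:
    h = list(features)
    for layer in layers[:-1]:
        h = [max(0.0, v) for v in dense(h, layer)]  # ReLU hidden layers
    p = sigmoid(dense(h, layers[-1])[0])          # probability of positive valence
    return ("positive" if p >= 0.5 else "negative"), p
```

In practice, the weights would come from training on labeled EEG feature windows rather than from `build_mlp`.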

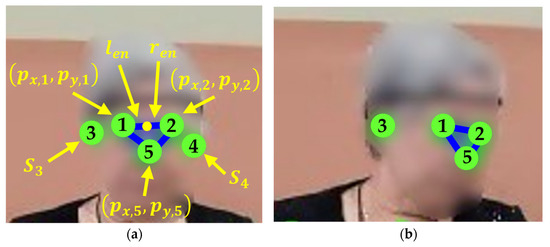

3.3.2. Engagement

For engagement, we use visual focus of attention (VFOA) to determine whether a user is attentive to the robot and the exercise activity. VFOA is a common measure of engagement in numerous HRI studies [48,49,50], including studies with older adults [51,52]. Based on the user’s VFOA, the robot classifies the user as either engaged or not engaged with the robot. Two VFOA features are used to classify engagement: (1) the orientation of the face; and (2) the visibility of the ears, measured through the confidence scores of the corresponding ear keypoints detected by the OpenPose model [31]. The orientation of the face is estimated from the spatial positions of the facial keypoints (i.e., eyes and nose) detected by OpenPose. Appendix A.3.2 explains the features and the k-NN classifier used for engagement detection. A classification accuracy of 93% was achieved for engagement.
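A minimal sketch of VFOA-based engagement classification with k-nearest neighbors is shown below. The face-orientation proxy (horizontal nose offset from the eye midpoint, normalized by eye distance) is a hypothetical stand-in for the feature defined in Appendix A.3.2, the choice k = 3 is assumed, and the training samples in the usage example are made up for illustration.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]  # OpenPose keypoint: (x, y, confidence)


def face_turn(left_eye: Point, right_eye: Point, nose: Point) -> float:
    """Hypothetical face-orientation proxy: horizontal nose offset from the eye
    midpoint divided by the eye distance (about 0 when facing the camera)."""
    mid_x = (left_eye[0] + right_eye[0]) / 2.0
    eye_dist = math.hypot(left_eye[0] - right_eye[0], left_eye[1] - right_eye[1])
    return (nose[0] - mid_x) / eye_dist if eye_dist > 0 else 0.0


def vfoa_features(left_eye: Point, right_eye: Point, nose: Point,
                  left_ear: Point, right_ear: Point) -> List[float]:
    # Ear visibility is taken directly from the OpenPose confidence scores.
    return [face_turn(left_eye, right_eye, nose), left_ear[2], right_ear[2]]


def knn_predict(train_x: Sequence[Sequence[float]], train_y: Sequence[str],
                query: Sequence[float], k: int = 3) -> str:
    """Majority label among the k training samples nearest to `query` (Euclidean)."""
    nearest = sorted(zip(train_x, train_y), key=lambda s: math.dist(s[0], query))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]
```

Usage with toy data: a frontal face with both ears moderately visible falls near the "engaged" samples, while a turned head with one ear hidden falls near the "not engaged" samples.

```python
X = [[0.0, 0.8, 0.8], [0.05, 0.7, 0.75], [-0.05, 0.75, 0.7],
     [0.6, 0.9, 0.0], [-0.6, 0.0, 0.9], [0.5, 0.85, 0.05]]
y = ["engaged"] * 3 + ["not engaged"] * 3
print(knn_predict(X, y, [0.02, 0.78, 0.76]))  # engaged
```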

3.3.3. Heart Rate

The maximum heart rate (MHR) of a person can be estimated by [53]:

The maximum target heart rate during anaerobic exercises (e.g., strength building and flexibility) is 85% of the MHR [53]. We use an optical heart rate sensor, the Polar OH1, to measure the user’s heart rate in bpm at 1 Hz throughout each exercise session. During exercise facilitation, the heart rate measurements are sent via Bluetooth directly from the sensor to the Heart Rate Detection submodule of the User State Detection Module in the Robot Exercise Facilitation Architecture. This submodule monitors the measurements to ensure they remain below the upper threshold (85% of the MHR) to prevent overexertion. If the threshold is exceeded, the exercise session is stopped and the robot asks the user to rest. The heart rate measurements are also saved to a file for post-exercise analysis.
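The overexertion check can be sketched as a scan over the 1 Hz heart rate stream against 85% of the MHR. Because the MHR equation from [53] is not reproduced here, the sketch assumes the widely used age-predicted estimate MHR = 220 − age; this is an assumption to be replaced by the formula in [53] if it differs.

```python
from typing import Iterable, Optional

HR_LIMIT_FRACTION = 0.85  # upper threshold: 85% of MHR, as stated in the text


def max_heart_rate(age_years: float) -> float:
    # ASSUMPTION: common age-predicted estimate (220 - age); substitute the
    # formula from [53] if it differs.
    return 220.0 - age_years


def first_overexertion(hr_bpm: Iterable[float], age_years: float) -> Optional[int]:
    """Index (seconds, for a 1 Hz stream) of the first sample above the limit, else None."""
    limit = HR_LIMIT_FRACTION * max_heart_rate(age_years)
    for t, bpm in enumerate(hr_bpm):
        if bpm > limit:
            return t  # the robot would stop the session and ask the user to rest here
    return None
```

For an 80-year-old user, the assumed MHR is 140 bpm and the limit is 119 bpm, so the stream `[95, 100, 118, 121, 110]` triggers a stop at sample 3.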

3.4. Robot Emotion Module

This module utilizes a robot emotion model that we have previously developed [54,55], which considers the history of the robot’s emotions and the user states (i.e., user valence and engagement) to determine the robot’s emotional behavior. This model has been adapted herein for our HRI study.

We use an nth order Markov Model with decay to represent the current robot emotion based on the previous emotional history of the robot [55]. An exponential decay function is used to incorporate the decreasing influence of past emotions as time passes.

The robot emotion state–human affect probability distribution matrix was trained with 30 adult participants (five of them older adults) prior to the HRI study to determine its transition probability values. The model is trained such that, given the robot’s emotional history and the user state, it chooses the emotion with the highest likelihood of engaging the user in the current exercise step. Initially, the probabilities of the robot’s emotional states are uniformly distributed so that each emotion has the same probability of being chosen; they are then updated during training for the exercise activity. Training showed that when the user has negative valence, the robot displays a sad emotion, which had the highest probability of user engagement for this state. We also observed that if the robot’s previous emotion history was sad and the user had negative valence or low engagement, the robot displays a worried emotion, which had the highest probability of user engagement. Furthermore, when the user has positive valence and/or high engagement, the robot displays positive emotions, such as happy and interested, as these emotions are the most likely to engage the user in these scenarios given the robot’s emotion history. Additional details of the Robot Emotion Module are summarized in Appendix A.4.
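One plausible way to combine an emotion history with exponential decay is sketched below: each past robot emotion contributes its transition row for the current user state, weighted by exp(−decay_rate · age), and the emotion with the highest total score is chosen. This is an illustrative formulation, not the authors’ exact model; the `decay_rate` value and the probabilities in the usage example are hypothetical (in the study they were learned from the 30 training participants).

```python
import math
from typing import Dict, Sequence, Tuple

EMOTIONS = ("happy", "interested", "worried", "sad")
UserState = Tuple[str, str]  # (valence, engagement), e.g. ("negative", "low")
# P(next emotion | past emotion, user state); hypothetical values in the example.
TransitionTable = Dict[Tuple[str, UserState], Dict[str, float]]


def choose_emotion(history: Sequence[str], state: UserState,
                   table: TransitionTable, decay_rate: float = 0.5) -> str:
    """Pick the emotion with the highest decayed score.

    history[-1] is the most recent robot emotion; older emotions are weighted
    by exp(-decay_rate * age), giving an n-th order model with n = len(history).
    With an empty history all scores tie and the first emotion is returned.
    """
    uniform = {e: 1.0 / len(EMOTIONS) for e in EMOTIONS}
    scores = {e: 0.0 for e in EMOTIONS}
    for age, past in enumerate(reversed(history)):
        weight = math.exp(-decay_rate * age)
        row = table.get((past, state), uniform)  # unseen context: uniform prior
        for e in EMOTIONS:
            scores[e] += weight * row.get(e, 0.0)
    return max(EMOTIONS, key=lambda e: scores[e])
```

With a table in which a sad robot facing negative valence and low engagement most often re-engages the user by appearing worried, the history `["happy", "sad"]` yields `"worried"`, mirroring the pattern reported above.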

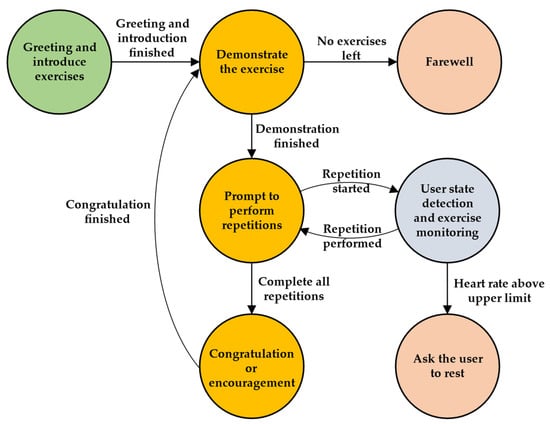

3.5. Robot Interaction Module

The Robot Interaction Module utilizes Finite State Machines (FSMs) to determine the robot behaviors for both types of interactions with the users: (1) one-on-one, and (2) group interaction, Figure 3.

Figure 3.

FSM of the robot interaction module.

During the exercise session, the user states (i.e., valence, engagement, and heart rate) are estimated to determine the robot’s emotions (represented by happy, interested, worried, and sad) via the Robot Emotion Module, and body poses are tracked by the Exercise Evaluation Module and the User State Detection Module to determine performance (via GAS) and engagement, respectively. The robot displays its behaviors using a combination of speech, vocal intonation, body gestures, and eye colors. If no movement is detected, the robot prompts the user to try the exercise again. In addition, if the user’s heart rate exceeds the upper threshold (85% of their MHR), the robot terminates the exercise session and asks the user to rest. At the end of each exercise, the robot congratulates or encourages the user based on their performance and affect. After all the exercises are finished, the robot says farewell to the user. The interaction scenario for group sessions is similar. The overall response time of the robot during exercise facilitation is approximately 33 s; this is the time the robot takes to respond with its emotion-based behavior, based on user states, once the user or user group performs a specific exercise.
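The interaction flow described above can be sketched as a small finite state machine. The state and event names below are hypothetical labels condensed from the prose (and from the FSM in Figure 3 only as far as the text describes it); the emotion-dependent content of each behavior is left out.

```python
from enum import Enum, auto


class State(Enum):
    GREET = auto()
    DEMONSTRATE = auto()   # robot introduces/demonstrates the next exercise
    MONITOR = auto()       # robot tracks poses, engagement, valence, heart rate
    PROMPT_RETRY = auto()  # no movement detected: ask the user to try again
    FEEDBACK = auto()      # congratulate or encourage after each exercise
    REST = auto()          # heart rate above threshold: session terminated
    FAREWELL = auto()


class Event(Enum):
    DONE = auto()               # current robot behavior finished
    NO_MOVEMENT = auto()
    HR_TOO_HIGH = auto()        # heart rate above 85% of MHR
    EXERCISE_COMPLETE = auto()


# Hypothetical transition table condensed from the prose description.
TRANSITIONS = {
    (State.GREET, Event.DONE): State.DEMONSTRATE,
    (State.DEMONSTRATE, Event.DONE): State.MONITOR,
    (State.MONITOR, Event.NO_MOVEMENT): State.PROMPT_RETRY,
    (State.PROMPT_RETRY, Event.DONE): State.MONITOR,
    (State.MONITOR, Event.EXERCISE_COMPLETE): State.FEEDBACK,
}


def step(state: State, event: Event, exercises_left: int) -> State:
    if event is Event.HR_TOO_HIGH:
        return State.REST  # from any state: stop and ask the user to rest
    if state is State.FEEDBACK and event is Event.DONE:
        return State.DEMONSTRATE if exercises_left > 0 else State.FAREWELL
    return TRANSITIONS.get((state, event), state)  # ignore irrelevant events
```

Keeping the heart-rate check outside the table makes the safety stop reachable from every state, which matches the requirement that overexertion ends the session regardless of what the robot is doing.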

In a group exercise session, if any individual user’s heart rate exceeds the upper threshold (85% of their MHR), the exercise session is paused and all participants are asked to rest until that user’s heart rate drops below the threshold. This approach was chosen to promote group dynamics among the participants: encouraging those in a group setting to exercise (and to rest) together can further motivate participation in and adherence to exercise [56].
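The group pause rule can be sketched as labeling each 1 Hz sample as "rest" whenever any sensor-wearing participant is above their own limit. Limits are per participant because the MHR depends on age; the sketch assumes MHR = 220 − age, which is an assumption rather than the formula from [53]. Participant IDs in the example are hypothetical.

```python
from typing import Dict, Iterable, List

HR_LIMIT_FRACTION = 0.85  # 85% of MHR, as stated in the text


def hr_limit(age_years: float) -> float:
    # ASSUMPTION: MHR estimated as 220 - age.
    return HR_LIMIT_FRACTION * (220.0 - age_years)


def group_phases(samples: Iterable[Dict[str, float]], ages: Dict[str, float]) -> List[str]:
    """Label each 1 Hz sample of the sensor wearers' heart rates for the whole group.

    'rest' while any wearer is above their own limit; 'exercise' once all are
    back below it, so the group pauses and resumes together.
    """
    limits = {p: hr_limit(a) for p, a in ages.items()}
    return ["rest" if any(bpm > limits[p] for p, bpm in s.items()) else "exercise"
            for s in samples]
```

With two wearers aged 80 and 90 (assumed limits of 119 and 110.5 bpm), a reading of 112 bpm from the older participant pauses the whole group even though it would be acceptable for the younger one.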

In HRI, positive and negative user valence have been correlated with liking and disliking certain HRI scenarios, respectively [57,58]. We therefore designed the robot’s verbal feedback to acknowledge this user state and, in turn, encourage the user to continue exercising in order to promote engagement [54,59]. Table 2 presents examples of the robot’s activity-specific behaviors during the exercise sessions.

Table 2.

Robot behavior for exercise sessions.

4. Exercise Experiments

A long-term HRI study was conducted at the Yee Hong Centre for Geriatric Care in Mississauga to investigate the autonomous facilitation of exercises by Pepper with older adult residents in both one-on-one and group settings. The Yee Hong Centre houses 200 seniors with an average age of 87, who require 24-h nursing supervision and care to manage frailty and a range of complex chronic and mental illnesses [60]. Most residents speak Cantonese or Mandarin, and very few speak English as a second language. In addition, 60% have a clinical diagnosis of dementia [60].

4.1. Participants

The following inclusion criteria were used for the participants: residents who (1) were 60 years of age or older; (2) were capable of understanding English and/or Mandarin with normal or corrected hearing levels; (3) were able to perform light upper-body exercise based on the Minimum Data Set (MDS) Functional Limitation in Range of Motion section, with a score of 0 (no limitation) or 1 (limitation on one side) [61]; (4) had no other health problems that would affect their ability to perform the task; (5) were capable of providing consent for their participation; and (6) had no or mild cognitive impairment (e.g., due to Alzheimer’s disease or other types of dementia), as defined by a Cognitive Performance Scale (CPS) score lower than 3 (i.e., intact or mild impairment) [62] or a Mini-Mental State Exam (MMSE) score greater than 19 (i.e., normal or mild impairment) [63]. Before the study began, a survey was conducted to determine participants’ prior experience with robots. All of the participants had either never seen a robot or had seen one only during a robot demonstration; none had previously interacted with a robot.

A minimum sample size of 25 participants was determined using a two-tailed Wilcoxon signed-rank test power analysis with an α of 0.05, power of 0.8, and effect size index of 0.61. This effect size is similar to those of other long-term HRI studies with older adults [64,65], which had effect sizes of 0.61 and 0.6, respectively.
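To show where a minimum of 25 can come from, the sketch below approximates the sample size for a two-tailed Wilcoxon signed-rank test. It is a minimal sketch under stated assumptions, not necessarily the procedure or software used in the study: it computes a paired t-test sample size from the normal approximation with a commonly used small-sample correction (often attributed to Guenther), then divides by the asymptotic relative efficiency (ARE) of the Wilcoxon test under a normal parent distribution, 3/π. The function name `wilcoxon_signed_rank_n` is hypothetical.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank_n(effect_size, alpha=0.05, power=0.8):
    """Approximate minimum n for a two-tailed Wilcoxon signed-rank test.

    Assumptions: paired t-test sample size from the normal approximation plus
    a small-sample correction (z_alpha^2 / 2), inflated by the asymptotic
    relative efficiency (ARE = 3/pi) of the Wilcoxon test under a normal parent.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_beta = std_normal.inv_cdf(power)           # quantile for the target power
    n_paired_t = ((z_alpha + z_beta) / effect_size) ** 2 + z_alpha ** 2 / 2
    are = 3 / math.pi                            # about 0.955
    return math.ceil(n_paired_t / are)


print(wilcoxon_signed_rank_n(0.61))  # 25
```

With α = 0.05, power = 0.8, and an effect size of 0.61, this approximation gives 25; a different parent distribution (and hence a different ARE) would change the result.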

In general, women outnumber men in long-term care homes, especially in Canada and several Western countries [66], with more than 70% of residents being women [67]. This was also evident in our participant pool. Using our inclusion criteria, 31 participants were recruited, of whom 27 (3 male and 24 female) completed the study. The participants ranged in age from 79 to 97 (, ) and participated in 15 sessions on average. Written consent was obtained from each participant prior to the start of the experiments. Ethics approval was obtained from both the University of Toronto and the Yee Hong Centre for Geriatric Care.

4.2. Experimental Design

At long-term care facilities, exercise programs for older adults are often delivered in group-based or individual settings [68]. Participants were randomly assigned to one-on-one or group-based exercise sessions with the robot. The exercise sessions took place twice a week for approximately two months (16 sessions in total for both the one-on-one and group settings), and each session lasted approximately one hour. Eight participants took part in the one-on-one sessions and 19 in the group sessions, which were further split into one group of 9 and one group of 10 participants. These group sizes were consistent with those of the exercise sessions already established in the long-term care home.

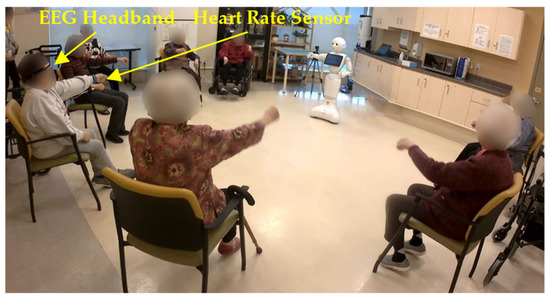

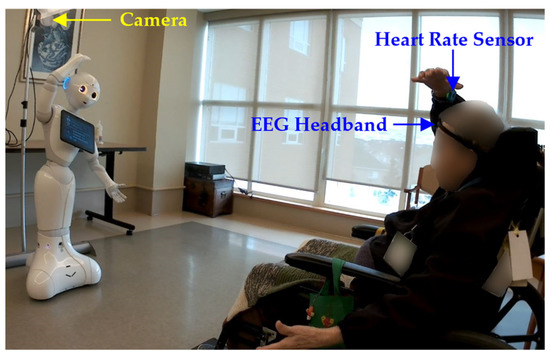

For the group sessions, participants were seated in a half circle with an approximate radius of 2 m in front of the robot, Figure 4. In each group session, two randomly selected participants seated in the center of the half circle wore the EEG headband and heart rate sensor so that the robot could detect their valence and heart rate. This seating gave the robot’s camera a direct line of sight for estimating their body poses during exercise; in principle, any two participants could have worn the sensors. For the one-on-one sessions, each participant was seated 1.5 m directly in front of the robot while wearing the EEG headband and heart rate sensor, Figure 5. We used adjustable EEG headbands, which the research team sized for each participant and secured to their forehead prior to each exercise session. As the exercises did not include any fast or high-impact neck or upper body motions, movement of the band itself was minimal, and no anomalies were observed in the sensor data obtained during the interactions.

Figure 4.

Group exercise facilitation.

Figure 5.

One-on-one exercise facilitation.

4.3. Experimental Procedure

During each one-on-one and group exercise session, the robot autonomously facilitated the aforementioned upper body exercises consecutively for the full duration, with no breaks between exercises. The robot facilitated most sessions in Mandarin in both settings; two participants in the one-on-one sessions interacted in English based on their preference.

Because the same exercise sessions were repeated every week, new exercises were added over time to increase complexity and variation. These new exercises combined two or three of the individual exercises, for example, lateral trunk stretches, forward punches, and downward punches. Combinations of two exercises were added in the third week, and combinations of three exercises in the sixth week. Gradually increasing exercise complexity is commonly implemented in exercise programs for older adults to improve their functional capacity [69]. In addition, varying the exercises helps engage users over time and improves their adherence to the program [70].

In the one-on-one interactions, each exercise session began with the robot greeting the user and providing exercise information (e.g., the number of exercises and repetitions). Next, the robot demonstrated each exercise and asked the user to follow it for n repetitions. In the first week, the robot demonstrated each exercise with step-by-step instructions. In subsequent weeks, as the users became familiar with the exercises, the robot only visually demonstrated one repetition of each exercise, without step-by-step instructions. In addition, after the first week, the number of repetitions of each exercise increased from 8 to 12 to raise the level of difficulty. Every one-on-one and group session was video recorded; sensor data were recorded from the same two participants in the group sessions and from all participants in the one-on-one sessions.

4.4. Measures

The user study was evaluated based on: (1) user performance over time via GAS; (2) measured valence and engagement during the activity; (3) the robot’s adapted emotions based on user valence and engagement; (4) user self-reported valence during the interaction using the Self-Assessment Manikin (SAM) scale [71]; and (5) a five-point Likert scale robot perception questionnaire adapted from the Almere model [72], with the following constructs: acceptance (C1), perceived usefulness and ease of use (C2), perceived sociability and intelligence (C3), robot appearance and movements (C4), and the overall experience with the robot, Table A6 in Appendix B.

Self-reported valence was obtained during the first week, after one month, and at the end of the study (i.e., two months), and the robot perception questionnaire was administered after one month and after two months, to investigate any changes in user valence towards, and perceptions of, the robot as the HRI study progressed.

5. Results

5.1. Exercise Evaluation Results

The average GAS T-scores for the one-on-one sessions, group sessions, and all users combined for the first week, one month, two months, and the entire duration are detailed in Table 3. Participants, in general, from both one-on-one sessions and group sessions were able to achieve an average GAS T-score of 64.11, which indicates that they were able to follow all repetitions and perform complete exercise poses for more than half of the total repetitions by the end of the study.
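GAS T-scores of this kind are conventionally computed with the Kiresuk–Sherman formula, T = 50 + 10 Σ wᵢxᵢ / √((1 − ρ) Σ wᵢ² + ρ (Σ wᵢ)²), where xᵢ in {−2, −1, 0, +1, +2} is the attainment level for goal i, wᵢ its weight, and ρ the assumed correlation between goal scores (conventionally 0.3). This section does not state the exact goals, weights, or ρ used in the study, so the sketch below uses those conventional assumptions; the helper `gas_t_score` is hypothetical.

```python
import math


def gas_t_score(attainments, weights=None, rho=0.3):
    """Kiresuk-Sherman GAS T-score.

    attainments: goal attainment levels on the -2..+2 scale (0 = expected level).
    weights: per-goal weights; equal weights of 1 are assumed when omitted.
    rho: assumed correlation between goal scores (0.3 is the conventional value).
    """
    if weights is None:
        weights = [1.0] * len(attainments)
    if len(weights) != len(attainments) or not attainments:
        raise ValueError("need one weight per goal and at least one goal")
    weighted_sum = sum(w * x for w, x in zip(weights, attainments))
    denom = math.sqrt((1 - rho) * sum(w * w for w in weights) + rho * sum(weights) ** 2)
    return 50 + 10 * weighted_sum / denom


print(gas_t_score([0]))                 # 50.0
print(round(gas_t_score([1]), 2))       # 60.0
print(round(gas_t_score([1, 1]), 2))    # 62.4
```

For a single, unit-weighted goal, attainment levels of +1 and +2 map to T-scores of 60 and 70, so an average such as 64.11 falls between those two levels.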

Table 3.

Average GAS T-score for different exercise session types during the first week, week of one-month questionnaire, week of two-months questionnaire, and entire duration of study.

5.1.1. One-on-One Sessions

All eight participants in the one-on-one sessions complied with the robot exercises and had an average GAS T-score of 64.03 ± 4.92 over the two months. User 7, however, participated in only 12 of the 16 exercise sessions and watched the robot during the remaining four, indicating that the robot moved too fast for them to always follow. Based on the days this user exercised with the robot, their average GAS T-score was 51.24.

5.1.2. Group Sessions

The users in the group sessions complied with the robot exercises throughout the sessions and had an average GAS T-score of 66.67 at the end of two months.

We investigated whether GAS T-scores improved between the first week and one month, and between the first week and two months. In general, the average GAS T-score increased from the first week, for both one-on-one sessions () and group sessions (), to after one month, for both one-on-one () and group sessions (), as well as to the end of two months, for one-on-one sessions () and group sessions (). This increase in GAS T-score indicates that users completed more repetitions of the exercises, and that more of the completed repetitions were performed with a complete rather than a partially complete pose. These improvements may suggest improved muscle strength and range of motion. A non-parametric Friedman test found a statistically significant difference in the group sessions; . However, post-hoc non-parametric Wilcoxon signed-rank tests with a Bonferroni correction of showed no statistically significant difference between the first week and one month (), between the first week and two months (), or between one month and two months (). We postulate that the lack of significance could be due to the changing demands of the sessions: as participants became familiar with the exercises, they were required to perform them for longer and to engage in more challenging sequences of repeated exercises, as new combinations were added for variation. This was especially true at week 6, when the complexity of the exercises was highest. Nonetheless, there was an overall increase in GAS T-scores from week one to the end of the two months, which demonstrates exercise goal achievement. A non-parametric Friedman test showed no statistically significant difference in the overall GAS T-scores between the group and one-on-one sessions after one week, after one month, and after two months; .
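The repeated-measures analysis described above can be sketched as a Friedman statistic over subjects measured at three time points, followed by a Bonferroni-adjusted threshold for the three pairwise post-hoc comparisons. This is a minimal, dependency-free illustration assuming a family-wise α of 0.05; it omits the tie correction and p-value computation that statistical packages provide. The function names are hypothetical, and the scores in the example are invented for illustration only.

```python
def average_ranks(values):
    """Return 1-based ranks of values, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # mean of 1-based positions i+1..j+1
        for t in range(i, j + 1):
            ranks[order[t]] = avg_rank
        i = j + 1
    return ranks


def friedman_statistic(rows):
    """Friedman chi-square for n subjects (rows) under k conditions (columns).

    Ranks are computed within each subject; no tie correction is applied.
    """
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12 * sum(r * r for r in rank_sums) / (n * k * (k + 1)) - 3 * n * (k + 1)


def bonferroni_alpha(alpha, num_comparisons):
    """Per-comparison significance threshold under a Bonferroni correction."""
    return alpha / num_comparisons


# Hypothetical GAS T-scores: one row per participant; columns = week 1, month 1, month 2.
scores = [[60, 62, 65], [55, 58, 61], [50, 57, 66]]
print(friedman_statistic(scores))           # 6.0
print(round(bonferroni_alpha(0.05, 3), 4))  # 0.0167
```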

We also investigated whether GAS T-scores differed between the one-on-one and group sessions over the entire study. In general, the group sessions had a higher GAS T-score () than the one-on-one sessions () throughout the study. This difference was statistically significant using a non-parametric Mann–Whitney U-test: . We postulate that the higher GAS T-scores in the group sessions were due to high task cohesiveness within the group and people’s general preference to exercise in groups.
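The between-group comparison can likewise be sketched by computing the Mann–Whitney U statistic from pooled ranks, U₁ = R₁ − n₁(n₁ + 1)/2 and U₂ = n₁n₂ − U₁, where R₁ is the rank sum of the first sample. This minimal sketch uses hypothetical function names and returns only the statistics; a p-value would still require an exact or normal-approximation reference distribution (for example, from `scipy.stats.mannwhitneyu`).

```python
def average_ranks(values):
    """Return 1-based ranks of values, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1  # average of tied 1-based positions
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U_x, U_y) for two independent samples, using pooled average ranks."""
    ranks = average_ranks(list(x) + list(y))
    n_x, n_y = len(x), len(y)
    rank_sum_x = sum(ranks[:n_x])  # ranks of x occupy the first n_x positions
    u_x = rank_sum_x - n_x * (n_x + 1) / 2
    u_y = n_x * n_y - u_x
    return u_x, u_y


print(mann_whitney_u([1, 2, 3], [4, 5, 6]))  # (0.0, 9.0)
```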

5.2. User State Detection and Robot Emotion Results

The average detected valence measured using the EEG sensor, engagement based on the users’ VFOA, and heart rate for the one-on-one sessions, the group sessions, and all participants are presented for each time period in Table 4. On average, participants in both the one-on-one and group sessions had positive valence towards the robot for 87.73% of the interaction time. For the group sessions, the large number of participants prevented us from accurately measuring engagement for every participant; we therefore report engagement only for the one-on-one sessions, in which participants remained engaged towards the robot for 98.41% of the interaction time, regardless of the complexity of the exercises. In addition, participants had an average heart rate of 82.37 bpm during the interactions, and no participant’s heart rate exceeded the upper limit of the target range (i.e., 120 bpm for a 79-year-old and 105 bpm for a 97-year-old).
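The two summary quantities in this paragraph can be sketched in a few lines. The reported upper limits (120 bpm at age 79 and 105 bpm at age 97) are consistent with 85% of an age-predicted maximum heart rate of 220 − age; that formula is our assumption, not something stated in this section. The percentage of time with positive valence is computed here as the share of valence samples above zero, assuming uniformly sampled estimates. All function names are hypothetical.

```python
def upper_hr_limit(age, fraction=0.85):
    """Upper target heart rate in bpm, assuming HRmax = 220 - age (an assumption)."""
    return round(fraction * (220 - age))


def exceeds_limit(heart_rates, age):
    """True if any heart-rate sample exceeds the assumed upper limit for this age."""
    limit = upper_hr_limit(age)
    return any(hr > limit for hr in heart_rates)


def percent_positive(valence_samples):
    """Percentage of (uniformly spaced) samples with positive detected valence."""
    if not valence_samples:
        raise ValueError("no valence samples")
    positive = sum(1 for v in valence_samples if v > 0)
    return 100 * positive / len(valence_samples)


print(upper_hr_limit(79), upper_hr_limit(97))   # 120 105
print(exceeds_limit([78, 85, 92], 97))          # False
print(percent_positive([0.2, -0.1, 0.5, 0.3]))  # 75.0
```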

Table 4.

The average and standard deviation of the percentage of time of the detected positive valence, engagement towards the robot, and heart rate for one-on-one sessions, group sessions, and all users combined.

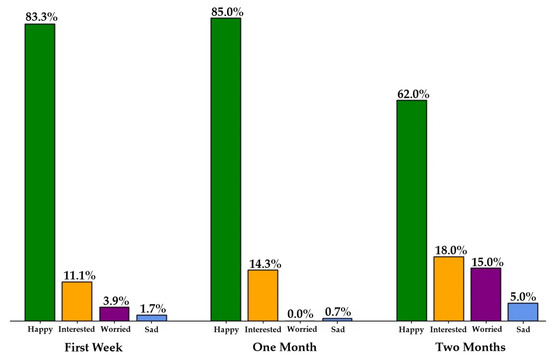

As most users had positive valence and were engaged towards the robot, the robot displayed the happy emotion for the majority of these interactions. Figure 6 presents the overall percentage of time the robot displayed each of the four emotions. The happy emotion was displayed for 83.3%, 85%, and 62% of the time during the first week, after one month, and after two months, respectively. This change in the emotions displayed by the robot can be attributed to the increase in exercise difficulty over time, as detailed in Section 4.3, which led more users to display varying valence, to which the robot responded by adopting other emotions to encourage them.
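Percentages like those in Figure 6 can be obtained by summing how long each emotion was displayed and dividing by the total display time. The sketch below assumes a hypothetical log of (emotion, duration in seconds) segments; the log format, values, and function name are illustrative and not taken from the robot’s actual software.

```python
from collections import defaultdict


def emotion_shares(segments):
    """Return the percentage of total display time for each robot emotion.

    segments: iterable of (emotion, duration_seconds) pairs (hypothetical format).
    """
    totals = defaultdict(float)
    for emotion, duration in segments:
        totals[emotion] += duration
    grand_total = sum(totals.values())
    if grand_total == 0:
        raise ValueError("no display time recorded")
    return {emotion: 100 * t / grand_total for emotion, t in totals.items()}


log = [("happy", 300), ("interested", 120), ("sad", 40), ("happy", 60), ("worried", 80)]
shares = emotion_shares(log)
print(shares["happy"], shares["interested"])  # 60.0 20.0
```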

Figure 6.

Percentage of time each emotion was displayed by the robot while giving feedback to the users during all exercise sessions for each time period.

Detailed examples of user valence, engagement, and robot emotion for eight users in one-on-one sessions, valence and robot emotions for the two users in the group sessions, and results for all users during the first week, after one month, and after two months are discussed below. We found no statistically significant difference in detected user valence between the three time periods when conducting a non-parametric Friedman test; .

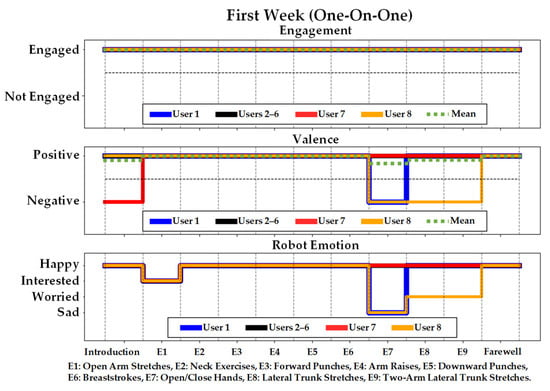

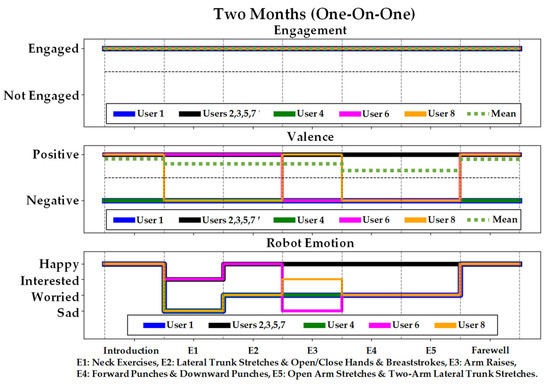

5.2.1. One-on-One Sessions

In all three time periods, users were engaged more than 98% of the time throughout the one-on-one interactions. In the one-on-one sessions during the first week, Figure 7, Users 2–6 had positive valence throughout the session, and the robot in turn displayed happy and interested emotions. User 1 had positive valence for the majority of the session, with negative valence only during the open/close hands exercise (denoted E7). This exercise was observed to be harder for this user because it was faster than the other exercises (an average of 1.16 s/repetition for E7 versus 3.6 s/repetition for the others). The robot detected this negative valence and in turn displayed a sad emotion to encourage the user to keep trying the exercise.

Figure 7.

User engagement, valence, and the corresponding robot emotion for one-on-one sessions during the first week.

In contrast, User 7 had negative valence during the introduction stage and then positive valence for the rest of the session. In general, Pepper first displayed the happy emotion during the introduction and then transitioned to interested. User 8 had negative valence during the open/close hands exercise (E7), as well as during the lateral trunk stretches (E8) and two-arm lateral trunk stretches (E9). The robot displayed a combination of sad and worried emotions during these exercises to encourage the user to continue. In general, User 8 had difficulty performing faster arm exercises with larger arm movements, owing to their observed upper-limb tremors.

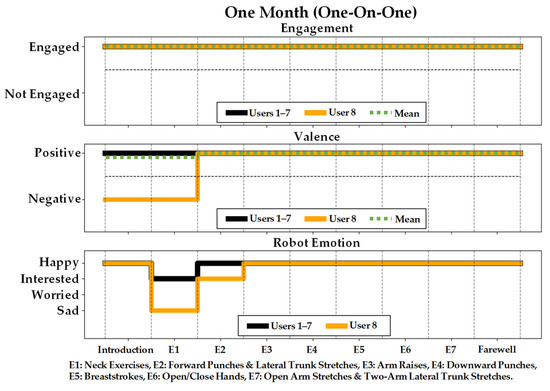

After one month, Figure 8, all users had positive valence, with the exception of User 8 during the introduction and E1 stages. User 8 started with negative valence but then increased to positive valence for the rest of the session. The robot responded by displaying the sad emotion in E1 and then transitioned to interested and happy emotions after detecting positive valence similar to the other users.

Figure 8.

User engagement, valence, and the corresponding robot emotion for one-on-one sessions after one month.

After two months, the users, on average, displayed positive valence throughout the exercise sessions, Figure 9. Four of the eight users (Users 2, 3, 5, and 7) always displayed positive valence, for which the robot displayed both interested and happy emotions. Of the four users who experienced negative valence, two (Users 6 and 8) did so only during some exercises rather than for the entire session. The overall positive valence of the users is also reflected in Table 4, which shows that users experienced positive valence for approximately 90% of the time after two months of interactions. This result is consistent with the users’ self-reported positive valence (Section 5.3).

Figure 9.

User engagement, valence, and the corresponding robot emotion for one-on-one sessions after two months.

Users 1 and 4 both had negative detected valence throughout the entire session at two months, for which the robot displayed sad and worried emotions for encouragement; this increased the robot’s overall expression of the worried and sad emotions in this period. User 6 had positive valence except when performing combined arm raises, combined forward and downward punches, and combined open arm stretches with two-arm LTS (E3–E5), during which the robot transitioned to a worried emotion. User 8 again had negative valence during the neck exercises (E1) and the combination of LTS, open/close hands, and breaststrokes (E2), and thus the robot displayed sad and worried emotions. This user also showed negative valence during the neck exercises after one month but not during the first week, possibly because this exercise was more strenuous with 12 repetitions than with only 8. When User 8 transitioned from negative to positive valence during arm raises (E3), the robot transitioned to interested. However, User 8 transitioned back to negative valence while performing the combination of forward and downward punches (E4) and the combination of open arm stretches and two-arm LTS (E5), and the robot displayed worried emotions. User 8 was the only participant to have negative valence in all three sessions, which we believe could be attributed to their physical impairment in completing the exercises.

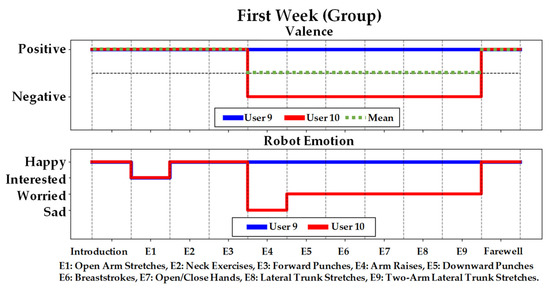

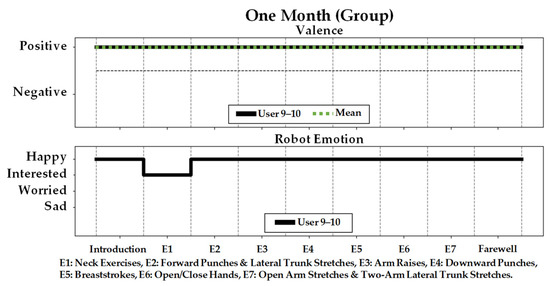

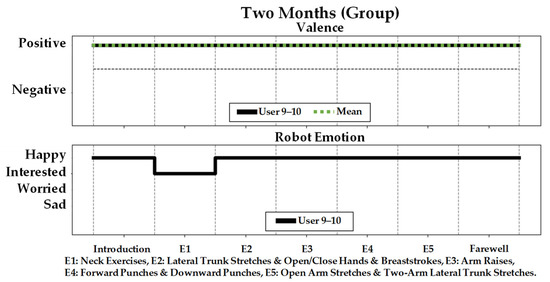

5.2.2. Group Sessions

For the group sessions, as previously mentioned, the large number of participants prevented us from accurately measuring engagement for every participant; we therefore focus our discussion on user valence. Nevertheless, our video analysis showed that the majority of the group focused their attention on the robot throughout each session, as they consistently performed each exercise. Valence is discussed here for two participants as representatives of the group, Figure 10, Figure 11 and Figure 12. These participants had an average detected positive valence for 92.26% of the interaction time during the first week, 90.26% after one month, and 97.49% after two months.

Figure 10.

User valence and the corresponding robot emotion for group sessions during the first week.

Figure 11.

User valence and the corresponding robot emotion for group sessions after one month.

Figure 12.

User valence and the corresponding robot emotion for group sessions after two months.

User 9, like most participants in the group session, had positive valence during all time periods. During the first week, User 10 started with positive valence, and transitioned to negative valence during several exercises including arm raises, downward punches, breaststrokes, open/close hands, LTS, and two-arm LTS (E4–E9), which resulted in the robot displaying sad and worried emotions.

We investigated if there was any difference in detected valence between the one-on-one sessions and the group sessions. In general, detected valence for the group sessions () was higher than the one-on-one sessions (). However, no statistical significance was found using a non-parametric Mann–Whitney U-test: .

5.3. Self-Reported Valence (SAM Scale)

The reported valence from the five-point SAM scale questionnaire measured during the first week, one month, and two months for one-on-one sessions, group sessions, and all users combined are presented in Table 5. The valence is on a scale of −2 (very negative valence), −1 (negative valence), 0 (neutral), +1 (positive valence), and +2 (very positive valence). All users, in general, reported positive valence throughout the study with the average valence of 1.33, 1.30, and 1.19 after the first week, one month, and two months, respectively.

Table 5.

SAM scale results.

The lower reported valence in the group sessions after two months can be attributed to participants stating that they would like the robot to be taller and bigger so that they could see it better, as the robot was placed further away from them in the group sessions to accommodate more participants. None of the users in the one-on-one sessions had this concern, since they interacted with the robot at a closer distance, Figure 5.

We also investigated whether there were any differences between the one-on-one and group sessions in each time period. In general, one-on-one sessions had lower reported valence than group sessions during the first week (one-on-one: ; group: ) and after one month (one-on-one: ; group: ). However, one-on-one sessions had higher valence () than the group sessions () after two months. None of these differences were statistically significant using a non-parametric Mann–Whitney U-test for the first week: , one month: , and two months: .

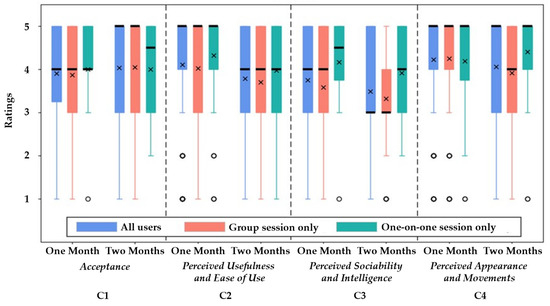

5.4. Robot Perception Questionnaire

The results from the five-point Likert scale robot perception questionnaire administered after one month and two months for the one-on-one sessions, the group sessions, and all users combined are summarized in Table A7 (in Appendix B) and Figure 13. For each construct, negatively worded questions were reverse-scored for analysis. The internal consistency of each construct at each time point was determined using Cronbach’s α [73]. The α coefficients for constructs C1–C4 ranged from 0.75 to 0.81 at one month and from 0.68 to 0.88 at two months. A value of 0.5 or above can be considered acceptable for short tests [74,75].
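Cronbach’s α for a construct with k items is α = k/(k − 1) · (1 − Σ σᵢ² / σ_T²), where σᵢ² is the variance of item i and σ_T² the variance of respondents’ total scores. The sketch below reverse-scores a negatively worded five-point item (x ↦ 6 − x) before computing α, using sample variances for both terms. The data and function names are hypothetical.

```python
from statistics import variance


def reverse_score(x, scale_min=1, scale_max=5):
    """Reverse a Likert response, e.g. 1 <-> 5 and 2 <-> 4 on a five-point scale."""
    return scale_min + scale_max - x


def cronbach_alpha(responses):
    """Cronbach's alpha; rows are respondents, columns are items of one construct."""
    k = len(responses[0])
    if k < 2:
        raise ValueError("at least two items are required")
    items = list(zip(*responses))  # one tuple of scores per item
    item_var = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var / total_var)


# Hypothetical responses; the second item is negatively worded.
raw = [[5, 1], [4, 2], [2, 4]]
scored = [[a, reverse_score(b)] for a, b in raw]
print(round(cronbach_alpha(scored), 3))                    # 1.0
print(round(cronbach_alpha([[1, 2], [2, 1], [3, 3]]), 3))  # 0.667
```

Without reverse-scoring, the negatively worded item in this toy example would correlate negatively with the other item and drag α down, which is why such items are reversed first.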

Figure 13.

Questionnaire results in box plots for each construct with different user groups with quartiles (box), min-max (whisker), median (black line), and mean (x), and outliers (circles).

5.4.1. Acceptance

Robot Acceptance (C1) results showed that participants from both user groups (one-on-one and group-based) had high acceptance of the robot at the end of two months (). The participants enjoyed using the robot for exercising () and more than half of them (67%) reported they would use the robot again () after two months of interaction. They found the sensors comfortable to wear (negatively worded, ).

In general, an increase in this construct was observed from one month () to two months () for all participants. However, there was no statistically significant difference found using a non-parametric Wilcoxon Signed-rank test: .
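The paired one-month versus two-month comparisons use the Wilcoxon signed-rank test. Its statistics can be sketched as follows: drop zero differences, rank the absolute differences (averaging ties), and sum the ranks of positive and negative differences separately. This minimal sketch uses hypothetical names and ratings and computes only W⁺ and W⁻; a p-value requires the test’s exact or normal-approximation null distribution.

```python
def average_ranks(values):
    """Return 1-based ranks of values, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for t in range(i, j + 1):
            ranks[order[t]] = (i + j) / 2 + 1  # average of tied 1-based positions
        i = j + 1
    return ranks


def wilcoxon_signed_rank(before, after):
    """Return (W_plus, W_minus) for paired samples; zero differences are dropped."""
    diffs = [b - a for a, b in zip(before, after) if b != a]
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum((r for r, d in zip(ranks, diffs) if d > 0), 0.0)
    w_minus = sum((r for r, d in zip(ranks, diffs) if d < 0), 0.0)
    return w_plus, w_minus


# Hypothetical construct ratings for four participants at one and two months.
one_month = [3, 3, 3, 4]
two_months = [4, 5, 6, 4]
print(wilcoxon_signed_rank(one_month, two_months))  # (6.0, 0.0)
```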

On average, participants in the one-on-one sessions had similar Likert ratings as the group sessions for one month (one-on-one: ; group: ) but slightly lower ratings than the group sessions for two months (one-on-one: ; group: ). No statistically significant difference was observed between these two interaction types using a non-parametric Mann–Whitney U-test for the one month: , and two months: .

5.4.2. Perceived Usefulness and Ease of Use

Participants rated the perceived usefulness and ease of use (C2) of the robot positively after one month (), with a slight decrease after two months (). This difference between time points was statistically significant using a non-parametric Wilcoxon signed-rank test: . Users agreed that the exercises with the robot were good for their overall health (); more than half of them (67%) found the robot helpful for doing exercises (negatively worded, ), and 63% believed the robot motivated them to exercise (). The majority (96%) also found that the robot clearly displayed each exercise (), and 74% trusted its advice (negatively worded, ). However, as users reported that they could not set up the equipment (e.g., robot and computer) by themselves, they were neutral about the robot’s ease of use (negatively worded, ).

In general, participants in the one-on-one sessions gave this construct similar ratings to those in the group sessions, both after one month (one-on-one: ; group: ) and after two months (one-on-one ; group: ). There was no statistically significant difference in perceived usefulness and ease of use between the two interaction types using a non-parametric Mann–Whitney U-test for either one month: , or two months: .

5.4.3. Perceived Sociability and Intelligence

In general, participants in the one-on-one sessions (one month: ; two months: ) found the robot more sociable and intelligent than those in the group sessions (one month ; two months: ). These differences were statistically significant at both time points using a non-parametric Mann–Whitney U-test after one month: , and two months: . As there were more participants in the group sessions and the robot did not respond to all of their user states, these participants were, in general, neutral about whether the robot understood what they were doing during the exercises () and whether the robot displayed appropriate emotions (). However, the two participants who wore the sensors were able to identify the robot’s emotions through its eye color (negatively worded, ) and vocal intonation (). In the one-on-one sessions, participants thought the robot understood what they were doing () as a result of its direct feedback; 70% were able to identify the robot’s emotions, mainly through its vocal intonation (), and 50% through its eye colors (negatively worded, ).

Overall, perceived sociability and intelligence across both interaction types were positive after one month () and became neutral after two months (). However, no statistically significant difference was found between one and two months using a non-parametric Wilcoxon signed-rank test: .

5.4.4. Robot Appearance and Movements

In general, participants reported positive perception of the robot’s appearance and movements (). Seventy percent of them were able to follow the robot movements (negatively worded, ), found it had a clear voice () and were able to understand the robot’s instructions (negatively worded, ). In addition, more than half (59%) found the robot’s size appropriate for exercising () with only 19% from the group sessions preferring the robot to be larger and taller like an adult human.

After one month, participants in the group sessions rated this construct highly (), similar to those in the one-on-one sessions (). However, as they engaged more with the robot, ratings in the group sessions became less positive (), whereas ratings in the one-on-one sessions remained highly positive () after two months. The difference between the two session types after two months was statistically significant: . Some participants (19%) in the group sessions noted that the robot could be larger, and group participants gave a slightly less positive rating when asked whether the robot’s size was appropriate for exercising () than participants in the one-on-one sessions ().

5.4.5. Overall Experience

At the end of the study, the participants’ overall experience (in both one-on-one and group sessions) showed that more than half of them (i.e., 56%) thought their physical health had improved () and were motivated to perform daily exercise (). Of the three participants who gave a negative rating (i.e., 15%), two reported that they hoped the exercise sessions could be longer with more repetitions, while the other stated that they did not think they would get healthier due to their age-related decline in physical function. Participants, in general, did not find the weekly sessions confusing (negatively worded, ). In addition, more than half of them (i.e., 56%) reported that they were more motivated to perform daily exercises with the robot (). In our study, participants were motivated to exercise and to continue exercising on a daily basis. This is consistent with other multi-session robot exercise facilitation studies [7,9], which have shown that motivation leads to improved user performance, such as decreased exercise completion time [7] and correctly executed exercise movements [9].

5.4.6. Robot Features and Alternative Activities

Participants ranked from 1 to 6 (1 being most preferred and 6 being least preferred) which features of the robot they preferred, Table 6, as well as which other activities they would like to do with the robot, Table 7. For both one-on-one and group sessions, the most preferred feature of the robot was its human-like arms and movements. Participants in the group sessions also ranked the robot’s eyes first (tied with the arms and movements). The least preferred feature was the lower body, as participants wanted the robot to have legs so that they could engage in leg exercises as well. These findings are consistent with previous studies comparing the robot preferences of older adults with those of other age groups [76,77,78], which consistently found that older adults preferred more human-like features, as they were more familiar with human appearances.

Table 6.

Ranking of preferred robot features.

Table 7.

Ranking of preferred activities for the robot to assist with.

Users ranked playing physically or cognitively stimulating games, such as Pass the Ball and Bingo, the highest, as they wanted the robot to provide helpful interventions to keep them active in daily life. In addition, as some users had difficulty going to their rooms or to the washroom by themselves, Escorting was ranked next, to ensure their safety when going to places.

6. Discussions

6.1. User State Detection and Robot Emotion Results

For the one-on-one sessions, users in general showed a slight decrease in valence over the course of the study. We postulate that this decrease was related to the increased difficulty and complexity of the exercises in later sessions. The participants found the robot increasingly difficult to use from one month () to two months (), even though the robot’s behavior and functionality remained the same and acceptance of the robot remained high throughout the study. Several exercise studies have reported that an increase in exercise intensity is correlated with a decrease in affective valence [79,80]. For example, a study on aerobic exercise, such as running, found that people’s affective valence began to decline as exercise intensity increased [79]. A similar study with older adults using a treadmill found that affect declined across the duration of the one-on-one session and became increasingly negative as participants became more tired [80]. As mentioned, the intensity of the exercises in our study increased from 8 to 12 repetitions in the second week, with combinations of two exercises introduced in the third week and combinations of three exercises introduced in the sixth week. Participants noticed this increase in complexity, as reflected in additional comments on the two-month questionnaire, such as “I noticed the exercises are getting more difficult” from User 5, one of the healthier participants.

In the group sessions, User 10’s negative valence during the first week could be due to the participant not being familiar or comfortable with exercising with a robot at first, as this user reported before the study that they had no prior experience with robots. Over time and through repetition, however, the exercises became easier for this user to follow (as observed in the videos), resulting in positive valence during the entire exercise session, Figure 12. Similar robot studies have found that robot exercise systems become easier to understand over repeated sessions (i.e., from 1 to 3 sessions), based on the number of user help requests and a decrease in average exercise completion time [7]. We also note that the mean GAS T-score of User 10 increased from 61.1 (87.3% exercise completion) in the user’s first session to 65.5 (93.5% completion) after the user’s second session, an improvement of about 6 percentage points in exercise completion when exercising with the robot. This is consistent with the literature, where user performance measures and exercise completion times have improved through repetition (i.e., over 1 to 4 sessions) [9].

The robustness of the robot’s emotion-based behaviors can be defined, herein, as its ability to facilitate exercise while adapting to user states in both the one-on-one and group sessions. The changes in the emotions displayed by the robot, Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12, illustrate its ability to adapt to changes in user state, allowing the robot to maintain, on average, positive valence and high engagement from the users.

6.2. Self-Reported Valence (SAM Scale)

Both the detected (Figure 7, Figure 8, Figure 9, Figure 10, Figure 11 and Figure 12) and self-reported valence (Table 5) results show that the majority of users maintained a positive valence throughout the study. Namely, the users generally felt positive valence during the (one-on-one and group) exercise sessions and when reflecting on their experience after the sessions. Our results are consistent with other studies that have also used EEG and self-reports to measure affective responses during and after exercise sessions, albeit without a robot [81,82].

Overall, we had a high participation rate for our study, with 27 of 31 (87%) participants completing the entire two-month study. We postulate that the positive valence, together with the high participation rate, supports the use of Pepper as an exercise companion. In [83], positive affective responses during exercise were consistently linked to a higher likelihood of performing exercise in the future. Furthermore, similar evidence was found in a non-robot study on the role of affective states in the long-term exercise participation of older adults specifically [84]. That study cited positive affective responses during exercise as an influential factor in participants’ beliefs about gaining benefit from, and participating in, regular exercise.

6.3. Acceptance

The overall positive acceptance ratings can be attributed to the clear health benefits of using the robot to motivate and engage users in exercise. In general, the level of acceptance of a technology is high when older adults clearly understand the benefits of using the technology [85,86]. For example, in [87] a robot’s ability to motivate users to dance and improve health outcomes was identified as a facilitator for robot acceptance. In our study, User 1 noted that “[they] hope[d] [they] will be healthier” by participating in the robot study, and User 2 noted that “[they] think exercising is important” and reported that they believed a robot that motivated them to exercise was good for them. The aforementioned users had a clear understanding that Pepper was developed to autonomously instruct and motivate them to engage in exercising, resulting in positive acceptance ratings.

6.4. Perceived Usefulness and Ease of Use

The perceived usefulness of the robot was expected to be similar across successive exercise sessions (both one-on-one and group), as Pepper performed the same exercises. Over time, however, perceived usefulness decreased. One of the main reasons for this decrease was that, after becoming familiar with the robot, four of the participants reported that they wished the robot had legs so that it could also facilitate leg exercises. As the participants in our study became familiar with the robot, their expectations evolved into wanting the robot to perform additional exercises.

6.5. Perceived Sociability and Intelligence

When validating the robot’s emotion module, users strongly agreed after one month that the robot displayed appropriate emotions (), whereas after two months their responses were more neutral (); however, they agreed that the robot’s overall feedback was appropriate (). We believe this could be due to the robot using the same variations of social dialogue throughout the two-month study. Other studies that observed a similar decrease in perceived sociability over time attributed it to the robot not engaging in spontaneous, on-the-fly dialogue [88,89].

6.6. Robot Appearance and Movements

The position of the participants with respect to Pepper may have influenced their opinion of the robot’s appearance and movements. Participants in the group sessions were seated in a semi-circle around the robot. As a result, some participants in the group sessions were farther back from the robot than others and may not have faced the robot directly, Figure 4, compared to those in the one-on-one sessions. In the literature, older adults’ preferred robot size strongly depends on the robot’s functionality [90,91,92]. In our study, as the robot’s main functionality is to facilitate exercise, we postulate that some participants in the group sessions preferred the robot to be larger so that they could see the robot and its movements more clearly.

6.7. Considerations and Limitations

We consider our HRI study to be long-term as it was repeated over multiple weeks or months, as compared to short-term studies, which consist of a single session. This is consistent with the literature, where three weeks was explicitly declared as long-term HRI in [93], and two months in [94,95]; the latter matches our study length. Furthermore, length of time is not the only consideration when determining whether a study is long-term, as the user group and interaction length are also considered. Our study is also considered long-term based on the older adult user group and the one-hour duration of each interaction session. Other existing studies that have used a robot to facilitate exercise with older adults have either consisted of a single session [7,8,23,24] or had users participate in a total of three sessions on average [9], each lasting 15 min on average. The only other long-term exercise study with older adults that we are aware of is [6], which ran for a 10-week period.

We investigated whether group exercise sessions would foster task cohesion. From analysis of the videos, the participants were fully engaged with the robot and showed high compliance with the exercises (no drop-outs were observed). Participants focused on following the exercises the robot displayed as closely as possible and continued to do so as the exercises became more complex later in the study. As future work, it would be interesting to identify existing group dynamics before and after the study to explore whether such dynamics change or directly affect group cohesion and participant affective responses. Research has shown that people prefer to exercise in groups and that group cohesion is directly related to exercise adherence and compliance [96,97].

It is possible that participants were extrinsically motivated to participate in the study by factors that we have not examined. For example, social pressure from peers or care staff could have influenced participation in our study; however, participation was completely voluntary, no rewards were given, and participants were allowed to withdraw at any time. We postulate that the participants who completed this two-month HRI study were more intrinsically driven, based on their high ratings of their overall experience and of the perceived usefulness of the robot, as older adults have been found to be intrinsically motivated by personal health and benefit, in addition to altruistic reasons [98,99].

In our study, participants in both types of interactions had comparably high acceptance of the robot and high perceived usefulness and ease of use, which supports the use of an exercise robot in both scenario types. The main difference was that users in the one-on-one sessions perceived the robot as more sociable and intelligent, and provided higher ratings for the robot’s appearance and its movements. We believe that this difference is mainly due to: (1) the group setting design, which directly resembles an exercise session with a human facilitator: participants were seated in a half circle with an approximate radius of 2 m in front of the Pepper robot, and the size of and distance to the robot may have affected their opinions on these constructs, as some participants mentioned they preferred the robot to be larger so that they could see it more clearly; and (2) the extent to which the robot could provide personalized feedback to each member of the group in addition to the group as a whole. We do note, however, that the questionnaire results were relatively consistent within the group interactions, with an average IQR of 1.48 across all constructs. This suggests that the robot’s emotional behavior being responsive to only a small number of participants in the group did not have a significant impact on their overall experience.
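As an illustration of the consistency measure mentioned above, the sketch below computes the interquartile range (Q3 − Q1) of each construct's questionnaire responses and averages those IQRs across constructs. The quartile convention used for the reported 1.48 is not stated here, so the inclusive (linear interpolation) method is an assumption, and the responses in `toy` are made-up values for demonstration only, not data from this study.

```python
from statistics import mean, quantiles
from typing import Dict, Sequence


def iqr(responses: Sequence[float]) -> float:
    """Interquartile range Q3 - Q1 of one construct's Likert responses."""
    # method="inclusive" uses linear interpolation between order statistics,
    # the same convention as NumPy's default percentile method
    q1, _median, q3 = quantiles(responses, n=4, method="inclusive")
    return q3 - q1


def average_iqr(responses_by_construct: Dict[str, Sequence[float]]) -> float:
    """Mean of the per-construct IQRs; smaller values mean more agreement."""
    return mean(iqr(r) for r in responses_by_construct.values())


# Illustrative toy responses on a 5-point scale (NOT the study's data).
toy = {
    "sociability": [4, 4, 5, 3, 4],
    "usefulness": [5, 4, 4, 4, 5],
}
print(average_iqr(toy))
```

Because quartile definitions differ between tools, an average IQR is only comparable across studies when the same convention is used.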

Our recommendation is to consider the aforementioned factors when designing group-based interactions in order to improve perceptions of the robot as a facilitator. In non-robotic studies, research has shown that people, including older adults, generally prefer to exercise in groups [100,101], and this is worth investigating further in HRI studies with groups while considering the above recommendations. For a robot to be perceived as being as sociable or intelligent in a group session as in one-on-one interactions, it should provide general group feedback as well as personalized individual feedback for those in the group who need it. Effective multi-user feedback in HRI remains a challenge because the majority of research has focused on feedback in one-on-one interactions [102]. Furthermore, group-based emotion models require additional understanding of inter-group interactions, as individuals identifying with the group and having in-group cohesion can also influence user affect [103,104].
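One way to read this recommendation is as a two-level feedback policy: one message addressed to the whole group, plus individual messages only for members whose estimated state suggests they need support. The sketch below is purely hypothetical and is not the robot's emotion model: the function name `plan_feedback`, the valence range of −1 to 1, the threshold of 0.0, and the message wording are all assumptions. Members without a sensor (valence `None`) receive only the group message, mirroring the situation in our group sessions where only some participants were monitored.

```python
from typing import Dict, List, Optional


def plan_feedback(
    valence_by_user: Dict[str, Optional[float]],
    threshold: float = 0.0,
) -> List[str]:
    """Hypothetical two-level policy: group feedback plus targeted individual feedback.

    valence_by_user -- estimated valence in [-1, 1] per user, or None if unmonitored
    threshold       -- users below this valence get an extra personalized message
    """
    messages = ["group: Great work, everyone! Let's keep going."]
    for user, valence in sorted(valence_by_user.items()):
        # Unmonitored users (None) are covered only by the group message
        if valence is not None and valence < threshold:
            messages.append(f"{user}: You are doing well, take it at your own pace.")
    return messages


for line in plan_feedback({"User 9": 0.4, "User 10": -0.2, "User 11": None}):
    print(line)
```

Keeping the group message unconditional means every participant is addressed at least once, while the per-user check limits individual interruptions to those who appear to need them.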

The user states of the participants in the group sessions who were not wearing an EEG headband and heart rate sensor were not monitored. This was due to limitations of these Bluetooth devices during deployment: multiple concurrent connections to the host computer interfered with one another when too many devices operated on the same frequency. Despite this challenge, the self-reported valence we obtained was consistent within the overall group, and it was also consistent with the measured valence of the two participants wearing the sensors. In the future, this limitation can be addressed by using a network of intermediary devices that connect to the sensors via Bluetooth and to a central host computer through a local network. Other solutions include the use of sensors with communication technology that reduces the restrictions on the number of connected devices (e.g., Wi-Fi-enabled EEG sensors [105]). Other forms of user state estimation that do not require wireless communication can also be considered. For example, thermal cameras have been used to classify discrete affective states by correlating directional thermal changes in areas of interest of the skin (i.e., portions of the face) with affective states [106,107]. However, this relationship has mainly been established for discrete states such as joy, disgust, anger, and stress, and additional investigations would be necessary to determine such a relationship with respect to the continuous scale of valence considered in this work. Thermal cameras have also been used for heart rate estimation by tracking specific regions of interest on the body [108].
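The intermediary-device architecture proposed above can be sketched as relays that each hold a small number of Bluetooth sensor connections and forward readings to the central host over the local network. In this minimal sketch the Bluetooth side is stubbed out (each reading is assumed to already be a Python dict), UDP with JSON payloads is an assumed transport chosen for brevity, and the names `relay_reading`, `receive_reading`, `relay-1`, and `hr-03` are hypothetical. A real deployment might prefer a transport with delivery guarantees, since UDP datagrams can be lost.

```python
import json
import socket
from typing import Tuple


def relay_reading(
    sock: socket.socket,
    host_addr: Tuple[str, int],
    relay_id: str,
    sensor_id: str,
    reading: dict,
) -> None:
    """Forward one sensor reading (assumed already received over Bluetooth) to the host."""
    payload = {"relay": relay_id, "sensor": sensor_id, **reading}
    sock.sendto(json.dumps(payload).encode("utf-8"), host_addr)


def receive_reading(sock: socket.socket, bufsize: int = 4096) -> dict:
    """Block until one forwarded reading arrives at the central host."""
    data, _sender = sock.recvfrom(bufsize)
    return json.loads(data.decode("utf-8"))


# Local demonstration: host and relay run on the same machine via loopback.
host = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
host.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
host.settimeout(2.0)         # avoid blocking forever if a datagram is lost
relay = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
relay_reading(relay, host.getsockname(), "relay-1", "hr-03", {"heart_rate_bpm": 92})
received = receive_reading(host)
print(received)
relay.close()
host.close()
```

Tagging each reading with both a relay and a sensor identifier lets the host attribute data to the right participant regardless of which relay forwarded it.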

Lastly, the experimental design of our study was developed to accommodate the age-related mobility limitations of older adults. For example, the exercises were conducted with the users in a seated position, and their range of motion and speed were designed by a physiotherapist at our partner LTC home. We did note, however, that two participants in the one-on-one sessions experienced some difficulties. As discussed in Section 5.1, User 7 participated in 12 of the 16 exercise sessions and watched the robot in the remaining four sessions, as the robot at times moved too fast for them to follow. Similarly, as discussed in Section 5.2, User 8 had difficulties performing arm exercises with faster and larger motions due to their observed upper-limb tremors.

6.8. Future Research Directions

Future research will include longer-term studies to investigate health outcomes in long-term care with an autonomous exercise robot, as well as the impact of different robot platforms with varying appearances and functionality on exercise compliance and engagement. Literature has shown that upper-limb exercises can provide benefits in functional capacity, inspiratory muscle strength, motor performance, range of motion, and cardiovascular performance [109,110,111]. It will be worth exploring whether robot-facilitated exercise can achieve the same outcomes.

Robot attributes such as size and type, adaptive (also reported in [112]) and emotional (also reported in [113,114]) behaviors, and physical embodiment [7] can influence robot performance and acceptance when interacting with users. Thus, these factors should be further investigated for robot exercise facilitation with older adults.

Furthermore, we will investigate using robots to assist with other needed activities of daily living and study their benefits, with the aim of improving the quality of life of older adults in long-term care home settings.

7. Conclusions

In this paper, we present the first long-term exploratory HRI study conducted with an autonomous socially assistive robot and older adults at a local long-term care facility to investigate the benefits of one-on-one and group exercise interactions. Results showed that participants, in general, had both measured and self-reported positive valence and had an overall positive experience. Participants in both types of interactions had high acceptance of the robot and high perceived usefulness and ease of use of the robot. However, the participants in the one-on-one sessions perceived the robot as more sociable and intelligent and had higher ratings for the robot’s appearance and its movements than those in the group sessions.

Author Contributions

Conceptualization, M.S. (Mingyang Shao), S.F.D.R.A. and G.N.; Methodology, M.S. (Mingyang Shao), S.F.D.R.A. and G.N.; Software, M.S. (Mingyang Shao), S.F.D.R.A. and G.N.; Validation, M.S. (Mingyang Shao), M.P.-H., K.E. and G.N.; Formal analysis, M.S. (Mingyang Shao), M.P.-H. and G.N.; Investigation, M.S. (Mingyang Shao), M.P.-H. and G.N.; Resources, M.S. (Matt Snyder) and G.N.; Data curation, M.S. (Mingyang Shao) and S.F.D.R.A.; Writing—original draft preparation, M.S. (Mingyang Shao) and G.N.; Writing—review and editing, M.S. (Mingyang Shao), M.P.-H., M.S. (Matt Snyder), K.E., B.B. and G.N.; Visualization, M.S. (Mingyang Shao), S.F.D.R.A. and G.N.; Supervision, G.N. and B.B.; Project administration, G.N.; Funding acquisition, G.N. and B.B. All authors have read and agreed to the published version of the manuscript.

Funding