Motif2Mol: Prediction of New Active Compounds Based on Sequence Motifs of Ligand Binding Sites in Proteins Using a Biochemical Language Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Methodological Concept

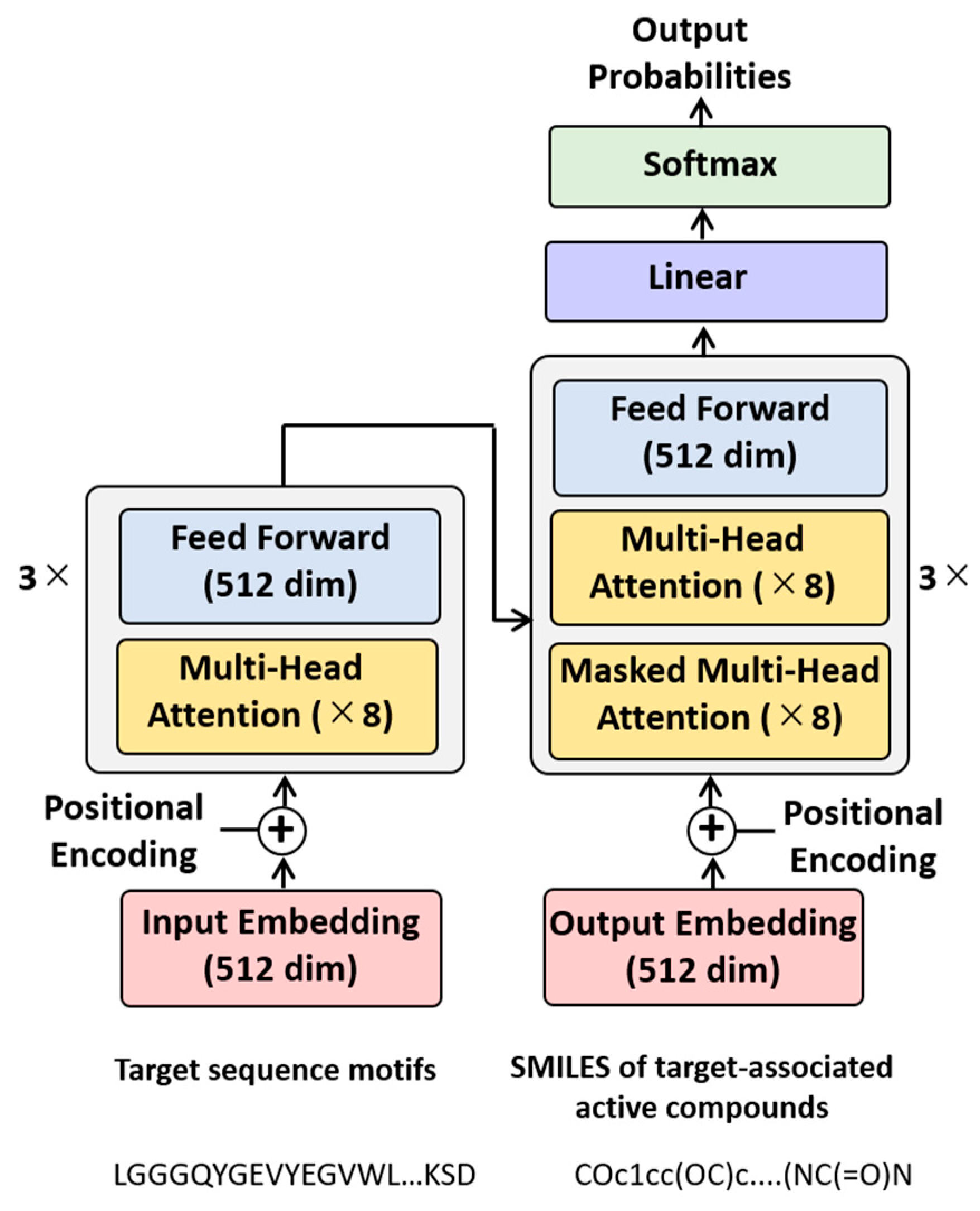

2.2. Model Architecture

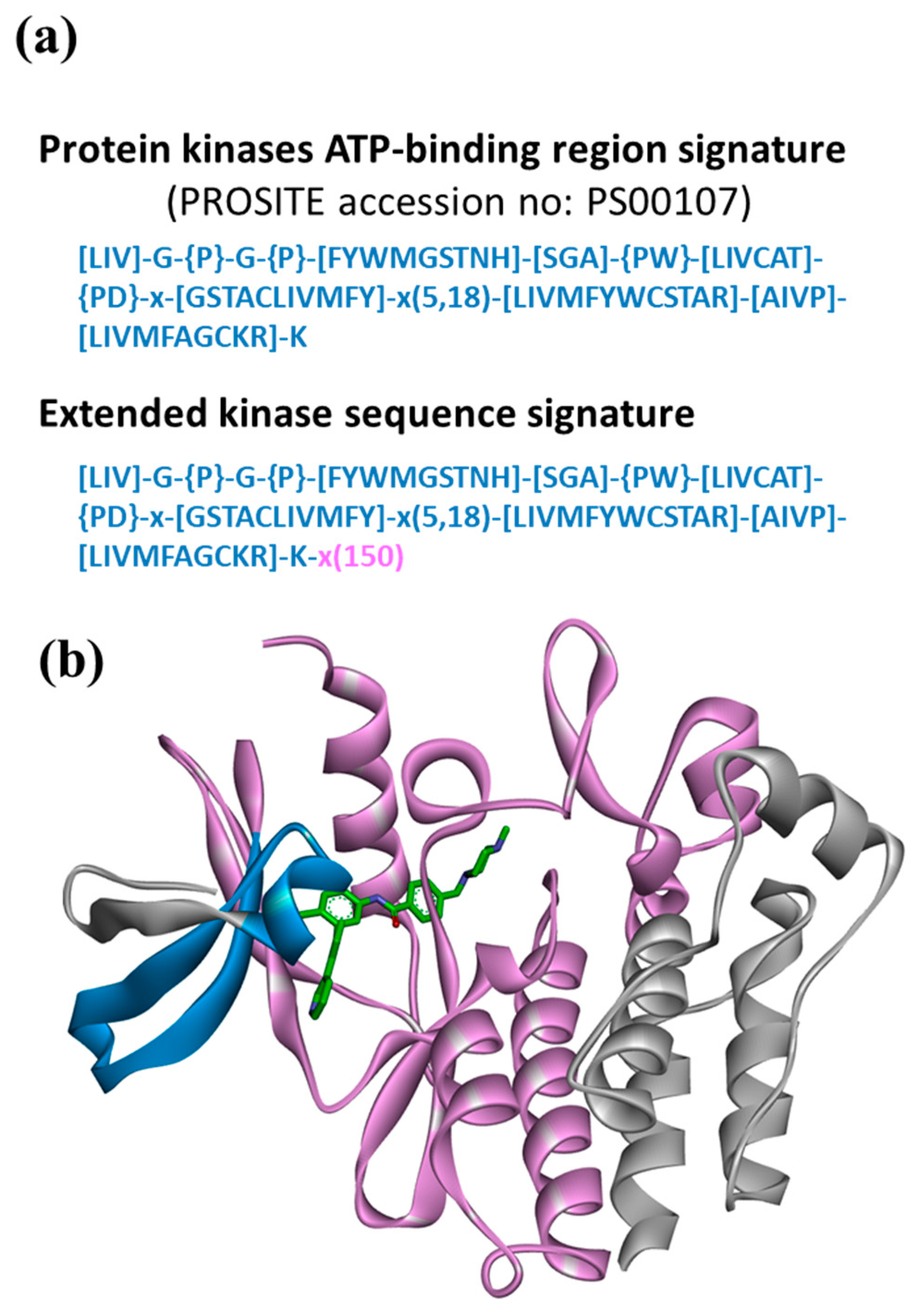

2.3. Proof-of-Concept Application

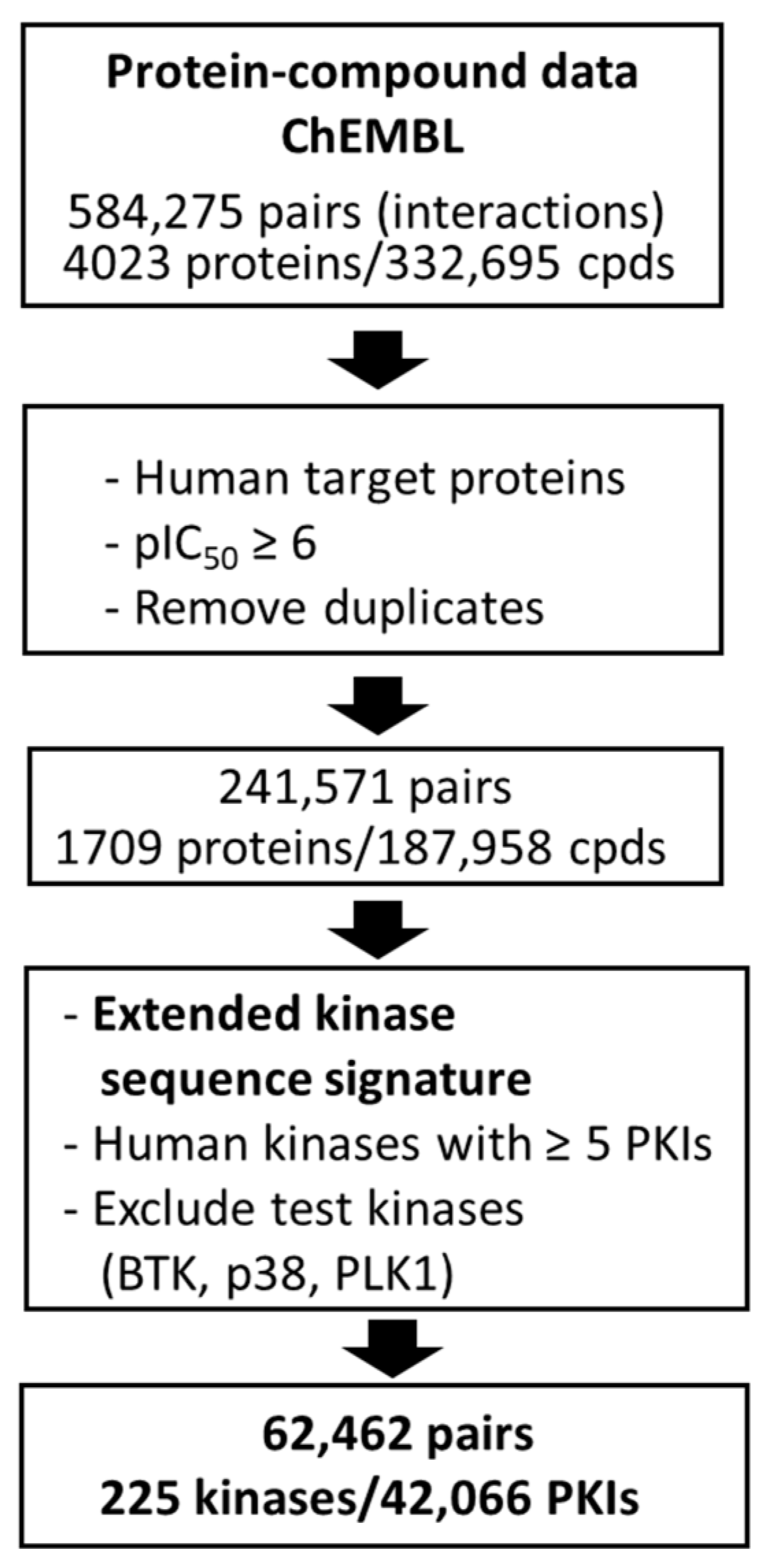

2.4. Model Derivation

2.5. Generation of New Candidate Compounds

2.6. Sequence Comparison

3. Results and Discussion

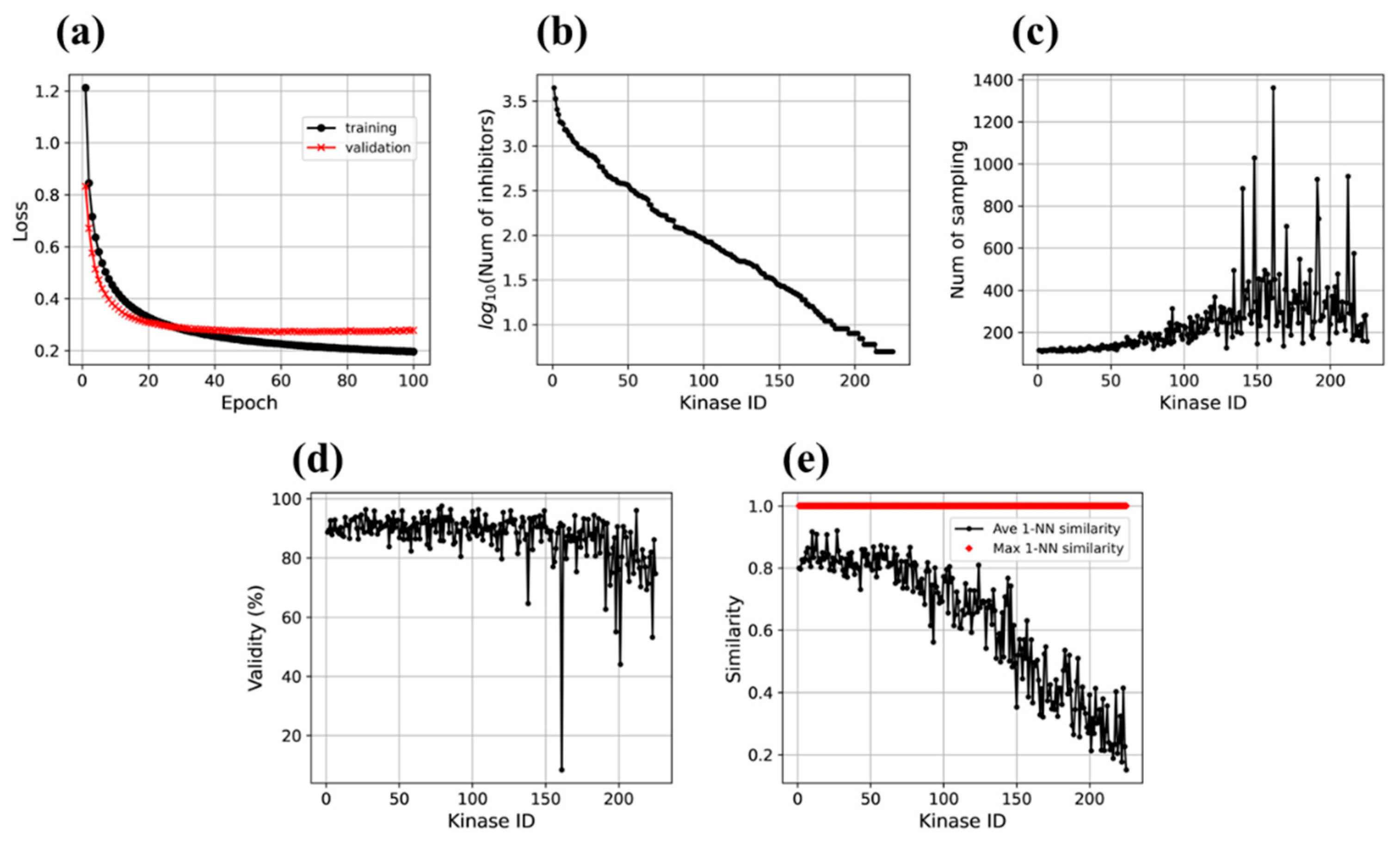

3.1. Motif2Mol Model Evaluation and Performance

3.2. Validity of Generated Molecular Representations

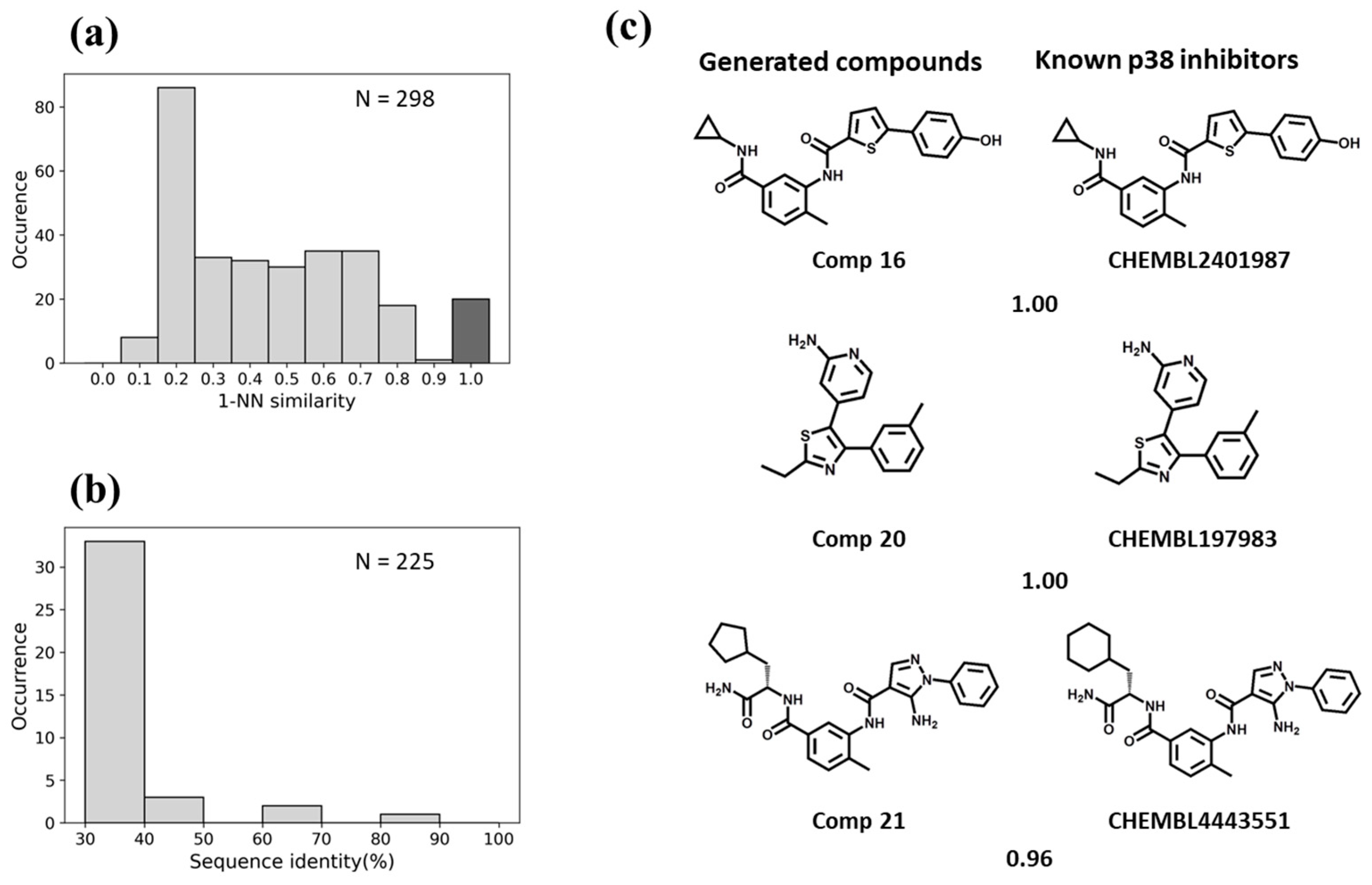

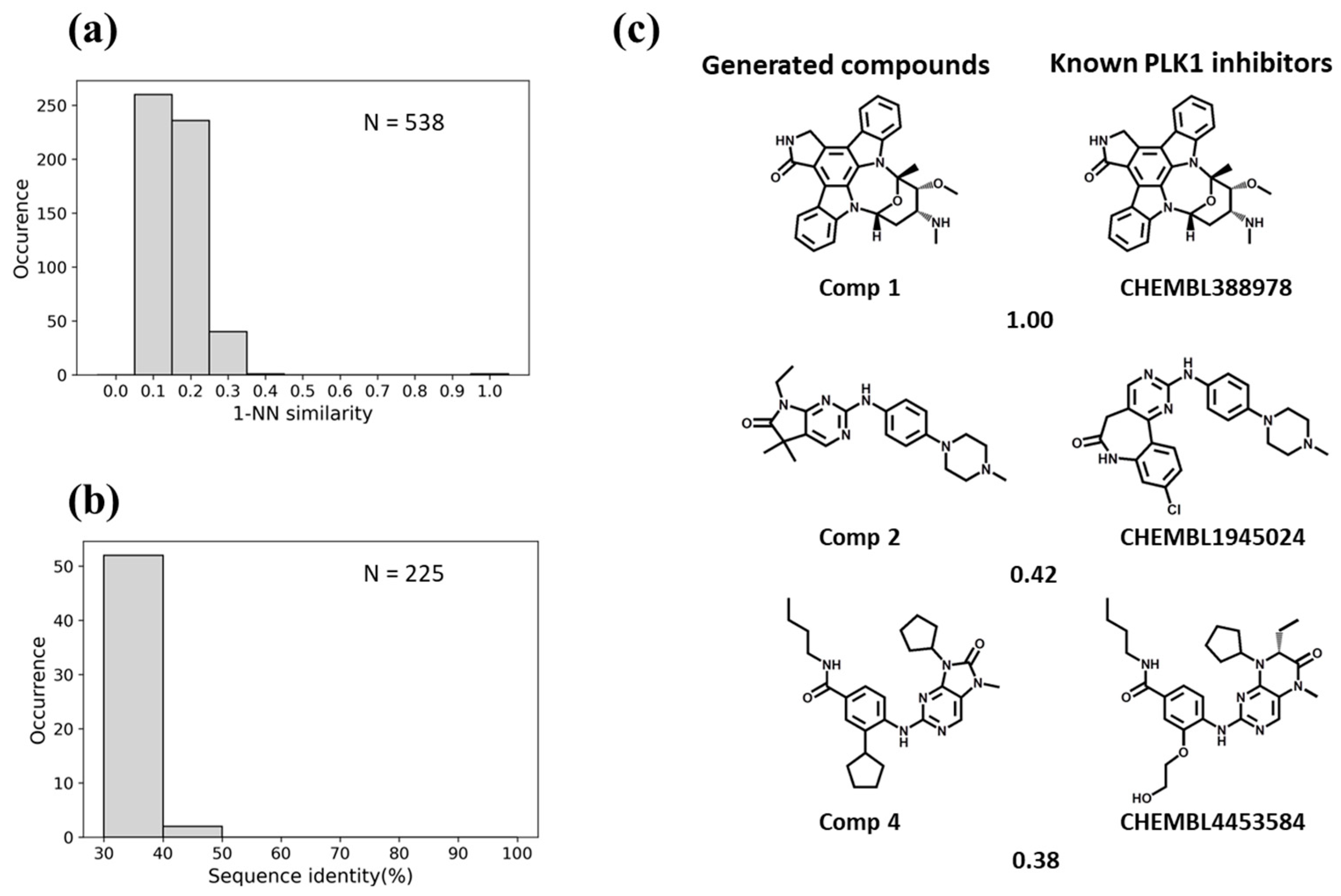

3.3. Similarity Analysis

3.4. Predictions for Test Kinases

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yoshimori, A.; Bajorath, J. Motif2Mol: Prediction of New Active Compounds Based on Sequence Motifs of Ligand Binding Sites in Proteins Using a Biochemical Language Model. Biomolecules 2023, 13, 833. https://doi.org/10.3390/biom13050833
