1. Introduction

Neural networks (NNs) have enabled unparalleled speed and automation in many fields like natural language processing and computer vision and have achieved impressive feats in biology, such as AlphaFold solving challenges in protein folding prediction [

1]; however, these techniques have not gained much traction in the task of metabolite identification and quantification directly from nuclear magnetic resonance (NMR) spectra. NNs are especially promising for the field of NMR metabolomics, given the complexity of NMR spectra and the time and expertise required to process the spectrum into a list of quantified metabolites. NNs have seen scattered use for analyte quantification directly from spectra in scientific publications over the past three decades [

2,

3,

4], and a recent resurgence [

5,

6,

7] has been driven by improvements and innovation in artificial intelligence techniques, computing power, and NMR spectroscopy. This study aims to improve the utility of NNs for NMR metabolomics profiling in complex samples with a high dynamic range of concentrations by investigating methods in dataset development, NN architecture, and model training.

In NMR-based metabolomics research, metabolite identification and quantification are often tedious tasks involving several manual steps. A fully manual process involving resonance deconvolution can easily take up to an hour for complex samples and is limited by human errors. Even if assisted by the popular lineshape fitting software, Chenomx [

8] (

https://www.chenomx.com, (accessed on 10 January 2025)), it can take up to 40 min to process a complex sample, and the semi-automated version of Chenomx can speed this up to ~1 min per spectrum at the cost of reduced accuracy [

9]. Fully automated software like MagMet (

https://www.magmet.ca, accessed on 4 March 2024) can quantify metabolites in serum or plasma in ~2–3 min [

9]. However, none of these approaches used in NMR metabolomics research can match the speed and scalability of a popular class of machine learning algorithms, NNs, which have been used to quantify the lipid profile in 5000 spectra in under 1 s [

7]. NNs are fully automated, rapid, and scalable technologies that can be applied for processing NMR data. NNs have already been applied to a variety of NMR processing tasks, including denoising spectra [

10], reconstructing non-uniformly sampled data [

11,

12], resonance characterization [

13,

14], and analyte quantification [

5,

6,

7]. In the task of analyte quantification, networks such as multi-layered perceptrons (MLPs) [

5,

7], convolutional neural networks (CNNs) [

5], and convolutional recurrent neural networks (CRNNs) [

6] have been explored in a few studies to date; however, the application of NNs in metabolite profiling has been limited compared to software using statistical lineshape fitting approaches for metabolite profiling [

9,

15,

16,

17,

18,

19,

20,

21,

22]. In this research, we intensively investigate methods applying NNs to metabolite profiling to determine good practices for accurate quantification. We apply MLP and CNN models for metabolite quantification in simulated 1D

1H-NMR spectra of complex mixtures. We also implement a NN architecture, the transformer, which has proven exceptionally proficient at a large variety of complex tasks like natural language processing [

23] and vision tasks [

24], however, it has not yet been investigated in the context of analyte quantification for spectroscopic data.

For any NN, there are many considerations that affect the model’s performance. The dataset used to train a model is of major importance. In this study, we examine how varying concentration distributions used for model training affect the accuracy of NNs and further explore dataset modifications like varying the number of metabolites per spectra, log transforming spectral intensities, and more. As model architecture and training parameters also significantly drive model performance, we employ Bayesian hyperparameter optimization to improve the architectures as well as learning parameters for the MLP, CNN, and transformer models.

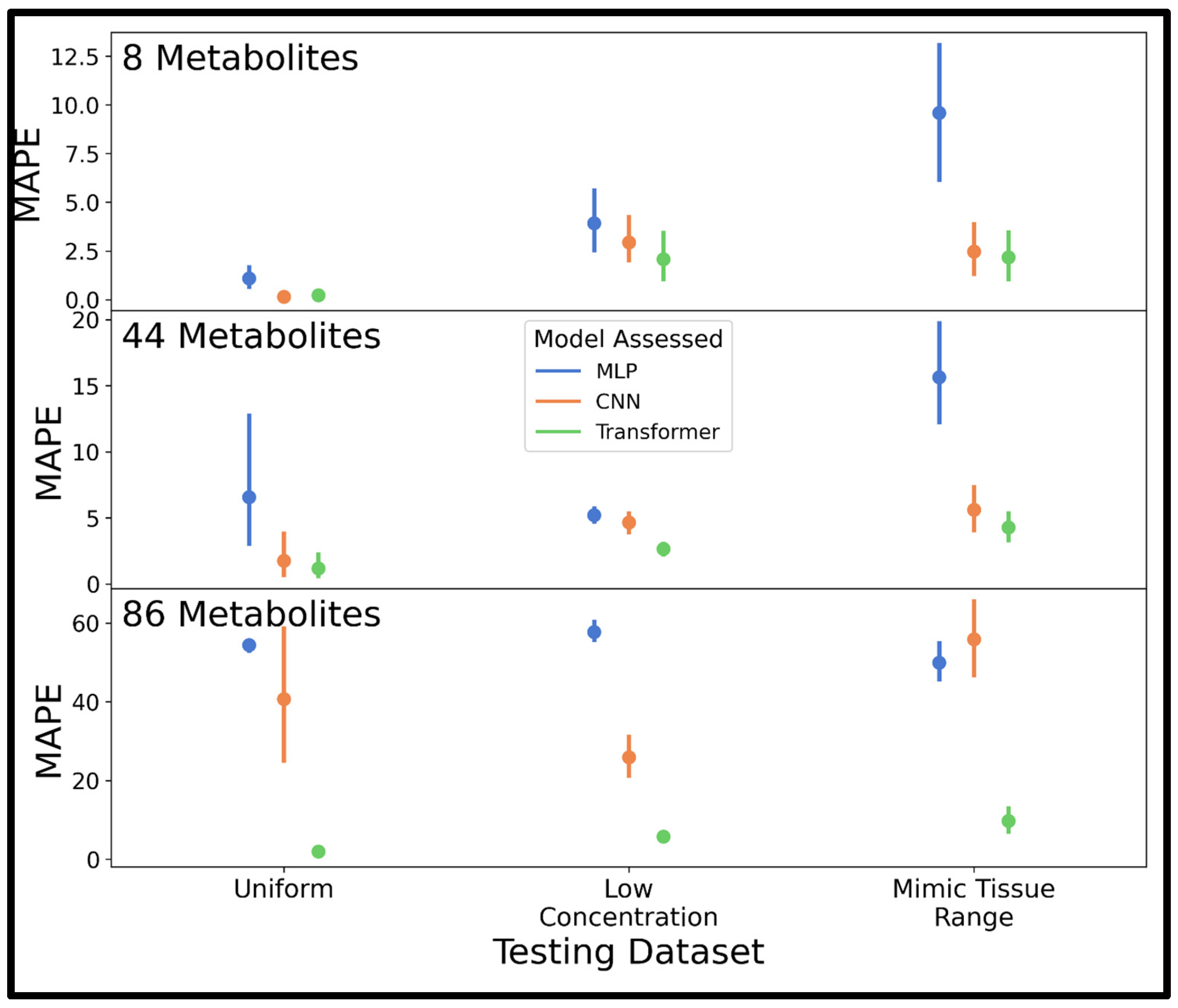

This study explores whether the MLP, CNN, or transformer is best suited for analyte quantification in 1D NMR spectra and validates some good practices in dataset development and parameter selection for NNs as applied to NMR-based metabolomics. The models developed throughout this study on simulated 400-MHz data are further validated in spectra of varying complexity (8, 44, and 86 metabolites—representing a range from simple spectra to the upper end of quantified metabolites seen in

1H-NMR metabolomics studies) and on simulated 100-MHz spectra and 800-MHz spectra, which are relevant with the rise of benchtop instruments [

25,

26,

27] and availability of more high-field NMR spectrometers, respectively. The methods developed in this study are presented as a general workflow for developing NNs for NMR metabolite profiling, and suggestions are provided in the discussion for further improving and adapting this workflow for application on experimental NMR spectra. The results of this study are promising and show NNs can be extremely rapid and accurate for the quantification of analytes in highly complex NMR spectra, but there are limitations provided in the discussion that need to be considered and overcome if NNs are to become the tool of choice for quantitative NMR metabolomics practitioners.

2. Methods

To explore best practices in developing accurate neural networks for quantification of analytes directly from 1D

1H-NMR spectra, we developed networks using three promising architectures, the MLP, CNN, and transformer and examined model performance while varying dataset concentration distributions, dataset modifications, and loss functions, respectively, before optimizing hyperparameters through Bayesian optimization. The best model was further validated on simulated spectra at low- and high-field magnetic field strengths. An overview of this entire process is given in

Figure 1.

2.1. Data Generation Methods

Simulated 400-MHz

1H-NMR spectra of complex metabolite mixtures were used to train neural networks for metabolite profiling. Mixture spectra were generated using simulated metabolite reference spectra downloaded from the human metabolome database (HMDB) [

https://hmdb.ca, accessed 1 February 2024] [

28]. Simulated spectra in the HMDB were calculated by predicting chemical shifts using HOSE-code (Hierarchically Ordered Spherical Environment code) and machine learning methods, estimating coupling with empirical rules, followed by spin matrix calculations [

28]. Signals for 87 metabolites commonly encountered in biological aqueous tissue extracts were downloaded (see

Table S1 in the Supplement). Representative spectra for these metabolites, along with a synthetic mixture spectrum containing all these metabolites, can be found in the

supplement as Figure S1 (with alpha-D-glucose and beta-D-glucose anomers combined into a single spectrum for a total of 86 metabolites). The testing spectra generated in this study are based on aqueous metabolite mixtures seen in animal tissues, and several measures were taken to promote realistic spectra, including maintaining concentration magnitudes and distributions seen in real metabolomics samples, maintaining a signal-to-noise ratio (SNR) similar to those measured in real spectra, incorporating interfering signals at random, incorporating experimental variations (in line-broadening, noise, chemical shifts, and baseline), and combining simulated spectra for glucose anomers into one unified glucose spectrum. Data processing in this study was achieved with Python [

29] (version 3.11.5) using the numpy [

30] library (version 1.24.3) for most operations on matrices and the nmrglue [

31] library (version 0.10) for NMR processing operations (file reading, shifting peaks, and Fourier transformations). Seaborn [

32] (version 0.12.2) was used to generate point plots (plots showing mean and 95% confidence interval). All computations in this study were executed using a Quadro RTX 6000 (Nvidia, Santa Clara, CA, USA) and two Xeon Silver 4216s processors (Intel, Santa Clara, CA, USA).

Spectra downloaded from the HMDB exhibit no noise and have resonance intensities that are consistent with proton numbers contributing to each resonance; thus, each simulated spectrum was assumed to be 1 mM as downloaded from the HMDB, and any scaling produced analyte concentrations with the same magnitude as the scalar. To mimic 3-(Trimethylsilyl)propionic-2,2,3,3-d4 (TSP-d4) salt, a singlet was added at 0.0 ppm and scaled to a concentration of 0.3 mM (for 9 protons) in all spectra generated throughout the study.

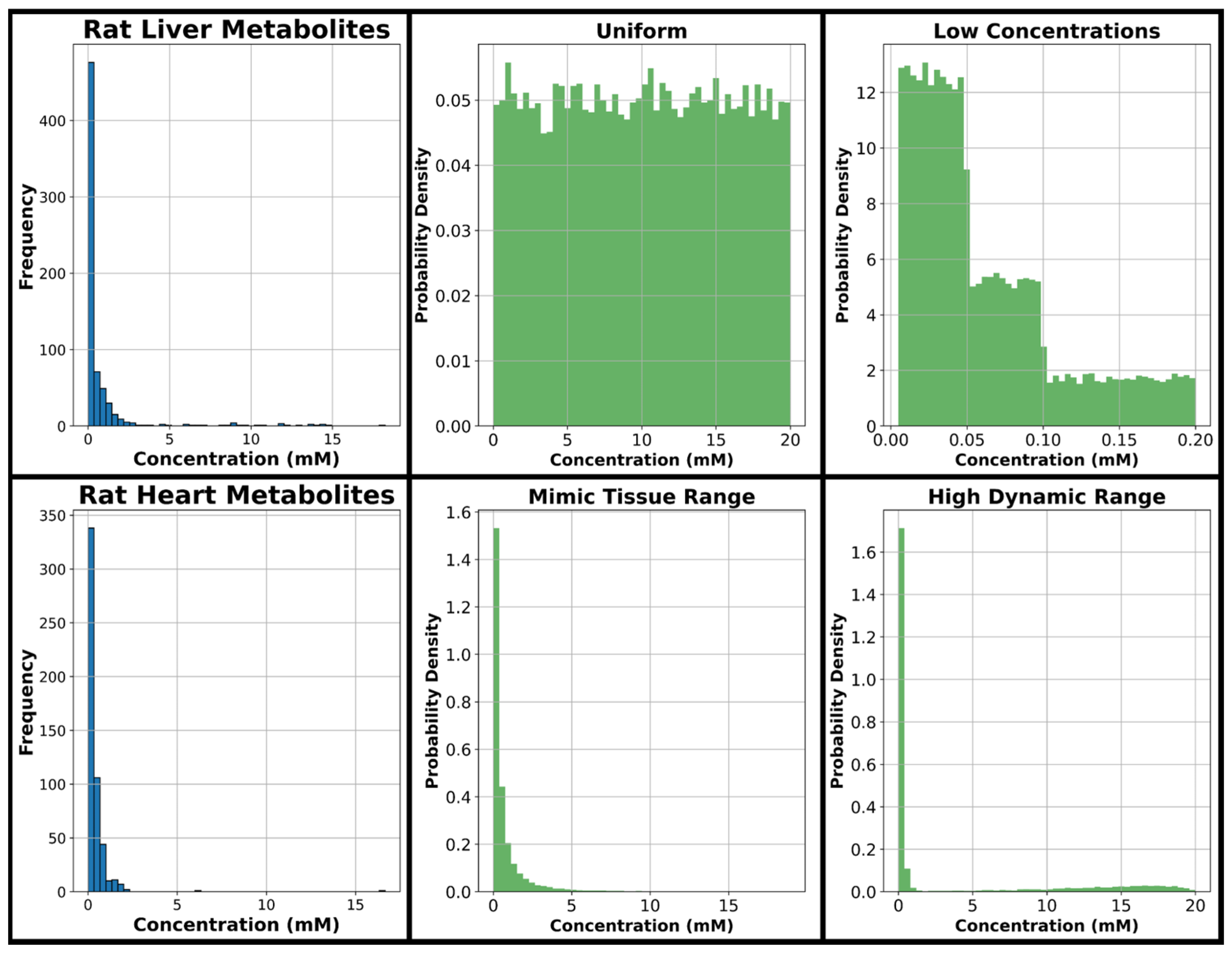

The range of concentrations considered in data generation was 0.005–20 mM based on our previous experimental work in rat liver and heart [

33] tissue extracts measured using a 400-MHz NMR spectrometer. Histograms displaying metabolite concentration frequencies for murine liver and heart tissue samples in our previous study can be seen in

Figure 2, along with four histograms displaying four concentration distributions utilized in this study. The concentration distribution the model sees in training is expected to have a major effect on model performance; therefore, for model training, a uniform distribution is considered covering the entire range of metabolite concentrations evaluated in this study, and several further concentration distributions are considered emphasizing the lower concentrations common in metabolomics spectra which are expected to be more difficult for quantification given their relatively small size in the overall spectra. One distribution is considered with exclusively low concentrations, while two different distributions with a large dynamic range emphasizing low concentrations are evaluated given they are expected to more closely resemble NMR metabolomics spectra of tissue extracts.

The four green histograms in

Figure 2 are the major concentration distributions used in generating training, testing, and validation data throughout this manuscript. The distribution referred to as “uniform” in this manuscript uniformly encompasses the full range from 0.005 to 20 mM, and this uniform approach has been used in several previous NN analyte profiling studies [

5,

7]. The distribution referred to as “low concentration” in this manuscript is an evenly split combination of three uniform distributions that each begin at 0.005 mM and extend to 0.05, 0.1, and 0.2 mM, respectively. Two distributions were created with concentration distributions similar to those seen in tissues where several metabolites have relatively high concentrations and most have much lower concentrations, an approach similar to the one taken by Wang et al. [

6]. The first is referred to as “mimic tissue range” and is a combination of two log-normal distributions, and the second is referred to as “high dynamic range” and is a combination of two gamma distributions and one uniform distribution. The code used to generate each of these four distributions is found in this project’s GitHub repository (

https://github.com/tpirneni/DL-NMR/blob/main/DefineDistributions.ipynb, accessed 10 January 2025). A fifth concentration distribution was developed by using all four of the aforementioned distributions, and this is referred to as the “combined distribution” (i.e., for the combined distribution, 25% of samples are generated using the uniform distribution, 25% are generated using the low concentration distribution, 25% come from the mimic tissue range distribution, and 25% come from the high dynamic range distribution). All five concentration distributions are used to produce training and validation datasets in this study. The uniform, low concentration, and mimic tissue range distributions are used to generate testing datasets of 10 spectra each throughout this study for comparing model accuracies.

For determining realistic SNR magnitude, two aqueous reference standards were weighed targeting concentrations near 1 mM, prepared for NMR in a solution of deuterium oxide buffered to a pH of ~7.4, and scanned on a 400-MHz JEOL NMR spectrometer using a 1D-NOESY pulse sequence with 32 averages, water pre-saturation, a pulse angle of 90°, and a relaxation delay of 15 s. Receiver gain was set at a level to accommodate metabolite concentrations of at least 20 mM for maintaining a realistic SNR dynamic range (determined using autogain in preliminary experiments). Choline (as choline chloride) was purchased from Acros Organics (Geel, Belgium) and was prepared at a concentration of 0.92 mM, and creatine (as creatine monohydrate) was purchased from Acros Organics and was prepared at 1.0 mM. SNR was determined for these experimentally acquired scans by dividing the height of the tallest peak by the standard deviation of the noise. Simulated spectra of choline (scaled by 0.92) and creatine (unscaled) were subjected to the addition of various levels of normally distributed noise until similar SNR values were achieved, and the comparison between experimental and simulated spectra along with computed SNRs can be seen in

Supplementary Figure S2.

A data augmentation workflow was implemented for all spectra generated to produce greater variation in the datasets and imitate potential experimentally encountered signal variations, including adding normally distributed noise (magnitude ranging from 30 to 115% of the noise level determined previously using experimental choline and creatine spectra), varying line-broadening (using exponential apodization values ranging uniformly from 0 to 1), slightly shifting entire metabolite signals along the chemical shift axis (ranging uniformly from 0 to 3.4 ppb left or right per analyte), slightly shifting the baseline up or down (up to ~5.6% of the reference TSP-d4 peak height), and the addition of up to three singlets at random chemical shifts scaled using a gamma distribution promoting mostly concentrations under 5 mM with a mean of 0.85 mM (using the acetic acid signal for a generic singlet, see interference concentration distribution in

https://github.com/tpirneni/DL-NMR/blob/main/DefineDistributions.ipynb, accessed 10 January 2025). Many of these modifications can be seen in

Supplementary Figures S3–S5, which show zoomed-in 100-, 400-, and 800-MHz spectra, respectively, of overlapping 1 mM NADP and nicotinic acid mononucleotide resonances expressing many of the augmentations applied above independently (max peak shift, max noise added, min noise added, max line-broadening, and max base-shift). The following three figures in the

Supplement (Figures S6–S8) display the full spectra of 44 metabolites with the same modifications as in the preceding figures. As D-glucose in aqueous solutions typically reaches an equilibrium of ~36% alpha-D-glucose and ~64% beta-D-glucose [

34], we scaled the simulated spectra of these anomers by 0.36 and 0.64, respectively, before combining them into a single simulated glucose spectrum which was used in generating training, testing, and validation data. For all metabolites, only the region from −0.32 through 10.21 ppm containing 46,000 data points is considered for data generation.

A total of 44 metabolites are considered analytes in this manuscript (see 45 mentioned in

Supplementary Table S1, with two glucose anomers combined). All spectra are scaled to intensity values between zero and one by dividing all training, testing, and validation spectra by the maximum intensity value determined per dataset generated. A brief demonstration of the general data generation workflow with several spectra displayed can be found in the README.md file of this project’s GitHub page (

https://github.com/tpirneni/DL-NMR, accessed 10 January 2025). All datasets are split 80:20 for training and validation, respectively.

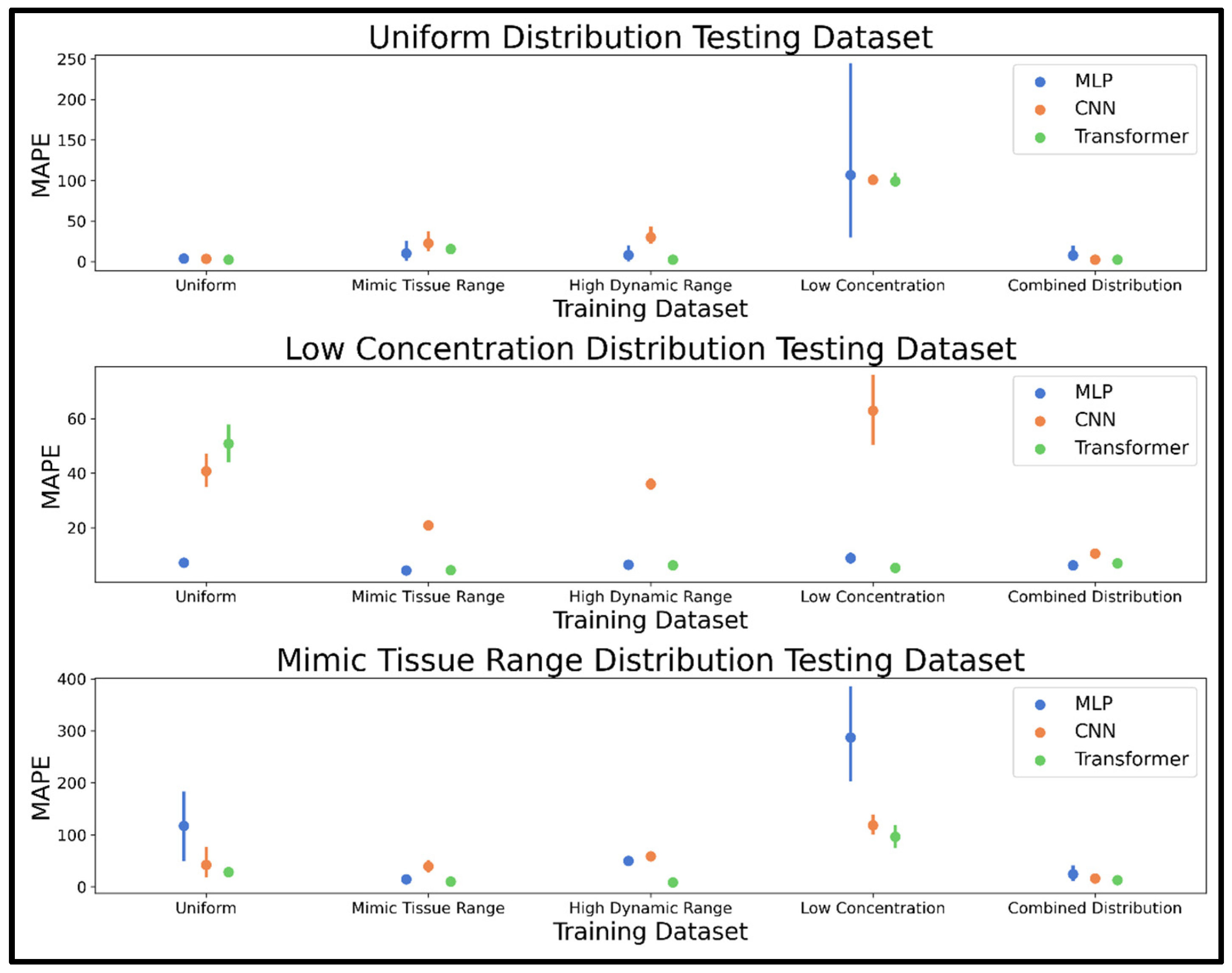

2.3. Dataset Development Phase

As the performance of any neural network depends heavily on the training dataset, we performed an investigation of how varying the concentration distribution and otherwise modifying the training dataset might affect model performances. Concentration distributions were explored first by training the MLP, CNN, and transformer models on datasets using the uniform, low concentration, mimic tissue range, high dynamic range, and combined distribution. Five training and validation datasets of 20,000 spectra were generated by scaling the 44 analytes using scalars pulled from one of the five above-mentioned distributions (one dataset per distribution: uniform, low concentration, mimic tissue range, high dynamic range, and combined distribution). For each dataset, 10,000 spectra included all 44 analytes every time, while 10,000 spectra had a 50% chance to leave out any analyte. Ten testing spectra were generated using the uniform, low concentration, and mimic tissue range distributions, and all 44 metabolites are included to facilitate computation of mean absolute percent error (MAPE), which is used as the metric for comparing model performances on the testing spectra, with mean MAPE used to determine the best performing models.

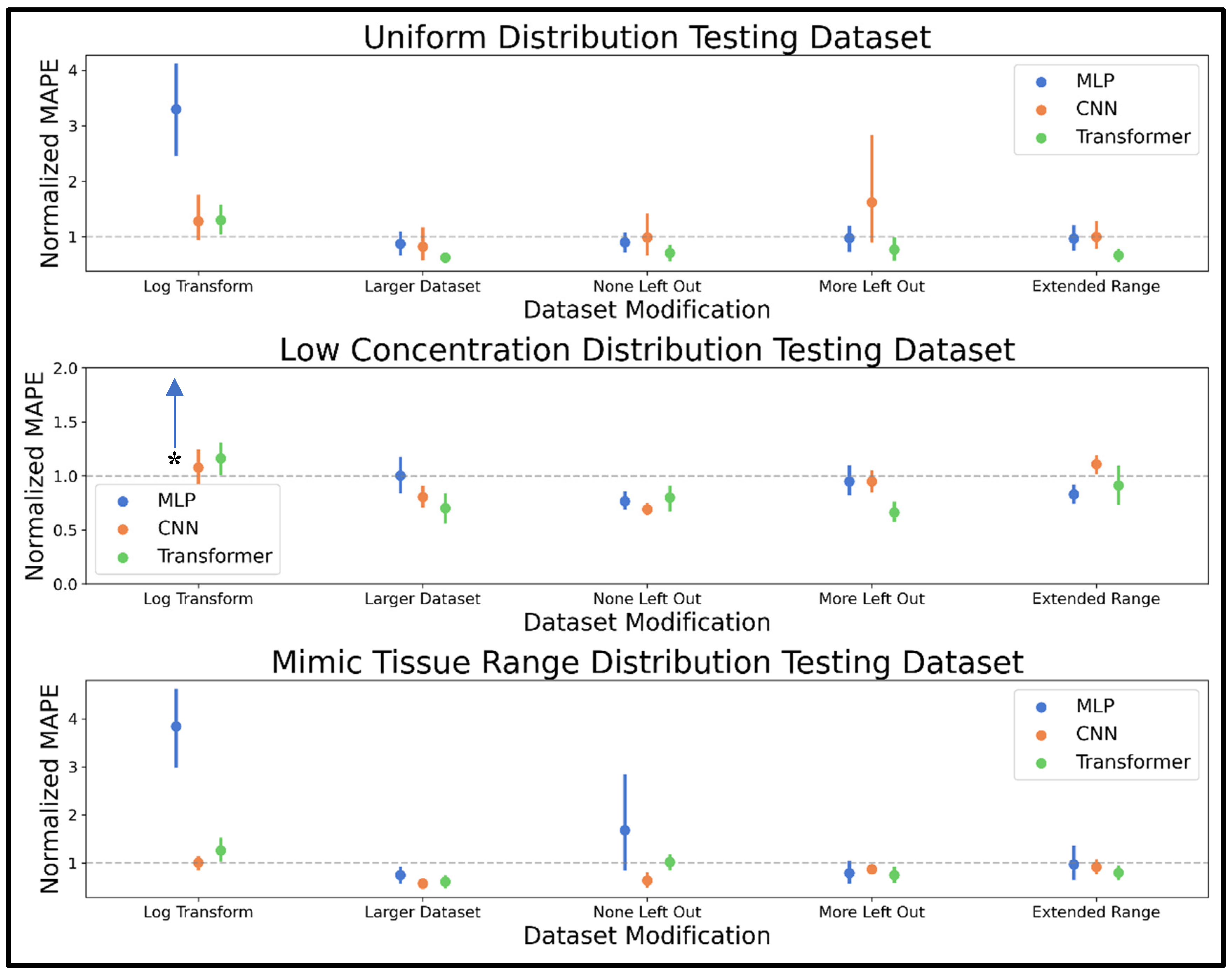

After comparing concentration distributions, the best distribution was used to compare several dataset modifications for their effects on accuracy for the MLP, CNN, and transformer models. The first modification applied to the training dataset was the log-transformation which was applied to all spectra as a means of decreasing the dynamic range of intensities between the largest and smallest peaks. The transformation is described by the equation,

, where ‘

L’ represents the log-transformed spectra intensity and ‘

S’ is intensity in the original spectrum; an example of this transformation is shown in

Supplementary Figure S10.

The second dataset modification involved simply increasing the dataset size from 20,000 to 50,000. The third dataset modification was to not leave any metabolites out of the spectra (as opposed to 10,000 spectra having a 50% chance to leave out any metabolite). The fourth dataset modification was to leave out even more metabolites by having 1/3 of spectra, including all 44 metabolites, 1/3 of spectra having a 50% chance to leave out any metabolite, and 1/3 of spectra having a 75% chance to leave out any metabolite. The fifth dataset modification examined was extending the upper limit of the concentration range from 20 mM to 24 mM (i.e., generating data within the range 0.005 to 24 mM rather than 0.005 to 20 mM). MAPE determined for each testing example in the uniform, low concentration, and mimic tissue range test spectra were normalized by the MAPE determined by the respective model prior to dataset modification (i.e., MAPE determined on the uniform test spectra using the MLP trained on log-transformed is divided by the test MAPE determined by the MLP trained on the unmodified dataset, and so on for all models and testing examples). In this way, modifications with a mean normalized MAPE lower than 1.0 improved performance, and modifications with a mean normalized MAPE higher than 1.0 worsened performance.

4. Discussion

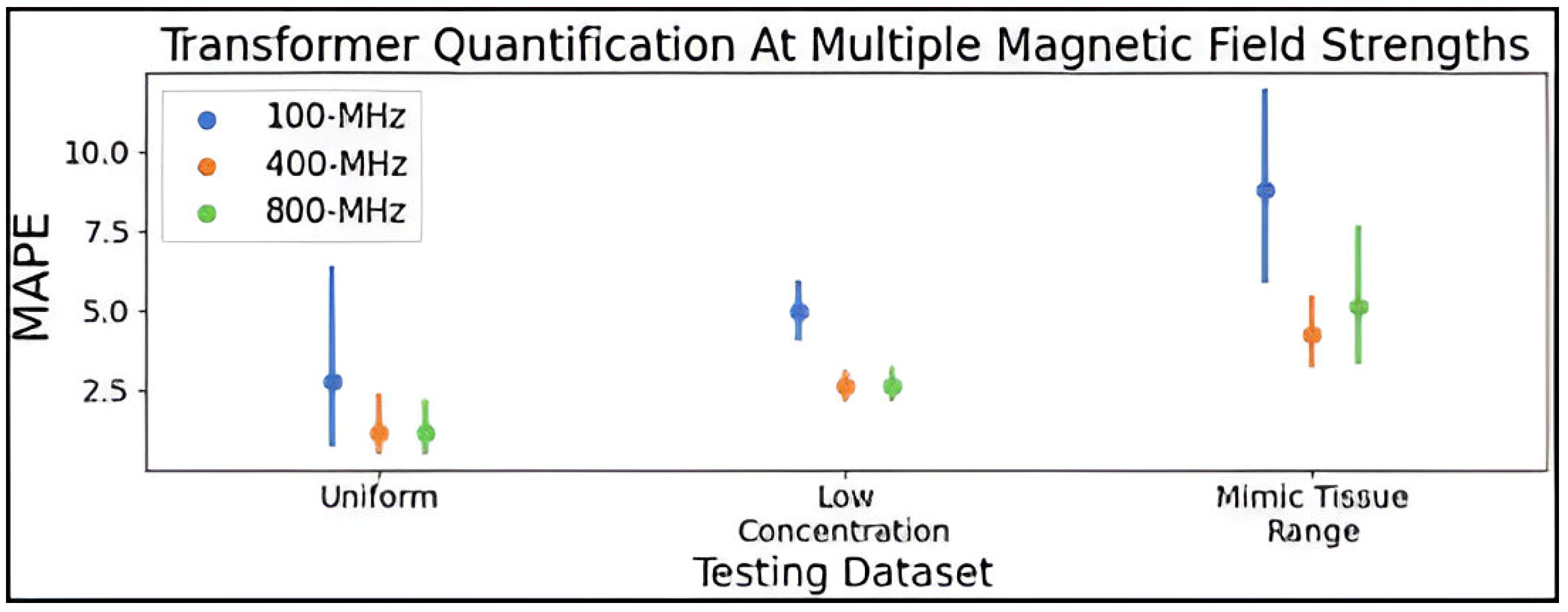

This research explored dataset considerations and parameter optimization for MLP, CNN, and transformer NNs for the task of quantifying analytes in 400-MHz 1D 1H-NMR spectra of metabolite mixtures. All three models were trained with various training dataset concentration distributions and dataset modifications before optimizing hyperparameters and finally deciding which model achieved superior performance. The best model was further assessed for quantification of metabolites at 100- and 800-MHz.

The concentration distribution phase of dataset exploration revealed that a combination of concentration distributions was effective in making all three NN models robust to various test spectra scenarios. This effect was strongest for the CNN, which performed optimally on all examples when using the combined distribution. The MLP and transformer both achieved high performance when trained using the mimic tissue range, high dynamic range, and combined distributions, especially the transformer model demonstrating the best performance overall. The combined distribution was selected moving forward given it was a consistently accurate method among the three models; although, for the MLP and transformer, the mimic tissue range and high dynamic range distributions should not be considered unpromising in future studies analyzing complex spectra with both very high and very low intensities. All three models were fast enough for real-time quantification of metabolites in NMR spectra, with each capable of profiling metabolites in 5000 spectra in milliseconds.

The dataset modification phase of training revealed several dataset alterations that improved analyte quantification accuracy for all or several NNs used in this study. As expected, increasing the dataset size improved performance for all models, especially the CNN and transformer, which conventionally perform well with very large datasets. The MLP saw mixed, non-conclusive results in the testing datasets after training on data modified to not leave out any metabolites or to leave out even more metabolites, while the CNN improved when no metabolites were left out, but the results were not significantly improved when leaving out more metabolites. The transformer improved when both leaving out more or not leaving out metabolites, a trend that may be because the test sets contain all 44 metabolites in all spectra to facilitate MAPE computation. Thus, training on all 44 metabolites every time is more representative of the test set, while training on the dataset where more metabolites are left out might promote model understanding of each metabolite’s signature chemical shifts and line shapes, given the increased potential for an absence of overlap. Extending the upper limit of the concentration range seen in training improved transformer performance without significantly impacting MLP and CNN accuracy. The log transformation was detrimental to the performance of all three models on all three testing datasets. In summary, the dataset modification experiments showed modifications like increasing dataset size, leaving out more metabolites, and extending the concentration range past the expected use range could improve NN performance.

Ten loss functions were assessed for model training in the task of analyte quantification, focusing on a variety of losses with different sensitivities to outliers and the scale of target values given the large range and expected distribution of concentrations generally encountered in NMR-based metabolomics studies. Results confirmed that RAE achieved the highest accuracy for the MLP and transformer, while quantile loss with q = 0.5 was effective for the CNN and transformer.

The final phase of NN development for all three models was hyperparameter optimization. Bayesian hyperparameter optimization results guided the model architectures and training parameters used to train an MLP, CNN, and transformer using a larger dataset than previously (250,000 compared to 20,000 or 50,000 used prior) for a final comparison to decide the most accurate model in spectra of varying complexity (8, 44, or 86 metabolites). Consistent with previous experiments in the current study, the transformer was the highest-performing model across all three testing sets regardless of spectral complexity, although the CNN was competitive at 8 and 44 analytes. These results agree with a trend observed in many applications where transformers are surpassing the benchmarks set by other NN architectures [

42,

43].

The hyperparameter-optimized transformer was additionally validated on spectra simulated at 100-MHz, relevant to benchtop NMR spectroscopy, and 800-MHz, relevant to high-resolution NMR spectroscopy. The transformer saw a modest decrease in performance in quantifying analytes in 100-MHz spectra compared to 400-MHz, while the model trained for quantification at 800-MHz was comparable to the network trained on 400-MHz data. This drop in accuracy at 100-MHz is likely due to the lower SNR and lower spectral resolution in low-field spectra where coupling constants increase beyond peak dispersion relative to the 400- and 800-MHz spectra. It is a promising result that the transformer could quantify 86 metabolites at 100-MHz with only a minor drop in accuracy compared to 800-MHz. It is possible that optimizing hyperparameters on 100- and 800-MHz spectra might improve performance beyond what was achieved using the parameters selected using 400-MHz spectra.

The results of this study emphasize important aspects of applying NNs for NMR metabolite profiling. The comparison of training dataset concentration distributions confirmed that training using samples drawn from multiple distributions as opposed to a single distribution leads to models that are more robust to varied input distributions. The dataset modification phase of model development confirmed that the proportion of analytes left out of samples in the training dataset affected model performance and that extending the concentration range of training data beyond the expected concentration range of inputs can improve quantitative performance. The comparison of optimized models, consistent with results throughout the study, revealed the transformer to be more effective than the MLP or CNN for analyte quantification in NMR spectra, especially as the complexity of spectra increases due to a larger number of metabolites. Finally, results show that the transformer approach can be effective at multiple field strengths, even at field strengths compatible with benchtop NMR.

This work presents a general workflow that could be used to train models for the quantification of NMR spectra, but this work also has important limitations. Despite efforts to promote realism in spectra, including residual protein/lipid signals and more realistic chemical shift dependencies (such as shifting metabolites/peaks based on temperature, pH, concentration, and metabolite interactions) would be invaluable to improving the approach. Improving chemical shift dependencies through NMR simulation methods, empirical rules applied during data augmentation, or otherwise is a critical next step if NNs like those designed in this study are to be applied to experimentally acquired NMR spectra. Using simulated spectra is a limitation compared to proving the method in experimentally acquired spectra; however, the simplicity and customizability afforded by simulated spectra are preferable for an extensive investigation of dataset and model development and validation as was performed in this study. The primary limitation of experimental data is that one cannot easily acquire enough spectra to train a model (tens of thousands to millions of correctly acquired spectra containing accurately weighted compounds). It is possible to obtain experimental spectra of metabolite reference standards individually and use these to generate data as performed in this study with simulated spectra. This would not overcome chemical shift dependencies as described above but could work for some well-defined systems, such as for NMR lipid profiling, as demonstrated in a previous study applying NNs to an experimentally acquired NMR metabolomics dataset [

7]. An alternative approach that could help overcome chemical shift dependency limitations could be to train a model using synthetic spectra, using either a similar generation method as used in this study, i.e., using experimental spectra of individual standards as in Johnson et al., which was successful in quantifying lipids in experimentally acquired spectra of hepatic tissue extracts [

7], or likely the best case would be using simulated spectra as in this study but with more accurate chemical shift dependencies incorporated and then to fine-tune the model on tens to hundreds of experimental spectra. In this way, one could train entirely on synthetic spectra and then adjust model parameters to achieve better performance on experimentally acquired spectra, which already manifest signal effects from pH, concentration, metabolite interaction effects, etc. Fine-tuning may even be a way to tailor quantification to a given scenario such as optimizing quantification for a single NMR instrument or a specific media.

To confirm the best practices determined in this manuscript, these methods should be validated in experimentally acquired metabolomics data. Further, benchmarking the performance against popular peak-fitting methods such as Chenomx is an important next step to confirming if NN methods are superior. To further improve applicability, it should be validated whether a NN such as a transformer could learn hundreds of potential metabolites and still maintain accuracy in examples comparable to those seen in this study (e.g., can a model learn 500 metabolites and maintain accuracy in realistic mixtures of ~30–80 metabolites).

Beyond the methods assessed in this work, further model architectures or modifications are worth consideration, such as encoder/decoder transformers, convolutional transformers, CRNNs, or ensembles of models (for averaging numerous models for predictions, or potentially to ensemble models aimed at different concentration ranges), and dataset modifications such as alternative concentration distributions, time/domain input data, and datasets with 1 million or more training spectra. With NNs, it is also possible to design a model that takes in multidimensional NMR spectra or a NN that takes in multiple spectra for a single metabolite quantification task (i.e., input 1D

1H,

13C, and

31P spectra to obtain a single metabolite profile for given sample). The attention mechanism could potentially be modified for improved performance, perhaps having all data points attend one another (computationally expensive) or some sort of learned or custom attention where all resonances of an analyte attend one another and potentially some overlapping signals among other data points. The attention mechanism could potentially be replaced by another mechanism, such as the recently introduced Hyena operators [

44]. Other model improvements might include using explainable artificial intelligence techniques to discern what input features were most important for analyte quantification [

45], or in the case of transformers, attention maps could serve a similar purpose [

46]. Adding a peak alignment step prior to input into the NN could potentially improve model accuracy and alignment could be a method to limit the detrimental effects of not incorporating chemical shift effects of temperature, pH, metabolite interactions, etc., in training data. The methods from this manuscript could alternatively be applied to other quantitative NMR spectroscopic analyses such as molecular weight determination [

27], or potentially to other forms of spectroscopy.