How to Improve Performance and Diversity of Government-Funded Research Institute Ecosystem? Focus on Result Sharing and Feedback Policy

Abstract

1. Introduction

2. Literature Review

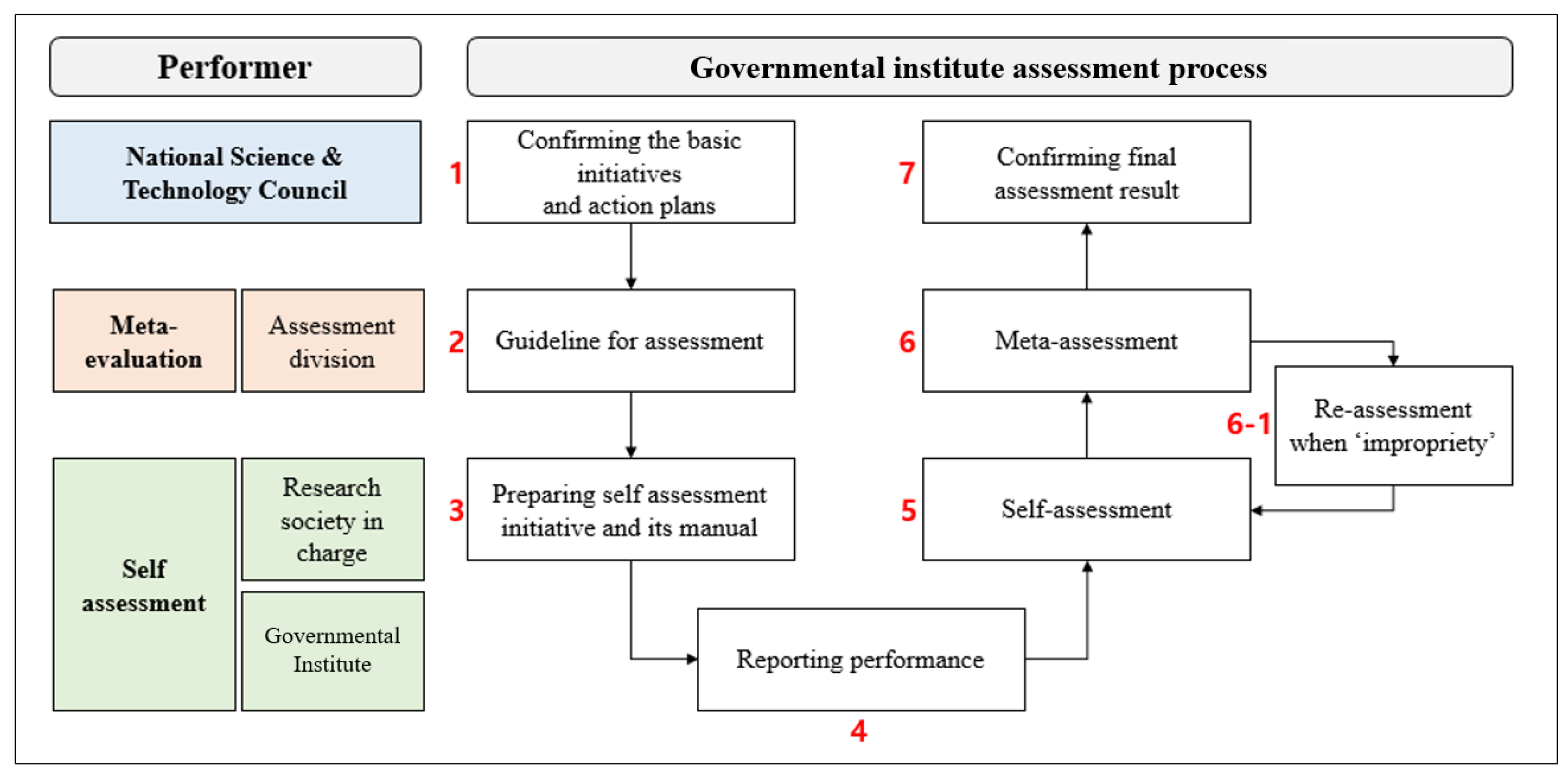

2.1. GI Assessment System and Its Critics

2.2. Organizational Feedback and Its Effect

3. Research Model

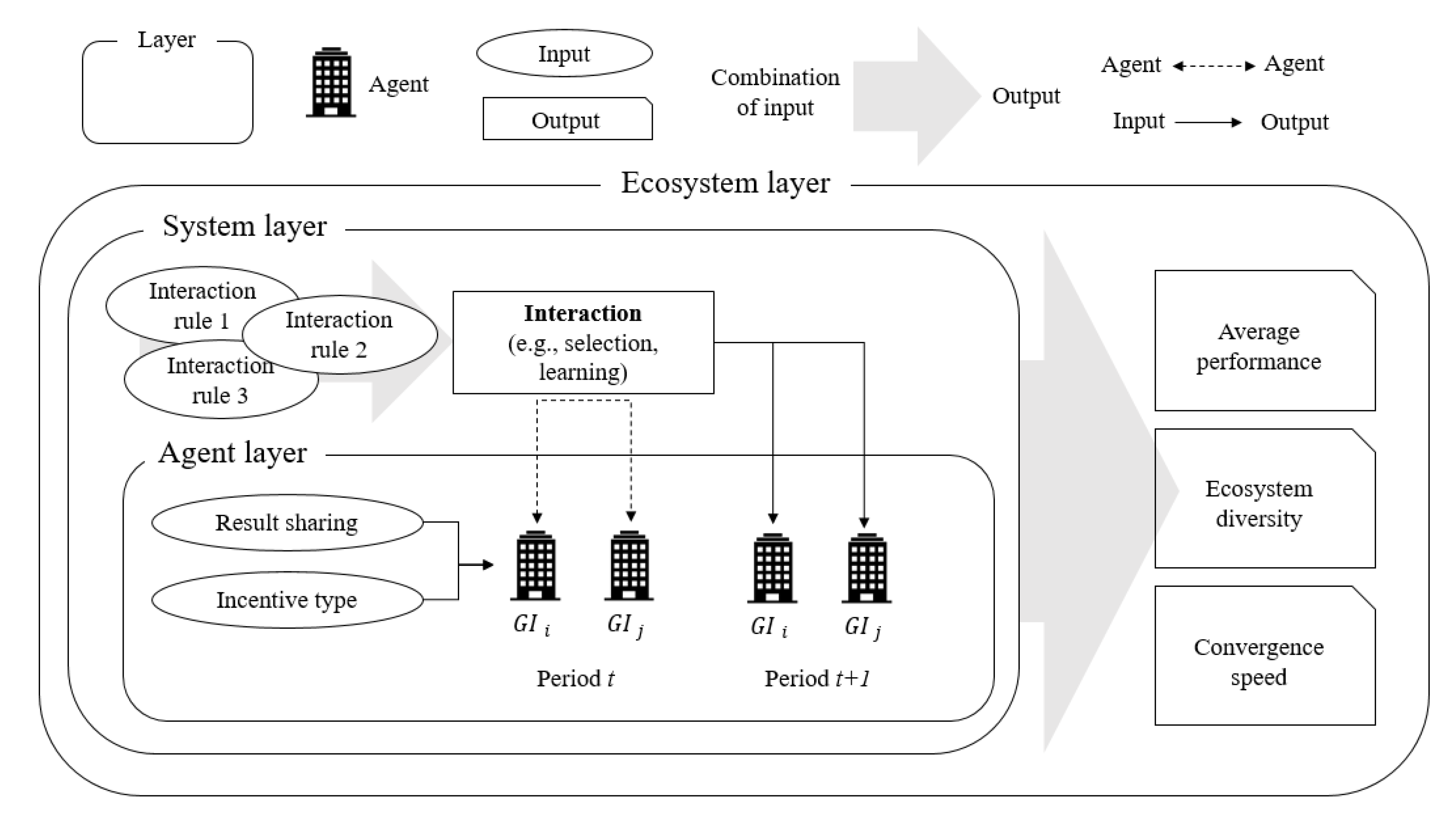

3.1. Agent Based Model Simulation (ABMS)

3.2. Model Layers

3.2.1. Agent Layer

3.2.2. System Layer

3.2.3. Ecosystem Layer

3.3. Experiment

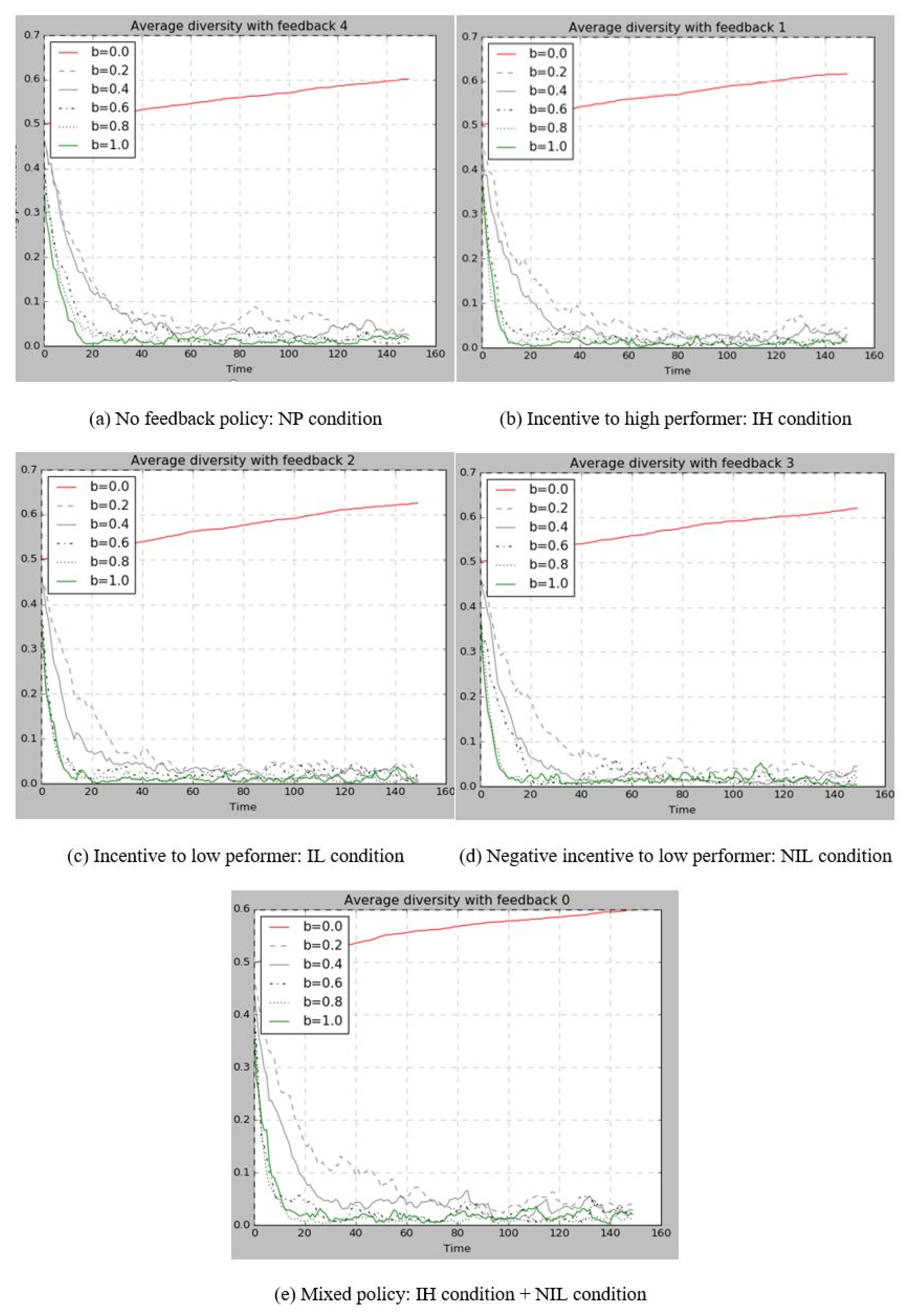

3.3.1. Experiment 1: No Policy

3.3.2. Experiment 2: Positive Feedback to the High-Performance Group

3.3.3. Experiment 3: Positive Feedback to the Low-Performance Group

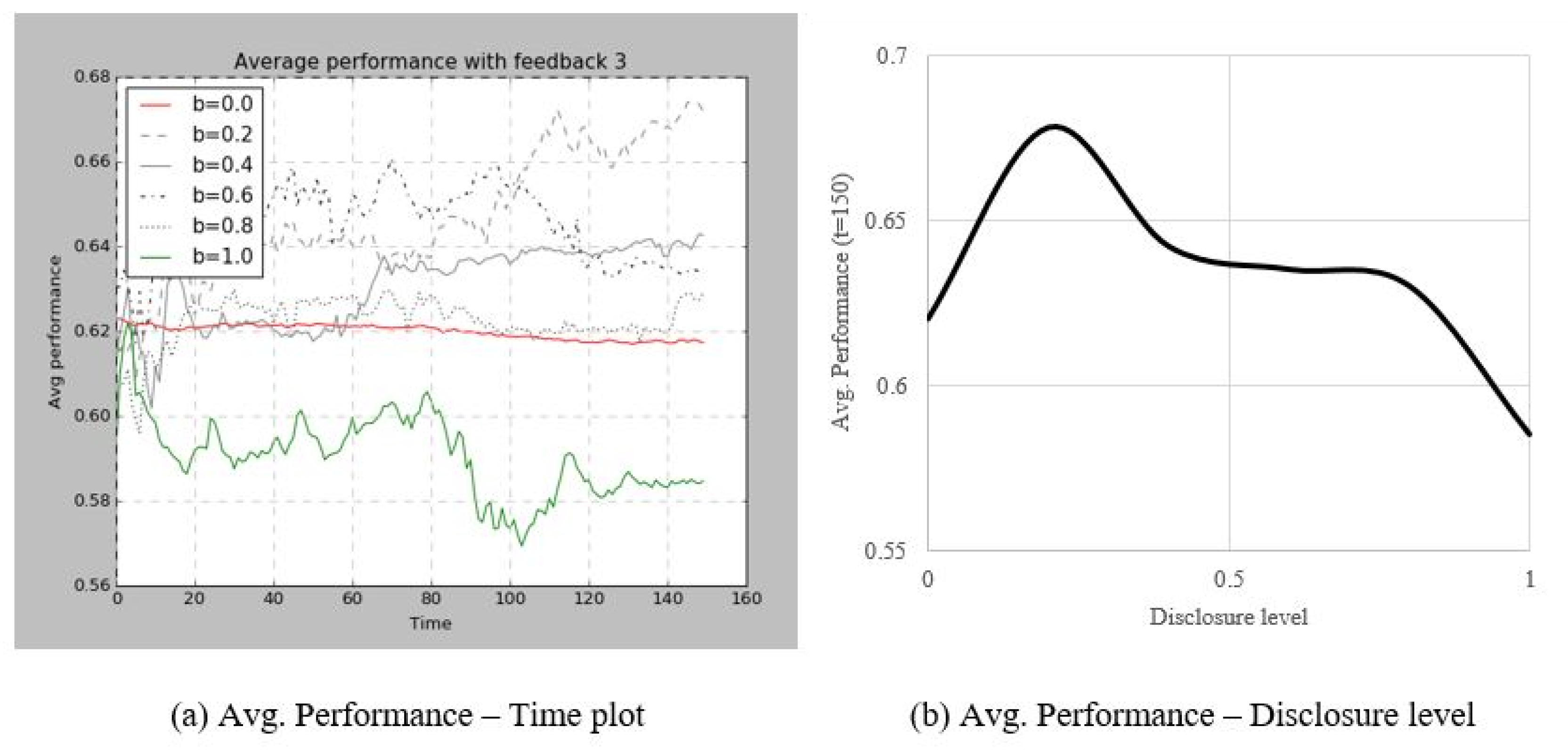

3.3.4. Experiment 4: Negative Feedback to the Low-Performance Group

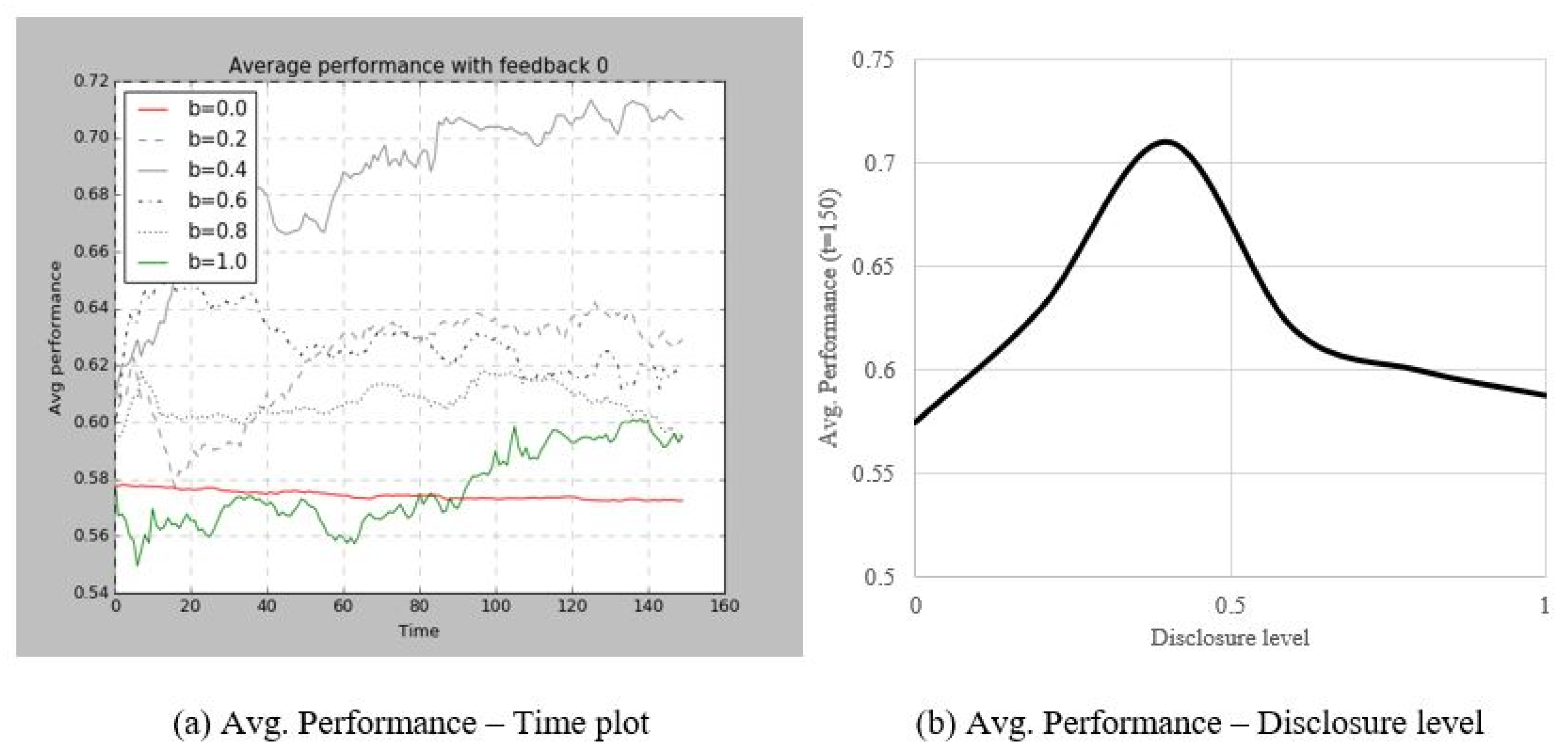

3.3.5. Experiment 5: Positive Feedback to the High-Performance Group and Negative Feedback to the Low-Performance Group (Mixed Policy)

4. Result

4.1. Average Performance

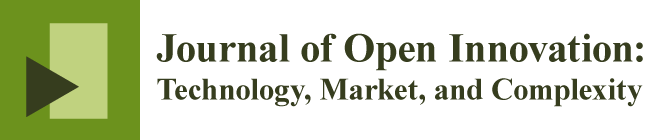

4.1.1. Experiment 1: No Policy

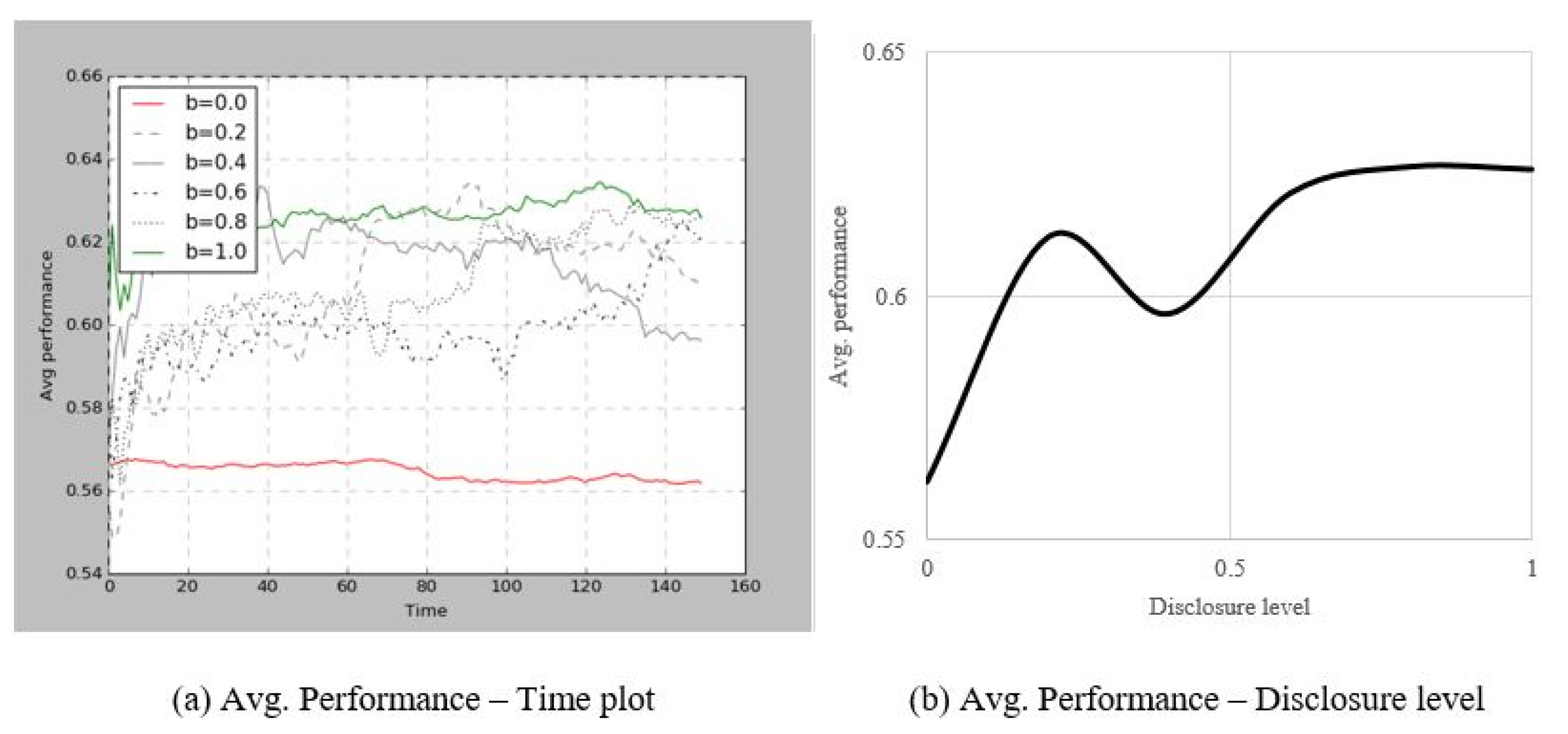

4.1.2. Experiment 2 and 3: Positive Feedback Policy

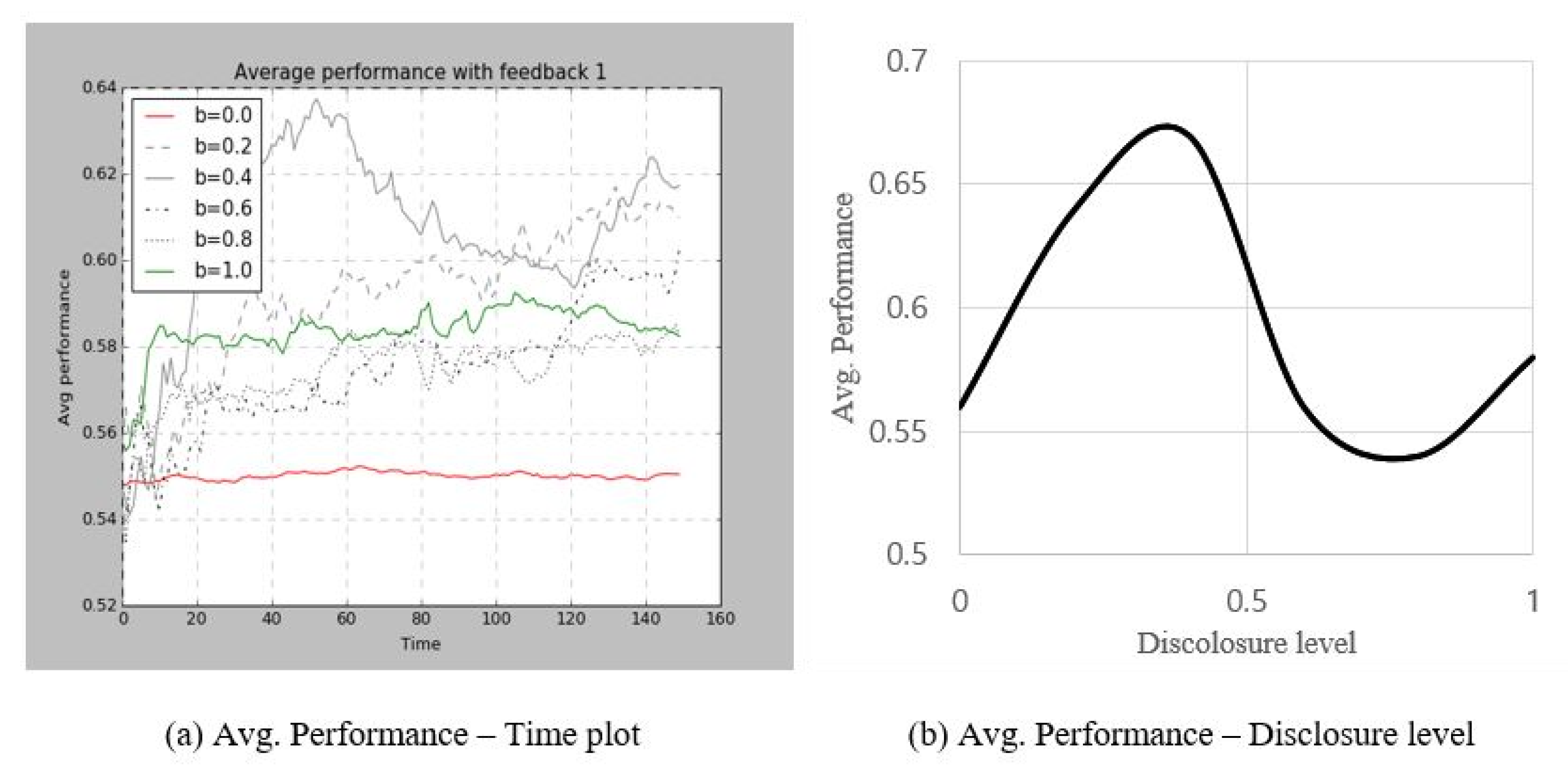

4.1.3. Experiment 4 and 5: Negative Feedback Policy

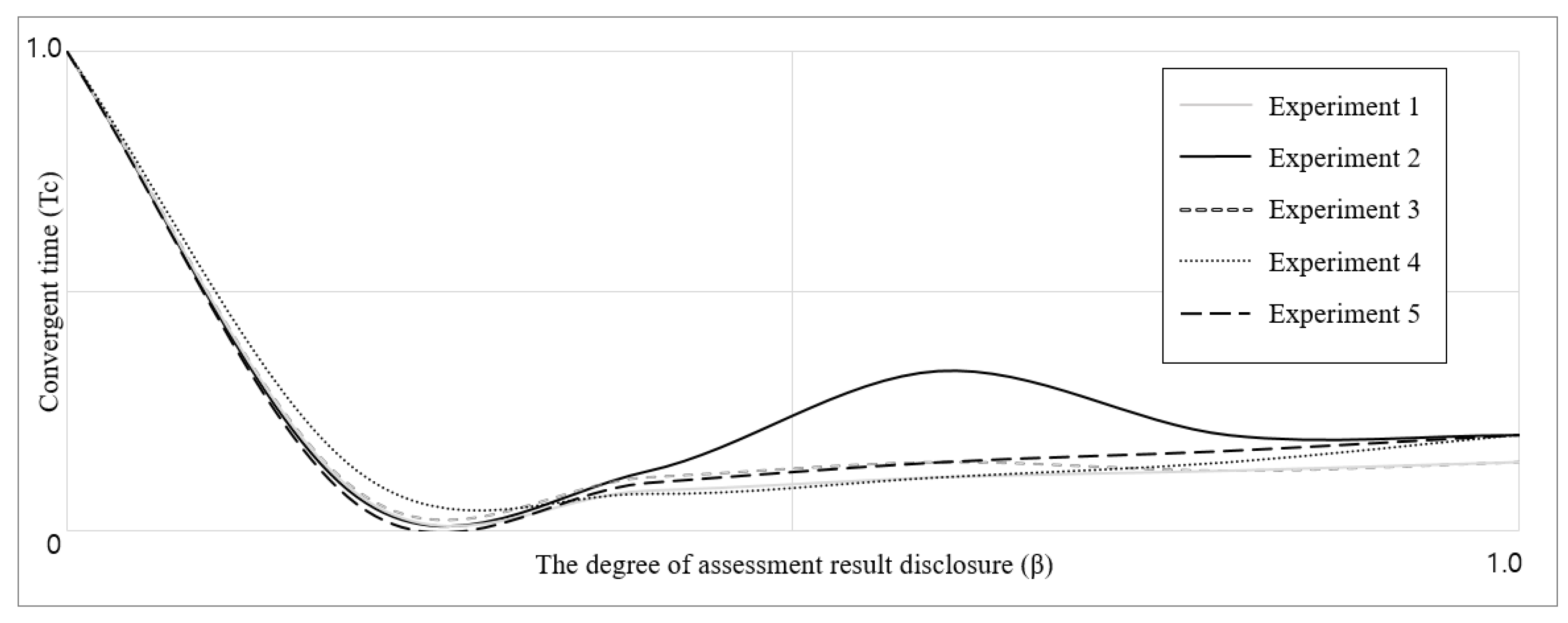

4.2. Convergence Time

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A

References

1. Pavitt, K. The Social Shaping of the National Science Base. Res. Policy 1998, 27, 793–805.
2. Kim, E.; Lee, D.; Kim, J.H. How Collaboration Networks Affect Innovation in Korea’s Information and Communication Technology Industry in the Era of Internet of Things. Asian J. Technol. Innov. 2016, 24, 201–221.
3. Gang, K.W.; Abetti, P.A. The Global Competitiveness of South Korea: The Role of Government-Funded Research Institutes. World Rev. Sci. Technol. Sustain. Dev. 2010, 8, 1–28.
4. Bourgeois, I.; Whynot, J.; Thériault, É. Application of an Organizational Evaluation Capacity Self-Assessment Instrument to Different Organizations: Similarities and Lessons Learned. Eval. Program Plann. 2015, 50, 47–55.
5. Kim, E.; Kim, S.; Kim, H. Development of an Evaluation Framework for Publicly Funded R&D Projects: The Case of Korea’s Next Generation Network. Eval. Program Plann. 2017, 63, 18–28.
6. Downs, G.W.; Larkey, P.D. The Search for Government Efficiency: From Hubris to Helplessness; Random House: New York, NY, USA, 1986.
7. Osborne, D.; Gaebler, T. Reinventing Government: How the Entrepreneurial Spirit Is Transforming Government; Addison-Wesley: Boston, MA, USA, 1992.
8. Rhydderch, M.; Edwards, A.; Elwyn, G.; Marshall, M.; Engels, Y.; Van Den Hombergh, P.; Grol, R. Organizational Assessment in General Practice: A Systematic Review and Implications for Quality Improvement. J. Eval. Clin. Pract. 2005, 11, 366–378.
9. Matsui, T.; Okada, A.; Inoshita, O. Mechanism of Feedback Affecting Task Performance. Organ. Behav. Hum. Perform. 1983, 31, 114–122.
10. Salmoni, A.W.; Schmidt, R.A.; Walter, C.B. Knowledge of Results and Motor Learning: A Review and Critical Reappraisal. Psychol. Bull. 1984, 95, 355–386.
11. Schmidt, A.M.; Dolis, C.M. Something’s Got to Give: The Effects of Dual-Goal Difficulty, Goal Progress, and Expectancies on Resource Allocation. J. Appl. Psychol. 2009, 94, 678–691.
12. Ilgen, D.R.; Fisher, C.D.; Taylor, M.S. Consequences of Individual Feedback on Behavior in Organizations. J. Appl. Psychol. 1979, 64, 349–371.
13. Reichheld, F. The Ultimate Question; Harvard Business School Press: Boston, MA, USA, 2006.
14. Ashford, S.J.; Northcraft, G.B. Conveying More (or Less) than We Realize: The Role of Impression-Management in Feedback-Seeking. Organ. Behav. Hum. Decis. Process. 1992, 53, 310–334.
15. Choi, Y.; Beg, J. Evaluating Government Research Institutes: Developing an Exploratory, Research-Centered Model for Applying the Balanced Score Cards Method. J. Gov. Study 2006, 12, 163–193.
16. Kong, B. Explanatory Research on Governmental Institute Assessment Policy. Korean Repub. Adm. Rev. 2003, 12, 147–176.
17. Lee, B.; Yoon, S.A. Study on Positive Application of the Evaluating Method of R & D Organizations. Korean Soc. Innov. Manag. Econ. 2001, 3, 133–154.
18. Lee, S.; Lee, H. Measuring and Comparing the R&D Performance of Government Research Institutes: A Bottom-up Data Envelopment Analysis Approach. J. Informetr. 2015, 9, 942–953.
19. Rho, W.J.; Rno, S.P.; Kim, T.I. A Study of Modeling the Evaluation System of Public Research Institutes. Korean Repub. Adm. Rev. 1996, 5, 30–54.
20. Yi, C. Application of Intellectual Capital Model to the Evaluation System on Public Research Institutes. Korean Repub. Adm. Rev. 2005, 39, 195–217.
21. Alvero, A.M.; Bucklin, B.R.; Austin, J. An Objective Review of the Effectiveness and Essential Characteristics of Performance Feedback in Organizational Settings (1985–1998). J. Organ. Behav. Manag. 2001, 21, 3–29.
22. Kluger, A.N.; DeNisi, A. Effects of Feedback Intervention on Performance: A Historical Review, a Meta-Analysis, and a Preliminary Feedback Intervention Theory. Psychol. Bull. 1996, 119, 254–284.
23. Dodgson, M. Organizational Learning: A Review of Some Literatures. Organ. Stud. 1993, 14, 375–394.
24. Park, J.; Jeong, S.; Yoon, Y.; Lee, H. The Evolving Role of Collaboration in Developing Scientific Capability: Evidence from Korean Government-Supported Research Institutes. Sci. Public Policy 2015, 42, 255–272.
25. Oh, Y.A. Study of the Factors Influencing Acceptance of Evaluation of Government-Funded Research Institutions. Korean J. Public Adm. 2015, 9, 151–170.
26. Kim, M.A. Critical Examination of Evaluating Korean Central Government Agencies. Korean Adm. Policy Anal. Eval. 2003, 13, 1–21.
27. Hwang, B.; Kang, K. Meta-Evaluation of the Government Funded Research Institute Assessment System. In Summer Conference of Korean Public Administration Review. 2004. Available online: http://www.dbpia.co.kr/journal/articleDetail?nodeId=NODE06710643 (accessed on 19 August 2019).
28. Son, H. A Study on Establishment of a Performance Based Evaluation System for the Government-Supported Research Institutes (GSRIs) in Korea. Audit Insp. 2007, 12, 73–106.
29. Hwang, B.; Bae, E.; Hong, H.; Kim, D. Operational-Efficiency Improvement of Public R and D Management Agencies in South Korea. J. Open Innov. Technol. Mark. Complex. 2019, 5, 13.
30. Jang, H.; Park, J. Institute Size Effect Analysis on the Management Performance of the Public Institute. Korean Public Adm. Rev. 2015, 24, 1–25.
31. Ruiz-Martin, C.; Paredes, A.L.; Wainer, G.A. Applying Complex Network Theory to the Assessment of Organizational Resilience. IFAC-PapersOnLine 2015, 28, 1224–1229.
32. Ammons, R.B. Effects of Knowledge of Performance: A Survey and Tentative Theoretical Formulation. J. Gen. Psychol. 1956, 54, 279–299.
33. Forss, K.; Cracknell, B.; Samset, K. Can Evaluation Help an Organization to Learn? Eval. Rev. 1994, 18, 574–591.
34. Balcazar, F.; Hopkins, B.L. A Critical, Objective Review of Performance Feedback. J. Organ. Behav. Manag. 1985, 7, 65–89.
35. Bandura, A. Self-Efficacy: Toward a Unifying Theory of Behavioral Change. Psychol. Rev. 1977, 84, 191–215.
36. Carver, C.S.; Scheier, M.F. Control Theory: A Useful Conceptual Framework for Personality-Social, Clinical, and Health Psychology. Psychol. Bull. 1982, 92, 111–135.
37. Igbaria, M.; Zinatelli, N.; Cragg, P.; Cavaye, A.L.M.; Street, W.G. Personal Computing Acceptance Factors in Small Firms: A Structural Equation. MIS Q. 1997, 21, 279–305.
38. Ilies, R.; Judge, T.A. Goal Regulation across Time: The Effects of Feedback and Affect. J. Appl. Psychol. 2005, 90, 453–467.
39. Locke, E.A.; Latham, G.P. A Theory of Goal Setting & Task Performance; Prentice-Hall: Upper Saddle River, NJ, USA, 1990.
40. Ashford, S.J.; Cummings, L.L. Feedback as an Individual Resource: Personal Strategies of Creating Information. Organ. Behav. Hum. Perform. 1983, 32, 370–398.
41. Levy, P.E.; Albright, M.D.; Cawley, B.D.; Williams, J.R. Situational and Individual Determinants of Feedback Seeking: A Closer Look at the Process. Organ. Behav. Hum. Decis. Process. 1995, 62, 23–37.
42. Hanna, A.; Wells, C.; Maurer, P.; Friedland, L.; Shah, D.; Matthes, J. Partisan Alignments and Political Polarization Online: A Computational Approach to Understanding the French and US Presidential Elections. In Proceedings of the 2nd Workshop on Politics, Elections and Data; ACM: New York, NY, USA, 2013; pp. 15–22.
43. Tafkov, I.D. Private and Public Relative Performance Information under Different Compensation Contracts. Account. Rev. 2012, 88, 327–350.
44. Hannan, R.L.; McPhee, G.P.; Newman, A.H.; Tafkov, I.D. The Effect of Relative Performance Information on Performance and Effort Allocation in a Multi-Task Environment. Account. Rev. 2012, 88, 553–575.
45. Becker, L.J. Joint Effect of Feedback and Goal Setting on Performance: A Field Study of Residential Energy Conservation. J. Appl. Psychol. 1978, 63, 428–433.
46. Hogg, M.A.; Hains, S.C. Friendship and Group Identification: A New Look at the Role of Cohesiveness in Groupthink. Eur. J. Soc. Psychol. 1998, 28, 323–341.
47. Mento, A.J.; Steel, R.P.; Karren, R.J. A Meta-Analytic Study of the Effects of Goal Setting on Task Performance: 1966–1984. Organ. Behav. Hum. Decis. Process. 1987, 39, 52–83.
48. Sprinkle, G.B. The Effect of Incentive Contracts on Learning and Performance. Account. Rev. 2000, 75, 299–326.
49. Matsui, T.; Okada, A.; Mizuguchi, R. Expectancy Theory Prediction of the Goal Theory Postulate, “The Harder the Goals, the Higher the Performance”. J. Appl. Psychol. 1981, 66, 54–58.
50. Simon, D.; Knie, A. Can Evaluation Contribute to the Organizational Development of Academic Institutions? An International Comparison. Eval. Rev. 2013, 19, 402–418.
51. Kang, K.; Oah, S.; Dickinson, A.M. The Relative Effects of Different Frequencies of Feedback on Work Performance. J. Organ. Behav. Manag. 2005, 23, 21–53.
52. Bohn, R. Stop Fighting Fires. Harvard Bus. Rev. 2000, 78, 82–91.
53. Lurie, N.H.; Swaminathan, J.M. Is Timely Information Always Better? The Effect of Feedback Frequency on Decision Making. Organ. Behav. Hum. Decis. Process. 2009, 108, 315–329.
54. Anderson, N.H. Foundations of Information Integration Theory; Academic Press: Cambridge, MA, USA, 1981.
55. Hair, J.F.; Black, W.C.; Babin, B.J.; Anderson, R.E. Multivariate Data Analysis; Prentice Hall: Upper Saddle River, NJ, USA, 2006.
56. Peeters, G.; Czapinski, J. Positive-Negative Asymmetry in Evaluations: The Distinction between Affective and Informational Negativity Effects. Eur. Rev. Soc. Psychol. 1990, 1, 33–60.
57. Stanton, M.; Amsel, A. Adjustment to Reward Reduction (but No Negative Contrast) in Rats 11, 14, and 16 Days of Age. J. Comp. Physiol. Psychol. 1980, 94, 446–458.
58. Kahneman, D.; Tversky, A. Prospect Theory: An Analysis of Decision under Risk. Econometrica 1979, 47, 263–292.
59. Carpenter, J.P. Punishing Free-Riders: The Role of Monitoring-Group Size, Second-Order Free-Riding and Coordination; Middlebury College: Bonn, Germany, 2000.
60. Dickinson, D.L. The Carrot vs. the Stick in Work Team Motivation. Exp. Econ. 2001, 4, 107–124.
61. Eldenburg, L. The Use of Information in Total Cost Management. Account. Rev. 1994, 69, 96–121.
62. Kolstad, J.T. Information and Quality When Motivation Is Intrinsic: Evidence from Surgeon Report Cards. Am. Econ. Rev. 2013, 103, 2875–2910.
63. Goodman, J.S.; Wood, R.E. Feedback Specificity, Learning Opportunities, and Learning. J. Appl. Psychol. 2004, 89, 809–821.
64. Riccobono, F.; Bruccoleri, M.; Größler, A. Groupthink and Project Performance: The Influence of Personal Traits and Interpersonal Ties. Prod. Oper. Manag. 2016, 25, 609–629.
65. Andreas, P.; Mueller, M.; Kudic, M. Regional Innovation Systems in Policy Laboratories. J. Open Innov. Technol. Mark. Complex. 2018, 4, 44.
66. Simmel, G. On Individuality and Social Forms: Selected Writings; University of Chicago Press: Chicago, IL, USA, 1971.
67. Levitt, B.; March, J. Organizational Learning. Annu. Rev. Sociol. 1988, 14, 319–340.
68. Rand, W.; Rust, R.T. Agent-Based Modeling in Marketing: Guidelines for Rigor. Int. J. Res. Mark. 2011, 28, 181–193.
69. Miller, J.H.; Page, S.E. Complex Adaptive Systems: An Introduction to Computational Models of Social Life; Princeton University Press: Princeton, NJ, USA, 2007.
70. North, M.J.; Macal, C.M. Managing Business Complexity: Discovering Strategic Solutions with Agent-Based Modeling and Simulation; Oxford University Press: Oxford, UK, 2007.
71. Miller, B.L.; Goldberg, D.E. Genetic Algorithms, Tournament Selection, and the Effects of Noise. Complex Syst. 1995, 9, 193–212.
72. March, J.G. Exploration and Exploitation in Organizational Learning. Organ. Sci. 1991, 2, 71–87.
73. Jiménez-Jiménez, D.; Sanz-Valle, R. Innovation, Organizational Learning and Performance. J. Bus. Res. 2011, 64, 408–417.
74. Posen, H.E.; Lee, J.; Yi, S. The Power of Imperfect Imitation. Strateg. Manag. J. 2013, 34, 149–164.
75. Cantner, U.; Graf, H.; Toepfer, S. Structural Dynamics of Innovation Networks in German Leading-Edge Clusters. Jena Econ. Res. Pap. 2015, 26, 1–24.
76. Rothgang, M.; Cantner, U.; Dehio, J.; Engel, D.; Fertig, M.; Graf, H.; Hinzmann, S.; Linshalm, E.; Ploder, M.; Scholz, A.M. Cluster Policy: Insights from the German Leading Edge Cluster Competition. J. Open Innov. Technol. Mark. Complex. 2017, 3, 1–20.
77. Grimm, V.; Revilla, E.; Berger, U.; Jeltsch, F.; Mooij, W.M.; Railsback, S.F.; Thulke, H.H.; Weiner, J.; Wiegand, T.; DeAngelis, D.L. Pattern-Oriented Modeling of Agent-Based Complex Systems: Lessons from Ecology. Science 2005, 310, 987–991.
78. Chang, P.C.; Huang, W.H.; Ting, C.J. Dynamic Diversity Control in Genetic Algorithm for Mining Unsearched Solution Space in TSP Problems. Expert Syst. Appl. 2010, 37, 1863–1878.
79. Becker, B.; Gerhart, B. The Impact of Human Resource Management on Organizational Performance: Progress and Prospects. Acad. Manag. J. 1996, 39, 779–801.
80. Wilkins, A.L.; Ouchi, W.G. Efficient Cultures: Exploring the Relationship Between Culture and Organizational Performance; Sage Publications: Thousand Oaks, CA, USA, 1983.
81. Gilbert, N.; Terna, P. How to Build and Use Agent-Based Models in Social Science. Mind Soc. 2000, 1, 57–72.
82. Latané, B.; Nowak, A. Attitudes as Catastrophes: From Dimensions to Categories with Increasing Involvement. In Dynamical Systems in Social Psychology; Academic Press: San Diego, CA, USA, 1994; pp. 219–249.

| Category | Content | Reference |
|---|---|---|
| Ex-ante factor | Defensive disposition decreases feedback performance | [40] |
| | Public self-consciousness increases the need for feedback | [41] |
| | Individualism increases feedback performance | [42,43,44] |
| | Difficult goals increase feedback performance | [45,46,47,48] |
| | A gap between the goal and the status quo increases feedback performance | [9,49] |
| | A large-scale goal increases feedback performance | [50] |
| | Frequent assessment increases feedback performance | [21,22,34,51] |
| | Frequent assessment decreases feedback performance | [52,53] |
| | Reliability increases feedback performance | [12] |
| Ex-post factor | A negative feedback system is more effective than a positive one | [54,55,56,57,58,59,60] |
| | A positive feedback system is more effective than a negative one | [22,39,48,61,62] |
| | A positive delivery method increases acceptability of the feedback result | [38] |
| | High result sharing decreases the need for feedback | [14] |
| | Detailed feedback increases short-term feedback performance | [10,63] |

| Variable | Notation | Description |
|---|---|---|
| Institute id | i | i ∈ {1, 2, …, N} |
| Attribute string | | Each element takes a value in {0, 1, 2} |
| Performance | | |
| Learning rate | | Uniform distribution |
| Forgetting rate | | Uniform distribution |
| Self-adjustment rate | | |

| Variable | Notation | Description |
|---|---|---|
| Population | N | Number of institutes in the institute layer |
| Incentive | v | Amount of incentive to the high-performance group |
| Time | t | t ∈ {1, 2, …, max iteration} |
| Sampling ratio | | |

| Variable | Notation | Description |
|---|---|---|
| Ideal attribute string | | Each element takes a value in {0, 1, 2} |
| Reflection ratio | c | |
| Average performance | | |
| Diversity | | Hamming distance at period t |
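The variable tables above can be read as a small data model. The following is a minimal sketch only: the class name `Institute`, the helper names, the `[0, 1]` range for the uniform draws, and the definition of performance as the share of positions that match the ideal attribute string are all assumptions made for illustration, since the original notation and formulas are not recoverable here.

```python
import random
from dataclasses import dataclass
from typing import List

ATTRIBUTE_VALUES = (0, 1, 2)  # value set stated in the variable tables


@dataclass
class Institute:
    """One agent; field names are hypothetical, chosen to mirror the table rows."""
    institute_id: int
    attributes: List[int]   # attribute string over {0, 1, 2}
    learning_rate: float    # drawn from a uniform distribution
    forgetting_rate: float  # drawn from a uniform distribution


def make_institute(institute_id: int, length: int, rng: random.Random) -> Institute:
    # The [0, 1] bounds of the uniform draws are an assumption.
    return Institute(
        institute_id=institute_id,
        attributes=[rng.choice(ATTRIBUTE_VALUES) for _ in range(length)],
        learning_rate=rng.uniform(0.0, 1.0),
        forgetting_rate=rng.uniform(0.0, 1.0),
    )


def performance(institute: Institute, ideal: List[int]) -> float:
    """Assumed measure: fraction of positions equal to the ideal string."""
    matches = sum(a == b for a, b in zip(institute.attributes, ideal))
    return matches / len(ideal)


def average_performance(population: List[Institute], ideal: List[int]) -> float:
    """Mean of the assumed performance measure over all institutes."""
    return sum(performance(inst, ideal) for inst in population) / len(population)
```

A population of N institutes could then be built with `[make_institute(i, length, rng) for i in range(1, N + 1)]` and scored against a randomly drawn ideal string.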

| Hamming Distance Between Vector X and Y: | Euclidean Distance Between Vector X and Y: |
|---|---|
| Connection matrix: D(X,Y) | Information entropy: PD |
| D(X,Y) = 1 − S(X,Y) | |
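The two distance measures named in the table have standard textbook definitions, sketched below for attribute strings of equal length. The function `mean_pairwise_hamming` is a hypothetical helper showing one way a population-level diversity figure could be derived from pairwise Hamming distances; it is an assumption, not necessarily the aggregation used in the model.

```python
import math
from itertools import combinations
from typing import List, Sequence


def hamming_distance(x: Sequence[int], y: Sequence[int]) -> int:
    """Number of positions at which x and y differ."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return sum(a != b for a, b in zip(x, y))


def euclidean_distance(x: Sequence[int], y: Sequence[int]) -> float:
    """Square root of the summed squared coordinate differences."""
    if len(x) != len(y):
        raise ValueError("vectors must have the same length")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def mean_pairwise_hamming(population: List[Sequence[int]]) -> float:
    """Assumed diversity summary: average Hamming distance over all pairs."""
    pairs = list(combinations(population, 2))
    if not pairs:
        return 0.0
    return sum(hamming_distance(x, y) for x, y in pairs) / len(pairs)
```

For strings over {0, 1, 2}, the Hamming distance counts only whether two positions differ, while the Euclidean distance also weights how far apart the values are.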

| Type | Target | Experiment |
|---|---|---|
| No feedback | High- and low-performance groups | Experiment 1 |
| Positive feedback | High-performance group | Experiment 2 |
| | Low-performance group | Experiment 3 |
| Negative feedback | Low-performance group | Experiment 4 |
| Positive and negative feedback | High- and low-performance groups | Experiment 5 |
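As an illustration, the five policy settings can be encoded as a small configuration, following the experiment numbering used in the Section 3.3 headings. Everything below is a hedged sketch: the names `FeedbackPolicy`, `EXPERIMENTS`, `split_groups`, and `feedback_signals`, the ranking-based split into high and low groups, the `top_share` parameter, and the +1/−1/0 signal encoding are assumptions for illustration, not the authors' implementation.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class FeedbackPolicy:
    """Which group receives which kind of feedback (hypothetical encoding)."""
    positive_to_high: bool = False
    positive_to_low: bool = False
    negative_to_low: bool = False


# Numbering follows the Section 3.3 headings.
EXPERIMENTS: Dict[int, FeedbackPolicy] = {
    1: FeedbackPolicy(),                                             # no policy
    2: FeedbackPolicy(positive_to_high=True),
    3: FeedbackPolicy(positive_to_low=True),
    4: FeedbackPolicy(negative_to_low=True),
    5: FeedbackPolicy(positive_to_high=True, negative_to_low=True),  # mixed policy
}


def split_groups(scores: Dict[int, float], top_share: float = 0.5) -> Tuple[List[int], List[int]]:
    """Rank institute ids by score; the top share forms the high group (assumed rule)."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    cut = max(1, int(len(ranked) * top_share))
    return ranked[:cut], ranked[cut:]


def feedback_signals(scores: Dict[int, float], policy: FeedbackPolicy,
                     top_share: float = 0.5) -> Dict[int, int]:
    """Return +1 (positive), -1 (negative), or 0 (no feedback) per institute id."""
    high, low = split_groups(scores, top_share)
    signals = {institute_id: 0 for institute_id in scores}
    if policy.positive_to_high:
        for institute_id in high:
            signals[institute_id] = 1
    if policy.positive_to_low:
        for institute_id in low:
            signals[institute_id] = 1
    if policy.negative_to_low:
        for institute_id in low:
            signals[institute_id] = -1
    return signals
```

Keeping the policy as data rather than as branching code means each experiment differs only in its configuration entry, which makes the runs directly comparable.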

| Model | Experiment 1 | Experiment 2 | Experiment 3 | Experiment 4 | Experiment 5 |
|---|---|---|---|---|---|
| | No Feedback | Positive Feedback to High-Performer | Positive Feedback to Low-Performer | Negative Feedback to Low-Performer | Mixed Policy |
| Average Performance |  |  |  |  |  |

| Model | Experiment 1 | Experiment 2 | Experiment 3 | Experiment 4 | Experiment 5 |
|---|---|---|---|---|---|
| | No Feedback | Positive Feedback to High-Performer | Positive Feedback to Low-Performer | Negative Feedback to Low-Performer | Mixed Policy |
| Convergence Speed |  |  |  |  |  |

| Strategy | Changing the Degree of Result Sharing | Changing the Type of Feedback Policy |
|---|---|---|
| | Perfect Sharing → Low Sharing | Mixed Policy → Positive Feedback to High-Performance Group |
| Goal | Mid- to long-term objectives | Short- to mid-term objectives |
| Benefit | Maximizes the effectiveness of the assessment system in terms of both performance and diversity; relatively strong correction of individual and organizational behavior | Relatively improves the effectiveness of the assessment system in terms of both performance and diversity; keeps public transparency and trust in the GI assessment system; fair sharing of the assessment results |
| Cost | Declining transparency and trust in the GI assessment system; unfair selection of institutes | Relatively weak correction of organizational and individual behavior; inefficient exploration of the optimal solution |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cha, N.; Hwang, J.; Kim, E. How to Improve Performance and Diversity of Government-Funded Research Institute Ecosystem? Focus on Result Sharing and Feedback Policy. J. Open Innov. Technol. Mark. Complex. 2019, 5, 66. https://doi.org/10.3390/joitmc5030066
