Differentially Private Actor and Its Eligibility Trace

Abstract

1. Introduction

2. Background

2.1. Differential Privacy

2.2. Off-Policy Actor-Critic

Algorithm 1. Off-Policy Actor-Critic

3. Differentially Private Actor and Its Eligibility Trace Learning

Algorithm 2. Differentially Private Actor and Its Eligibility Trace Learning

4. Experiments

Algorithm 3. Differentially Private Critic (DP-Critic)

Algorithm 4. Differentially Private Actor and Critic (DP-Both)

4.1. Experimental Setup

4.1.1. Patient Treatment Progression

4.1.2. Taxi-v2

4.2. Results

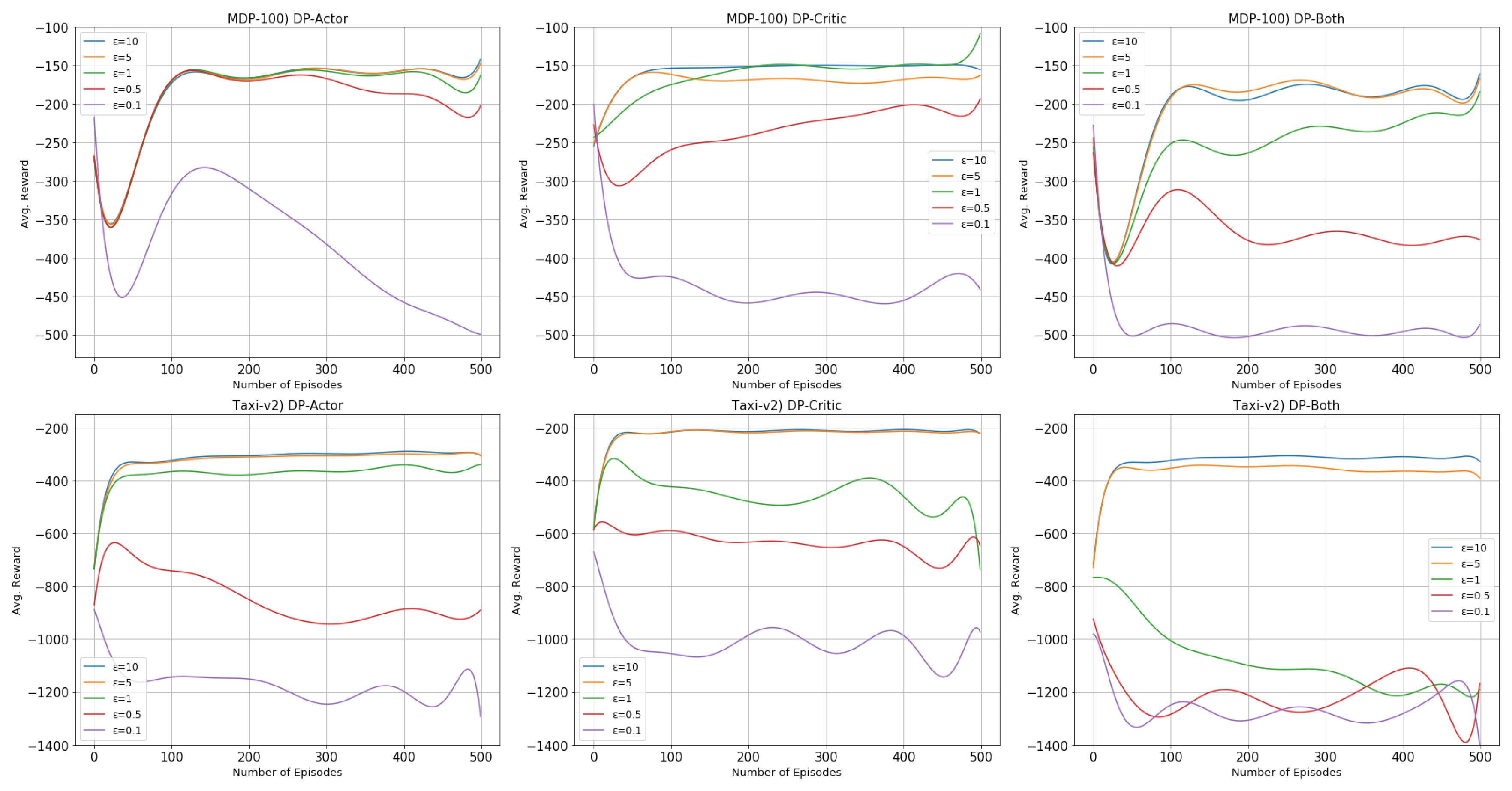

4.2.1. Performance and Privacy Budget

4.2.2. Anonymity for the Eligibility Traces

4.2.3. Reasons for Using the ε-Greedy Policy

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameters | Ranges |
|---|---|
| | {0.001, 0.005, 0.01, 0.05, 0.1, 0.5} |
| | {0.001, 0.005, 0.01, 0.05, 0.1, 0.5} |
| | {0.001, 0.005, 0.01, 0.05, 0.1, 0.5} |
| | {0, 0.2, 0.4, 0.6, 0.8, 0.99} |
| | {0.1, 0.5, 1, 5, 10} |

| | | | | | | |
|---|---|---|---|---|---|---|
| MDP-100 | 0.01 | 0.5 | 0.5 | 0.8 | 10 | 10 |
| Taxi-v2 | 0.05 | 0.05 | 0.5 | 0 | 10 | 10 |

| | Non-DP | DP-Actor | DP-Critic | DP-Both |
|---|---|---|---|---|
| MDP-100 | 30.26 | 68.04 | 27.1 | 66.63 |
| Taxi-v2 | 43.47 | 57.21 | 50.03 | 52.72 |

| | DP-Actor | DP-Critic | DP-Both |
|---|---|---|---|
| MDP-100 | 0.021 (0.0042) | 0.839 (0.006) | : 0.014 (0.003) |
| | | | : 0.497 (0.006) |
| Taxi-v2 | −0.0008 (0.0003) | 0.035 (0.001) | : −0.0001 (0.0003) |
| | | | : 0.0146 (0.0007) |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Seo, K.; Yang, J. Differentially Private Actor and Its Eligibility Trace. Electronics 2020, 9, 1486. https://doi.org/10.3390/electronics9091486
