Using Augmented Reality and Internet of Things for Control and Monitoring of Mechatronic Devices

Abstract

1. Introduction

1.1. Motivation

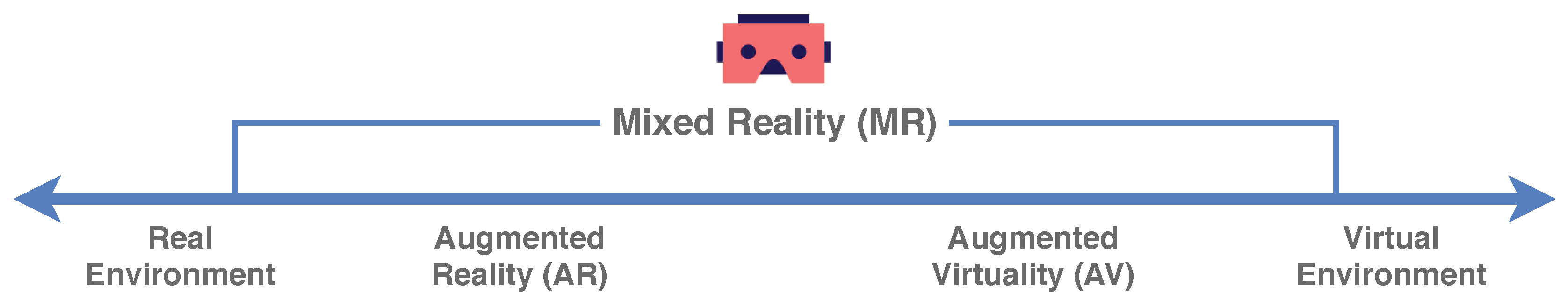

- British company dealing with the implementation of Industry 4.0 principles

  One of the modern trends in industry is the use of increasingly powerful, durable, and affordable mobile devices, such as smartphones and tablets. Such devices enable modern technologies that have not yet been widely used in industry, namely augmented and mixed reality and their applications for the control and monitoring of devices. Augmented or mixed reality offers a qualitatively new and better way of implementing the human-machine interface (HMI). In conventional approaches, a specific device (sensor, actuator, etc.) must be selected on the display unit, so we need to know its location in the production hall or its ID. After the device is selected, the required data (for example, in the form of a graph or a table) is shown on the display unit. With an augmented or mixed reality application, it is possible to search for individual sensors directly within the production hall environment and to interactively display the required values or change the settings and parameters of a given device via the display unit. An interesting advanced feature would be the ability to see, in the environment, which other devices the selected device is connected to or forwards data to. The localization and identification of individual sensors and actuators is currently an open problem that can be solved by several approaches.

- Slovak company dealing with tire diagnostics

  The use of augmented or mixed reality in the diagnostics of various devices is also an open question that several companies are addressing. During a meeting, one of the industrial partners formulated a request for a tire fault diagnosis system operated via a mixed reality headset or mobile device. At the same time, this system should make it possible to display diagnostic information from various devices in the factory, which is similar to the requirement in the previous point.

- Slovak manufacturer of advanced cutting machines

  The use of augmented or mixed reality for the maintenance and operation of complex machines, whose size reaches several tens of meters, is also currently an open topic that requires a comprehensive multidisciplinary approach. International leaders in cutting technologies have already begun to implement such solutions. Therefore, the possibilities of implementing maintenance and diagnostic systems using extended reality were also discussed with a Slovak company in this area (Figure 2).

- Control of sophisticated industrial devices with limited access

  Another industrial partner requested the use of augmented or mixed reality for the control and diagnostics of various sophisticated devices to which only a limited group of employees has access. This eliminates the need for physical control panels, which even a regular employee could access. The requirement is that the device can be controlled only by an employee who has access to a mobile device (smartphone or tablet) with an augmented or mixed reality application. In addition to security, such an application also brings the advantages described in the previous points.

1.2. Related Research

2. Materials & Methods

- Using a QR code: The name of the QR code comes from "Quick Response", as the code was developed for quick decoding. It is a two-dimensional bar code, either printed on paper or shown in digital form, and the encoded information can be decoded using the camera of a mobile device. The QR code is a square matrix of square modules rendered in black and white. One advantage of QR codes is that a new code can be generated quickly when the application system is built or extended. Another advantage is that each device or sensor can have a unique QR code, so objects with the same shape can be distinguished. The drawback is that, while recognition is running, the mobile device must be held parallel to the code and close enough to the device.

- Using an image: It is possible to generate augmented or mixed reality from a two-dimensional image. The benefit of this approach is that one image is enough for a single object and images are easy to make, so no complicated or advanced tools or devices are needed. Only a mobile device is required for the development and use of the application, and the system is easy to extend. However, using images has drawbacks. As with a QR code, the mobile device must be kept close enough to the object during recognition, and it must be parallel to the image or held at the same angle as when the image was taken. Another problem is that identical-looking objects cannot be distinguished by the application based on the image alone; a QR code is easier to use in such situations. Our research into creating an appropriate image showed that the image has to satisfy certain properties. The image size (width and height) should range from 500 to 1000 pixels. The image must not contain repetitive patterns, low-texture areas, or low-contrast areas. Color can be tricky because the computer sees the image in shades of gray, so colors that the human eye differentiates very well can look almost the same to the computer. Textured regions should be distributed uniformly, the image should contain only a little text, and white areas should be kept as small as possible.

- Using a three-dimensional (3D) model: An interesting option is the creation of a 3D map based on 3D objects. This approach is very similar to the image-based one: using the device's live camera stream, the application looks for a match with the model that was created. The advantage is that the target object can be located from a greater distance and from any angle. In addition, the mobile application does not lose a found object as easily as with the previous two approaches. The drawback is that creating a 3D map is a complicated and lengthy task, which may also make the application system harder to scale. In practice, image recognition is usually done using convolutional neural networks [24]. At present, convolutional neural networks are also used in natural language processing research and other areas of computational intelligence [25].
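The remark about grayscale in the image-based approach can be illustrated with a small sketch. It assumes the ITU-R BT.601 luma weights for the conversion to gray, which is a common choice but not necessarily what the recognition library uses. The helper names and the sample colors are hypothetical.

```python
# Sketch: two colors that look very different can collapse to nearly the
# same gray level. ASSUMPTION: BT.601 luma weights; the actual tracker may
# convert to grayscale differently.

def luma(rgb):
    """Return the BT.601 gray level (0-255 scale) of an (R, G, B) triple."""
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


def gray_contrast(rgb_a, rgb_b):
    """Absolute gray-level difference between two colors."""
    return abs(luma(rgb_a) - luma(rgb_b))


def size_ok(width, height, lo=500, hi=1000):
    """Check the 500-1000 pixel guideline quoted in the text."""
    return lo <= width <= hi and lo <= height <= hi


RED = (255, 0, 0)      # hypothetical sample colors
GREEN = (0, 130, 0)
BLACK = (0, 0, 0)
WHITE = (255, 255, 255)

# Red vs. green: obvious to the eye, almost no contrast in gray.
print("red/green gray contrast:", gray_contrast(RED, GREEN))
# Black vs. white: maximal contrast in gray.
print("black/white gray contrast:", gray_contrast(BLACK, WHITE))
print("800x600 within guideline:", size_ok(800, 600))
print("1920x1080 within guideline:", size_ok(1920, 1080))
```

Under this assumption, a target image that relies on red-versus-green detail would offer the tracker very little usable contrast, which is consistent with the guideline to check candidate images in grayscale.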

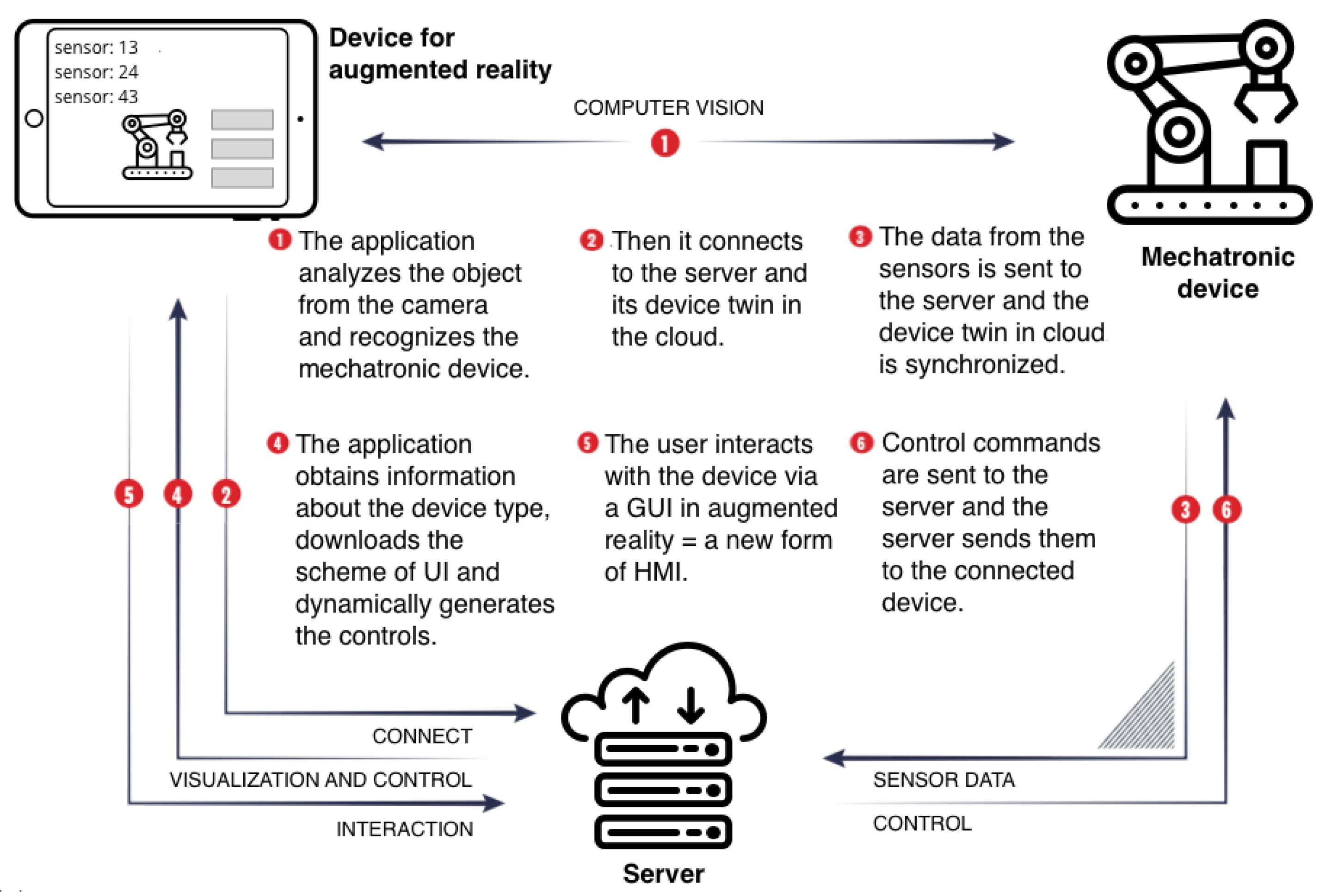

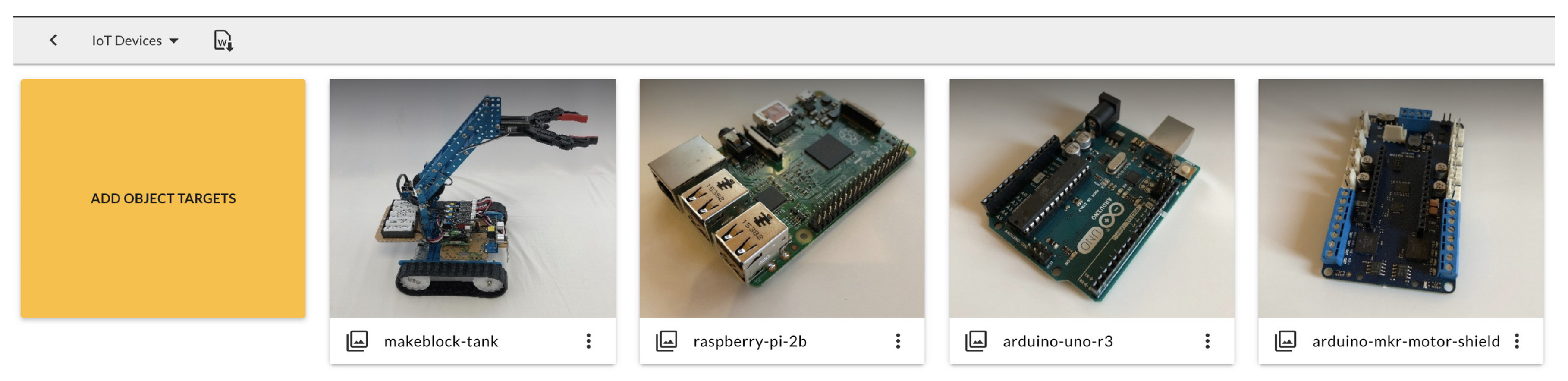

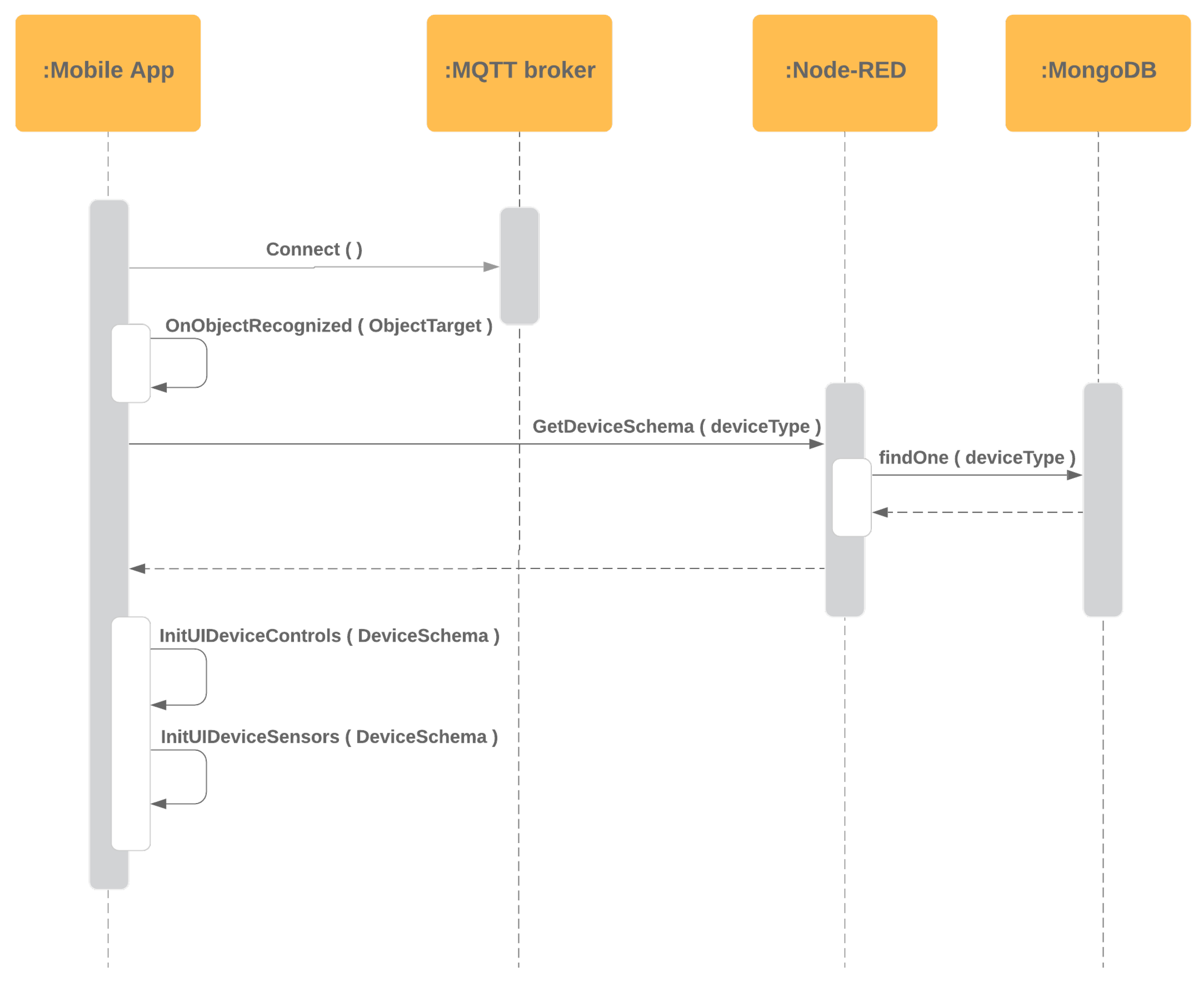

- The software application analyzes the image from the camera of the mobile device and recognizes the mechatronic system

  The augmented reality mobile app recognizes a real mechatronic device using the camera and a 3D map created in Wikitude Studio [26]. The 3D map of the mechatronic device is created from photographs of the device taken from several angles. The Wikitude SDK (software development kit), a library for augmented and mixed reality, can then interpret this 3D map from a database. The database is stored in a software application on an Apple iPad tablet. The advantage of this method is the ability to recognize the object from any angle. Moreover, even with reduced visibility, tracking does not have to be interrupted, as Wikitude can also store the close surroundings of the object. Thus, the proposed solution can do without conventional object recognition methods that rely on QR codes.

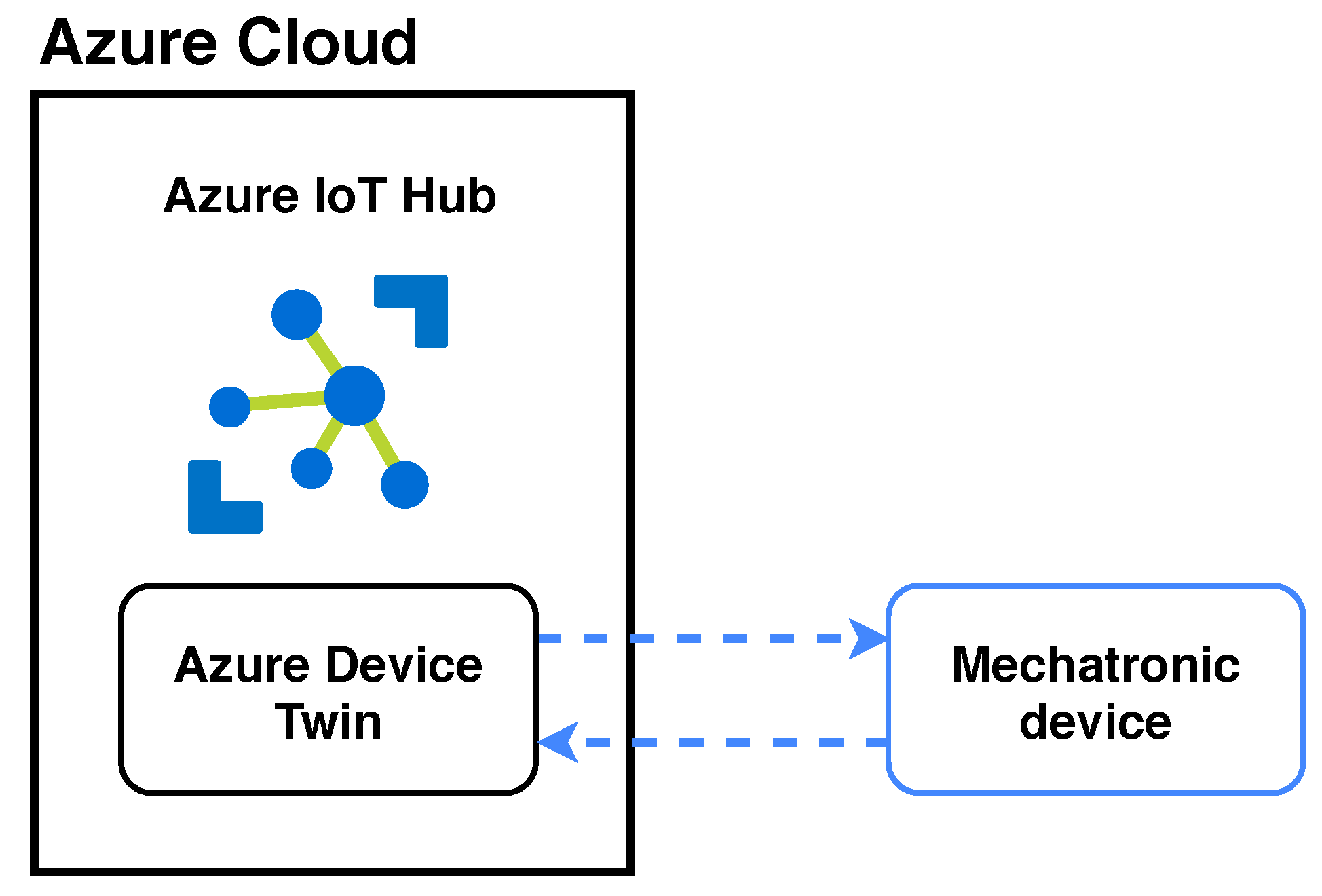

- The mobile device connects to the server and the mechatronic system's device twin in the cloud

  The mobile device is connected to the cloud, where the recognized mechatronic system has its digital copy (device twin).

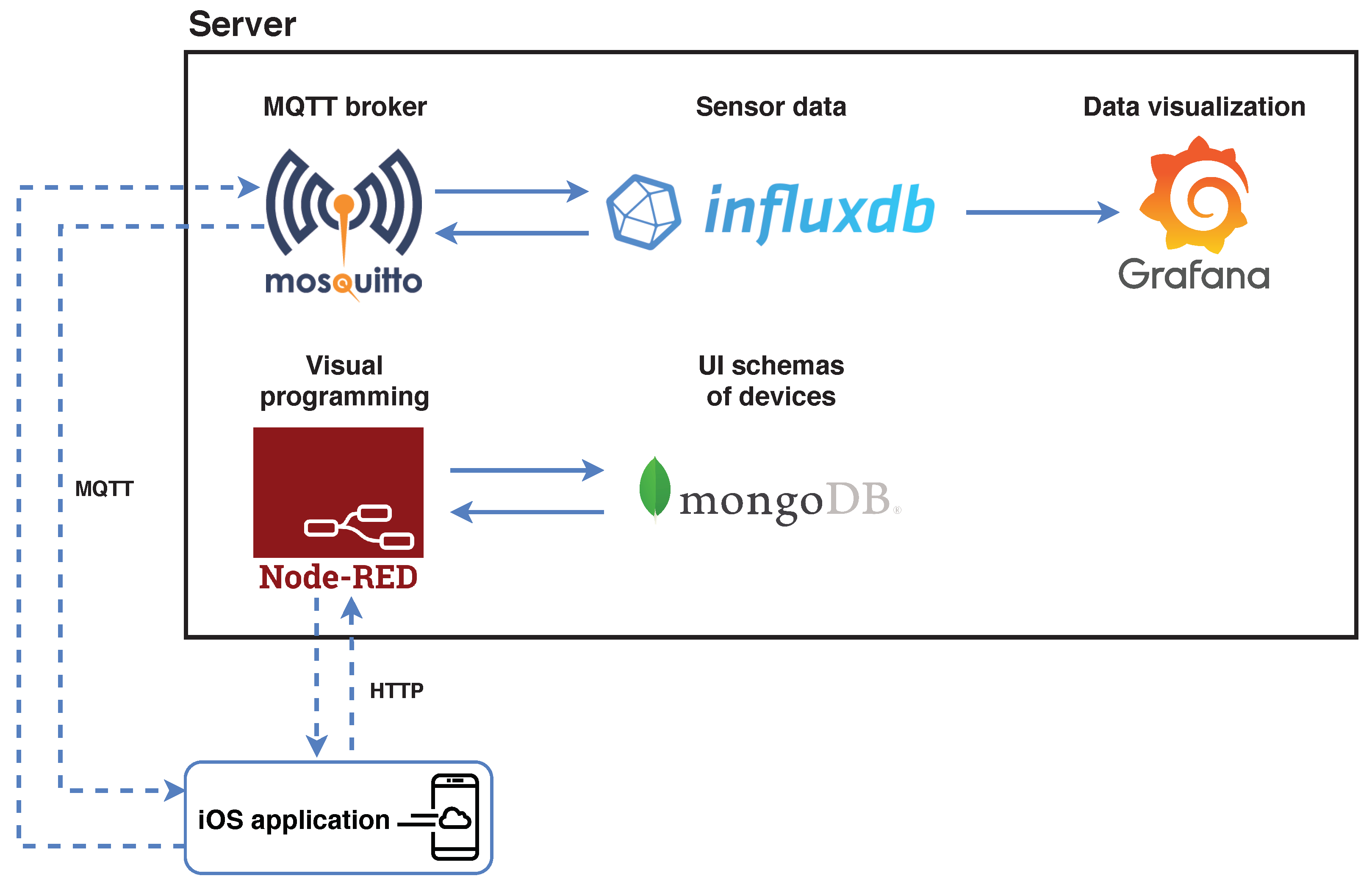

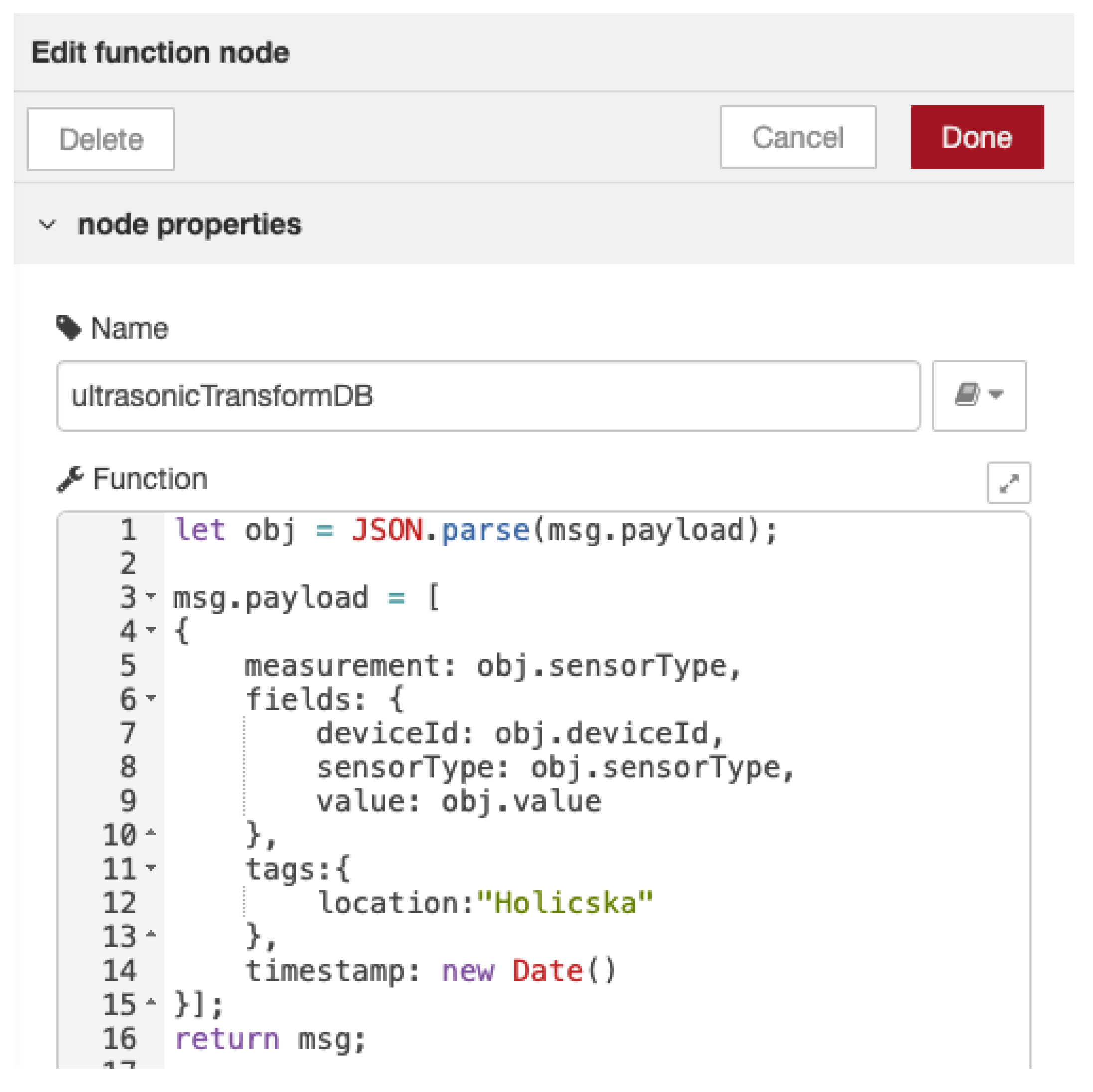

- The data from the sensors of the mechatronic device is sent to the server, and the device twin in the cloud is synchronized

  The mechatronic device automatically sends sensor data under its identifier to the server, where the data is also stored. For this purpose, the InfluxDB database [27] is used. It is designed for time-series data, which can then be visualized in the Grafana environment [28]. At the same time, the digital copy of the mechatronic device is synchronized at the level of the Microsoft Azure Device Twin, which ensures that current data is also visible in the cloud environment [29].

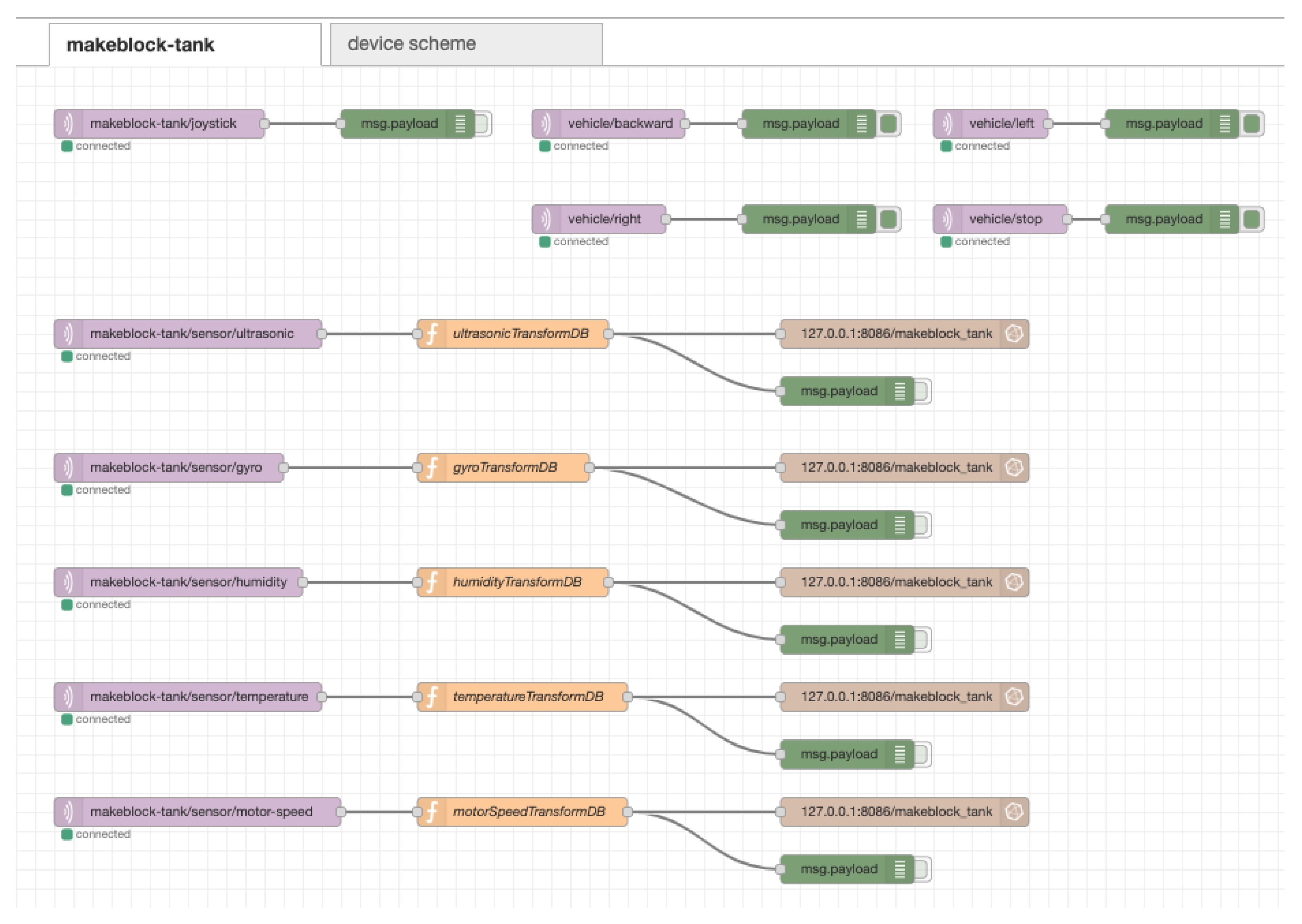

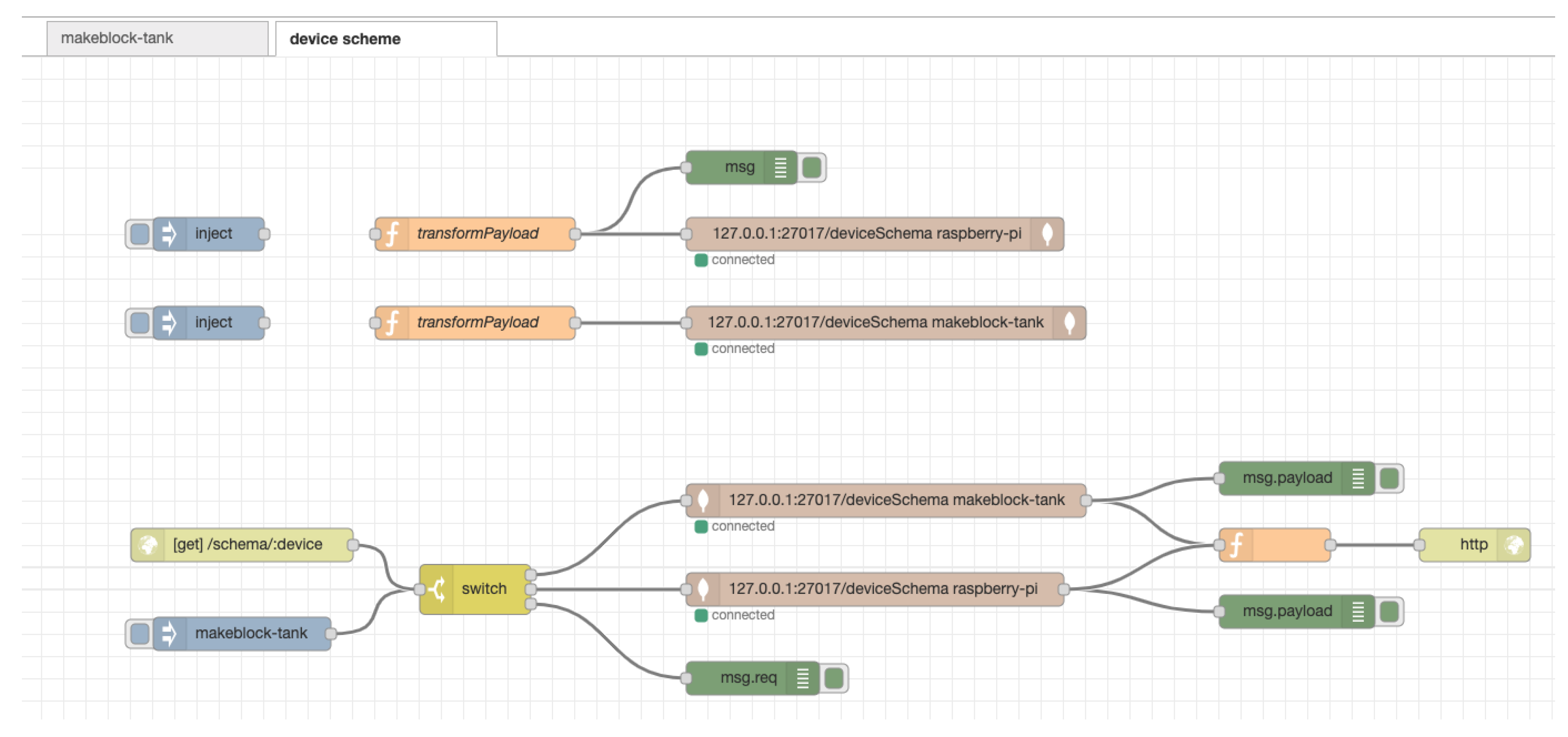

- The application obtains information about the type of the mechatronic device, downloads the definition of the user interface, and draws a graphical interface for control and monitoring of the system

  The proposed system works in such a way that the mobile application recognizes the mechatronic device and, according to its identifier, obtains a unique definition scheme of the user interface for its monitoring and control. The concept of definition schemes for the dynamic generation of a graphical user interface in augmented reality is one of the pillars of the modularity of the implemented solution and, at the same time, one of its application benefits. The mobile application has access to these definition schemes through its connection to the database. The connection is implemented by means of visual flow-based programming in the Node-RED environment [30], where a suitable scheme is retrieved based on the supplied parameter.

- The user interacts with the mechatronic device through a graphical interface in augmented reality, a new form of HMI

  Based on the unique definition scheme, the mobile application displays a graphical user interface in augmented reality consisting of two parts. The first part is diagnostics, which displays current data from the available sensors. The second part is control, which shows the control elements designed specifically for the mechatronic device. The user can then interact with the mechatronic device through this graphical interface in augmented reality, which is one of the modern forms of human-machine interface (HMI).

- Control commands are sent to the server, which sends them to the connected mechatronic device

  Control commands are sent from the mobile device to the server using the MQTT communication protocol. On the server, they are processed and executed. The software application on the mechatronic device listens on the MQTT topic and subsequently forwards these requests to the sensors and actuators via serial communication.
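The command path in the last step might look roughly like the following sketch. The topic layout (`devices/<id>/control/<subTopic>`), the JSON payload shape, and the `key=value` serial line format are all assumptions made for illustration and are not taken from the implemented system. A real deployment would use an MQTT client library and a serial port library instead of the plain function calls shown here, and the wildcard matching is simplified.

```python
import json


def topic_matches(pattern, topic):
    """Simplified MQTT filter match: '+' is one level, '#' is the remainder."""
    p_parts = pattern.split("/")
    t_parts = topic.split("/")
    for i, part in enumerate(p_parts):
        if part == "#":
            return True
        if i >= len(t_parts):
            return False
        if part != "+" and part != t_parts[i]:
            return False
    return len(p_parts) == len(t_parts)


def build_command(device_id, sub_topic, value):
    """Mobile side: build a (topic, payload) pair. Layout is hypothetical."""
    topic = f"devices/{device_id}/control/{sub_topic}"
    payload = json.dumps({"value": value})
    return topic, payload


def to_serial_line(topic, payload):
    """Device side: turn a received message into a hypothetical serial line."""
    sub_topic = topic.split("/", 3)[3]  # everything after 'devices/<id>/control/'
    value = json.loads(payload)["value"]
    return f"{sub_topic}={value}\n".encode("ascii")


# Hypothetical device identifier and control path.
topic, payload = build_command("crane-01", "motor/left", 1)

# The device application subscribes to all of its own control topics.
if topic_matches("devices/crane-01/control/#", topic):
    print(to_serial_line(topic, payload))
```

Keeping the translation from MQTT message to serial line in one small function makes it easy to swap in the actual wire format used by a given mechatronic device.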

3. Results

3.1. Server

3.1.1. Flow-Based Programming of Communication Interface Using Node-RED

3.1.2. Definition Scheme for Generating a Graphical User Interface

- element: the element name (for example, joystick or button)

- label: the description of the element shown on the screen; it is optional, because some elements, such as a joystick, do not need it

- posX and posY: the position at which the element is generated; control elements are preferably placed to the right of the mechatronic device

- subTopic: the path for a specific control within the MQTT channel, or the path for the sensor from which the data will be read
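A definition scheme built from these properties could be parsed and checked as in the sketch below. The JSON encoding, the sample values, and the names `GuiElement`, `REQUIRED`, and `parse_scheme` are assumptions for illustration; the source only specifies the property names and their types.

```python
import json
from dataclasses import dataclass
from typing import Optional

# Required properties per element type (hypothetical validation table that
# follows the property list of the definition scheme).
REQUIRED = {
    "text": {"element", "label", "posX", "posY", "subTopic"},
    "joystick": {"element", "posX", "posY", "subTopic"},
    "button": {"element", "label", "posX", "posY", "subTopic"},
}


@dataclass
class GuiElement:
    element: str
    posX: float
    posY: float
    subTopic: str
    label: Optional[str] = None  # not needed for a joystick
    unit: Optional[str] = None   # optional, only meaningful for text


def parse_scheme(raw):
    """Parse a JSON list of element definitions and validate each entry."""
    elements = []
    for item in json.loads(raw):
        kind = item.get("element")
        if kind not in REQUIRED:
            raise ValueError(f"unknown element type: {kind!r}")
        missing = REQUIRED[kind] - set(item)
        if missing:
            raise ValueError(f"{kind} is missing {sorted(missing)}")
        elements.append(GuiElement(**item))
    return elements


# Hypothetical scheme: one sensor readout and two controls. Positive posX is
# ASSUMED to mean "to the right of the device".
SAMPLE = """[
  {"element": "text", "label": "Temperature", "unit": "C",
   "posX": -0.4, "posY": 0.2, "subTopic": "sensors/temperature"},
  {"element": "joystick", "posX": 0.4, "posY": 0.0,
   "subTopic": "control/drive"},
  {"element": "button", "label": "Stop", "posX": 0.4, "posY": -0.3,
   "subTopic": "control/stop"}
]"""

for el in parse_scheme(SAMPLE):
    print(el.element, el.label, el.posX, el.posY, el.subTopic)
```

Validating the scheme before drawing anything means that a malformed entry is rejected with a clear message instead of producing a partially rendered interface.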

3.2. Mobile Device and Augmented Reality Application

- Taking photos of the object from different angles (up to 30 photos can be inserted)

- Converting the photos to a Wikitude Object Target Collection (.wto)

- Using the .wto file in a project in the Unity 3D engine

Generating the User Interface Using a Definition Scheme

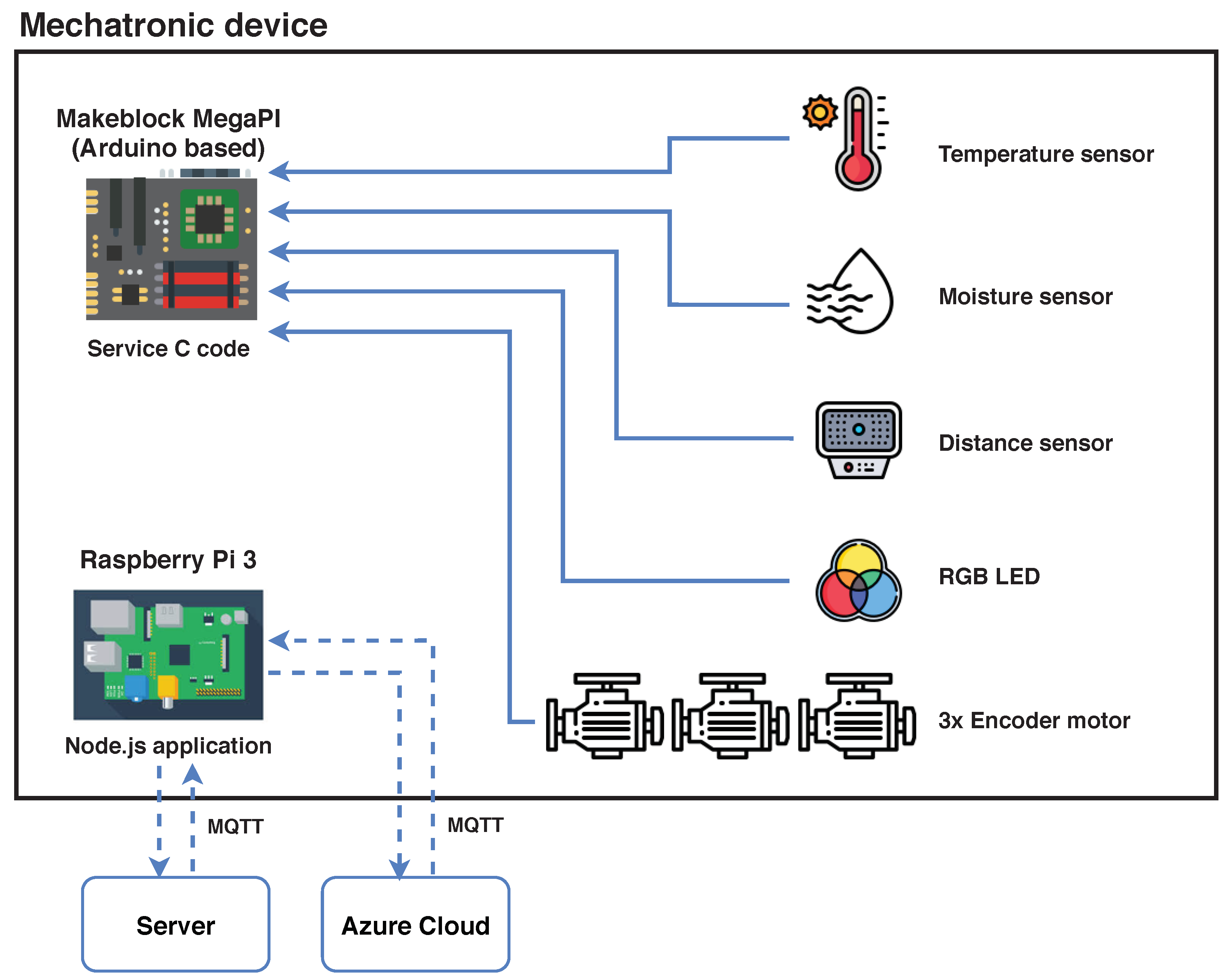

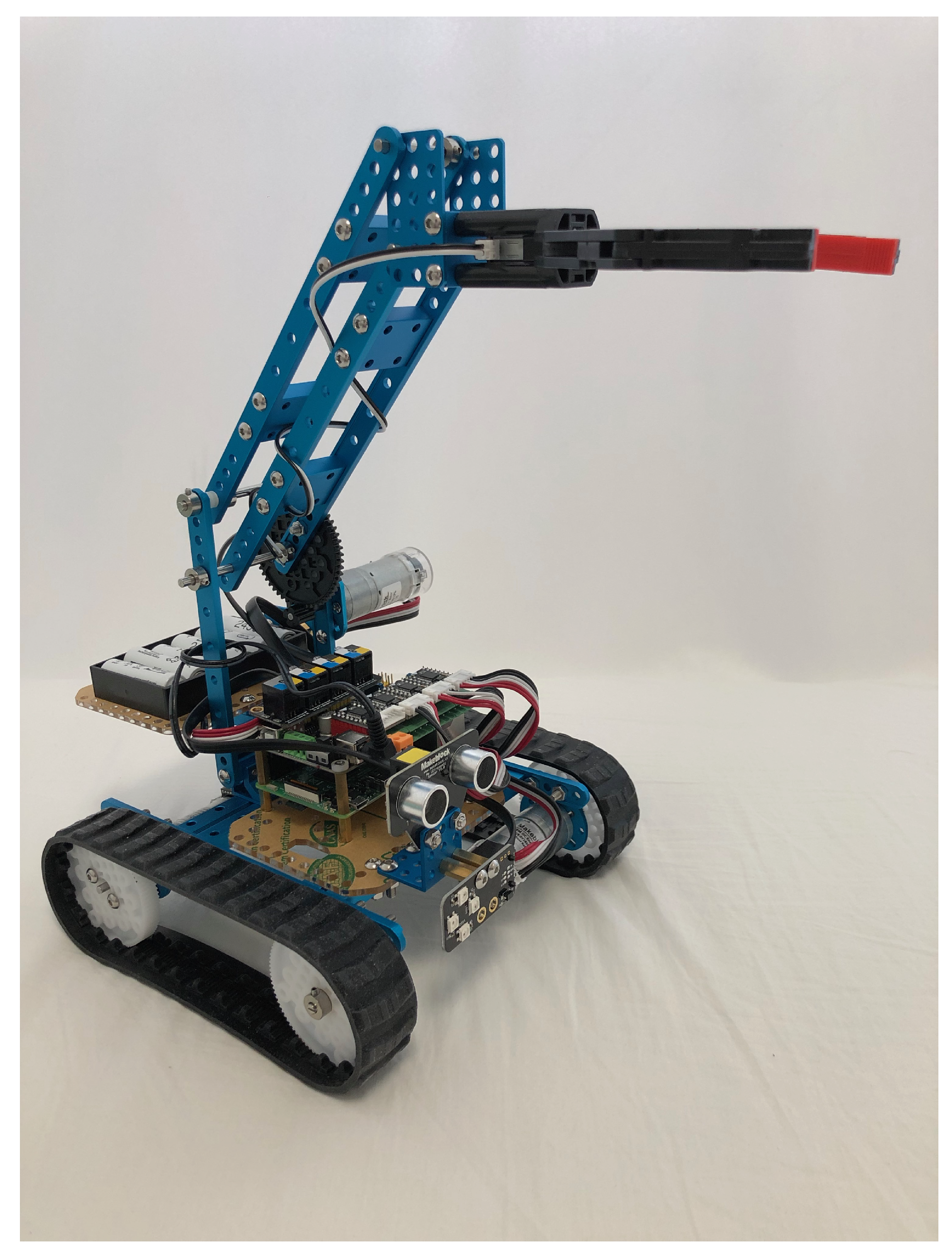

3.3. Laboratory Mechatronic Device

- serial communication access path

- the body of the function to be executed after initialization

3.4. Device Twin in Azure Cloud

4. Conclusions

- Design of a modern form of control and monitoring of mechatronic systems using the Internet of Things and augmented reality.

- Implementation of an application system for control and monitoring of mechatronic systems connected to the Internet of Things with a new form of human-machine interface based on detection and recognition of 3D objects.

- Design and implementation of the concept of definition schemes for dynamic generation of a graphical user interface for control and monitoring of mechatronic systems.

- Verification of the designed and implemented application system on a laboratory mechatronic system.

5. Patents

Author Contributions

Funding

Conflicts of Interest

References

- Kozák, Š.; Ružický, E.; Štefanovič, J.; Schindler, F. Research and education for industry 4.0: Present development. In Proceedings of the 2018 Cybernetics & Informatics (K & I), Lazy pod Makytou, Slovakia, 31 January–3 February 2018; pp. 1–8.

- Wolniak, R.; Saniuk, S.; Grabowska, S.; Gajdzik, B. Identification of Energy Efficiency Trends in the Context of the Development of Industry 4.0 Using the Polish Steel Sector as an Example. Energies 2020, 13, 2867.

- Lin, Y.-C.; Yeh, C.-C.; Chen, W.-H.; Hsu, K.-Y. Implementation Criteria for Intelligent Systems in Motor Production Line Process Management. Processes 2020, 8, 537.

- Dobrowolska, M.; Knop, L. Fit to Work in the Business Models of the Industry 4.0 Age. Sustainability 2020, 12, 4854.

- Kościelniak, H.; Łęgowik-Małolepsza, M.; Łęgowik-Świącik, S. The Application of Information Technologies in Consideration of Augmented Reality and Lean Management of Enterprises in the Light of Sustainable Development. Sustainability 2019, 11, 2157.

- The Foundry. VR? AR? MR? Sorry, I’M Confused. Available online: https://www.foundry.com/insights/vr-ar-mr/vr-mr-ar-confused (accessed on 16 June 2020).

- Sánchez-Herrera-Baeza, P.; Cano-de-la-Cuerda, R.; Oña-Simbaña, E.D.; Palacios-Ceña, D.; Pérez-Corrales, J.; Cuenca-Zaldivar, J.N.; Gueita-Rodriguez, J.; Balaguer-Bernaldo de Quirós, C.; Jardón-Huete, A.; Cuesta-Gomez, A. The Impact of a Novel Immersive Virtual Reality Technology Associated with Serious Games in Parkinson’s Disease Patients on Upper Limb Rehabilitation: A Mixed Methods Intervention Study. Sensors 2020, 20, 2168.

- Bucsai, S.; Kučera, E.; Haffner, O.; Drahoš, P. Control and Monitoring of IoT Devices Using Mixed Reality Developed by Unity Engine. In Proceedings of the 2020 Cybernetics & Informatics (K & I), Velke Karlovice, Czech Republic, 29 January–1 February 2020; pp. 1–8.

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Salamone, F.; Belussi, L.; Danza, L.; Galanos, T.; Ghellere, M.; Meroni, I. Design and Development of a Nearable Wireless System to Control Indoor Air Quality and Indoor Lighting Quality. Sensors 2017, 17, 1021.

- Geng, Z.; Chen, N.; Han, Y.; Ma, B. An improved intelligent early warning method based on MWSPCA and its application in complex chemical processes. Can. J. Chem. Eng. 2020, 98, 1307–1318.

- Minchala, L.I.; Peralta, J.; Mata-Quevedo, P.; Rojas, J. An Approach to Industrial Automation Based on Low-Cost Embedded Platforms and Open Software. Appl. Sci. 2020, 10, 4696.

- Erasmus, J.; Vanderfeesten, I.; Traganos, K.; Keulen, R.; Grefen, P. The HORSE Project: The Application of Business Process Management for Flexibility in Smart Manufacturing. Appl. Sci. 2020, 10, 4145.

- Trifa, V.; Guinard, D.; Carrera, D. Web Thing Model. Available online: http://model.webofthings.io/ (accessed on 16 June 2020).

- Guinard, D.; Trifa, V.; Pham, T.; Liechti, O. Towards physical mashups in the web of things. In Proceedings of the 2009 Sixth International Conference on Networked Sensing Systems (INSS), Pittsburgh, PA, USA, 17–19 June 2009; pp. 1–4.

- Croatti, A.; Ricci, A. Towards the web of augmented things. In Proceedings of the 2017 IEEE International Conference on Software Architecture Workshops (ICSAW), Gothenburg, Sweden, 5–7 April 2017; pp. 80–87.

- Rambach, J.; Pagani, A.; Stricker, D.; Aleksy, M.; Schmitt, J.; Langfinger, M.; Schneider, M.; Schotten, H.; Malignaggi, A.; Ko, M. Augmented things: Enhancing AR applications leveraging the Internet of Things and universal 3d object tracking. In Proceedings of the IEEE International Conference on Industrial Technology (ICIT), Nantes, France, 9–13 October 2017; Volume 22, p. 25.

- Lewicki, P. Controlling Lights with the Hololens and Internet of Things. Available online: http://blog.htmlfusion.com/controlling-lights-with-the-hololens-and-internet-of-thingsatch-one-of-philippes-appearances-in-june/ (accessed on 1 June 2020).

- Sterlink, I.; Swaroop, P. Control with Your Smart Devices by Staring and Gesturing. Available online: https://blog.arduino.cc/2016/07/26/control-with-your-smart-devices-by-staring-and-gesturing/ (accessed on 16 June 2020).

- Blanco-Novoa, Ó.; Fraga-Lamas, P.; Vilar-Montesinos, M.A.; Fernández-Caramés, T.M. Creating the Internet of Augmented Things: An Open-Source Framework to Make IoT Devices and Augmented and Mixed Reality Systems Talk to Each Other. Sensors 2020, 20, 3328.

- Gallash, A. Thingworx–plattform zur integration herausfordernder anforderungen auf dem shopfloor. In Produktions-und Verfügbarkeits-Optimierung Mit Smart Data Ansätzen; sierke VERLAG-Internationaler Wissenschaftsverlag: Goettingen, Germany, 2018; pp. 83–92.

- FOXON Automation. What Is the Smart Maintenance Project in ŠKODA AUTO a.s. Available online: https://www.youtube.com/watch?v=v48vZt7aNw4 (accessed on 17 June 2020).

- Leskovský, R. Modern Methods of Control and Diagnostics of Mechatronic Devices Using IoT and Mixed Reality. Ph.D. Thesis, Slovak University of Technology in Bratislava, Bratislava, Slovakia, 2020. (In Slovak).

- Han, Y.; Zhang, S.; Geng, Z.; Wei, Q.; Ouyang, Z. Level set based shape prior and deep learning for image segmentation. IET Image Process. 2020, 14, 183–191.

- Han, Y.; Ding, N.; Geng, Z.; Wang, Z.; Chu, C. An optimized long short-term memory network based fault diagnosis model for chemical processes. J. Process. Control 2020, 92, 161–168.

- Fierro, F.A.S.; Manosalvas, C.A.P.; Hidrobo, S.R.A.; Rodríguez, N.N.C. Comparativa técnica de herramientas para realidad aumentada: Wikitude, Vuforia y ARtoolkit. Revista Científica Axioma 2019, 19, 86–96.

- Ganz, J.; Beyer, M.; Plotzky, C. Time-Series Based Solution Using InfluxDB. Available online: https://beyermatthias.de/papers/2017/Time-series_based_solution_using_influxdb.pdf (accessed on 17 June 2020).

- Betke, E.; Kunkel, J. Real-time I/O-monitoring of HPC applications with SIOX, elasticsearch, Grafana and FUSE. In International Conference on High Performance Computing; Springer: Cham, Switzerland, 2017; pp. 174–186.

- Stackowiak, R. Azure IoT Hub. In Azure Internet of Things Revealed; Apress: Berkeley, CA, USA, 2019; pp. 73–85.

- Blanco-Novoa, Ó.; Fraga-Lamas, P.; Vilar-Montesinos, M.A.; Fernández-Caramés, T.M. Towards the Internet of Augmented Things: An Open-source Framework to Interconnect IoT Devices and Augmented Reality Systems. Proceedings 2020, 42, 50.

- Contributors of Azure IoT Hub. Understand and Use Device Twins in IoT Hub. Available online: https://docs.microsoft.com/en-us/azure/iot-hub/iot-hubdevguide-device-twins/ (accessed on 17 June 2020).

- Low light SLAM & Marker Triggering. Available online: https://support.wikitude.com/support/discussions/topics/5000082906 (accessed on 1 August 2020).

- Wikitude. SDK 5 vs. SDK 6 Comparison. Available online: https://www.youtube.com/watch?v=zeu8XIJyxKE (accessed on 1 August 2020).

- Wikitude. Input Plugins API. Available online: https://www.wikitude.com/external/doc/documentation/latest/unity/inputpluginsapiunity.html#input-plugins-api (accessed on 1 August 2020).

- Dykier, M. IMG 0135. Available online: https://www.youtube.com/watch?v=1vBxK-9HQ8c (accessed on 1 August 2020).

| GUI Element | Element Properties | Use in a Functional Element |
|---|---|---|
| text | element: string, label: string, unit?: string, posX: float, posY: float, subTopic: string | sensors |
| joystick | element: string, posX: float, posY: float, subTopic: string | controls |
| button | element: string, label: string, posX: float, posY: float, subTopic: string | controls |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Stark, E.; Kučera, E.; Haffner, O.; Drahoš, P.; Leskovský, R. Using Augmented Reality and Internet of Things for Control and Monitoring of Mechatronic Devices. Electronics 2020, 9, 1272. https://doi.org/10.3390/electronics9081272