Mitigating Self-Heating in Solid State Drives for Industrial Internet-of-Things Edge Gateways

Abstract

1. Introduction

- We evaluate how the micro-architectural parameters of an SSD's internal queuing system affect the performance of the drive. To the best of our knowledge, this is the first such evaluation that considers the peculiarities of the IIoT scenario;

- We provide a methodology, orthogonal to state-of-the-art throttling, that guard-bands the self-heating of the drive, assuming that its internal temperature is monitored. The methodology tailors the internal command queues of the drive to reach the desired power throttling level;

- We base all our explorations on a co-simulation framework that processes trace-driven benchmarks (TPCx-IoT [15]) and calculates SSD quality metrics (e.g., IOPS and latency) from the measurement results of a 3D NAND Flash chip. Adapting the benchmark results to the IIoT scenario lends solidity to our assumptions.
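The temperature-driven queue tailoring named in the second contribution can be pictured with a minimal sketch, assuming that queue depth is the knob being adjusted. The function name `select_queue_depth` and both temperature thresholds are hypothetical placeholders, not values from this work; only the 1 to 64 queue-depth range matches the simulated SSD configuration.

```python
def select_queue_depth(temp_c, warn_c=70.0, crit_c=85.0, qd_min=1, qd_max=64):
    """Map a monitored drive temperature to an allowed queue depth.

    warn_c and crit_c are illustrative thresholds (assumptions, not
    measured values); qd_min and qd_max bound the queue depth.
    """
    if temp_c <= warn_c:
        return qd_max  # cool enough: no throttling
    if temp_c >= crit_c:
        return qd_min  # too hot: serve one command at a time
    # Between the thresholds, shrink the queue linearly with temperature.
    frac = (temp_c - warn_c) / (crit_c - warn_c)
    return max(qd_min, int(qd_max - frac * (qd_max - qd_min)))


if __name__ == "__main__":
    for t in (60.0, 72.0, 80.0, 90.0):
        print(f"{t:.0f} C -> QD {select_queue_depth(t)}")
```

A linear ramp is only one possible policy; a stepwise table or a hysteresis band would fit the same interface.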

2. Related Works

3. Background and Methods Applied

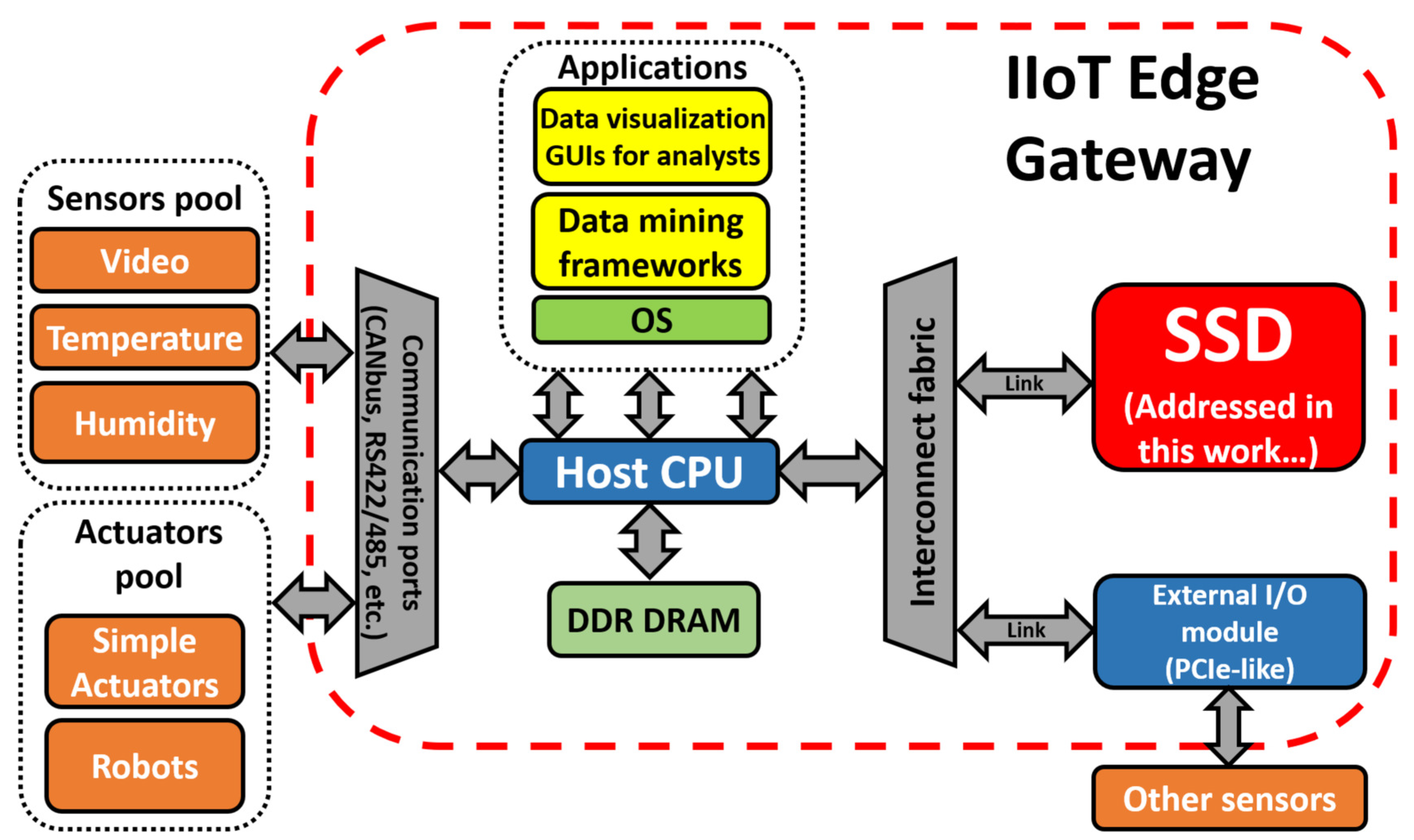

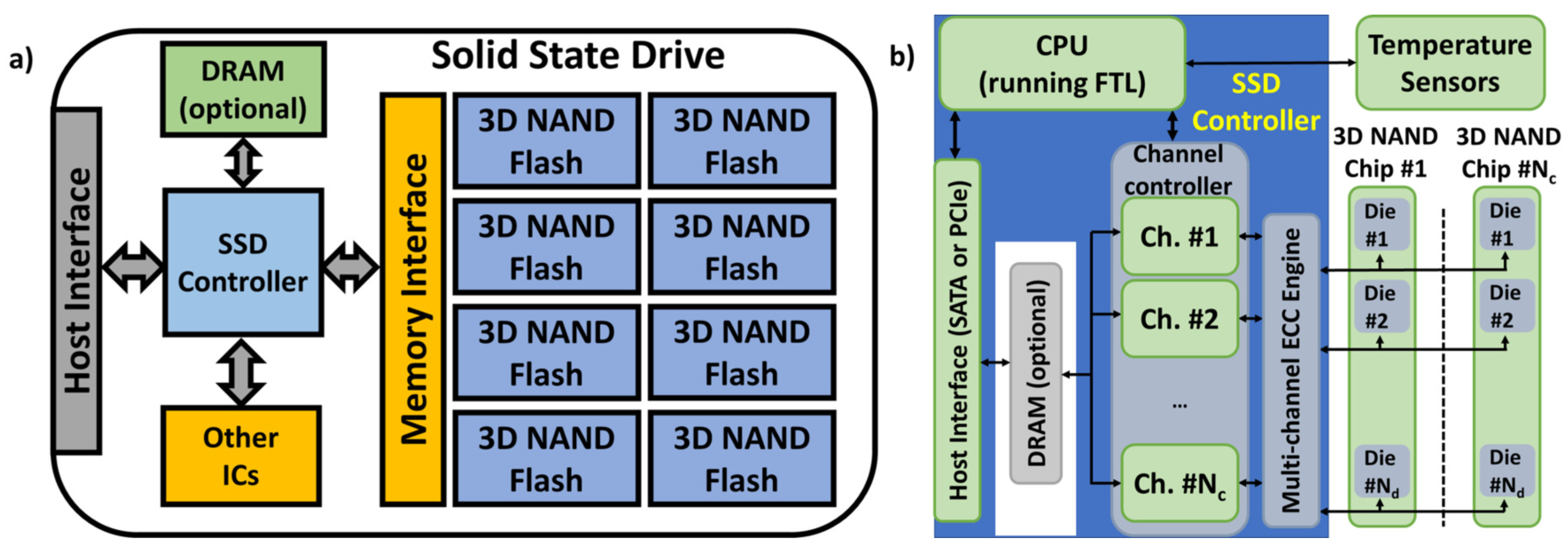

3.1. Edge Gateway and SSD Architectures

3.2. Characterization and Simulation Tools

4. Exploring How to Mitigate the Self-Heating Issue

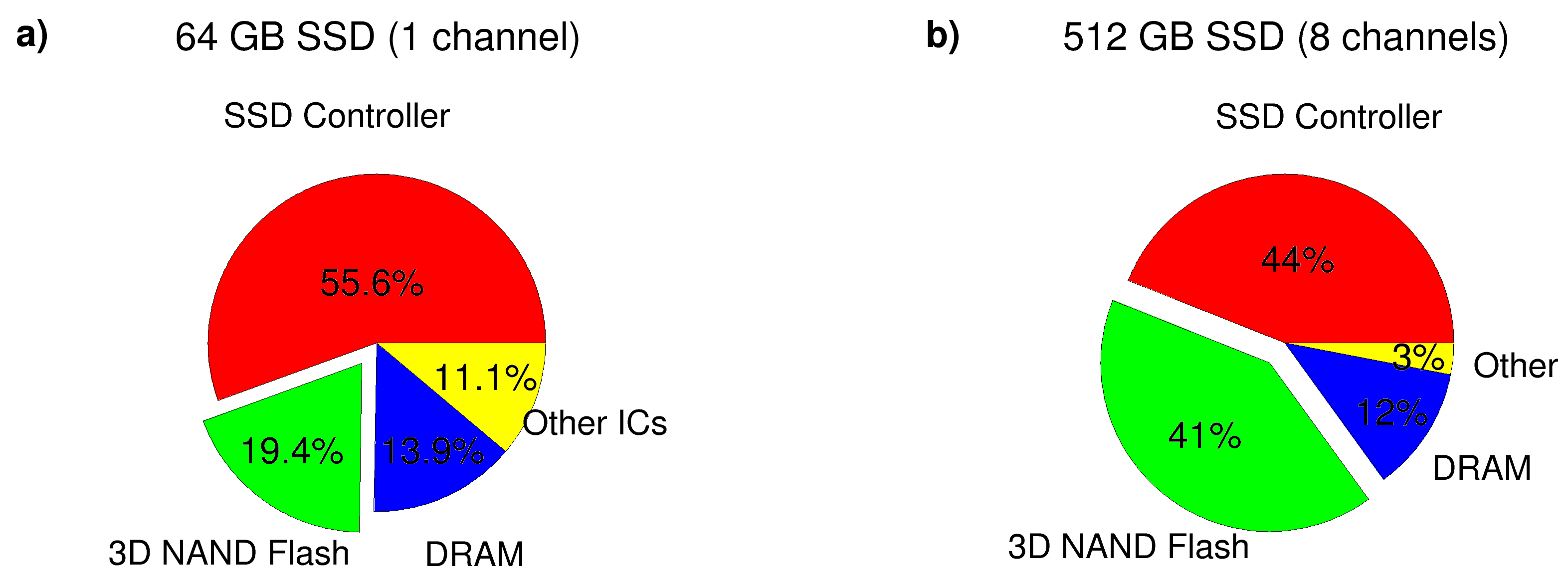

4.1. Characterizing the Power Consumption in SSDs

- The SSD controller, an Application Specific Integrated Circuit (ASIC) whose power consumption increases linearly with time according to the amount of data to process and manage;

- The 3D NAND Flash memory sub-system, whose power contribution depends on the number of channels accessed in parallel and on the operation performed (i.e., data read, write/program, or erase);

- The DRAM buffer used as a cache or as a temporary storage for FTL-metadata structures;

- Other ICs and passive components for power supply and temperature control.
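The four contributions listed above can be combined into a toy additive model. This is a sketch under stated assumptions: the class name `SsdPowerModel`, every coefficient, and the choice to make controller power linear in data rate are placeholders chosen for illustration, not measured values from this work.

```python
from dataclasses import dataclass, field


@dataclass
class SsdPowerModel:
    """Toy additive SSD power model; all coefficients are placeholder watts."""
    controller_idle_w: float = 1.0
    controller_w_per_gbps: float = 0.5  # assumed linear growth with data rate
    dram_w: float = 0.25                # DRAM buffer, treated as constant
    other_w: float = 0.25               # power supply and temperature-control ICs
    nand_w_per_channel: dict = field(
        default_factory=lambda: {"read": 0.125, "program": 0.25, "erase": 0.25}
    )

    def total_w(self, throughput_gbps, active_channels, operation):
        """Sum controller, NAND, DRAM, and remaining components."""
        controller = self.controller_idle_w + self.controller_w_per_gbps * throughput_gbps
        nand = active_channels * self.nand_w_per_channel[operation]
        return controller + nand + self.dram_w + self.other_w


if __name__ == "__main__":
    model = SsdPowerModel()
    for channels in (1, 4, 8):
        print(channels, "channels:", model.total_w(2.0, channels, "program"), "W")
```

The NAND term scales with the number of channels accessed in parallel and with the operation type, which is the dependency that the list attributes to the memory sub-system.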

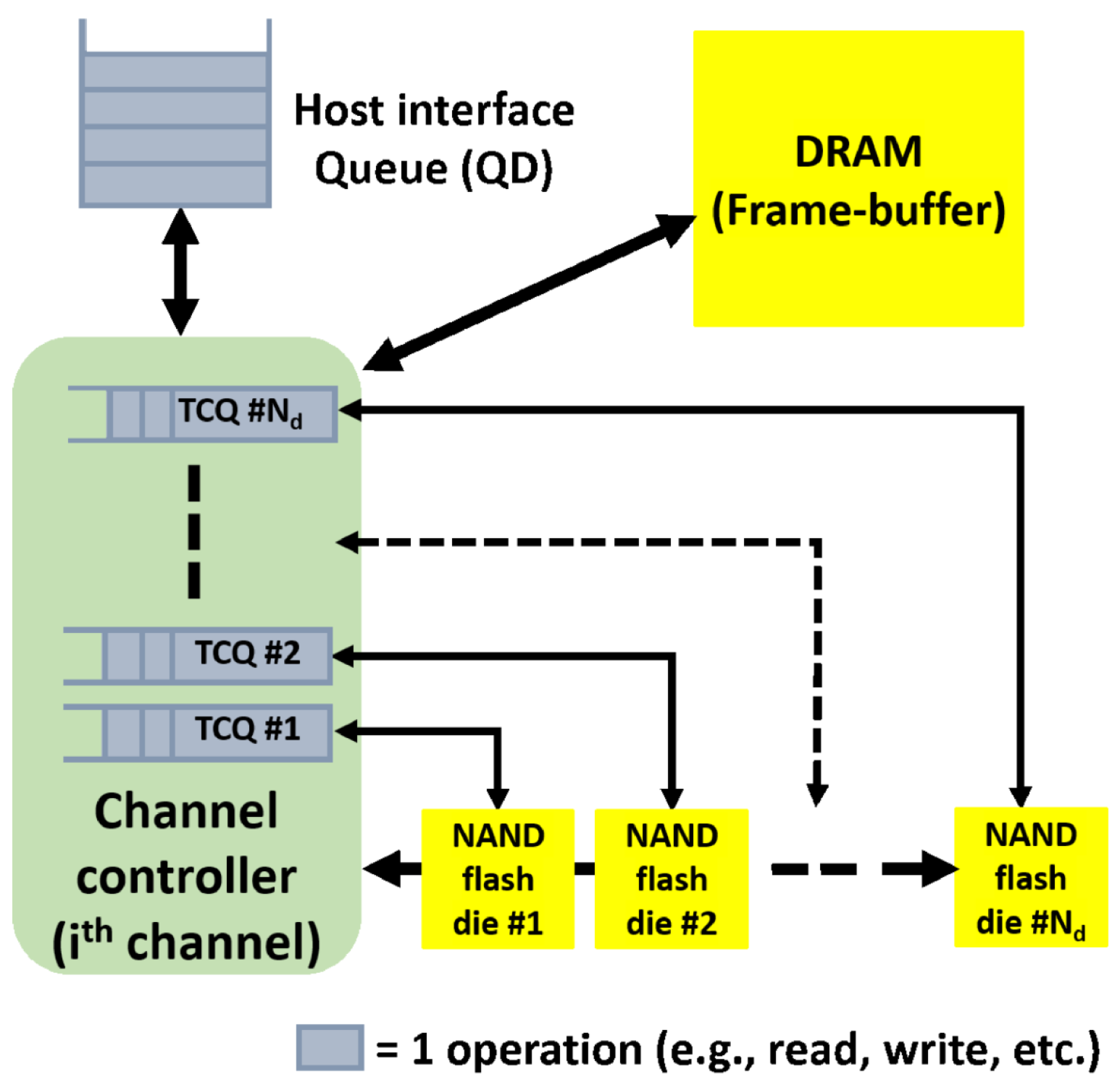

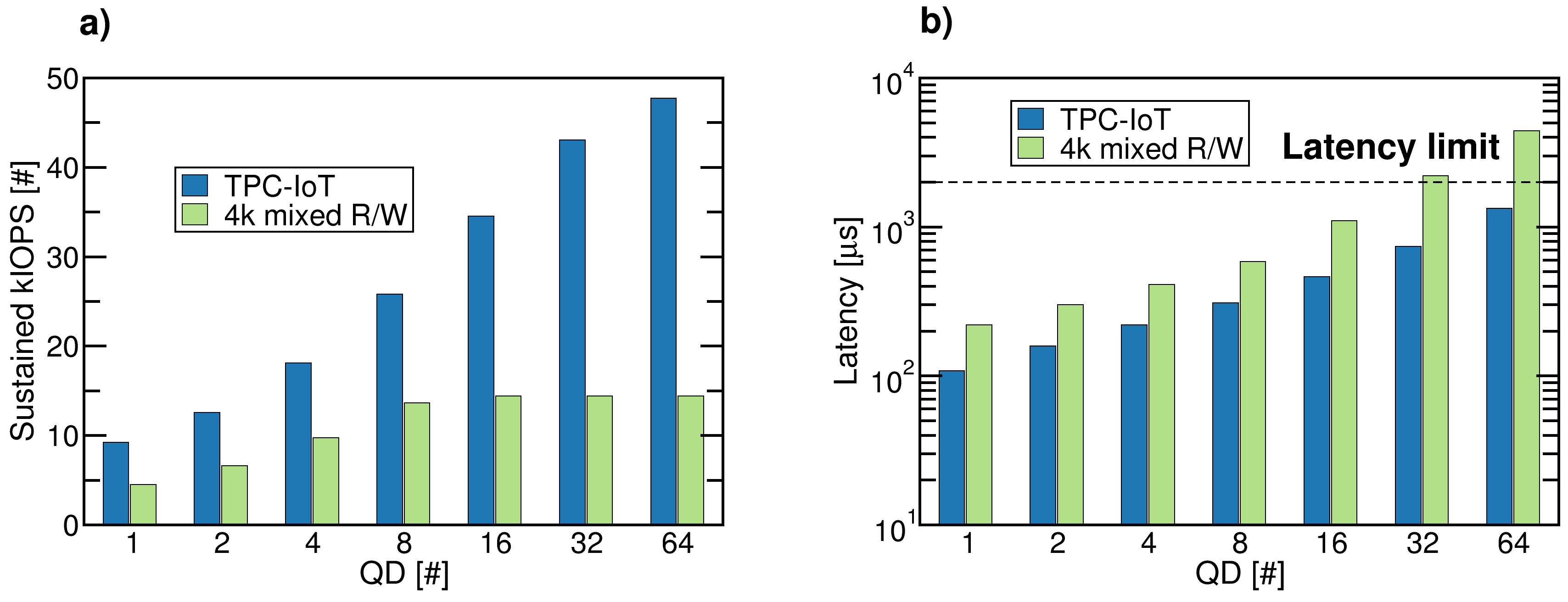

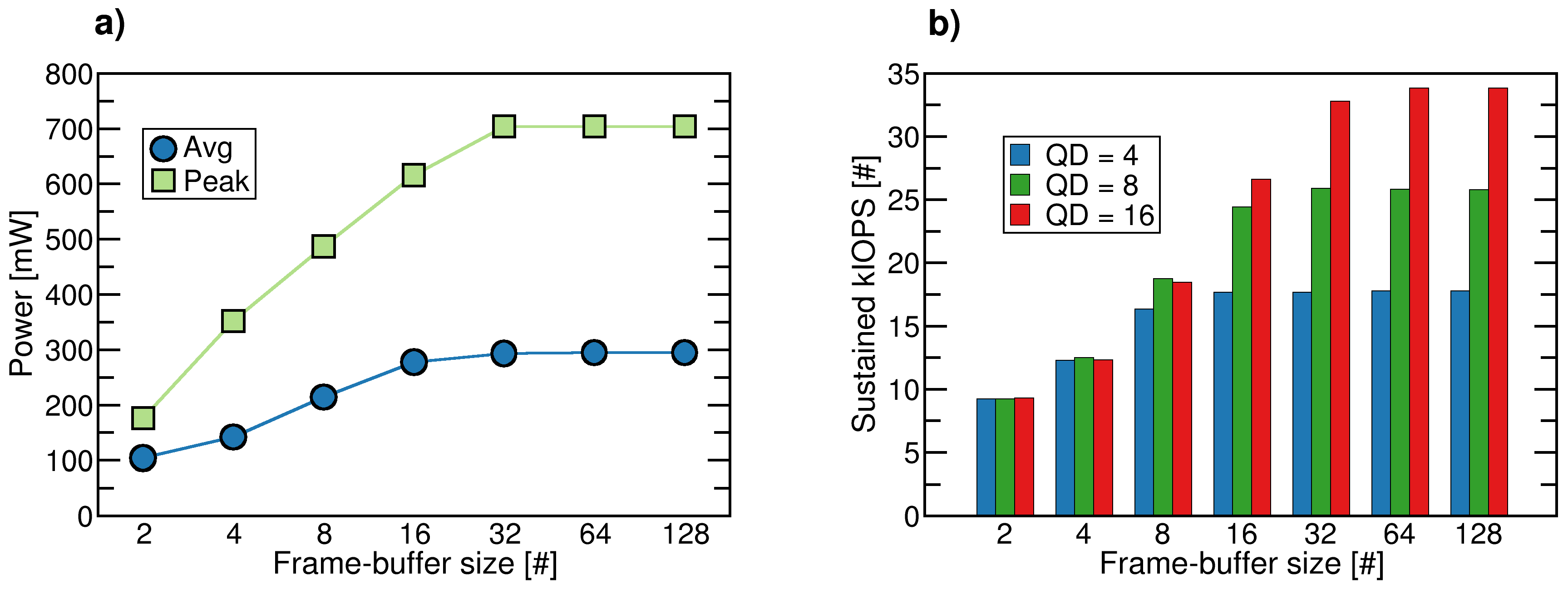

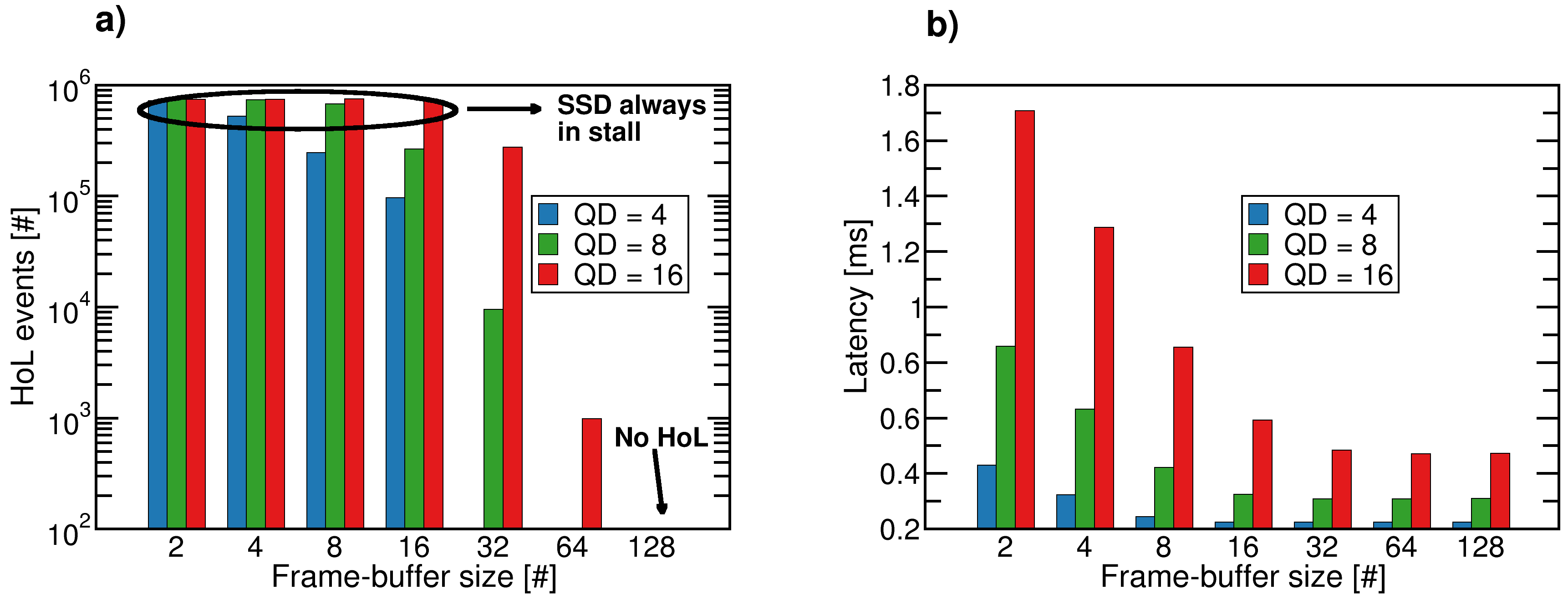

4.2. The Role of SSD Micro-Architecture on Power Consumption

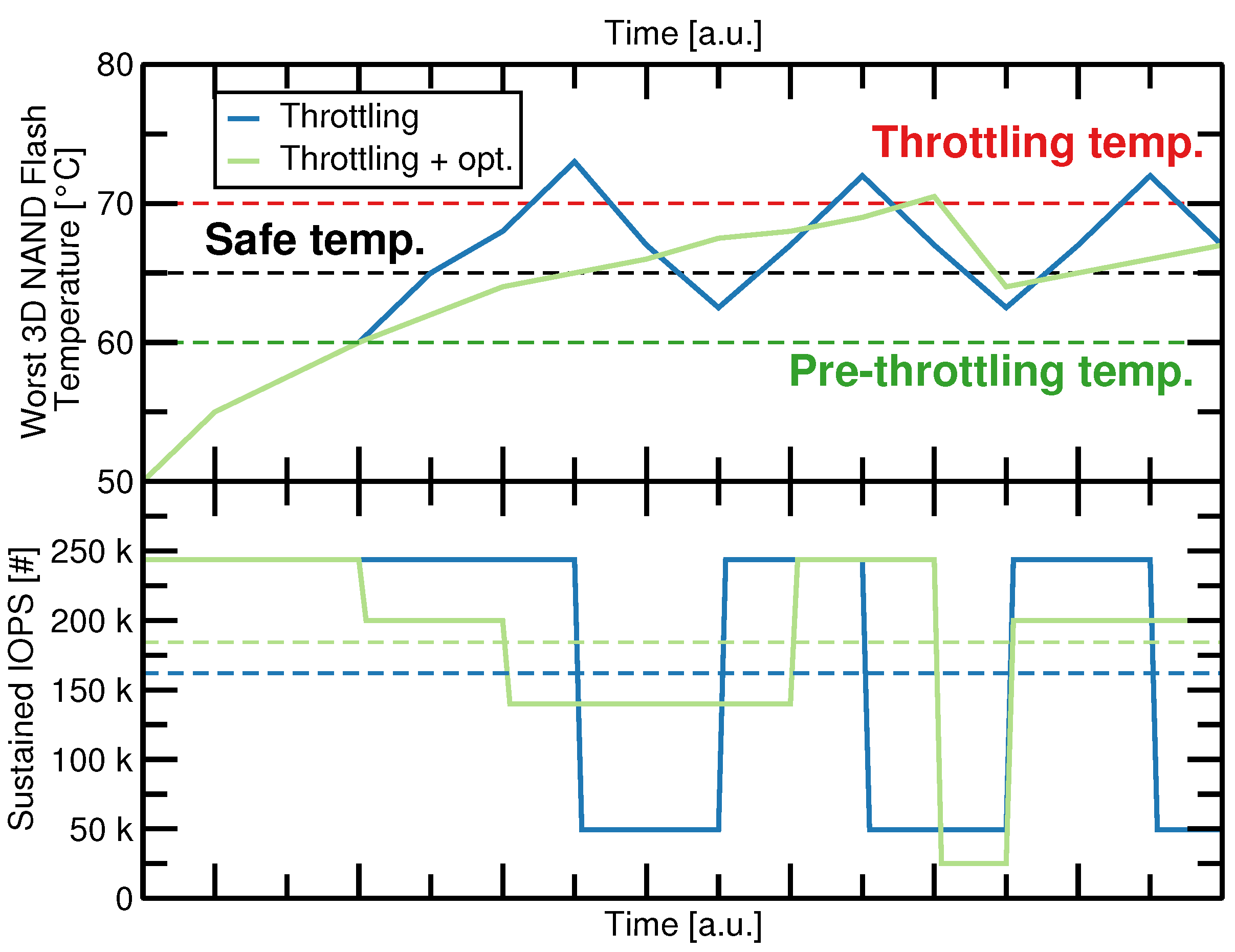

4.3. A Benchmark with Command Submission Time-Based Throttling

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IoT | Internet of Things |
| SSD | Solid State Drive |
| IIoT | Industrial Internet of Things |
| AFR | Annualized Failure Rate |
| MTBF | Mean Time Between Failures |
| ECC | Error Correction Code |
| QoS | Quality of Service |
| OS | Operating System |
| FTL | Flash Translation Layer |
| ASIC | Application Specific Integrated Circuit |
| QD | Queue Depth |
| TCQ | Target Command Queue |
| CST | Command Submission Time |

References

- Fu, J.; Liu, Y.; Chao, H.; Bhargava, B.K.; Zhang, Z. Secure Data Storage and Searching for Industrial IoT by Integrating Fog Computing and Cloud Computing. IEEE Trans. Ind. Inform. 2018, 14, 4519–4528.

- Karthikeyan, P.; Thangavel, M. (Eds.) Applications of Security, Mobile, Analytic and Cloud (SMAC) Technologies for Effective Information Processing and Management; IGI Global: Hershey, PA, USA, 2018; chapter Processing IoT Data: From Cloud to Fog-It’s Time to Be Down to Earth; pp. 124–148.

- Chen, H.; Jia, X.; Li, H. A brief introduction to IoT gateway. In Proceedings of the IET International Conference on Communication Technology and Application (ICCTA 2011), Beijing, China, 14–16 October 2011; pp. 610–613.

- Micheloni, R.; Marelli, A.; Eshghi, K. (Eds.) Inside Solid State Drives (SSDs); Springer: Berlin/Heidelberg, Germany, 2012; chapter SSD Market Overview; pp. 1–17.

- Zuolo, L.; Zambelli, C.; Micheloni, R.; Olivo, P. Solid-State Drives: Memory Driven Design Methodologies for Optimal Performance. Proc. IEEE 2017, 105, 1589–1608.

- Marquart, T. Solid-State-Drive qualification and reliability strategy. In Proceedings of the IEEE International Integrated Reliability Workshop (IIRW), South Lake Tahoe, CA, USA, 11–15 October 2015; pp. 3–6.

- Schroeder, B.; Merchant, A.; Lagisetty, R. Reliability of nand-Based SSDs: What Field Studies Tell Us. Proc. IEEE 2017, 105, 1751–1769.

- Mielke, N.R.; Frickey, R.E.; Kalastirsky, I.; Quan, M.; Ustinov, D.; Vasudevan, V.J. Reliability of Solid-State Drives Based on NAND Flash Memory. Proc. IEEE 2017, 105, 1725–1750.

- Cai, Y.; Luo, Y.; Haratsch, E.F.; Mai, K.; Mutlu, O. Data retention in MLC NAND flash memory: Characterization, optimization, and recovery. In Proceedings of the 2015 IEEE 21st International Symposium on High Performance Computer Architecture (HPCA), Burlingame, CA, USA, 7–11 February 2015; pp. 551–563.

- Zhang, J.; Shihab, M.; Jung, M. Power, Energy, and Thermal Considerations in SSD-Based I/O Acceleration. In Proceedings of the 6th USENIX Workshop on Hot Topics in Storage and File Systems (HotStorage 14), Philadelphia, PA, USA, 17–18 June 2014.

- Takahashi, T.; Yamazaki, S.; Takeuchi, K. Data-retention time prediction of long-term archive SSD with flexible-nLC NAND flash. In Proceedings of the 2016 IEEE International Reliability Physics Symposium (IRPS), Pasadena, CA, USA, 17–21 April 2016; pp. 6C-5-1–6C-5-6.

- Micheloni, R.; Aritome, S.; Crippa, L. Array Architectures for 3-D NAND Flash Memories. Proc. IEEE 2017, 105, 1634–1649.

- Zambelli, C.; Micheloni, R.; Olivo, P. Reliability challenges in 3D NAND Flash memories. In Proceedings of the 2019 IEEE 11th International Memory Workshop (IMW), Monterey, CA, USA, 12–15 May 2019; pp. 1–4.

- Grossi, A.; Zuolo, L.; Restuccia, F.; Zambelli, C.; Olivo, P. Quality-of-Service Implications of Enhanced Program Algorithms for Charge-Trapping NAND in Future Solid-State Drives. IEEE Trans. Device Mater. Reliab. 2015, 15, 363–369.

- Transaction Processing Performance Council (TPC). (TPCx-IoT) Standard Specification Version 1.0.5; TPC: San Francisco, CA, USA, 2020.

- ATP Inc. IoT and IIoT: Flash Storage, Sensors and Actuators in Cloud/Edge. 2018. Available online: https://www.atpinc.com/blog/What-is-iiot-vs-iot-actuators-edge-cloud-storage-sensor-data (accessed on 5 May 2020).

- Schada, J. The Striking Contrast Between IoT and IIoT SSDs. 2016. Available online: https://www.electronicdesign.com/technologies/iot/article/21802116/the-striking-contrast-between-iot-and-iiot-ssds (accessed on 5 May 2020).

- Meza, J.; Wu, Q.; Kumar, S.; Mutlu, O. A Large-Scale Study of Flash Memory Failures in the Field. In Proceedings of the 2015 ACM SIGMETRICS International Conference on Measurement and Modeling of Computer Systems, Portland, OR, USA, 15–19 June 2015; pp. 177–190.

- Wang, Y.; Dong, X.; Zhang, X.; Wang, L. Measurement and Analysis of SSD Reliability Data Based on Accelerated Endurance Test. Electronics 2019, 8, 1357.

- JEDEC. JESD218B Solid-State Drive (SSD) Requirements and Endurance Test Method; JEDEC: Arlington, VA, USA, 2016.

- Zhang, H.; Thompson, E.; Ye, N.; Nissim, D.; Chi, S.; Takiar, H. SSD Thermal Throttling Prediction using Improved Fast Prediction Model. In Proceedings of the 2019 18th IEEE Intersociety Conference on Thermal and Thermomechanical Phenomena in Electronic Systems (ITherm), Las Vegas, NV, USA, 28–31 May 2019; pp. 1016–1019.

- JEDEC. JESD22-A117 Electrically Erasable Programmable ROM (EEPROM) Program / Erase Endurance and Data Retention Stress Test; JEDEC: Arlington, VA, USA, 2018.

- Mizoguchi, K.; Takahashi, T.; Aritome, S.; Takeuchi, K. Data-Retention Characteristics Comparison of 2D and 3D TLC NAND Flash Memories. In Proceedings of the 2017 IEEE International Memory Workshop (IMW), Monterey, CA, USA, 14–17 May 2017; pp. 1–4.

- Park, J.; Shin, H. Modeling of Lateral Migration Mechanism of Holes in 3D NAND Flash Memory Charge Trap Layer during Retention Operation. In Proceedings of the 2019 Silicon Nanoelectronics Workshop (SNW), Kyoto, Japan, 9–10 June 2019; pp. 1–2.

- Luo, Y.; Ghose, S.; Cai, Y.; Haratsch, E.F.; Mutlu, O. Improving 3D NAND Flash Memory Lifetime by Tolerating Early Retention Loss and Process Variation. In Proceedings of the Abstracts of the 2018 ACM International Conference on Measurement and Modeling of Computer Systems, Irvine, CA, USA, 18–22 June 2018; p. 106.

- Deguchi, Y.; Takeuchi, K. 3D-NAND Flash Solid-State Drive (SSD) for Deep Neural Network Weight Storage of IoT Edge Devices with 700x Data-Retention Lifetime Extention. In Proceedings of the 2018 IEEE International Memory Workshop (IMW), Kyoto, Japan, 13–16 May 2018; pp. 1–4.

- Mizushina, K.; Nakamura, T.; Deguchi, Y.; Takeuchi, K. Layer-by-layer Adaptively Optimized ECC of NAND flash-based SSD Storing Convolutional Neural Network Weight for Scene Recognition. In Proceedings of the 2018 IEEE International Symposium on Circuits and Systems (ISCAS), Florence, Italy, 27–30 May 2018; pp. 1–5.

- Karlay Inc. The KalRay Multi-Purpose-Processing-Array (MPPA). 2016. Available online: http://www.kalrayinc.com/kalray/products/#processors (accessed on 5 May 2020).

- Zambelli, C.; Bertaggia, R.; Zuolo, L.; Micheloni, R.; Olivo, P. Enabling Computational Storage Through FPGA Neural Network Accelerator for Enterprise SSD. IEEE Trans. Circuits Syst. II Express Briefs 2019, 66, 1738–1742.

- Yoo, J.; Won, Y.; Hwang, J.; Kang, S.; Choi, J.; Yoon, S.; Cha, J. VSSIM: Virtual machine based SSD simulator. In Proceedings of the IEEE Symposium on Mass Storage Systems and Technologies (MSST), Long Beach, CA, USA, 6–10 May 2013; pp. 1–14.

- Lee, J.; Byun, E.; Park, H.; Choi, J.; Lee, D.; Noh, S.H. CPS-SIM: Configurable and Accurate Clock Precision Solid State Drive Simulator. In Proceedings of the 2009 ACM Symposium on Applied Computing, Honolulu, HI, USA, 9–12 March 2009; pp. 318–325.

- Jung, H.; Jung, S.; Song, Y.H. Architecture exploration of flash memory storage controller through a cycle accurate profiling. IEEE Trans. Consum. Electron. 2011, 57, 1756–1764.

- Dell Inc. Dell Edge Gateway-5000 Series-Installation and Operation Manual. 2019. Available online: https://topics-cdn.dell.com/pdf/dell-edge-gateway-5000_users-guide_en-us.pdf (accessed on 5 May 2020).

- Serial ATA International Organization. SATA Revision 3.4 Specifications. 2020. Available online: www.sata-io.org (accessed on 5 May 2020).

- PCI-SIG. PCI Express Base 3.1 Specification. 2015. Available online: http://www.pcisig.com/specifications/pciexpress/base3/ (accessed on 5 May 2020).

- Microsemi Inc. (A Microchip company). Microsemi PM8609 NVMe2032 Flashtec NVMe Controller. 2019. Available online: https://www.microsemi.com/product-directory/storage-ics/3687-flashtec-nvme-controllers (accessed on 14 June 2019).

- Open Nand Flash Interface (ONFI). Open NAND Flash Interface Specification-Revision 4.2. 2020. Available online: http://www.onfi.org (accessed on 5 May 2020).

- Li, M.; Chou, H.; Ueng, Y.; Chen, Y. A low-complexity LDPC decoder for NAND flash applications. In Proceedings of the 2014 IEEE International Symposium on Circuits and Systems (ISCAS), Melbourne, Australia, 1–5 June 2014; pp. 213–216.

- Zuolo, L.; Zambelli, C.; Micheloni, R.; Indaco, M.; Carlo, S.D.; Prinetto, P.; Bertozzi, D.; Olivo, P. SSDExplorer: A Virtual Platform for Performance/Reliability-Oriented Fine-Grained Design Space Exploration of Solid State Drives. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2015, 34, 1627–1638.

- Zambelli, C.; King, P.; Olivo, P.; Crippa, L.; Micheloni, R. Power-supply impact on the reliability of mid-1X TLC NAND flash memories. In Proceedings of the IEEE International Reliability Physics Symposium (IRPS), Pasadena, CA, USA, 17–21 April 2016; pp. 2B-3-1–2B-3-6.

- QEMU: The FAST! Processor Emulator. 2020. Available online: https://www.qemu.org (accessed on 5 May 2020).

- Poess, M.; Nambiar, R.; Kulkarni, K.; Narasimhadevara, C.; Rabl, T.; Jacobsen, H. Analysis of TPCx-IoT: The First Industry Standard Benchmark for IoT Gateway Systems. In Proceedings of the 2018 IEEE 34th International Conference on Data Engineering (ICDE), Paris, France, 16–19 April 2018; pp. 1519–1530.

- Murakami, K.; Nagai, K.; Tanimoto, A. Single-Package SSD and Ultra-Small SSD Module Utilizing PCI Express Interface. Toshiba Rev. Glob. Ed. 2015, 1, 24–27.

- Zambelli, C.; Micheloni, R.; Crippa, L.; Zuolo, L.; Olivo, P. Impact of the NAND Flash Power Supply on Solid State Drives Reliability and Performance. IEEE Trans. Device Mater. Reliab. 2018, 18, 247–255.

- Intel Corp. Partition Alignment of Intel® SSDs for Achieving Maximum Performance and Endurance. 2014. Available online: https://www.intel.com/content/dam/www/public/us/en/documents/technology-briefs/ssd-partition-alignment-tech-brief.pdf (accessed on 5 May 2020).

- Yu, W.; Liang, F.; He, X.; Hatcher, W.G.; Lu, C.; Lin, J.; Yang, X. A Survey on the Edge Computing for the Internet of Things. IEEE Access 2018, 6, 6900–6919.

- Apacer Technology Inc. Thermal Throttling. Available online: https://industrial.apacer.com/en-ww/Technology/Thermal-Throttling- (accessed on 5 May 2020).

- Ferreira, A.P. SMARTER: A Smarter-Device-Manager for Kubernetes on the Edge. 2020. Available online: https://community.arm.com/developer/research/b/articles/posts/a-smarter-device-manager-for-kubernetes-on-the-edge (accessed on 5 May 2020).

- Intel Corp. Intel® Data Center SSDs: Important SMART Attribute Indicators. Available online: https://www.intel.com/content/www/us/en/support/articles/000055367/memory-and-storage/data-center-ssds.html (accessed on 5 May 2020).

- Wu, Q.; Dong, G.; Zhang, T. A First Study on Self-Healing Solid-State Drives. In Proceedings of the 2011 3rd IEEE International Memory Workshop (IMW), Monterey, CA, USA, 22–25 May 2011; pp. 1–4.

| Topic | References |
|---|---|
| SSDs Storage in IoT and IIoT | [1,2,16] |
| SSD temperature failures | [7,8,18,19] |
| Thermal management in SSD | [10,21] |
| 3D NAND Flash technology retention temperature sensitivity and mitigation | [23,24,25,26,27,29] |
| SSD reliability/performance simulators | [30,31,32] |

| Component | Value |
|---|---|
| CPU | Dual-core @ 1 GHz |
| DRAM | 2 GB |
| Interconnection fabric | PCIe gen2 X4 |
| OS | Linux Ubuntu |
| SSD | from 64 GB up to 512 GB |

| Parameter | Value |
|---|---|
| Capacity | 64–512 GB |
| Host interface | PCIe gen2 X4 |
| Queue Depth (QD) | Variable from 1 to 64 |
| Channels | 1–8 |
| Targets (number of Flash die per chip) | 8 |
| Storage medium technology | TLC 3D NAND Flash |
| 3D NAND Flash page | 16 KB + parity (4 sectors of 4320 B) |
| DRAM cache | 64–512 MB |
| ECC | LDPC up to 220 bits |
| Advanced ECC protection | 1 bit soft decoding |
| Over-Provisioning | 30% |
| Write Amplification Factor | 2.4 |
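Two rows of the SSD configuration lend themselves to a short worked calculation. The sketch below assumes that "16 KB" means 16 × 1024 bytes of user data per page and that the write amplification factor multiplies host writes to give NAND writes; the function names are illustrative only.

```python
SECTORS_PER_PAGE = 4
SECTOR_BYTES = 4320               # from the table: 4 sectors of 4320 B
USER_BYTES_PER_PAGE = 16 * 1024   # assumption: 16 KB = 16384 B of user data
WAF = 2.4                         # Write Amplification Factor from the table


def page_spare_bytes():
    """Bytes per page left for parity after the user data is accounted for."""
    return SECTORS_PER_PAGE * SECTOR_BYTES - USER_BYTES_PER_PAGE


def nand_bytes_written(host_bytes, waf=WAF):
    """Bytes physically programmed into NAND for a given amount of host writes."""
    return host_bytes * waf


if __name__ == "__main__":
    spare = page_spare_bytes()
    print("spare bytes per page:", spare)                      # 896
    print("spare bytes per sector:", spare // SECTORS_PER_PAGE)  # 224
    print("NAND bytes per 1 GiB of host writes:", nand_bytes_written(2**30))
```

Under these assumptions each 4320 B sector carries 4096 B of user data plus 224 B of spare area, and every byte written by the host costs 2.4 bytes of NAND programming.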

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zambelli, C.; Zuolo, L.; Crippa, L.; Micheloni, R.; Olivo, P. Mitigating Self-Heating in Solid State Drives for Industrial Internet-of-Things Edge Gateways. Electronics 2020, 9, 1179. https://doi.org/10.3390/electronics9071179
