Adaptive Weighted High Frequency Iterative Algorithm for Fractional-Order Total Variation with Nonlocal Regularization for Image Reconstruction

Abstract

1. Introduction

2. Related Works

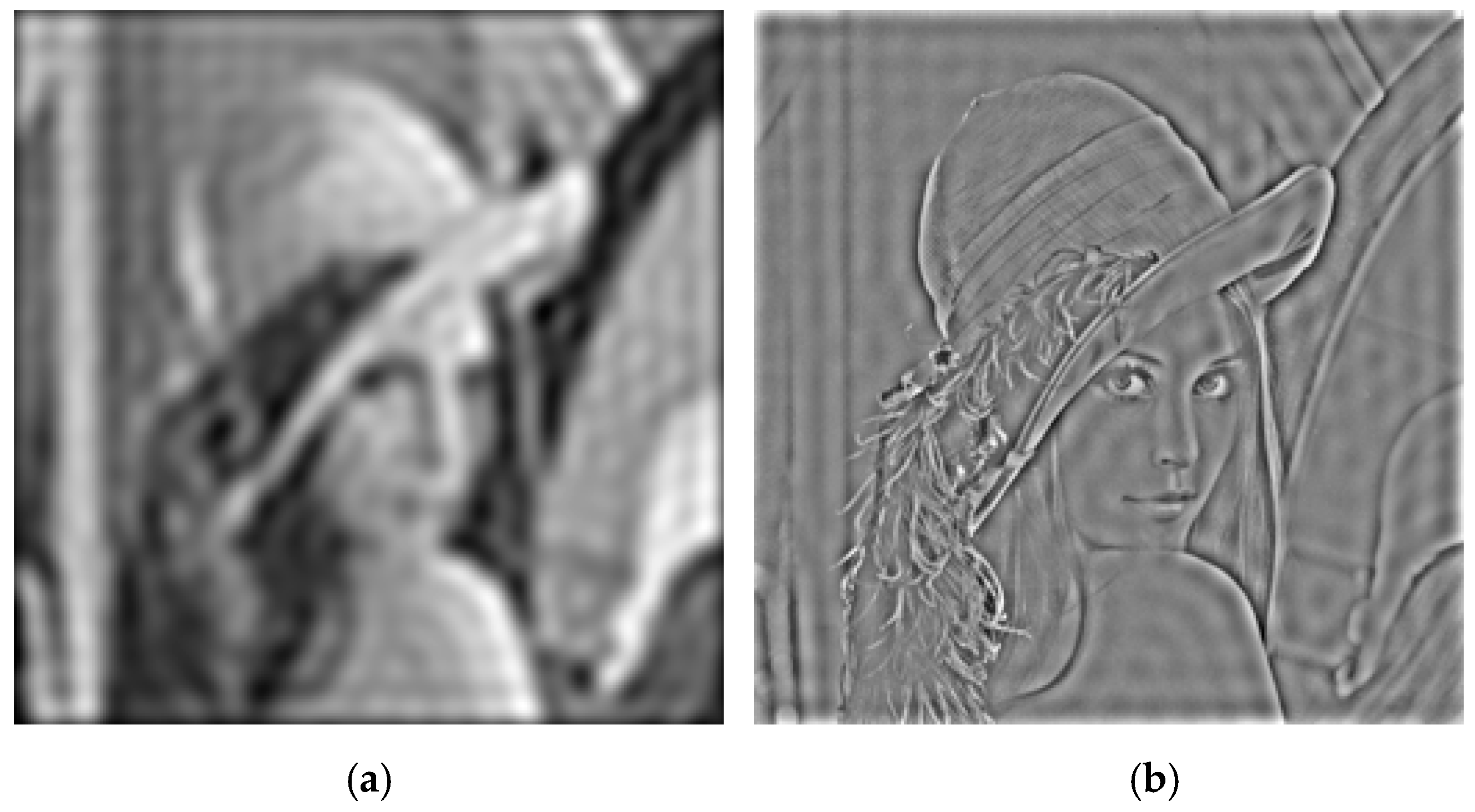

2.1. Fractional-Order Differential Model

2.2. Adaptive Reweighted Total Variation Model

2.3. Nonlocal Regularization Model

3. Reweighted Fractional-Order TV Method with Nonlocal Regularization

| Algorithm 1 The proposed algorithm |
|---|
| Input: the measurement y, the measurement matrix A |
| Initialization: initialize the image using the standard DCT recovery method |
| Set parameters a, b, c, , , , |
| Outer loop: |
| Compute the regularization matrix W by Equations (17) and (18) |
| Decompose the low frequency components and high frequency components |
| Compute weights w |
| Inner loop: |
| Update using Equation (26) |
| Update low frequency gradients and high frequency gradients using Equations (28) and (29) |
| Update using Equation (31) |
| End for |
| Update the Lagrangian multipliers a, b, c using Equation (24) |
| End for |
| Output: the reconstructed image x |
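
The nesting of Algorithm 1 can be sketched as plain control flow. This is only a minimal illustration, not the authors' implementation: the function `run_algorithm`, the step names (`compute_W`, `eq26`, and so on), the `state` dictionary, and the fixed iteration counts `n_outer` and `n_inner` are all hypothetical, and each step is a caller-supplied placeholder standing in for the equation it is named after.

```python
from typing import Callable, Dict

# A step takes the current state dict and returns an updated state dict.
Step = Callable[[dict], dict]


def run_algorithm(state: dict, steps: Dict[str, Step],
                  n_outer: int, n_inner: int) -> dict:
    """Control flow of Algorithm 1 only; fixed loop counts are an assumption."""
    for _ in range(n_outer):
        state = steps["compute_W"](state)        # Equations (17) and (18)
        state = steps["decompose"](state)        # low/high frequency components
        state = steps["compute_weights"](state)  # weights w
        for _ in range(n_inner):
            state = steps["eq26"](state)         # update using Equation (26)
            state = steps["eq28_29"](state)      # gradient updates, Equations (28), (29)
            state = steps["eq31"](state)         # update using Equation (31)
        state = steps["update_multipliers"](state)  # Equation (24)
    return state


def make_tracer(name: str) -> Step:
    """Placeholder step: records its own name instead of doing any numerics."""
    def step(state: dict) -> dict:
        return {**state, "trace": state["trace"] + [name]}
    return step


STEP_NAMES = ["compute_W", "decompose", "compute_weights",
              "eq26", "eq28_29", "eq31", "update_multipliers"]
steps = {name: make_tracer(name) for name in STEP_NAMES}

result = run_algorithm({"trace": []}, steps, n_outer=2, n_inner=3)
print(len(result["trace"]), "step calls")
```

Passing the steps in as callables keeps the skeleton independent of how each equation is actually evaluated, so a real update rule can replace a tracer without touching the loop structure.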

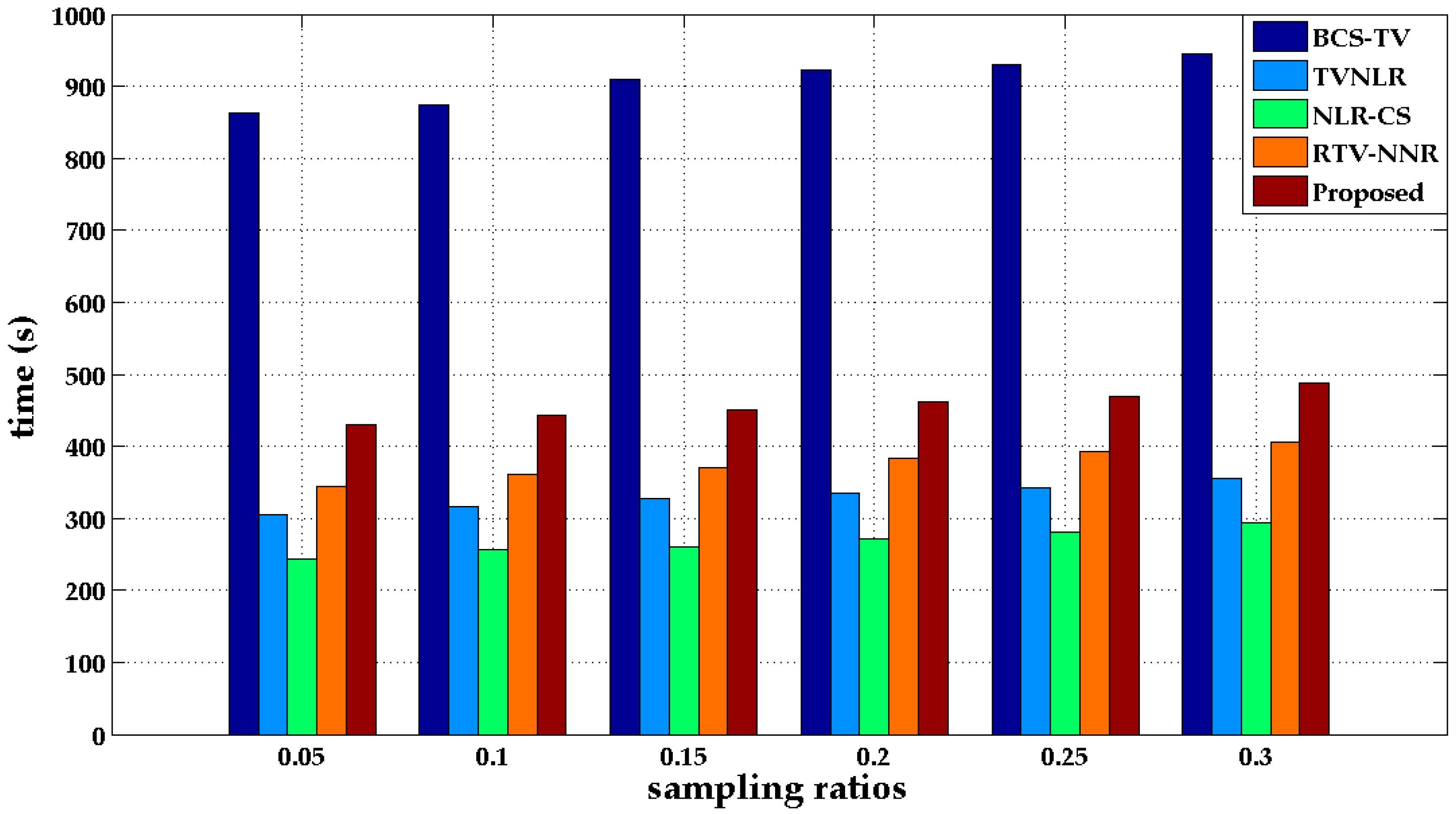

4. Experimental Results and Analysis

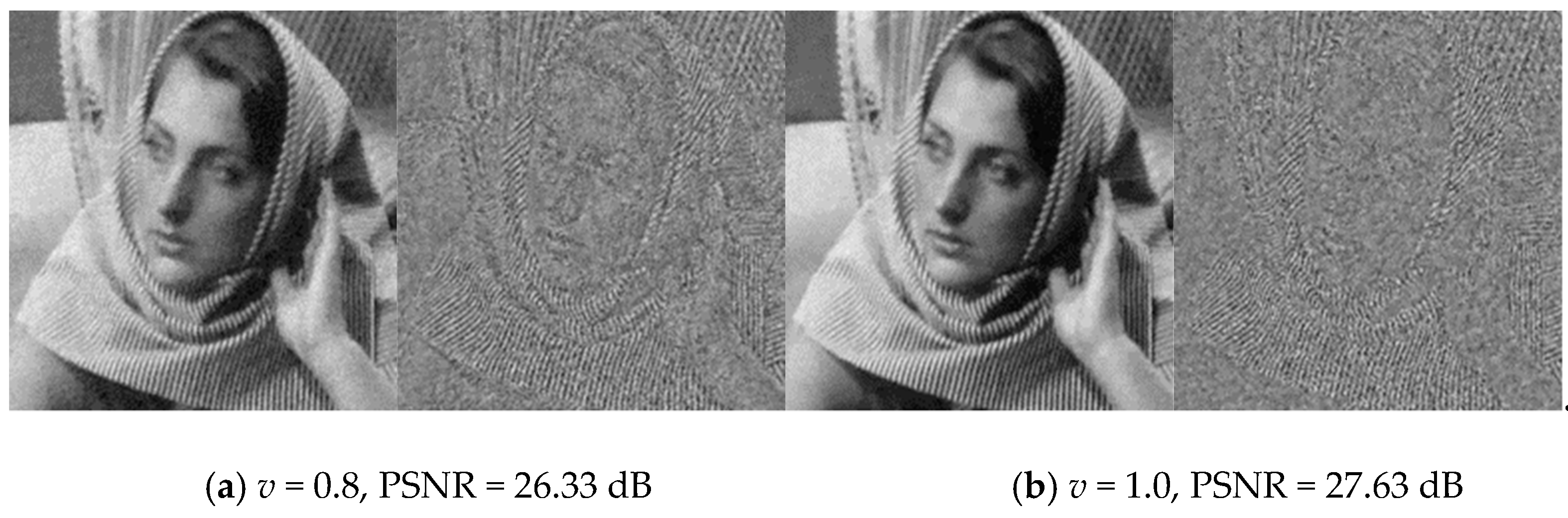

4.1. The Influence of Fractional-Order v

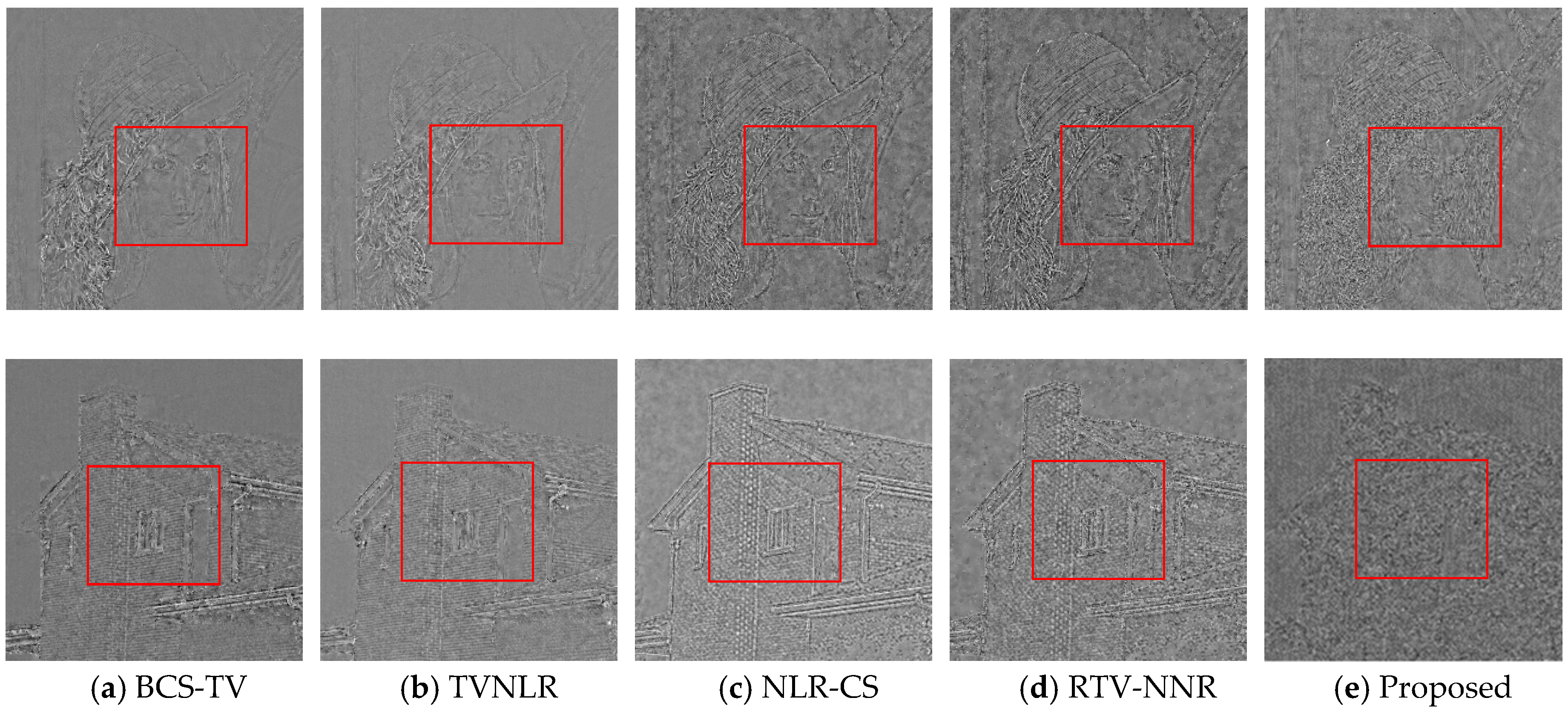

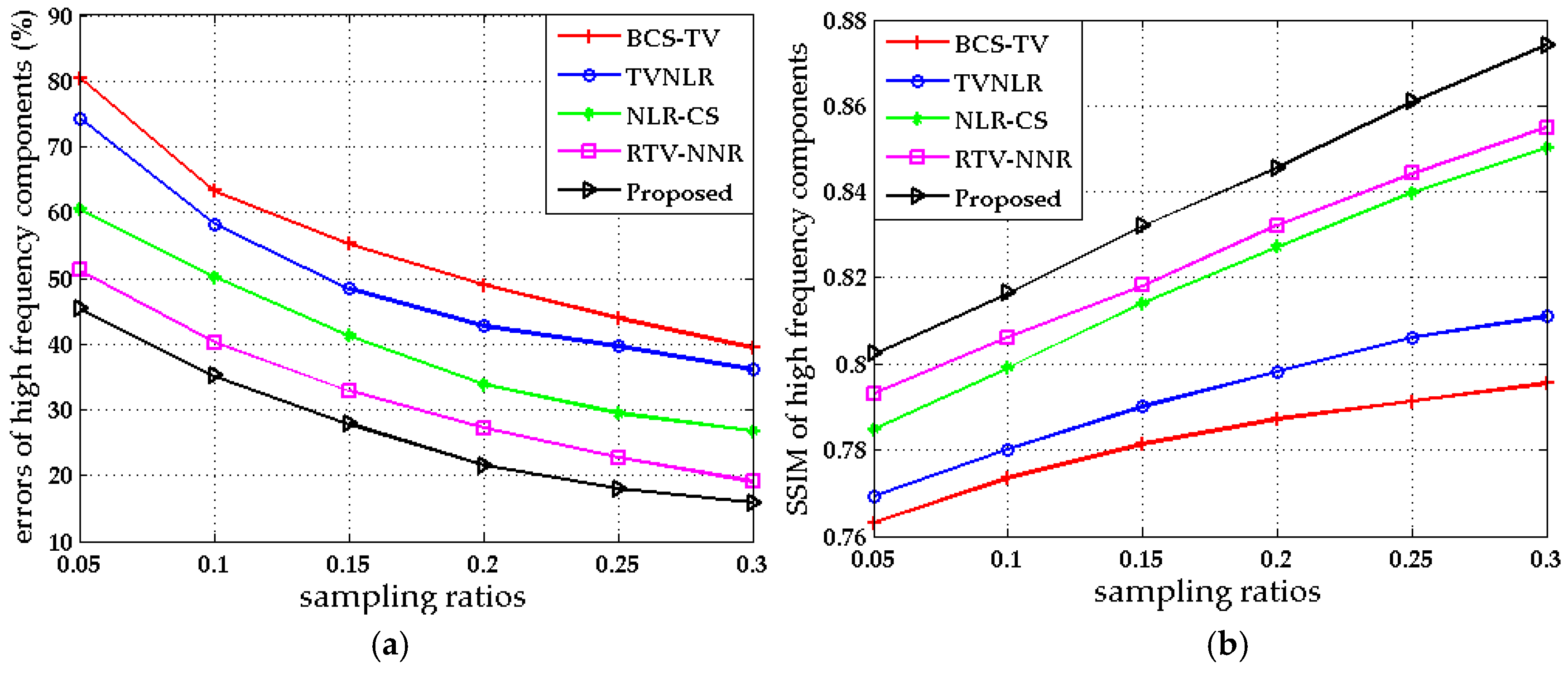

4.2. Experimental Results

5. Discussion and Conclusions

Author Contributions

Funding

Conflicts of Interest

| Image | Method | Sampling ratio 0.05 | Sampling ratio 0.1 | Sampling ratio 0.15 | Sampling ratio 0.2 | Sampling ratio 0.25 | Sampling ratio 0.3 |
|---|---|---|---|---|---|---|---|
| Lena | BCS-TV | 23.34 | 25.71 | 28.08 | 29.61 | 30.94 | 31.82 |
| | TVNLR | 24.42 | 26.47 | 28.62 | 30.33 | 31.31 | 32.22 |
| | NLR-CS | 26.68 | 28.70 | 30.46 | 32.02 | 33.58 | 34.67 |
| | RTV-NNR | 27.82 | 30.11 | 31.81 | 33.34 | 34.77 | 36.00 |
| | Proposed | 29.64 | 31.34 | 32.73 | 33.97 | 34.92 | 35.85 |
| Barbara | BCS-TV | 20.18 | 22.75 | 24.61 | 26.12 | 27.55 | 28.95 |
| | TVNLR | 21.26 | 23.01 | 24.72 | 26.68 | 28.33 | 29.69 |
| | NLR-CS | 24.31 | 26.04 | 27.69 | 29.22 | 30.67 | 31.99 |
| | RTV-NNR | 26.09 | 27.88 | 29.50 | 31.12 | 32.72 | 34.15 |
| | Proposed | 27.72 | 29.42 | 30.86 | 32.01 | 33.18 | 34.20 |
| Cameraman | BCS-TV | 20.43 | 23.29 | 25.47 | 27.82 | 29.96 | 31.14 |
| | TVNLR | 21.26 | 23.89 | 25.90 | 28.08 | 30.19 | 31.64 |
| | NLR-CS | 24.12 | 26.29 | 28.05 | 29.87 | 31.60 | 33.07 |
| | RTV-NNR | 25.83 | 27.77 | 29.42 | 31.08 | 32.70 | 34.21 |
| | Proposed | 27.30 | 28.92 | 30.46 | 31.73 | 32.75 | 33.84 |
| Monarch | BCS-TV | 19.94 | 22.34 | 24.92 | 26.80 | 28.46 | 30.00 |
| | TVNLR | 20.83 | 23.27 | 25.98 | 27.58 | 29.16 | 31.02 |
| | NLR-CS | 23.91 | 26.29 | 28.10 | 29.78 | 31.35 | 32.81 |
| | RTV-NNR | 25.36 | 27.46 | 29.31 | 31.19 | 33.04 | 34.66 |
| | Proposed | 26.62 | 28.34 | 30.06 | 31.59 | 33.01 | 34.47 |
| Parrots | BCS-TV | 26.64 | 28.83 | 30.59 | 31.83 | 32.94 | 34.01 |
| | TVNLR | 27.30 | 29.54 | 31.26 | 32.79 | 34.30 | 35.66 |
| | NLR-CS | 29.66 | 31.64 | 33.46 | 35.03 | 36.63 | 38.11 |
| | RTV-NNR | 30.35 | 32.32 | 34.15 | 35.74 | 37.33 | 38.85 |
| | Proposed | 31.14 | 32.98 | 34.57 | 36.02 | 37.41 | 38.63 |
| Clock | BCS-TV | 24.75 | 27.52 | 29.30 | 31.04 | 32.44 | 32.78 |
| | TVNLR | 25.49 | 28.35 | 30.29 | 31.98 | 32.56 | 33.67 |
| | NLR-CS | 27.80 | 29.76 | 31.55 | 33.17 | 34.68 | 35.90 |
| | RTV-NNR | 29.01 | 31.13 | 32.84 | 34.34 | 35.76 | 36.92 |
| | Proposed | 30.48 | 32.23 | 33.89 | 35.21 | 36.34 | 37.10 |
| House | BCS-TV | 24.12 | 27.51 | 29.50 | 31.23 | 32.43 | 33.36 |
| | TVNLR | 26.97 | 29.82 | 31.52 | 33.17 | 34.32 | 35.24 |
| | NLR-CS | 28.77 | 30.91 | 32.68 | 34.03 | 35.26 | 36.40 |
| | RTV-NNR | 29.33 | 31.32 | 33.12 | 34.75 | 35.84 | 36.63 |
| | Proposed | 30.60 | 32.29 | 33.70 | 35.15 | 36.29 | 36.94 |
| Boats | BCS-TV | 22.26 | 24.29 | 26.44 | 27.98 | 28.98 | 30.22 |
| | TVNLR | 22.88 | 24.95 | 27.08 | 28.72 | 29.90 | 31.03 |
| | NLR-CS | 25.05 | 26.94 | 28.75 | 30.28 | 31.66 | 32.84 |
| | RTV-NNR | 26.52 | 28.33 | 29.99 | 31.42 | 32.96 | 34.04 |
| | Proposed | 28.11 | 29.71 | 31.13 | 32.39 | 33.36 | 34.17 |
| Chart1 | BCS-TV | 15.97 | 19.33 | 22.46 | 26.84 | 30.70 | 33.43 |
| | TVNLR | 16.04 | 21.48 | 25.69 | 29.72 | 33.84 | 36.82 |
| | NLR-CS | 21.12 | 25.31 | 28.93 | 32.46 | 35.66 | 38.35 |
| | RTV-NNR | 22.71 | 26.55 | 30.04 | 33.39 | 36.37 | 39.07 |
| | Proposed | 23.19 | 27.20 | 30.33 | 33.25 | 36.04 | 38.44 |
| Chart2 | BCS-TV | 16.48 | 20.81 | 23.91 | 26.25 | 28.07 | 29.99 |
| | TVNLR | 17.74 | 21.73 | 25.45 | 28.04 | 29.79 | 30.69 |
| | NLR-CS | 20.42 | 24.08 | 27.53 | 30.41 | 32.95 | 35.22 |
| | RTV-NNR | 22.10 | 25.65 | 28.90 | 31.75 | 34.14 | 36.23 |
| | Proposed | 23.67 | 26.78 | 29.92 | 32.48 | 34.54 | 36.39 |
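
As a small worked example on the table above, the per-ratio averages can be compared directly. The sketch below is illustrative arithmetic only: the numbers are copied from the RTV-NNR and Proposed rows at sampling ratios 0.05 and 0.3, while the names `scores`, `mean`, and `mean_gain` are hypothetical helpers, not part of the paper.

```python
# Scores copied from the table, ten images in table order:
# Lena, Barbara, Cameraman, Monarch, Parrots, Clock, House, Boats, Chart1, Chart2.
scores = {
    0.05: {
        "RTV-NNR": [27.82, 26.09, 25.83, 25.36, 30.35,
                    29.01, 29.33, 26.52, 22.71, 22.10],
        "Proposed": [29.64, 27.72, 27.30, 26.62, 31.14,
                     30.48, 30.60, 28.11, 23.19, 23.67],
    },
    0.3: {
        "RTV-NNR": [36.00, 34.15, 34.21, 34.66, 38.85,
                    36.92, 36.63, 34.04, 39.07, 36.23],
        "Proposed": [35.85, 34.20, 33.84, 34.47, 38.63,
                     37.10, 36.94, 34.17, 38.44, 36.39],
    },
}


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def mean_gain(ratio):
    """Average score of Proposed minus average score of RTV-NNR at one ratio."""
    row = scores[ratio]
    return mean(row["Proposed"]) - mean(row["RTV-NNR"])


gain_low = mean_gain(0.05)   # lowest sampling ratio in the table
gain_high = mean_gain(0.3)   # highest sampling ratio in the table
print(f"mean gain at 0.05: {gain_low:+.3f}")
print(f"mean gain at 0.3:  {gain_high:+.3f}")
```

On these ten images the average margin of the proposed method over RTV-NNR is largest at the 0.05 sampling ratio (a little over 1.3) and marginally negative at 0.3, so the tabulated advantage is concentrated at low sampling ratios.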

| Image | BCS-TV | TVNLR | NLR-CS | RTV-NNR | Proposed |
|---|---|---|---|---|---|
| Lena | 0.8122 | 0.8178 | 0.8492 | 0.8502 | 0.8616 |
| Barbara | 0.8083 | 0.8127 | 0.8394 | 0.8569 | 0.8671 |
| Cameraman | 0.7974 | 0.8034 | 0.8280 | 0.8363 | 0.8390 |
| Monarch | 0.7632 | 0.7692 | 0.7848 | 0.7931 | 0.7992 |
| Parrots | 0.8587 | 0.8632 | 0.8753 | 0.8832 | 0.8926 |
| Clock | 0.8613 | 0.8680 | 0.8814 | 0.8947 | 0.8982 |
| House | 0.8703 | 0.8775 | 0.8937 | 0.9061 | 0.9082 |
| Boats | 0.8089 | 0.8197 | 0.8316 | 0.8430 | 0.8538 |
| Chart1 | 0.6285 | 0.6320 | 0.6577 | 0.6783 | 0.6850 |
| Chart2 | 0.6593 | 0.6627 | 0.6804 | 0.6922 | 0.7008 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, H.; Qin, Y.; Ren, H.; Chang, L.; Hu, Y.; Zheng, H. Adaptive Weighted High Frequency Iterative Algorithm for Fractional-Order Total Variation with Nonlocal Regularization for Image Reconstruction. Electronics 2020, 9, 1103. https://doi.org/10.3390/electronics9071103
