1. Introduction

The Internet of Things (IoT) is changing our lives drastically. Connectivity among people, things, and businesses is increasing exponentially, enabling flexible exchange of data among billions of devices and processes. With the rapid increase in IoT devices, energy demand is also increasing [1]. To cope with this issue, energy harvesting (EH) devices have been introduced to the market. EH devices capture ambient renewable energy from the environment, such as solar radiation, wind, human motion, and ambient radio-frequency signals. Consequently, EH is considered a promising technique for prolonging network lifetime and providing a satisfactory quality of experience for IoT devices.

A new network paradigm known as Mobile Edge Computing (MEC) has been introduced to relieve mobile devices of computationally intensive tasks. Edge servers are deployed near the mobile devices to significantly reduce latency, avoid congestion, and prolong network lifetime. IoT devices offload their heavy computation workload to MEC devices such as base stations and access points [2]. Integrating EH and MEC techniques improves computation performance and opens new possibilities for cloud computing [3,4].

Computation offloading helps low-memory devices by executing heavy tasks on MEC servers. Tasks are directly associated with IoT devices. A task that is going to be offloaded must be transmitted over a wireless access network, and its time constraint must be considered during this process [5]. However, making offloading decisions in MEC systems with multiple energy harvesting devices is still challenging. A critical problem in computation offloading is selecting the optimal MEC device from several candidates within radio coverage range; in addition, data sent over the network is vulnerable to attacks [6]. Many computation offloading schemes have been presented for fog computing scenarios. Chang et al. [7] used a Lyapunov optimization-based algorithm for offloading and resource allocation. Their algorithm can dynamically coordinate and allocate resources to fog nodes. They focused on subproblems such as latency, the power consumption of EH devices, and the priority of mobile devices, and addressed them by minimizing the upper bound of the Lyapunov drift-plus-penalty function. Liu et al. [8] used a queuing model to achieve objective optimization in fog computing scenarios. Their system helps minimize energy consumption and improve delay performance, and it also optimizes the payment cost for mobile devices. Chen et al. [9] studied a distributed computation offloading decision-making problem. They formulated multi-user computation offloading as a game and used a game-theoretic approach to reach a Nash equilibrium. However, they treat the offloading decision strategy and energy control as separate issues.

It is worth noting that most previous works either do not consider dynamic offloading or only consider wireless-powered single-user MEC systems with binary offloading. In this paper, a smart and energy-efficient computation offloading algorithm is designed for multi-user, multi-server MEC systems with different EH devices. The proposed algorithm is based on integer linear programming for dynamic offloading. It traverses the feasible region bounded by different linear constraint functions and finds the first intersection between the objective function and the feasible region. For each mobile device, the proposed algorithm chooses the execution mode among local execution, offloading execution, and task dropping. The main contributions of this paper include:

Proposing a dynamic framework for an energy-efficient computation offloading approach based on linear programming in a multi-user, multi-server MEC environment.

Presenting an efficient approach that allows switching between different modes (offloading execution, task dropping, and local execution) based on the executing tasks.

Conducting extensive experiments to evaluate the performance, which show that the proposed method performs well compared to existing models.

The remainder of this paper is organized as follows. Section 2 discusses work related to the proposed system. Section 3 introduces the design of the proposed model. Section 4 explains the proposed model using linear programming. Section 5 presents the system flow. Section 6 describes the experimental results and evaluates the performance. Section 7 provides some discussion, and finally, Section 8 concludes our work.

2. Related Work

Edge computing refers to computational tasks performed at or near the edge of a network. In contrast, fog computing is the connection between the edge devices and the cloud in the system. Both are beneficial technologies, and their importance and usefulness are described by Mach, P. et al. [10]. Edge computing builds the underlying architecture of computational resources at the edge, and fog computing uses this architecture for computational offloading. It also enhances the network connection among different edge devices or edge servers. Sensing observations consumes more energy than transmission, and transmitting data to the cloud consumes less energy than local execution. Hence, offloading is helpful when a large amount of computation can be performed by transmitting a relatively small amount of data, that is, when computation is high and communication is small [11]. In the case of multiple devices, resource allocation needs to be optimized dynamically with EH devices. Liu et al. [12] designed a dynamic computation offloading system for fog computing that considers EH devices. They exploited social relationships to minimize the execution cost of a social group using a game-theoretic approach.

Table 1 summarizes recent research articles related to MEC-based offloading. These articles are compared according to the offloading task, user support, edge computation model, and compatibility with energy harvesting devices.

Markov processes are extensively used to model the state transitions of stochastic systems. Many researchers use Markov Decision Processes (MDP) to model application placement problems in MEC. Doshi, P. et al. [22] address the issue of assuming deterministic behavior of services, which requires extra monitoring of execution to recover from unexpected behavior of those services. They proposed an MDP-based model for workflow composition. Henriques, D. et al. [23] developed an algorithm to handle non-determinism. An MDP is an extension of a Markov chain; the difference is the addition of actions (choices) and rewards (motivation). The authors reduce the MDP to a Markov chain and then apply statistical model checking to find the optimal solution. X. Guo and O. Hernandez Lerma [24] wrote a book entirely devoted to continuous-time MDPs, in which they discuss the theoretical and applied implications of continuous-time MDPs with advanced criteria. Schaefer, A.J. et al. [25] use MDPs for medical treatment decisions. Such decisions are critical because of the uncertainty across different patients. They must be made sequentially in an uncertain environment, and the doctor has to consider all the uncertain factors, which makes them complex. MDPs help find the best treatment plan for a given disease, but challenges remain, such as limited computational power and the need for more data.

The design of efficient computation offloading strategies has drawn research attention in recent years. Most works focus on single client-server MEC systems with energy harvesting devices. Less work has been done on systems with multiple users and multiple servers, which are more typical scenarios in the real world. Huang, D. et al. [26] propose an algorithm for time-varying wireless channels. They used Lyapunov optimization to overcome the problem of high energy consumption.

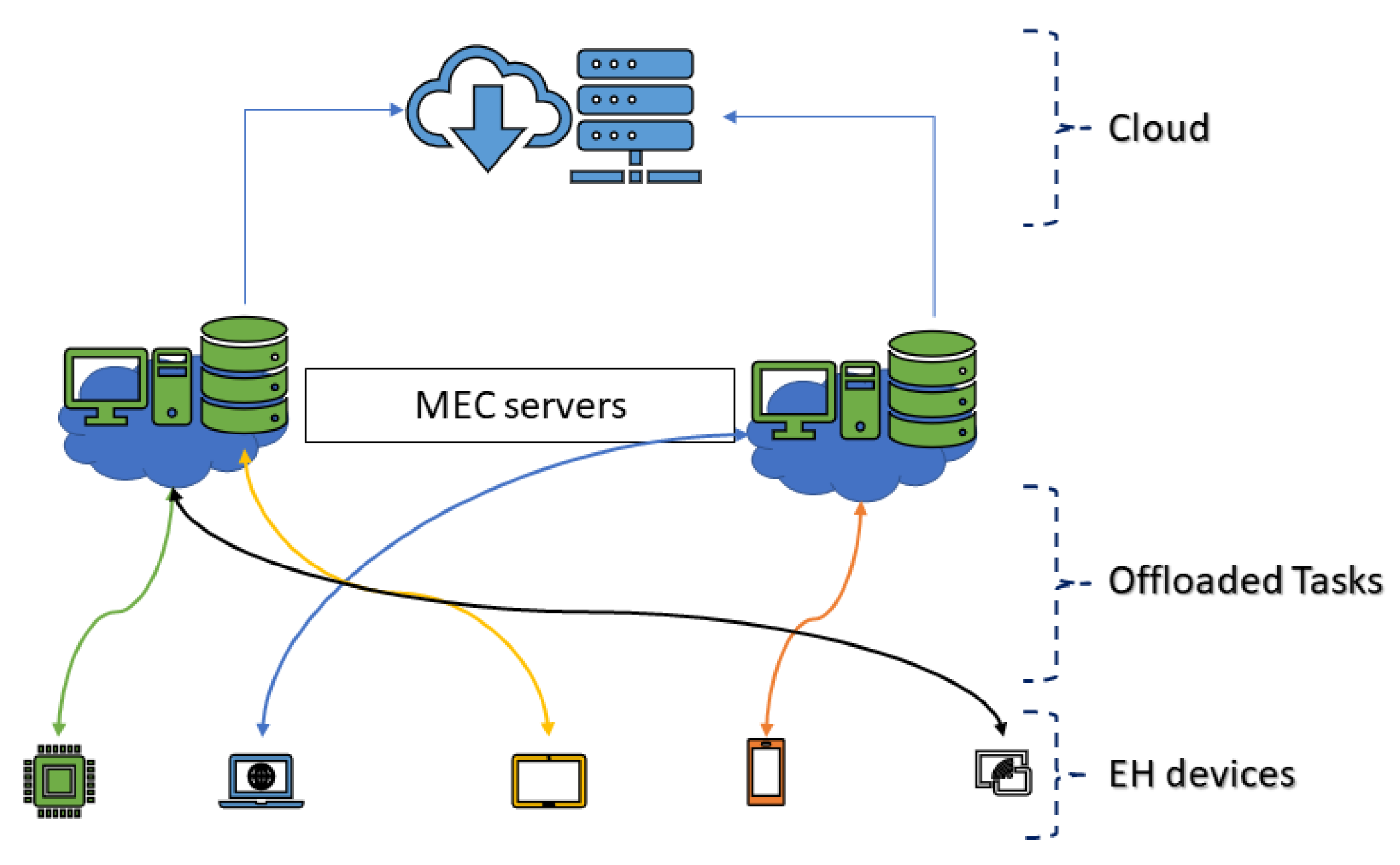

Figure 1 shows the basic structure of a multi-server mobile edge computing system with multiple energy harvesting clients. Son, Y. et al. [27] presented an adaptive method for offloading in a cloud-converged virtual machine system. They used a hybrid deep neural network approach to obtain the context required for synchronization from a cloud-based offloading service.

Liu, J. et al. [28] proposed a task scheduling policy for delay optimization for a single user. Their work determines which components of a software application to offload based on stochastic control. The efficiency of computation offloading usually depends on the wireless channel, which should remain static during the execution process. However, when the coherence time of a channel is less than the latency requirement, it becomes difficult for the channel to stay static. In this case, joint optimization of radio and computational resource usage can be used [13]. The authors of [13] relate the amount of computation offloaded to the quality of the channel. They use multi-input multi-output (MIMO) channels and show that more offloading is achieved with them. Energy savings are also more significant compared to single-input single-output (SISO) and multi-input single-output (MISO) channels. Badri, H. et al. [29] designed a parallel greedy sample average approximation. They solved the placement problem in mobile edge computing by developing a multi-stage stochastic programming method. Their primary purpose was to decrease communication, computation, and relocation costs.

Edge computing is used to overcome the limitations of mobile devices through offloading, which saves their computational capability, battery resources, and storage. However, the problem is to find the optimal mechanism for computational offloading. Mao, Y. et al. [30] proposed a Lyapunov optimization-based dynamic computation offloading algorithm (LODCO) to tackle the offloading problem in a multi-user case. It is a low-complexity algorithm that noticeably reduces computation failures. Their framework enables adaptive offloading powered by energy harvesting, and it focuses on energy harvesting MEC servers and energy harvesting mobile devices. For this purpose, the system operator decides the amount of computation to be offloaded. The LODCO algorithm makes the offloading decision in every time slot, and it can reduce execution time by up to 64% by offloading to the MEC servers. Zhao, H. et al. [31] developed a system that achieves low execution cost and performs offloading based on the Lyapunov optimization dynamic computation algorithm. Their solution improves the computation of offloaded tasks and also considers the quality of experience. They mainly focus on the selection of the optimal server and the utilization of resources. However, issues such as cross-edge collaboration and overlapping signal coverage are not considered in their work.

Sardellitti, S. et al. [32] analyzed a scenario in which multiple mobile clients request computation offloading of their tasks to a set of edge servers, with the additional aim of reducing the energy consumption of mobile devices and extending their battery life, which is also a severe issue. In this scenario, where multiple clouds are linked with every small-cell Evolved Node B (eNodeB), the energy consumption decreases as the number of small-cell eNodeBs increases. In the multi-cloud environment, each cloud is a set of cloud-enabled access points clustered together and regarded as a single entity. Some researchers have concentrated on advancing energy harvesting or dynamic voltage scaling technologies. Many mobile applications running on IoT mobile devices require high energy consumption because they are computationally intensive. To reduce the execution latency of MEC systems, Mao, Y. et al. [33] proposed a low-complexity solution based on flow shop scheduling theory. They optimize both the allocation of transmission power and the offloading of tasks. However, their algorithm can be applied only to a single-user, single-cloudlet environment. Dinh et al. [15] discuss the significant issue of joint computation offloading and MEC server selection in a multi-user scenario. They implemented a computational offloading structure that simultaneously optimizes the task allocation decision and the CPU frequency of mobile devices. They also proposed semidefinite relaxation-based algorithms to find the optimal task allocation decision for both fixed and elastic CPU frequencies. If the edge nodes have high computational power, computation latency is low. However, communication delay can be larger because of a weak communication link, so it is not straightforward to satisfy both latency and reliability at the same time. Increasing the number of edge nodes decreases the computation delay of a task but tends to increase the error probability. This trade-off makes it more challenging to design an optimal offloading scheme that takes account of both computation and communication.

The integration of MEC with vehicular networks is evolving into a new paradigm called vehicular edge computing. However, offloading tasks to edge vehicles poses security and privacy issues. Huang, X. et al. [34] suggested a decentralized reputation management system to provide security assurance and improve network efficiency in vehicular edge computing. In this scenario, however, roadside units are vulnerable to intrusions, so that problem still needs to be addressed. Zhang, K. et al. [35] devised a contract-based computation offloading scheme for cloud-enabled vehicular networks. Their system maximizes the benefit of the MEC service provider by improving the allocation of computing resources based on a scheduling threshold. They used the MEC servers to offer computation offloading services and designed an optimal offloading algorithm. Their system considers the resource limitation of MEC servers, latency tolerance, and transmission cost. The MEC server also receives payment from vehicles based on the computational offloading task, using a wireless communication service for the contract information and payment. One of the main issues is to analyze the effect of transmission control between the data of mobile users and vehicles. Another problem in vehicular edge computing networks is minimizing the cost of service sharing as a volunteer node, for example when providing a video streaming service that requires resources from mobile users. Nguyen, T. et al. [36] designed a service control mechanism for an online vehicular MEC environment to address these issues. They propose a video streaming service model based on the lower cost of obtaining data from nearby vehicles, and they recommend an incentive mechanism to encourage users to rent out their resources. Roadside units play a vital role in vehicular edge computing networks. Liu, Q. et al. [37] proposed an efficient distributed computation offloading algorithm to enable better offloading arrangements for vehicle-to-vehicle networks. To make the most beneficial use of underutilized vehicular computational resources, Feng, J. et al. [38] introduced an autonomous vehicular edge (AVE) structure for managing the unused computational resources of vehicles. They select an MEC-based environment to address various network-related issues in vehicular technology. The authors present an efficient scheduling and adaptive offloading scheme that reduces the computation complexity in a VANET environment.

MEC has the potential to achieve an optimal trade-off for delay-sensitive and computation-intensive tasks. Hou, X. et al. [39] considered two types of vehicles (parked vehicles and slow-moving vehicles) as the infrastructure that provides computational resources to vehicles with computation-intensive jobs in vehicular edge computing. Vehicle-to-vehicle communication requires service-deployment latencies below a few tens of milliseconds. They proposed a new paradigm named Vehicular Fog Computing. Their system satisfies basic requirements such as location awareness, low latency, and mobility.

The number of IoT devices has risen significantly in licensed and unlicensed band networks (e.g., wireless fidelity). Ho, J. et al. [40] introduced a queueing model for the energy storage and battery consumption behavior of IoT devices, together with a low energy probability model. The proposed model provides a formula for the downlink speed of IoT devices by calculating battery exhaustion, download opportunities, and the likelihood of the initial window size of the licensed assisted access channel backoff. They examined how to transmit power packs for wireless EH devices that coexist with WiFi on licensed assisted access channels, extending to wireless networks the power pack concept used in wired networks. However, their work did not focus on reducing interference when downloading over licensed and unlicensed bands. Li, C. et al. [41] proposed another offloading model for EH devices that provides a sustainable energy supply and adequate computing capability. Nowadays, MEC is combined with wireless power transmission to improve the performance of wireless devices in IoT systems, which is called wirelessly powered MEC. In their work, they used non-orthogonal multiple access technologies to handle a boot collision, where two or more devices could identify the same syncword. The syncword is used for synchronizing data transmission. The results of the theoretical performance analysis show that the system performs well in terms of power consumption. However, they have not considered task dropping scenarios for mobile EH devices.

5. System Flow

The proposed method allows switching between different modes (i.e., offloading execution, task dropping, and local execution) based on the executing tasks.

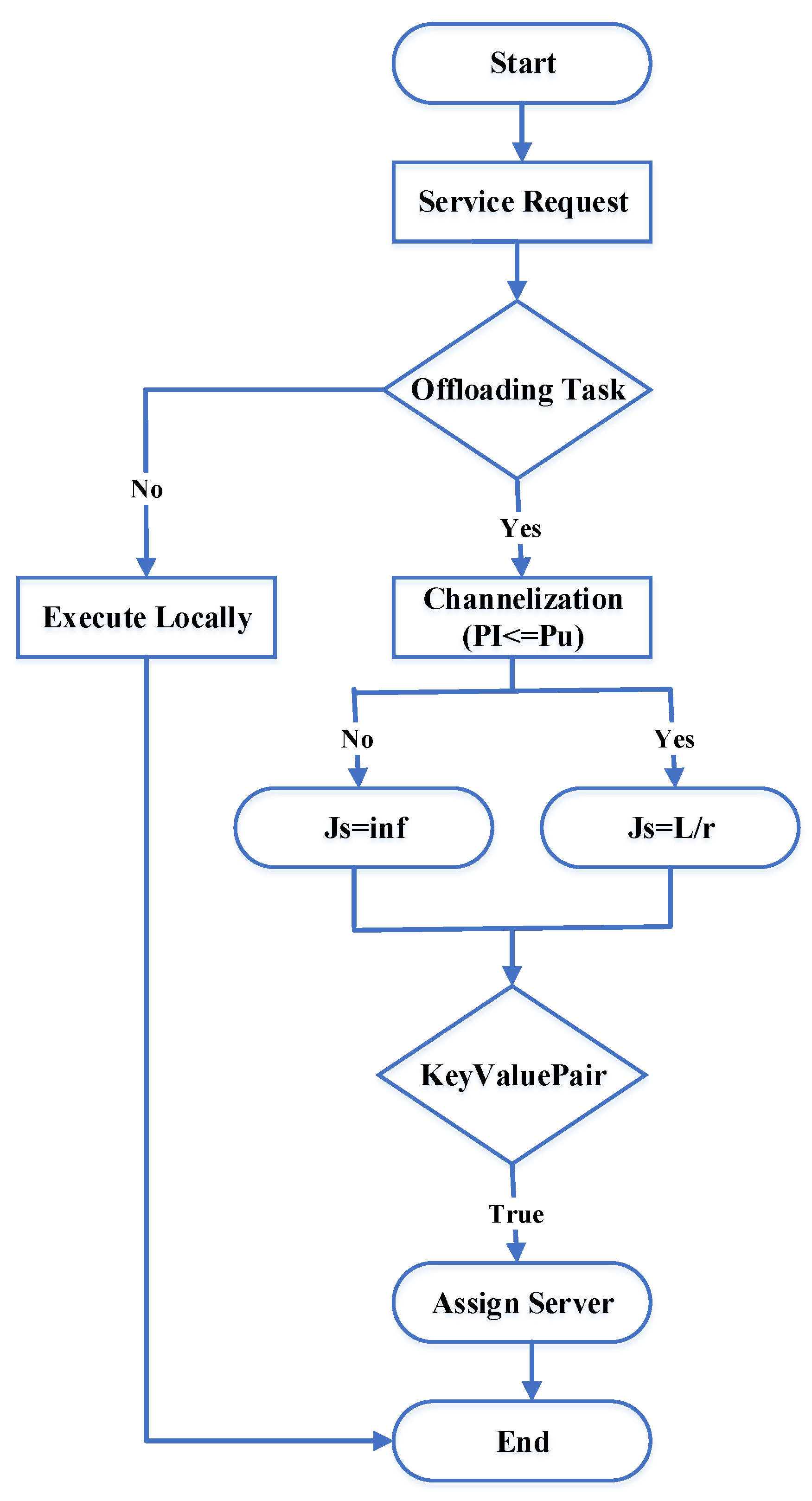

Figure 3 shows the process flow diagram of the proposed system. It initializes the map of the number of EH devices. If the task is to be offloaded, the system calculates the channelization of the EH device. Then it checks the status of the Boolean variable. If the status is false, it calculates the position variable; otherwise, it assigns the server for offloading.

The proposed methodology starts by initializing the map with null and then stores the number of mobile devices connected to each MEC server. The function produces a computational task, finds the best energy harvesting devices, and then calculates the execution delay. After that, starting from the first server and a mobile device whose distance is limited to the range 0 to 60, it derives the channelization of the mobile device (the mover). Then, it assigns the server or an alternative mode to those tasks that users choose to offload, and finds the mobile device and MEC server pair with minimal transmission latency. The key pair of device i and optimal server j, which corresponds to the minimum delay, is stored in the map. At this point, only offloading execution is considered. The key-value pair is then removed from the map, and a series of commonly maintained variables is synchronized. While a mobile device still has a server that can be selected, the algorithm keeps looking for the shortest delay available: it returns to the outermost while loop to search for the lowest delay again, then removes the key-value pair from the map and synchronizes the co-maintained variables. The time complexity of Algorithm 1 depends on T and EH, which denote the total number of time slots and energy harvesting devices, respectively.

Detailed steps of the proposed algorithm are explained in Algorithm 1 and its sub-algorithms.

Table 3 describes the variables used in the algorithms.

| Algorithm 1 Energy-efficient computation offloading. |

| Ensure: Initialize flags with null |

| Ensure: Initialize |

| while do |

| while do |

| generate and |

| if then |

| generate |

| calculate |

| else |

| set equal to infinity |

| set as true |

| end if |

| end while |

| calculate channelization of mover // Execute Algorithm 2 |

| if is false then |

| use integer linear programming // Execute Algorithm 3 |

| else |

| assign the server for those tasks that need to be offloaded // Execute Algorithm 4 |

| end if |

| slice iteration++ |

| end while |

5.1. Computation Offloading

The primary purpose of this system is to choose the execution mode, offloading or local execution. Algorithm 1 presents the proposed energy-efficient computation offloading mechanism. The algorithm runs once for every time slice, starting from 1 and continuing up to the maximum time T. It starts by setting the map to null and initializing the flags and the working matrices. A matrix of zeros is allocated whose dimensions are given by the number of time slices and N, the number of mobile devices. The flags are initialized as a matrix whose size depends on M, the number of MEC servers. Three further matrices are maintained: the first saves the current energy consumption of the mobile device toward each MEC server, the second saves the best transmission power of the current mobile device toward each MEC server, and the third stores the local execution delay of each mobile device for use in secondary decision making.

The number of devices is set to N, and a loop runs over every device. For each device, a variable zeta is drawn from a binomial distribution, and the lower and upper frequencies of local execution are generated using Equation (12) and Equation (13), respectively. If the lower frequency is less than or equal to the upper frequency, the function calculates the execution delay and the energy consumed by executing the task locally on that mobile device, using Equation (14), and the associated Boolean flag is set to False. If the lower frequency is greater than the upper frequency, the delay is set to infinity and the flag is set to True; otherwise the flag remains False.

After that, the channelization of the mover is calculated using Algorithm 2 to decide between using integer linear programming and assigning the task to a server. After Algorithm 2 has executed, the system checks the status of the Boolean flag. If the flag is false, the integer linear programming mechanism of Algorithm 3 is used. Otherwise, the tasks that need to be offloaded are assigned to a server using Algorithm 4. In the end, the time slice iteration is incremented, and the whole procedure is repeated until the maximum time T is reached.
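The per-device local execution check in Algorithm 1 can be sketched as follows. This is a minimal illustration, not the authors' implementation: Equations (12) to (14) are not reproduced in this section, so the delay model (cycles divided by frequency), the energy model (`kappa * cycles * f**2`), the choice of running at the lower frequency, and all names (`local_execution`, `cycles`, `kappa`) are assumptions made only for this example.

```python
import math


def local_execution(cycles, f_lower, f_upper, kappa=1e-28):
    """Return (delay, energy, infeasible) for one device in one time slice.

    cycles  -- CPU cycles needed by the task (hypothetical input)
    f_lower -- lower admissible CPU frequency in Hz
    f_upper -- upper admissible CPU frequency in Hz
    kappa   -- effective switched capacitance (assumed energy model)
    """
    if f_lower <= f_upper:
        # Feasible: run at the lowest admissible frequency (an assumption).
        f = f_lower
        delay = cycles / f                 # assumed delay model
        energy = kappa * cycles * f ** 2   # assumed energy model
        return delay, energy, False
    # Infeasible: the text sets the delay to infinity and raises the flag.
    # Returning infinite energy as well is an assumption of this sketch.
    return math.inf, math.inf, True


delay, energy, infeasible = local_execution(1e9, 1e9, 2e9)
```

With these hypothetical inputs the task is feasible, so the flag stays False; swapping the two frequency bounds yields an infinite delay and a raised flag, which is the case that triggers the other branches of Algorithm 1.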

| Algorithm 2 Calculate channelization of mobile devices. |

| Ensure: Initialize |

| Ensure: Calculate , |

| if then |

| set as inf |

| set as true |

| else |

| calculate by dividing L by r; |

| set as true |

| end if |

| set as ; |

| calculate container number of the execution |

5.2. Channelization of EH Device

One of the major reasons for performance anomalies is the incorrect calculation of the channel access mechanism. In this research, an adaptable channelization width is calculated, which helps minimize such anomalies. The calculation of the channelization of mobile devices is explained in Algorithm 2. To calculate the mover's channelization, the channel power gain h from the mobile device to the server is initialized as a matrix. Then the temporary achievable rate is calculated using Equation (8), and the power needed to execute the computation task is calculated using Equation (9). If the comparison tested in Algorithm 2 holds (one quantity is less than or equal to the other), the result is set to infinity and its flag is set to true. Otherwise, the result is calculated by dividing L by r, and its flag is set to true. The result is then stored. If the mode is equal to two, the value of the map is calculated.
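The rate and delay computation described above can be illustrated with a short sketch. Equations (8) and (9) are not shown in this section, so the Shannon-capacity form of the achievable rate used here is an assumption, as are the function names and parameters (`bandwidth_hz`, `power_w`, `noise_w`). Treating a non-positive rate as the infeasible case that yields an infinite value is likewise only a stand-in for the comparison in Algorithm 2.

```python
import math


def achievable_rate(bandwidth_hz, power_w, gain, noise_w):
    """Assumed Shannon-type rate r in bit/s for channel power gain `gain`."""
    return bandwidth_hz * math.log2(1.0 + power_w * gain / noise_w)


def transmission_delay(task_bits, rate_bps):
    """Delay of sending L = task_bits at rate r, i.e. L divided by r."""
    if rate_bps <= 0:
        # Hypothetical infeasibility rule: no usable channel.
        return math.inf
    return task_bits / rate_bps


r = achievable_rate(1e6, 1.0, 3.0, 1.0)   # 1 MHz, SNR of 3
delay = transmission_delay(1e6, r)        # 1 Mbit task
```

Under these assumed numbers the rate is 2 Mbit/s and the delay is 0.5 s; a device with no usable channel gets an infinite delay and is therefore never selected as the minimum-latency candidate.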

5.3. Integer Linear Programming

The use of integer linear programming is explained in Algorithm 3. For this, we initialize the target as a matrix of zeros. We also initialize the variables intcon, A, b, and the remaining solver inputs, and calculate an updated target and a goal. Intcon is a vector of positive integers starting from 1. Then, we return the calculation result of the system operation and set the value of the position variable (pos) to the position where the system operation is true. If pos is equal to 1, the indicator is set to 1. If pos is equal to 2, the indicator is set to 3. Otherwise, the indicator is set to 2.
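The mapping from pos to the indicator can be written out directly. The sketch below also includes a stand-in for the solver step: for a choice among a few alternatives under a single one-hot constraint, an integer program reduces to picking the cheapest entry, so a plain minimum is used instead of an ILP solver. The cost values and the names `select_position` and `indicator_from_pos` are hypothetical; the paper's actual target, A, and b are not given in this section.

```python
def select_position(costs):
    """Return the 1-based position of the smallest cost.

    Equivalent to minimizing sum(c[k] * x[k]) over binary x with
    sum(x) == 1; used here in place of a real ILP solver.
    """
    return min(range(1, len(costs) + 1), key=lambda p: costs[p - 1])


def indicator_from_pos(pos):
    """Map the position variable to the indicator as stated in the text."""
    if pos == 1:
        return 1
    if pos == 2:
        return 3
    return 2


costs = [5.0, 2.0, 7.0]          # hypothetical per-mode costs
pos = select_position(costs)
indicator = indicator_from_pos(pos)
```

Keeping the mapping in its own function makes the non-sequential assignment (position 2 maps to indicator 3, every other position except 1 maps to 2) explicit and easy to check against Algorithm 3.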

| Algorithm 3 Use integer linear programming. |

| Ensure: Initialize target as a matrix of zeros |

| Ensure: Initialize intcon, A, b, |

| while do |

| calculate updated target and goal |

| returns calculation result of system operation |

| set pos where system operation as true |

| if pos is equal to 1 then |

| set indicator as 1; |

| else |

| if pos is equal to 2 then |

| set indicator as 3; |

| else |

| set indicator as 2; |

| end if |

| end if |

| end while |

5.4. Assigning the Server

The steps for assigning a server to the tasks that need to be offloaded are described in Algorithm 4. The algorithm starts by setting the movement of the maximum CPU time of the server and calculating the upper bound (UB). The following steps are then repeated while the map is not null. The function that finds the mobile device with minimal transmission latency also returns the indices i and j corresponding to the minimum delay. At this point, the algorithm considers only offloading execution. If the first comparison in Algorithm 4 holds (one quantity is less than or equal to the other), the key-value pair is deleted from the map and a series of commonly maintained variables is synchronized: the flag of the corresponding MEC server is incremented by 1 and the associated variable is updated. If the minimum of the stored delay values is not equal to infinity, control returns to the outermost while loop to look for the smallest delay again. Otherwise, the indicator variable is initialized, the key-value pair is deleted from the map, and the co-maintained variables are synchronized. Here, a delay matrix saves the delay value of the current mobile device to each MEC server.

When the mode is equal to 2, the current optimal mode is still offloading execution. If the tested quantity is less than or equal to UB, the key-value pair is removed from the map and the commonly maintained variables are synchronized. Otherwise, the corresponding delay is set to infinity; if the minimum delay value is not equal to infinity, control returns to the outermost loop, and otherwise the indicator variable is reset. After that, the new optimal mode is set, the indicator is initialized to that mode, and the key-value pair is removed from the map. This algorithm thus assigns a server, or an alternative mode, to those tasks that users choose to offload.
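The loop structure of Algorithm 4 can be approximated by a greedy assignment over a map of delays. This is a sketch under stated assumptions rather than the authors' procedure: the per-server `capacity` count stands in for the upper bound UB, the map keys `(device, server)` and the function name `assign_servers` are hypothetical, and devices left unassigned are simply returned so that an alternative mode can be chosen for them.

```python
import math


def assign_servers(delay, capacity):
    """Greedily assign each device to its lowest-delay available server.

    delay    -- dict mapping (device, server) to a transmission delay
    capacity -- dict mapping server to the number of tasks it accepts
                (hypothetical stand-in for the upper bound UB)
    Returns (assignment, unassigned_devices).
    """
    pending = dict(delay)                  # the "map" of key-value pairs
    load = {server: 0 for server in capacity}
    devices = {device for device, _ in delay}
    assignment = {}
    while pending:
        (device, server), best = min(pending.items(), key=lambda kv: kv[1])
        if math.isinf(best):
            break                          # no selectable server remains
        if load[server] < capacity[server]:
            assignment[device] = server
            load[server] += 1              # increment the server's flag
            # Remove every key-value pair that belongs to this device.
            pending = {k: v for k, v in pending.items() if k[0] != device}
        else:
            # Server is full: mark the pair as unusable and search again.
            pending[(device, server)] = math.inf
    unassigned = sorted(devices - set(assignment))
    return assignment, unassigned


delays = {
    ("a", "s1"): 1.0, ("a", "s2"): 3.0,
    ("b", "s1"): 2.0, ("b", "s2"): 4.0,
    ("c", "s1"): 5.0,
}
assignment, unassigned = assign_servers(delays, {"s1": 1, "s2": 1})
```

In this example device `a` takes server `s1`, device `b` falls back to `s2` once `s1` is full, and device `c` has no remaining server, which corresponds to the case where the indicator is reset and another execution mode must be used.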

| Algorithm 4 Assign the server for those tasks that need to be offloaded. |

| Require: movement of maximum CPU time period of server |

| Ensure: Calculate |

| while do |

| Found device with minimal transmission latency |

| if then |

| if then |

| Corresponding to the MEC server Increment 1 in flag |

| Set |

| else |

| Reset indicator variable |

| end if |

| else |

| if then |

| Current optimal mode is still offloading execution |

| if is less than or equal to UB then |

| 1++ |

| Set |

| else |

| if then |

| Returns the outermost |

| else |

| Reset indicator variable |

| end if |

| end if |

| else |

| initialize indicator to mode |

| Remove the key-value pair from the map |

| end if |

| end if |

| end while |