K-ONE Playground: Reconfigurable Clusters for a Cloud-Native Testbed

Abstract

1. Introduction

- We list the functional requirements that a SmartX Playground must meet to serve as a composable playground. Each requirement is stated explicitly so that it maps directly to a design choice for reconfigurable clusters.

- To meet these functional requirements, we propose a unique design of reconfigurable clusters. We also provide a practical implementation of this design on a real-world infrastructure.

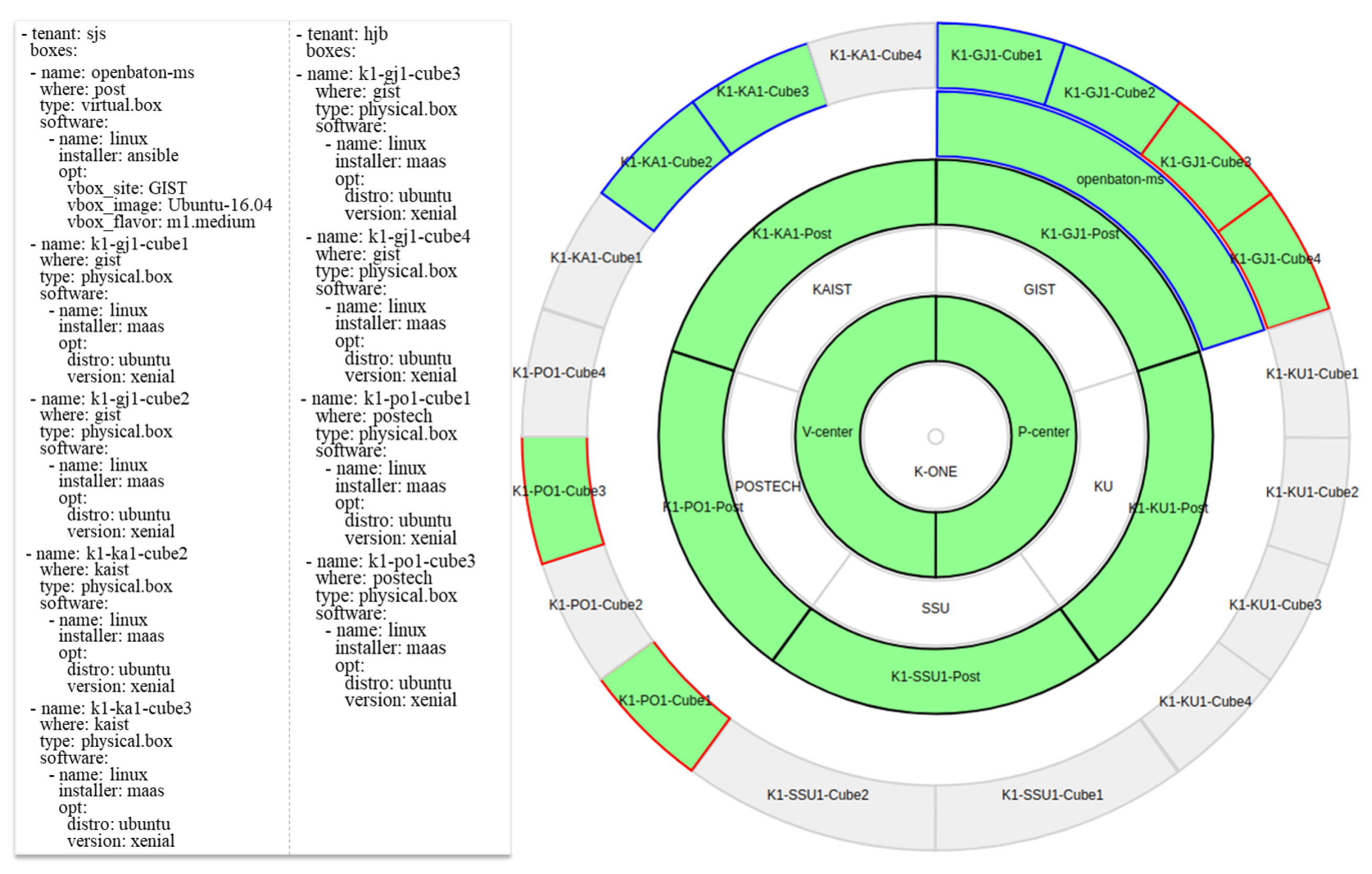

- We describe how we operate reconfigurable clusters to provide multiple tenants with different combinations of physical servers and virtual machines. In addition, we verify feasibility with practical use cases, each of which demands a different playground topology for developing cloud-native DevOps services.

2. SmartX Playground with Reconfigurable Clusters

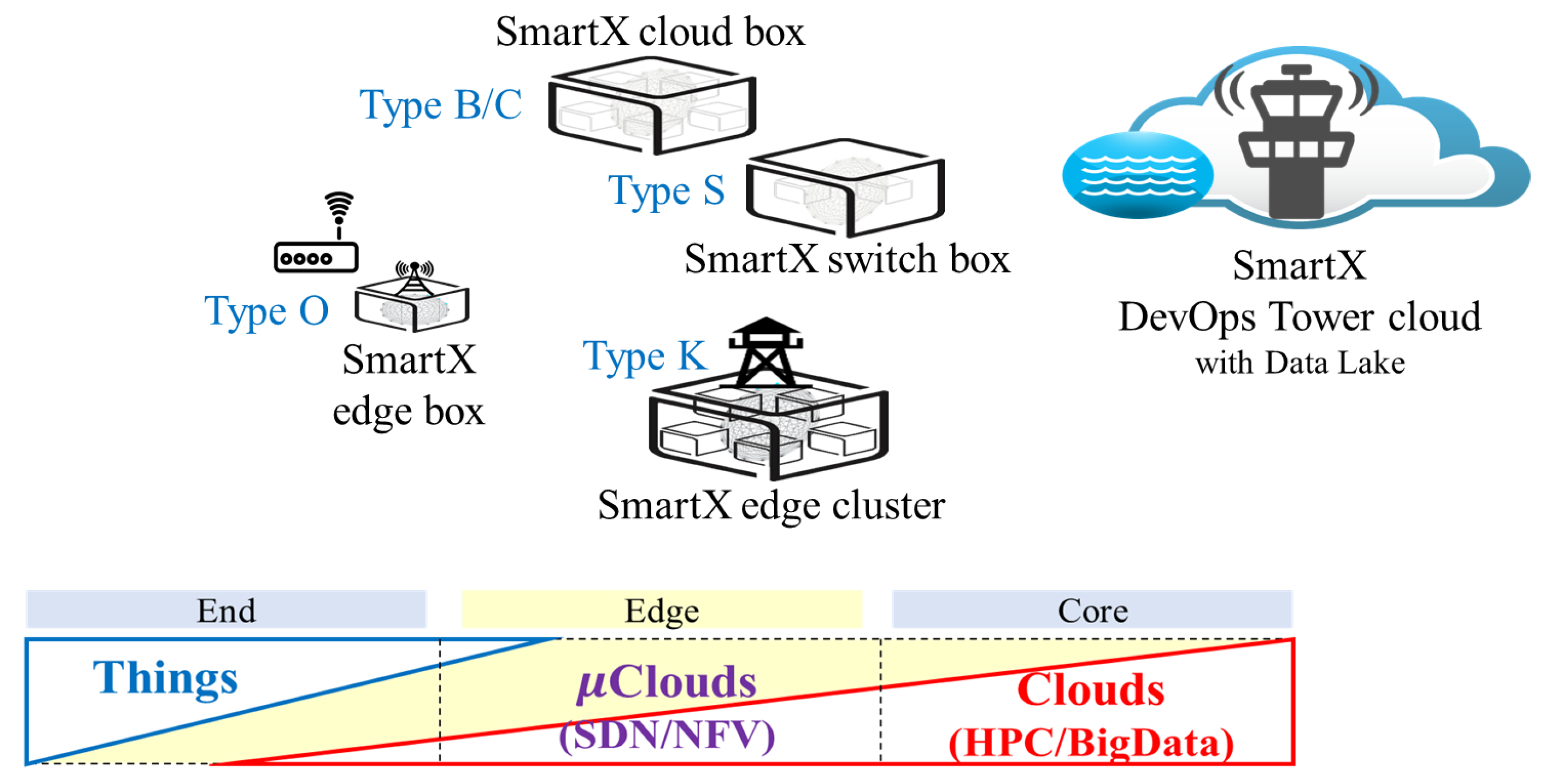

2.1. SmartX Playground: Concept

2.2. SmartX Playground: Requirements for a Composable Playground

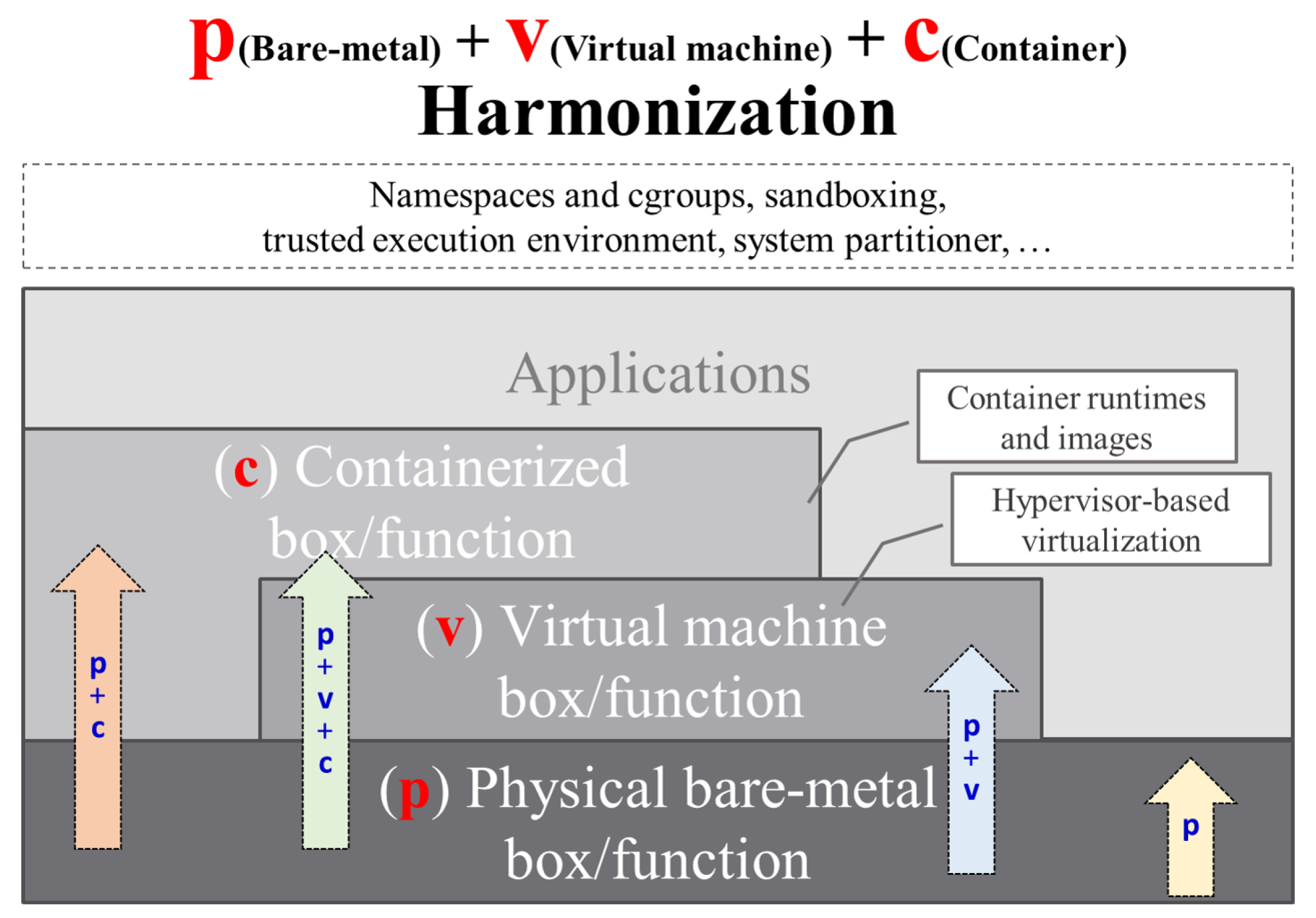

- R1 (Requirement 1). Providing the p+v+c harmonization testbed: The cloud-native computing paradigm introduces a container layer above the physical and virtual layers. Following this trend, typical cloud-based infrastructures and diversified DevOps services are adopting combinations of physical (p), virtual (v), and container (c) boxes. To simplify this complicated view, we introduce the concept of p+v+c harmonization, as shown in Figure 2. A composable playground should flexibly compose inter-connected boxes and functions across the layers, depicted by the dashed upward arrows in Figure 2, to support diversified services. Furthermore, a composable playground should clearly define the concrete forms of physical, virtual, and container boxes, as well as the software tools for creating these boxes from distributed clusters.

- R2 (Requirement 2). Supporting multiple networks for flexible and reliable composition: For flexible and reliable composition from distributed clusters, a composable playground should support multiple network connections within and between clusters, while properly limiting the access of developers. Typical DevOps services generate control traffic in addition to service data traffic for efficiency, reliability, and security. A composable playground should therefore provide multiple networks so that tenants can map each kind of traffic to a suitable playground network. Meanwhile, heavy workloads and incidents caused by multiple tenants over shared clusters, especially in the networks, can lead to operational failures as well as incorrect results for DevOps services. Thus, as a composable playground, SmartX Playground should separate operational traffic from service traffic.

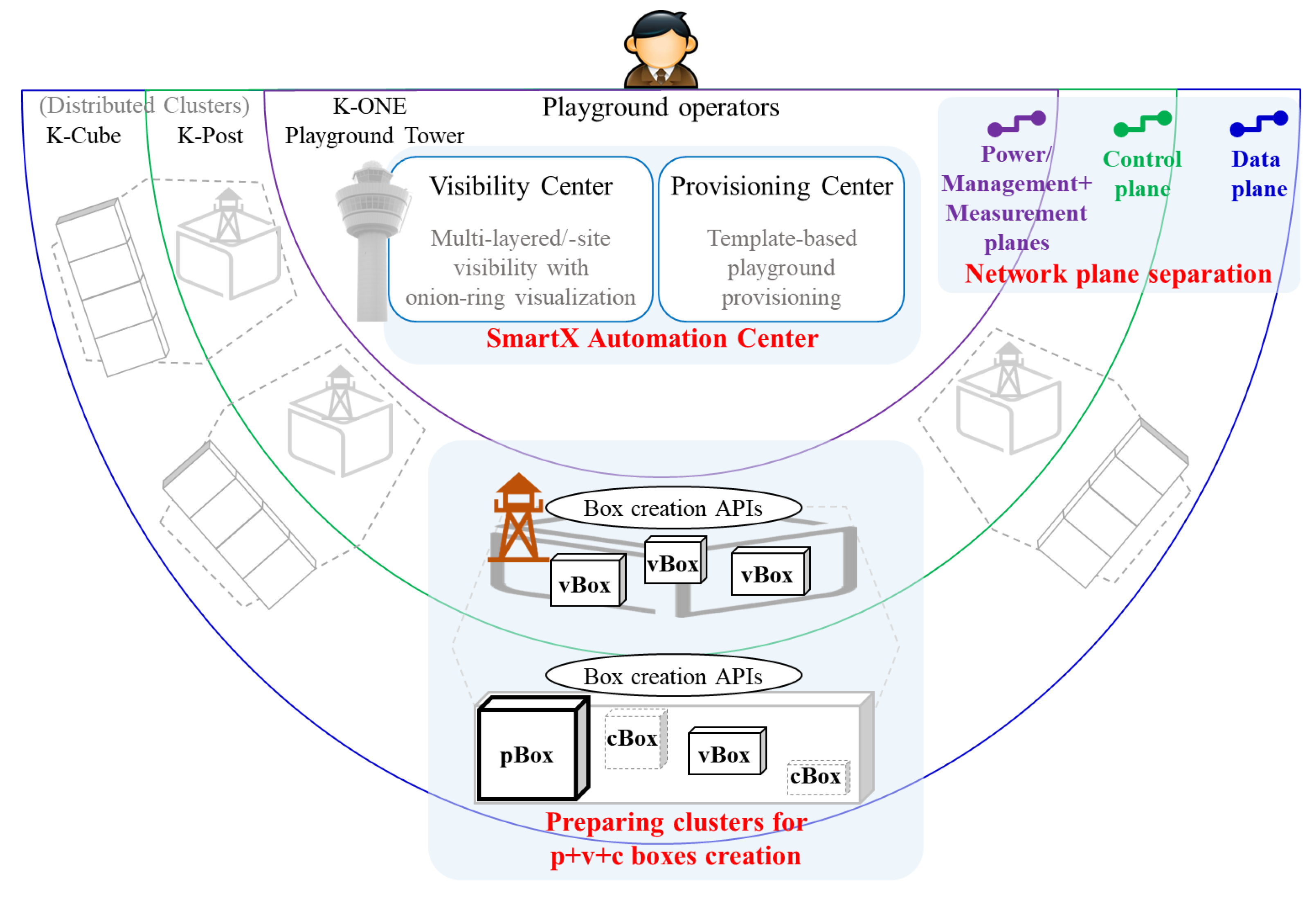

- R3 (Requirement 3). Automating reconfiguration for a multi-site playground topology: A composable playground should automate the complicated procedures of playground topology reconfiguration for efficient operations. To reconfigure the playground topology, operators need to understand the overall topology and compose unused boxes that satisfy the requirements of DevOps services. In a multi-site playground with distributed clusters, reconfiguration is more difficult because of the complicated topology and geographical separation. Without automation, reconfiguring the playground topology wastes time and invites human error, both of which disturb reliable operations and services. Thus, SmartX Playground should offer automated features built on DevOps tools that help operators at Tower servers gain visibility into the playground and compose boxes from distributed clusters.
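
The selection step that R3 asks to automate (finding unused boxes across sites that satisfy a requested topology) can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Box` record, the `compose` and `release` helpers, the site labels, and the request format are hypothetical and are not part of the K-ONE Playground tooling.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Box:
    """Hypothetical inventory record for one box in a cluster."""
    name: str
    site: str            # hypothetical site label
    kind: str            # "p" (physical), "v" (virtual), or "c" (container)
    in_use: bool = False


def compose(inventory: List[Box], request: Dict[str, Dict[str, int]]) -> List[Box]:
    """Reserve unused boxes for a request of the form site -> kind -> count.

    The reservation is all-or-nothing: no box is marked as used unless
    every part of the request can be satisfied.
    """
    chosen: List[Box] = []
    for site, kinds in request.items():
        for kind, count in kinds.items():
            free = [b for b in inventory
                    if b.site == site and b.kind == kind and not b.in_use]
            if len(free) < count:
                raise ValueError(f"not enough unused '{kind}' boxes at {site}")
            chosen.extend(free[:count])
    # Mark boxes only after the whole request is known to be satisfiable.
    for box in chosen:
        box.in_use = True
    return chosen


def release(boxes: List[Box]) -> None:
    """Return previously composed boxes to the unused pool."""
    for box in boxes:
        box.in_use = False


if __name__ == "__main__":
    inventory = [
        Box("box-1", "site-a", "p"),
        Box("box-2", "site-a", "p", in_use=True),
        Box("box-3", "site-b", "v"),
        Box("box-4", "site-b", "v"),
    ]
    topology = compose(inventory, {"site-a": {"p": 1}, "site-b": {"v": 2}})
    print([box.name for box in topology])
    release(topology)
```

The all-or-nothing check reflects the concern in R3 that a partially applied reconfiguration is itself a source of operational errors; a real implementation would also need to handle concurrent requests from multiple tenants.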

3. Design of Reconfigurable Clusters

3.1. Overall Design of K-ONE Playground

3.2. Components’ Design for K-ONE Playground

3.2.1. Reconfigurable Servers for Physical and Virtual Boxes

3.2.2. Networking Plane Separation

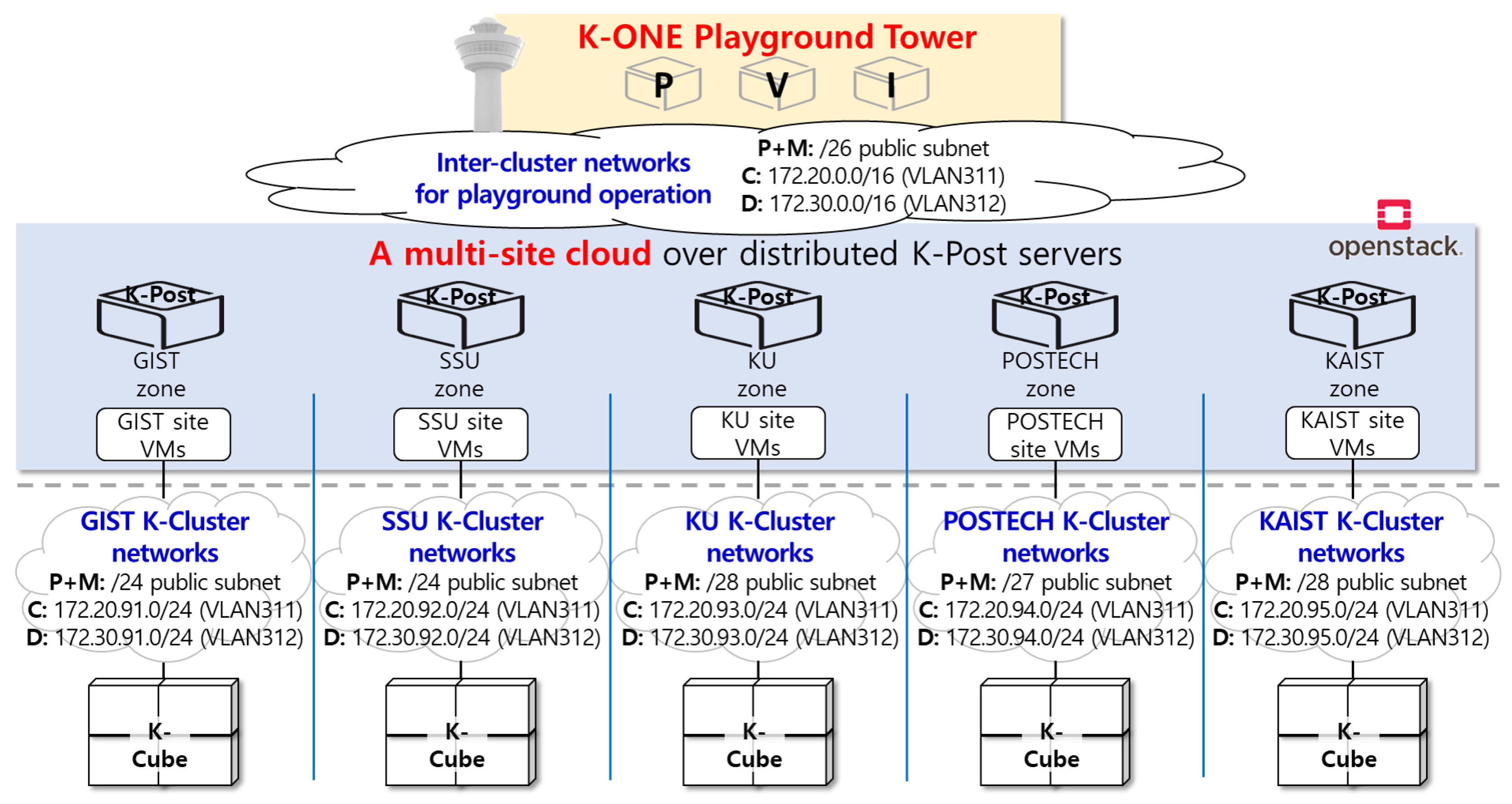

- The P (Power) plane lets operators remotely monitor and control the hardware of physical servers, including power status, temperature, event logs, and the remote console, through IPMI (Intelligent Platform Management Interface)-based remote management access [25]. The P plane keeps physical servers under constant monitoring and control even if the other networking planes are unavailable due to, for example, an operating system failure or shutdown.

- The M (Management + Measurement) plane is used for operating system management of physical and virtual boxes. The M plane carries SSH traffic to remote boxes for debugging and troubleshooting. In addition, SmartX Automation Centers in the centralized Tower servers use this plane to install and configure software packages on remote boxes, and visibility data measured at the boxes is transferred to the Visibility Center through this plane.

- The C (Control) plane is mainly used for developers’ control traffic generated by SDI-oriented services. For example, SDN controllers and switches exchange control and monitoring messages with each other, and the internal components of cloud and cloud-native platforms exchange control messages among themselves.

- The D (Data) plane accommodates any service-level traffic of user experiments, such as video and voice data. Because the D plane is separate from the C plane, tenants can handle control traffic and data traffic independently. Thus, developers can acquire relatively accurate results from their experiments.
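
The four-plane separation described above can be summarized as a small lookup from traffic class to plane. The following Python sketch is only an illustration: the traffic class labels (`"ipmi"`, `"ssh"`, and so on) and the helper names are hypothetical, and the grouping of P and M as operator-facing planes is one reading of the descriptions above rather than a definition taken from the K-ONE Playground implementation.

```python
from enum import Enum


class Plane(Enum):
    P = "Power"
    M = "Management + Measurement"
    C = "Control"
    D = "Data"


# Hypothetical traffic classes, assigned according to the plane descriptions.
TRAFFIC_TO_PLANE = {
    "ipmi": Plane.P,           # hardware status and remote console
    "ssh": Plane.M,            # debugging and troubleshooting
    "provisioning": Plane.M,   # software installation and configuration
    "visibility": Plane.M,     # measurement data sent to the Visibility Center
    "sdn-control": Plane.C,    # controller/switch control messages
    "service-data": Plane.D,   # service-level traffic of user experiments
}


def plane_for(traffic: str) -> Plane:
    """Return the plane that should carry the given traffic class."""
    try:
        return TRAFFIC_TO_PLANE[traffic]
    except KeyError:
        raise ValueError(f"unknown traffic class: {traffic!r}") from None


def is_operational(plane: Plane) -> bool:
    """Assumption: P and M carry operator traffic, C and D carry tenant traffic."""
    return plane in (Plane.P, Plane.M)


if __name__ == "__main__":
    for traffic in TRAFFIC_TO_PLANE:
        plane = plane_for(traffic)
        role = "operational" if is_operational(plane) else "service"
        print(f"{traffic:14s} -> {plane.name} plane ({role})")
```

Keeping the mapping in one table makes the separation between operational and service traffic required by R2 explicit and easy to audit.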

3.2.3. SmartX Provisioning and Visibility Centers

3.3. Resource Infrastructure with Distributed Clusters

4. Implementation of Reconfigurable Clusters

4.1. Preparing Distributed Clusters to Compose Physical and Virtual Boxes Remotely

4.2. Networking Plane Separation

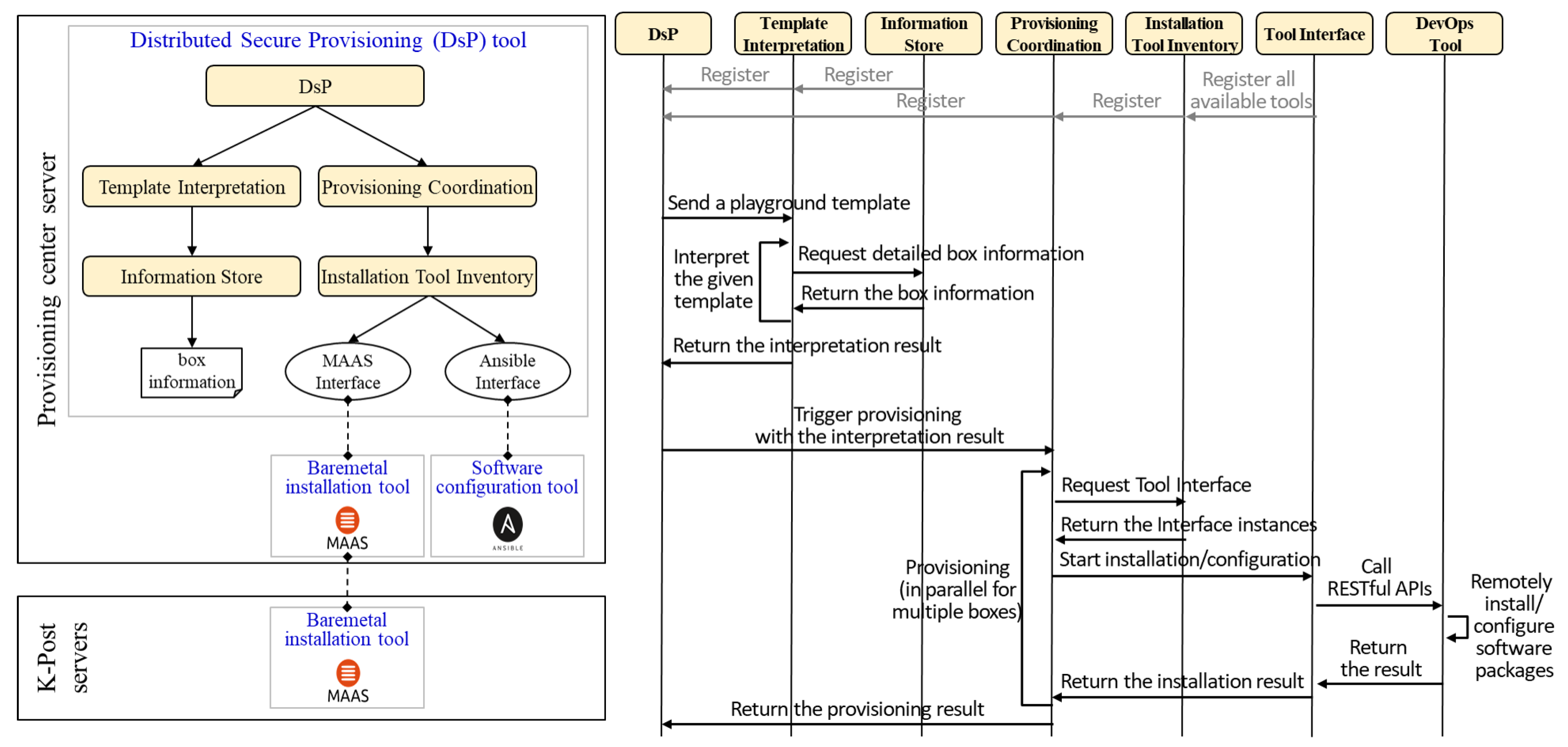

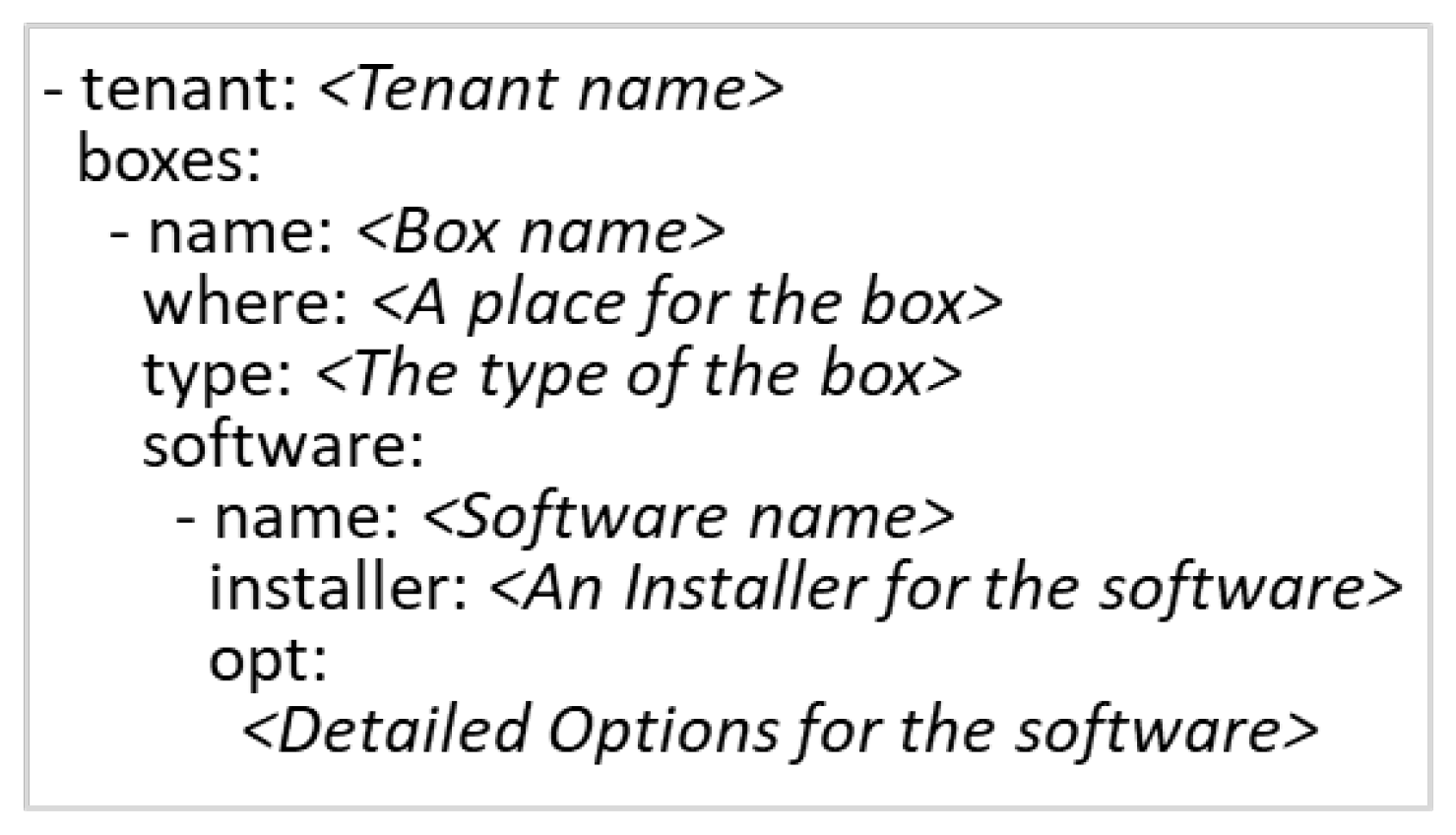

4.3. SmartX Provisioning and the Visibility Center

5. K-ONE Playground: Operations and Utilization

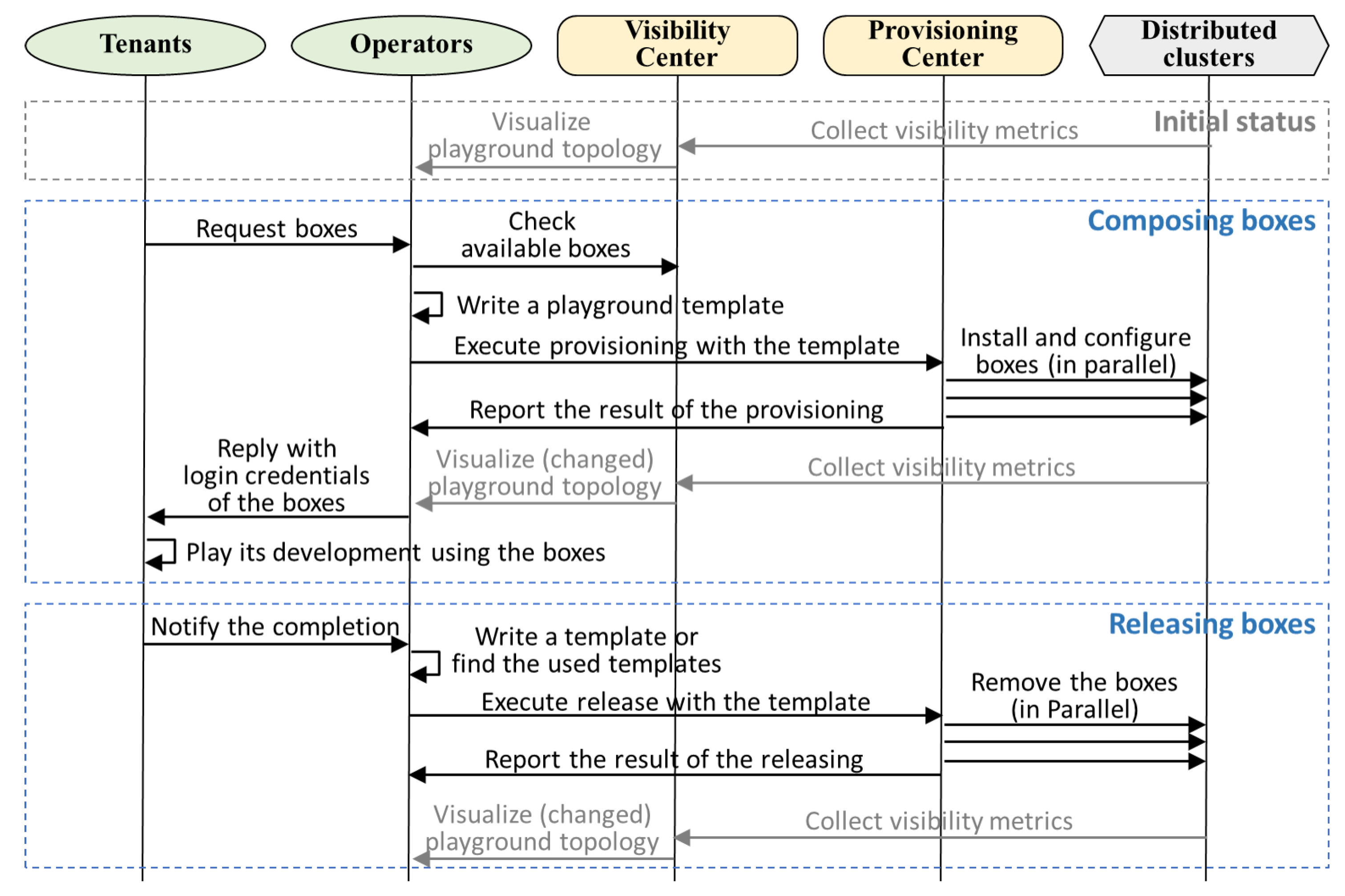

5.1. Operations of K-ONE Playground

5.2. K-ONE Playground Utilization

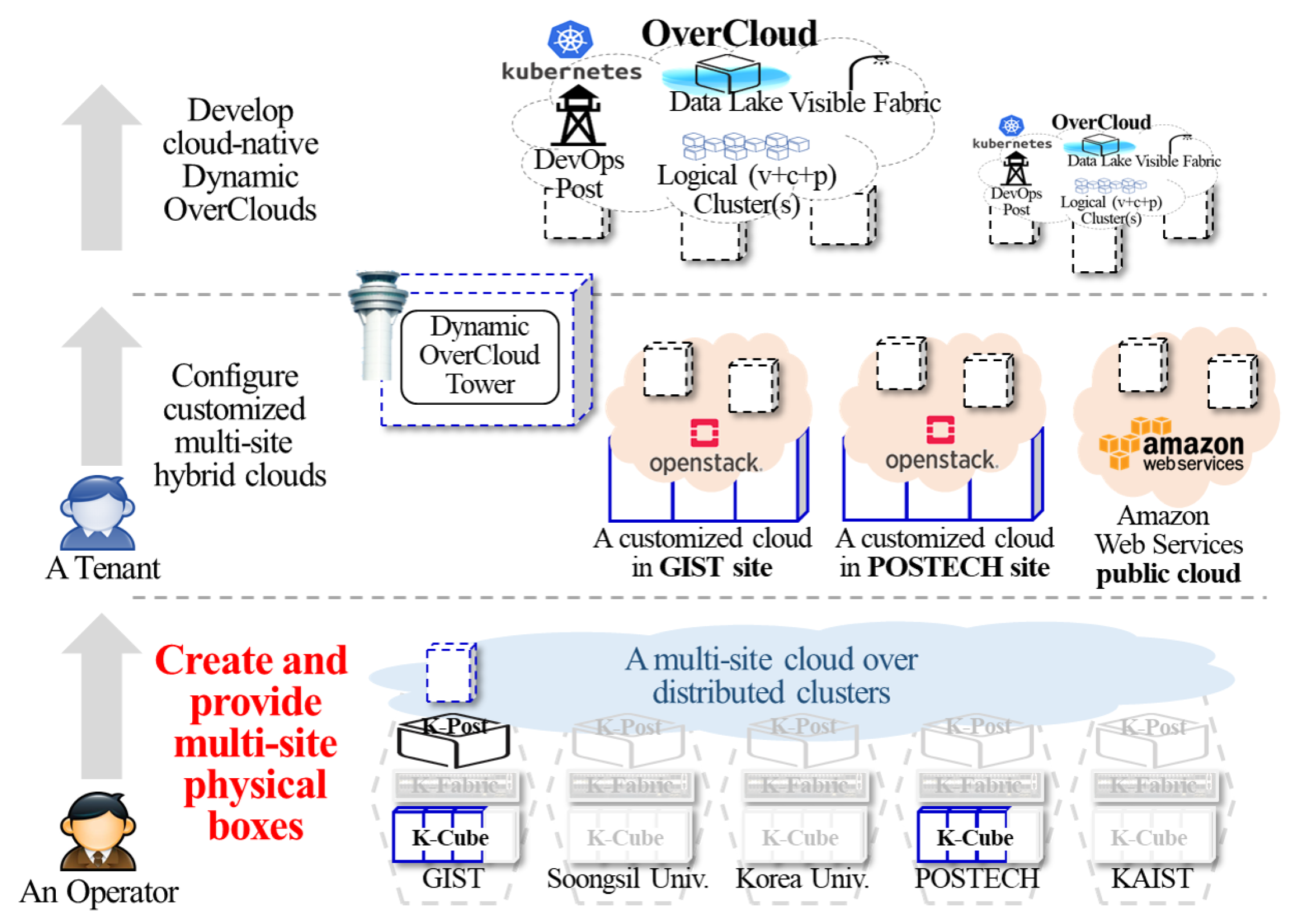

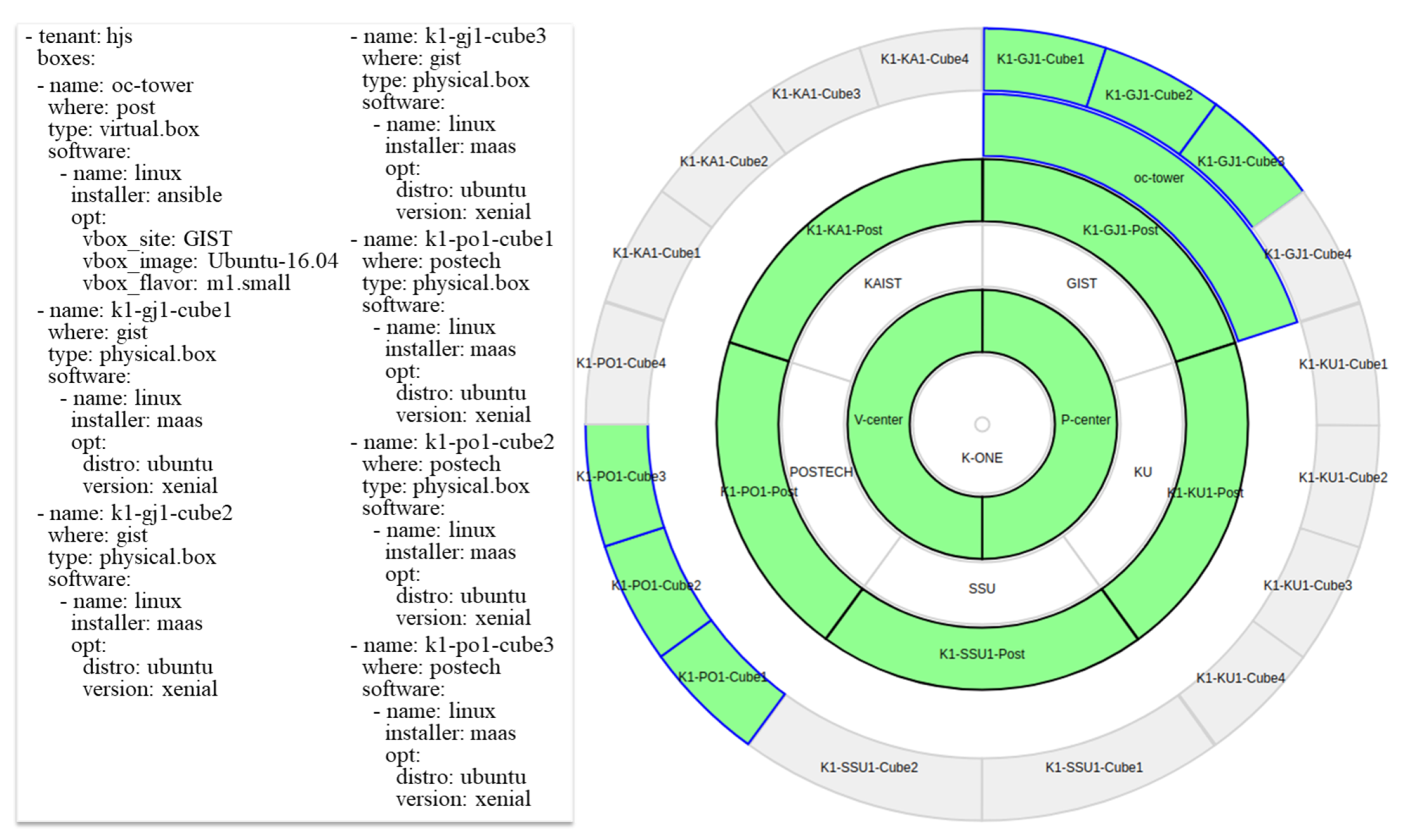

5.2.1. Utilization #1: Multi-Site Physical Boxes for Cloud-Native Dynamic Overcloud

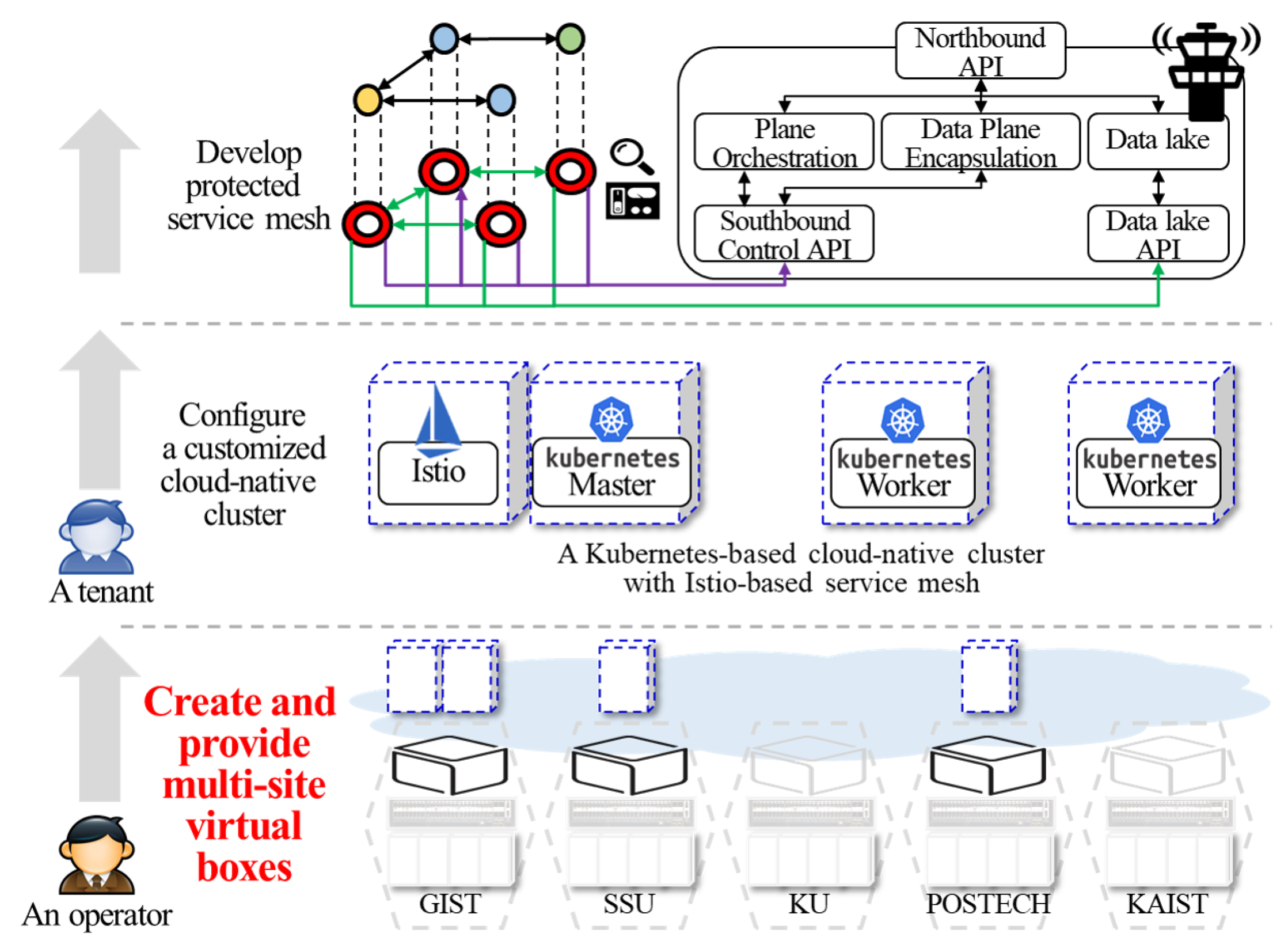

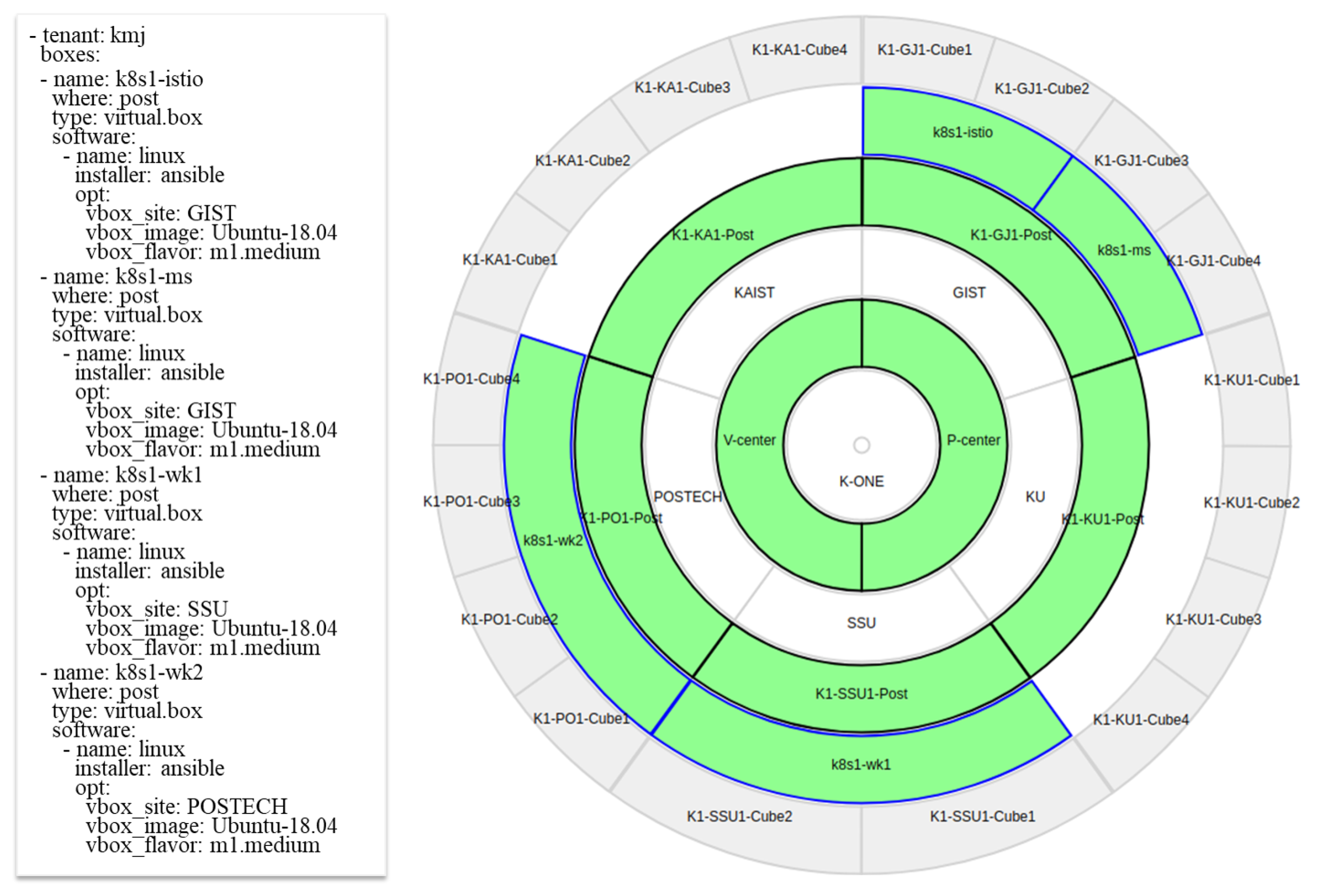

5.2.2. Utilization #2: Multi-Site Virtual Boxes for Cloud-Native Service Mesh Service

5.2.3. Utilization #3: Multi-Tenants Testbeds for Open Source Software Development

6. Related Work

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DevOps | Development and Operations |
| DHCP | Dynamic Host Configuration Protocol |
| DsP | Distributed Secure Provisioning |
| GUI | Graphical User Interface |
| IPMI | Intelligent Platform Management Interface |
| KREONET | Korea Research Environment Open NETwork |
| KVM | Kernel-based Virtual Machine |
| K-ONE | Korea-Open Networking Everywhere |
| LXD | LinuX container Daemon |
| LAN | Local Area Network |
| MAAS | Metal as a Service |
| M-CORD | Mobile-Central Office Re-architected as a Data Center |
| NFV | Network Function Virtualization |
| NOS | Network Operating Systems |
| OF@TEIN | OpenFlow at Trans-Eurasia Information Network |
| PXE | Pre-eXecution Environment |
| REST | Representational State Transfer |
| SDI | Software-Defined Infrastructure |
| SDN | Software-Defined Networking |
| MVF | MultiView Visibility Framework |
| SSH | Secure Shell |
| VLAN | Virtual Local Area Network |
| VXLAN | Virtual Extensible Local Area Network |
| VM | Virtual Machine |
| YAML | YAML Ain’t Markup Language |

References

- CNCF Community. CNCF Cloud Native Definition v1.0. Available online: https://github.com/cncf/toc/blob/master/DEFINITION.md (accessed on 9 February 2020).

- Chang, H.; Hari, A.; Mukherjee, S.; Lakshman, T.V. Bringing the Cloud to the Edge. In Proceedings of the IEEE Conference on Computer Communications Workshop 2014, Toronto, ON, Canada, 27 April–2 May 2014; pp. 346–351.

- O’Keefe, M. Edge Computing and the Cloud-Native Ecosystem. Available online: https://thenewstack.io/edge-computing-and-the-cloud-native-ecosystem (accessed on 9 February 2020).

- Kumar, S.; Du, J. KubeEdge, a Kubernetes Native Edge Computing Framework. Available online: https://kubernetes.io/blog/2019/03/19/kubeedge-k8s-based-edge-intro (accessed on 9 February 2020).

- Loukides, M. What Is DevOps? O’Reilly Media, Inc.: Newton, MA, USA, 2012.

- Gedawy, H.; Tariq, S.; Mtibaa, A.; Harras, K. Cumulus: A Distributed and Flexible Computing Testbed for Edge Cloud Computational Offloading. In Proceedings of the 2016 Cloudification of the Internet of Things, Paris, France, 23–25 November 2016.

- Pan, J.; Ma, L.; Ravindran, R.; TalebiFard, P. HomeCloud: An Edge Cloud Framework and Testbed for New Application Delivery. In Proceedings of the 2016 23rd International Conference on Telecommunications, Thessaloniki, Greece, 16–18 May 2016.

- Bavier, A.; McGeer, R.; Ricart, G. PlanetIgnite: A Self-Assembling, Lightweight, Infrastructure-as-a-Service Edge Cloud. In Proceedings of the 2016 28th International Teletraffic Congress, Würzburg, Germany, 12–16 September 2016; pp. 130–138.

- Lin, T.; Park, B.; Bannazadeh, H.; Leon-Garcia, A. Deploying a Multi-Tier Heterogeneous Cloud: Experiences and Lessons from the SAVI Testbed. In Proceedings of the 2018 IEEE/IFIP Network Operations and Management Symposium, Taipei, Taiwan, 23–27 April 2018.

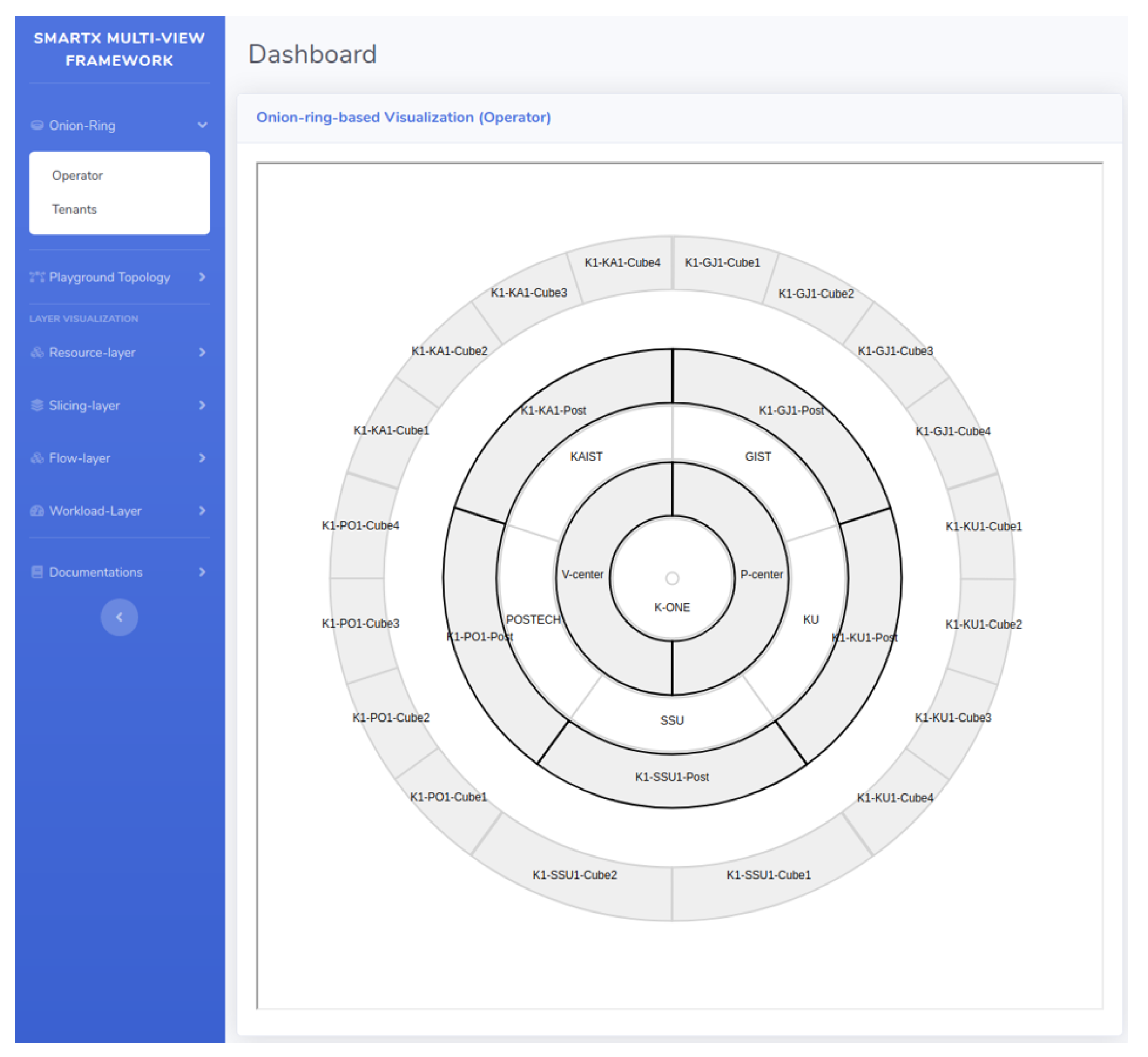

- Usman, M.; Risdianto, A.C.; Han, J.; Kim, J. Interactive Visualization of SDN-Enabled Multisite Cloud Playgrounds Leveraging SmartX MultiView Visibility Framework. Comput. J. 2019, 62, 838–854.

- Risdianto, A.C.; Kim, N.L.; Shin, J.; Bae, J.; Usman, M.; Ling, T.C.; Panwaree, P.; Thet, P.M.; Aswakul, C.; Thanh, N.H.; et al. OF@TEIN: A community effort towards open/shared SDN-Cloud virtual playground. In Proceedings of the 12th APAN- Network Research Workshop 2015, Kuala Lumpur, Malaysia, 10 August 2015; pp. 22–28.

- Han, J.; Shin, J.S.; Kwon, J.C.; Kim, J. Cloud-Native SmartX Intelligence Cluster for AI-Inspired HPC/HPDA Workloads. In Proceedings of the ACM/IEEE Supercomputing Conference 2019 (SC19), Denver, CO, USA, 17–22 November 2019. Poster 123.

- Risdianto, A.C.; Usman, M.; Kim, J. SmartX Box: Virtualized Hyper-Converged Resources for Building an Affordable Playground. Electronics 2019, 8, 1242.

- Usman, M.; Rathore, M.A.; Kim, J. SmartX Multi-View Visibility Framework with Flow-Centric Visibility for SDN-Enabled Multisite Cloud Playground. Appl. Sci. 2019, 9, 2045.

- Li, H.; Xu, X.; Ren, J.; Dong, Y. ACRN: A big little hypervisor for IoT development. In Proceedings of the 15th ACM SIGPLAN/SIGOPS International Conference on Virtual Execution Environment, New York, NY, USA, 13–14 April 2019; pp. 31–44.

- Sinitsyn, V. Jailhouse. Linux J. 2015, 252, 78–90.

- Kivity, A.; Kamay, Y.; Laor, D.; Lublin, U.; Liguori, A. kvm: The Linux virtual machine monitor. Linux Symp. 2007, 1, 225–230.

- Barham, P.; Dragovic, B.; Fraser, K.; Hand, S.; Harris, T.; Ho, A.; Neugebauer, R.; Pratt, I.; Warfield, A. Xen and the art of virtualization. ACM Sigops Oper. Syst. Rev. 2003, 37, 164–177.

- LXD—System Container Manager. Available online: https://lxd.readthedocs.io/en/latest/ (accessed on 13 April 2020).

- Merkel, D. Docker: Lightweight Linux containers for consistent development and deployment. Linux J. 2014, 2014, 2.

- Madhavapeddy, A.; Scott, D.J. Unikernels: Rise of the virtual library operating system. Queue 2013, 11, 30–44.

- Randazzo, A.; Tinnirello, I. Kata Containers: An Emerging Architecture for Enabling MEC Services in Fast and Secure Way. In Proceedings of the 2019 Sixth International Conference on Internet of Things: Systems, Management and Security (IOTSMS 2019), Granada, Spain, 22–25 October 2019; pp. 209–214.

- Young, E.G.; Zhu, P.; Caraza-Harter, T.; Arpaci-Dusseau, A.C.; Arpaci-Dusseau, R.H. The True Cost of Containing: A gVisor Case Study. In Proceedings of the 11th USENIX Workshop on Hot Topics in Cloud Computing (HotCloud 19), Renton, WA, USA, 8 July 2019.

- Kurtzer, G.M.; Sochat, V.; Bauer, M.W. Singularity: Scientific containers for mobility of compute. PLoS ONE 2017, 12, e0177459.

- Intel; Hewlett-Packard; NEC; Dell. Intelligent Platform Management Interface Specification Second Generation. Available online: https://www.intel.com/content/dam/www/public/us/en/documents/product-briefs/ipmi-second-gen-interface-spec-v2-rev1-1.pdf (accessed on 9 February 2020).

- Shin, J.-S.; Kim, J. Template-based Automation with Distributed Secure Provisioning Installer for Remote Cloud Boxes. In Proceedings of the 7th International Conference on Information and Communication Technology Convergence, Jeju, Korea, 19–21 October 2016.

- Usman, M.; Kim, J. SmartX Multi-View Visibility Framework for Unified Monitoring of SDN-enabled MultiSite Clouds. Trans. Emerg. Telecomm. Tech. 2019.

- Shin, J.-S.; Kim, J. Multi-layer Onion-ring Visualization of Distributed Clusters for SmartX MultiView Visibility and Security. In Proceedings of the 15th IEEE Symposium on Visualization for Cyber Security, Berlin, Germany, 22 October 2018.

- Kim, J.; Nam, T. Cluster Visualization Apparatus. U.S. Patent Application 16,629,299, 7 January 2020.

- Risdianto, A.C.; Tsai, P.-W.; Ling, T.C.; Yang, C.-S.; Kim, J. Enhanced ONOS SDN Controllers Deployment for Federated Multi-domain SDN-Cloud with SD-Routing-Exchange. Malays. J. Comput. Sci. 2017, 30, 134–153.

- Sefraoui, O.; Aissaoui, M.; Eleuldj, M. Openstack: Toward an open source solution for cloud computing. Int. J. Comput. Appl. 2012, 55, 38–42.

- Cobbler—Linux Install and Update Server. Available online: https://cobbler.github.io/ (accessed on 14 April 2020).

- Marschall, M. Chef Infrastructure Automation Cookbook; Packt Publishing Ltd.: Birmingham, UK, 2015.

- MAAS|Metal as a Service. Available online: https://maas.io/ (accessed on 14 April 2020).

- OpenStack Docs: DevStack. Available online: https://docs.openstack.org/devstack/latest/ (accessed on 14 April 2020).

- Hochstein, L.; Moser, R. Ansible Up & Running: Automating Configuration Management and Deployment the Easy Way; O’Reilly Media, Inc.: Sebastopol, CA, USA, 2017.

- Han, J.; Kim, J. Design of SaaS OverCloud for 3-tier SaaS Compatibility over Cloud-based Multiple Boxes. In Proceedings of the 12th International Conference on Future Internet Technologies, Fukuoka, Japan, 14–16 June 2017.

- Li, W.; Lemieux, Y.; Gao, J.; Zhao, Z.; Han, Y. Service Mesh: Challenges, State of the Art, and Future Research Opportunities. In Proceedings of the 2019 IEEE International Conference on Service-Oriented System Engineering (SOSE 2019), San Francisco, CA, USA, 4–9 April 2019; pp. 122–127.

- Kang, M.; Shin, J.-S.; Kim, J. Protected Coordination of Service Mesh for Container-based 3-tier Service Traffic. In Proceedings of the 33rd International Conference on Information Networking, Kuala Lumpur, Malaysia, 9–11 January 2019; pp. 427–429.

- Shin, J.-S.; de Brito, M.S.; Magedanz, T.; Kim, J. Automated Multi-Swarm Networking with Open Baton NFV MANO Framework. In European Conference on Parallel Processing; Springer: Cham, Switzerland, 2018; Volume 11339, pp. 82–92.

- Hong, J.; Kim, W.; Yoo, J.-H.; Hong, J.W.-K. Design and Implementation of Container-based M-CORD Monitoring System. In Proceedings of the 20th Asia-Pacific Network Operations and Management Symposium, Matsue, Japan, 18–20 September 2019.

| Box | Option | Management Tools |
|---|---|---|
| Container box | Machine container | Machine container runtime (LXD) |
| | Application container | Application container runtime (Docker) |
| Virtual box | Virtual machine | Virtual hypervisor (KVM, Xen) |
| Physical box | Bare-Metal Server | - |
| | Partitioned cell | Hardware/system partitioner (ACRN Hypervisor, Linux Jailhouse) |

| Scenario | Step | Elapsed Time |
|---|---|---|
| 1-1. Composing multi-site physical boxes from K-Cube servers | | 8 min 58 s |
| 1-2. Composing virtual boxes on cloud-enabled physical boxes | 1-2-1. OpenStack control node | 14 min 33 s |
| | 1-2-2. OpenStack compute node | 5 min 40 s |
| | 1-2-3. Virtual boxes | 1 min 12 s |
| 1-3. Release physical boxes | | 3 min 39 s |
| 2-1. Composing multi-site virtual boxes from the K-Post servers | | 1 min 29 s |
| 2-2. Release the virtual boxes | | 16 s |
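
As a worked example of reading the elapsed times above, the following Python sketch adds up the steps of each scenario. It assumes that the steps within a scenario run strictly one after another, which the table itself does not state, so the totals are only an upper-level estimate under that assumption. The helper names `to_seconds` and `fmt` are introduced here for illustration.

```python
import re


def to_seconds(text: str) -> int:
    """Parse strings such as '8 min 58 s' or '16 s' into seconds."""
    match = re.fullmatch(r"(?:(\d+) min)?\s*(?:(\d+) s)?", text.strip())
    if match is None or not any(match.groups()):
        raise ValueError(f"unrecognized duration: {text!r}")
    minutes, seconds = (int(g) if g else 0 for g in match.groups())
    return minutes * 60 + seconds


def fmt(total: int) -> str:
    """Format a number of seconds in the same style as the table."""
    minutes, seconds = divmod(total, 60)
    return f"{minutes} min {seconds} s"


# Elapsed times copied from the table above.
scenario_1_compose = ["8 min 58 s", "14 min 33 s", "5 min 40 s", "1 min 12 s"]
scenario_1_release = "3 min 39 s"
scenario_2 = ["1 min 29 s", "16 s"]

compose_1 = sum(to_seconds(t) for t in scenario_1_compose)
total_1 = compose_1 + to_seconds(scenario_1_release)
total_2 = sum(to_seconds(t) for t in scenario_2)

print("Scenario 1, compose only:     ", fmt(compose_1))  # 30 min 23 s
print("Scenario 1, including release:", fmt(total_1))    # 34 min 2 s
print("Scenario 2, compose + release:", fmt(total_2))    # 1 min 45 s
```

Under the sequential assumption, composing physical boxes plus an OpenStack-based layer of virtual boxes takes about half an hour, whereas composing and releasing virtual boxes directly takes under two minutes.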

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shin, J.-S.; Kim, J. K-ONE Playground: Reconfigurable Clusters for a Cloud-Native Testbed. Electronics 2020, 9, 844. https://doi.org/10.3390/electronics9050844
