A Machine Learning and Integration Based Architecture for Cognitive Disorder Detection Used for Early Autism Screening

Abstract

1. Introduction

- We propose an architecture focused on the integration of heterogeneous data and on data analytics. Ontologies are used to integrate the different data sources.

- The proposed architecture allows distributed processing of the data.

- Our approach was tested on ASD data, producing satisfactory results.

- In the experimentation, the correlation between different features was analyzed and the features most relevant to ASD were identified.

2. Previous Work

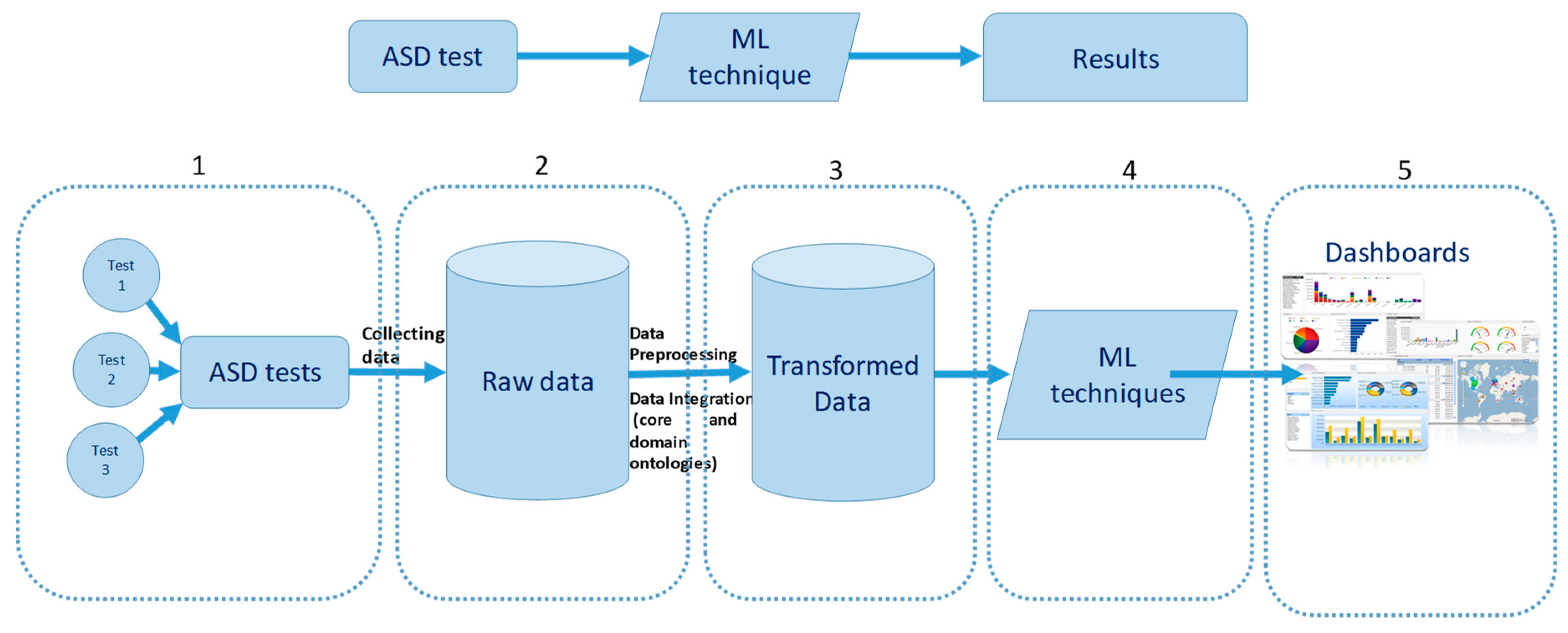

3. The Proposed Architecture

- (1) ASD tests (data collection): The first step is to collect data from every source, including manual observations/evaluations by experts. Data are commonly generated in many diverse centers and areas, such as medical or intervention centers, hospitals, and academic centers with help/support. However, these data are also commonly stored separately, and they are not always processed or analyzed. This was one of the main objectives of this work: to gather data from many diverse data sources and incorporate them into a single database so that the same preprocessing techniques can be applied to the whole dataset.

- (2) Raw data (data warehousing):

- (3)

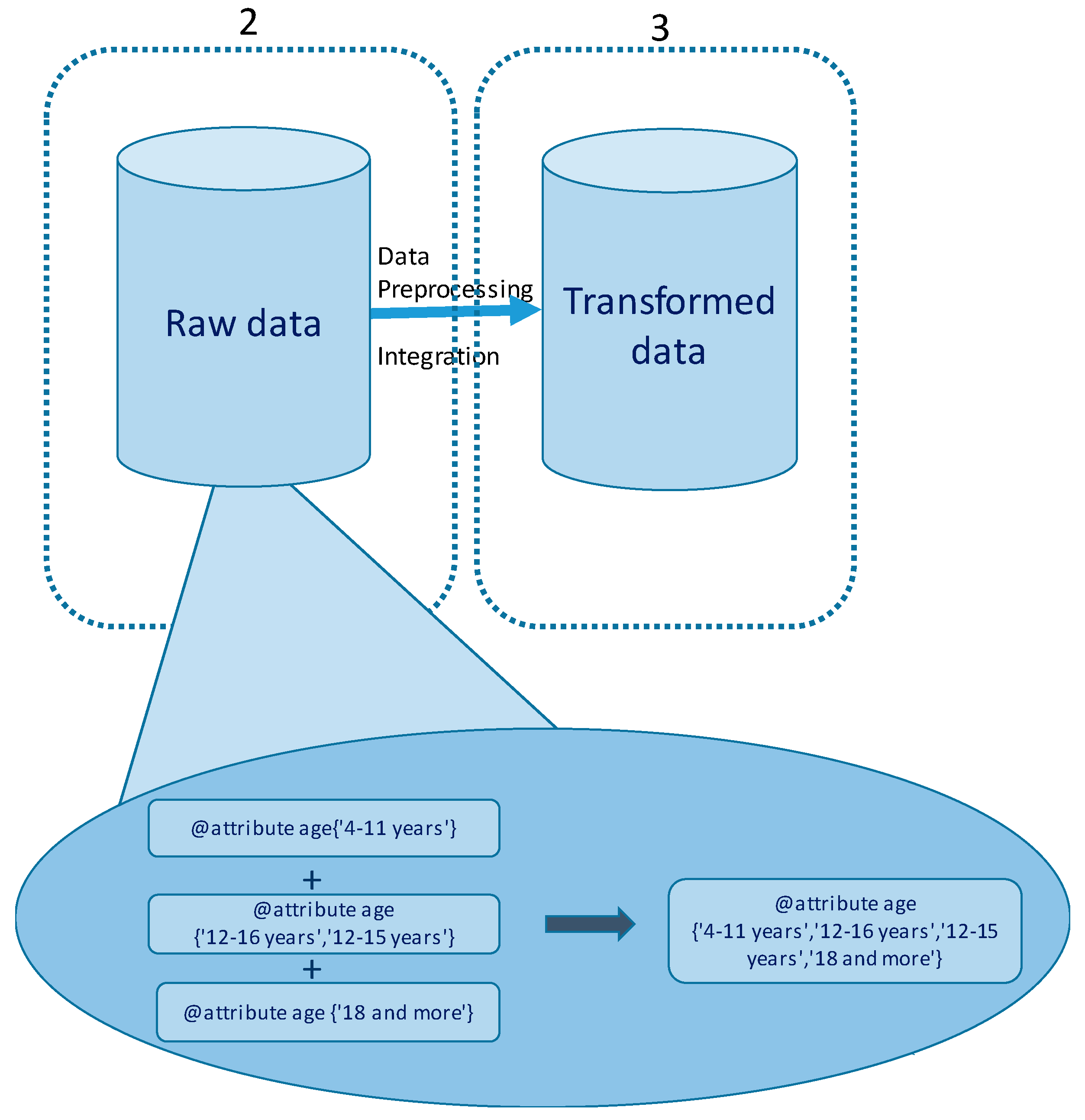

- Data transformation:It is typical to identify this phase, which is also known as data preprocessing, as the most time-consuming task. This task can easily be divided into several subtasks, such as normalization, feature reduction, and integration. All of them are complex and, in this paper, we focused on the integration and feature reduction subtasks. In addition, the difficulty of each of these subtasks depends on the taxonomy of the data.

- Ontology-based semantic tagging of different data sources. The objective of this task was to identify the semantic concept of each field of the source databases in order to subsequently integrate them. All the database attributes were searched in the domain ontology, thus obtaining their semantic concepts. If a concept had different semantic types, word sense disambiguation (WSD) [29,30] techniques were applied to obtain a unique semantic type.

- Core ontology selection. Different data sources have their own ontologies or semantic resources (domain ontologies). The information from each source was semantically enriched/associated with the information extracted from its specific domain ontology. The core ontology was defined as a Knowledge Base (KB). This ontology was used when the integration of all information was carried out.

- Ontology mapping. To accomplish the integration, data sources were described in terms of the core ontology via equivalent concepts and relations (ontology mapping) between the core ontology and the specialized domain ontologies of different sources. Peral et al. [31] used a similar approach to carry out the integration of heterogeneous data applied to telemedicine systems.

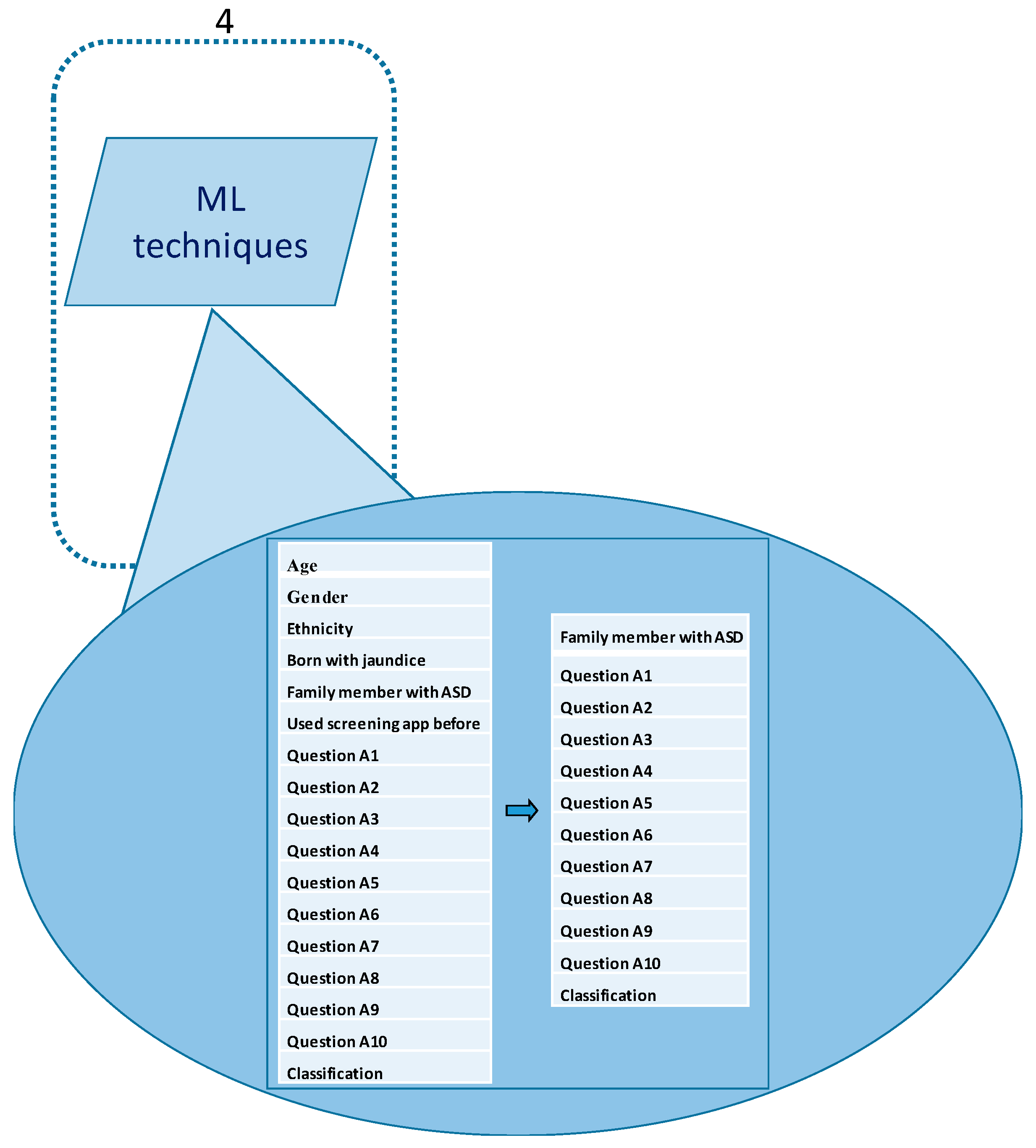
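The ontology mapping step described above can be illustrated with a minimal sketch. It assumes that each source's fields have already been tagged with a core-ontology concept; the source names (`hospital`, `school`), field names, and concept labels are hypothetical and are not taken from the paper's ontologies.

```python
# Hypothetical mapping from each source's field names to core-ontology concepts.
# In the architecture this mapping would come from semantic tagging and
# ontology mapping; here it is hard-coded for illustration only.
FIELD_TO_CONCEPT = {
    "hospital": {"pt_age": "Age", "sex": "Gender", "jaund": "BornWithJaundice"},
    "school": {"age_years": "Age", "gender": "Gender"},
}


def to_core(source, record):
    """Re-express one source record in core-ontology terms.

    Fields without a mapped concept are dropped.
    """
    mapping = FIELD_TO_CONCEPT[source]
    return {mapping[field]: value for field, value in record.items() if field in mapping}


def integrate(records):
    """Integrate (source, record) pairs into one list that shares a single vocabulary."""
    return [to_core(source, record) for source, record in records]


unified = integrate([
    ("hospital", {"pt_age": 5, "sex": "m", "room": 3}),
    ("school", {"age_years": 6, "gender": "f"}),
])
```

Once every record uses the same concept names, the same preprocessing can be applied to the whole dataset regardless of where each record originated.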

- (4)

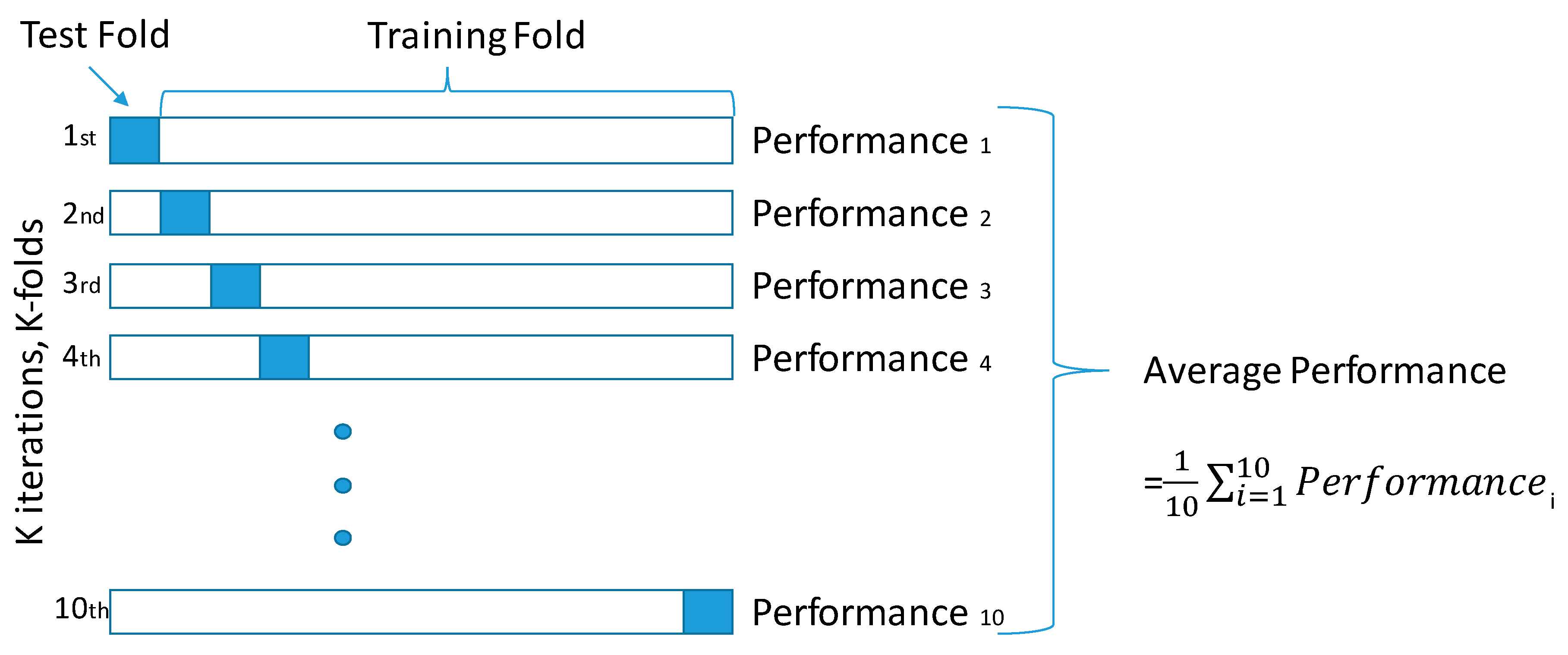

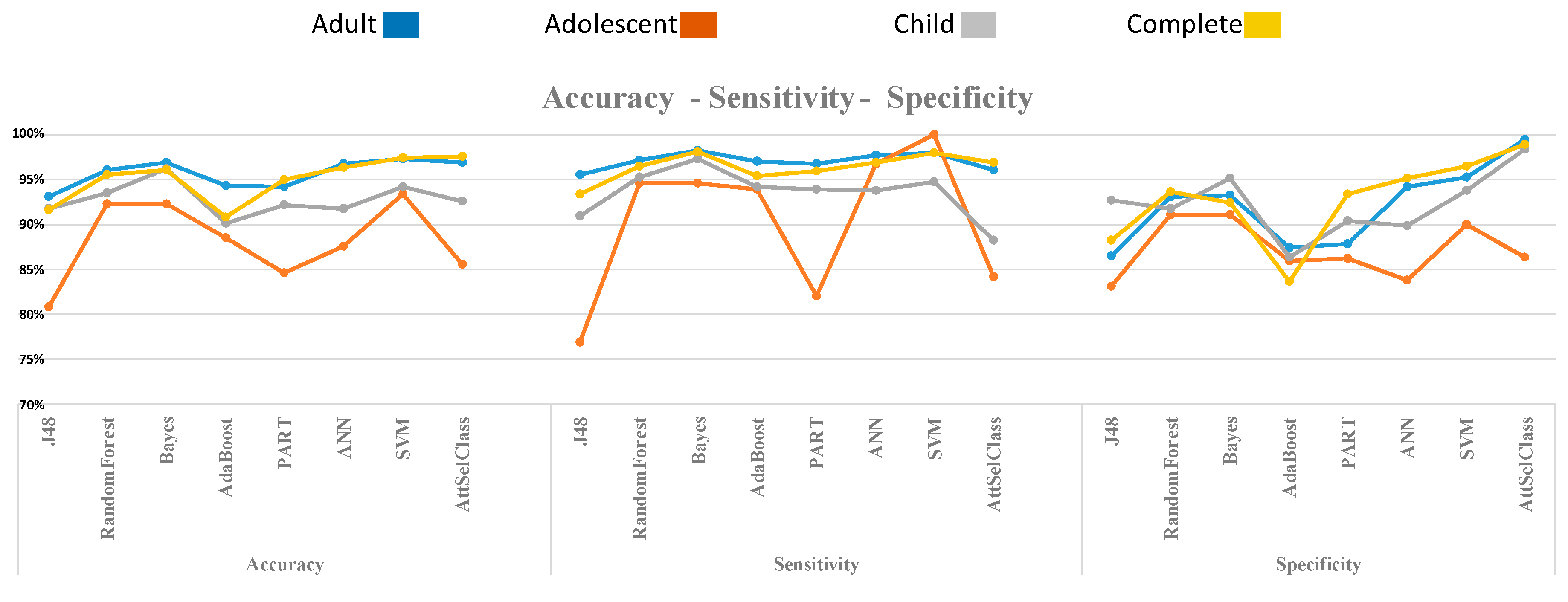

- Application of Machine Learning (ML) techniques:In the fourth phase, ML techniques were applied. There are many ML methods that could be used to obtain indicators of a target class, such as children with ASD. In the proposed architecture it is possible to carry out experiments using these diverse ML algorithms to obtain the precision measure for each algorithm. In this phase, we use the cross-validation method in order to divide the whole dataset into two subsets, training and test datasets. Using the test datasets, we were able to test the correctness, validity, and reliability of the model without overfitting the system.
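As a rough illustration of the cross-validation procedure mentioned above, the following sketch splits a dataset into k folds and averages the accuracy over them. It is a generic k-fold scheme under stated assumptions, not the authors' experimental setup: the function names, the value of `k`, and the majority-class baseline used as a stand-in model are all hypothetical.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Yield (train_idx, test_idx) pairs; each sample lands in exactly one test fold."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def cross_validate(X, y, fit, predict, k=5):
    """Return the mean test accuracy over k folds (requires len(X) >= k)."""
    scores = []
    for train, test in k_fold_indices(len(X), k):
        model = fit([X[i] for i in train], [y[i] for i in train])
        correct = sum(predict(model, X[i]) == y[i] for i in test)
        scores.append(correct / len(test))
    return sum(scores) / k


# Stand-in model for illustration: always predict the most frequent training label.
def fit_majority(X, y):
    return max(set(y), key=y.count)


def predict_majority(model, x):
    return model
```

Any classifier can be plugged in through the `fit` and `predict` callables, which makes it straightforward to compare several algorithms on identical folds.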

- (5)

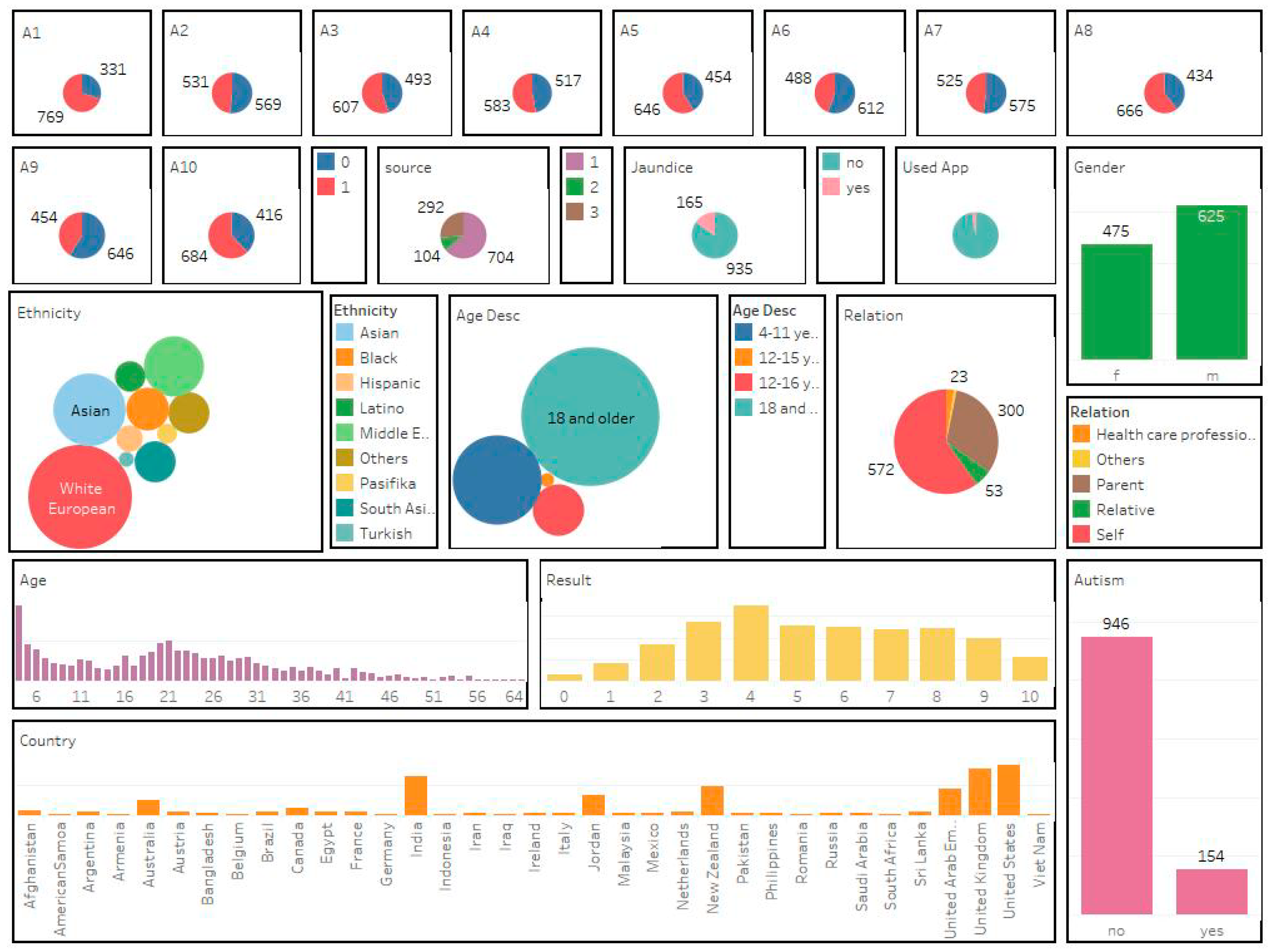

- Dashboard generation:The purpose of creating a dashboard is to visualize data types according to specific purposes and needs [35]. Organizations use dashboards to manage an organization’s performance, providing an overall suite of applications such as strategy maps, balanced scorecards, and business intelligence, and to make information available for them in a specific format for decision-making [36]. One of the methods used to create dashboards is a graphical system called Tableau, which is used to perform temporary discovery and analysis of customer datasets [37]. In our approach, dashboards were used to discover the key indicators, correlations among data, hidden patterns, most important features (this may produce a feature reduction), detection, prediction, etc.
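One of the quantities a dashboard of this kind might surface is the correlation between each feature and the target class. The sketch below ranks numeric features by the absolute value of their Pearson correlation with a label column. It is only an assumed illustration of such an analysis; the column names and the toy rows are invented and are not results from the paper.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length numeric sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sd_x == 0 or sd_y == 0:
        return 0.0  # constant column: correlation is undefined, treated as uninformative
    return cov / (sd_x * sd_y)


def rank_features(rows, label):
    """Return [(feature, r)] sorted by |r| in descending order."""
    ys = [row[label] for row in rows]
    features = [name for name in rows[0] if name != label]
    scored = [(f, pearson([row[f] for row in rows], ys)) for f in features]
    return sorted(scored, key=lambda pair: abs(pair[1]), reverse=True)


# Invented toy data: two binary answers and a binary class label.
toy_rows = [
    {"A1": 1, "A2": 0, "asd": 1},
    {"A1": 0, "A2": 0, "asd": 0},
    {"A1": 1, "A2": 1, "asd": 1},
    {"A1": 0, "A2": 1, "asd": 0},
]
ranking = rank_features(toy_rows, "asd")
```

Features at the bottom of such a ranking are candidates for removal, which is one way the feature reduction mentioned above could be supported.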

4. Experiment—Case Study

5. Discussion

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. The World Bank (IBRD-IDA). 2019. Available online: https://www.worldbank.org/en/topic/disability (accessed on 26 January 2020).

2. Lyall, K.; Croen, L.; Daniels, J.; Fallin, M.D.; Ladd-Acosta, C.; Lee, B.K.; Park, B.Y.; Snyder, N.W.; Schendel, D.; Volk, H.; et al. The changing epidemiology of autism spectrum disorders. Ann. Rev. Public Health 2017, 38, 81–102.

3. Kessler, R.C.; van Loo, H.M.; Wardenaar, K.J.; Bossarte, R.M.; Brenner, L.A.; Cai, T.; Ebert, D.D.; Hwang, I.; Li, J.; de Jonge, P.; et al. Testing a machine-learning algorithm to predict the persistence and severity of major depressive disorder from baseline self-reports. Mol. Psychiatry 2016, 21, 1366–1371.

4. Chekroud, A.M.; Zotti, R.J.; Shehzad, Z.; Gueorguieva, R.; Johnson, M.K.; Trivedi, M.H.; Cannon, T.D.; Krystal, J.H.; Corlett, P.R. Cross-trial prediction of treatment outcome in depression: A machine learning approach. Lancet Psychiatry 2016, 3, 243–250.

5. Torous, J.; Staples, P.; Onnela, J.P. Realizing the potential of mobile mental health: New methods for new data in psychiatry. Curr. Psychiatry Rep. 2015, 17, 61.

6. Mohr, D.C.; Burns, M.N.; Schueller, S.M.; Clarke, G.; Klinkman, M. Behavioral intervention technologies: Evidence review and recommendations for future research in mental health. Gener. Hosp. Psychiatry 2013, 35, 332–338.

7. Gil, D.; Johnsson, M.; Chamizo, J.M.; Paya, A.S.; Fernandez, D.R. Application of artificial neural networks in the diagnosis of urological dysfunctions. Expert Syst. Appl. 2009, 36, 5754–5760.

8. Gil, D.; Johnsson, M. Using support vector machines in diagnoses of urological dysfunctions. Expert Syst. Appl. 2010, 37, 4713–4718.

9. Gil, D.; Johnsson, M.; García Chamizo, J.M.; Paya, A.S.; Fernández, D.R. Modelling of urological dysfunctions with neurological etiology by means of their centres involved. Appl. Soft Comput. 2011, 11, 4448–4457.

10. Gil, D.; Girela, J.L.; De Juan, J.; Gomez-Torres, M.J.; Johnsson, M. Predicting seminal quality with artificial intelligence methods. Expert Syst. Appl. 2012, 39, 12564–12573.

11. Girela, J.L.; Gil, D.; Johnsson, M.; Gomez-Torres, M.J.; De Juan, J. Semen parameters can be predicted from environmental factors and lifestyle using artificial intelligence methods. Biol. Reprod. 2013, 88, 99–100.

12. Gil, D.; Girela, J.L.; Azorín, J.; De Juan, A.; De Juan, J. Identifying central and peripheral nerve fibres with an artificial intelligence approach. Appl. Soft Comput. 2018, 67, 276–285.

13. Yoo, I.; Alafaireet, P.; Marinov, M.; Pena-Hernandez, K.; Gopidi, R.; Chang, J.F.; Hua, L. Data mining in healthcare and biomedicine: A survey of the literature. J. Med. Syst. 2012, 36, 2431–2448.

14. Beykikhoshk, A.; Arandjelović, O.; Phung, D.; Venkatesh, S.; Caelli, T. Data-mining Twitter and the autism spectrum disorder: A pilot study. In Proceedings of the 2014 IEEE/ACM International Conference on Advances in Social Networks Analysis and Mining (ASONAM 2014), Beijing, China, 17–20 August 2014; pp. 349–356.

15. Latkowski, T.; Osowski, S. Data mining for feature selection in gene expression autism data. Expert Syst. Appl. 2015, 42, 864–872.

16. Bellazzi, R.; Ferrazzi, F.; Sacchi, L. Predictive data mining in clinical medicine: A focus on selected methods and applications. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2011, 1, 416–430.

17. Wall, D.P.; Kosmicki, J.; Deluca, T.F.; Harstad, E.; Fusaro, V.A. Use of machine learning to shorten observation-based screening and diagnosis of autism. Transl. Psychiatry 2012, 2, e100.

18. Kosmicki, J.A.; Sochat, V.; Duda, M.; Wall, D.P. Searching for a minimal set of behaviors for autism detection through feature selection-based machine learning. Transl. Psychiatry 2015, 5, e514.

19. Deshpande, G.; Libero, L.; Sreenivasan, K.R.; Deshpande, H.; Kana, R.K. Identification of neural connectivity signatures of autism using machine learning. Front. Human Neurosci. 2013, 7, 670.

20. Bone, D.; Goodwin, M.S.; Black, M.P.; Lee, C.C.; Audhkhasi, K.; Narayanan, S. Applying machine learning to facilitate autism diagnostics: Pitfalls and promises. J. Autism Dev. Disord. 2015, 45, 1121–1136.

21. Rosenberg, R.E.; Landa, R.; Law, J.K.; Stuart, E.A.; Law, P.A. Factors affecting age at initial autism spectrum disorder diagnosis in a national survey. Autism Res. Treat. 2011, 2011.

22. Rotholz, D.A.; Kinsman, A.M.; Lacy, K.K.; Charles, J. Improving early identification and intervention for children at risk for autism spectrum disorder. Pediatrics 2017, 139, e20161061.

23. Daniels, A.M.; Mandell, D.S. Explaining differences in age at autism spectrum disorder diagnosis: A critical review. Autism 2014, 18, 583–597.

24. Zuckerman, K.E.; Lindly, O.J.; Sinche, B.K. Parental concerns, provider response, and timeliness of autism spectrum disorder diagnosis. J. Pediatr. 2015, 166, 1431–1439.

25. Fang, H. Managing data lakes in big data era: What’s a data lake and why has it became popular in data management ecosystem. In Proceedings of the 2015 IEEE International Conference on Cyber Technology in Automation, Control, and Intelligent Systems (CYBER), Shenyang, China, 8–12 June 2015; pp. 820–824.

26. Miloslavskaya, N.; Tolstoy, A. Big data, fast data and data lake concepts. Procedia Comput. Sci. 2016, 88, 300–305.

27. Noy, N.F.; McGuinness, D.L. Ontology Development 101: A Guide to Creating Your First Ontology; Stanford University: Stanford, CA, USA, 2001.

28. Guarino, N. Formal Ontology in Information Systems: Proceedings of the First International Conference (FOIS’98), Trento, Italy, 6–8 June 1998; IOS Press: Amsterdam, The Netherlands, 1998.

29. Stevenson, M.; Wilks, Y. Word Sense Disambiguation. The Oxford Handbook of Computational Linguistics; Oxford University Press: London, UK, 2003; pp. 249–265.

30. Navigli, R. Word Sense Disambiguation: A survey. ACM Comput. Surv. (CSUR) 2009, 41, 1–69.

31. Peral, J.; Ferrandez, A.; Gil, D.; Munoz-Terol, R.; Mora, H. An ontology-oriented architecture for dealing with heterogeneous data applied to telemedicine systems. IEEE Access 2018, 6, 41118–41138.

32. Matuszek, C.; Witbrock, M.; Kahlert, R.C.; Cabral, J.; Schneider, D.; Shah, P.; Lenat, D. Searching for Common Sense: Populating Cyc from the Web; UMBC Computer Science and Electrical Engineering Department Collection: Baltimore, MD, USA, 2005; pp. 1430–1435.

33. Bodenreider, O. The Unified Medical Language System (UMLS): Integrating Biomedical Terminology. Nucleic Acids Res. 2004, 32, D267–D270.

34. Fellbaum, C. WordNet. In Theory and Applications of Ontology: Computer Applications; Springer: Berlin, Germany, 2010; pp. 231–243.

35. Matheus, R.; Janssen, M.; Maheshwari, D. Data science empowering the public: Data-driven dashboards for transparent and accountable decision-making in smart cities. Gov. Inf. Q. 2018, 101284.

36. Rouhani, S.; Zamenian, S.; Rotbie, S. A Prototyping and Evaluation of Hospital Dashboard through End-User Computing Satisfaction Model (EUCS). J. Inf. Technol. Manag. 2018, 10, 43–60.

37. Morton, K.; Bunker, R.; Mackinlay, J.; Morton, R.; Stolte, C. Dynamic workload driven data integration in tableau. In Proceedings of the 2012 ACM SIGMOD International Conference on Management of Data, Scottsdale, AZ, USA, 20 May 2012; pp. 807–816.

38. Witten, I.H.; Frank, E.; Hall, M.A.; Pal, C.J. Data Mining: Practical Machine Learning Tools and Techniques; Morgan Kaufmann Series in Data Management Systems; Elsevier: Montreal, QC, Canada, 2016.

39. Olivé, A. The Universal Ontology: A Vision for Conceptual Modelling and the Semantic Web (Invited Paper). In International Conference on Conceptual Modelling (Lecture Notes in Computer Science); Springer: Berlin, Germany, 2017; Volume 10650, pp. 1–17.

40. Shvaiko, P.; Euzenat, J. Ontology matching: State of the art and future challenges. IEEE Trans. Knowl. Data Eng. 2011, 25, 158–176.

41. Arnold, P.; Rahm, E. Enriching ontology mappings with semantic relations. Data Knowl. Eng. 2014, 93, 1–8.

| Attribute | Type | Description |
|---|---|---|
| Age | Number | Age in years |
| Gender | String | Male or female |
| Ethnicity | String | List of common ethnicities in text format |
| Born with jaundice | Boolean (yes or no) | Whether the person was born with jaundice |
| Family member with PDD | Boolean (yes or no) | Whether any immediate family member has a PDD (Pervasive Developmental Disorder) |
| Who is completing the test | String | Parent, self, caregiver, medical staff, clinician, etc. |
| Country of residence | String | List of countries in text format |
| Used screening app before | Boolean (yes or no) | Whether the user has used a screening app |
| A1 | Binary (0, 1) | The answer code of: “I often notice small sounds when others do not.” |
| A2 | Binary (0, 1) | The answer code of: “I usually concentrate more on the whole picture rather than the small details.” |
| A3 | Binary (0, 1) | The answer code of: “I find it easy to do more than one thing at once.” |
| A4 | Binary (0, 1) | The answer code of: “If there is an interruption, I can switch back to what I was doing very quickly.” |
| A5 | Binary (0, 1) | The answer code of: “I find it easy to ‘read between the lines’ when someone is talking to me.” |
| A6 | Binary (0, 1) | The answer code of: “I know how to tell if someone listening to me is getting bored.” |
| A7 | Binary (0, 1) | The answer code of: “When I’m reading a story, I find it difficult to work out the characters’ intentions.” |
| A8 | Binary (0, 1) | The answer code of: “I like to collect information about categories of things (e.g., types of car, types of bird, types of train, and types of plant).” |
| A9 | Binary (0, 1) | The answer code of: “I find it easy to work out what someone is thinking or feeling just by looking at their face.” |
| A10 | Binary (0, 1) | The answer code of: “I find it difficult to work out people’s intentions.” |
| Classification | Class (yes, no) | The final classification (yes = 189, he/she has ASD; no = 516, he/she does not have ASD) |

| Total Population | | True Condition: Condition Positive | True Condition: Condition Negative |
|---|---|---|---|
| Predicted Condition | Predicted condition positive | True positive (TP) | False positive (FP) |
| Predicted Condition | Predicted condition negative | False negative (FN) | True negative (TN) |
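The four cells of the confusion matrix above are the inputs to the usual evaluation measures. The sketch below computes accuracy, precision, recall, and specificity from them using the standard definitions; the function name and the counts in the example call are made up for illustration and are not results reported in the paper.

```python
def confusion_metrics(tp, fp, fn, tn):
    """Standard measures derived from the four confusion-matrix cells."""
    total = tp + fp + fn + tn
    return {
        # Fraction of all cases classified correctly.
        "accuracy": (tp + tn) / total,
        # Of the cases predicted positive, the fraction that are truly positive.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of the truly positive cases, the fraction that were detected.
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        # Of the truly negative cases, the fraction correctly rejected.
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
    }


# Hypothetical counts, chosen only to show the call.
metrics = confusion_metrics(tp=8, fp=2, fn=2, tn=8)
```

With imbalanced classes, as in the dataset described above, accuracy alone can be misleading, so precision and recall for the positive (ASD) class are worth reporting alongside it.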

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Peral, J.; Gil, D.; Rotbei, S.; Amador, S.; Guerrero, M.; Moradi, H. A Machine Learning and Integration Based Architecture for Cognitive Disorder Detection Used for Early Autism Screening. Electronics 2020, 9, 516. https://doi.org/10.3390/electronics9030516
