First Order and Second Order Learning Algorithms on the Special Orthogonal Group to Compute the SVD of Data Matrices

Abstract

1. Introduction

2. Helmke–Moore Learning and Advanced Numerical Methods

2.1. An HM-Type Neural SVD Learning Algorithm

2.2. Euler Method in $\mathbb{R}^n$ and Its Extension to Smooth Manifolds

- Manifold exponential: The exponential map derived from the geodesic associated with the connection is denoted by $\exp$. Given $x \in M$ and $v \in T_xM$, the exponential of $v$ at a point $x$ is denoted as $\exp_x(v)$. On a Euclidean manifold $M = \mathbb{R}^n$, $\exp_x(v) = x + v$; therefore, an exponential map may be thought of as a generalization of “vector addition” to a curved space.

- Parallel transport along geodesics: The parallel transport map is denoted by $P$. Given two points $x, y \in M$, parallel transport from $x$ to $y$ is denoted as $P^{x \to y}$. Parallel transport moves a tangent vector between two points along their connecting geodesic arc. Given $x, y \in M$ and $v \in T_xM$, the parallel transport of a vector $v$ from a point $x$ to a point $y$ is denoted by $P^{x \to y}(v)$. Parallel transport does not change the length of a transported vector, namely it is an isometry; moreover, parallel transport does not alter the angle between transported vectors, namely, given $x, y \in M$ and $u, v \in T_xM$, it holds that $\langle P^{x \to y}(u), P^{x \to y}(v) \rangle_y = \langle u, v \rangle_x$, i.e., we say that parallel transport is a conformal map. Formally, on a Euclidean manifold $M = \mathbb{R}^n$, $P^{x \to y}(v) = v$; therefore, a vector transport may be thought of as a generalization of the familiar geometric notion of the “rigid translation” of vectors to a curved space.
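As a minimal illustration of these two maps, the sketch below works on the unit sphere in $\mathbb{R}^3$ rather than on the special orthogonal group, because both maps have short closed forms there. The choice of manifold, the helper names `exp_map` and `transport`, and the sample vectors are assumptions made for illustration only.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def exp_map(x, v):
    """Exponential map on the unit sphere: follow the great circle leaving x along v."""
    n = norm(v)
    if n < 1e-15:
        return list(x)
    return [math.cos(n) * xi + math.sin(n) * vi / n for xi, vi in zip(x, v)]

def transport(x, y, v):
    """Parallel transport of v from T_x to T_y along the shortest geodesic (y != -x)."""
    c = dot(y, v) / (1.0 + dot(x, y))
    return [vi - c * (xi + yi) for vi, xi, yi in zip(v, x, y)]

# Illustrative data: the north pole and two tangent vectors there.
x = [0.0, 0.0, 1.0]
u = [0.3, 0.0, 0.0]
w = [0.1, 0.4, 0.0]

y = exp_map(x, [0.5, -0.2, 0.0])          # "x + v" on the curved space
pu, pw = transport(x, y, u), transport(x, y, w)

print("point stays on the sphere:", abs(norm(y) - 1.0) < 1e-12)
print("length preserved:", abs(norm(pu) - norm(u)) < 1e-12)
print("inner product preserved:", abs(dot(pu, pw) - dot(u, w)) < 1e-12)
```

The three printed checks correspond to the properties stated in the list: the exponential map returns a point of the manifold, and parallel transport preserves lengths and inner products.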

2.3. Heun and Runge Methods in $\mathbb{R}^n$ and Their Extension to Smooth Manifolds

- The sum of a variable representing a point on a manifold and a vector field evaluated at that point is replaced by the exponential map applied to the point and to the vector field at that point.

- The sum of two tangent vectors belonging to two different tangent spaces can be carried out only after transporting one of the vectors, by means of parallel transport, to the tangent space where the other vector lives.
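The two replacement rules can be sketched on a toy problem. The example below is an illustration under stated assumptions, not the scheme applied to the HM system: it uses the unit sphere in $\mathbb{R}^3$ (where the exponential map and parallel transport have simple closed forms), a hypothetical vector field `field` that rotates points about the z-axis, and the helper names `euler_step` and `heun_step`.

```python
import math

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def norm(a):
    return math.sqrt(dot(a, a))

def exp_map(x, v):
    """Exponential map on the unit sphere."""
    n = norm(v)
    if n < 1e-15:
        return list(x)
    return [math.cos(n) * xi + math.sin(n) * vi / n for xi, vi in zip(x, v)]

def transport(x, y, v):
    """Parallel transport of v from T_x to T_y along the shortest geodesic."""
    c = dot(y, v) / (1.0 + dot(x, y))
    return [vi - c * (xi + yi) for vi, xi, yi in zip(v, x, y)]

def field(x):
    """Hypothetical tangent vector field f(x) = e_z x x (rotation about the z-axis)."""
    return [-x[1], x[0], 0.0]

def euler_step(x, h):
    # Rule 1: "x + h*f(x)" becomes exp_x(h*f(x)).
    return exp_map(x, [h * k for k in field(x)])

def heun_step(x, h):
    k1 = field(x)
    y = exp_map(x, [h * k for k in k1])      # predictor point
    # Rule 2: f(y) lives in T_y, so it is transported to T_x before summing.
    k2 = transport(y, x, field(y))
    return exp_map(x, [0.5 * h * (a + b) for a, b in zip(k1, k2)])

def integrate(step, x0, h, n):
    x = list(x0)
    for _ in range(n):
        x = step(x, h)
    return x

theta = math.pi / 3
x0 = [math.sin(theta), 0.0, math.cos(theta)]
h, n = 0.05, 20
t = h * n
exact = [math.sin(theta) * math.cos(t), math.sin(theta) * math.sin(t), math.cos(theta)]

x_euler = integrate(euler_step, x0, h, n)
x_heun = integrate(heun_step, x0, h, n)
err_euler = norm([a - b for a, b in zip(x_euler, exact)])
err_heun = norm([a - b for a, b in zip(x_heun, exact)])
print("Euler error:", err_euler)
print("Heun error: ", err_heun)
```

Both integrators keep the iterate on the manifold by construction; on this toy field the Heun variant tracks the exact rotation more closely than the Euler variant for the same stepsize.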

2.4. Application of the Euler, Heun, and Runge Method to Solving an HM System

2.4.1. Forward Euler Method

2.4.2. Explicit Second-Order Heun Method

2.4.3. Explicit Second-Order Runge Method

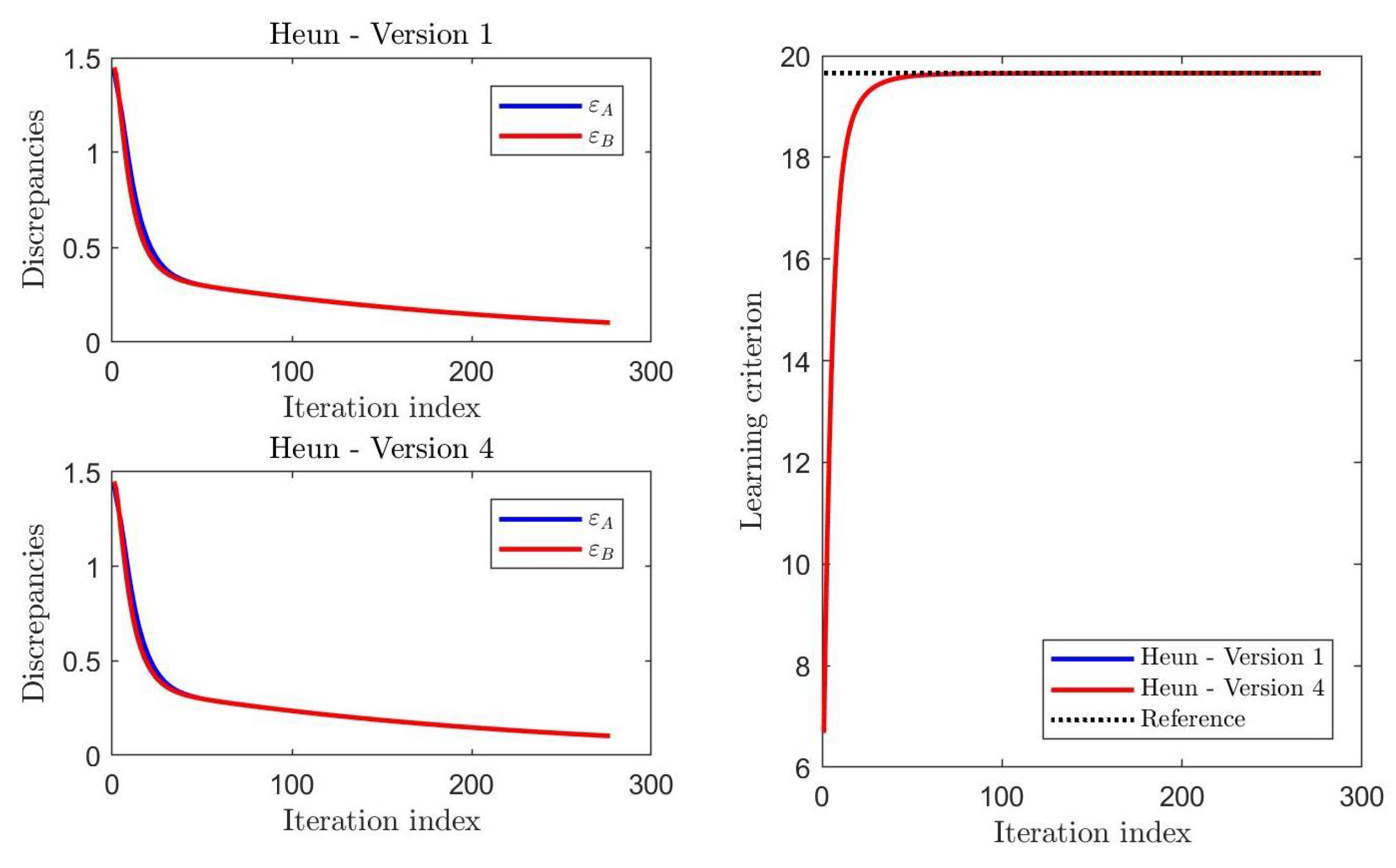

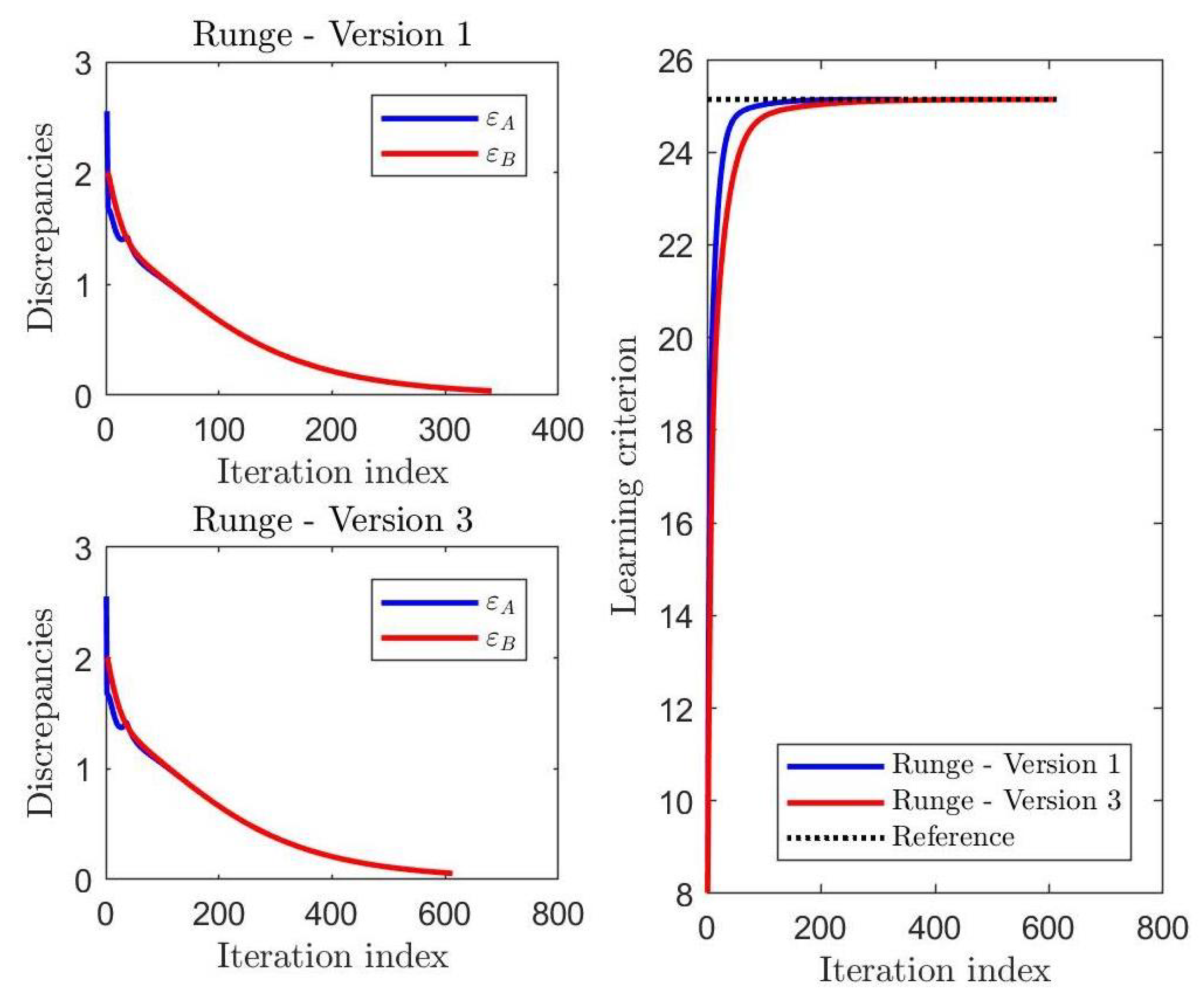

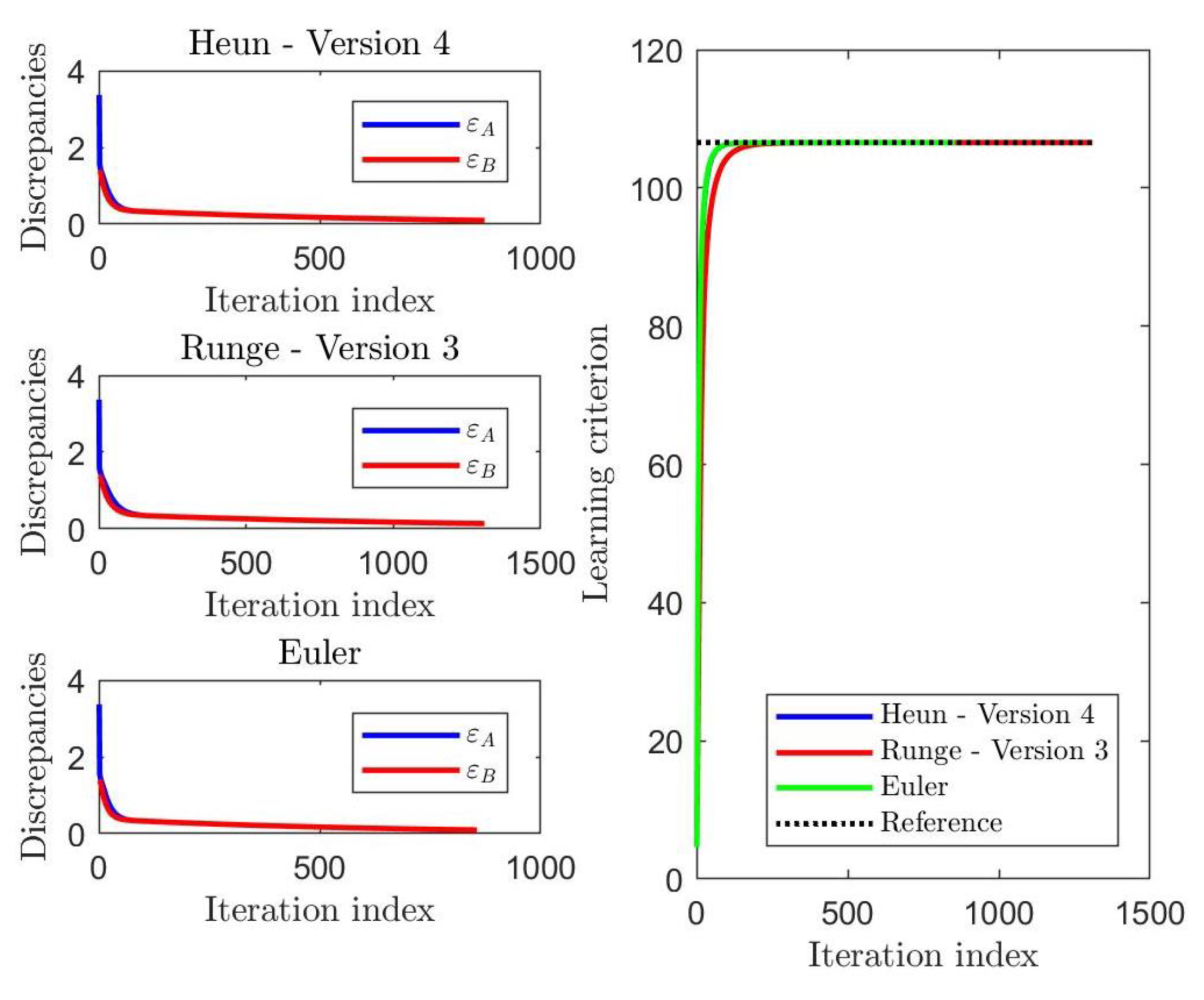

2.5. Preliminary Pyramidal Numerical Tests on the HM Learning Methods

3. Reduced Euler Method

3.1. Derivation of a Reduced Euler Method

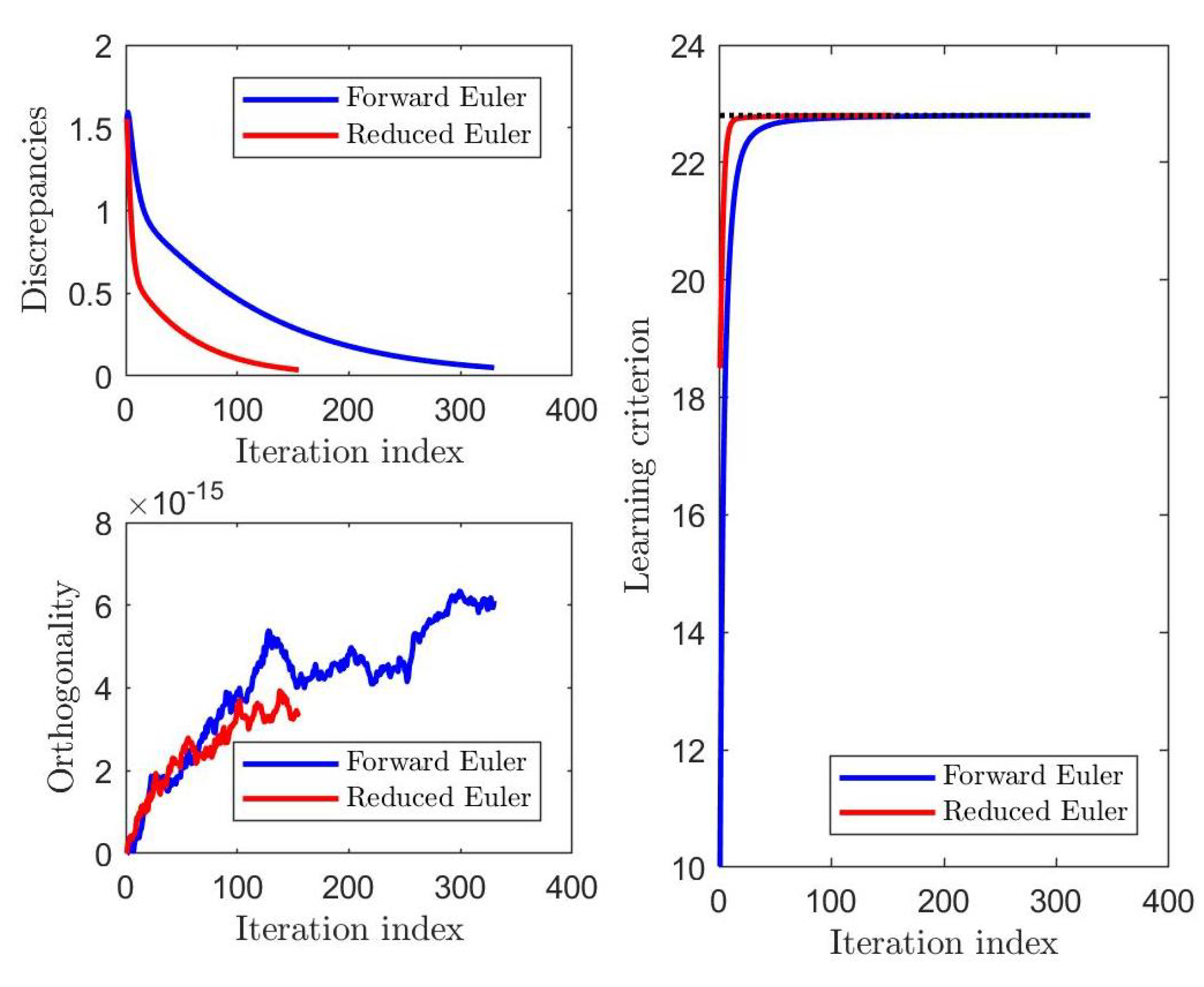

3.2. Numerical Comparison of the Euler Method and Its Reduced Version

4. Experiments on Optical Flow Estimation by a Helmke–Moore Neural Learning Algorithm

4.1. Optical Flow Estimation by Total Least Squares

Algorithm 1. Total least squares based algorithm to estimate a displacement vector.
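A minimal sketch of a total-least-squares displacement estimate follows. It assumes the standard brightness-constancy model $I_x u + I_y v + I_t = 0$ for every pixel of a window, stacks the rows $[I_x \; I_y \; I_t]$ into a matrix $A$, and takes the right singular vector of $A$ associated with the smallest singular value. It is not a transcription of Algorithm 1: the function name `tls_displacement`, the synthetic gradients, and the use of a shifted power iteration in place of an SVD routine are all assumptions chosen to keep the example dependency-free.

```python
import math

def tls_displacement(ix, iy, it, iters=500):
    """Estimate (u, v) from gradient samples under Ix*u + Iy*v + It = 0 by TLS."""
    rows = list(zip(ix, iy, it))
    # Gram matrix M = A^T A of the stacked rows A = [Ix Iy It].
    m = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    tr = m[0][0] + m[1][1] + m[2][2]
    # Power iteration on tr*I - M converges to the eigenvector of the smallest
    # eigenvalue of M, i.e., the right singular vector of A with the smallest
    # singular value (assumes a non-degenerate window, tr > 0).
    b = [[(tr if i == j else 0.0) - m[i][j] for j in range(3)] for i in range(3)]
    w = [1.0, 1.0, 1.0]
    for _ in range(iters):
        w = [sum(b[i][j] * w[j] for j in range(3)) for i in range(3)]
        n = math.sqrt(sum(c * c for c in w))
        w = [c / n for c in w]
    # The solution is homogeneous, w ~ (u, v, 1): rescale by the last component.
    return w[0] / w[2], w[1] / w[2]

# Synthetic 3 x 3 window (hypothetical gradients) generated from a known displacement.
u_true, v_true = 0.5, -0.25
ix = [1.0, 0.0, -1.0, 2.0, 0.5, -0.5, 1.5, -2.0, 0.0]
iy = [0.0, 1.0, 1.0, -1.0, 2.0, -1.5, 0.5, 0.0, -2.0]
it = [-(a * u_true + b * v_true) for a, b in zip(ix, iy)]

u_est, v_est = tls_displacement(ix, iy, it)
print("estimated displacement:", u_est, v_est)
```

On noise-free data the estimate reproduces the displacement used to generate the temporal gradients; with noisy gradients the same singular vector gives the total-least-squares fit.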

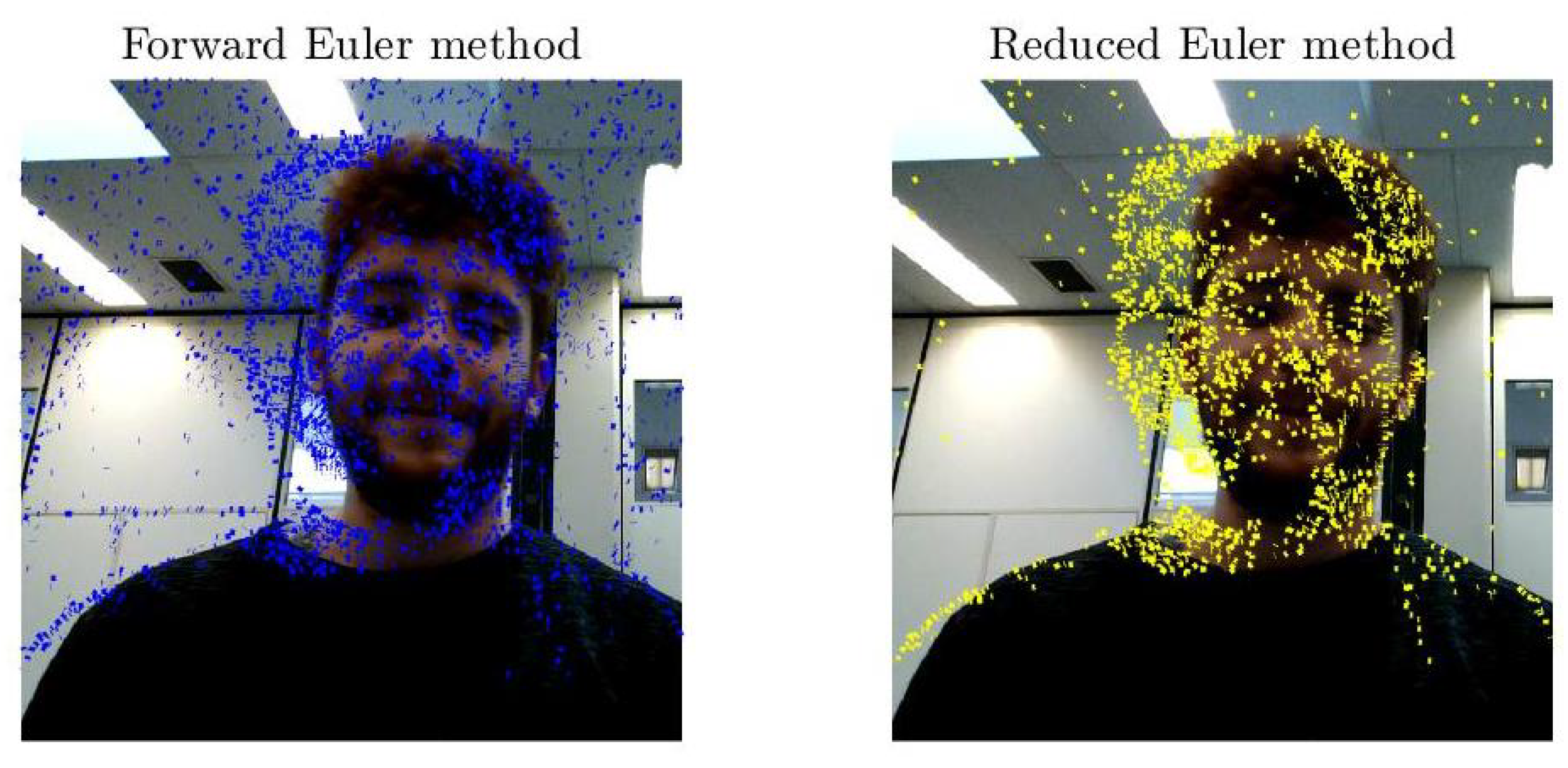

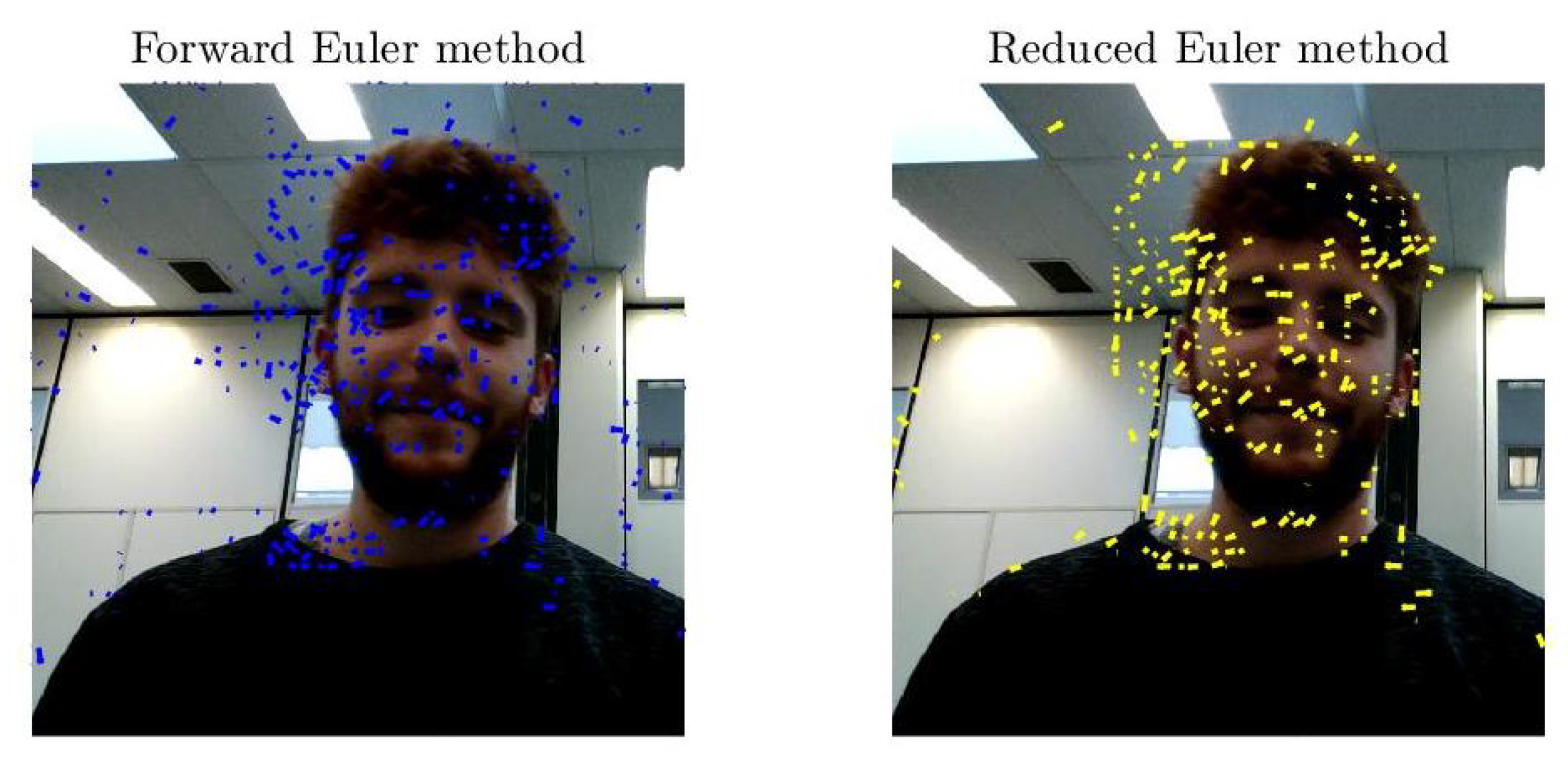

4.2. Numerical Experiments on Optical-Flow Learning

- The optical flow was estimated between Frame 130 and Frame 135.

- The original color still images drawn from the two considered frames were converted from RGB to grayscale using the luminance formula prescribed by Recommendation ITU-R BT.601-7 [55].

- The threshold to stop iteration was set to .

- The learning stepsizes were set as .

- Given the frame size of , each frame was subdivided into windows of size , , or pixels (corresponding to 57,600 matrices of size to compute the SVD, 20,736 matrices of size to compute the SVD, and to 2304 matrices of size to compute the SVD, respectively).

- Computing platform: Intel i7-8550U 4-Core CPU, 1.8 GHz clock, 16 GB RAM. Coding platform: MATLAB R2018b.
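The preprocessing steps in this list can be sketched as follows. The luminance weights 0.299, 0.587, and 0.114 are those of ITU-R BT.601; the function names `rgb_to_gray` and `split_into_windows`, the nested-list frame representation, and the toy frame are assumptions for illustration and do not reproduce the MATLAB code used in the experiments.

```python
def rgb_to_gray(frame):
    """Convert a frame (rows of (R, G, B) tuples) to luma with BT.601 weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in frame]

def split_into_windows(gray, size):
    """Yield non-overlapping size x size windows, row by row.

    Assumes both frame dimensions are multiples of size.
    """
    rows, cols = len(gray), len(gray[0])
    for i in range(0, rows - size + 1, size):
        for j in range(0, cols - size + 1, size):
            yield [row[j:j + size] for row in gray[i:i + size]]

# Toy 4 x 6 frame (hypothetical data): left half pure green, right half white.
frame = [[(0, 255, 0)] * 3 + [(255, 255, 255)] * 3 for _ in range(4)]
gray = rgb_to_gray(frame)
windows = list(split_into_windows(gray, 2))
print("number of windows:", len(windows))
```

Each window would then supply the gradient samples for one small SVD problem, which is why the number of windows equals the number of matrices to decompose.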

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Helmke, U.; Moore, J.B. Singular value decomposition via gradient and self-equivalent flows. Linear Algebra Appl. 1992, 169, 223–248.

2. Hori, G. A general framework for SVD flows and joint SVD flows. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013; Volume II, pp. 693–696.

3. Smith, S.T. Dynamic system that perform the SVD. Syst. Control Lett. 1991, 15, 319–327.

4. Alter, O.; Brown, P.O.; Botstein, D. Singular value decomposition for genome-wide expression data processing and modeling. Proc. Natl. Acad. Sci. USA 2000, 97, 10101–10106.

5. Ciesielski, A.; Forbriger, T. Singular value decomposition of tidal harmonics on a rigid Earth. Geophys. Res. Abstr. 2019, 21, EGU2019-16895.

6. Claudino, D.; Mayhall, N.J. Automatic partition of orbital spaces based on singular value decomposition in the context of embedding theories. J. Chem. Theory Comput. 2019, 15, 1053–1064.

7. Ernawan, F.; Ariatmanto, D. Image watermarking based on integer wavelet transform-singular value decomposition with variance pixels. Int. J. Electr. Comput. Eng. 2019, 9, 2185–2195.

8. Garcia-Pena, M.; Arciniegas-Alarcon, S.; Barbin, D. Climate data imputation using the singular value decomposition: An empirical comparison. Revista Brasileira de Meteorologia 2014, 29, 527–536.

9. Hao, Z.; Cuia, Z.; Yue, S.; Wang, H. Amplitude demodulation for electrical capacitance tomography based on singular value decomposition. Rev. Sci. Instrum. 2018, 89, 074705.

10. Jha, A.K.; Barrett, H.H.; Frey, E.C.; Clarkson, E.; Caucci, L.; Kupinski, M.A. Singular value decomposition for photon-processing nuclear imaging systems and applications for reconstruction and computing null functions. Phys. Med. Biol. 2015, 60, 7359–7385.

11. Liu, Y.; Sun, Y.; Li, B. A two-stage household electricity demand estimation approach based on edge deep sparse coding. Information 2019, 10, 224.

12. Montesinos-López, O.A.; Montesinos-López, A.; Crossa, J.; Kismiantini; Ramírez-Alcaraz, J.M.; Singh, R.; Mondal, S.; Juliana, P. A singular value decomposition Bayesian multiple-trait and multiple-environment genomic model. Heredity 2019, 122, 381–401.

13. Vezyris, C.; Papoutsis-Kiachagias, E.; Giannakoglou, K. On the incremental singular value decomposition method to support unsteady adjoint-based optimization. Numer. Methods Fluids 2019, 91, 315–331.

14. Moore, B.C. Principal component analysis in linear systems: Controllability, observability and model reduction. IEEE Trans. Autom. Control 1981, AC-26, 17–31.

15. Lu, W.-S.; Wang, H.-P.; Antoniou, A. Design of two-dimensional FIR digital filters by using the singular value decomposition. IEEE Trans. Circuits Syst. 1990, CAS-37, 35–46.

16. Salmeron, M.; Ortega, J.; Puntonet, C.G.; Prieto, A. Improved RAN sequential prediction using orthogonal techniques. Neurocomputing 2001, 41, 153–172.

17. Costa, S.; Fiori, S. Image compression using principal component neural networks. Image Vision Comput. J. 2001, 19, 649–668.

18. Nestares, O.; Navarro, R. Probabilistic estimation of optical-flow in multiple band-pass directional channels. Image Vision Comput. J. 2001, 19, 339–351.

19. Cichocki, A.; Unbehauen, R. Neural networks for computing eigenvalues and eigenvectors. Biol. Cybern. 1992, 68, 155–164.

20. Sanger, T.D. Two iterative algorithms for computing the singular value decomposition from input/output samples. In Advances in Neural Processing Systems; Cowan, J.D., Tesauro, G., Alspector, J., Eds.; Morgan Kaufmann Publishers: Burlington, MA, USA, 1994; Volume 6, pp. 1441–1451.

21. Weingessel, A. An Analysis of Learning Algorithms in PCA and SVD Neural Networks. Ph.D. Thesis, Technical University of Wien, Wien, Austria, 1999.

22. Afrashteh, N.; Inayat, S.; Mohsenvand, M.; Mohajerani, M.H. Optical-flow analysis toolbox for characterization of spatiotemporal dynamics in mesoscale optical imaging of brain activity. Neuroimage 2017, 153, 58–74.

23. Ayzel, G.; Heistermann, M.; Winterrath, T. Optical flow models as an open benchmark for radar-based precipitation nowcasting (rainymotion v0.1). Geosci. Model Dev. 2019, 12, 1387–1402.

24. Caramenti, M.; Lafortuna, C.L.; Mugellini, E.; Khaled, O.A.; Bresciani, J.-P.; Dubois, A. Matching optical-flow to motor speed in virtual reality while running on a treadmill. PLoS ONE 2018, 13, e0195781.

25. Rahmaniar, W.; Wang, W.-J.; Chen, H.-C. Real-time detection and recognition of multiple moving objects for aerial surveillance. Electronics 2019, 8, 1373.

26. Rosa, B.; Bordoux, V.; Nageotte, F. Combining differential kinematics and optical-flow for automatic labeling of continuum robots in minimally invasive surgery. Front. Robot. AI 2019, 6, 86.

27. Ruiz-Vargas, A.; Morris, S.A.; Hartley, R.H.; Arkwright, J.W. Optical flow sensor for continuous invasive measurement of blood flow velocity. J. Biophotonics 2019, 12, 201900139.

28. Vargas, J.; Quiroga, J.A.; Sorzano, C.O.S.; Estrada, J.C.; Carazo, J.M. Two-step interferometry by a regularized optical-flow algorithm. Opt. Lett. 2011, 36, 3485–3487.

29. Serres, J.R.; Evans, T.J.; Åkesson, S.; Duriez, O.; Shamoun-Baranes, J.; Ruffier, F.; Hedenström, A. Optic flow cues help explain altitude control over sea in freely flying gulls. J. R. Soc. Interface 2019, 16, 20190486.

30. Lazcano, V. An empirical study of exhaustive matching for improving motion field estimation. Information 2018, 9, 320.

31. Liu, L.-K.; Feig, E. A block-based gradient descent search algorithm for block motion estimation in video coding. IEEE Trans. Circuits Syst. Video Technol. 1996, 6, 419–422.

32. Hsieh, Y.; Jeng, Y. Development of home intelligent fall detection IoT system based on feedback optical-flow convolutional neural network. IEEE Access 2018, 6, 6048–6057.

33. Núñez-Marcos, A.; Azkune, G.; Arganda-Carreras, I. Vision-based fall detection with convolutional neural networks. Wirel. Commun. Mob. Comput. 2017, 2017, 9474806.

34. Toda, T.; Masuyama, G.; Umeda, K. Detecting moving objects using optical-flow with a moving stereo camera. In Proceedings of the 13th International Conference on Mobile and Ubiquitous Systems: Computing Networking and Services (MOBIQUITOUS 2016), Hiroshima, Japan, 28 November–1 December 2016; pp. 35–40.

35. Hou, Y.; Wang, J. Research on intelligent flight software robot based on internet of things. Glob. J. Technol. Optim. 2017, 8, 221.

36. Shi, X.; Wang, M.; Wang, G.; Huang, B.; Cai, H.; Xie, J.; Qian, C. TagAttention: Mobile object tracing without object appearance information by vision-RFID fusion. In Proceedings of the 27th IEEE International Conference on Network Protocols, Chicago, IL, USA, 7–10 October 2019; pp. 1–11.

37. Zulkifley, M.A.; Samanu, N.S.; Zulkepeli, N.A.A.N.; Kadim, Z.; Woon, H.H. Kalman filter-based aggressive behaviour detection for indoor environment. In Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2016; Volume 376, pp. 829–837.

38. Hussain, T.; Muhammad, K.; Khan, S.; Ullah, A.; Lee, M.Y.; Baik, S.W. Intelligent baby behavior monitoring using embedded vision in IoT for smart healthcare centers. J. Artif. Intell. Syst. 2019, 1, 110–124.

39. IBM Research Editorial Staff. Building Cognitive IoT Solutions Using Data Assimilation. Available online: https://www.ibm.com/blogs/research/2016/12/building-cognitive-iot-solutions-using-data-assimilation/ (accessed on 9 December 2016).

40. Saquib, N. Placelet: Mobile Foot Traffic Analytics System Using Custom Optical Flow. Available online: https://medium.com/mit-media-lab/placelet-mobile-foot-traffic-analytics-system-using-custom-optical-flow-19bbebfc7cf8 (accessed on 3 March 2016).

41. Patrick, M. Why Machine Vision Matters for the Internet of Things. Available online: http://iot-design-zone.com/article/knowhow/2525/why-machine-vision-matters-for-the-internet-of-things (accessed on 12 December 2019).

42. Deshpande, S.G.; Hwang, J.-N. Fast motion estimation based on total least squares for video encoding. Proc. Int. Symp. Circuits Syst. 1998, 4, 114–117.

43. Liu, K.; Yang, H.; Ma, B.; Du, Q. A joint optical-flow and principal component analysis approach for motion detection. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; pp. 1178–1181.

44. Fiori, S. Unsupervised neural learning on Lie groups. Int. J. Neural Syst. 2002, 12, 219–246.

45. Fiori, S. Singular value decomposition learning on double Stiefel manifold. Int. J. Neural Syst. 2003, 13, 155–170.

46. Celledoni, E.; Fiori, S. Neural learning by geometric integration of reduced ‘rigid-body’ equations. J. Comput. Appl. Math. 2004, 172, 247–269.

47. Fiori, S. A theory for learning by weight flow on Stiefel-Grassman manifold. Neural Comput. 2001, 13, 1625–1647.

48. Fiori, S. A theory for learning based on rigid bodies dynamics. IEEE Trans. Neural Netw. 2002, 13, 521–531.

49. Fiori, S. Fixed-point neural independent component analysis algorithms on the orthogonal group. Future Gener. Comput. Syst. 2006, 22, 430–440.

50. Lambert, J.D. Numerical Methods for Ordinary Differential Systems: The Initial Value Problem, 1st ed.; Wiley: Hoboken, NJ, USA, 1991; ISBN 978-0471929901.

51. Celledoni, E.; Marthinsen, H.; Owren, B. An introduction to Lie group integrators – basics, new developments and applications. J. Comput. Phys. Part B 2014, 257, 1040–1061.

52. Golub, G.H.; van Loan, C.F. Matrix Computations, 3rd ed.; The Johns Hopkins University Press: Baltimore, MD, USA, 1996.

53. Kong, S.; Sun, L.; Han, C.; Guo, J. An image compression scheme in wireless multimedia sensor networks based on NMF. Information 2017, 8, 26.

54. Wang, D. Adjustable robust singular value decomposition: Design, analysis and application to finance. Data 2017, 2, 29.

55. RECOMMENDATION ITU-R BT.601-7. Studio Encoding Parameters of Digital Television for Standard 4:3 and Wide-Screen 16:9 Aspect Ratios. Version 2017. Available online: https://www.itu.int/dms_pubrec/itu-r/rec/bt/R-REC-BT.601-7-201103-I!!PDF-E.pdf (accessed on 10 February 2020).

| Method / Size of A | | | |
|---|---|---|---|
| Heun, Version 1 | 0.3836 | 0.3468 | 34.5559 |
| Heun, Version 4 | 0.2264 | 0.1148 | 9.1531 |

| Method / Size of A | | | |
|---|---|---|---|
| Runge, Version 1 | 0.4536 | 0.8816 | 65.1238 |
| Runge, Version 3 | 0.3645 | 0.5274 | 21.7729 |

| Method / Size of A | | | |
|---|---|---|---|
| Heun, Version 4 | 0.2033 | 0.3219 | 20.0415 |
| Runge, Version 3 | 0.2060 | 0.3601 | 26.3659 |
| Forward Euler | 0.0740 | 0.1096 | 8.5624 |

| Method / Size of A | | | |
|---|---|---|---|
| Forward Euler | 0.2003 | 0.1190 | 7.7378 |
| Reduced Euler | 0.0833 | 0.0441 | 0.4350 |

| Window Size | Forward Euler Method | Reduced Euler Method | Time Saving (%) |
|---|---|---|---|
| | 1833 | 907 | 50.52 |
| | 1177 | 606 | 48.51 |
| | 15,243 | 241 | 98.42 |
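The time-saving column is consistent with the relative reduction of the reduced Euler timing with respect to the forward Euler timing. The short check below recomputes it from the two timing columns; the function name `time_saving_percent` is a hypothetical helper, and the formula is inferred from the tabulated numbers rather than quoted from the text.

```python
def time_saving_percent(t_euler, t_reduced):
    """Relative saving of the reduced method with respect to forward Euler, in percent."""
    return 100.0 * (t_euler - t_reduced) / t_euler

# (forward Euler, reduced Euler) timing pairs taken from the table rows.
timings = [(1833, 907), (1177, 606), (15243, 241)]
savings = [round(time_saving_percent(a, b), 2) for a, b in timings]
print(savings)  # → [50.52, 48.51, 98.42]
```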

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fiori, S.; Del Rossi, L.; Gigli, M.; Saccuti, A. First Order and Second Order Learning Algorithms on the Special Orthogonal Group to Compute the SVD of Data Matrices. Electronics 2020, 9, 334. https://doi.org/10.3390/electronics9020334
