Rate-Invariant Modeling in Lie Algebra for Activity Recognition

Abstract

1. Introduction

- Mapping into the tangent space at the identity element (the Lie algebra) introduces negligible distortion in the proposed approach: because each transformation relates the same body segment across frames, the resulting points on the special Euclidean group lie close to the identity element.

- We model skeleton sequences spatio-temporally as trajectories on the special Euclidean group SE(3).

- The rigid transformations of the object are modeled as an additional trajectory on the same manifold, whereas [7] proposed only a joint-based approach.

- An elastic metric on the trajectories is proposed to model the temporal evolution of an activity independently of its execution rate.

- Extensive experiments and comparative studies are presented on three benchmarks: one of actions without objects (MSR Action 3D dataset), one of actions involving object interaction (SYSU 3D Human-Object Interaction dataset), and one mixing actions and human–object interactions (MSR Daily Activity 3D dataset).
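The first two contributions rely on the exponential and logarithm maps between SE(3) and its Lie algebra se(3). The following is a minimal Python/NumPy sketch of those closed-form maps, not the authors' implementation. It assumes that each body segment already has a per-frame 4×4 pose (how poses are built from the skeleton joints is not specified in this section) and that the transformation "across frames" is the motion between consecutive frames, T_{k+1} T_k^{-1}. The helper names `hat`, `se3_exp`, `se3_log` and `segment_trajectory` are hypothetical.

```python
import numpy as np


def hat(w):
    """3-vector -> 3x3 skew-symmetric matrix [w]_x."""
    return np.array([[0.0, -w[2], w[1]],
                     [w[2], 0.0, -w[0]],
                     [-w[1], w[0], 0.0]])


def se3_exp(xi):
    """Exponential map se(3) -> SE(3); xi = (omega, u) gives a 4x4 rigid transform."""
    omega, u = np.asarray(xi[:3], float), np.asarray(xi[3:], float)
    theta = np.linalg.norm(omega)
    W = hat(omega)
    if theta < 1e-8:  # first-order series at the identity
        R, V = np.eye(3) + W, np.eye(3) + 0.5 * W
    else:
        A = np.sin(theta) / theta
        B = (1.0 - np.cos(theta)) / theta**2
        C = (theta - np.sin(theta)) / theta**3
        R = np.eye(3) + A * W + B * (W @ W)
        V = np.eye(3) + B * W + C * (W @ W)
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, V @ u
    return T


def se3_log(T):
    """Log map SE(3) -> se(3) as a 6-vector (omega, u); valid for rotation angles below pi."""
    R, t = T[:3, :3], T[:3, 3]
    S = R - R.T                                            # 2 sin(theta) [axis]_x
    s = 0.5 * np.linalg.norm([S[2, 1], S[0, 2], S[1, 0]])  # sin(theta)
    c = 0.5 * (np.trace(R) - 1.0)                          # cos(theta)
    theta = np.arctan2(s, c)
    if theta < 1e-4:  # near the identity: series expansion of V^{-1}
        W = 0.5 * S
        V_inv = np.eye(3) - 0.5 * W + (W @ W) / 12.0
    else:
        W = theta / (2.0 * s) * S
        # 2 (1 - cos theta) written as 4 sin^2(theta / 2) for numerical accuracy
        k = (1.0 - theta * np.sin(theta) / (4.0 * np.sin(0.5 * theta) ** 2)) / theta**2
        V_inv = np.eye(3) - 0.5 * W + k * (W @ W)
    omega = np.array([W[2, 1], W[0, 2], W[1, 0]])
    return np.concatenate([omega, V_inv @ t])


def segment_trajectory(poses):
    """Lie-algebra trajectory of one body segment.

    poses: (F, 4, 4) array of per-frame segment poses (hypothetical input).
    Assumption: the transformation 'across frames' is the consecutive-frame
    motion T_{k+1} T_k^{-1}. Returns an (F - 1, 6) array.
    """
    return np.stack([se3_log(poses[k + 1] @ np.linalg.inv(poses[k]))
                     for k in range(len(poses) - 1)])


# Usage: a segment repeating the same small screw motion at every frame.
step = np.array([0.02, -0.01, 0.03, 0.005, 0.0, -0.01])
poses = np.stack([se3_exp(k * step) for k in range(5)])
traj = segment_trajectory(poses)              # each row is close to `step`
print(np.linalg.norm(traj[:, :3], axis=1))    # per-frame rotation angles in radians
```

In the usage example every logged row recovers the same motion, with a rotation angle of about 0.04 rad. Rotation angles this far below π keep the points near the identity, where the log map is nearly distance-preserving; this is the intuition behind the first contribution.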
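The elastic metric listed among the contributions is not specified in this section. One widely used elastic metric for curves is the square-root velocity function (SRVF) framework of Joshi et al. [35]. Each trajectory β is mapped to q = β'/√‖β'‖, and two trajectories are compared through the minimum over warpings γ of ‖q₁ − (q₂∘γ)√γ'‖. The underlying L2 distance is unchanged when both curves are reparametrized by the same warping, which is what makes the comparison independent of execution rate. The sketch below is a generic SRVF example, not the paper's exact metric. It assumes both trajectories are sampled at the same N uniformly spaced instants on [0, 1] (for instance, stacked se(3) vectors) and approximates the minimization by dynamic programming over piecewise-linear warpings. The step set `STEPS`, the trapezoidal quadrature and all function names are illustrative assumptions.

```python
import numpy as np

# Hypothetical DP step set: allowed slopes of the piecewise-linear warping (1/3 to 3).
STEPS = [(1, 1), (1, 2), (2, 1), (1, 3), (3, 1), (2, 3), (3, 2)]


def srvf(beta):
    """SRVF q = beta' / sqrt(|beta'|) of a curve sampled as an (N, d) array on [0, 1]."""
    t = np.linspace(0.0, 1.0, beta.shape[0])
    dbeta = np.gradient(beta, t, axis=0)
    speed = np.linalg.norm(dbeta, axis=1)
    return dbeta / np.sqrt(np.maximum(speed, 1e-12))[:, None]


def _trapezoid(values, x):
    """Trapezoidal rule, written out to avoid NumPy version differences."""
    return float(np.sum(0.5 * (values[1:] + values[:-1]) * np.diff(x)))


def srvf_l2_distance(beta1, beta2):
    """Rate-sensitive baseline: L2 distance between SRVFs without any warping."""
    t = np.linspace(0.0, 1.0, beta1.shape[0])
    diff = srvf(beta1) - srvf(beta2)
    return np.sqrt(_trapezoid(np.sum(diff**2, axis=1), t))


def _segment_cost(q1, q2, t, k, l, i, j):
    """Cost of mapping [t_k, t_i] linearly onto [t_l, t_j]."""
    m = (t[j] - t[l]) / (t[i] - t[k])          # slope gamma' on this piece
    ts = t[k:i + 1]
    warped = t[l] + m * (ts - t[k])
    q2w = np.column_stack([np.interp(warped, t, q2[:, d]) for d in range(q2.shape[1])])
    diff = q1[k:i + 1] - np.sqrt(m) * q2w
    return _trapezoid(np.sum(diff**2, axis=1), ts)


def elastic_distance(beta1, beta2):
    """Approximate min over gamma of ||q1 - (q2 o gamma) sqrt(gamma')|| by dynamic programming."""
    q1, q2 = srvf(beta1), srvf(beta2)
    n = q1.shape[0]
    t = np.linspace(0.0, 1.0, n)
    E = np.full((n, n), np.inf)
    E[0, 0] = 0.0
    for i in range(1, n):
        for j in range(1, n):
            for di, dj in STEPS:
                k, l = i - di, j - dj
                if k < 0 or l < 0 or not np.isfinite(E[k, l]):
                    continue
                E[i, j] = min(E[i, j], E[k, l] + _segment_cost(q1, q2, t, k, l, i, j))
    return float(np.sqrt(E[n - 1, n - 1]))


# Usage: the same straight path traversed at two different rates.
t = np.linspace(0.0, 1.0, 41)
beta1 = np.column_stack([t, np.zeros_like(t)])      # constant speed
beta2 = np.column_stack([t**2, np.zeros_like(t)])   # accelerating
print(srvf_l2_distance(beta1, beta2), elastic_distance(beta1, beta2))
```

Because the all-diagonal path reproduces the unwarped comparison, the elastic distance never exceeds the plain SRVF distance, and for the rate-changed copy in the example it is markedly smaller. Limiting the steps keeps each warping increasing with slope between 1/3 and 3; richer step sets trade run time for accuracy.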

2. State-of-the-Art

2.1. Depth-Based Representation

2.2. Skeleton-Based Representation

2.3. RGB-D-Based Development

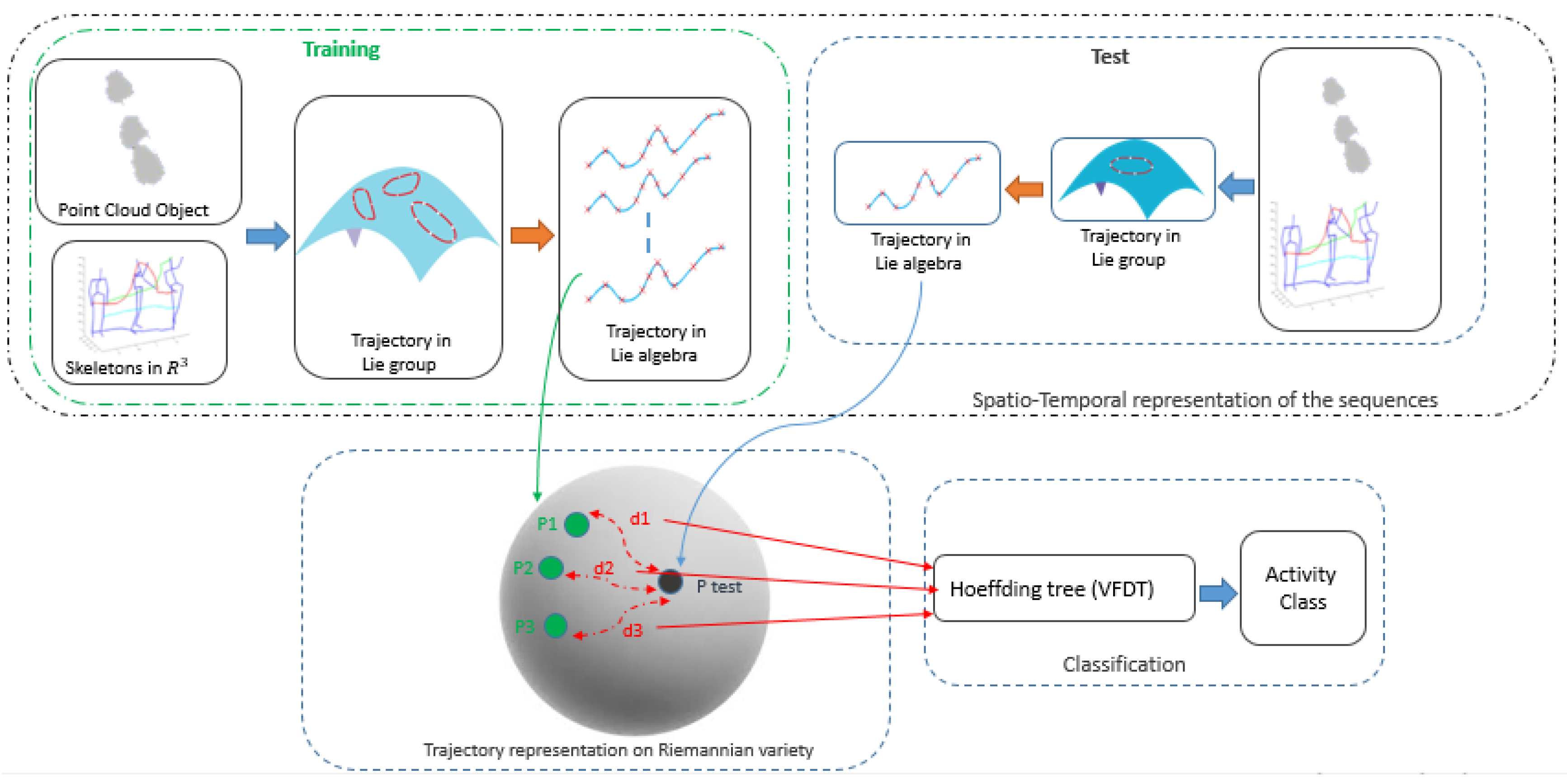

3. Spatio-Temporal Modeling

3.1. Proposed Approach

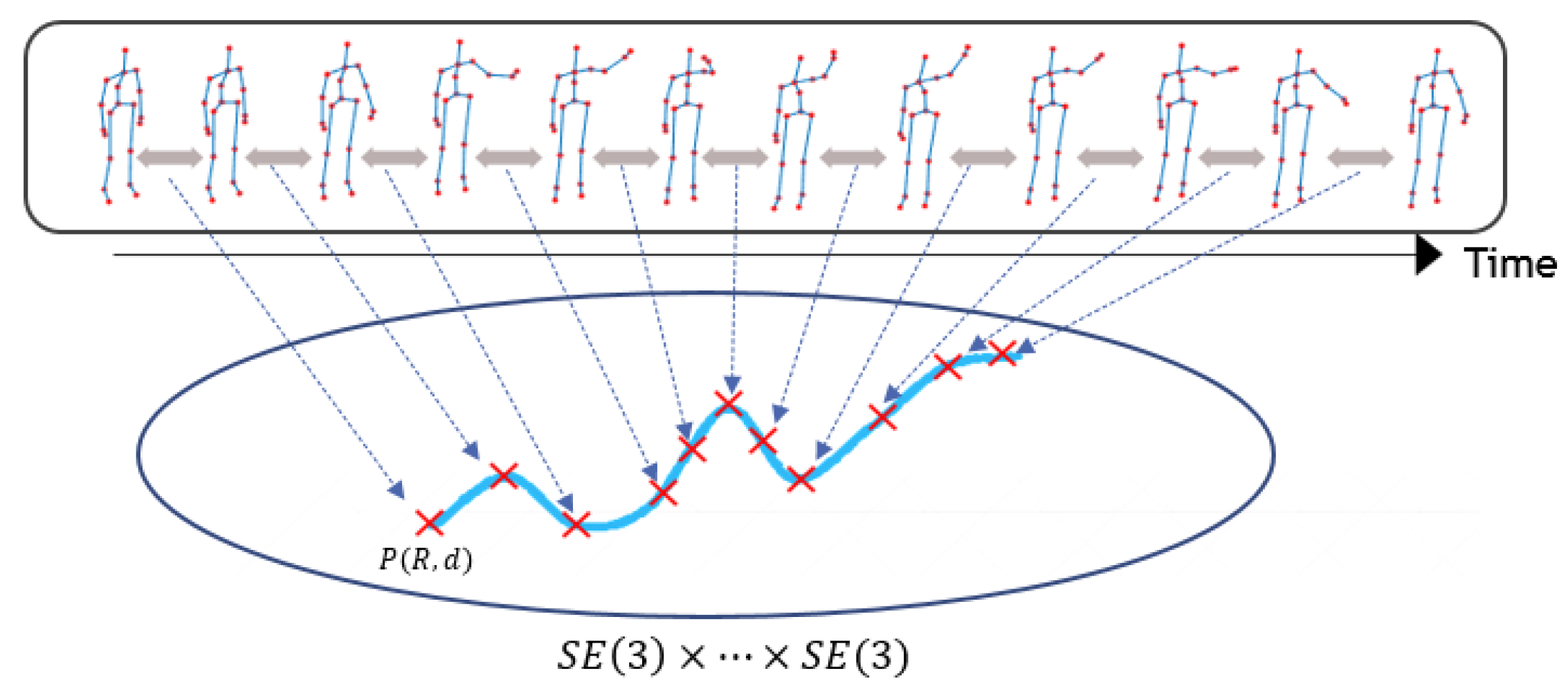

3.2. Skeleton Motion Modeling

3.3. Object Modeling

3.3.1. Object Detection

3.3.2. Object Trajectory

4. Rate Invariance Modeling and Classification

4.1. Elastic Metric for Trajectories

4.2. Feature Vector Building and Classification

Algorithm 1: Action sequences’ classification.

5. Experimentation and Results

5.1. MSR Action 3D

5.1.1. Data Description and Protocol

5.1.2. Experimental Result and Comparison

5.2. MSR Daily Activity 3D

5.2.1. Data Description and Protocol

5.2.2. Experimental Result and Comparison

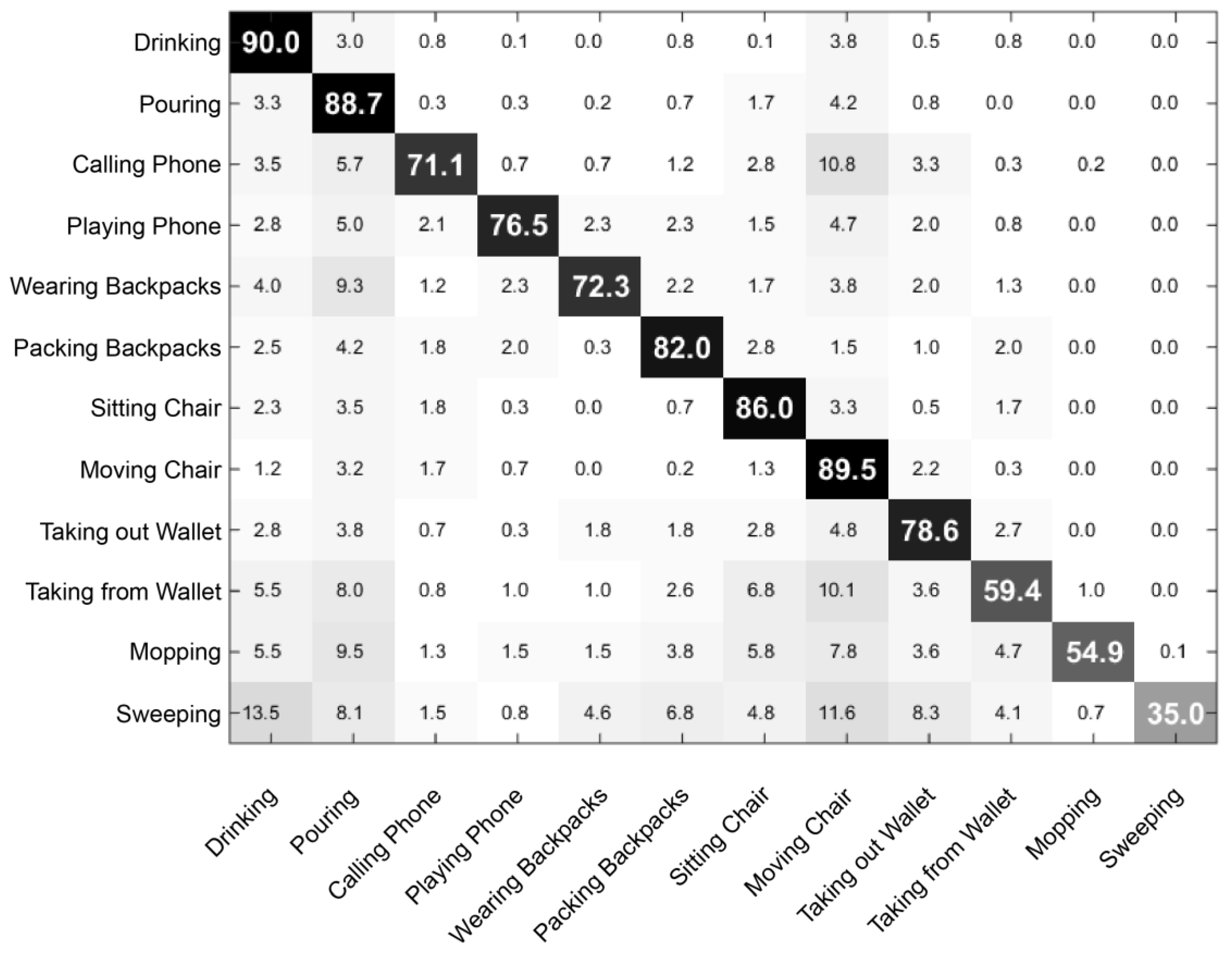

5.3. SYSU 3D Human-Object Interaction Set

5.3.1. Data Description and Protocol

5.3.2. Experimental Result and Comparison

6. Conclusions and Future Direction

Author Contributions

Funding

Conflicts of Interest

References

[1] Shotton, J.; Sharp, T.; Kipman, A.; Fitzgibbon, A.; Finocchio, M.; Blake, A.; Cook, M.; Moore, R. Real-time human pose recognition in parts from single depth images. Commun. ACM 2013, 56, 116–124.

[2] Vemulapalli, R.; Arrate, F.; Chellappa, R. Human Action Recognition by Representing 3D Skeletons as Points in a Lie Group. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014.

[3] Oreifej, O.; Liu, Z. HON4D: Histogram of oriented 4D normals for activity recognition from depth sequences. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 716–723.

[4] Ofli, F.; Chaudhry, R.; Kurillo, G.; Vidal, R.; Bajcsy, R. Sequence of the most informative joints (SMIJ): A new representation for human skeletal action recognition. J. Vis. Commun. Image Represent. 2014, 25, 24–38.

[5] Ohn-Bar, E.; Trivedi, M.M. Joint Angles Similarities and HOG for Action Recognition. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013.

[6] Vemulapalli, R.; Chellappa, R. Rolling Rotations for Recognizing Human Actions From 3D Skeletal Data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4471–4479.

[7] Boujebli, M.; Drira, H.; Mestiri, M.; Farah, I.R. Rate invariant action recognition in Lie algebra. In Proceedings of the 2017 International Conference on Advanced Technologies for Signal and Image Processing (ATSIP), Fez, Morocco, 22–24 May 2017.

[8] Li, W.; Zhang, Z.; Liu, Z. Action recognition based on a bag of 3D points. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition Workshops, San Francisco, CA, USA, 13–18 June 2010; pp. 9–14.

[9] Zhu, Y.; Chen, W.; Guo, G. Evaluating spatiotemporal interest point features for depth-based action recognition. Image Vis. Comput. 2014, 32, 453–464.

[10] Yang, X.; Tian, Y. Super normal vector for activity recognition using depth sequences. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 804–811.

[11] Lu, C.; Jia, J.; Tang, C.-K. Range-sample depth feature for action recognition. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 772–779.

[12] Luo, J.; Wang, W.; Qi, H. Group sparsity and geometry constrained dictionary learning for action recognition from depth maps. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013.

[13] Du, Y.; Wang, W.; Wang, L. Hierarchical recurrent neural network for skeleton based action recognition. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1110–1118.

[14] Hussein, M.E.; Torki, M.; Gowayyed, M.A.; El-Saban, M. Human action recognition using a temporal hierarchy of covariance descriptors on 3D joint locations. In Proceedings of the International Joint Conference on Artificial Intelligence, Beijing, China, 3–9 August 2013; pp. 2466–2472.

[15] Lv, F.; Nevatia, R. Recognition and segmentation of 3-D human action using HMM and multi-class AdaBoost. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 359–372.

[16] Yang, X.; Tian, Y. EigenJoints-based action recognition using naive-Bayes-nearest-neighbor. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 14–19.

[17] Lillo, I.; Soto, A.; Niebles, J.C. Discriminative hierarchical modeling of spatio-temporally composable human activities. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 812–819.

[18] Zanfir, M.; Leordeanu, M.; Sminchisescu, C. The moving pose: An efficient 3D kinematics descriptor for low-latency action recognition and detection. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2752–2759.

[19] Zhu, Y.; Chen, W.; Guo, G. Fusing spatio-temporal features and joints for 3D action recognition. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013; pp. 486–491.

[20] Devanne, M.; Wannous, H.; Berretti, S.; Pala, P.; Daoudi, M.; Del Bimbo, A. 3D Human Action Recognition by Shape Analysis of Motion Trajectories on Riemannian Manifold. IEEE Trans. Cybern. 2015, 45, 1340–1352.

[21] Meng, M.; Drira, H.; Boonaert, J. Distances evolution analysis for online and off-line human–object interaction recognition. Image Vis. Comput. 2018, 70, 32–45.

[22] Meng, M.; Drira, H.; Daoudi, M.; Boonaert, J. Human-object interaction recognition by learning the distances between the object and the skeleton joints. In Proceedings of the 2015 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015.

[23] Wang, J.; Liu, Z.; Wu, Y.; Yuan, J. Mining Actionlet Ensemble for Action Recognition with Depth Cameras. In Proceedings of the IEEE International Conference, Providence, RI, USA, 16–21 June 2012.

[24] Chaudhry, R.; Ofli, F.; Kurillo, G.; Bajcsy, R.; Vidal, R. Bio-inspired Dynamic 3D Discriminative Skeletal Features for Human Action Recognition. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Portland, OR, USA, 23–28 June 2013.

[25] Guo, S.; Pan, H.; Tan, G.; Chen, L.; Gao, C. A High Invariance Motion Representation for Skeleton-Based Action Recognition. Int. J. Pattern Recognit. Artif. Intell. 2016, 30, 1650018.

[26] Liu, L.; Shao, L. Learning discriminative representations from RGB-D video data. In Proceedings of the International Joint Conference on Artificial Intelligence, Beijing, China, 3–9 August 2013; pp. 1493–1500.

[27] Shahroudy, A.; Wang, G.; Ng, T.-T. Multi-modal feature fusion for action recognition in RGB-D sequences. In Proceedings of the International Symposium on Control, Communications, and Signal Processing, Athens, Greece, 21–23 May 2014; pp. 1–4.

[28] Yu, M.; Liu, L.; Shao, L. Structure-preserving binary representations for RGB-D action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 1651–1664.

[29] Chaaraoui, A.A.; Padilla-Lopez, J.R.; Florez-Revuelta, F. Fusion of skeletal and silhouette-based features for human action recognition with RGB-D devices. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Sydney, Australia, 1–8 December 2013; pp. 91–97.

[30] Koppula, H.S.; Gupta, R.; Saxena, A. Learning human activities and object affordances from RGB-D videos. Int. J. Robot. Res. 2013, 32, 951–970.

[31] Lei, J.; Ren, X.; Fox, D. Fine-grained kitchen activity recognition using RGB-D. In Proceedings of the ACM Conference on Ubiquitous Computing, Pittsburgh, PA, USA, 5–8 September 2012; pp. 208–211.

[32] Wen, Z.; Yin, W. A feasible method for optimization with orthogonality constraints. Math. Program. 2013, 142, 397–434.

[33] Zhao, Y.; Liu, Z.; Yang, L.; Cheng, H. Combing RGB and depth map features for human activity recognition. In Proceedings of the IEEE Asia-Pacific Signal & Information Processing Association Annual Summit and Conference (APSIPA ASC), Hollywood, CA, USA, 3–6 December 2012; pp. 1–4.

[34] Murray, R.M.; Li, Z.; Sastry, S.S. A Mathematical Introduction to Robotic Manipulation; CRC Press: Boca Raton, FL, USA, 1994.

[35] Joshi, S.H.; Klassen, E.; Srivastava, A.; Jermyn, I. A novel representation for Riemannian analysis of elastic curves in Rⁿ. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

[36] Xu, W.; Qin, Z. Constructing Decision Trees for Mining High-speed Data Streams. Chin. J. Electron. 2012, 21, 215–220.

[37] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

[38] Drira, H.; Ben Amor, B.; Srivastava, A.; Daoudi, M.; Slama, R. 3D Face Recognition under Expressions, Occlusions, and Pose Variations. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 2270–2283.

[39] Xia, B.; Ben Amor, B.; Drira, H.; Daoudi, M.; Ballihi, L. Combining face averageness and symmetry for 3D-based gender classification. Pattern Recognit. 2015, 48, 746–758.

[40] Xia, B.; Amor, B.B.; Drira, H.; Daoudi, M.; Ballihi, L. Gender and 3D facial symmetry: What’s the relationship? In Proceedings of the 2013 10th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Shanghai, China, 22–26 April 2013.

[41] Amor, B.B.; Drira, H.; Ballihi, L.; Srivastava, A.; Daoudi, M. An experimental illustration of 3D facial shape analysis under facial expressions. Ann. Telecommun. 2009, 64, 369–379.

[42] Mokni, R.; Drira, H.; Kherallah, M. Combining shape analysis and texture pattern for palmprint identification. Multimed. Tools Appl. 2017, 76, 23981–24008.

[43] Xia, B.; Amor, B.B.; Daoudi, M.; Drira, H. Can 3D Shape of the Face Reveal your Age? In Proceedings of the 2014 International Conference on Computer Vision Theory and Applications (VISAPP), Lisbon, Portugal, 5–8 January 2014; pp. 5–13.

[44] Hu, J.-F.; Zheng, W.-S.; Lai, J.; Zhang, J. Jointly learning heterogeneous features for RGB-D activity recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2186–2200.

[45] Shotton, J.; Fitzgibbon, A.; Cook, M.; Sharp, T.; Finocchio, M.; Moore, R.; Kipman, A.; Blake, A. Real-time human pose recognition in parts from single depth images. In Proceedings of the CVPR, Providence, RI, USA, 20–25 June 2011.

[46] Yacoob, Y.; Black, M.J. Parameterized Modeling and Recognition of Activities. In Proceedings of the Sixth International Conference on Computer Vision, Bombay, India, 4–7 January 1998.

[47] Xia, L.; Chen, C.C.; Aggarwal, J.K. View Invariant Human Action Recognition Using Histograms of 3D Joints. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012.

[48] Wang, C.; Wang, Y.; Yuille, A.L. An Approach to Pose-based Action Recognition. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

[49] Muller, M.; Roder, T. Motion templates for automatic classification and retrieval of motion capture data. In Proceedings of the ACM SIGGRAPH/Eurographics Symposium on Computer Animation, Vienna, Austria, 2–4 September 2006; pp. 137–146.

[50] Wang, J.; Liu, Z.; Wu, Y.; Yuan, J. Learning actionlet ensemble for 3D human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 914–927.

[51] Kong, Y.; Fu, Y. Bilinear heterogeneous information machine for RGB-D action recognition. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1054–1062.

[52] Xia, L.; Aggarwal, J. Spatio-temporal depth cuboid similarity feature for activity recognition using depth camera. In Proceedings of the IEEE International Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 2834–2841.

[53] Cao, L.; Luo, J.; Liang, F.; Huang, T.S. Heterogeneous feature machines for visual recognition. In Proceedings of the IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 1095–1102.

[54] Cai, Z.; Wang, L.; Qiao, X.P.Y. Multi-view super vector for action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014; pp. 596–603.

[55] Zhang, Y.; Yeung, D.-Y. Multi-task learning in heterogeneous feature spaces. In Proceedings of the Conference on Artificial Intelligence, San Francisco, CA, USA, 7–11 August 2011.

[56] Hu, J.-F.; Zheng, W.-S.; Ma, L.; Wang, G.; Lai, J. Real-time RGB-D activity prediction by soft regression. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 280–296.

[57] Liu, J.; Shahroudy, A.; Xu, D.; Wang, G. Spatio-Temporal LSTM with Trust Gates for 3D Human Action Recognition; Springer: Cham, Switzerland, 2016; pp. 816–833.

| Dataset | JP [14] | RJP [16] | JA [5] | BPL [46] | Proposed |
|---|---|---|---|---|---|
|  | 91.65 | 92.15 | 85.80 | 83.87 | 94.66 |
|  | 75.36 | 79.24 | 65.47 | 75.23 | 85.08 |
|  | 94.64 | 93.31 | 94.22 | 91.54 | 96.76 |
| Average | 87.22 | 88.23 | 81.83 | 83.54 | 92.16 |

| MSR-Action3D Dataset (Protocol of [8]) | Accuracy % |
|---|---|
| Histograms of 3D joints [47] | 78.97 |
| EigenJoints [16] | 82.30 |
| Joint angle similarities [5] | 83.53 |
| Spatial and temporal part sets [48] | 90.22 |
| Co-variance descriptors [14] | 90.53 |
| Random forests [19] | 90.90 |
| Body parts (BP)+SRVF [20] | 92.10 |
| Intra-frame modeling [2] | 92.49 |
| Proposed approach: skeleton | 92.16 |

| MSR Daily Activity 3D activities |  |  |
|---|---|---|
| eat | drink | use laptop |
| read book | call cellphone | cheer up |
| write on a paper | use vacuum cleaner | play guitar |
| sit still | stand up | toss paper |
| play game | sit down | walk |
| lie down on sofa |  |  |

| Methods | Accuracy % |
|---|---|
| (G) Dynamic Time Warping [49] | 54 |
| (G) 3D Joints and Local Occupancy Patterns (LOP) [50] | 78 |
| (G) Histogram of Oriented 4D Normals (HON4D) [3] | 80.00 |
| (G) Sparsity Learning to Fuse Atomic Features (SSFF) [27] | 81.9 |
| (G) Deep Model-Restricted Graph-based Genetic Programming (RGGP) [26] | 85.6 |
| (G) Actionlet Ensemble [50] | 85.75 |
| (G) Super Normal Vector [10] | 86.25 |
| (G) Bilinear [51] | 86.88 |
| (G) Depth Cuboid Similarity Feature (DCSF) + Joint [52] | 88.2 |
| (G) Local Flux Feature (LFF) + Improved Fisher Vector (IFV) [28] | 91.1 |
| (G) Group Sparsity [12] | 95 |
| (G) Range Sample [11] | 95.6 |
| (G) Heterogeneous Feature Machines (HFM) [53] | 84.38 |
| (G) Model of Probabilistic Canonical Correlation Analyzers (MPCCA) [54] | 90.62 |
| (G) Multi-Task Discriminant Analysis (MTDA) [55] | 90.62 |
| (G + D + C) JOULE [44] | 95 |
| Our method: (G) Skeleton | 87.55 |
| Our method: (G + D) Skeleton + Obj(D) | 88 |
| Our method: (G + C) Skeleton + Obj(RGB) | 94.44 |
| Our method: (G + D + C) Skeleton + Obj(RGB) + Obj(D) | 95 |

| Methods | Accuracy % |
|---|---|
| (G) Local Accumulative Frame Feature (LAFF) [56] | 54.2 |
| (G) Dynamic skeletons [44] | 75.5 ± 3.08 |
| (G) LSTM-trust gate [57] | 76.5 |
| (G + D + C) LAFF [56] | 80 |
| (G + D + C) JOULE [44] | 84.9 ± 2.29 |
| Our method: (G) Skeleton | 73.48 ± 5.91 |
| Our method: (G + D) Skeleton + Obj(D) | 74.51 ± 5.47 |
| Our method: (G + C) Skeleton + Obj(RGB) | 86.76 ± 4.82 |
| Our method: (G + D + C) Skeleton + Obj(RGB) + Obj(D) | 87.40 ± 5.04 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Boujebli, M.; Drira, H.; Mestiri, M.; Farah, I.R. Rate-Invariant Modeling in Lie Algebra for Activity Recognition. Electronics 2020, 9(11), 1888. https://doi.org/10.3390/electronics9111888