Abstract

Distributed stream processing engines (DSPEs) deploy multiple tasks on distributed servers to process data streams in real time. Many DSPEs provide locality-aware stream partitioning (LSP) methods to reduce network communication costs. However, the even job scheduler provided by DSPEs deploys tasks far away from each other on the distributed servers, so the LSP cannot be used properly. In this paper, we propose a Locality/Fairness-aware job scheduler (L/F job scheduler) that considers locality together with fairness to solve the problems of the even job scheduler, which considers only fairness. First, the L/F job scheduler increases the cohesion of contiguous tasks, which require message transmissions, for the locality. At the same time, it reduces the coupling of parallel tasks, which do not require message transmissions, for the fairness. Next, we connect the contiguous tasks into a stream pipeline and evenly deploy the stream pipelines to the distributed servers so that the L/F job scheduler achieves high cohesion and low coupling. Finally, we implement the proposed L/F job scheduler in Apache Storm, a representative DSPE, and evaluate it on both synthetic and real-world workloads. Experimental results show that the L/F job scheduler achieves throughput similar to that of the even job scheduler, while latency is significantly improved by up to 139.2% for the LSP applications and by up to 140.7% even for the non-LSP applications. The L/F job scheduler also improves latency by 19.58% and 12.13%, respectively, in two real-world workloads. These results indicate that our L/F job scheduler provides superior processing performance for the DSPE applications.

1. Introduction

With the generation of large data streams and the demand for real-time response, research on distributed stream processing engines (DSPEs) is becoming very active. Representative DSPEs include Apache Storm [1,2,3,4], Apache Spark Streaming [5], Apache Flink [6,7], Apache Samza [8], and Apache S4 [9]. They distribute applications submitted by developers to distributed servers for processing in parallel, ensuring high throughput and low latency. In general, a DSPE application consists of one or more jobs, and a job consists of many tasks. Each task processes messages emitted from data sources or other tasks and emits the transformed messages to data destinations or other tasks. Thus, a lot of network communication occurs between tasks while the DSPE applications process the data stream. At this point, the sender tasks (upstreams) must select one (or more) of the many receiver tasks (downstreams) to which to send the messages. This downstream selection procedure is called stream partitioning [2,6,10]. In the DSPE, the stream partitioning method is the most important factor affecting performance because it determines whether or not network communication occurs.

Many DSPEs introduce locality into stream partitioning so that an upstream can send messages without network communication, which improves performance. This locality-aware stream partitioning (LSP) lets an upstream send messages only to downstreams in the same process as itself and distributes messages evenly to all downstreams if no such downstream exists. Representative LSP methods include Local-or-Shuffle grouping of Apache Storm [2] and Rescaling of Apache Flink [7]. This paper focuses on improving the processing performance of LSP.
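The downstream selection rule described above can be sketched as follows. This is a minimal illustration, not the implementation used by Storm or Flink: the function name `local_or_shuffle`, the dictionary-based task placement, and the use of uniform random choice for "even" distribution are all assumptions made for the example.

```python
import random
from typing import Dict


def local_or_shuffle(upstream_process: str,
                     downstream_process: Dict[str, str],
                     rng: random.Random) -> str:
    """Pick the downstream task that receives one message.

    downstream_process maps each downstream task id to the id of the
    process that hosts it (a hypothetical representation).
    """
    # Prefer downstreams that live in the same process as the upstream.
    local = [task for task, proc in downstream_process.items()
             if proc == upstream_process]
    # Fall back to all downstreams when no local downstream exists.
    candidates = local if local else list(downstream_process)
    # Uniform random choice spreads messages evenly over the candidates.
    return rng.choice(candidates)
```

With this rule, an upstream that shares a process with at least one downstream never touches the network, which is why the placement chosen by the job scheduler matters so much for LSP.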

However, the LSP often fails to exploit the locality because of the job scheduling that deploys the submitted jobs on the distributed servers. Apache Storm, a representative DSPE, uses an even job scheduler as its default job scheduler, which deploys the tasks of a job as evenly as possible on the distributed servers. An even job scheduler aims for high fairness by deploying tasks so that they can use system resources fairly, but the tasks scheduled in this way are likely to be located far from each other. Despite the high fairness, this completely contradicts the purpose of LSP. The LSP applications deployed by the even job scheduler must send messages to downstreams in other processes, without benefiting from the locality. This incurs a high communication cost, which significantly degrades the DSPE performance.

In this paper, we propose a Locality/Fairness-aware job scheduler (L/F job scheduler, in short) that enhances both locality and fairness. In job scheduling, locality means placing tasks as close together as possible, and fairness means placing tasks as far apart as possible. Considering both locality and fairness, which are opposing concepts, is therefore a challenging problem. To take account of both, we first present the concepts of cohesion and coupling between tasks. The tasks of a DSPE job have a horizontally contiguous relationship with some tasks and a vertically parallel relationship with others. The contiguous tasks transmit messages to each other according to a given stream partitioning, and the parallel tasks operate independently without message transmission. This indicates that the contiguous tasks should be close to each other to increase the locality, i.e., to increase the cohesion, and the parallel tasks should be far from each other to increase the fairness, i.e., to decrease the coupling.

We construct stream pipelines from the tasks so that the L/F job scheduler achieves high cohesion and low coupling. A pipeline is a collection of one or more tasks so that messages can flow without network communication. Therefore, the tasks in the pipeline need to be contiguous but not parallel to each other. The proposed L/F job scheduler consists of two steps. First, the pipeline extraction step extracts the pipelines repeatedly until all tasks are consumed in a given job. Second, the pipeline assignment step assigns all pipelines as evenly as possible to each process in the distributed server.

We experiment and evaluate the proposed L/F job scheduler in Apache Storm. To experiment with various DSPE applications in Storm, we conduct extensive synthetic workloads and real-world workloads deployed in industry. For the synthetic workloads, we classify the DSPE applications into five configurations: linear-, ascent-, descent-, diamond-, and star-parallelisms. In addition, we divide each parallelism into a low parallelism environment representing a simple job and a high parallelism environment representing a complex job. In these various configurations, we compare the processing performance of the existing even job scheduler and the proposed L/F job scheduler. Experimental results show that our L/F job scheduler improves latency by up to 139.2% for LSP applications and 140.7% even for non-LSP applications while maintaining the same or similar throughput compared to the existing even job scheduler in all environments. Also, for the real-world workloads, we evaluate the L/F job scheduler on two page view count applications. The experimental results show that the L/F job scheduler improved latency by 19.58% and 12.13%, respectively, in the two applications, thereby demonstrating its superiority.

Our contributions can be summarized as follows:

- (1) In DSPEs, we present the locality problems that the even job scheduler deploys tasks on different nodes, even though they can be processed on the same node.

- (2) We propose a novel DSPE job scheduler, called L/F job scheduler, which improves both locality and fairness. For this, we present the concepts of cohesion and coupling between tasks, and also describe how to create a stream pipeline of contiguous tasks to increase the cohesion and reduce the coupling.

- (3) We implement the L/F job scheduler in Apache Storm, a representative DSPE, and show that it outperforms the existing even job scheduler. In particular, we classify the various DSPE applications into five configurations based on the parallelism and utilize two general and practical workloads deployed in industry for a variety of experiments.

The rest of this paper is organized as follows. Section 2 presents DSPEs as the background of this paper, and the limitations of the even job scheduler as our motivation. We design the proposed L/F job scheduler in Section 3. We experiment and evaluate the L/F job scheduler implemented in Storm and the even job scheduler provided by Storm in Section 4. Section 5 describes the case studies of job scheduling for distributed processing engines. Finally, Section 6 summarizes and concludes the paper.

2. Preliminaries

This section describes the generic procedure for DSPE applications and presents the limitations of the existing job scheduler. Section 2.1 explains the components of a DSPE application and the stream partitioning methods of DSPEs. Section 2.2 describes the existing job scheduler that deploys tasks as evenly as possible. Section 2.3 presents the reason why the existing job scheduler cannot handle most of the LSP methods appropriately and degrades the processing performance of applications.

2.1. Distributed Stream Applications

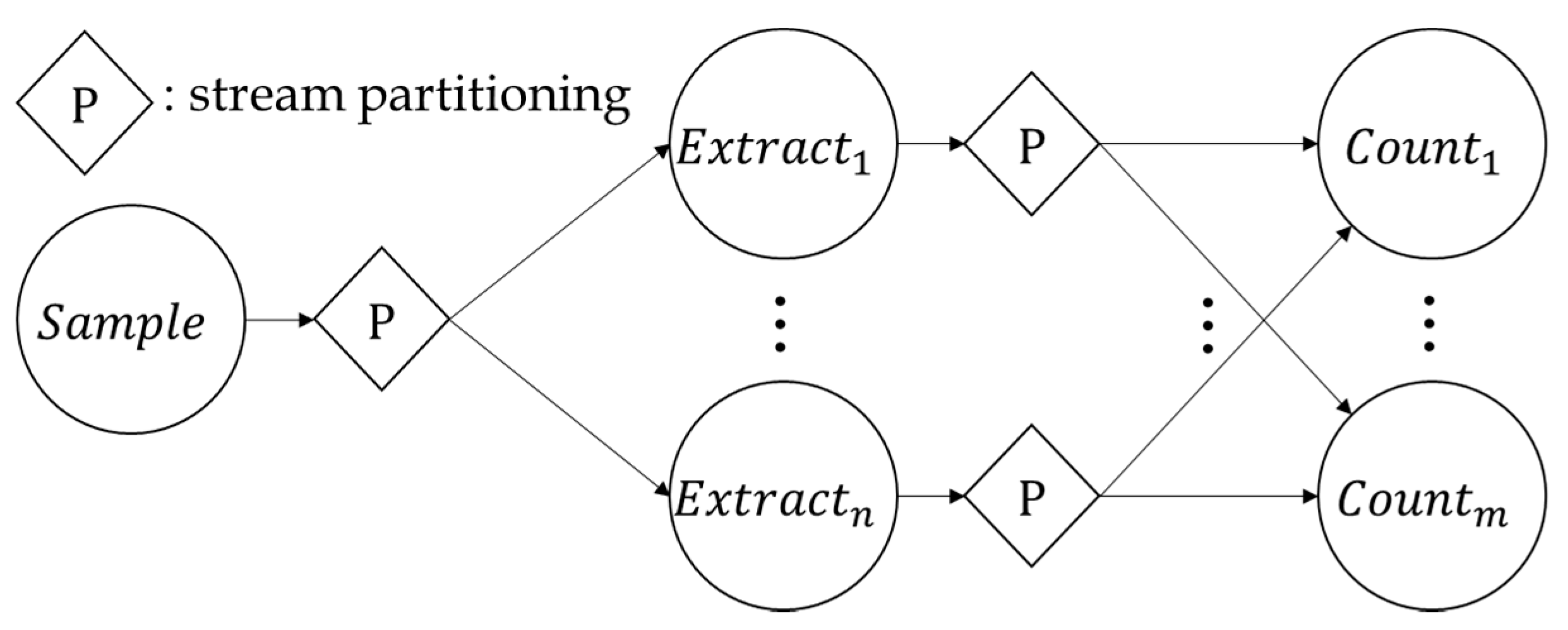

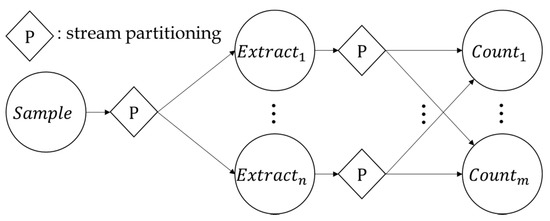

DSPE applications can be represented as directed acyclic graphs (DAGs), where a node is a processing operator (PO) that sends data streams through the edges [10,11]. An application may involve several jobs, but the extension from a single job to multiple jobs is straightforward; thus, for ease of understanding, this paper deals with a single job per application. Each PO is replicated into a number of instances (POIs), which are deployed on distributed servers. Figure 1 shows a DAG example of a representative DSPE application that counts Twitter hashtags: the first PO reads tweets in real time, the second extracts hashtags from each tweet, and the third counts the number of hashtags. In the DSPE application, the sender POI is an upstream and the receiver POI is a downstream. For example, when a POI of one PO sends a message to a POI of the next PO, the former is an upstream and the latter is a downstream. If there are multiple downstreams, the upstream requires a stream partitioning method (diamond in the figure) to select the downstream to which it sends a message.

Figure 1.

Example of a distributed stream processing engine (DSPE) application: counting Twitter hashtags.

Most DSPEs provide a variety of stream partitioning methods. The stream partitioning is classified into a key stream partitioning method, which distributes a stream according to the key of each message for stateful PO, and a non-key stream partitioning method, which sends a message to any downstream regardless of a key for stateless PO. Among the non-key stream partitioning methods, Shuffle grouping in Storm and Random partitioning or Rebalancing in Flink evenly distribute the stream to each downstream for load balancing. On the other hand, Local-or-Shuffle grouping in Storm and Rescaling in Flink are locality-aware stream partitioning (LSP) methods which considerably improve the performance by considering task locality. We note that the non-key stream partitioning method can consider the system status for the performance improvement. Thus, in this paper we focus on the performance improvement of DSPE applications using such non-key stream partitioning methods.

2.2. Even Job Scheduler in Distributed Stream Processing Engines (DSPEs)

DSPEs have one or more job schedulers, but application developers usually use the default job scheduler. The default job scheduler provided by Apache Storm [1,2,3,4], a representative DSPE, distributes the POIs of a given application as evenly as possible to all processes in the distributed servers. In this paper, we call this scheduler an even job scheduler, and it targets the fairness of Definition 1.

Definition 1.

In the job scheduling of DSPE, the fairness means that POIs of an application are evenly deployed to distributed servers so that each POI can use resources as equally as possible.

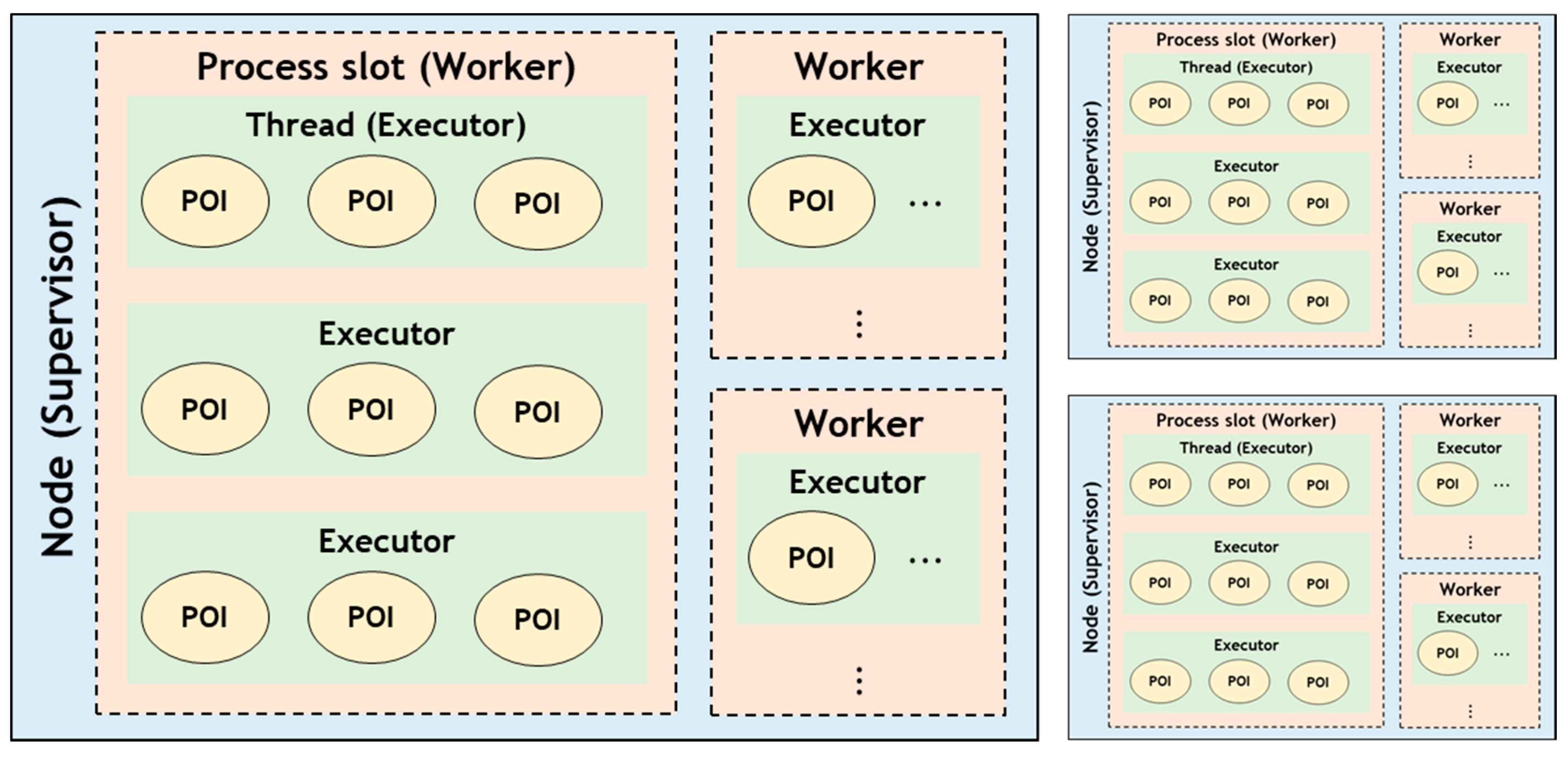

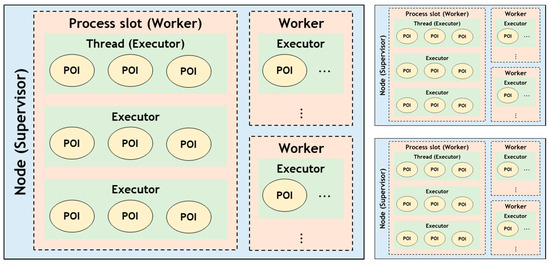

For the fairness, the even job scheduler regards a process slot on which the POIs will operate as a resource. In DSPEs, a process slot is a unit of space for allocating POIs to processes on each server. As shown in Figure 2, Storm deploys a number of process slots (i.e., workers in Storm) on the distributed servers when the cluster starts. When deploying applications, the job scheduler bundles POIs into threads (i.e., executors in Storm) and allocates them to the process slots. Storm allocates resources such as heap size to the workers according to the configuration, and the workers process streams by sharing these resources among their executors. Each worker then sends and receives the processed stream messages to and from other workers through the port assigned to it, at which point intra- or inter-node communication occurs. The even job scheduler deploys POIs as evenly as possible in these process slots so that each POI in the application can use resources as fairly as possible. In addition, to take into account the fairness of applications submitted later, the POIs are deployed starting from the server with the largest number of available process slots. That is, the even job scheduler sorts the servers by the number of process slots each has and uses the process slots one by one, starting from the server with the most process slots.

Figure 2.

Hierarchy of processing operator instances (POIs) allocated to process slots in Apache Storm.

Algorithm 1 shows the process slot sorting algorithm for determining the order of process slots so that the even job scheduler can use them evenly for the fairness [12,13]. Line 7 classifies process slots by node, and Line 8 sorts the resulting groups by the number of process slots in each node. Lines 9 to 18 repeatedly take the first process slot from each node's list and append it to a single list. By using as many process slots as the job scheduler needs from the process slots sorted by this procedure, the fairness of job scheduling can be maximized.

| Algorithm 1 Process slots sorting algorithm for the fairness | |
| --- | --- |
| 1. | Procedure sortSlots(availableSlots) |
| 2. | Input: |
| 3. | availableSlots[1..s]: a list of available slots. |
| 4. | Output: |
| 5. | sortedSlots[1..s]: a sorted list of available slots. |
| 6. | begin |
| 7. | slotGroups := Classify availableSlots by node; |
| 8. | Sort slotGroups by the slots size; |
| 9. | first := nil; |
| 10. | while slotGroups is not empty do |
| 11. | for each node in slotGroups do |
| 12. | Add the first slot in node to first; |
| 13. | Remove the first slot from node; |
| 14. | if node is empty then |
| 15. | Remove node from slotGroups; |
| 16. | end-if |
| 17. | end-for |
| 18. | end-while |
| 19. | sortedSlots := first; |
| 20. | end |
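The slot sorting procedure can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: a slot is represented as a hypothetical `(node, port)` tuple, nodes with more free slots are visited first (as the surrounding text describes), and ties between nodes keep their input order.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Slot = Tuple[str, int]  # (node name, port): a hypothetical slot representation


def sort_slots(available_slots: List[Slot]) -> List[Slot]:
    """Order slots so that consecutive slots come from different nodes."""
    # Classify slots by node, keeping each node's slots in input order.
    groups: Dict[str, List[Slot]] = defaultdict(list)
    for slot in available_slots:
        groups[slot[0]].append(slot)
    # Nodes with more free slots come first; sorted() is stable on ties.
    ordered = sorted(groups.values(), key=len, reverse=True)
    # Interleave: take one slot per node in each round until none remain.
    result: List[Slot] = []
    round_index = 0
    while len(result) < len(available_slots):
        for group in ordered:
            if round_index < len(group):
                result.append(group[round_index])
        round_index += 1
    return result
```

Instead of removing slots from each group as the pseudocode does, the sketch walks the groups by round index, which yields the same interleaved order without mutating the groups while iterating over them.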

Algorithm 2 shows the even job scheduler algorithm for evenly deploying POIs using the sortSlots() algorithm. First, Line 8 sorts the available process slots in the distributed cluster with sortSlots(). Next, Lines 9 and 10 determine the process slots to which the submitted job is actually allocated. Finally, Lines 11 to 14 allocate all POIs to process slots one by one, and if the number of POIs is greater than the number of process slots, the scheduler wraps around and continues allocating from the first process slot.

| Algorithm 2 Even job scheduler algorithm | |
| --- | --- |
| 1. | Procedure evenJobScheduler(job, availableSlots) |
| 2. | Input: |
| 3. | job: a job to be scheduled. |
| 4. | availableSlots: available slots in a cluster. |
| 5. | Output: |
| 6. | assignment: a map of <POI, Slot>. |
| 7. | begin |
| 8. | sorted[1..n] := sortSlots(availableSlots); |
| 9. | r := The number of slots requested by job; |
| 10. | slots[1..r] := Extract r from sorted; |
| 11. | POIs[1..p] := Get all POIs from job; |
| 12. | for each i in 1..p do |
| 13. | Put <POIs[i], slots[((i − 1) % r) + 1]> to assignment; |
| 14. | end-for |
| 15. | end |
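A minimal Python sketch of the round-robin allocation in Algorithm 2 is shown below. It assumes the slots have already been sorted and that POIs and slots are identified by plain strings; both the function name and these representations are hypothetical. Python lists are zero-based, so the modulo index needs no offset.

```python
from typing import Dict, List


def even_job_scheduler(pois: List[str],
                       sorted_slots: List[str],
                       requested: int) -> Dict[str, str]:
    """Assign POIs to the first `requested` sorted slots in round-robin order."""
    slots = sorted_slots[:requested]
    if not slots:
        raise ValueError("at least one process slot is required")
    # POI i goes to slot i mod r, wrapping around when POIs outnumber slots.
    return {poi: slots[i % len(slots)] for i, poi in enumerate(pois)}
```

Because POIs are listed PO by PO, consecutive POIs of the same PO land in different slots, which is the source of both the fairness and the loss of locality discussed in the next section.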

2.3. Problems of Even Job Scheduler

In an LSP application scheduled by the even job scheduler, we can classify two contiguous POs into three use cases based on the locality, as shown in Table 1. The table classifies the cases by the number of upstreams, the number of downstreams, and the number of process slots. First, if the contiguous POs are in the best locality configuration, the locality of LSP is maximized because at least one downstream is working in every process. Second, if the contiguous POs are in the general locality configuration, one or more upstreams have no downstream in the same process as themselves, so the locality is only partially guaranteed. Third, if the contiguous POs are in the worst locality configuration, no upstream has a downstream in the same process as itself, so the locality is not guaranteed at all. Because of these characteristics, a DSPE application developer wants to configure the application with the best locality to increase the processing performance of the LSP.

Table 1.

Three use cases for DSPE applications classified by the locality.

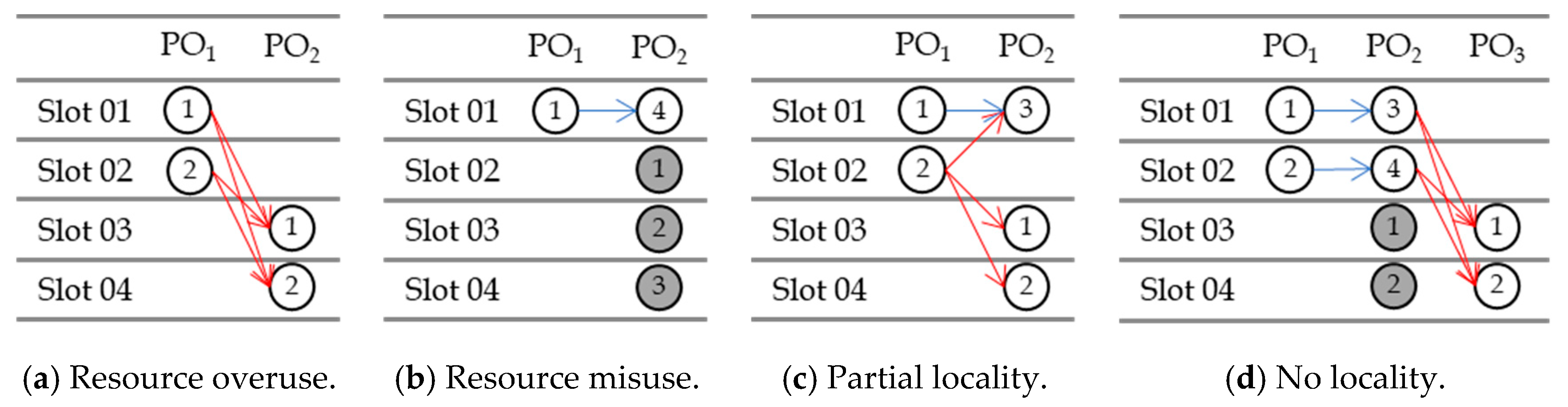

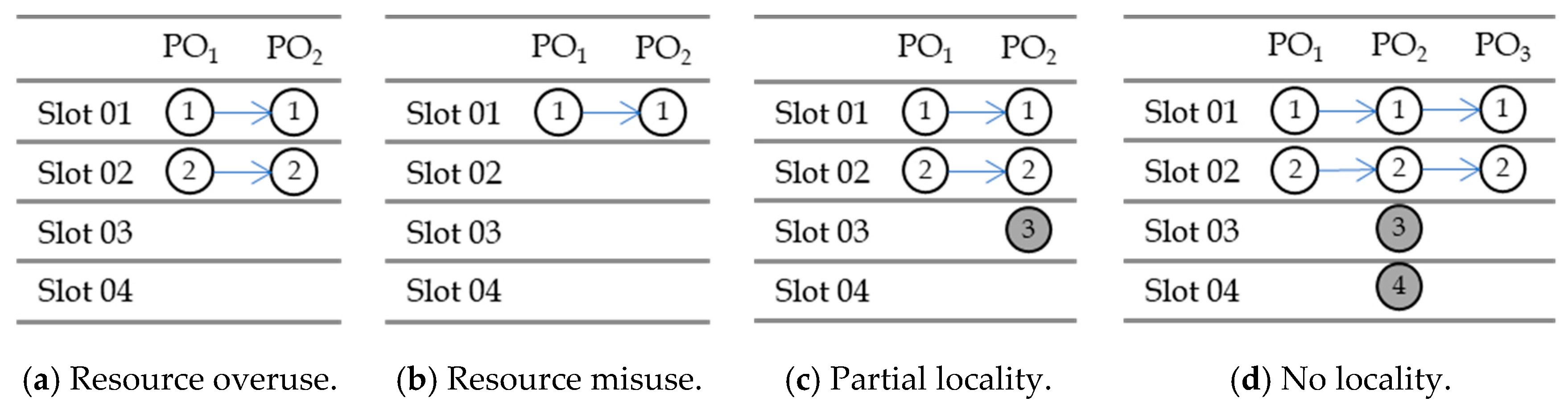

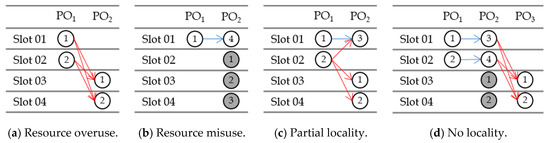

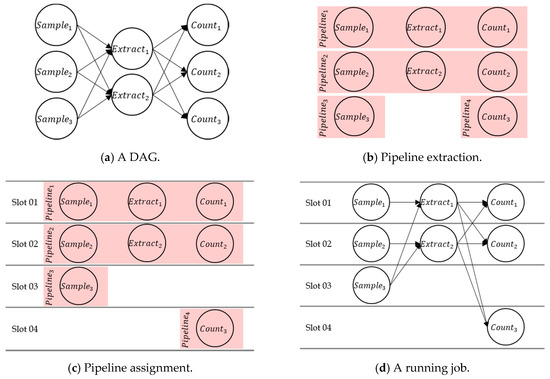

We can derive the following four problems when we use the existing even job scheduler to schedule an LSP application from the three use cases described above. The problems are (1) resource overuse, (2) resource misuse, (3) partial locality, and (4) no locality. Figure 3 shows examples of these four problems. In the figure, a circle represents POI, and the number in each circle is the order in which the POIs are deployed.

Figure 3.

Examples of problems that even job scheduler encounters in DSPE applications with locality-aware stream partitioning (LSP).

First, the resource overuse is a problem where the even job scheduler uses too many process slots. Consider the number of POs in a given job and the number of instances (POIs) of each PO. We note that if the total number of POIs in the job is at least the number of process slots, all process slots are used. Therefore, as the number of POs in a job and the number of POIs in each PO increase, the number of process slots to be used rapidly increases, which means that the even job scheduler uses too many resources. Figure 3a corresponds to an example of this problem: the total number of POIs in a simple application is four, so all four process slots must be used. We call this problem the resource overuse.

Second, the resource misuse is a problem where the best locality, which the developer enforces to ensure the locality of the LSP, requires many resources that the DSPE never uses. To configure the application with the best locality, the number of downstreams should be greater than or equal to the number of process slots. We here note that, if the number of upstreams is much less than the number of process slots, as many downstreams as that difference receive no messages. For example, in Figure 3b, the number of upstreams is 1, and the number of downstreams is 4. Since there is only one upstream, three of the four downstreams are created only for the locality and never receive messages. We call this problem the resource misuse.

Third, the partial locality is a problem where the locality of LSP is guaranteed only for some upstreams in the general locality. In the general locality, some upstreams require network communication. Figure 3c corresponds to an example of this problem, where the number of upstreams is 2, and the number of downstreams is 3. Looking at the upstream PO, the even job scheduler gives one of its POIs a downstream in the same process slot, but the other POI has no downstream in its process slot. That is, an upstream allocated to a process slot where no downstream exists loses the locality and requires network communication. Of course, the worst locality configuration, although less common, incurs even higher communication costs. We call this problem the partial locality.

Fourth, the no locality is a problem where the general locality configuration cannot guarantee the locality of LSP at all, depending on the state of the upstreams. The three use cases identified earlier consider the LSP between upstreams and downstreams of only two POs in isolation. An actual DSPE application, however, consists of multiple POs, and the LSP among them can behave differently from the three use cases. For example, assume a DSPE application consisting of three POs as shown in Figure 3d. The best locality is configured between the first and second POs, and the general locality is configured between the second and third POs. In this deployment, the first and third POs operate in completely different process slots. As a result, two POIs of the second PO do not receive messages, and all POIs of the third PO operate in the same processes as those idle POIs. Therefore, although the second and third POs are configured as the general locality, they operate in the same manner as the worst locality because the locality is not guaranteed at all. We call this problem the no locality.

These four problems are inherent in the even job scheduler trying to allocate POIs to different process slots as far as possible. In this paper, we propose a new job scheduler to solve the four problems of the even job scheduler while considering the locality.

3. Locality/Fairness-Aware Job Scheduler

In this section, we design and analyze the proposed L/F job scheduler in detail. Section 3.1 presents the requirements for solving the problems of even job scheduler by introducing locality to the job scheduling. Section 3.2 designs the L/F job scheduling method that considers both locality and fairness. Section 3.3 analyzes whether the L/F job scheduler solves the problems of even job scheduler.

3.1. Requirement Analysis

In this section, we present the requirements for solving the problems of even job scheduler by introducing the locality to the job scheduling. The even job scheduler deploys POIs geographically far from each other and, thus, it cannot guarantee the locality of POIs. This results in high network communication costs because LSP cannot take advantage of the locality. Therefore, for the LSP to have high performance, we need to guarantee the locality of POIs deployed by the job scheduling.

In this paper, we aim to introduce the locality of Definition 2 into the job scheduling to solve the problems of the even job scheduler, which considers only the fairness.

Definition 2.

In the job scheduling of DSPE, the locality means that contiguous POIs are deployed close to each other so that they can work in the same or near processes.

The most naïve approach to increasing the locality in the job scheduling is to isolate a DSPE application into one process, so that the process handles only one DSPE application. In this case, the locality is the highest, so network communication does not occur and resources are not shared with other applications. However, this naïve approach does not take advantage of DSPE at all. Since DSPE applications operate only in one process, there is very high resource contention between POIs, and in severe cases, there is no guarantee of fault tolerance for the process failure. Therefore, rather than allocating all POIs to one process, we need an efficient technique of “assigning contiguous POIs to one process” as described in Definition 2.

In this paper, we propose an L/F job scheduler that considers both locality and fairness in the job scheduling. Job scheduling that considers only locality may cause high resource contention between POIs and a lack of fault tolerance, and job scheduling that considers only fairness may cause performance degradation due to heavy network communication. In order to increase the locality, POIs should be deployed close to each other, and to increase the fairness, POIs should be deployed far from each other. This means that the locality and the fairness are in a trade-off relationship in the job scheduling. Within this trade-off, our main goal is to increase both the locality and the fairness of the job scheduling.

First, we consider the locality, which has a greater impact on the processing performance of LSP. According to Definition 2, the contiguous POIs must be allocated to the same process slot in order to increase the locality. To quantify the locality of contiguous POIs, we present the concept of cohesion, which represents how close the contiguous POIs are. The cohesion is calculated by Equation (1), in which each POI is identified as the j-th POI of its PO.

The cohesion of Equation (1) is the sum, over all upstream POIs, of the closeness to the closest downstream POI. The closeness expresses how close two different POIs are, and we simply calculate it as the inverse of the distance. The distance may be measured differently according to the cluster configuration. Since LSP identifies the locality by process, we simply assume that the distance is 1 for intra-process and 40 for inter-process communication. This ratio comes from intra-process communication requiring memory access and inter-process communication requiring network access (we assume that memory access takes about 0.25 ms and network access takes about 10 ms; see http://brenocon.com/dean_perf.html). The closer the contiguous POIs are to each other, the higher the cohesion, so the higher the cohesion, the higher the locality.

Next, in order to increase the fairness according to Definition 1, parallel POIs should be allocated to different process slots as much as possible. The parallel POIs are the instances replicated from a single PO. They do not transmit any messages to each other, so they can be separated without any communication cost, which increases the fairness. To quantify the fairness of parallel POIs, we present the concept of coupling, which represents how close the parallel POIs are. The coupling calculated by Equation (2) is the sum of the closeness of the POIs closest to each other among the parallel POIs. The closer the parallel POIs are to each other, the higher the coupling, so the lower the coupling, the higher the fairness.
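Equations (1) and (2) are not reproduced in this text. One reading that is consistent with the verbal definitions of cohesion and coupling is sketched here. The edge set $E$ of the DAG, the number of POs $n$, the instance count $|PO_i|$, the notation $PO_i^{j}$ for the j-th POI of the i-th PO, and the function $\mathrm{dist}$ are assumed notation, and the original equations may differ, for example by a normalization factor.

$$
\mathrm{closeness}(a, b) = \frac{1}{\mathrm{dist}(a, b)}, \qquad
\mathrm{cohesion} = \sum_{(PO_u, PO_d) \in E} \; \sum_{j=1}^{|PO_u|} \; \max_{1 \le k \le |PO_d|} \mathrm{closeness}\left(PO_u^{j}, PO_d^{k}\right)
$$

$$
\mathrm{coupling} = \sum_{i=1}^{n} \; \sum_{j=1}^{|PO_i|} \; \max_{k \ne j} \mathrm{closeness}\left(PO_i^{j}, PO_i^{k}\right)
$$

Under the stated distances, each term is 1 when the two POIs share a process and 1/40 otherwise.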

Ultimately, the goal of our L/F job scheduler is to increase the cohesion of contiguous POIs for the high locality and at the same time to reduce the coupling of parallel POIs for the high fairness. We use cohesion and coupling in Section 4.2 to evaluate the locality and the fairness of even job scheduler and L/F job scheduler in the various DSPE configurations.
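To make the two metrics concrete, the sketch below evaluates them for a given assignment. It follows one plausible reading of the verbal definitions (for each upstream POI, the closeness to its closest downstream POI; for each parallel POI, the closeness to its closest sibling) and uses the distances 1 and 40 stated in the text. The function names and the dictionary-based data layout are assumptions, and the original equations may include details that this sketch omits.

```python
from typing import Dict, List, Tuple

INTRA_DISTANCE = 1.0   # same process (value taken from the text)
INTER_DISTANCE = 40.0  # different processes (value taken from the text)


def closeness(a: str, b: str, slot_of: Dict[str, str]) -> float:
    """Inverse of the distance between two POIs."""
    same = slot_of[a] == slot_of[b]
    return 1.0 / (INTRA_DISTANCE if same else INTER_DISTANCE)


def cohesion(edges: List[Tuple[str, str]],
             pois_of: Dict[str, List[str]],
             slot_of: Dict[str, str]) -> float:
    """Sum, over upstream POIs, of the closeness to the closest downstream POI."""
    total = 0.0
    for up, down in edges:
        for u in pois_of[up]:
            total += max(closeness(u, d, slot_of) for d in pois_of[down])
    return total


def coupling(pois_of: Dict[str, List[str]],
             slot_of: Dict[str, str]) -> float:
    """Sum, over parallel POIs, of the closeness to the closest sibling POI."""
    total = 0.0
    for pois in pois_of.values():
        for a in pois:
            siblings = [b for b in pois if b != a]
            if siblings:  # a PO with a single POI contributes nothing
                total += max(closeness(a, b, slot_of) for b in siblings)
    return total
```

For two POs with two POIs each, placing each upstream with one downstream in the same slot gives a higher cohesion than spreading all four POIs over four slots, while the coupling is the same in both placements because siblings are separated either way.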

3.2. Design of Locality/Fairness-Aware (L/F) Job Scheduler

In this section, we design the L/F job scheduler that improves both the locality and the fairness described in Section 3.1. We connect the contiguous POIs into one stream pipeline. A pipeline is a collection of contiguous POIs, each belonging to a distinct PO. We use this pipeline to schedule jobs through the following two steps.

Step 1. Pipeline extraction: this extracts the pipelines repeatedly until all POIs of a given job have been consumed. This step increases the cohesion of contiguous POIs.

Step 2. Pipeline assignment: this assigns the pipelines to the sorted process slots. This step decreases the coupling of parallel POIs.

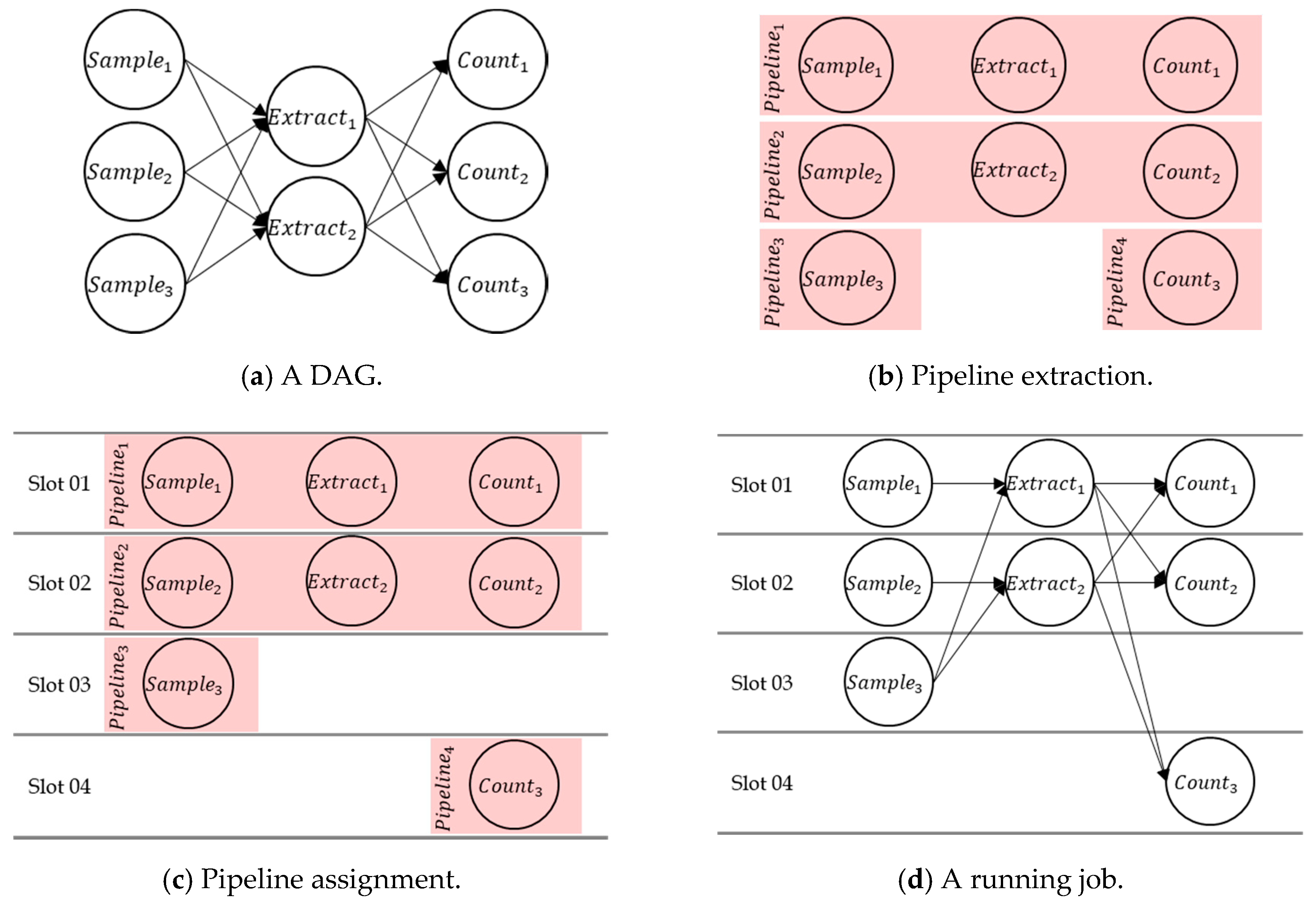

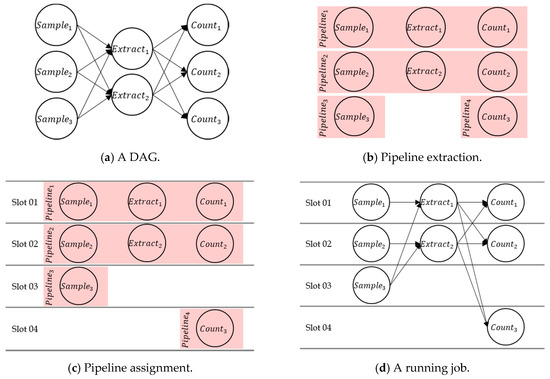

Example 1 shows how we schedule a job through the L/F job scheduler.

Example 1.

Figure 4 shows the process of scheduling a given job into four process slots using the L/F job scheduler. First, Figure 4a depicts a DAG configured by a DSPE application developer to count Twitter hashtags. In the job, the first PO has three POIs, the second has two, and the third has three. Second, Figure 4b depicts the first step, pipeline extraction, which connects contiguous POIs into pipelines until all POIs are consumed. The first two pipelines each contain one POI from every PO. The two remaining POIs, one of the first PO and one of the third PO, are not contiguous with each other, so they are extracted into different pipelines. Third, Figure 4c depicts the second step, pipeline assignment, which assigns the pipelines to process slots as far apart as possible. Since there are four process slots, one pipeline is assigned to each process slot. Finally, Figure 4d depicts the flow of the stream in the job scheduled through the L/F job scheduler. Since the counting PO is stateful, the PO that feeds it requires key stream partitioning, whereas the hashtag-extracting PO is stateless, so the PO that feeds it needs only non-key stream partitioning. Thus, if LSP is applied to the latter, it can send messages with little network communication. Although less effective than LSP, other stream partitioning methods also benefit from reduced network communication.

Figure 4.

Example of pipeline extraction and pipeline assignment in Locality/Fairness-aware (L/F) job scheduler.

First, the pipeline extraction step finds all pipelines in a given job. Starting from an arbitrary PO, this step repeatedly visits the POs connected to the current PO and takes one POI from each visited PO, until all connected POs have been visited. Because POs have different parallelism, all the POIs of a certain PO can be consumed before all pipelines are extracted. If all POIs of a PO are consumed during the pipeline extraction, the POs preceding it and the POs succeeding it are divided into different pipelines at that PO. A PO whose POIs are all included in pipelines is removed from the job, and pipelines are extracted until there are no more POs in the job.

Algorithms 3 and 4 show the pipeline extraction step of the L/F job scheduler. Procedure getPipelines() in Algorithm 3 extracts the pipelines until all POs are consumed in the job. Line 8 reads all POs from the job, and Lines 9 to 14 are repeated until all these POs are consumed. Line 11 gets an arbitrary PO, since the starting PO of the DAG is not known in advance for a DSPE application with multiple sources. Lines 12 and 13 extract a pipeline and store it in a list. Procedure connectToPipeline() in Algorithm 4 connects contiguous POIs into a pipeline. Line 7 visits the current PO only if the PO is not yet in the pipeline and still has a POI. Line 8 visits the current PO and stores one of its POIs in the pipeline. Lines 9 to 11 remove the current PO from the job if all its POIs are consumed. Lines 12 to 17 visit the preceding POs and the succeeding POs of the current PO. Once all connected POs have been visited, one pipeline has been extracted. The pipeline has high locality, and thus the scheduler assigns it to a single process in the next step.

Next, the pipeline assignment step assigns all the pipelines extracted earlier to the sorted process slots. To increase the fairness, this step works similarly to the even job scheduler in Algorithm 2. That is, it evenly assigns the pipelines one by one to the process slots sorted through sortSlots() of Algorithm 1. Algorithm 5 shows the L/F job scheduler algorithm for assigning pipelines. Lines 8 to 10 sort the process slots through sortSlots() and calculate the required slots. Line 11 extracts pipelines from a given job. Lines 12 to 16 assign the POIs belonging to each pipeline to the same process. By allocating the pipelines to the process slots as evenly as possible, the L/F job scheduler achieves high fairness.

| Algorithm 3 Procedure getPipelines() to extract pipelines from a job | |
| --- | --- |
| 1. | Procedure getPipelines(job) |
| 2. | Input: |
| 3. | job: a job to be scheduled. |
| 4. | Output: |
| 5. | pipelines[1..l]: a list of connected pipelines. |
| 6. | begin |
| 7. | pipelines := nil; |
| 8. | POs := Get POs from job; // POs are not sorted. |
| 9. | while POs is not empty do |
| 10. | pipeline := new Map<key: PO, value: POI>; |
| 11. | arbitrary := Get an arbitrary PO from POs; |
| 12. | connectToPipeline(POs, arbitrary, pipeline); |
| 13. | Add pipeline to pipelines; |
| 14. | end-while |
| 15. | end |

| Algorithm 4 Procedure connectToPipeline() to connect POIs into a pipeline | |
| --- | --- |
| 1. | Procedure connectToPipeline(POs, now, pipeline) |
| 2. | Input: |
| 3. | POs: a map of <PO, POI>. |
| 4. | now: the PO currently visited. |
| 5. | pipeline: a pipeline connected based on locality. |
| 6. | begin |
| 7. | if pipeline does not contain now and now is not empty then |
| 8. | Remove the first POI from now and put <now, that POI> to pipeline; |
| 9. | if now is empty then |
| 10. | Remove now from POs; |
| 11. | end-if |
| 12. | for each preceding in the POs preceding now do |
| 13. | connectToPipeline(POs, preceding, pipeline); |
| 14. | end-for |
| 15. | for each succeeding in the POs succeeding now do |
| 16. | connectToPipeline(POs, succeeding, pipeline); |
| 17. | end-for |
| 18. | end-if |
| 19. | end |

| Algorithm 5 | Locality/Fairness-aware job scheduler algorithm |
| --- | --- |
| 1. | Procedure LFJobScheduler(job, availableSlots) |
| 2. | Input: |
| 3. | job: a job to be scheduled. |
| 4. | availableSlots: available slots in a cluster. |
| 5. | Output: |
| 6. | assignment: a map of <POI, Slot>. |
| 7. | begin |
| 8. | sorted[1..n] := sortSlots(availableSlots); |
| 9. | r := The number of slots requested by job; |
| 10. | slots[1..r] := Extract the first r slots from sorted; |
| 11. | pipelines[1..l] := getPipelines(job); |
| 12. | for each i in 1..l do |
| 13. | for each POI in pipelines[i] do |
| 14. | Put <POI, slots[((i - 1) % r) + 1]> to assignment; |
| 15. | end-for |
| 16. | end-for |
| 17. | end |
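Algorithms 3 to 5 can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the Storm implementation: a job is modelled as a hypothetical `instances` map (PO name to its list of POIs) plus a list of `(upstream PO, downstream PO)` edges, the slots are assumed to be already sorted (sortSlots() of Algorithm 1 is not reproduced), at least one slot is requested, and indices are 0-based, so `i % len(slots)` plays the role of the round-robin slot index in Algorithm 5.

```python
from collections import defaultdict


def connect_to_pipeline(remaining, preceding, succeeding, now, pipeline):
    """Algorithm 4 (sketch): add one POI of `now`, then follow its neighbours."""
    # Stop if this PO is already in the pipeline or has no unassigned POI left.
    if now in pipeline or now not in remaining:
        return
    pipeline[now] = remaining[now].pop(0)  # first remaining POI of this PO
    if not remaining[now]:
        del remaining[now]  # PO is exhausted: remove it from the POs
    for po in preceding[now]:
        connect_to_pipeline(remaining, preceding, succeeding, po, pipeline)
    for po in succeeding[now]:
        connect_to_pipeline(remaining, preceding, succeeding, po, pipeline)


def get_pipelines(instances, edges):
    """Algorithm 3 (sketch): grow pipelines until every POI is used."""
    # Copy the POI lists so the caller's data is not modified.
    remaining = {po: list(pois) for po, pois in instances.items() if pois}
    preceding, succeeding = defaultdict(list), defaultdict(list)
    for up, down in edges:
        succeeding[up].append(down)
        preceding[down].append(up)
    pipelines = []
    while remaining:
        pipeline = {}
        arbitrary = next(iter(remaining))  # any PO that still has POIs
        connect_to_pipeline(remaining, preceding, succeeding, arbitrary, pipeline)
        pipelines.append(pipeline)
    return pipelines


def lf_schedule(instances, edges, sorted_slots, requested):
    """Algorithm 5 (sketch): assign whole pipelines to slots round-robin."""
    slots = sorted_slots[:requested]
    assignment = {}
    for i, pipeline in enumerate(get_pipelines(instances, edges)):
        for poi in pipeline.values():
            assignment[poi] = slots[i % len(slots)]
    return assignment


if __name__ == "__main__":
    instances = {"source": ["s1", "s2"], "parse": ["p1", "p2"], "sink": ["k1", "k2"]}
    edges = [("source", "parse"), ("parse", "sink")]
    print(lf_schedule(instances, edges, ["slot-a", "slot-b", "slot-c"], requested=2))
```

In this example each pipeline takes one POI from every PO, so contiguous POIs share a slot (locality) while the two parallel pipelines land on different slots (fairness).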

3.3. Analysis of Job Schedulers

In this section, we analyze whether the L/F job scheduler solves the four problems of the even job scheduler described in Section 2.3. Figure 5 shows an example where the problems in Figure 3 are solved by the L/F job scheduler. First, the resource overuse comes from the fact that the even job scheduler uses too many process slots. The L/F job scheduler greatly reduces the number of process slots required by connecting the contiguous POIs into a pipeline. Figure 3a requires four process slots for four POIs, while Figure 5a uses only two process slots for four POIs, and this difference grows further in large clusters. Therefore, the L/F job scheduler can reduce the resources used by the application. Second, the resource misuse comes from the fact that the even job scheduler requires unnecessary downstreams. Figure 3b requires four downstreams for one upstream to ensure the locality, while Figure 5b requires only one downstream for one upstream to ensure the locality. Therefore, for the best locality, the L/F job scheduler only needs as many downstreams as upstreams, with no unnecessary downstreams. Third, the partial locality comes from the fact that the even job scheduler cannot guarantee the locality for upstreams in the general locality. The L/F job scheduler significantly reduces the number of upstreams that do not guarantee the locality, so the locality of upstreams is not guaranteed only at . This is very small compared to the even job scheduler. In Figure 3c, network communication occurs in one upstream, while in Figure 5c no network communication occurs. Note that, in the L/F job scheduler, some upstreams necessarily have downstreams in the same process, so the worst locality cannot occur, unlike with the even job scheduler. That is, some upstreams necessarily guarantee the locality. Fourth, the no locality comes from the fact that the general locality behaves like the worst locality in a complex job scheduled with the even job scheduler. The L/F job scheduler guarantees the locality because the contiguous POIs are connected into a single pipeline even in complex jobs. In Figure 3d, must have network communication in order to send messages to , while in Figure 5d all locality of is guaranteed, resulting in no network communication. In summary, the L/F job scheduler can solve all four problems that occur in the even job scheduler.

Figure 5.

Examples of four problems solved by L/F job scheduler.

4. Experimental Evaluation

In this section, we evaluate the existing even job scheduler and the proposed L/F job scheduler using extensive synthetic workloads and real-world workloads deployed in the industry. The comparative metrics are throughput, latency, and system resource usage. Table 2 lists the abbreviations for the metrics and the workloads. We implement the proposed L/F job scheduler in Apache Storm [1,2,3,4], a representative DSPE. Storm uses the even job scheduler as its default job scheduler and also provides a variety of stream partitioning methods, making it suitable for evaluating job schedulers. As stream partitioning methods, we use Local-or-Shuffle grouping for LSP and Shuffle grouping for non-LSP [2]. The hardware of the cluster is as follows: one master node with an Intel Xeon E5-2630 v3 @ 2.4 GHz (8 cores, 16 threads) and 64 GB RAM, and eight worker nodes, each with an Intel Xeon E5-2620 v3 @ 2.4 GHz (6 cores, 12 threads) and 64 GB RAM. As the software configuration, we use CentOS 7.3 and Java 1.8 on all nodes, and we adopt Apache Storm 1.2.3, the latest version at the time of the experiments, as the DSPE.

Table 2.

Abbreviations for metrics and workload terms.

Section 4.1 designs the synthetic workloads for extensive scalable experiments. Section 4.2 compares cohesion and coupling in various configurations of DSPE applications to confirm whether the L/F job scheduler considers locality and fairness well. Section 4.3 measures and compares processing performance in DSPE applications using LSP. Section 4.4 further confirms that the L/F job scheduler improves performance even in non-LSP that does not consider the locality. Section 4.5 evaluates the L/F job scheduler in two real-world workloads.

4.1. Synthetic Workloads

To evaluate job schedulers, we classify DSPE applications into five configurations, as shown in Table 3. First, means that each of the eight POs has only one POI without parallelism, so that all POIs process the same number of messages. Second, has more and more POIs along the eight POs, so the number of messages to be processed by each POI decreases toward the succeeding POs. Third, has fewer and fewer POIs along the eight POs, contrary to , so the number of messages to be processed by each POI increases toward the succeeding POs. Fourth, increases the number of POIs up to the middle of the POs and decreases it thereafter. Fifth, as opposed to , decreases the number of POIs up to the middle of the POs and increases it thereafter. Among these configurations, and are expected to have a high throughput because there are many messages to be processed due to the large number of . In this paper, we focus on the network communication of the LSP, so each PO emits the received messages to the succeeding PO. We create eight process slots in eight nodes and schedule these configurations, which we call the low parallelism environment. In addition, to configure a more complex environment with a similar structure, we create 80 process slots in eight nodes and schedule 10 times as many POIs as in the low parallelism environment, which we call the high parallelism environment. In all experiments, we use a synthetic data stream that generates a 1 KB message every 1 millisecond in to generate a very high load.

Table 3.

Five configurations with low parallelism (10 times as many POIs for high parallelism).

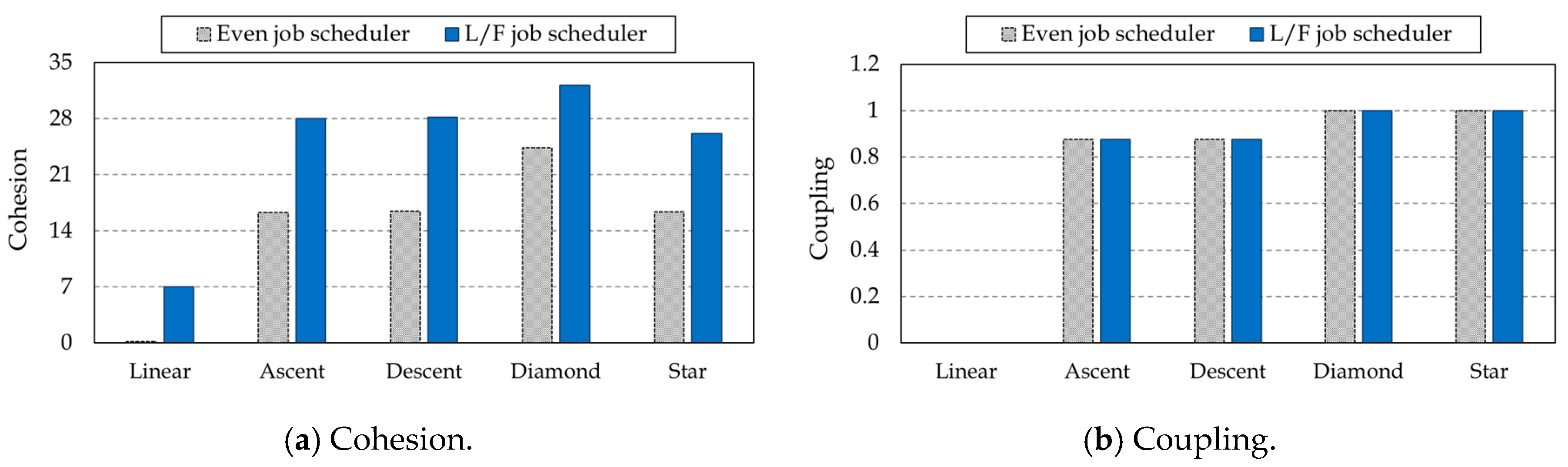

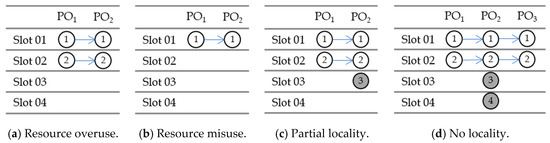

4.2. Comparison of Cohesion and Coupling

In this section, we compare the cohesion and coupling of jobs scheduled by the even and L/F job schedulers in various configurations. Figure 6 shows the cohesion and coupling of the two schedulers calculated in the low parallelism environment. We empirically assume that the distance is 1 for intra-process and 40 for inter-process communication, as described in Section 3. The even job scheduler minimizes coupling by considering only fairness. The L/F job scheduler, on the other hand, considers locality as well as fairness, so it aims both to reduce the coupling and to increase the cohesion. Comparing cohesion in Figure 6a, the L/F job scheduler shows much higher cohesion than the even job scheduler because it considers the locality. In detail, the cohesion of the L/F job scheduler is higher than that of the even job scheduler by 3900% for , 71.78% for , 71.02% for , 32.03% for , and 59.45% for . In particular, in the , since the LSP application scheduled by the even job scheduler does not consider locality at all, it shows very low cohesion. In contrast, since the LSP application scheduled by the L/F job scheduler completely considers the locality, it shows very high cohesion. Figure 6b shows that both schedulers have the same coupling in all configurations. The even job scheduler already presents the best coupling and the L/F job scheduler presents the same coupling, so both schedulers show the same fairness. Both cohesion and coupling measured in the high parallelism environment are 10 times the results of Figure 6, and the ratios are the same. Therefore, the L/F job scheduler achieves very high locality while maintaining the same fairness as the even job scheduler.
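As a rough consistency check on the largest figure above, the following sketch assumes that cohesion is inversely proportional to the distance between contiguous POIs. This is an assumption made here for illustration only; the actual definition is given in Section 3 and is not restated in this section. The symbols $C_{\mathrm{even}}$ and $C_{\mathrm{L/F}}$ are likewise introduced only for this check. If every contiguous pair is inter-process (distance 40) under the even job scheduler and intra-process (distance 1) under the L/F job scheduler, then

$$
\frac{C_{\mathrm{L/F}} - C_{\mathrm{even}}}{C_{\mathrm{even}}} = \frac{1/1 - 1/40}{1/40} = 39 = 3900\%,
$$

which agrees with the 3900% reported for the configuration with the largest gain.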

Figure 6.

Cohesion and coupling of even and L/F job schedulers.

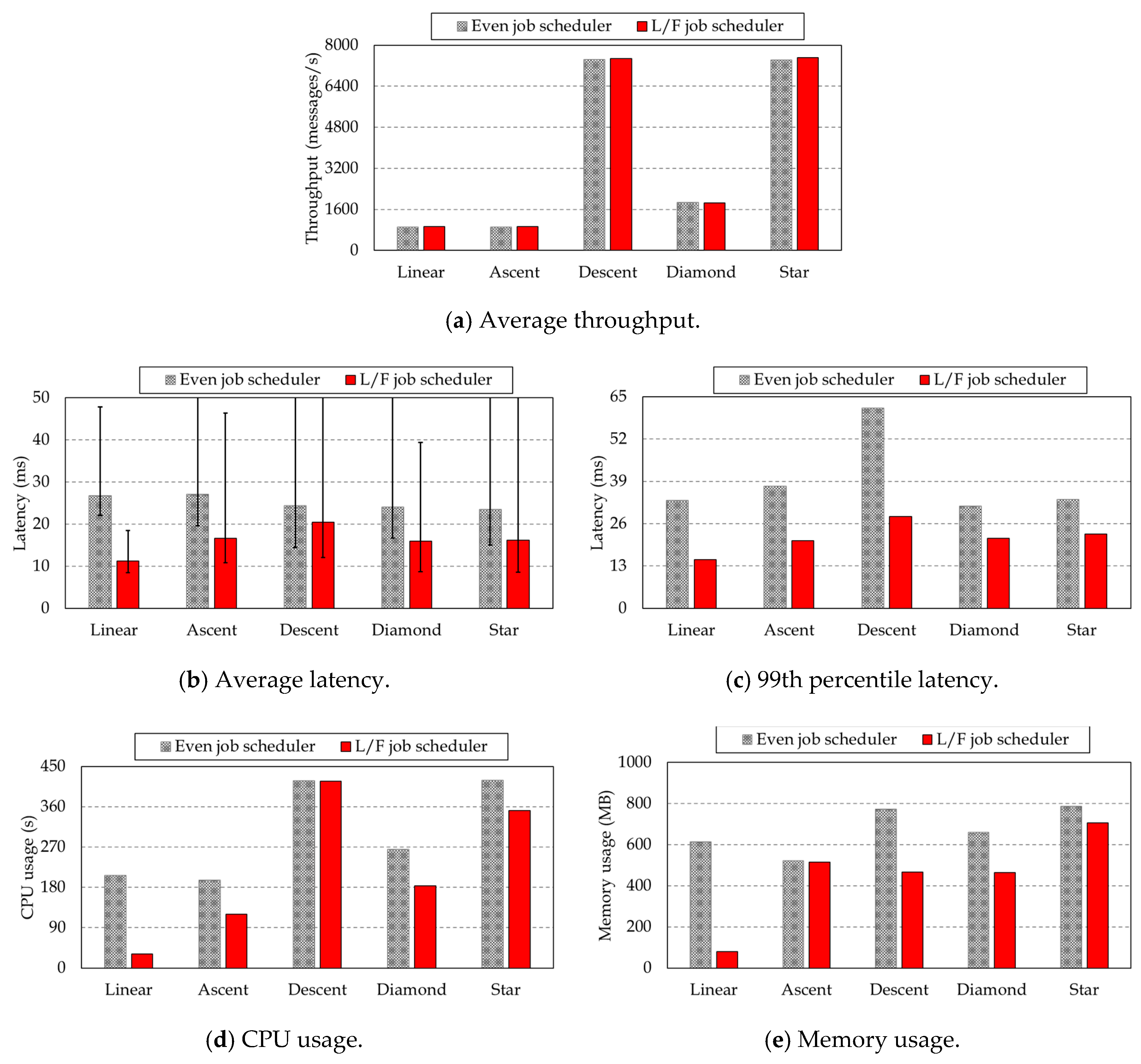

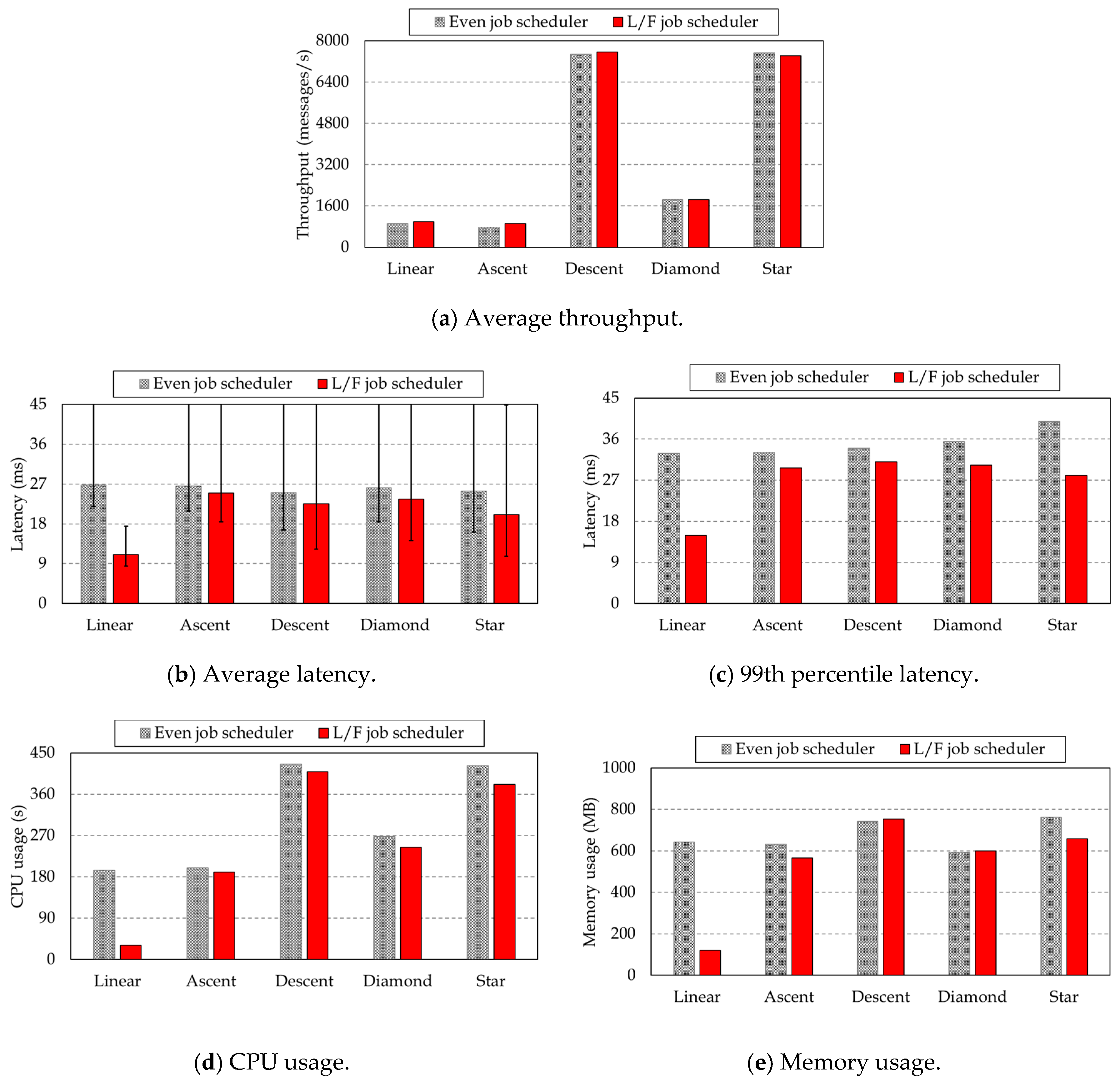

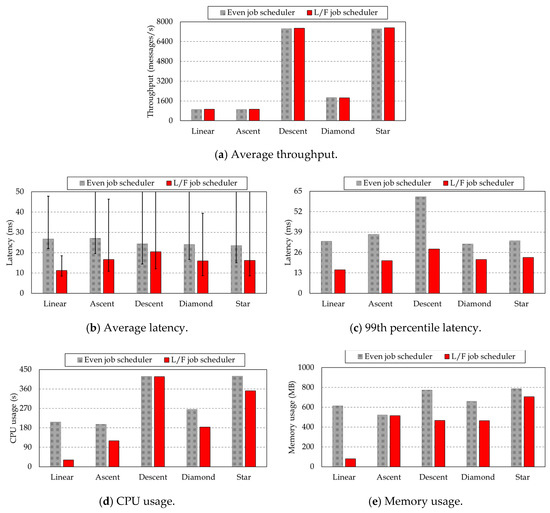

4.3. Experiment on Performance with Locality-Aware Stream Partitioning (LSP)

In this section, we examine whether the L/F job scheduler works well for LSP applications. Figure 7 shows the performance of LSP applications for the five configurations in the low parallelism environment. As shown in Figure 7a, both schedulers show similar throughput because the jobs process all messages with very little load. The throughput is very high in and because, unlike the other configurations, they generate a lot of messages from eight , which are very large. The performance difference between the two schedulers can be seen in the latency. As shown in Figure 7b, the L/F job scheduler has lower latency than the even job scheduler in all configurations. This is because the L/F job scheduler significantly reduces network communication by maximizing the locality of LSP applications. Experimental results show that the L/F job scheduler reduces the latency by 139.2% in , 62.24% in , 19.55% in , 51.00% in , and 45.10% in compared to the even job scheduler. In addition, according to the min-max error bars shown in Figure 7b, the L/F job scheduler greatly reduces the variation in latency by reducing the network communication. In fact, the of the even job scheduler is hundreds of milliseconds, but that of the L/F job scheduler is only tens of milliseconds. We further show the of the two schedulers in Figure 7c to examine how stable the latency is. As a result, the L/F job scheduler decreases the by 121.4% in , 81.01% in , 118.3% in , 46.79% in , and 46.80% in compared to the even job scheduler. That is, the L/F job scheduler has a stable low latency in all configurations by introducing the locality. As explained in Section 3.3, the L/F job scheduler guarantees the locality of LSP applications and avoids unnecessary resource use. Therefore, we also need to pay attention to the system resources used by the two schedulers. Figure 7d,e show the CPU usage and the memory usage, respectively, and we can see that the L/F job scheduler uses far fewer resources than the even job scheduler. More precisely, the L/F job scheduler reduces the CPU usage by up to 553.6% and the memory usage by up to 664.0%. In particular, the resource usage greatly decreases in because the even job scheduler uses all eight processes, while the L/F job scheduler uses only one process. In the other configurations, both schedulers use eight processes, but system resource usage is still reduced because, by guaranteeing the locality of the LSP, the L/F job scheduler greatly reduces the number of context switches and buffer copies needed for network communication.

Figure 7.

Performance of even and L/F job schedulers with LSP applications in low parallelism.

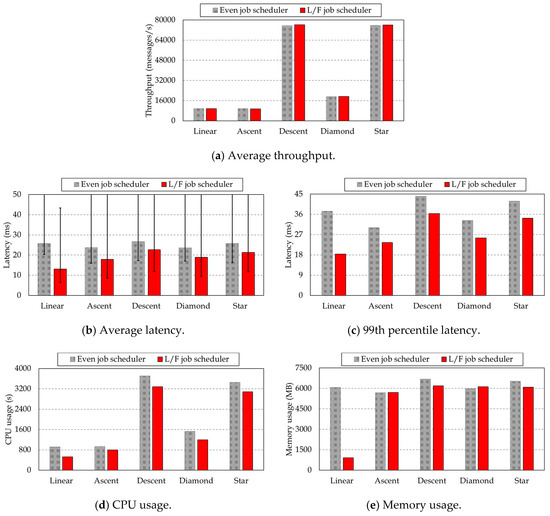

Figure 8 shows the performance of the two schedulers in the high parallelism environment. In this experiment, the number of POIs is increased by 10 times, so the amount of messages is also increased by 10 times. Thus, the throughput in Figure 8a is 10 times higher than that in Figure 7a. However, the throughput of the two schedulers is still the same because both schedulers process all messages with little load. In contrast, the L/F job scheduler greatly improves the latency over the even job scheduler, as shown in Figure 8b. It reduces the latency by up to 97.06%. This difference also appears in the , and in Figure 8c, the L/F job scheduler reduces the by up to 103.6% in compared to the even job scheduler. The difference in latency between the two schedulers is somewhat smaller than in the low parallelism environment. This is because, in the low parallelism environment, the even job scheduler incurs only intra-process and inter-node communication, whereas in the high parallelism environment some of its communication is intra-node, which is faster than inter-node communication. As the number of POIs and the amount of messages increase 10 times, the amount of system resources used also increases significantly. According to Figure 8d,e, the L/F job scheduler reduces the CPU usage by up to 72.79% in and the memory usage by up to 569.0% in compared to the even job scheduler. Among the workloads, and show poor performance, but both are negligible compared to the other improvements. Therefore, we believe that the L/F job scheduler shows excellent performance not only in simple low parallelism but also in complex high parallelism.

Figure 8.

Performance of even and L/F job schedulers with LSP applications in high parallelism.

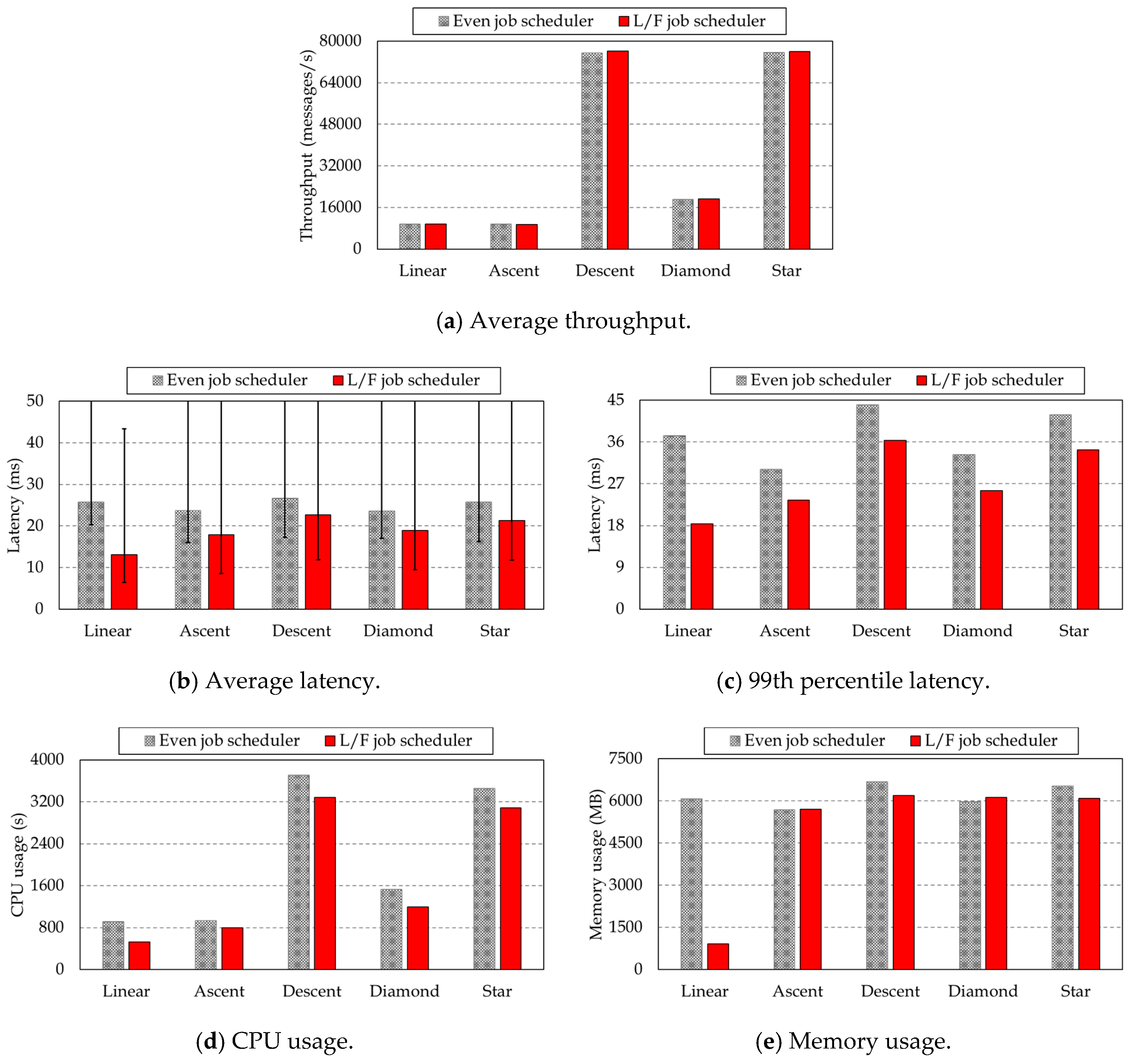

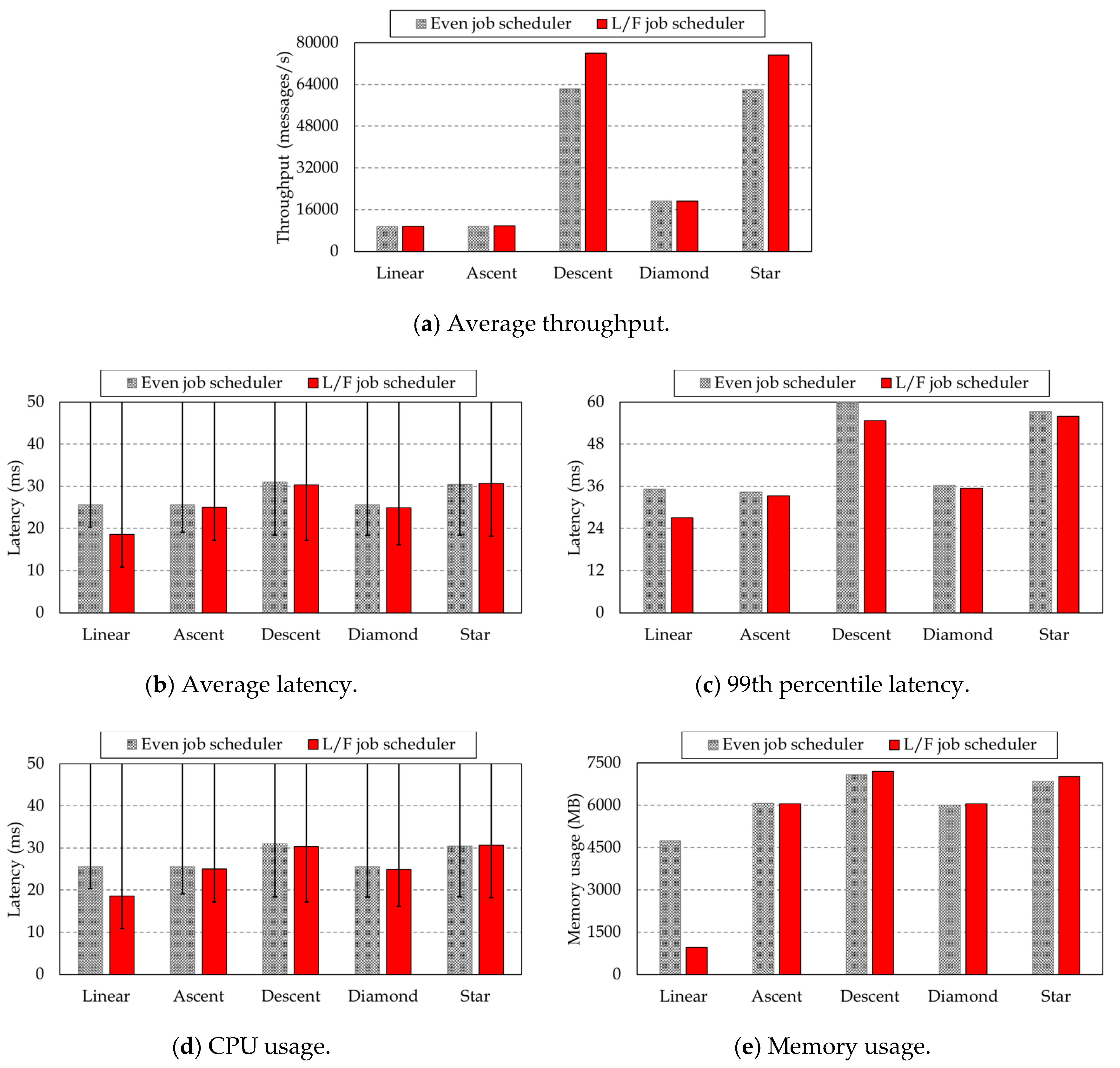

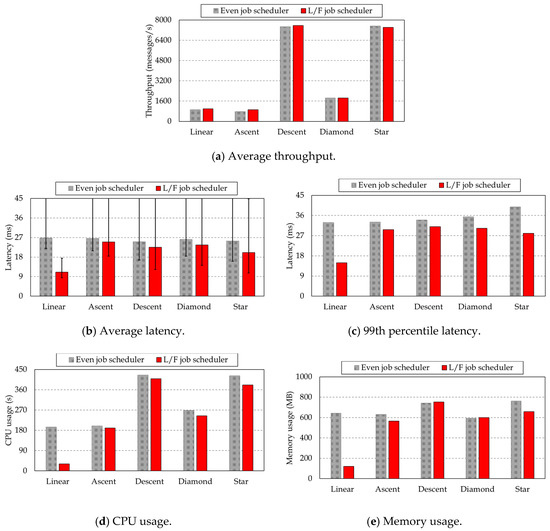

4.4. Experiment on Performance with Non-LSP

In this section, we examine the processing performance of non-LSP applications, which partition streams without considering the locality. The non-LSP sends the same amount of messages to all downstreams because the upstream does not consider the locality. Therefore, non-LSP applications have more frequent network communication than LSP applications. Although the purpose of this paper is to improve the performance of LSP applications, we also need to confirm the performance of the L/F job scheduler under the frequent network communication of non-LSP applications. Figure 9 shows the processing performance of non-LSP applications in the low parallelism environment. In Figure 9a, both job schedulers still show the same throughput because of the low load. In Figure 9b, the L/F job scheduler improves the latency by 140.7% in , 6.233% in , 11.43% in , 11.14% in , and 26.25% in , compared to the even job scheduler. In addition, as shown in Figure 9c, the L/F job scheduler improves the by 120.1% in , 11.32% in , 9.514% in , 17.10% in , and 42.26% in . This is because the L/F job scheduler deploys contiguous tasks close to each other, even though the non-LSP application randomly distributes the stream. As shown in Figure 9d,e, the system resource usage of the L/F job scheduler is higher than that of LSP applications of Figure 8d,e, but it still shows significant performance improvement in non-LSP applications. The L/F job scheduler improves the CPU usage by up to 521.6% and the memory usage by up to 437.7%. In particular, as with the LSP application, the L/F job scheduler uses few system resources in . Since only one downstream works for each upstream, it can exhibit high performance regardless of the stream partitioning method.
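The effect of task placement under the two partitioning methods can be sketched as follows. This is a simplified model rather than Storm's code: the function names, the `placement` map (downstream task to process slot), and the slot names are hypothetical, and Shuffle grouping is approximated by a uniform random choice. Local-or-Shuffle grouping prefers downstream tasks in the sender's own process and falls back to shuffling when there are none.

```python
import random


def shuffle_grouping(downstreams, rng):
    """Non-LSP: pick any downstream task at random, ignoring placement."""
    return rng.choice(downstreams)


def local_or_shuffle_grouping(upstream_slot, downstreams, placement, rng):
    """LSP: prefer downstream tasks in the sender's process, else shuffle."""
    local = [t for t in downstreams if placement[t] == upstream_slot]
    return rng.choice(local) if local else rng.choice(downstreams)


def expected_local_fraction(upstream_slot, downstreams, placement, locality_aware):
    """Expected share of messages that stay inside the sender's process."""
    local = sum(1 for t in downstreams if placement[t] == upstream_slot)
    if locality_aware and local > 0:
        return 1.0
    return local / len(downstreams)


if __name__ == "__main__":
    rng = random.Random(0)
    downstreams = ["d1", "d2", "d3", "d4"]
    placement = {"d1": "slot-a", "d2": "slot-b", "d3": "slot-c", "d4": "slot-d"}
    # Upstream co-located with d1: LSP always stays local, shuffle only sometimes.
    print(local_or_shuffle_grouping("slot-a", downstreams, placement, rng))
    print(expected_local_fraction("slot-a", downstreams, placement, True))
    print(expected_local_fraction("slot-a", downstreams, placement, False))
    # Upstream with no co-located downstream: nothing stays local either way.
    print(expected_local_fraction("slot-e", downstreams, placement, False))
```

Under this model, even a non-LSP application keeps some traffic in-process whenever the scheduler co-locates an upstream with at least one of its downstreams, which is the situation the L/F job scheduler creates.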

Figure 9.

Performance of even and L/F job schedulers with non-LSP applications in low parallelism.

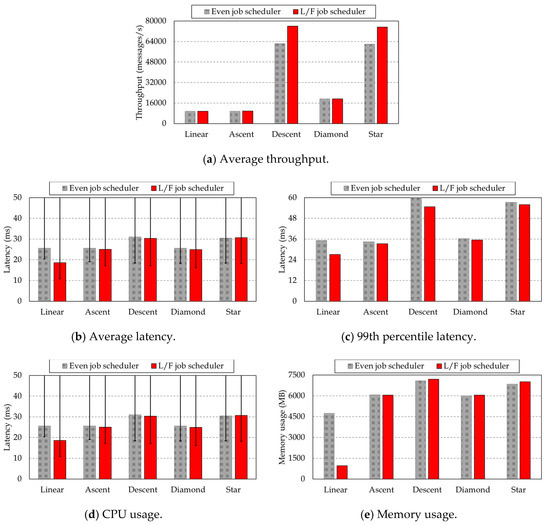

Finally, Figure 10 shows the experimental results of the non-LSP applications in the high parallelism environment. In Figure 10a, the throughput of the two schedulers shows different results from the previous experiments. In , , and , the throughput of the two schedulers is very similar, within 1%. However, the L/F job scheduler shows a throughput improvement of 21.95% in and 21.42% in . This is because and generate significantly more messages than the other configurations, so the even job scheduler incurs a considerable load. In the comparison of latency in Figure 10b, the difference between the two schedulers is up to 38.03%. In addition, in Figure 10c, the L/F job scheduler improves the by up to 30.46% compared to the even job scheduler. As a result, the difference is slightly reduced compared to the LSP applications, but in most cases, the L/F job scheduler shows lower latency than the even job scheduler. In Figure 10d,e, the L/F job scheduler reduces the CPU usage by up to 53.90% and the memory usage by up to 390.4% in , and uses similar resources to the even job scheduler in the other workloads. That is, the L/F job scheduler shows high processing performance while using a similar amount of system resources except for . Therefore, we can say that the proposed L/F job scheduler schedules jobs more efficiently than the existing even job scheduler even in the non-LSP applications.

Figure 10.

Performance of even and L/F job schedulers with non-LSP applications in high parallelism.

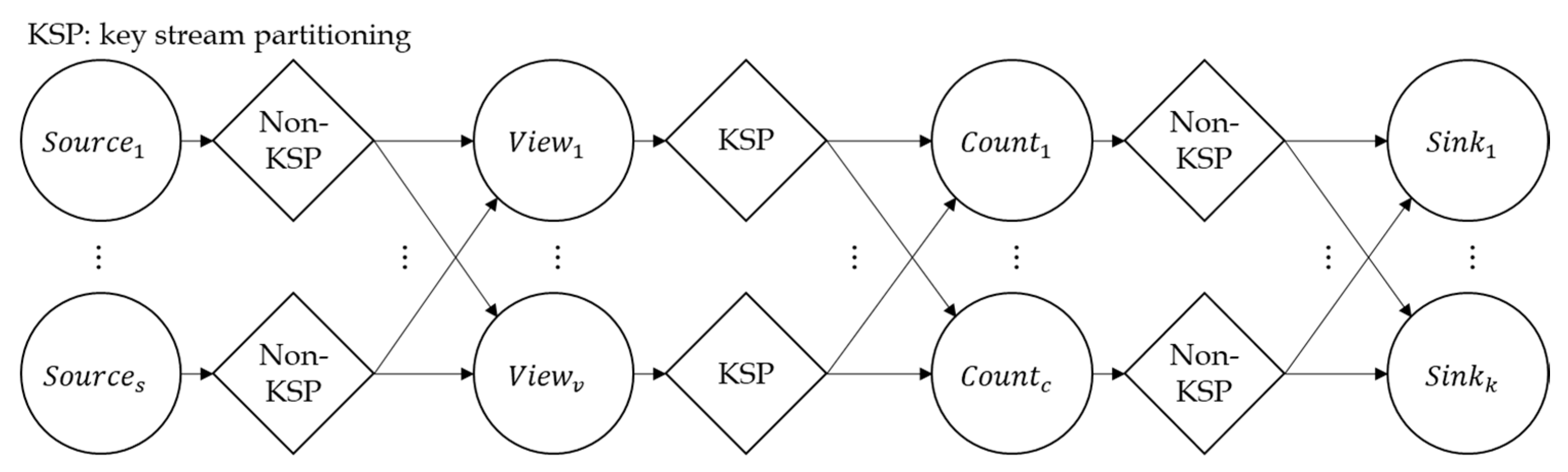

4.5. Experiment on Performance with Real-World Workloads

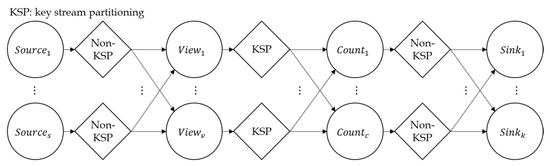

In this section, we evaluate the performance of the L/F job scheduler in two general and practical real-world workloads. The workloads are based on a page view count application [13,14] that aggregates the number of users who have visited Web pages; it is a common case that uses key stream partitioning and non-key stream partitioning together. Figure 11 shows the architecture of the page view count application, which is divided into two workloads according to the aggregation method of the third PO. In the figure, the application consists of four POs, and we replicate each PO into four POIs for the experiment. Each PO operates as follows [13,14]:

Figure 11.

Real-world workload application: page view count.

- (1) emits a pseudo real-world data stream according to the distribution of Web page click streams used in the realistic application of DSPE [13] and the real-world workload of the DSPE benchmark tool [14]. Each message of the Web page click stream consists of {page, http status, user zip code, user id}. First, the page field takes one of three Web page addresses with a probability distribution of 70%, 20%, and 10%. Second, the http status field follows a probability distribution of 95% and 5% for successful and failure responses, respectively. Third, the two user zip codes are equally likely. Fourth, the 100 user ids are equally likely. A click message is generated every 1 ms.

- (2) extracts the fields needed for aggregation from the click stream of the Web page. Since the application aggregates the number of users per Web page, it extracts the page field and the user id field and emits them to the next PO. Since is a stateless PO, it receives messages from using LSP, a non-key stream partitioning method.

- (3) aggregates the number of users who have visited the Web page. We divide it into two workloads according to the aggregation method. First, for the simple page view count, increases the number of visitors of the Web page visited by the user, and emits the corresponding page and its number of visitors to the next PO. Second, for the unique page view count, aggregates the number of unique users who visited each page during a certain period of time, and emits all pages and their numbers of visitors to the next PO. Unlike the simple page view count, the unique page view count uses a disjoint rolling window to aggregate the stream over a period of time. Since is a stateful PO, it uses a key stream partitioning method.

- (4) records the received number of Web page visitors as a fully processed message. Since is a stateless PO, it uses LSP, a non-key stream partitioning method.
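The four POs above can be sketched in plain Python as follows. This is an illustrative model, not the benchmark code of [13,14]: all function names, the page addresses, the zip codes, the user ids, and the message layout are hypothetical. The 70/20/10 and 95/5 distributions, the 1 ms interval, and the disjoint window come from the description above, and the 3 s window length follows the emission interval reported in the experiment.

```python
import random
from collections import Counter, defaultdict

PAGES = ["/page-a", "/page-b", "/page-c"]    # hypothetical addresses
PAGE_WEIGHTS = [0.7, 0.2, 0.1]
STATUSES = ["success", "failure"]
STATUS_WEIGHTS = [0.95, 0.05]
ZIP_CODES = ["zip-1", "zip-2"]               # hypothetical values
USER_IDS = [f"user-{i}" for i in range(100)]


def click_stream(n, seed=0):
    """PO (1): generate n click messages, one per millisecond."""
    rng = random.Random(seed)
    for i in range(n):
        yield {
            "ts_ms": i,
            "page": rng.choices(PAGES, PAGE_WEIGHTS)[0],
            "status": rng.choices(STATUSES, STATUS_WEIGHTS)[0],
            "zip": rng.choice(ZIP_CODES),
            "user": rng.choice(USER_IDS),
        }


def project(click):
    """PO (2): keep only the fields needed for aggregation."""
    return click["page"], click["user"]


def simple_page_view_count(clicks):
    """PO (3), simple variant: emit (page, running count) per click."""
    counts = Counter()
    for click in clicks:
        page, _ = project(click)
        counts[page] += 1
        yield page, counts[page]


def unique_page_view_count(clicks, window_ms=3000):
    """PO (3), unique variant: emit {page: unique users} per disjoint window."""
    current, users = None, defaultdict(set)
    for click in clicks:
        window = click["ts_ms"] // window_ms
        if current is not None and window != current and users:
            yield {page: len(ids) for page, ids in users.items()}
            users = defaultdict(set)
        current = window
        page, user = project(click)
        users[page].add(user)
    if users:
        yield {page: len(ids) for page, ids in users.items()}


if __name__ == "__main__":
    # PO (4) is modelled by simply printing what it would record.
    for result in unique_page_view_count(click_stream(7000)):
        print(result)
```

The simple variant emits one message per click, while the unique variant emits one message per window, which is why the unique page view count exchanges far fewer messages between the last two POs.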

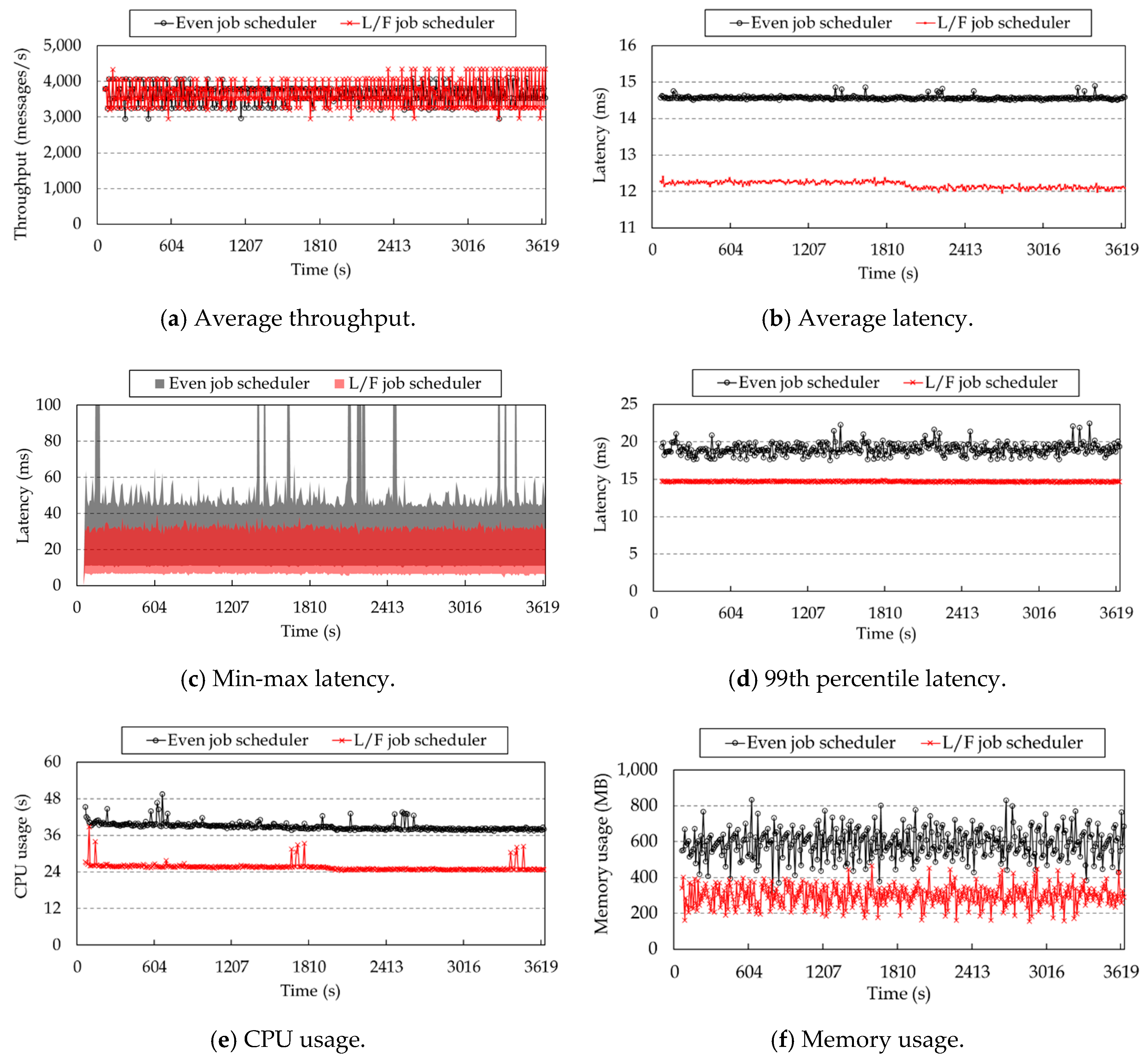

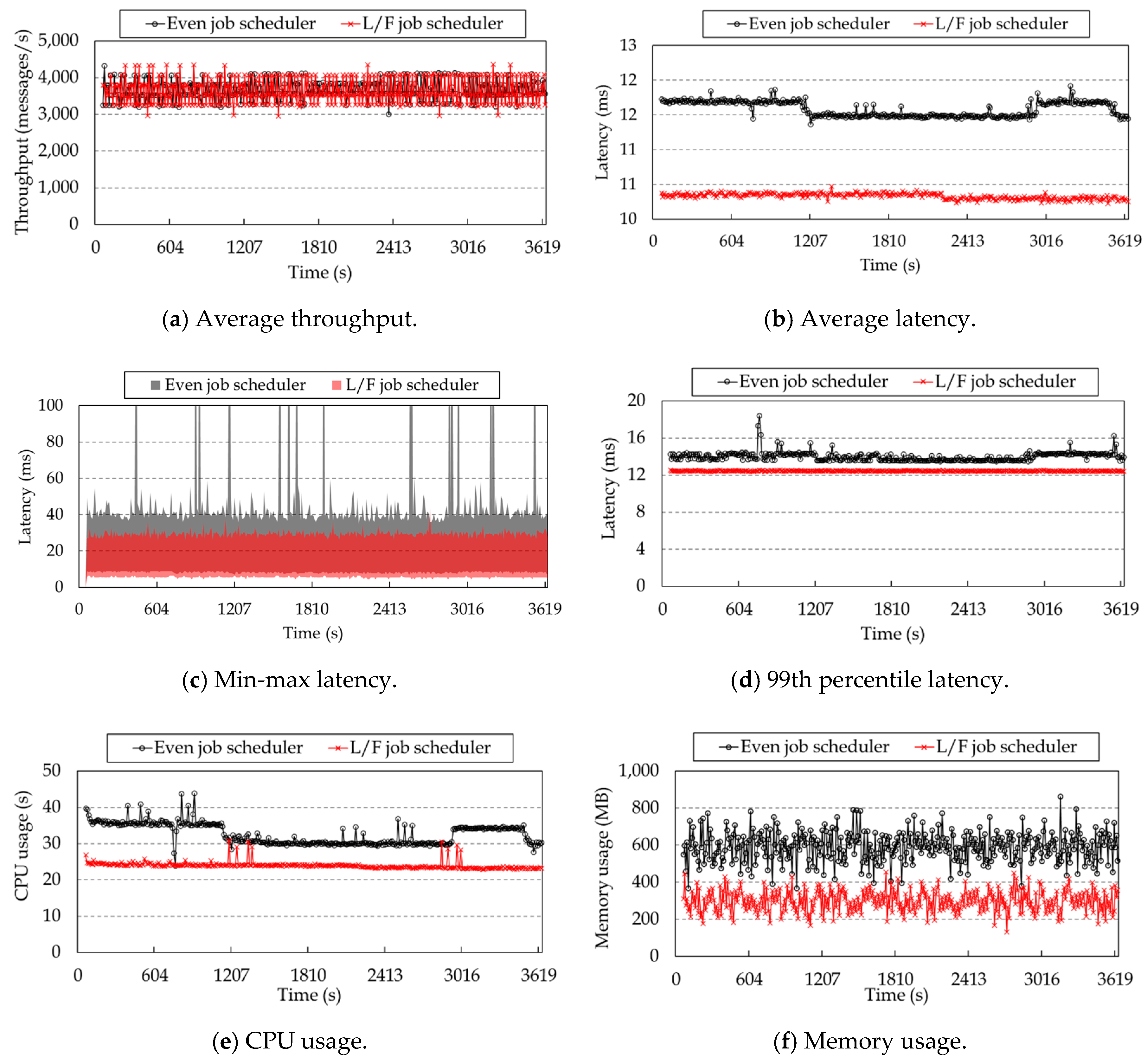

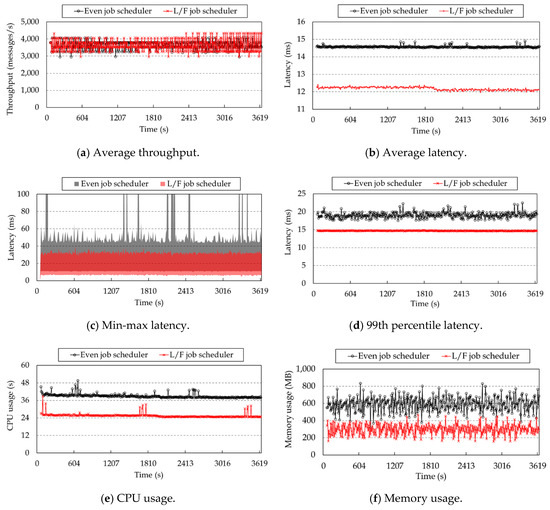

First, we compare the performance of the even and L/F job schedulers in the simple page view count workload. The evaluation metrics are measured every 10 s for 1 h. Figure 12 shows the experimental results of the two schedulers. In Figure 12a, the throughput of the two schedulers shows little difference. The throughput of the L/F job scheduler is slightly higher than that of the even job scheduler, but the difference is negligible at about 1%. In Figure 12b, the latency of the two schedulers shows a greater difference than the throughput. The latency of the even job scheduler is 14.57 milliseconds, and that of the L/F job scheduler is 12.18 milliseconds, an improvement of 19.58%. This difference in latency arises because the L/F job scheduler greatly reduces network communication. In Figure 12c, the min-max latencies of the two schedulers are significantly different from each other. The min-max latency of the even job scheduler not only shows quite a large deviation, but also has a large number of peaks in the hundreds of milliseconds, while the L/F job scheduler shows a stable low latency. This trend is more clearly distinguished from the in Figure 12d. The of the even job scheduler shows a lot of fluctuation at about 20 milliseconds, but that of the L/F job scheduler shows a stable trend at about 15 milliseconds. The L/F job scheduler can also greatly decrease system resource usage by reducing the number of network communications. Figure 12e,f show the CPU usage and the memory usage of the two schedulers, respectively. The CPU usage of the even job scheduler is 38.94 s on average, and that of the L/F job scheduler is 25.56 s on average, a reduction of 52.32%. The memory usage of the even job scheduler is 591.2 MB on average, and that of the L/F job scheduler is 298.5 MB on average, an improvement of 98.03%.
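The percentages reported in this section appear to be computed relative to the L/F value rather than the even value. This reading is inferred from the reported averages and is not stated in the text; the function name below is hypothetical, and the metric labels follow the summary in the conclusions (latency, CPU usage, memory usage). A short sketch that recomputes the three percentages of this workload under that assumption:

```python
def relative_improvement(even_value, lf_value):
    """Gap between the two schedulers as a percentage of the L/F value."""
    return (even_value - lf_value) / lf_value * 100.0


# (metric, even average, L/F average, percentage reported in the text)
reported = [
    ("latency (ms)", 14.57, 12.18, 19.58),
    ("CPU usage (s)", 38.94, 25.56, 52.32),
    ("memory usage (MB)", 591.2, 298.5, 98.03),
]

if __name__ == "__main__":
    for metric, even_value, lf_value, stated in reported:
        computed = relative_improvement(even_value, lf_value)
        print(f"{metric}: computed {computed:.2f}%, reported {stated:.2f}%")
```

The recomputed values agree with the reported ones to within about 0.05 percentage points, which is plausible given that the averages are rounded. Under this reading, a value above 100%, as seen in the synthetic workloads, means that the even job scheduler's measurement is more than twice that of the L/F job scheduler.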

Figure 12.

Performance of even and L/F job schedulers on simple page view count.

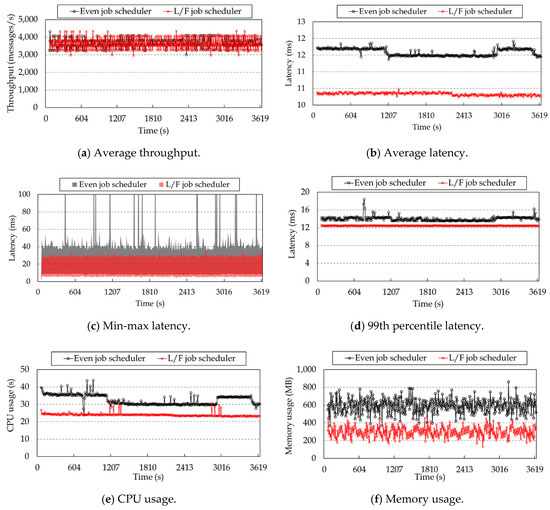

Second, Figure 13 shows the experimental results in the unique page view count workload. Since the unique page view count aggregates over a certain period of time, unlike the simple page view count, the amount of messages that sends to is very small. That is, the message communication between the two POs is not frequent regardless of the job scheduler. In the experiment, emits the aggregate result every 3 s. In Figure 13a, the throughput difference between the two schedulers is negligible. In Figure 13b, the latency of the two schedulers is similar to that of Figure 12b. The latency of the even job scheduler is 11.59 milliseconds, and that of the L/F job scheduler is 10.33 milliseconds, an improvement of 12.13%. In addition, although the unique page view count has fewer network communications than the simple page view count, the latency of the L/F job scheduler in Figure 13c is still much more stable than that of the even job scheduler. Although the average latency of the two schedulers is similar, the latency deviation of the even job scheduler is very large, and many outliers of hundreds of milliseconds occur. In Figure 13d, the of the even job scheduler shows a lot of fluctuation at about 14 milliseconds, but that of the L/F job scheduler is very stable at about 12 milliseconds. The system resource usage of the two schedulers still shows a big difference. In Figure 13e, the CPU usage of the even job scheduler is about 32.62 s, and that of the L/F job scheduler is about 23.97 s, a 36.10% reduction in CPU usage. In Figure 13f, the memory usage of the even job scheduler is 592.5 MB, and that of the L/F job scheduler is 299.1 MB, a 98.12% reduction in memory usage. Through these experiments, we can say that the proposed L/F job scheduler not only works well in the real-world workloads, but also presents superior performance compared to the existing even job scheduler.

Figure 13.

Performance of even and L/F job schedulers on unique page view count.

5. Related Work

Many researchers have studied scheduling methods in the field of cloud computing, focusing on distributed processing techniques [15,16,17]. Their main interests are performance improvement, load balancing, and resource utilization through an improved scheduler. In particular, we pay attention to the scheduling methods of the distributed processing techniques mainly used in the cloud, and this section discusses studies that introduce locality to job scheduling. First, Apache Hadoop [18,19], a representative distributed processing engine, stores and processes a large amount of data in a distributed environment, so the tasks deployed in Hadoop incur large communication costs to send and receive those data. Many research efforts introduce locality into the job scheduling of Hadoop to reduce these network costs [20,21,22,23]. Notably, Zaharia et al. [20] propose delay scheduling, in which a job that cannot launch a task on a node holding its input data waits for a short period of time to achieve locality, and which uses max-min fair sharing to ensure that the running jobs use resources evenly for fairness. To reduce the latency caused by delay scheduling, Jin et al. [21] propose BAR (Balance-Reduce), which reduces the job completion time by partitioning jobs first and gradually tuning them based on the locality. Guo et al. [22] consider the data locality in MapReduce and deploy each task on a data node that needs a map task so as to increase the proportion of map tasks with data locality. Palanisamy et al. [23] propose Purlieus, which places the map tasks close to the required data and the reduce tasks close to the map tasks to improve the MapReduce performance in the cloud. As these examples show, Hadoop achieves high performance by considering the locality.

In DSPEs, there have been a few attempts at improving job scheduling to increase the processing performance of distributed stream applications [24,25,26,27,28,29]. Aniello et al. [24] are the pioneers of such job schedulers, and they divide the job scheduling in DSPEs into a static offline phase and a dynamic online phase. The subsequent studies mainly focus on dynamic scheduling, and their common point is that tasks are dynamically deployed by continuously monitoring the communication patterns and resource usage of a job. T-Storm, proposed by Xu et al. [25], is an empirical and reactive approach based on resource usage information hand-tuned by users. Eskandari et al. [26] propose P-Scheduler, which calculates the required number of nodes for a job by considering the data transmission rate and traffic pattern, and consolidates the clusters according to the estimated load. D-Storm, proposed by Liu et al. [27], monitors resource usage and communication patterns and coordinates jobs at runtime. These dynamic approaches monitor the running job and adjust it when the stream pattern changes. Thus, in addition to the repeated cost of monitoring the job, adjusting it takes a long time. In fact, the rebalancing operation that adjusts a job in Storm takes from tens to hundreds of seconds, and the stream cannot be processed during that period. In particular, dynamic methods may suffer from a cold start problem, so their cost can change considerably depending on the initial static scheduling. The proposed L/F job scheduler already shows high performance in the static phase, and it is an orthogonal method that can be used together with dynamic scheduling methods. Some studies have focused on static schedulers. Notably, R-Storm, proposed by Peng et al. [28], is a resource-aware job scheduler that maximizes resource utilization and can satisfy soft and hard resource constraints. Furthermore, Muhammad et al. [29] propose TOP-Storm (Topology-based resource-aware scheduler for Storm) to optimize the resource usage of Storm in heterogeneous clusters. Existing job schedulers have commonly tried to reduce cluster resource usage and inter-node network communication. The proposed L/F job scheduler likewise introduces locality into the job scheduler to reduce the network communication of LSP and non-LSP applications and thereby improve the performance of DSPE applications, while at the same time preserving fairness. As a result, it shows high processing performance and low resource usage in various application configurations and workloads.

Apache Storm [1,2,3,4], a representative DSPE, provides not only an even job scheduler but also an isolation scheduler and a resource-aware scheduler. The isolation scheduler easily and securely shares resources by isolating only some nodes for certain jobs. The resource-aware scheduler precisely allocates resources by limiting memory and CPU to tasks, processes, and nodes, respectively. Since these are used for resource management rather than processing performance, they are far from the focus of this paper. The default job scheduler provided by another DSPE, Apache Flink [6,7], organizes the successive tasks into a pipeline and assigns them to the task slot (thread). The job scheduler of Flink is similar to the L/F job scheduler proposed in this paper, but no reports have been published about applying the locality and the fairness.

6. Conclusions

In this paper, we have proposed the L/F job scheduler, which significantly improves the processing performance of DSPE applications by considering both locality and fairness in the job scheduling of a DSPE. The existing even job scheduler only considers fairness, so it deploys the tasks of a job to all process slots as evenly as possible. This allows the tasks to use the cluster resources fairly, but it deploys the tasks far away from each other regardless of their relationships, resulting in expensive network communication. To solve these problems, the L/F job scheduler introduces locality to improve the processing performance of DSPE applications, and it also introduces fairness to assure fault tolerance and reduce resource contention among the tasks as much as the even job scheduler does. In other words, we guarantee locality by increasing the cohesion of contiguous tasks and fairness by decreasing the coupling of parallel tasks. We implement the L/F job scheduler in Apache Storm and compare it with the even job scheduler using extensive synthetic workloads and real-world workloads. Experimental results show that the L/F job scheduler reduces the latency and the system resource usage in all configurations while maintaining the same throughput as the even job scheduler. In particular, it improves the latency by up to 139.2%, the CPU usage by up to 553.6%, and the memory usage by up to 664.0% for LSP applications, and the latency by up to 140.7%, the CPU usage by up to 521.6%, and the memory usage by up to 437.7% even for non-LSP applications. Moreover, in the two real-world workloads, the L/F job scheduler reduces the latency by up to 19.58%, the CPU usage by up to 52.32%, and the memory usage by up to 98.12% compared to the even job scheduler. In conclusion, we believe that the proposed L/F job scheduler is a very efficient job scheduler that maximizes the processing performance of DSPE applications by making good use of the resources of the cluster.

The proposed L/F job scheduler has greatly improved the performance by efficiently introducing locality to the even job scheduler. Similarly, R-Storm [28] and TOP-Storm [29] improved performance by considering the resource usage of DSPE applications. In the future, we will study a job scheduler that considers locality and resource usage together and improve the processing performance with high scalability in a large cluster through an extensive comparison with recent schedulers [28,29].

Author Contributions

S.S. and Y.-S.M. conceived and designed the experiments; S.S. performed the experiments; S.S. and Y.-S.M. analyzed the experimental results; S.S. and Y.-S.M. wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Korea Electric Power Corporation (Grant number:R18XA05) and National Research Foundation of Korea (No. NRF-2019R1A2C1085311).

Acknowledgments

This research was partly supported by Korea Electric Power Corporation (Grant number:R18XA05). This research was also partly supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (No. NRF-2019R1A2C1085311).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Apache Storm. Available online: http://storm.apache.org/ (accessed on 1 September 2020).

- Toshniwal, A.; Donham, J.; Bhagat, N.; Mittal, S.; Ryaboy, D.; Taneja, S.; Shukla, A.; Ramasamy, K.; Patel, J.M.; Kulkarni, S.; et al. Storm@twitter. In Proceedings of the 2014 ACM SIGMOD International Conference on Management of Data—SIGMOD ’14; Association for Computing Machinery (ACM), ACM SIGMOD, Snowbird, UT, USA, 22–27 June 2014; pp. 147–156. [Google Scholar]

- Goetz, P.; O’Neill, B. Storm Blueprints: Patterns for Distributed Real-Time Computation; Packt Publishing Ltd.: Birmingham, UK, 2014. [Google Scholar]

- Iqbal, M.H.; Soomro, T.R. Big Data Analysis: Apache Storm Perspective. Int. J. Comput. Trends Technol. 2015, 19, 9–14.

- Shoro, A.G.; Soomro, T.R. Big Data Analysis: Apache Spark Perspective. Glob. J. Comput. Sci. Technol. 2015, 15, 7–14.

- Carbone, P.; Ewen, S.; Haridi, S. Apache Flink: Stream and Batch Processing in a Single Engine. Bull. IEEE Comput. Soc. Tech. Comm. Data Eng. 2015, 36, 28–38.

- Deshpande, T. Learning Apache Flink; Packt Publishing Ltd.: Birmingham, UK, 2017.

- Noghabi, S.A.; Paramasivam, K.; Pan, Y.; Ramesh, N.; Bringhurst, J.; Gupta, I.; Campbell, R.H. Samza: Stateful Scalable Stream Processing at LinkedIn. Proc. VLDB Endow. 2017, 10, 1634–1645.

- Apache S4. Available online: http://incubator.apache.org/projects/s4.html (accessed on 1 September 2020).

- Nasir, M.A.U.; Morales, G.D.F.; Garcia-Soriano, D.; Kourtellis, N.; Serafini, M. The Power of Both Choices: Practical Load Balancing for Distributed Stream Processing Engines. In Proceedings of the 2015 IEEE 31st International Conference on Data Engineering, Seoul, Korea, 13–17 April 2015; pp. 137–148.

- Wang, C.; Meng, X.; Guo, Q.; Weng, Z.; Yang, C. Automating Characterization Deployment in Distributed Data Stream Management Systems. IEEE Trans. Knowl. Data Eng. 2017, 29, 2669–2681.

- Apache Storm Scheduler. Available online: https://storm.apache.org/releases/1.2.3/Storm-Scheduler.html (accessed on 1 September 2020).

- Apache Spark Git Repository. Available online: https://github.com/apache/spark (accessed on 1 September 2020).

- Storm Benchmark Git Repository. Available online: https://github.com/intel-hadoop/storm-benchmark (accessed on 1 September 2020).

- Chen, C.-L.; Chiang, M.-L.; Lin, C.-B. The High Performance of a Task Scheduling Algorithm Using Reference Queues for Cloud-Computing Data Centers. Electronics 2020, 9, 371.

- Souravlas, S. ProMo: A Probabilistic Model for Dynamic Load-Balanced Scheduling of Data Flows in Cloud Systems. Electronics 2019, 8, 990.

- Cascajo, A.; Singh, D.E.; Carretero, J. Performance-Aware Scheduling of Parallel Applications on Non-Dedicated Clusters. Electronics 2019, 8, 982.

- Shvachko, K.; Kuang, H.; Radia, S.; Chansler, R. The Hadoop Distributed File System. In Proceedings of the 2010 IEEE 26th Symposium on Mass Storage Systems and Technologies (MSST), Incline Village, NV, USA, 3–7 May 2010.

- Dean, J.; Ghemawat, S. MapReduce: A Flexible Data Processing Tool. Commun. ACM 2010, 54, 72–77.

- Zaharia, M.; Borthakur, D.; Sarma, J.S.; Elmeleegy, K.; Shenker, S.; Stoica, I. Delay Scheduling: A Simple Technique for Achieving Locality and Fairness in Cluster Scheduling. In Proceedings of the 5th European Conference on Computer Systems, Paris, France, 13–16 April 2010; pp. 265–278.

- Jin, J.; Luo, J.; Song, A.; Dong, F.; Xiong, R. BAR: An Efficient Data Locality Driven Task Scheduling Algorithm for Cloud Computing. In Proceedings of the 2011 11th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing, Newport Beach, CA, USA, 23–26 May 2011; pp. 295–304.

- Guo, Z.; Fox, G.; Zhou, M. Investigation of Data Locality in MapReduce. In Proceedings of the 2012 12th IEEE/ACM International Symposium on Cluster, Cloud and Grid Computing (CCGrid 2012), Ottawa, ON, Canada, 13–16 May 2012; pp. 419–426.

- Palanisamy, B.; Singh, A.; Liu, L.; Jain, B. Purlieus: Locality-aware Resource Allocation for MapReduce in a Cloud. In Proceedings of the 2011 International Conference for High Performance Computing, Networking, Storage and Analysis, Seattle, WA, USA, 12–18 November 2011; pp. 58:1–58:11.

- Aniello, L.; Baldoni, R.; Querzoni, L. Adaptive Online Scheduling in Storm. In Proceedings of the 7th ACM International Conference on Distributed Event-Based Systems, Arlington, TX, USA, 29 June–3 July 2013; pp. 207–218.

- Xu, J.; Chen, Z.; Tang, J.; Su, S. T-Storm: Traffic-Aware Online Scheduling in Storm. In Proceedings of the 2014 IEEE 34th International Conference on Distributed Computing Systems, Madrid, Spain, 30 June–3 July 2014; pp. 535–544.

- Eskandari, L.; Huang, Z.; Eyers, D. P-Scheduler: Adaptive Hierarchical Scheduling in Apache Storm. In Proceedings of the Australasian Computer Science Week Multiconference, Canberra, Australia, 2–5 February 2015; pp. 1–10.

- Liu, X.; Buyya, R. D-Storm: Dynamic Resource-Efficient Scheduling of Stream Processing Applications. In Proceedings of the 2017 IEEE 23rd International Conference on Parallel and Distributed Systems (ICPADS), Shenzhen, China, 15–17 December 2017; pp. 485–492.

- Peng, B.; Hosseini, M.; Hong, Z.; Farivar, R.; Campbell, R. R-Storm: Resource-Aware Scheduling in Storm. In Proceedings of the 16th Annual Middleware Conference, Vancouver, BC, Canada, December 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 149–151.

- Muhammad, A.; Aleem, M.; Islam, M.A. TOP-Storm: A topology-based resource-aware scheduler for Stream Processing Engine. Clust. Comput. 2020, 1–15.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).