Exploring the Effects of Scale and Color Differences on Users’ Perception for Everyday Mixed Reality (MR) Experience: Toward Comparative Analysis Using MR Devices

Abstract

1. Introduction

2. Related Work

3. Experiment 1: Scale Perception

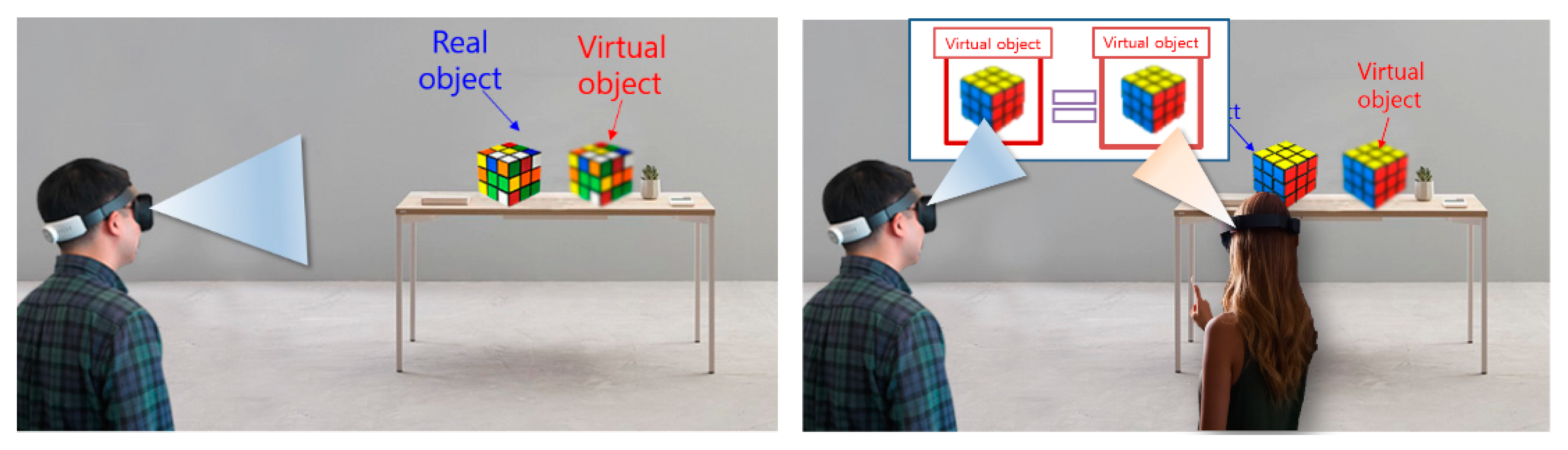

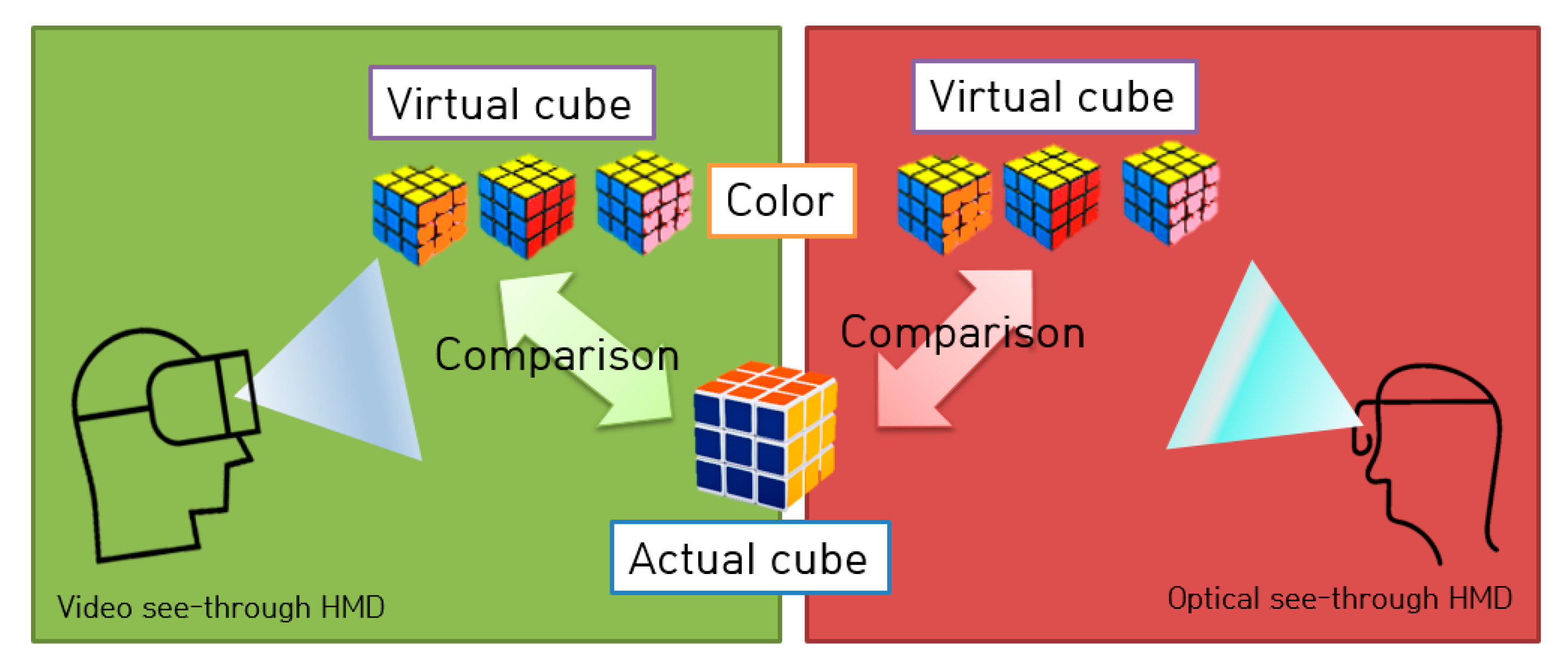

3.1. Overview of Experimental Design

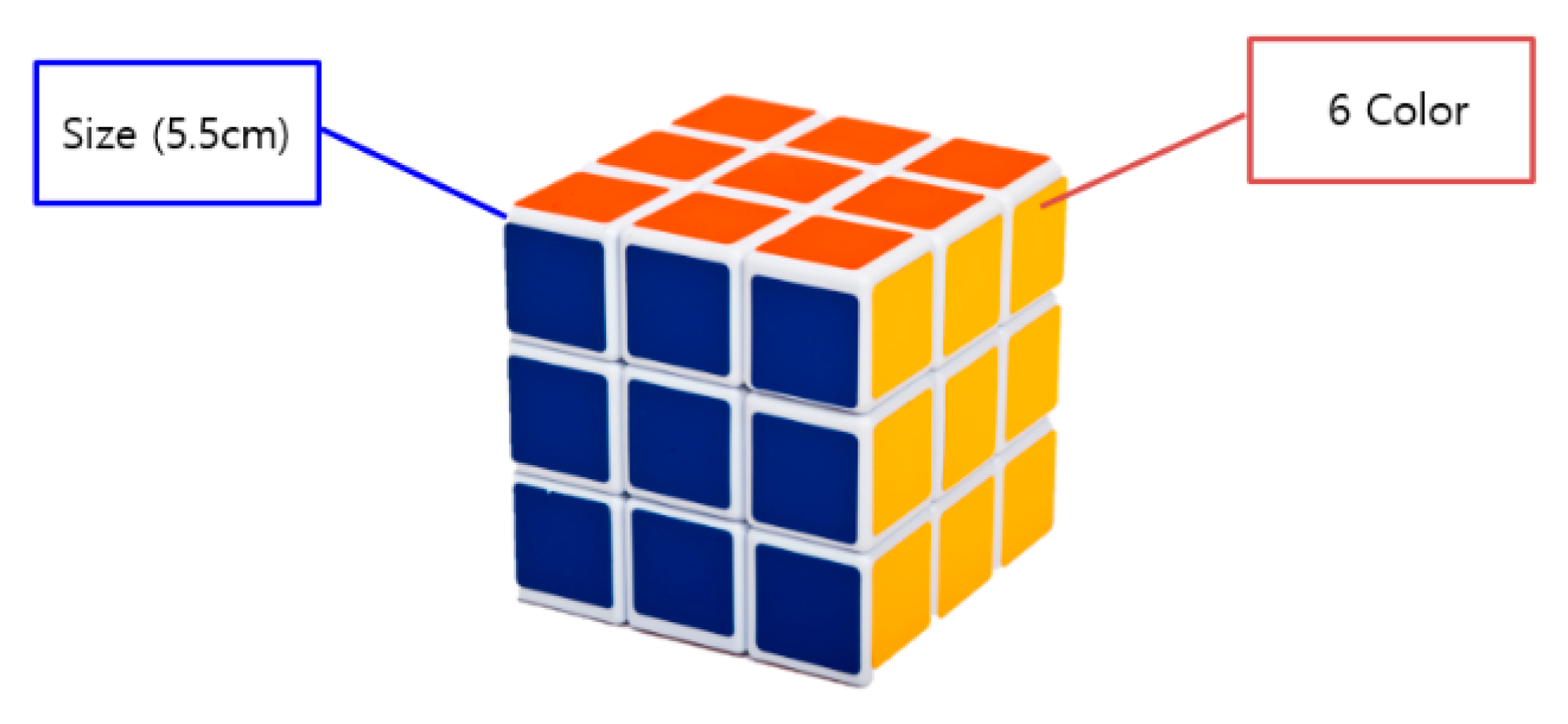

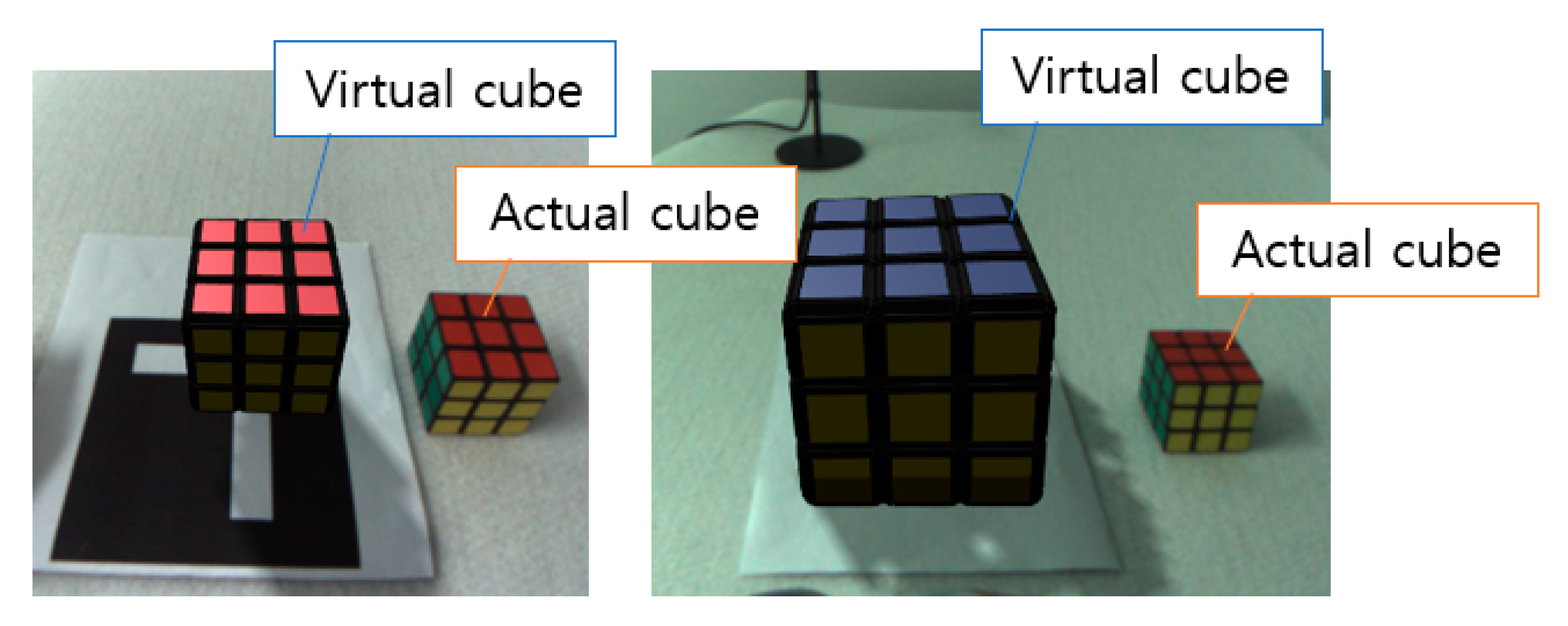

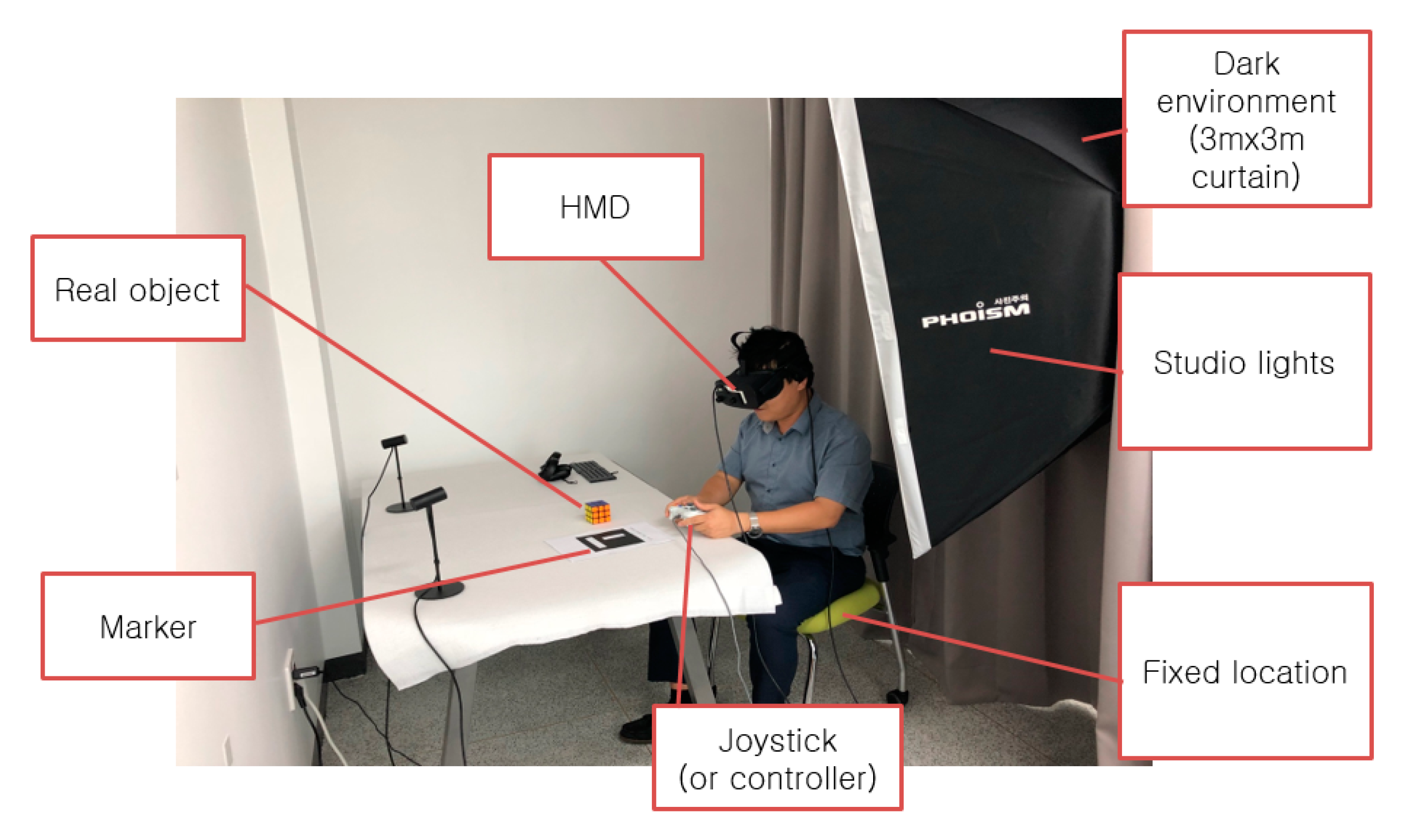

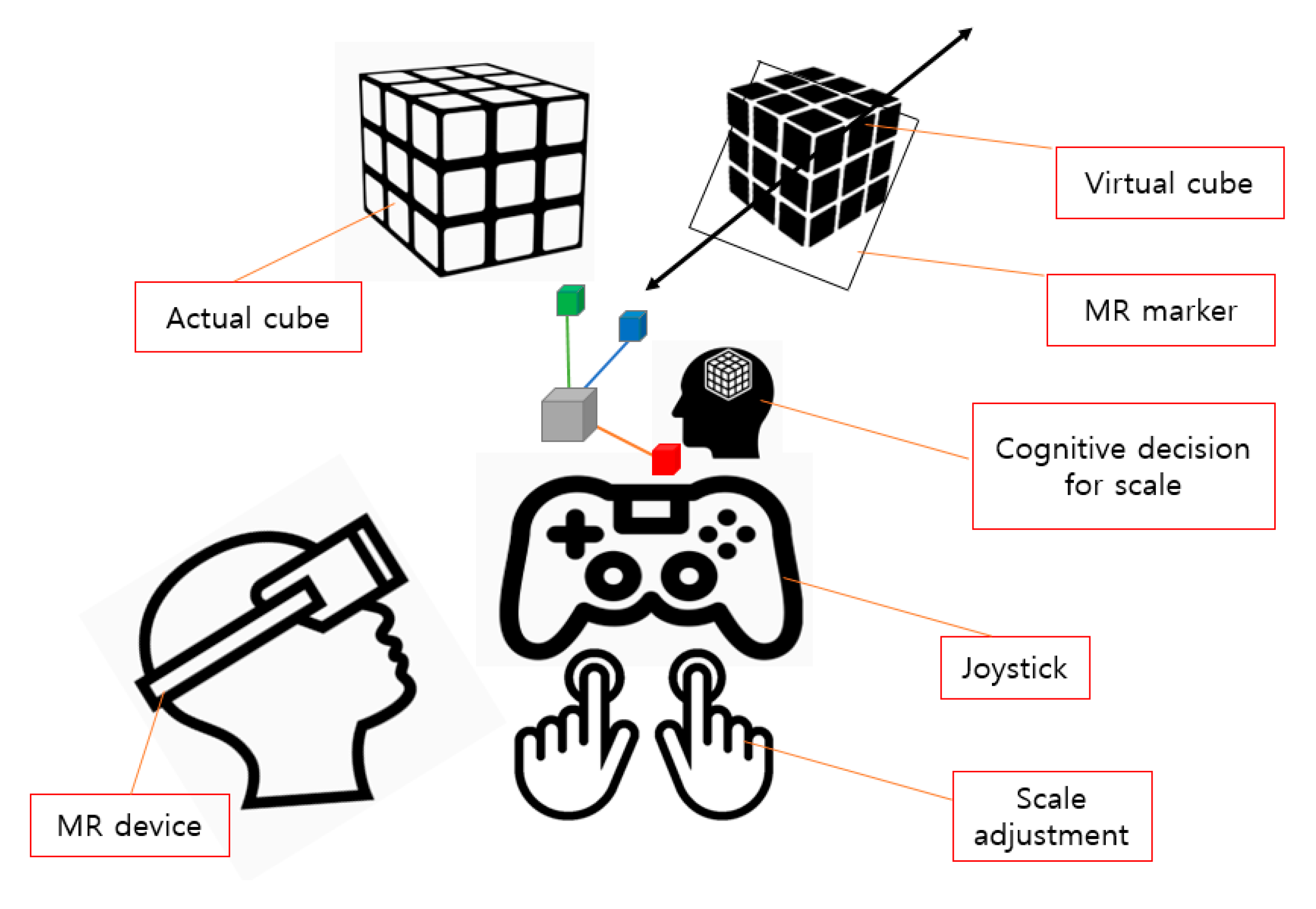

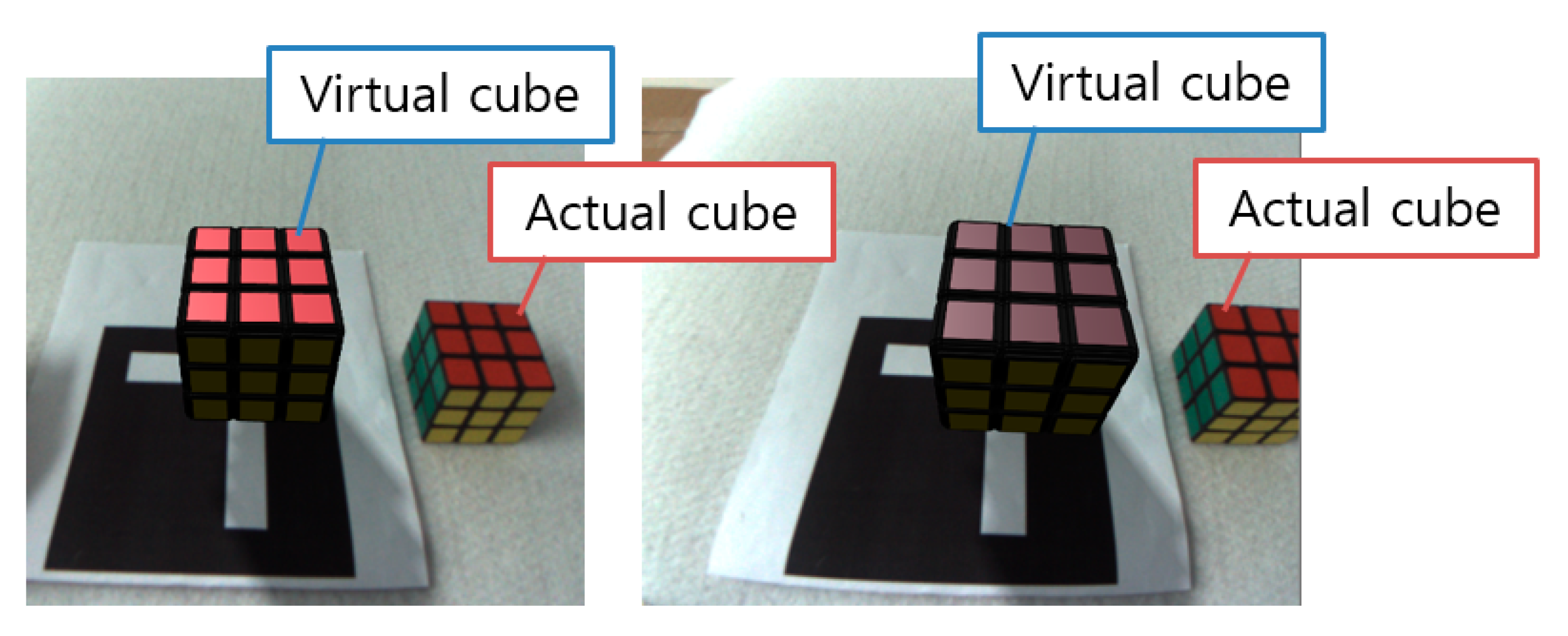

3.2. Experimental Set-Up for Scale Perception

3.3. Experimental Task and Procedure

3.4. Results and Discussion

4. Experiment 2: Color Perception

4.1. Overview of the Experimental Design

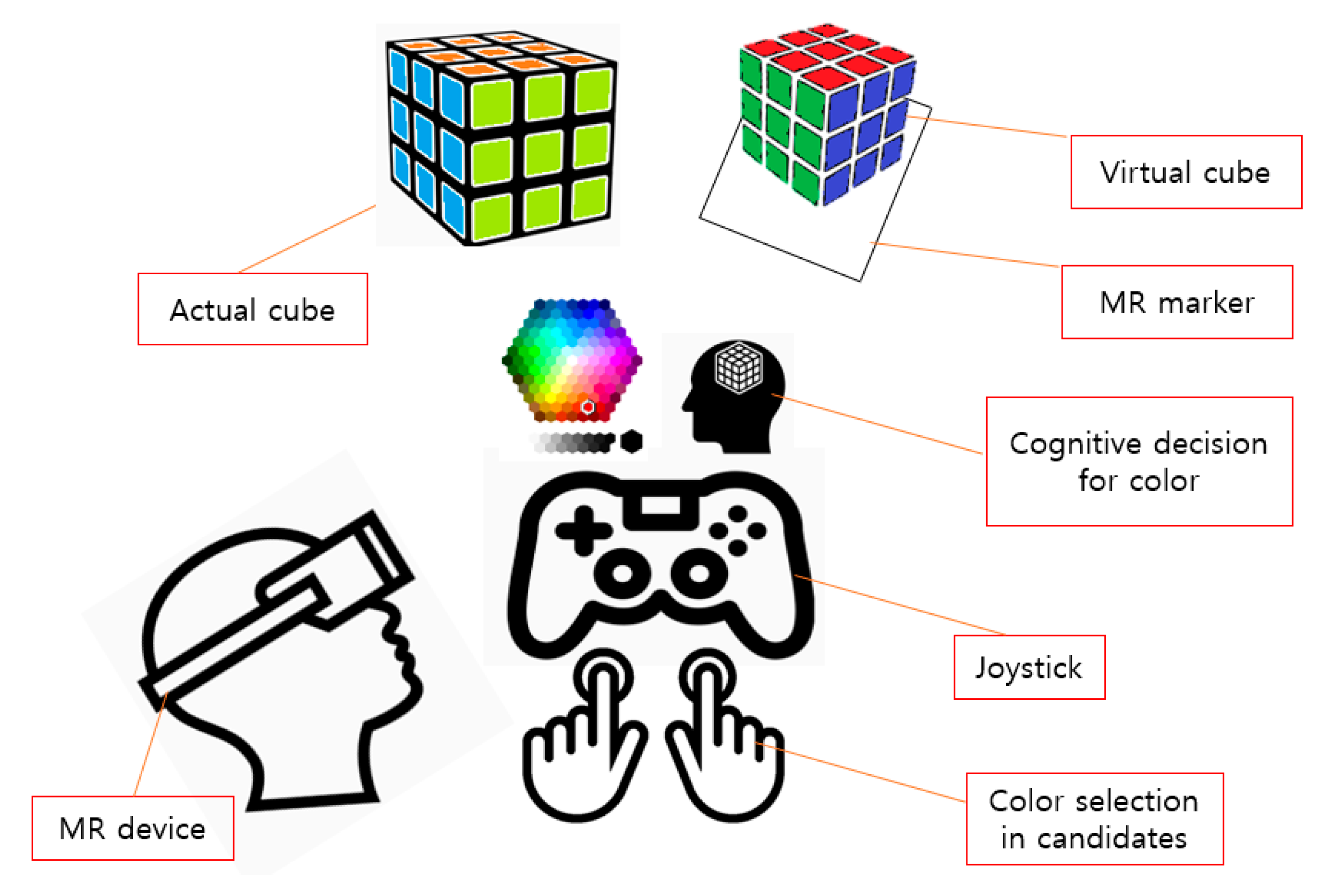

4.2. Experimental Set-Up for Color Perception

4.3. Experimental Task and Procedure

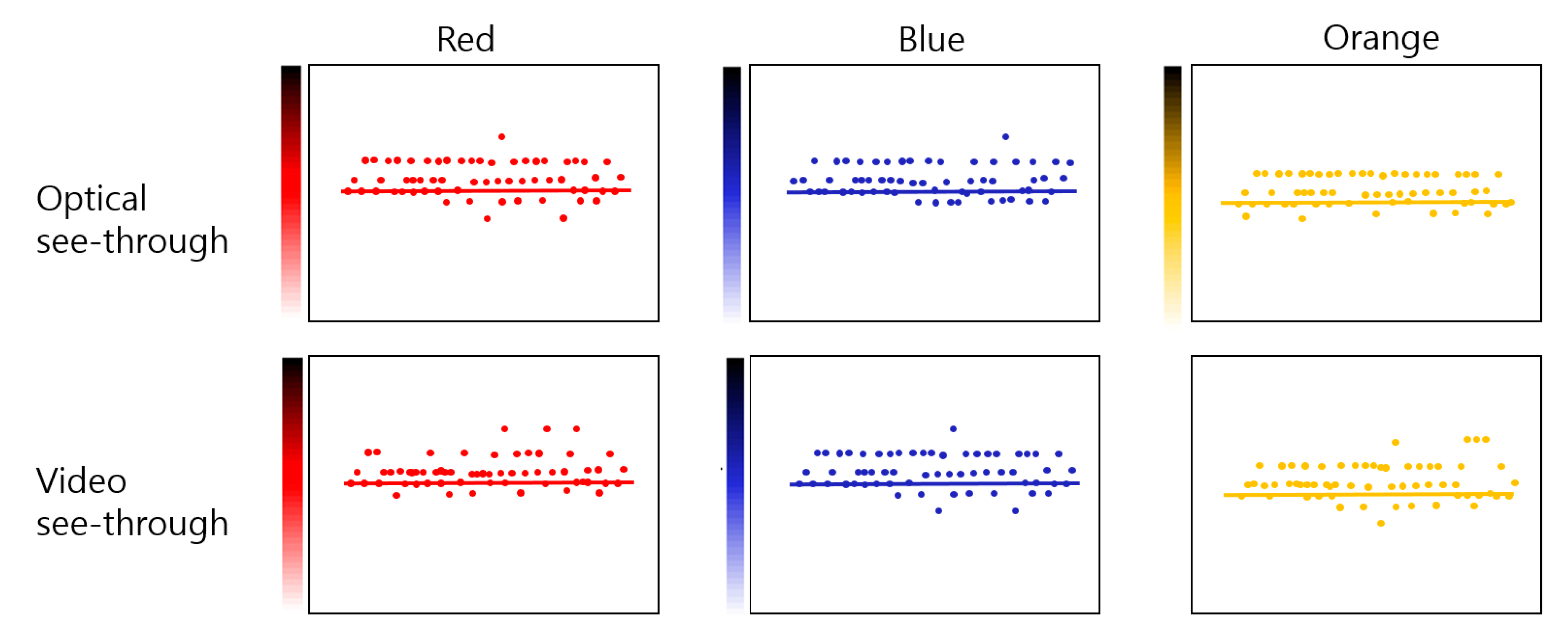

4.4. Results and Discussion

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Category | Input (Specifications of MR Devices) | Perception |
|---|---|---|
| Device Characteristics | Display type (video or optical) | Scale, color, naturalness |
| | Resolution | Scale, naturalness |
| | Aspect ratio | Scale, readability |
| | Brightness | Color, visibility, readability |
| | Contrast | Color, visibility, readability |
| | FOV (viewing angle) | Scale, visibility |
| | Refresh rate | Naturalness, readability |
| | Response time (tracking) | Naturalness, readability |
| User’s Surrounding Environment | Light condition | Color, visibility |
| | Occlusion | Visibility |
| Virtual Object Characteristics | Realistic representation | Naturalness, readability |
| | Texture quality | Naturalness, readability |
| | Distance between the user and the object | Scale |
| | Viewing direction | Visibility, readability |
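The table above is a many-to-many mapping from device, environment, and virtual-object inputs to perception factors. As a minimal sketch, it can be encoded as a Python dictionary and inverted, so that one can ask which inputs the table associates with a given factor such as color. The names `INPUT_TO_PERCEPTION`, `invert`, and `inputs_affecting` are hypothetical, introduced here only for illustration; the entries are copied from the table, with factor names capitalized for consistent lookup.

```python
from collections import defaultdict

# Hypothetical encoding of the table: (category, input) -> perception factors.
INPUT_TO_PERCEPTION = {
    ("Device characteristics", "Display type (video or optical)"): ["Scale", "Color", "Naturalness"],
    ("Device characteristics", "Resolution"): ["Scale", "Naturalness"],
    ("Device characteristics", "Aspect ratio"): ["Scale", "Readability"],
    ("Device characteristics", "Brightness"): ["Color", "Visibility", "Readability"],
    ("Device characteristics", "Contrast"): ["Color", "Visibility", "Readability"],
    ("Device characteristics", "FOV (viewing angle)"): ["Scale", "Visibility"],
    ("Device characteristics", "Refresh rate"): ["Naturalness", "Readability"],
    ("Device characteristics", "Response time (tracking)"): ["Naturalness", "Readability"],
    ("User's surrounding environment", "Light condition"): ["Color", "Visibility"],
    ("User's surrounding environment", "Occlusion"): ["Visibility"],
    ("Virtual object characteristics", "Realistic representation"): ["Naturalness", "Readability"],
    ("Virtual object characteristics", "Texture quality"): ["Naturalness", "Readability"],
    ("Virtual object characteristics", "Distance between the user and the object"): ["Scale"],
    ("Virtual object characteristics", "Viewing direction"): ["Visibility", "Readability"],
}


def invert(mapping):
    """Build a perception factor -> list of input names index (hypothetical helper)."""
    result = defaultdict(list)
    for (_category, input_name), factors in mapping.items():
        for factor in factors:
            # Dicts keep insertion order, so inputs appear in table order.
            result[factor].append(input_name)
    return dict(result)


PERCEPTION_TO_INPUTS = invert(INPUT_TO_PERCEPTION)


def inputs_affecting(factor):
    """Return the inputs the table associates with a perception factor ([] if none)."""
    return PERCEPTION_TO_INPUTS.get(factor, [])


if __name__ == "__main__":
    for name in inputs_affecting("Color"):
        print(name)
```

With the entries as listed, `inputs_affecting("Color")` returns the four rows whose perception column includes color: display type, brightness, contrast, and light condition.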

| Channel | 1 | 2 | 3 | 4 | 5 | 6 | 7 |
|---|---|---|---|---|---|---|---|
| H | 169 | 169 | 169 | 169 | 169 | 169 | 169 |
| S | 169 | 169 | 169 | 169 | 169 | 169 | 169 |
| L | 230 | 200 | 170 | 140 | 110 | 80 | 50 |
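The color stimuli in the HSL table above share one hue (H) and saturation (S) and differ only in lightness (L), which steps down by 30 from 230 to 50. The source does not state the value range; assuming every channel is on a 0 to 255 scale (as some image editors use), the minimal sketch below converts each column to 8-bit RGB with Python's standard `colorsys` module. Note that `colorsys.hls_to_rgb` takes its arguments in hue, lightness, saturation order, each in [0, 1]. The helper name `hsl255_to_rgb` is hypothetical.

```python
import colorsys

# Values from the table. ASSUMPTION: every channel uses a 0-255 scale
# (the source does not say which scale was used).
HUE = 169
SATURATION = 169
LIGHTNESS_LEVELS = [230, 200, 170, 140, 110, 80, 50]


def hsl255_to_rgb(h, s, l):
    """Convert 0-255 H, S, L values to an 8-bit (R, G, B) tuple (hypothetical helper).

    colorsys uses the HLS argument order and the [0, 1] range,
    so the inputs are normalized and reordered before the call.
    """
    r, g, b = colorsys.hls_to_rgb(h / 255, l / 255, s / 255)
    return tuple(round(c * 255) for c in (r, g, b))


if __name__ == "__main__":
    for l in LIGHTNESS_LEVELS:
        print(l, hsl255_to_rgb(HUE, SATURATION, l))
```

Under this assumption all seven stimuli have the same blue hue (169/255 of the hue circle, about 239°), blue is the dominant channel, and every RGB component falls as L decreases, so the set forms a single-hue lightness ramp.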

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shin, K.-s.; Kim, H.; Lee, J.g.; Jo, D. Exploring the Effects of Scale and Color Differences on Users’ Perception for Everyday Mixed Reality (MR) Experience: Toward Comparative Analysis Using MR Devices. Electronics 2020, 9, 1623. https://doi.org/10.3390/electronics9101623
