3-D Synapse Array Architecture Based on Charge-Trap Flash Memory for Neuromorphic Application

Abstract

1. Introduction

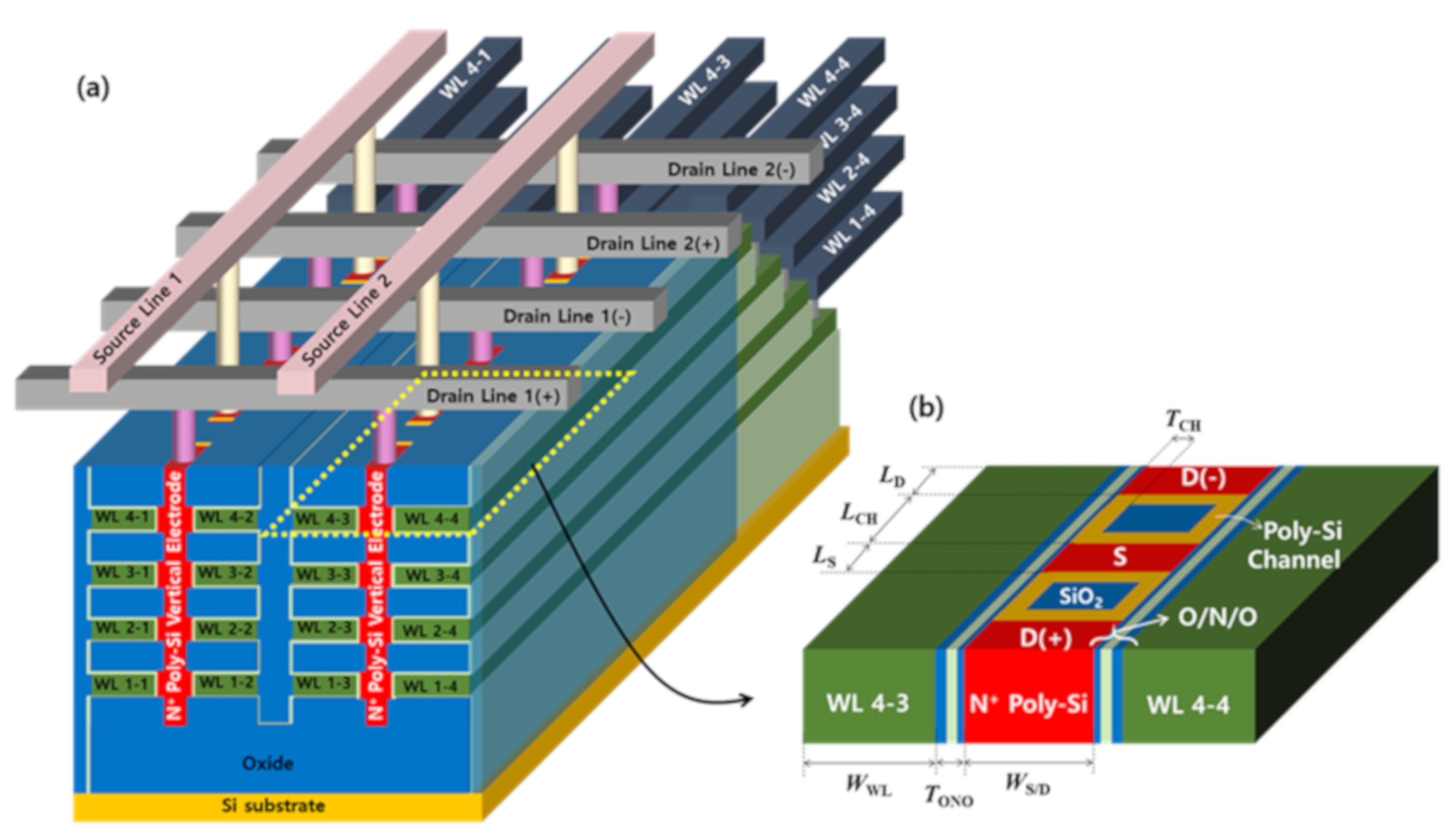

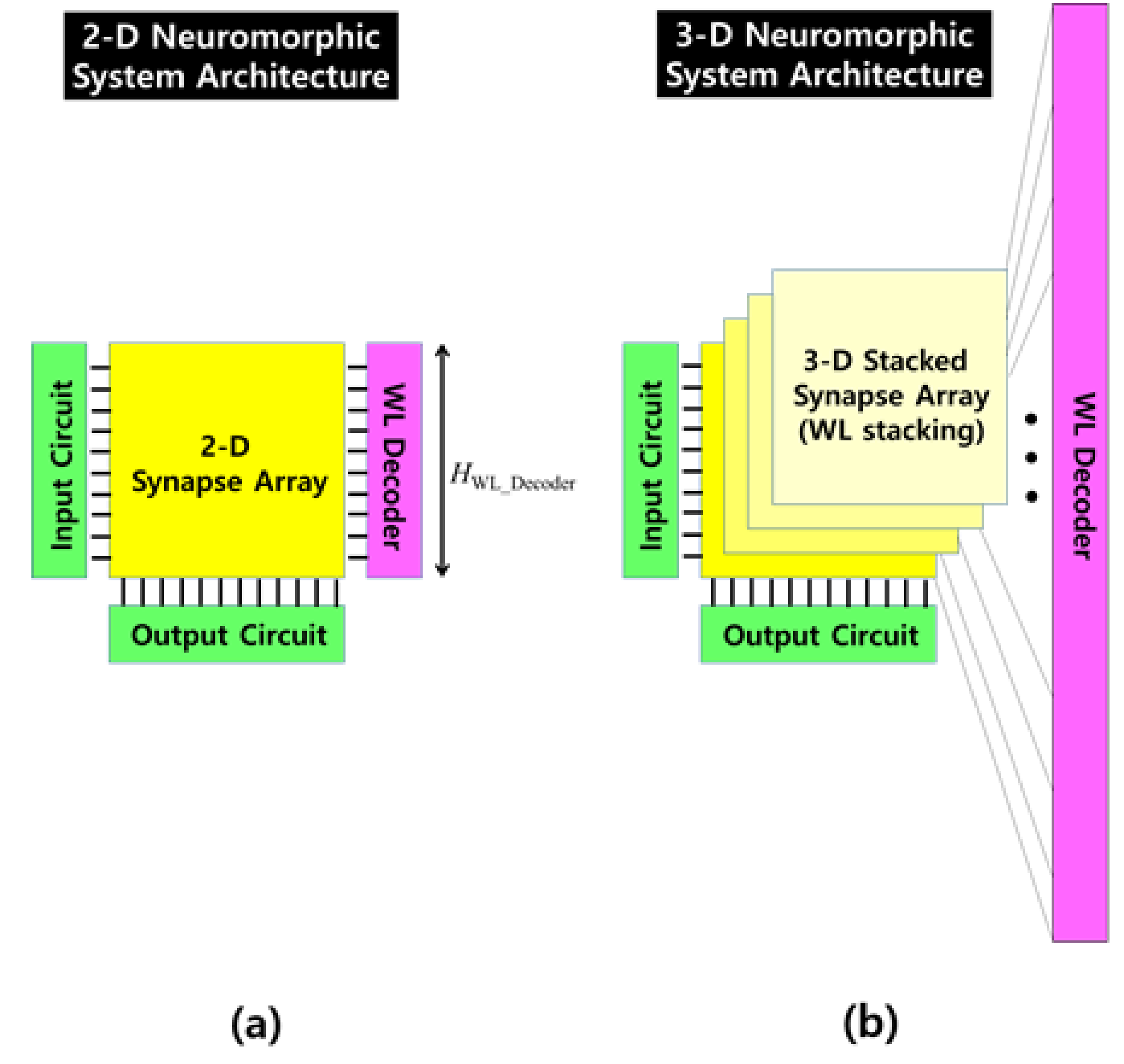

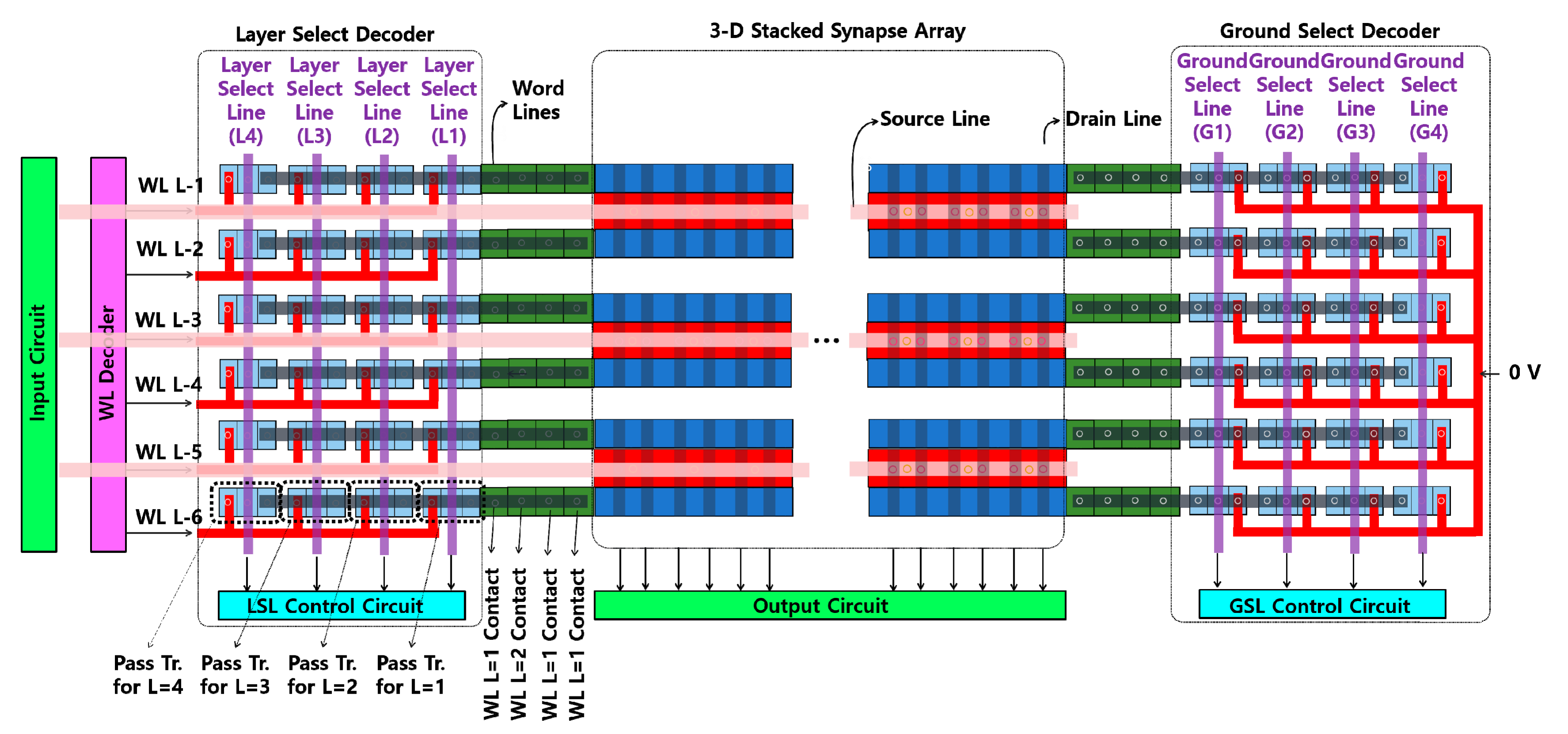

2. Design Methods of 3-D Synapse Array Architecture

3. Results

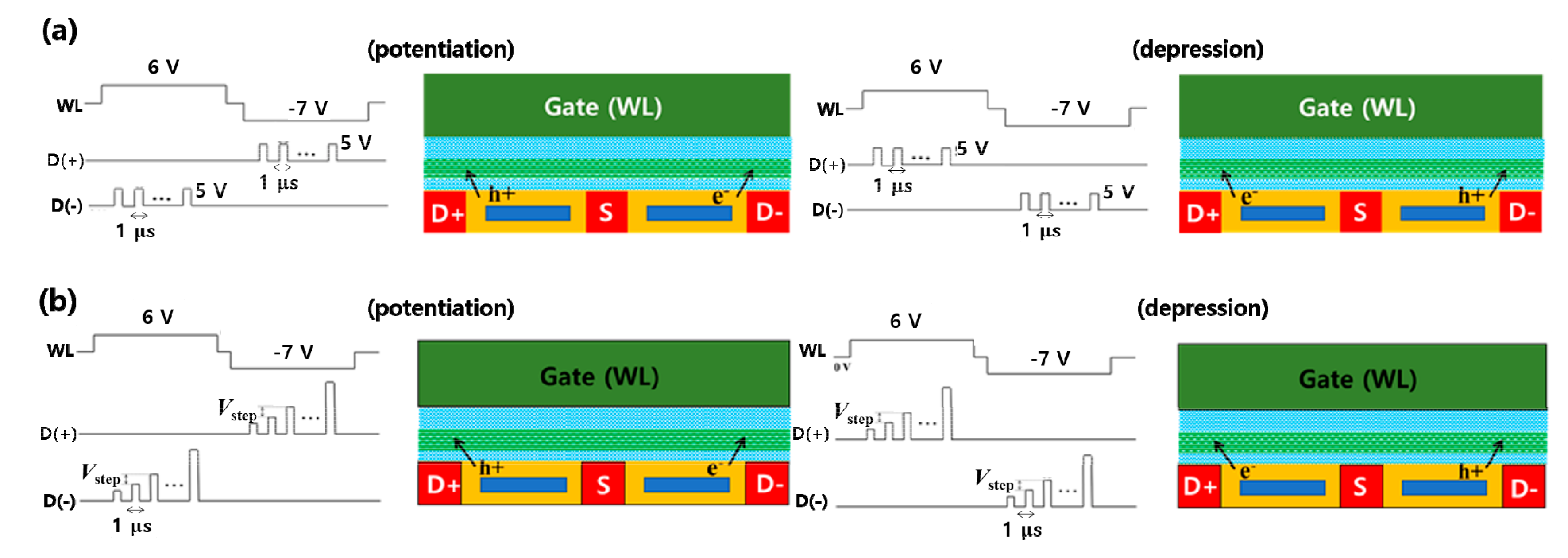

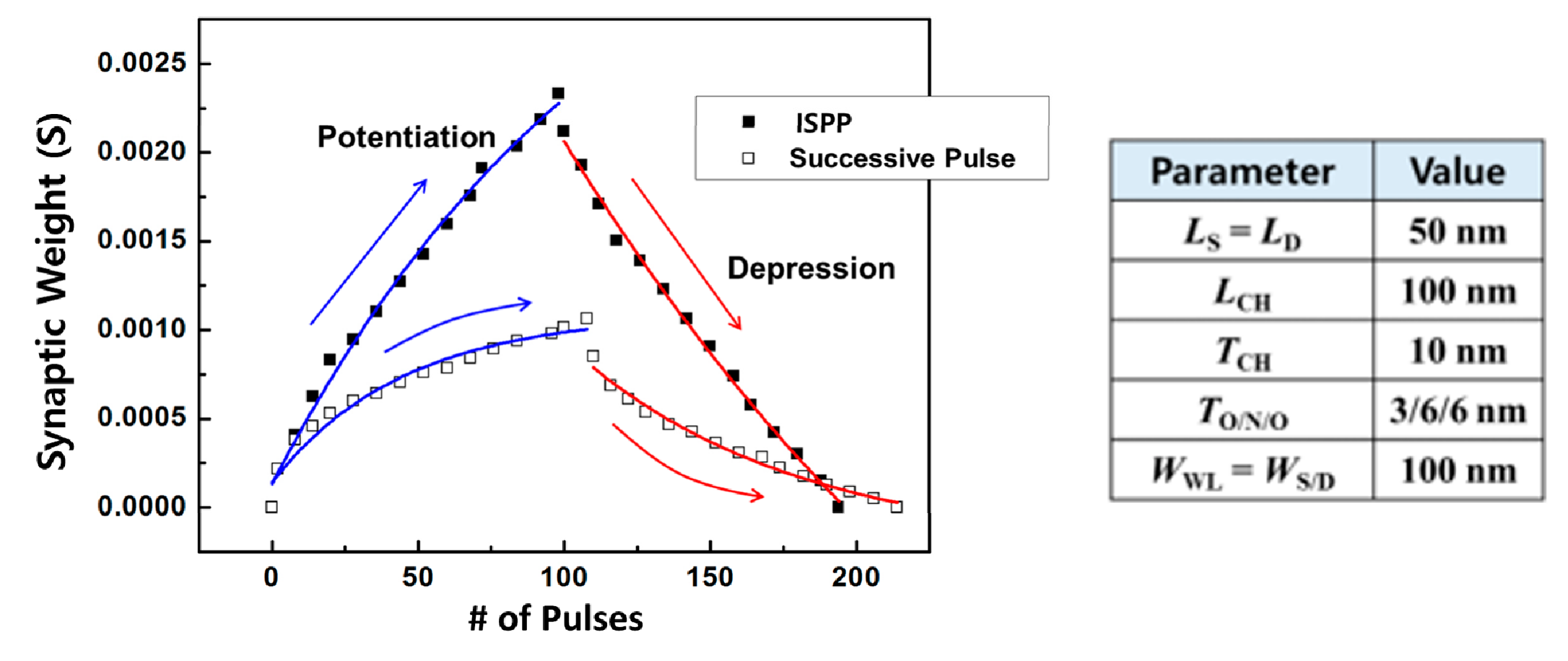

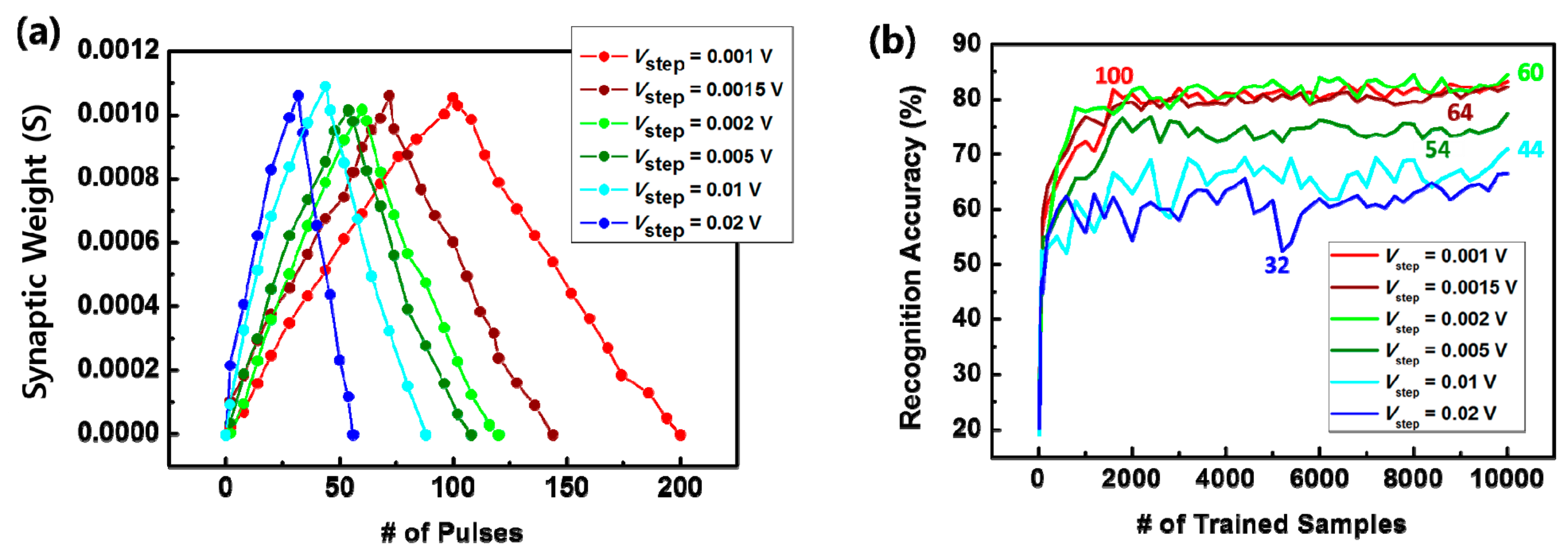

3.1. Synapse Device Operation

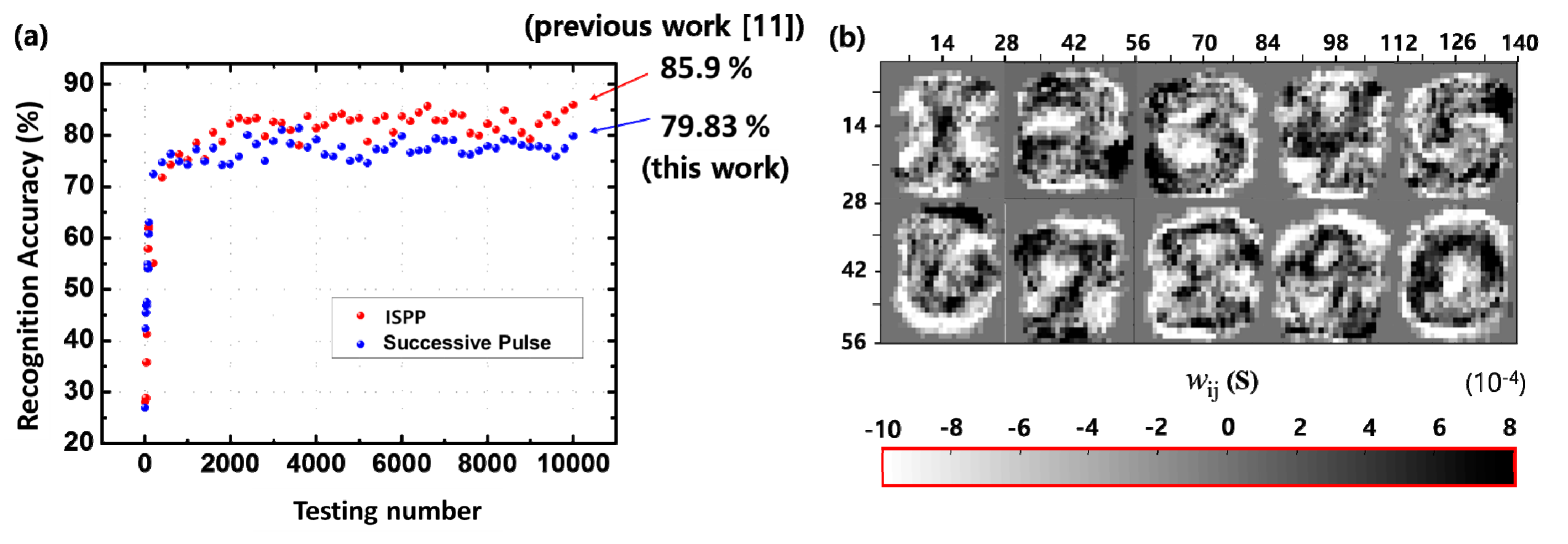

3.2. System-Level Simulation for Pattern Recognition

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Yu, S.; Gao, B.; Fang, Z.; Yu, H.; Kang, J.; Wong, H.S. A low energy oxide-based electronic synaptic device for neuromorphic visual systems with tolerance to device variation. Adv. Mater. 2013, 25, 1774–1779.
2. Liu, X.; Mao, M.; Liu, B.; Li, H.; Chen, Y.; Li, B.; Wang, Y.; Jiang, H.; Barnell, M.; Wu, Q.; et al. RENO: A high-efficient reconfigurable neuromorphic computing accelerator design. In Proceedings of the 2015 52nd ACM/EDAC/IEEE Design Automation Conference (DAC), San Francisco, CA, USA, 8–12 June 2015; pp. 1–6.
3. Mead, C. Neuromorphic electronic systems. Proc. IEEE 1990, 78, 1629–1636.
4. Burr, G.W.; Shelby, R.M.; Sebastian, A.; Kim, S.; Kim, S.; Sidler, S.; Virwani, K.; Ishii, M.; Narayanan, P.; Fumarola, A.; et al. Neuromorphic computing using non-volatile memory. Adv. Phys. X 2016, 2, 89–124.
5. Choi, H.S.; Wee, D.H.; Kim, H.; Kim, S.; Ryoo, K.C.; Park, B.G.; Kim, Y. 3-D Floating-Gate Synapse Array with Spike-Time-Dependent Plasticity. IEEE Trans. Electron. Devices 2018, 65, 101–107.
6. Roberts, P.D.; Bell, C.C. Spike timing dependent synaptic plasticity in biological systems. Biol. Cybern. 2002, 87, 392–403.
7. Akopyan, F.; Sawada, J.; Cassidy, A.; Alvarez-Icaza, R.; Arthur, J.; Merolla, P.; Imam, N.; Nakamura, Y.; Datta, P.; Nam, G.J.; et al. TrueNorth: Design and tool flow of a 65 mW 1 million neuron programmable neurosynaptic chip. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 2015, 34, 1537–1557.
8. Yu, S.; Wu, Y.; Jeyasingh, R.; Kuzum, D.; Wong, H.S. An Electronic Synapse Device Based on Metal Oxide Resistive Switching Memory for Neuromorphic Computation. IEEE Trans. Electron. Devices 2011, 58, 2729–2737.
9. Panwar, N.; Kumar, D.; Upadhyay, N.K.; Arya, P.; Ganguly, U.; Rajendran, B. Memristive synaptic plasticity in Pr0.7Ca0.3MnO3 RRAM by bio-mimetic programming. In Proceedings of the 72nd Device Research Conference, Santa Barbara, CA, USA, 22–25 June 2014; pp. 135–136.
10. Diorio, C.; Hasler, P.; Minch, B.A.; Mead, C.A. A floating-gate MOS learning array with locally computed weight updates. IEEE Trans. Electron. Devices 1997, 44, 2281–2289.
11. Park, Y.J.; Kwon, H.T.; Kim, B.; Lee, W.J.; Wee, D.H.; Choi, H.S.; Park, B.G.; Lee, J.H.; Kim, Y. 3-D Stacked Synapse Array Based on Charge-Trap Flash Memory for Implementation of Deep Neural Networks. IEEE Trans. Electron. Devices 2019, 66, 420–427.
12. Lee, J.; Park, B.G.; Kim, Y. Implementation of Boolean Logic Functions in Charge Trap Flash for In-Memory Computing. IEEE Electron. Device Lett. 2019, 40, 1358–1361.
13. Kim, Y.; Kang, M. Down-coupling phenomenon of floating channel in 3D NAND flash memory. IEEE Electron. Device Lett. 2016, 37, 1566–1569.
14. Jeong, W.; Im, J.W.; Kim, D.H.; Nam, S.W.; Shim, D.K.; Choi, M.H.; Yoon, H.J.; Kim, D.H.; Kim, Y.S.; Park, H.W.; et al. A 128 Gb 3b/cell V-NAND flash memory with 1 Gb/s I/O rate. IEEE J. Solid-State Circuits 2016, 51, 204–212.
15. Kang, M.; Kim, Y. Natural Local Self-Boosting Effect in 3D NAND Flash Memory. IEEE Electron. Device Lett. 2017, 38, 1236–1239.
16. Canziani, A.; Paszke, A.; Culurciello, E. An Analysis of Deep Neural Network Models for Practical Applications. arXiv 2017, arXiv:1605.07678.
17. Sentaurus Device User Guide; Ver. I-2013.12; Synopsys: Mountain View, CA, USA, 2012.
18. Kim, Y.; Seo, J.Y.; Lee, S.H.; Park, B.G. A New Programming Method to Alleviate the Program Speed Variation in Three-Dimensional Stacked Array NAND Flash Memory. JSTS 2014, 5, 566–571.
19. LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.
20. Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.
21. Burr, G.W.; Shelby, R.M.; Sidler, S.; Di Nolfo, C.; Jang, J.; Boybat, I.; Shenoy, R.S.; Narayanan, P.; Virwani, K.; Giacometti, E.U.; et al. Experimental Demonstration and Tolerancing of a Large-Scale Neural Network (165000 Synapses) Using Phase-Change Memory as the Synaptic Weight Element. IEEE Trans. Electron. Devices 2015, 62, 3498–3507.
22. Kim, H.; Hwang, S.; Park, J.; Park, B.G. Silicon synaptic transistor for hardware-based spiking neural network and neuromorphic system. Nanotechnology 2017, 28, 405202.
23. Kim, S.; Kim, H.; Hwang, S.; Kim, M.H.; Chang, Y.F.; Park, B.G. Analog Synaptic Behavior of a Silicon Nitride Memristor. ACS Appl. Mater. Interfaces 2017, 9, 40420–40427.
24. Ambrogio, S.; Ciocchini, N.; Laudato, M.; Milo, V.; Pirovano, A.; Fantini, P.; Ielmini, D. Unsupervised learning by spike timing dependent plasticity in phase change memory (PCM) synapses. Front. Neurosci. 2016, 10, 56.

| | RRAM | PCM | STT-MRAM | CTF |
|---|---|---|---|---|
| Device Structure | 2 terminals | 2 terminals | 2 terminals | 3 terminals |
| Selector | needed | needed | needed | unneeded |
| Cell Size | 4–12 F² | 4–12 F² | 6–20 F² | 4–8 F² |
| CMOS Compatibility | good | good | moderate | very good |
| Multi-Level Operation | good | good | moderate | very good |
| Weight Change | abrupt SET | abrupt RESET | stochastic change | good symmetric |
| Write Latency | 20–100 ns | 40–150 ns | 2–20 ns | >1 μs |
| Write Energy | low | mid | low | mid to high |
| Retention | moderate | good | good | very good |

| Parameter | Value |
|---|---|
| L_S = L_D | 50 nm |
| L_CH | 100 nm |
| T_CH | 10 nm |
| T_O/N/O | 3/6/6 nm |
| W_WL = W_S/D | 100 nm |
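
As a rough illustration of what the 3/6/6 nm O/N/O stack in the table above implies electrically, the sketch below estimates its equivalent oxide thickness (EOT) and the corresponding gate capacitance per unit area. It is not taken from the article: the relative permittivities (3.9 for SiO2, 7.5 for Si3N4) are assumed textbook values, the reading of 3/6/6 nm as oxide/nitride/oxide with the nitride in the middle is an assumption, and the function names are hypothetical.

```python
# Sketch only: EOT of an oxide/nitride/oxide gate stack under assumed permittivities.
# None of these constants or helper names come from the article.

EPS_SIO2 = 3.9            # assumed relative permittivity of SiO2
EPS_SI3N4 = 7.5           # assumed relative permittivity of Si3N4
EPS0_F_PER_M = 8.854e-12  # vacuum permittivity in F/m


def ono_eot_nm(t_ox1_nm, t_nitride_nm, t_ox2_nm,
               eps_ox=EPS_SIO2, eps_n=EPS_SI3N4):
    """Equivalent oxide thickness (nm) of an O/N/O stack.

    The nitride layer is scaled by eps_ox / eps_n so that the whole stack
    is expressed as an SiO2 thickness with the same capacitance.
    """
    return t_ox1_nm + t_nitride_nm * eps_ox / eps_n + t_ox2_nm


def gate_capacitance_per_area(eot_nm, eps_ox=EPS_SIO2):
    """Parallel-plate gate capacitance per unit area in F/m^2."""
    return eps_ox * EPS0_F_PER_M / (eot_nm * 1e-9)


# 3/6/6 nm stack, assumed to be oxide / nitride / oxide.
eot = ono_eot_nm(3.0, 6.0, 6.0)
c_area = gate_capacitance_per_area(eot)

print(f"EOT = {eot:.2f} nm")
print(f"C per area = {c_area * 1e3:.2f} mF/m^2")
```

Because both outer layers are treated as SiO2, the estimate does not depend on which of them is the tunneling oxide and which is the blocking oxide. Fringing fields and the actual device geometry are ignored.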

| | This Work | Previous Work [11] | [22] | [23] | [24] |
|---|---|---|---|---|---|
| Synapse Device | CTF | CTF | CTF | RRAM | PRAM |
| Array Architecture | 3-D array | 3-D array | 2-D array | 2-D array | 2-D array |
| Neuron Layer | single-layer | single-layer | single-layer | single-layer | multi-layer |
| Learning Type | supervised | supervised | supervised | supervised | unsupervised |
| Recognition Rate | 85.9% | 79.8% | 84% | 87.9% | 95.5% |
| Result Type | simulation | simulation | measurement | measurement | simulation |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Choi, H.-S.; Park, Y.J.; Lee, J.-H.; Kim, Y. 3-D Synapse Array Architecture Based on Charge-Trap Flash Memory for Neuromorphic Application. Electronics 2020, 9, 57. https://doi.org/10.3390/electronics9010057
