An Algorithm for Natural Images Text Recognition Using Four Direction Features

Abstract

1. Introduction

2. Related Works

2.1. Text Recognition

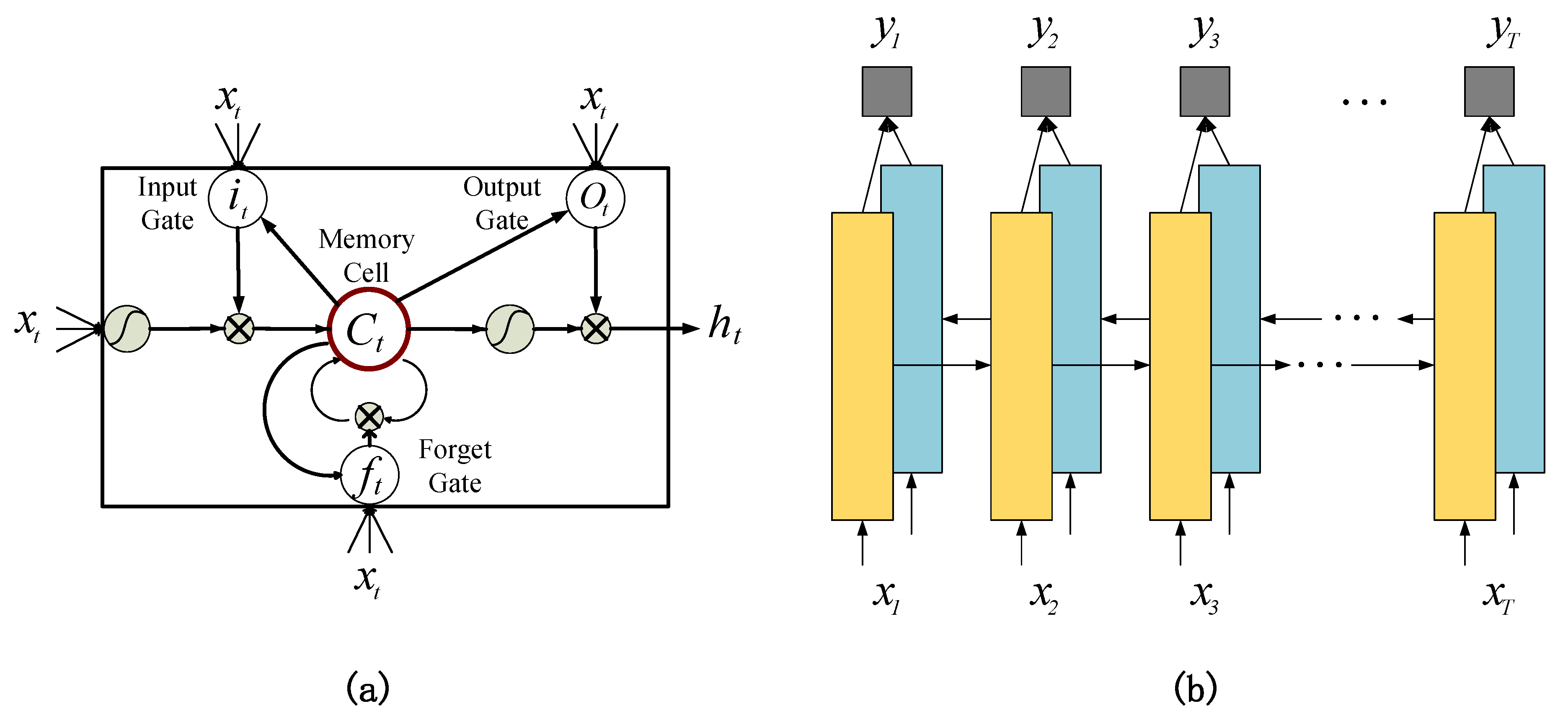

2.2. BiLSTM

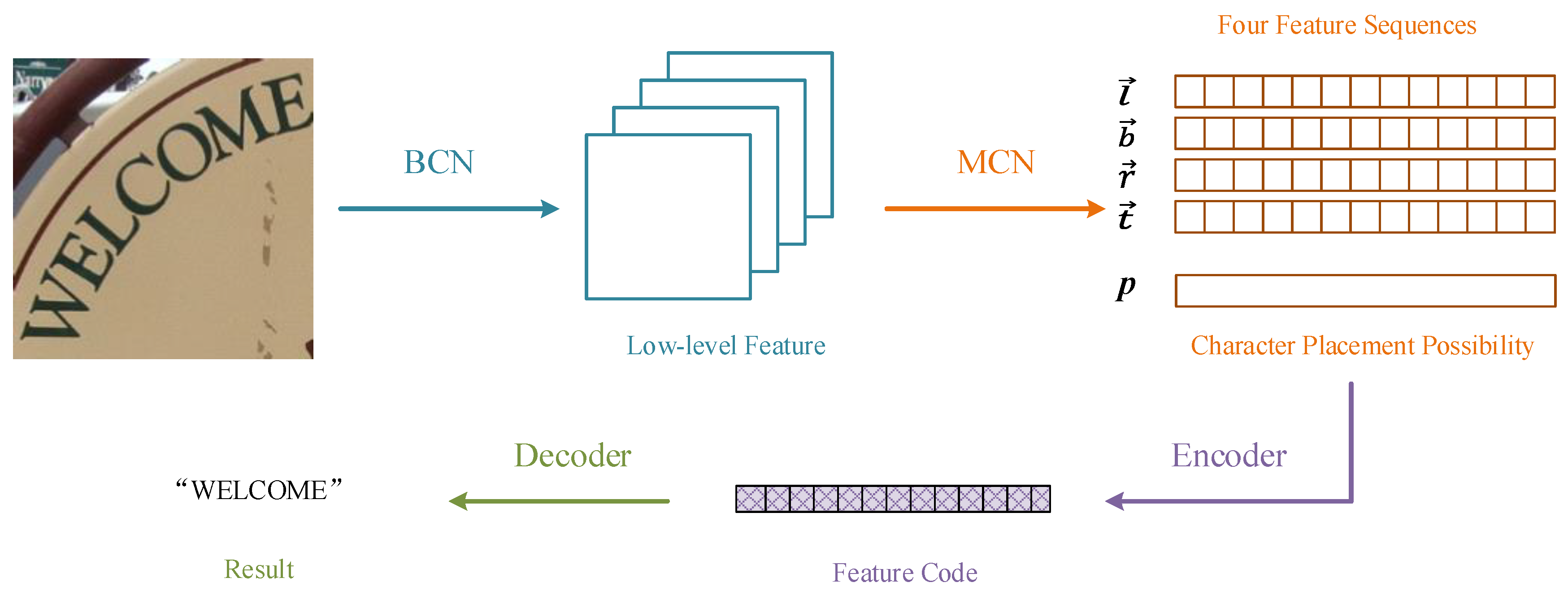

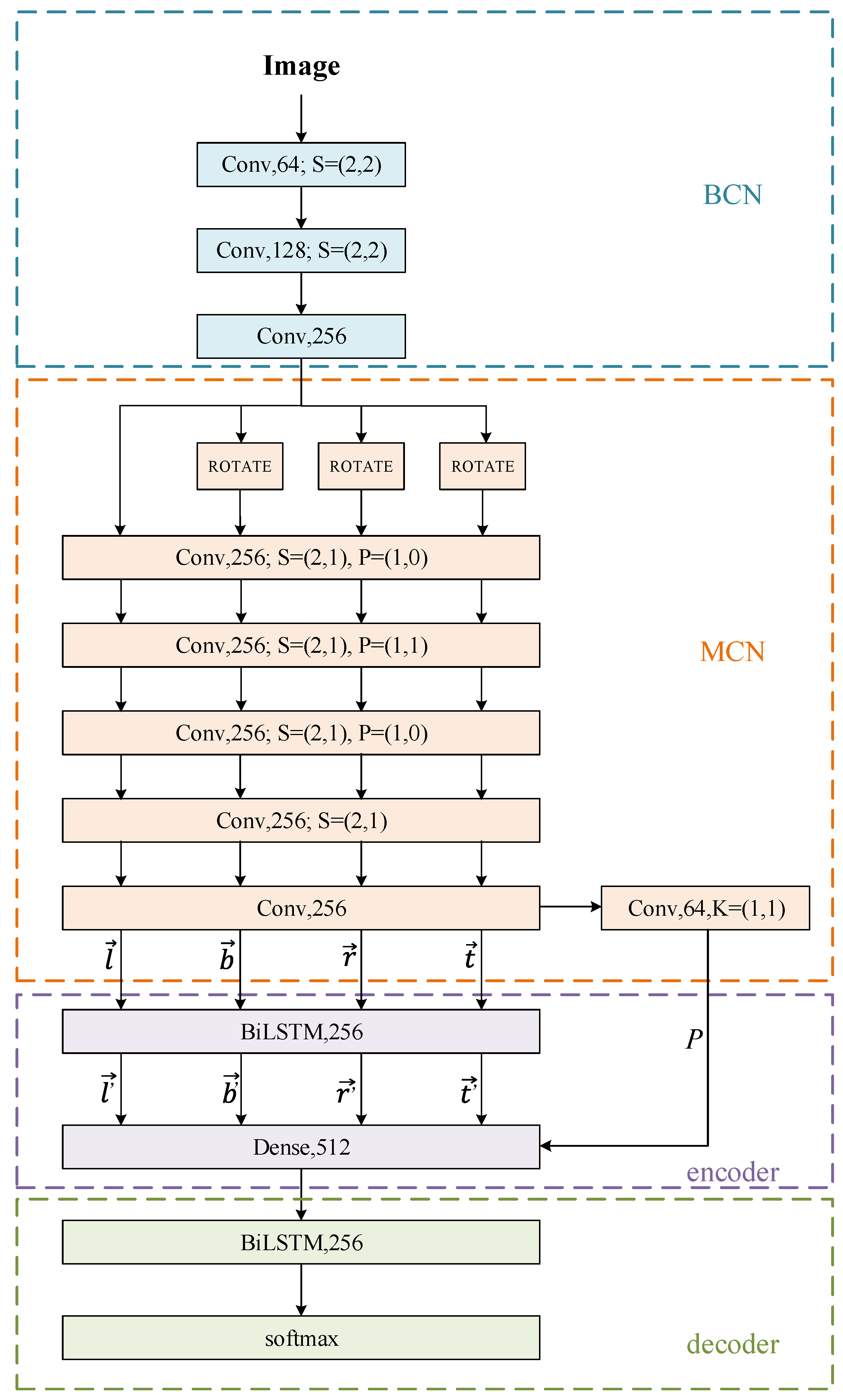

3. Framework

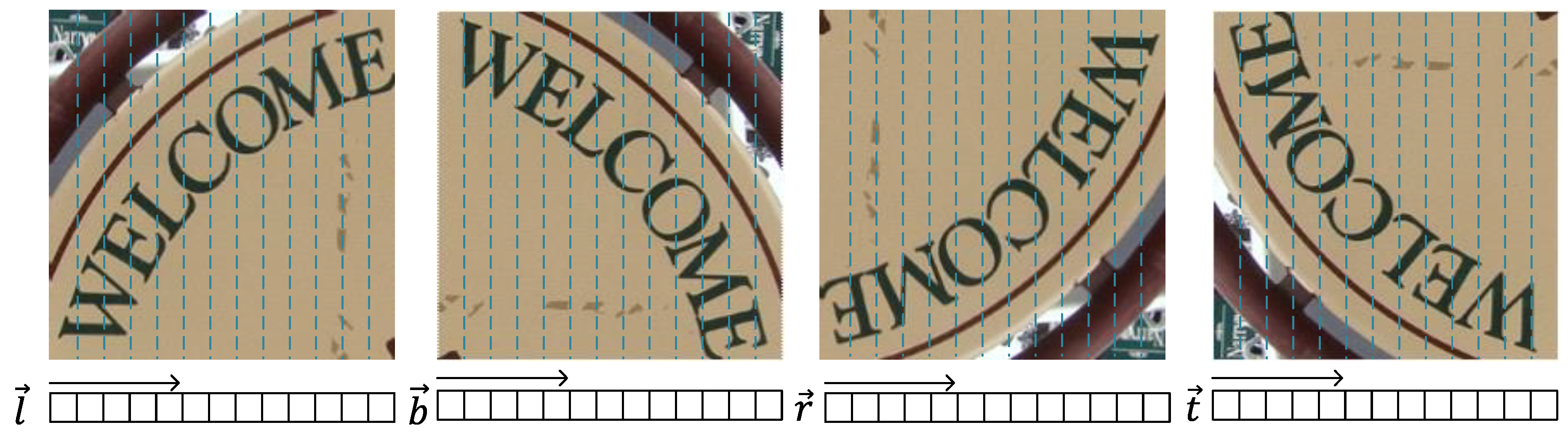

3.1. BCN and MCN

3.2. Encoder

3.3. Decoder

4. Experiments

4.1. Datasets

4.2. Details

4.3. Comparative Evaluation

4.4. Comparison with Baseline Models

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Bissacco, A.; Cummins, M.; Netzer, Y.; Neven, H. PhotoOCR: Reading Text in Uncontrolled Conditions. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013.
2. Cheng, Z.; Bai, F.; Xu, Y.; Zheng, G.; Pu, S.; Zhou, S. Focusing Attention: Towards Accurate Text Recognition in Natural Images. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.
3. Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Synthetic Data and Artificial Neural Networks for Natural Scene Text Recognition. arXiv. 2014. Available online: https://arxiv.org/abs/1406.2227 (accessed on 9 December 2014).
4. Osindero, S.; Lee, C.Y. Recursive Recurrent Nets with Attention Modeling for OCR in the Wild. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.
5. Neumann, L.; Matas, J. Real-time scene text localization and recognition. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012.
6. Shi, B.; Bai, X.; Yao, C. An End-to-End Trainable Neural Network for Image-based Sequence Recognition and Its Application to Scene Text Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 2298–2304.
7. Shi, B.; Lyu, P.; Wang, X.; Yao, C.; Bai, X. Robust Scene Text Recognition with Automatic Rectification. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.
8. Shi, B.; Yang, M.; Wang, X.; Lyu, P.; Yao, C.; Bai, X. ASTER: An Attentional Scene Text Recognizer with Flexible Rectification. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 41, 2035–2048.
9. Gao, Y.; Chen, Y.; Wang, J.; Lei, Z.; Zhang, X.Y.; Lu, H. Recurrent Calibration Network for Irregular Text Recognition. arXiv. 2018. Available online: https://arxiv.org/abs/1812.07145 (accessed on 18 December 2018).
10. Yang, X.; He, D.; Zhou, Z.; Kifer, D.; Giles, C.L. Learning to Read Irregular Text with Attention Mechanisms. In Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence (IJCAI-17), Melbourne, Australia, 19–25 August 2017.
11. Wang, P.; Yang, L.; Li, H.; Deng, Y.; Shen, C.; Zhang, Y. A Simple and Robust Convolutional-Attention Network for Irregular Text Recognition. arXiv. 2019. Available online: https://arxiv.org/abs/1904.01375 (accessed on 2 April 2019).
12. Bai, F.; Cheng, Z.; Niu, Y.; Pu, S.; Zhou, S. Edit Probability for Scene Text Recognition. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018.
13. Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial transformer networks. In Advances in Neural Information Processing Systems. arXiv. 2016. Available online: https://arxiv.org/abs/1506.02025 (accessed on 4 February 2016).
14. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015.
15. Ye, Q.; Doermann, D. Text detection and recognition in imagery: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1480–1500.
16. Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Deep structured output learning for unconstrained text recognition. arXiv. 2015. Available online: https://arxiv.org/abs/1412.5903 (accessed on 10 April 2015).
17. Wang, K.; Babenko, B.; Belongie, S. End-to-end scene text recognition. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011.
18. Wang, K.; Belongie, S. Word spotting in the wild. In Proceedings of the European Conference on Computer Vision, Heraklion, Crete, Greece, 5–11 September 2010.
19. Jaderberg, M.; Simonyan, K.; Vedaldi, A.; Zisserman, A. Reading text in the wild with convolutional neural networks. Int. J. Comput. Vis. 2016, 116, 1–20.
20. He, P.; Huang, W.; Qiao, Y.; Loy, C.C.; Tang, X. Reading scene text in deep convolutional sequences. In Proceedings of the Thirtieth AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016.
21. Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006.
22. Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.
23. Gers, F.A.; Schraudolph, N.N.; Schmidhuber, J. Learning Precise Timing with LSTM Recurrent Networks. J. Mach. Learn. Res. 2002, 3, 115–143.
24. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.
25. Graves, A.; Mohamed, A.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013.
26. Risnumawan, A.; Shivakumara, P.; Chan, C.S.; Tan, C.L. A robust arbitrary text detection system for natural scene images. Expert Syst. Appl. 2014, 41, 8027–8048.
27. Karatzas, D.; Gomez-Bigorda, L.; Nicolaou, A.; Ghosh, S.; Bagdanov, A.; Iwamura, M.; Matas, J.; Neumann, L.; Chandrasekhar, V.R.; Lu, S.; et al. ICDAR 2015 competition on robust reading. In Proceedings of the 2015 13th International Conference on Document Analysis and Recognition (ICDAR), Tunis, Tunisia, 23–26 August 2015.
28. Mishra, A.; Alahari, K.; Jawahar, C. Scene text recognition using higher order language priors. In Proceedings of the BMVC-British Machine Vision Conference, Surrey, UK, 3–7 September 2012.
29. Lucas, S.M.; Panaretos, A.; Sosa, L.; Tang, A.; Wong, S.; Young, R. ICDAR 2003 robust reading competitions. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, UK, 6 August 2003.
30. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv. 2015. Available online: https://arxiv.org/abs/1502.03167 (accessed on 2 March 2015).
31. Zeiler, M.D. ADADELTA: An Adaptive Learning Rate Method. arXiv. 2012. Available online: https://arxiv.org/abs/1212.5701 (accessed on 22 December 2012).
32. Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI ’16), Savannah, GA, USA, 2–4 November 2016.
33. Wang, T.; Wu, D.J.; Coates, A.; Ng, A.Y. End-to-end text recognition with convolutional neural networks. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012.
34. Goel, V.; Mishra, A.; Alahari, K.; Jawahar, C.V. Whole is greater than sum of parts: Recognizing scene text words. In Proceedings of the 2013 12th International Conference on Document Analysis and Recognition, Washington, DC, USA, 25–28 August 2013.
35. Alsharif, O.; Pineau, J. End-to-End Text Recognition with Hybrid HMM Maxout Models. arXiv. 2013. Available online: https://arxiv.org/abs/1310.1811 (accessed on 7 October 2013).
36. Almazán, J.; Gordo, A.; Fornés, A.; Valveny, E. Word Spotting and Recognition with Embedded Attributes. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 2552–2566.
37. Yao, C.; Bai, X.; Shi, B.; Liu, W. Strokelets: A Learned Multi-scale Representation for Scene Text Recognition. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 June 2014.
38. Su, B.; Lu, S. Accurate scene text recognition based on recurrent neural network. In Proceedings of the Asian Conference on Computer Vision, Singapore, 1–5 November 2014.
39. Gordo, A. Supervised mid-level features for word image representation. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015.

| Method | IIIT5K (50) | IIIT5K (1k) | IIIT5K (None) | SVT (50) | SVT (None) | IC03 (50) | IC03 (Full) | IC03 (None) | IC13 (None) |
|---|---|---|---|---|---|---|---|---|---|
| ABBYY [17] | 24.3 | - | - | 35.0 | - | 56.0 | 55.0 | - | - |
| Wang et al. [17] | - | - | - | 57.0 | - | 76.0 | 62.0 | - | - |
| Mishra et al. [25] | 64.1 | 57.5 | - | 73.0 | - | 81.8 | 67.8 | - | - |
| Wang et al. [33] | - | - | - | 70.0 | - | 90.0 | 84.0 | - | - |
| Goel et al. [34] | - | - | - | 77.3 | - | 89.7 | - | - | 87.6 |
| Bissacco et al. [1] | - | - | - | 90.4 | 78.0 | - | - | - | - |
| Alsharif [35] | - | - | - | 74.3 | - | 93.1 | 88.6 | - | - |
| Almazan et al. [36] | 91.2 | 82.1 | - | 89.2 | - | - | - | - | - |
| Yao et al. [37] | 80.2 | 69.3 | - | 75.9 | - | 88.5 | 80.3 | - | - |
| Jaderberg et al. [16] | - | - | - | 86.1 | - | 96.2 | 91.5 | - | - |
| Su and Lu [38] | - | - | - | 93.0 | - | 92.0 | 82.0 | - | - |
| Gordo [39] | 93.3 | 86.6 | - | 91.8 | - | - | - | - | - |
| Jaderberg et al. [19] | 97.1 | 92.7 | - | 95.4 | 80.7 | 98.7 | 98.6 | 93.1 | 90.8 |
| Jaderberg et al. [16] | 95.5 | 89.6 | - | 93.2 | 71.7 | 97.8 | 97.0 | 89.6 | 81.8 |
| Shi et al. [6] | 97.6 | 94.4 | 78.2 | 96.4 | 80.8 | 98.7 | 97.6 | 89.4 | 86.7 |
| Shi et al. [7] | 96.2 | 93.8 | 81.9 | 95.5 | 81.9 | 98.3 | 96.2 | 90.1 | 88.6 |
| Cheng’s baseline [2] | 98.9 | 96.8 | 83.7 | 95.7 | 82.2 | 98.5 | 96.7 | 91.5 | 89.4 |
| Cheng et al. [2] | 99.3 | 97.5 | 87.4 | 97.1 | 85.9 | 99.2 | 97.3 | 94.2 | 93.3 |
| Ours | 99.0 | 96.9 | 87.2 | 97.3 | 87.4 | 97.8 | 96.4 | 91.0 | 90.1 |

| Method | CT80 (None) | IC15 (None) |
|---|---|---|
| Shi et al. [6] | 54.9 | - |
| Shi et al. [7] | 59.2 | - |
| Cheng et al. [2] | 63.9 | 63.3 |
| Ours | 65.1 | 63.5 |

| Method | End-to-End | CharGT | Dictionary | Model Size |
|---|---|---|---|---|
| Wang et al. [17] | × | Needed | Not needed | - |
| Mishra et al. [25] | × | Needed | Needed | - |
| Wang et al. [33] | × | Needed | Not needed | - |
| Goel et al. [34] | × | Not needed | Needed | - |
| Bissacco et al. [1] | × | Needed | Not needed | - |
| Alsharif [35] | × | Needed | Not needed | - |
| Almazan et al. [36] | × | Not needed | Needed | - |
| Yao et al. [37] | × | Needed | Not needed | - |
| Jaderberg et al. [16] | × | Needed | Not needed | - |
| Su and Lu [38] | × | Not needed | Not needed | - |
| Gordo [39] | × | Needed | Needed | - |
| Jaderberg et al. [19] | √ | Not needed | Needed | 490 M |
| Jaderberg et al. [16] | √ | Not needed | Not needed | 304 M |
| Shi et al. [6] | √ | Not needed | Not needed | 8.3 M |
| Ours | √ | Not needed | Not needed | 15.4 M |

| Method | IIIT5K (regular) | SVT (regular) | IC03 (regular) | IC13 (regular) | CT80 (irregular) | IC15 (irregular) |
|---|---|---|---|---|---|---|
| Without_4 | 73.3 | 77.4 | 82.4 | 78.9 | 39.8 | 50.4 |
| Without_P | 79.6 | 81.2 | 85.7 | 82.5 | 54.5 | 54.6 |
| Ours | 87.2 | 87.4 | 91.0 | 90.1 | 65.1 | 63.5 |
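
The ablation table above can be read more easily as per-dataset accuracy gains of the full model over each reduced variant. The following is a minimal sketch of that arithmetic, not part of the original article. The accuracies are copied from the table, while the helper names `gain` and `mean_gain` are hypothetical. The row labels `Without_4` and `Without_P` are kept verbatim. Given the title, `Without_4` presumably removes the four direction features, but that reading is an assumption.

```python
# Accuracies (%) copied verbatim from the ablation table above.
ABLATION = {
    "Without_4": {"IIIT5K": 73.3, "SVT": 77.4, "IC03": 82.4,
                  "IC13": 78.9, "CT80": 39.8, "IC15": 50.4},
    "Without_P": {"IIIT5K": 79.6, "SVT": 81.2, "IC03": 85.7,
                  "IC13": 82.5, "CT80": 54.5, "IC15": 54.6},
    "ours":      {"IIIT5K": 87.2, "SVT": 87.4, "IC03": 91.0,
                  "IC13": 90.1, "CT80": 65.1, "IC15": 63.5},
}

# Dataset grouping as given in the table header.
REGULAR = ("IIIT5K", "SVT", "IC03", "IC13")
IRREGULAR = ("CT80", "IC15")


def gain(full, ablated):
    """Per-dataset gain in percentage points (hypothetical helper)."""
    return {name: round(full[name] - ablated[name], 1) for name in full}


def mean_gain(gains, datasets):
    """Unweighted mean gain over a group of datasets (hypothetical helper)."""
    return sum(gains[name] for name in datasets) / len(datasets)


if __name__ == "__main__":
    for variant in ("Without_4", "Without_P"):
        g = gain(ABLATION["ours"], ABLATION[variant])
        print(variant, g)
        print("  mean gain, regular:   %.1f" % mean_gain(g, REGULAR))
        print("  mean gain, irregular: %.1f" % mean_gain(g, IRREGULAR))
```

For both variants the largest single gain is on CT80 (25.3 points over `Without_4`, 10.6 points over `Without_P`). The means are unweighted, so they ignore differences in test-set size.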

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, M.; Yan, Y.; Wang, H.; Zhao, W. An Algorithm for Natural Images Text Recognition Using Four Direction Features. Electronics 2019, 8, 971. https://doi.org/10.3390/electronics8090971
