Online Learned Siamese Network with Auto-Encoding Constraints for Robust Multi-Object Tracking

Abstract

1. Introduction

- (1) A Siamese network with a simple structure and an auto-encoding constraint (SNAC) is proposed to efficiently extract discriminative features for objects in the scene. The auto-encoding constraint prevents overfitting when training data are limited.

- (2) A composite tracklet feature, the previous-appearance-next (PAN) feature, is defined, which combines appearance and motion through joint learning. Because PAN describes the sequential features of tracklets better, data association based on PAN is more reliable.

- (3) A tracking framework is established that includes reliable tracklet generation by incremental learning with SNAC on the detection responses, tracklet growth to enhance PAN performance and deal with missing detections, and tracklet association with PAN to generate complete trajectories.
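The first contribution can be illustrated with a minimal sketch of a pairwise loss that adds a reconstruction penalty to a standard contrastive term. The exact SNAC loss is not reproduced in this text, so the layer sizes, the tied-weight decoder, the `margin` and `lam` parameters, and the function names below are all assumptions made for illustration.

```python
import numpy as np

def encode(x, W, b):
    """Map an input vector to a feature vector with one sigmoid layer."""
    return 1.0 / (1.0 + np.exp(-(W @ x + b)))

def decode(h, W, c):
    """Reconstruct the input from the feature using tied weights W.T (assumed)."""
    return 1.0 / (1.0 + np.exp(-(W.T @ h + c)))

def snac_style_loss(x1, x2, same, W, b, c, margin=1.0, lam=0.5):
    """Contrastive loss on a pair plus a reconstruction penalty on both branches."""
    # Both branches share W and b, which is what makes the network Siamese.
    h1, h2 = encode(x1, W, b), encode(x2, W, b)
    dist = np.linalg.norm(h1 - h2)
    if same:
        contrastive = dist ** 2                      # pull same-object pairs together
    else:
        contrastive = max(0.0, margin - dist) ** 2   # push different objects apart
    # Auto-encoding constraint: the feature must still reconstruct its input.
    recon = (np.sum((decode(h1, W, c) - x1) ** 2)
             + np.sum((decode(h2, W, c) - x2) ** 2))
    return contrastive + lam * recon

rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(16, 64))  # hypothetical 64-d input, 16-d feature
b = np.zeros(16)
c = np.zeros(64)
x_a, x_b = rng.random(64), rng.random(64)
loss_same = snac_style_loss(x_a, x_a, True, W, b, c)
loss_diff = snac_style_loss(x_a, x_b, False, W, b, c)
```

The reconstruction term acts as a regularizer: a feature that must still reproduce its input cannot collapse onto the few training pairs available early in a sequence.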

2. Related Works

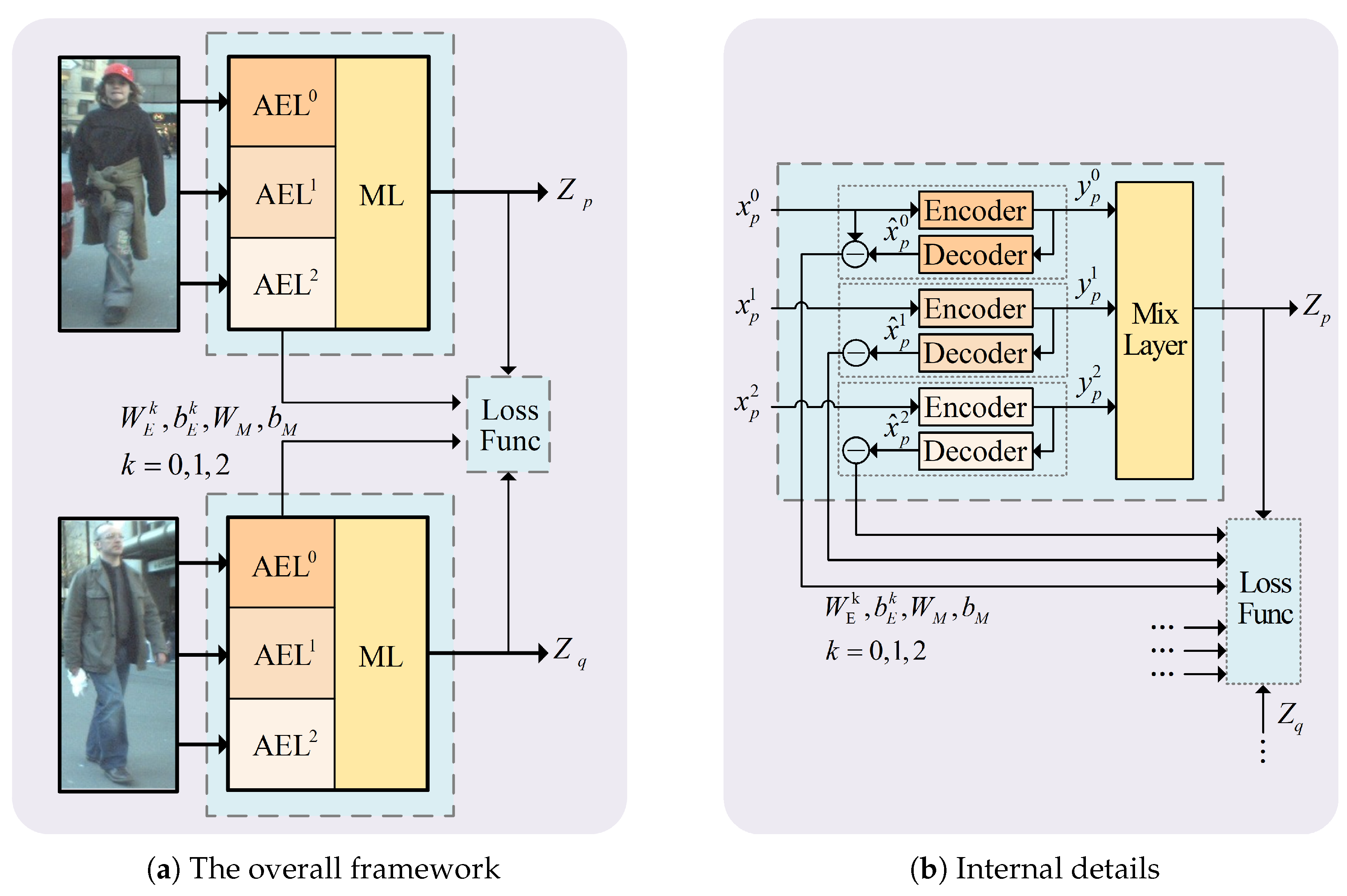

3. Online Learned Siamese Network with Auto-Encoding Constraint

3.1. The Structure of SNAC

3.2. Loss Function and Auto-Encoding Constraint

3.3. Denoising through the Collection of Training Samples

3.4. Iterative Tracklet Generation with SNAC by Incremental Learning

Algorithm 1. Iterative tracklet building with SNAC by incremental learning.

Input: detection set of each frame

Output: tracklet set up to frame t
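The steps of Algorithm 1 are not reproduced in this text. As a rough illustration of frame-by-frame tracklet building, the sketch below greedily appends each detection to the most similar existing tracklet. The similarity function, the `threshold` value, and the function names are assumptions, and the incremental retraining of SNAC between frames is deliberately omitted.

```python
import numpy as np

def appearance_similarity(f1, f2):
    """Similarity in (0, 1] derived from the L2 distance between two features."""
    return float(np.exp(-np.linalg.norm(np.asarray(f1) - np.asarray(f2))))

def extend_tracklets(tracklets, detections, threshold=0.5):
    """Greedily append each detection of the current frame to its best tracklet.

    tracklets: list of lists of feature vectors (modified in place).
    detections: list of feature vectors for the current frame.
    """
    pairs = []
    for ti, trk in enumerate(tracklets):
        for di, det in enumerate(detections):
            # Compare against the most recent element of the tracklet.
            pairs.append((appearance_similarity(trk[-1], det), ti, di))
    pairs.sort(reverse=True)  # most similar pairs first

    used_t, used_d = set(), set()
    for sim, ti, di in pairs:
        if sim < threshold:
            break  # remaining pairs are too dissimilar to link
        if ti in used_t or di in used_d:
            continue  # each tracklet and detection is used at most once per frame
        tracklets[ti].append(detections[di])
        used_t.add(ti)
        used_d.add(di)

    # Unmatched detections start new tracklets.
    for di, det in enumerate(detections):
        if di not in used_d:
            tracklets.append([det])
    return tracklets

# Toy 2-d "features" for three frames (hypothetical data).
frames = [
    [np.array([0.0, 0.0]), np.array([5.0, 5.0])],
    [np.array([0.1, 0.0]), np.array([5.0, 5.1])],
    [np.array([0.2, 0.0]), np.array([9.0, 9.0])],
]
tracklets = []
for dets in frames:
    tracklets = extend_tracklets(tracklets, dets)
```

In the toy run, the first object is followed through all three frames, the second is lost in the last frame, and the far-away detection starts a new tracklet.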

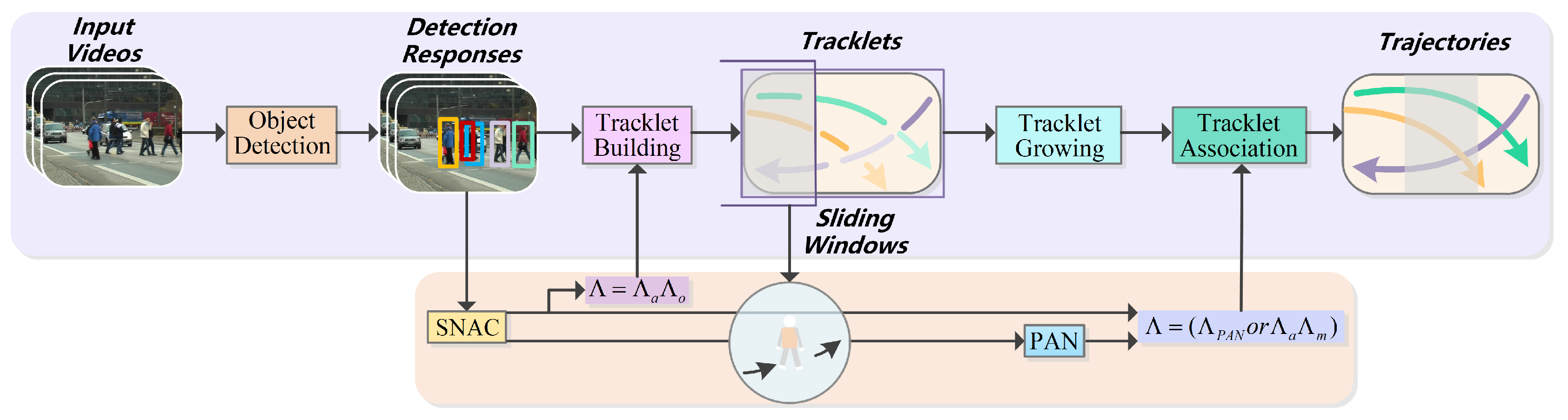

4. Multi-Object Tracking Framework

4.1. Overall Framework

4.2. Previous-Appearance-Next Feature of the Tracklet

4.3. Tracklet Growing

4.4. Tracklet Association in Sliding Windows

5. Experiments

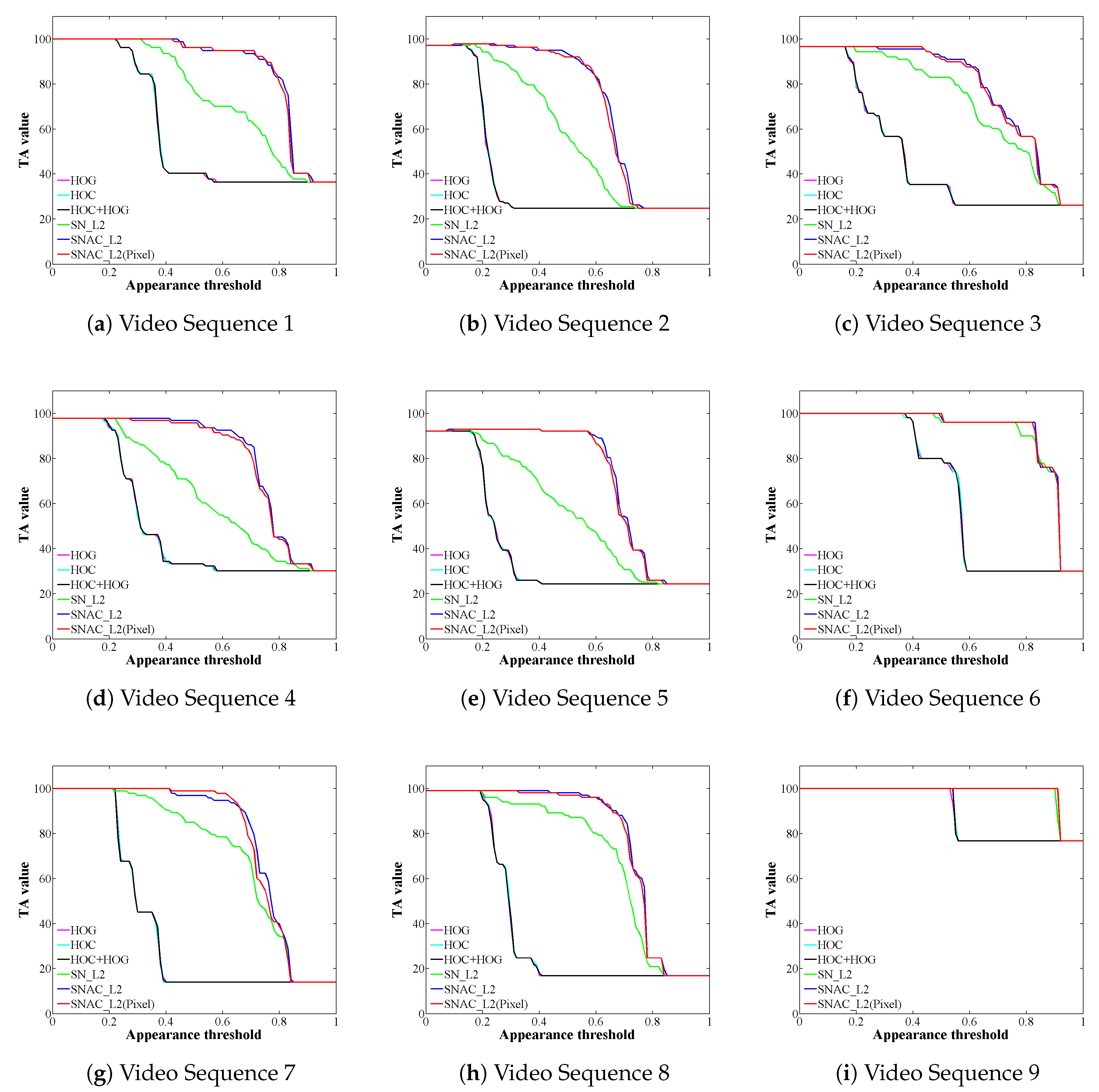

5.1. Evaluation of SNAC

5.1.1. SNAC for Detection Responses

5.1.2. SNAC for Tracklets

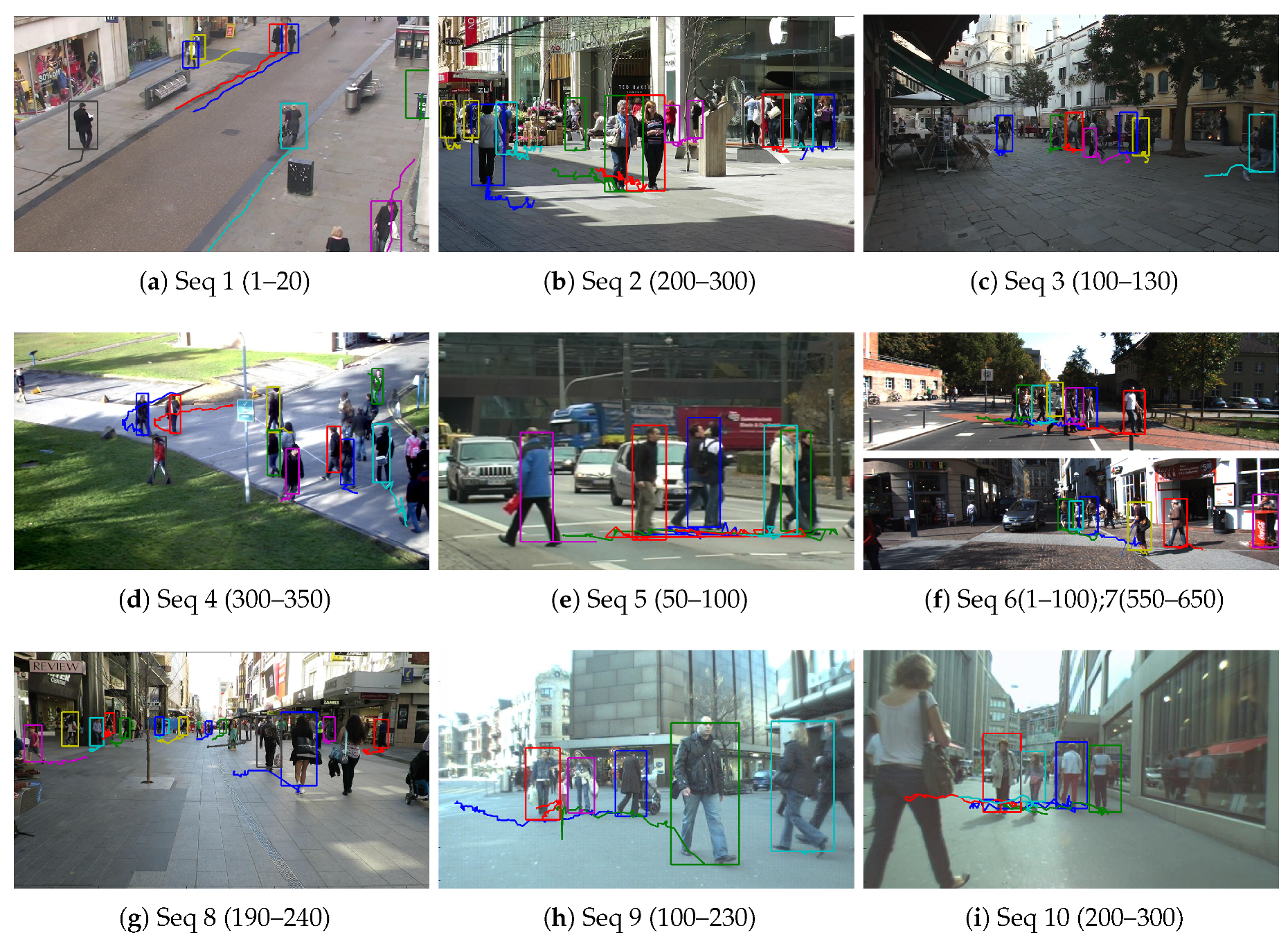

5.2. Evaluation of the MOT System

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

- Dollár, P.; Appel, R.; Belongie, S.; Perona, P. Fast feature pyramids for object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 1532–1545.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 39, 1137–1149.

- Huang, C.; Li, Y.; Nevatia, R. Multiple target tracking by learning-based hierarchical association of detection responses. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 898–910.

- Yang, B.; Nevatia, R. Multi-target tracking by online learning of non-linear motion patterns and robust appearance models. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 1918–1925.

- Zhang, L.; Li, Y.; Nevatia, R. Global data association for multi-object tracking using network flows. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Chari, V.; Lacoste-Julien, S.; Laptev, I.; Sivic, J. On pairwise costs for network flow multi-object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 5537–5545.

- Pirsiavash, H.; Ramanan, D.; Fowlkes, C.C. Globally optimal greedy algorithms for tracking a variable number of objects. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1201–1208.

- Milan, A.; Schindler, K.; Roth, S. Multi-target tracking by discrete-continuous energy minimization. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 2054–2068.

- Milan, A.; Roth, S.; Schindler, K. Continuous energy minimization for multitarget tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 58–72.

- Yang, B.; Nevatia, R. Multi-target tracking by online learning a CRF model of appearance and motion patterns. Int. J. Comput. Vis. 2014, 107, 203–217.

- Zhou, H.; Ouyang, W.; Cheng, J.; Wang, X.; Li, H. Deep continuous conditional random fields with asymmetric inter-object constraints for online multi-object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 58–72.

- Wen, L.; Lei, Z.; Lyu, S.; Li, S.Z.; Yang, M.H. Exploiting hierarchical dense structures on hypergraphs for multi-object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 1983–1996.

- Schulter, S.; Vernaza, P.; Choi, W.; Chandraker, M. Deep network flow for multi-object tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2730–2739.

- Wang, B.; Wang, L.; Shuai, B.; Zuo, Z.; Liu, T.; Chan, K.L.; Wang, G. Joint learning of convolutional neural networks and temporally constrained metrics for tracklet association. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Las Vegas, NV, USA, 26–30 June 2016; pp. 386–393.

- Yoon, K.; Kim, D.Y.; Yoon, Y.C.; Jeon, M. Data association for multi-object tracking via deep neural networks. Sensors 2019, 19, 559.

- Yoon, Y.C.; Song, Y.M.; Yoon, K.; Jeon, M. Online multi-object tracking using selective deep appearance matching. In Proceedings of the IEEE Conference on Consumer Electronics-Asia, Jeju, Korea, 24–26 June 2018; pp. 206–212.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Wang, X.; Han, T.X.; Yan, S. An HOG-LBP human detector with partial occlusion handling. In Proceedings of the IEEE International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 32–39.

- Kuo, C.H.; Nevatia, R. How does person identity recognition help multi-person tracking? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Colorado Springs, CO, USA, 20–25 June 2011; pp. 1217–1224.

- Bae, S.H.; Yoon, K.J. Confidence-based data association and discriminative deep appearance learning for robust online multi-object tracking. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 595–610.

- Leal-Taixé, L.; Canton-Ferrer, C.; Schindler, K. Learning by tracking: Siamese CNN for robust target association. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016; pp. 418–425.

- Yoon, Y.C.; Boragule, A.; Song, Y.M.; Yoon, K.; Jeon, M. Online multi-object tracking with historical appearance matching and scene adaptive detection filtering. In Proceedings of the IEEE International Conference on Advanced Video and Signal Based Surveillance, Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

- Wang, N.; Yeung, D.Y. Learning a deep compact image representation for visual tracking. In Proceedings of the International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013; pp. 809–817.

- Feng, H.; Li, X.; Liu, P.; Zhou, N. Using stacked auto-encoder to get feature with continuity and distinguishability in multi-object tracking. In Proceedings of the International Conference on Image and Graphics, Shanghai, China, 13–15 September 2017; pp. 351–361.

- Kuo, C.H.; Huang, C.; Nevatia, R. Multi-target tracking by on-line learned discriminative appearance models. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 685–692.

- Butt, A.A.; Collins, R.T. Multiple target tracking using frame triplets. In Proceedings of the IEEE Conference on Asian Conference on Computer Vision, Daejeon, Korea, 5–9 November 2012; pp. 163–176.

- Wang, L.; Ouyang, W.; Wang, X.; Lu, H. Visual tracking with fully convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 3119–3127.

- Nam, H.; Han, B. Learning multi-domain convolutional neural networks for visual tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4293–4302.

- Chen, X.; Zhang, X.; Tan, H.; Lan, L.; Luo, Z.; Huang, X. Multi-granularity hierarchical attention siamese network for visual tracking. In Proceedings of the 2018 International Joint Conference on Neural Networks, Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Milan, A.; Rezatofighi, S.H.; Dick, A.; Reid, I.; Schindler, K. Online multi-target tracking using recurrent neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; pp. 4225–4232.

- Sadeghian, A.; Alahi, A.; Savarese, S. Tracking the untrackable: Learning to track multiple cues with long-term dependencies. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 300–311.

- Son, J.; Baek, M.; Cho, M.; Han, B. Multi-object tracking with quadruplet convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3786–3795.

- Hu, J.; Lu, J.; Tan, Y.P. Discriminative deep metric learning for face verification in the wild. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1875–1882.

- Li, W.; Zhao, R.; Xiao, T.; Wang, X. DeepReID: Deep filter pairing neural network for person re-identification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 152–159.

- Wen, L.; Lei, Z.; Chang, M.C.; Qi, H.; Lyu, S. Multi-camera multi-target tracking with space-time-view hyper-graph. Int. J. Comput. Vis. 2017, 112, 313–333.

- Milan, A.; Leal-Taixé, L.; Schindler, K.; Cremers, D.; Roth, S.; Reid, I. Multiple Object Tracking Benchmark. 2015. Available online: https://motchallenge.net (accessed on 10 March 2019).

- Stiefelhagen, R.; Bernardin, K. Evaluating multiple object tracking performance: The CLEAR MOT metrics. EURASIP J. Image Video Process. 2008, 2008, 1–10.

- Deep Learning Tutorials. 2013. Available online: http://deeplearning.net/tutorial/ (accessed on 2 October 2018).

- Liu, P.; Li, X.; Feng, H.; Fu, Z. Multi-object tracking by virtual nodes added min-cost network flow. In Proceedings of the IEEE International Conference on Image Processing, Beijing, China, 17–20 September 2017; pp. 2577–2581.

- Fagot-Bouquet, L.; Audigier, R.; Dhome, Y.; Lerasle, F. Improving multi-frame data association with sparse representations for robust near-online multi-object tracking. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

- Wang, S.; Fowlkes, C.C. Learning optimal parameters for multi-target tracking with contextual interactions. Int. J. Comput. Vis. 2017, 122, 484–501.

- Pang, Y.; Shi, X.; Jia, B.; Blasch, E.; Sheaff, C. Multiway histogram intersection for multi-target tracking. In Proceedings of the IEEE International Conference on Information Fusion, Washington, DC, USA, 6–9 July 2015; pp. 1938–1945.

- Shi, X.; Ling, H.; Pang, Y.; Hu, W.; Chu, P.; Xing, J. Rank-1 tensor approximation for high-order association in multi-target tracking. Int. J. Comput. Vis. 2019, 1–21.

| Symbol | Definition |

|---|---|

| SNAC | Siamese network with an auto-encoding constraint |

| | the detection response in frame t |

| | detection responses set in frame t |

| | sequence of for |

| | the tracklet up to frame t |

| | set of all tracklets up to frame t |

| | feature vector of SNAC for |

| | a set consisting of an element d |

| | the set with d deleted |

| | the sample set |

| | appearance similarity between T and d |

| | overall affinity between T and d |

| Sequence | SNAC_L2 AD | SNAC_L2 Var | SN_L2 AD | SN_L2 Var | SNAC_L2(Pixel) AD | SNAC_L2(Pixel) Var | HOC AD | HOC Var | HOG AD | HOG Var | HOC + HOG AD | HOC + HOG Var |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Sequence 1 | 0.8152 | 0.0568 | 0.8112 | 0.0600 | 0.6189 | 0.0989 | 0.1315 | 0.0029 | 0.1295 | 0.0030 | 0.1324 | 0.0027 |

| Sequence 2 | 0.7337 | 0.0490 | 0.7387 | 0.0539 | 0.6589 | 0.0811 | 0.1494 | 0.0041 | 0.1471 | 0.0041 | 0.1503 | 0.0040 |

| Sequence 3 | 0.7832 | 0.0347 | 0.7930 | 0.0362 | 0.7736 | 0.0397 | 0.1405 | 0.0017 | 0.1426 | 0.0016 | 0.1409 | 0.0017 |

| Sequence 4 | 0.7983 | 0.0392 | 0.8150 | 0.0295 | 0.5807 | 0.0785 | 0.1656 | 0.0029 | 0.1657 | 0.0031 | 0.1690 | 0.0030 |

| Sequence 5 | 0.8265 | 0.0194 | 0.8395 | 0.0184 | 0.6435 | 0.0759 | 0.1855 | 0.0032 | 0.1851 | 0.0036 | 0.1869 | 0.0033 |

| Sequence 6 | 0.8279 | 0.0333 | 0.8232 | 0.0352 | 0.8490 | 0.0358 | 0.2043 | 0.0022 | 0.2084 | 0.0029 | 0.2061 | 0.0025 |

| Sequence 7 | 0.7662 | 0.0196 | 0.7770 | 0.0280 | 0.7863 | 0.0403 | 0.1358 | 0.0015 | 0.1348 | 0.0015 | 0.1359 | 0.0015 |

| Sequence 8 | 0.8149 | 0.0200 | 0.8244 | 0.0160 | 0.7813 | 0.0530 | 0.1642 | 0.0021 | 0.1651 | 0.0021 | 0.1626 | 0.0022 |

| Sequence 9 | 0.9015 | 0.0001 | 0.9000 | 0.0001 | 0.9003 | 0.0001 | 0.1353 | 0.0005 | 0.1423 | 0.0003 | 0.1364 | 0.0001 |

| Sequence | 1 | 2 | 3 | 4 | 5 | 6 |

|---|---|---|---|---|---|---|

| HOC + HOG | 0.076 | 0.070 | 0.048 | 0.230 | 0.060 | 0.004 |

| SNAC | 0.151 | 0.256 | 0.200 | 0.193 | 0.128 | 0.246 |

| PAN | 0.208 | 0.404 | 0.369 | 0.289 | 0.224 | 0.379 |

| Method | MOTA↑ | MOTP↑ | MT↑ | ML↓ | FP↓ | FN↓ | IDs↓ | Frag↓ |

|---|---|---|---|---|---|---|---|---|

| Proposed | 29.3 | 68.6 | 12.9% | 36.3% | 9880 | 32,173 | 1385 | 2226 |

| Siamese CNN [22] | 29.0 | 71.2 | 8.5% | 48.4% | 5160 | 37,798 | 639 | 1316 |

| HAM_INTP15 [23] | 28.6 | 71.1 | 10.0% | 44.0% | 7485 | 35,910 | 460 | 1038 |

| CEISP [40] | 25.8 | 70.9 | 10.0% | 44.0% | 6316 | 37,798 | 1493 | 2240 |

| LP_SSVM [42] | 25.2 | 71.7 | 5.8% | 53.0% | 8369 | 36,932 | 646 | 849 |

| LINF1 [41] | 24.5 | 71.3 | 5.5% | 64.6% | 5864 | 40,207 | 298 | 744 |

| TENSOR [44] | 24.3 | 71.6 | 5.5% | 46.6% | 6644 | 38,582 | 1271 | 1304 |

| DEEPDA_MOT [16] | 22.5 | 70.9 | 6.4% | 62.0% | 7346 | 39,092 | 1159 | 1538 |

| MTSTracker [43] | 20.6 | 70.3 | 9.0% | 63.9% | 15,161 | 32,212 | 1387 | 2357 |

| TC_Siamese [17] | 20.2 | 71.1 | 2.6% | 67.5% | 6127 | 42,596 | 294 | 825 |

| DCO_X [9] | 19.6 | 71.4 | 5.1% | 54.9% | 10,652 | 38,232 | 521 | 819 |

| RNN_LSTM [31] | 19.0 | 71.0 | 5.5% | 45.6% | 11,578 | 36,706 | 1490 | 2081 |

| DP_NMS [8] | 14.5 | 70.8 | 6.0% | 40.8% | 13,171 | 34,814 | 4537 | 3090 |
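The MOTA column can be cross-checked against the FP, FN and IDs columns using the CLEAR MOT definition, MOTA = 1 − (FP + FN + IDs) / GT. The sketch below does this for three rows of the table. The ground-truth box count `NUM_GT` is not stated in this text; the value used is an assumption corresponding to the 2D MOT 2015 test set.

```python
def mota(fp, fn, ids, num_gt):
    """CLEAR MOT accuracy in percent: 100 * (1 - (FP + FN + IDs) / GT)."""
    return 100.0 * (1.0 - (fp + fn + ids) / num_gt)

# Assumed total number of ground-truth boxes (not given in the text).
NUM_GT = 61440

# (FP, FN, IDs, reported MOTA) copied from the table above.
rows = {
    "Proposed": (9880, 32173, 1385, 29.3),
    "Siamese CNN": (5160, 37798, 639, 29.0),
    "DP_NMS": (13171, 34814, 4537, 14.5),
}

for name, (fp, fn, ids, reported) in rows.items():
    computed = mota(fp, fn, ids, NUM_GT)
    print(f"{name}: computed {computed:.1f}, reported {reported}")
```

Under that assumption, the computed values agree with the reported MOTA to one decimal place. This also shows why the proposed method leads on MOTA despite more identity switches: its lower FN count outweighs the higher FP and IDs counts.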

| Sequence | Detections Training C (sec) | Detections Training E (fps) | Tracklets Generation C (sec) | Tracklets Generation E (fps) | Tracklets Training C (sec) | Tracklets Training E (fps) | Trajectories Generation C (sec) | Trajectories Generation E (fps) | Whole System C (sec) | Whole System E (fps) |

|---|---|---|---|---|---|---|---|---|---|---|

| AVG-Town | 24.1358 | 0.0414 | 0.0255 | 39.2311 | 20.2524 | 0.0494 | 0.0383 | 26.1271 | 44.4519 | 0.0225 |

| ADL-1 | 26.9062 | 0.0372 | 0.0460 | 21.7297 | 12.0443 | 0.0830 | 0.0186 | 53.6481 | 39.0152 | 0.0256 |

| Venice | 12.0126 | 0.08328 | 0.0021 | 469.7286 | 3.4141 | 0.2929 | 0.0036 | 278.74 | 15.4324 | 0.0648 |

| PETS2L2 | 28.6360 | 0.0349 | 0.0328 | 30.4669 | 27.0256 | 0.0370 | 0.0622 | 16.0834 | 55.7565 | 0.0179 |

| TUD-Cro | 5.1749 | 0.1932 | 0.0063 | 157.8591 | 0.9073 | 1.1022 | 0.0012 | 840.3361 | 6.0897 | 0.1642 |

| KITTI16 | 14.7907 | 0.0676 | 0.0174 | 57.4918 | 3.6223 | 0.2761 | 0.0160 | 62.3750 | 18.4464 | 0.0542 |

| KITTI19 | 4.1255 | 0.2424 | 0.0059 | 170.6446 | 0.6580 | 1.5198 | 0.0034 | 292.0029 | 4.7928 | 0.2086 |

| ADL-3 | 15.1071 | 0.0662 | 0.0220 | 45.5284 | 1.2581 | 0.7949 | 0.0026 | 389.1656 | 16.3897 | 0.0610 |

| ETH-Jel | 5.9893 | 0.1670 | 0.0083 | 120.0808 | 0.6869 | 1.4559 | 0.0017 | 582.0106 | 6.6862 | 0.1496 |

| ETH-Lin | 4.9171 | 0.2034 | 0.0098 | 101.5885 | 0.6640 | 1.5059 | 0.0011 | 946.5673 | 5.5921 | 0.1788 |

| ETH-Cro | 3.6163 | 0.2765 | 0.0050 | 199.2754 | 0.3902 | 2.5631 | 0.0006 | 1657.8749 | 4.0121 | 0.2492 |
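The timing table appears to follow two simple relations: the whole-system cost is the sum of the four module costs, and each E (fps) value is the reciprocal of the matching C (sec) value. The short check below tests both on three rows. It reads C as seconds per frame, which is an interpretation and not something stated in this text.

```python
# Module costs in seconds, copied from the table: detections training,
# tracklets generation, tracklets training, trajectories generation.
module_costs = {
    "AVG-Town": (24.1358, 0.0255, 20.2524, 0.0383),
    "ADL-1": (26.9062, 0.0460, 12.0443, 0.0186),
    "ETH-Cro": (3.6163, 0.0050, 0.3902, 0.0006),
}
# Whole-system cost (sec) and efficiency (fps), also copied from the table.
whole_system = {
    "AVG-Town": (44.4519, 0.0225),
    "ADL-1": (39.0152, 0.0256),
    "ETH-Cro": (4.0121, 0.2492),
}

for name, parts in module_costs.items():
    cost, fps = whole_system[name]
    summed = sum(parts)   # should match the whole-system cost
    derived = 1.0 / cost  # should match the whole-system fps
    print(f"{name}: sum={summed:.4f} (table {cost}), 1/C={derived:.4f} (table {fps})")
```

On these rows the two training modules account for almost all of the cost, while tracklet and trajectory generation alone run well above one frame per second.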

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, P.; Li, X.; Liu, H.; Fu, Z. Online Learned Siamese Network with Auto-Encoding Constraints for Robust Multi-Object Tracking. Electronics 2019, 8, 595. https://doi.org/10.3390/electronics8060595
