A CNN Based Automated Activity and Food Recognition Using Wearable Sensor for Preventive Healthcare

Abstract

1. Introduction

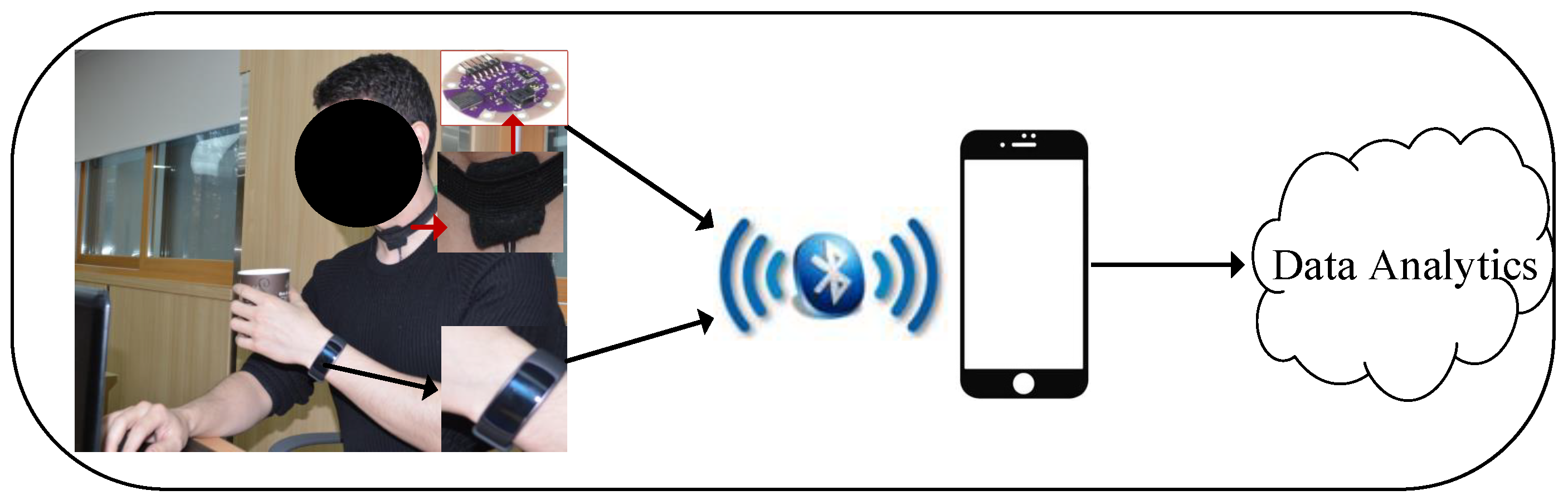

- First, we combine the motion sensors of a smartwatch with a piezoelectric sensor mounted on a stretchable necklace to build an automated system that monitors physical activities and food types. The motion sensors generate distinct patterns for each of the eight physical activities; likewise, the piezoelectric sensor produces distinct patterns for the ingestion of each of the six food categories.

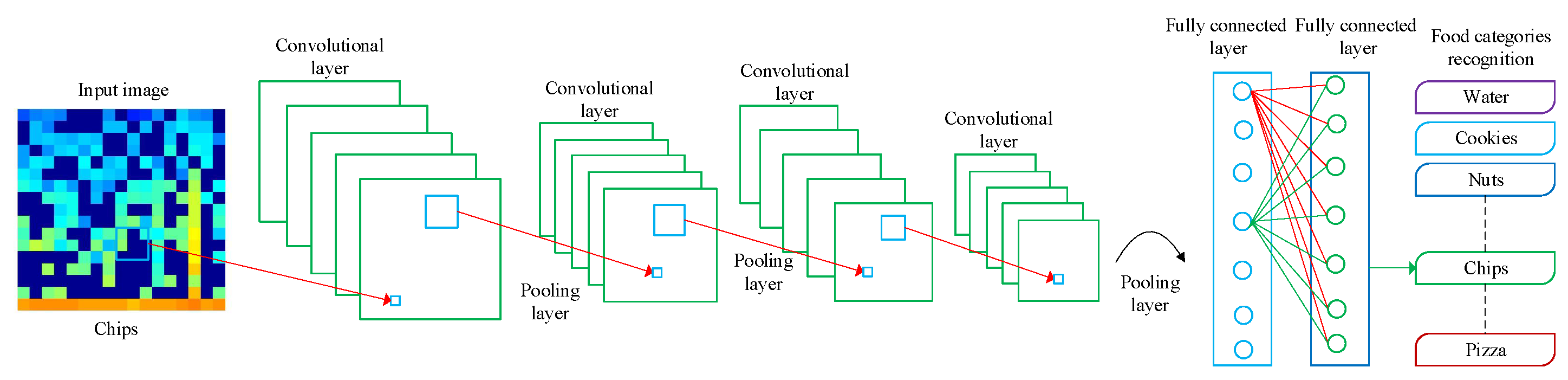

- Second, our CNN-based food recognition approach accurately classifies spectrogram images of the six food categories in real-life settings.

- Third, we propose a new algorithm, event similarity search (ESS), that annotates the experimental data automatically. It selects segments of dynamic length, which represent signal patterns of differing complexity equally well.

- Fourth, the wearable sensors we employ offer a better user experience because their design neither limits the subjects’ natural movements nor interferes with their respiration.
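The second contribution relies on turning a one-dimensional sensor trace into a spectrogram image before classification. A minimal sketch of that step follows, assuming a short-time Fourier transform with a Hann window. The frame length, hop size, sampling rate, and the synthetic tone standing in for a piezoelectric recording are illustrative assumptions, not values from the paper, and the naive DFT is used only to keep the example dependency-free.

```python
import cmath
import math


def hann(n):
    """Hann window of length n (reduces spectral leakage at frame edges)."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def spectrogram(signal, frame_len=64, hop=32):
    """Return a list of frames; each frame holds magnitudes for bins 0..frame_len//2."""
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        frame = [signal[start + i] * window[i] for i in range(frame_len)]
        mags = []
        for k in range(frame_len // 2 + 1):
            # Naive DFT for bin k; a real pipeline would use an FFT library.
            acc = sum(
                frame[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                for n in range(frame_len)
            )
            mags.append(abs(acc))
        frames.append(mags)
    return frames


# Synthetic stand-in for a sensor trace: a 10 Hz tone sampled at 160 Hz.
SAMPLE_RATE = 160  # assumed value, not taken from the paper
FRAME_LEN = 64     # assumed value, not taken from the paper
tone = [math.sin(2 * math.pi * 10 * t / SAMPLE_RATE) for t in range(320)]

spec = spectrogram(tone, frame_len=FRAME_LEN, hop=32)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_LEN
print(len(spec), len(spec[0]), peak_hz)
```

Each row of `spec` is one time slice and each column one frequency bin, so the nested list can be rendered as a grayscale image and passed to an image classifier. For the synthetic tone, the strongest bin of every frame sits at 10 Hz.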

2. Related Work

2.1. Physical Activities

2.2. Dietary Behavior

2.3. Preliminary of CNN

3. Proposed System Architecture and Methods

3.1. System Architecture

3.2. Experimentation Protocol

3.3. Event Similarity Search Algorithm

Algorithm 1. Event similarity search algorithm.
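The steps of Algorithm 1 are not reproduced in this text, so the following is not the authors' procedure. It is a minimal sketch of one way a similarity search with dynamic segment length could annotate a signal, assuming labeled reference events (templates) of different lengths, Pearson correlation as the similarity measure, and a fixed acceptance threshold. The function names, labels, threshold, and toy signal are all hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is flat)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def annotate(signal, templates, threshold=0.9):
    """Label segments of `signal` that resemble a template.

    `templates` maps a label to a reference event. Each template keeps its own
    length, so short and long events are searched with differently sized windows.
    Returns non-overlapping (start, end, label, score) tuples sorted by start.
    """
    candidates = []
    for label, ref in templates.items():
        width = len(ref)
        for start in range(len(signal) - width + 1):
            score = pearson(signal[start:start + width], ref)
            if score >= threshold:
                candidates.append((score, start, start + width, label))
    # Greedy selection: keep the best-scoring candidates that do not overlap.
    chosen = []
    for score, start, end, label in sorted(candidates, reverse=True):
        if all(end <= s or start >= e for s, e, _, _ in chosen):
            chosen.append((start, end, label, score))
    return sorted(chosen)


# Hypothetical reference events of different lengths.
templates = {
    "short_event": [0, 2, -2, 0],
    "long_event": [0, 1, 2, 3, 2, 1, 0],
}
# Toy signal: both events embedded in a flat baseline.
signal = [0] * 5 + templates["short_event"] + [0] * 5 + templates["long_event"] + [0] * 5

segments = annotate(signal, templates)
labels = [(start, end, label) for start, end, label, _ in segments]
print(labels)
```

Because each template is matched with a window of its own length, the four-sample and seven-sample events are both recovered without choosing a single fixed segment size in advance.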

4. Features and Classification

4.1. Features Extraction and Selection

4.2. Activity and Food Classification

5. Results and Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

- U.S. Department of Health and Human Services; U.S. Department of Agriculture. 2015–2020 Dietary Guidelines for Americans, 8th ed.; December 2015. Available online: https://health.gov/dietaryguidelines/2015/guidelines/ (accessed on 28 August 2019).

- Sazonov, E.S.; Schuckers, S.A.C.; Lopez-Meyer, P.; Makeyev, O.; Melanson, E.L.; Neuman, M.R.; Hill, J.O. Toward Objective Monitoring of Ingestive Behavior in Free-living Population. Obesity 2009, 17, 1971–1975.

- Xu, K.; Lu, Y.; Takei, K. Multifunctional Skin-Inspired Flexible Sensor Systems for Wearable Electronics. Adv. Mater. Technol. 2019, 4, 1800628–1800652.

- Xu, K.; Lu, Y.; Honda, S.; Arie, T.; Akita, S.; Takei, K. Highly Stable Kirigami-Structured Stretchable Strain Sensors for Perdurable Wearable Electronics. J. Mater. Chem. C 2019, 7, 9609–9617.

- Sazonov, E.S.; Fontana, J.M. A sensor system for automatic detection of food intake through non-invasive monitoring of chewing. IEEE Sens. J. 2012, 12, 1340–1348.

- Farooq, M.; Fontana, J.M.; Sazonov, E. A novel approach for food intake detection using electroglottography. Physiol. Meas. 2014, 35, 739.

- Centers for Disease Control and Prevention. Adult Obesity Facts. 2014. Available online: http://www.cdc.gov/obesity/data/adult.html (accessed on 15 September 2019).

- World Health Organization. Obesity and Overweight. 2012. Available online: http://www.who.int/mediacentre/factsheets/fs311/en (accessed on 20 October 2019).

- Fontana, J.M.; Higgins, J.A.; Schuckers, S.C.; Bellisle, F.; Pan, Z.; Melanson, E.L.; Neuman, M.R.; Sazonov, E. Energy intake estimation from counts of chews and swallows. Appetite 2015, 85, 14–21.

- Bray, G.A. How Do We Get Fat? An Epidemiological and Metabolic Approach. In The Metabolic Syndrome and Obesity; Humana Press: Totowa, NJ, USA, 2007; pp. 31–66.

- Lee, S.M.; Yoon, S.M.; Cho, H. Human activity recognition from accelerometer data using Convolutional Neural Network. In Proceedings of the IEEE Big Data and Smart Computing (BigComp), Jeju, Korea, 13–16 February 2017; pp. 131–134.

- Lara, O.D.; Labrador, M.A. A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

- Yang, A.Y.; Iyengar, S.; Sastry, S.; Bajcsy, R.; Kuryloski, P.; Jafari, R. Distributed segmentation and classification of human actions using a wearable motion sensor network. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Anchorage, AK, USA, 23–28 June 2008; pp. 1–8.

- Ward, J.A.; Lukowicz, P.; Troster, G.; Starner, T.E. Activity recognition of assembly tasks using body-worn microphones and accelerometers. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 1553–1567.

- Veltink, P.H.; Bussmann, H.B.J.; Vries, W.D.; Martens, W.L.J.; Lummel, R.C.V. Detection of Static and Dynamic Activities Using Uniaxial Accelerometers. IEEE Trans. Rehabil. Eng. 1996, 4, 375–386.

- Google APIs for Android: ActivityRecognitionAPI. Available online: https://developers.google.com/android/reference/com/google/android/gms/location/ActivityRecognitionApi (accessed on 11 October 2019).

- Rodriguez, C.; Castro, D.M.; Coral, W.; Cabra, J.L.; Velasquez, N.; Colorado, J.; Mendez, D.; Trujillo, L.C. IoT system for human activity recognition using BioHarness 3 and smartphone. In Proceedings of the International Conference on Future Networks and Distributed Systems, Cambridge, UK, 19–20 July 2017.

- Rueda, F.M.; Gernot, A.F. Learning Attribute Representation for Human Activity Recognition. In Proceedings of the 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018.

- Jalal, A.; Kim, Y.H.; Kim, Y.J.; Kamal, S.; Kim, D. Robust human activity recognition from depth video using spatiotemporal multi-fused features. Pattern Recognit. 2017, 61, 295–308.

- Chang, J.Y.; Shyu, J.J.; Cho, C.W. Fuzzy rule inference based human activity recognition. In Proceedings of the IEEE Control Applications, (CCA) & Intelligent Control, (ISIC), St. Petersburg, Russia, 8–10 July 2009; pp. 211–215.

- Farhadi, A.; Tabrizi, M.K. Learning to recognize activities from the wrong view point. In Proceedings of the European Conference on Computer Vision; Springer: Berlin, Germany, 2008; pp. 154–166.

- Amft, O.; Kusserow, M.; Troster, G. Bite weight prediction from acoustic recognition of chewing. IEEE Trans. Biomed. Eng. 2009, 56, 1663–1672.

- Bi, Y.; Lv, M.; Song, C.; Xu, W.; Guan, N.; Yi, W. Autodietary: A wearable acoustic sensor system for food intake recognition in daily life. IEEE Sens. J. 2016, 16, 806–816.

- Kalantarian, H.; Mortazavi, B.; Alshurafa, N.; Sideris, C.; Le, T.; Sarrafzadeh, M. A comparison of piezoelectric-based inertial sensing and audio-based detection of swallows. Obes. Med. 2016, 1, 6–14.

- Kalantarian, H.; Sarrafzadeh, M. Audio-based detection and evaluation of eating behavior using the smartwatch platform. Comput. Biol. Med. 2015, 65, 1–9.

- Hussain, G.; Javed, K.; Cho, J.; Yi, J. Food intake detection and classification using a necklace-type piezoelectric wearable sensor system. IEICE Trans. Inf. Syst. 2018, 101, 2795–2807.

- Kalantarian, H.; Alshurafa, N.; Le, T.; Sarrafzadeh, M. Monitoring eating habits using a piezoelectric sensor-based necklace. Comput. Biol. Med. 2015, 58, 46–55.

- Alshurafa, N.; Kalantarian, H.; Pourhomayoun, M.; Liu, J.J.; Sarin, S.; Shahbazi, B.; Sarrafzadeh, M. Recognition of nutrition intake using time-frequency decomposition in a wearable necklace using a piezoelectric sensor. IEEE Sens. J. 2015, 15, 3909–3916.

- Tamura, T.; Kimura, Y. Review of monitoring devices for food intake. CICSJ Bull. 2016, 34, 73–79.

- Starner, T.; Schiele, B.; Pentland, A. Visual Contextual Awareness in Wearable Computing. In Proceedings of the IEEE International Symposium on Wearable Computers, Pittsburgh, PA, USA, 19–20 October 1998; pp. 50–57.

- Khan, A.M.; Lee, Y.K.; Lee, S.Y.; Kim, T.-S. A triaxial accelerometer-based physical-activity recognition via augmented-signal features and a hierarchical recognizer. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 1166–1172.

- Day, N.; McKeown, N.; Wong, M.; Welch, A.; Bingham, S. Epidemiological assessment of diet: A comparison of a 7-day diary with a food frequency questionnaire using urinary markers of nitrogen, potassium and sodium. Int. J. Epidemiol. Oxf. 2001, 30, 309–317.

- Coulston, A.; Boushey, C. Nutrition in the Prevention and Treatment of Disease, 2nd ed.; Academic Press, Elsevier: Cambridge, MA, USA, 2008.

- Horst, C.H.; Boer, G.L.O.D.; Kromhout, D. Validity of the 24-Hour Recall Method in Infancy: The Leiden Pre-School Children Study. Int. J. Epidemiol. 1988, 17, 217–221.

- Amft, O.; Troster, G. Methods for Detection and Classification of Normal Swallowing from Muscle Activation and Sound. In Proceedings of the Pervasive Health Conference and Workshops, IEEE, Innsbruck, Austria, 29 November–1 December 2006; pp. 1–10.

- Dong, Y.; Scisco, J.; Wilson, M.; Muth, E.; Hoover, A. Detecting Periods of Eating during Free-Living by Tracking Wrist Motion. IEEE J. Biomed. Health Inform. 2014, 18, 1253–1260.

- Amft, O.; Tröster, G. Recognition of dietary activity events using on-body sensors. Artif. Intell. Med. 2008, 42, 121–136.

- Salley, J.; Hoover, A.; Wilson, M.; Muth, E.R. Comparison between Human and Bite-Based Methods of Estimating Caloric Intake. J. Acad. Nutr. Diet. 2016, 116, 1568–1577.

- Mattfeld, R.; Muth, E.; Hoover, A. Measuring the consumption of individual solid and liquid bites using a table embedded scale during unrestricted eating. IEEE J. Biomed. Health Inform. 2016, 21, 1711–1718.

- Zhou, B.; Cheng, J.; Sundholm, M.; Reiss, A.; Huang, W.; Amft, O.; Lukowicz, P. Smart Table Surface: A Novel Approach to Pervasive Dining Monitoring. In Proceedings of the IEEE International Conference on Pervasive Computing and Communications, St. Louis, MO, USA, 23–27 March 2015.

- Yao, N.; Sclabassi, R.J.; Liu, Q.; Sun, M. A video-based algorithm for food intake estimation in the study of obesity. In Proceedings of the IEEE Bioengineering Conference, Long Island, NY, USA, 10–11 March 2007.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Cao, W.; Wang, X.; Ming, Z.; Gao, J. A review on neural networks with random weights. Neurocomputing 2018, 275, 278–287.

- Niu, J.; Liu, Y.; Guizani, M.; Ouyang, Z. Deep CNN-based Real-time Traffic Light Detector for Self-driving Vehicles. IEEE Trans. Mob. Comput. 2019.

- He, R.; Wu, X.; Sun, Z.; Tan, T. Wasserstein cnn: Learning invariant features for nir-vis face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 41, 1761–1773.

- Sun, Y.; Wang, B.; Jin, J.; Wang, X. Deep Convolutional Network Method for Automatic Sleep Stage Classification Based on Neurophysiological Signals. In Proceedings of the 11th International Congress on Image and Signal Processing, BioMedical Engineering and Informatics (CISP-BMEI), Beijing, China, 13–15 October 2018.

- Caterini, A.L.; Chang, D.E. Deep Neural Networks in a Mathematical Framework; Springer: Cham, Switzerland, 2018.

- Hussain, M.; Bird, J.J.; Faria, D.R. A Study on CNN Transfer Learning for Image Classification. In Proceedings of the UK Workshop on Computational Intelligence, Nottingham, UK, 5–7 September 2018; Springer: Cham, Switzerland, 2018.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems (NIPS), Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.

- LDT with Crimps Vibration Sensor/Switch. Available online: https://cdn.sparkfun.com/datasheets/Sensors/ForceFlex/LDT_Series.pdf (accessed on 12 November 2019).

- Samsung Gear Fit 2 Pro Fitness Band Teardown. Available online: https://www.techinsights.com/blog/samsung-gear-fit-2-pro-fitness-band-teardown (accessed on 10 November 2019).

| Approach | Sensor(s) (number of classes) | Accuracy (%) |
|---|---|---|
| Human activity data collected with a triaxial accelerometer were used to train a one-dimensional CNN [11]. Performance degraded because of the low sampling frequency and the small number of activities. | Triaxial accelerometer (3) | 92.1 |
| Assembly-related activities were recognized using LDA and HMM, with an accelerometer and a microphone as signal sources [14]. The model generalizes poorly. | Accelerometer and microphone (9) | 75.9 |
| Google developed an API to recognize four physical activities, such as running, riding a bicycle, walking, and remaining stationary [16], using data gathered from smartphone sensors. The API suffers recognition errors owing to a poor signal segmentation technique. | Smartphone motion sensors (6) | 89 |
| A deep learning-based activity recognition system was designed to predict attributes that represent signal segments of physical activities [18]. Its limitations include computational complexity and error-prone attributes. | Seven inertial measurement units (5) | 90.8 |
| An embedded hardware system was designed to monitor food intake [23]. It consists mainly of a throat microphone worn around the participant's neck to collect food-related acoustic signals. Performance dropped sharply when surrounding noise interfered with those signals. | Throat microphone (7) | 84.9 |
| Two signal sources, a piezoelectric sensor and a microphone, were compared for dietary intake detection [24]. The maximum accuracy was 91.3% for the microphone and 79.4% for the piezoelectric sensor. Despite being affected by surrounding noise, the microphone performed better because the piezoelectric signal was poorly processed. | Microphone and piezoelectric (3) | 91.3 and 79.4 |
| A low-cost necklace embedded with a piezoelectric sensor was developed to monitor the subjects' food ingestion [27]. The wearable system recognized chips, water, and sandwich with accuracies of 85.3%, 81.4%, and 84.5%, respectively. | Piezoelectric (3) | 83.7 |
| A watch-like sensor configuration was used to detect periods of eating [36]. The method segmented the data manually and classified eating and non-eating episodes. | Accelerometer and gyroscope (2) | 81 |
| Proposed system: an activity and food recognition system built on the motion sensors of a smartwatch and a piezoelectric sensor. It uses the event similarity search algorithm, a new dynamic segmentation technique, to segment the sensor signals and annotate the segments automatically, and employs SVM and CNN models to recognize the eight activities and six food classes. | Accelerometer, gyroscope, and piezoelectric (8 and 6) | 94.3 and 91.9 |
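As a quick consistency check on the necklace row [27], the overall accuracy listed there matches the unweighted mean of the three per-food accuracies. This reading is an assumption; the text does not state how the overall value was computed.

$$
\frac{85.3 + 81.4 + 84.5}{3} = \frac{251.2}{3} \approx 83.7\%
$$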

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hussain, G.; Maheshwari, M.K.; Memon, M.L.; Jabbar, M.S.; Javed, K. A CNN Based Automated Activity and Food Recognition Using Wearable Sensor for Preventive Healthcare. Electronics 2019, 8, 1425. https://doi.org/10.3390/electronics8121425