Experimental Validation of a Reliable Palmprint Recognition System Based on 2D Ultrasound Images

Abstract

1. Introduction

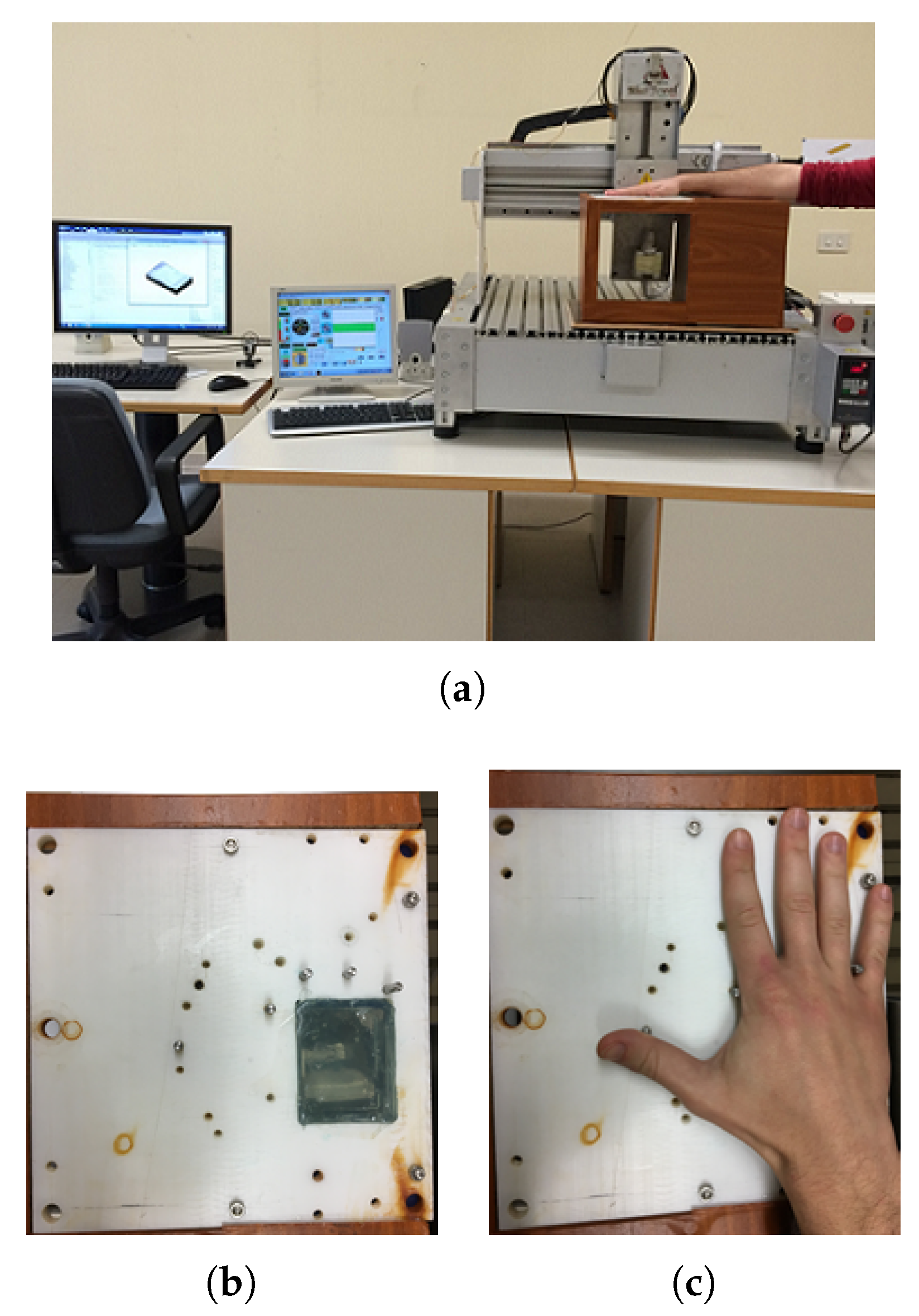

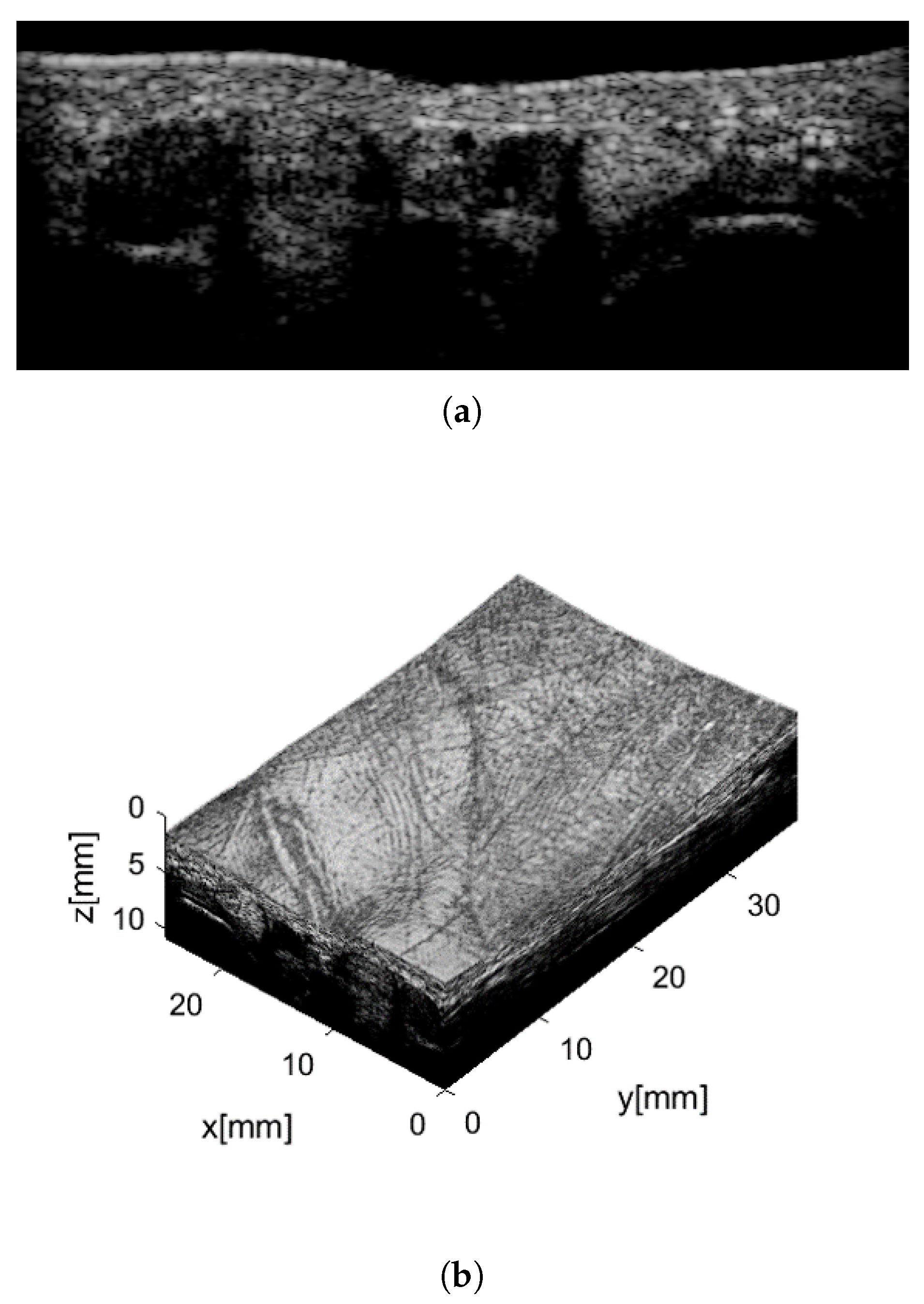

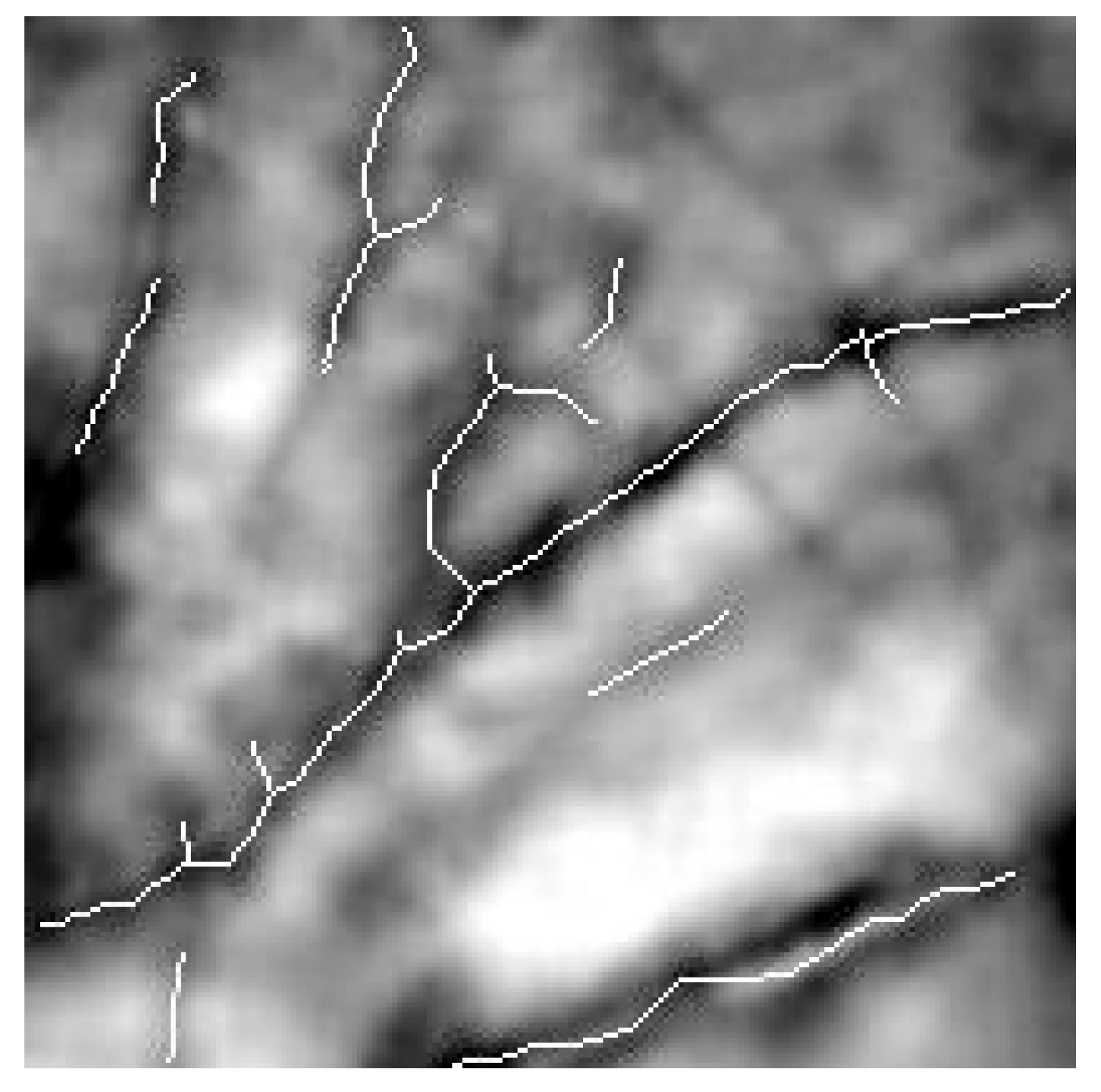

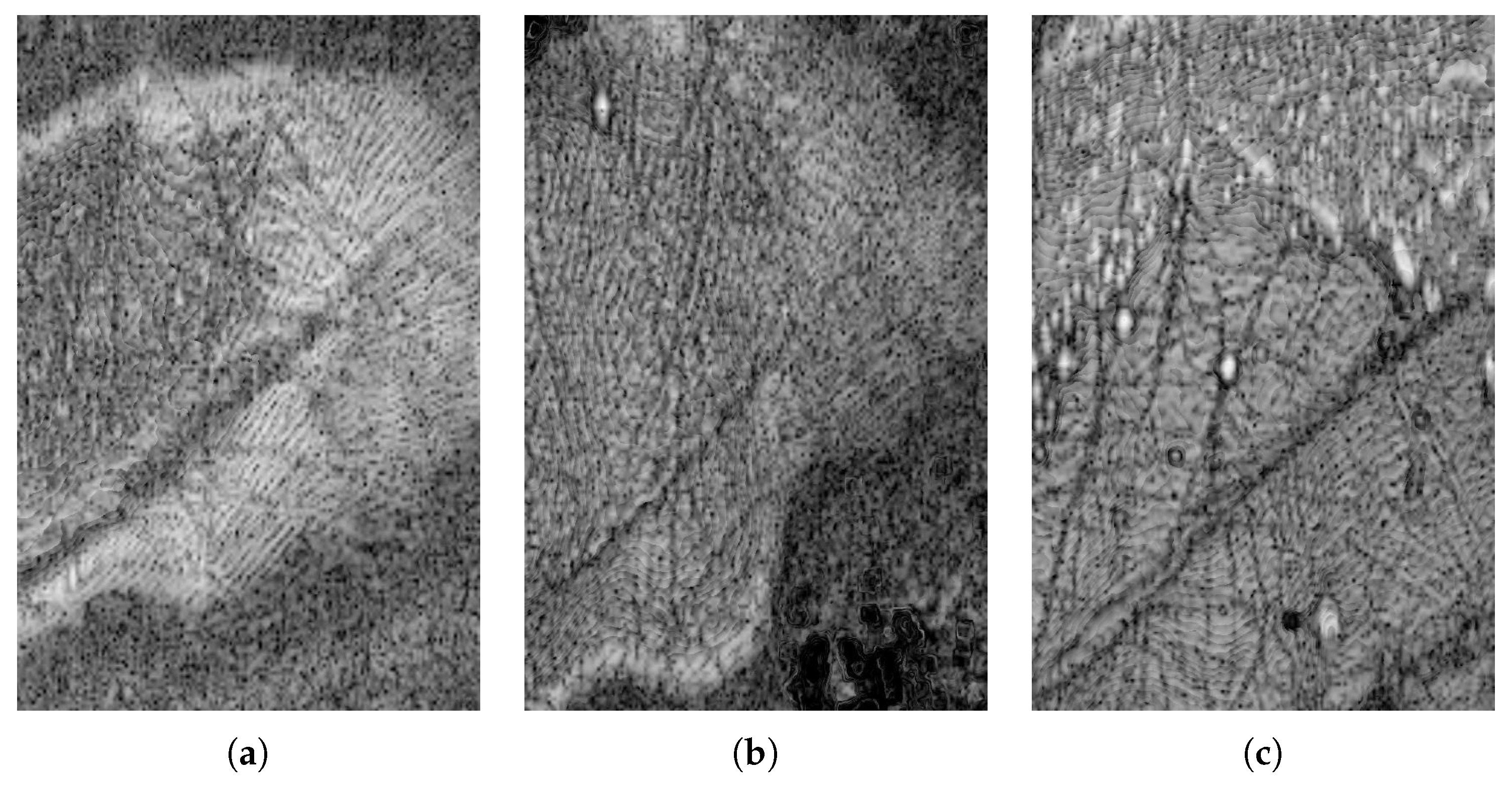

2. Ultrasound Imaging

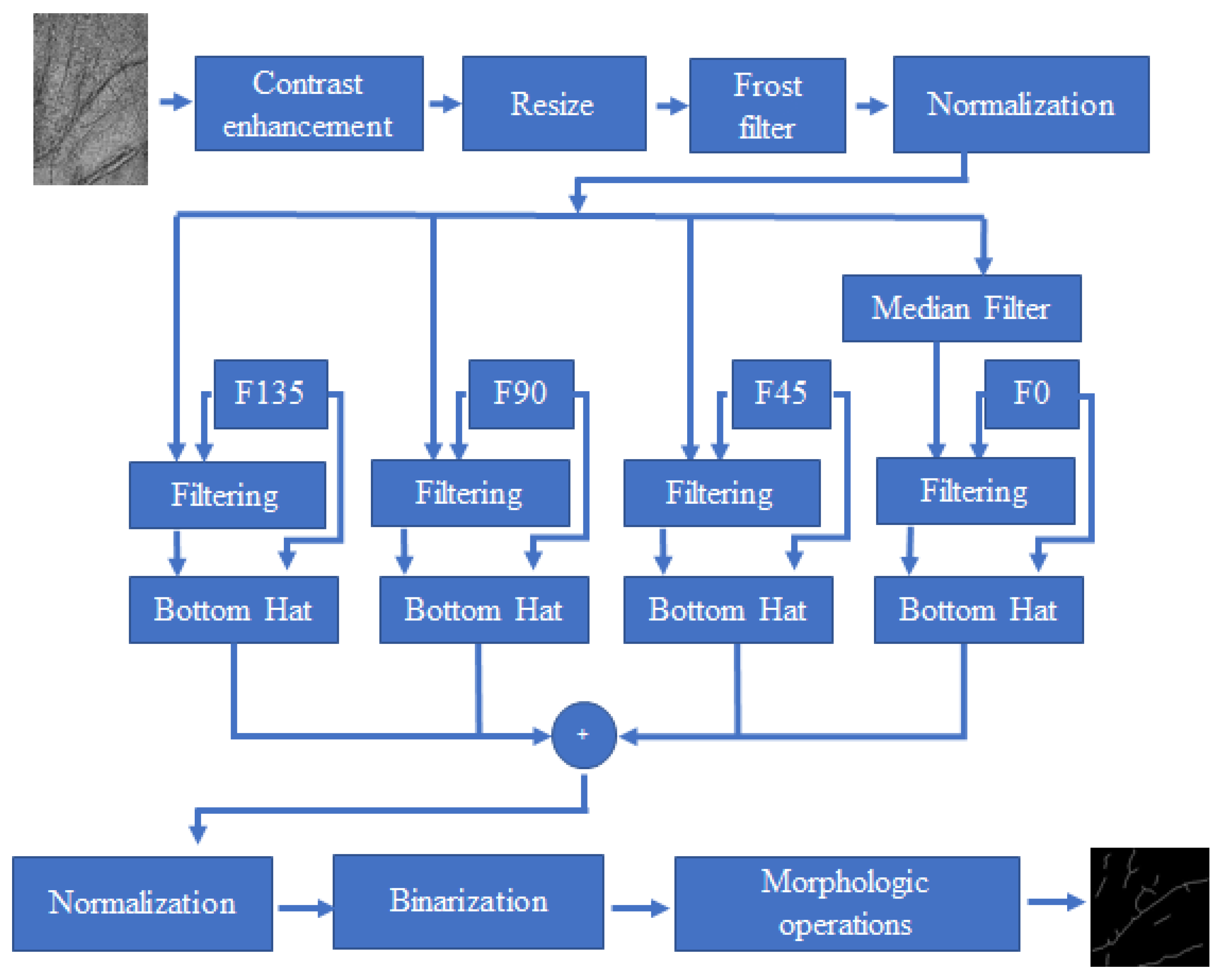

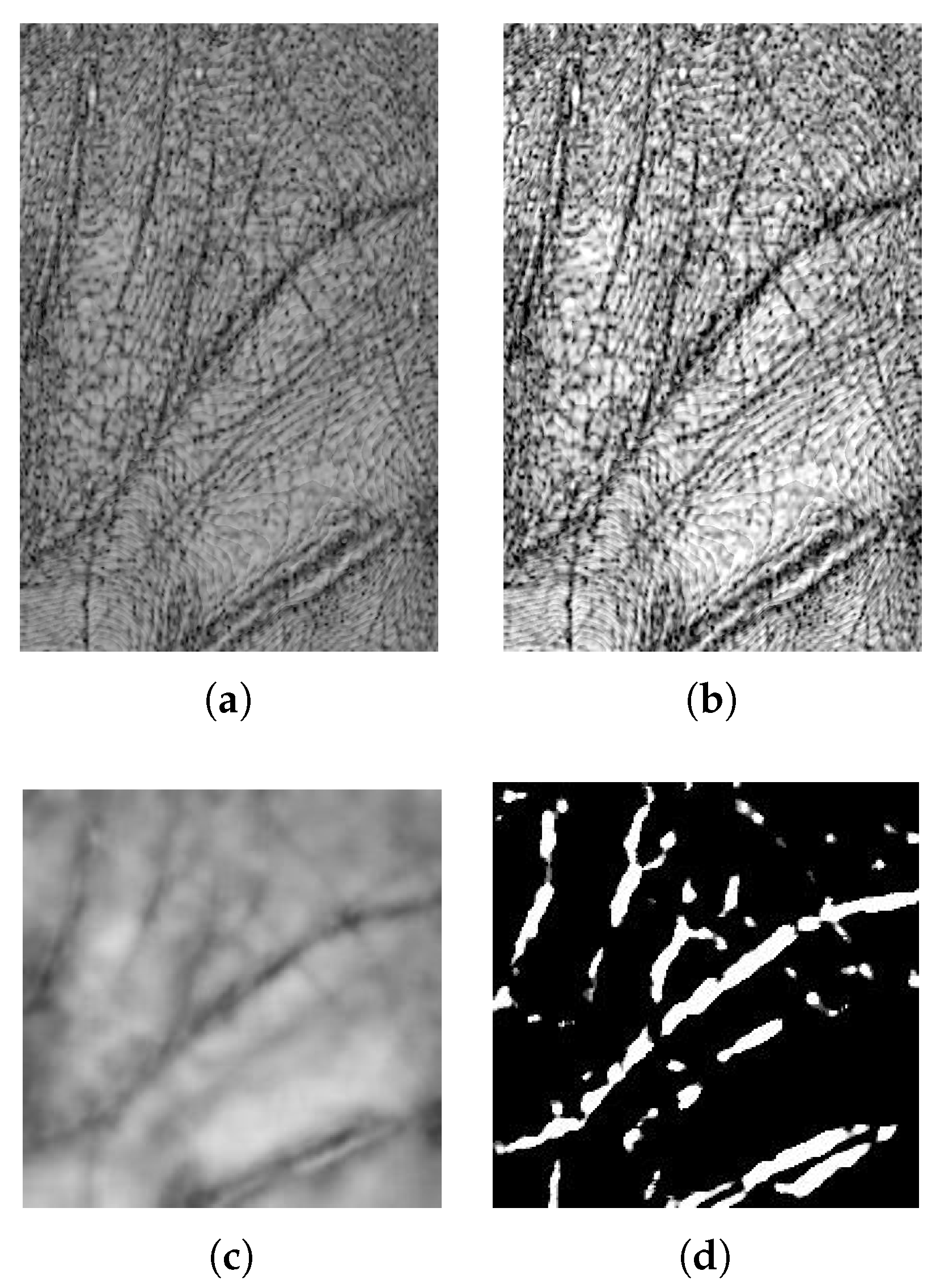

3. Features Extraction Procedure

4. Experimental Results

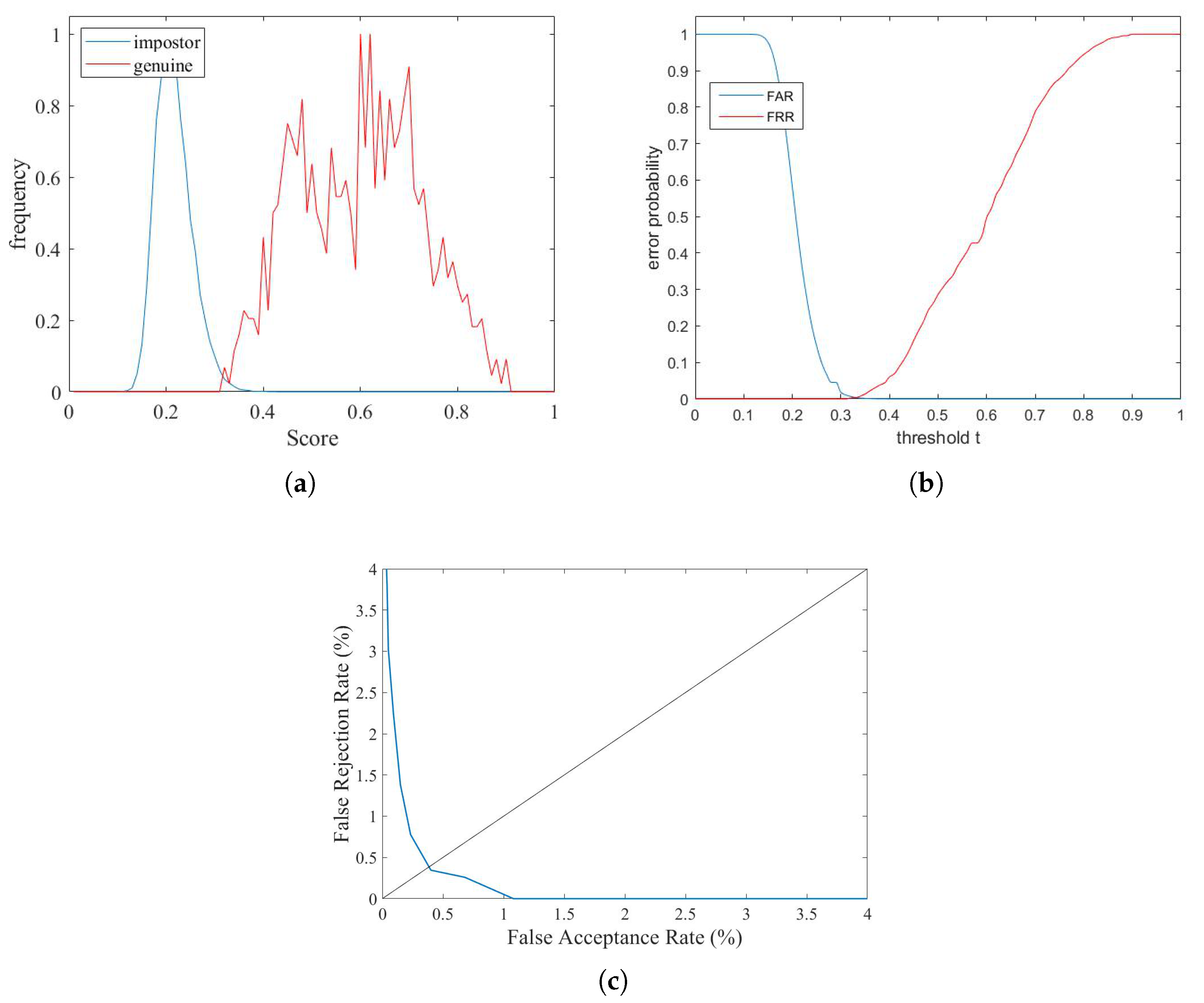

4.1. Verification Results

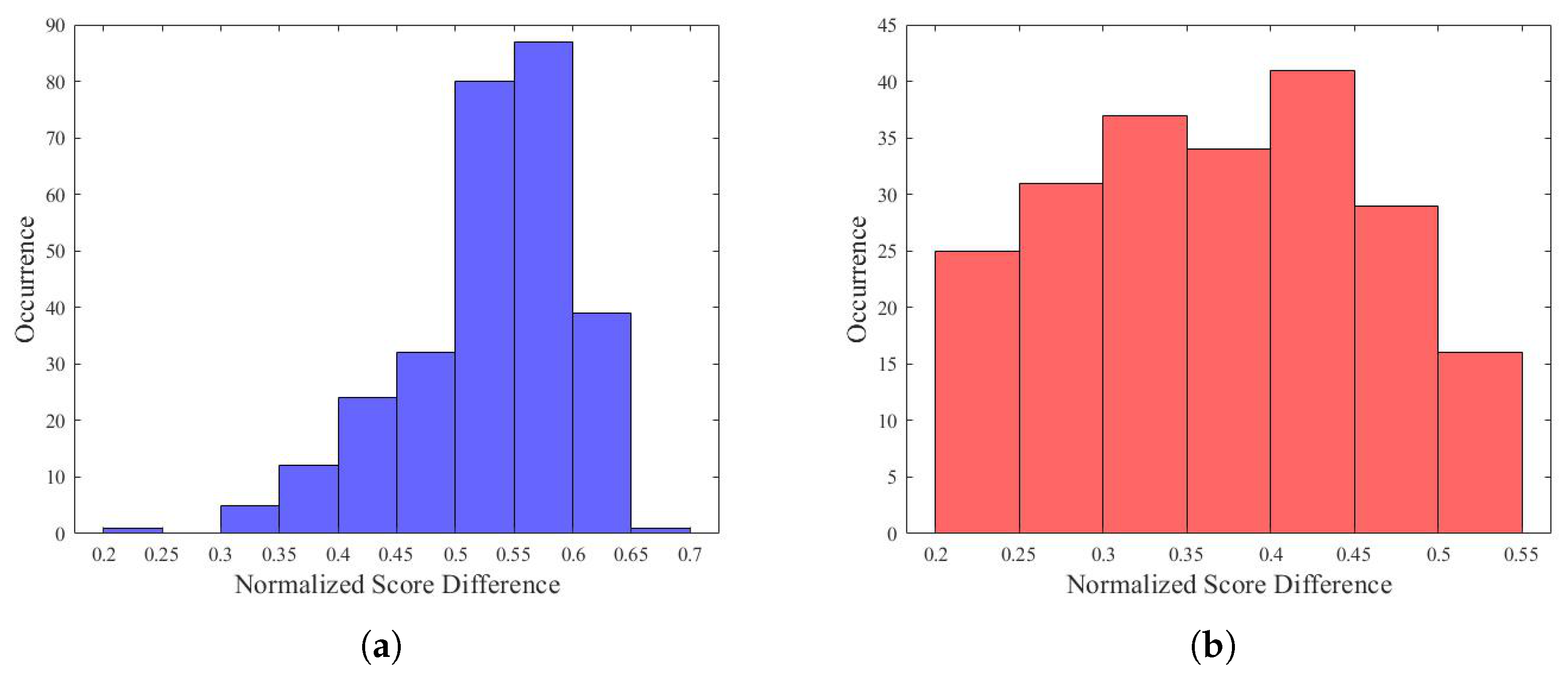

4.2. Identification Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Iula, A.; Micucci, M. Experimental Validation of a Reliable Palmprint Recognition System Based on 2D Ultrasound Images. Electronics 2019, 8, 1393. https://doi.org/10.3390/electronics8121393
