Design and Analysis for Early Warning of Rotor UAV Based on Data-Driven DBN

Abstract

1. Introduction

2. Mathematical Model of the Rotor UAV Flight Control System

2.1. Four-Rotor UAV Model

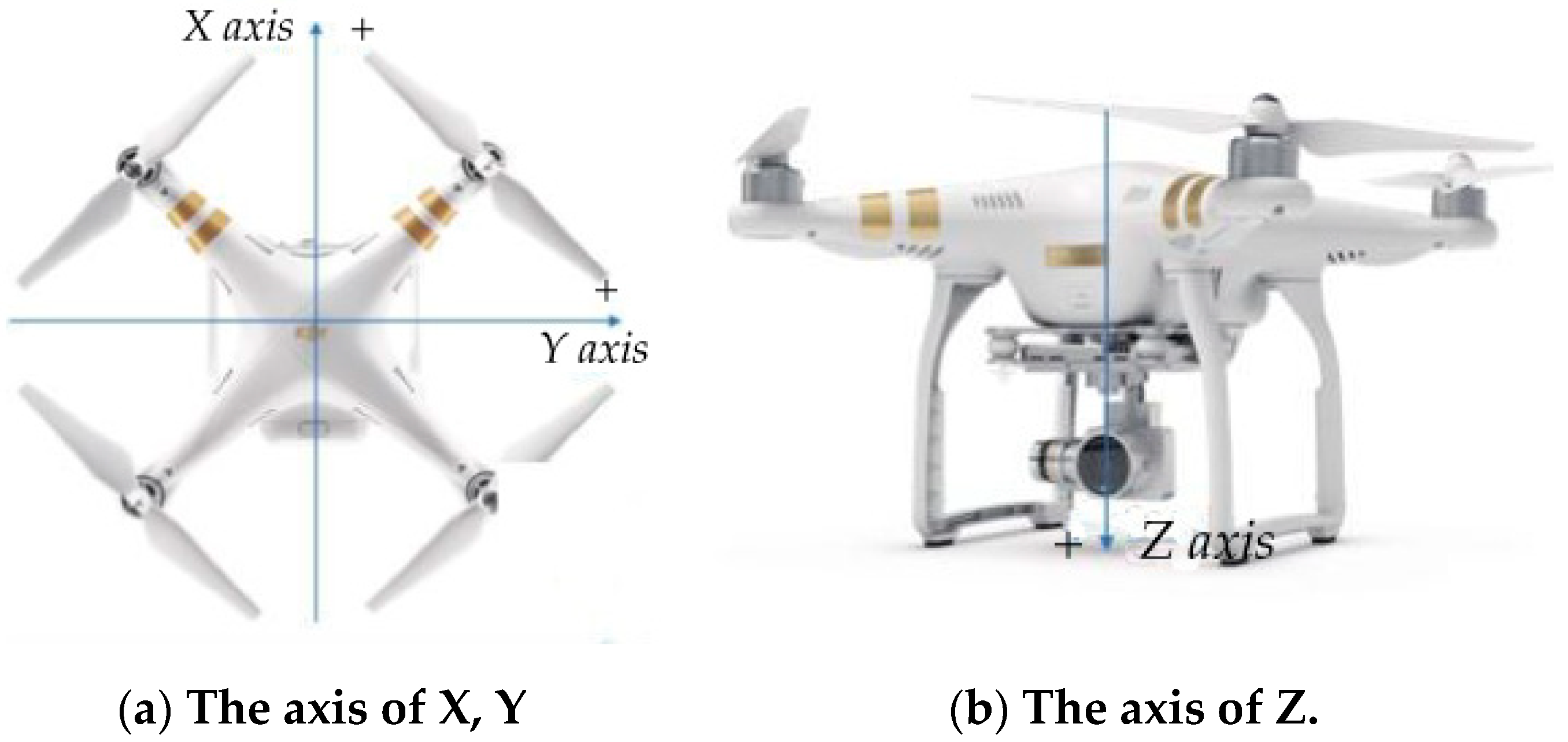

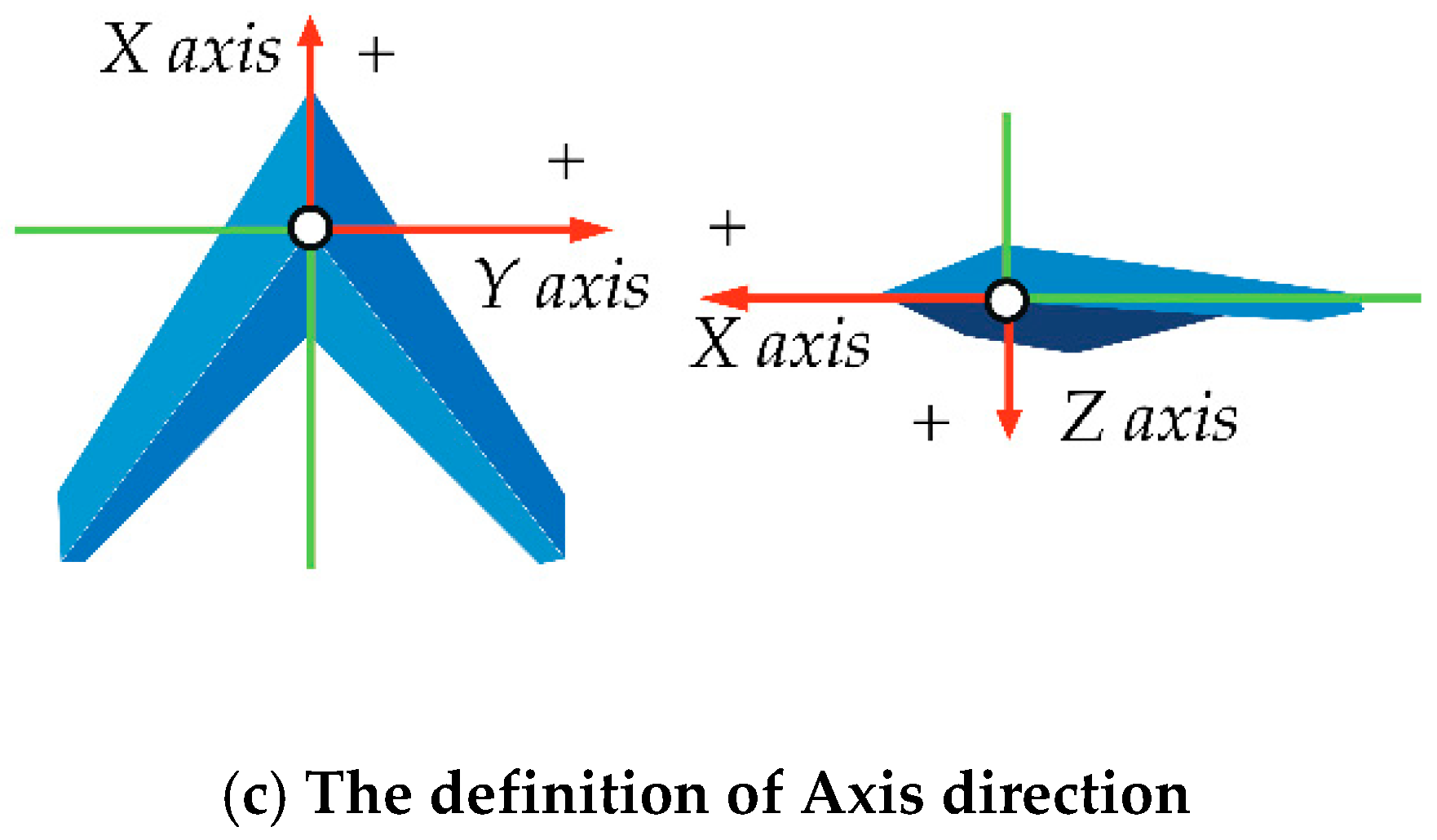

2.2. Flight Coordinate System Model

2.2.1. North East Coast Coordinate System

2.2.2. Aircraft Local Coordinate System

2.2.3. Speed Coordinate Systems

2.2.4. Kinematic Equations for Angular Velocity

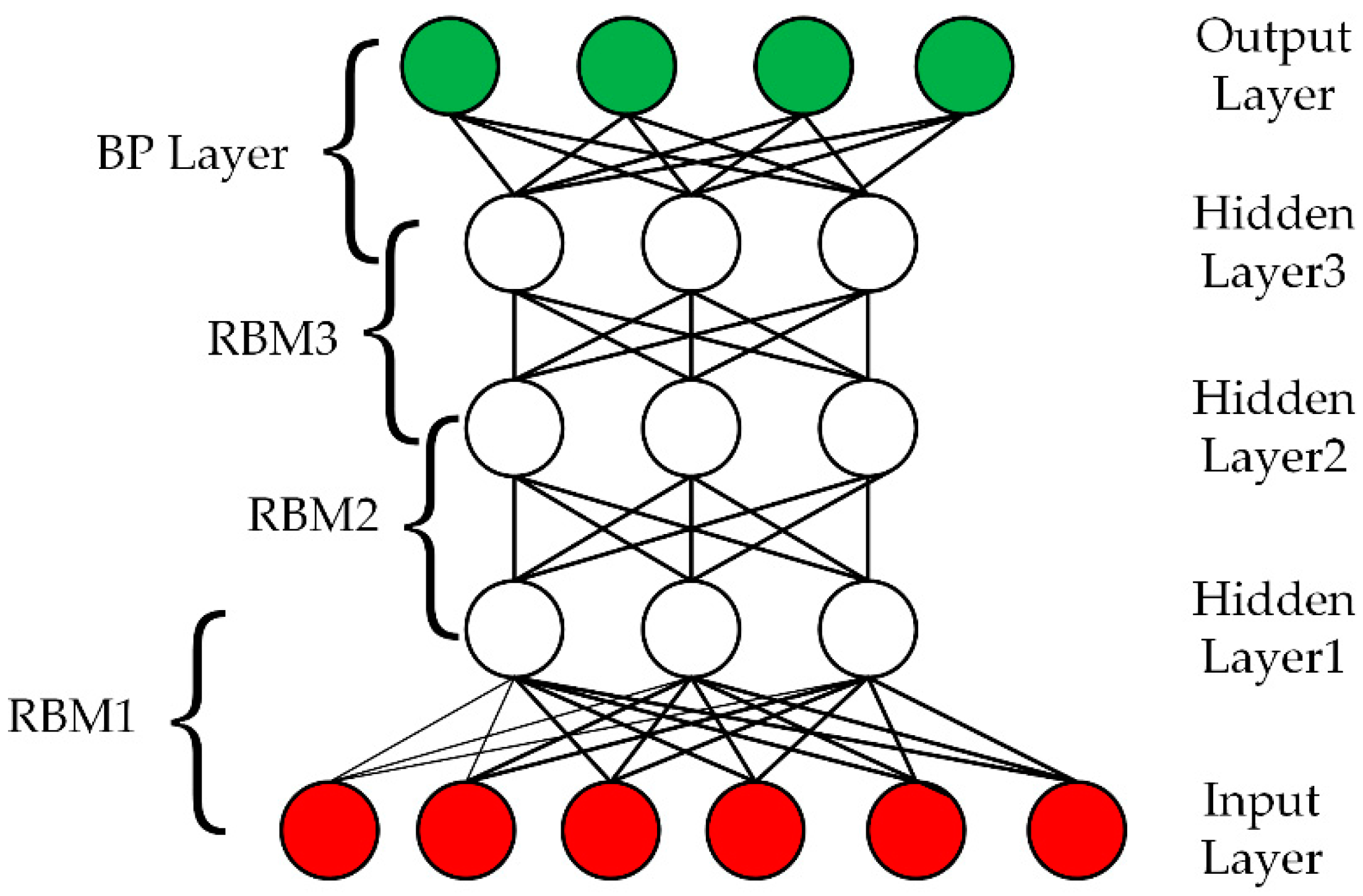

2.3. Deep Belief Network Model

3. Fault Diagnosis Method Based on Deep Belief Network

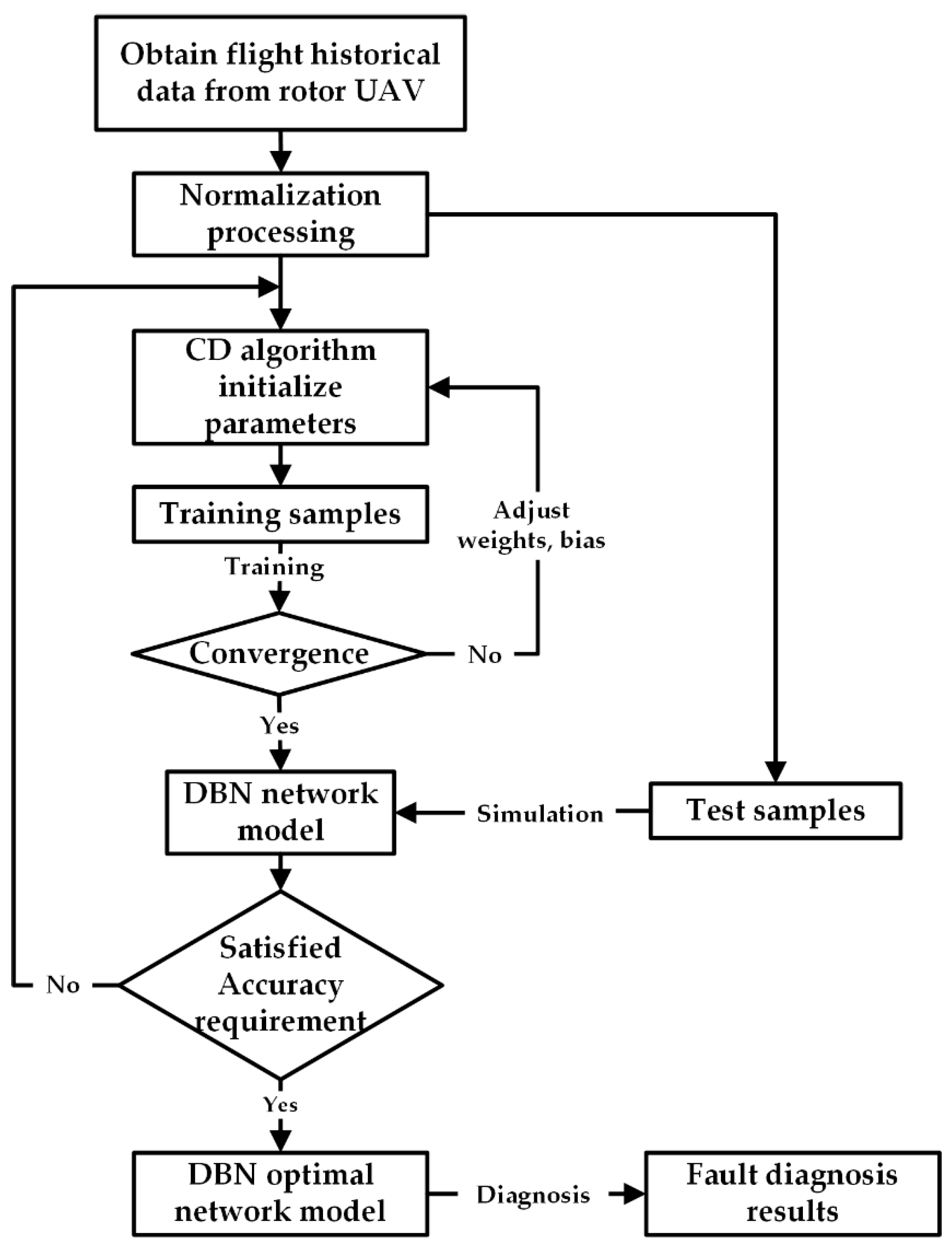

3.1. Off-Line Training Based on the DBN Model

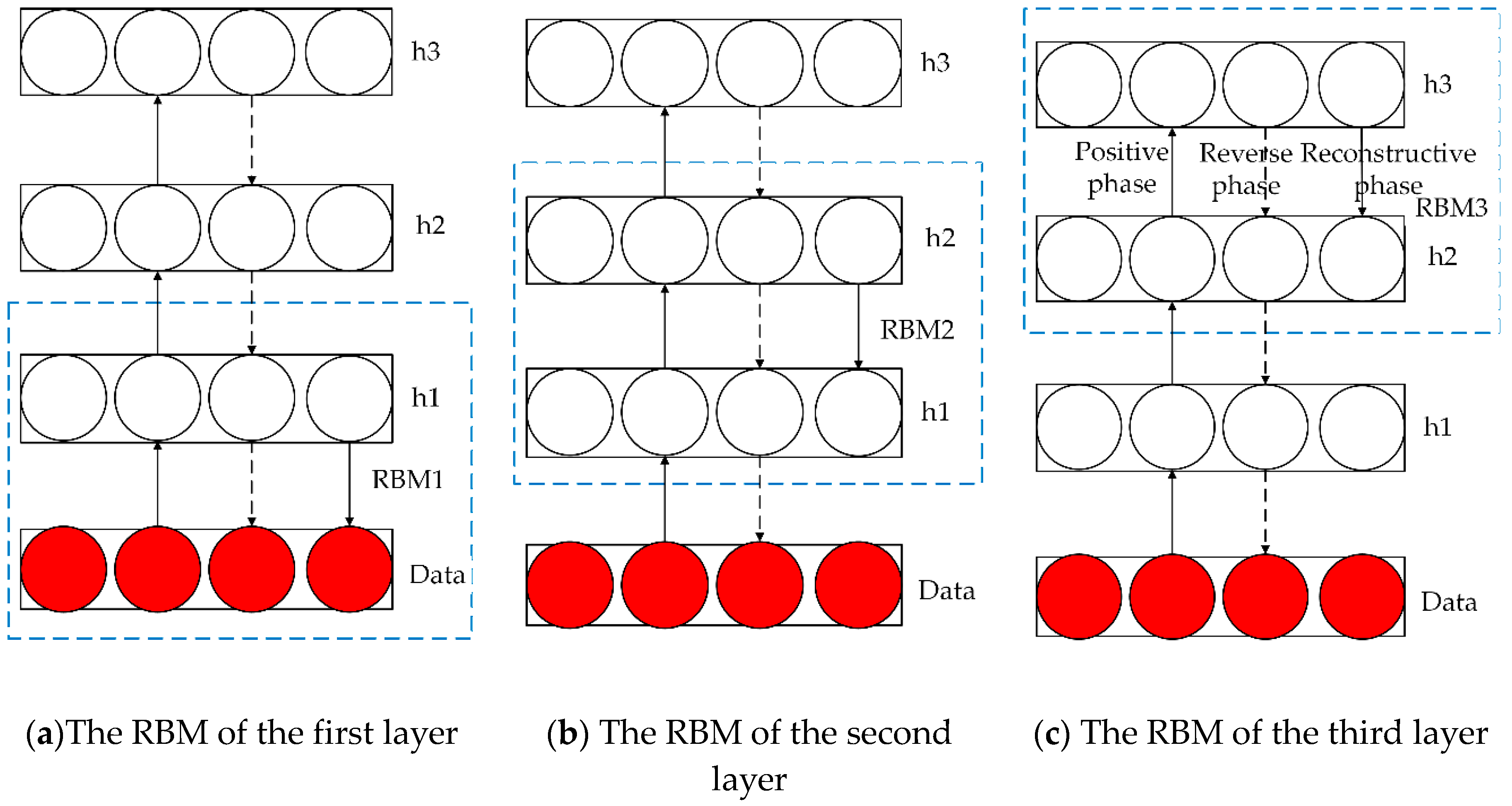

3.1.1. Deep Belief Network Feature Extraction

| Algorithm 1. Description of RBM update algorithm. |

| This is the RBM update procedure for binomial units. It can easily be adapted to other types of units. |

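| $x_1$ is a sample from the training distribution for the RBM |
| $\epsilon$ is a learning rate for the stochastic gradient descent in Contrastive Divergence |
| $W$ is the RBM weight matrix, of dimension (number of hidden units, number of inputs) |
| $b$ is the RBM offset vector for input units |
| $c$ is the RBM offset vector for hidden units |
| Notation: $Q(h_{2\cdot} = 1 \mid x_2)$ is the vector with elements $Q(h_{2i} = 1 \mid x_2)$ |
| for all hidden units $i$ do |
|   compute $Q(h_{1i} = 1 \mid x_1)$ (for binomial units, $\mathrm{sigm}(c_i + \sum_j W_{ij} x_{1j})$) |
|   sample $h_{1i} \in \{0, 1\}$ from $Q(h_{1i} \mid x_1)$ |
| end for |
| for all visible units $j$ do |
|   compute $P(x_{2j} = 1 \mid h_1)$ (for binomial units, $\mathrm{sigm}(b_j + \sum_i W_{ij} h_{1i})$) |
|   sample $x_{2j} \in \{0, 1\}$ from $P(x_{2j} = 1 \mid h_1)$ |
| end for |
| for all hidden units $i$ do |
|   compute $Q(h_{2i} = 1 \mid x_2)$ (for binomial units, $\mathrm{sigm}(c_i + \sum_j W_{ij} x_{2j})$) |
| end for |
| $W \leftarrow W + \epsilon \left( h_1 x_1^{\top} - Q(h_{2\cdot} = 1 \mid x_2)\, x_2^{\top} \right)$ |
| $b \leftarrow b + \epsilon (x_1 - x_2)$ |
| $c \leftarrow c + \epsilon \left( h_1 - Q(h_{2\cdot} = 1 \mid x_2) \right)$ |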
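The update procedure of Algorithm 1 can be sketched in plain Python as follows. This is a minimal illustration, assuming the standard one-step Contrastive Divergence (CD-1) rule for binomial units; the function name `rbm_update`, the list-based data layout, and the `rng` argument are choices made for this sketch and are not taken from the paper.

```python
import math
import random


def sigm(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


def rbm_update(x1, eps, W, b, c, rng=random):
    """One CD-1 update for a binary RBM; mutates W, b, c in place.

    W has shape (n_hidden, n_visible); b is the visible offset vector
    and c the hidden offset vector. Returns the sampled reconstruction x2.
    """
    n_hidden, n_visible = len(W), len(b)

    # Positive phase: compute Q(h1_i = 1 | x1), then sample h1.
    q_h1 = [sigm(c[i] + sum(W[i][j] * x1[j] for j in range(n_visible)))
            for i in range(n_hidden)]
    h1 = [1 if rng.random() < q else 0 for q in q_h1]

    # Reconstruction: compute P(x2_j = 1 | h1), then sample x2.
    p_x2 = [sigm(b[j] + sum(W[i][j] * h1[i] for i in range(n_hidden)))
            for j in range(n_visible)]
    x2 = [1 if rng.random() < p else 0 for p in p_x2]

    # Negative phase: Q(h2_i = 1 | x2) is used as a probability, not sampled.
    q_h2 = [sigm(c[i] + sum(W[i][j] * x2[j] for j in range(n_visible)))
            for i in range(n_hidden)]

    # Parameter updates with learning rate eps.
    for i in range(n_hidden):
        for j in range(n_visible):
            W[i][j] += eps * (h1[i] * x1[j] - q_h2[i] * x2[j])
        c[i] += eps * (h1[i] - q_h2[i])
    for j in range(n_visible):
        b[j] += eps * (x1[j] - x2[j])
    return x2
```

Passing a seeded `random.Random` instance as `rng` makes a run reproducible, which helps when comparing learning rates.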

3.1.2. Deep Belief Network Training

| Algorithm 2. CD-k algorithm description. |

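| Input: RBM $(V_1, \dots, V_m, H_1, \dots, H_n)$, training batch $S$ |
| Output: gradient approximation $\Delta w_{ij}$, $\Delta b_j$, and $\Delta c_i$ for $i = 1, \dots, n$, $j = 1, \dots, m$ |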
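As an illustration of Algorithm 2, the following sketch computes a CD-k gradient approximation over a training batch. It assumes the commonly used formulation (k steps of block Gibbs sampling starting from each training vector, with hidden probabilities used for the statistics); the function names, the list-based layout, and the choice to sum rather than average over the batch are assumptions of this sketch, not details confirmed by the paper.

```python
import math
import random


def sigm(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


def hidden_probs(v, W, c):
    """p(h_i = 1 | v) for each hidden unit i."""
    return [sigm(c[i] + sum(w * vj for w, vj in zip(W[i], v)))
            for i in range(len(c))]


def visible_probs(h, W, b):
    """p(v_j = 1 | h) for each visible unit j."""
    return [sigm(b[j] + sum(W[i][j] * h[i] for i in range(len(h))))
            for j in range(len(b))]


def sample(probs, rng):
    """Draw independent Bernoulli samples with the given probabilities."""
    return [1 if rng.random() < p else 0 for p in probs]


def cd_k(batch, W, b, c, k=1, rng=random):
    """Return (dW, db, dc), the CD-k gradient approximation summed over the batch."""
    n_hidden, n_visible = len(c), len(b)
    dW = [[0.0] * n_visible for _ in range(n_hidden)]
    db = [0.0] * n_visible
    dc = [0.0] * n_hidden
    for v0 in batch:
        vk = list(v0)
        for _ in range(k):  # k steps of block Gibbs sampling
            h = sample(hidden_probs(vk, W, c), rng)
            vk = sample(visible_probs(h, W, b), rng)
        p0 = hidden_probs(v0, W, c)  # statistics driven by the data
        pk = hidden_probs(vk, W, c)  # statistics after k Gibbs steps
        for i in range(n_hidden):
            for j in range(n_visible):
                dW[i][j] += p0[i] * v0[j] - pk[i] * vk[j]
            dc[i] += p0[i] - pk[i]
        for j in range(n_visible):
            db[j] += v0[j] - vk[j]
    return dW, db, dc
```

The returned approximation would typically be scaled by a learning rate and added to `W`, `b`, and `c`; that step is left to the caller so the same routine can serve different optimizers.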

| Algorithm 3. Description of the train unsupervised DBN algorithm. |

| Train a DBN in a purely unsupervised way, with the greedy layer-wise procedure in which each added layer is trained as an RBM. |

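| $\hat{P}$ is the input training distribution for the network |
| $\epsilon$ is a learning rate for the RBM training |
| $\ell$ is the number of layers to train |
| $W^k$ is the weight matrix for level $k$, for $k$ from 1 to $\ell$ |
| $b^k$ is the visible units offset vector for RBM at level $k$, for $k$ from 1 to $\ell$ |
| $c^k$ is the hidden units offset vector for RBM at level $k$, for $k$ from 1 to $\ell$ |
| mean_field_computation is a Boolean that is true if training data at each additional level is obtained by a mean-field approximation instead of stochastic sampling |
| for $k = 1$ to $\ell$ do |
|   initialize $W^k = 0$, $b^k = 0$, $c^k = 0$ |
|   while not stopping criterion do |
|     sample $h^0 = x$ from $\hat{P}$ |
|     for $i = 1$ to $k - 1$ do |
|       if mean_field_computation then |
|         assign $h^i_j$ to $Q(h^i_j = 1 \mid h^{i-1})$, for all elements $j$ of $h^i$ |
|       else |
|         sample $h^i_j$ from $Q(h^i_j \mid h^{i-1})$, for all elements $j$ of $h^i$ |
|       end if |
|     end for |
|     RBMupdate($h^{k-1}$, $\epsilon$, $W^k$, $b^k$, $c^k$) {thus providing $Q(h^k \mid h^{k-1})$ for future use} |
|   end while |
| end for |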
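The greedy layer-wise procedure of Algorithm 3 can be sketched as follows. This is an illustrative, self-contained version rather than the authors' implementation: the `sizes` argument, the fixed `steps` count that stands in for the unspecified stopping criterion, and all helper names are assumptions, and the inner `rbm_update` is a compact CD-1 step for binomial units.

```python
import math
import random


def sigm(z):
    """Logistic sigmoid."""
    return 1.0 / (1.0 + math.exp(-z))


def up(v, W, c):
    """Q(h_i = 1 | v) for every hidden unit of one layer."""
    return [sigm(ci + sum(w * x for w, x in zip(row, v)))
            for row, ci in zip(W, c)]


def down(h, W, b):
    """P(v_j = 1 | h) for every visible unit of one layer."""
    return [sigm(b[j] + sum(W[i][j] * h[i] for i in range(len(h))))
            for j in range(len(b))]


def draw(probs, rng):
    """Draw independent Bernoulli samples with the given probabilities."""
    return [1 if rng.random() < p else 0 for p in probs]


def rbm_update(x1, eps, W, b, c, rng):
    """CD-1 update of one RBM, in place."""
    h1 = draw(up(x1, W, c), rng)
    x2 = draw(down(h1, W, b), rng)
    q2 = up(x2, W, c)
    for i in range(len(c)):
        for j in range(len(b)):
            W[i][j] += eps * (h1[i] * x1[j] - q2[i] * x2[j])
        c[i] += eps * (h1[i] - q2[i])
    for j in range(len(b)):
        b[j] += eps * (x1[j] - x2[j])


def train_unsupervised_dbn(data, eps, sizes, steps=100, mean_field=True, seed=0):
    """Greedy layer-wise training.

    sizes = [n_visible, n_hidden_1, ..., n_hidden_l]; returns one
    (W, b, c) tuple per trained RBM, bottom layer first.
    """
    rng = random.Random(seed)
    layers = []
    for k in range(1, len(sizes)):
        # Initialize the parameters of the RBM at level k to zero.
        W = [[0.0] * sizes[k - 1] for _ in range(sizes[k])]
        b = [0.0] * sizes[k - 1]
        c = [0.0] * sizes[k]
        for _ in range(steps):  # fixed count stands in for the stopping criterion
            h = list(rng.choice(data))  # h^0 = x drawn from the training set
            for W_i, _b_i, c_i in layers:  # pass through already trained levels
                q = up(h, W_i, c_i)
                h = q if mean_field else draw(q, rng)
            rbm_update(h, eps, W, b, c, rng)
        layers.append((W, b, c))
    return layers
```

For example, `train_unsupervised_dbn(data, 0.1, [4, 3, 2])` would train two stacked RBMs on 4-dimensional binary inputs. With `mean_field=True`, the upper RBMs are trained on real-valued activation probabilities instead of binary samples.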

3.2. Objective Function Establishment of DBN Fault Diagnosis Model

3.3. Online Diagnosis Based on the DBN Model

4. Experiment and Analysis

4.1. Experimental Platform

4.2. Model Evaluation Index Determination

4.2.1. The Description of the RMSE

4.2.2. The Description of the R²
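The two evaluation indices named in Section 4.2 can be computed as in the sketch below. It assumes the standard definitions: the root mean square error, and the coefficient of determination as one minus the ratio of the residual sum of squares to the total sum of squares. The function names are illustrative, and the paper may define the indices with slightly different conventions.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between measured and predicted values."""
    n = len(y_true)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def r2(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot.

    Undefined (division by zero) when y_true is constant.
    """
    mean = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot
```

A lower RMSE and an R² closer to 1 both indicate that the predicted attitude values track the measured ones more closely.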

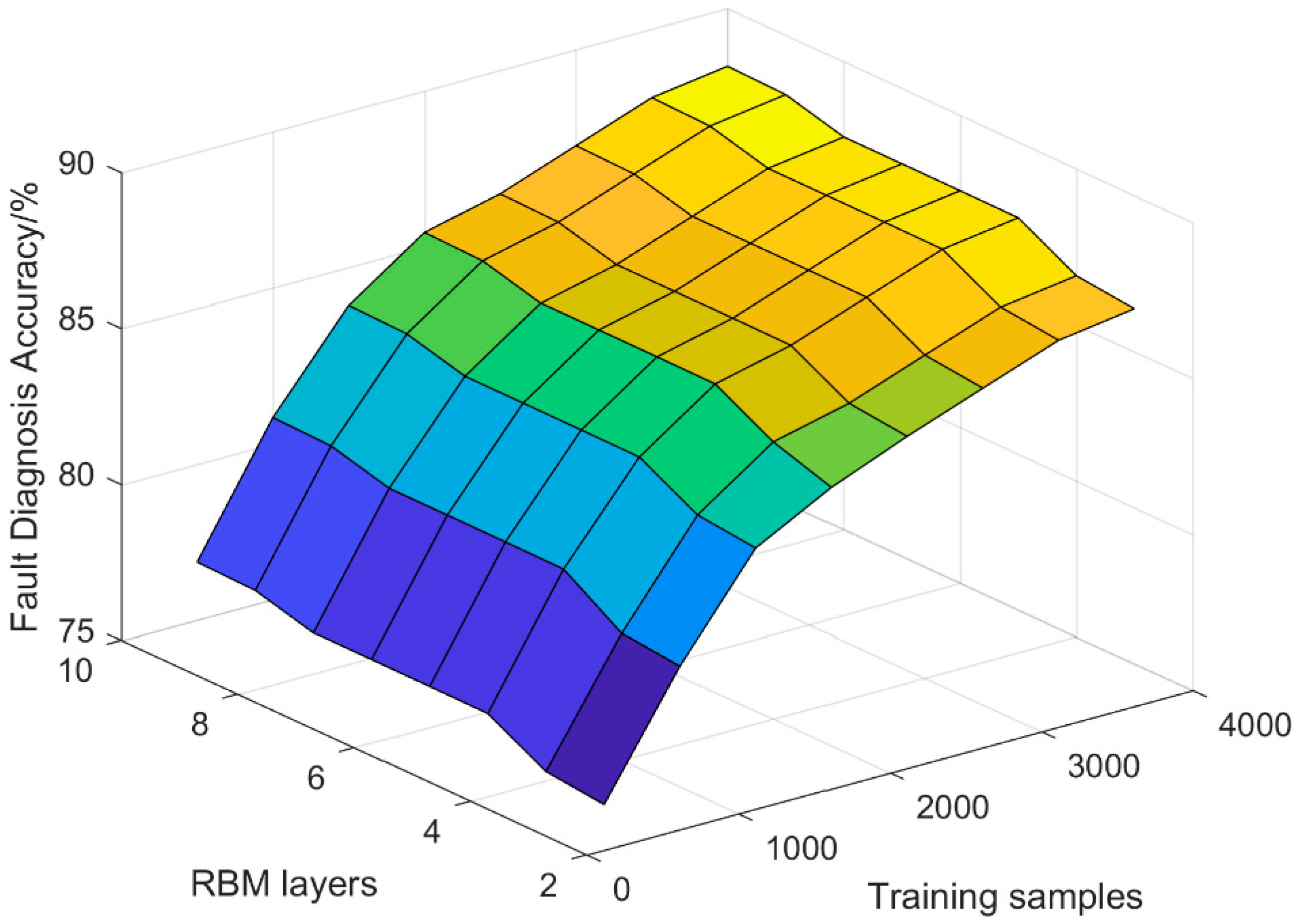

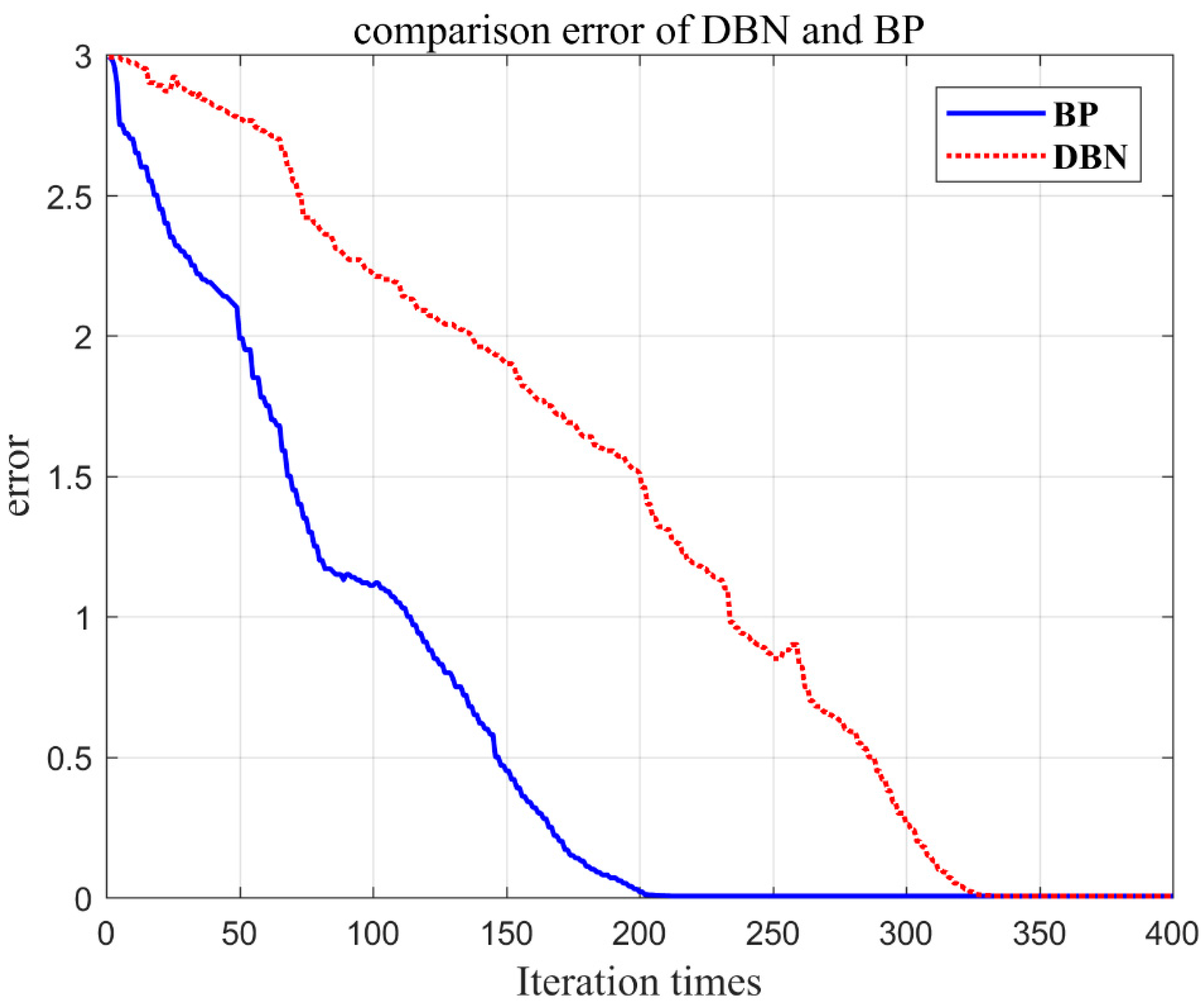

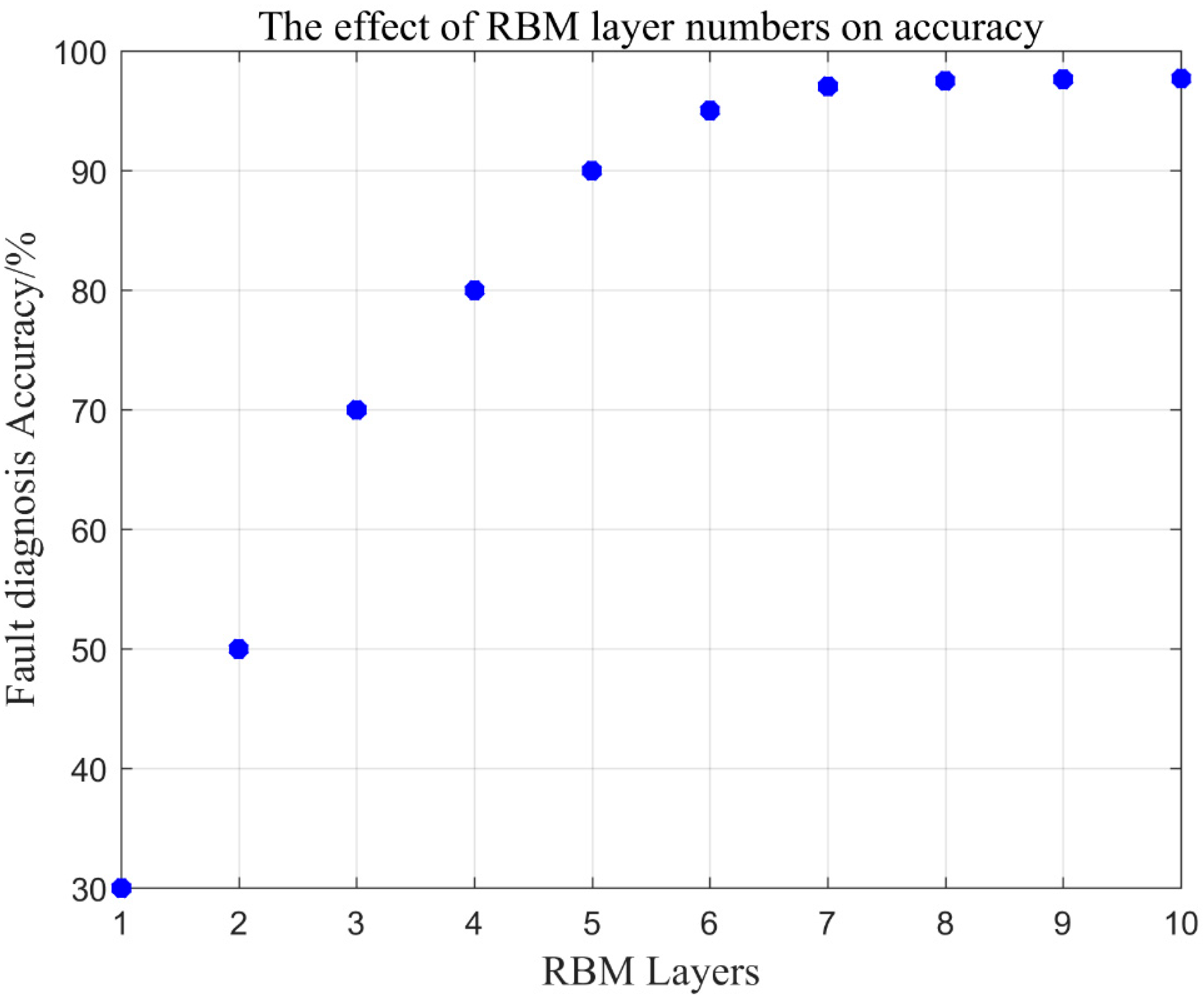

4.3. Model Structure Selection and Training Results

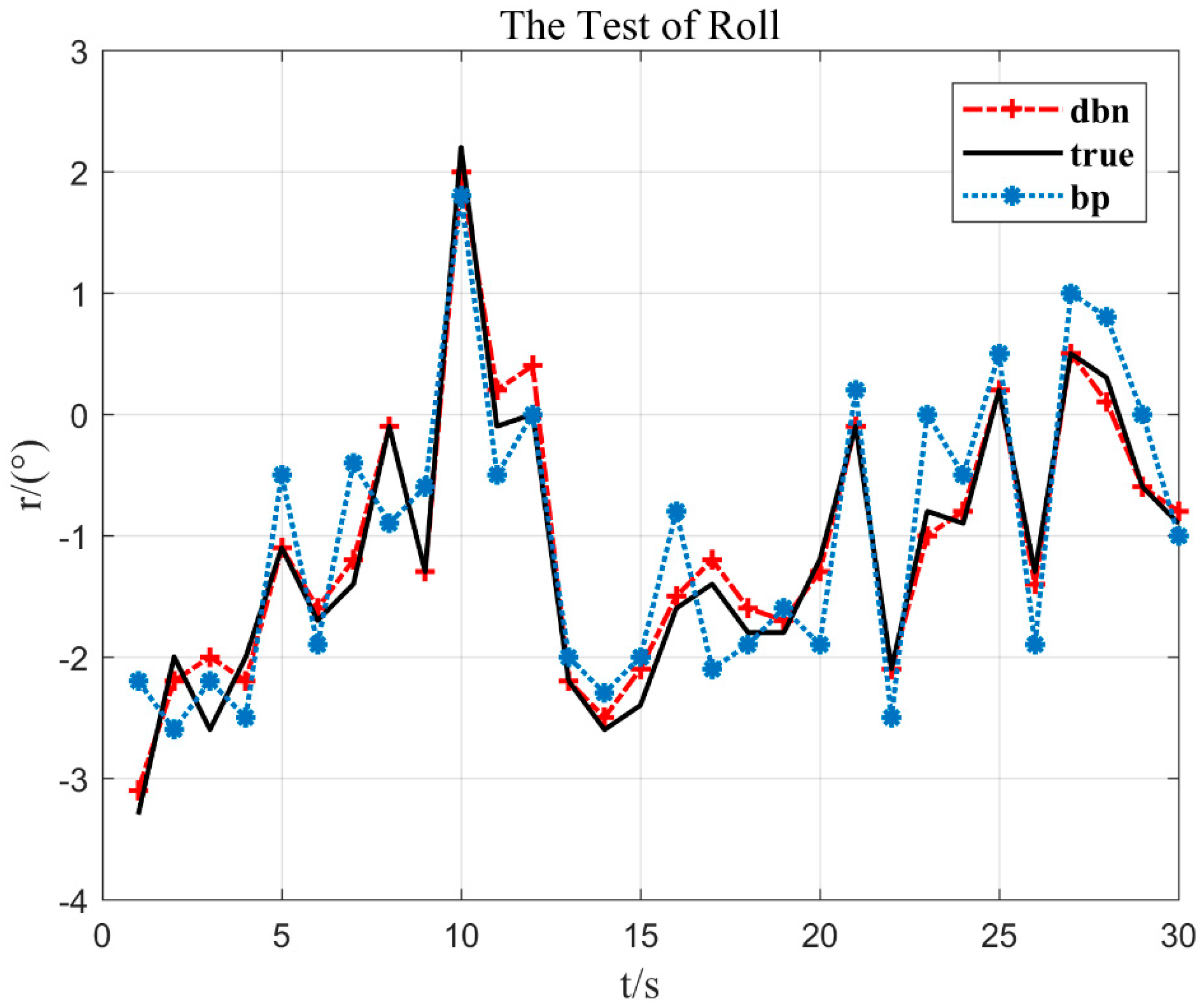

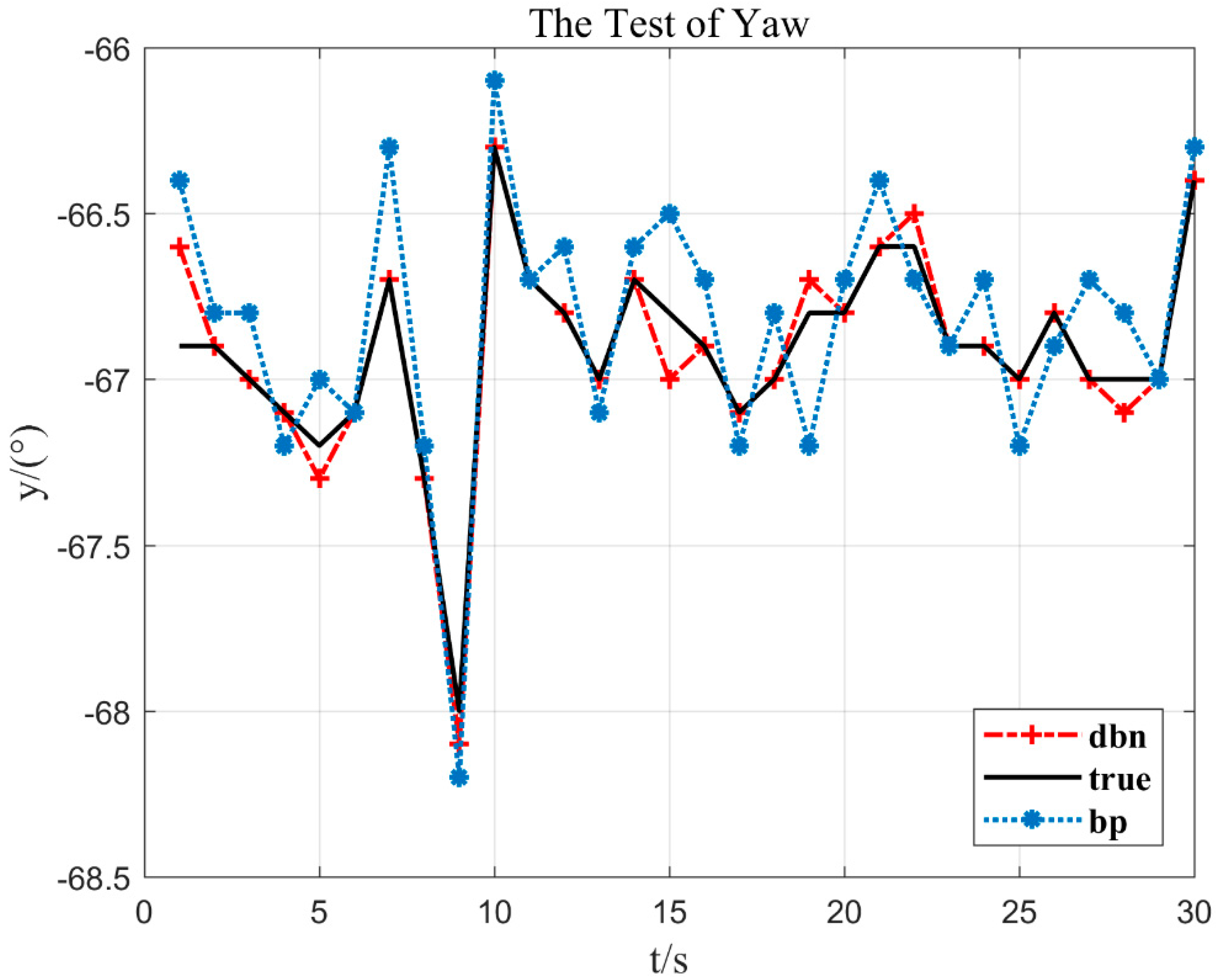

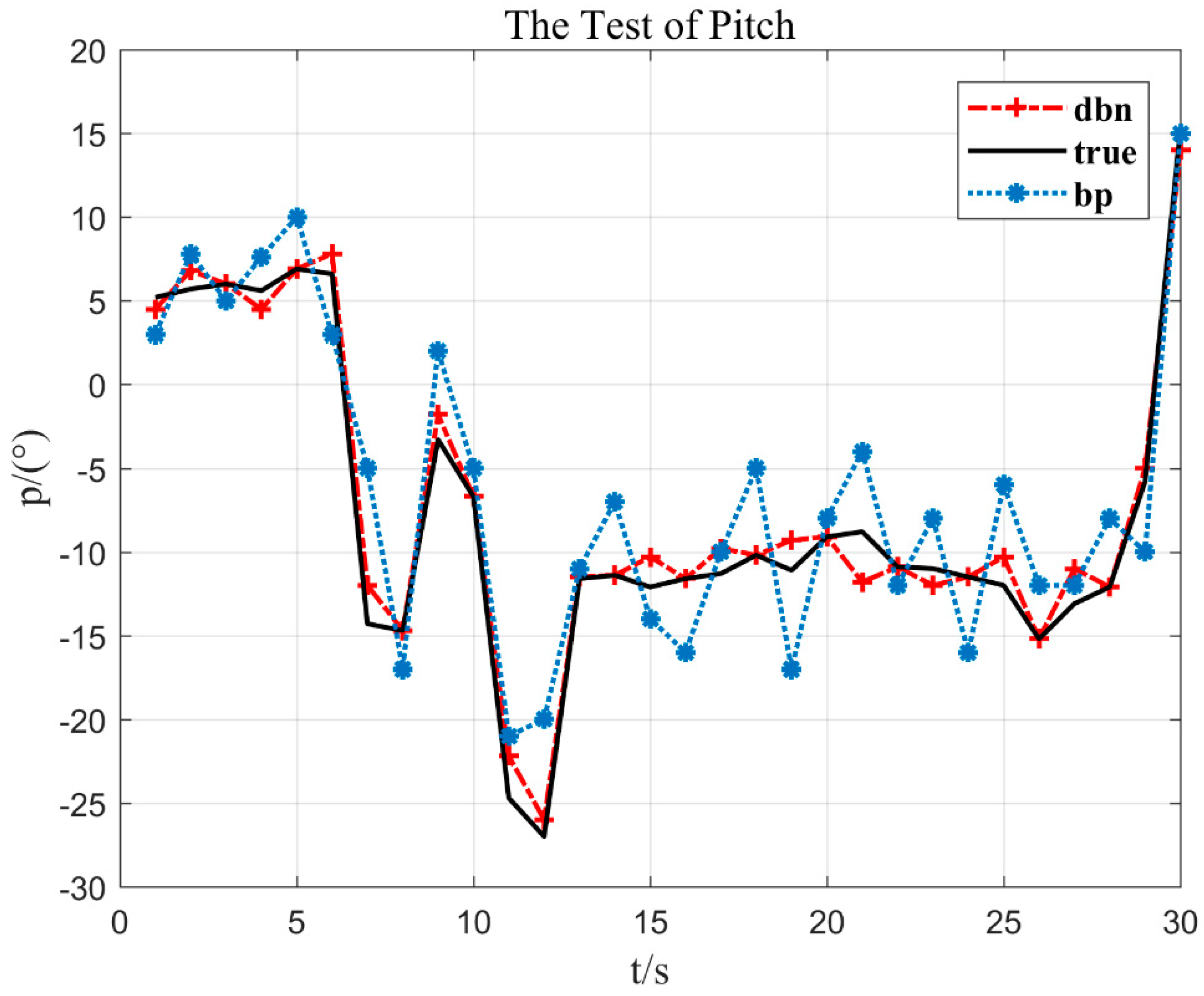

4.4. Experimental Results

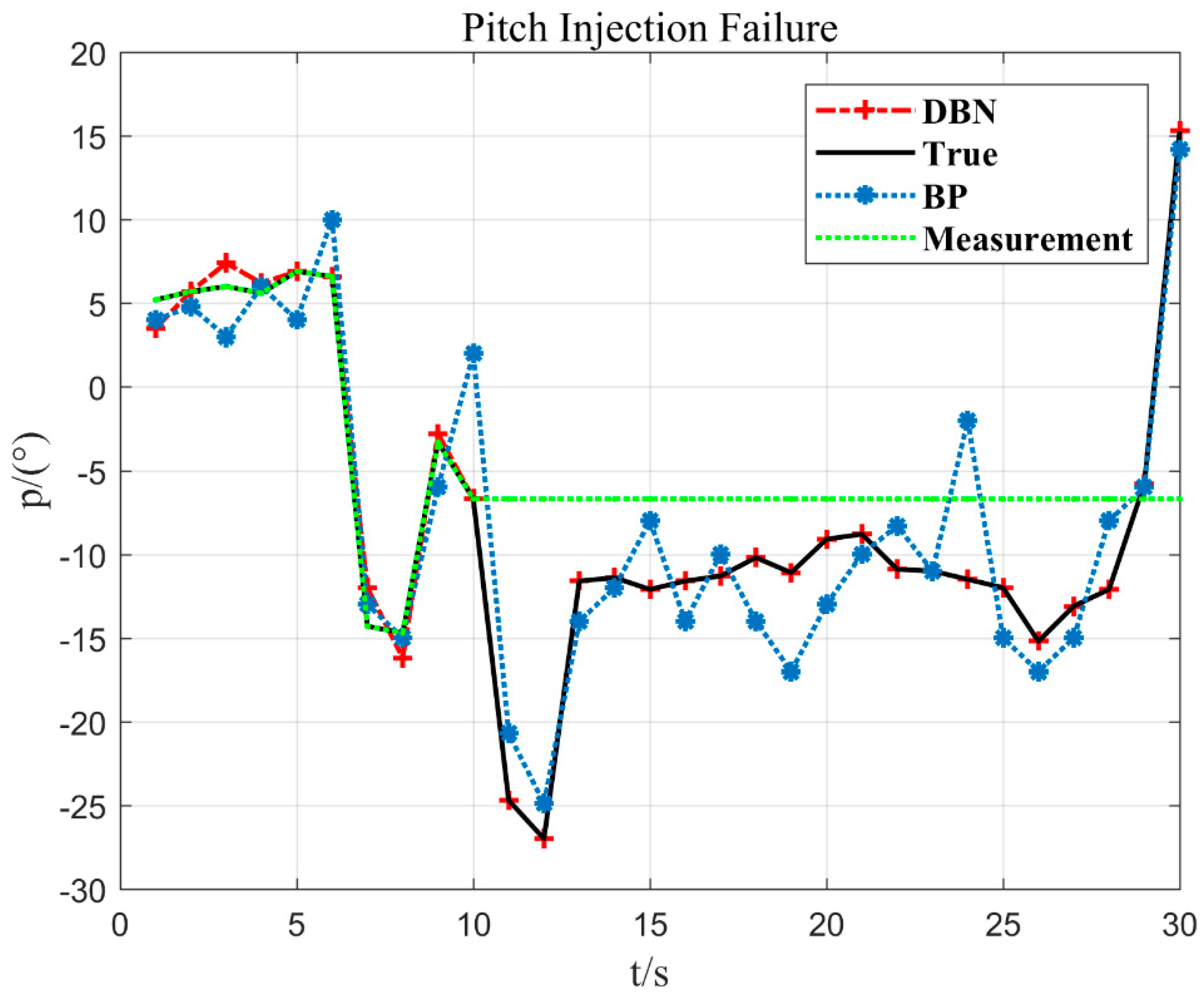

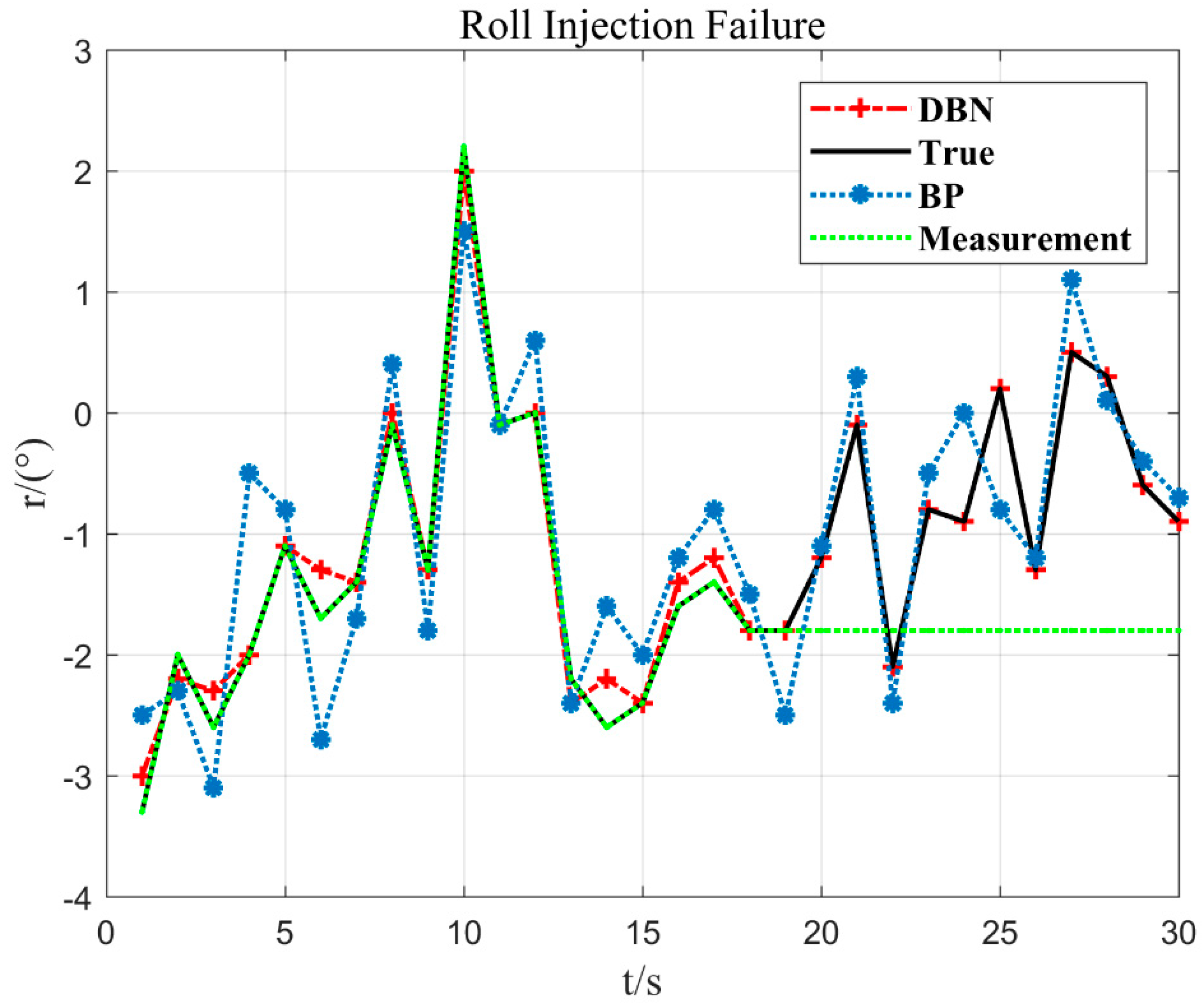

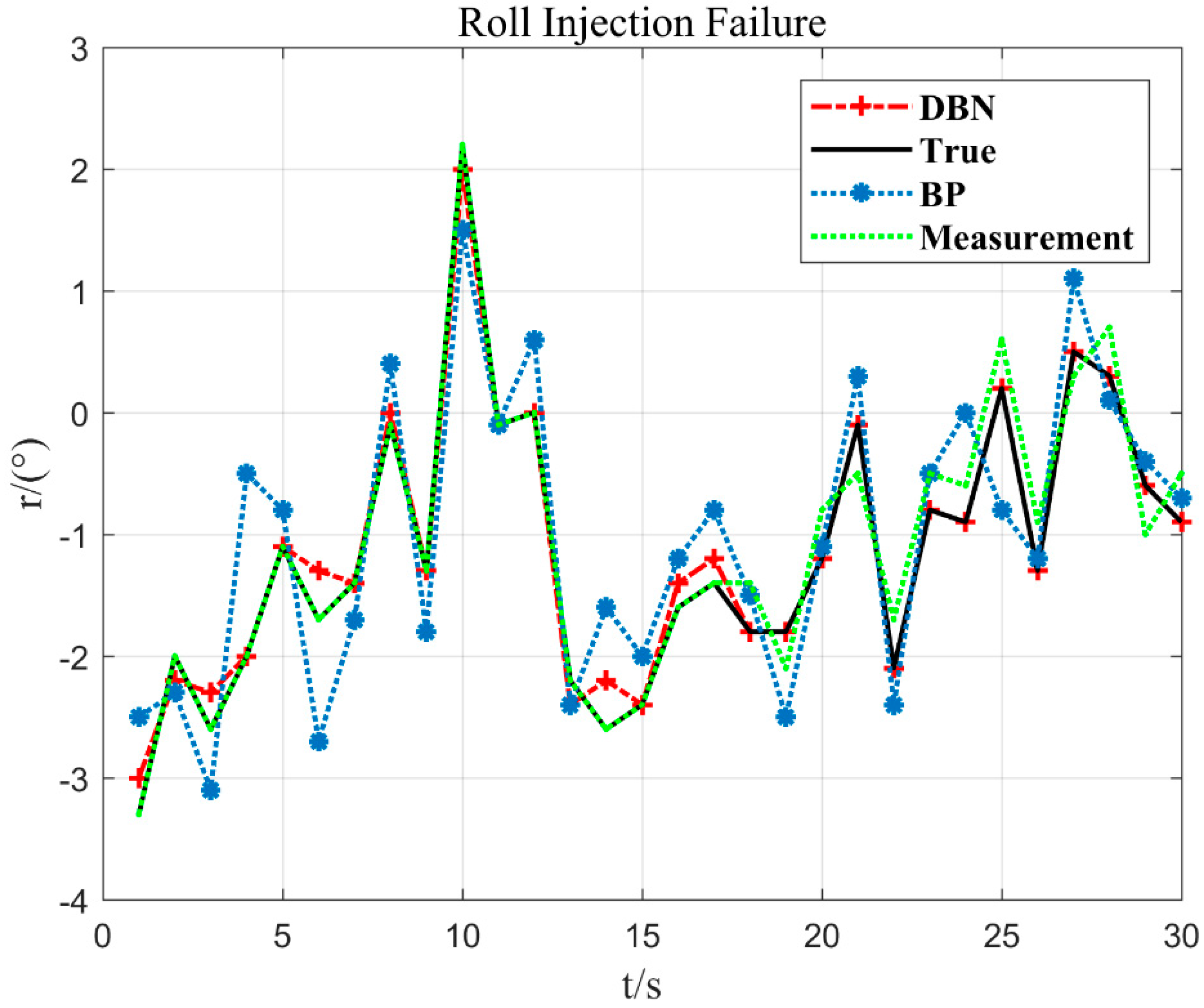

4.4.1. Sensor Stuck Fault Diagnosis

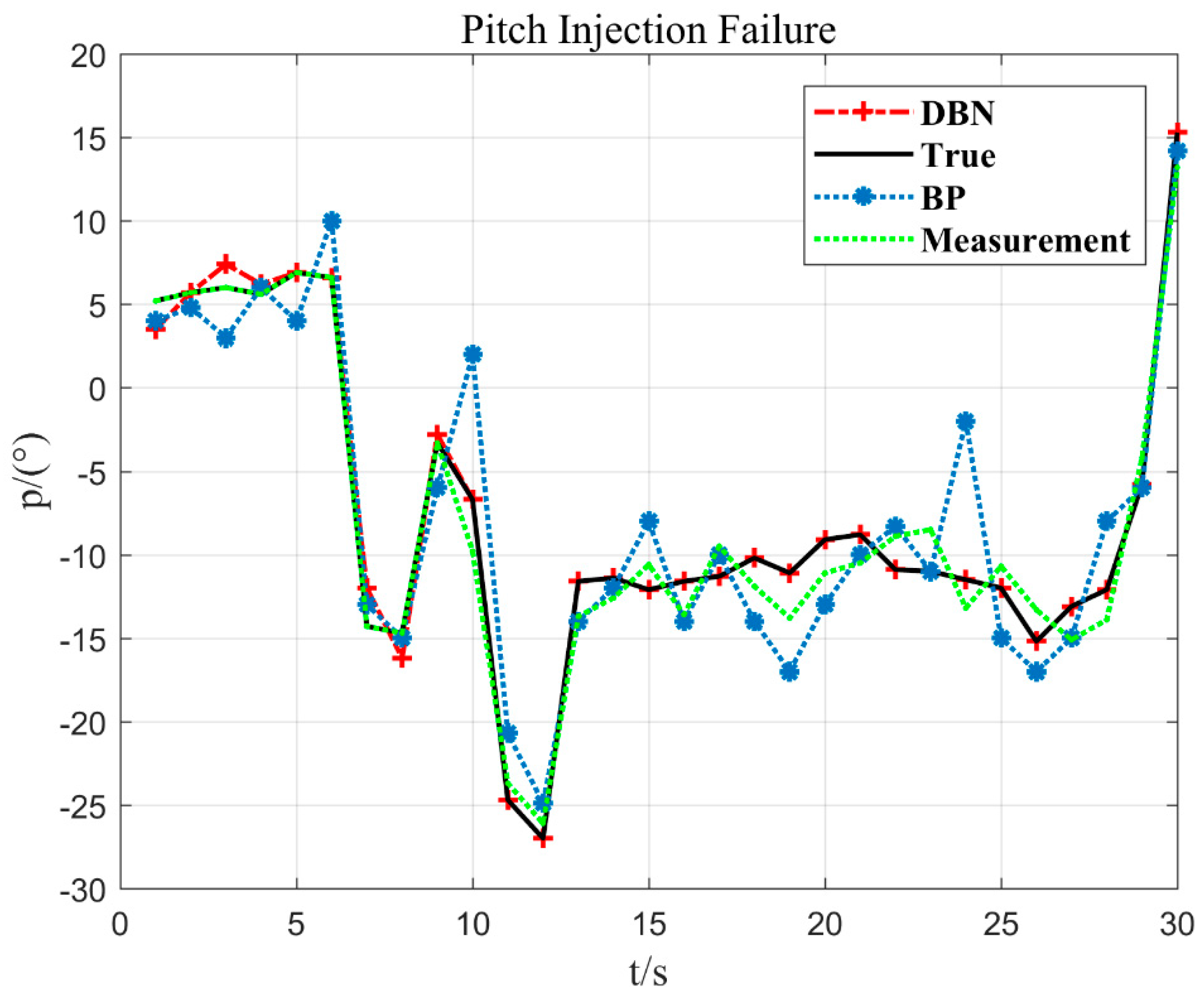

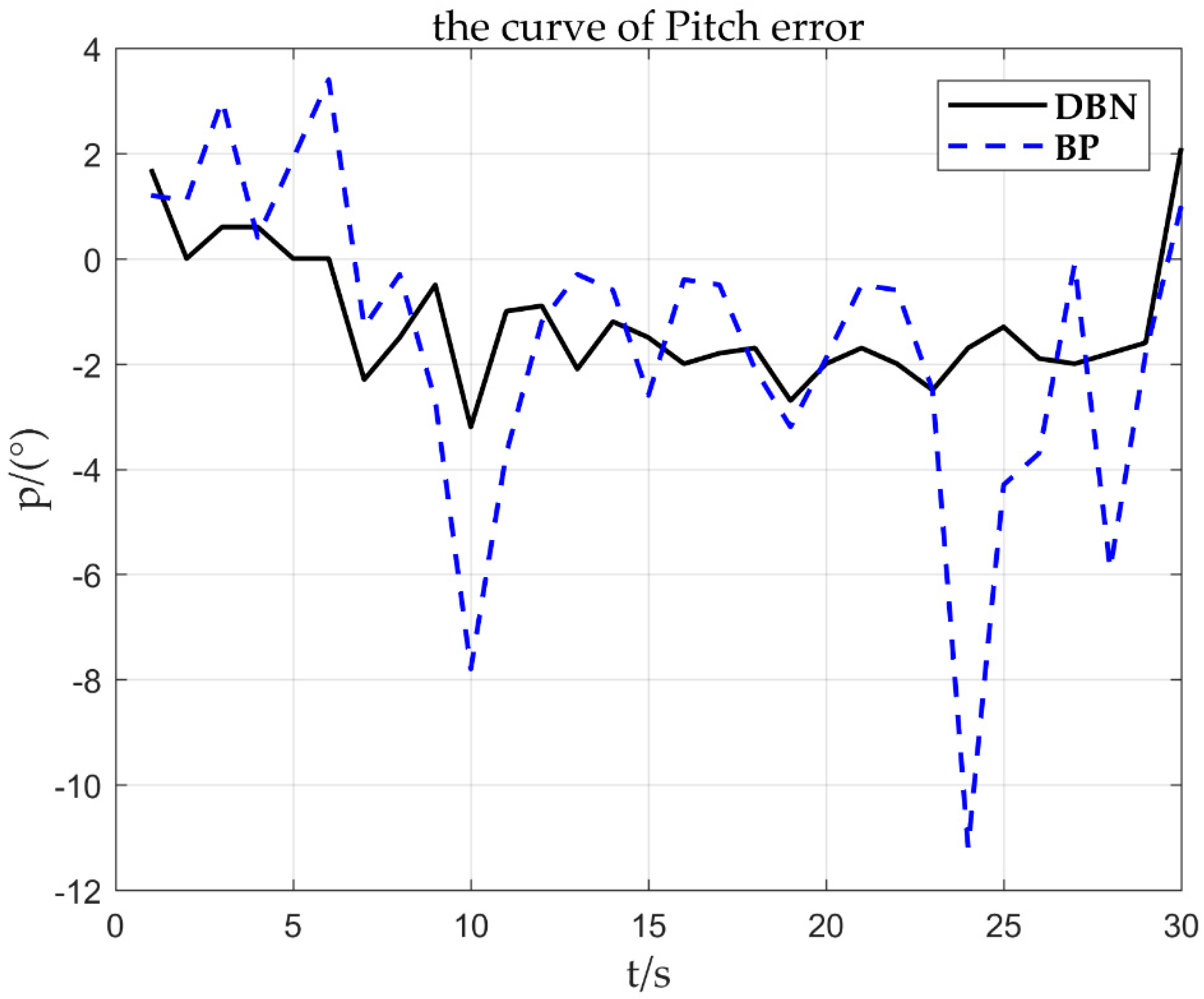

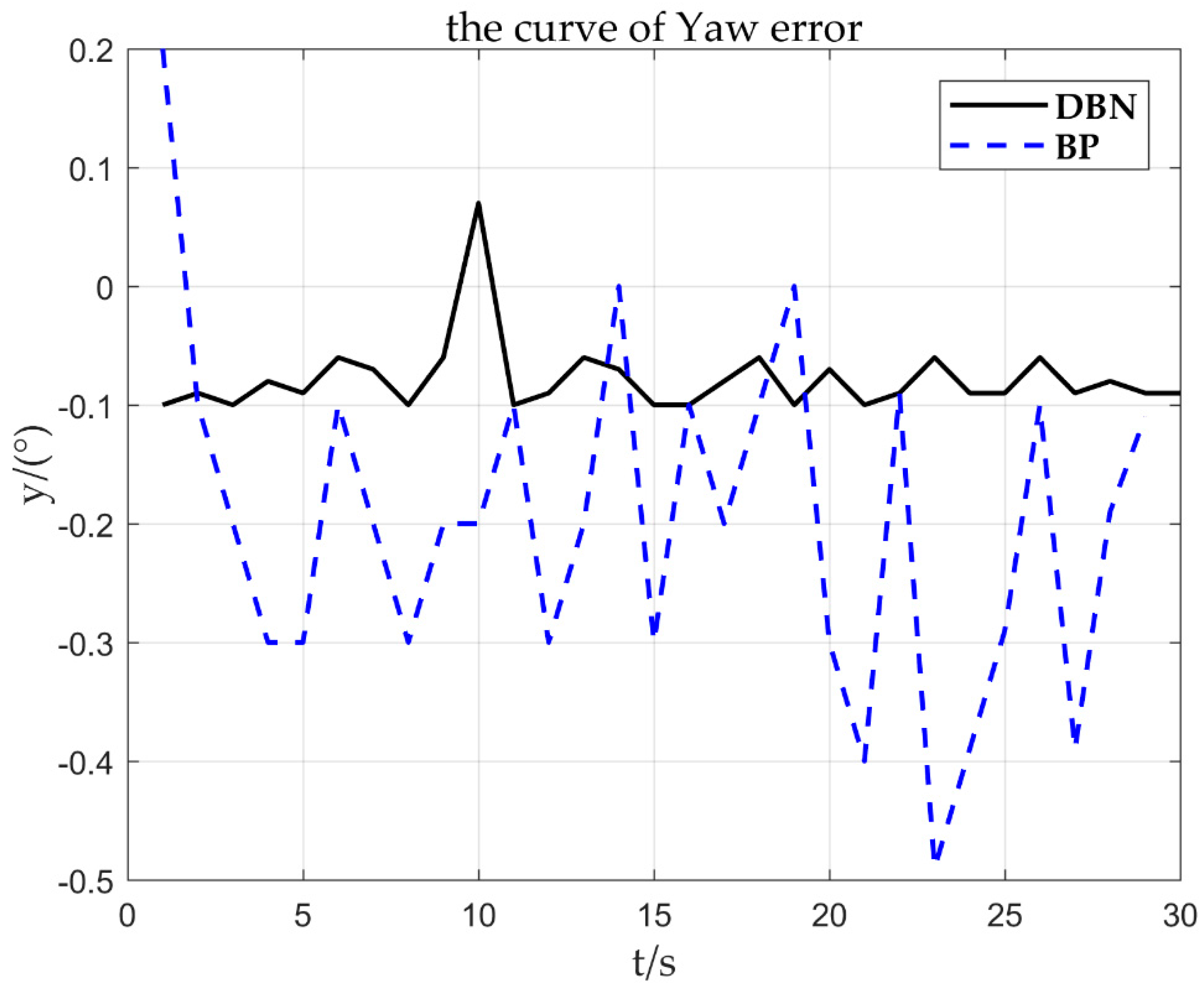

4.4.2. Sensor Constant Deviation Fault Diagnosis

5. Conclusions and Future Works

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Fault Type | Parameter |
|---|---|
| Constant deviation fault | |
| Constant gain fault | |
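The fault types discussed in Section 4.4 and listed in the table above can be simulated on a recorded sensor signal as in the following sketch. The fault models used here are the common textbook forms (output frozen at the onset value, a constant additive offset, and a constant multiplicative gain); the function `inject_fault`, its arguments, and the parameter values in the usage note are hypothetical and do not come from the paper.

```python
def inject_fault(signal, kind, start, param=0.0):
    """Return a copy of `signal` with a sensor fault applied from index `start`.

    kind = "stuck":     output frozen at the value held at fault onset
    kind = "deviation": constant offset `param` added to the true value
    kind = "gain":      true value multiplied by the constant `param`
    """
    out = list(signal)
    for t in range(start, len(out)):
        if kind == "stuck":
            out[t] = signal[start]
        elif kind == "deviation":
            out[t] = signal[t] + param
        elif kind == "gain":
            out[t] = param * signal[t]
        else:
            raise ValueError(f"unknown fault kind: {kind}")
    return out
```

For instance, `inject_fault(roll, "deviation", 200, 5.0)` would add a constant offset of 5 to a hypothetical roll-angle series from sample 200 onward, giving a labeled faulty signal for testing a diagnosis model.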

| Models | Roll | Yaw | Pitch | R² |
|---|---|---|---|---|
| BP | 10.34 | 156.34 | 74.75 | 0.82 |
| DBN | 1.65 | 89.4 | 25.64 | 0.94 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chen, X.-M.; Wu, C.-X.; Wu, Y.; Xiong, N.-x.; Han, R.; Ju, B.-B.; Zhang, S. Design and Analysis for Early Warning of Rotor UAV Based on Data-Driven DBN. Electronics 2019, 8, 1350. https://doi.org/10.3390/electronics8111350
