Towards Location Independent Gesture Recognition with Commodity WiFi Devices

Abstract

1. Introduction

2. Related Work

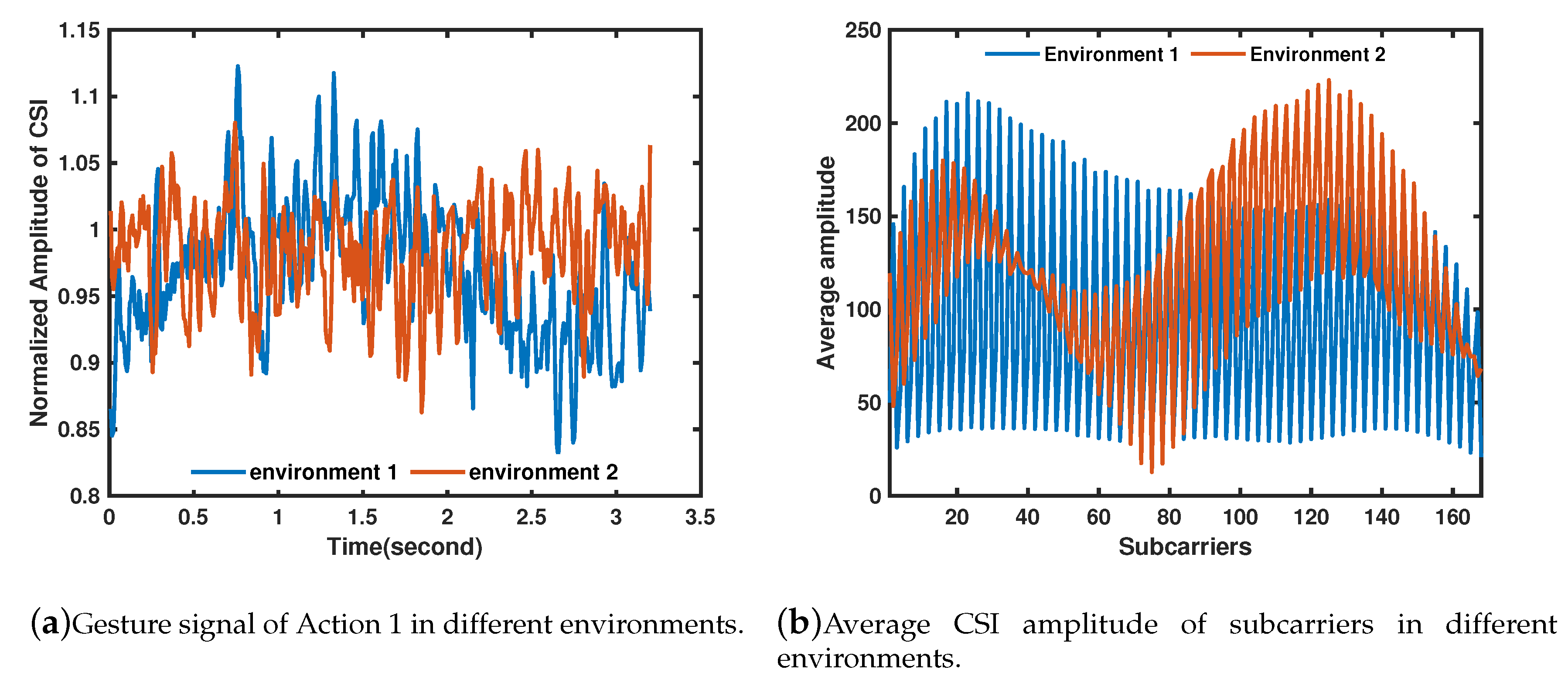

3. Basic Idea

3.1. Channel State Information

3.2. Problem Statement

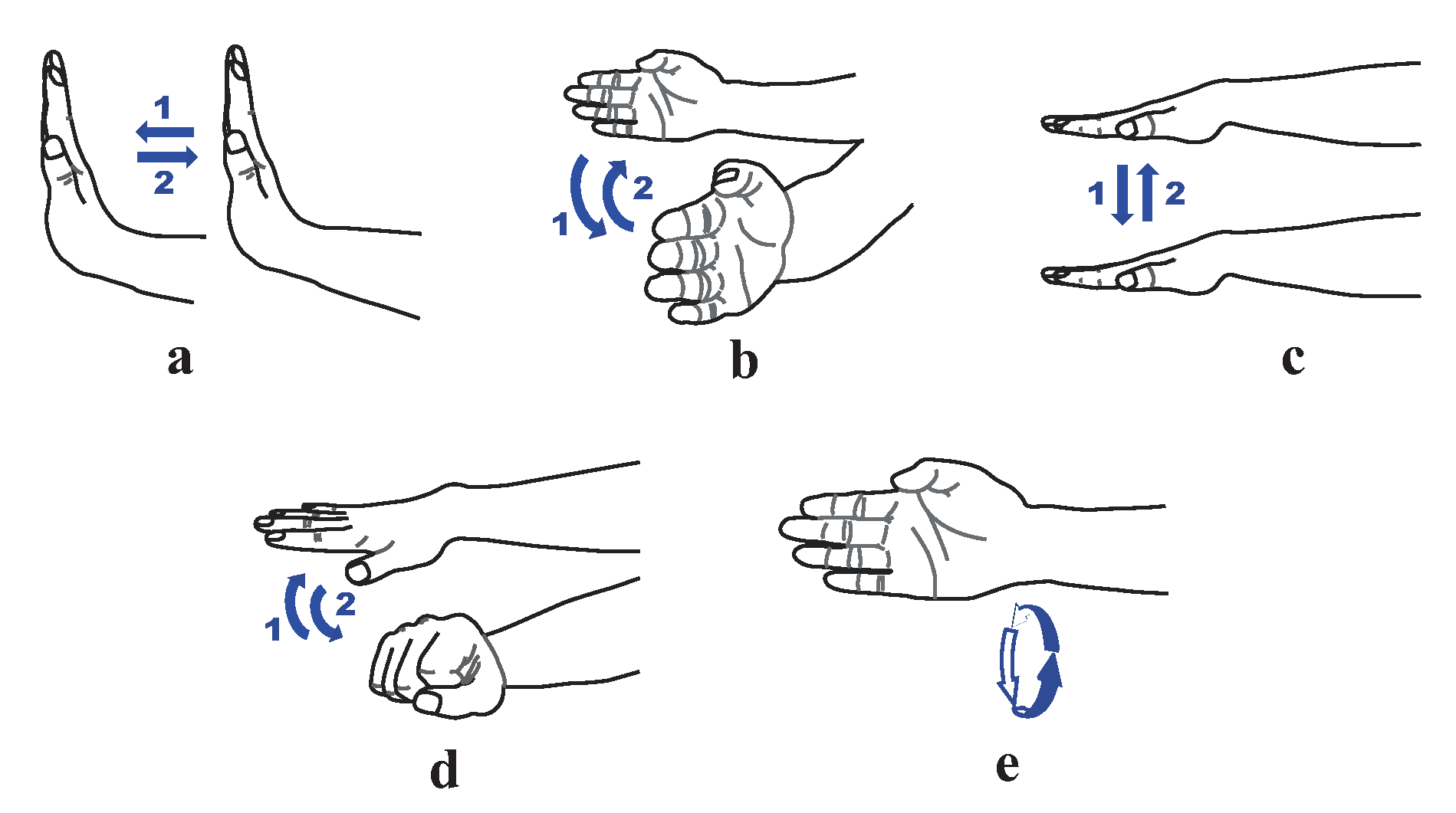

4. Design Details of WiHand

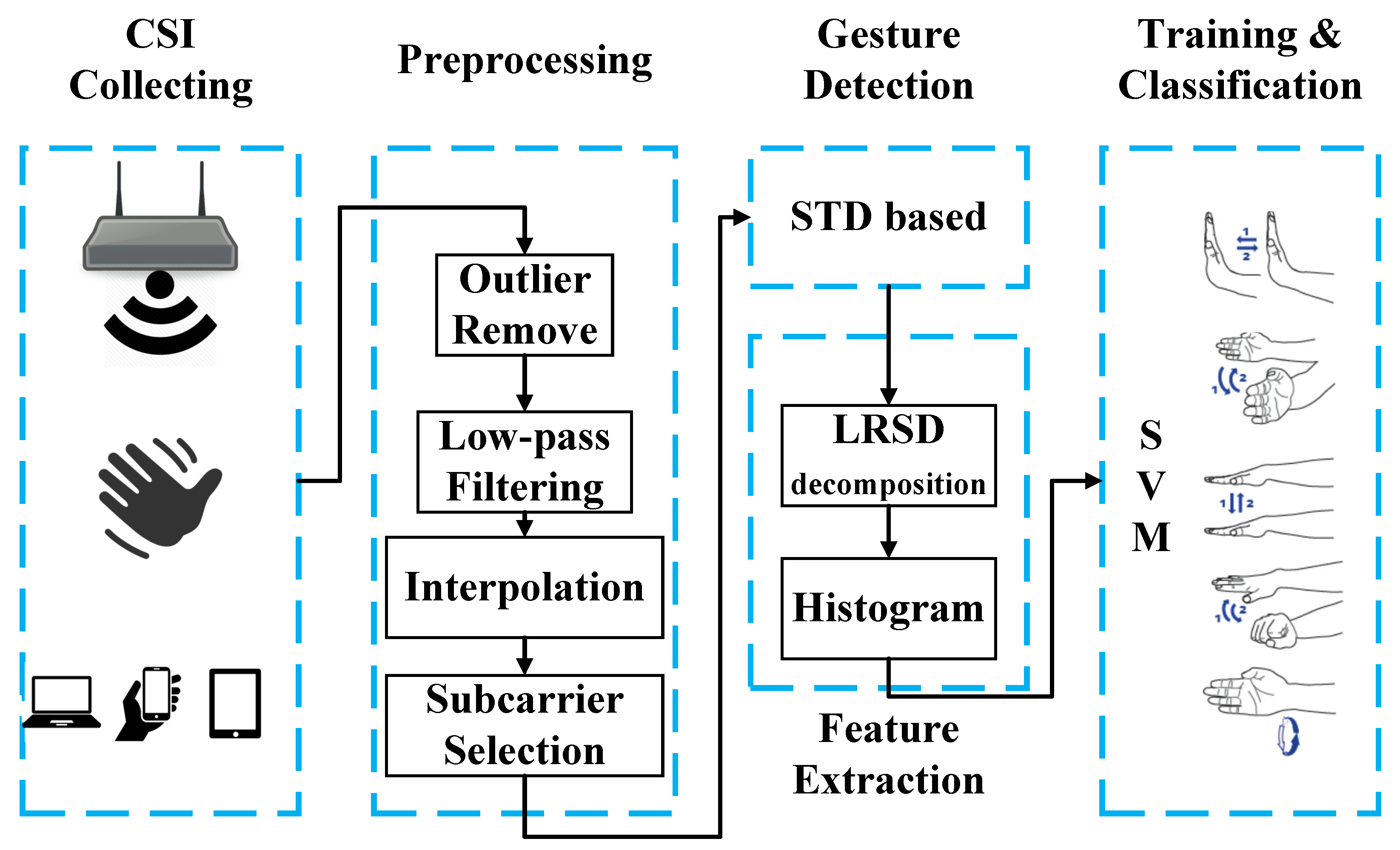

4.1. Overview

- Preprocessing. The CSI data collected from commodity devices contain random noise, so we filter out the signal components that lie outside the frequency range of hand gestures. In addition, packets do not arrive at strictly equal time intervals, so we use interpolation to resample the CSI stream at uniform time intervals.

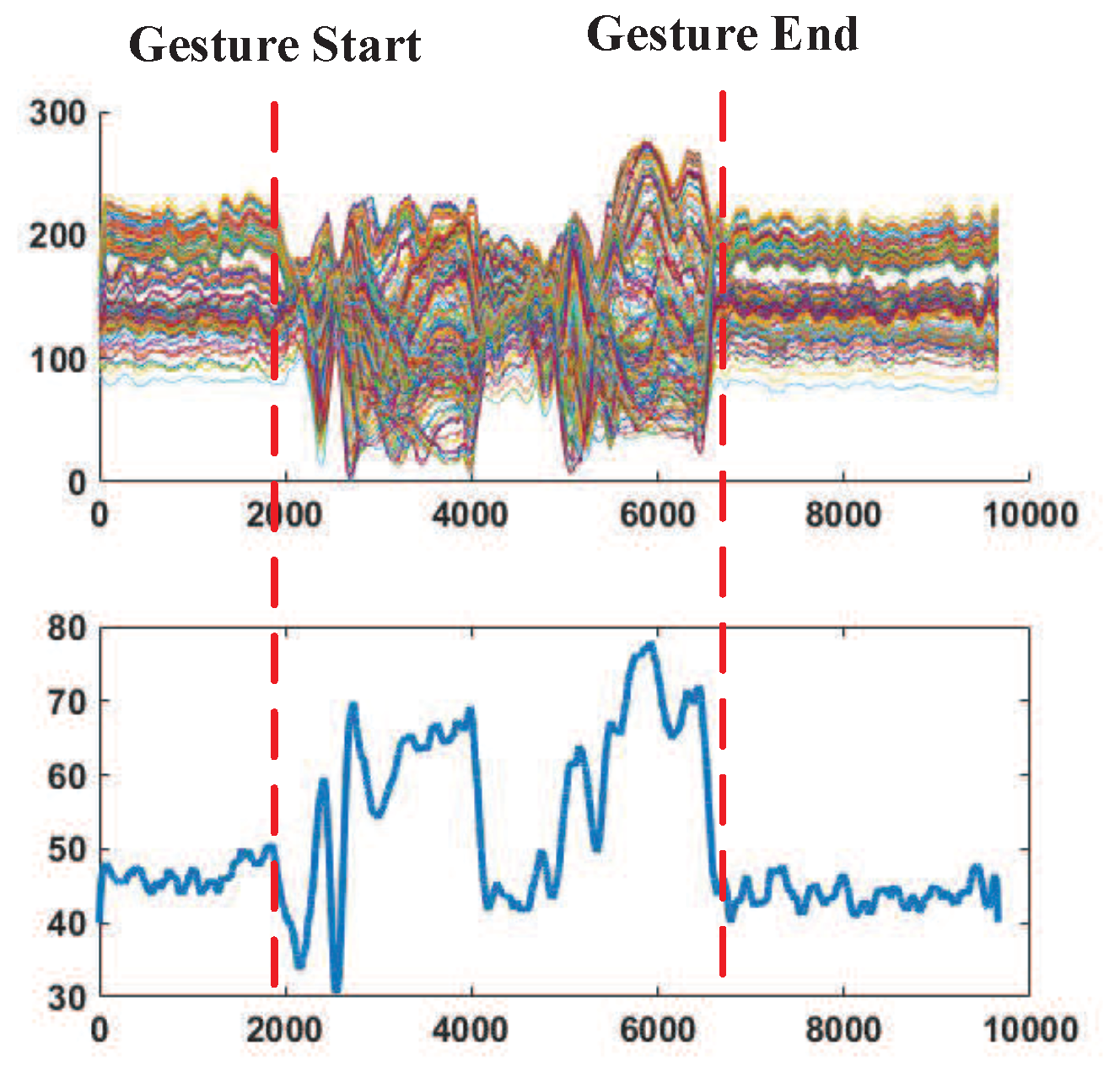

- Gesture Detection. Because the CSI stream is continuous, we must detect whether a gesture is being performed and, at the same time, extract the gesture-related segments to generate the corresponding gesture profiles. A standard-deviation-based algorithm detects when a gesture signal appears.

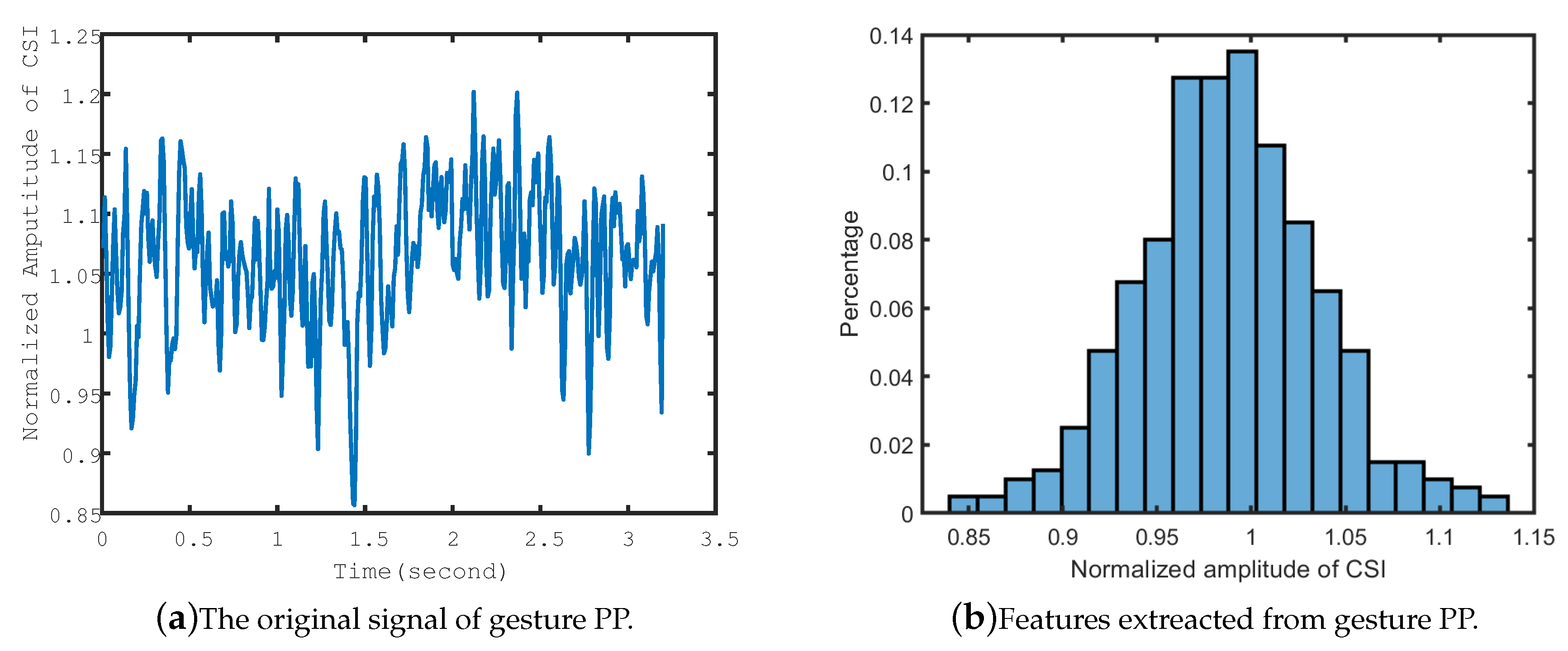

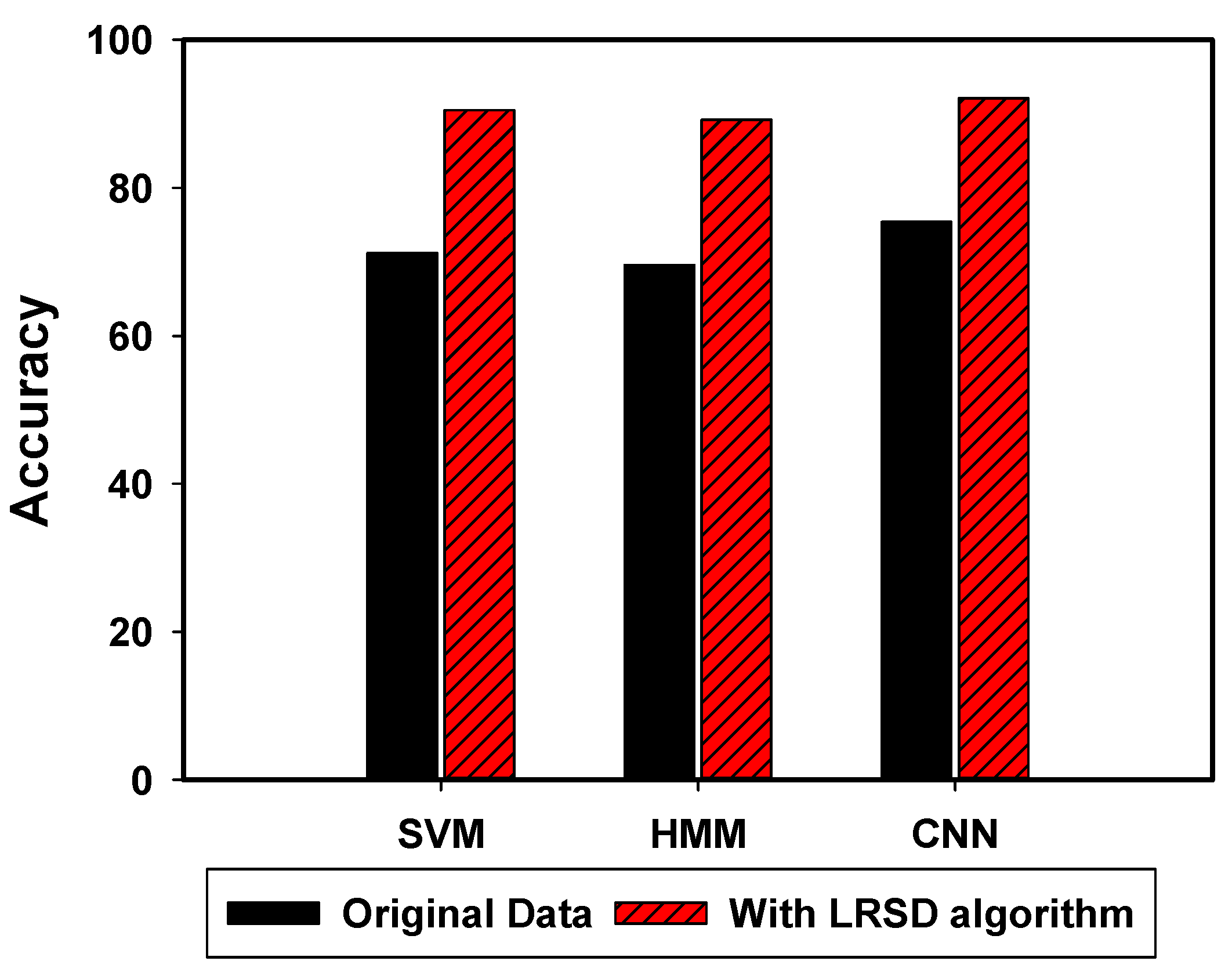

- Feature Extraction. To recognize gestures, we first apply a binned-entropy-based subcarrier selection algorithm to find the subcarrier most affected by the gesture. We then apply low-rank and sparse decomposition (LRSD) to extract a location-independent gesture signal from the original signal. Finally, we extract histogram-based features from this signal.

- Classification. We use a one-class Support Vector Machine (SVM) classifier to recognize gestures. First, the collected gesture data are used to train the SVM; the trained model then classifies newly arriving gestures.

4.2. Preprocessing

4.2.1. Outliers Removal
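The text does not say how WiHand removes outliers. One common choice for CSI amplitude streams is the Hampel identifier: a sample is replaced by the median of its neighborhood when it lies more than `t` scaled median absolute deviations (MADs) from that median. A minimal standard-library sketch, assuming a hypothetical helper `hampel_filter` with an illustrative window half-width `k` and threshold `t`; it is not the authors' code:

```python
import statistics
from typing import List, Sequence

# Assumption: Hampel identifier with illustrative k and t; the outlier rule
# actually used by WiHand is not given in this text.


def hampel_filter(x: Sequence[float], k: int = 3, t: float = 3.0) -> List[float]:
    """Replace outliers with the local median.

    k: half-width of the sliding window (up to 2k + 1 samples).
    t: threshold in units of the scaled MAD.
    """
    out = list(x)
    n = len(x)
    for i in range(n):
        window = x[max(0, i - k): min(n, i + k + 1)]
        med = statistics.median(window)
        mad = statistics.median(abs(v - med) for v in window)
        sigma = 1.4826 * mad  # scales MAD to a standard deviation for Gaussian data
        if abs(x[i] - med) > t * sigma:
            out[i] = med
    return out
```

When a window is perfectly flat its MAD is zero, so any deviation from the median counts as an outlier; a small floor on `sigma` would relax this.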

4.2.2. Low-Pass Filtering
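The overview states only that components outside the frequency range of hand gestures are removed; the filter type, order, cutoff and CSI sampling rate are not given in this text. A minimal sketch, assuming a second-order Butterworth low-pass filter (the standard RBJ "Audio EQ Cookbook" biquad with Q = 1/√2) applied forward and then backward for zero phase. The cutoff and sampling rate are parameters; the 60 Hz and 1000 packets/s used below are illustrative assumptions, not values from the paper:

```python
import math
from typing import List, Sequence

# Assumptions (not from the paper): 2nd-order Butterworth response and
# zero-phase forward-backward filtering; cutoff and rate are chosen by the caller.


def butter2_lowpass(x: Sequence[float], cutoff_hz: float, fs_hz: float) -> List[float]:
    """One causal pass of a 2nd-order Butterworth low-pass biquad (RBJ cookbook, Q = 1/sqrt(2))."""
    w0 = 2.0 * math.pi * cutoff_hz / fs_hz
    q = 1.0 / math.sqrt(2.0)
    alpha = math.sin(w0) / (2.0 * q)
    cos_w0 = math.cos(w0)
    a0 = 1.0 + alpha
    # Coefficients normalized by a0.
    b0 = b2 = (1.0 - cos_w0) / 2.0 / a0
    b1 = (1.0 - cos_w0) / a0
    a1 = -2.0 * cos_w0 / a0
    a2 = (1.0 - alpha) / a0
    y: List[float] = []
    x1 = x2 = y1 = y2 = 0.0  # zero initial state, so the first outputs are a transient
    for xn in x:
        yn = b0 * xn + b1 * x1 + b2 * x2 - a1 * y1 - a2 * y2
        y.append(yn)
        x2, x1 = x1, xn
        y2, y1 = y1, yn
    return y


def zero_phase_lowpass(x: Sequence[float], cutoff_hz: float, fs_hz: float) -> List[float]:
    """Filter forward, then backward, so the output has no phase lag."""
    forward = butter2_lowpass(x, cutoff_hz, fs_hz)
    backward = butter2_lowpass(forward[::-1], cutoff_hz, fs_hz)
    return backward[::-1]
```

For example, `zero_phase_lowpass(amplitudes, 60.0, 1000.0)` keeps slow gesture-induced variation and strongly attenuates a 400 Hz component. Unlike SciPy's `filtfilt`, this sketch does not pad the signal edges, so the first and last few samples contain start-up transients.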

4.2.3. Interpolation

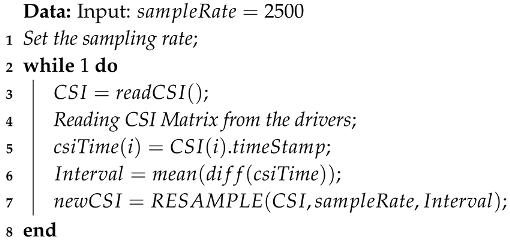

Algorithm 1: Time-dependent Interpolation (TDI) Algorithm.
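The steps of Algorithm 1 are not given in this text. As a stand-in, here is a minimal sketch of plain timestamp-based linear interpolation, which resamples irregularly spaced CSI samples onto a uniform time grid. The helper name `resample_uniform` and the target rate are hypothetical, and this is not claimed to be the authors' TDI algorithm:

```python
import bisect
from typing import List, Sequence, Tuple

# Assumption: plain linear interpolation on packet timestamps stands in for
# the paper's TDI algorithm, whose exact steps are not given in this text.


def resample_uniform(timestamps: Sequence[float], values: Sequence[float],
                     rate_hz: float) -> Tuple[List[float], List[float]]:
    """Resample (timestamp, value) pairs onto a uniform grid starting at timestamps[0].

    timestamps must be strictly increasing (seconds); rate_hz is the target rate.
    """
    n = int((timestamps[-1] - timestamps[0]) * rate_hz + 1e-9) + 1
    grid = [timestamps[0] + k / rate_hz for k in range(n)]
    out: List[float] = []
    for t in grid:
        j = bisect.bisect_right(timestamps, t)  # first index with timestamps[j] > t
        if j >= len(timestamps):
            out.append(values[-1])  # t is at (or numerically just past) the last timestamp
            continue
        t0, t1 = timestamps[j - 1], timestamps[j]
        v0, v1 = values[j - 1], values[j]
        out.append(v0 + (v1 - v0) * (t - t0) / (t1 - t0))
    return grid, out
```

Linear interpolation keeps every output between neighboring measurements; higher-order schemes can overshoot when packets are sparse.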

4.3. Deviation-Based Gesture Boundary Detection
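The overview describes gesture detection only as a standard-deviation-based algorithm. A minimal sketch, assuming a sliding window of `window` samples and a fixed threshold on each window's standard deviation (both hypothetical parameters); consecutive windows above the threshold are merged into one gesture segment:

```python
import statistics
from typing import List, Sequence, Tuple

# Assumption: window length and threshold are illustrative; the paper's exact
# deviation-based rule is not given in this text.


def detect_segments(x: Sequence[float], window: int,
                    threshold: float) -> List[Tuple[int, int]]:
    """Return [start, end) index ranges where the sliding-window std. dev. exceeds threshold."""
    segments: List[Tuple[int, int]] = []
    start = -1  # -1 means "not inside a segment"
    last = -1
    for i in range(len(x) - window + 1):
        if statistics.pstdev(x[i:i + window]) > threshold:
            if start < 0:
                start = i
            last = i
        elif start >= 0:
            # Close the segment: the last active window ends at last + window.
            segments.append((start, last + window))
            start = -1
    if start >= 0:
        segments.append((start, last + window))
    return segments
```

Because a window is flagged as soon as it overlaps the gesture, the reported boundaries can extend up to `window - 1` samples beyond the true onset and end; a practical detector would refine them.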

4.4. Binned Entropy Based Subcarrier Selection
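The overview says only that a binned-entropy-based algorithm picks the subcarrier most affected by the gesture. A minimal sketch, assuming that all subcarriers are histogrammed over shared bin edges and that "most affected" means the highest binned entropy (both assumptions); `binned_entropy` and `select_subcarrier` are hypothetical helper names:

```python
import math
from typing import Sequence

# Assumptions: shared bin edges across subcarriers, and the subcarrier with
# the highest binned entropy is treated as the most affected one.


def binned_entropy(x: Sequence[float], lo: float, hi: float, n_bins: int) -> float:
    """Shannon entropy (bits) of the histogram of x over [lo, hi] with n_bins equal bins."""
    if hi <= lo:
        return 0.0
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for v in x:
        idx = int((v - lo) / width)
        counts[min(max(idx, 0), n_bins - 1)] += 1  # clip to the edge bins
    total = len(x)
    return -sum((c / total) * math.log2(c / total) for c in counts if c > 0)


def select_subcarrier(csi: Sequence[Sequence[float]], n_bins: int = 10) -> int:
    """Index of the subcarrier whose amplitude stream has the highest binned entropy.

    Using one global [min, max] range for all subcarriers means a subcarrier
    whose amplitude spreads over more bins scores higher.
    """
    lo = min(min(s) for s in csi)
    hi = max(max(s) for s in csi)
    entropies = [binned_entropy(s, lo, hi, n_bins) for s in csi]
    return max(range(len(entropies)), key=entropies.__getitem__)
```

Shared bin edges matter here: if each subcarrier were binned over its own range, the entropy would ignore how large the fluctuation is.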

4.5. Feature Extraction and Classification

4.6. Gesture Feature Extraction
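The overview names low-rank and sparse decomposition (LRSD) but not the solver. A minimal standard-library sketch, assuming the CSI segment is arranged as a matrix (for example, rows = subcarriers or antennas, columns = time samples) and substituting a simple GoDec-style alternating scheme: a rank-1 low-rank part computed by power iteration and a sparse part that keeps the `k` largest residual entries. Rank 1, `k` and the iteration counts are illustrative choices. By analogy with low-rank background subtraction [31], the sparse part would carry the motion-related component, but that mapping to WiHand is an assumption:

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]

# Assumption: a GoDec-style alternating scheme (rank-1 low-rank part, k-sparse
# part) stands in for the paper's LRSD solver; rank 1 and k are illustrative.


def rank1_approx(m: Matrix, iters: int = 200) -> Matrix:
    """Rank-1 approximation sigma * u v^T of m from the top singular triplet (power iteration)."""
    rows, cols = len(m), len(m[0])
    u = [0.0] * rows
    v = [1.0] * cols  # start vector; assumed not orthogonal to the top singular vector
    for _ in range(iters):
        u = [sum(m[i][j] * v[j] for j in range(cols)) for i in range(rows)]
        norm_u = math.sqrt(sum(a * a for a in u)) or 1.0
        u = [a / norm_u for a in u]
        v = [sum(m[i][j] * u[i] for i in range(rows)) for j in range(cols)]
        norm_v = math.sqrt(sum(a * a for a in v)) or 1.0
        v = [a / norm_v for a in v]
    sigma = sum(u[i] * m[i][j] * v[j] for i in range(rows) for j in range(cols))
    return [[sigma * u[i] * v[j] for j in range(cols)] for i in range(rows)]


def lrsd(x: Matrix, k: int, iters: int = 50) -> Tuple[Matrix, Matrix]:
    """Split x into low (rank 1) + sparse (at most k nonzero entries)."""
    rows, cols = len(x), len(x[0])
    low: Matrix = [[0.0] * cols for _ in range(rows)]
    sparse: Matrix = [[0.0] * cols for _ in range(rows)]
    for _ in range(iters):
        # Low-rank step: rank-1 fit to the data with the current sparse part removed.
        low = rank1_approx([[x[i][j] - sparse[i][j] for j in range(cols)]
                            for i in range(rows)])
        # Sparse step: keep only the k largest-magnitude residual entries.
        residuals = sorted(((abs(x[i][j] - low[i][j]), i, j)
                            for i in range(rows) for j in range(cols)), reverse=True)
        sparse = [[0.0] * cols for _ in range(rows)]
        for _, i, j in residuals[:k]:
            sparse[i][j] = x[i][j] - low[i][j]
    return low, sparse
```

Convex RPCA solvers (nuclear norm plus L1 norm) avoid having to fix the rank and sparsity level in advance, at the cost of repeated full SVDs.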

4.6.1. Feature Extraction
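The overview states that features are extracted from the decomposed signal with a histogram, without giving the bin layout. A minimal sketch, assuming a fixed value range and a fixed number of equal-width bins (hypothetical parameters). It turns a gesture segment of any duration into a normalized feature vector of fixed length, which is what a classifier such as an SVM needs:

```python
from typing import List, Sequence

# Assumption: fixed [lo, hi] range and equal-width bins; the paper's exact
# histogram settings are not given in this text.


def histogram_features(x: Sequence[float], lo: float, hi: float, n_bins: int) -> List[float]:
    """Normalized histogram of x over [lo, hi]; out-of-range values go to the edge bins."""
    width = (hi - lo) / n_bins
    counts = [0] * n_bins
    for v in x:
        idx = int((v - lo) / width)
        counts[min(max(idx, 0), n_bins - 1)] += 1
    total = len(x) or 1  # avoid dividing by zero for an empty segment
    return [c / total for c in counts]
```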

4.6.2. Classification
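The overview specifies a one-class SVM, and the reference list includes LIBSVM [62]; the kernel and parameters are not given in this text. To run with only the standard library, the sketch below swaps in a simpler technique: a linear SVM trained with Pegasos sub-gradient steps (deterministic passes, no projection step), one classifier per gesture (one-vs-rest), predicting the gesture with the largest decision value. It is not a one-class SVM and not the authors' implementation; `lam`, `epochs` and the gesture names in the example are illustrative:

```python
from typing import Dict, List, Sequence

# Assumption: a linear one-vs-rest SVM trained with Pegasos stands in for the
# paper's one-class SVM / LIBSVM setup; lam and epochs are illustrative.


def train_pegasos(xs: Sequence[Sequence[float]], ys: Sequence[int],
                  lam: float = 0.01, epochs: int = 100) -> List[float]:
    """Train a linear SVM on labels +1/-1; a constant 1.0 feature acts as the bias."""
    w = [0.0] * (len(xs[0]) + 1)
    t = 0
    for _ in range(epochs):
        for x, y in zip(xs, ys):  # deterministic pass instead of random sampling
            t += 1
            eta = 1.0 / (lam * t)
            xa = list(x) + [1.0]
            margin = y * sum(wi * xi for wi, xi in zip(w, xa))
            w = [(1.0 - eta * lam) * wi for wi in w]  # regularization shrink
            if margin < 1.0:  # hinge loss active: step toward the example
                w = [wi + eta * y * xi for wi, xi in zip(w, xa)]
    return w


def train_one_vs_rest(xs: Sequence[Sequence[float]], labels: Sequence[str],
                      **kwargs) -> Dict[str, List[float]]:
    """One binary SVM per gesture label (that label vs. all others)."""
    return {c: train_pegasos(xs, [1 if lab == c else -1 for lab in labels], **kwargs)
            for c in sorted(set(labels))}


def predict(models: Dict[str, List[float]], x: Sequence[float]) -> str:
    """Pick the gesture whose SVM gives the largest decision value."""
    xa = list(x) + [1.0]
    return max(models, key=lambda c: sum(wi * xi for wi, xi in zip(models[c], xa)))
```

For example, training on two-bin histogram features labeled `"push"` and `"pull"` and then calling `predict(models, features)` returns the label whose classifier scores the new gesture highest.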

4.7. Discussions

5. Performance Evaluation

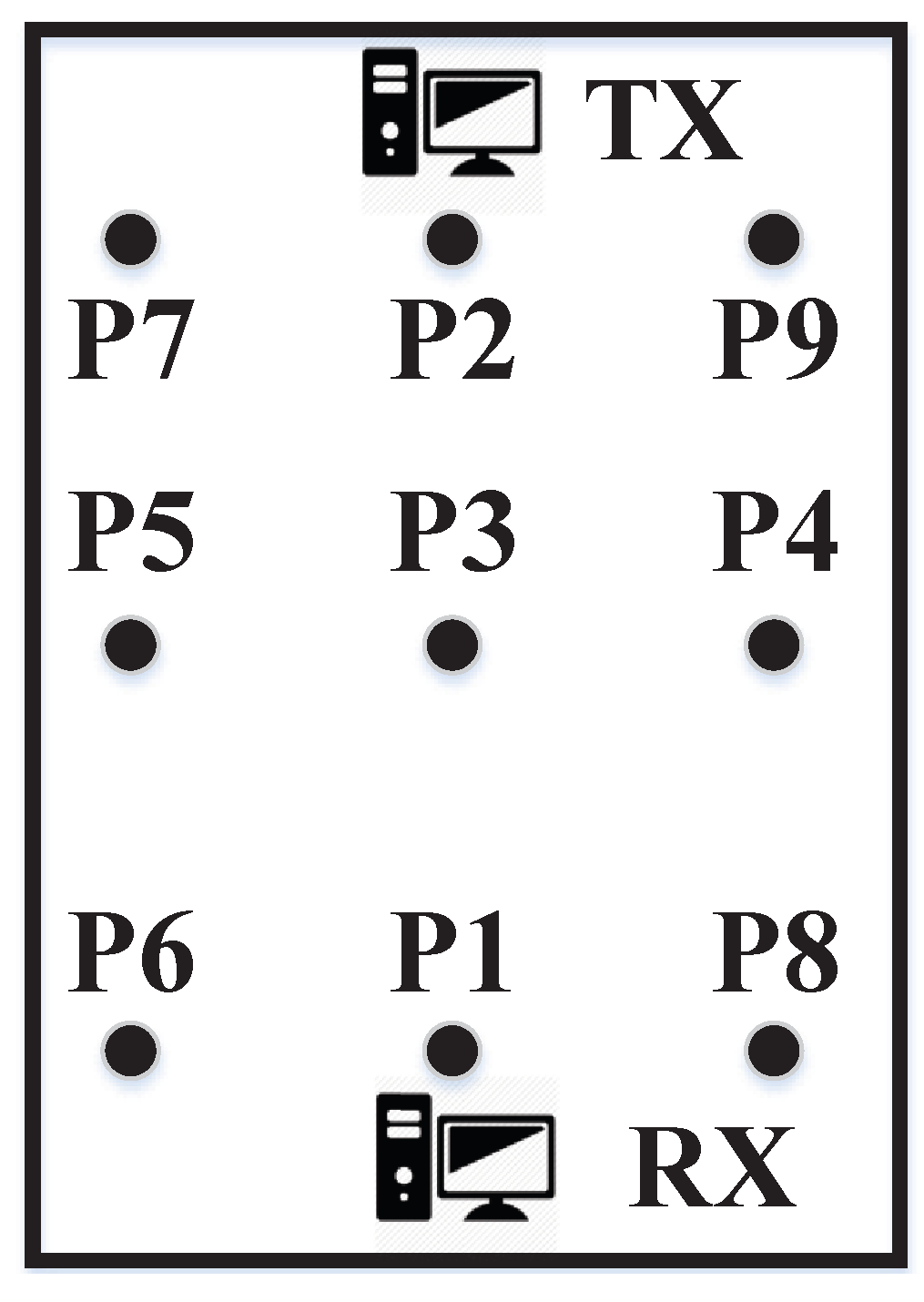

5.1. Experimental Setup

5.2. Performance of Gesture Detection

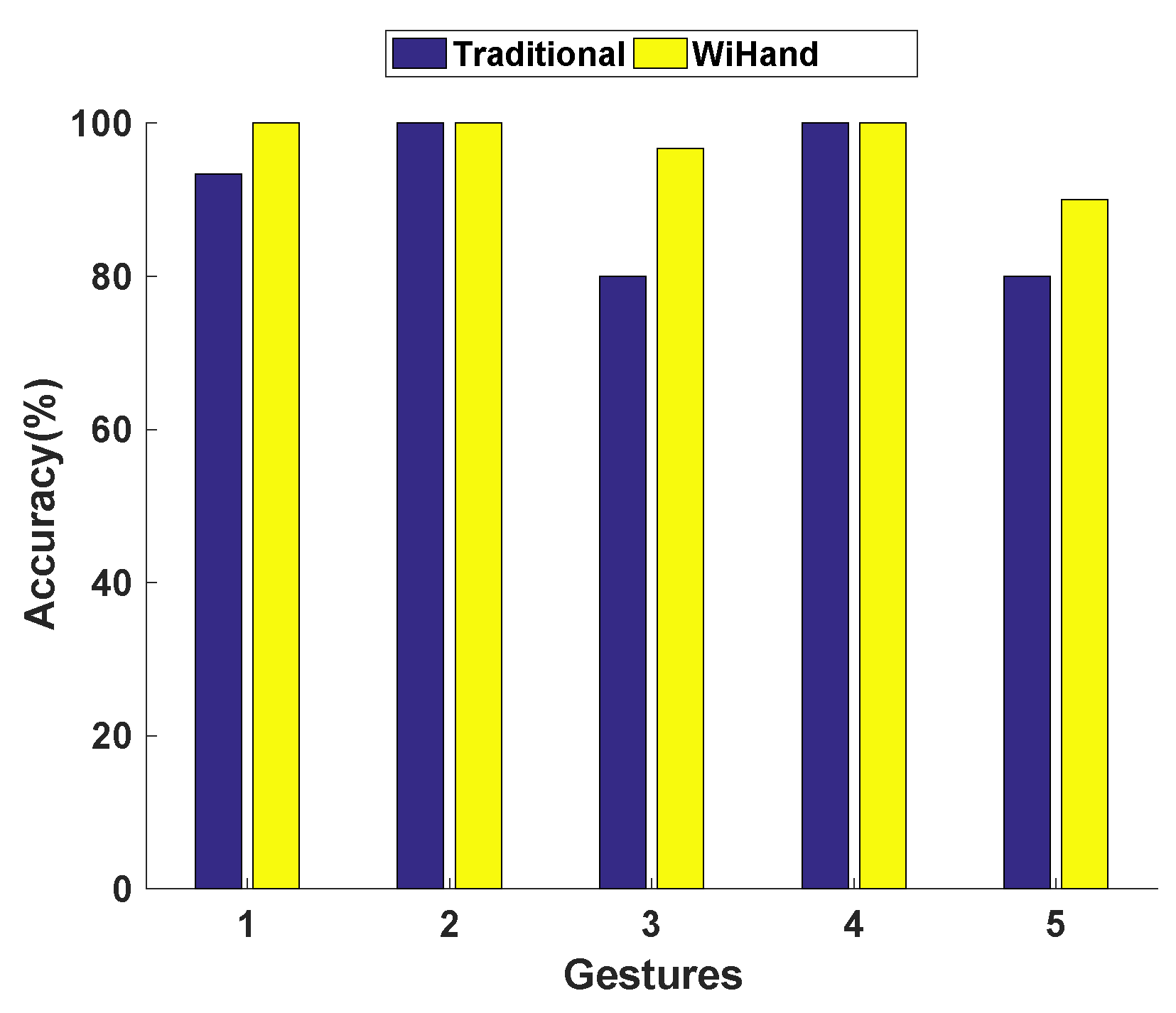

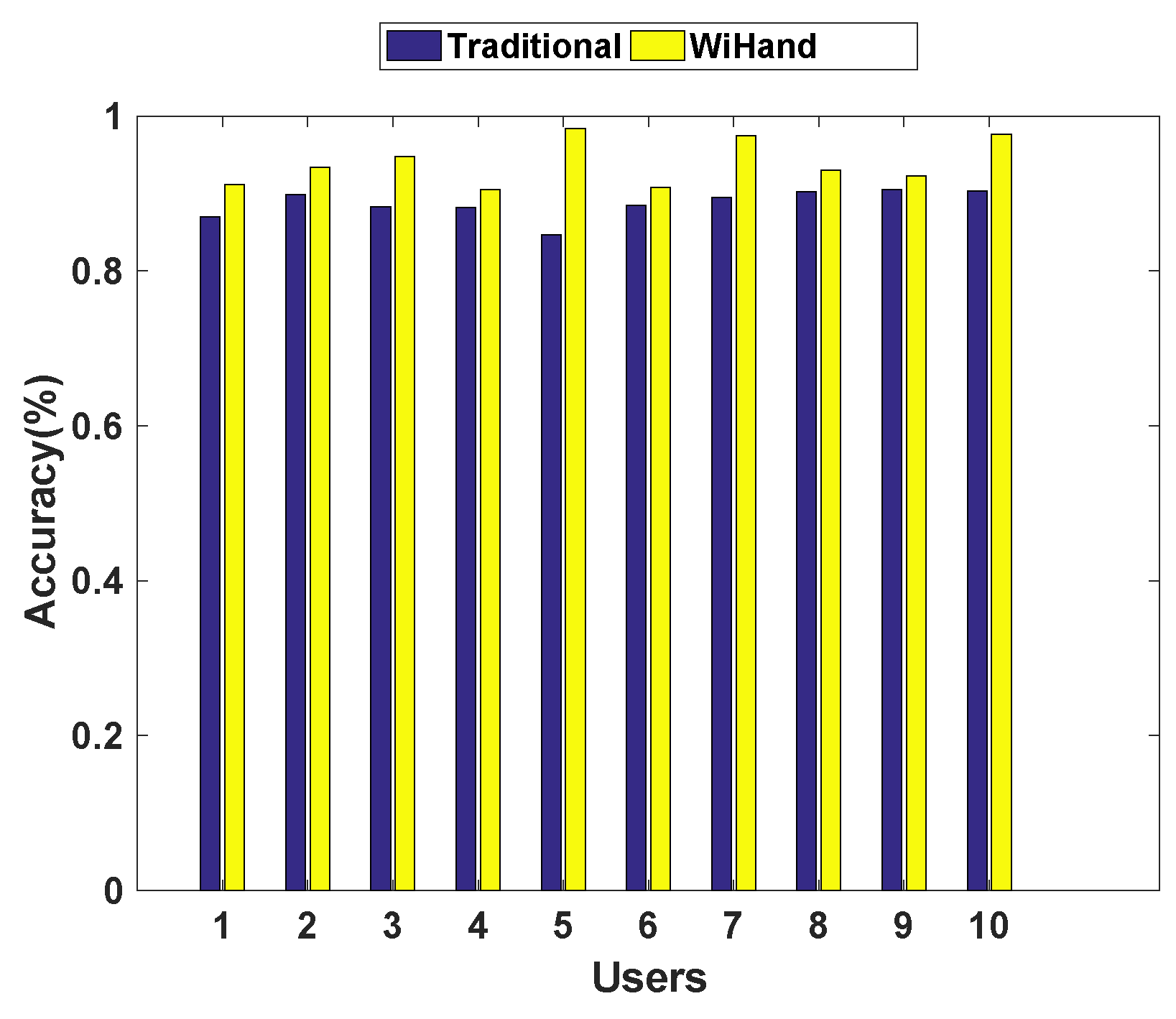

5.3. Performance of Gesture Recognition Accuracy at Fixed Location

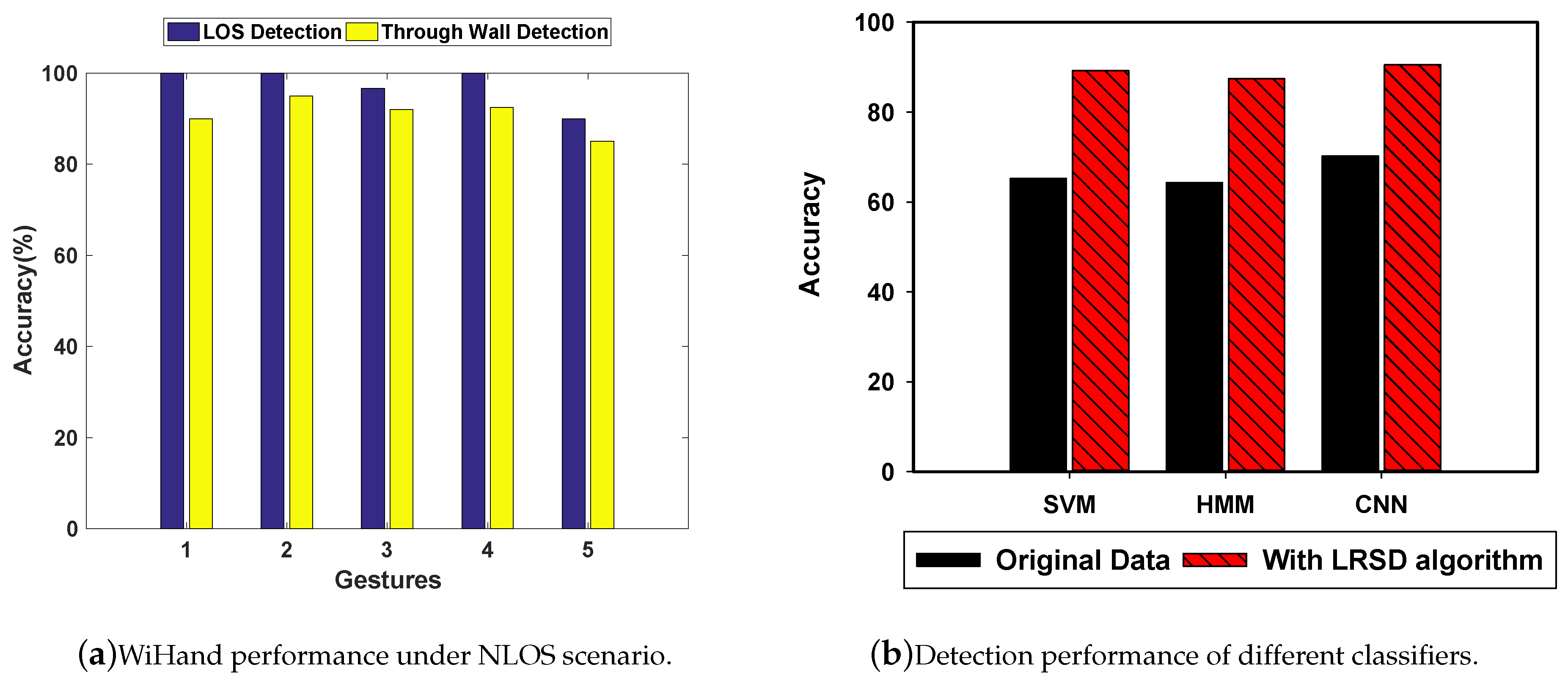

5.4. Performance of Gesture Recognition at Various Locations

5.5. Through-Wall Detection

6. Conclusions and Future Work

Author Contributions

Funding

Conflicts of Interest

References

1. Uddin, M.T.; Uddiny, M.A. Human activity recognition from wearable sensors using extremely randomized trees. In Proceedings of the 2015 International Conference on Electrical Engineering and Information Communication Technology (ICEEICT), Dhaka, Bangladesh, 21–23 May 2015; pp. 1–6.

2. Jalal, A.; Zeb, M.A. Security and QoS optimization for distributed real time environment. In Proceedings of the 7th IEEE International Conference on Computer and Information Technology (CIT 2007), Fukushima, Japan, 16–19 October 2007; pp. 369–374.

3. Jalal, A.; Kamal, S. Real-time life logging via a depth silhouette-based human activity recognition system for smart home services. In Proceedings of the 2014 11th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Seoul, Korea, 26–29 August 2014; pp. 74–80.

4. Ahad, M.A.R.; Kobashi, S.; Tavares, J.M.R. Advancements of image processing and vision in healthcare. J. Healthc. Eng. 2018, 2018.

5. Jalal, A.; Quaid, M.A.K.; Kim, K. A Wrist Worn Acceleration Based Human Motion Analysis and Classification for Ambient Smart Home System. J. Electr. Eng. Technol. 2019, 14, 1–7.

6. Jalal, A.; Nadeem, A.; Bobasu, S. Human Body Parts Estimation and Detection for Physical Sports Movements. In Proceedings of the 2019 2nd International Conference on Communication, Computing and Digital Systems (C-CODE), Islamabad, Pakistan, 6–7 March 2019; pp. 104–109.

7. Jalal, A.; Mahmood, M. Students’ behavior mining in e-learning environment using cognitive processes with information technologies. Educ. Inf. Technol. 2019, 24, 2797–2821.

8. Wan, Q.; Li, Y.; Li, C.; Pal, R. Gesture recognition for smart home applications using portable radar sensors. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 6414–6417.

9. Patsadu, O.; Nukoolkit, C.; Watanapa, B. Human gesture recognition using Kinect camera. In Proceedings of the 2012 Ninth International Conference on Computer Science and Software Engineering (JCSSE), Bangkok, Thailand, 30 May–1 June 2012; pp. 28–32.

10. Rahman, A.M.; Hossain, M.A.; Parra, J.; El Saddik, A. Motion-path Based Gesture Interaction with Smart Home Services. In Proceedings of the 17th ACM International Conference on Multimedia, Beijing, China, 19–24 October 2009; ACM: New York, NY, USA, 2009; pp. 761–764.

11. Jalal, A.; Uddin, M.Z.; Kim, T. Depth video-based human activity recognition system using translation and scaling invariant features for life logging at smart home. IEEE Trans. Consum. Electron. 2012, 58, 863–871.

12. Dinh, D.L.; Kim, J.T.; Kim, T.S. Hand Gesture Recognition and Interface via a Depth Imaging Sensor for Smart Home Appliances. Energy Procedia 2014, 62, 576–582.

13. Wu, Y.; Huang, T.S. Vision-based gesture recognition: A review. In International Gesture Workshop; Springer: Berlin/Heidelberg, Germany, 1999; pp. 103–115.

14. Chen, I.K.; Chi, C.Y.; Hsu, S.L.; Chen, L.G. A real-time system for object detection and location reminding with rgb-d camera. In Proceedings of the 2014 IEEE International Conference on Consumer Electronics (ICCE), Las Vegas, NV, USA, 10–13 January 2014; pp. 412–413.

15. Jalal, A.; Kim, S.; Yun, B. Assembled algorithm in the real-time H.263 codec for advanced performance. In Proceedings of the 7th International Workshop on Enterprise Networking and Computing in Healthcare Industry, Busan, Korea, 23–25 June 2005; pp. 295–298.

16. Fonseca, L.M.G.; Namikawa, L.M.; Castejon, E.F. Digital Image Processing in Remote Sensing. In Proceedings of the 2009 Tutorials of the XXII Brazilian Symposium on Computer Graphics and Image Processing, SIBGRAPI-TUTORIALS’09, Rio de Janeiro, Brazil, 11–14 October 2009; IEEE Computer Society: Washington, DC, USA, 2009; pp. 59–71.

17. Jalal, A.; Kim, S. The mechanism of edge detection using the block matching criteria for the motion estimation. In Proceedings of the Korea HCI Society Conference, Las Vegas, NV, USA, 22–27 July 2005; pp. 484–489.

18. Jalal, A.; Uddin, M.Z.; Kim, J.T.; Kim, T.S. Daily Human Activity Recognition Using Depth Silhouettes and R Transformation for Smart Home. In Proceedings of the International Conference on Smart Homes and Health Telematics, Montreal, QC, Canada, 20–22 June 2011; pp. 25–32.

19. Procházka, A.; Kolinova, M.; Fiala, J.; Hampl, P.; Hlavaty, K. Satellite image processing and air pollution detection. In Proceedings of the 2000 IEEE International Conference on Acoustics, Speech, and Signal Processing, Proceedings (Cat. No. 00CH37100), Istanbul, Turkey, 5–9 June 2000; Volume 4, pp. 2282–2285.

20. Microsoft X-box Kinect. Available online: http://www.xbox.com (accessed on 18 September 2019).

21. Leap Motion. Available online: https://www.leapmotion.com (accessed on 18 September 2019).

22. Bo, C.; Jian, X.; Li, X.Y.; Mao, X.; Wang, Y.; Li, F. You’re driving and texting: detecting drivers using personal smart phones by leveraging inertial sensors. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013; pp. 199–202.

23. Pu, Q.; Gupta, S.; Gollakota, S.; Patel, S. Whole-home Gesture Recognition Using Wireless Signals. In Proceedings of the 19th Annual International Conference on Mobile Computing & Networking, Miami, FL, USA, 30 September–4 October 2013; ACM: New York, NY, USA, 2013; pp. 27–38.

24. Abdelnasser, H.; Youssef, M.; Harras, K.A. WiGest: A ubiquitous WiFi-based gesture recognition system. In Proceedings of the 2015 IEEE Conference on Computer Communications, INFOCOM, Hong Kong, China, 26 April–1 May 2015; pp. 1472–1480.

25. He, W.; Wu, K.; Zou, Y.; Ming, Z. WIG: WiFi-Based Gesture Recognition System. In Proceedings of the 24th International Conference on Computer Communication and Networks, ICCCN 2015, Las Vegas, NV, USA, 3–6 August 2015; pp. 1–7.

26. Li, H.; Yang, W.; Wang, J.; Xu, Y.; Huang, L. WiFinger: Talk to Your Smart Devices with Finger-grained Gesture. In Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing, Heidelberg, Germany, 12–16 September 2016; ACM: New York, NY, USA, 2016; pp. 250–261.

27. Tan, S.; Yang, J. WiFinger: Leveraging Commodity WiFi for Fine-grained Finger Gesture Recognition. In Proceedings of the 17th ACM International Symposium on Mobile Ad Hoc Networking and Computing, MobiHoc’16, Paderborn, Germany, 5–8 July 2016; ACM: New York, NY, USA, 2016; pp. 201–210.

28. Zhang, O.; Srinivasan, K. Mudra: User-friendly Fine-grained Gesture Recognition Using WiFi Signals. In Proceedings of the 12th International on Conference on Emerging Networking EXperiments and Technologies, CoNEXT’16, Irvine, CA, USA, 12–15 December 2016; ACM: New York, NY, USA, 2016; pp. 83–96.

29. CISCO. Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Update, 2016–2021; Cisco VNI Forecast: New York, NY, USA, 2016.

30. Peng, Y.; Ganesh, A.; Wright, J.; Xu, W.; Ma, Y. RASL: Robust alignment by sparse and low-rank decomposition for linearly correlated images. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2233–2246.

31. Liu, X.; Zhao, G.; Yao, J.; Qi, C. Background subtraction based on low-rank and structured sparse decomposition. IEEE Trans. Image Process. 2015, 24, 2502–2514.

32. Jiang, W.; Miao, C.; Ma, F.; Yao, S.; Wang, Y.; Yuan, Y.; Xue, H.; Song, C.; Ma, X.; Koutsonikolas, D.; et al. Towards Environment Independent Device Free Human Activity Recognition. In Proceedings of the 24th Annual International Conference on Mobile Computing and Networking, MobiCom’18, New Delhi, India, 29 October–2 November 2018; ACM: New York, NY, USA, 2018; pp. 289–304.

33. Virmani, A.; Shahzad, M. Position and Orientation Agnostic Gesture Recognition Using WiFi. In Proceedings of the 15th Annual International Conference on Mobile Systems, Applications, and Services, MobiSys’17, Niagara Falls, NY, USA, 19–23 June 2017; ACM: New York, NY, USA, 2017; pp. 252–264.

34. Wang, Y.; Liu, J.; Chen, Y.; Gruteser, M.; Yang, J.; Liu, H. E-eyes: Device-free Location-oriented Activity Identification Using Fine-grained WiFi Signatures. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, MobiCom’14, Maui, HI, USA, 7–11 September 2014; ACM: New York, NY, USA, 2014; pp. 617–628.

35. Adib, F.; Kabelac, Z.; Katabi, D. Multi-Person Motion Tracking via RF Body Reflections. CSAIL Technical Reports. Available online: http://hdl.handle.net/1721.1/86299 (accessed on 18 September 2019).

36. Sigg, S.; Shi, S.; Ji, Y. RF-Based Device-free Recognition of Simultaneously Conducted Activities. In Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing Adjunct Publication, UbiComp’13 Adjunct, Zurich, Switzerland, 8–12 September 2013; ACM: New York, NY, USA, 2013; pp. 531–540.

37. Zhu, H.; Xiao, F.; Sun, L.; Wang, R.; Yang, P. R-TTWD: Robust device-free through the wall detection of moving human with WiFi. IEEE J. Sel. Areas Commun. 2017, 35, 1090–1103.

38. Sigg, S.; Shi, S.; Büsching, F.; Ji, Y.; Wolf, L.C. Leveraging RF-channel fluctuation for activity recognition: Active and passive systems, continuous and RSSI-based signal features. In Proceedings of the 11th International Conference on Advances in Mobile Computing & Multimedia, MoMM’13, Vienna, Austria, 2–4 December 2013; p. 43.

39. Lv, S.; Lu, Y.; Dong, M.; Wang, X.; Dou, Y.; Zhuang, W. Qualitative action recognition by wireless radio signals in human–machine systems. IEEE Trans. Hum.-Mach. Syst. 2017, 47, 789–800.

40. Rathore, M.M.U.; Ahmad, A.; Paul, A.; Wu, J. Real-time continuous feature extraction in large size satellite images. J. Syst. Archit. 2016, 64, 122–132.

41. Jalal, A.; Kim, Y.; Kamal, S.; Farooq, A.; Kim, D. Human daily activity recognition with joints plus body features representation using Kinect sensor. In Proceedings of the 2015 International Conference on Informatics, Electronics & Vision (ICIEV), Fukuoka, Japan, 15–18 June 2015; pp. 1–6.

42. Jalal, A.; Kamal, S.; Kim, D. Individual detection-tracking-recognition using depth activity images. In Proceedings of the 2015 12th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Goyang, Korea, 28–30 October 2015; pp. 450–455.

43. Yoshimoto, H.; Date, N.; Yonemoto, S. Vision-based real-time motion capture system using multiple cameras. In Proceedings of the IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems, MFI2003, Tokyo, Japan, 1 August 2003; pp. 247–251.

44. Kamal, S.; Jalal, A.; Kim, D. Depth images-based human detection, tracking and activity recognition using spatiotemporal features and modified HMM. J. Electr. Eng. Technol. 2016, 11, 1921–1926.

45. Jalal, A.; Kamal, S.; Kim, D. Facial Expression recognition using 1D transform features and Hidden Markov Model. J. Electr. Eng. Technol. 2017, 12, 1657–1662.

46. Huang, Q.; Yang, J.; Qiao, Y. Person re-identification across multi-camera system based on local descriptors. In Proceedings of the 2012 Sixth International Conference on Distributed Smart Cameras (ICDSC), Hong Kong, China, 30 October–2 November 2012; pp. 1–6.

47. Zeng, Y.; Pathak, P.H.; Mohapatra, P. WiWho: WiFi-Based Person Identification in Smart Spaces. In Proceedings of the 2016 15th ACM/IEEE International Conference on Information Processing in Sensor Networks (IPSN), Vienna, Austria, 11–14 April 2016; pp. 1–12.

48. Jin, Y.; Soh, W.S.; Wong, W.C. Indoor Localization with Channel Impulse Response Based Fingerprint and Nonparametric Regression. IEEE Trans. Wirel. Commun. 2010, 9, 1120–1127.

49. Davies, L.; Gather, U. The identification of multiple outliers. J. Am. Stat. Assoc. 1993, 88, 782–792.

50. Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Understanding and Modeling of WiFi Signal Based Human Activity Recognition. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, MobiCom 2015, Paris, France, 7–11 September 2015; pp. 65–76.

51. Zhang, J.; Wei, B.; Hu, W.; Kanhere, S.S. WiFi-ID: Human Identification Using WiFi Signal. In Proceedings of the 2016 International Conference on Distributed Computing in Sensor Systems (DCOSS), Washington, DC, USA, 26–28 May 2016; pp. 75–82.

52. Abdelnasser, H.; Harras, K.A.; Youssef, M. UbiBreathe: A Ubiquitous non-Invasive WiFi-based Breathing Estimator. In Proceedings of the 16th ACM International Symposium on Mobile Ad Hoc Networking and Computing, MobiHoc 2015, Hangzhou, China, 22–25 June 2015; pp. 277–286.

53. Wang, G.; Zou, Y.; Zhou, Z.; Wu, K.; Ni, L.M. We Can Hear You with Wi-Fi! In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking, MobiCom’14, Maui, HI, USA, 7–11 September 2014; ACM: New York, NY, USA, 2014; pp. 593–604.

54. Zeng, Y.; Pathak, P.H.; Mohapatra, P. Analyzing Shopper’s Behavior Through WiFi Signals. In Proceedings of the 2nd Workshop on Workshop on Physical Analytics, WPA’15, Florence, Italy, 22 May 2015; ACM: New York, NY, USA, 2015; pp. 13–18.

55. Piyathilaka, L.; Kodagoda, S. Gaussian mixture based HMM for human daily activity recognition using 3D skeleton features. In Proceedings of the 2013 IEEE 8th Conference on Industrial Electronics and Applications (ICIEA), Melbourne, Australia, 19–21 June 2013; pp. 567–572.

56. Jalal, A.; Kamal, S.; Kim, D. A Depth Video-based Human Detection and Activity Recognition using Multi-features and Embedded Hidden Markov Models for Health Care Monitoring Systems. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 54–62.

57. Mahmood, M.; Jalal, A.; Evans, H.A. Facial expression recognition in image sequences using 1D transform and gabor wavelet transform. In Proceedings of the 2018 International Conference on Applied and Engineering Mathematics (ICAEM), Taxila, Pakistan, 4–5 September 2018; pp. 1–6.

58. Jalal, A.; Kamal, S. Improved Behavior Monitoring and Classification Using Cues Parameters Extraction from Camera Array Images. Int. J. Interact. Multimed. Artif. Intell. 2019, 5, 71–78.

59. Jalal, A.; Quaid, M.A.; Sidduqi, M. A Triaxial acceleration-based human motion detection for ambient smart home system. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 353–358.

60. Jalal, A.; Mahmood, M.; Hasan, A.S. Multi-features descriptors for human activity tracking and recognition in Indoor-outdoor environments. In Proceedings of the 2019 16th International Bhurban Conference on Applied Sciences and Technology (IBCAST), Islamabad, Pakistan, 8–12 January 2019; pp. 371–376.

61. Wu, H.; Pan, W.; Xiong, X.; Xu, S. Human activity recognition based on the combined svm&hmm. In Proceedings of the 2014 IEEE International Conference on Information and Automation (ICIA), Hailar, China, 28–30 July 2014; pp. 219–224.

62. Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. (TIST) 2011, 2, 27.

63. Xie, Y.; Li, Z.; Li, M. Precise Power Delay Profiling with Commodity WiFi. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, MobiCom 2015, Paris, France, 7–11 September 2015; pp. 53–64.

64. Jalal, A.; Kamal, S.; Kim, D. A depth video sensor-based life-logging human activity recognition system for elderly care in smart indoor environments. Sensors 2014, 14, 11735–11759.

65. Jalal, A.; Kamal, S.; Kim, D. Shape and motion features approach for activity tracking and recognition from kinect video camera. In Proceedings of the 2015 IEEE 29th International Conference on Advanced Information Networking and Applications Workshops, Gwangju, Korea, 24–27 March 2015; pp. 445–450.

66. Jalal, A.; Quaid, M.A.K.; Hasan, A.S. Wearable Sensor-Based Human Behavior Understanding and Recognition in Daily Life for Smart Environments. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 105–110.

67. Mahmood, M.; Jalal, A.; Sidduqi, M. Robust Spatio-Temporal Features for Human Interaction Recognition Via Artificial Neural Network. In Proceedings of the 2018 International Conference on Frontiers of Information Technology (FIT), Islamabad, Pakistan, 17–19 December 2018; pp. 218–223.

| System | Activities | Frequency Range (Hz) |
|---|---|---|
| CARM [50] | body movements | 0.15∼300 |
| WiSee [23] | hand gestures | 8∼134 |
| WiFinger [27] | hand gestures | 0.2∼5 |
| WiFinger [26] | ASL gestures | 1∼60 |
| WiWho [47] | gait | 0.3∼2 |
| WiFi-ID [51] | walking behavior | 20∼80 |
| UbiBreathe [52] | respiration | 0.1∼0.5 |
| WiHear [53] | mouth movements | 2∼5 |
| Zeng [54] | shopping behavior | 0.3∼2 |

| P1 | P2 | P3 | P4 | P5 | P6 | P7 | P8 | P9 |
|---|---|---|---|---|---|---|---|---|

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lu, Y.; Lv, S.; Wang, X. Towards Location Independent Gesture Recognition with Commodity WiFi Devices. Electronics 2019, 8, 1069. https://doi.org/10.3390/electronics8101069
