Saliency Preprocessing Locality-Constrained Linear Coding for Remote Sensing Scene Classification

Abstract

1. Introduction

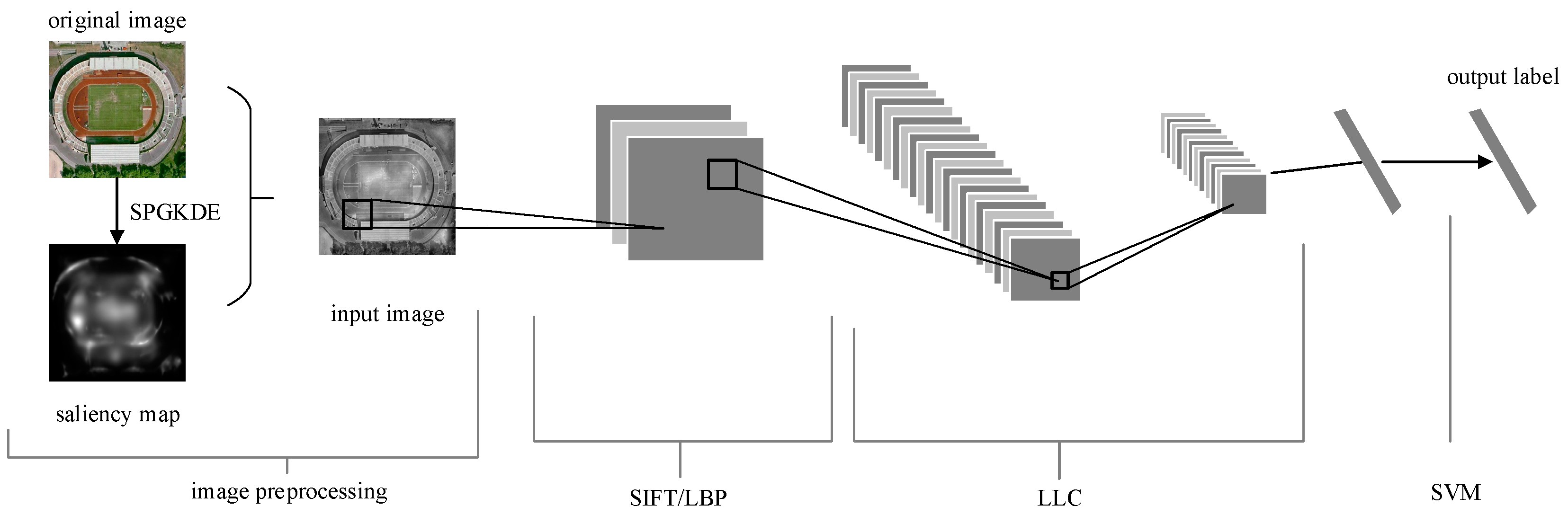

2. Methodology

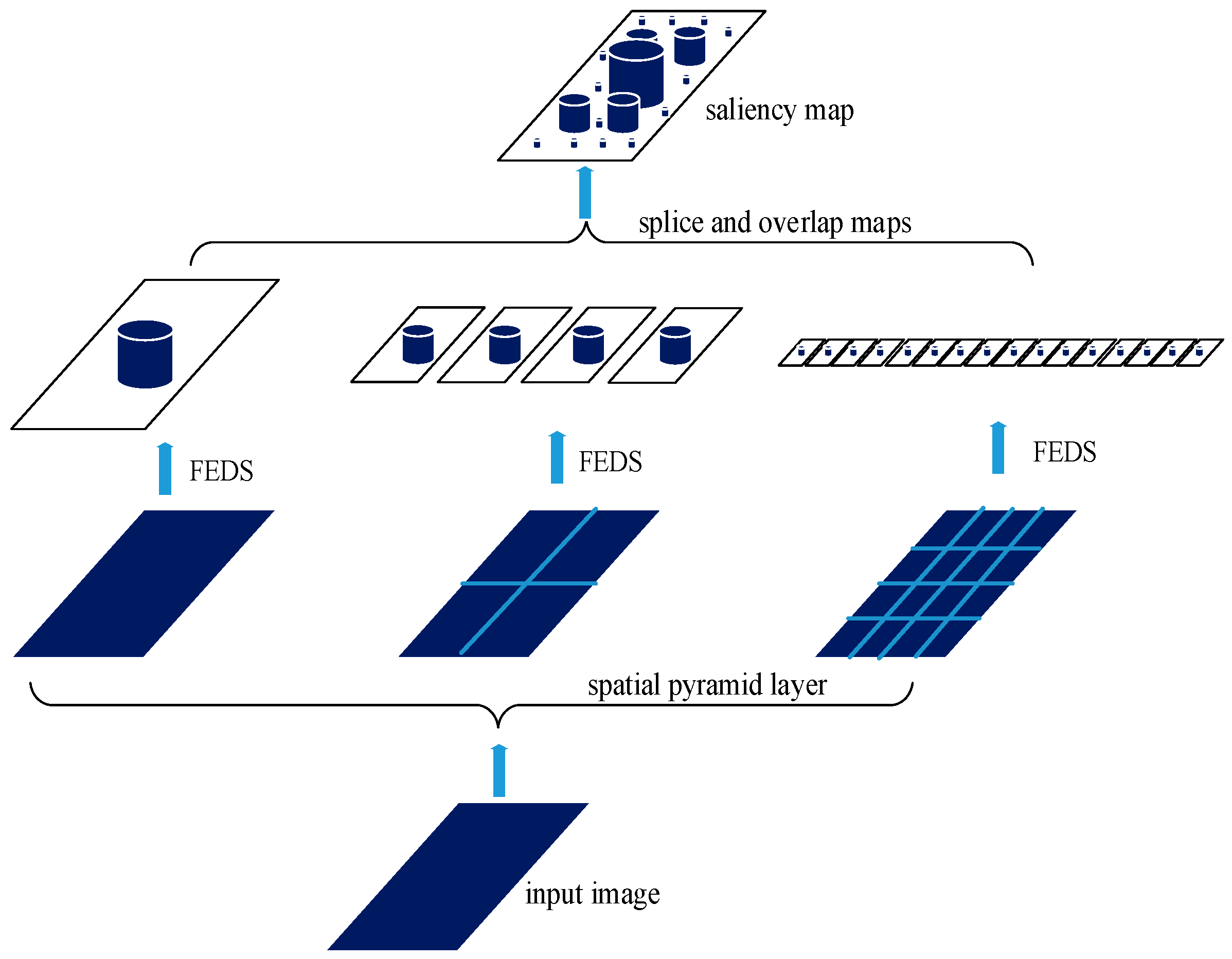

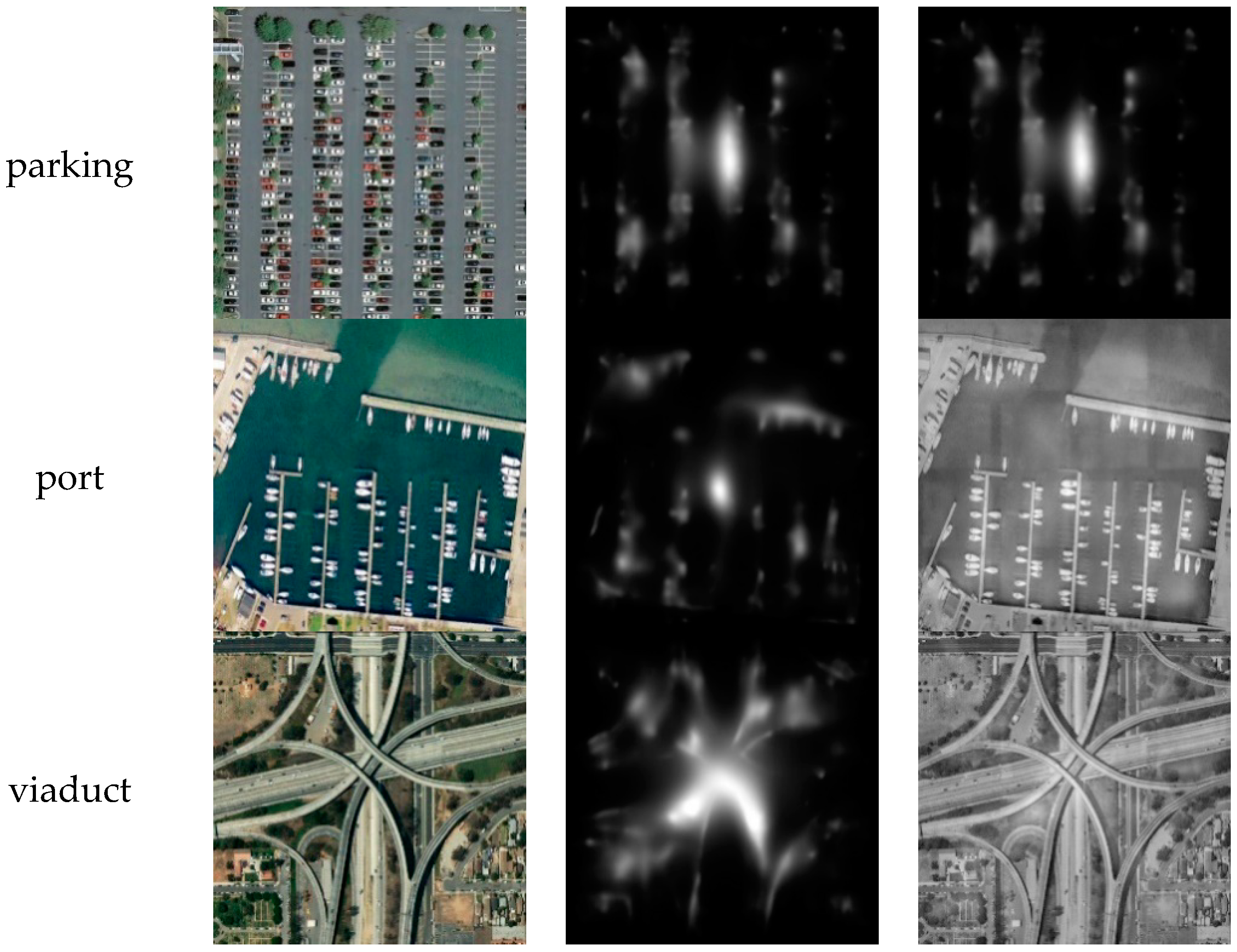

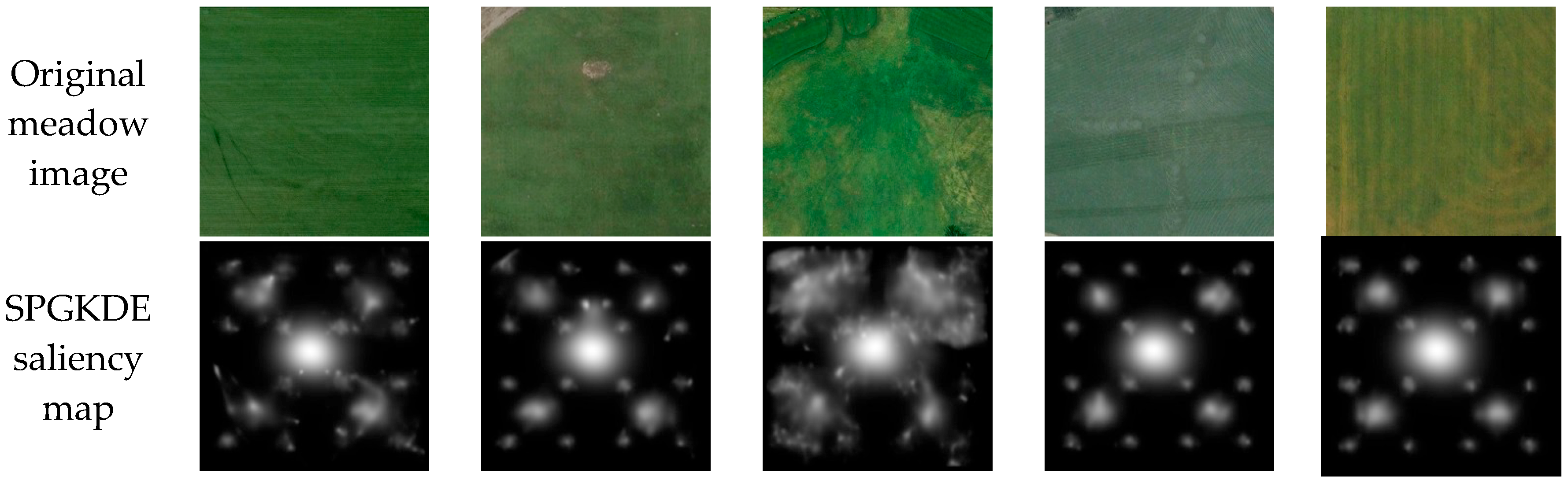

2.1. Spatial Pyramid Gaussian Kernel Density Estimation Saliency Detection Preprocessing

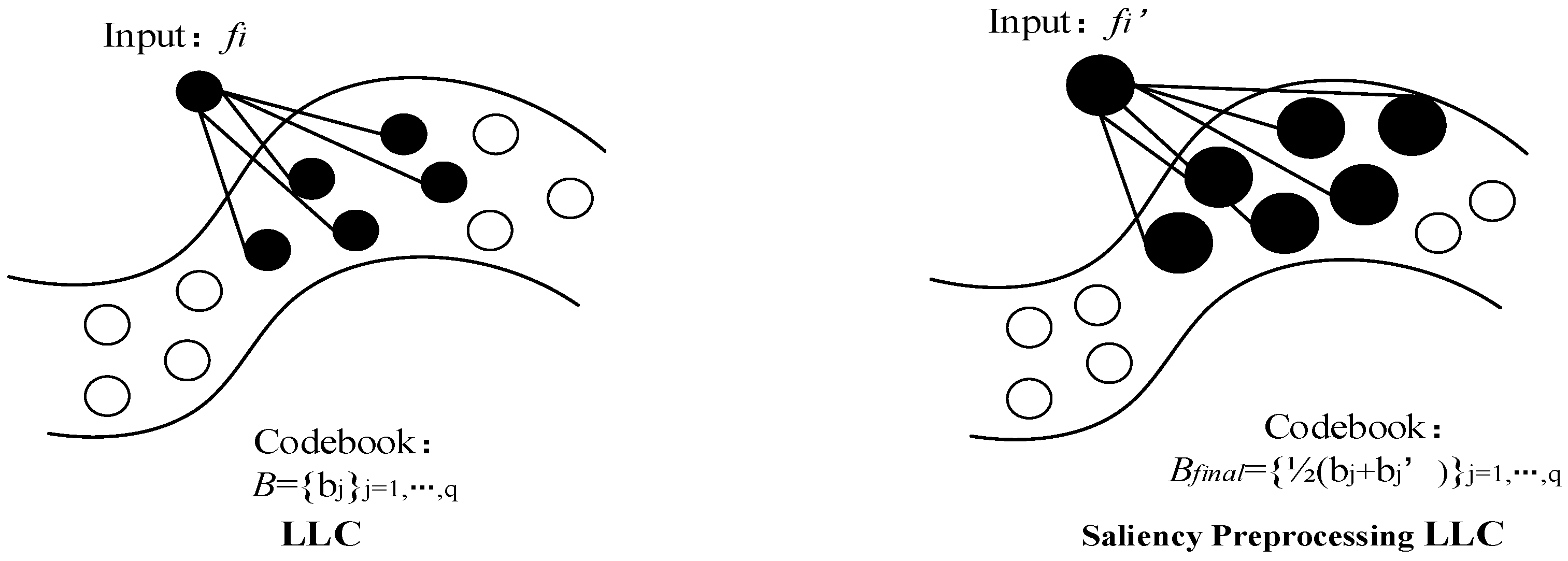

2.2. Saliency Preprocessing Locality-Constrained Linear Coding

3. Experiments and Discussion

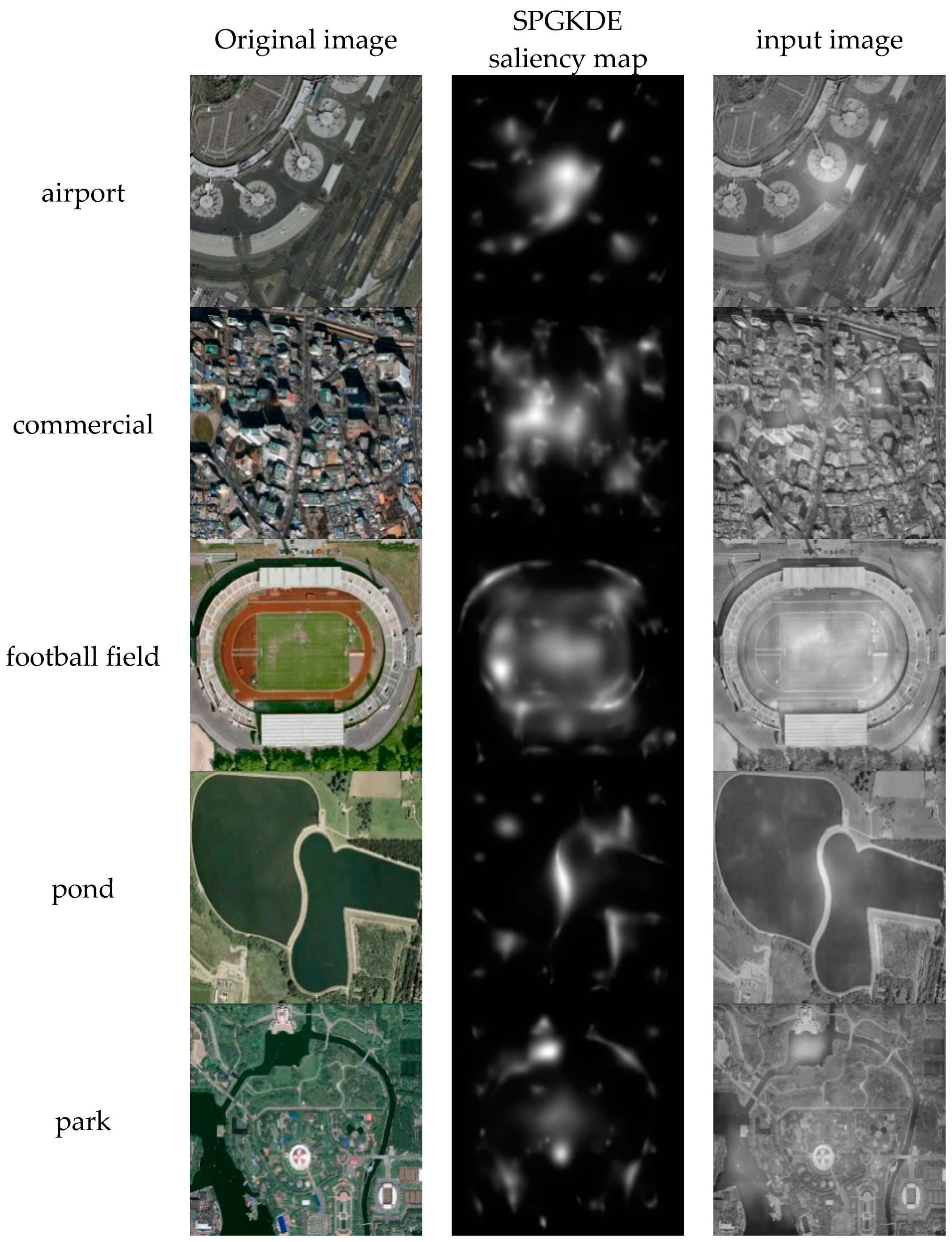

3.1. SPGKDE Preprocessing

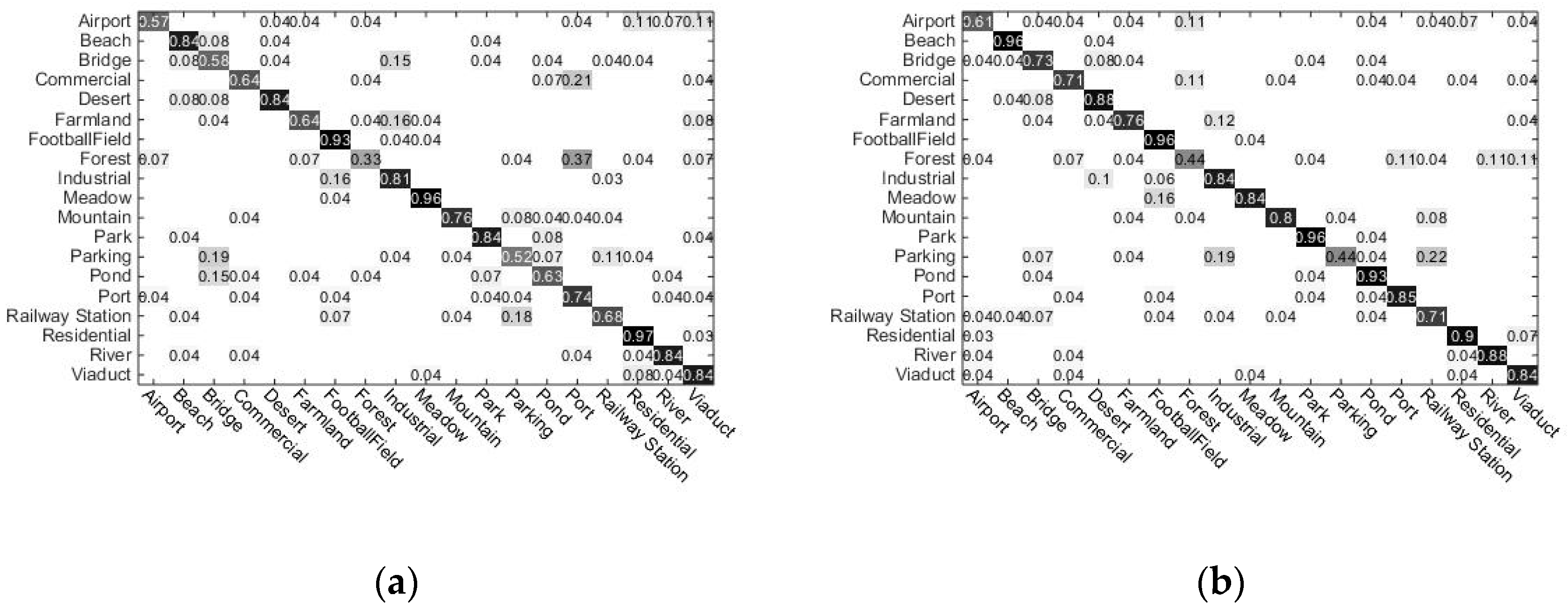

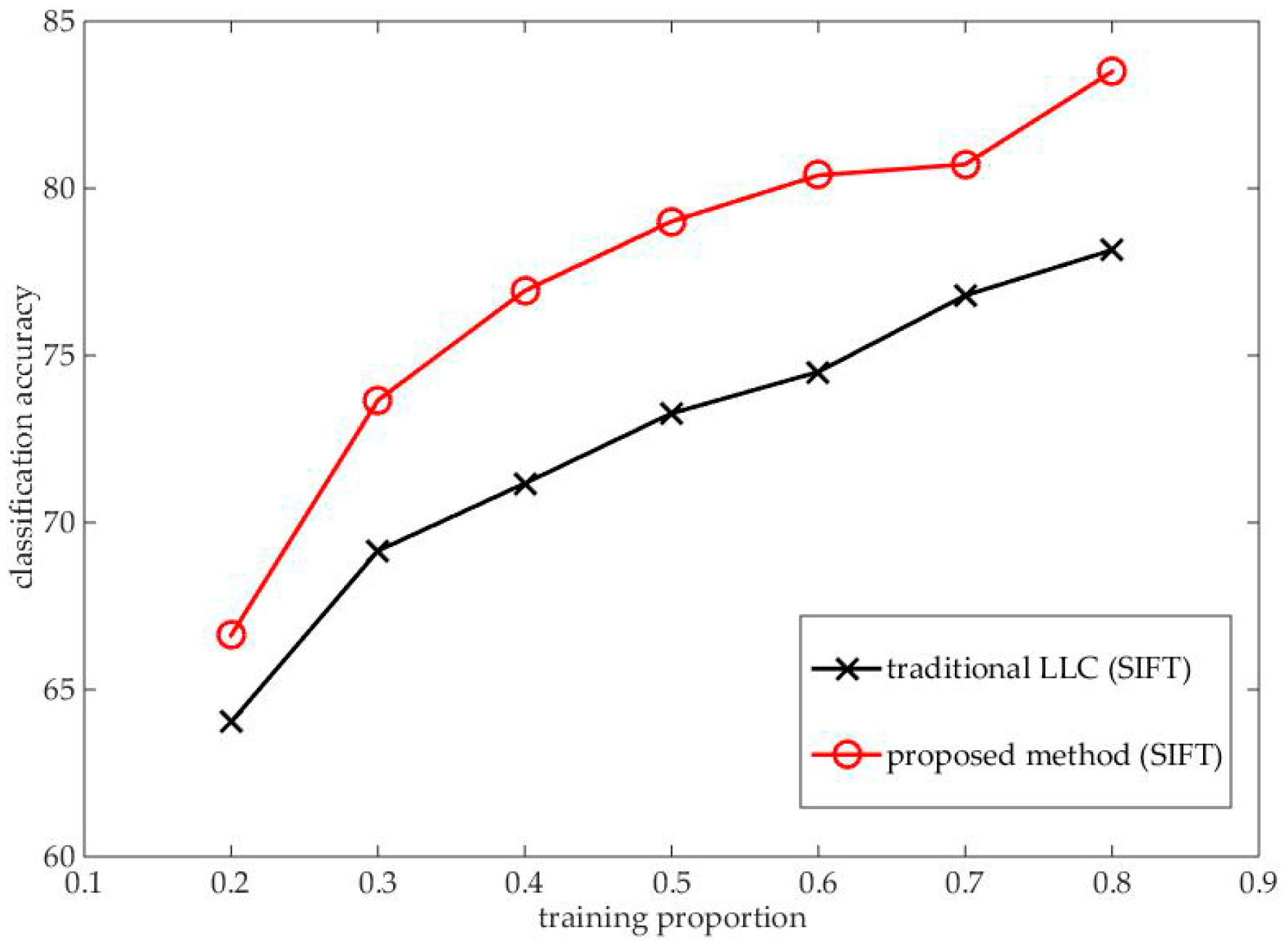

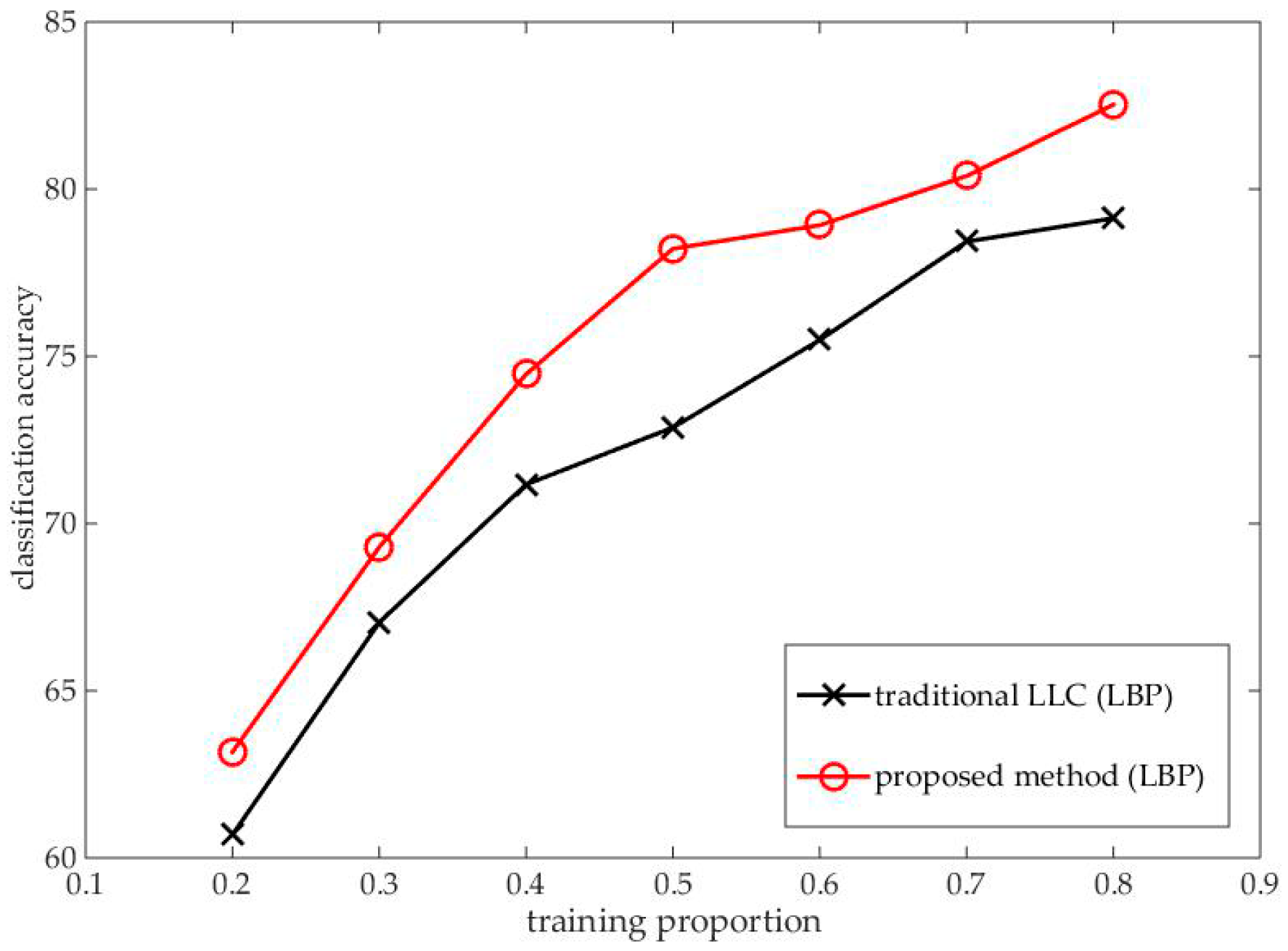

3.2. Performance of Traditional LLC and Proposed Method

3.2.1. Performance Based on SIFT Feature

3.2.2. Performance Based on LBP Feature

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Descriptor \ Method | Traditional BoF | Traditional LLC | Proposed Method |
|---|---|---|---|
| SIFT | 72.87% | 73.27% | 79.01% |

| Descriptor \ Method | Traditional BoF | Traditional LLC | Proposed Method |
|---|---|---|---|
| LBP | 68.71% | 72.87% | 78.22% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ji, L.; Hu, X.; Wang, M. Saliency Preprocessing Locality-Constrained Linear Coding for Remote Sensing Scene Classification. Electronics 2018, 7, 169. https://doi.org/10.3390/electronics7090169