FacePET: Enhancing Bystanders’ Facial Privacy with Smart Wearables/Internet of Things

Abstract

1. Introduction

- We present a taxonomy of recently proposed techniques aimed at enhancing the facial privacy of bystanders.

- We describe and evaluate the design of a wearable device called Facial Privacy Enhancing Technology (FacePET) that enhances the facial privacy of its wearer. To the best of our knowledge, this is the first work that describes an IoT device to enhance the privacy of its wearer.

- We describe a protocol over Bluetooth that gives FacePET users a way to grant consent to third parties who may want to take photos of them.

2. Related Work

2.1. Location Dependent Methods

2.2. Obfuscation Dependent Methods

2.3. Evaluation of Methods for Facial Privacy Protection

- Usability: In human-computer interaction, usability describes how easily a typical user can use a system to accomplish their goals [23]. Systems that enhance bystanders’ facial privacy should require minimal intervention from the bystander.

- Power consumption: In any battery-powered system, power consumption plays a substantial role because devices that deplete their batteries quickly must be recharged often. Since many solutions for protecting bystanders’ facial privacy run algorithms on mobile devices, power consumption is a design issue for such systems [24].

- Effectiveness: Some solutions to protect bystanders’ facial privacy use components and algorithms that identify contexts or faces (to blur or obfuscate them), while others combine extra devices or mechanisms with intelligent algorithms. Since these systems use artificial intelligence algorithms (e.g., classification algorithms) to detect these contexts and/or faces, they may produce false detections or misclassifications, which reduce the effectiveness and accuracy of the system.

3. FacePET: Enhancing Facial Privacy with Smart Wearables

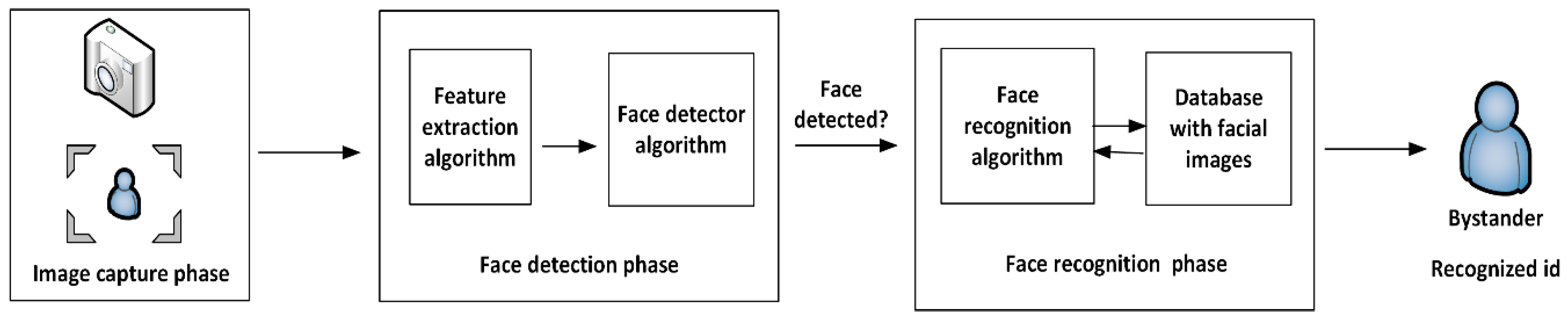

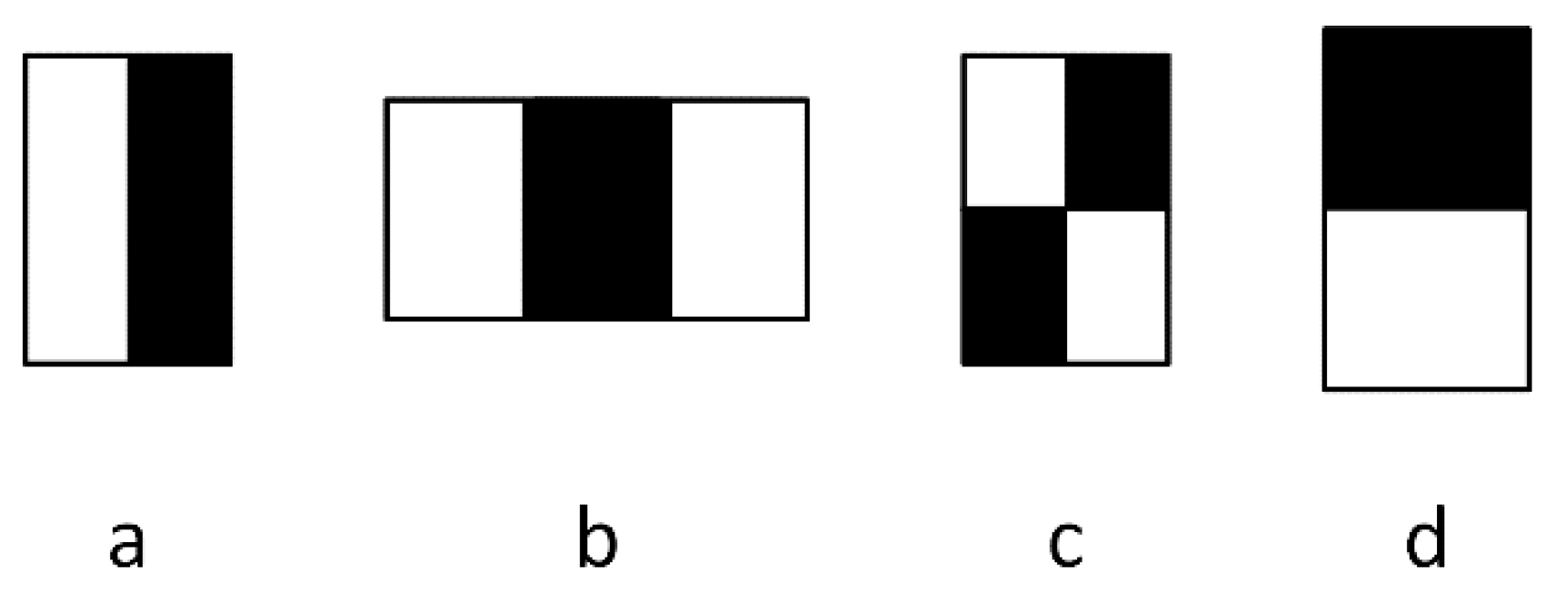

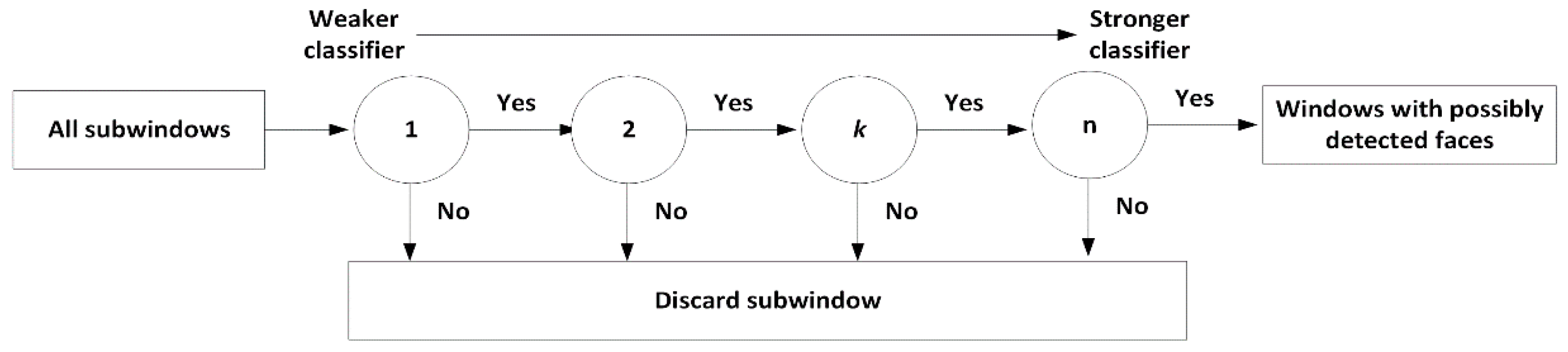

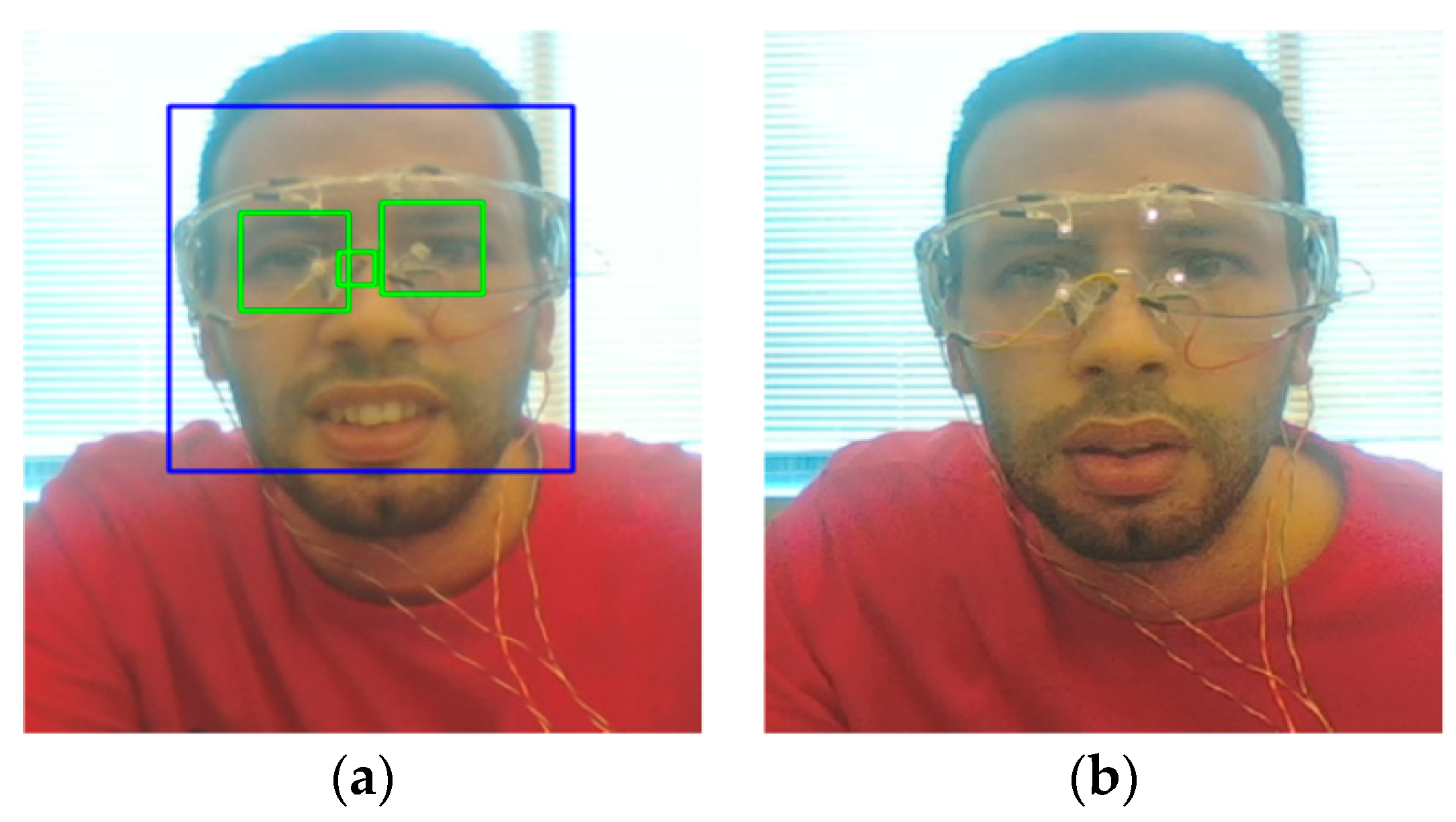

3.1. Face Detection and Recognition

3.2. Proposed FacePET System

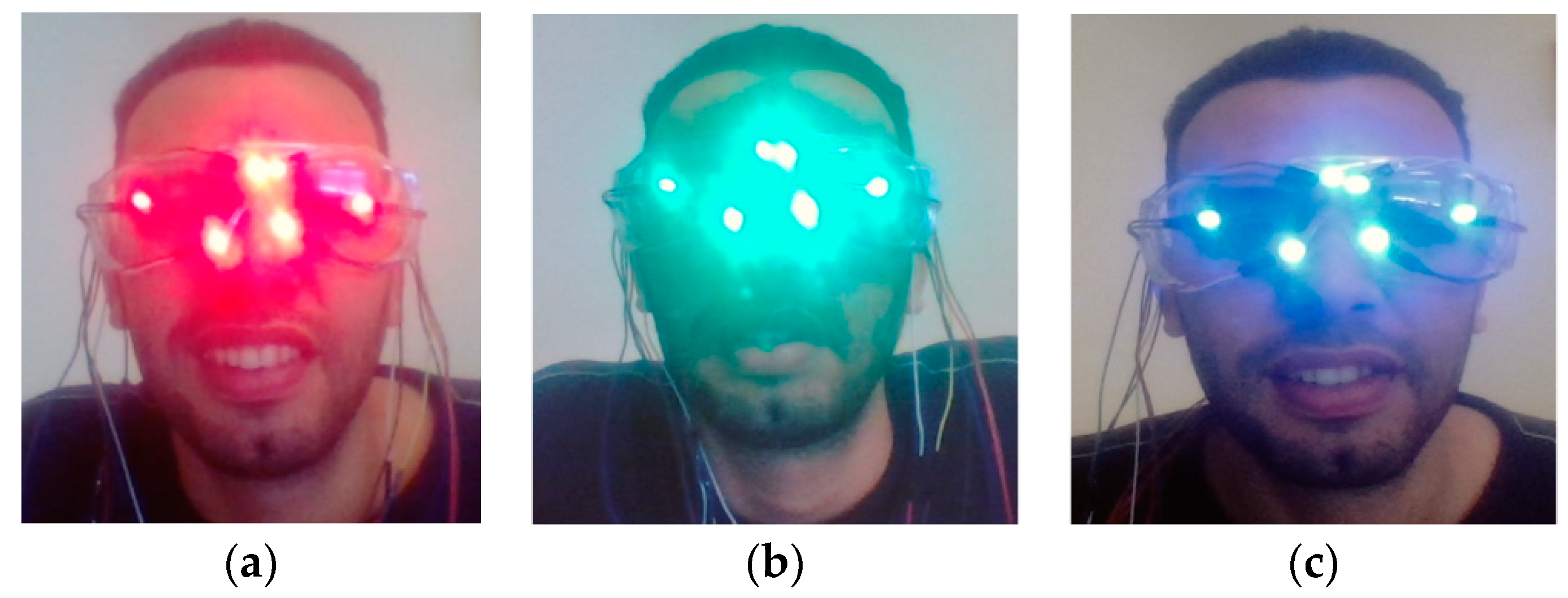

- In Yamada’s work [18], the authors proposed using near-infrared light to saturate the Charge-Coupled Device (CCD) sensor of digital cameras and thereby distort the Haar-like features used for face detection. In contrast, our work uses visible light. The reason is that newer cameras in smartphones (e.g., Apple’s iPhone 4 and newer devices) and other devices may include an IR filter that blocks the intended noise if IR light is used, which makes the near-infrared approach ineffective at protecting bystanders’ facial privacy against such cameras.

- Our system includes a BLE microcontroller that lets the bystander control an Access Control List (ACL), in which the bystander can grant third-party devices permission to take photos without the noise (by temporarily disabling the FacePET wearable), hence creating a “smart” wearable.

- We developed a bystander consent protocol over Bluetooth that enables communication between the bystander and third-party devices to provide and exchange privacy consents.
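The saturation argument above can be illustrated with a toy Python sketch. It is not the authors’ code, Yamada et al.’s, or OpenCV’s implementation: it computes a single two-rectangle Haar-like feature with an integral image, in the spirit of the Viola-Jones detector [41], on made-up 4×4 pixel arrays. Treating a positive value (darker upper “eye” band over a brighter lower “cheek” band) as face-like is an assumption of this toy; a real cascade combines many such features over sliding windows at multiple scales.

```python
"""Toy illustration: how saturating the eye band changes a Haar-like feature.

Not the FacePET, Yamada et al., or OpenCV implementation; pixel values are
made up for illustration.
"""


def integral_image(img: list[list[int]]) -> list[list[int]]:
    """Summed-area table with a zero border: ii[y][x] = sum of img[:y][:x]."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: list[list[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w-by-h rectangle whose top-left pixel is (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def eye_band_feature(img: list[list[int]]) -> int:
    """Two-rectangle feature: lower (cheek) band minus upper (eye) band.

    Positive when the upper band is darker than the lower band, the contrast
    pattern this toy treats as "face-like".
    """
    ii = integral_image(img)
    h, w = len(img), len(img[0])
    top = h // 2
    eyes = rect_sum(ii, 0, 0, w, top)
    cheeks = rect_sum(ii, 0, top, w, h - top)
    return cheeks - eyes


if __name__ == "__main__":
    face = [[60] * 4, [60] * 4, [180] * 4, [180] * 4]   # dark eyes, brighter cheeks
    lit = [[255] * 4, [255] * 4, [180] * 4, [180] * 4]  # eye band saturated by LEDs
    print(eye_band_feature(face), eye_band_feature(lit))  # 960 -600
```

Brightening the upper band flips the sign of the feature, so a classifier stage that expects the eye region to be darker than the cheeks would reject the window. The same reasoning applies whether the saturating light is near-infrared or visible, which is why an IR filter matters only for the former.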

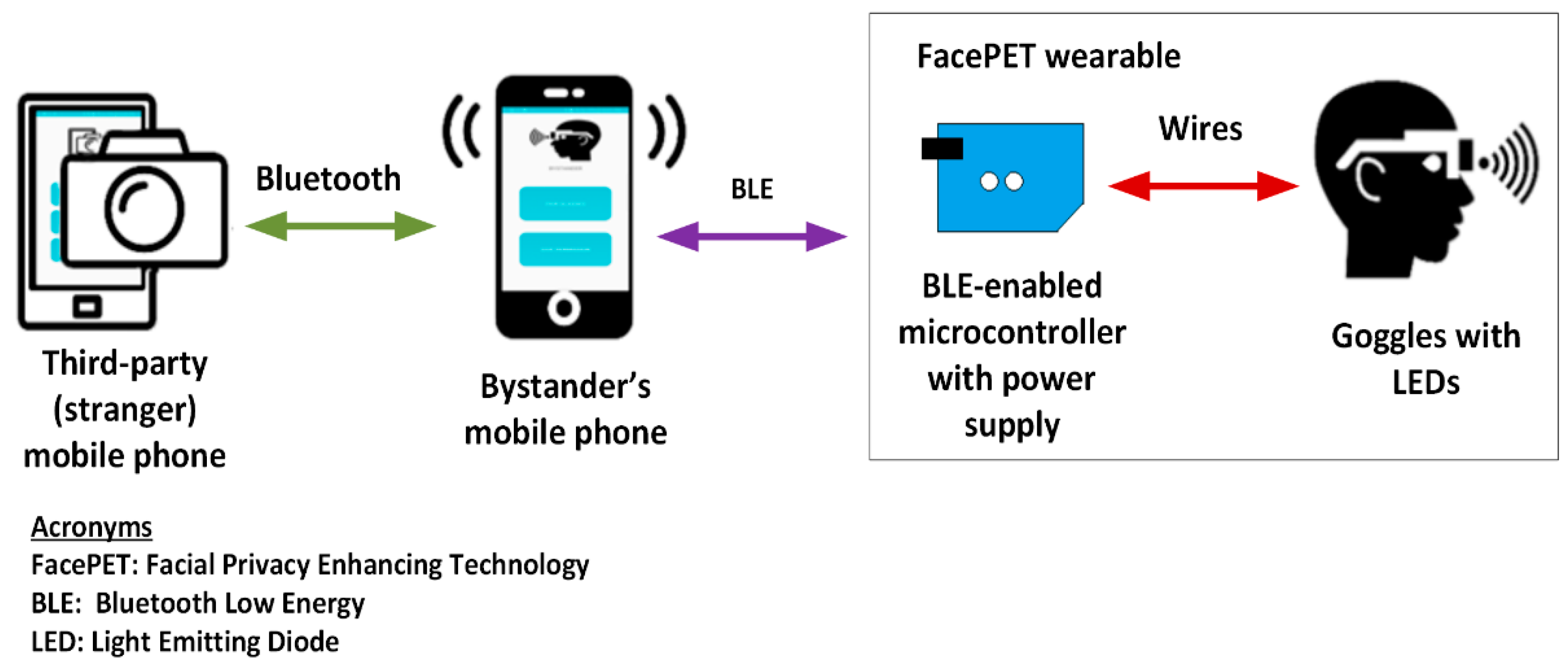

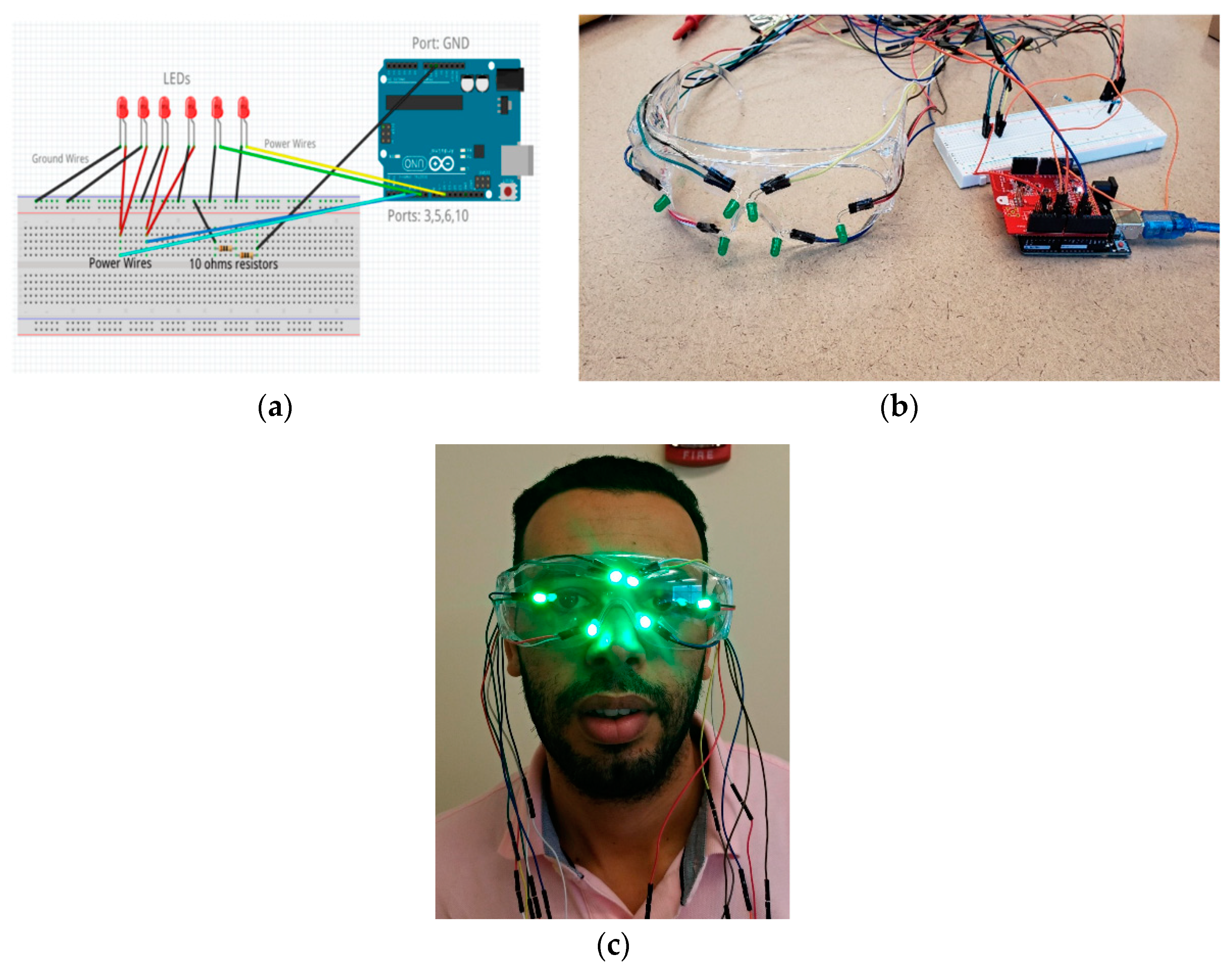

3.2.1. FacePET System’s Hardware Architecture

- Goggles with LEDs: The goggles are equipped with LEDs that are turned on and off by the microcontroller. To avoid physical discomfort to the bystander when the LEDs are on, the goggles’ lenses should have a filter tuned to the wavelength of the LEDs. The LEDs are connected to the BLE-enabled microcontroller through wires, which also supply their power.

- BLE-enabled microcontroller: This component controls the LEDs on the goggles and connects to the bystander’s mobile phone via Bluetooth Low Energy (BLE). The microcontroller has its own power supply, independent of the bystander’s mobile phone; this supply also powers the LEDs. Depending on the privacy protocols implemented, the Access Control List (ACL) used to disable the LEDs may be implemented either in the microcontroller’s software or in the software on the bystander’s mobile phone. The FacePET wearable device consists of the BLE microcontroller and the goggles (as shown in Figure 5).

- Bystander’s mobile phone: The bystander’s mobile phone runs software that configures the wearable’s microcontroller. In addition, it runs software that grants consent to third parties to turn off the LEDs when an authorized third party wishes to take a photo that includes the bystander. Depending on the privacy protocols implemented, the ACL may be implemented in the bystander’s mobile phone, or the third party may communicate directly with the wearable. The bystander’s mobile phone communicates with the microcontroller via BLE and with third-party mobile phones via Bluetooth. In future implementations, this communication between smartphones may also be Wi-Fi or IP-based.

- Third-party (stranger) mobile phone: A third party uses this mobile phone to request consent to take photos of the bystander. In our current implementation, consent is requested via Bluetooth from the bystander’s mobile phone before the third party can take a photo of the bystander. If the bystander has given consent, then when the third party is about to take a photo, the third-party phone contacts the bystander’s device again to request that the goggles’ LEDs be turned off.

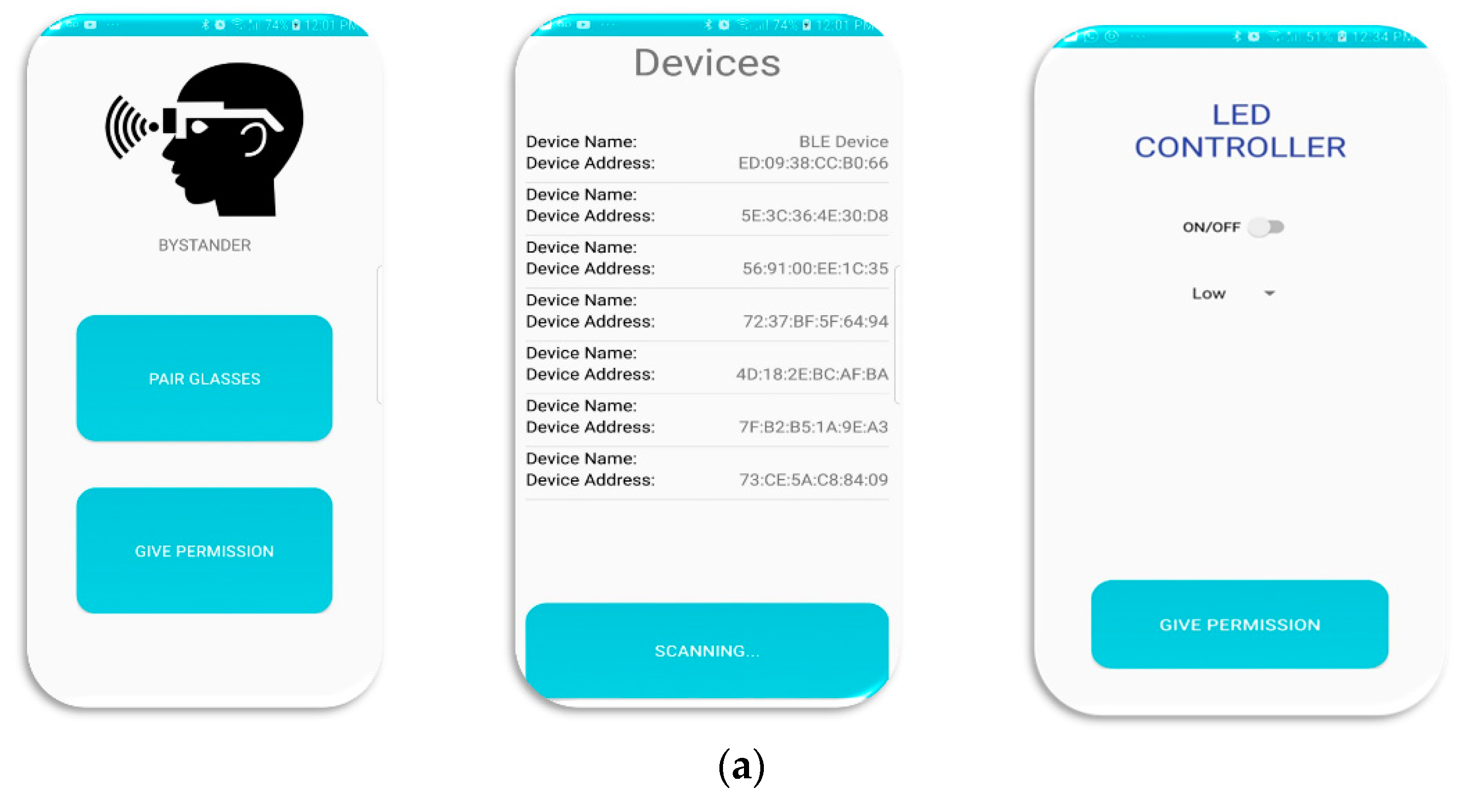

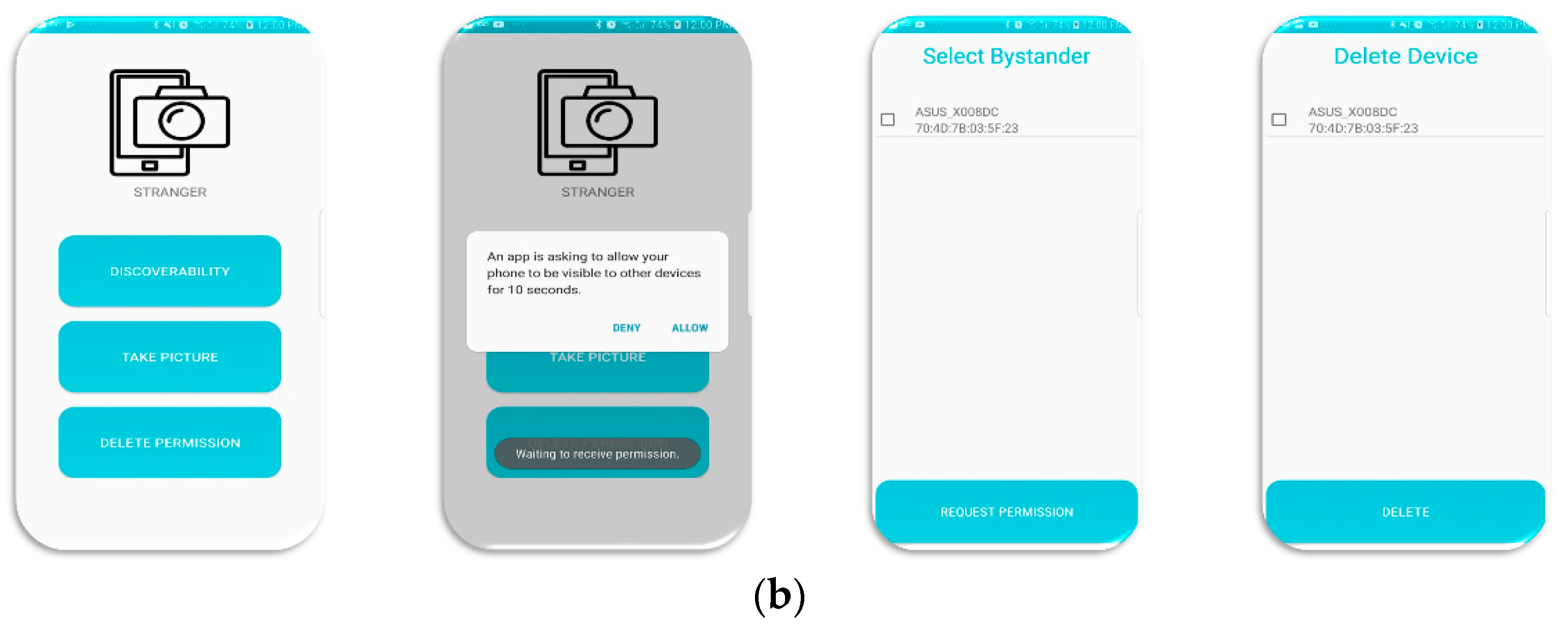

3.2.2. Proposed FacePET System’s Software Components

- FacePET microcontroller’s software: In the current implementation of the FacePET wearable device, this component turns the goggles’ LEDs on and off and changes their intensity (independently, in groups of two LEDs), and it provides a mechanism to control these LEDs from the bystander’s mobile phone via Bluetooth Low Energy (BLE). Since we built the wearable device with the Arduino Uno and the Seedstudio BLE Shield, we used the RBL_nRF8001 and BLE-SDK Arduino libraries to create a Generic Attribute Profile (GATT) BLE server that receives commands from the bystander’s mobile phone.

- FacePET bystander’s mobile application: This application gives the bystander a controller for the FacePET wearable device via BLE to turn the LEDs on and off and change their intensity. It also implements the ACL for the FacePET wearable, as well as a Bluetooth protocol that gives the bystander wearing the FacePET wearable device a mechanism to grant consent to third parties who wish to take photos. Initially, the FacePET bystander’s application scans for a FacePET wearable device in the area and, once connected to it, enables the LEDs on the wearable. The LEDs stay on until the bystander turns them off, or until a third-party FacePET (stranger) mobile application that has consent requests to take a photo. The protocol to provide consent is described in Section 3.2.3. Figure 7a shows a few screenshots of this mobile application.

- FacePET third-party (stranger) mobile application: This application gives a third party (stranger) a mechanism to ask the bystander, via Bluetooth, for consent to take photos of him or her. Once consent is given, the application sends a command to the FacePET bystander’s mobile application to temporarily disable the FacePET wearable device (as described in Section 3.2.3). Figure 7b shows a few screenshots of this mobile application.
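The paper does not specify the format of the commands that the bystander’s application writes to the wearable’s GATT server. As an illustration only, the sketch below assumes a hypothetical three-byte payload (LED group, on/off flag, intensity) that matches the capabilities described above: switching groups of two LEDs on or off and setting their intensity. The byte layout, the `LedCommand` name, and the 0-255 intensity range (chosen because it matches the 8-bit PWM range of Arduino’s `analogWrite()`) are all assumptions, not the FacePET firmware’s actual protocol.

```python
import struct
from dataclasses import dataclass

# Hypothetical payload layout (not taken from the paper), 3 bytes:
#   byte 0: LED group index (each group drives two LEDs)
#   byte 1: 1 = LEDs on, 0 = LEDs off
#   byte 2: intensity 0-255 (e.g., an 8-bit PWM duty value on the Arduino side)
_FORMAT = "<BBB"


@dataclass(frozen=True)
class LedCommand:
    group: int
    on: bool
    intensity: int = 255

    def encode(self) -> bytes:
        """Serialize the command into the bytes written to the GATT characteristic."""
        for name, value in (("group", self.group), ("intensity", self.intensity)):
            if not 0 <= value <= 255:
                raise ValueError(f"{name} must fit in one unsigned byte, got {value}")
        return struct.pack(_FORMAT, self.group, int(self.on), self.intensity)

    @classmethod
    def decode(cls, payload: bytes) -> "LedCommand":
        """Parse a payload as the microcontroller would before driving the LEDs."""
        group, on_flag, intensity = struct.unpack(_FORMAT, payload)
        return cls(group=group, on=bool(on_flag), intensity=intensity)


if __name__ == "__main__":
    # Turn group 1 on at roughly half intensity, then switch group 0 off.
    for cmd in (LedCommand(1, True, 128), LedCommand(0, False, 0)):
        payload = cmd.encode()
        print(payload.hex(), LedCommand.decode(payload))
```

A fixed-length payload keeps parsing on the microcontroller trivial; in this Python stand-in, `struct.unpack` raises `struct.error` for any payload that is not exactly three bytes.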

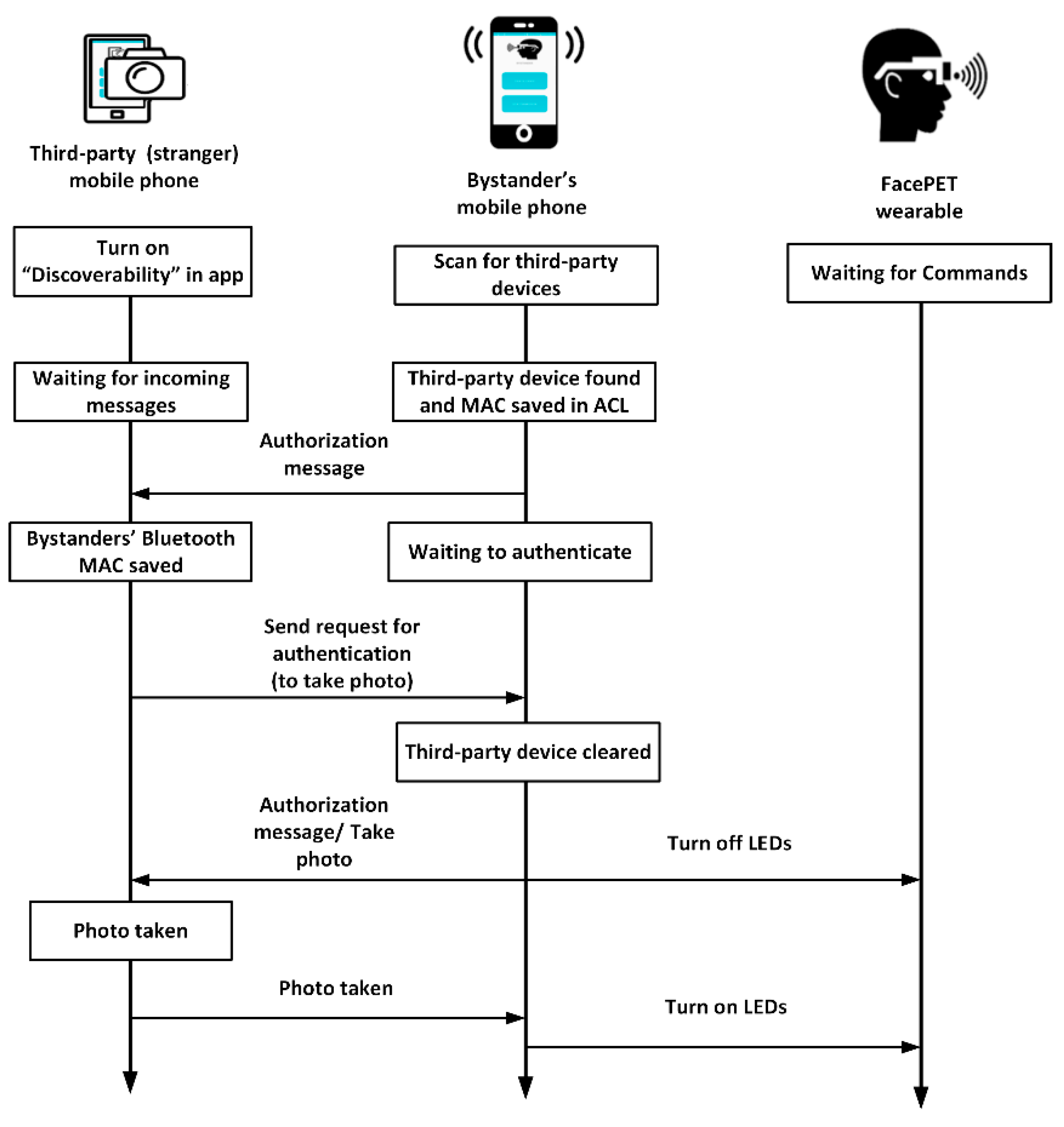

3.2.3. FacePET System’s Consent Protocol

- Betsy (the bystander wearing FacePET) opens the FacePET bystander’s mobile application and scans for Bluetooth devices to obtain the Bluetooth MAC address and device name of the phone belonging to Trisha (the third party).

- Once Trisha’s device is discovered via Bluetooth, Betsy authorizes Trisha’s device and the bystander’s application saves Trisha’s Bluetooth MAC address and device name in a file (Betsy’s application adds Trisha’s device to the ACL).

- Betsy’s FacePET bystander’s application sends a message via Bluetooth to Trisha’s FacePET application notifying it that Trisha’s device is cleared to take photos of Betsy. At this point, Betsy’s FacePET application creates a Bluetooth server socket to wait for photo requests from Trisha’s FacePET application.

- Trisha’s application saves Betsy’s Bluetooth address so that it can later be used to request that Betsy’s FacePET wearable’s LEDs be turned off (as long as both mobile phones are in range and Betsy’s FacePET mobile application still has Trisha’s phone authorized in the ACL).

- Trisha opens her FacePET mobile application, presses the “Take Photo” button, and selects Betsy’s device from the list. Trisha’s device then sends an authentication message to Betsy’s device via Bluetooth.

- Betsy’s FacePET mobile application receives the authentication message and checks whether Trisha’s device is authorized in the ACL. If it is, the application notifies Trisha’s application that her device can take the photo, and it sends a message via BLE to Betsy’s FacePET wearable device to turn off the LEDs. Otherwise, Betsy’s application ignores the message and the LEDs stay on.

- Trisha takes the photo, and her application then sends a message back to Betsy’s FacePET mobile application to turn the LEDs on again.
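The consent steps above can be sketched as a small in-process simulation. This is an illustration under stated assumptions, not the FacePET applications: Bluetooth sockets and BLE writes are replaced by direct method calls, the MAC addresses are made-up placeholders, and all class and method names are hypothetical. It does follow the rules stated in the steps: the ACL stores Bluetooth MAC addresses and device names, requests from devices not in the ACL are ignored so the LEDs stay on, and the LEDs are turned back on after the photo.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Wearable:
    """Stand-in for the FacePET goggles; a BLE write becomes set_leds()."""
    leds_on: bool = False
    history: list[bool] = field(default_factory=list)  # every LED state change

    def set_leds(self, on: bool) -> None:
        self.leds_on = on
        self.history.append(on)


@dataclass
class ThirdPartyApp:
    """Trisha's application (hypothetical names)."""
    address: str  # Bluetooth MAC address
    name: str     # Bluetooth device name
    cleared_by: set[str] = field(default_factory=set)  # saved bystander addresses

    def on_cleared(self, bystander_address: str) -> None:
        # Step 4: remember the bystander's address for later photo requests.
        self.cleared_by.add(bystander_address)

    def take_photo(self, bystander: BystanderApp) -> bool:
        # Step 5: only bystanders that cleared this device appear in the list.
        if bystander.address not in self.cleared_by:
            return False
        if not bystander.on_photo_request(self.address):
            return False  # request ignored: the LEDs stay on
        # ... the camera would capture the photo here ...
        bystander.on_photo_taken()  # Step 7: ask for the LEDs to be turned back on
        return True


@dataclass
class BystanderApp:
    """Betsy's application: owns the ACL and drives the wearable over BLE."""
    address: str
    wearable: Wearable
    acl: dict[str, str] = field(default_factory=dict)  # MAC address -> device name

    def connect(self) -> None:
        # After connecting to the wearable, the application enables the LEDs.
        self.wearable.set_leds(True)

    def authorize(self, peer: ThirdPartyApp) -> None:
        # Steps 1-3: save the discovered device in the ACL and notify it.
        self.acl[peer.address] = peer.name
        peer.on_cleared(self.address)

    def revoke(self, peer_address: str) -> None:
        self.acl.pop(peer_address, None)

    def on_photo_request(self, peer_address: str) -> bool:
        # Step 6: grant only if the requester is in the ACL; otherwise ignore.
        if peer_address not in self.acl:
            return False
        self.wearable.set_leds(False)
        return True

    def on_photo_taken(self) -> None:
        self.wearable.set_leds(True)


if __name__ == "__main__":
    goggles = Wearable()
    betsy = BystanderApp("AA:AA:AA:AA:AA:01", goggles)  # placeholder MACs
    trisha = ThirdPartyApp("BB:BB:BB:BB:BB:02", "Trisha's phone")
    betsy.connect()
    betsy.authorize(trisha)
    print(trisha.take_photo(betsy), goggles.history)  # True [True, False, True]
```

Because the ACL check happens on the bystander’s side, revoking consent only requires removing an ACL entry: the address Trisha’s application saved in step 4 no longer turns the LEDs off.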

4. Evaluation of the FacePET System

5. Limitations and Future Research

- People would ask why the user was wearing such a device.

- The current model is too big and draws attention.

- The model is not stylish.

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- The Ericsson Mobility Report. Available online: https://www.ericsson.com/en/mobility-report (accessed on 30 August 2018).

- Perez, A.J.; Zeadally, S.; Griffith, S. Bystanders’ Privacy. IT Prof. 2017, 19, 61–65.

- Perez, A.J.; Zeadally, S. Privacy Issues and Solutions for Consumer Wearables. IT Prof. 2018, 20, 46–56.

- This Russian Technology Can Identify You with Just a Picture of Your Face. Available online: http://www.businessinsider.com/findface-facial-recognition-can-identify-you-with-just-a-picture-of-your-face-2016-6 (accessed on 18 September 2018).

- Mitchell, R. Sensing mine, yours, theirs, and ours: Interpersonal ubiquitous interactions. In Proceedings of the 2015 ACM Int’l. Symposium Wearable Computers (ISWC 2015), Osaka, Japan, 7–11 September 2015; pp. 933–938.

- Denning, T.; Dehlawi, Z.; Kohno, T. In situ with bystanders of augmented reality glasses: Perspectives on recording and privacy-mediating technologies. In Proceedings of the 32nd SIGCHI Conference on Human Factors in Computing Systems, Toronto, ON, Canada, 26 April–1 May 2014; pp. 2377–2386.

- Flammer, I. Genteel Wearables: Bystander-Centered Design. IEEE Secur. Priv. 2016, 14, 73–79.

- Hatuka, T.; Toch, E. Being visible in public space: The normalisation of asymmetrical visibility. Urban Stud. 2017, 54, 984–998.

- Palen, L.; Salzman, M.; Youngs, E. Going wireless: Behavior & practice of new mobile phone users. In Proceedings of the 2000 ACM Conf. on Computer Supported Cooperative Work (CSCW’00), Philadelphia, PA, USA, 2–6 December 2000; pp. 201–210.

- Motti, V.G.; Caine, K. Users’ privacy concerns about wearables. In Proceedings of the International Conference on Financial Cryptography and Data Security, San Juan, Puerto Rico, 26–30 January 2015; pp. 231–244.

- Jarvis, J. Public Parts: How Sharing in the Digital Age Improves the Way We Work and Live, 1st ed.; Simon & Schuster: New York, NY, USA, 2011; pp. 1–272. ISBN 978-1451636000.

- Truong, K.N.; Patel, S.N.; Summet, J.W.; Abowd, G.D. Preventing camera recording by designing a capture-resistant environment. In Proceedings of the International Conference on Ubiquitous Computing, Tokyo, Japan, 11–14 September 2005; pp. 73–86.

- Tiscareno, V.; Johnson, K.; Lawrence, C. Systems and Methods for Receiving Infrared Data with a Camera Designed to Detect Images Based on Visible Light. U.S. Patent 8,848,059, 30 September 2014.

- Wagstaff, J. Using Bluetooth to Disable Camera Phones. Available online: http://www.loosewireblog.com/2004/09/using_bluetooth.html (accessed on 21 September 2018).

- Kapadia, A.; Henderson, T.; Fielding, J.J.; Kotz, D. Virtual walls: Protecting digital privacy in pervasive environments. In Proceedings of the International Conference Pervasive Computing, LNCS 4480, Toronto, ON, Canada, 13–16 May 2007; pp. 162–179.

- Blank, P.; Kirrane, S.; Spiekermann, S. Privacy-Aware Restricted Areas for Unmanned Aerial Systems. IEEE Secur. Priv. 2018, 16, 70–79.

- Pidcock, S.; Smits, R.; Hengartner, U.; Goldberg, I. Notisense: An urban sensing notification system to improve bystander privacy. In Proceedings of the 2nd International Workshop on Sensing Applications on Mobile Phones (PhoneSense), Seattle, WA, USA, 12–15 June 2011; pp. 1–5.

- Yamada, T.; Gohshi, S.; Echizen, I. Use of invisible noise signals to prevent privacy invasion through face recognition from camera images. In Proceedings of the ACM Multimedia 2012 (ACM MM 2012), Nara, Japan, 29 October 2012; pp. 1315–1316.

- Yamada, T.; Gohshi, S.; Echizen, I. Privacy visor: Method based on light absorbing and reflecting properties for preventing face image detection. In Proceedings of the 2013 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Manchester, UK, 13–16 October 2013; pp. 1572–1577.

- Sharif, M.; Bhagavatula, S.; Bauer, L.; Reiter, M.K. Accessorize to a crime: Real and stealthy attacks on state-of-the-art face recognition. In Proceedings of the 2016 ACM SIGSAC Conf. Computer and Communications Security (CCS 2016), Vienna, Austria, 24–28 October 2016; pp. 1528–1540.

- ObscuraCam: Secure Smart Camera. Available online: https://guardianproject.info/apps/obscuracam/ (accessed on 30 September 2018).

- Aditya, P.; Sen, R.; Druschel, P.; Joon Oh, S.; Benenson, R.; Fritz, M.; Schiele, B.; Bhattacharjee, B.; Wu, T.T. I-pic: A platform for privacy-compliant image capture. In Proceedings of the 14th Annual International Conference on Mobile Systems, Applications, and Services (MobiSys), Singapore, 25–30 June 2016; pp. 249–261.

- Nielsen, J. Usability Engineering, 1st ed.; Academic Press: Cambridge, MA, USA, 1993; ISBN 978-0125184052.

- Zeadally, S.; Khan, S.; Chilamkurti, N. Energy-efficient Networking: Past, present, and future. J. Supercomput. 2012, 62, 1093–1118.

- Hjelmås, E.; Low, B.K. Face detection: A survey. Comput. Vis. Image Underst. 2001, 83, 236–274.

- Yang, M.H.; Kriegman, D.J.; Ahuja, N. Detecting faces in images: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 34–58.

- Facebook’s Push for Facial Recognition Prompts Privacy Alarms. Available online: https://www.nytimes.com/2018/07/09/technology/facebook-facial-recognition-privacy.html (accessed on 5 October 2018).

- Inside China’s Dystopian Dreams: A.I., Shame and Lots of Cameras. Available online: https://www.nytimes.com/2018/07/08/business/china-surveillance-technology.html (accessed on 5 October 2018).

- Face Recognition. Available online: https://www.fbi.gov/file-repository/about-us-cjis-fingerprints_biometrics-biometric-center-of-excellences-face-recognition.pdf/view (accessed on 5 October 2018).

- Roesner, F.; Molnar, D.; Moshchuk, A.; Kohno, T.; Wang, H.J. World-driven access control for continuous sensing. In Proceedings of the 2014 ACM SIGSAC Conference on Computer and Communications Security (CCS 2014), Scottsdale, AZ, USA, 3–7 November 2014; pp. 1169–1181.

- Maganis, G.; Jung, J.; Kohno, T.; Sheth, A.; Wetherall, D. Sensor Tricorder: What does that sensor know about me? In Proceedings of the 12th Workshop on Mobile Computing Systems and Applications (HotMobile ’11), Phoenix, AZ, USA, 1–3 March 2011; pp. 98–103.

- Templeman, R.; Korayem, M.; Crandall, D.J.; Kapadia, A. PlaceAvoider: Steering First-Person Cameras away from Sensitive Spaces. In Proceedings of the 2014 Network and Distributed System Security (NDSS) Symposium, San Diego, CA, USA, 23–26 February 2014; pp. 23–26.

- AVG Reveals Invisibility Glasses at Pepcom Barcelona. Available online: http://now.avg.com/avg-reveals-invisibility-glasses-at-pepcom-barcelona (accessed on 30 September 2018).

- Frome, A.; Cheung, G.; Abdulkader, A.; Zennaro, M.; Wu, B.; Bissacco, A.; Adam, H.; Neven, H.; Vincent, L. Large-scale privacy protection in Google Street View. In Proceedings of the 2009 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 2373–2380.

- Li, A.; Li, Q.; Gao, W. Privacycamera: Cooperative privacy-aware photographing with mobile phones. In Proceedings of the 2016 13th Annual IEEE International Conference on Sensing, Communication, and Networking (SECON), London, UK, 27–30 June 2016; pp. 1–9.

- Schiff, J.; Meingast, M.; Mulligan, D.K.; Sastry, S.; Goldberg, K. Respectful cameras: Detecting visual markers in real-time to address privacy concerns. In Proceedings of the 2007 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Diego, CA, USA, 29 October–2 November 2007; pp. 65–89.

- Ra, M.R.; Lee, S.; Miluzzo, E.; Zavesky, E. Do Not Capture: Automated Obscurity for Pervasive Imaging. IEEE Internet Comput. 2017, 21, 82–87.

- Ashok, A.; Nguyen, V.; Gruteser, M.; Mandayam, N.; Yuan, W.; Dana, K. Do not share!: Invisible light beacons for signaling preferences to privacy-respecting cameras. In Proceedings of the 1st ACM MobiCom Workshop on Visible Light Communication Systems, Maui, HI, USA, 7–11 September 2014; pp. 39–44.

- Ye, T.; Moynagh, B.; Albatal, R.; Gurrin, C. Negative face blurring: A privacy-by-design approach to visual lifelogging with google glass. In Proceedings of the 23rd ACM International Conference on Information and Knowledge Management, Shanghai, China, 3–7 November 2014; pp. 2036–2038.

- Zhang, C.; Zhang, Z. A Survey of Recent Advances in Face Detection; Microsoft Technical Report; Microsoft Corporation: Redmond, WA, USA, 2010.

- Viola, P.; Jones, M. Robust real-time face detection. Int. J. Comput. Vis. 2004, 57, 137–154.

- Arduino Uno Rev3. Available online: https://store.arduino.cc/usa/arduino-uno-rev3 (accessed on 8 October 2018).

- Seedstudio Bluetooth Low Energy Shield Version 2.1. Available online: https://www.seeedstudio.com/Bluetooth-4.0-Low-Energy-BLE-Shield-v2.1-p-1995.html (accessed on 8 October 2018).

- Face Detection using Haar Cascades. Available online: https://docs.opencv.org/3.4.2/d7/d8b/tutorial_py_face_detection.html (accessed on 8 October 2018).

- Lienhart, R.; Kuranov, A.; Pisarevsky, V. Empirical analysis of detection cascades of boosted classifiers for rapid object detection. In Proceedings of the Joint Pattern Recognition Symposium, Magdeburg, Germany, 10–12 September 2003; pp. 297–304.

- Bayer, B.E. Color Imaging Array. U.S. Patent 3,971,065, 20 July 1976.

- KentOptronics e-TransFlector™. Available online: http://www.kentoptronics.com/solutions.html (accessed on 19 November 2018).

- Sun, X.; Wu, P.; Hoi, S.C. Face detection using deep learning: An improved faster RCNN approach. Neurocomputing 2018, 299, 42–50.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. In Proceedings of the Neural Information Processing Systems Conference, Montreal, QC, Canada, 7–12 December 2015; pp. 91–99.

| Privacy Concern | Description |
|---|---|
| Facial recognition | Association and recognition of a bystander with a place or situation in which the bystander would not wish to be recognized by others |
| Social implications | Unawareness among a network of friends that data are being collected about them |
| Social media sync | Immediate publishing or sharing without the bystander’s knowledge |
| Users’ fears: surveillance and sousveillance | Continuous tracking of activities that might make a user/bystander feel that no matter what he or she does, everything is recorded |
| Speech disclosure | Capturing speech that a user or bystanders would not want recorded or shared |
| Surreptitious A/V recording | Recording audio or video without permission in a way that might affect bystanders |
| Location disclosure | Fear of inadvertently sharing a location with third parties that should not have access to the location information |

| Design Issue | Description | Rating |
|---|---|---|
| Usability | Is the method easy to use? | Low, Medium, High |
| Power consumption | Does the method require high power consumption? | Low, Medium, High |
| Effectiveness | Is the method effective at protecting bystanders? | Low, Medium, High |

| Method | Category | Usability | Power | Effectiveness | Limitations |
|---|---|---|---|---|---|
| BlindSpot: Capture-resistant environment [12] | Location (disabling, sensor saturation) | High | Low | Low | Using infrared (IR) light to disable CCD sensors may not work with the IR filters on modern cameras. |
| Disabling devices via infrared [13] | Location (disabling, broadcasting of commands) | High | Low | Medium | Method requires third-party devices to receive IR commands and to run software that disables their sensors; not all third-party devices may have this capability. |
| Disabling devices via Bluetooth [14] | Location (disabling, broadcasting of commands) | High | Medium | Medium | Method requires third-party devices to receive Bluetooth commands and to run software that disables their sensors; not all third-party devices may have this capability. |
| Virtual Walls [15] | Location (disabling, context-based) | Medium | High | Medium | Method requires bystanders to set up privacy rules that are accessed by third-party devices. Using the mobile device’s sensors to determine contexts may consume large amounts of power. |
| Privacy-restricted areas [16] | Location (disabling, context-based) | Medium | Medium | Medium | Method requires bystanders to set up privacy rules that are accessed by third-party devices. Proposed for unmanned aerial vehicles. |
| World-driven access control [30] | Location (disabling, context-based) | High | High | Medium | Method does not require bystanders’ intervention, but the device may not detect contexts correctly. |
| Sensor Tricorder [31] | Location (disabling, context-based) | High | High | Medium | Does not require bystanders’ intervention, but the device may not detect contexts correctly. Uses Quick Response (QR) codes to encode location privacy rules. |
| PlaceAvoider [32] | Location (disabling, context-based) | Medium | High | Medium | Requires machine learning algorithms to detect sensitive contexts, which may not be detected correctly. Devices must have software to detect contexts. Requires third-party user intervention to check whether areas are indeed sensitive. |
| NotiSense [17] | Obfuscation (bystander-based) | Medium | Low | Medium | Requires third-party devices to notify bystanders about possible privacy violations and requires the bystander to take action to protect his/her facial privacy. |
| PrivacyVisor [18] | Obfuscation (bystander-based) | High | High | Low | Uses IR in wearables worn by bystanders to obfuscate facial features. IR can be blocked using filters. |
| PrivacyVisor III [19] | Obfuscation (bystander-based) | High | Low | High | Uses reflective materials in wearables worn by bystanders to corrupt photos taken of them. |
| Perturbed eyeglass frames [20] | Obfuscation (bystander-based) | High | High | Medium | Uses patterns in glasses’ frames to confuse facial recognition algorithms. May be prone to re-identification. |
| Invisibility Glasses [33] | Obfuscation (bystander-based) | High | High | Low | Uses IR in wearables worn by bystanders to obfuscate facial features. Needs high power, and IR can be blocked using IR filters, which are available for mobile phones. |
| Privacy Protection in Google StreetView [34] | Obfuscation (device-based, default) | High | Low | High | This technology does not depend on the bystander but on the company collecting photos, which performs obfuscation in the cloud after the photos have been forwarded from the device that captured them. |
| ObscuraCam [21] | Obfuscation (device-based, selective) | High | High | Medium | This technology blurs faces in photos through a mobile application. Face blurring occurs on the mobile phone and, depending on the blurring technique, bystanders could be re-identified. |
| I-pic [22] | Obfuscation (device-based, collaborative) | Medium | High | Medium | Uses protocols between bystander and third-party devices to allow/deny blurring based on privacy rules. Face blurring occurs on the mobile phone and, depending on the blurring technique, bystanders could be re-identified. |
| PrivacyCamera [35] | Obfuscation (device-based, collaborative) | Medium | High | Medium | Uses protocols between the bystander and third-party device to allow/deny blurring based on privacy rules. Face blurring occurs on the mobile phone and, depending on the blurring technique, bystanders could be re-identified. |
| Respectful Cameras [36] | Obfuscation (device-based, selective) | High | Low | High | Bystanders use colored visual cues to inform the capturing device of privacy rules. Developed for fixed cameras. The face is fully hidden. |
| Do Not Capture [37] | Obfuscation (device-based, collaborative) | Medium | High | Medium | Uses protocols between the bystander and third-party device to allow/deny blurring based on privacy rules. Face blurring occurs on the mobile phone and, depending on the blurring technique, bystanders could be re-identified. |
| Invisible Light Beacons [38] | Obfuscation (device-based, selective) | High | High | Low | Bystanders use wearable IR beacons to inform capturing devices of privacy rules. Mobile devices with IR filters will ignore the signal sent by the beacons. |
| Negative face blurring [39] | Obfuscation (device-based, selective) | Medium | Low | Medium | Once photos are captured and stored, bystanders’ faces are blurred when the photos are presented through social networks, based on stored privacy rules. |

| Mobile Phone | Basic Camera Features (R: rear camera; F: front camera; IR: IR filter) | Face Detected? |
|---|---|---|
| Apple iPhone 6 Plus | R: 8 MP; F: 1.2 MP; IR: Yes | No |
| Apple iPhone 7 Plus | R: 12 MP; F: 7 MP; IR: Yes | No |
| Apple iPhone 8 | R: 12 MP; F: 7 MP; IR: Yes | No |
| Apple iPhone 8 Plus | R: 12 MP + 12 MP (dual cameras); F: 7 MP; IR: Yes | No |
| Apple iPhone X | R: 12 MP; F: 7 MP; IR: Yes | No |
| Samsung Galaxy S7 | R: 12 MP; F: 5 MP; IR: No | Yes |
| Samsung Galaxy S7 Edge | R: 12 MP; F: 5 MP; IR: No | No |
| Samsung Galaxy S8 | R: 12 MP; F: 8 MP; IR: No | No |
| Samsung Galaxy S9 | R: 12 MP; F: 8 MP; IR: No | No |
| Samsung Galaxy S9 Plus | R: 12 MP + 12 MP (dual cameras); F: 8 MP; IR: No | No |
| Samsung Note 7 | R: 12 MP; F: 5 MP; IR: No | No |
| Samsung Note 8 | R: 12 MP + 12 MP (dual cameras); F: 8 MP; IR: No | No |
| Asus ZenFone 3 Max | R: 16 MP; F: 8 MP; IR: No | No |
| Asus ZenFone 4 | R: 12 MP + 8 MP (dual cameras); F: 8 MP; IR: No | No |
| OnePlus 6 | R: 16 MP + 8 MP (dual cameras); F: 16 MP; IR: No | Yes |
| Motorola Moto G (2nd Gen) | R: 8 MP; F: 2 MP; IR: No | No |
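Assuming that “No” in the Face Detected? column means that FacePET prevented face detection in the photo taken with that phone, the 87.5% success rate reported for FacePET in the comparison with other methods appears to correspond to 14 of the 16 phones listed above. The short sketch below recomputes that figure from the table (transcribed by hand) and also splits it by whether the phone has an IR filter; the grouping is an illustrative reading of the table, not an analysis reported by the authors.

```python
from fractions import Fraction

# (phone, has IR filter, face detected?) transcribed from the table above.
RESULTS = [
    ("Apple iPhone 6 Plus", True, False),
    ("Apple iPhone 7 Plus", True, False),
    ("Apple iPhone 8", True, False),
    ("Apple iPhone 8 Plus", True, False),
    ("Apple iPhone X", True, False),
    ("Samsung Galaxy S7", False, True),
    ("Samsung Galaxy S7 Edge", False, False),
    ("Samsung Galaxy S8", False, False),
    ("Samsung Galaxy S9", False, False),
    ("Samsung Galaxy S9 Plus", False, False),
    ("Samsung Note 7", False, False),
    ("Samsung Note 8", False, False),
    ("Asus ZenFone 3 Max", False, False),
    ("Asus ZenFone 4", False, False),
    ("OnePlus 6", False, True),
    ("Motorola Moto G (2nd Gen)", False, False),
]


def blocked_rate(rows) -> Fraction:
    """Exact fraction of phones whose photos produced no face detection."""
    blocked = sum(1 for _, _, detected in rows if not detected)
    return Fraction(blocked, len(rows))


overall = blocked_rate(RESULTS)
with_ir_filter = blocked_rate([r for r in RESULTS if r[1]])
without_ir_filter = blocked_rate([r for r in RESULTS if not r[1]])

if __name__ == "__main__":
    print(f"overall: {overall} ({float(overall):.1%})")  # overall: 7/8 (87.5%)
    print(f"with IR filter: {with_ir_filter}, without: {without_ir_filter}")
```

Using `Fraction` keeps the ratios exact (14/16 reduces to 7/8), so the percentage is formatted only at print time. Both detections occurred on phones listed without an IR filter.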

| Method | Differences | Percentage of Successfully Blocked/De-Identified Faces |
|---|---|---|
| PrivacyVisor [18] | Method uses IR light in goggles and does not work if IR filters are used. The method does not allow a bystander to automatically give consent to third parties to take photos in which the bystander can be identified. | 100% (assuming a camera without an IR filter) |
| PrivacyVisor III [19] | Method uses visible light through reflective/absorbing material in goggles to block facial features. It does not need power. The method does not allow a bystander to automatically give consent to third parties to take photos in which the bystander can be identified. | Between 90% and 100% |
| Perturbed eyeglass frames [20] | Method uses patterns in glasses’ frames. The prototype was tested by overlaying the goggle patterns on photos; no physical device was developed. This approach targets face recognition rather than face detection. The method does not allow a bystander to automatically give consent to third parties to take photos in which the bystander can be identified. | 80% |
| Invisibility Glasses [33] | Method uses IR light in goggles and does not work if IR filters are used. This method does not allow a bystander to automatically and selectively choose who can take photos of him or her. | No accuracy reported |
| FacePET (this work) | Method uses visible light in goggles to block facial features and also provides the bystander wearing FacePET a way to automatically give consent to third parties to take photos in which the bystander can be identified. | 87.5% |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Perez, A.J.; Zeadally, S.; Matos Garcia, L.Y.; Mouloud, J.A.; Griffith, S. FacePET: Enhancing Bystanders’ Facial Privacy with Smart Wearables/Internet of Things. Electronics 2018, 7, 379. https://doi.org/10.3390/electronics7120379
