1. Introduction

In recent years, with the sustained advancement of artificial intelligence technology, the demand of application services for computing and storage resources has surged. Cloud computing, as a pivotal technical paradigm, offers robust support for these services and enables efficient handling of data-intensive tasks such as storage, computation and analysis. In particular, the elastic scalability and high concurrency of cloud computing platforms show considerable potential in critical scenarios such as AI model training and large-scale big data processing. Optimizing resource utilization is therefore crucial to improving the energy efficiency of cloud data centers [1]. As cloud computing becomes widely used, its security and privacy protection have also become an important research direction in the field.

With the development of cloud computing and virtualization technology, container technology emerged [2]. As an efficient, lightweight virtualization method, container technology is widely favored by industry for its rapid start-up, efficient operation and convenient environment configuration. Docker has become one of the most representative container platforms and plays an important role in cloud computing, microservices and other fields [3]. Docker-based microservices use containers as the basic unit of resource partitioning and scheduling [4]; containers encapsulate the entire software runtime environment and meet the requirements of developers and system administrators for building, publishing and running distributed applications [5]. However, the rapid development of container technology has been accompanied by numerous security challenges. For instance, weaknesses in inter-service communication between containers, such as inadequate encryption, unauthorized access or data leakage, may significantly impede the wider adoption of container technology.

In the traditional client/server (C/S) architecture, the sender first uploads data to a server, and the receiver must connect to this third-party server to obtain the data. In a point-to-point architecture, by contrast, files are transmitted directly between the two endpoints; because this approach is simple and effective [6], it is widely used in network communication. Cloud computing provides scalable application implementations by sharing Internet-based storage and computing resources. However, its ubiquitous nature brings security and privacy risks to sensitive data [7]. Ensuring the security, integrity and reliability of point-to-point data transmission is therefore a key issue in the development of cloud computing.

In current security research on cloud computing, cryptography is one of the most common means of securing cloud computing [8]. Through encryption, identity authentication, data integrity verification, key management and related techniques, cryptography can secure communication in the cloud computing environment, establish trust between users and services, and prevent data leakage, tampering and abuse. In previous studies, some scholars used homomorphic encryption to protect data in cloud computing and showed that it can address security problems in cloud environments [9]. The study in [10] proposes identity-based encryption with equality test supporting responsibility authorization in cloud computing, and gives concrete constructions with formal security proofs in the random oracle model. Microservices have become a common architectural model for building cloud-native applications, and building microservices with container technology enables robust and convenient deployment in cloud-native environments [11]. Cryptography therefore occupies a pivotal position in safeguarding cloud-native architectures. A critical open issue is how to leverage the capabilities of cloud computing both to protect users' private information effectively and to improve the computing efficiency of mobile terminals. It is equally essential to use encrypted communication to guarantee the security of data interactions, thereby improving the overall experience of secure microservice communication in cloud environments.

To address the security and efficiency challenges of microservice communication in the cloud environment, this paper proposes a parallel encryption and decryption framework based on containerized deployment. Through comparative experiments designed with the controlled-variable method, the performance characteristics of typical encryption algorithms in the cloud environment are systematically explored. Four encryption algorithms, AES, DES, SM4 and Ascon, are used to encrypt and decrypt randomly generated bit stream data, and key indicators such as encryption and decryption time, memory usage and ciphertext expansion are collected and analyzed. The aim is to identify the most suitable approach to secure and efficient communication in microservice architectures in cloud environments. Through real-time data acquisition and statistical analysis, the performance differences between the algorithms under dynamic cloud resource allocation are quantified, providing a theoretical basis and practical reference for formulating security strategies for microservice communication in cloud-native scenarios, and laying a foundation for subsequent cloud security research.

2. Basic Knowledge

This section briefly introduces the block ciphers AES [12], DES [13] and SM4 [14], the lightweight encryption standard Ascon [15], and containerization technology.

2.1. AES Encryption Algorithm

- (1)

The plaintext block length of AES is fixed at 128 bits, and the key length can be 128, 192 or 256 bits; this paper takes a 128-bit key as an example. AES-128 first applies an initial transformation (adding the initial round key) to the plaintext, then performs 9 rounds of the main loop followed by 1 final round, and finally obtains the ciphertext. The only difference between the final round and the main-loop rounds is that the final round omits column mixing (MixColumns), the third step of each main-loop round.

- (2)

Round key matrix

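Initially there is only one key matrix, whose four columns are $W[0], W[1], W[2], W[3]$. In each of the following 10 rounds (the 9 main-loop rounds and the final round), four new key columns are generated, and $W[4r], W[4r+1], W[4r+2], W[4r+3]$ become the round key matrix of round $r$. If the column index $i$ is not a multiple of 4, the column is determined by the following equation:

$$W[i] = W[i-4] \oplus W[i-1]$$

If $i$ is a multiple of 4, the column is determined by the following equation:

$$W[i] = W[i-4] \oplus T(W[i-1])$$

where $T$ rotates the word cyclically left by one byte, substitutes each byte through the S-box, and XORs the result with the round constant $\mathrm{Rcon}[i/4]$.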
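The key-expansion recurrence above can be illustrated with a short Python sketch for AES-128. It is a minimal example written for this section, not the implementation used in the experiments, and the helper names (`gf_mul`, `build_sbox`, `sub_word`, `expand_key`) are hypothetical. To avoid typing in the 256-entry S-box, the sketch derives it from the multiplicative inverse in GF(2^8) followed by the AES affine transformation; `expand_key` then applies $W[i] = W[i-4] \oplus W[i-1]$, inserting $T(\cdot)$ whenever $i$ is a multiple of 4.

```python
# Illustrative AES-128 key expansion; all helper names are hypothetical.


def gf_mul(a: int, b: int) -> int:
    """Multiply two bytes in GF(2^8) modulo the AES polynomial x^8 + x^4 + x^3 + x + 1."""
    result = 0
    while b:
        if b & 1:
            result ^= a
        a <<= 1
        if a & 0x100:
            a ^= 0x11B
        b >>= 1
    return result


def build_sbox() -> list[int]:
    """Derive the AES S-box: multiplicative inverse, then the affine transformation."""
    sbox = []
    for x in range(256):
        inv = 0
        if x:
            inv = 1
            for _ in range(254):  # x^254 is the inverse of x in GF(2^8)
                inv = gf_mul(inv, x)
        s = inv
        for shift in range(1, 5):  # XOR with four left rotations of inv
            s ^= ((inv << shift) | (inv >> (8 - shift))) & 0xFF
        sbox.append(s ^ 0x63)
    return sbox


SBOX = build_sbox()


def sub_word(word: int) -> int:
    """Apply the S-box to each byte of a 32-bit word."""
    return int.from_bytes(bytes(SBOX[b] for b in word.to_bytes(4, "big")), "big")


def expand_key(key: bytes) -> list[int]:
    """Expand a 16-byte key into the 44 columns W[0..43] (11 round key matrices)."""
    if len(key) != 16:
        raise ValueError("AES-128 needs a 16-byte key")
    w = [int.from_bytes(key[4 * i:4 * i + 4], "big") for i in range(4)]
    rcon = 0x01
    for i in range(4, 44):
        temp = w[i - 1]
        if i % 4 == 0:
            # T(.): rotate left by one byte, substitute bytes, XOR the round constant
            temp = ((temp << 8) | (temp >> 24)) & 0xFFFFFFFF
            temp = sub_word(temp) ^ (rcon << 24)
            rcon = gf_mul(rcon, 0x02)
        w.append(w[i - 4] ^ temp)
    return w
```

With the example key from FIPS-197, `expand_key(bytes.fromhex("2b7e151628aed2a6abf7158809cf4f3c"))[4]` evaluates to `0xa0fafe17`, the first column of the round-1 key in that standard's key-expansion example.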

2.2. DES Encryption Algorithm

DES is a symmetric block cipher. Its key is 64 bits long, of which 56 bits take part in the computation and the remaining 8 bits are parity bits. DES encryption is built on a Feistel network with 16 rounds of the same transformation. The whole process can be divided into the following key steps:

- (1)

Initial permutation (IP): The 64-bit input plaintext is rearranged according to a fixed initial permutation table, which reorders the plaintext bits in preparation for the subsequent round transformations.

- (2)

Round transformation (16 rounds of iteration): This is the core of DES encryption. Each round combines the data with a sub-key and processes it in the following steps:

Data segmentation: The 64-bit data are divided into two 32-bit halves, denoted $L_{n-1}$ (left half) and $R_{n-1}$ (right half), where $n$ is the current round, starting from 1. Each round computes $L_n = R_{n-1}$ and $R_n = L_{n-1} \oplus f(R_{n-1}, K_n)$, where $K_n$ is the 48-bit sub-key of round $n$ and the round function $f$ consists of the following four steps.

Expansion permutation (E-box): $R_{n-1}$ is expanded from 32 bits to 48 bits through the expansion table, matching the 48-bit sub-key and increasing the diffusion of the data.

XOR operation: The expanded 48-bit data are XORed with the 48-bit sub-key of the current round, producing a 48-bit result.

S-box substitution: The XOR result is fed into 8 S-boxes; each S-box takes 6 bits of input and produces 4 bits of output, so the 48 bits are compressed to 32 bits.

P-box permutation: The 32-bit output of the S-boxes is permuted (P-box) to further rearrange the bits and enhance diffusion.

- (3)

Inverse initial permutation ($IP^{-1}$): After 16 rounds of iteration, the two halves are concatenated in swapped order as $R_{16} L_{16}$, rearranged according to the inverse initial permutation table, and output as the 64-bit ciphertext.
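The Feistel structure described above, $L_n = R_{n-1}$ and $R_n = L_{n-1} \oplus f(R_{n-1}, K_n)$, can be sketched in Python. This is not DES: the expansion table E is generated from its regular row pattern, but the eight DES S-boxes, the P-box, IP and $IP^{-1}$, and the key schedule are omitted, and the S-box stage is replaced by a hypothetical toy compression (`toy_compress`). The 16 sub-keys are arbitrary 48-bit values chosen for illustration. What the sketch does show is why a Feistel cipher decrypts by running the same rounds with the sub-keys in reverse order.

```python
# Illustrative Feistel sketch, NOT full DES; toy_compress and the sub-keys are hypothetical.
import random

MASK32 = 0xFFFFFFFF

# DES expansion table E: output bit k takes input bit E_TABLE[k] (1-indexed, bit 1 = MSB).
# Row j is 4j, 4j+1, ..., 4j+5 wrapped into 1..32, so row 0 is 32, 1, 2, 3, 4, 5.
E_TABLE = [((4 * j + k - 1) % 32) + 1 for j in range(8) for k in range(6)]


def expand(r: int) -> int:
    """Expansion permutation: 32-bit half block -> 48 bits."""
    out = 0
    for pos in E_TABLE:
        out = (out << 1) | ((r >> (32 - pos)) & 1)
    return out


def toy_compress(x48: int) -> int:
    """Hypothetical stand-in for the 8 DES S-boxes: eight 6-bit groups -> eight 4-bit outputs."""
    out = 0
    for i in range(8):
        g = (x48 >> (42 - 6 * i)) & 0x3F
        out = (out << 4) | ((g ^ (g >> 2) ^ 0x5) & 0xF)
    return out


def round_function(r: int, subkey48: int) -> int:
    """f(R, K): expansion, XOR with the 48-bit sub-key, then the toy compression."""
    return toy_compress(expand(r) ^ subkey48)


def feistel(block64: int, subkeys: list[int]) -> int:
    """Run one Feistel round per sub-key and output R_16 || L_16 (the final swap)."""
    left, right = block64 >> 32, block64 & MASK32
    for k in subkeys:
        left, right = right, left ^ round_function(right, k)
    return (right << 32) | left


rng = random.Random(2024)
subkeys = [rng.getrandbits(48) for _ in range(16)]  # illustrative, not a DES key schedule
plaintext = 0x0123456789ABCDEF
ciphertext = feistel(plaintext, subkeys)
# Decryption is the same network with the sub-keys in reverse order.
assert feistel(ciphertext, subkeys[::-1]) == plaintext
```

Note that `round_function` never has to be inverted: each round only XORs its output into one half, which is why DES can use non-invertible S-boxes that compress 6 bits to 4.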

2.3. SM4 Encryption Algorithm

- (1)

SM4 encrypts a 128-bit plaintext block under a 128-bit key using a 32-round iterative structure. Each round uses a 32-bit round key, so 32 round keys are used in total.

- (2)

Round function: Assume that the input of the round function $F$ is four 32-bit words $(X_0, X_1, X_2, X_3)$, 128 bits in total, that the round key $rk$ is a 32-bit word, and that the output is a 32-bit word. The S-box is a byte-level nonlinear substitution component. The nonlinear transformation $\tau$ is a word-level nonlinear substitution composed of four S-boxes in parallel; for an input word $A = (a_0, a_1, a_2, a_3)$,

$$\tau(A) = (\mathrm{Sbox}(a_0), \mathrm{Sbox}(a_1), \mathrm{Sbox}(a_2), \mathrm{Sbox}(a_3)).$$

The linear transformation component $L$ operates on words; its input and output are both 32-bit words:

$$L(B) = B \oplus (B \lll 2) \oplus (B \lll 10) \oplus (B \lll 18) \oplus (B \lll 24).$$

The composite transformation $T$ consists of the nonlinear transformation $\tau$ followed by the linear transformation $L$, and also processes words. For an input word $X$, $\tau$ is applied first and then $L$, which is expressed as

$$T(X) = L(\tau(X)).$$

Combining $\tau$ and $L$, the round function is

$$F(X_0, X_1, X_2, X_3, rk) = X_0 \oplus T(X_1 \oplus X_2 \oplus X_3 \oplus rk),$$

so that round $i$ ($i = 0, 1, \ldots, 31$) computes $X_{i+4} = X_i \oplus T(X_{i+1} \oplus X_{i+2} \oplus X_{i+3} \oplus rk_i)$, and the ciphertext is $(X_{35}, X_{34}, X_{33}, X_{32})$.
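The round function $F$ and the transforms $\tau$, $L$ and $T$ can be sketched in Python as follows. The linear transform $L$, the 32-round recurrence and the final reverse ordering follow the SM4 structure described above, but the real 256-entry SM4 S-box and the SM4 key schedule (with its FK and CK constants) are not reproduced: `TOY_SBOX` is a hypothetical byte permutation and the round keys are arbitrary, so the output is not SM4 ciphertext. Because $F$ only XORs $T(\cdot)$ into $X_i$, decryption is the same procedure with the round keys reversed, whichever S-box is plugged in.

```python
# Illustrative SM4-style round structure; TOY_SBOX and the round keys are hypothetical.
import random

MASK32 = 0xFFFFFFFF

# Hypothetical byte permutation standing in for the real SM4 S-box.
TOY_SBOX = [(7 * b + 3) % 256 for b in range(256)]


def rotl32(x: int, n: int) -> int:
    return ((x << n) | (x >> (32 - n))) & MASK32


def tau(a: int) -> int:
    """Nonlinear transform: apply the S-box to each of the four bytes of a word."""
    return int.from_bytes(bytes(TOY_SBOX[b] for b in a.to_bytes(4, "big")), "big")


def linear_l(b: int) -> int:
    """L(B) = B ^ (B <<< 2) ^ (B <<< 10) ^ (B <<< 18) ^ (B <<< 24)."""
    return b ^ rotl32(b, 2) ^ rotl32(b, 10) ^ rotl32(b, 18) ^ rotl32(b, 24)


def t_transform(x: int) -> int:
    """Composite transform T(X) = L(tau(X))."""
    return linear_l(tau(x))


def crypt(block: tuple[int, ...], round_keys: list[int]) -> tuple[int, ...]:
    """X_{i+4} = X_i ^ T(X_{i+1} ^ X_{i+2} ^ X_{i+3} ^ rk_i), then output in reverse order."""
    x = list(block)
    for rk in round_keys:
        x.append(x[-4] ^ t_transform(x[-3] ^ x[-2] ^ x[-1] ^ rk))
    return tuple(reversed(x[-4:]))  # (X35, X34, X33, X32) after 32 rounds


rng = random.Random(7)
round_keys = [rng.getrandbits(32) for _ in range(32)]  # illustrative, not the SM4 key schedule
plain = (0x01234567, 0x89ABCDEF, 0xFEDCBA98, 0x76543210)
cipher = crypt(plain, round_keys)
assert crypt(cipher, round_keys[::-1]) == plain  # decryption: same rounds, keys reversed
```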

2.4. Lightweight Encryption Standard Ascon

Ascon, designed by Dobraunig et al. [15], is a family of permutation-based authenticated encryption with associated data (AEAD) algorithms. The core permutation of Ascon operates on a 320-bit state arranged as five 64-bit words, defined as $S = x_0 \,\|\, x_1 \,\|\, x_2 \,\|\, x_3 \,\|\, x_4$. The state at the input of round $r$ is denoted by $S^r = (x_0^r, x_1^r, x_2^r, x_3^r, x_4^r)$, and $(y_0^r, y_1^r, y_2^r, y_3^r, y_4^r)$ denotes the state after the $p_S$ layer. We use $x_i^r[j]$ (and $y_i^r[j]$, respectively) to denote the $j$-th bit of $x_i^r$ (and $y_i^r$, respectively).

Each round $p = p_L \circ p_S \circ p_C$ consists of three steps, $p_C$, $p_S$ and $p_L$, which are described in detail below.

Constant addition ($p_C$): In each round, an 8-bit round constant is added (XORed) to bits 56–63 of word $x_2$;

Substitution layer ($p_S$): A 5-bit S-box is applied to each of the 64 columns. Let the input of the S-box be $(x_0, x_1, x_2, x_3, x_4)$ and the output be $(y_0, y_1, y_2, y_3, y_4)$. The algebraic normal form of the S-box is defined by Equation (5), which combines product terms and linear terms of the input bits. For example,

$$y_0 = x_4 x_1 \oplus x_3 \oplus x_2 x_1 \oplus x_2 \oplus x_1 x_0 \oplus x_1 \oplus x_0.$$

Linear diffusion layer ($p_L$): A linear operation $\Sigma_i$ is applied to each 64-bit word $x_i$ (implemented as an XOR of the word with two cyclic right rotations of itself). Take $\Sigma_0$ as an example:

$$x_0 \leftarrow \Sigma_0(x_0) = x_0 \oplus (x_0 \ggg 19) \oplus (x_0 \ggg 28).$$

The other linear operations follow a similar form but with different rotation amounts: $\Sigma_1$ uses 61 and 39 bits, $\Sigma_2$ uses 1 and 6 bits, $\Sigma_3$ uses 10 and 17 bits, and $\Sigma_4$ uses 7 and 41 bits.
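The three layers can be combined into a sketch of the Ascon permutation on five 64-bit words. It follows the bitsliced S-box formulation used in the Ascon reference code rather than a lookup table; the function names are hypothetical, and only the permutation is covered, not the AEAD mode (initialization, associated data, finalization and tag). The round constants $c_i = ((\mathtt{0xF} - i) \ll 4) \,|\, i$ for $i = 0, \ldots, 11$ are XORed into the low byte of $x_2$, which we take to be bits 56–63 in the bit numbering above (bit 0 being the most significant); a $b$-round permutation uses the last $b$ constants.

```python
# Illustrative sketch of the Ascon permutation; function names are hypothetical.
MASK64 = (1 << 64) - 1
ROUND_CONSTANTS = [((0xF - i) << 4) | i for i in range(12)]  # 0xf0, 0xe1, ..., 0x4b
ROTATIONS = [(19, 28), (61, 39), (1, 6), (10, 17), (7, 41)]  # Sigma_0 .. Sigma_4


def rotr(x: int, n: int) -> int:
    """Cyclic right rotation of a 64-bit word."""
    return ((x >> n) | (x << (64 - n))) & MASK64


def p_c(s: list[int], c: int) -> None:
    """Constant addition: XOR the 8-bit round constant into the low byte of x2."""
    s[2] ^= c


def p_s(s: list[int]) -> None:
    """Substitution layer: the 5-bit S-box on all 64 columns at once (bitsliced)."""
    x0, x1, x2, x3, x4 = s
    x0 ^= x4
    x4 ^= x3
    x2 ^= x1
    t0, t1, t2, t3, t4 = ~x0 & x1, ~x1 & x2, ~x2 & x3, ~x3 & x4, ~x4 & x0
    x0 ^= t1
    x1 ^= t2
    x2 ^= t3
    x3 ^= t4
    x4 ^= t0
    x1 ^= x0
    x0 ^= x4
    x3 ^= x2
    x2 = ~x2 & MASK64
    s[:] = [x0, x1, x2, x3, x4]


def p_l(s: list[int]) -> None:
    """Linear diffusion layer: x_i <- x_i ^ (x_i >>> a_i) ^ (x_i >>> b_i)."""
    for i, (a, b) in enumerate(ROTATIONS):
        s[i] ^= rotr(s[i], a) ^ rotr(s[i], b)


def permutation(state: list[int], rounds: int = 12) -> list[int]:
    """Apply `rounds` rounds of p = p_L o p_S o p_C and return a new state."""
    s = list(state)
    for c in ROUND_CONSTANTS[12 - rounds:]:
        p_c(s, c)
        p_s(s)
        p_l(s)
    return s


def sbox5(v: int) -> int:
    """Evaluate the S-box on a single 5-bit column (x0 is the most significant bit)."""
    s = [(v >> (4 - i)) & 1 for i in range(5)]
    p_s(s)
    return sum((s[i] & 1) << (4 - i) for i in range(5))
```

`sbox5` is a convenient way to check the bitsliced code against the algebraic normal form: for every 5-bit input, the top output bit should equal the $y_0$ expression given above.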

2.5. Containerization and Microservices

Because of their fast start-up, high operating efficiency and low resource usage, containers are widely used in cloud computing scenarios and have become a basic supporting technology of cloud computing platforms [16]. Containerization is a lightweight virtualization technology: it packages applications and their dependencies into independent, standardized units and uses the namespaces and control groups provided by the operating system kernel to isolate and manage resources. Compared with traditional virtual machines, it achieves higher resource utilization and faster start-up. A typical tool is Docker, a widely used open-source containerization platform that simplifies building, deploying and managing containers. By providing a standardized way to bundle applications with their dependencies, it has played an important role in the widespread adoption of container technology [17] and significantly improves development and deployment efficiency and system scalability.

The essential difference between containers and traditional virtual machines lies in the level of virtualization. A traditional virtual machine must emulate a complete hardware layer (for example CPU, memory and hard disk) and run an independent operating system on it, whereas container technology is operating-system-level virtualization, which uses the namespace and control group features of the operating system kernel to create an independent runtime environment for each application. Compared with virtual machines, containers have the following advantages:

- (1)

Small size, fast start-up speed.

- (2)

Low resource consumption, since no independent operating system kernel is required.

- (3)

Strong isolation: a high degree of security isolation can be achieved between applications [18].

At the same time, microservices are characterized by fine-grained division, encapsulated service interfaces, lightweight communication, module autonomy, independent updates and easy scaling, while a container is a cross-platform, independently running micro execution unit; this makes the container a good carrier for running microservices. As a logical abstraction of physical resources, a container has low resource usage and fast operation, and is suitable for applications with sudden load changes [19]. Containers are therefore the preferred technology for running microservices.

3. Construction of the Microservice Communication Framework

In traditional container encryption, serial chain encryption schemes have long been the mainstream solution for encrypting plaintext data. Owing to their intuitive workflow, clear execution logic and broad compatibility, these schemes effectively meet encryption and decryption requirements across diverse data scales. By contrast, the parallel chain processing framework proposed in this paper retains the advantages of the traditional serial architecture while substantially reducing encryption and decryption latency and significantly improving encryption throughput. At the same time, while the original security level is maintained, resource overhead (e.g., memory) is further reduced through appropriate algorithm configuration, so that performance and security are improved together.

3.1. The Serial Microservice Communication Framework

In the containerized single-threaded environment, a strictly serial architecture is employed so that the entire encryption and decryption process executes in order.

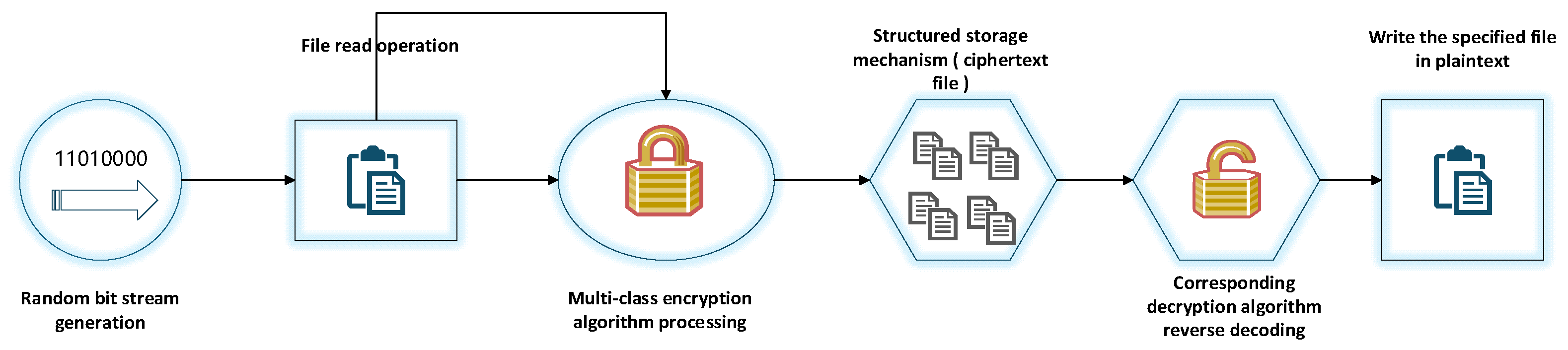

- (1)

In the initial stage of the process, a scenario-driven random number generator produces five sets of bit streams of different lengths (640, 1280, 1920, 2560 and 3200 bits).

- (2)

After the data are read into the container, each of the four encryption algorithms encrypts the five data sets, and the encryption results are written to new ciphertext files through a structured storage mechanism.

- (3)

Decryption follows the reverse serial logic: after data are read from the ciphertext file, the corresponding decryption algorithm is applied to recover the plaintext, and the restored plaintext is finally written to a new file to close the loop.

The entire chain is strictly ordered: reading, encryption, decryption and storage form a serial processing chain from start to finish, which provides highly controllable management of the encryption life cycle in a single-threaded container. The whole flow chart is shown in Figure 1.
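The serial chain of Figure 1 (generate, read, encrypt, write, read back, decrypt, write) can be sketched in Python as below. The real experiments use AES, DES, SM4 and Ascon; since none of these is in the Python standard library, the sketch uses a hypothetical placeholder stream cipher (`toy_encrypt`, an XOR with a SHA-256 counter keystream) for every algorithm name, so all four names produce the same ciphertext and only the control flow is representative. The file names, the seed and the use of `time.perf_counter` for timing are likewise assumptions.

```python
# Illustrative serial pipeline; toy_encrypt, run_serial and the file names are hypothetical.
import hashlib
import random
import tempfile
import time
from pathlib import Path

SIZES_BITS = [640, 1280, 1920, 2560, 3200]
ALGORITHMS = ["AES", "DES", "SM4", "Ascon"]


def toy_encrypt(key: bytes, data: bytes, nonce: int = 0) -> bytes:
    """Placeholder cipher (NOT a real algorithm): XOR with a SHA-256 counter keystream."""
    stream = b""
    counter = 0
    while len(stream) < len(data):
        block = key + nonce.to_bytes(8, "big") + counter.to_bytes(8, "big")
        stream += hashlib.sha256(block).digest()
        counter += 1
    return bytes(d ^ s for d, s in zip(data, stream))


toy_decrypt = toy_encrypt  # XOR stream cipher: decryption is the same operation


def run_serial(workdir: Path, key: bytes, seed: int = 1) -> dict:
    """Process every (algorithm, size) pair strictly one after another."""
    rng = random.Random(seed)
    timings = {}
    for bits in SIZES_BITS:
        plain_path = workdir / f"plain_{bits}.bin"
        plain_path.write_bytes(rng.randbytes(bits // 8))  # step (1): generate
        for alg in ALGORITHMS:
            data = plain_path.read_bytes()  # step (2): read, encrypt, write
            t0 = time.perf_counter()
            ct = toy_encrypt(key, data)
            t_enc = time.perf_counter() - t0
            cipher_path = workdir / f"cipher_{alg}_{bits}.bin"
            cipher_path.write_bytes(ct)
            ct_in = cipher_path.read_bytes()  # step (3): read, decrypt, write
            t0 = time.perf_counter()
            pt = toy_decrypt(key, ct_in)
            t_dec = time.perf_counter() - t0
            (workdir / f"restored_{alg}_{bits}.bin").write_bytes(pt)
            timings[(alg, bits)] = (t_enc, t_dec)
    return timings


with tempfile.TemporaryDirectory() as tmp:
    results = run_serial(Path(tmp), key=b"k" * 16)
    for (alg, bits), (t_enc, t_dec) in sorted(results.items()):
        print(f"{alg:5s} {bits:5d} bit  enc {t_enc * 1e6:8.1f} us  dec {t_dec * 1e6:8.1f} us")
```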

3.2. The Parallel Microservice Communication Framework

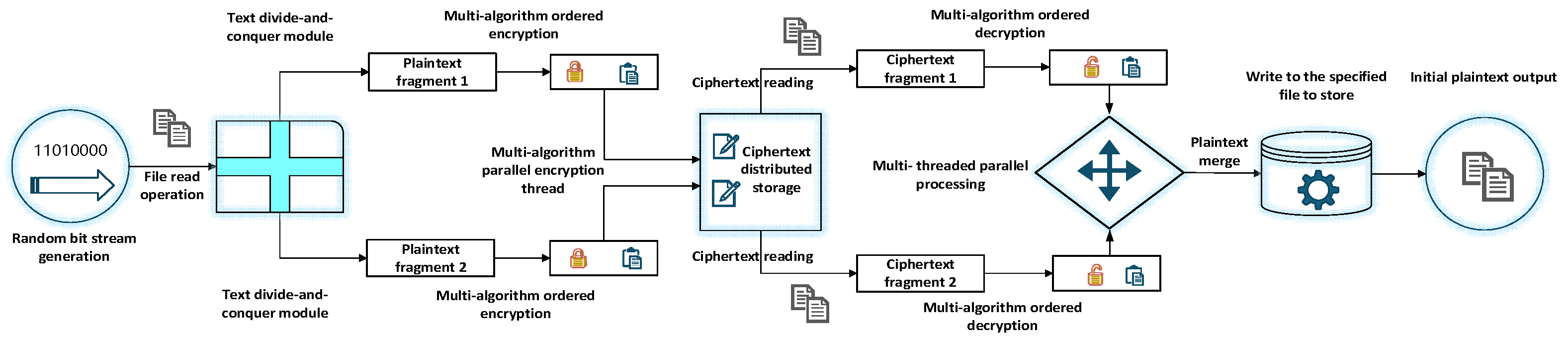

The parallel encryption architecture constructed in the cloud environment accelerates the entire process of bit stream encryption and decryption through a divide-and-conquer strategy. Its core consists of four cooperating modules: block processing, parallel encryption, parallel decryption and data fusion.

- (1)

In the start-up phase, a scenario-driven random number generation engine produces five sets of bit streams of different lengths (640, 1280, 1920, 2560 and 3200 bits).

- (2)

In the block processing stage, the segmentation module splits the plaintext into two independent data units, and the thread pool allocates two core threads, each bound to one of the two segments and to the encryption algorithm under test (one of the four).

- (3)

In the parallel encryption stage, each bound core thread performs the encryption computation, with context-switching time kept at the microsecond level; after encryption is complete, the ciphertext is written to a distributed storage node, and an atomic variable is updated to mark the task state.

- (4)

In the parallel decryption stage, the thread pool allocates two decryption threads, each of which reads its ciphertext from a dedicated storage node and waits at a synchronization barrier after completing the authentication check.

- (5)

In the data fusion stage, the two plaintext fragments are reassembled in order into the complete original data through a fusion buffer and finally written to the target storage unit to close the loop.

Through this thread-level parallel design, the architecture significantly improves the throughput of bit stream encryption and decryption in the cloud environment. In addition, because different block ciphers suit different cloud scenarios, a dynamic algorithm-selection mechanism is constructed to match efficiency to scenario requirements. The overall architecture is shown in Figure 2.
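The block-processing, parallel-encryption, barrier and data-fusion steps can be sketched with a two-thread pool in Python. As before, `toy_encrypt` is a hypothetical SHA-256 keystream placeholder for the real algorithms, and giving each segment its own nonce is our assumption so that the two halves never share a keystream. Each stage reports the time of its slower thread, mirroring how the parallel timings in Section 4 are recorded. In CPython the global interpreter lock keeps pure-Python cipher code from running on two cores at once, so this sketch shows the structure rather than the speed-up; native cipher libraries that release the lock, or separate processes, would be needed for real parallelism.

```python
# Illustrative parallel pipeline; toy_encrypt, timed and parallel_roundtrip are hypothetical.
import hashlib
import time
from concurrent.futures import ThreadPoolExecutor


def toy_encrypt(key: bytes, data: bytes, nonce: int) -> bytes:
    """Placeholder cipher (NOT a real algorithm): XOR with a SHA-256 counter keystream.
    Applying it twice with the same key and nonce restores the input."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        counter = (offset // 32).to_bytes(8, "big")
        pad = hashlib.sha256(key + nonce.to_bytes(8, "big") + counter).digest()
        out += bytes(d ^ p for d, p in zip(data[offset:offset + 32], pad))
    return bytes(out)


def timed(fn, *args):
    """Run fn(*args) and return (result, elapsed seconds)."""
    t0 = time.perf_counter()
    result = fn(*args)
    return result, time.perf_counter() - t0


def parallel_roundtrip(key: bytes, plaintext: bytes, workers: int = 2):
    # Block processing: split the plaintext into `workers` contiguous segments.
    step = -(-len(plaintext) // workers)  # ceiling division
    segments = [plaintext[i * step:(i + 1) * step] for i in range(workers)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Parallel encryption: segment i uses nonce i.
        enc = list(pool.map(lambda i: timed(toy_encrypt, key, segments[i], i), range(workers)))
        # Waiting for every result before continuing plays the role of the barrier.
        dec = list(pool.map(lambda i: timed(toy_encrypt, key, enc[i][0], i), range(workers)))
    ciphertexts = [ct for ct, _ in enc]
    # Only the slower thread of each stage is recorded.
    enc_time = max(t for _, t in enc)
    dec_time = max(t for _, t in dec)
    # Data fusion: pool.map preserves input order, so fragments rejoin in sequence.
    restored = b"".join(pt for pt, _ in dec)
    return restored, ciphertexts, enc_time, dec_time


message = bytes(range(256)) * 2
restored, ciphertexts, enc_time, dec_time = parallel_roundtrip(b"k" * 16, message)
print(restored == message, f"enc {enc_time * 1e6:.1f} us, dec {dec_time * 1e6:.1f} us")
```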

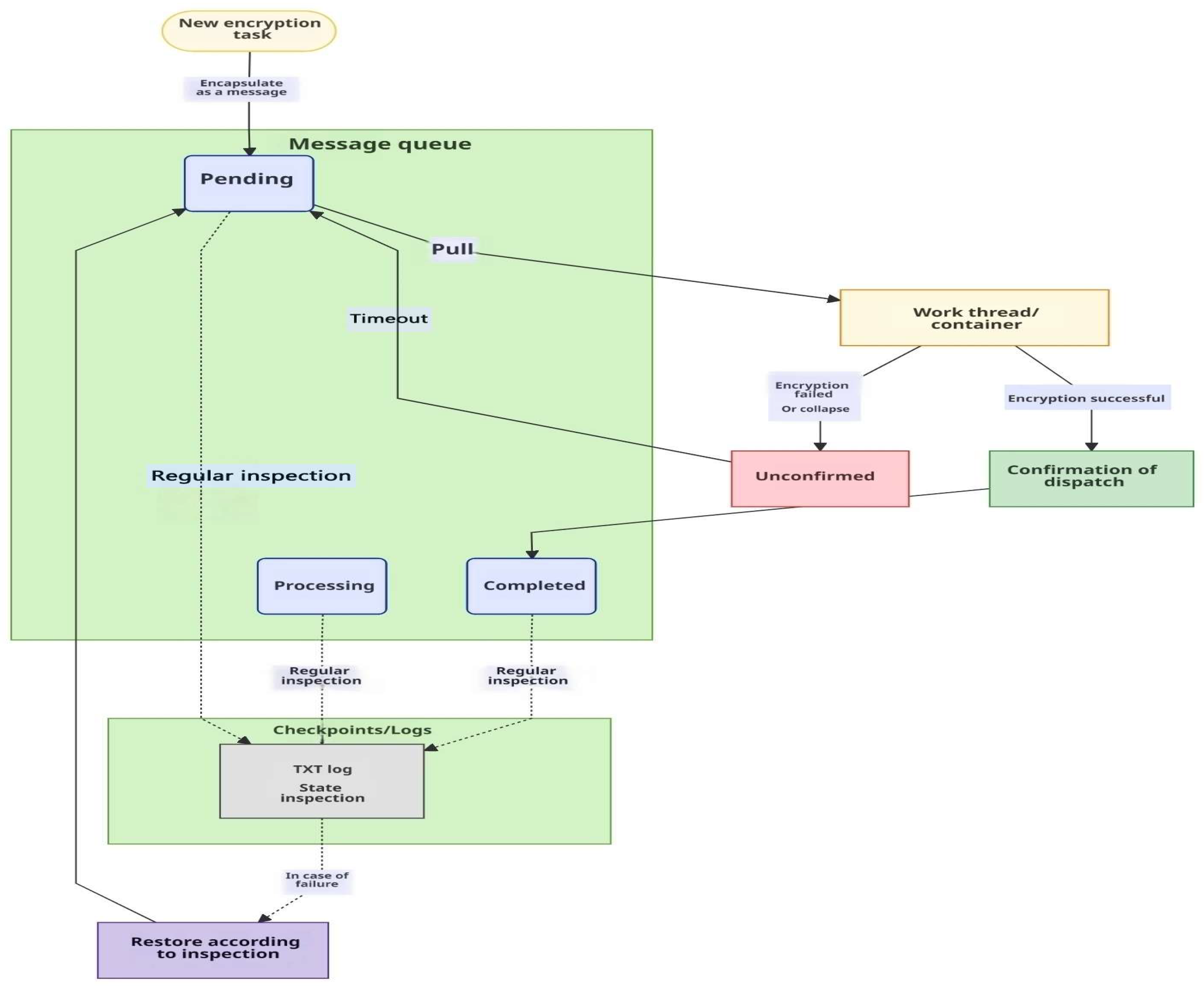

In the framework we designed, each encryption task is encapsulated as a separate message body containing a data block, a sequence number and verification information, and is stored in a message queue. A thread obtains a task from the queue and sends an acknowledgment to the queue after successful encryption, whereupon the message is marked as processed. If a thread or container fails during processing, the original task message is not deleted from the queue because no acknowledgment has been received; after a timeout, it is automatically redelivered to another available thread for retry. In addition, the current state of the message queue (pending, processing or processed) is periodically written as a checkpoint to a TXT log file to provide clear state tracking. With this mechanism, when a failure occurs, the system can trace back through the logged queue state and resume from where it stopped, avoiding data loss or inconsistent encryption results. The whole thread flow chart is shown in Figure 3.
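The acknowledgment, timeout-based redelivery and checkpoint mechanism can be sketched as an in-memory queue. `Task` and `TaskQueue` are hypothetical names, not the API of any particular message broker; the current time is passed in explicitly so a timeout can be simulated without sleeping, and CRC32 stands in for the unspecified verification information. A production deployment would more likely rely on a broker's own acknowledgment and redelivery features.

```python
# Illustrative sketch: Task and TaskQueue are hypothetical, not a real broker API.
import json
import zlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Task:
    seq: int                # sequence number, used to reassemble results in order
    data: bytes             # data block to encrypt
    checksum: int           # verification information (CRC32 is an assumption)
    state: str = "pending"  # pending | processing | processed
    deadline: float = 0.0   # time after which an unacknowledged task is redelivered


@dataclass
class TaskQueue:
    timeout: float
    tasks: dict = field(default_factory=dict)

    def put(self, seq: int, data: bytes) -> None:
        self.tasks[seq] = Task(seq, data, zlib.crc32(data))

    def get(self, now: float) -> Optional[Task]:
        """Deliver the lowest-numbered pending task, re-queuing timed-out ones first."""
        for task in self.tasks.values():
            if task.state == "processing" and now >= task.deadline:
                task.state = "pending"  # no acknowledgment in time: redeliver
        for seq in sorted(self.tasks):
            task = self.tasks[seq]
            if task.state == "pending":
                task.state, task.deadline = "processing", now + self.timeout
                return task
        return None

    def ack(self, seq: int) -> None:
        """Called by a worker after successful encryption."""
        self.tasks[seq].state = "processed"

    def snapshot(self) -> dict:
        return {seq: task.state for seq, task in self.tasks.items()}

    def checkpoint(self, path: str) -> None:
        """Append the current state of every task to a TXT log as one JSON line."""
        with open(path, "a", encoding="utf-8") as log:
            log.write(json.dumps(self.snapshot()) + "\n")


queue = TaskQueue(timeout=5.0)
queue.put(0, b"block-0")
queue.put(1, b"block-1")
lost = queue.get(now=0.0)   # worker A takes task 0, then fails without acknowledging
task = queue.get(now=1.0)   # worker B takes task 1 and acknowledges it
queue.ack(task.seq)
retry = queue.get(now=6.0)  # task 0's deadline (5.0) has passed, so it is redelivered
print(retry.seq, queue.snapshot())
```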

4. Analysis of Experimental Results

To investigate the practical performance of different encryption and decryption algorithms under the serial and parallel architectures, this paper first establishes an experimental environment in which projects for the different algorithms are deployed in a real Docker environment, with AES, DES, SM4 and Ascon as the core algorithms integrated into the framework. Diverse sets of plaintext data were then designed, and a series of performance tests and evaluations was conducted. Comparative experiments were further performed to verify how effectively the different algorithms support encrypted communication in cloud environments.

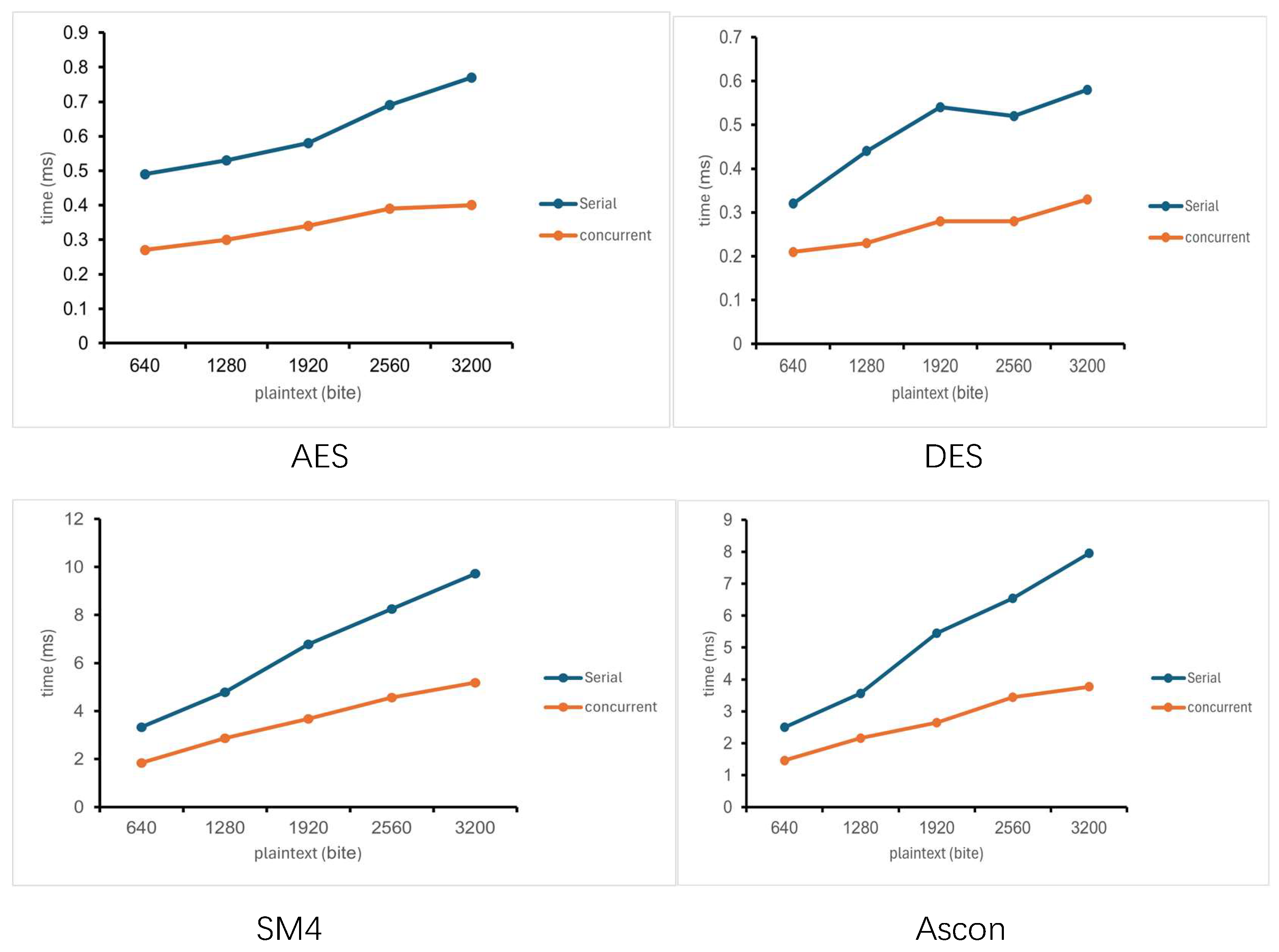

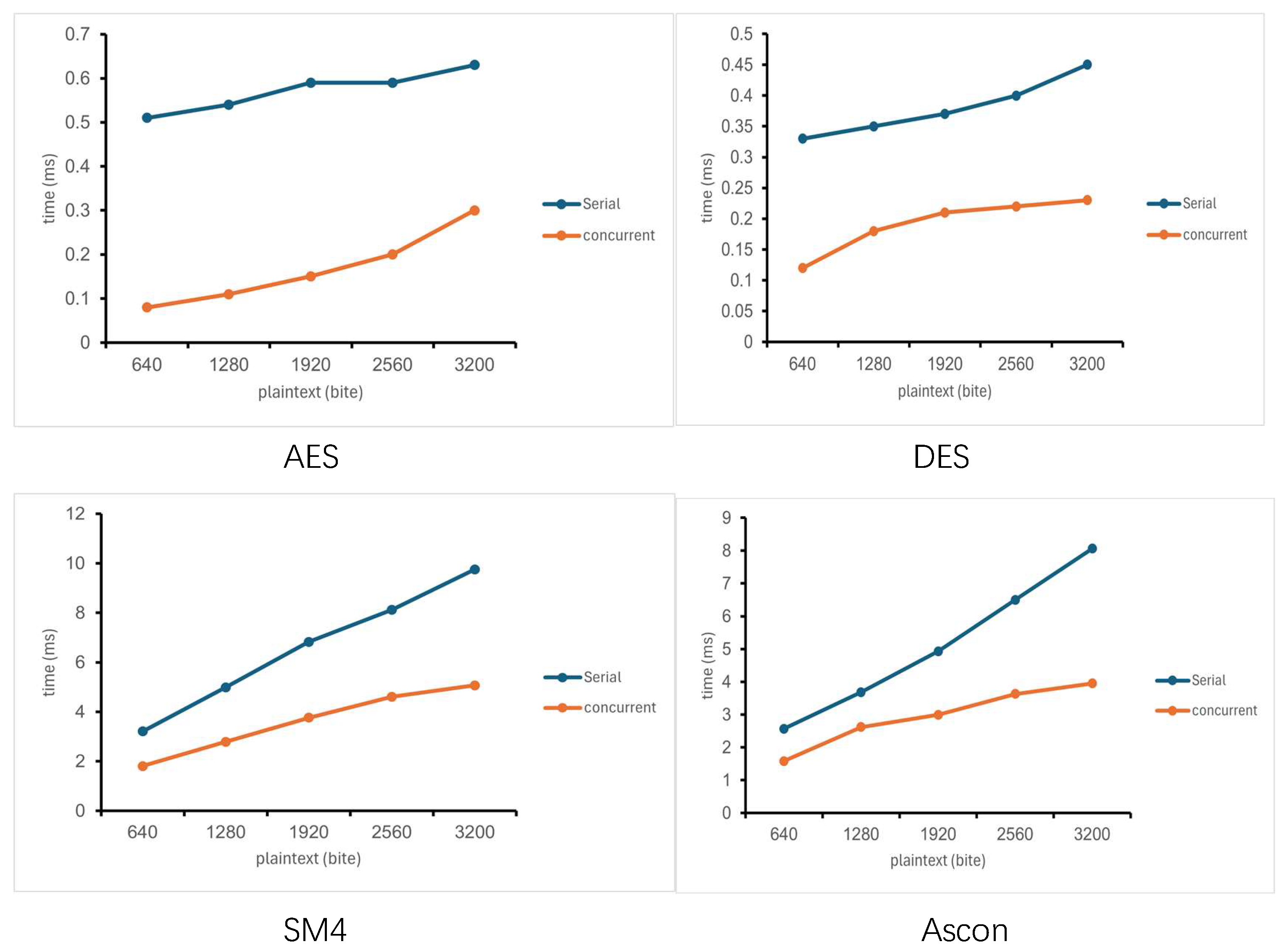

4.1. Encryption Time

Encryption time is the time required for an encryption algorithm to convert plaintext into ciphertext. The serial data in Figure 4 show the encryption time of the four encryption algorithms under the serial framework for different plaintext sizes. Under the serial framework, the encryption time of all four algorithms increases with plaintext size, although at different rates.

The parallel data in the four panels show the encryption time of each algorithm under the parallel framework. In the parallel environment, encryption time still increases with plaintext size, but the magnitude of the reduction relative to the serial case differs among the four algorithms. Because the two threads execute concurrently, only the execution time of the slower thread is recorded as the final value; the faster thread's time is not included in the recorded data (as reflected in the table).

Figure 4 shows that, under the parallel framework, the encryption time of all algorithms still increases with plaintext size. The overall encryption time of AES and DES is lower than that of SM4 and Ascon. Furthermore, the reduction in encryption time for AES and DES gradually levels off within a range of 43% to 48%, whereas the reduction for SM4 and Ascon grows steadily and basically reaches 48% to 50%.
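The reduction percentages can be made precise with a short derivation. Write $T_s$ for the serial time and $T_1, T_2$ for the times of the two threads in the parallel run. Since only the slower thread is recorded, the parallel time and the reduction rate $R$ are

$$T_p = \max(T_1, T_2), \qquad R = \frac{T_s - T_p}{T_s} \times 100\%.$$

Assuming, as an idealization, that splitting the plaintext does not reduce the total work, i.e. $T_1 + T_2 \ge T_s$, it follows that

$$T_p = \max(T_1, T_2) \ge \frac{T_1 + T_2}{2} \ge \frac{T_s}{2} \quad\Longrightarrow\quad R \le 50\%,$$

with equality only when both threads take exactly $T_s/2$, that is, with a perfectly balanced split and no threading overhead. This is consistent with measured reductions that approach, but stay at or below, about 50%.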

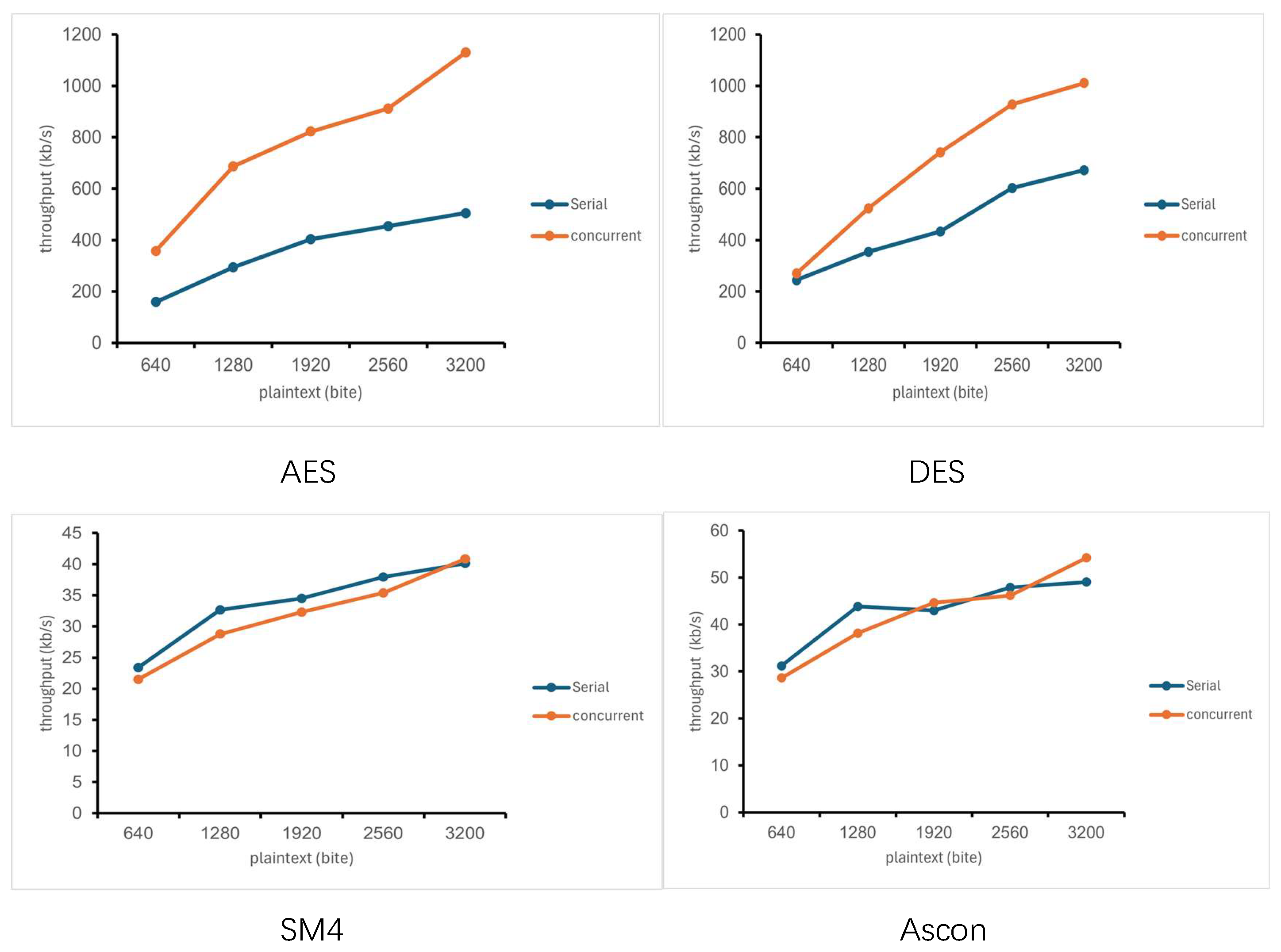

4.2. Decryption Time

Decryption time is the time required for a decryption algorithm to convert ciphertext into plaintext. The serial data in Figure 5 show the decryption time of the four algorithms under the serial framework for different plaintext sizes. Under the serial framework, the decryption time of all four algorithms increases with plaintext size, although at different rates.

The parallel data show the decryption time of each algorithm under the parallel framework. In the parallel environment, decryption time still increases with plaintext size, but the reduction patterns of the four algorithms differ. Because the two threads run simultaneously, only the slower thread's time is recorded; the faster thread's time is therefore below the value recorded in the table.

Based on the data in Figure 5, the time reduction rate of AES and DES is higher at first, with AES exhibiting the highest rate. As the plaintext size increases, the reduction rate of AES and DES gradually decreases and eventually stabilizes below 50%. By contrast, SM4 (the Chinese national cryptographic standard) and Ascon show a lower reduction rate than AES and DES for the smallest plaintexts, but their reduction rate gradually increases and ultimately approaches 50%.

4.3. Encrypted Throughput

The throughput of an algorithm is the amount of data it can process per unit time and reflects its data processing capability; a higher throughput corresponds to faster encryption and decryption. Figure 6 presents the encryption throughput of AES, DES, SM4 and Ascon in both serial and parallel modes for plaintexts of different sizes.

Figure 6 shows that the throughputs of AES and DES are much higher than those of SM4 and Ascon in both serial and parallel modes. In parallel mode, the throughputs of AES and DES more than double, whereas the throughputs of SM4 and Ascon are almost the same in serial and parallel modes, although they improve steadily. AES and DES may therefore be suitable for high-throughput environments. For SM4 and Ascon, the throughput improvement in the parallel environment is not obvious, especially for Ascon, which may be due to its sponge-based construction.
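Throughput is computed as plaintext size divided by processing time. The helpers below (hypothetical names) express it in Mbit/s and relate the parallel-to-serial throughput ratio to the time reduction rate $R = (T_s - T_p)/T_s$: for the same plaintext, the ratio is $T_s/T_p = 1/(1 - R)$, so a ratio of exactly 2 corresponds to $R = 50\%$. The timing values in the usage lines are illustrative and are not measurements from this study.

```python
# Illustrative helpers; the names and the timing values below are hypothetical.


def throughput_mbps(size_bits: int, seconds: float) -> float:
    """Throughput in Mbit/s: plaintext bits processed per second, divided by 10^6."""
    return size_bits / seconds / 1e6


def throughput_ratio(reduction: float) -> float:
    """Parallel/serial throughput ratio for the same plaintext, given R = (Ts - Tp) / Ts."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return 1.0 / (1.0 - reduction)


serial = throughput_mbps(3200, 2.0e-3)    # hypothetical serial time: 2.0 ms
parallel = throughput_mbps(3200, 1.1e-3)  # hypothetical parallel time: 1.1 ms
print(f"{serial:.2f} -> {parallel:.2f} Mbit/s, ratio {parallel / serial:.2f}")
# 1.60 -> 2.91 Mbit/s, ratio 1.82
print(f"R = 45% gives ratio {throughput_ratio(0.45):.2f}")
# R = 45% gives ratio 1.82
```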

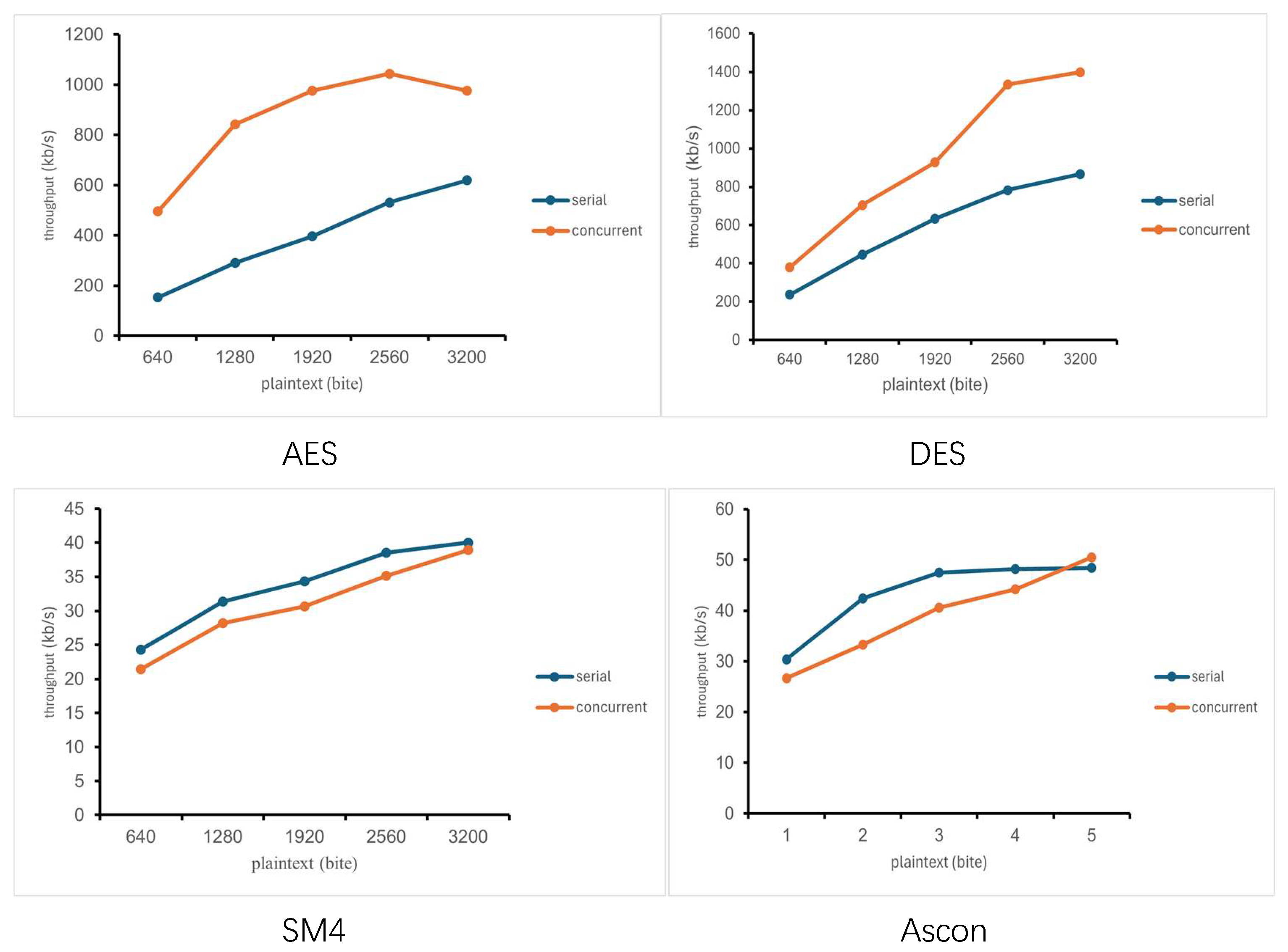

4.4. Decryption Throughput

According to the experimental data, the decryption throughput of the four algorithms follows almost the same trends as the encryption throughput: AES and DES achieve higher throughput than the other two algorithms, and the throughput of SM4 and Ascon is basically the same in serial and parallel modes, as shown in Figure 7.

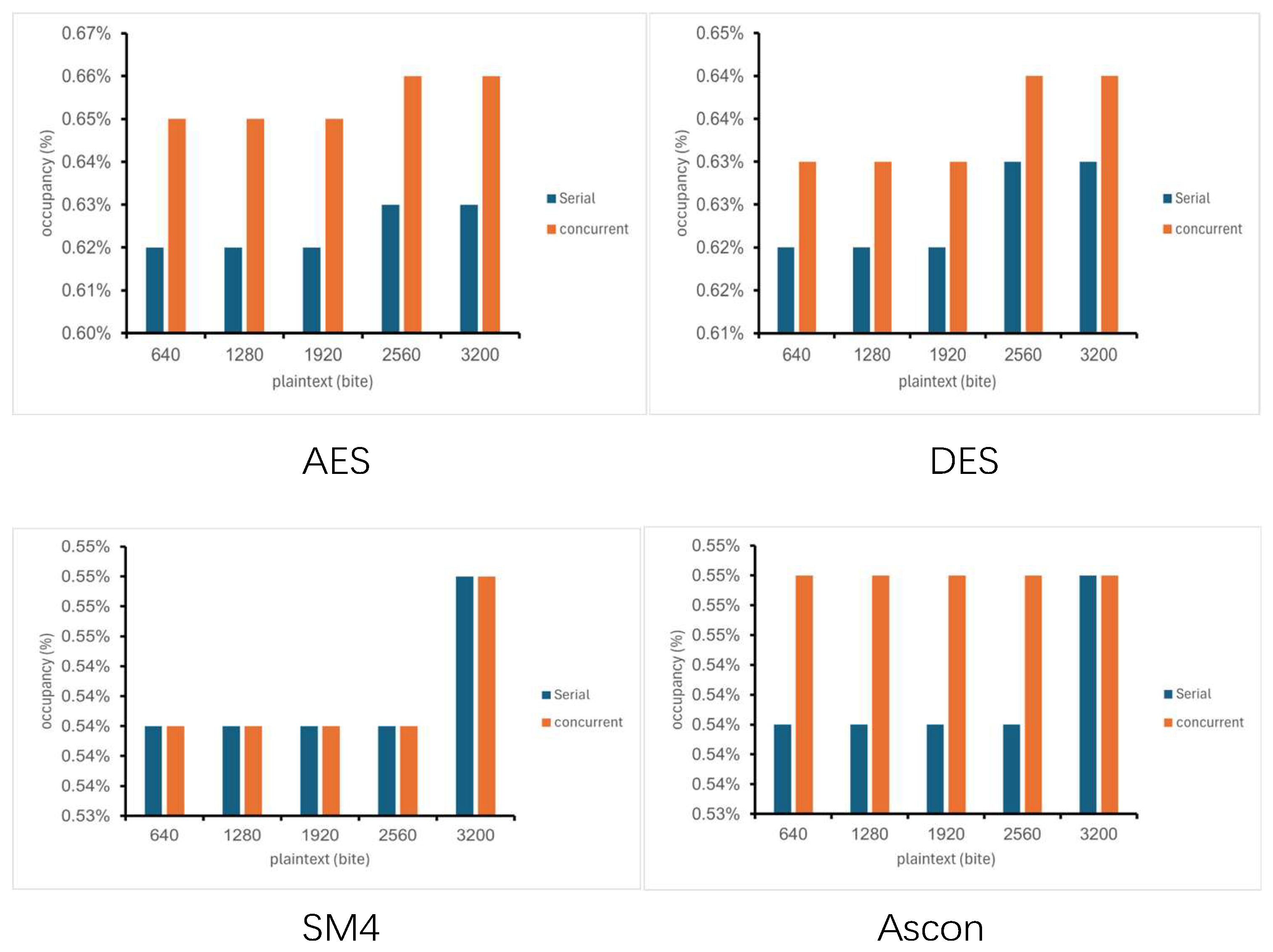

4.5. Memory Occupancy

The memory usage of the four encryption algorithms (AES, DES, SM4 and Ascon) is measured and analyzed for a range of plaintext sizes in serial and parallel modes. This metric reflects how much memory each encryption algorithm occupies.

In Figure 8, the serial data represent memory usage in serial mode and the parallel data represent memory usage in parallel mode. The memory usage of AES and DES is higher than that of SM4 and Ascon; AES and DES show a slight increase in parallel mode, whereas SM4 and Ascon use almost the same memory in serial and parallel modes. SM4 and Ascon are therefore more suitable for low-memory, low-consumption scenarios in the parallel container mode.
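One possible way to measure the memory used by an encryption call, assuming the code runs in a Python process, is the standard-library `tracemalloc` module, sketched below. `peak_memory_bytes` and `dummy_encrypt` are hypothetical helpers, and `tracemalloc` only tracks allocations made through the Python memory allocator, so memory used by native cipher libraries, or container-level figures such as those reported by `docker stats`, would need a different tool.

```python
# Illustrative sketch; peak_memory_bytes and dummy_encrypt are hypothetical helpers.
import tracemalloc


def peak_memory_bytes(fn, *args) -> tuple[object, int]:
    """Run fn(*args) and return (result, peak bytes traced by tracemalloc during the call)."""
    tracemalloc.start()
    try:
        result = fn(*args)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak


def dummy_encrypt(data: bytes) -> bytes:
    """Hypothetical stand-in for an encryption routine that works on a state buffer."""
    buffer = bytearray(data)  # working copy, allocated while tracing is active
    for i in range(len(buffer)):
        buffer[i] ^= 0x5A
    return bytes(buffer)


ciphertext, peak = peak_memory_bytes(dummy_encrypt, bytes(400))
print(f"{len(ciphertext)} bytes out, peak {peak} bytes traced")
```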

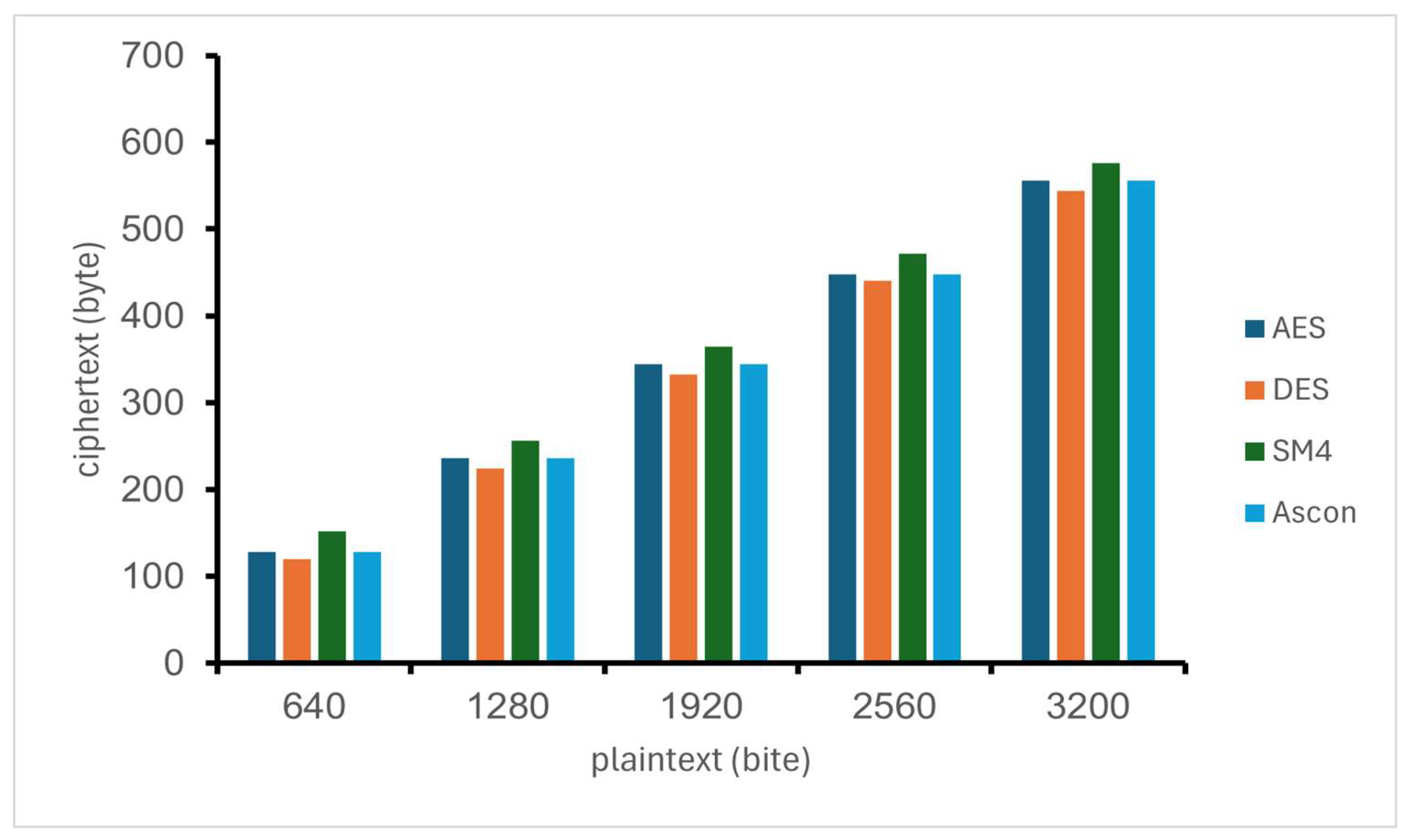

4.6. Ciphertext Size

In this experiment, the ciphertext sizes produced by the four encryption algorithms (AES, DES, SM4 and Ascon) were statistically analyzed. As the plaintext size increases, the ciphertext size of all four algorithms also increases, and the size difference between ciphertext and plaintext gradually expands. Because each algorithm yields identical ciphertext sizes in the serial and parallel environments, only one set of data (from either environment) is presented for comparison.

Figure 9 shows that, for plaintext encrypted in the container environment in either serial or parallel mode, DES yields the smallest ciphertext, followed by AES and Ascon, while SM4 yields the largest. This is attributed to padding: when the plaintext length is not an integer multiple of the block length, the last data block must be completed according to the padding rule, which increases the ciphertext length. Moreover, a longer ciphertext increases the computational overhead of encryption and decryption, which in turn affects processing efficiency. Therefore, when selecting encryption algorithms in practical applications, the balance between security attributes and efficiency must be considered comprehensively.
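The effect of padding on ciphertext length can be illustrated with a small calculation. The sketch assumes PKCS#7 padding for the block ciphers (8-byte blocks for DES, 16-byte blocks for AES and SM4) with no IV or header stored alongside the ciphertext, and, for Ascon, AEAD output consisting of a ciphertext as long as the plaintext plus a 128-bit tag. These modes and encodings are assumptions, so the numbers illustrate the mechanism rather than reproduce Figure 9. Under PKCS#7, a full extra block is appended even when the plaintext length is already a multiple of the block length, as it is for all five test sizes here.

```python
# Illustrative sketch; the padding scheme, tag size mapping and helper names are assumptions.
BLOCK_BYTES = {"DES": 8, "AES": 16, "SM4": 16}
ASCON_TAG_BYTES = 16


def pkcs7_pad(data: bytes, block: int) -> bytes:
    """Append n bytes of value n, 1 <= n <= block (a full block if already aligned)."""
    n = block - len(data) % block
    return data + bytes([n]) * n


def pkcs7_unpad(data: bytes) -> bytes:
    """Remove PKCS#7 padding, rejecting malformed input."""
    n = data[-1]
    if not 1 <= n <= len(data) or data[-n:] != bytes([n]) * n:
        raise ValueError("invalid padding")
    return data[:-n]


def ciphertext_bytes(alg: str, plain_bytes: int) -> int:
    """Ciphertext length under the assumptions stated above."""
    if alg == "Ascon":
        return plain_bytes + ASCON_TAG_BYTES  # no padding in the output, plus the tag
    return len(pkcs7_pad(bytes(plain_bytes), BLOCK_BYTES[alg]))


for bits in (640, 1280, 1920, 2560, 3200):
    sizes = {alg: ciphertext_bytes(alg, bits // 8) for alg in ("DES", "AES", "SM4", "Ascon")}
    print(bits, sizes)
```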

5. Conclusions

To address the joint optimization of efficiency and security for encryption-based communication in cloud environments, this paper conducts a systematic study. By constructing a dedicated comparative experimental framework for serial and parallel encryption in cloud environments, it proposes an encryption implementation scheme based on a parallel computing architecture. While strictly preserving the original security strength and reliability of the encryption algorithms, the scheme optimizes the data fragmentation strategy and the computing resource scheduling mechanism. Compared with traditional serial encryption, encryption and decryption time is reduced by approximately 50%, significantly decreasing the time overhead of these operations. Furthermore, the throughput of AES and DES doubles relative to serial mode, significantly improving communication efficiency. Meanwhile, SM4 and Ascon preserve the original secure communication capability while effectively reducing system resource overhead.

The experimental results show that, compared with existing research results, the proposed parallel encryption method achieves a performance breakthrough in encryption and decryption efficiency. Further, based on a comparative analysis of multi-dimensional performance indicators such as throughput, memory usage and security level, this study derives a strategy for selecting encryption algorithms in the cloud environment: AES and DES adapt better to scenarios with high data transmission throughput requirements, whereas in scenarios that are sensitive to memory consumption and must preserve the original security level, SM4 and Ascon offer more significant performance advantages. This strategy can provide a key technical reference for designing encryption schemes for different demand scenarios in the cloud environment.