Weak-Cue Mixed Similarity Matrix and Boundary Expansion Clustering for Multi-Target Multi-Camera Tracking Systems in Highway Scenarios

Abstract

1. Introduction

- Low-quality trajectory features: In multi-target multi-camera tracking (MTMCT) systems, each trajectory is represented as a collection of ReID features extracted while a vehicle moves through a camera's view over a specific period. The quality of these features can be poor because vehicles move at high speed on highways and lighting in tunnels is dim.

- Low-performance trajectory clustering: Clustering algorithms are commonly used to match vehicle trajectories across different cameras. However, the trajectories observed for the same vehicle can differ from camera to camera because of factors such as camera perspective and traffic conditions, which degrades clustering performance.

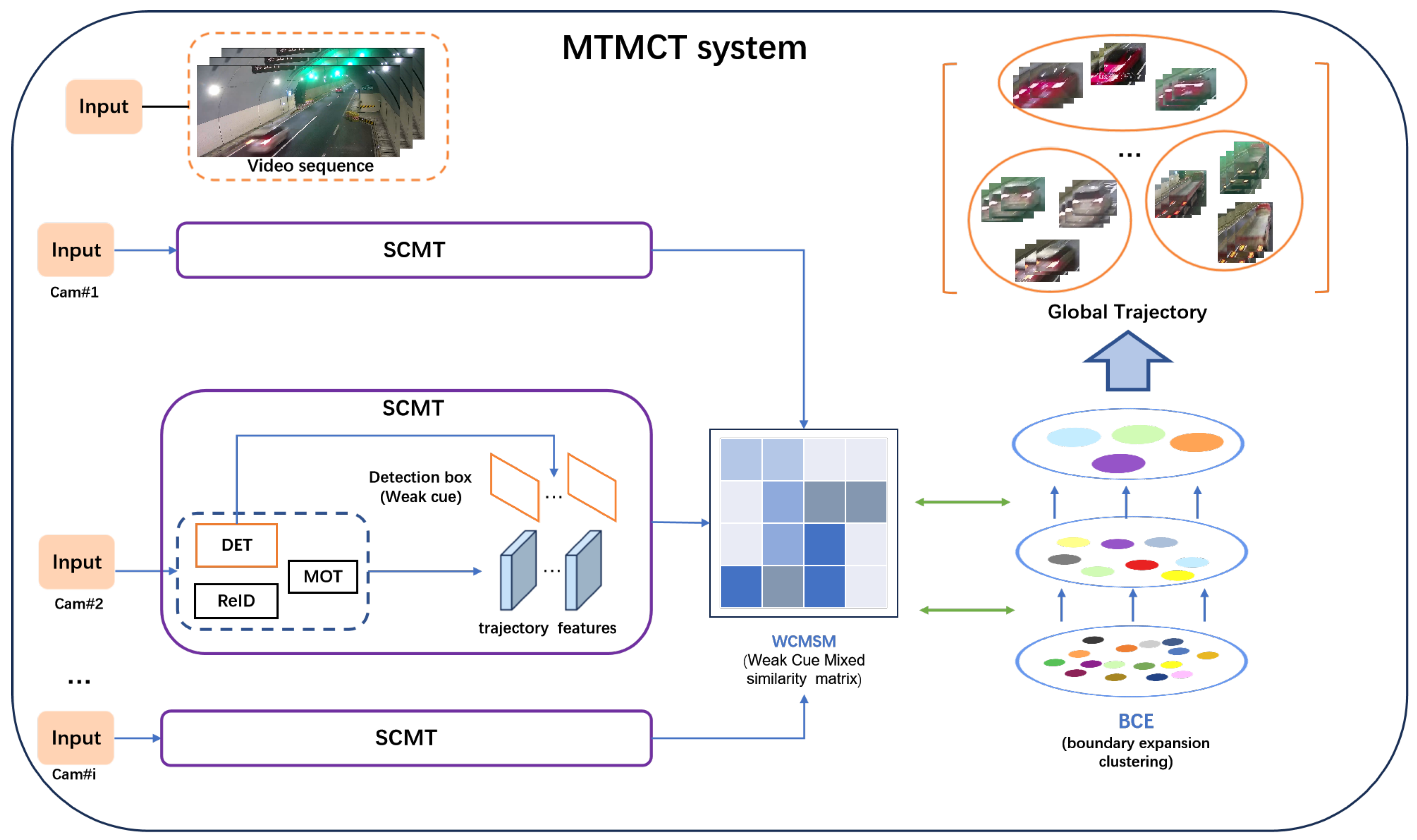

- We develop the WCBE MTMCT system, which combines the WCMSM and BEC, to address the impact of high-speed highway scene characteristics on trajectory aggregation, the most critical step in an MTMCT system.

- The WCMSM module introduces weak cues during trajectory aggregation, which enhances the discriminability of the trajectory similarity matrix and improves the effectiveness of subsequent clustering.

- The BEC algorithm is a clustering algorithm better suited to the characteristics of the high-speed highway scenes we study, which enhances the performance of our MTMCT system.

- The Highway Surveillance Traffic (HST) dataset supports the validation of research on multi-target multi-camera tracking in highway scenarios. It comprises videos from six cameras with a total duration of 182 min, captured primarily in highway tunnel scenes.
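To make the idea of a mixed similarity matrix more concrete, the following is a minimal sketch and not the WCMSM formulation itself. It assumes that each trajectory is mean-pooled into a single ReID vector, that appearance similarity is cosine similarity, and that a weak-cue score matrix is already available. The function names, the linear mixing rule, the weight `alpha`, and all numeric values are hypothetical and chosen only for illustration.

```python
import numpy as np


def trajectory_feature(reid_features):
    """Mean-pool per-frame ReID vectors into one unit-length trajectory vector."""
    feats = np.asarray(reid_features, dtype=float)
    mean = feats.mean(axis=0)
    return mean / np.linalg.norm(mean)


def appearance_similarity(trajectories):
    """Cosine similarity between every pair of pooled trajectory vectors."""
    pooled = np.stack([trajectory_feature(t) for t in trajectories])
    return pooled @ pooled.T


def mixed_similarity(appearance, weak_cue, alpha=0.8):
    """Hypothetical linear mix; alpha is an assumed weight, not a value from the paper."""
    return alpha * appearance + (1.0 - alpha) * weak_cue


# Toy data: three trajectories, each holding two 4-dimensional ReID vectors.
trajectories = [
    [[1.0, 0.0, 0.0, 0.0], [0.9, 0.1, 0.0, 0.0]],
    [[0.8, 0.2, 0.0, 0.0], [1.0, 0.1, 0.0, 0.0]],
    [[0.0, 0.0, 1.0, 0.0], [0.0, 0.1, 0.9, 0.0]],
]

# Placeholder weak-cue scores in [0, 1]; these values are made up for the example.
weak_cue = np.array([
    [1.0, 0.9, 0.2],
    [0.9, 1.0, 0.1],
    [0.2, 0.1, 1.0],
])

appearance = appearance_similarity(trajectories)
mixed = mixed_similarity(appearance, weak_cue)
print(np.round(mixed, 3))
```

In this toy setup the first two trajectories look alike and also agree on the weak cue, so their mixed score stays high, while the third trajectory stays clearly separated from both. A clustering step would then operate on `mixed` instead of on the appearance matrix alone.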

2. Related Works

2.1. Weak Cues

2.2. Trajectory Clustering

2.3. MTMCT Systems

3. Method

3.1. WCMSM: Weak-Cue Mixed Similarity Matrix

3.2. BEC: Boundary Expansion Clustering

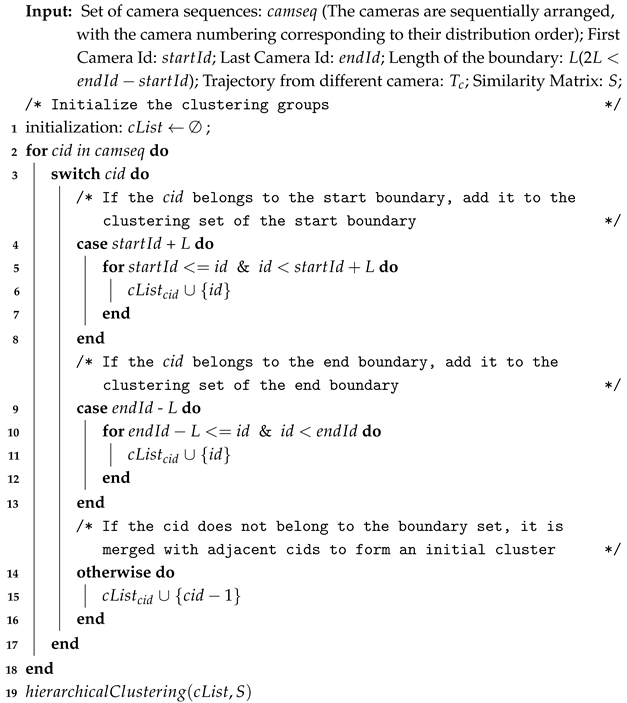

Algorithm 1: Boundary expansion clustering

3.3. MTMCT System

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics
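The result tables report IDF1, IDP, IDR, and MOTA. As a quick reference, the sketch below writes out the standard identity-based and CLEAR MOT definitions of these scores. The function and argument names are illustrative assumptions, and the count-based inputs (`idtp`, `idfp`, `idfn`, `fn`, `fp`, `idsw`, `num_gt`) are placeholders because the paper's raw counts are not given here. Since IDF1 is the harmonic mean of IDP and IDR, a reported row can be cross-checked from its own IDP and IDR values.

```python
def id_scores(idtp, idfp, idfn):
    """Return (IDP, IDR, IDF1) in percent from identity-level match counts."""
    idp = 100.0 * idtp / (idtp + idfp)
    idr = 100.0 * idtp / (idtp + idfn)
    idf1 = 100.0 * 2 * idtp / (2 * idtp + idfp + idfn)
    return idp, idr, idf1


def idf1_from_idp_idr(idp, idr):
    """IDF1 expressed as the harmonic mean of IDP and IDR."""
    return 2.0 * idp * idr / (idp + idr)


def mota(fn, fp, idsw, num_gt):
    """MOTA in percent: 1 - (misses + false positives + ID switches) / ground-truth boxes."""
    return 100.0 * (1.0 - (fn + fp + idsw) / num_gt)


# Cross-check with the ResNet50 baseline row (IDP 76.52, IDR 62.69).
print(round(idf1_from_idp_idr(76.52, 62.69), 2))  # 68.92, matching the reported IDF1
```

The same check can be applied to any other row to confirm that its IDF1, IDP, and IDR values are mutually consistent.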

4.3. Experiments and Evaluation of the WCMSM

4.4. Experiments and Evaluation of the BEC Algorithm

4.5. Experiments and Evaluation of the MTMCT System

4.5.1. Ablation Study

4.5.2. Comparison with Other Methods on HST

4.5.3. Comparison with Other Methods on CityFlow

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Name | Cameras | Type | Length | Quality |
|---|---|---|---|---|
| VeRi-776 | 20 | image | - | - |
| VehicleID | 2 | image | - | - |
| PKU-VD1 | - | image | - | - |
| CityFlow | 40 | video | 195.03 min | 960p |
| LHTV | 16 | video | 1012.25 min | 1080p |
| HST (ours) | 6 | video | 182 min | 1080p |

| Backbone | WCMSM | IDF1↑ | IDP↑ | IDR↑ | MOTA↑ | Runtime (s) |
|---|---|---|---|---|---|---|
| IBN-densenet169 | | 28.18 | 30.08 | 26.51 | 57.73 | 19.23250 |
| IBN-densenet169 | ✓ | 32.07 | 34.24 | 30.16 | 57.17 | 19.43271 |
| IBN-ResNet101 | | 60.06 | 66.22 | 54.95 | 57.81 | 19.31444 |
| IBN-ResNet101 | ✓ | 62.02 | 68.36 | 68.63 | 57.76 | 19.65857 |
| ResNet50 | | 68.92 | 76.52 | 62.69 | 56.79 | 19.44994 |
| ResNet50 | ✓ | 72.96 | 80.24 | 66.89 | 58.20 | 19.58706 |
| IBN-Se-ResNet101 | | 70.13 | 77.44 | 64.09 | 57.93 | 19.10133 |
| IBN-Se-ResNet101 | ✓ | 72.92 | 80.34 | 66.75 | 58.24 | 19.50410 |
| IBN-ResNet101 + ResNet50 + IBN-Se-ResNet101 | | 73.17 | 80.76 | 66.88 | 58.34 | 19.27957 |
| IBN-ResNet101 + ResNet50 + IBN-Se-ResNet101 | ✓ | 73.67 | 81.09 | 67.50 | 58.38 | 19.46851 |

| Backbone | BEC | IDF1↑ | IDP↑ | IDR↑ | MOTA↑ | Runtime (s) |
|---|---|---|---|---|---|---|
| IBN-densenet169 | | 28.18 | 30.08 | 26.51 | 57.73 | 19.23250 |
| IBN-densenet169 | ✓ | 29.07 | 41.08 | 22.49 | 36.07 | 17.73867 |
| IBN-ResNet101 | | 60.06 | 66.22 | 54.95 | 57.81 | 19.31444 |
| IBN-ResNet101 | ✓ | 65.20 | 64.40 | 66.02 | 53.85 | 17.64590 |
| ResNet50 | | 68.92 | 76.52 | 62.69 | 56.79 | 19.44994 |
| ResNet50 | ✓ | 70.42 | 77.54 | 64.49 | 57.98 | 17.59759 |
| IBN-Se-ResNet101 | | 70.13 | 77.44 | 64.09 | 57.93 | 19.10133 |
| IBN-Se-ResNet101 | ✓ | 72.46 | 79.98 | 66.23 | 58.27 | 17.69054 |
| IBN-ResNet101 + ResNet50 + IBN-Se-ResNet101 | | 73.17 | 80.76 | 66.88 | 58.34 | 19.27957 |
| IBN-ResNet101 + ResNet50 + IBN-Se-ResNet101 | ✓ | 74.24 | 81.90 | 67.89 | 58.25 | 17.71640 |

| YOLOv8 | BotSort | WCMSM | BEC | IDF1↑ | IDP↑ | IDR↑ | MOTA↑ |
|---|---|---|---|---|---|---|---|
| ✓ | ✓ | | | 68.92 | 76.52 | 62.69 | 56.79 |
| ✓ | ✓ | | ✓ | 70.42 | 77.54 | 64.49 | 57.98 |
| ✓ | ✓ | ✓ | ✓ | 71.26 | 78.44 | 65.29 | 58.31 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chan, S.; Ni, S.; Wang, Z.; Yao, Y.; Hu, J.; Chen, X.; Li, S. Weak-Cue Mixed Similarity Matrix and Boundary Expansion Clustering for Multi-Target Multi-Camera Tracking Systems in Highway Scenarios. Electronics 2025, 14, 1896. https://doi.org/10.3390/electronics14091896
